Abstract

The paper deals with prototyping strategies aimed at supporting engineers in designing the multisensory experience of products. It is widely recognised that the most effective strategy for designing this experience is to create working prototypes and analyse users’ reactions while they interact with them. Starting from this premise, we discuss how virtual reality (VR) technologies can support engineers in building prototypes suited to this aim. Furthermore, we demonstrate that VR-based prototypes not only represent a valid alternative to physical prototypes, but also a step forward, thanks to the possibility of simulating and rendering multisensory interactions between the user and the prototype that can be modified in real time. These characteristics enable engineers to rapidly test different variants with users and to optimise the multisensory experience users perceive during the interaction. The discussion is supported both by examples available in the literature and by case studies we have developed over the years on this topic. Specifically, our research has concentrated on what happens during the physical contact between the user and the product, since such contact strongly influences the user’s impression of the product.

Introduction

The term Experience is widely used for advertising purposes: cars, appliances, coffee machines and many other products, including services and software, are advertised as experiences (Schmitt 1999; Pine and Gilmore 2011). Companies are pushed to invest considerable effort in putting on the market products that elicit worthwhile experiences (as defined in Hassenzahl 2010a). Over the years, the term has been used with many different connotations and has assumed multifaceted meanings, ranging from pure usability to a more abstract notion, i.e. a story. As stated by Hassenzahl (2010a), when designing a new product we first have to figure out the story it should render, and only afterwards put effort into designing and implementing the technology needed to create it. The term story summarises the dynamic and worthwhile patterns of actions, feelings and emotions the product should elicit in whoever deals with it (alone or together with other users) (Hassenzahl 2010a). An interesting example and further considerations are provided in Hassenzahl et al. (2013).

This interpretation extends what the Human Computer Interaction (HCI) field labels User Experience, i.e. the experience centred on the set of actions a user performs, while physically interacting with the product, in order to successfully complete a task (DIS 2009). Indeed, when designing the User Experience of products there is more to investigate than usability or performance indexes: the interaction itself is an experience, created and shaped through technology (Hassenzahl 2005, 2014). During interaction and use, the product features (its sensory properties, behaviours and functionalities) trigger a complex set of emotional, behavioural and appraisal effects in the user (Hassenzahl 2005; Schifferstein and Hekkert 2011). These effects shape the user’s overall perception of the product and thus her/his willingness to engage positively with it. From a design perspective, creating worthwhile experiences is a complex task: it requires simultaneously taking into account not only the new features and functionalities to be implemented in the product, but also how the user will experience them and the context of use in which this experience will take place. Consequently, several kinds of expertise, from the human sciences to engineering, are needed to deal with this design problem (Schifferstein and Hekkert 2011), and the variables to take into account are the following: the specific interaction/usage situation and its background scenario; the product and its features; the user and her/his experiential attitudes; the presence of other people (Hassenzahl 2010a; Forlizzi and Battarbee 2004; Hassenzahl and Tractinsky 2006).

To deal with this complex design problem, prototypes are fundamental, especially in the early stages of the product development process. It is current practice in industry to build a number of physical variants of the same product idea in order to observe and measure differences when testing with users. Prototypes must be put in front of users and used; engineers must observe how users act, interact and behave with them. Engineers must then collect users’ impressions and feedback and, on this basis, rethink the product and the experience it should elicit. Norman (2004) suggests this approach for emotional design, which is closely related to experience design, since emotion is a subset of experience (Desmet and Hekkert 2007). However, while this strategy works well when designing software, since no physical material is needed to build variants, it is not always sustainable in consumer goods industries, where building physical prototypes demands considerable time and investment from R&D departments. The limitations are not only economic but also technical: in the early stages of the product development process it is rarely feasible to have working prototypes that behave as the final product will.

In this paper, we analyse alternative prototyping strategies for product experience design and provide indications to guide practitioners in selecting and implementing the most effective one. We narrow our discussion to the technical and implementation requirements that a prototyping strategy should take into account to enable an effective testing/design environment in which the final user can play an active role. Among the prototyping strategies currently available, we focus on Virtual Reality (VR) technologies and on the strong relevance they have gained in the product development process (Ottosson 2002). Although there is a large body of literature on the role such technologies play in optimising the design and engineering phases of new products, little has been written on how they can support engineers in designing the multisensory experience of products. Owing to the major advances in visualisation technologies, practitioners’ attention remains focused largely on creating high-quality digital aesthetic (visual) renderings, and much less on other sensory cues such as haptic, auditory and olfactory ones.

To address these issues, we start by analysing the characteristics that a prototype used for multisensory product experience design should have (“Prototypes for product experience design” section). We concentrate on the part of the experience that lasts a few seconds and occurs during the interaction between the product and the user. Hassenzahl (2010b) defines this stage as the act of experiencing the product. Despite its short life span, this stage strongly influences the user’s perception of the product, since it is the moment in which contact between the product and the user is established and, thus, the impression of the product is shaped. In the “Prototyping strategies: real, virtual and mixed” section these multisensory characteristics are used to evaluate the effectiveness of currently available prototyping techniques. We then describe a framework to be used as a conceptual map of the possible prototyping directions to follow according to the engineering target to pursue (“A framework to guide the design of multisensory testing scenarios” section). Finally, using the framework as a reference, we detail indications and examples for putting it into practice (“Put the framework into practice: examples of testing scenarios for the experience design of appliances interfaces” section). These examples are used to point out the potential of mixed (a combination of virtual and real) prototypes to guarantee a good balance between the faithfulness of the perceived experience and the technical/economic feasibility of the prototyping phase. The examples and considerations provided in this paper are the result of the years we have spent researching in the field of Virtual Prototyping and working in collaboration with consumer goods industries.

Prototypes for product experience design

In this section we discuss the characteristics that prototypes for multisensory experience design should embody. Buchenau and Suri (2000) have labelled them Experience Prototypes. Merging the insights in their work and in the literature cited in the “Introduction” section, we extrapolated a set of requirements, which are discussed here and summarised in Table 1. This set also takes into account the testing conditions needed to evaluate the multisensory experience of new products and the requirements stated by engineers for using the prototype as a design tool to explore alternative solutions.

As the primary requirement we consider the need, during tests with users, to enable an active, firsthand appreciation of the interaction. This implies that the user can experience the prototype subjectively, rather than treating prototypes merely as “do not touch” demonstrators (Buchenau and Suri 2000). Moreover, the user’s behaviour and reactions should be observed and recorded.

Secondly, following the motto exploring by doing (Buchenau and Suri 2000), designers should be allowed to explore design alternatives as early as possible, so as to find the optimum interaction while it is still possible to change the initial idea. This implies that prototype variants are necessary. Since companies seek both to reduce the costs of the product development process and to shorten it, low-cost and fast prototyping strategies are preferable for this purpose.

Merging this aspect with the need to guarantee the user’s firsthand involvement, a third requirement emerges: the user should be able to directly change the prototype’s behaviour/characteristics or ask for modifications. In addition, the multisensory perception elicited by the prototype should vary over time and in real time, and this is mandatory also for any modification applied to the prototype. The reason is twofold. First, since the act of experiencing is the result of an interaction, it is by definition dynamic. Second, only by guaranteeing control over the experiencing over time can the designer explore different interaction modalities and product behaviours. This has two related consequences: during the testing phase, prototypes must be easy to modify so that their behaviour matches the user’s requests; and an effective prototyping strategy must therefore guarantee the modifiability of the prototype.

Widening the perspective of our analysis, another critical aspect arises: how to set the stage for experiencing (Buchenau and Suri 2000). In the work of Buchenau and Suri this expression actually has a broader meaning (not limited to time and space but also including cognitive aspects). However, what matters to us is how to faithfully contextualise the interaction not only in time but also in space, making explicit the relationships between the person interacting with the product, the product itself and the place where the experience takes place. This means that designers must prototype both the product under analysis and the specific moment and context of use. Obviously, this is not necessary during field tests, where real contexts of use are selected as the scenario for the analysis.

Together with these implementation indications, we also have to consider the kind of information flow to be conveyed between the user, the product and the context. Norman (2004) describes three levels of information processing involved in the emotional experience of products: visceral, behavioural and reflective. He advises designers to take care of the visual appearance of products (see also Mugge and Schoormans 2012) in order to stimulate visceral processing, and of the other sensory modalities, including touch, in order to stimulate behavioural processing. The visceral level is also the focus of our paper, since we concentrate on engineering the aesthetic experience of products as defined in Desmet and Hekkert’s framework (Desmet and Hekkert 2007).

As also pointed out in (Schifferstein and Hekkert 2011; Spence and Gallace 2011; Gallace and Spence 2013), the act of experiencing is by nature multisensory, involving not only visual appearance but also touch (e.g. see Gallace and Spence 2013; Klatzky and Peck 2012), hearing (e.g. see Schifferstein and Hekkert 2011; Spence and Zampini 2006; Langeveld et al. 2013) and smell (Krishna et al. 2010; Spence et al. 2014). All these aspects have to be taken into account, and thus included, when setting the stage: the information flow between the prototype and the user must be multisensory, involving all the senses that are naturally part of the interaction with the real product in a real context. The interaction should therefore be as faithful as possible.

After this discussion, the following questions arise. If the best way to design a successful multisensory product experience is to build, as early as possible, several working and easily modifiable prototypes and put them in front of the user, are lo-fi physical prototypes suitable for this aim? Are they able to reproduce the right multisensory experience? The answer to both questions is no: different materials generate different sounds, produce different haptic feedback and release different odours, so the resulting perception is different. What, then, is the right prototyping strategy to apply? The next section, “Prototyping strategies: real, virtual and mixed”, lays the conceptual basis for answering this question.

Prototyping strategies: real, virtual and mixed

Currently available prototyping strategies are the following: physical, virtual and mixed (Zorriassatine et al. 2003; Bordegoni and Rizzi 2011). They are briefly introduced below, together with an explanation of how multisensory experiences can be rendered with each of these three prototyping modalities.

Real (physical and rapid) prototypes

Physical prototypes are usually built to faithfully reproduce the aesthetics or the functional/technical aspects of the product under investigation. If we are not at the end of the design process, they reproduce only some specific aspects, for cost and time reasons (e.g. prototypes for aesthetic evaluation reproduce only the product’s appearance). They can be made of raw materials with very rough manufacturing techniques (lo-fi prototypes, for example made of cardboard), or be very sophisticated, made with processes and materials similar to those that will be used to manufacture the product. Their main drawbacks are cost, build time and limited modifiability. If many modifications are required, prototypes sometimes have to be rebuilt: when several variants of the same product must be produced, a high number of prototypes must be built, so both time and cost increase (Graziosi et al. 2014).

Rapid prototypes are also physical prototypes, but made with rapid prototyping techniques and materials (Chua et al. 2010). Rapid prototyping techniques are improving quickly, as is the range of materials that can be used. Although rapid prototypes require less time to build than conventional physical prototypes, they still suffer from the same limits in terms of modifiability, flexibility and cost.

Physical and rapid prototypes can reproduce sensory cues faithfully only if they are very similar to the final product. If the material used to build the prototype is different, the sensory cues it produces are not faithful: its weight, sounds and smell may differ from those of the product. To explain this point, Fig. 1 displays a simplified model of a human involved in a multisensory interaction with a real environment through an action-perception loop. The user perceives the world in order to perform an action, something happens and a new perception occurs, in a loop. Only if the physical prototype is exactly like the final product (e.g. same appearance and same materials) are the multiple inputs it provides faithful, and thus adequate for testing the product experience with users.

Virtual and mixed prototypes

Nowadays the term Virtual Prototype (VP) is widely used in industry for any kind of digital simulation. As a consequence, different definitions of VP exist in the literature (see for example Zorriassatine et al. 2003; Bordegoni and Rizzi 2011; Wang 2002). We define a VP as a ‘computer-based simulation of a product prototype the user can interact with in a way that is natural and intuitive’. This definition is strictly related to Virtual Reality (VR) technology. Burdea and Coiffet (2003) define VR as a real-time, high-end simulation involving different sensory modalities. Indeed, one of the main differences between generic digital simulations and VPs is that VPs, while still digital alternatives to physical prototypes, are accessed by users through multiple sensory modalities by means of VR technologies. The real-time requirement (i.e. VPs have to react to users’ actions in real time) strongly influences the choice of the algorithms used to simulate a physical phenomenon, since the most accurate ones are sometimes too computationally demanding to run in real time.

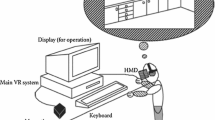

Fig. 2 represents a multisensory interaction with a virtual world. Simulations generate the information to be sent to the user through the different sensory modalities. Each sensory simulation (e.g. visual, audio, haptic) is sent to a specific output interface and finally displayed to the user. Generally, each interface communicates with only one sense. If the virtual world is sensed through multiple modalities, the inputs are integrated and finally perceived. Once the user perceives the virtual world, she can act on it through input interfaces (which may coincide with the output ones since, for example, haptic interfaces act both as input and output devices). Something happens in the virtual world as a consequence of the user’s action (acquired through the input interface), and a new perception occurs. VPs are thus built by combining multiple input/output interfaces, and the fidelity with which the sensory cues are rendered is closely related to the interfaces’ quality (and cost).

Multisensory interaction with a virtual world. The virtual environment is a combination of sensory models. Each model is rendered through a dedicated VR interface and sent to the user, who perceives the virtual world through her sensory system. The perception of the virtual environment will be a combination of the inputs coming from each sensory modality
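To make the loop just described concrete, the following Python sketch models a virtual prototype as a set of independent sensory models, one per modality, all reading a shared world state; an input step changes the state and triggers a new multisensory rendering. It is a minimal illustration under stated assumptions, not the architecture of any particular VR toolkit: the class names, the single door-angle state and the placeholder string "stimuli" are hypothetical, and the real output interfaces (display, speakers, haptic device) are replaced by returned values.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class WorldState:
    """Shared state of the virtual world; a single door angle is a hypothetical example."""
    door_angle_deg: float = 0.0


# A sensory model maps the current world state to a stimulus for ONE modality.
SensoryModel = Callable[[WorldState], str]


@dataclass
class VirtualPrototype:
    models: Dict[str, SensoryModel] = field(default_factory=dict)

    def render(self, state: WorldState) -> Dict[str, str]:
        # Each modality is simulated independently; in a real set-up each entry
        # would be sent to its own output interface.
        return {modality: model(state) for modality, model in self.models.items()}

    def step(self, state: WorldState, user_delta_deg: float) -> Dict[str, str]:
        # Input interface: the user's action changes the virtual world (clamped to 0..90 deg)...
        state.door_angle_deg = max(0.0, min(90.0, state.door_angle_deg + user_delta_deg))
        # ...and a new multisensory rendering follows, closing the action-perception loop.
        return self.render(state)


def demo_prototype() -> VirtualPrototype:
    # Placeholder models standing in for real visual, audio and haptic simulations.
    return VirtualPrototype(models={
        "visual": lambda s: f"door at {s.door_angle_deg:.0f} deg",
        "audio": lambda s: "latch click" if s.door_angle_deg == 0.0 else "silence",
        "haptic": lambda s: f"{0.05 * s.door_angle_deg:.1f} N",
    })


if __name__ == "__main__":
    vp, state = demo_prototype(), WorldState()
    print(vp.step(state, 30.0))   # door opened by 30 deg
    print(vp.step(state, -50.0))  # door pushed shut (clamped at 0 deg)
```

Because each modality is a separate entry in `models`, one sensory model can be swapped or retuned without touching the others, which is the property the later sections rely on when variants are tested.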

Despite the development of technologies over the years, VR still does not offer the tools for a faithful simulation of the real world, both because of software and hardware limitations (which determine the quality of the interfaces used) and because of our limited knowledge of the human sensory/perceptual system, as is the case, for example, for the sense of touch. Considerable effort has been spent by the scientific community on VR visualisation technologies, both software and hardware (for an overview of the development of hardware visualisation technologies see Hainich and Bimber 2011), so that high-fidelity visual prototypes can easily be created and in some cases (e.g. in aesthetic design review sessions) can successfully substitute physical prototypes. Sound technologies are also sufficiently mature to be a valid tool for creating virtual prototypes (Farnell 2010). Haptic technology is currently receiving strong interest from the VR community and is growing rapidly, although the complexity of the touch sensory system makes the development of haptic technologies very challenging (Hayward et al. 2004; Grunwald 2008). Olfactory technologies are still at the earliest stages of their development, but some interesting case studies are already available in the literature (for a recent overview see Nakamoto 2013). Finally, the taste sensory system and its related technologies are the least studied and developed to date. Examples of taste technology are based on a cross-modal illusion in which the gustatory interface is a combination of visual and olfactory cues (Narumi et al. 2011).

As for the applicability of VR to the range or type of products to be designed, limitations exist. These limits are a consequence of the current limits of the technological interfaces used. For example, wearable products currently fall within this range of limited applicability.

When VR technologies cannot faithfully reproduce all the sensory cues, or when the use scenario already exists and we are interested only in adding further information to it (we do not want to reproduce virtually something that is already available), a good solution is a mix of real and virtual information. This mix can help overcome limitations, exploit existing information and ultimately create faithful prototypes. Prototypes that mix real and virtual cues are called Mixed Prototypes (MP) (Bordegoni et al. 2009).

Mixed prototypes are related to Mixed Reality (MR), which is a coherent combination of virtual and real information (Milgram and Kishino 1994). From the earliest works on MR, and in particular on Augmented Reality (AR, a subset of MR), up to now, many applications and examples have been developed, especially for the sense of vision (e.g. see Azuma et al. 2001; Krevelen and Poelman 2010). World augmentation can be applied not only to vision but also to other sensory modalities, such as touch and hearing, as stated in one of the first surveys on AR (Azuma 1997). World augmentation requires technologies for sensing the real environment and technologies for adding further information. Some examples of audio augmented reality, where audio tracks are added to real scenarios, can be found in Bederson (1995) and Mynatt et al. (1997). Examples of AR also exist for touch. Nojima et al. (2002) describe a system including a haptic interface developed specifically for haptic AR, which senses what happens in the real world and augments human perception through a force. Another interesting contribution concerning AR for touch can be found in Jeon and Choi (2009). Here, given the difficulty of simulating deformable bodies in real time with high rendering quality, forces are added through a haptic interface while the user touches a real deformable body.

MPs currently represent the most interesting alternative to physical prototypes, despite presenting very complex issues to be addressed. Figure 3 refers to human multisensory interaction with a mixed world. The user senses the real world as well as the virtual one through all her modalities. The senses are integrated and the user perceives the mixed world. At this point she can act both on the real and on the virtual world: when a change occurs in one of them it has to be reflected in the other, and the user starts sensing and perceiving the new mixed world. In this picture the real and virtual worlds are separate but not independent. This is the first challenge to solve: if something occurs in the real world it has to be reflected in the virtual one, and vice versa. Ishii and Ullmer (1997) describe this problem in one of the pioneering works on Tangible User Interfaces (TUI), but it is still an open issue, as described in Leithinger et al. (2013), especially when the mixed world is multisensory. Hence, when building mixed prototypes, how to faithfully combine the virtual and real components is an aspect that should not be underestimated.
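The consistency requirement between the real and virtual worlds can be illustrated with a deliberately simple Python sketch: each named quantity has a real (tracked) value and a virtual (simulated) value, and after every user action the side that was acted on is propagated to the other. This is a hypothetical illustration, not the authors’ implementation nor the mechanism of any TUI system; it uses a naive "the side the user touched wins" rule and ignores latency, sensor noise and simultaneous changes, which is exactly where the open issues mentioned above lie.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class MixedWorld:
    """Hypothetical mixed prototype: each named quantity (e.g. a handle angle)
    has a real, tracked value and a virtual, simulated value that must agree."""
    real: Dict[str, float] = field(default_factory=dict)
    virtual: Dict[str, float] = field(default_factory=dict)
    tolerance: float = 1e-3

    def sync(self, source: str) -> Dict[str, float]:
        """Propagate the values of the side the user just acted on ("real" or
        "virtual") to the other side and return what was updated."""
        if source not in ("real", "virtual"):
            raise ValueError("source must be 'real' or 'virtual'")
        src, dst = (self.real, self.virtual) if source == "real" else (self.virtual, self.real)
        updated: Dict[str, float] = {}
        for name, value in src.items():
            # A missing or diverging counterpart means the two worlds are out of step.
            if name not in dst or abs(dst[name] - value) > self.tolerance:
                # Real -> virtual: update the simulation state.
                # Virtual -> real: in a physical set-up this would be a command to an
                # actuator (e.g. a haptic device); here it is only a stored target value.
                dst[name] = value
                updated[name] = value
        return updated
```

For example, after a tracker reports that the real handle moved to 12 degrees, `world.sync("real")` copies that value into the virtual state; a second call returns an empty dictionary because the two worlds already agree.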

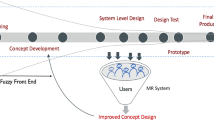

A framework to guide the design of multisensory testing scenarios

On the basis of the requirements set out in the “Prototypes for product experience design” section, the prototyping modalities currently available and the modalities through which humans perceive the external world (“Prototyping strategies: real, virtual and mixed” section), we have drawn the map reported in Fig. 4. This map shows the combinations of prototypes we can build for multisensory product experience design: real, virtual and mixed. It recalls the three elements at the basis of the design/engineering problem (see “Introduction” section): the user, the product and the context of use. Depending on how each element is rendered, we can build different kinds of multisensory testing scenarios. This framework is used below to suggest when and why a specific prototyping strategy should be preferred to the others.

The user experiencing framework: the real human can interact with a real, virtual or mixed prototype in a real, virtual or mixed context of use. The framework is based on the continuum described in (Milgram and Kishino 1994)

A real testing scenario consists of a human interacting with a physical prototype within a real context. As said in the “Prototyping strategies: real, virtual and mixed” section, the testing scenario is faithful only if both the context of use and the prototype itself are identical, or very close, to the real (final) ones. If that is the case, we are almost at the end of the development phase: modifications are no longer feasible with reasonable effort in terms of cost and time. If an unsatisfactory multisensory experience between the user and the prototype is measured, there is no longer room for substantial changes. These high-fidelity prototypes are effectively used for field tests, which are testing activities driven by purposes completely different from those of experience design. Hence, the use of a physical prototype is suggested when: the company can easily afford the prototyping costs; high fidelity is a must-have requirement; or the new product implies small changes to an existing one whose prototype is already available.

In a virtual testing scenario both the product and the context of use are reproduced within a VR environment. Virtual simulations can be controlled over time and parametrised, but the faithfulness of the perceived experience is strongly influenced by the technical limits of the input/output interfaces used to build the prototype and the context. Hence, this testing scenario can be used to roughly explore different solutions at the very beginning of the design process. Even if the level of faithfulness is low (as it is for lo-fi physical prototypes), an effective exploration is nevertheless possible thanks to the variables that can be controlled within a simulated environment.

There are also intermediate testing scenarios. They are built when one of the following two situations occurs: the product prototype is virtual, or a mix of virtual and physical information, and the context is real; or the product prototype is real but the context is virtual or a mix of virtual and real information. In both cases we have a mixed testing scenario. Such a scenario can be useful at the beginning as well as in more advanced stages of the design process. For example, we can have a mixed prototype built from both digital and physical parts, where the digital parts are those that need to be changed. The mixed prototype is placed within a virtual scenario if we also need to evaluate the effects of different contexts of use. If the product has only one context of use, or the target context has already been defined, there is no need to prototype it: we can use a real one.

To conclude this section, we point out that the more we move towards the fully virtual testing scenario, the larger the number of design variables we can control (from almost none in the real testing scenario to several in the fully virtual one). This is reinforced by a further aspect: since in principle each sensory simulation is independent of the others, we can test the individual contribution of each sensory stimulus to the whole product experience. For example, sounds can be varied independently of forces or shapes, and so on. This enables engineers to explore the design space more deeply. Indeed, even if a multisensory experience is by definition holistic, to optimise it during the design phase we can tune each individual contribution separately, as explained in the next section. For example, we can analyse the quality of the experience perceived when closing a door, separating the contribution of the force needed to close it (i.e. the kinaesthetic stimulus) from that of the sound produced (i.e. the sound stimulus).
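Because the sensory stimuli of a virtual prototype are independent parameters, the variants to show users can be generated systematically. The Python sketch below builds a full-factorial set of door-closing variants from two independently controlled stimuli and then isolates the kinaesthetic contribution by holding the sound fixed. The factor names and levels are hypothetical placeholders, not values from our case studies, and a full-factorial design is just one simple way of organising such tests.

```python
from itertools import product
from typing import Dict, List, Sequence


def full_factorial(factors: Dict[str, Sequence]) -> List[Dict[str, object]]:
    """Return every combination of factor levels (a full-factorial design)."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*(factors[n] for n in names))]


# Hypothetical levels for the two stimuli of a door-closing interaction.
door_closing_factors = {
    "closing_force_N": [10.0, 20.0, 30.0],            # kinaesthetic stimulus
    "closing_sound": ["soft thud", "metallic click"],  # sound stimulus
}

variants = full_factorial(door_closing_factors)

# Holding the sound fixed isolates the contribution of the force alone.
force_only = [v for v in variants if v["closing_sound"] == "soft thud"]
```

Here `variants` contains six combinations and `force_only` the three that differ only in closing force. In a virtual prototype each variant is a change of parameters rather than a new physical build.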

Put the framework into practice: examples of testing scenarios for the experience design of appliances interfaces

Once we have established what kind of testing scenario we need to build, we can start the prototyping activity. As already underlined, this activity should be carried out keeping in mind three main aspects: which technologies or physical parts are needed to render the multisensory cues (i.e. which input/output interfaces); how to control the behaviour of these interfaces so as to make them adjustable on users’ request (we need to test variants); and how to transform users’ preferences into design specifications, so that the results of the testing activity can be used as input for the subsequent engineering phases.
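For the third aspect, one simple way to turn tuning sessions into a specification is to collect the parameter value each user settled on and summarise it statistically. The Python sketch below takes the median as the target and the inter-quartile range as an acceptance band. This aggregation rule is our illustrative assumption, not a method prescribed in the case studies, and the parameter name and values are hypothetical.

```python
import statistics
from dataclasses import dataclass
from typing import List


@dataclass
class Spec:
    parameter: str
    target: float
    lower: float
    upper: float


def preferences_to_spec(parameter: str, preferred: List[float]) -> Spec:
    """Summarise the values users selected while tuning a prototype as a target
    value (median) with an acceptance band (inter-quartile range)."""
    if len(preferred) < 2:
        raise ValueError("need at least two user preferences")
    # With n=4 the three cut points are Q1, the median and Q3.
    q1, median, q3 = statistics.quantiles(preferred, n=4, method="inclusive")
    return Spec(parameter, target=median, lower=q1, upper=q3)


if __name__ == "__main__":
    # Hypothetical opening forces (N) preferred by five test users.
    print(preferences_to_spec("opening_force_N", [24.0, 18.0, 22.0, 26.0, 20.0]))
```

With the five hypothetical preferences above, the resulting specification has a target of 22 N and a band from 20 N to 24 N. A median-based summary is less sensitive to a single atypical user than a mean.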

In the following, we use case studies available in the literature (some of which we have developed over the years) to demonstrate how each aspect can be put into practice (taking into account the suggestions already provided in the “A framework to guide the design of multisensory testing scenarios” section) and how closely the aspects are interrelated. In doing so, we concentrate on VR/MR prototypes, which are the focus of the paper.

Prototyping multisensory concepts: defining the output/input interfaces

As pointed out in the “Prototypes for product experience design” section, prototypes must be multisensory. All the senses are usually involved in the product experience, even if in some cases we are not aware of it. How, then, can the prototyping activity take all the sensory modalities into account?

In a redesign activity, the first thing to do is to observe how the user interacts with the existing product, and then identify which senses are involved in the interaction and how. If the aim is to design a completely new product, the designer should first state what kind of multisensory interaction she needs to implement in the product (e.g. a highly automated product would need audio and visual feedback to inform the user). Then, in both cases, building the simulations can start, taking into account all the senses and thus the input/output interfaces needed. The main requirements for these simulations are that they must allow the designer to vary the sensory feedback independently and that they should guarantee a faithful experience.

Figures 5, 6 and 7 show three examples of virtual and mixed prototyping activities we have developed to design the user’s experience of the door of a refrigerator and of a dishwasher. In all these cases the context is only virtual and is reproduced for the sense of vision only. For the fridge case study reported in Fig. 5, the visual simulation of both the context and the product (i.e. a kitchen and a fridge) was performed using the Unity3D environment (www.unity3d.com). Sound is rendered through Unity3D and the haptic interface is controlled via MiddleVR for Unity (http://www.middlevr.com). MiddleVR is also used to adapt the prototype’s position and orientation in real time to the user’s point of view, through an optical tracking system.

Fig. 5 The multisensory VP of a refrigerator door. The example is similar to the one described in Ferrise et al. (2010), except for the object under analysis (here a refrigerator instead of a washing machine) and for the simulation of the context of use. The simulation generates three stimuli: visual, through a rear-projected display (1), a tracking system (2) and a pair of tracked glasses (3); haptic, rendered through a 6DOF device (4); sound, generated through speakers (5)

Fig. 6 The multisensory MP of a refrigerator door, as described in Ferrise et al. (2013). A rapid prototype of the door handle (6) is attached to the device to improve the overall haptic feedback. The simulation generates three stimuli: visual, through a rear-projected display (1), a tracking system (2) and a pair of tracked glasses (3); haptic, rendered through a 3DOF device (4) and a rapid prototype (6); sound, generated through speakers (5)

Fig. 7 The multisensory MP of a dishwasher door, as described in Phillips Furtado et al. (2013) and Graziosi et al. (2014). To improve the haptic feedback, a real handle (6) is attached to the haptic device, which reproduces different parametric haptic models. The simulation generates three stimuli: visual, through a rear-projected display (1), a tracking system (2) and a pair of tracked glasses (3); haptic, rendered through a 3DOF device (4) and a real handle (6); sound, generated through speakers (5)

The examples shown in Figs. 6 and 7 were developed using the H3DAPI development environment (www.h3dapi.org) and use a different haptic interface. Since we were interested in optimising the force needed (i.e. the kinaesthetic stimulus) to open and close the door, we used a 3DOF MOOG-HapticMaster (www.moog.com/products/haptics-robotics/) instead of the 6DOF Haption Virtuose interface (www.haption.com), because the former can render higher forces. Furthermore, we created a rapid prototype of the fridge handle (see Fig. 6). The decision to use this rapid prototype followed from the results of previous research activities described in Ferrise et al. (2010), Bordegoni and Ferrise (2013) and Ferrise et al. (2013). These studies detail how to virtualise human interaction with a virtual prototype of a washing machine. The users, who were asked to compare the simulation of the washing machine with the real one, reported the following limitation: the haptic feedback is influenced by the shape of the handle of the haptic device, which differs from the handle of the real washing machine. Hence, we decided to replace the handle of the haptic device with a rapid prototype having the same geometry as the handle of the product.

In the case study summarised in Fig. 5, we focused on exploring different opening/closing modalities of the fridge door and its internal drawers. For this reason, since the faithfulness of the force feedback was not a requirement (unlike in the case study of Fig. 6), we used the 6DOF Haption Virtuose general-purpose haptic device. The dishwasher example in Fig. 7 is similar to the fridge one in Fig. 6. However, in this case, instead of a rapid prototype of the handle we used the real interface of the product, which ensured that the tactile feedback perceived on the user’s hand was realistic.

As a further example, Bordegoni (2011) describes a simulation of, again, a washing machine, but based on a different approach. Here both touch and vision are addressed: haptic and visual feedback are obtained by combining real and virtual information. A physical mockup of the washing machine is assembled from lo-fi components and a rapid prototype of the shape of the physical interface where the knobs and buttons are usually positioned. It is important that this interface is reproduced faithfully, because it is what the user touches when interacting with the knob. The knob is controlled by a haptic interface, so its behaviour can change in real time. The visual appearance of the washing machine is superimposed onto the mockup in mixed reality: the user sees the virtual washing machine and the real surrounding environment.

All these examples demonstrate how wide the range of testing scenarios offered by VR/MR prototypes is, and illustrate the reasoning behind the selection of the interfaces needed to render a faithful interaction. All these aspects have to be tuned according to the objectives of the testing and engineering activities.

Exploring design alternatives through parametrisation of the interfaces behaviour

One of the purposes of prototypes is to enable the engineer to explore the design space: the user can test different product variants and can ask for real-time modifications. With VR/MR prototypes, this can be done by parameterising the simulation.

When simulating a phenomenon in VR for prototyping purposes, two approaches can be used, which we will call physical and sensory. The idea is similar to the one described in Gallace et al. (2012), with some differences. Gallace et al. (2012) describe how to design effective multisensory simulations by concentrating on the way the user perceives the simulation. They analyse how the simulation can be affected when some senses are not involved, and present practical examples of how to overcome these limitations through what they call neurally-inspired VR, i.e. creating simulations based on the principles by which the human brain processes sensory information, rather than concentrating on reproducing the characteristics of the physical stimuli.

We are interested both in giving the user a faithful representation of the experiencing and in prototyping the sensory features responsible for it (as discussed in the “Prototyping multisensory concepts: defining the output/input interfaces” section). To clarify the meaning of physical and sensory simulations, we will use a simple example. Let us simulate what the user feels on his hand when rotating a knob, together with the sound produced. This is a multisensory simulation involving vision, touch and hearing at the same time; for simplicity, we will concentrate on touch and hearing. We can simulate the physical phenomenon underlying the knob rotation, which in the simplest case is friction, a well-known physical phenomenon that can be simulated in real time. By simulating friction (stick-slip), we can derive both the torque to be rendered on the user’s hand through a specific haptic interface and the sound produced. Real-time simulations of friction sounds are described in Avanzini et al. (2005). Alternatively, we can define a generic torque profile and a set of sampled sounds and play them back. As the physical phenomenon increases in complexity (for example, if we add some clicks to the knob), the first approach tends to become more complicated while the second does not. At some point, the physical model can no longer be simulated in real time, and simplifications become necessary. The main difference between the two approaches is that in the first we simulate the physical phenomenon, while in the second we simulate only what the user will perceive of it through his sensory modalities. A similar distinction exists in the field of sonic interaction design (Rocchesso and Serafin 2009), where sounds are divided into synthesised and sampled: the former reproduce physical phenomena, while the latter play back sampled sounds interactively. In physical simulations, touch and sound are coupled: if we change the materials, the sounds produced and the forces returned vary accordingly. In sensory simulations, the feedback channels are independent of each other and can be freely combined; for example, we can associate different sounds with the same force feedback.
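To make the physical/sensory distinction concrete, the following Python sketch contrasts the two approaches for the knob example. It is a minimal illustration under stated assumptions, not the simulation used in our case studies: the Coulomb stick-slip model, the normal force and radius values, the use of dissipated frictional power as a stand-in for sound level, and the names `physical_knob`, `SensoryKnob` and the sample file names are all hypothetical. In the physical version a single material parameter drives both torque and sound; in the sensory version the torque profile and the sound sample are independent fields.

```python
import math
from dataclasses import dataclass


@dataclass
class Material:
    """Hypothetical contact material for the physical approach."""
    mu_static: float   # static friction coefficient
    mu_kinetic: float  # kinetic friction coefficient


def physical_knob(omega, applied_torque, material,
                  normal_force=2.0, radius=0.01, eps=1e-3):
    """Physical approach: Coulomb stick-slip friction at the knob shaft.

    Returns (friction torque in N*m, crude sound level). Both outputs come
    from the same model, so changing the material changes both channels.
    """
    static_limit = material.mu_static * normal_force * radius
    if abs(omega) < eps and abs(applied_torque) <= static_limit:
        # Stick: friction balances the applied torque; no sliding, no sound.
        return -applied_torque, 0.0
    kinetic = material.mu_kinetic * normal_force * radius
    # Slip: kinetic friction opposes the motion (or the impending motion).
    direction = math.copysign(1.0, omega if abs(omega) >= eps else applied_torque)
    torque = -kinetic * direction
    # Placeholder sound level: dissipated frictional power.
    sound_level = kinetic * abs(omega)
    return torque, sound_level


@dataclass
class SensoryKnob:
    """Sensory approach: authored torque profile and sampled sound, set independently."""
    detents: int         # clicks per revolution
    peak_torque: float   # N*m, amplitude of the authored detent profile
    sound_sample: str    # hypothetical pre-recorded click sample

    def torque(self, angle):
        # Sinusoidal detent profile with `detents` periods per revolution.
        return -self.peak_torque * math.sin(self.detents * angle)

    def sound(self, prev_angle, angle):
        # Trigger the sample whenever the knob crosses a detent boundary.
        step = 2 * math.pi / self.detents
        if math.floor(prev_angle / step) != math.floor(angle / step):
            return self.sound_sample
        return None
```

Swapping `sound_sample` leaves `torque` untouched, which is the independence that makes sensory simulations convenient for exploring combinations; changing `mu_kinetic` in the physical version instead changes torque and sound together. Adding clicks to `physical_knob` would require modelling the detent mechanism itself, whereas `SensoryKnob` only needs a different profile.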

When focusing on the design of product experiencing, designers might be interested in exploring different alternatives without concentrating on the physical phenomena underlying them; in this case the second approach might be preferred to the first. For example, Bordegoni et al. (2011) and Ferrise et al. (2013) describe virtual/mixed prototypes based on sensory simulations of door feedback, while Strolz et al. (2011) and Shin et al. (2012) use haptic devices that implement the physical approach to simulate car and appliance doors, respectively. As noted, depending on the purpose of the simulation, the physical approach might be preferred to the sensory one, and vice versa.

It follows that there are two parameterisation strategies for creating product variants: parameterising the physical phenomena, or parameterising the final perception through the sensory simulations. In our case the second strategy is preferred. Programmable interfaces make it possible to modify their characteristics in order to generate different sensory stimuli. For example, the behaviour of commercially available haptic devices can be controlled over time and in real time (an example is discussed in the next section). The same can be done for sound stimuli using the Unity3D environment. For vision (though not only for this stimulus), as already mentioned, both Unity3D and H3DAPI offer a wide range of possibilities, where visual cues can be mixed with sound cues and correlated with haptic stimuli (as done in the case study reported in Fig. 5).
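A sensory parameterisation of this kind can be sketched as a parameter set that the haptic loop reads at every step and that the experimenter can replace while the simulation is running. The Python sketch below is hypothetical: the door model (linear stiffness, viscous damping and a latch region near the closed position), the parameter names and values, and the classes `DoorFeel` and `ParametricDoor` are assumptions for illustration and do not reproduce the API of any haptic toolkit such as H3DAPI. A lock ensures that the haptic thread always reads one complete, consistent parameter set.

```python
import threading
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DoorFeel:
    """Hypothetical parameters of a sensory door simulation."""
    stiffness: float = 5.0      # N/rad, resistance growing with opening angle
    damping: float = 2.0        # N*s/rad, viscous resistance
    latch_force: float = 15.0   # N, extra resistance near the closed position
    latch_width: float = 0.05   # rad, angular range of the latch region
    sound_sample: str = "thud_soft.wav"  # closing sound, independent of forces


class ParametricDoor:
    """Holds the current DoorFeel; the UI thread swaps it, the haptic loop reads it."""

    def __init__(self, feel):
        self._feel = feel
        self._lock = threading.Lock()

    def update(self, **changes):
        # Called when the user asks for a variant: build a new immutable set.
        with self._lock:
            self._feel = replace(self._feel, **changes)

    def snapshot(self):
        # Return the parameter set currently in use.
        with self._lock:
            return self._feel

    def force(self, angle, velocity):
        # Called at haptic rate on every step; uses one consistent snapshot.
        f = self.snapshot()
        resistance = f.stiffness * angle + f.damping * velocity
        if 0.0 <= angle < f.latch_width:
            resistance += f.latch_force
        return -resistance  # force opposes the opening motion
```

Because each update swaps in a whole new immutable `DoorFeel`, a request such as `door.update(stiffness=10.0)` takes effect at the next haptic step, while changing `sound_sample` leaves the rendered force untouched.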

From simulations to design specifications

Simulations, especially purely digital ones, can produce outputs that are not physically reproducible. When sensory simulations are combined independently, a question arises: we can quite easily decouple the sound produced by a slamming door from its force feedback, and we can record the experiencing the user likes most. How, then, can we extract design specifications for real products from the simulation? This is a reverse engineering problem, from simulation to design specifications.

Examples in the literature exist for the sense of hearing (Van der Auweraer et al. 1997). In Phillips Furtado et al. (2013) and Graziosi et al. (2013, 2014), we described a prototype and a dedicated approach to quantify users’ preferences and transform them into design specifications. To reach this objective, an MP of the product to be redesigned (in that case a dishwasher) is created within a virtual context (see Fig. 7). The issue here is to investigate the overall experiencing of opening and closing the dishwasher door.

In this example the behaviour of the haptic device (i.e. the behaviour of the door) is controlled through parameters, so that the user can test different opening/closing modalities within a single simulation. The parameterisation is created using the software that controls the dynamics of the device (see “Exploring design alternatives through parametrisation of the interfaces behaviour” section). Because the simulation is parametric, this behaviour can also be adapted on the user’s request: the user can ask for changes to the haptic feedback of the door while the other two sensory feedback channels (visual and sound) are kept fixed. Through a dedicated experimental campaign with users, their preferences are captured and translated into design specifications by means of optimisation algorithms. Specifically, the parameter values retrieved from the experimental tests are used as input for solving the equations of a physically-based model of the opening/closing system of the dishwasher, previously created and verified using the LMS-AMESim software (www.lmsintl.com). Designers can choose which component or technical parameter of the door opening system (e.g. the spring, the friction) needs to be optimised, so that the final product returns the same haptic behaviour as the simulation. In the same way, in the case of multisensory optimisation (e.g. a specific sound plus a specific force), different physical components can be selected for optimisation in order to generate the desired sensory feedback.
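The reverse-engineering step can be illustrated, in a much simplified form, as a least-squares fit. Assume, hypothetically, that the resisting torque of the door is modelled as τ(θ) = kθ + c·s − m g l sin θ, where k is a spring stiffness, c a friction torque, s = ±1 the direction of motion, and the gravity term is known from the door mass m and lever arm l. Given the torque profile the user preferred in the simulation, the sketch below recovers k and c by solving the 2×2 normal equations. It stands in for, and is far simpler than, the AMESim-based optimisation described above; the model, the function name `fit_door_components` and any sample values are illustrative assumptions.

```python
import math


def fit_door_components(angles, directions, target_torque, mass, lever, g=9.81):
    """Fit spring stiffness k and friction torque c of a hypothetical door model.

    Model: tau(theta) = k*theta + c*s - mass*g*lever*sin(theta), with s = +1
    while opening and -1 while closing. The model is linear in (k, c), so the
    least-squares solution follows from the 2x2 normal equations.
    """
    sxx = sxs = sss = sxy = ssy = 0.0
    for theta, s, tau in zip(angles, directions, target_torque):
        # Move the known gravity term to the target side: y = k*theta + c*s.
        y = tau + mass * g * lever * math.sin(theta)
        sxx += theta * theta
        sxs += theta * s
        sss += s * s
        sxy += theta * y
        ssy += s * y
    det = sxx * sss - sxs * sxs
    if abs(det) < 1e-12:
        raise ValueError("samples do not constrain both k and c")
    # Cramer's rule on [[sxx, sxs], [sxs, sss]] @ [k, c] = [sxy, ssy].
    k = (sxy * sss - sxs * ssy) / det
    c = (sxx * ssy - sxs * sxy) / det
    return k, c
```

With more components (e.g. a nonlinear spring or a damper) the model is no longer linear in its parameters, and a general-purpose optimiser would be needed instead of the closed-form normal equations.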

Conclusions

This paper describes the main aspects underlying the design and optimisation of the multisensory experience of products. It analyses the possibilities offered by VR-based technologies to support engineers in prototyping, testing and designing worthwhile experiences (Hassenzahl 2010a). The discussion starts from the analysis of the main characteristics that a prototype built for such purposes should have: it should be multisensory and modifiable in real time. Moreover, where needed, not only the product under analysis but also its context of use should be part of the prototyping activity. This discussion is grounded in the main contributions in the field available in the literature (Hassenzahl 2010a; Schifferstein and Hekkert 2011; Forlizzi and Battarbee 2004; Hassenzahl and Tractinsky 2006; Buchenau and Suri 2000; Spence and Gallace 2011; Gallace and Spence 2013).

The discussion underlines how virtual and mixed prototyping strategies are becoming a valid alternative to physical prototypes for this kind of design problem. To date, a mixed prototyping strategy seems to be the most promising one, since it can overcome the limitations of both virtual and physical prototypes. When VR technologies cannot guarantee the faithfulness of the perceived multisensory experience because of the technical limits of the input/output interfaces used, adding real components is the solution. Vice versa, when the real world does not allow real-time modification of the behaviour and appearance of the prototype, virtual simulations can be of great help. The issue is how to properly combine the real and virtual representations within a single prototype. This is feasible, as demonstrated by the examples and case studies reported throughout the discussion.

Admittedly, some scepticism about the use of VR-based technologies persists in industry, owing to their undeniable technical limitations, especially concerning the interfaces for sensory modalities such as touch, olfaction and taste. However, the scientific community is investing considerable effort in overcoming them through different strategies: on the one hand, by better understanding the principles underlying the human perceptual system and exploiting them in simulations (Gallace et al. 2012); on the other, by improving the integration of the virtual and physical worlds in order to limit the amount of information that must be simulated virtually, thereby preserving as far as possible the faithfulness of the multisensory interaction. These are good premises for considering Experience Virtual or Mixed Prototypes as effective tools for supporting engineers in the design and optimisation of the multisensory experience of products.

References

Avanzini, F., Serafin, S., & Rocchesso, D. (2005). Interactive simulation of rigid body interaction with friction-induced sound generation. IEEE Transactions on Speech and Audio Processing, 13(5), 1073–1081. doi:10.1109/TSA.2005.852984.

Azuma, R., Baillot, Y., Behringer, R., Feiner, S., Julier, S., & MacIntyre, B. (2001). Recent advances in augmented reality. IEEE Computer Graphics and Applications, 21(6), 34–47. doi:10.1109/38.963459.

Azuma, R. T. (1997). A survey of augmented reality. Presence, 6, 355–385.

Bederson, B. B. (1995). Audio augmented reality: A prototype automated tour guide. In Conference companion on human factors in computing systems, ACM, New York, NY, USA, CHI ’95, pp. 210–211. doi:10.1145/223355.223526.

Bordegoni, M. (2011). Product virtualization: An effective method for the evaluation of concept design of new products. Innovation in product design (pp. 117–141). London: Springer.

Bordegoni, M., & Ferrise, F. (2013). Designing interaction with consumer products in a multisensory virtual reality environment. Virtual and Physical Prototyping, 8(1), 51–64. doi:10.1080/17452759.2012.762612.

Bordegoni, M., & Rizzi, C. (Eds.) (2011). Innovation in product design: From CAD to virtual prototyping. Berlin: Springer.

Bordegoni, M., Cugini, U., Caruso, G., & Polistina, S. (2009). Mixed prototyping for product assessment: A reference framework. International Journal on Interactive Design and Manufacturing, 3, 177–187.

Bordegoni, M., Ferrise, F., & Lizaranzu, J. (2011). Use of interactive virtual prototypes to define product design specifications: A pilot study on consumer products. In VR innovation (ISVRI), 2011 IEEE international symposium on (pp. 11–18).

Buchenau, M., & Suri, J. F. (2000). Experience prototyping. In Proceedings of the 3rd conference on designing interactive systems: Processes, practices, methods, and techniques (pp. 424–433). ACM.

Burdea, G. C., & Coiffet, P. (2003). Virtual reality technology (2nd ed.). New Jersey: Wiley-IEEE Press.

Chua, C. K., Leong, K. F., & Lim, C. S. (2010). Rapid prototyping: Principles and applications. Singapore: World Scientific.

Desmet, P. M. A., & Hekkert, P. (2007). Framework of product experience. International Journal of Design, 1(1), 57–66.

DIS, I. (2009). 9241-210:2010. Ergonomics of human-system interaction, Part 210: Human-centred design for interactive systems. Switzerland: International Organization for Standardization (ISO).

Farnell, A. (2010). Designing sound. Cambridge: MIT Press.

Ferrise, F., Bordegoni, M., & Lizaranzu, J. (2010). Product design review application based on a vision-sound-haptic interface. In R. Nordahl, S. Serafin, F. Fontana & S. Brewster (Eds.), Haptic and audio interaction design, Lecture Notes in Computer Science (Vol. 6306, pp. 169–178). Berlin, Heidelberg: Springer.

Ferrise, F., Bordegoni, M., & Cugini, U. (2013a). Interactive virtual prototypes for testing the interaction with new products. Computer-Aided Design and Applications, 10(3), 515–525.

Ferrise, F., Bordegoni, M., & Graziosi, S. (2013b). A method for designing users’ experience with industrial products based on a multimodal environment and mixed prototypes. Computer-Aided Design and Applications, 10(3), 461–474.

Forlizzi, J., & Battarbee, K. (2004). Understanding experience in interactive systems. In Proceedings of the 5th conference on designing interactive systems: Processes, practices, methods, and techniques (pp. 261–268). ACM.

Gallace, A., & Spence, C. (2013). In touch with the future. Oxford: Oxford University Press.

Gallace, A., Ngo, M. K., Sulaitis, J., & Spence, C. (2012). Multisensory presence in virtual reality: Possibilities & limitations. In G. Ghinea, F. Andres, & S. Gulliver (Eds.), Multiple sensorial media advances and applications: New developments in MulSeMedia (pp. 1–38). Hershey, PA: Information Science Reference. doi:10.4018/978-1-60960-821-7.ch001.

Graziosi, S., Ferrise, F., Bordegoni, M., Ozbey, O., et al. (2013). A method for capturing and translating qualitative user experience into design specifications: The haptic feedback of appliance interfaces. In DS 75-7: Proceedings of the 19th international conference on engineering design (ICED13), design for harmonies, Vol. 7: Human behaviour in design, Seoul, Korea, 19-22.08.

Graziosi, S., Ferrise, F., Phillips Furtado, G., & Bordegoni, M. (2014). Reverse engineering of interactive mechanical interfaces for product experience design. Virtual and Physical Prototyping, 9(2), 65–79.

Grunwald, M. (Ed.). (2008). Human haptic perception: Basics and applications (1st ed.). Basel, Boston, Berlin: Birkhäuser Basel.

Hainich, R. R., & Bimber, O. (2011). Displays: Fundamentals and applications. Boca Raton: A K Peters/CRC Press.

Hassenzahl, M. (2005). The thing and I: Understanding the relationship between user and product. In Funology (pp. 31–42). Netherlands: Springer.

Hassenzahl, M. (2010a). Experience design: Technology for all the right reasons. Synthesis Lectures on Human-Centered Informatics, 3(1), 1–95.

Hassenzahl, M. (2010b). Experiencing and experience. Interview–Generalist 4, 2010 issue on “Use and Habit”.

Hassenzahl, M. (2014). User experience and experience design. In M. Soegaard & R. F. Dam (Eds.), The encyclopedia of human-computer interaction (2nd ed.). Aarhus, Denmark: The Interaction Design Foundation.

Hassenzahl, M., & Tractinsky, N. (2006). User experience – a research agenda. Behaviour & Information Technology, 25(2), 91–97.

Hassenzahl, M., Eckoldt, K., Diefenbach, S., Laschke, M., Lenz, E., & Kim, J. (2013). Designing moments of meaning and pleasure. Experience design and happiness. International Journal of Design, 7(3), 21–31.

Hayward, V., Ashley, O., Hernandez, M. C., Grant, D., & Robles-De-La-Torre, G. (2004). Haptic interfaces and devices. Sensor Review, 24(1), 16–29.

Ishii, H., & Ullmer, B. (1997). Tangible bits: Towards seamless interfaces between people, bits and atoms. In Proceedings of the ACM SIGCHI conference on human factors in computing systems, ACM, New York, NY, USA, CHI ’97, pp. 234–241. doi:10.1145/258549.258715.

Jeon, S., & Choi, S. (2009). Haptic augmented reality: Taxonomy and an example of stiffness modulation. Presence: Teleoperators and Virtual Environments, 18(5), 387–408. doi:10.1162/pres.18.5.387.

Klatzky, R., & Peck, J. (2012). Please touch: Object properties that invite touch. IEEE Transactions on Haptics, 5(2), 139–147. doi:10.1109/TOH.2011.54.

Krishna, A., Lwin, M. O., & Morrin, M. (2010). Product scent and memory. Journal of Consumer Research, 37(1), 57–67.

Langeveld, L., van Egmond, R., Jansen, R., & Özcan, E. (2013). Product sound design: Intentional and consequential sounds. In D. Coelho (Ed.), Advances in industrial design engineering. http://www.intechopen.com/books/advances-in-industrial-design-engineering/product-sound-design-intentional-and-consequential-sounds.

Leithinger, D., Follmer, S., Olwal, A., Luescher, S., Hogge, A., Lee, J., & Ishii, H. (2013). Sublimate: State-changing virtual and physical rendering to augment interaction with shape displays. In Proceedings of the SIGCHI conference on human factors in computing systems, ACM, New York, NY, USA, CHI ’13, pp. 1441–1450. doi:10.1145/2470654.2466191.

Milgram, P., & Kishino, F. (1994). A taxonomy of mixed reality visual displays. IEICE Transactions on Information and Systems, E77–D(12), 1321–1329.

Mugge, R., & Schoormans, J. P. (2012). Newer is better! The influence of a novel appearance on the perceived performance quality of products. Journal of Engineering Design, 23(6), 469–484.

Mynatt, E. D., Back, M., Want, R., & Frederick, R. (1997). Audio aura: Light-weight audio augmented reality. In Proceedings of the 10th annual ACM symposium on user interface software and technology, ACM, New York, NY, USA, UIST ’97, pp. 211–212. doi:10.1145/263407.264218.

Nakamoto, T. (Ed.) (2013). Human olfactory displays and interfaces: Odor sensing and presentation. Hershey: IGI Global.

Narumi, T., Nishizaka, S., Kajinami, T., Tanikawa, T., & Hirose, M. (2011). Augmented reality flavors: Gustatory display based on edible marker and cross-modal interaction. In Proceedings of the SIGCHI conference on human factors in computing systems, ACM, New York, NY, USA, CHI ’11, pp. 93–102. doi:10.1145/1978942.1978957.

Nojima, T., Sekiguchi, D., Inami, M., & Tachi, S. (2002). The SmartTool: A system for augmented reality of haptics. In Virtual reality, 2002. Proceedings (pp. 67–72). IEEE. doi:10.1109/VR.2002.996506.

Norman, D. (2004). Emotional design: Why we love (or hate) everyday things. New York: Basic Civitas Books.

Ottosson, S. (2002). Virtual reality in the product development process. Journal of Engineering Design, 13(2), 159–172.

Phillips Furtado, G., Ferrise, F., Graziosi, S., & Bordegoni, M. (2013). Optimization of the force feedback of a dishwasher door putting the human in the design loop. In A. Chakrabarti & R. V. Prakash (Eds.), ICoRD’13, Lecture Notes in Mechanical Engineering (pp. 939–950). India: Springer.

Pine, B. J., & Gilmore, J. H. (2011). The experience economy. Boston: Harvard Business Press.

Rocchesso, D., & Serafin, S. (2009). Sonic interaction design. International Journal of Human-Computer Studies, 67(11), 905–906.

Schifferstein, H. N., & Hekkert, P. (2011). Product experience. Amsterdam: Elsevier.

Schmitt, B. (1999). Experiential marketing. Journal of Marketing Management, 15(1–3), 53–67.

Shin, S., Lee, I., Lee, H., Han, G., Hong, K., Yim, S., et al. (2012). Haptic simulation of refrigerator door. In Haptics Symposium (HAPTICS) (pp. 147–154). IEEE. doi:10.1109/HAPTIC.2012.6183783.

Spence, C., & Gallace, A. (2011). Multisensory design: Reaching out to touch the consumer. Psychology and Marketing, 28(3), 267–308. doi:10.1002/mar.20392.

Spence, C., & Zampini, M. (2006). Auditory contributions to multisensory product perception. Acta Acustica united with Acustica, 92(6), 1009–1025. http://www.ingentaconnect.com/content/dav/aaua/2006/00000092/00000006/art00020.

Spence, C., Puccinelli, N. M., Grewal, D., & Roggeveen, A. L. (2014). Store atmospherics: A multisensory perspective. Psychology & Marketing, 31(7), 472–488. doi:10.1002/mar.20709.

Strolz, M., Groten, R., Peer, A., & Buss, M. (2011). Development and evaluation of a device for the haptic rendering of rotatory car doors. IEEE Transactions on Industrial Electronics, 58(8), 3133–3140. doi:10.1109/TIE.2010.2087292.

Van der Auweraer, H., Wyckaert, K., & Hendricx, W. (1997). From sound quality to the engineering of solutions for NVH problems: Case studies. Acta Acustica united with Acustica, 83(5), 796–804.

Van Krevelen, D., & Poelman, R. (2010). A survey of augmented reality technologies, applications and limitations. International Journal of Virtual Reality, 9(2), 1.

Wang, G. G. (2002). Definition and review of virtual prototyping. Journal of Computing and Information Science in Engineering, 2(3), 232–236. doi:10.1115/1.1526508. http://link.aip.org/link/?CIS/2/232/1.

Zorriassatine, F., Wykes, C., Parkin, R., & Gindy, N. (2003). A survey of virtual prototyping techniques for mechanical product development. Proceedings of the Institution of Mechanical Engineers, Part B: Journal of Engineering Manufacture, 217(4), 513–530.

About this article

Cite this article

Ferrise, F., Graziosi, S. & Bordegoni, M. Prototyping strategies for multisensory product experience engineering. J Intell Manuf 28, 1695–1707 (2017). https://doi.org/10.1007/s10845-015-1163-0

DOI: https://doi.org/10.1007/s10845-015-1163-0