Abstract

Nonlinear biophysical properties of individual neurons are known to play a major role in the nervous system, especially those active at subthreshold membrane potentials that integrate synaptic inputs during action potential initiation. Previous electrophysiological studies have made use of a piecewise linear characterization of voltage clamped neurons, which consists of a sequence of linear admittances computed at different voltage levels. In this paper, a fundamentally new theory is developed in two stages. First, analytical equations are derived for a multi-sinusoidal voltage clamp of a Hodgkin–Huxley type model to reveal the quadratic response at the ionic channel level. Second, the resulting behavior is generalized to a novel Hermitian neural operator, which uses an algebraic formulation capturing the entire quadratic behavior of a voltage clamped neuron. In addition, this operator can also be used for a nonlinear identification analysis directly applicable to experimental measurements. In this case, a Hermitian matrix of interactions is built with paired frequency probing measurements performed at specific harmonic and interactive output frequencies. More importantly, eigenanalysis of the neural operator provides a concise signature of the voltage dependent conductances determined by their particular distribution on the dendritic and somatic membrane regions of neurons. Finally, the theory is concretely illustrated by an analysis of an experimentally measured vestibular neuron, providing a remarkably compact description of the quadratic responses involved in the nonlinear processing underlying the control of eye position during head rotation, namely the neural integrator.

1 Introduction

In an innovative paper, FitzHugh (1983) derived analytically the nonlinear response to a single sinusoidal stimulation for the voltage clamped (Hodgkin and Huxley 1952) model. He showed that the steady-state current response to a single sinusoidal frequency f has harmonic components f, 2f, 3f, ... This analysis provided a quantitative interpretation of the harmonic components in voltage clamped squid axons (Moore et al. 1980).

However, the single sinusoidal stimulation is generally insufficient to characterize the nonlinear behavior in neurons. In particular, it is unable to predict the quadratic response to a double sinusoidal stimulation of frequencies \(f_{1}\), \(f_{2}\) since additional intermodulation products \(f_{1}+f_{2}\) and \(\left|f_{1}-f_{2}\right|\) occur in the measured membrane current. In this paper, a quadratic approximation of the neuronal response to a double sinusoidal stimulation is derived analytically and extended to a multi-sinusoidal stimulation.

This approach leads to the development of a Quadratic Sinusoidal Analysis, referred to as QSA, relying on matrix calculus and eigendecomposition to provide a characterization of the nonlinear behavior from neuronal responses to a multi-sinusoidal stimulation in the steady state. This method explores in depth the behavior of individual neurons, which are fundamentally nonlinear and cannot be described by linear theory alone.

Previously, nonlinear systems analysis has been extensively applied to neural systems using extracellular spike train measurements; however, relatively little has been done in this area with intracellular membrane potential measurements. In this paper, QSA is applied to voltage clamp experiments by using nonoverlapping frequencies, providing a new piecewise quadratic analysis that not only incorporates linear analysis (Fishman et al. 1977; Murphey et al. 1995; Mauro et al. 1970) but also adds second order nonlinearity. Fundamentally, this paper deals with the membrane biophysics of single neurons and not with a general nonlinear system identification approach on spike discharge rates in neural networks. The purpose of this paper is to quantitatively measure the nonlinear voltage dependent conductance properties of single neurons, at membrane potentials up to threshold that are under voltage clamp control.

The development of patch clamp and imaging techniques has clearly shown that subthreshold voltage dependent channels throughout the dendritic tree dynamically control the firing properties of neurons. Two of the most important conductances for the control of the frequency of action potential activity are the persistent sodium and hyperpolarization-activated ion channels. Previously, a multi-sinusoidal and dynamic clamp study showed that the subthreshold gNaP channels of one type of prepositus hypoglossi neurons (PHN) directly controlled the firing rate (Idoux et al. 2008). This was done by injecting virtual gNaP channels with a dynamic clamp and restoring the firing pattern of the PHN neurons whose gNaP channels had been pharmacologically blocked. The virtual gNaP conductance had been previously determined from subthreshold measurements on these neurons. Neuronal models derived from these experiments have also been extended to include action potential channels and thus simulate both the subthreshold behavior and spike trains (Idoux et al. 2006). Although QSA specifically avoids neurons firing action potentials, it does characterize them during the critical integrating states that lead to action potentials, which consequently allows an analysis of how neurons process synaptic inputs in order to finally reach threshold.

Thus, the nonlinear experimental and theoretical studies presented here provide a new quantitative voltage clamp analysis of intact neurons, even when a space clamp is not possible. Furthermore, this is an important contribution to the more general nonlinear systems analysis using Volterra techniques that have usually been applied to action potential data, generally measured as point processes versus time. Although current clamp subthreshold membrane potentials could be used, the voltage clamp is used here because it is the most effective way to rigorously control the membrane potential and acquire data for quantitative analysis.

2 Theory

2.1 Double sinusoidal voltage clamp

The proposed model implements a minimal soma with only one kinetic equation in order to simplify the calculations while preserving their physiological significance. The parameters were selected to be consistent with experimental data published in Idoux et al. (2008).

\[I=C\frac{dV}{dt}+I_{L}+I_{K}+I_{Na} \qquad (1)\]

\[\frac{dn}{dt}=\alpha_{n}\left(1-n\right)-\beta_{n}n \qquad (2)\]

Here \(I_{L}=\overline{g_{L}}\left(V-V_{L}\right)\), \(I_{K}=\overline{g_{K}}n\left(V-V_{K}\right)\) and \(I_{Na}=\overline{g_{Na}}m_{\infty}\left(1-n\right)\left(V-V_{Na}\right)\) represent the leakage, K\(^{+}\) and Na\(^{+}\) ionic currents respectively. \(V\) is the imposed membrane potential, \(I\) the measured current, \(n\) the gating variable for K\(^{+}\) and \(m_{\infty}\) the gating variable for Na\(^{+}\) at equilibrium (\(m'=0\)). The other values are constant parameters: the membrane capacitance \(C=20.5\) pF; the maximal conductances \(\overline{g_{L}}=1.37~\rm{nS}\), \(\overline{g_{K}}=1.18~\rm{nS}\) and \(\overline{g_{Na}}=0.64~\rm{nS}\); the reversal potentials \(V_{L}=-53\) mV, \(V_{K}=-87\) mV and \(V_{Na}=77\) mV for leakage, K\(^{+}\) and Na\(^{+}\) respectively. The functions \(\alpha_{n}\) and \(\beta_{n}\) depend on the variable \(V\) and their mathematical expressions are fully described in Murphey et al. (1995) for \(v_{m}=-35\) mV, \(s_{m}=0.056\) mV\(^{-1}\), \(v_{n}=-39\) mV, \(s_{n}=0.09\) mV\(^{-1}\) and \(t_{n}=0.1\) s when defining \(\alpha_{n}=e^{2s_{n}\left(V-v_{n}\right)}/\left(2t_{n}\right)\), \(\beta_{n}=e^{-2s_{n}\left(V-v_{n}\right)}/\left(2t_{n}\right)\) and \(m_{\infty}=1/\big(1+e^{-4s_{m}\left(V-v_{m}\right)}\big)\).
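
The rate functions and parameters quoted above can be written down directly. The following is a minimal sketch rather than the authors' code: the function and constant names are chosen here for illustration, and the steady-state current assumes that at a constant potential the capacitive current vanishes, so that the measured current is the sum of the three ionic currents with \(n\) at its equilibrium value \(\alpha_{n}/\left(\alpha_{n}+\beta_{n}\right)\).

```python
import math

# Parameters quoted in the text (units: nS, mV, s). Names are illustrative.
G_L, G_K, G_NA = 1.37, 1.18, 0.64      # maximal conductances (nS)
V_L, V_K, V_NA = -53.0, -87.0, 77.0    # reversal potentials (mV)
V_M, S_M = -35.0, 0.056                # m_inf midpoint (mV) and slope (1/mV)
V_N, S_N, T_N = -39.0, 0.09, 0.1       # n midpoint (mV), slope (1/mV), time scale (s)

def alpha_n(v):
    """Opening rate of the n gate (1/s)."""
    return math.exp(2.0 * S_N * (v - V_N)) / (2.0 * T_N)

def beta_n(v):
    """Closing rate of the n gate (1/s)."""
    return math.exp(-2.0 * S_N * (v - V_N)) / (2.0 * T_N)

def m_inf(v):
    """Equilibrium value of the Na+ gating variable."""
    return 1.0 / (1.0 + math.exp(-4.0 * S_M * (v - V_M)))

def n_inf(v):
    """Equilibrium value of the K+ gating variable, alpha / (alpha + beta)."""
    a, b = alpha_n(v), beta_n(v)
    return a / (a + b)

def steady_state_current(v):
    """Sum of the ionic currents at a constant potential v, in pA (nS * mV).

    Assumption: no capacitive current at constant potential.
    """
    n = n_inf(v)
    i_l = G_L * (v - V_L)
    i_k = G_K * n * (v - V_K)
    i_na = G_NA * m_inf(v) * (1.0 - n) * (v - V_NA)
    return i_l + i_k + i_na

# Relaxation rate lambda = alpha_n(V0) + beta_n(V0) at the holding potential used later.
print(alpha_n(-43.0) + beta_n(-43.0), steady_state_current(-43.0))
```

At the midpoint \(V=v_{n}\) both rates equal \(1/\left(2t_{n}\right)\), which gives a quick sanity check of the implementation.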

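FitzHugh imposed a single sinusoidal command for the membrane potential \(V\left(t\right)=V_{0}+V_{1}\cos\left(\omega_{1}t\right)\) where \(V_{0}\) is the DC constant, \(V_{1}\) is the amplitude and \(\omega_{1}\) is the angular frequency. This can be extended to a double sinusoidal command

\[V\left(t\right)=V_{0}+V_{1}\cos\left(\omega_{1}t+\phi_{1}\right)+V_{2}\cos\left(\omega_{2}t+\phi_{2}\right)\]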

where V 1, V 2 are the amplitudes, ω 1, ω 2 are the angular frequencies and ϕ 1, ϕ 2 the phases. The phase difference |ϕ 2 − ϕ 1| is especially important to ensure that the two sine waves are uncorrelated. Also it is necessary to have ω 1 and ω 2 distinct to avoid degenerate cases. The notations can be simplified by putting \(c_{1}=\cos\left(\omega_{1}t+\phi_{1}\right)\), \(c_{2}=\cos\left(\omega_{2}t+\phi_{2}\right)\), \(s_{1}=\sin\left(\omega_{1}t+\phi_{1}\right)\) and \(s_{2}=\sin\left(\omega_{2}t+\phi_{2}\right)\).

The goal is to determine an analytical expression for the current I. In this paper, the solutions are limited to a quadratic approximation, which is the minimal degree of nonlinearity. Indeed, although neuronal responses generally show higher degrees of nonlinearity, they can be ignored if the stimulation amplitude is sufficiently small.

The gating variable n can be approximated by a quadratic polynomial near the steady state n 0 with respect to the input fluctuations amplitudes, V 1 and V 2:

\[n=n_{0}+n_{1}V_{1}+n_{2}V_{2}+n_{11}V_{1}^{2}+n_{22}V_{2}^{2}+n_{12}V_{1}V_{2} \qquad (4)\]

where n 1, n 2, n 11, n 22, and n 12 are unknown functions of time. Similarly, α n and β n can be approximated by quadratic polynomials with respect to V 1 and V 2 after a quadratic Taylor decomposition:

The approximated expressions of n, α n and β n are polynomials in variables V 1 and V 2, which can be substituted into Eq. (2). Then, by identification with the zero polynomial, the system reduces to a set of five linear differential equations as well as the common steady-state expression \(n_{0}=\frac{\alpha_{0}}{\alpha_{0}+\beta_{0}}\):

where \(\lambda=\alpha_{n}\left(V_{0}\right)+\beta_{n}\left(V_{0}\right)\) is constant, A is constant, and B 1(ω 1), B 2(ω 2), C 1(ω 1), C 2(ω 2), D 12(ω 1, ω 2), E 1(ω 1) and E 2(ω 2) are rational functions. The details of these cumbersome expressions are not important, except for their frequency content. From trigonometric calculus, c 1, c 2, \(c_{1}^{2}\), \(c_{2}^{2}\) contain frequencies ω 1, ω 2, 2ω 1, 2ω 2, respectively, and c 1 c 2, c 2 s 1, c 1 s 2 contain |ω 1 ± ω 2|. The five differential equations being linear, their stationary solutions must preserve the frequencies, namely the functions n 1, n 2, n 11, n 22, n 12 are associated with the frequencies ω 1, ω 2, 2ω 1, 2ω 2, |ω 1 ± ω 2|, respectively. This remark is the fundamental principle of the QSA method, namely these are the response frequencies that characterize the nonlinear behavior. These differential equations were solved by MATHEMATICA 7 (Wolfram Research, Champaign, IL, USA) after transformation into algebraic equations by the Laplace transform. The transient terms like \(e^{-t\left(\alpha_{n}\left(V_{0}\right)+\beta_{n}\left(V_{0}\right)\right)}\) were ignored to retain only stationary solutions.
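
The frequency content stated above follows from the standard product-to-sum identities. As a short worked check, write \(\theta_{k}=\omega_{k}t+\phi_{k}\) (a shorthand introduced here only for this illustration), so that \(c_{k}=\cos\theta_{k}\) and \(s_{k}=\sin\theta_{k}\):

\[\begin{aligned}
c_{1}^{2} &= \tfrac{1}{2}\left(1+\cos 2\theta_{1}\right), \\
c_{1}c_{2} &= \tfrac{1}{2}\left[\cos\left(\theta_{1}+\theta_{2}\right)+\cos\left(\theta_{1}-\theta_{2}\right)\right], \\
c_{2}s_{1} &= \tfrac{1}{2}\left[\sin\left(\theta_{1}+\theta_{2}\right)+\sin\left(\theta_{1}-\theta_{2}\right)\right], \\
c_{1}s_{2} &= \tfrac{1}{2}\left[\sin\left(\theta_{1}+\theta_{2}\right)-\sin\left(\theta_{1}-\theta_{2}\right)\right].
\end{aligned}\]

Each squared term therefore carries a constant plus a component at \(2\omega_{k}\), and each cross term carries components at \(\omega_{1}+\omega_{2}\) and \(\left|\omega_{1}-\omega_{2}\right|\) only.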

The current I can also be approximated by a quadratic polynomial near the steady state I 0 with respect to the input amplitudes V 1 and V 2:

\[I=I_{0}+I_{1}V_{1}+I_{2}V_{2}+I_{11}V_{1}^{2}+I_{22}V_{2}^{2}+I_{12}V_{1}V_{2} \qquad (5)\]

The expressions of I 1, I 2, I 11, I 22 and I 12 are directly determined by polynomial identification from Eq. (1) after substitution of n by its quadratic polynomial approximation (Eq. (4)). Similarly, I 1, I 2, I 11, I 22 and I 12 are associated with the frequencies ω 1, ω 2, 2ω 1, 2ω 2 and |ω 1 ± ω 2|, respectively.

2.2 Multi-sinusoidal voltage clamp

For a single sinusoidal voltage clamp, the frequency space is described by one variable \(\omega_{1}\). For a double sinusoidal voltage clamp, the frequency space is described by two variables \(\omega_{1}\) and \(\omega_{2}\). If each variable describes \(N\) frequencies, then \(\frac{1}{2}N\left(N+1\right)\) pairs of frequencies are required to probe the quadratic neuronal response. For instance, \(N=10\) would require 55 experiments for only one voltage level and stimulus amplitude. This is not experimentally feasible, since it would require an excessively long recording duration for a whole cell voltage clamped neuron.
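
The count of probing experiments can be checked by enumerating the unordered pairs, harmonics included. This is a small illustrative sketch; the helper name `probing_pairs` is hypothetical.

```python
from itertools import combinations_with_replacement

def probing_pairs(n_freqs):
    """All unordered index pairs {i, j} with i <= j; i == j gives the harmonic 2*f_i."""
    return list(combinations_with_replacement(range(1, n_freqs + 1), 2))

pairs = probing_pairs(10)
print(len(pairs))  # 55, that is 10 * 11 / 2
```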

A solution consists of computing the quadratic response for all pairs in parallel instead of sequentially. For this, the double sinusoidal command can be extended to a multi-sinusoidal command as follows

\[V\left(t\right)=V_{0}+\sum_{k=1}^{N}V_{k}\cos\left(\omega_{k}t+\phi_{k}\right) \qquad (6)\]

The quadratic polynomials in the two variables of Eqs. (4) and (5) have to be extended to quadratic polynomials in several variables. The current response is then approximated by

\[I=I_{0}+\sum_{i=1}^{N}I_{i}V_{i}+\sum_{i=1}^{N}I_{ii}V_{i}^{2}+\sum_{i<j}I_{ij}V_{i}V_{j} \qquad (7)\]

The coefficients I i , I ii , I ij are determined by polynomial identification as in the previous section. In particular, it can be shown that for all V i = 0 except V k ≠ 0 and V l ≠ 0 (k ≠ l) the multi-sinusoidal current of Eq. (7) coincides with the double sinusoidal current of Eq. (5). In practice however, the analytical expression of Eq. (7) is more simply constructed by looping the double sinusoidal voltage clamp over all the frequency pairs \(\left\{ i,j\right\} \). A result of this algorithm is illustrated (Fig. 1(a)) for the frequency set {0.2, 0.8, 2, 3.4, 5.8, 10.4, 13.4, 17.8} (in Hertz) with sinusoidal amplitudes equal to 0.5 mV and randomized phases. The voltage command has a mean of V 0 = − 43 mV and standard deviation 0.99 mV. The quadratic terms of I(t) are required to accurately describe the neuronal response, which clearly cannot be done by the usual linear analysis.

Fig. 1 Nonlinear analysis of the model. (a) Superposition of the original normal current response (in blue), the linear analysis (in green) and the quadratic analysis (in red). The red curve is almost perfectly superimposed on the blue curve. Clearly, the quadratic analysis is required to accurately describe the neuronal response. (b) Magnitude of the QSA matrix. Each cell represents a coefficient at the corresponding row and column of the matrix, coded by colors. (c) Eigenvalues sorted by decreasing magnitude. There are two dominant eigenvalues, suggesting that the quadratic neuronal function can be considered as a sum of two squares as a first approximation. For these plots, the frequency components were computed with the MATLAB command FFT divided by the number of points

2.3 Linear and quadratic behavior

The multi-sinusoidal voltage clamp formulas (6) and (7) can be rewritten in matrix form in order to simplify the calculations, and further to analyse experiments. It is well known from Fourier analysis that complex exponentials are optimal to represent stationary signals. More precisely, for an experiment of duration \(T\) (in seconds), the elementary wave functions \(\mathbf{e_{k}}\left(t\right)=e^{i2\pi kt/T}\) are able to reconstruct \(V\left(t\right)\) and \(I\left(t\right)\) by linear superposition. The stimulation frequencies being integer multiples of \(2\pi/T\), they can be denoted by \(\omega_{i}=2\pi n_{i}/T\) where \(i\) is an index describing the set \(\Gamma=\left\{ -N,\ldots,-1,+1,\ldots,+N\right\} \). Also, by convention \(n_{-i}=-n_{i}\). The multi-sinusoidal voltage command can be directly written as a superposition of elementary waves through the common trigonometric formula \(\cos\left(\theta\right)=\frac{1}{2}\left(e^{i\theta}+e^{-i\theta}\right)\)

\[V\left(t\right)=V_{0}+\sum_{k\in\Gamma}v_{k}\mathbf{e_{n_{k}}}\left(t\right)\]

where \(v_{k}=\frac{1}{2}V_{k}e^{i\phi_{k}}\) for k > 0 and \(v_{-k}=\overline{v_{k}}\) (bar is complex conjugate). In fact, this expression represents the multi-sinusoidal voltage command as a vector v with components v k in the basis of elementary waves \(\mathbf{e_{n_{k}}}\).
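
The equivalence between the cosine form and the complex-exponential form of the command can be checked numerically. The sketch below is only an illustration: the amplitudes, phases, harmonic indices and period are hypothetical example values, and the function names are chosen here.

```python
import cmath
import math

def complex_coefficients(amplitudes, phases):
    """Return {k: v_k} over Gamma, with v_k = V_k e^{i phi_k} / 2 and v_{-k} = conj(v_k)."""
    v = {}
    for k, (amp, phi) in enumerate(zip(amplitudes, phases), start=1):
        v[k] = 0.5 * amp * cmath.exp(1j * phi)
        v[-k] = v[k].conjugate()
    return v

def command_from_cosines(t, amplitudes, phases, harmonics, period):
    """Fluctuating part V(t) - V0 as a sum of cosines."""
    return sum(a * math.cos(2.0 * math.pi * n * t / period + p)
               for a, p, n in zip(amplitudes, phases, harmonics))

def command_from_waves(t, v, harmonics, period):
    """Fluctuating part V(t) - V0 as a superposition of elementary waves."""
    total = 0j
    for k, v_k in v.items():
        n_k = harmonics[abs(k) - 1] * (1 if k > 0 else -1)  # convention n_{-k} = -n_k
        total += v_k * cmath.exp(2j * math.pi * n_k * t / period)
    return total

amps, phis, harm, T = [0.5, 0.5, 0.5], [0.3, 1.1, 2.0], [1, 4, 10], 5.0
v = complex_coefficients(amps, phis)
z = command_from_waves(0.37, v, harm, T)
print(z.real, z.imag, command_from_cosines(0.37, amps, phis, harm, T))
```

The imaginary part cancels because the coefficients come in conjugate pairs, which is the same mechanism that later forces \(B_{i,j}=\overline{B_{-i,-j}}\) for a real current.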

The linear part of the current response

\[\sum_{i=1}^{N}I_{i}V_{i}\]

involves stimulation frequencies only and thus can be written as a linear superposition of the elementary waves with complex coefficients L k acting on the input like an admittance

\[\mathbf{i_{1}}\left(t\right)=\sum_{k\in\Gamma}L_{k}v_{k}\mathbf{e_{n_{k}}}\left(t\right)\]

By contrast, the quadratic part of the current response

\[\sum_{i=1}^{N}I_{ii}V_{i}^{2}+\sum_{i<j}I_{ij}V_{i}V_{j}\]

involves frequencies 2ω i and \(\left|\omega_{i}\pm\omega_{j}\right|\). Therefore, products \(\mathbf{e_{n_{i}}}\mathbf{e_{n_{j}}}\) are produced such that the quadratic response can be written as a quadratic mixing of the elementary waves

\[\mathbf{i_{2}}\left(t\right)=\sum_{i,j\in\Gamma}B_{i,j}v_{i}v_{j}\mathbf{e_{n_{i}}}\left(t\right)\mathbf{e_{n_{j}}}\left(t\right) \qquad (10)\]

In order to ignore constant DC in the pure quadratic response, the coefficients B i, − i must be set to zero. Moreover, since the current response has no imaginary part, the coefficients must satisfy \(B_{i,j}=\overline{B_{-i,-j}}\). Also, note the symmetry B i,j = B j,i .

Remarkably, the row flipped matrix Q i,j = B − i,j is Hermitian (Lang 2002):

\[Q_{i,j}=B_{-i,j}=\overline{B_{i,-j}}=\overline{B_{-j,i}}=\overline{Q_{j,i}}\]

This is very convenient because Hermitian matrices have many important properties. In particular, their eigenvectors can be used to decompose the quadratic current response as a sum of squares weighted by real eigenvalues playing the role of amplitudes. The general skeleton of Q i,j is as follows

This matrix is the essential tool of the method and is called the QSA Matrix. In fact, it is especially appropriate for computations with the Fourier transform, as explained in the following section on experimental measurements.
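
The Hermitian and traceless properties of the row flipped matrix can be verified on any coefficient table that satisfies the three constraints stated above (\(B_{i,-i}=0\), \(B_{i,j}=B_{j,i}\) and \(B_{i,j}=\overline{B_{-i,-j}}\)). The following sketch uses randomly generated coefficients purely for illustration; the helper names are hypothetical and no measured data are involved.

```python
import random

def gamma(n):
    """Index set Gamma = {-n, ..., -1, +1, ..., +n}."""
    return [k for k in range(-n, n + 1) if k != 0]

def random_b(n, seed=0):
    """Random coefficients obeying B[i,-i] = 0, B[i,j] = B[j,i], B[i,j] = conj(B[-i,-j])."""
    rng = random.Random(seed)
    b = {}
    for i in gamma(n):
        for j in gamma(n):
            if (i, j) in b:
                continue
            if j == -i:
                b[(i, j)] = 0j          # no DC term in the pure quadratic response
                continue
            z = complex(rng.uniform(-1, 1), rng.uniform(-1, 1))
            b[(i, j)] = b[(j, i)] = z                   # symmetry
            b[(-i, -j)] = b[(-j, -i)] = z.conjugate()   # real-valued current
    return b

def row_flip(b, n):
    """QSA matrix Q[i,j] = B[-i,j]."""
    return {(i, j): b[(-i, j)] for i in gamma(n) for j in gamma(n)}

def is_hermitian(q, n, tol=1e-12):
    return all(abs(q[(i, j)] - q[(j, i)].conjugate()) <= tol
               for i in gamma(n) for j in gamma(n))

def trace(q, n):
    return sum(q[(i, i)] for i in gamma(n))

q = row_flip(random_b(4), 4)
print(is_hermitian(q, 4), trace(q, 4))
```

The diagonal of \(Q\) collects exactly the coefficients \(B_{-i,i}\), which explains why removing the DC term makes the matrix traceless.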

This matrix allows the reconstruction of the current response through simple algebraic manipulations. Indeed, if the clock matrix is defined by \(U_{t}={\rm{diag}}\left(\bf{e_{n_{k}}}\left(t\right)\right)\) then the voltage command vector as well as the linear and quadratic transformations, L and Q can be made explicitly dependent on time, namely v t = U t v and L t = LU t and \(Q_{t}=U_{t}^{*}QU_{t}\) (the upper * denotes the conjugate transpose). This allows a reconstruction of the current response in the time domain by considering L as linear and Q as a Hermitian form

or equivalently

It is interesting to note the duality of these two formulations, analogous to the Schrödinger/Heisenberg pictures in quantum mechanics. Indeed, either the vector is time-dependent and operators are time-independent, or the converse. Moreover, although B and Q encode the same information, they have different interpretations. In particular, B is similar to a bilinear Volterra kernel that could be generalized to higher orders such as \(\sum B_{i,j,k}v_{i}v_{j}v_{k}\mathbf{e_{n_{i}}}\mathbf{e_{n_{j}}}\mathbf{e_{n_{k}}}\). By contrast, Q is a self-adjoint linear operator similar to an observable acting on an input state. The quadratic behavior is obtained a posteriori through \(\mathbf{v_{t}}^{*}Q\mathbf{v_{t}}=\left\langle \mathbf{v_{t}}|Q\mathbf{v_{t}}\right\rangle =\left\langle Q\right\rangle _{\mathbf{v_{t}}}\), that is similar to an expectation value in quantum mechanics. However, there is no obvious generalization of Q to higher orders, which means that B and Q are two different representations of the quadratic neuronal behavior.

The QSA matrix being Hermitian, it can be diagonalized through Q = P * DP where P is a unitary matrix satisfying P * = P − 1. In this expression, each column in P * contains the coordinates of an eigenvector expressed in the basis of elementary waves. Also, D = diag(d i ) is the diagonal matrix containing the eigenvalues. The quadratic part can then be rewritten as

\[\mathbf{v_{t}}^{*}Q\mathbf{v_{t}}=\mathbf{v_{t}}^{*}P^{*}DP\mathbf{v_{t}}=\mathbf{w_{t}}^{*}D\mathbf{w_{t}} \qquad (13)\]

where w t = P v t . The transformation matrix P being unitary, it preserves the signal energy of the stimulation vector, namely \(\left\Vert \mathbf{w_{t}}\right\Vert ^{2}=\left\Vert \mathbf{v_{t}}\right\Vert ^{2}\). On the other hand, the diagonal matrix D plays the role of a quadratic filter, such that in the above change of basis, the quadratic part of the response is reduced to a sum of squares

\[\mathbf{w_{t}}^{*}D\mathbf{w_{t}}=\sum_{i\in\Gamma}d_{i}\left|\mathbf{w_{t}}\right|_{i}^{2} \qquad (14)\]

This reduction has a special meaning when only a few eigenvalues are dominant. In this case, the neuronal function can be approximated by ignoring the small eigenvalues, providing a more compact description. However, the total contribution of all the eigenvalues is equal to zero because \(\sum d_{i}={\rm{Tr}}\left(D\right)={\rm{Tr}}\left(Q\right)=0\). The QSA matrix and the eigenvalues of the model are illustrated (Fig. 1(b) and (c)), showing two dominant eigenvalues. The computations were made with MATLAB (The MathWorks, Natick, MA, USA).
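
The sum-of-squares reduction can be followed by hand in the smallest case \(N=1\), where \(\Gamma=\left\{ -1,+1\right\} \) and the QSA matrix reduces to a 2 × 2 matrix with zero diagonal and off-diagonal entries \(B_{1,1}\) and \(\overline{B_{1,1}}\). Its eigenvalues are \(\pm\left|B_{1,1}\right|\), which sum to zero as required. The sketch below is illustrative only: it uses closed-form eigenvectors for this special case (a general \(N\) would need a numerical Hermitian eigensolver), and the numerical values are hypothetical.

```python
import cmath
import math

def time_vector(v1, omega, t):
    """v_t ordered as indices (-1, +1): (conj(v1) e^{-i w t}, v1 e^{+i w t})."""
    return (v1.conjugate() * cmath.exp(-1j * omega * t), v1 * cmath.exp(1j * omega * t))

def quadratic_form_2x2(b11, v1, omega, t):
    """v_t^* Q v_t with Q = [[0, B11], [conj(B11), 0]]."""
    x, y = time_vector(v1, omega, t)
    qx = b11 * y                    # first row of Q applied to v_t
    qy = b11.conjugate() * x        # second row of Q applied to v_t
    return (x.conjugate() * qx + y.conjugate() * qy).real

def sum_of_squares_2x2(b11, v1, omega, t):
    """Same quantity as sum_i d_i |w_i|^2, with w = P v_t (b11 must be nonzero)."""
    x, y = time_vector(v1, omega, t)
    r = abs(b11)
    u = b11 / r                     # unit phase of B11
    s = 1.0 / math.sqrt(2.0)
    eigenpairs = [(+r, (u * s, s)), (-r, (-u * s, s))]
    total = 0.0
    for d, (a, b) in eigenpairs:
        w = a.conjugate() * x + b * y   # projection of v_t on the eigenvector (b is real)
        total += d * abs(w) ** 2
    return total

b11, v1, omega = 0.8 - 0.6j, 0.25 * cmath.exp(0.7j), 2.0 * math.pi * 0.2
print(quadratic_form_2x2(b11, v1, omega, 0.3), sum_of_squares_2x2(b11, v1, omega, 0.3))
```

Both functions return \(2\,\mathrm{Re}\big(B_{1,1}v_{1}^{2}e^{2i\omega t}\big)\), the second harmonic of the single stimulation frequency.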

2.4 Nonoverlapping measurements

In practice, experimental measurements are subject to difficulties due to frequency overlapping. More precisely, it is possible that n i + n j = n k + n l for distinct pairs of frequencies \(\left\{ n_{i},n_{j}\right\} \) and \(\left\{ n_{k},n_{l}\right\} \). In this case, the terms \(B_{i,j}v_{i}v_{j}\mathbf{e_{n_{i}}}\mathbf{e_{n_{j}}}\) and \(B_{k,l}v_{k}v_{l}\mathbf{e_{n_{k}}}\mathbf{e_{n_{l}}}\) share the same output component \(\mathbf{e_{n_{i}+n_{j}}}=\mathbf{e_{n_{k}+n_{l}}}\). This means that the measurement of such a shared component is unable to separate the coefficients B i,j and B k,l . This problem is quite general and sometimes encountered when measuring Volterra kernels in nonlinear signal theory. Harmonic probing has been developed as a practical measurement technique to determine the kernels in the frequency domain (Boyd et al. 1983). For instance, when a multi-sinusoidal voltage command is imposed with incommensurable frequencies ω 1, ..., ω N then every coefficient of the corresponding second order Volterra kernel \(G_{2}\left(\omega_{i},\omega_{j}\right)\) can be deduced from the output measured at ω i + ω j . In this paper, harmonic probing was adapted to determine the coefficients of the QSA matrix without frequency overlapping. In particular, a flexible algorithm was developed to generate sets of nonoverlapping frequencies appropriate for the voltage clamp conditions (controlled duration and frequency range).
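
The overlap condition can be tested mechanically once the frequencies are expressed as integer multiples of the base frequency \(1/T\). The sketch below is not the authors' generation algorithm, only a checker: it additionally requires that quadratic outputs do not collide with the stimulation frequencies themselves, which is an assumption made here. The example set is the stimulation set quoted earlier, written as multiples of 0.2 Hz (so \(T=5\) s).

```python
from itertools import combinations

def second_order_outputs(harmonics):
    """Output indices of the quadratic response: 2*n_i, n_i + n_j and |n_i - n_j| for i < j."""
    outputs = [2 * n for n in harmonics]
    for a, b in combinations(harmonics, 2):
        outputs.append(a + b)
        outputs.append(abs(a - b))
    return outputs

def is_nonoverlapping(harmonics):
    """True if all linear and quadratic output indices are distinct and nonzero."""
    outputs = list(harmonics) + second_order_outputs(harmonics)
    return 0 not in outputs and len(set(outputs)) == len(outputs)

# {0.2, 0.8, 2, 3.4, 5.8, 10.4, 13.4, 17.8} Hz as multiples of 0.2 Hz.
STIMULATION_SET = [1, 4, 10, 17, 29, 52, 67, 89]
print(is_nonoverlapping(STIMULATION_SET))
print(is_nonoverlapping([1, 2, 3]))  # 1 + 2 collides with the stimulation index 3
```

Working with integer indices avoids floating point comparisons between frequencies such as 0.2 + 3.4 and 5.8 − 2.2.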

Then, for a set of nonoverlapping frequencies, Eq. (10) can be solved in which B i,j are the unknown coefficients.

\[B_{i,j}=\gamma_{i,j}\frac{\hat{I}\left(n_{i}+n_{j}\right)}{v_{i}v_{j}}\]

where \(\hat{I}\left(n_{i}+n_{j}\right)\) coincides with the Fourier component of \(I\left(t\right)\) at the frequency \(\omega_{i}+\omega_{j}=2\pi\left(n_{i}+n_{j}\right)/T\). The term \(\gamma_{i,j}=\frac{1}{2}+\frac{1}{2}\delta_{i,j}\) is a coefficient of symmetry such that \(\gamma_{i,i}=1\) and \(\gamma_{i,j}=\frac{1}{2}\) for \(i\neq j\), which implies \(B_{i,i}=\frac{\hat{I}\left(2n_{i}\right)}{v_{i}^{2}}\) and \(B_{i,j}=B_{j,i}=\frac{1}{2}\frac{\hat{I}\left(n_{i}+n_{j}\right)}{v_{i}v_{j}}\) respectively.
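
The identification formula can be exercised on a synthetic signal whose quadratic coefficients are known in advance. In the sketch below the "neuron" is replaced by a hypothetical static nonlinearity \(I=ax+bx^{2}\) applied to the fluctuating part \(x\) of the command, for which every coefficient \(B_{i,j}\) (other than the DC terms) equals \(b\). All numerical values and names are illustrative assumptions, and the Fourier component is computed as a discrete Fourier sum divided by the number of points.

```python
import cmath
import math

def fourier_component(samples, n):
    """Coefficient of e^{i 2 pi n t / T} for samples spanning one period T uniformly."""
    m = len(samples)
    return sum(x * cmath.exp(-2j * math.pi * n * k / m)
               for k, x in enumerate(samples)) / m

def estimate_b(samples, v, harmonic, i, j):
    """B[i,j] = gamma[i,j] * I_hat(n_i + n_j) / (v_i v_j), with gamma = 1 if i == j else 1/2."""
    gamma_ij = 1.0 if i == j else 0.5
    i_hat = fourier_component(samples, harmonic[i] + harmonic[j])
    return gamma_ij * i_hat / (v[i] * v[j])

# Hypothetical two-frequency stimulation with nonoverlapping indices 1 and 4.
A_LIN, B_QUAD = 2.0, 0.3
AMPLITUDES = {1: 0.5, 2: 0.5}
PHASES = {1: 0.4, 2: 1.3}
harmonic = {1: 1, 2: 4, -1: -1, -2: -4}
v = {}
for k in (1, 2):
    v[k] = 0.5 * AMPLITUDES[k] * cmath.exp(1j * PHASES[k])
    v[-k] = v[k].conjugate()

M = 64  # samples per period, well above twice the highest output index (8)
samples = []
for s in range(M):
    x = sum(AMPLITUDES[k] * math.cos(2.0 * math.pi * harmonic[k] * s / M + PHASES[k])
            for k in (1, 2))
    samples.append(A_LIN * x + B_QUAD * x * x)

for i, j in [(1, 1), (2, 2), (1, 2), (1, -2)]:
    print((i, j), estimate_b(samples, v, harmonic, i, j))
```

The linear term does not contaminate the estimates because it only contributes at the stimulation indices, which is precisely what the nonoverlapping condition guarantees.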

3 Results

3.1 Prepositus hypoglossi neurons

The neurons of the prepositus hypoglossi nucleus (PHN) in the brainstem integrate head velocity signals to control eye position in order to stabilize an image at the center of the visual field during head rotation. This operation is called neural integration (Aksay et al. 2007) due to an analogy with integration in mathematical calculus. Individual neurons of the PHN show nonlinear properties that are likely to play an important role in the neural integrator. In particular, there are many studies suggesting that these nonlinear properties are essential for the network behavior of the neural integrator (Koulakov et al. 2002; Goldman et al. 2003). In addition, nonlinear behavior has been observed in neurons involved in eye movement, as described by Idoux et al. (2006).

In this section, the QSA analysis is applied to experimental measurements of PHN neurons in order to understand the fundamental subthreshold nonlinear behavior determining spike trains during neural integration. In particular, this paper is focused on the nonlinearities that exist primarily at the intracellular membrane potential levels up to the action potential threshold.

Previous studies have characterized neurons by piecewise linear analysis, especially Fishman et al. (1977), Murphey et al. (1995) and Idoux et al. (2008), where the neuronal response has been modelled by a series of linear admittances at different voltage clamp levels and amplitudes. However, as explained above, it is critical to also consider nonlinear behavior during normal PHN physiological activity. This has been done by generalizing the linear admittance to a quadratic function, which can be computed by interpolation of the QSA matrix. In this way, a fundamentally new piecewise quadratic analysis is obtained that describes the complete linear and second order behavior of PHN neurons. Without such a quadratic description, the linear identification can be dramatically insufficient compared to an accurate quadratic identification, as shown in Fig. 2(a) for experimental data of a PHN neuron. Thus, quadratic analysis is required to correctly characterize the responses of PHN neurons, typically observed during synaptic activity.

Fig. 2 Analysis of the experimental data of a prepositus hypoglossi neuron. (a) Experimental current response I(t) to a stimulation V(t) with nonoverlapping frequencies centered at − 55.26±2.85 mV. The quadratic analysis (in red) is dramatically more accurate than the linear analysis (in green) in describing the experimental response (in blue). Indeed, the red curve is almost superimposed on the blue curve. (b and c) Magnitude of the QSA matrix computed at − 60 mV and at − 55 mV. Clearly, the coefficients (color coded) are increased at the depolarized level. (d and e) Magnitude of the interpolated QSA matrix at − 60 mV and − 55 mV. The peaks of the frequency interactions are approximately in the same location after depolarization; moreover, additional peaks appear at high frequencies. For these plots, the frequency components were computed with the MATLAB command FFT divided by the number of points. The interpolations were performed by the MATLAB command GRIDDATA (linear method). The current was measured in nA and the voltage in mV

The voltage clamp data of PHN neurons were provided by Professor Daniel Eugène (personal communication) and analyzed using nonoverlapping frequencies { 0.2, 0.8, 2, 3.4, 5.8, 10.4, 13.4, 17.8 } (in Hertz) at two voltage levels, − 60 mV and − 55 mV. A rectangular low-pass filter was applied a posteriori to remove noise above 36 Hz. The highest stimulation frequency is 17.8 Hz, which implies that the highest frequency of the quadratic response is 2 × 17.8 = 35.6 Hz; hence the cutoff at 36 Hz is valid for a quadratic analysis. Figure 2(a) shows the current responses in the time domain. Clearly, the quadratic response is more accurate than the linear one. The residual error is due to experimental noise or higher order frequency contamination, which is inevitable in any experiment. Each data sequence is an average of four recordings or more using the experimental protocol as described in Idoux et al. (2008). The quadratic analysis was adequate in all experiments, except when the stimulation amplitude was either too large, evoking higher order frequency contamination, or too small to overcome synaptic or intrinsic channel fluctuations.

One of the most important conditions to ensure the quality of a nonlinear voltage clamp experiment is the time invariance, which means that the same voltage input must always generate the same current output. Therefore, it is necessary to compute the correlation between all the recordings in order to ensure that they are reasonably time invariant. The quadratic response i 2 (t ) (defined previously) can be extracted from the full response I(t) by Fourier analysis for each of the M recordings (in this experiment M = 4). This provides M signals \(r_{1}=\mathbf{i_{2,1}}\left(t\right),\ldots,r_{M}=\mathbf{i_{2,M}}\left(t\right)\). In fact, each i 2,m is the quadratic part of the m-th recording. The pairwise correlations are then encoded into the matrix of pairwise products \(\left\langle r_{i},r_{j}\right\rangle =\int r_{i}\left(t\right)r_{j}\left(t\right)dt\)

The symmetry of the matrix reduces the number of computations. From this, the time invariance correlation coefficient, ticc, can be defined as the coefficient of variation of the elements of this matrix, that is ticc = σ/μ where σ and μ are the standard deviation and the mean of the elements of this matrix, respectively. When all dot products are identical the ticc is zero, otherwise it increases depending on the lack of correlation. Although empirical, the ticc has proved to be particularly efficient for automatic data extraction from large pools of experiments. In particular, the criterion ticc < 1 was used for the analyzed experiments. For the two experiments (Figs. 2 and 3), the ticc is 0.5989 at − 60 mV and 0.1087 at − 55 mV.
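
A possible implementation of the ticc is sketched below. Several details are assumptions made here because the text does not fix them: the integral is approximated by a discrete sum with a uniform time step, the statistics are taken over all M × M elements of the matrix (diagonal included), and the population standard deviation is used. The function names and the test signals are illustrative.

```python
import math
import statistics

def inner_product(r_i, r_j, dt=1.0):
    """Discrete approximation of the integral of r_i(t) * r_j(t) dt."""
    return sum(a * b for a, b in zip(r_i, r_j)) * dt

def ticc(recordings, dt=1.0):
    """Coefficient of variation (sigma / mu) of the matrix of pairwise products.

    Assumptions: all M x M elements are used, sigma is the population standard deviation.
    """
    m = len(recordings)
    products = [inner_product(recordings[i], recordings[j], dt)
                for i in range(m) for j in range(m)]
    return statistics.pstdev(products) / statistics.mean(products)

# Four identical quadratic responses are perfectly time invariant.
signal = [math.sin(2.0 * math.pi * k / 50.0) for k in range(50)]
print(ticc([signal, signal, signal, signal]))

# One recording with a different gain breaks the invariance.
scaled = [1.5 * x for x in signal]
print(ticc([signal, signal, signal, scaled]))
```

Because the measure is a ratio, it is insensitive to a common rescaling of all recordings but sensitive to differences between them.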

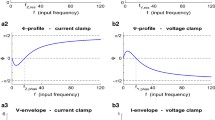

Fig. 3 Linear and nonlinear analysis at two membrane potentials. At the top, the impedance computed from usual linear analysis. In the middle, the eigenvalues of each QSA matrix. At the bottom, the R summation of each QSA matrix. For these plots, the frequency components were computed with the MATLAB command FFT divided by the number of points. The current was measured in nA and the voltage in mV

Figures 2(b) and (c) compare the magnitudes of the QSA matrices. In general, the magnitudes tend to globally increase at depolarized levels, as illustrated here when comparing − 60 to − 55 mV. Figures 2(d) and (e) compare the magnitudes of the interpolated QSA matrices. The interpolations were performed by the MATLAB command GRIDDATA (linear method) in order to represent the responses in 3D color plots over a continuous range of frequencies. The approach allows a coarse approximation of the response including overlapping frequencies. This can be further improved by combining additional QSA matrices constructed from other nonoverlapping measurements. As can be observed, the peaks of the frequency interactions are approximately in the same location after depolarization. Moreover, new peaks appear at high frequencies.

Figure 3 compares the linear and quadratic analyses for a voltage clamped neuron at two membrane potentials. The upper plot shows the linear impedance, which dramatically decreases for a 5 mV change of potential. The middle plot of Fig. 3 shows eigenvalues that increase with depolarization and furthermore, the interesting result that there is mainly one dominant eigenvalue, especially at − 55 mV. This means that a single large square \(d_{i}\left|\mathbf{w_{t}}\right|_{i}^{2}\) plays a major role in the description of the neuronal function. However, at different membrane potentials or with other types of neurons there may be two or more significant eigenvalues. It would appear that the distribution of eigenvalues provides an indication of the complexity of the information processing used by neurons. An important observation is that the eigenvalues increase at the depolarized levels consistent with the increased QSA matrix amplitudes.

The bottom plot of Fig. 3 shows a summation by columns of the QSA matrix

Hence, each value R(j) represents the quadratic interactions involving each stimulation frequency ω j . The advantage of this summation is that a Bode-like plot can be made, which is easier to read than a square matrix. Again, the magnitude is larger at the depolarized level, which is consistent with and confirms the eigenvalue analysis. This plot shows that each stimulation frequency can significantly contribute to the nonlinear response. Clearly, R(j) has been enhanced during the voltage clamped depolarization at the higher frequencies as shown in Fig. 3. Thus, the nonlinear behavior becomes more important near the spiking threshold and is quantitatively determined by the QSA analysis.

3.2 Functional interpretation as a nonlinear–linear processing unit

The quadratic neuronal response given by Eq. (10) is encoded in the matrix B. The current i 2 (t) is then computed as a quadratic form, and thus B is actually the matrix of an associated bilinear form b. This means that b maps pairs of vectors to complex numbers and b is linear in each argument. Let E be the vector space of input vectors, which is spanned by the elementary waves \(\mathbf{e_{n_{k}}}\) defined in Section 2.3. With this notation, the bilinear map is b : E × E → ℂ.

The tensor product is said to be universal (Lang 2002) amongst all bilinear maps because it turns bilinear maps of E × E into linear maps of E ⊗ E. Thus, the tensor product E ⊗ E is a linear space with a particular bilinear map ⊗ : E × E → E ⊗ E such that for any bilinear map β : E × E → ℂ, there exists a unique linear map ℓ : E ⊗ E → ℂ, making the diagram commutative in Fig. 4(a).

Fig. 4 Functional interpretation as a nonlinear–linear processing unit. (a) The commutative diagram of the tensor product, which turns bilinear maps into linear maps. (b) The voltage clamp quadratic response computed for PHN neurons. The neuronal operator is decomposed as a quadratic nonlinearity followed by a linear filter applied to second order frequency interactions

Then, the neuronal bilinear response b can be decomposed as b(x, y) = L 2(x ⊗ y), where L 2 is linear due to the universal property of the tensor product. L 2 is completely determined on the basis vectors by \(L_2(\mathbf{e_{n_{i}}} \otimes \mathbf{e_{n_{j}}}) = b(\mathbf{e_{n_{i}}},\mathbf{e_{n_{j}}}) = B_{i,j}\). This leads to the definition of a quadratic nonlinearity N(v t ) = v t ⊗ v t such that the quadratic neuronal response becomes a linear filter on second order frequencies

\[\mathbf{i_{2}}\left(t\right)=L_{2}\left(N\left(\mathbf{v_{t}}\right)\right)=L_{2}\left(\mathbf{v_{t}}\otimes\mathbf{v_{t}}\right)\]

This interpretation is illustrated in Fig. 4(b), and is comparable to the sandwich model (Victor and Shapley 1980) without the linear prefilter. It is worth pointing out that the tensor product is independent of frequency overlaps. Thus, this interpretation is also appropriate for the analytical model (7), which considers symbolic second order frequency combinations. In addition, the universal property of the tensor product can be generalized to multilinear maps, which means that the interpretation above also generalizes to higher order systems. This suggests that although QSA and kernel methods (Victor and Shapley 1980) use different mathematical frameworks, the concepts are essentially the same, namely the polynomial modeling of nonlinear systems.
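The way the tensor product turns the bilinear form into a linear one can be checked numerically. The following is only a minimal sketch: the matrix `B` and the vectors `x`, `y` are random placeholders rather than measured data, and the helper names `bilinear` and `linear_on_tensor` are hypothetical. In coordinates, the tensor product of two vectors is taken to be their Kronecker product, and the linear map is the flattened matrix.

```python
import numpy as np

def bilinear(B, x, y):
    """Evaluate the bilinear form b(x, y) = x^T B y (no conjugation)."""
    return x @ B @ y

def linear_on_tensor(B, x, y):
    """Evaluate the same number as a linear map applied to x (x) y.

    In coordinates, x (x) y is the Kronecker product, and the linear map
    is the row vector obtained by flattening B row by row.
    """
    return B.reshape(-1) @ np.kron(x, y)

# Random placeholders (assumption: not taken from the paper's data).
rng = np.random.default_rng(0)
n = 4
B = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
x = rng.normal(size=n) + 1j * rng.normal(size=n)
y = rng.normal(size=n) + 1j * rng.normal(size=n)

direct = bilinear(B, x, y)
via_tensor = linear_on_tensor(B, x, y)

# On basis vectors the linear map returns the matrix entry B[i, j].
e = np.eye(n)
entry_1_2 = linear_on_tensor(B, e[1], e[2])
```

Both evaluations agree up to rounding, and applying the linear map to a pair of basis vectors recovers the corresponding entry of `B`, which is the sense in which the map is determined by the matrix.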

3.3 Functional interpretation of eigenvalues

As suggested by Eq. (14), the real eigenvalues of the matrix Q can be interpreted as the amplitudes of a quadratic filter. This is due to the Hermitian property of Q, which should be seen as a Hermitian operator rather than a bilinear kernel. Clearly, Q is a complicated linear transformation because it has only cross terms. However, after a change of basis via eigendecomposition, it becomes a simple filter encoded in the diagonal matrix D (Eq. (13)).

Let \(E_i\) be the elementary matrix whose only nonzero entry is a 1 at the i-th position on the diagonal. Then D can be expanded as

\[ D = \sum_{i \in \Gamma} d_i E_i \]

The quadratic neuronal response can be rewritten as

This suggests the introduction of a quadratic nonlinearity

Therefore, the quadratic neuronal response can be interpreted as a parallel combination of nonlinear–linear processing units

This interpretation is illustrated by Fig. 5, and is comparable to the sandwich model (Victor and Shapley 1980). In the case of one dominant eigenvalue, the diagram is reduced to a single nonlinear–linear processing unit.
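The decomposition into parallel units can be illustrated numerically. This is a hedged sketch, not the authors' implementation: `Q` is a random Hermitian placeholder, `v` is an arbitrary complex input vector, and the factorization with a unitary matrix `U` is the standard one returned by `numpy.linalg.eigh`. Each unit squares the magnitude of one coordinate in the eigenbasis and scales it by the corresponding real eigenvalue.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 6

# Hypothetical Hermitian matrix standing in for Q.
A = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
Q = (A + A.conj().T) / 2

# eigh returns real eigenvalues d and a unitary U with Q = U diag(d) U^*.
d, U = np.linalg.eigh(Q)

# Arbitrary complex input vector (placeholder).
v = rng.normal(size=n) + 1j * rng.normal(size=n)

# Hermitian form evaluated directly: v^* Q v.
direct = np.vdot(v, Q @ v)

# Same form after the change of basis w = U^* v: a weighted sum of squares.
w = U.conj().T @ v
units = d * np.abs(w) ** 2   # one nonlinear-linear unit per eigenvalue
via_units = units.sum()
```

When one eigenvalue dominates, a single entry of `units` carries most of the sum, which corresponds to the single-unit case mentioned above.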

3.4 Functional interpretation of traceless constraint

As explained in a previous section, the trace of the matrix Q is constrained to zero in order to avoid DC terms in the quadratic response. This implies a slightly different interpretation of the eigenvalues. The trace of the matrix D is also zero, thus the 2N eigenvalues \(d_i\) are subject to the constraint

\[ \sum_{i \in \Gamma} d_i = 0 \]

which implies that 2N − 1 eigenvalues are free and one eigenvalue is bound, for example

\[ d_N = -\sum_{i \in \Gamma - \{N\}} d_i \]

The eigenvalue \(d_N\) is chosen as an example, without loss of generality.

The goal is to reformulate the matrix D as a linear combination of 2N − 1 instead of 2N matrices, in order to take into account the traceless constraint. Traceless matrices of dimension 2N × 2N over the complex numbers, like Q, are elements of the so-called special linear algebra sl(2N, ℂ), which is a Lie algebra (Erdmann 2007). As a special case, traceless diagonal matrices, like D, are elements of the Cartan subalgebra \(\mathfrak{h}\), which is an abelian Lie subalgebra of sl(2N, ℂ).

Let \(F_i = E_i - E_{i+1}\) be a set of 2N − 1 matrices for i ∈ Γ − { N }. Then, the set of matrices \(F_i\) forms a basis of \(\mathfrak{h}\) (Erdmann 2007). Thus, the matrix D can be reformulated as a linear combination of 2N − 1 matrices

It can be checked that for k ∈ Γ − {N}

Hence

This formula provides an alternative interpretation (Fig. 6) of the quadratic neuronal behavior as a sum of 2N − 1 differential units \(N_k - N_{k+1}\), which has the advantage of taking into account the traceless constraint. This approach is especially appropriate in the absence of dominant eigenvalues.
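The reformulation of a traceless diagonal matrix in terms of differences of elementary matrices can be verified on a small example. The sketch below makes several assumptions: indices run from 0 to m − 1 rather than over the index set used in the text, the eigenvalues in `d` are illustrative numbers, and the coefficient name `c` and the helper names are hypothetical. Matching diagonal entries shows that the coefficients are the partial sums of the eigenvalues, and that the last partial sum vanishes exactly when the trace is zero.

```python
import numpy as np

def elementary(i, m):
    """E_i: m x m matrix with a single 1 at diagonal position i."""
    E = np.zeros((m, m))
    E[i, i] = 1.0
    return E

def cartan_coefficients(d):
    """Coefficients c_k with diag(d) = sum_k c_k (E_k - E_{k+1}).

    Matching diagonal entries gives c_k - c_{k-1} = d_k, so c_k is the
    partial sum d_0 + ... + d_k. The last partial sum equals the trace,
    which must vanish for the decomposition to exist.
    """
    d = np.asarray(d, dtype=float)
    if not np.isclose(d.sum(), 0.0):
        raise ValueError("eigenvalues must sum to zero")
    return np.cumsum(d)[:-1]

# Illustrative traceless spectrum (assumption, not measured values).
d = np.array([3.0, -1.0, 0.5, -2.5])
m = len(d)

c = cartan_coefficients(d)
F = [elementary(k, m) - elementary(k + 1, m) for k in range(m - 1)]

# Rebuild D from m - 1 differential matrices instead of m elementary ones.
D_rebuilt = sum(ck * Fk for ck, Fk in zip(c, F))
```

Only m − 1 coefficients are needed because the bound eigenvalue is absorbed by the differences, which mirrors the sum of differential units described above.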

4 Discussion

Membrane biophysics

In this paper, quadratic sinusoidal analysis (QSA) has been developed to probe the quadratic structure of voltage clamped neuronal responses, and to demonstrate experimentally that such a structure exists in PHN neurons. This new result confirms the need to extend the previous development of piecewise linear analysis in order to quantitatively investigate the nonlinear properties of neurons and their dendrites (Idoux et al. 2008). The piecewise approach consists of using small signals at different steady state membrane potentials and then computing a linear admittance operator in each case, as described in Fishman et al. (1977) and Murphey et al. (1995). With QSA, it is now possible to compute a linear + quadratic neural operator, providing a nonlinear analysis for a range of membrane potentials appropriate for synaptic integration.

QSA has been introduced at the biophysical level of ionic channels by using a simplified Hodgkin–Huxley model (Hodgkin and Huxley 1952). The differential equations were solved with Mathematica 7, extending the work pioneered by FitzHugh (1983) to multi-sinusoidal stimulations. The result is a quadratic polynomial explicitly showing the quadratic interactions that otherwise are not directly apparent in the HH type model. The method is a perturbative analysis similar to that commonly used in theoretical physics, where analytical solutions to a complicated nonlinear problem are approximated by a power series. In particular, the formal approach developed in this paper could be useful to improve parameter estimation of HH models to quadratic precision, where linearized neuronal models could be replaced by quadratic neuronal models. Furthermore, unlike spike train centric methods, the intracellular membrane potential measurements allow one to establish relationships between model parameters and measured neuron responses.

The multi-sinusoidal voltage clamp response has been reformulated with linear and bilinear algebra by projecting signals in a basis of elementary waves. This led to the definition of a Hermitian matrix, which further expands the current response into two parts characterized by linear and Hermitian forms. In this way, the neuronal response is expressed in an algebraic framework (Eqs. (11) and (12)), which suggests a possible connection with self-adjoint operator theory (Blackadar 2009) through the Hermitian matrix. Moreover, the compactness and efficiency of the matrix calculus used in this paper suggest new approaches to represent neurons by algebraic operators in order to address the problem of large scale neural network simulations.

Nonlinear methods

As explained before, there are two mathematical representations for the quadratic response function, corresponding to \(B_{i,j}\) and \(Q_{i,j}\) respectively. QSA emphasizes the Hermitian matrix representation \(Q_{i,j}\) because it is much more efficient when dealing with complex coefficients. However, the matrix \(B_{i,j}\) is similar to a second order Volterra kernel, which is a classical tool in computational neuroscience (see Schetzen (2006) for basic theory and Westwick and Kearney (2003) for more physiological applications). Most previous studies have considered spiking neurons from a phenomenological point of view. For example, Poggio and Torre (1977) derive analytical expressions for the instantaneous firing rate using a Volterra representation. In particular, these authors consider a multi-sinusoidal input in order to obtain frequency kernels that have been determined by a harmonic input method (Bedrosian and Rice 1972; Victor 1977).

Alternatively, the Wiener theory has been frequently used in computational neuroscience. It consists of probing a nonlinear system with a Gaussian white noise stimulus and computing Wiener kernels. The resulting Wiener series is an orthogonalized Volterra series with uncorrelated kernel outputs. It can be shown (Schetzen 2006) that Wiener kernels can be estimated independently by cross-correlation techniques. This approach was pioneered by Marmarelis and Naka in retinal neurons, who provided a quantitative description of the nonlinear function for the catfish retinal neuron chains (Marmarelis and Naka 1973). A practical estimation in the frequency domain was provided by French (1976) improving the speed of computation by replacing cross correlations with complex multiplications. Unfortunately, Wiener theory has at least three major sources of inaccuracies in practice, as pointed out by Korenberg et al. (1988): (1) ideal Gaussian white noise is not realistic because of the intrinsic noise frequency response of stimulation devices; (2) the infinite time average cross-correlation must be approximated by a finite time average due to the limited duration of experiments; and (3) the Wiener G-functionals are not perfectly orthogonal over a finite data record.

Major progress in nonlinear analysis was made by approximating the white noise approach using sums of sinusoids (Victor 1977, 1979; Victor and Shapley 1980). This approach has the great advantage of using deterministic inputs, which is fundamentally different from white noise analysis. Thus, multiple sets of inputs and time averaging are used to estimate nonlinearities. As explained in Victor and Shapley (1980), this approach is designed for extracting information from a system in order to develop models of its higher order nonlinear behavior. Similarly, the experimental QSA method makes use of a noiseless deterministic sum of sinusoids to provide a complete quadratic algebraic characterization for a particular set of frequencies.
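The principle of probing a quadratic system with a deterministic sum of sinusoids can be sketched with a toy example. Everything below is an assumption made for illustration: the system is a static polynomial (a linear term plus a small quadratic term) rather than a neuron, the integer frequencies `7, 11, 17` and the record length are arbitrary, and the helper name `spectrum_bins` is hypothetical. Because each input frequency completes a whole number of cycles in the record, the Fourier transform has no leakage and the quadratic term appears only at sums, differences and second harmonics of the inputs.

```python
import numpy as np

def spectrum_bins(signal):
    """Amplitude at each integer frequency bin of a one-period record."""
    return 2 * np.abs(np.fft.rfft(signal)) / len(signal)

# Hypothetical stimulus: unit-amplitude cosines at integer cycles per record.
freqs = (7, 11, 17)
n_samples = 1024
t = np.arange(n_samples) / n_samples
v = sum(np.cos(2 * np.pi * f * t) for f in freqs)

# Toy static system (assumption): linear term plus a small quadratic term.
i_out = v + 0.1 * v ** 2

amp = spectrum_bins(i_out)

# Nonzero output bins, ignoring the DC bin at index 0.
active = {k for k in range(1, n_samples // 2) if amp[k] > 1e-9}

# Second order frequencies predicted from the input set.
sums = {a + b for a in freqs for b in freqs}
diffs = {abs(a - b) for a in freqs for b in freqs if a != b}
predicted = set(freqs) | sums | diffs
```

The set of active bins coincides with the predicted set, so each second order interaction can be read off at its own output frequency. The toy system also produces a DC component, which is excluded here.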

Clearly, the mathematical foundations of QSA have their roots in functional analysis, where signals are decomposed in vector spaces and transformations are represented by linear, bilinear or Hermitian operators. The Fourier basis is extremely efficient with both analytical models and experimental data. The discussion above has shown that QSA can also be connected to Volterra theory and compared to other methods such as Wiener theory. This paper has demonstrated the novel result that PHN neurons can be characterized by QSA theory, namely that synaptic responses of a few mV are fundamentally quadratic. This suggests that neuronal responses are well suited to functional analysis and vector spaces. A modern introduction to many of the underlying concepts can be found in Mallat (2008). Moreover, multilinear algebra with matrix calculus has previously been used in neuroscience studies, such as Ahrens et al. (2008).

Interacting frequencies

Some techniques have been proposed earlier to generate second order nonoverlapping frequencies, such as with relatively prime integers (Boyd et al. 1983) or particular algorithms (Victor and Shapley 1980). Interestingly, in the latter paper, the problem of higher order frequency overlaps is treated by using multi-sinusoidal stimulations with different relative phases. In this way, it is possible to separate the contributions of different input frequencies to a shared output frequency. The voltage clamp experiments described in this paper impose constraints on the waveform of the command input in order to satisfy biological criteria (piecewise level, random nonoverlapping frequency set, amplitude like synaptic inputs) or to optimize Fourier computations (duration and sampling time as decimal numbers without roundoff). Moreover, identical time-invariant deterministic responses were averaged to obtain a reliable and accurate second order description. In particular, an important aspect of these measurements is the use of a fixed deterministic stimulus with phases selected to minimize the dynamic range, which can be averaged in real time to reduce spontaneous synaptic noise. For this purpose, a solver was implemented in MATLAB to satisfy all constraints for generating suitable waveforms with nonoverlapping frequencies. In addition, the algorithm was directly embedded in a whole cell clamp acquisition program, making it directly available during an experiment.
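The nonoverlapping requirement at second order can be expressed as a simple test on a candidate set of integer frequencies. The sketch below is not the solver described above: the function names are hypothetical, and the criterion is an assumed one, namely that all sums, second harmonics and differences are distinct from each other and from the input frequencies themselves. The other experimental constraints (amplitudes, phases, duration) are not modeled.

```python
from itertools import combinations, combinations_with_replacement

def second_order_outputs(freqs):
    """List every second order output frequency with a label of its origin.

    Sums n_i + n_j (including second harmonics 2 n_i) and
    differences |n_i - n_j| for i != j.
    """
    out = []
    for a, b in combinations_with_replacement(freqs, 2):
        out.append((a + b, f"{a}+{b}"))
    for a, b in combinations(freqs, 2):
        out.append((abs(a - b), f"|{a}-{b}|"))
    return out

def is_nonoverlapping(freqs):
    """True if no second order output coincides with another output
    or with one of the input frequencies (assumed criterion)."""
    seen = set(freqs)
    if len(seen) != len(freqs):
        return False
    for value, _ in second_order_outputs(freqs):
        if value in seen:
            return False
        seen.add(value)
    return True

ok = is_nonoverlapping([7, 11, 17])      # all 9 outputs are distinct
clash = is_nonoverlapping([2, 3, 5])     # 2 + 3 lands on the input 5
```

A set of p input frequencies yields p(p + 1)/2 sums and p(p − 1)/2 differences, so a valid set reserves p² distinct second order output frequencies in addition to the p inputs.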

There also exists a fundamental arithmetic characterization of frequency processing. Let \(\Omega = \{n_1, \cdots, n_p\} \subset \mathbb{N} - \{0\}\) be a set of input frequencies simplified to integer numbers and \(\left\langle \Omega \right\rangle\) the corresponding frequency group as a subgroup of ℤ. The frequency group encodes the set of all output frequencies like those measured in the neuronal response, including harmonic and intermodulation products of any order. The group \(\Lambda = \mathbb{Z}^p\) is called the formal frequency group and its elements are formal frequencies. It encodes the symbolic frequency combinations like those computed in the analytical models (Section 2.1 or 2.2). For example, a tuple (1, −1, 0, ⋯, 0) represents a symbolic frequency \(n_1 - n_2\). Then it is natural to define the surjective evaluation homomorphism \(\mu : \Lambda \rightarrow \left\langle \Omega \right\rangle\) such that

\[ \mu(\lambda_1, \cdots, \lambda_p) = \sum_{i=1}^{p} \lambda_i n_i \]

The key point is that frequency overlaps are due to the noninjectivity of μ. More precisely, frequency overlaps can be defined as equivalence classes [λ] of the quotient group \(\Lambda / \ker \mu\). Then the isomorphism \(\Lambda / \ker \mu \simeq \left\langle \Omega \right\rangle\) implies a one-to-one relationship between overlaps and measured output frequencies. Therefore, each frequency overlap is determined by a linear Diophantine equation (Manin 2005)

This approach suggests that the usual polynomial approximations may be refined by considering that a neuron in fact performs arithmetic on frequencies. Thus, the frequency arithmetic of a neuron, rather than a truncation of the order of nonlinearity, could be explored as a fundamental neuronal algorithm.
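The relationship between formal frequencies and measured output frequencies can be explored by brute force for small examples. The sketch below assumes that the evaluation map sends a tuple of integers to the corresponding integer combination of the input frequencies, and that the order of a formal frequency is the sum of the absolute values of its entries; the function names `mu` and `fibers` and the input set `(1, 2)` are hypothetical choices for illustration. Formal frequencies that share a value form an overlap, and their difference lies in the kernel of the map.

```python
from collections import defaultdict
from itertools import product

def mu(lam, omega):
    """Evaluate a formal frequency: integer combination of the inputs."""
    return sum(l * n for l, n in zip(lam, omega))

def fibers(omega, max_order):
    """Group formal frequencies of order 1..max_order by evaluated value.

    The order of a tuple is the sum of the absolute values of its entries
    (assumed definition). Each group with more than one element is an overlap.
    """
    groups = defaultdict(list)
    span = range(-max_order, max_order + 1)
    for lam in product(span, repeat=len(omega)):
        order = sum(abs(l) for l in lam)
        if 1 <= order <= max_order:
            groups[mu(lam, omega)].append(lam)
    return dict(groups)

omega = (1, 2)                       # input 2 is the second harmonic of input 1
groups = fibers(omega, max_order=2)

# The harmonic 2*n_1 and the fundamental n_2 share the output frequency 2.
overlap_at_2 = sorted(groups[2])

# Their difference (2, -1) is a nonzero element of the kernel.
kernel_value = mu((2, -1), omega)
```

Here the output frequency 2 collects the formal frequencies `(0, 1)` and `(2, 0)`, so a measurement at that frequency cannot separate the linear response to the second input from the second harmonic of the first.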

5 Conclusion

In conclusion, QSA is a novel computational method to characterize the nonlinear responses of individual neurons. This paper demonstrates that voltage clamped PHN neurons involved in the neural integrator can be adequately described by a linear + quadratic operator, with no need for higher orders for physiological synaptic inputs. The eigendecomposition allows an intrinsic description of the neuron’s quadratic function as a sum of squares. Remarkably, very few eigenvalues can be sufficient to describe the experimentally measured PHN neurons, leading to a very compact description of the neural unit. Furthermore, the complexity inherent in the nonlinear neuronal behavior, as illustrated by the analytical expressions derived in this paper, has been explored through Hermitian matrix calculus. This model provides an algebraic neural operator for the entire voltage clamped PHN neuron with its particular ion conductance channel distributions throughout its dendritic structure.

References

Ahrens, M. B., Linden, J. F., & Sahani, M. (2008). Nonlinearities and contextual influences in auditory cortical responses modeled with multilinear spectrotemporal methods. The Journal of Neuroscience, 28(8), 1929–1942.

Aksay, E., Olasagasti, I., Mensh, B. D., Baker, R., Goldman, M. S., & Tank, D. W. (2007). Functional dissection of circuitry in a neural integrator. Nature Neuroscience, 10, 494–504.

Bedrosian, E., & Rice, S. O. (1972). Applications of Volterra-system analysis. Report, Rand Corp., Santa Monica, CA.

Blackadar, B. (2009). Operator algebras. New York: Springer.

Boyd, S., Tang, Y., & Chua, L. (1983). Measuring Volterra kernels. IEEE Transactions on Circuits and Systems, 30(8), 571–577.

Erdmann, K. (2007). Introduction to Lie algebras. New York: Springer.

Fishman, H. M., Poussart, D. J., Moore, L. E., & Siebenga, E. (1977). K+ conduction description from the low frequency impedance and admittance of squid axon. Journal of Membrane Biology, 32(3–4), 255–290.

FitzHugh, R. (1983). Sinusoidal voltage clamp of the Hodgkin-Huxley model. Biophysical Journal, 42(1), 11–16.

French, A. S. (1976). Practical nonlinear system analysis by Wiener kernel estimation in the frequency domain. Biological Cybernetics, 24, 111–119.

Goldman, M. S., Levine, J. H., Major, G., Tank, D. W., & Seung, H. S. (2003). Robust persistent neural activity in a model integrator with multiple hysteretic dendrites per neuron. Cerebral Cortex, 13, 1185–1195.

Hodgkin, A. L., & Huxley, A. F. (1952). A quantitative description of membrane current and its application to conduction and excitation in nerve. Journal of Physiology, 117(4), 500–544.

Idoux, E., Serafin, M., Fort, P., Vidal, P. P., Beraneck, M., Vibert, N., et al. (2006). Oscillatory and intrinsic membrane properties of guinea pig nucleus prepositus hypoglossi neurons in vitro. Journal of Neurophysiology, 96, 175–196.

Idoux, E., Eugene, D., Chambaz, A., Magnani, C., White, J. A., & Moore, L. E. (2008). Control of neuronal persistent activity by voltage-dependent dendritic properties. Journal of Neurophysiology, 100(3), 1278–1286.

Korenberg, M. J., Bruder, S. B., & McIlroy, P. J. (1988). Exact orthogonal kernel estimation from finite data records: Extending Wiener’s identification of nonlinear systems. Annals of Biomedical Engineering, 16, 201–214.

Koulakov, A. A., Raghavachari, S., Kepecs, A., & Lisman, J. E. (2002). Model for a robust neural integrator. Nature Neuroscience, 5, 775–782.

Lang, S. (2002). Algebra. New York: Springer.

Mallat, S. (2008). A wavelet tour of signal processing. New York: Academic.

Manin, Y. (2005). Introduction to modern number theory. New York: Springer.

Marmarelis, P. Z., & Naka, K. I. (1973). Nonlinear analysis and synthesis of receptive-field responses in the catfish retina. I. Horizontal cell leads to ganglion cell chain. Journal of Neurophysiology, 36(4), 605–618.

Mauro, A., Conti, F., Dodge, F., & Schor, R. (1970). Subthreshold behavior and phenomenological impedance of the squid giant axon. The Journal of General Physiology, 55(4), 497–523.

Moore, L. E., Fishman, H. M., & Poussart, D. J. (1980). Small-signal analysis of K+ conduction in squid axons. Journal of Membrane Biology, 54(2), 157–164.

Murphey, C. R., Moore, L. E., & Buchanan, J. T. (1995). Quantitative analysis of electrotonic structure and membrane properties of NMDA-activated lamprey spinal neurons. Neural Computation, 7(3), 486–506.

Poggio, T., & Torre, V. (1977). A Volterra representation for some neuron models. Biological Cybernetics, 27, 113–124.

Schetzen, M. (2006). The Volterra and Wiener theories of nonlinear systems. Malabar: Krieger.

Victor, J. (1977). Nonlinear analysis of cat retinal ganglion cells in the frequency domain. Proceedings of the National Academy of Sciences of the United States of America, 74(7), 3068–3072.

Victor, J. (1979). Nonlinear systems analysis: Comparison of white noise and sum of sinusoids in a biological system. Proceedings of the National Academy of Sciences of the United States of America, 76(2), 996–998.

Victor, J., & Shapley, R. (1980). A method of nonlinear analysis in the frequency domain. Biophysical Journal, 29(3), 459–483.

Westwick, D. T., & Kearney, R. E. (2003). Identification of nonlinear physiological systems. Piscataway: IEEE.

Action Editor: Jonathan D. Victor

Magnani, C., Moore, L.E. Quadratic sinusoidal analysis of voltage clamped neurons. J Comput Neurosci 31, 595–607 (2011). https://doi.org/10.1007/s10827-011-0325-0