Abstract

The autism intervention literature focuses heavily on the concept of evidence-based practice, with less consideration of the acceptability, feasibility, and contextual alignment of interventions in practice. A survey of 130 special educators was conducted to quantify this “social validity” of evidence-based practices and analyze its relationship with knowledge level and frequency of use. Results indicate that knowledge, use, and social validity are tightly connected and are highest for modeling, reinforcement, prompting, and visual supports. Regression analysis suggests that greater knowledge, higher perceived social validity, and a caseload including more students with autism predict more frequent use of a practice. The results support the vital role that social validity plays in teachers’ implementation, with implications for both research and practice.

Between 2008 and 2015, nearly 500 million government dollars were invested in research seeking effective treatments and interventions for individuals with Autism Spectrum Disorder (ASD; U.S. Department of Health & Human Services 2016). Unfortunately, many of the evidence-based practices (EBPs) born of such research never take root in the classrooms where students with ASD await their benefit. Research suggests that, although interventions have been shown to be efficacious in empirical studies, teachers often apply EBPs with novel adaptations, in untested combinations, or not at all (Dingfelder and Mandell 2011). Teachers and schools face substantial barriers to operationalizing EBPs for students with ASD, including macro-level structures and policies, resource limitations, and perceptions of the goodness of fit and feasibility of use (Cook and Odom 2013). The current literature on EBP for students with ASD is extensive in its measures of effectiveness within tightly controlled protocols but comparatively minimal in its consideration of acceptability to the practitioners it aims to support.

The EBP movement has gained traction within schools as a result of legislation mandating the use of research-aligned instructional methods in both general (No Child Left Behind Act 2002) and special education (Individuals with Disabilities Education Act 2004). Researchers in autism intervention have developed a comprehensive catalog of EBPs through multiple systematic reviews identifying practices supported by robust empirical evidence. The National Autism Center (NAC) identified fourteen “established” intervention practices for children with autism in 2015, and the National Professional Development Center (NPDC) on ASD currently recognizes 27 EBPs supported by experimental evidence (Wong et al. 2015). With considerable overlap, both reviews have identified practices that support developmental, behavioral, and educational outcomes (NAC 2015; Wong et al. 2015). Self-reports of school practitioners suggest that the most frequently used EBPs include visual supports, reinforcement, social skills strategies, modeling, and prompting (Paynter et al. 2018; Robinson et al. 2018; Sansosti and Sansosti 2013). Nevertheless, EBP continues to face implementation challenges that threaten to limit its functional application in the day-to-day business of classrooms and teachers (Cook and Odom 2013; Dingfelder and Mandell 2011).

A practice’s designation as evidence-based relies heavily on positive student outcomes across multiple peer-reviewed studies using rigorous group and/or single-subject designs (NAC 2015; Wong et al. 2015). Yet, as a direct result of the requirement for those studies to include “variables [that] are so well-controlled that independent scholars can draw firm conclusions from the results” (NAC 2015, p. 22), the empirical evidence base does not account for the idiosyncratic factors that affect different people, settings, and organizations. These factors fall under the construct of “social validity,” which is measured subjectively in terms of the social value of goals, the acceptability of procedures, and consumer satisfaction with outcomes (Wolf 1978). An intervention’s social validity has implications for how readily it will be accepted and maintained in applied settings. Recently, Callahan et al. (2017) undertook a systematic review of social validity measurement across all 828 articles included in the NAC or NPDC inventories. Although experts have recommended social validity measurement as a quality indicator for EBPs (Horner et al. 2005), neither team required such measurement for a study’s inclusion in its review. Findings indicate that only 27% of the articles supporting EBPs on the basis of efficacy also included a measure of social validity. Of those, the vast majority (73%) focused on consumer satisfaction, or how pleased the practitioner was with the specific intervention. Of the 221 studies that directly measured social validity, fewer than half considered “socially important dependent variables,” while only about a quarter addressed the time and cost-effectiveness issues often associated with procedural execution (Callahan et al. 2017).
Clearly, there is a dearth of social validity measurement occurring within the field of ASD interventions at the present time.

Echoing Wolf’s (1978) call for subjective measurement in applied behavior analysis, the accepted method of measuring social validity involves directly “ask[ing] those receiving, implementing, or consenting to a treatment about their opinions of the treatment” (Carter 2010, p. 2). Previous surveys have collected social validity ratings for EBP as a general concept rather than for individual practices. Educational practitioners have reported that individual student needs are an important consideration in their selection and use of EBP (Robinson et al. 2018), although contextual factors such as time, cost, and resource access have rarely been studied. However, surveys of early intervention and school psychology practitioners have linked EBP use to positive organizational culture (Paynter and Keen 2014), personnel capacity and perceptions (Robinson et al. 2018), and training in the targeted practice (Combes et al. 2016). Lastly, a study specifically considering social skills EBPs suggested that an individual’s “openness” to research was a significant predictor of implementation (Combes et al. 2016).

Treating the “evidence-based” label as the gold standard for intervention risks excluding practical and personal considerations that go beyond effectiveness. Even the widely disseminated and frequently cited NPDC report on EBPs acknowledges that practitioners cannot rely on efficacy alone but must also consider factors such as “students’ previous history… teachers’ comfort… feasibility of implementation… and family preferences” (Wong et al. 2015, p. 33). A combined approach of “evidence-based practice” and “practice-based evidence” invites bidirectional relationships between researchers and practitioners in order to elucidate not only what works, but also why and how. Self-reports from practitioners can provide a view into their classrooms to better understand which EBPs are the most acceptable, feasible, and well aligned with their practice. If, as Wolf (1978) suggests, social validity is fundamental to widespread acceptance and use of EBPs, this nuanced understanding is vital to the goal of developing and adapting EBPs for everyday settings faced with everyday challenges.

This study sought to fill a gap in the literature by describing the current status of knowledge and use of the NPDC’s 27 EBPs for students with ASD and to further clarify the perceived social validity of each individual practice through a cross-sectional, anonymous online survey of North Carolina special education teachers. Additionally, the study was designed to explore what, if any, potentially meaningful connections exist among teacher and school-specific characteristics and the components of social validity (namely, a practice’s acceptability, feasibility, and contextual alignment), and how those factors might predict teachers’ frequency of use of EBPs. Research questions included:

1. What is the level of knowledge and use of the 27 EBPs among public-school special education teachers?
2. Which EBPs do special education teachers perceive as the most and least socially valid in public-school settings?
3. What relationships exist among knowledge, use, and social validity of EBPs?
4. How do attitudinal, training, and demographic factors relate to EBP knowledge, use, and social validity perceptions of special education teachers?

Methods

Participants

The population for this study comprised pre-kindergarten through grade 12 public-school special education teachers in North Carolina who were currently teaching at least one student with ASD. North Carolina teachers represent a range of experience levels and geographic regions, and the state is home to multiple renowned universities and research institutions focused on ASD. Other school practitioners, such as paraprofessionals and related service providers, were excluded. Informed consent was obtained from all individual participants included in the study, and no personally identifiable information was obtained.

Participants were recruited through snowball sampling, with the survey initially distributed to special education directors across the state for dissemination to their districts’ teachers. The survey was fully completed by 130 eligible educators representing 26 of the 115 school districts in the state. A full description of respondent characteristics appears in Table 1. Participants represented a range of North Carolina school districts in terms of geographic location within the state and district size. To check representativeness, the sample was compared with the most current available demographic data for North Carolina teachers across all grade levels and content areas. The sample reflected the overall distribution of years of teaching experience but included slightly higher proportions of females (79.8% statewide), teachers holding master’s degrees (27% statewide), and teachers in rural districts (14.9% statewide) than the overall population of teachers (North Carolina Department of Public Instruction 2019).

Measures

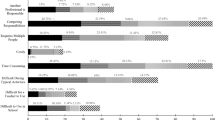

Data were collected via a self-report online survey. The first portion of the survey included demographic questions covering the respondent characteristics described above. The second portion comprised matrices of the 27 EBPs designated by the NPDC (Wong et al. 2015), each paired with a Likert-type rating of agreement with a statement about subjective knowledge of the practice, adapted from the Usage Rating Profile-Intervention Revised (URP-IR; Chafouleas et al. 2011), and a rating of frequency of use from never to frequently (adapted from Paynter and Keen 2014). Respondents were then conditionally presented with a series of rating questions related to the social validity of those EBPs for which they indicated any level of subjective knowledge on the initial matrix. These statements were also selected from the URP-IR (Chafouleas et al. 2011), an instrument designed to measure the multiple factors that may influence uptake and implementation of specific interventions. A sample of the individual items and the rating method used in this survey is shown in Fig. 1. Items were selected based on their high loadings in factor analyses of the URP-IR (Briesch et al. 2013). The first two items were chosen to measure the social validity construct of “acceptability,” the next two to measure “feasibility,” and the final two to measure “contextual alignment.”

Fig. 1 Social validity survey items (adapted from Chafouleas et al. 2011)
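The six items map onto the three social validity subscales in pairs. The text does not specify how subscale and overall scores were computed, so the following is only a minimal sketch under assumed scoring rules: each subscale is taken as the mean of its two items, overall social validity as the mean of all six, and ratings as integers from 1 to 6 to match the 6-point scale reported in the Results. The item positions, dictionary keys, and the function name `score_social_validity` are hypothetical.

```python
from statistics import mean

# Hypothetical item positions, following the order described in the text:
# items 1-2 acceptability, 3-4 feasibility, 5-6 contextual alignment.
SUBSCALES = {
    "acceptability": (0, 1),
    "feasibility": (2, 3),
    "contextual_alignment": (4, 5),
}

def score_social_validity(ratings):
    """Score one respondent's six ratings for one practice.

    Assumption: subscale = mean of its two items; overall = mean of all six.
    """
    if len(ratings) != 6 or not all(1 <= r <= 6 for r in ratings):
        raise ValueError("expected six ratings on a 1-6 scale")
    scores = {name: mean(ratings[i] for i in idx) for name, idx in SUBSCALES.items()}
    scores["overall"] = mean(ratings)
    return scores

print(score_social_validity([5, 6, 4, 4, 5, 3]))
# {'acceptability': 5.5, 'feasibility': 4, 'contextual_alignment': 4, 'overall': 4.5}
```

Averaging rather than summing keeps every score on the same 1 to 6 metric as the items, which is consistent with the subscale and overall means being reported on a 6-point scale.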

Respondents also selected the type of training they had received on each of these EBPs, using definitions adapted from the Autism Treatment Survey (ATS; Hess et al. 2008). The final section of the survey asked respondents to indicate their attitudes toward the concept of EBP in general, using elements of the Evidence-Based Practice Attitude Scale (EBPAS; Aarons et al. 2010) to assess their openness to research, the perceived divergence between research and practice, and the requirement to implement EBP. All measures included in the survey were selected for their previously established validity and reliability.

Procedure

The electronic survey was developed and pre-tested by individuals with knowledge of the content and experience teaching in the public-school setting, with slight language adjustments made in response to feedback. The survey information and direct link were emailed to the special education program directors across North Carolina with a description of the research and a request to forward the survey to their relevant staff. Respondents completed the one-time, self-administered electronic survey independently. The survey was estimated to take between 30 and 45 min, depending upon how many conditional questions were triggered by participant responses. Participants were given the option to enter their name into a random drawing for one of eleven gift cards modestly valued between $25 and $100 in order to encourage participation (Dillman et al. 2014). Program directors were offered an executive summary of results in return for assisting with dissemination.

The survey remained open for 1 month in the fall of 2018, and the sample comprised all responses received during that period. Of the 187 responses received, 28 indicated demographics that made them ineligible (not a public-school special education teacher or not currently teaching any students with ASD), and an additional 29 were incomplete, lacking ratings of frequency of use, the primary dependent variable. As a result, 130 responses were retained for analysis.
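The two exclusion steps can be expressed as a sequential filter: eligibility first, then completeness of the frequency ratings. This is a hypothetical sketch; the `Response` fields stand in for the survey's screening items and frequency matrix, and the synthetic records are constructed only to reproduce the reported counts (187 received, 28 ineligible, 29 incomplete, 130 retained).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Response:
    # Hypothetical fields standing in for the survey's screening items.
    public_sped_teacher: bool               # currently a public-school special education teacher
    teaches_student_with_asd: bool          # currently teaching at least one student with ASD
    frequency_ratings: Optional[List[int]]  # None if the frequency matrix was left incomplete

def screen(responses: List[Response]) -> Tuple[List[Response], int, int]:
    """Drop ineligible respondents first, then incomplete ones.

    Returns (retained, n_ineligible, n_incomplete).
    """
    eligible = [r for r in responses
                if r.public_sped_teacher and r.teaches_student_with_asd]
    retained = [r for r in eligible if r.frequency_ratings is not None]
    return retained, len(responses) - len(eligible), len(eligible) - len(retained)

# Synthetic records built only to mirror the reported counts.
responses = (
    [Response(True, True, [3] * 27) for _ in range(130)]  # eligible and complete
    + [Response(True, False, None) for _ in range(28)]    # no students with ASD
    + [Response(True, True, None) for _ in range(29)]     # eligible but incomplete
)
retained, n_ineligible, n_incomplete = screen(responses)
print(len(responses), n_ineligible, n_incomplete, len(retained))  # 187 28 29 130
```

Applying the eligibility filter before the completeness filter matters for the reported breakdown: an ineligible response that was also incomplete is counted once, as ineligible.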

Results

Results are reported both descriptively and analytically to address the study questions. Descriptive analysis was conducted on practice-specific responses from the 130 participants, while group comparisons and regression used a combined dataset across all EBPs. Screening for missing data found only minimal, randomly skipped items, which were therefore excluded from individual analyses through listwise deletion. Ratings of knowledge and use for each practice contained at most three missing data points, and most practices were rated by the full sample. Statistical procedures were selected for their robustness to small samples and potentially non-normal data.

Ranking EBPs by Knowledge, Use, and Social Validity

Table 2 displays respondents’ ratings of knowledge and use of the 27 EBPs, ranked from the highest to the lowest percentage of respondents agreeing that they had knowledge of the practice. The percentage of respondents using the practice “often (about once per day)” or “frequently (more than once per day)” was used as an indicator of daily use. Teachers reported knowledge of an average of 22.8 practices each but indicated daily use of an average of only 12.7 practices. The top four practices in knowledge and daily use were identical (reinforcement, prompting, modeling, and visual supports), and pivotal response training was ranked last in both categories.
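The daily-use indicator can be computed per practice as the share of non-missing frequency ratings falling in the two highest categories, and practices can then be ranked by that share. In this sketch only “never,” “often,” and “frequently” come from the text; the intermediate labels (“rarely,” “sometimes”) and the example data are hypothetical, and missing ratings are dropped per practice to mirror the listwise deletion described in the Results.

```python
from typing import Dict, List, Optional

# "often" = about once per day; "frequently" = more than once per day.
DAILY = {"often", "frequently"}

def percent_daily_use(ratings: List[Optional[str]]) -> float:
    """Percentage of non-missing ratings that indicate at least daily use."""
    valid = [r for r in ratings if r is not None]  # drop missing ratings for this practice only
    if not valid:
        raise ValueError("no non-missing ratings for this practice")
    return 100 * sum(r in DAILY for r in valid) / len(valid)

def rank_practices(pct: Dict[str, float]) -> List[str]:
    """Practice names ordered from highest to lowest percentage."""
    return sorted(pct, key=pct.get, reverse=True)

# Hypothetical ratings; "rarely" and "sometimes" are assumed scale labels.
data = {
    "reinforcement": ["frequently", "often", "often", "sometimes"],
    "video modeling": ["never", "rarely", "often", None],
}
pct = {name: percent_daily_use(r) for name, r in data.items()}
print(rank_practices(pct))  # ['reinforcement', 'video modeling']
```

The same function, with the knowledge agreement categories substituted for `DAILY`, would yield the knowledge percentages used to order Table 2.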

Respondents’ overall rankings of practices in terms of social validity, from highest to lowest, appear in Table 3. The individual factors of acceptability (i.e., appropriateness for addressing student goals and the respondent’s enthusiasm for its use), feasibility (i.e., availability of time and material resources), and contextual alignment (i.e., alignment with school climate and support from administration) are also reported separately. Across all EBPs, the three individual factors of social validity were significantly correlated with one another (p < .001*** for all comparisons), supporting the reliability of the combined variable for inferential analysis. Mean overall social validity ranged from 3.81 to 5.17 (on a 6-point scale), indicating at least a low to moderate level of agreement with social validity statements for all practices. Notably, the same four practices that were most known and used were rated highest in social validity, and the lowest-rated practice (Pivotal Response Training) was also the least known and used.

Spearman rank-order correlations were conducted to quantify the relationships among knowledge, daily use, and social validity. Because Spearman correlations treat the practices’ positions as ranks on an ordinal scale, they allow EBPs to be compared relative to one another. All rank-order correlations were positive and significant, suggesting that better-known practices are more likely to be used daily (ρ = .743, p < .001***) and to have greater social validity (ρ = .892, p < .001***), and that practices ranked higher on social validity are more likely to be used daily (ρ = .838, p < .001***). Functional behavior assessment appeared as an outlier due to its low ranking as a frequently used practice (20th) despite its high ranking for knowledge (5th) and social validity (6th). Pearson product-moment correlations among the Likert-type ratings of the three variables within each individual practice were all positive, ranging from weak to strong (r range .20–.67), further supporting the closely related nature of knowledge, use, and social validity for all identified EBPs.
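Spearman’s ρ is Pearson’s r computed on ranks, with tied values assigned the average of the ranks they span. The standard-library sketch below illustrates this on five hypothetical practices; in the study the inputs would be the 27 practices’ scores on two of the variables (knowledge, daily use, or social validity). In practice, `scipy.stats.spearmanr` computes the same statistic along with a p-value.

```python
from math import sqrt
from typing import List, Sequence

def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as Pearson's r on average ranks (handles ties)."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical percentages for five practices (not the study's data).
knowledge = [98, 95, 90, 60, 40]
daily_use = [90, 85, 50, 55, 10]
print(spearman(knowledge, daily_use))  # 0.9
```

Here the third and fourth practices swap places between the two rankings while the rest agree, so ρ falls just short of 1.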

Group Comparisons

To compare knowledge, use, and perceptions of social validity among teachers with differing characteristics, group comparisons were conducted using mixed-effects ANOVA (see Table 4). For these analyses, ratings of all practices were combined into composite vectors to elucidate patterns across EBPs in general. As a result, 3510 ratings were recorded for knowledge and use (130 respondents who each rated all 27 practices). Because respondents rated social validity only for EBPs they reported knowing, listwise deletion removed unrated practices from the analyses of social validity, resulting in 2282 observations of social validity for known practices. Continuous variables were grouped into categories using cut-points for analysis. Mixed-effects models were employed to account for the nesting of multiple observations within each respondent, and the assumptions of linearity, homogeneity of residual variance, and normality of residuals were met without the need for additional corrections.
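The composite dataset amounts to a long-format table with one row per respondent-practice pair, so 130 × 27 = 3510 rows, with social validity missing wherever a practice was not rated. A minimal sketch, assuming a hypothetical nested-dictionary input and the caseload cut-points reported in the ASD Caseload results (1–3, 4–6, 7–9, 10 or more); the synthetic data below are not the study’s, so the social validity count differs from the reported 2282.

```python
from itertools import product
from typing import Dict, List

def caseload_group(n_students: int) -> str:
    """Cut-points matching the ASD caseload categories reported in the Results."""
    if n_students < 1:
        raise ValueError("eligible teachers taught at least one student with ASD")
    if n_students <= 3:
        return "1-3"
    if n_students <= 6:
        return "4-6"
    if n_students <= 9:
        return "7-9"
    return "10+"

def to_long(respondents: Dict[int, dict], practices: List[str]) -> List[dict]:
    """One row per respondent x practice; social validity is None if not rated."""
    rows = []
    for rid, practice in product(respondents, practices):
        r = respondents[rid]
        rows.append({
            "respondent": rid,  # grouping factor for the respondent-level random effect
            "practice": practice,
            "caseload_group": caseload_group(r["caseload"]),
            "knowledge": r["knowledge"].get(practice),
            "social_validity": r["sv"].get(practice),
        })
    return rows

def complete_cases(rows: List[dict], outcome: str) -> List[dict]:
    """Listwise deletion: keep rows whose outcome is not missing."""
    return [row for row in rows if row[outcome] is not None]

# Synthetic data: 130 respondents x 27 practices, with social validity
# rated only for a varying subset of practices.
practices = [f"practice_{k}" for k in range(27)]
respondents = {
    i: {
        "caseload": 1 + i % 12,
        "knowledge": {p: 4 for p in practices},
        "sv": {p: 5.0 for p in practices[: 10 + i % 15]},
    }
    for i in range(130)
}
rows = to_long(respondents, practices)
print(len(rows))  # 3510
print(len(complete_cases(rows, "social_validity")))  # 2185 for this synthetic data
```

Rows in this shape, with `respondent` as the grouping factor, could then be passed to a mixed-effects routine such as the `mixedlm` formula interface in statsmodels; the software actually used for these analyses is not reported in this section.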

Social validity ratings did not differ across any of the teacher or school characteristic groups but did differ significantly by type of training received. Groups did not differ significantly in their knowledge or use of EBPs based on years of experience or grade level taught, and no significant differences in knowledge appeared based on classroom type or geographic region. Frequency of use differed significantly across the one binary variable, geographic region, with teachers in non-rural districts using practices more often than their rural counterparts (p = .03*). For the remaining significant group comparisons, post hoc pairwise comparisons of least squares means were conducted to determine which specific groups accounted for the difference.

Classroom Type

Teachers in self-contained special education classrooms reported the highest frequency of use, significantly more than those in inclusive (t-value = −3.74; p < .001***) and resource (t-value = −3.27; p = .001**) settings.

ASD Caseload

Teachers who taught ten or more students with ASD reported significantly more knowledge than those teaching 1–3 students (t-value = −2.38; p = .02*), 4–6 students (t-value = −2.06; p = .04*), or 7–9 students (t-value = −2.76; p = .007**). The only significant group difference for frequency of use existed between teachers with 1–3 students and those with 4–6 students with ASD (t-value = −2.04; p = .04*).

Training Type

Finally, knowledge, frequency of use, and social validity all differed significantly by training type. The primary differences related to teachers who reported being “self-taught” in an EBP. Self-taught teachers reported significantly less knowledge than those trained through all other methods (university: t-value = 5.24, p < .001***; full-day: t-value = 2.28, p = .02*; half-day: t-value = 3.14, p = .002**; peer coach: t-value = 3.04, p = .002**) and used EBPs significantly less often than all others (university: t-value = 5.34, p < .001***; full-day: t-value = 3.43, p < .001***; half-day: t-value = 3.80, p < .001***; peer coach: t-value = 3.10, p = .002**). Additionally, teachers who were self-taught in a practice rated the social validity of that practice significantly lower than those trained in all other ways (university: t-value = 10.32, p < .001***; full-day: t-value = 5.08, p < .001***; half-day: t-value = 8.97, p < .001***; peer coach: t-value = 6.33, p < .001***). Other significant intergroup differences indicated that teachers trained in a practice through a more intensive training model rated the practice’s social validity more highly (university > full-day or peer coaching; full-day > half-day; half-day > peer coaching).

Predictors of Use

To identify the factor or combination of factors most predictive of teachers’ frequency of EBP use, a mixed-effects linear regression was conducted using all of the potential continuous independent variables. Years of experience and attitude toward EBP did not contribute significantly to the model and were therefore removed. The resulting, most parsimonious model (Table 5) included knowledge level, the three components of social validity, and the number of students with ASD taught, which together explained 40.7% of the variance (marginal R² = .407, using the method of Nakagawa and Schielzeth 2013).
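Nakagawa and Schielzeth’s (2013) marginal R² is the share of total variance attributable to the fixed effects alone: R²m = σ²f / (σ²f + σ²α + σ²ε), where σ²f is the variance of the fixed-effect predictions, σ²α the random-intercept variance, and σ²ε the residual variance; the conditional R² adds σ²α to the numerator. The sketch below assumes a single random intercept for respondent (consistent with, but not spelled out in, the model description) and uses made-up coefficients and variance components rather than the study’s estimates.

```python
from statistics import variance
from typing import List, Sequence, Tuple

def fixed_effect_predictions(X: Sequence[Sequence[float]], beta: Sequence[float]) -> List[float]:
    """Linear predictor X @ beta, using fixed effects only (no random intercepts)."""
    return [sum(b * x for b, x in zip(beta, row)) for row in X]

def nakagawa_r2(fixed_preds: Sequence[float], random_intercept_var: float,
                residual_var: float) -> Tuple[float, float]:
    """Return (marginal, conditional) R^2 for a Gaussian mixed model
    with one random intercept, following Nakagawa and Schielzeth (2013)."""
    var_f = variance(fixed_preds)  # sample variance (n - 1 denominator)
    total = var_f + random_intercept_var + residual_var
    marginal = var_f / total                               # fixed effects only
    conditional = (var_f + random_intercept_var) / total   # fixed + random intercept
    return marginal, conditional

# Hypothetical design: intercept column plus one predictor; made-up estimates.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]]
beta = [1.0, 1.0]
preds = fixed_effect_predictions(X, beta)  # [1.0, 2.0, 3.0, 4.0, 5.0]
r2_m, r2_c = nakagawa_r2(preds, random_intercept_var=1.0, residual_var=1.5)
print(round(r2_m, 3), round(r2_c, 3))  # 0.5 0.7
```

With thousands of respondent-practice observations, the choice between an n or n − 1 denominator for σ²f makes no practical difference to the reported value.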

Discussion

There is a relative lack of analysis of social validity and its impact on teacher knowledge and decision-making related to intervention selection and implementation (Callahan et al. 2017). The results of this cross-sectional survey of North Carolina special educators provide a promising start to considering which EBPs are most socially valid and how social validity relates to teacher knowledge and use. The top ranking of the same four practices in all three categories of knowledge, daily use, and social validity indicates that modeling, prompting, reinforcement, and visual supports are highly useful practices for teachers in public-school settings. All four were similarly endorsed as frequently used practices by school psychologists and allied health professionals in previous studies (Paynter et al. 2018; Robinson et al. 2018; Sansosti and Sansosti 2013), further supporting their utility to practitioners across professional domains and locations. Pre-service and in-service training may benefit from focusing first on these most socially valid practices when setting priorities. Functional Behavior Assessment appeared as a prominent outlier, with a low ranking for daily use despite high levels of knowledge and social validity, but this is likely attributable to its nature as an assessment process that informs intervention rather than a daily intervention itself (Wong et al. 2015). Notably, many of the EBPs at or near the bottom of the rankings of knowledge, daily use, and social validity involve more rigid, manualized procedures (e.g., Pivotal Response Training, Discrete Trial Training) or require time and resources beyond the standard school program (e.g., Parent-implemented Interventions, Video Modeling; Robinson et al. 2018; Wong et al. 2015).
This supports the call for researchers and intervention developers to more purposefully consider the components of implementation that make some interventions more easily adaptable to public-school settings than others (Cook and Odom 2013).

Results revealed no differences in EBP knowledge among teachers of differing experience levels, grades, classroom types, or geographic regions, suggesting that no one group of special educators is being disproportionately excluded from research dissemination. Among teacher and school characteristics, the only significant difference in knowledge indicated that teachers with ten or more students with ASD on their caseload reported greater knowledge of EBPs than others, as might be expected given such a high degree of autism specialization. Teachers in self-contained classrooms reported using EBPs more frequently than their counterparts in either inclusive or resource settings. These teachers may have fewer competing priorities as a result of focusing on a smaller number of students in a single classroom. However, EBPs have been identified as efficacious across the autism spectrum, with many specifically supporting social and executive functioning skills that are vital to success in inclusive settings (Leblanc et al. 2009), so it is important for future research to consider how to better support special educators in implementing EBPs within less restrictive environments. Finally, ratings of social validity notably did not vary significantly with any teacher or school characteristic, which bolsters the assertion that social validity is highly practice-specific and individualized (Carter 2010). Judgments of acceptability, feasibility, and contextual alignment likely depend upon the unique combination of the specific EBP, the individual practitioner, and the conditions and context of implementation.

The pattern of differences across teacher training models clearly implicates “self-taught” methods as being related to lower levels of knowledge and use of EBPs. A large body of research in professional development supports the need for training that is intensive and ongoing and that provides coaching and feedback in order to substantively change teacher practice, both for teachers in general (Desimone and Garet 2015; Guskey and Yoon 2009; Joyce and Showers 2002) and specifically for those providing specialized interventions for students with disabilities (Brock and Carter 2017). Although significant differences in knowledge and use were not found among more and less intensive in-person training models, potentially due to the limited sample size, it seems clear that individual responsibility for learning a practice is not enough. Online modules and other independent forms of training are becoming increasingly common and have been deemed useful and relevant by users (Sam et al. 2019), but these methods may not be adequate on their own to change practice. Professional development in EBPs could benefit from a “flipped classroom” model (Hardin and Koppenhaver 2016), in which teachers complete self-directed online modules on their own time and dedicate in-person training time to applied practice and individualized coaching. The finding that teachers trained in an EBP through a more intensive training model also rated the social validity of that practice more highly is worthy of further study, as the direction of the relationship cannot be inferred. It is possible that universities and workshop developers are focusing their efforts on more socially valid practices, but teachers may also be actively seeking out more intensive professional development for practices that they already view as more relevant and acceptable.
Previous studies have similarly surmised that multiple considerations, including a practice’s relevance, feasibility, context, and even collegial perception, likely intersect when teachers decide which professional development opportunities to pursue (Brock et al. 2014).

Finally, the regression demonstrates that more frequent EBP implementation can be predicted by greater knowledge of the practice, a perception of the practice as acceptable, feasible, and aligned with the teacher’s work context, and a caseload including more students with ASD. Contrary to a previous study’s results (Combes et al. 2016), teachers’ attitude toward EBP in general was not a significant predictor of frequency of use. All three components of social validity contributed significantly to the model, suggesting that each is a valuable indicator of potential use above and beyond the others. Although the three components are tightly correlated, a practice appears most likely to be used frequently when a teacher views it as simultaneously appropriate, practical, and supported within their individual circumstances. Consequently, research-practice partnerships should emphasize the development, adaptation, and empirical study of EBPs with fully established, multifaceted social validity, not simply tokenistic measures of “consumer satisfaction” (Callahan et al. 2017).

This study was subject to limitations, including the non-probability snowball sampling technique, which resulted in a small sample. The sample did appear to be broadly representative of North Carolina teachers, notably in terms of statewide representation and years of teaching experience. A larger pool of respondents would increase statistical power, and random sampling would strengthen generalizability, but both are logistically difficult due to access issues in contacting teachers directly. The statistical significance of multiple correlates and predictors and the consistency of rankings of individual practices despite the limited sample are nonetheless encouraging, as is the agreement with multiple previous surveys of other professionals’ usage of specific EBPs. Although these results cannot be generalized to practitioners in other states or in differing professional roles, they should invite replication in order to ascertain patterns and unique considerations for the vast range of teachers, therapists, and clinicians who use EBPs in their practice with individuals with ASD.

Although self-report is often implicated as a source of potential bias, such subjective measurement is paramount to the construct of social validity (Wolf 1978). Future work may benefit from a more objective measurement of knowledge level, in particular, in order to reveal differences in teachers’ understanding of using a practice with procedural fidelity as opposed to a simpler conceptual familiarity. Finally, the combination of ratings across all EBPs for analysis precludes the ability to make predictive comparisons across individual practices or other relevant groupings. For instance, predictors of use may be different depending on an intervention’s characteristics, such as naturalistic versus behavioral foundations or level of manualization. Specific practices may have higher or lower social validity for different age groups or classroom types, but the present sample is not large enough to allow for such analyses. Nevertheless, the results support the positive correlations among social validity, knowledge, and use as a general “rule of thumb” across all EBPs within the population of interest, which is a valuable starting point for further research and practical applications.

Collaborative partnerships between researchers and school-based practitioners are integral to building a more complex understanding of how contextual and attitudinal factors can facilitate the meaningful implementation of EBPs (Parsons et al. 2013), and future work should prioritize the voices of those working in the field every day. Mixed methodologies could provide the opportunity to triangulate quantitative survey-based data from a large sample with more personal perceptions of practitioners or even field-based observations in public-school classrooms. Richer qualitative data related to teacher attitude and beliefs may shed more practical light on the factors that influence teacher implementation of EBPs. In addition, a deeper analysis of individual practices is needed in order to better reveal differences across the discrete factors of social validity. The use of the full URP-IR questionnaire for every practice would have been beyond the scope of this study; however, as a validated and reliable measure, it could be a beneficial tool for a multifaceted analysis of individual practices by larger samples of teachers. Such study could pinpoint areas of relative weakness in social validity for individual EBPs and invite research partnerships to identify feasible adaptations for different settings or practitioners. Practices are often targeted for use based solely on student characteristics, with existing tools such as a decision-making matrix from the NPDC designed to support practitioners in selecting EBPs appropriate to student age and desired outcome (Wong et al. 2015). With evidence that non-student-related elements, such as time-intensity, material resource requirements, teacher enthusiasm, and physical context, also play a vital role in implementation, future guidance and supports for practitioner selection of EBPs would benefit from the inclusion of social validity considerations alongside student-specific factors.

Conclusion

The autism intervention field is burgeoning as a result of increased prevalence rates and a policy focus on research-based interventions, and ongoing research is contributing to increased knowledge and use of EBPs in practice-based settings. Even so, characteristics of teachers, schools, the larger education system, and the interventions themselves clearly have complex, intersecting effects on when and how EBPs are provided to students with ASD in public-school classrooms. By valuing the self-reported perspectives of teachers themselves, this study has identified practices that teachers perceive as socially valid and demonstrated a significant association between that perceived validity and more frequent implementation. Future research must not avoid the real-world dynamics that influence EBP uptake and maintenance but should instead embrace partnerships with teachers to develop and strengthen practices that are not only efficacious but also practical and valued. Prioritizing social validity offers researchers and practitioners alike an opportunity to better understand the factors that may well hold the key to maximizing the impact of EBPs for all of our students with ASD.

References

Aarons, G. A., Glisson, C., Hoagwood, K., Kelleher, K., Landsverk, J., & Cafri, G. (2010). Psychometric properties and US national norms of the evidence-based practice attitude scale (EBPAS). Psychological Assessment, 22(2), 356–365.

Briesch, A. M., Chafouleas, S. M., Neugebauer, S. R., & Riley-Tillman, T. C. (2013). Assessing influences on intervention implementation: Revision of the usage rating profile-intervention. Journal of School Psychology, 51, 81–96.

Brock, M. E., & Carter, E. W. (2017). A meta-analysis of educator training to improve implementation of interventions for students with disabilities. Remedial and Special Education, 38(3), 131–144.

Brock, M. E., Huber, H. B., Carter, E. W., Juarez, A. P., & Warren, Z. E. (2014). Statewide assessment of professional development needs related to educating students with autism spectrum disorder. Focus on Autism and Other Developmental Disabilities, 29(2), 67–79.

Callahan, K., Hughes, H. L., Mehta, S., Toussaint, K. A., Nichols, S. M., Ma, P. S., et al. (2017). Social validity of evidence-based practices and emerging interventions in autism. Focus on Autism and Other Developmental Disabilities, 32(3), 188–197.

Carter, S. L. (2010). The social validity manual: A guide to subjective evaluation of behavior interventions. London: Academic Press.

Chafouleas, S. M., Briesch, A. M., Neugebauer, S. R., & Riley-Tillman, T. C. (2011). Usage rating profile—intervention (Revised). Storrs, CT: University of Connecticut.

Combes, B. H., Chang, M., Austin, J. E., & Hayes, D. (2016). The use of evidenced-based practices in the provision of social skills training for students with autism spectrum disorder among school psychologists. Psychology in the Schools, 53(5), 548–563.

Cook, B. G., & Odom, S. L. (2013). Evidence-based practices and implementation science in special education. Exceptional Children, 79(2), 135–144.

Desimone, L. M., & Garet, M. S. (2015). Best practices in teachers’ professional development in the United States. Psychology, Society, & Education, 7(3), 252–263.

Dillman, D. A., Smyth, J. D., & Christian, L. M. (2014). Internet, phone, mail, and mixed-mode surveys: The tailored design method (4th ed.). Hoboken, NJ: Wiley.

Dingfelder, H. E., & Mandell, D. S. (2011). Bridging the research-to-practice gap in autism intervention: An application of diffusion of innovation theory. Journal of Autism and Developmental Disorders, 41(5), 597–609.

Guskey, T. R., & Yoon, K. S. (2009). What works in professional development? Phi Delta Kappan, 90(7), 495–500.

Hardin, B. L., & Koppenhaver, D. A. (2016). Flipped professional development: An innovation in response to teacher insights. Journal of Adolescent & Adult Literacy, 60(1), 45–54.

Hess, K. L., Morrier, M. J., Heflin, L. J., & Ivey, M. L. (2008). Autism treatment survey: Services received by children with autism spectrum disorders in public school classrooms. Journal of Autism and Developmental Disorders, 38, 961–971.

Horner, R. H., Carr, E. G., Halle, J., McGee, G., Odom, S., & Wolery, M. (2005). The use of single-subject research to identify evidence-based practice in special education. Exceptional Children, 71(2), 165–179.

Individuals with Disabilities Education Act, 20 U.S.C. § 1400 (2004).

Joyce, B. R., & Showers, B. (2002). Student achievement through staff development. Alexandria, VA: Association for Supervision and Curriculum Development.

Leblanc, L., Richardson, W., & Burns, K. A. (2009). Autism spectrum disorder and the inclusive classroom: Effective training to enhance knowledge of ASD and evidence-based practices. Teacher Education and Special Education, 32(2), 166–179.

Nakagawa, S., & Schielzeth, H. (2013). A general and simple method for obtaining R² from generalized linear mixed-effects models. Methods in Ecology and Evolution, 4(2), 133–142.

National Autism Center. (2015). Findings and conclusions: National standards project, phase 2. Randolph, MA: Author.

No Child Left Behind Act of 2001 [NCLB], Pub. L. No. 107-110, 115 Stat. 1425 (2002).

North Carolina Department of Public Instruction. (2019). State summary of public school full-time personnel. http://apps.schools.nc.gov/ords/f?p=145:21:::NO:::.

Parsons, S., Charman, T., Faulkner, R., Ragan, J., Wallace, S., & Wittemeyer, K. (2013). Commentary – bridging the research and practice gap in autism: The importance of creating research partnerships with schools. Autism, 17(3), 268–280.

Paynter, J., & Keen, D. (2014). Knowledge and use of intervention practices by community-based early intervention service providers. Journal of Autism and Developmental Disorders, 45, 1614–1623.

Paynter, J., Sulek, R., Luskin-Saxby, S., Trembath, D., & Keen, D. (2018). Allied health professionals’ knowledge and use of ASD intervention practices. Journal of Autism and Developmental Disorders, 48, 2335–2349.

Robinson, L., Bond, C., & Oldfield, J. (2018). A UK and Ireland survey of educational psychologists’ intervention practices for students with autism spectrum disorder. Educational Psychology in Practice, 34(1), 58–72.

Sam, A. M., Cox, A. W., Savage, M. N., Waters, V., & Odom, S. L. (2019). Disseminating information on evidence-based practices for children and youth with autism spectrum disorder: AFIRM. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-019-03945-x.

Sansosti, F. J., & Sansosti, J. M. (2013). Effective school-based service delivery for students with autism spectrum disorders: Where we are and where we need to go. Psychology in the Schools, 50(3), 229–244.

U.S. Department of Health & Human Services. (2016). IACC/OARC autism research database. https://iacc.hhs.gov/funding/data/. Accessed 20 June 2019.

Wolf, M. M. (1978). Social validity: The case for subjective measurement or how applied behavior analysis is finding its heart. Journal of Applied Behavior Analysis, 11(2), 203–214.

Wong, C., Odom, S. L., Hume, K. A., Cox, A. W., Fettig, A., Kucharczyk, S., et al. (2015). Evidence-based practices for children, youth, and young adults with autism spectrum disorder: A comprehensive review. Journal of Autism and Developmental Disorders, 45(7), 1951–1966.

Acknowledgments

The author thanks Dr. Daniel Riffe, Professor of Media and Journalism at UNC Chapel Hill, for assistance with methodological design, implementation, and participant incentives. The author also thanks Dr. Cathy Zimmer and the consultants at the Odum Institute at UNC Chapel Hill for their statistical consulting services.

Author information

Contributions

JM was the lead researcher and designed the study, collected data, conducted statistical analyses, and drafted and revised the manuscript.

Ethics declarations

Conflict of interest

The author declares that they have no conflict of interest.

Ethical Approval

All procedures performed in this study involving human participants were in accordance with the ethical standards of the institutional research committee. The study (IRB #18-2576) was reviewed by the Office of Human Research Ethics and determined to be exempt from further review under 45 CFR 46.101(b), regulatory category 2 (survey, interview, or public observation).

About this article

Cite this article

McNeill, J. Social Validity and Teachers’ Use of Evidence-Based Practices for Autism. J Autism Dev Disord 49, 4585–4594 (2019). https://doi.org/10.1007/s10803-019-04190-y
