Abstract

The purpose of this study was to identify evidence-based, focused intervention practices for children and youth with autism spectrum disorder. This study was an extension and elaboration of a previous evidence-based practice review reported by Odom et al. (Prev Sch Fail 54:275–282, 2010b, doi:10.1080/10459881003785506). In the current study, a computer search initially yielded 29,105 articles, and the subsequent screening and evaluation process found 456 studies to meet inclusion and methodological criteria. From this set of research studies, the authors found 27 focused intervention practices that met the criteria for evidence-based practice (EBP). Six new EBPs were identified in this review, and one EBP from the previous review was removed. The authors discuss implications for current practices and future research.

Introduction

With the accelerating prevalence of autism spectrum disorder (ASD) has come the imperative to provide effective intervention and treatment. A commonly held professional value is that practitioners and professionals base their selection of intervention practices on scientific evidence of efficacy (Suhrheinrich et al. 2014). An active intervention research literature provides the source for identifying interventions and treatments that generate positive outcomes for children and youth with ASD and their families. However, it is impractical for professionals and practitioners to conduct a search of the literature whenever they are designing an intervention program for a child or youth with ASD. Although there are many claims for intervention practices that are evidence-based, and researchers have reviewed research studies that support individual practices (e.g., Reichow and Volkmar 2010), few systematic, comprehensive reviews of the intervention research literature have been conducted. The purpose of this paper is to report a comprehensive review of the intervention literature that identifies evidence-based, focused intervention practices for children and youth with ASD.

To specify the focus of this paper, it is important to delineate two types of practices that appear in the literature. Comprehensive treatment models (CTMs) consist of a set of practices organized around a conceptual framework and designed to achieve a broad learning or developmental impact on the core deficits of ASD. In their summary of education programs for children with autism, the National Academy of Science Committee on Educational Interventions for Children with Autism (National Research Council 2001) identified 10 CTMs. Examples included the UCLA Young Autism Program by Lovaas and colleagues (Smith et al. 2000) and the TEACCH program developed by Schopler and colleagues (Marcus et al. 2000). In a follow-up to the National Academy review, Odom et al. (2010a) identified 30 CTM programs operating within the U.S. These programs were characterized by organization (i.e., around a conceptual framework), operationalization (i.e., procedures manualized), intensity (i.e., substantial number of hours per week), longevity (i.e., occur across one or more years), and breadth of outcome focus (i.e., multiple outcomes such as communication, behavior, social competence targeted) (Odom et al. 2014a). The Lovaas model, and its variation known as Early Intensive Behavioral Intervention, has the strongest evidence of efficacy (Reichow and Barton 2014). At this writing, developers of three other CTMs have published RCT-level efficacy studies, with two showing positive effects [Early Start Denver Model (Dawson et al. 2010) and LEAP (Strain and Bovey 2011)] and one showing mixed effects [More than Words (Carter et al. 2011)]. Other CTMs have not been studied with sufficient rigor to draw conclusions about efficacy (Wilczynski et al. 2011) although some have substantial and positive accumulated evidence [e.g., Pivotal Response Treatment (Koegel and Koegel 2012; Stahmer et al. 2011)], and there are many active efficacy studies of CTMs currently in progress. This literature will not be part of the current review.

Focused interventions are a second type of practice that appears in the literature. Focused intervention practices are designed to address a single skill or goal of a student with ASD (Odom et al. 2010b). These practices are operationally defined, address specific learner outcomes, and tend to occur over a shorter time period than CTMs (i.e., until the individual goal is achieved). Examples include discrete trial teaching, pivotal response training, prompting, and video modeling. Focused intervention practices are the building blocks of educational programs for children and youth with ASD, and they are highly salient features of the CTMs just described. For example, peer-mediated instruction and intervention (Sperry et al. 2010) is a key feature of the LEAP model (Strain and Bovey 2011).

The historical basis for employing focused intervention practices that are supported by empirical evidence of their efficacy began with the evidence-based medicine movement that emerged from England in the 1960s (Cochrane 1972; Sackett et al. 1996) and the formation of the Cochrane Collaboration to host reviews of the literature about scientifically supported practices in medicine (http://www.cochrane.org/). In the 1990s, the American Psychological Association Division 12 established criteria for classifying an intervention practice as efficacious or “probably efficacious,” which provided a precedent for quantifying the amount and type of evidence needed for establishing psychosocial intervention practices as evidence-based (Chambless and Hollon 1998; Chambless et al. 1996). Similarly, other professional organizations such as the National Association for School Psychology (Kratochwill and Shernoff 2004), American Speech and Hearing Association (2005), and Council for Exceptional Children (Odom et al. 2004) have developed standards for the level of evidence needed for a practice to be called evidence-based.

Prior to the mid-2000s, the identification of evidence-based practices (EBPs) for children and youth with ASD was accomplished through narrative reviews by sets of authors or organizations. Although these reviews, for the most part, were thorough and useful, they often did not follow a standard process for searching the literature, a stringent review process that incorporated clear criteria for including or excluding studies for the reviews, or a systematic process for organizing the information into sets of practices. In addition, even when systematic reviews were conducted, many traditional systematic review processes such as those of the Cochrane Collaboration only included studies that employed a randomized experimental group design (also called randomized controlled trial or RCT) and excluded single case design (SCD) studies (Kazdin 2011). By excluding SCD studies, such reviews omitted a vital experimental research methodology now being recognized as a valid scientific approach (Kratochwill et al. 2013) and eliminated the major body of research literature on interventions for children and youth with ASD.

In recent years, there have been reviews of empirical support for individual focused intervention practices that have included SCD as well as group design studies. Researchers have published reviews of behavioral interventions to increase social interaction (Hughes et al. 2012), social skills training (Camargo et al. 2014; Walton and Ingersoll 2013), peer-mediated interventions (Carter et al. 2010), exercise (Kasner et al. 2012), naturalistic interventions (Pindiprolu 2012), adaptive behavior (Palmer et al. 2012), augmentative and alternative communication (Schlosser and Wendt 2008), and computer- and technology-based interventions (Knight et al. 2013). The reviews are directed in their focus and provide support for individual practices, but they do not always include an evaluation of the quality of the studies included in their reviews.

Two reviews have specifically focused their work on interventions (also called treatments) for children and youth with ASD, included both group and SCD studies, followed a systematic process for evaluating published, peer-reviewed journal articles before including (or excluding) them in their review, and identified a specific set of interventions that have evidence of efficacy. These reviews were conducted by the National Standards Project (NSP) and the National Professional Development Center on Autism Spectrum Disorders (NPDC). The NSP conducted a comprehensive review of the literature that included early experimental studies on interventions for children and youth with ASD and extended through September 2007 (National Autism Center 2009). At this writing, a report of the updated version of the NSP review is forthcoming.

The NPDC also conducted a review of the literature, including articles published over the 10-year period from 1997 to 2007 (Odom et al. 2010b). The NPDC was funded to promote teachers’ and practitioners’ use of EBPs with infants, toddlers, children, youth, and young adults with ASD. The purpose of the review was to identify such practices, although the NPDC mission narrowed the age range of participants in the reviewed studies to the first 22 years. It should be acknowledged that identifying EBPs for adults with ASD is also an important endeavor but outside the purview of the NPDC’s mission.

NPDC investigators began with a computer search of the literature, first using autism and related terms for the search and specifying outcomes. They then used the research design quality indicator criteria established by the CEC-Division for Research (Gersten et al. 2005; Horner et al. 2005) to evaluate articles for inclusion or exclusion from the review. Articles included in the review were evaluated by a second set of reviewers. This review yielded 175 articles. Investigators conducted a content analysis of the intervention methodologies, created intervention categories, and sorted articles into those categories. Adapting criteria from the Chambless et al. (1996) group, they found that 24 focused intervention practices met the criteria for being evidence-based.

Evidence-based practice is a dynamic, rather than static, concept. That is, the intervention literature moves quickly. The NPDC staff undertook the current review to broaden and update the previous review. Many researchers have made contributions to the ASD intervention literature since the original review was conducted, so one purpose of the current review was to incorporate the intervention literature from the years subsequent to the initial review (i.e., 2007 to the beginning of 2012). As in the previous review, the emphasis is on practices appropriate for infants/toddlers, preschool-age, and school-age children. A second purpose was to expand the timeframe previous to the initial review, extending the coverage to 1990 to be consistent with other research synthesis organizations that have examined literature over a 20-year period (e.g., What Works Clearinghouse, WWC). The third purpose was to create and utilize a broader and more rigorous review process than occurred in the previous review. In the current review, investigators recruited and trained a national set of reviewers to evaluate articles from the literature rather than relying exclusively on NPDC staff. Also, NPDC investigators developed a standard article evaluation process that incorporated criteria from several parallel reviews that have occurred (NSP; WWC). The research questions driving this review are: What focused intervention practices are supported as evidence-based by empirical intervention literature? What outcomes are associated with evidence-based focused intervention practices? What are the emerging practices in the field? What are recommendations for the future?

Method

Inclusion/Exclusion Criteria for Studies in the Review

Articles included in this review were published in peer-reviewed, English language journals between 1990 and 2011 and tested the efficacy of focused intervention practices. Using a conceptual framework followed by the Cochrane Collaboration [Participants, Interventions, Comparison, Outcomes, Study Design (PICOS)], the study inclusion criteria are described in the subsequent sections.

Population/Participants

To qualify for the review, a study had to have participants who were between birth and 22 years of age and were identified as having autism spectrum disorder (ASD), autism, Asperger syndrome, pervasive developmental disorder, pervasive developmental disorder-not otherwise specified, or high-functioning autism. Participants with ASD who also had co-occurring conditions (e.g., intellectual disability or a genetic syndrome such as Rett, Fragile X, or Down syndrome) were included in this review.

Interventions

To be included in this review, the focused intervention practices examined in a study had to be behavioral, developmental, and/or educational in nature. Studies in which the independent variables were only medications, alternative/complementary medicine (e.g., chelation, neurofeedback, hyperbaric oxygen therapy, acupuncture), or nutritional supplements/special diets (e.g., melatonin, gluten-casein free, vitamins) were excluded from the review. In addition, only interventions that could be practically implemented in typical educational, clinical, home, or community settings were included. As such, intervention practices requiring highly specialized materials, equipment, or locations unlikely to be available in most educational, clinic, community, or home settings were excluded (e.g., dolphin therapy, hyperbaric chambers).

Outcomes

Studies had to generate behavioral, developmental, or academic outcomes (i.e., these were dependent variables in the studies). Outcome data could be discrete behaviors (e.g., social initiations, stereotypies) assessed observationally, ratings of behavior or student performance (e.g., the Social Responsiveness Scale), standardized assessments (e.g., nonverbal IQ tests, developmental assessments), and/or informal assessment of student academic performances (e.g., percentage of correct answers on an instructional task). Studies only reporting physical health outcomes were excluded.

Study Designs

Studies included in the review had to employ an experimental group design, quasi-experimental design, or SCD to test the efficacy of focused intervention practices. Adequate group designs included randomized controlled trials (RCT), quasi-experimental designs (QED), or regression discontinuity designs (RDD) that compared an experimental/treatment group receiving the intervention to at least one other control or comparison group that did not receive the intervention or received another intervention (Shadish et al. 2002). SCD studies had to employ within subjects (cases) designs that compared responding of an individual in one condition to the same individual during another condition (Kazdin 2011). Acceptable SCDs for this review were withdrawal of treatment (e.g., ABAB), multiple baseline, multiple probe, alternating treatment, and changing criterion designs (Kratochwill et al. 2013).

Search Process

Research articles were obtained through an electronic library search of published studies. Before beginning the search, the research team and two university librarians from the University of North Carolina at Chapel Hill developed and refined the literature search plan. One librarian had special expertise in the health sciences literature and the second had expertise in the behavioral and social sciences literature. The research team employed the following databases in the search: Academic Search Complete, Cumulative Index to Nursing and Allied Health Literature (CINAHL), Excerpta Medica Database (EMBASE), Educational Resource Information Center (ERIC), PsycINFO, Social Work Abstracts, MEDLINE, Thomson Reuters (ISI) Web of Knowledge, and Sociological Abstracts. Broad diagnostic (autism OR aspergers OR pervasive developmental disorder) AND practice (intervention OR treatment OR practice OR strategy OR therapy OR program OR procedure OR approach) search terms were used to be as inclusive as possible. The only filters used were language (English) and publication date (1990–2011).
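The structure of the search string can be illustrated with a short sketch. The term lists and filters come from the description above; the helper names (`or_group`, `build_query`) and the quoting of multi-word phrases are assumptions for illustration, since the exact query syntax submitted to each database is not reported.

```python
# Illustrative sketch only: the exact syntax used in each database is not
# reported in the text, so the helper names and phrase quoting are assumptions.

DIAGNOSTIC_TERMS = ["autism", "aspergers", "pervasive developmental disorder"]
PRACTICE_TERMS = [
    "intervention", "treatment", "practice", "strategy",
    "therapy", "program", "procedure", "approach",
]

# The only filters reported: language and publication date.
FILTERS = {"language": "English", "year_from": 1990, "year_to": 2011}


def or_group(terms):
    """Join terms with OR, quoting multi-word phrases, and wrap in parentheses."""
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(diagnostic_terms, practice_terms):
    """Combine the diagnostic and practice groups with AND."""
    return f"{or_group(diagnostic_terms)} AND {or_group(practice_terms)}"


QUERY = build_query(DIAGNOSTIC_TERMS, PRACTICE_TERMS)
print(QUERY)
```

Keeping the two term groups separate makes explicit that a record had to match at least one diagnostic term and at least one practice term to be retrieved.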

After eliminating duplicate articles retrieved from the different databases, the initial broad search yielded 29,105 articles. The research team then conducted two rounds of screening to select articles that fit the study parameters. The first round of screening focused on titles, which eliminated commentaries, letters to the editor, reviews, and biological or medical studies. In the second round of screening, investigators examined abstracts to determine if the article included participants with ASD under 22 years of age and used an experimental group design, quasi-experimental group design, or SCD. In both rounds of screening, articles were retained if the titles and/or abstracts did not have enough information to make a decision about inclusion. This screening procedure resulted in 1,090 articles (i.e., 213 group design and 877 SCD) remaining in the pool. All of these articles were retrieved, archived in PDF form, and served as the database for the subsequent review.

Review Process

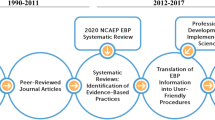

The review process consisted of establishing review criteria, recruiting reviewers, training reviewers, and conducting the review (see Fig. 1).

Criteria and Protocols

Protocols for reviewing group design and SCD studies were designed to determine methodological acceptability, describe the key features of the study (e.g., participants, type of design), and describe the intervention procedures. The initial protocols drew from the methodological quality indicators developed by Gersten et al. (2005) for group design and Horner et al. (2005) for SCD. In addition, selected review criteria for group and SCD from the WWC were incorporated into the review protocol. Central project staff had participated in WWC training and been certified by WWC as reviewers for group design and SCD studies. Protocols went through two iterations of pilot testing within the research group. Two national leaders with expertise in SCD and group design, respectively, and who were not members of the research team reviewed the protocols and provided feedback. From this process the protocols were finalized and formatted for online use.

National Board of Reviewers

To assist in reviewing the identified articles, external reviewers were recruited through professional organizations (e.g., Association for Behavior Analysis International, Council for Exceptional Children) and departments of education, psychology, health sciences, and related fields in higher-education institutions. To be accepted as a reviewer, individuals must have had experience with or knowledge about ASD and have taken a course or training related to group design and/or SCD research methodology. The reviewers self-identified their methodological expertise and interests as group, SCD, or both. Reviewers completed an online training process described fully in the project report (http://autismpdc.fpg.unc.edu/sites/autismpdc.fpg.unc.edu/files/2014-EBP-Report.pdf). After completing the reviewer training, external reviewers were required to demonstrate that they could accurately apply reviewer criteria by evaluating one article of their assigned design type. The reviewer’s evaluation was then compared to a master code file established for the article and their accuracy was calculated. Accuracy was defined as the rater coding the same answer on an item as occurred in the master code file. The criterion for acceptable accuracy was set at 80 %. Reviewers had two opportunities to meet accuracy criteria.
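The accuracy check can be sketched as a simple item-by-item comparison against the master code file. This is a minimal illustration, not the project's actual scoring tool: the function names, the dictionary representation of a coded protocol, and the reading of the criterion as "at least 80 %" are assumptions.

```python
# Minimal sketch, assuming each coded protocol is a mapping from item ID to
# the answer given. Function names and data layout are hypothetical.

def coding_accuracy(reviewer_codes, master_codes):
    """Percentage of protocol items on which the reviewer matches the master code file."""
    if not master_codes:
        raise ValueError("master code file has no items")
    matches = sum(
        1 for item, answer in master_codes.items()
        if reviewer_codes.get(item) == answer
    )
    return 100.0 * matches / len(master_codes)


def meets_criterion(accuracy, criterion=80.0):
    """Assumption: the 80 % criterion is read as 'at least 80 %'."""
    return accuracy >= criterion


# Invented example data: five protocol items, one disagreement.
master = {"q1": "yes", "q2": "no", "q3": "yes", "q4": "yes", "q5": "no"}
reviewer = {"q1": "yes", "q2": "no", "q3": "yes", "q4": "yes", "q5": "yes"}

accuracy = coding_accuracy(reviewer, master)
print(accuracy, meets_criterion(accuracy))
```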

One hundred fifty-nine reviewers completed the training and met inter-rater agreement criteria with the master code files; 63 % completed requirements for single case design articles (n = 100), 24 % completed requirements for group design articles (n = 39), and 13 % completed requirements for both design types (n = 20). All reviewers had a doctoral degree, master’s degree, and/or were enrolled in a graduate education program at the time of the review. Most reviewers received their degrees in the area of special education or psychology and were faculty (current or retired), researchers, or graduate students. The majority of reviewers had professional experience in a classroom, clinic, or home setting and conducted research related to individuals with ASD. In addition, approximately one-third of the reviewers (n = 53) had Board Certified Behavior Analyst (BCBA) or Board Certified Assistant Behavior Analyst (BCaBA) certification. All reviewers received a certificate of participation in the EBP training and article review. BCBA/BCaBA reviewers received continuing education credit if requested.

Each reviewer received between 5 and 12 articles. Articles were randomly assigned to coders, with the exception that a check was conducted after assignment to make sure that the coder had not been assigned an article for which they were an author. In total, the reviewers evaluated 1,090 articles. Articles that did not meet all the criteria in the group or SCD protocols were excluded from the database of articles providing evidence of a practice.

Inter-Rater Agreement

Research staff collected inter-rater agreement for 41 % of the articles across all reviewers. The formula for inter-rater agreement was total agreements divided by agreements plus disagreements multiplied by 100 %. Two levels of agreement were calculated: (1) agreement on individual items of the review protocol and (2) agreement on the summative evaluation of whether a study met or did not meet criteria for inclusion in the review. Mean inter-rater agreement on the individual study design evaluation criteria was 84 % for group design articles and 92 % for SCD articles, generating a total mean agreement of 91 %. Mean inter-rater agreement for summary decisions about article inclusion was 74 % for group design articles and 77 % for SCD articles, generating a total agreement of 76 %.
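The agreement formula stated above, agreements / (agreements + disagreements) × 100, can be written as a short function. The sketch below is illustrative: the function names and the example codes are hypothetical, and only the formula itself is taken from the text.

```python
# Sketch of the percent-agreement formula described in the text.
# Function names and example data are hypothetical.

def percent_agreement(agreements, disagreements):
    """Agreements divided by (agreements + disagreements), times 100."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("no items were compared")
    return 100.0 * agreements / total


def item_agreement(codes_a, codes_b):
    """Item-level agreement between two reviewers' codes for the same article."""
    if len(codes_a) != len(codes_b):
        raise ValueError("reviewers must code the same items")
    agreements = sum(1 for a, b in zip(codes_a, codes_b) if a == b)
    return percent_agreement(agreements, len(codes_a) - agreements)


# Invented example: two reviewers agree on 4 of 5 protocol items.
print(item_agreement(["y", "n", "y", "y", "n"], ["y", "n", "y", "n", "n"]))
```

The same function applies to both levels of agreement reported here: item-level agreement compares answers on each protocol item, whereas summary-level agreement treats each article's include/exclude decision as a single item.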

Final Check

As a final check, members of the EBP evaluation team reviewed each article that had been identified as meeting criteria by reviewers as well as articles that were flagged by reviewers for further review by the evaluation team. Studies that did not meet criteria were then eliminated from the database.

Analysis and Grouping Literature

The review process resulted in 456 articles meeting inclusion criteria for study parameters. A process of content analysis (Krippendorff 1980) was then followed using procedures established in the first NPDC review (Odom et al. 2010b). Because categories for practices were already created by the NPDC (e.g., reinforcement, discrete trial teaching, pivotal response training), these categories and established definitions were initially used to sort the articles. If a practice was not sorted into an existing category, it was placed in a general “outlier” pool. A second round of content analysis was then conducted to create new categories. Following a constant comparative method, a category and definition were created for a practice in the first outlier study; the intervention practice in the second study was compared to the first study and if it was not similar, a second practice category and definition were created. This process continued until studies were either sorted into the new categories or the study remained as an idiosyncratic practice. Seven articles were used to support two different practice categories because each either demonstrated efficacy of two different practices as compared to a control group or baseline phase or presented several studies showing efficacy for different practices. Finally, research staff reviewed all articles sorted into categories. For individual studies, they compared the practices reported in the method section with the definition of the practice into which the study had been sorted. If research staff disagreed with the assignment of an article to a focused intervention category, the staff member and original coder discussed their differences and reached consensus on the appropriate categorical assignment.

When all articles were assembled into categories, a final determination was made about whether a practice met the level of evidence necessary to be classified as an EBP using criteria for evidence established by the NPDC. The NPDC’s criteria were drawn from the work of Nathan and Gorman (2007), Rogers and Vismara (2008), Horner et al. (2005), and Gersten et al. (2005), as well as the earlier work by the APA Division 12 (Chambless and Hollon 1998). These criteria specify that a practice is considered evidence-based if it is supported by: (a) two high quality experimental or quasi-experimental design studies conducted by two different research groups, or (b) five high quality single case design studies conducted by three different research groups and involving a total of 20 participants across studies, or (c) a combination of research designs that must include at least one high quality experimental/quasi-experimental design, three high quality single case designs, and be conducted by more than one researcher or research group. These criteria are aligned with criteria proposed by other agencies and organizations (Chambless and Hollon 1998; Kratochwill and Shernoff 2004; Odom et al. 2004).
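The three-part decision rule can be sketched in code. This is an illustration under stated assumptions rather than the NPDC's procedure: the `Study` record and `is_evidence_based` function are hypothetical names, every study passed in is assumed to have already met the "high quality" methodological criteria, and each threshold is read as a minimum ("at least").

```python
from dataclasses import dataclass

# Hypothetical record; assumes each study already passed the quality review.
@dataclass(frozen=True)
class Study:
    design: str          # "group" (experimental or quasi-experimental) or "scd"
    research_group: str  # label for the team that conducted the study
    n_participants: int


def is_evidence_based(studies):
    """Apply criteria (a), (b), and (c), reading each threshold as a minimum."""
    group = [s for s in studies if s.design == "group"]
    scd = [s for s in studies if s.design == "scd"]
    group_teams = {s.research_group for s in group}
    scd_teams = {s.research_group for s in scd}

    # (a) two group design studies by two different research groups
    crit_a = len(group) >= 2 and len(group_teams) >= 2
    # (b) five SCD studies, three research groups, 20 participants in total
    crit_b = (
        len(scd) >= 5
        and len(scd_teams) >= 3
        and sum(s.n_participants for s in scd) >= 20
    )
    # (c) at least one group design study plus three SCD studies,
    #     conducted by more than one research group overall
    crit_c = len(group) >= 1 and len(scd) >= 3 and len(group_teams | scd_teams) >= 2
    return crit_a or crit_b or crit_c


# Invented example: five SCD studies, three teams, 20 participants -> meets (b).
example = [Study("scd", f"team{i % 3}", 4) for i in range(5)]
print(is_evidence_based(example))
```

Under this reading, a practice supported by nine SCD studies with only 16 participants in total would fail criterion (b) regardless of the number of studies, because the participant threshold is checked independently of the study count.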

Results

The summary of these findings includes information about the types of experimental designs employed in the studies, participants, the identified evidence-based practices, outcomes addressed by the EBPs, and practices that had some empirical support but did not meet the criteria for this review.

Design Types

Of the 456 studies accepted for this review, 48 (11 %) utilized a group design. The majority (n = 38) of group design studies were randomized controlled trials (i.e., experimental group designs), although authors also employed quasi-experimental designs in 10 studies. Researchers employed SCD in 408 articles (89 %). Multiple baseline designs were used most frequently (n = 183), although withdrawal of treatment (n = 79; i.e., ABAB) and multiple probe design (n = 52) also were utilized in a substantial number of articles. In addition, researchers sometimes employed a combination of designs, such as a withdrawal of treatment embedded in a multiple baseline design, which was classified as a mixed design (n = 57).

Participants

In the majority of studies, authors described participants as having autism, which was usually confirmed by a formal diagnosis. Other terms, which under DSM 5 would be classified as ASD, were also used to describe participants (i.e., PDD/PDD-NOS, Asperger/High Functioning Autism, and ASD itself). Co-occurring conditions were identified for participants in a substantial minority (37.9 %) of studies. The co-occurring condition descriptor identified most frequently was intellectual disability (25.4 % of all studies).

The majority of the participants in studies were children between the ages of 6 and 11 years, with preschool-age children (3–5 years) also participating in a large proportion of studies (see Fig. 2). Relatively fewer studies included children below 3 years of age (i.e., in early intervention). While a substantial minority of studies included participants above 12 years of age, this number declined as the ages increased.

Outcomes

Although studies in the literature incorporated a wide range of outcomes, research focused primarily on outcomes associated with the core symptoms of ASD: social, communication and challenging behaviors (Table 1). Researchers focused on communication and social outcomes most frequently, followed closely by challenging behaviors. Play and joint attention were also reported in a considerable number of studies, perhaps reflecting the large representation in the literature of studies with preschool children. However, school readiness and pre-academic/academic outcomes also appeared in a substantial number of studies, perhaps reflecting the elementary school age range of participants in many studies. Outcomes of concern in the adolescent years, such as vocational skills and mental health, appeared infrequently in studies.

Evidence-Based Practices

Twenty-seven practices met the criteria for being evidence-based. These practices with their definitions appear in Table 2. The evidence-based practices consist of interventions that are fundamental applied behavior analysis techniques (e.g., reinforcement, extinction, prompting), assessment and analytic techniques that are the basis for intervention (e.g., functional behavior assessment, task analysis), and combinations of primarily behavioral practices used in a routine and systematic way that fit together as a replicable procedure (e.g., functional communication training, pivotal response training). Also, the process through which an intervention is delivered defines some practices (e.g., parent-implemented interventions, peer-mediated intervention and instruction, technology-aided interventions).

The number of studies identified in support of each practice also appears in Table 2; the specific studies supporting the practice are listed in the original report (Wong et al. 2014). As noted, SCD was the predominant design methodology employed, and some practices had very strong support in terms of the number of studies that documented their efficacy (e.g., antecedent-based intervention, differential reinforcement, prompting, reinforcement, video modeling). Other practices had strong support from studies using either SCD or group design methodologies (e.g., parent-implemented interventions, social narratives, social skills training, technology-aided instruction and intervention, visual supports). No practices were exclusively supported through group design methodologies.

The current set of EBPs includes six new focused intervention practices. Five of these categories (cognitive behavioral intervention, exercise, modeling, scripting, and structured play groups) are entirely new since the last review. The new technology-aided instruction and intervention practice reflects an expansion of the definition of technology interventions for students with ASD, which resulted in the previous categories of computer-aided instruction and speech generating devices/VOCA being subsumed under this classification. It is important to note that video modeling involves technology, but is included as its own category (i.e., rather than being merged within technology-aided instruction and intervention) because it has a large and active literature with well-articulated methods. The new methodological criteria also resulted in one former practice, structured work systems, being eliminated from the list, although subsequent research may well provide the necessary level of empirical support for future inclusion.

A matrix that identifies the type of outcomes produced by an EBP appears in Fig. 3. These outcomes are referenced by age; a “filled-in” cell indicates that at least one study documented the efficacy of that practice for the age identified in the column. Most EBPs produced outcomes across multiple developmental and skill areas (called outcome types here). EBPs with the most dispersed outcome types were prompting, reinforcement, technology, time delay, and video modeling (i.e., all with outcomes in at least 10 areas). EBPs with the fewest outcome types were Picture Exchange Communication System (3), pivotal response training (3), exercise (4), functional behavior assessment (5), and social skills training (5). Importantly, the fewest practices were associated with vocational and mental health outcomes.

Outcomes are also analyzed by age of the participants. Figure 3 reflects the point made previously that much of the research has been conducted with children (age <15 years) rather than adolescents and young adults. Some EBPs and outcomes were logically associated with the young age range and were represented in that way in the data. For example, naturalistic intervention and parent-implemented intervention are EBPs that are often used with young children with ASD and produced effects for young children across outcome areas. However, many EBPs extended across age ranges and outcomes. For example, technology-aided instruction and intervention produced outcomes across a variety of areas and ages.

Other Practices with Some Support

Some practices had empirical support from the research literature, but they were not identified as EBPs. In some studies researchers combined practices into behavioral packages to address special intervention goals, but the combination of practices was idiosyncratic. In other cases, an intervention practice did not have the required number of studies to meet the EBP criteria or there were characteristics about the studies (i.e., all conducted by one research group) that excluded them.

Idiosyncratic Behavioral Intervention Packages

In the studies categorized as idiosyncratic behavioral intervention packages, researchers selected combinations of EBPs and other practices to create interventions to address participants’ individual and unique goals. The study by Strain et al. (2011) is an example of an idiosyncratic behavioral intervention package. The authors used functional behavior assessment, antecedent intervention, and differential reinforcement of alternative behavior to address the problem behaviors of three children with ASD. The entire list of idiosyncratic intervention packages and studies may be found in the original EBP report.

Other Practices with Empirical Support

Some focused intervention practices with well-defined procedures were detected by this literature review but were not included as EBPs because they did not meet one or more of the specific criteria. A common reason for not meeting criteria was an insufficient number of studies documenting efficacy. For example, the efficacy of the structured work system practice is documented by multiple studies (Bennett et al. 2011; Hume and Odom 2007; Mavropoulou et al. 2011), and the practice was included as an EBP in the previous EBP review. However, with the methodological evaluation employed in this review, only three SCD studies met the criteria, which was fewer than the five SCD studies needed to be classified as an EBP. One practice, behavioral momentum interventions, did have support from nine SCD studies; however, the total number of participants across the studies (16) did not meet the EBP qualification criteria (i.e., total of at least 20 participants across the SCD studies).

Other practices were also supported by multiple demonstrations of efficacy, but all the studies were conducted by one research group (i.e., the practice efficacy needs to be replicated by at least two research groups). For example, the reciprocal imitation training (RIT) approach developed by Ingersoll and colleagues had a substantial and impressive set of studies documenting efficacy (Ingersoll 2010, 2012; Ingersoll and Lalonde 2010; Ingersoll et al. 2007), but the same research group conducted all of the research. Similarly, the joint attention and symbolic play instruction practice has been studied extensively by Kasari and colleagues (Gulsrud et al. 2007; Kasari et al. 2006, 2008), but at the time of this review had not been replicated in an acceptable study by another research group.
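The three thresholds mentioned in this section for practices supported only by SCD studies (at least five acceptable studies, at least 20 participants in total, and at least two research groups) can be expressed as a simple check. The sketch below is a simplified illustration under those stated thresholds only; it is not the review's full decision rule, which also covers group designs and combinations of designs. The class and function names are hypothetical, and a value of `None` means the figure is not stated in this section, so that check is skipped rather than guessed.

```python
from dataclasses import dataclass
from typing import List, Optional

# Thresholds as stated in this section (SCD-only route, simplified).
MIN_SCD_STUDIES = 5
MIN_PARTICIPANTS = 20
MIN_RESEARCH_GROUPS = 2


@dataclass(frozen=True)
class ScdEvidence:
    """Counts of methodologically acceptable SCD evidence for one practice."""
    n_studies: int
    n_participants: Optional[int] = None      # None: not stated, check skipped
    n_research_groups: Optional[int] = None   # None: not stated, check skipped


def unmet_scd_criteria(evidence: ScdEvidence) -> List[str]:
    """List which of the three thresholds the evidence falls short of."""
    unmet = []
    if evidence.n_studies < MIN_SCD_STUDIES:
        unmet.append("studies")
    if (evidence.n_participants is not None
            and evidence.n_participants < MIN_PARTICIPANTS):
        unmet.append("participants")
    if (evidence.n_research_groups is not None
            and evidence.n_research_groups < MIN_RESEARCH_GROUPS):
        unmet.append("research groups")
    return unmet


# Counts quoted in the text: structured work systems had three acceptable
# SCD studies; behavioral momentum had nine studies with 16 participants.
print(unmet_scd_criteria(ScdEvidence(n_studies=3)))
print(unmet_scd_criteria(ScdEvidence(n_studies=9, n_participants=16)))
```

An empty list means none of the checked thresholds was missed; it does not by itself establish EBP status, because the study-quality screening described in the method has to be applied first.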

A number of researchers designed interventions to promote academic outcomes, but because their procedures differed, the studies could not be grouped into a single EBP category. To promote reading and literacy skills, Ganz and Flores (2009) and Flores and Ganz (2007) used Corrective Reading Thinking Basics. To teach different writing skills, Rousseau et al. (1994) used a sentence combining technique; Delano (2007) used an instruction and self-management strategy; and Carlson et al. (2009) used a multisensory approach. For teaching different math skills, Cihak and colleagues (Cihak and Foust 2008; Fletcher et al. 2010) employed touch point instruction, and Rockwell et al. (2011) designed a schema-based instructional strategy. Test taking behavior, a particular problem for some children and youth with autism, was promoted through the use of modeling, mnemonic strategies, and different forms of practice to improve test taking performance by Songlee et al. (2008). Also, Dugan et al. (1995) employed a cooperative learning approach to promote engagement in a number of academic activities for children with ASD. This focus on academic outcomes has emerged primarily in post-2007 studies and appears to represent a trend in current and possibly future research.

Discussion

The current review extends and improves on the previous review of the literature conducted 5 years ago (Odom et al. 2010b) in several ways. First, the authors expanded coverage of the literature from 10 years (1997–2007) in the previous review to 21 years in the current review (1990–2011), bringing in more current research and aligning the length of the review coverage with the procedures followed by other research review organizations such as the What Works Clearinghouse. Also, the review procedures were enhanced by employing a national panel of reviewers, using a standardized article evaluation format based on quality indicators derived from multiple sources (Gersten et al. 2005; Horner et al. 2005; NSP; WWC), and applying multiple screening and evaluation processes before articles were included in the review. All of these added features strengthened the rigor of the review process. In addition, the review was conducted in a highly transparent way so that readers could see exactly how practices were identified and which specific studies provided empirical support (i.e., studies are found in the original report accessible online).

Confidence that a practice is efficacious is built on replication, especially by different groups of researchers. In systematic, evaluative reviews of the literature such as this, the number of studies that support a given practice does not necessarily reflect the relative effect or impact of the practice (i.e., how powerful the intervention is in changing behavior), but does reflect the degree to which a practitioner may expect that the practice, when implemented with fidelity, will produce positive outcomes. Fifteen of the EBPs had over 10 studies providing empirical support for the practice, and among those, the foundational applied behavior analysis techniques (e.g., prompting, reinforcement) have the most support. Antecedent-based intervention, differential reinforcement, and video modeling also have substantial support with over 25 studies supporting their efficacy. The number and variety of these replications speak to the relative strength of these EBPs.

A clear trend in the set of studies found in this review was the authors’ use of combinations of EBPs to address a specific behavior problem or goal for the participant. These idiosyncratic packages differ from the multicomponent EBPs (e.g., pivotal response training, functional communication training, peer-mediated intervention and instruction). Multicomponent EBPs consist of the same methods used in the same way in multiple studies. In the idiosyncratic packages, combinations of methods were unique and not used in subsequent studies. They do demonstrate, however, that practitioners and researchers may employ multiple EBPs to address unique goals or circumstances.

Some focused intervention practices with well-defined procedures (e.g., independent work systems) were detected by this literature review and, despite strong evidence, were not included as EBPs because they did not meet one or more of the specific criteria (e.g., insufficient numbers of studies documenting efficacy, insufficient number of participants across studies). Other practices (e.g., reciprocal imitation training, joint attention interventions) were also supported by multiple demonstrations of efficacy, but all the studies were conducted by one research group (i.e., the practice efficacy needs to be replicated by at least one other research group). These focused intervention practices have national visibility and are likely to be replicated by other researchers in the future, which would allow them to meet the inclusionary criteria. It is important, however, to issue a cautionary note. There is a continuum of empirical support for practices falling below the EBP criteria, ranging from those just described, which have multiple studies documenting efficacy, to others for which only one or two methodologically acceptable studies exist. The further a practice is from the evidentiary criteria just noted, the greater scrutiny and caution practitioners should exercise in their choice of the practice for use with children and youth with ASD.

Limitations

As with nearly any review, some limitations exist for this review. As noted, the review was only of studies published from 1990 to 2011. Two limitations exist regarding this timeframe. First, we acknowledge that we are missing studies that occurred before 1990, although one might expect early (i.e., pre-1990) studies of important and effective practices to have been replicated in publications over subsequent years. Second, because of the time required to conduct a review of a very large database and involve a national set of reviewers, there is a lag between the end date for a literature search (i.e., 2011) and the publication of the completed review. Studies have been published in the interim that could have moved some practices into the EBP classification. The implication is that an update of this review should begin immediately.

The age range of participants in the studies reviewed was from birth to 22, or the typical school years (i.e., if one counts early intervention). This is important information for early intervention and service providers for school-age children and youth. The practices also have implications for older individuals with ASD, but the review falls short of specifically identifying EBPs for adults with ASD. Also, a major oversight was not collecting demographic information on the gender, race, and ethnicity of the participants of studies. Such information could have been a useful and important feature of this review. Last, in this review, the authors placed the emphasis on identifying the practices that are efficacious. The review provides no information about practices that researchers documented as not having an effect or about practices that have deleterious effects. Certainly, studies showing no effects are difficult to publish, and a well-acknowledged publication bias exists in the field, but such a limitation is difficult to avoid if one chooses to include only peer-reviewed articles.

Since this is a critical review and summary of the literature rather than a meta-analysis, there was no plan to calculate effect size, which could be seen as a limitation. The advantage of having effect size estimates is that one can compare the relative strength of interventions. For other disabilities, investigators have used meta-analysis effectively to document relative effect size of practices in special education (see Kavale and Spaulding 2011), but those analyses have been based on group experimental design studies. Currently there is no agreement on the best methodology for statistically analyzing SCD data and calculating effect size (Kratochwill et al. 2013), nor on whether effect sizes for group designs and SCD studies can or should be combined. Progress in developing and validating such techniques is occurring (Kratochwill and Levin 2014), and meta-analysis of comprehensive reviews, such as this one, may be a direction for the future.

Implications for Practice

An identified set of EBPs, such as described in this review, is a tool or resource for creating an individualized intervention program for children and youth with ASD. Practitioners’ expertise plays a major role in that process. From the evidence-based medicine movement, Sackett et al. (1996) noted that “the practice of evidence-based medicine means integrating individual clinical experience with the best available external clinical evidence from systematic research. By clinical expertise we mean the proficiency and judgment that individual clinicians acquire through clinical experience and clinical practice” (p. 71). Drawing from the clinical psychology and educational literatures, Odom et al. (2012) proposed a “technical eclectic model” in which practitioners initially establish goals for the learner and then use their professional expertise to select the EBP(s) that have produced or are likely to produce the desired outcome. Practitioners base their selection of practices on characteristics of the learner, their or others’ (e.g., family members’) previous history with the learner, their experience using a practice, access to professional development to learn the practice, as well as other features embedded in “professional judgment.” Adopting such a model requires professional development (Odom et al. 2013) and an intentional decision about supporting implementation (Fixsen et al. 2013) that extends beyond just having access to information about the practices. A major implication for future practice is the need to combine the knowledge generated by this and other systematic reviews of EBPs with preparation of practitioners to use their judgment in ways that will lead to effective programs for learners with ASD.

Implications for Future Research

This review has several implications for future research. Progress in developing and validating methods for calculating effect sizes for SCD could allow a comparison of the relative strength of EBPs, which could be seen as a direction for future research. Such intervention comparisons could also be conducted directly by employing group experimental designs or SCDs. For example, Boyd et al. (2014) directly compared the relative effects of the TEACCH and LEAP comprehensive treatment programs. In an older study, Odom and Strain (1986) used SCD to compare relative effects of peer-mediated and teacher antecedent interventions for preschool children with ASD. Certainly, the examination of the relative effects of different EBPs that focus on the same outcomes would be a productive direction for future research.

Scholars have distinguished between evidence-based programs and evidence-supported programs (Cook and Cook 2013). As noted, developers of some CTMs, such as the Lovaas Model (McEachin et al. 1993) and the Early Start Denver Model (Dawson et al. 2010), have conducted RCT efficacy studies that provide empirical support for their program models, which would qualify them as evidence-based programs. The technical eclectic program described previously would be characterized as an evidence-supported program in that EBPs are integral features of the program model, but the efficacy of the entire program model has not been validated through a randomized controlled trial. Given that the evidence-based term has been used loosely in the past, it is important to be specific about how the EBPs generated by this report fit with the entire movement toward basing instruction and intervention for children and youth with ASD on intervention science. Certainly, conducting efficacy trials for this technical eclectic program would be an important direction for future research.

This review reveals gaps that exist in current knowledge about focused intervention practices for children and youth with ASD. The majority of the intervention studies over the last 20 years have been conducted with preschool-age and elementary school-age children. A clear need for the field is to expand the intervention literature up the age range to adolescents and young adults with ASD (Rue and Knox 2013; Volkmar et al. 2014). The small number of studies that addressed vocational and mental health outcomes reflects this need. Similarly, fewer studies were identified for infants and toddlers with ASD and their families. While the evidence for comprehensive treatment programs for toddlers with ASD is expanding (Odom et al. 2014a), there is a need for moving forward the research agenda that addresses focused intervention practices for this age group. Early intervention providers and service providers for adolescents with ASD who build technical eclectic programs for children and youth with ASD now have to extrapolate from studies conducted with preschool and elementary-age children with ASD. This practice is similar to the psychopharmacological concept of off-label use of medications (e.g., those tested with adults and used with children and youth). The need for expanding the age range of intervention research has been identified by major policy initiative groups such as the Interagency Autism Coordinating Committee (2012), and the prospect for future research in this area is bright.

Because of the demographics of ASD, much of the research has been conducted with boys and young men with ASD, and less is known about the effects of interventions and outcomes for girls and young women. In addition, while acknowledging the oversight in not coding information about race/ethnic/cultural diversity and underrepresented groups in this review, it will be important for future studies to include an ethnically diverse sample in the studies (Pierce et al. 2014). Similarly, information about children’s or their families’ socioeconomic status is rarely provided in studies.

Conclusion

The current review identifies focused intervention practices for children and youth with ASD supported by efficacy research as well as the gaps in the science. As the volume and theoretical range of the literature have expanded, the number of EBPs has increased. This bodes well for a field that is in need of an empirical base for its practice and also for children and youth with ASD and their families, who may expect that advances in intervention science will lead to better outcomes. The prospect of better outcomes, however, is contingent on translating scientific results into intervention practices that service providers may access and on providing professional development and support for implementing the practices with fidelity. Fortunately, the emerging field of implementation science may provide the needed guidance for such a translational process (Fixsen et al. 2013), and professional development models for teachers and service providers working with children and youth with ASD have begun to adopt an implementation science approach (Odom et al. 2014b). Such movement from science to practice is a clear challenge and also an important next step for the field.

References

American Speech and Hearing Association. (2005). Evidence-based practice. Washington, DC: Author. Retrieved from http://www.asha.org/members/ebp/

Bennett, K., Reichow, B., & Wolery, M. (2011). Effects of structured teaching on the behavior of young children with disabilities. Focus on Autism and Other Developmental Disabilities, 26(3), 143–152. doi:10.1177/1088357611405040.

Boyd, B. A., Hume, K., McBee, M. T., Alessandri, M., Gutierrez, A., Johnson, L., et al. (2014). Comparative efficacy of LEAP, TEACCH and non-model-specific special education programs for preschoolers with autism spectrum disorders. Journal of Autism and Developmental Disorders, 44, 366–380. doi:10.1007/s10803-013-1877-9.

Camargo, S. P., Rispoli, M., Ganz, J., Hong, E. R., Davis, H., & Mason, R. (2014). A review of the quality of behaviorally-based intervention research to improve social interaction skills of children with ASD in inclusive settings. Journal of Autism and Developmental Disorders, 44, 2096–2116. doi:10.1007/s10803-014-2060-7.

Carlson, B., McLaughlin, T., Derby, K. M., & Blecher, J. (2009). Teaching preschool children with autism and developmental delays to write. Electronic Journal of Research in Educational Psychology, 7(1), 225–238.

Carter, A. S., Messinger, D. S., Stone, W. L., Celimli, S., Nahmias, A. S., & Yoder, P. (2011). A randomized controlled trial of Hanen’s ‘More Than Words’ in toddlers with early autism symptoms. Journal of Child Psychology and Psychiatry, 52, 741–752. doi:10.1111/j.1469-7610.2011.02395.x.

Carter, E. W., Sisco, L. G., Chung, Y., & Stanton-Chapman, T. (2010). Peer interactions of students with intellectual disabilities and/or autism: A map of the intervention literature. Research and Practice for Persons with Severe Disabilities, 35, 63–79. doi:10.2511/rpsd.35.3-4.63.

Chambless, D. L., & Hollon, S. D. (1998). Defining empirically supported therapies. Journal of Consulting and Clinical Psychology, 66, 7–18. doi:10.1037/0022-006X.66.1.7.

Chambless, D. L., Sanderson, W. C., Shoham, V., Johnson, S. B., Pope, K. S., Crits-Christoph, P., et al. (1996). An update on empirically validated therapies. Clinical Psychologist, 49, 5–18.

Cihak, D. F., & Foust, J. L. (2008). Comparing number lines and touch points to teach addition facts to students with autism. Focus on Autism and Other Developmental Disabilities, 23(3), 131–137. doi:10.1177/1088357608318950.

Cochrane, A. L. (1972). Effectiveness and efficiency: Random reflections on health services. London: Nuffield Provincial Hospitals Trust.

Cook, B. G., & Cook, S. C. (2013). Unraveling evidence-based practices in special education. Journal of Special Education, 47, 71–82. doi:10.1177/0022466911420877.

Dawson, G., Rogers, S. J., Munson, J., Smith, M., Winter, J., Greenson, J., et al. (2010). Randomized, controlled trial of an intervention for toddlers with autism: The early start Denver model. Pediatrics, 125, 17–23. doi:10.1542/peds.2009-0958.

Delano, M. E. (2007). Use of strategy instruction to improve the story writing skills of a student with Asperger syndrome. Focus on Autism and Other Developmental Disabilities, 22(4), 252–258. doi:10.1177/10883576070220040701.

Dugan, E., Kamps, D., Leonard, B., Watkins, N., Rheinberger, A., & Stackhaus, J. (1995). Effects of cooperative learning groups during social studies for students with autism and fourth-grade peers. Journal of Applied Behavior Analysis, 28(2), 175–188. doi:10.1901/jaba.1995.28-175.

Fixsen, D., Blase, K., Metz, A., & Van Dyke, M. (2013). Statewide implementation of evidence-based programs. Exceptional Children, 79, 213–232.

Fletcher, D., Boon, R. T., & Cihak, D. F. (2010). Effects of the TOUCHMATH program compared to a number line strategy to teach addition facts to middle school students with moderate intellectual disabilities. Education and Training in Autism and Developmental Disabilities, 45(3), 449–458.

Flores, M. M., & Ganz, J. B. (2007). Effectiveness of direct instruction for teaching statement inference, use of facts, and analogies to students with developmental disabilities and reading delays. Focus on Autism and Other Developmental Disabilities, 22(4), 244–251. doi:10.1177/10883576070220040601.

Ganz, J. B., & Flores, M. M. (2009). The effectiveness of direct instruction for teaching language to children with autism spectrum disorders: Identifying materials. Journal of Autism and Developmental Disorders, 39(1), 75–83. doi:10.1007/s10803-008-0602-6.

Gersten, R., Fuchs, L. S., Compton, D., Coyne, M., Greenwood, C. R., & Innocenti, M. S. (2005). Quality indicators for group experimental and quasi-experimental research in special education. Exceptional Children, 71, 149–164.

Gulsrud, A. C., Kasari, C., Freeman, S., & Paparella, T. (2007). Children with autism’s response to novel stimuli while participating in interventions targeting joint attention or symbolic play skills. Autism, 11(6), 535–546. doi:10.1177/1362361307083255.

Horner, R., Carr, E., Halle, J., McGee, G., Odom, S., & Wolery, M. (2005). The use of single subject research to identify evidence-based practice in special education. Exceptional Children, 71, 165–180.

Hughes, C., Kaplan, L., Bernstein, R., Boykin, M., Reilly, C., Brigham, N., et al. (2012). Increasing social interaction skills of secondary students with autism and/or intellectual disability: A review of interventions. Research and Practice for Persons with Severe Disabilities, 37, 288–307. doi:10.2511/027494813805327214.

Hume, K., & Odom, S. (2007). Effects of an individual work system on the independent functioning of students with autism. Journal of Autism and Developmental Disorders, 37(6), 1166–1180. doi:10.1007/s10803-006-0260-5.

Ingersoll, B. (2010). Brief report: Pilot randomized controlled trial of reciprocal imitation training for teaching elicited and spontaneous imitation to children with autism. Journal of Autism and Developmental Disorders, 40(9), 1154–1160. doi:10.1007/s10803-010-0966-2.

Ingersoll, B. (2012). Brief report: Effect of a focused imitation intervention on social functioning in children with autism. Journal of Autism and Developmental Disorders, 42(8), 1768–1773. doi:10.1007/s10803-011-1423-6.

Ingersoll, B., & Lalonde, K. (2010). The impact of object and gesture imitation training on language use in children with autism spectrum disorder. Journal of Speech, Language and Hearing Research, 53(4), 1040–1051. doi:10.1044/1092-4388(2009/09-0043).

Ingersoll, B., Lewis, E., & Kroman, E. (2007). Teaching the imitation and spontaneous use of descriptive gestures in young children with autism using a naturalistic behavioral intervention. Journal of Autism and Developmental Disorders, 37(8), 1446–1456. doi:10.1007/s10803-006-0221-z.

Interagency Autism Coordinating Committee. (2012). IACC strategic plan for autism spectrum disorder research: 2012 update. Retrieved from the U.S. Department of Health and Human Services Interagency Autism Coordinating Committee website: http://iacc.hhs.gov/strategic-plan/2012/index.shtml

Kasari, C., Freeman, S., & Paparella, T. (2006). Joint attention and symbolic play in young children with autism: A randomized controlled intervention study. Journal of Child Psychology and Psychiatry, 47(6), 611–620. doi:10.1111/j.1469-7610.2005.01567.x.

Kasari, C., Paparella, T., Freeman, S. N., & Jahromi, L. (2008). Language outcome in autism: Randomized comparison of joint attention and play interventions. Journal of Consulting and Clinical Psychology, 76, 125–137. doi:10.1037/0022-006X.76.1.125.

Kasner, M., Reid, G., & MacDonald, C. (2012). Evidence-based practice: Quality indicator analysis of antecedent exercise in autism spectrum disorders. Research in Autism Spectrum Disorders, 6, 1418–1425. doi:10.1016/j.rasd.2012.02.001.

Kavale, K., & Spaulding, L. S. (2011). Efficacy of special education. In M. Bray & T. Kehle (Eds.), The Oxford handbook of school psychology (pp. 523–554). New York, NY: Oxford University Press.

Kazdin, A. E. (2011). Single-case research designs: Methods for clinical and applied settings (2nd ed.). New York, NY: Oxford University Press.

Knight, V., McKissick, B. R., & Saunders, A. (2013). A review of technology-based interventions to teach academic skills to students with autism spectrum disorder. Journal of Autism and Developmental Disorders, 43, 2628–2648. doi:10.1007/s10803-013-1814-y.

Koegel, R. L., & Koegel, L. K. (2012). The PRT pocket guide. Baltimore, MD: Brookes.

Kratochwill, T. R., Hitchcock, J. H., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M., & Shadish, W. R. (2013). Single-case intervention research design standards. Remedial and Special Education, 34, 26–38. doi:10.1177/0741932512452794.

Kratochwill, T. R., & Levin, J. R. (2014). Single-case intervention research: Methodological and statistical advances. Washington, DC: American Psychological Association.

Kratochwill, T. R., & Shernoff, E. S. (2004). Evidence-based practice: Promoting evidence-based interventions in school psychology. School Psychology Review, 33, 34–48.

Krippendorff, K. (1980). Content analysis: An introduction to its methodology. Beverly Hills, CA: Sage.

Marcus, L., Schopler, E., & Lord, C. (2000). TEACCH services for preschool children. In J. Handleman & S. Harris (Eds.), Preschool education programs for children with autism (2nd ed., pp. 215–232). Austin, TX: PRO-ED.

Mavropoulou, S., Papadopoulou, E., & Kakana, D. (2011). Effect of task organization on the independent play of students with autism spectrum disorders. Journal of Autism and Developmental Disorders, 41, 913–925. doi:10.1007/s10803-010-1116-6.

McEachin, J. J., Smith, T., & Lovaas, I. O. (1993). Long-term outcome for children with autism who received early intensive behavioral treatment. American Journal on Mental Retardation, 97, 359–372.

Nathan, P. E., & Gorman, J. M. (2007). A guide to treatments that work (3rd ed.). New York, NY: Oxford University Press.

National Autism Center. (2009). National standards project findings and conclusions. Randolph, MA: Author.

National Research Council. (2001). Educating children with autism. Washington, DC: National Academy Press.

Odom, S. L., Boyd, B., Hall, L., & Hume, K. (2010a). Evaluation of comprehensive treatment models for individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 40, 425–436. doi:10.1007/s10803-009-0825-1.

Odom, S. L., Boyd, B., Hall, L., & Hume, K. (2014a). Comprehensive treatment models for children and youth with autism spectrum disorders. In F. Volkmar, S. Rogers, K. Pelphrey, & R. Paul (Eds.), Handbook of autism and pervasive developmental disorders (Vol. 2, pp. 770–778). Hoboken, NJ: Wiley.

Odom, S. L., Brantlinger, E., Gersten, R., Horner, R. D., Thompson, B., & Harris, K. (2004). Quality indicators for research in special education and guidelines for evidence-based practices: Executive summary. Arlington, VA: Council for Exceptional Children Division for Research. Retrieved from http://www.cecdr.org/pdf/QI_Exec_Summary.pdf

Odom, S. L., Collet-Klingenberg, L., Rogers, S., & Hatton, D. (2010b). Evidence-based practices for children and youth with autism spectrum disorders. Preventing School Failure, 54, 275–282. doi:10.1080/10459881003785506.

Odom, S. L., Cox, A., & Brock, M. (2013). Implementation science, professional development, and autism spectrum disorders: National Professional Development Center on ASD. Exceptional Children, 79, 233–251.

Odom, S. L., Hume, K., Boyd, B., & Stabel, A. (2012). Moving beyond the intensive behavior therapy vs. eclectic dichotomy: Evidence-based and individualized program for students with autism. Behavior Modification, 36, 270–297. doi:10.1177/0145445512444595.

Odom, S. L., & Strain, P. S. (1986). A comparison of peer-initiation and teacher-antecedent interventions for promoting reciprocal social interaction of autistic preschoolers. Journal of Applied Behavior Analysis, 19, 59–71. doi:10.1901/jaba.1986.19-59.

Odom, S. L., Thompson, J. L., Hedges, S., Boyd, B. L., Dykstra, J., Duda, M. A., et al. (2014b). Technology-aided interventions and instruction for adolescents with autism spectrum disorder. Journal of Autism and Developmental Disorders. doi:10.1007/s10803-014-2320-6.

Palmer, A., Didden, R., & Lang, R. (2012). A systematic review of behavioral intervention research on adaptive skill building in high functioning young adults with autism spectrum disorder. Research in Autism Spectrum Disorders, 6, 602–617. doi:10.1016/j.rasd.2011.10.001.

Pierce, N. P., O’Reilly, M. F., Sorrells, A. M., Fragale, C. L., White, P. J., Aguilar, J. M., & Cole, H. A. (2014). Ethnicity reporting practices for empirical research in three autism-related journals. Journal of Autism and Developmental Disorders, 44, 1507–1519. doi:10.1007/s10803-014-2041-x.

Pindiprolu, S. S. (2012). A review of naturalistic interventions with young children with autism. Journal of the International Association of Special Education, 13(1), 69–78.

Reichow, B., & Barton, E. E. (2014). Evidence-based psychosocial interventions for individuals with autism spectrum disorders. In F. Volkmar, S. Rogers, K. Pelphrey, & R. Paul (Eds.), Handbook of autism and pervasive developmental disorders (Vol. 2, pp. 969–992). Hoboken, NJ: Wiley.

Reichow, B., & Volkmar, F. R. (2010). Social skills interventions for individuals with autism: Evaluation of evidence-based practices within a best evidence synthesis framework. Journal of Autism and Developmental Disorders, 40, 149–166. doi:10.1007/s10803-009-0842-0.

Rockwell, S. B., Griffin, C. C., & Jones, H. A. (2011). Schema-based strategy instruction in mathematics and the word problem-solving performance of a student with autism. Focus on Autism and Other Developmental Disabilities, 26(2), 87–95. doi:10.1177/1088357611405039.

Rogers, S. J., & Vismara, L. A. (2008). Evidence-based comprehensive treatments for early autism. Journal of Clinical Child and Adolescent Psychology, 37, 8–38. doi:10.1080/15374410701817808.

Rousseau, M. K., Krantz, P. J., Poulson, C. L., Kitson, M. E., & McClannahan, L. E. (1994). Sentence combining as a technique for increasing adjective use in writing by students with autism. Research in Developmental Disabilities, 15(1), 19–37. doi:10.1016/0891-4222(94)90036-1.

Rue, H. C., & Knox, M. (2013). Capacity building: Evidence-based practice and adolescents on the autism spectrum. Psychology in the Schools, 50, 947–956. doi:10.1002/pits.21712.

Sackett, D. L., Rosenberg, W. M., Gray, J. M., Haynes, R. B., & Richardson, W. S. (1996). Evidence-based medicine: What it is and what it isn’t. British Medical Journal, 312, 71–72. doi:10.1136/bmj.312.7023.71.

Schlosser, R. W., & Wendt, O. (2008). Effects of augmentative and alternative communication intervention on speech production in children with autism: A systematic review. American Journal of Speech-Language Pathology, 17, 212–230. doi:10.1044/1058-0360(2008/021).

Shadish, W., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Boston, MA: Houghton Mifflin.

Smith, T., Groen, A. D., & Wynn, J. W. (2000). Randomized trial of intensive early intervention for children with pervasive developmental disorders. American Journal on Mental Retardation, 105, 269–285. doi:10.1352/0895-8017(2000)105<0269:RTOIEI>2.0.CO;2.

Songlee, D., Miller, S. P., Tincani, M., Sileo, N. M., & Perkins, P. G. (2008). Effects of test-taking strategy instruction on high-functioning adolescents with autism spectrum disorders. Focus on Autism and Other Developmental Disabilities, 23(4), 217–228. doi:10.1177/108835760832471.

Sperry, L., Neitzel, J., & Engelhardt-Wells, K. (2010). Peer-mediated instruction and intervention strategies for students with autism spectrum disorders. Preventing School Failure, 54, 256–264. doi:10.1080/10459881003800529.

Stahmer, A. C., Suhrheinrich, J., Reed, S., Schreibman, L., & Bolduc, C. (2011). Classroom pivotal response teaching for children with autism. New York, NY: Guilford.

Strain, P. S., & Bovey, E. (2011). Randomized, controlled trial of the LEAP model of early intervention for young children with autism spectrum disorders. Topics in Early Childhood Special Education, 31(3), 133–154. doi:10.1177/0271121411408740.

Strain, P. S., Wilson, K., & Dunlap, G. (2011). Prevent-teach-reinforce: Addressing problem behaviors of students with autism in general education classrooms. Behavioral Disorders, 36, 160–165.

Suhrheinrich, J., Hall, L. J., Reed, S. R., Stahmer, A. C., & Schreibman, L. (2014). Evidence based interventions in the classroom. In L. Wilkinson (Ed.), Autism spectrum disorder in children and adolescents: Evidence-based assessment and intervention in schools (pp. 151–172). Washington, DC: American Psychological Association. doi:10.1037/14338-008.

Volkmar, F. R., Reichow, B., & McPartland, J. C. (2014). Autism spectrum disorder in adolescents and adults: An introduction. In F. Volkmar, B. Reichow, & J. McPartland (Eds.), Adolescents and adults with autism spectrum disorder (pp. 1–14). New York, NY: Springer.

Walton, K. M., & Ingersoll, B. R. (2013). Improving social skills in adolescents and adults with autism and severe to profound intellectual disability: A review of the literature. Journal of Autism and Developmental Disorders, 43, 594–615. doi:10.1007/s10803-012-1601-1.

Wilczynski, S. M., Fisher, L., Sutro, L., Bass, J., Mudgal, D., Zeiger, V., et al. (2011). Evidence-based practice and autism spectrum disorders. In M. Bray & T. Kehle (Eds.), The Oxford handbook of school psychology (pp. 567–592). New York, NY: Oxford University Press.

Wong, C., Odom, S. L., Hume, K., Cox, A. W., Fettig, A., Kucharczyk, S., et al. (2014). Evidence-based practices for children, youth, and young adults with autism spectrum disorder. Chapel Hill: The University of North Carolina, Frank Porter Graham Child Development Institute, Autism Evidence-Based Practice Review Group. Retrieved from autismpdc.fpg.edu/content/elop-update.

Acknowledgments

Funding for this study was provided by Grant No. H325G07004 from the Office of Special Education Programs and Grant No. R324B090005 from the Institute of Education Sciences, both in the Department of Education. Opinions expressed do not reflect those of the funding agencies.

Cite this article

Wong, C., Odom, S.L., Hume, K.A. et al. Evidence-Based Practices for Children, Youth, and Young Adults with Autism Spectrum Disorder: A Comprehensive Review. J Autism Dev Disord 45, 1951–1966 (2015). https://doi.org/10.1007/s10803-014-2351-z

DOI: https://doi.org/10.1007/s10803-014-2351-z