Abstract

This study improves weighted genetic programming and uses proposed novel genetic programming polynomials (GPP) for accurate prediction and visible formulas/polynomials. Representing confined compressive strength and strain of circular concrete columns in meaningful representations makes parameter studies, sensitivity analysis, and application of pruning techniques easy. Furthermore, the proposed GPP is utilized to improve existing analytical models of circular concrete columns. Analytical results demonstrate that the GPP performs well in prediction accuracy and provides simple polynomials as well. Three identified parameters improve the analytical models—the lateral steel ratio improves both compressive strength and strain of the target models of circular concrete columns; compressive strength of unconfined concrete specimen improves the strength equation; and tie spacing improves the strain equation.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Confined concrete with lateral reinforcements increases its strength and ductility in axial compression. Many researchers have expended considerable effort to identify confinement mechanisms. Most of these studies are empirical or semi-empirical. Some assumptions are conventionally adopted to create an empirical or analytical equation, and unknowns in an equation are obtained by fitting data. Various analytical models have been applied to predict the compressive strength and strain of confined concrete beams. For instance, Mander et al. [1] developed a novel equation with five parameters for confined concrete beams. Some studies followed the work by Mander et al. [1] and analyzed different assumptions or parameters [2–5]. However, analytical models are frequently limited by their calculation accuracy. Soft-computing approaches have potential to enhance prediction accuracy.

Soft-computing approaches include neural networks (NNs), fuzzy logic, support vector machines, genetic algorithms (GAs), evolutionary polynomial regression (EPR), and genetic programming (GP). Each has unique benefits when applied to particular application categories. NNs are the most commonly used soft-computing approaches for inference tasks, from which many NN derivatives have been developed and applied [6–15]. However, NNs have been characterized as “black box” models due to the extremely large number of nodes and connections within their structures. Since it was first proposed by Koza [16], GP has garnered considerable attention due to its ability to model nonlinear relationships for input–output mappings. Baykasoglu et al. [17] compared a promising set of GP approaches, including Multi Expression Programming (MEP), Gene Expression Programming (GEP), and Linear Genetic Programming (LGP) [18–20]. Notably, LGP was the most efficient algorithm for studied limestone strengths. Differences between these algorithms are rooted in the methodology utilized to generate a GP individual. A chromosome representation, a tree topology, and a linear string are used by MEP, GEP, and LGP, respectively. Although, some formulas generated by MEP, GEP, and LGP have coefficients, all coefficients are fixed constants [17]. Several studies have utilized GP derivatives for construction industry problems. Baykasoglu et al. [21] applied GEP to determine concrete strength, cost, and slump. Yeh and Lien [22] developed a genetic operation tree (GOT) to investigate concrete strength. The GOT uses a tree topology (as does GEP) and optimized coefficients that differ from other GP derivatives. Coefficients do not frequently appear in formulas programmed using any of these GP models. Tsai [23] proposed a weighted genetic programming (WGP) to introduce weight coefficients into tree connections and generate a fully weighted formula.

Giustolisi and Savic [24] proposed an evolutionary polynomial regression (EPR) and its applications had been validated in civil engineering [25, 26]. Giustolisi and Savic [24] argued that GP is not very powerful in finding constants and that it tends to produce functions that grow in length over time. This paper significantly improves GP in finding constants and controls formula length with layered tree structures and a terminal. Additionally, EPR has to set up the number of additive terms. Detailed comparisons on EPR and the proposed model will be revealed after resultant formulas are available.

The main aims of this paper are as follows

-

1.

Improve previous WGP;

-

2.

Model confined compressive strength and strain of circular concrete columns with good prediction accuracy and visible formulas;

-

3.

Provide polynomials with a modified WGP, namely, genetic programming polynomials (GPP);

-

4.

Improve analytical models with the GPP;

-

5.

Study parameter impact using sensitivity analysis;

-

6.

Prune techniques for compacting formulas.

The remainder of this paper is organized as: (1) the proposed WGP, GPP methods and GAs; (2) details of confined compressive strength and strain of circular concrete columns; (3) analytical results and discussions; and (4) conclusions.

2 Weighted genetic programming

Following the study by Tsai [23], this paper presents a WGP method with an NL-layered tree structure (Fig. 1). The eventual layer has 2NL−1 parameter nodes and each parameter node (\( x_{i}^{NL} \)) selects one input (including a unit parameter “1”). When a unit parameter is selected, the value of the parameter node uses its weight (i.e., value of w is not 1) to create a coefficient.

where \( x_{i}^{NL} \) represents nodes in the NL-th layer and i is a related node number; P j is the j-th input parameter; and NI is the number of inputs. Each \( x_{i}^{NL} \) node selects one attached P j . All nodes in the remaining layers are operator nodes, which use operators to calculate the values of parent nodes in a down-top order and are functions of child nodes (Fig. 1). Each operation node y is operated by a set of defined functions with the two child nodal inputs of x i and x j with weights of w i and w j , respectively.

This paper adopts f 0 and nine functions in Eq. (2) for each operator selection F. A unique operator, f 0, is designed as a branch terminal ( “T”). When “T” is selected for an operator node, it uses the value of the left-most parameter node as its nodal value directly. Therefore, some operator nodes do not exist in the final WGP results when “T” is used; thus, “T” is a default operator function and novel in WGP comparing to Tsai and Lin [27]. f 1 is designed to inherit the child nodes on the left with w i scaling, and is a unary operator (using “S”). This “S” does not exist in GP function sets. Although f 2 is a plus operator (+), the “−” operator in GP is absent in WGP, because f 2 fulfills negative weights. Thus, f 3, f 4, f 5, f 6, f 7, f 8, and f 9 are “×”, “/”, “^”, “sin”, “cos”, “exp”, and “log” with balanced weights, respectively. Furthermore, a lucky guess function may be utilized. Consequently, variables that must be optimized include (2NL−1 − 1) selected operators, (2NL−1) selected parameters, and (2NL − 2) optimized weights.

2.1 Genetic programming polynomials

Many operator functions can be created to model problems. If a lucky function is suggested, training performance will be improved markedly. However, such good luck is rare. Thus, another choice for modeling problems in a concise and simple format seems to be a good idea. Polynomials exist in a wide range of disciplines, including mathematics and the sciences. Mathematically, a polynomial is an expression of finite length constructed from variables and constants using only addition, subtraction, multiplication, and non-negative integer exponents. This paper further designs a novel WGP derivative to provide polynomials for modeling engineering problems, namely, genetic programming polynomials (GPP). Therefore, a new function set is designed for WGP to create GPP:

where f 1, f 2, f 3, and f 4 are “S”, “+”, “×”, and “^”, respectively; the exponent term, w j , in f 4 uses integers 1–10 to create positive integers as exponent terms for polynomials. The division function (f 4) in Eq. (2) is not allowed for polynomials. All materials for the WGP and GPP are considered ready, with the exception of operator selection, parameter selection, and weight optimization. Obviously, optimization generates difficult challenges for the WGP and GPP when a large NL is used. For an NL-layered WGP or GPP, variables that must be optimized include (2NL−1 − 1) selected operators, (2NL−1) selected parameters, and (2NL − 2) optimized weights, and typed of integers, integers and floats, respectively (Table 1). The global search for (2NL+1 − 3) variables is performed by means of GA [28]. Standard GA uses a binary alphabet to form chromosomes. Instead, float GA coding is used here to determine integer and float variables simultaneously. Each GA individual has a double vector varied within 0–1, containing parameter selections (one \( x_{i}^{NL} \) node selects an attached P j ); operator selections (F) and weights (w). Values are linearly transferred to boundaries of \( x_{i}^{NL} \), F, and w at integer 0–NI, integer 0–9 for WGP (integer 0–4 for GPP), and float −10 to 10 respectively. In this study, the fitness function was directly set as inverse of the training root mean square error (RMSE). A larger fitness value indicates a healthier individual. Procedures of WGP and GPP are demonstrated in Fig. 2. Only one difference exists among WGP and GPP procedures, i.e. the boundaries of operator selections (step 4 in Fig. 2).

The following GA parameters are also used in both WGP and GPP implementations [23]:

-

1.

Scattered crossover at a rate of 0.8.

-

2.

Uniform mutation at a rate of 0.05.

-

3.

Uniform selection stochastically.

-

4.

200 individuals.

-

5.

5,000 iterations without any other terminate criterion.

For instance, a 3-layed GPP needs 13 GA float variables ranged within 0–1 to identify three used operators, four selected parameters, and six balanced weights (Fig. 3). Each child node has an attached weight connecting to its parent node. Sequentially, a corresponding GPP tree structure can be obtained. Finally, the associated formula can be found.

3 Confined compressive strength and strain of circular concrete columns

3.1 Data for confined compressive strength and strain of circular concrete columns

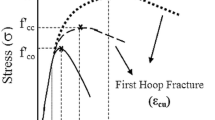

Confined concrete is subjected to transverse reinforcement in the form of closed hoops or spirals to prevent lateral swelling. Such confinement increases the compressive strength of concrete and enhances its ductility. Many studies have focused on developing analytical models and identifying factors affecting confined concrete. Oreta et al. [29] adopted the three datasets for circular concrete columns from Mander et al. [1], Sakai et al. [30], and Sakai [4]. Eight factors were listed for compressive strength of confined concrete specimen \( f_{cc}^{\prime } \) and confined strain at peak stress, ε cc . These eight factors are: (1) compressive strength of an unconfined concrete cylinder, \( f_{c}^{\prime } \); (2) compressive strength of an unconfined concrete specimen with the same size and geometry, \( f_{co}^{\prime } \); (3) core diameter of a circular column, d; (4) column height, H; (5) yield strength of lateral or transverse reinforcements, f yh ;(6) ratio of volume of a lateral reinforcement to volume of a confined concrete core, ρ s ; (7) spacing of a lateral reinforcement or spiral pitch, s; and, (8) ratio of longitudinal steel to the area of a core of section, ρ cc . Although \( f_{co}^{\prime } \) was not used in NN prediction by Oreta et al. [29], it is retained in this work. Of 38 column experiments, 29 were used for training and 9 for testing (Table 2). An NN result set (N7-4-2) was adopted from the work by Oreta et al. [29]. Their attached RMSE were calculated as 1.68 MPa and 0.054 for \( f_{cc}^{\prime } \) and ε cc , respectively. Such analytical results provide references for prediction accuracy. Therefore, this work attempts to achieve prediction accuracies as good as those of NNs and provide visible formulas for \( f_{cc}^{\prime } \) and ε cc against black-box NNs.

3.2 Analytical models for confined compressive strength and strain of circular concrete columns

Various analytical models have been applied to calculate compressive strength, \( f_{cc}^{\prime } \), and the corresponding strain, ε cc , of confined concrete columns. However, these models usually have disadvantages in terms of prediction accuracy and advantages in reasonable/visible formulas comparing to NN learning. This study improves analytical models with formulas via soft computing approaches. Since both WGP and GPP models can provide visible formulas, two analytical models are chosen to be improved.

Hoshikuma et al. [3] stated:

Sakai [4] provided:

where \( f_{cc}^{\prime HOS} \) and \( \varepsilon_{cc}^{HOS} \) are models developed by Hoshikuma et al. [3]; and \( f_{cc}^{\prime SAK} \) and \( \varepsilon_{cc}^{SAK} \) were developed by Sakai [4]. Their corresponding RMSEs can be calculated using aforementioned datasets. They are 10.2 MPa and 0.155 for \( f_{cc}^{\prime HOS} \) and \( \varepsilon_{cc}^{HOS} \), and 6.24 MPa and 0.159 for \( f_{cc}^{\prime SAK} \) and \( \varepsilon_{cc}^{SAK} \), respectively. The calculation accuracy of analytical models also provides references for soft computing approaches and will be improved by the proposed GPP later.

4 Results and discussion

4.1 Predictions and visible formulas

This paper utilizes NLs in the range of 2–6 to model circular concrete columns. Tables 3 and 4 list statistical results of 10 runs for \( f_{cc}^{\prime } \) and ε cc , respectively, with concerns of the use of the “T” operator. Results focus on training/testing RMSE, execution time, and “Count”. The “Count” is used to count the number of activated operators. For instance, a fully linked four-layer tree has 7 operator nodes; thus, the “Count” is 7. When a “T” function is used in the third layer, the “Count” is 6. As a “T” occurs in the second layer, three operator nodes are eliminated and the “Count” is 4. Therefore, the “Count” is designed to represent the number of operator nodes needed to model \( f_{cc}^{\prime } \) and ε cc . The “Count” is markedly related to prune the complexity of GP trees and effect on conciseness of resulted formulas. Additionally, the use of one-handed operators also positively decreases tree complexity. As NL increases, computational time increases, the complexity of the tree structure increases, and the accuracy of computational results increases. Theoretically, both WGP and GPP may achieve better prediction accuracy without using “T” than those with employments of “T”. However, when a tree structure uses “T” operators, parts of its variables do not impact on the target function and need not be optimized. That eases the loading of optimization and may lead results to further prediction accuracy easily. Additionally, it seems that both WGP and GPP achieve results under acceptable accuracies with the uses of “T” operator. Therefore, they are primarily compared hereafter. Overall four-layer tree structures are sufficient for achieving good prediction accuracy (Tables 3, 4), as in WGP findings obtained by in Tsai and Lin [27]. Obviously, WGP gives better accuracy than GPP because WGP uses more complicated functions for operators. However, GPP always adopts simpler trees to model circular concrete columns than WGP and provide compact formulas (see “Count” in Tables 3, 4). Moreover, the accuracy of GPP remains acceptable. Finally, a best trial is selected among the 10 runs according to minimum training and testing RMSE summations. The final \( f_{cc}^{\prime } \) and ε cc formulas are defined as \( f_{cc\;NL}^{\prime WGP} \) and \( \varepsilon_{cc\,NL}^{WGP} \) for WGP; and \( f_{cc\;NL}^{\prime GPP} \) and \( \varepsilon_{cc\,NL}^{GPP} \) for GPP. They are listed as follows:

.

Although WGP usually provides better prediction accuracy than GPP, WGP formulas are much complex than those of GPP. Obviously, some WGP operators pick functions of “sin”, “cos”, “exp”, and “log”. However, their significances are hard to be identified. Thereby, the functions are suggested for specific problems, when particular attributes are determined or some of their parameters are angles (or periodical). When the background knowledge in detail is not available, the proposed GPP is more applicable than WGP to model problems with polynomials. Finally, GPP formulas are suggested in terms of good prediction accuracy and simplicity of formulas. Of which, \( f_{cc\; 4}^{\prime GPP} \) is proposed for \( f_{cc}^{\prime } \) with accuracy at 2.00 MPa/1.92 MPa (associated value of coefficient of determination R2 are 0.966/0.969) (bold values in Table 3); and \( \varepsilon_{cc\,4}^{GPP} \) for ε cc with 0.104/0.080 accuracy (0.842/0.754 in R2) (bold values in Table 4).

4.2 Results of k-fold cross validations and comparisons with neural networks

As previous results, a four-layer GPP structure is enough for modeling circular concrete columns. A k-fold cross validation method is further used to evaluate performance of the four-layer GPP model. Fivefold cross validations are conducted. Source data are partitioned into five equal-sized random datasets. Four folds are used to train the GPP model and a fifth fold for testing. Each experience conducts 10 runs and is repeated five times such that each of five folds is used for testing the resultant model trained by the other four. The uses of random folds are primary for measuring model stability, especially where further datasets are costly to collect. Additionally, in order to compare with neural network results [29], which used 7 parameters excepting \( f_{co}^{\prime } \), GPP results with 7 parameters are also provided. Results of cross validations are shown in Table 5. With 8 parameters, it seems that results of \( f_{cc}^{\prime } \) are not as stable as those of ε cc ; but they performance well in terms of R2 (over 0.9) and are acceptable. When \( f_{co}^{\prime } \) is withdrawn, RMSE of \( f_{cc}^{\prime } \) results are obviously increased, which indicates the significance of \( f_{co}^{\prime } \) to \( f_{cc}^{\prime } \). Comparing to neural network results (1.68 MPa for \( f_{cc}^{\prime } \) and 0.054 for ε cc ), the best runs of GPP give 2.31/1.74 MPa (0.957/0.970 in R2) for \( f_{cc}^{\prime } \) and 0.112/0.068 (0.816/0.773 in R2) for ε cc . Although, GPP does not provide results as accurate as black-box neural networks, the prediction accuracy is still acceptable in terms of R2 and visible formulas can be obtained against black-box approaches. Moreover, it is a fact that that ε cc measurements are not as accurate as that of \( f_{cc}^{\prime } \). It may be the reason that GPP always gives better R2 values for \( f_{cc}^{\prime } \) than those for ε cc .

4.3 Comparisons on EPR and GPP

The proposed WGP and GPP are models of symbolic regression. EPR is another symbolic data-driven technique. One of the general model structures of EPR is formed as:

where a is a coefficient term, m is the number of non-constant terms, P is a input parameter (candidate-independent input therein), ES is a NI by m exponent matrix valued within user-defined bounds, and Z is a product of inputs. Since ERP and GPP are innately different, this paper compares them with GPP resultant formulas. In terms of Eq. (24), four terms are included and the coefficients are products of several GPP weights. To obtain the same polynomial of Eq. (24), EPR needs settings: four coefficient terms ranged within [−0.0356, 0.185], and a 4 by 8 ES matrix valued at −1, 0, or 1. In Eq. (25), there are 6 terms after simplification. Using EPR to obtain Eq. (25), the maximum value of a should be ranged within [−1.51 × 0.005464, 0.512], and a 6 by 8 ES matrix valued from −8 to 8 should be used. As aforementioned comparisons, GPP shows differences to EPR in settings of resultant polynomial, high flexibilities in polynomial forms, and products of weights to provide extreme coefficient values. Additionally, GPP improves GP in finding constants and does WGP in resulting polynomials. Furthermore, an extended use of GPP is revealed in the coming sub-section.

4.4 Improving analytics models

The abilities of GPP were proved in aforementioned descriptions. Another use is to improve analytical models using the proposed GPP. The idea of improving analytical models can be found in Tsai [23], which used previous WGP (without “T” operators). The idea is to make a prediction based on a summation of calculations by analytical models and GPP prediction to fit the targets. Therefore, the GPP makes predictions basing on analytical models and improves analytical models, namely, improving GPP (IGPP). Following a process similar to that of Tables 3, 4, results of improving \( f_{cc}^{\prime HOS} \), \( \varepsilon_{cc}^{HOS} \), \( f_{cc}^{\prime SAK} \), and \( \varepsilon_{cc}^{SAK} \) can be made in Table 6. The accuracy of NN results can be treated as references to IGPP still; however, the original accuracy achieved by analytical models is the most important object to this IGPP learning. The IGPP markedly improves \( f_{cc}^{\prime HOS} \) from 10.21 MPa to 1.97 MPa/2.03 MPa (0.967/0.966 in R2); and \( \varepsilon_{cc}^{HOS} \) from 0.155 to 0.089/0.059 (0.883/0.866 in R2). Improvements for \( f_{cc}^{\prime SAK} \) and \( \varepsilon_{cc}^{SAK} \) are not outstanding but are good. Improving \( f_{cc}^{\prime SAK} \) are from 6.24 MPa to 2.62 MPa/1.16 MPa (0.941/0.989 in R2), and \( \varepsilon_{cc}^{SAK} \) from 0.159 to 0.118/0.071 (0.795/0.806 in R2). The final IGPP formulas are \( f_{cc\,NL}^{\prime IGPP} \) and \( \varepsilon_{cc\,NL}^{IGPP} \). The IGPP formulas are as follows:

.

To reveal the improvements of the aforementioned equations upon \( f_{cc}^{\prime HOS} \) and \( \varepsilon_{cc}^{HOS} \), Figs. 4 and 5 compare results of experiments, analytical models, and IGPP formulas. It can be easily obtained that \( f_{cc}^{\prime HOS} \) and \( \varepsilon_{cc}^{HOS} \) frequently overestimate the strength and strain based on experimental results. Therefore, all IGPP formulas generally contribute negative impacts on improving \( f_{cc}^{\prime HOS} \) and \( \varepsilon_{cc}^{HOS} \). Comparing all IGPP formulas in terms of result accuracy and formula simplicity (Table 6), Eqs. (28) and (32) are selected in improving \( f_{cc}^{\prime HOS} \) and \( \varepsilon_{cc}^{HOS} \), respectively, and both of them have good consistency with experimental results, especially Eq. (28). Since these formulas are visible, they render parameter studies easy. Table 7 shows the occurrences of input parameters in IGPP formulas. For instance, \( f_{cc\;4}^{\prime \,IGPP} \) results are more accurate than \( f_{cc\;3}^{\prime \,IGPP} \) with presence of P 8 and \( f_{cc\;5}^{\prime \,IGPP} \) makes further improvements with three additional input parameters. Obviously, P 6 is significant in improving \( f_{cc}^{\prime HOS} \) and \( \varepsilon_{cc}^{HOS} \), P 2 improves \( f_{cc}^{\prime HOS} \), P 7 improves \( \varepsilon_{cc}^{HOS} \), and P 3 does nothing. The same process can be applied to Eqs. (6)–(25) and the parameters will impact on modeling the confined compressive strength and strain of circular concrete columns instead of improving analytical models.

There are many manners to improve prediction errors of Eqs. (4) and (5). For instance, GA can be directly applied to optimize coefficients of analytical equations, which can be formed as:

where c 1–c 8 are coefficients for optimization. Same GA settings are used and 10 runs for each equation. The best trial results, according to minimization of summation of training and testing errors, can be found. The prediction RMSE of \( f_{cc}^{\prime HOS} \) is improved from 10.2 MPa to 4.41/4.89 MPa (training/testing RMSE); \( \varepsilon_{cc}^{HOS} \) from 0.155 to 0.111/0.0792; \( f_{cc}^{\prime SAK} \) from 6.24 MPa 3.98/4.17 MPa; and \( \varepsilon_{cc}^{SAK} \) from 0.159 to 0.138/0.148. Although the improvement in prediction accuracy is significant, IGPP still provide better results. Two major differences existed between the aforementioned manner and IGPP. One is that IGPP does not have to set a fixed format for the studied equation. Therefore, better prediction accuracy can be expected for IGPP and users need not concern about which coefficient should be optimized (c 1 in Eq. (36) or their exponent coefficients). The other difference is that the analytical model is completely kept in the obtained IGPP formulas. Improvements are directly achieved upon analytical models. The manner of tuning coefficients breaks the original model, but it is useful for figuring out an empirical model under prerequisites of influenced parameter relationships.

4.5 Sensitivity analysis

Sensitivity analysis can be applied to assess the impact of parameters [31]. A common approach in sensitivity analysis is to change one factor at a time to determine its effect on output. This work adopts mean values for all inputs and standard deviation of a targeted input is treated as the variation of the factor changed.

where M represents mean values of all inputs, δ i is the standard deviation of the i-th input and impacts the mean value of the i-th input (found in Table 2), and V is an output value calculated by IGPP formulas. Therefore, SA 1 i is a sensitivity measurement with a positive variation on the i-th input and SA 2 i with a negative variation. Table 8 lists the IGPP sensitivity results. When an input parameter is linear in an IGPP formula, the values of SA 1 i and SA 2 i are the same. As the effect of the i-th input on output increases, SA 1 i or SA 2 i increase. When an input is insensitive to outputs, it can be omitted or replaced. For instance, P 8 can be omitted to compact \( f_{cc\;4}^{\prime \,IGPP} \). Notably, P 2 and P 6, especially P 6, are important to IGPP formulas for \( f_{cc}^{\prime } \).

4.6 Pruned IGPP formulas

Another method for studying the impact of parameters is pruning technique [32], which replaces an input with a fixed value (e.g., a mean or median) to determine the effect of the input on outputs. Furthermore, the pruning technique can be used to prune/compact formulas. Selecting \( f_{cc\;4}^{\prime \,IGPP} \) as an example, it has training/testing RMSEs at 1.97/2.03 MPa originally (step (a) in Table 9). The process continues replacing parameters one by one. As P 2 is replaced in \( f_{cc\;4}^{\prime \,IGPP} \), RMSEs increase to 4.38/5.07 MPa. Thus, P 2 cannot be replaced by its mean. After steps (b)–(d), P 8 is a good candidate for removal from \( f_{cc\;4}^{\prime \,IGPP} \) with an accuracy at 2.25/2.01 MPa. However, coefficients in the formula of step (d) are not yet optimized. The GAs can be applied again to optimize constants of A, B, and C in the formula of step (e). The accuracy after step (e) is improved again relative to that in step (d). Sequentially, steps (f)–(h) (Table 9) remove two parameters at a time. Finally, the formula from step (e) is applied to prune \( f_{cc\;4}^{\prime \,IGPP} \). Furthermore, the pruned and optimized \( f_{cc\;4}^{\prime \,IGPP} \) is very similar to those in Eqs. (12) and (16), although they differ innately. This work considers above evidences as the reliability of the proposed GPP. Finally, the formula in step (e) (Table 9) is applied to model \( f_{cc}^{\prime } \) and two parameters are involved. The formula is listed below with an accuracy of 2.20/1.91 MPa, indicating that \( f_{co}^{\prime } \) and ρ s are essential to improve \( f_{cc}^{\prime HOS} \).

Equations (32), (33), and (35) have similar formats but have entirely different tree structures (Fig. 6). The evidence of reliable GPP is therefore proved again. A formula with two variables can be assigned to GAs to improve \( \varepsilon_{cc}^{HOS} \).

Variables A and B in Eq. (41) can be easily obtained by GAs. When optimization is achieved, Eq. (41) can be treated as the ultimate IGPP solution for improving \( \varepsilon_{cc}^{HOS} \) with RMSEs at 0.089/0.059. Moreover, ρ s and s are identified important when improving \( \varepsilon_{cc}^{HOS} \). Furthermore, this work follows same steps in Table 9 for \( \varepsilon_{cc\, 5}^{IGPP} \), and the same formula in Eq. (41) can be obtained. Certainly, such a pruning process can be applied to Eqs. (6)–(25). Summarily, as visible formulas can be obtained, extended uses can be easily carried out.

To combine Eq. (4), (40), and (41) together, improved \( f_{cc}^{\prime HOS} \) and \( \varepsilon_{cc}^{HOS} \) can be formed as:

The proposed formulas attempt to modify the influences of \( f_{co}^{\prime } \) and ρ s on confined compressive strength \( f_{cc}^{\prime } \) and achieve higher calculation accuracy based on current datasets. Although eight input parameters are used herein, no new parameter is altered to impact the improved \( f_{cc}^{\prime HOS} \). A new parameter, i.e. s, is used to improve \( \varepsilon_{cc}^{HOS} \). The occurrence of s can be justified by Sakai [4] (see Eq. (5)). Owing to the coefficients in the improved terms are numerical results not analytical, they are not meaningful in structural mechanism and are changed when using different datasets. In spite of lacking complicated structural mechanism, the improved formulas for confined compressive strength and strain of circular concrete columns achieve better accuracy than those of the original model (Figs. 7, 8). The influenced parameters are key results of this paper to identify potential parameters on improving analytical models.

5 Conclusions

This study improves previous WGP with a terminate operator, which reduces tree complexity and makes formulas compact, and proposes a novel GPP, which allow GP approaches providing polynomials for problems. Engineering applications focus on not only modeling compressive strength and strain, but also particularly improving analytical models with GPP. Employments of sensitivity analysis and pruning techniques can also be found in this study. Both WGP and GPP achieve good prediction accuracy and visible formulas for target strength and strain. The significant findings of this study are as follows.

-

1.

Visible formulas are bonus production of the WGP and GPP compared with black-box approaches. Unlike analytical models, the proposed WGP and GPP directly provide functional relationships for strength and strain based on fitting data without a prior setting of equation formats.

-

2.

The GPP provides polynomials for compressive strength and strain of circular concrete columns. This approach provides simple solutions for problems.

-

3.

Improving analytical models with soft computing approaches is a novel idea. This IGPP may open a new avenue for improving existing models using soft computing approaches. Both WGP and GPP, even EPR, can be implemented to proceed same manner on improving various analytical models.

-

4.

Visible formulas make parameter studies, sensitivity analysis, and application of pruning techniques easy.

-

5.

Equations (38) and (39) improve the analytical equations developed by Hoshikuma et al. [3]. The ratio of lateral reinforcement volume to confined concrete core volume, ρ s , impacts improvements to both confined compressive strength and strain equations; the compressive strength of an unconfined concrete specimen of the same size and geometry, \( f_{co}^{\prime } \), improves the strength equation and the spacing of lateral reinforcement or spiral pitch, s, in the strain equation.

-

6.

The proposed GPP is a data-driven approach. Owing to only 38 datasets are used herein, there is possibility of over-fitting in the obtained formulas although cross-validation method is conducted.

References

J.B. Mander, M.J.N. Priestley, R. Park, Observed stress-strain behavior of confined concrete. J. Struct. Eng. 114(8), 1827–1849 (1988)

M. Saatcioglu, S.R. Razvi, Strength and ductility of confined concrete. J. Struct. Eng. 118(6), 1590–1607 (1992)

K. Hoshikuma, K. Kawashima, K. Nagaya, A.W. Taylor, Stress-strain model for confined reinforced concrete in bridge piers. J. Struct. Eng. 123(5), 624–633 (1997)

J. Sakai, Effect of Lateral Confinement of Concrete and Varying Axial Load on Seismic Response of Bridges. Doctor of Engineering Dissertation, Dept. of Civil Engineering, Tokyo Institute of Technology, Tokyo (2001)

G.G. Penelis, A.J. Kappos, Earthquake-Resistant Concrete Structures, (E&FN Spon, London, 1997) Sec. 7.4, pp. 177–196

M. Mehrjoo, N. Khaji, H. Moharrami, A. Bahreininejad, Damage detection of truss bridge joints using Artificial Neural Networks. Expert Syst. Appl. 35(3), 1122–1131 (2008)

E. Mesbahi, Y. Pu, Application of ANN-based response surface method to prediction of ultimate strength of stiffened panels. J. Struct. Eng. 134(10), 1649–1656 (2008)

Y.Q. Ni, H.F. Zhou, J.M. Ko, Generalization capability of neural network models for temperature- frequency correlation using monitoring data. J. Struct. Eng. 135(10), 1290–1300 (2009)

H.-C. Tsai, Hybrid high order neural networks. Appl. Soft Comput. 9, 874–881 (2009)

N.B. Dash, S.N. Panda, R. Remesan, N. Sahoo, Hybrid neural modeling for groundwater level prediction. Neural Comput. App. 19(8), 1251–1263 (2010)

H.-C. Tsai, Predicting strengths of concrete-type specimens using hybrid multilayer perceptrons with center-unified particle swarm optimization. Expert Syst. Appl. 37, 1104–1112 (2010)

A. Nazari, G. Khalaj, S. Riahi, ANFIS-based prediction of the compressive strength of geopolymers with seeded fly ash and rice husk-bark ash. Neural Comput. App. 1–13 (2011)

S. Cawley, F. Morgan, B. McGinley, S. Pande, L. McDaid, S. Carrillo, J. Harkin, Hardware spiking neural network prototyping and application. Genet. Program. Evol. M. 12(3), 257–280 (2011)

H. Yaprak, A. Karaci, I. Demir, (2011) Prediction of the effect of varying cure conditions and w/c ratio on the compressive strength of concrete using artificial neural networks. Neural Comput. App. 1–9 (2011)

H.-C. Tsai, Y.-W. Wu, Y.-Y. Tyan, Y.-H. Lin, Programming Squat Wall Strengths and Tuning Associated Codes with Pruned Modular Neural Network. Neural Comput App, Accepted (2012)

J.R. Koza, Genetic programming: On the programming of computers by means of natural selection (MIT Press, Massachusetts, 1992)

A. Baykasoglu, H. Güllü, H. Çanakçi, L. Ozbakir, Prediction of compressive and tensile strength of limestone via genetic programming. Expert Syst. Appl. 35(1–2), 111–123 (2008)

M. Oltean, D. Dumitrescu, Multi expression programming, technical report, UBB-01-2002, Babes-Bolyai University, Cluj-Napoca, Romania (2002). www.mep.cs.ubbcluj.ro

C. Ferreira, Gene expression programming: A new adaptive algorithm for solving problems. Compl. Syst. 13(2), 87–129 (2001)

M. Bhattacharya, A. Abraham, B. Nath, A linear genetic programming approach for modeling electricity demand prediction in Victoria. In Proceedings of the hybrid information systems, first international workshop on hybrid intelligent systems, Adelaide, Australia, pp 379–393 (2001).

A. Baykasoglu, A. Oztas, E. Ozbay E, Prediction and multi-objective optimization of high-strength concrete parameters via soft computing approaches. Expert Syst. Appl. 36(3), 6145–6155 (2009)

I.-C. Yeh, L.-C. Lien, Knowledge discovery of concrete material using genetic operation trees. Expert Syst. Appl. 36(3), 5807–5812 (2009)

H.-C. Tsai, Using weighted genetic programming to program squat wall strengths and tune associated formulas. Eng. Appl. Artif. Intell. 24, 526–533 (2011)

O. Giustolisi, D.A. Savic, A symbolic data-driven technique based on evolutionary polynomial regression. J. Hydroinf. 8(3), 207–222 (2006)

L. Berardi, Z. Kapelan, O. Giustolisi, D. Savic, Development of pipe deterioration models for water distribution systems using EPR. J. Hydroinf. 10(2), 113–126 (2008)

A. Doglioni, D. Mancarella, V. Simeone, O. Giustolisi, Inferring groundwater system dynamics from hydrological time-series data. Hydrol. Sci. J. 55(4), 593–608 (2010)

H.-C. Tsai, Y.-H. Lin, Predicting high-strength concrete parameters using weighted genetic programming. Eng. Comput. 27(4), 347–355 (2011)

J.H. Holland, Adaptation in neural and artificial systems (The University of Michigan Press, Ann Arbor, 1975)

A.W.C. Oreta, K. Kawashima, Neural network modeling of confined compressive strength and strain of circular concrete columns. J. Struct. Eng. 129(4), 554–561 (2003)

J. Sakai, K. Kawashima, H. Une, K. Yoneda, Effect of tie spacing on stress-strain relation of confined concrete. J. Struct. Eng. 46A(3), 757–766 (2000)

M. Scardi, L.W. Harding, Developing an empirical model of phytoplankton primary production: a neural network case study. Ecol. Modell. 120(2), 213–223 (1999)

C.-H. Peng, I.-C. Yeh, L.-C. Lien, Modeling strength of high-performance concrete using genetic operation trees with pruning techniques. Comput. Concrete 6(3), 203–223 (2009)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Tsai, HC., Pan, CP. Improving analytical models of circular concrete columns with genetic programming polynomials. Genet Program Evolvable Mach 14, 221–243 (2013). https://doi.org/10.1007/s10710-012-9176-3

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10710-012-9176-3