Abstract

Slope stability analysis is an enduring research topic in engineering and academia. Accurately predicting the factor of safety (FOS) of slopes, and hence their stability and performance, is not an easy task. The current study aims to predict the FOS from geometrical and geotechnical input parameters [unit weight (γ), cohesion (C), slope angle (β), height (H), angle of internal friction (φ) and pore pressure ratio (ru)]. Markov chain Monte Carlo (MCMC) methods have become a ubiquitous tool in Bayesian analysis. This paper implements MCMC methods for Bayesian analysis of models using WinBUGS, a freely available software package. WinBUGS is used to identify the most appropriate model for estimating the FOS among twenty (20) proposed candidate models. The models were applied to data available in the open literature. The unknown parameters of the models are treated as random variables. WinBUGS, which performs Bayesian analysis of complex statistical models using MCMC techniques, is employed to compute the posterior predictive distributions. The mean values of the model parameters obtained via MCMC simulation are used to evaluate the prediction performance of the models. The performance of the proposed predictive models was examined according to two performance indices, i.e., coefficient of determination (R²) and mean square error. Overall, the results indicate that the proposed FOS model possesses satisfactory predictive performance.


1 Introduction

Because slope instability can have hazardous consequences, the evaluation of slope stability is one of the challenging issues in rock and geotechnical engineering. Evaluating slope stability requires the factor of safety (FOS), computed from an appropriate geotechnical model, as an index of stability. Many variables are involved in slope stability evaluation, and calculating the FOS requires physical data on the geologic materials and their shear-strength parameters, information on pore-water pressures, geometrical data, etc. Traditionally, the methods available for computing the FOS of a given slope include: limit equilibrium method (LEM) (Cheng et al. 2007a; Gu et al. 2015; Qi et al. 2016; Yu et al. 1998; Zhu et al. 2003), material point method (MPM) (Bhandari et al. 2016; Wang et al. 2016), finite element method (FEM) (Duncan 1996; Griffiths and Fenton 2004; Griffiths and Lane 1999; Luo et al. 2016), discontinuous deformation analysis for rock failure (DDARF) (Li et al. 2018), probabilistic stability analysis (Zhu and Yang 2018), multiple regression analysis (Marrapu and Jakka 2017), finite element limit analysis (Tschuchnigg et al. 2015), finite difference method (Sun et al. 2014), FEM-based shear strength reduction (Kaya 2017), discrete element method (Babanouri and Sarfarazi 2018), numerical limit analysis methods (Lim et al. 2015), boundary element method (Martel and Muller 2000), strength reduction FEM (Jiang et al. 2015) and numerical back analysis (Salmi and Hosseinzadeh 2015). Of these techniques, the two most extensively used for FOS analysis are numerical methods (NMs) and LEMs (Duncan 1996). Nevertheless, both LEMs and NMs are complex and need repetitive operations. Additionally, they sometimes require a well-fitted constitutive model and real mechanical parameters, which are difficult to determine precisely.
Recently, soft computing methods have been successfully applied to predict slope stability as a complex, non-linear and multivariate problem (Cheng et al. 2007b; Ercanoglu and Gokceoglu 2002; Fattahi 2017; Gao 2015; Gelisli et al. 2015; Hoang and Pham 2016; Kahatadeniya et al. 2009; Kang et al. 2016, 2017; Koopialipoor et al. 2019; Lu and Rosenbaum 2003; McCombie and Wilkinson 2002; Pradhan 2010; Qi and Tang 2018; Rukhaiyar et al. 2018; Saboya Jr et al. 2006; Sakellariou and Ferentinou 2005; Suman et al. 2016; Tun et al. 2016; Wang et al. 2005; Yang et al. 2004; Zolfaghari et al. 2005). Although soft computing techniques have been successfully employed for the prediction of slope stability, the main problem with most of these techniques is that they are black boxes. That is, they do not give a transparent model that shows the relationship between input and output parameters.

In recent years, Bayesian methods have attracted researchers (Cao et al. 2016; Chiu et al. 2012; Zhou et al. 2014) because of their ability to model and analyze complex problems that were previously difficult or impossible to solve. The growth in the use of Bayesian methods can be attributed mainly to two reasons. The first is the development of computer-based Markov chain Monte Carlo (MCMC) simulation methods, which allow Bayesian analysis to be performed flexibly and for very complex models (Herath 2018). The second is that traditional data analysis methods (classical or frequentist statistics), which rely on null hypothesis significance testing, have known problems (Cashen and Geiger 2004; Kruschke et al. 2012). In Bayesian statistics, parameters are viewed as unobserved realizations of random processes, or as random variables with probability distributions. That is, before data are obtained, a prior distribution is chosen to represent all available information regarding the possible values of the parameter. This information is then updated using the observed data and is represented by the posterior distribution, which is used to estimate parameter values and quantify uncertainty. Bayesian analysis is hence a more versatile estimation approach because it uses not only the available data but also existing knowledge about the model parameters (Herath 2018). This paper proposes a methodology based on the Bayesian MCMC method, using the free software package WinBUGS (Adoko et al. 2017; Fattahi and Zandy Ilghani 2019a, b; Lunn et al. 2000; Spiegelhalter et al. 2002), aimed at identifying the most appropriate models to predict the FOS among several selected candidate models. To validate the performance of the proposed models, they are applied to field data given in the open literature.

2 Methodology

2.1 Markov Chain Monte Carlo (MCMC) Simulation Approach

Typical statistical problems involve estimating a vector of parameters, θ, using the available data. The classical approach assumes that the parameters are fixed but have unknown values to be estimated. Classical maximum likelihood estimates generally provide a point estimate of the parameter of interest. The Bayesian approach assumes that the parameters themselves have some unknown distribution. The approach is based upon the idea that the experimenter begins with some prior beliefs about the system, and then updates these beliefs on the basis of observed data. Using Bayes’ Theorem, the posterior distribution of the parameters given the data, π(θ|data), has density proportional to the product of the likelihood of the data given the parameters, L(data|θ), and the prior distribution of the parameters, π(θ): π(θ|data) ∝ L(data|θ) × π(θ) (Gimenez et al. 2009).
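The proportionality π(θ|data) ∝ L(data|θ) × π(θ) can be illustrated with a minimal sketch that evaluates likelihood times prior on a grid of candidate θ values and normalises the result. Everything in it is an assumption made for illustration: the four data values are made up (they are not from the slope database), the likelihood is normal with a known standard deviation, and the prior is a wide normal distribution.

```python
import math


def log_normal_pdf(x, mean, sd):
    """Log density of a normal distribution evaluated at x."""
    return -0.5 * math.log(2.0 * math.pi * sd * sd) - 0.5 * ((x - mean) / sd) ** 2


def grid_posterior(data, grid, prior_mean, prior_sd, noise_sd):
    """Return normalised posterior weights for each candidate theta on the grid."""
    log_post = []
    for theta in grid:
        log_prior = log_normal_pdf(theta, prior_mean, prior_sd)
        log_lik = sum(log_normal_pdf(y, theta, noise_sd) for y in data)
        # Posterior is proportional to likelihood times prior (sum in log space).
        log_post.append(log_lik + log_prior)
    peak = max(log_post)  # subtract the maximum to avoid numerical underflow
    weights = [math.exp(lp - peak) for lp in log_post]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical observations, for illustration only.
data = [1.1, 0.9, 1.3, 1.0]
grid = [i / 100.0 for i in range(-300, 501)]
posterior = grid_posterior(data, grid, prior_mean=0.0, prior_sd=10.0, noise_sd=1.0)
posterior_mean = sum(t * p for t, p in zip(grid, posterior))
print(round(posterior_mean, 3))
```

With a wide prior the posterior mean sits close to the sample mean, pulled slightly toward the prior mean. Grid evaluation is only practical for one or two parameters, which is why sampling methods are needed for larger models.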

In practice, the posterior distribution is obtained through integration using MCMC algorithms. The high-dimensional integral associated with the posterior density is estimated by Monte Carlo integration, which consists of constructing a Markov chain whose stationary distribution is the posterior distribution of interest (Gimenez et al. 2009). Once the chain has converged, its realizations can be regarded as a dependent sample from this distribution. WinBUGS implements powerful ways of constructing these chains, adapting to a wide range of target (posterior) distributions and therefore allowing a large number of possible models to be fitted. Further details on Bayesian modeling using MCMC algorithms can be found in Brooks et al. (2011), and on Bayesian modeling using WinBUGS in Ntzoufras (2011).
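The idea of a chain whose stationary distribution is the target can be sketched with a random-walk Metropolis sampler, the symmetric-proposal special case of the Metropolis–Hastings algorithm. This is an illustrative sketch only, not the sampler WinBUGS selects internally; the target (a normal density with mean 2 and unit variance), the step size, the burn-in length and the function names are all assumptions.

```python
import math
import random


def metropolis(log_target, start, n_iter, step_sd, seed=0):
    """Random-walk Metropolis sampler for a one-dimensional target."""
    rng = random.Random(seed)
    current = start
    current_lp = log_target(current)
    chain = []
    for _ in range(n_iter):
        # Propose a move from a symmetric normal distribution around the current state.
        proposal = current + rng.gauss(0.0, step_sd)
        proposal_lp = log_target(proposal)
        # Accept with probability min(1, target(proposal) / target(current)).
        accept_prob = math.exp(min(0.0, proposal_lp - current_lp))
        if rng.random() < accept_prob:
            current, current_lp = proposal, proposal_lp
        chain.append(current)
    return chain


def log_target(theta):
    """Unnormalised log density of a normal distribution with mean 2 and sd 1."""
    return -0.5 * (theta - 2.0) ** 2


chain = metropolis(log_target, start=0.0, n_iter=20000, step_sd=1.0, seed=1)
kept = chain[2000:]  # discard an assumed burn-in period
sample_mean = sum(kept) / len(kept)
print(round(sample_mean, 2))
```

Only ratios of the target density are needed, so the normalising constant of the posterior never has to be computed. Successive draws are correlated, which is why the retained values form a dependent sample.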

2.2 MCMC Simulation Software

Numerous software packages, both open source and proprietary, can be used to carry out Bayesian analysis by MCMC simulation. The popular WinBUGS software is an interactive Windows program for Bayesian analysis of complex statistical models (Herath 2018). It is based on BUGS [Bayesian inference Using Gibbs Sampling (Chun 2008)], in which samples of each parameter are drawn from its conditional (posterior) distribution rather than from the marginal posterior distributions. Standard MCMC software such as WinBUGS uses the Gibbs sampling algorithm, the Metropolis–Hastings (MH) algorithm (Hastings 1970), or a hybrid of both. In addition, the reversible-jump MCMC algorithm is gaining acceptance. To overcome the inefficiencies of the Gibbs sampling and MH algorithms, ideas from physics have been used to develop the newer and more robust Hamiltonian Monte Carlo (HMC) algorithm, which can be used on its own for continuous parameters or combined with Gibbs sampling and the MH algorithm for discrete parameters (Herath 2018). This paper uses WinBUGS, which is currently freely available at https://www.mrc-bsu.cam.ac.uk/software/bugs/the-bugs-project-winbugs/, to derive the posterior distributions.
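Drawing each variable in turn from its conditional distribution, as Gibbs sampling does, can be sketched on a textbook target: a standard bivariate normal with correlation ρ, whose full conditionals are x | y ~ N(ρy, 1 − ρ²) and y | x ~ N(ρx, 1 − ρ²). The target, the value ρ = 0.8 and the function names are assumptions chosen for illustration; this is not one of the FOS models.

```python
import random


def gibbs_bivariate_normal(rho, n_iter, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    cond_sd = (1.0 - rho * rho) ** 0.5  # sd of each full conditional
    x, y = 0.0, 0.0
    samples = []
    for _ in range(n_iter):
        x = rng.gauss(rho * y, cond_sd)  # draw x from p(x | y)
        y = rng.gauss(rho * x, cond_sd)  # draw y from p(y | x), using the new x
        samples.append((x, y))
    return samples


def correlation(pairs):
    """Sample Pearson correlation of a list of (x, y) pairs."""
    n = len(pairs)
    mean_x = sum(p[0] for p in pairs) / n
    mean_y = sum(p[1] for p in pairs) / n
    cov = sum((px - mean_x) * (py - mean_y) for px, py in pairs)
    var_x = sum((px - mean_x) ** 2 for px, _ in pairs)
    var_y = sum((py - mean_y) ** 2 for _, py in pairs)
    return cov / (var_x * var_y) ** 0.5


samples = gibbs_bivariate_normal(rho=0.8, n_iter=20000, seed=1)
estimated_rho = correlation(samples[1000:])  # discard an assumed burn-in
print(round(estimated_rho, 2))
```

Although only conditional draws are made, the retained pairs approximate the joint distribution, so marginal summaries such as means or correlations can be read directly from the samples.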

3 The Features of the Database

The main scope of this work was to apply the methodology to the problem of slope stability estimation. To this end, datasets given in previous papers were used (Fattahi 2017; Madzic 1988; Sah et al. 1994). The selected parameters relate to the geometry and the geotechnical properties of each slope. More specifically, the parameters used for circular failure were slope angle (β), pore pressure ratio (ru), angle of internal friction (φ), height (H), cohesion (C) and unit weight (γ) (Fig. 1). The output consisted of a single parameter (FOS). The dataset comprised 62 case studies of slopes analyzed for the circular critical failure mechanism. Part of the dataset used in this study is presented in Table 1, and descriptive statistics of the full dataset are shown in Table 2.

4 Bayesian Models: Inference, Selection and Prediction

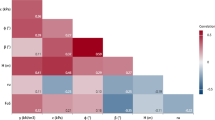

First, the database of 62 datasets was divided into two parts. The first part, representing about 75% of the total (i.e. 47 datasets), was used to establish the model, while the second part (the remaining 15 datasets) served for the model performance evaluation. Based on the training database, a Bayesian predictive model was proposed. A preliminary correlation analysis was performed to investigate the possible type of relationship between the FOS (dependent variable) and each of the independent variables (γ, C, φ, β, H and ru), in order to explore the potential candidate terms for developing the FOS correlation. The following candidates are used:

In this study, the unknown parameters of the candidate models are treated as random variables. As stated previously, the aim is to identify objectively the models that best fit the FOS datasets within a Bayesian framework, where the model parameters are inferred in WinBUGS using Bayesian MCMC methods. One of the essential tasks is therefore to sample values of the unknown parameters from their conditional posterior distribution given the observed stochastic nodes, after the model has been specified as a full joint distribution over all quantities, both parameters and observables.

5 Modeling and Results

After the models were specified in the WinBUGS language at the logical nodes, normal (or lognormal, or other) distributions were selected at the stochastic nodes for γ, C, φ, β, H and ru. Subsequently, the first group of datasets was loaded, the models were compiled, and the MCMC sampler was applied to compute the model parameters. A trial-and-error approach was used to identify the optimal settings of the modeling. For Model #2, the mean values of the unknown parameters a1, a2, …, a5 and b1, b2, …, b5 are 24.04, 10.32, −5.941, −1.974, 3.888, −2.058, 0.8093, −0.05221, −3.596 and 4.243, respectively. These are the most probable values for the model parameters to take for the predicted FOS to have maximum accuracy, since they correspond to the peaks of the posterior distributions plotted in Fig. 2. The summaries of the different models are provided in Table 3.

Because the models contain at most 20 parameters, it was feasible to check and monitor convergence for every parameter. If the trace plots fluctuate around the mode of the distribution and show no trend in the sample space, the model can be considered to have converged. Fig. 3 shows an example: the dynamic traces of the parameters of Model #2 indicate convergence.

The dynamic trace plots of the sample values versus iteration suggested that the simulation appears to have stabilized.
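Visual inspection of trace plots can be complemented by a numerical check. The sketch below computes a split-chain potential scale reduction factor (a variant of the Gelman–Rubin statistic) for a single chain by comparing its first and second halves; values close to 1 suggest the two halves sample the same distribution. This diagnostic is offered as an illustration and is not stated to have been used in the present study; the two synthetic chains are made up.

```python
import random
import statistics


def split_rhat(chain):
    """Split-chain potential scale reduction factor for a single chain."""
    half = len(chain) // 2
    parts = [chain[:half], chain[half:2 * half]]
    means = [statistics.fmean(p) for p in parts]
    within = statistics.fmean([statistics.variance(p) for p in parts])
    between = half * statistics.variance(means)
    # Pooled variance estimate combining within- and between-half variability.
    pooled = (half - 1) / half * within + between / half
    return (pooled / within) ** 0.5


rng = random.Random(0)
# A stationary chain: independent draws around a fixed level.
stationary = [rng.gauss(0.0, 1.0) for _ in range(2000)]
# A drifting chain: a steady upward trend, as in a trace that has not converged.
drifting = [i / 1000.0 + rng.gauss(0.0, 0.1) for i in range(2000)]

print(round(split_rhat(stationary), 3))
print(round(split_rhat(drifting), 3))
```

A trace that shows a trend produces a value well above 1, matching the visual criterion that a converged chain should not drift through the sample space.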

To verify the performance of the models, two statistical criteria, viz. mean squared error (MSE) and squared correlation coefficient (R²), were chosen as the measures of accuracy (Fattahi et al. 2019). Let \(t_{k}\) be the actual value and \(\hat{t}_{k}\) be the predicted value of the kth observation, and let n be the number of observations; then MSE and R² could be defined, respectively, as follows:
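A minimal sketch of the two indices, assuming the standard forms \(\mathrm{MSE} = \frac{1}{n}\sum_{k=1}^{n}(t_{k}-\hat{t}_{k})^{2}\) and \(R^{2} = 1 - \sum_{k=1}^{n}(t_{k}-\hat{t}_{k})^{2} / \sum_{k=1}^{n}(t_{k}-\bar{t})^{2}\), where \(\bar{t}\) is the mean of the actual values. The choice of this form of R² is an assumption (the squared Pearson correlation is another common convention), and the sample values are made up rather than taken from the slope database.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    n = len(actual)
    return sum((t - p) ** 2 for t, p in zip(actual, predicted)) / n


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((t - p) ** 2 for t, p in zip(actual, predicted))
    ss_tot = sum((t - mean_actual) ** 2 for t in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical actual and predicted FOS values, for illustration only.
actual_fos = [1.0, 2.0, 3.0, 4.0]
predicted_fos = [1.1, 1.9, 3.2, 3.8]

mse = mean_squared_error(actual_fos, predicted_fos)
r2 = r_squared(actual_fos, predicted_fos)
print(round(mse, 4), round(r2, 4))
```

A lower MSE and an R² closer to 1 both indicate better agreement between predicted and actual values, which is the basis on which candidate models can be ranked.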

To evaluate prediction performance, both the training and testing datasets were used to assess the models. The performance of the 20 models on the training and testing datasets is shown in Table 4 and Fig. 4.

In general, the results indicate that the proposed model (Model #2) can be used to predict the FOS. On both the training and testing datasets, Model #2 ranks as the best candidate and Model #20 as the worst candidate for predicting the FOS. The prediction model constructed in this research remains open to further development as more data become available.

6 Conclusions

Slope stability assessment is a critical research area in civil engineering. The disastrous consequences of slope collapse necessitate better tools for predicting its occurrence. In this paper, a methodology based on Bayesian inference was implemented in the WinBUGS software to identify the most appropriate model for estimating the FOS among several candidate models. The inputs of the predictive models were γ, C, φ, β, H and ru. Overall, the results suggest that the proposed FOS models possess satisfactory predictive performance. Based on the performance indices R² and MSE, Model #2 was the most adequate of the models considered. This study shows that the Bayesian MCMC method can be applied as a powerful tool for modeling some of the problems involved in rock and soil engineering.

References

Adoko AC, Gokceoglu C, Yagiz S (2017) Bayesian prediction of TBM penetration rate in rock mass. Eng Geol 226:245–256

Babanouri N, Sarfarazi V (2018) Numerical analysis of a complex slope instability: pseudo-wedge failure. Geomech Eng 15:669–676

Bhandari T, Hamad F, Moormann C, Sharma KG, Westrich B (2016) Numerical modelling of seismic slope failure using MPM. Comput Geotech 75:126–134. https://doi.org/10.1016/j.compgeo.2016.01.017

Brooks S, Gelman A, Jones G, Meng X-L (2011) Handbook of Markov chain Monte Carlo. CRC Press, Boca Raton

Cao Z-J, Wang Y, Li D-Q (2016) Site-specific characterization of soil properties using multiple measurements from different test procedures at different locations—a Bayesian sequential updating approach. Eng Geol 211:150–161

Cashen LH, Geiger SW (2004) Statistical power and the testing of null hypotheses: a review of contemporary management research and recommendations for future studies. Organ Res Methods 7:151–167

Cheng Y, Lansivaara T, Wei W (2007a) Two-dimensional slope stability analysis by limit equilibrium and strength reduction methods. Comput Geotech 34:137–150

Cheng Y, Li L, Chi S-C, Wei W (2007b) Particle swarm optimization algorithm for the location of the critical non-circular failure surface in two-dimensional slope stability analysis. Comput Geotech 34:92–103

Chiu C, Yan W, Yuen K-V (2012) Reliability analysis of soil–water characteristics curve and its application to slope stability analysis. Eng Geol 135:83–91

Chun YH (2008) Bayesian analysis of the sequential inspection plan via the Gibbs sampler. Oper Res 56:235–246

Duncan JM (1996) State of the art: limit equilibrium and finite-element analysis of slopes. J Geotech Eng 122:577–596

Ercanoglu M, Gokceoglu C (2002) Assessment of landslide susceptibility for a landslide-prone area (north of Yenice, NW Turkey) by fuzzy approach. Environ Geol 41:720–730

Fattahi H (2017) Prediction of slope stability using adaptive neuro-fuzzy inference system based on clustering methods. J Min Environ 8:163–177

Fattahi H, Zandy Ilghani N (2019a) Applying Bayesian models to forecast rock mass modulus. Geotech Geol Eng 37:4337–4349

Fattahi H, Zandy Ilghani N (2019b) Bayesian prediction of rotational torque to operate horizontal drilling. J Min Environ 10:507–515

Fattahi H, Varmazyari Z, Babanouri N (2019) Feasibility of Monte Carlo simulation for predicting deformation modulus of rock mass. Tunn Undergr Space Technol 89:151–156

Gao W (2015) Stability analysis of rock slope based on an abstraction ant colony clustering algorithm. Environ Earth Sci 73:7969–7982

Gelisli K, Kaya T, Babacan AE (2015) Assessing the factor of safety using an artificial neural network: case studies on landslides in Giresun, Turkey. Environ Earth Sci 73:8639–8646

Gimenez O et al (2009) WinBUGS for population ecologists: Bayesian modeling using Markov Chain Monte Carlo methods. In: Modeling demographic processes in marked populations. Springer, pp 883–915

Griffiths D, Fenton GA (2004) Probabilistic slope stability analysis by finite elements. J Geotech Geoenviron 130:507–518

Griffiths D, Lane P (1999) Slope stability analysis by finite elements. Geotechnique 49:387–403

Gu T, Wang J, Fu X, Liu Y (2015) GIS and limit equilibrium in the assessment of regional slope stability and mapping of landslide susceptibility. Bull Eng Geol Environ 74:1105–1115. https://doi.org/10.1007/s10064-014-0689-2

Hastings WK (1970) Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57:97–109

Herath HS (2018) Post-auditing and cost estimation applications: an illustration of MCMC simulation for Bayesian regression analysis. Eng Econ 300:1–33

Hoang N-D, Pham A-D (2016) Hybrid artificial intelligence approach based on metaheuristic and machine learning for slope stability assessment: a multinational data analysis. Expert Syst Appl 46:60–68

Jiang Q, Qi Z, Wei W, Zhou C (2015) Stability assessment of a high rock slope by strength reduction finite element method. Bull Eng Geol Environ 74:1153–1162. https://doi.org/10.1007/s10064-014-0698-1

Kahatadeniya KS, Nanakorn P, Neaupane KM (2009) Determination of the critical failure surface for slope stability analysis using ant colony optimization. Eng Geol 108:133–141

Kang F, Xu Q, Li J (2016) Slope reliability analysis using surrogate models via new support vector machines with swarm intelligence. Appl Math Model 40:6105–6120

Kang F, Xu B, Li J, Zhao S (2017) Slope stability evaluation using Gaussian processes with various covariance functions. Appl Soft Comput 60:387–396

Kaya A (2017) Geotechnical assessment of a slope stability problem in the Citlakkale residential area (Giresun, NE Turkey). Bull Eng Geol Environ 76:875–889. https://doi.org/10.1007/s10064-016-0896-0

Koopialipoor M, Armaghani DJ, Hedayat A, Marto A, Gordan B (2019) Applying various hybrid intelligent systems to evaluate and predict slope stability under static and dynamic conditions. Soft Comput 23:5913–5929

Kruschke JK, Aguinis H, Joo H (2012) The time has come: Bayesian methods for data analysis in the organizational sciences. Organ Res Methods 15:722–752

Li Y, Zhou H, Dong Z, Zhu W, Li S, Wang S (2018) Numerical investigations on stability evaluation of a jointed rock slope during excavation using an optimized DDARF method. Geomech Eng 14:271–281

Lim K, Li AJ, Lyamin AV (2015) Three-dimensional slope stability assessment of two-layered undrained clay. Comput Geotech 70:1–17. https://doi.org/10.1016/j.compgeo.2015.07.011

Lu P, Rosenbaum M (2003) Artificial neural networks and grey systems for the prediction of slope stability. Nat Hazards 30:383–398

Lunn DJ, Thomas A, Best N, Spiegelhalter D (2000) WinBUGS—a Bayesian modelling framework: concepts, structure, and extensibility. Stat Comput 10:325–337

Luo N, Bathurst RJ, Javankhoshdel S (2016) Probabilistic stability analysis of simple reinforced slopes by finite element method. Comput Geotech 77:45–55

Madzic E (1988) Stability of unstable final slope in deep open iron mine. Landslides 1:455–458

Marrapu BM, Jakka RS (2017) Assessment of slope stability using multiple regression analysis. Geomech Eng 13:237–254

Martel SJ, Muller JR (2000) A two-dimensional boundary element method for calculating elastic gravitational stresses in slopes. Pure Appl Geophys 157:989–1007

McCombie P, Wilkinson P (2002) The use of the simple genetic algorithm in finding the critical factor of safety in slope stability analysis. Comput Geotech 29:699–714

Ntzoufras I (2011) Bayesian modeling using WinBUGS, vol 698. Wiley, Hoboken

Pradhan B (2010) Application of an advanced fuzzy logic model for landslide susceptibility analysis. Int J Comput Intel Syst 3:370–381

Qi C, Tang X (2018) Slope stability prediction using integrated metaheuristic and machine learning approaches: a comparative study. Comput Ind Eng 118:112–122

Qi C, Wu J, Liu J, Kanungo DP (2016) Assessment of complex rock slope stability at Xiari, southwestern China. Bull Eng Geol Environ 75:537–550. https://doi.org/10.1007/s10064-015-0763-4

Rukhaiyar S, Alam M, Samadhiya N (2018) A PSO–ANN hybrid model for predicting factor of safety of slope. Int J Geotech Eng 12:556–566

Saboya F Jr, da Glória Alves M, Dias Pinto W (2006) Assessment of failure susceptibility of soil slopes using fuzzy logic. Eng Geol 86:211–224

Sah N, Sheorey P, Upadhyaya L (1994) Maximum likelihood estimation of slope stability. Int J Rock Mech Min Sci 31:47–53

Sakellariou M, Ferentinou M (2005) A study of slope stability prediction using neural networks. Geotech Geol Eng 23:419–445

Salmi EF, Hosseinzadeh S (2015) Slope stability assessment using both empirical and numerical methods: a case study. Bull Eng Geol Environ 74:13–25. https://doi.org/10.1007/s10064-013-0565-5

Spiegelhalter DJ, Best NG, Carlin BP, Van Der Linde A (2002) Bayesian measures of model complexity and fit. J R Stat Soc Ser B (Stat Methodol) 64:583–639

Suman S, Khan S, Das S, Chand S (2016) Slope stability analysis using artificial intelligence techniques. Nat Hazards 84:727–748

Sun S, Sun H, Wang Y, Wei J, Liu J, Kanungo DP (2014) Effect of the combination characteristics of rock structural plane on the stability of a rock-mass slope. Bull Eng Geol Environ 73:987–995. https://doi.org/10.1007/s10064-014-0593-9

Tschuchnigg F, Schweiger HF, Sloan SW (2015) Slope stability analysis by means of finite element limit analysis and finite element strength reduction techniques. Part II: back analyses of a case history. Comput Geotech 70:178–189. https://doi.org/10.1016/j.compgeo.2015.07.019

Tun YW, Pedroso DM, Scheuermann A, Williams DJ (2016) Probabilistic reliability analysis of multiple slopes with genetic algorithms. Comput Geotech 77:68–76. https://doi.org/10.1016/j.compgeo.2016.04.006

Wang H, Xu W, Xu R (2005) Slope stability evaluation using back propagation neural networks. Eng Geol 80:302–315

Wang B, Vardon PJ, Hicks MA (2016) Investigation of retrogressive and progressive slope failure mechanisms using the material point method. Comput Geotech 78:88–98. https://doi.org/10.1016/j.compgeo.2016.04.016

Yang C, Tham L, Feng X-T, Wang Y, Lee P (2004) Two-stepped evolutionary algorithm and its application to stability analysis of slopes. J Comput Civ Eng 18:145–153

Yu H, Salgado R, Sloan S, Kim J (1998) Limit analysis versus limit equilibrium for slope stability. J Geotech Geoenviron 124:1–11

Zhou W-H, Yuen K-V, Tan F (2014) Estimation of soil–water characteristic curve and relative permeability for granular soils with different initial dry densities. Eng Geol 179:1–9

Zhu J, Yang X (2018) Probabilistic stability analysis of rock slopes with cracks. Geomech Eng 16:655–667

Zhu D, Lee C, Jiang H (2003) Generalised framework of limit equilibrium methods for slope stability analysis. Geotechnique 53:377–395

Zolfaghari AR, Heath AC, McCombie PF (2005) Simple genetic algorithm search for critical non-circular failure surface in slope stability analysis. Comput Geotech 32:139–152

Cite this article

Fattahi, H., Zandy Ilghani, N. Slope Stability Analysis Using Bayesian Markov Chain Monte Carlo Method. Geotech Geol Eng 38, 2609–2618 (2020). https://doi.org/10.1007/s10706-019-01172-w

DOI: https://doi.org/10.1007/s10706-019-01172-w