Abstract

Probabilistic linguistic variables are a powerful type of qualitative fuzzy set that allows decision makers (DMs) to express a judgment through several linguistic variables, each with an associated probability. This paper studies group decision making (GDM) with normalized probabilistic linguistic preference relations (NPLPRs). To this end, an interactive algorithm based on acceptable multiplicative consistency is provided to derive common probability linguistic preference relations (CPLPRs) from PLPRs, by which a new concept of acceptable multiplicative consistency for NPLPRs is defined. When the multiplicative consistency of an NPLPR is unacceptable, models for deriving acceptably multiplicatively consistent NPLPRs are constructed. The paper then studies incomplete NPLPRs (InNPLPRs) and offers an interactive algorithm based on common probabilities and acceptable multiplicative consistency to determine missing judgments. Furthermore, a correlation coefficient between CPLPRs is provided, by which the weights of the DMs are ascertained. Meanwhile, a consensus index based on CPLPRs is defined. When the consensus does not reach the requirement, a model to increase the level of consensus is built that ensures that the adjusted LPRs meet both the multiplicative consistency and consensus requirements. Moreover, an interactive algorithm for GDM with NPLPRs is provided, which can address unacceptably multiplicatively consistent InNPLPRs. Finally, an example about the evaluation of green design schemes for new energy vehicles illustrates the application of the new algorithm, and a comparative analysis is conducted.


1 Introduction

Due to various subjective and objective factors, it is increasingly difficult for DMs to provide exact or fuzzy numerical judgments. In this situation, linguistic variables (LVs), proposed by Zadeh (1975), are a powerful tool for expressing the subjective judgments of DMs, such as “very good”, “very bad”, and “fair”. Since the original work of Zadeh (1975), various decision-making methods with linguistic information have been proposed. Combining the merits of LVs and preference relations (PRs), Herrera and Herrera-Viedma (2000) introduced LVs into PRs and presented linguistic preference relations (LPRs). They then studied the application of LPRs in decision making by means of a linguistic choice function and choice mechanism. Xu (2004a) first considered the consistency of LPRs and introduced an additive consistency concept for LPRs defined on the additive linguistic scale (ALS) T = {sα|α = − t, − t + 1, …, t − 1, t}. Alonso et al. (2008) offered an additive consistency concept for LPRs defined on the ALS S = {sα|α = 0, 1, …, 2t}. They then offered an interactive algorithm to determine missing LVs in unacceptable incomplete LPRs (InLPRs) and defined a distance-measure-based consensus index. Different from the additive consistency concepts for LPRs (Alonso et al., 2008; Xu, 2004a), Xia et al. (2014) proposed a multiplicative consistency concept for LPRs defined on the ALS S = {sα|α = 0, 1, …, 2t}. Similar to Alonso et al. (2008), Xia et al. (2014) offered an interactive algorithm to ascertain missing LVs in InLPRs. Furthermore, Alonso et al. (2009) studied GDM with InLPRs following the additive consistency concept of Alonso et al. (2008). Different from the above consistency-based research, Herrera et al. (1996) first studied the consensus of GDM with LPRs using a linguistic quantifier function, which ensures that the ranking results satisfy a given agreement level.
To measure the consensus level and to detect non-consensus judgments, the authors defined three consensus measures. Considering that different DMs may use different linguistic granularities to better express their qualitative judgments, Herrera-Viedma et al. (2005) discussed the consensus of GDM with multigranular LPRs using a proximity measure and a guidance advice system. To achieve these goals, they defined three similarity-based consensus levels and offered a rule for identifying non-consensus judgments. Meanwhile, Herrera-Viedma et al. (2005) gave four adjustment direction rules.

With the development of decision making with linguistic information, some researchers noted that LVs still have limitations in expressing the judgments of DMs. To address this issue, many extensions of LVs have been proposed (Meng et al., 2019; Rodriguez et al., 2011; Xu, 2004b). They are defined to express different kinds of qualitative judgments, such as uncertain qualitative judgments, preferred and non-preferred qualitative judgments, and hesitant qualitative judgments. Regarding hesitant fuzzy linguistic variables (Rodriguez et al., 2011), Pang et al. (2016) noted that this type of linguistic fuzzy set can only express hesitant qualitative judgments and cannot discriminate their probabilities. Thus, Pang et al. (2016) introduced the concept of probabilistic linguistic term sets (PLTSs), which are composed of several LVs, each with a probability that reflects the difference between the corresponding judgments. They then offered a probabilistic linguistic TOPSIS method based on a defined aggregation operator. Following the original work of Pang et al. (2016), several decision-making methods based on probabilistic linguistic matrices have been proposed, such as the probabilistic linguistic ORESTE method (Wu & Liao, 2018), the probabilistic linguistic MULTIMOORA method (Liu & Li, 2019), and the probabilistic linguistic ELECTRE III method (Liao et al., 2019).

In contrast to the above methods, Zhang et al. (2016) developed the first decision-making method with probabilistic linguistic preference relations (PLPRs), which is based on additive consistency analysis. To deal with incomplete PLPRs (InPLPRs), where the probabilities of some LVs in PLTSs are missing, Gao et al. (2019a) developed a decision-making method based on expected additive consistency. Different from the InPLPRs discussed by Gao et al. (2019a), Tang et al. (2020) studied decision making with InPLPRs whose LVs in PLTSs are incompletely known. To do this, they treated any unknown LV as the interval LV [s−t, st] for the ALS T = {sα|α = − t, − t + 1, …, t − 1, t}. They then transformed InPLPRs into interval fuzzy preference relations (IFPRs) and developed an additive-consistency-based decision-making method with InPLPRs. Besides these additive-consistency-based methods, Gao et al. (2019b) proposed a multiplicative consistency concept for PLPRs based on the scores of PLTSs. Using the relationship between interval judgments and priority weights (Tanino, 1984), Gao et al. (2019b) built a model for calculating the priority weight vector from score-based acceptably multiplicatively consistent PLPRs. It should be noted that this concept is a direct application of Xia et al.’s concept for LPRs (Xia et al., 2014), and it has the following drawbacks: (i) it causes information loss because it uses only one LPR; (ii) it cannot reflect the qualitative hesitancy of the DMs; (iii) a PLPR may be score-based multiplicatively consistent while none of the LPRs constructed from its LVs is multiplicatively consistent; (iv) the numerical priority weight vector cannot reflect the qualitative information. Song and Hu (2019) also studied decision making with PLPRs based on multiplicative consistency analysis, using a method similar to that of Gao et al. (2019b).
After reviewing previous research on decision making with PLPRs, we find the following limitations: (i) all previous consistency concepts cause information loss; (ii) none of them is sufficient to cope with InPLPRs. Besides these two issues, the multiplicative-consistency-based methods (Gao et al., 2019b; Song & Hu, 2019) have further drawbacks: (i) neither of them studies InPLPRs; (ii) their interactive methods for improving the multiplicative consistency level cannot ensure the minimum total adjustment; (iii) they cannot ascertain which LVs cause the inconsistency; (iv) neither of them considers GDM with PLPRs.

Since any PLPR can easily be converted into an NPLPR by normalizing the probability distributions of its PLTSs, this paper further studies GDM with NPLPRs and offers a new method. The main contributions are as follows: (i) a new interactive algorithm based on acceptable multiplicative consistency is provided to derive CPLPRs, and a new acceptably multiplicative consistency concept for NPLPRs is then defined; (ii) models for deriving acceptably multiplicatively consistent NPLPRs from unacceptable ones are constructed; (iii) an interactive algorithm based on common probabilities and acceptable multiplicative consistency is offered to determine missing judgments, which can fully cope with InNPLPRs; (iv) a correlation coefficient between CPLPRs is provided to obtain the weights of the DMs; (v) a distance-measure-based consensus index is given to measure the degree of agreement among individual opinions; (vi) a model is established to improve the level of consensus, which makes the adjusted LPRs meet the requirements of multiplicative consistency and consensus; (vii) an interactive algorithm for GDM with NPLPRs is provided that can address unacceptably multiplicatively consistent InNPLPRs; (viii) a numerical example and a comparative analysis are offered. The originality of this paper lies in the following: (i) this is the first (acceptable) multiplicative consistency concept for NPLPRs that fully considers the PLTSs offered by the DMs; (ii) this is the first multiplicative-consistency-based method that can cope with InNPLPRs; (iii) this is the first method for increasing the multiplicative consistency (and consensus) level of NPLPRs with the minimum total adjustment; (iv) this is the first method for GDM with InNPLPRs based on multiplicative consistency and consensus analysis.

The rest of this paper is organized as follows. Section 2 reviews basic knowledge about LPRs and NPLPRs. Section 3 gives an interactive algorithm to derive CPLPRs based on the multiplicative consistency of LPRs, and then defines an acceptably multiplicative consistency concept for NPLPRs based on CPLPRs. Section 4 discusses InNPLPRs and offers an interactive algorithm based on common probabilities and acceptable multiplicative consistency to determine missing judgments. Section 5 constructs models for obtaining acceptably multiplicatively consistent NPLPRs from unacceptable ones. Section 6 studies GDM with NPLPRs. First, a similarity measure between individual NPLPRs is defined, which is used to determine the weights of the DMs. Based on the comprehensive expected LPR, a new consensus index is defined. When the consensus level is lower than the given threshold, a model for improving the consensus degree is built that ensures the acceptable multiplicative consistency of the adjusted LPRs. Then, an interactive algorithm for GDM with NPLPRs is provided. Section 7 uses the evaluation of green design schemes for new energy vehicles to show the application of the new method. Conclusions are offered in Sect. 8.

2 Basic concepts

To show the pairwise qualitative judgments, Herrera and Herrera-Viedma (2000) introduced LPRs as follows:

Definition 2.1

(Herrera & Herrera-Viedma, 2000) The matrix \(R = (r_{ij} )_{n \times n}\) on the finite object set X = {xi| i = 1, 2, …, n} for the ALS S = {sα| α = 0, 1, …, 2t} is called an LPR if

$$ r_{ij} \oplus r_{ji} = s_{2t} ,\quad r_{ii} = s_{t} $$(1)

where \(r_{ij} \in S\) is the linguistic preference degree of the object \(x_{i}\) over \(x_{j}\) for all i, j = 1, 2, …, n.

Remark 2.1

Let \(s_{\alpha }\) and \(s_{\beta }\) be any two LVs in the ALS S = {sα| α = 0, 1, …, 2t}. Then, their operational laws are defined as (Xu, 2004a):

(i) \(s_{\alpha } \otimes s_{\beta } = s_{\alpha \beta }\);

(ii) \(s_{\alpha } { \oslash }s_{\beta } = s_{\alpha /\beta }\);

(iii) \(\lambda s_{\alpha } = s_{\lambda \alpha }\) and \(\left( {s_{\alpha } } \right)^{\lambda } = s_{{\alpha^{\lambda } }}\), \(\lambda \in [0,1]\).

For the convenience of following discussion, let I be a function defined on the ALS S = {sα|α = 0, 1, …, 2t}, where I: S \(\to\){0, 1, …, 2t}, namely, I(sα) = α for any sα \(\in\) S.

Similar to the multiplicative consistency concept for fuzzy preference relations (FPRs) (Tanino, 1984), Xia et al. (2014) offered the following multiplicative consistency concept:

Definition 2.2

(Xia et al., 2014) Let \(R = (r_{ij} )_{n \times n}\) be an LPR on the finite object set X = {xi| i = 1, 2, …, n} for the ALS S = {si|i = 0, 1, 2, …, 2t}. R is multiplicatively consistent if

$$ r_{ij} \otimes r_{jk} \otimes r_{ki} = r_{ik} \otimes r_{kj} \otimes r_{ji} $$(2)

for all \(i,k,j = 1,2,...,n\).

According to Definition 2.2, Xia et al. (2014) offered another equivalent condition to judge the multiplicative consistency of LPRs.

Property 2.1

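(Xia et al., 2014) Let \(R = (r_{ij} )_{n \times n}\) be an LPR on the finite object set X = {xi|i = 1, 2, …, n} for the ALS S = {si|i = 0, 1, 2, …, 2t}. It is multiplicatively consistent if and only if

$$ \frac{{I(r_{ij} )}}{{2t - I(r_{ij} )}} = \left( {\prod\nolimits_{k = 1,k \ne i,j}^{n} {\frac{{I(r_{ik} )}}{{2t - I(r_{ik} )}} \cdot \frac{{I(r_{kj} )}}{{2t - I(r_{kj} )}}} } \right)^{{\frac{1}{n - 2}}} $$(3)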

for all i, j = 1, 2, …, n such that \(i < j\).

For each triple of (i, k, j), by Eq. (3) we have

where \(\Delta = \max_{i,j = 1,i < j}^{n} \left( {\frac{{I(r_{ij} )}}{{2t - I(r_{ij} )}},\frac{{2t - I(r_{ij} )}}{{I(r_{ij} )}}} \right)\).

To make \(\frac{{I(r_{ij} )}}{{2t - I(r_{ij} )}}\) and \(\frac{{2t - I(r_{ij} )}}{{I(r_{ij} )}}\) finite and positive (so that their logarithms exist) on the ALS S = {si|i = 0, 1, 2, …, 2t}, we set \(I(r_{ij} ) = 0.001\) or \(2t - 0.001\) when \(I(r_{ij} ) = 0\) or \(2t\), respectively; namely, we replace \(r_{ij} = s_{0}\) or \(s_{2t}\) with \(r_{ij} = s_{0.001}\) or \(s_{2t - 0.001}\), where i, j = 1, 2, …, n such that \(i < j\).

To measure the multiplicative consistency of LPRs, we define the following multiplicative consistency index MCI(R) for any LPR \(R = (r_{ij} )_{n \times n}\) on the ALS S = {sα| α = 0, 1, …, 2t}:

From the definition of Δ, we know that \(\frac{1}{\Delta } \le \frac{{I(r_{ij} )}}{{2t - I(r_{ij} )}},\frac{{2t - I(r_{ij} )}}{{I(r_{ij} )}} \le \Delta\) for all i, j = 1, 2, …, n such that \(i < j\). Therefore, \(- 1 \le \log_{\Delta } \left( {\frac{{I(r_{ij} )}}{{2t - I(r_{ij} )}}} \right),\log_{\Delta } \left( {\frac{{2t - I(r_{ij} )}}{{I(r_{ij} )}}} \right) \le 1\) for all i, j = 1, 2, …, n such that \(i < j\).

Because \(- 3 \le \log_{\Delta } \left( {\frac{{I(r_{ij} )}}{{2t - I(r_{ij} )}}} \right) - \frac{1}{n - 2}\left( {\sum\nolimits_{k = 1,k \ne i,j}^{n} {\left( {\log_{\Delta } \left( {\frac{{I(r_{ik} )}}{{2t - I(r_{ik} )}}} \right) + \log_{\Delta } \left( {\frac{{I(r_{kj} )}}{{2t - I(r_{kj} )}}} \right)} \right)} } \right) \le 3\) (the first logarithm lies in [−1, 1] and the averaged sum lies in [−2, 2]), and there are \(\frac{n(n - 1)}{2}\) terms in Eq. (5), we know that \(0 \le MCI(R) \le 1\). Furthermore, the larger the value of MCI(R), the higher the multiplicative consistency level. In particular, when \(MCI(R) = 1\), we have

for each pair (i, j) such that \(i < j\), from which one can conclude (by Property 2.1) that the LPR R is completely multiplicatively consistent.
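The index MCI(R) can be illustrated with a short Python sketch. Because the display defining MCI(R) is not reproduced in this text, the sketch assumes the averaged absolute-deviation form \(MCI(R) = 1 - \frac{2}{3n(n - 1)}\sum\nolimits_{i < j} |X_{ij}|\), where \(X_{ij}\) denotes the bounded expression above; this choice agrees with the bound of 3 and the \(\frac{n(n - 1)}{2}\) terms, but it is our assumption and not necessarily the authors' exact formula. The function name `mci` and the input convention (`I[i][j]` holds \(I(r_{ij})\)) are hypothetical.

```python
import math


def mci(I, t):
    """Multiplicative consistency index of an LPR (hypothetical reconstruction).

    I[i][j] holds the subscript I(r_ij) on S = {s_0, ..., s_2t}; n >= 3.
    Assumed form: MCI = 1 - 2 / (3 n (n - 1)) * sum_{i<j} |X_ij|.
    """
    n = len(I)
    assert n >= 3, "the averaged term needs at least three objects"
    two_t = 2 * t

    def ratio(x):
        # s_0 -> s_0.001 and s_2t -> s_(2t-0.001), so the ratio is finite and positive
        x = min(max(x, 0.001), two_t - 0.001)
        return x / (two_t - x)

    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    delta = max(max(ratio(I[i][j]), 1 / ratio(I[i][j])) for i, j in pairs)
    if math.isclose(delta, 1.0):
        return 1.0  # every ratio equals 1 (all entries s_t): perfectly consistent

    def log_d(x):
        return math.log(ratio(x), delta)

    total = 0.0
    for i, j in pairs:
        others = [k for k in range(n) if k not in (i, j)]
        avg = sum(log_d(I[i][k]) + log_d(I[k][j]) for k in others) / (n - 2)
        total += abs(log_d(I[i][j]) - avg)  # |X_ij| <= 3
    return 1 - 2 * total / (3 * n * (n - 1))


# A multiplicatively consistent LPR and a cyclic, inconsistent LPR on S = {s_0, ..., s_8}
print(mci([[4, 4, 2], [4, 4, 2], [6, 6, 4]], 4))
print(mci([[4, 6, 2], [2, 4, 6], [6, 2, 4]], 4))
```

Under this assumed form, the first matrix scores (numerically) 1 and the cyclic one scores 0, the two ends of the range.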

Unlike LPRs, which can only express exact qualitative judgments of the DMs, the PLTSs presented by Pang et al. (2016) express hesitant qualitative judgments and endow each LV with a probability to discriminate between them.

Definition 2.3

(Pang et al., 2016) Let S = {si|i = 0, 1, …, 2t} be an ALS. A PLTS L(p) is denoted as L(p) = {Ll(pl)| Ll \(\in\) S, pl ≥ 0, l = 1, 2, …, m, \(\sum\nolimits_{l = 1}^{m} {p_{l} }\) ≤ 1}, where Ll(pl) is the LV Ll with the probability pl, and m is the number of LVs in L(p). When \(\sum\nolimits_{l = 1}^{m} {p_{l} }\) = 1, L(p) is called a normalized PLTS (NPLTS).

Based on the concept of PLTSs, Zhang et al. (2016) introduced PLTSs into preference relations and defined PLPRs.

Definition 2.4

(Zhang et al., 2016) A PLPR B on the object set X = {x1, x2, …, xn} for the ALS S = {si|i = 0, 1, …, 2t} is denoted as: B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\), where Lij(p) = {\(L_{ij,l}\)(\(p_{ij, l}\))|\(L_{ij,l}\) ∈ S, \(p_{ij, l}\) ≥ 0, \(l\) = 1, 2, …, \(m_{ij}\), \(\sum\nolimits_{l = 1}^{{m_{ij} }} {p_{ij, l} }\) ≤ 1} is a PLTS denoting the preference judgment of the object xi over xj, and \(m_{ij}\) is the number of LVs in Lij(p). Elements in B have the following properties:

where l = 1, 2, …, \(m_{ij}\) for all i, j = 1, 2, …, n with i ≤ j.

Any PLTS L(p) = {Ll(pl)| Ll \(\in\) S, pl ≥ 0, l = 1, 2, …, m, \(\sum\nolimits_{l = 1}^{m} {p_{l} }\) ≤ 1} with a positive total probability can easily be converted into an NPLTS by normalizing its probability distribution, namely, L(p) = {Ll(pl/\(\sum\nolimits_{l = 1}^{m} {p_{l} }\))| Ll \(\in\) S, pl/\(\sum\nolimits_{l = 1}^{m} {p_{l} }\) ≥ 0, l = 1, 2, …, m}. Therefore, this paper restricts attention to normalized PLPRs (NPLPRs), whose elements are NPLTSs. Notably, NPLPRs are equivalent to distribution linguistic preference relations, which were first introduced by Zhang et al. (2014).
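The normalization step can be sketched in a few lines of Python. The sketch assumes, for illustration only, that a PLTS is stored as a dictionary from the subscript α of each LV \(s_{\alpha}\) to its probability; `Fraction` keeps the arithmetic exact, and the function name `normalize_plts` is hypothetical.

```python
from fractions import Fraction


def normalize_plts(plts):
    """Return the NPLTS of a PLTS given as {alpha: p_alpha} (alpha = subscript of s_alpha)."""
    if any(p < 0 for p in plts.values()):
        raise ValueError("probabilities must be non-negative")
    total = sum(plts.values())
    if not 0 < total <= 1:
        raise ValueError("total probability must lie in (0, 1]")
    # Divide every probability by the total so that the new probabilities sum to 1
    return {alpha: p / total for alpha, p in plts.items()}


# {s_3(0.3), s_5(0.3)} has total probability 0.6 and normalizes to {s_3(0.5), s_5(0.5)}
print(normalize_plts({3: Fraction(3, 10), 5: Fraction(3, 10)}))
```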

Considering the multiplicative consistency of NPLPRs, Gao et al. (2019b) introduced the following score based multiplicative consistency concept:

Definition 2.5

(Gao et al., 2019b) Let B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\) be an NPLPR on the object set X = {x1, x2, …, xn} for the ALS S = {si|i = 0, 1, …, 2t}. It is multiplicatively consistent if

for all i, k, j = 1, 2, …, n such that i \( \ne \) k \(\ne \) j, where E(Lij(p)) = \( \sum\nolimits_{l = 1}^{{m_{ij} }} {L_{ij,l} } p_{ij,l}\) is the score of the PLTS Lij(p) for all i, j = 1, 2, …, n.
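The score \(E(L_{ij}(p))\) is the LV whose subscript is the probability-weighted sum of the subscripts in the NPLTS. A small sketch, using the same hypothetical dictionary representation {α: p} as an illustrative convention:

```python
from fractions import Fraction


def score_index(nplts):
    """Subscript of E(L(p)) = sum_l L_l p_l for an NPLTS stored as {alpha: p}."""
    return sum(alpha * p for alpha, p in nplts.items())


# {s_3(0.5), s_5(0.5)} has score s_4; {s_1(2/3), s_4(1/3)} has score s_2
print(score_index({3: Fraction(1, 2), 5: Fraction(1, 2)}))
print(score_index({1: Fraction(2, 3), 4: Fraction(1, 3)}))
```

In the first case the score s4 is not one of the LVs in the set, and the spread between s3 and s5 disappears from the result.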

Remark 2.2

From Definition 2.5, one can see that E(Lij(p)) is an LV for all i, j = 1, 2, …, n. This means that Definition 2.5 analyzes the multiplicative consistency of PLPRs through a single score-based LPR. This process cannot reflect the hesitancy of the DMs and inevitably leads to information loss. Consider the following NPLPR

which is defined on the object set X = {x1, x2, x3} for the ALS S = {si|i = 0, 1, …, 8}.

According to Eq. (7), we derive the score-based LPR \(E(R) = \left( {\begin{array}{*{20}c} {s_{4} } & {s_{4} } & {s_{2} } \\ {s_{4} } & {s_{4} } & {s_{2} } \\ {s_{6} } & {s_{6} } & {s_{4} } \\ \end{array} } \right)\). One can check that each LV in \(E(R)\) is derived from one LV in the corresponding NPLTS. According to Definition 2.5, the NPLPR B is multiplicatively consistent because E(R) is multiplicatively consistent. However, this concept neither considers the LVs in the sets {s3, s5} and {s1, s4} derived from the NPLTSs L12(p) and L13(p) nor considers any probability information. In particular, it cannot show the qualitative hesitancy of the DMs. On the other hand, an NPLPR may be score-based multiplicatively consistent while none of the LPRs derived from it is multiplicatively consistent, as Example 2.1 shows. This further indicates that Definition 2.5 is unsuitable for defining the multiplicative consistency of NPLPRs.
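Checking multiplicative consistency of a score-based LPR is a finite test over triples. The sketch below assumes Definition 2.2 is the triple-product condition \(I(r_{ij})I(r_{jk})I(r_{ki}) = I(r_{ik})I(r_{kj})I(r_{ji})\) for all i, j, k (our reading of the condition of Xia et al., 2014) and uses `Fraction` for exact arithmetic on the fractional subscripts of E(R) in Example 2.1. The function name `is_mult_consistent` is hypothetical.

```python
from fractions import Fraction as F
from itertools import permutations


def is_mult_consistent(I):
    """Triple-product test on an LPR given by its subscripts I[i][j] = I(r_ij)."""
    n = len(I)
    # Triples with a repeated index satisfy the condition trivially, so only
    # ordered triples of distinct indices are checked.
    return all(I[i][j] * I[j][k] * I[k][i] == I[i][k] * I[k][j] * I[j][i]
               for i, j, k in permutations(range(n), 3))


E_remark = [[4, 4, 2], [4, 4, 2], [6, 6, 4]]          # E(R) in Remark 2.2
E_ex21 = [[F(4), F(8, 3), F(16, 3)],
          [F(16, 3), F(4), F(32, 5)],
          [F(8, 3), F(8, 5), F(4)]]                   # E(R) in Example 2.1
cyclic = [[4, 6, 2], [2, 4, 6], [6, 2, 4]]            # an inconsistent LPR for contrast
print(is_mult_consistent(E_remark), is_mult_consistent(E_ex21), is_mult_consistent(cyclic))
```

Both score-based LPRs pass, which is exactly why Definition 2.5 declares the underlying NPLPRs consistent even though it never inspects the individual LVs.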

Example 2.1

Let X = {x1, x2, x3} be the object set and S = {si| i = 0, 1, …, 8} be the ALS. The NPLPR B on X for the ALS S is defined as:

According to Eq. (7), we derive the score-based LPR \(E(R) = \left( {\begin{array}{*{20}l} {s_{4} } \hfill & {s_{8/3} } \hfill & {s_{16/3} } \hfill \\ {s_{16/3} } \hfill & {s_{4} } \hfill & {s_{32/5} } \hfill \\ {s_{8/3} } \hfill & {s_{8/5} } \hfill & {s_{4} } \hfill \\ \end{array} } \right)\), which is multiplicatively consistent according to Definition 2.2. Following Definition 2.5, we conclude that the NPLPR B is multiplicatively consistent. However, one can check that none of the LPRs directly obtained from the LVs in the NPLPR B is multiplicatively consistent, where

Following Tanino’s equivalent multiplicative consistency concept for FPRs, Gao et al. (2019b) further offered the following multiplicative consistency concept, which is then used to calculate the priority weight vector.

Definition 2.6

(Gao et al., 2019b) Let B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\) be an NPLPR on the object set X = {x1, x2, …, xn} for the ALS S = {si|i = 0, 1, …, 2t}. It is multiplicatively consistent if

$$ E(L_{ij} (p)) = 2t\frac{{w_{i} }}{{w_{i} + w_{j} }} $$(8)

for all i, j = 1, 2, …, n, where w = (w1, w2, …, wn) is the priority weight vector such that \(\sum\nolimits_{i = 1}^{n} {w_{i} } = 1\) and wi ≥ 0 for all i = 1, 2, …, n.

Equation (8) is incorrect because E(Lij(p)) is an LV while \(2t\frac{{w_{i} }}{{w_{i} + w_{j} }}\) is a numerical value; it should read I(E(Lij(p))) = \(2t\frac{{w_{i} }}{{w_{i} + w_{j} }}\). In this case, Definitions 2.5 and 2.6 are equivalent: an NPLPR is multiplicatively consistent under Definition 2.5 if and only if it is multiplicatively consistent under Definition 2.6. Therefore, Definition 2.6 has the same issues as those analyzed above. Furthermore, both Gao et al. (2019b) and Song and Hu (2019) employ Eq. (8) to calculate a numerical priority weight vector, which cannot reflect the qualitative judgments of the DMs.
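Under the corrected relation I(E(Lij(p))) = \(2t\frac{{w_{i} }}{{w_{i} + w_{j} }}\), one has \(\frac{w_{i}}{w_{j}} = \frac{I(E(L_{ij}(p)))}{2t - I(E(L_{ij}(p)))}\). For an exactly consistent score-based LPR, the normalized row geometric means of these ratios recover w. The sketch below uses that standard technique as an illustration; it is not necessarily the model of Gao et al. (2019b), it assumes \(0 < I(E(L_{ij}(p))) < 2t\) for all entries, and the function name is hypothetical.

```python
import math


def weights_from_consistent_lpr(I, t):
    """Priority weights w with I[i][j] = 2t w_i / (w_i + w_j), for a consistent LPR.

    Assumes 0 < I[i][j] < 2t so every ratio w_i / w_j is finite and positive.
    """
    n = len(I)
    ratios = [[I[i][j] / (2 * t - I[i][j]) for j in range(n)] for i in range(n)]
    gm = [math.prod(row) ** (1 / n) for row in ratios]   # row geometric means
    total = sum(gm)
    return [g / total for g in gm]


E_ex21 = [[4, 8 / 3, 16 / 3], [16 / 3, 4, 32 / 5], [8 / 3, 8 / 5, 4]]  # Example 2.1, t = 4
w = weights_from_consistent_lpr(E_ex21, 4)
print([round(x, 4) for x in w])  # approximately 2/7, 4/7, 1/7
```

The result is a purely numerical vector, which illustrates the point above: the weights retain none of the linguistic information in the NPLPR.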

3 Multiplicative consistency analysis of NPLPRs

The above analysis shows that previous multiplicative consistency concepts are insufficient for defining multiplicatively consistent NPLPRs, and a more reasonable and natural concept is needed. Therefore, this section further discusses the multiplicative consistency of NPLPRs and offers a new multiplicative consistency concept based on CPLPRs, which fully considers the NPLTSs offered by the DMs.

For any given NPLPR B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\), its corresponding linguistic hesitant preference relation (LHPR) (Zhu & Xu, 2014) is defined as L = \(\left( {L_{ij} } \right)_{n \times n}\), where Lij(p) = {\(L_{ij,l}\)(\(p_{ij, l}\))| \(L_{ij,l}\) \(\in\) S, \(p_{ij, l}\) ≥ 0, \(l\) = 1, 2, …, \(m_{ij}\), \(\sum\nolimits_{l = 1}^{{m_{ij} }} {p_{ij, l} } \) = 1} and Lij = {\(L_{ij,l}\)|\(L_{ij,l}\) \(\in\) S, \(l\) = 1, 2, …, \(m_{ij}\)} for all i, j = 1, 2, …, n. Following Tang et al. (2020), any LPR \(R = (r_{ij} )_{n \times n}\) obtained from the LHPR L = \(\left( {L_{ij} } \right)_{n \times n}\) can be expressed as:

$$ r_{ij} = \prod\nolimits_{l = 1}^{{m_{ij} }} {\left( {L_{ij,l} } \right)^{{\chi_{ij,l} }} } $$(9)

where \(\chi_{ij,l} = \left\{ {\begin{array}{*{20}l} 1 \hfill & {{\text{if}}\;{\text{the}}\;{\text{LV}}\;L_{ij,l} \;{\text{is}}\;{\text{chosen}}} \hfill \\ 0 \hfill & {{\text{otherwise}}} \hfill \\ \end{array} } \right.\) such that \(\sum\nolimits_{l = 1}^{{m_{ij} }} {\chi_{ij,l} } = 1\) and \(\chi_{ij,l} = \chi_{{ji,m_{ij} + 1 - l}}\) for all i, j = 1, 2, …, n and all l = 1, 2, …, mij.
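Under the operational laws of Remark 2.1, ⊗ multiplies subscripts and \((s_{\alpha})^{\lambda} = s_{\alpha^{\lambda}}\), so \((s_{\alpha})^{0} = s_{1}\) is the identity of ⊗; hence the product \(\prod\nolimits_{l} (L_{ij,l})^{\chi_{ij,l}}\) with exactly one \(\chi_{ij,l} = 1\) returns the chosen LV itself. The Python sketch below checks this on subscripts. The helper `reciprocal_indicators` and the example sets are hypothetical; the helper assumes, in line with the constraint \(\chi_{ij,l} = \chi_{ji,m_{ij}+1-l}\), that \(L_{ji}\) lists the reciprocal LVs of \(L_{ij}\) in reverse order.

```python
import math


def select_lv(subscripts, chi):
    """Subscript of prod_l (s_{a_l})^{chi_l}, using s_a (x) s_b = s_{ab} and (s_a)^c = s_{a^c}."""
    if sorted(chi) != [0] * (len(chi) - 1) + [1]:
        raise ValueError("chi must be a 0-1 vector with exactly one 1")
    # (s_a)^0 = s_1, the identity of (x); (s_a)^1 = s_a. Python's 0 ** 0 == 1 matches this.
    return math.prod(a ** c for a, c in zip(subscripts, chi))


def reciprocal_indicators(chi):
    """chi_ji obtained from chi_ji,(m+1-l) = chi_ij,l (assumes L_ji is stored in reverse order)."""
    return list(reversed(chi))


L_ij = [2, 5, 7]                          # hypothetical L_ij = {s_2, s_5, s_7} on S = {s_0, ..., s_8}
L_ji = [8 - a for a in reversed(L_ij)]    # reciprocal LVs in reverse order: {s_1, s_3, s_6}
chi = [1, 0, 0]
print(select_lv(L_ij, chi), select_lv(L_ji, reciprocal_indicators(chi)))
```

Choosing s2 for the pair (i, j) forces s6 for (j, i), so the selected subscripts sum to 2t = 8 as reciprocity requires.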

When the LPR \(R = (r_{ij} )_{n \times n}\) is acceptably multiplicatively consistent, we have

$$ MCI(R) \ge MCI^{*} $$(10)

where MCI* is the given multiplicative consistency threshold.

Substituting Eq. (9) into Eq. (10), we obtain

where \(\Delta = \max_{i,j = 1,i < j}^{n} \left( {\sum\nolimits_{l = 1}^{{m_{ij} }} {\chi_{ij,l} } \frac{{I(L_{ij,l} )}}{{2t - I(L_{ij,l} )}},\sum\nolimits_{l = 1}^{{m_{ij} }} {\chi_{ij,l} } \frac{{2t - I(L_{ij,l} )}}{{I(L_{ij,l} )}}} \right)\).
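Eq. (11) is a condition on the 0–1 selection χ. For very small LHPRs, one can simply enumerate every selection instead of solving a mixed 0–1 program. The Python sketch below does this as a stand-in for model (M-1), not as the authors' method: it assumes the MCI form \(MCI(R) = 1 - \frac{2}{3n(n - 1)}\sum\nolimits_{i < j} |X_{ij}|\) (the formula itself is not reproduced in this text), fills the lower triangle by \(I(r_{ji}) = 2t - I(r_{ij})\), and returns the selection with the largest MCI together with whether it meets MCI*. The functions `mci` and `best_selection` and the example LHPR are hypothetical.

```python
import math
from itertools import product


def mci(I, t):
    """Assumed index 1 - 2/(3n(n-1)) * sum_{i<j} |X_ij| on subscripts I[i][j] (n >= 3)."""
    n, two_t = len(I), 2 * t

    def ratio(x):
        x = min(max(x, 0.001), two_t - 0.001)  # replace s_0 / s_2t as in the text
        return x / (two_t - x)

    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    delta = max(max(ratio(I[i][j]), 1 / ratio(I[i][j])) for i, j in pairs)
    if math.isclose(delta, 1.0):
        return 1.0

    def lg(x):
        return math.log(ratio(x), delta)

    total = 0.0
    for i, j in pairs:
        ks = [k for k in range(n) if k not in (i, j)]
        total += abs(lg(I[i][j]) - sum(lg(I[i][k]) + lg(I[k][j]) for k in ks) / (n - 2))
    return 1 - 2 * total / (3 * n * (n - 1))


def best_selection(lhpr, n, t, mci_star):
    """Try every choice of one LV per pair (i < j); keep the one with the largest MCI.

    lhpr: {(i, j): [candidate subscripts of L_ij]} for i < j.
    Returns (selection, MCI, whether MCI >= mci_star).
    """
    pairs = sorted(lhpr)
    best_sel, best_val = None, -math.inf
    for choice in product(*(lhpr[pair] for pair in pairs)):
        I = [[t] * n for _ in range(n)]            # r_ii = s_t
        for (i, j), a in zip(pairs, choice):
            I[i][j], I[j][i] = a, 2 * t - a        # reciprocity: I(r_ji) = 2t - I(r_ij)
        value = mci(I, t)
        if value > best_val:
            best_sel, best_val = dict(zip(pairs, choice)), value
    return best_sel, best_val, best_val >= mci_star


lhpr = {(0, 1): [3, 4, 5], (0, 2): [2, 4], (1, 2): [2, 6]}   # hypothetical LHPR, t = 4
print(best_selection(lhpr, 3, 4, 0.95))
```

The search grows as the product of the set sizes, so it only serves to illustrate what the 0–1 model optimizes on toy inputs.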

Now, we offer an interactive algorithm based on acceptable multiplicative consistency to derive all CPLPRs from the given NPLPR B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\).

3.1 Algorithm I. The procedure of deriving CPLPRs from NPLPRs


Step 1: Let g = 1, and \(R^{g} = (r_{ij}^{g} )_{n \times n}\) be the LPR derived from the LHPR L = \(\left( {L_{ij} } \right)_{n \times n}\) for the NPLPR B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\), where \(r_{ij}^{g} = \prod\nolimits_{l = 1}^{{m_{ij} }} {\left( {L_{ij,l} } \right)^{{\chi_{ij,l} }} }\) for all i, j = 1, 2, …, n and \(\chi_{ij,l}\) is a 0–1 indicator variable as shown in Eq. (9). Let

$$ \begin{aligned} & \sum\nolimits_{l = 1}^{{m_{ij} }} {\chi_{ij,l} } \log_{\Delta } \left( {\frac{{I(L_{ij,l} )}}{{2t - I(L_{ij,l} )}}} \right) \\ & \quad - \frac{1}{n - 2}\left( {\sum\nolimits_{k = 1,k \ne i,j}^{n} {\left( {\sum\nolimits_{l = 1}^{{m_{ik} }} {\chi_{ik,l} } \log_{\Delta } \left( {\frac{{I(L_{ik,l} )}}{{2t - I(L_{ik,l} )}}} \right) + \sum\nolimits_{l = 1}^{{m_{kj} }} {\chi_{kj,l} } \log_{\Delta } \left( {\frac{{I(L_{kj,l} )}}{{2t - I(L_{kj,l} )}}} \right)} \right)} } \right) - \varepsilon_{ij}^{g, + } + \varepsilon_{ij}^{g, - } = 0 \\ \end{aligned} $$(12)

for each pair (i, j) such that i < j, where \(\Delta\) is given in Eq. (11), and \(\varepsilon_{ij}^{g, + }\) and \(\varepsilon_{ij}^{g, - }\) are deviation variables such that \(\varepsilon_{ij}^{g, + } \varepsilon_{ij}^{g, - }\) = 0 and \(\varepsilon_{ij}^{g, + }\), \(\varepsilon_{ij}^{g, - }\) ≥ 0.

To determine whether the LPR \(R^{g}\) is acceptably multiplicatively consistent, we build the following model based on Eqs. (11) and (12):

where δg is the consistency deviation value, the first constraint is obtained from Eq. (11), the second constraint is Eq. (12), and the third to eighth constraints ensure \(\Delta = \max_{i,j = 1,i < j}^{n} \left( {\sum\nolimits_{l = 1}^{{m_{ij} }} {\chi_{ij,l} } \frac{{I(L_{ij,l} )}}{{2t - I(L_{ij,l} )}},\sum\nolimits_{l = 1}^{{m_{ij} }} {\chi_{ij,l} } \frac{{2t - I(L_{ij,l} )}}{{I(L_{ij,l} )}}} \right)\).

Solving model (M-1), we get the optimal 0–1 indicator variables \(\chi_{ij,l}^{*}\) for all i, j = 1, 2, …, n and all l = 1, 2, …, mij. For each pair of (i, j), without loss of generality, let \(\chi_{{ij,l_{g} }}^{*} = 1\). Then, we obtain the LPR \(R^{g} = (r_{ij}^{g} )_{n \times n}\), where \(r_{ij}^{g} = L_{{ij,l_{g} }}\) for all i, j = 1, 2, …, n. When \(\phi^{*g}\) = 0, we know that \(R^{g}\) is acceptably multiplicatively consistent.


Step 2: Determine the common probability pg of \(R^{g}\), where

namely, \(p^{g}\) equals the minimum of the probabilities of all selected LVs \(L_{{ij,l_{g} }}\) in B. Using the common probability \(p^{g}\), we derive the CPLPR \(R^{g} (p^{g} ) = \left( {r_{ij}^{g} (p^{g} )} \right)_{n \times n}\).

Removing the CPLPR \(R^{g} (p^{g} )\) from the NPLPR B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\) yields the PLPR \(B^{ - g} = \left( {L_{ij}^{ - g} (p^{ - g} )} \right)_{n \times n}\), where

for all i, j = 1, 2, …, n.

Construct the corresponding LHPR \(L^{ - g} = (L_{ij}^{ - g} )_{n \times n}\) for the PLPR \(B^{ - g} = \left( {L_{ij}^{ - g} (p^{ - g} )} \right)_{n \times n}\), where

$$ L_{ij}^{ - g} = \begin{cases} \{ L_{ij,l} \mid l = 1,2, \ldots ,m_{ij} \} , & p_{{ij,l_{g} }} > p^{g} \\ \{ L_{ij,l} \mid l = 1,2, \ldots ,m_{ij} ,\ l \ne l_{g} \} , & p_{{ij,l_{g} }} = p^{g} \end{cases} $$

for all i, j = 1, 2, …, n.


Step 3: Let \(R^{g + 1} = (r_{ij}^{g + 1} )_{n \times n}\) be the LPR derived from the LHPR \(L^{ - g} = (L_{ij}^{ - g} )_{n \times n}\), where

$$ r_{ij}^{g + 1} = \begin{cases} \prod\nolimits_{l = 1}^{m_{ij}} \left( L_{ij,l} \right)^{\chi_{ij,l}} , & p_{{ij,l_{g} }} > p^{g} \\ \prod\nolimits_{l = 1,l \ne l_{g}}^{m_{ij}} \left( L_{ij,l} \right)^{\chi_{ij,l}} , & p_{{ij,l_{g} }} = p^{g} \end{cases} $$

for all i, j = 1, 2, …, n. Here \(\chi_{ij,l}\) equals 1 if the LV \(L_{ij,l}\) is chosen and 0 otherwise, such that \(\sum\nolimits_{l = 1}^{m_{ij}} {\chi_{ij,l} } = 1\) and \(\chi_{ij,l} = \chi_{ji,m_{ij} + 1 - l}\), l = 1, 2, …, \(m_{ij}\), when \(p_{{ij,l_{g} }} > p^{g}\); or \(\sum\nolimits_{l = 1,l \ne l_{g}}^{m_{ij}} {\chi_{ij,l} } = 1\) and \(\chi_{ij,l} = \chi_{ji,m_{ij} + 1 - l}\), l = 1, 2, …, \(m_{ij}\), \(l \ne l_{g}\), when \(p_{{ij,l_{g} }} = p^{g}\).

With respect to the LPR \(R^{g + 1} = (r_{ij}^{g + 1} )_{n \times n}\), return to Step 1. Solving model (M-1), we obtain the LPR \(R^{g + 1}\) from the optimal 0–1 indicator variables \(\chi_{ij,l}^{*}\) for all i, j = 1, 2, …, n and all l = 1, 2, …, \(m_{ij}\) (with \(l \ne l_{g}\) when \(p_{{ij,l_{g} }} = p^{g}\)). Similar to the analysis of the LPR \(R^{g}\), let \(\chi_{{ij,l_{g + 1} }}^{*} = 1\) for each pair (i, j) with i < j. Then, we get \(r_{ij}^{g + 1} = L_{{ij,l_{g + 1} }}\) for all i, j = 1, 2, …, n.


Step 4: Determine the common probability \(p^{g + 1}\) of \(R^{g + 1}\), where

namely, \(p^{g + 1}\) equals the minimum of the probabilities of all selected LVs \(L_{{ij,l_{g + 1} }}\) in \(B^{ - g}\). Using the common probability \(p^{g + 1}\), we derive the CPLPR \(R^{g + 1} (p^{g + 1} ) = \left( {r_{ij}^{g + 1} (p^{g + 1} )} \right)_{n \times n}\).

Removing the CPLPR \(R^{g + 1} (p^{g + 1} )\) from the PLPR \(B^{ - g} = \left( {L_{ij}^{ - g} (p^{ - g} )} \right)_{n \times n}\) yields the PLPR \(B^{ - (g + 1)} = \left( {L_{ij}^{ - (g + 1)} (p^{ - (g + 1)} )} \right)_{n \times n}\), where

for all i, j = 1, 2, …, n.


Step 5: Repeat Steps 3 and 4 until \(p^{1} + p^{2} + ... + p^{\pi } = 1\), where \(p^{g}\) is the common probability of the LPR \(R^{g}\), g = 1, 2, …, π, and π is the number of LPRs derived from the NPLPR B. This yields the CPLPRs \(R^{g} (p^{g} )\), g = 1, 2, …, π.
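The probability bookkeeping of Steps 2 to 5 can be sketched as follows. The sketch is hedged in two ways: the NPLPR is represented by its upper-triangular NPLTSs as dictionaries {α: p} (our own convention), and the LV selection that Algorithm I obtains from model (M-1) is replaced by a pluggable function; the placeholder `largest_probability` used here is hypothetical and ignores consistency entirely. Because every round subtracts the same common probability \(p^{g}\) from every pair, all NPLTSs are exhausted in the same round, which is why the stopping rule \(p^{1} + ... + p^{\pi } = 1\) works.

```python
from fractions import Fraction as F


def peel_cplprs(entries, select):
    """Split an NPLPR into (selection, common probability) rounds.

    entries: {(i, j): {alpha: p}} for i < j, each NPLTS summing to 1.
    select: maps the remaining entries to one chosen subscript per pair
            (a stand-in for solving model (M-1)).
    """
    assert all(sum(d.values()) == 1 for d in entries.values()), "entries must be NPLTSs"
    remaining = {pair: dict(dist) for pair, dist in entries.items()}
    rounds = []
    while any(remaining.values()):
        choice = select(remaining)
        # Common probability: the smallest remaining probability among the chosen LVs
        p = min(remaining[pair][a] for pair, a in choice.items())
        for pair, a in choice.items():
            remaining[pair][a] -= p
            if remaining[pair][a] == 0:
                del remaining[pair][a]          # the LV leaves the LHPR for later rounds
        rounds.append((choice, p))
    return rounds


def largest_probability(remaining):
    """Hypothetical placeholder selector: most probable LV per pair, ties -> smaller subscript."""
    return {pair: min(d, key=lambda a: (-d[a], a)) for pair, d in remaining.items()}


nplpr = {(0, 1): {3: F(1, 2), 5: F(1, 2)},      # hypothetical NPLPR on S = {s_0, ..., s_8}
         (0, 2): {1: F(3, 10), 4: F(7, 10)},
         (1, 2): {2: F(3, 5), 6: F(2, 5)}}
for choice, p in peel_cplprs(nplpr, largest_probability):
    print(choice, p)
```

With this placeholder the example splits into four rounds with common probabilities 1/2, 3/10, 1/10, and 1/10; a consistency-driven selector would generally produce a different split.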

Remark 3.1

Let \(R = (r_{ij} )_{n \times n}\) be a LPR on the object set X = {x1, x2, …, xn} for the ALS S = {si| i = 0, 1, …, 2t}, and p be a probability such that 0 < p < 1. Then, the corresponding CPLPR \(R(p) = (r_{ij} (p))_{n \times n}\) is defined as:

such that \(r_{ij} (p) = pr_{ij} = s_{{pI(r_{ij} )}}\) for all \(i,j = 1,2,...,n\).

Next, we offer the concept of multiplicatively consistent CPLPRs.

Definition 3.1

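Let \(R = (r_{ij} )_{n \times n}\) be an LPR, and \(R(p) = (r_{ij} (p))_{n \times n}\) be its corresponding CPLPR. \(R(p)\) is multiplicatively consistent if

$$ r_{ij} (p) \otimes r_{jk} (p) \otimes r_{ki} (p) = r_{ik} (p) \otimes r_{kj} (p) \otimes r_{ji} (p) $$(18)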

for all \(i,k,j = 1,2,...,n\), where p is a probability such that 0 < p < 1.

Property 3.1

Let \(R = (r_{ij} )_{n \times n}\) be a LPR and \(R(p) = (r_{ij} (p))_{n \times n}\) be its corresponding CPLPR. \(R\) is multiplicatively consistent if and only if \(R(p)\) is multiplicatively consistent.

Proof

From Definitions 2.2 and 3.1, one can easily derive the conclusion.□
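Assuming Definition 3.1 applies the triple-product condition \(I(r_{ij})I(r_{jk})I(r_{ki}) = I(r_{ik})I(r_{kj})I(r_{ji})\) to the subscripts \(pI(r_{ij})\) of R(p), each side of the condition is multiplied by \(p^{3}\), which is why the equivalence in Property 3.1 holds. A quick exact check with `Fraction` (the helper names are illustrative):

```python
from fractions import Fraction as F
from itertools import permutations


def is_mult_consistent(I):
    """Triple-product test on subscripts I[i][j]."""
    n = len(I)
    return all(I[i][j] * I[j][k] * I[k][i] == I[i][k] * I[k][j] * I[j][i]
               for i, j, k in permutations(range(n), 3))


def cplpr(I, p):
    """Subscripts of R(p): r_ij(p) = p r_ij = s_{p I(r_ij)}, with 0 < p < 1."""
    return [[p * a for a in row] for row in I]


R = [[4, 4, 2], [4, 4, 2], [6, 6, 4]]          # consistent LPR, S = {s_0, ..., s_8}
Q = [[4, 6, 2], [2, 4, 6], [6, 2, 4]]          # inconsistent LPR
for M in (R, Q):
    print(is_mult_consistent(M), is_mult_consistent(cplpr(M, F(3, 10))))
```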

Remark 3.2

Similar to LPRs, let us further consider the acceptable multiplicative consistency of CPLPRs. Let \(R = (r_{ij} )_{n \times n}\) be an LPR and \(R(p) = (r_{ij} (p))_{n \times n}\) be its corresponding CPLPR. Analogous to the index MCI(R) for LPRs, the multiplicative consistency level of \(R(p)\) is defined as:

Equation (19) shows that LPRs and their corresponding CPLPRs have the same multiplicative consistency level. According to the above analysis, we offer the following acceptably multiplicative consistency concept for NPLPRs.

Definition 3.2

Let B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\) be an NPLPR on the object set X = {x1, x2, …, xn} for the ALS S = {si|i = 0, 1, …, 2t}. B is acceptably multiplicatively consistent if all CPLPRs \(R^{g} (p^{g} ) = \left( {r_{ij}^{g} (p^{g} )} \right)_{n \times n}\), g = 1, 2, …, π, derived from Algorithm I are acceptably multiplicatively consistent. Furthermore, if all CPLPRs of B derived from Algorithm I are multiplicatively consistent, then B is multiplicatively consistent.

To show the concrete application of Algorithm I, let us consider the following example.

Example 3.1

Let X = {x1, x2, x3} be the object set and S = {si|i = 0, 1, …, 8} be the ALS. The NPLPR B on X for the ALS S is defined as:

Let MCI* = 0.95. Following Algorithm I, we obtain the LPRs

Furthermore, their corresponding common probabilities are \(p^{1} = 0.3\),\(p^{2} = 0.3\), and \(p^{3} = 0.4\). Therefore, the CPLPRs are

From \(\phi^{*g}\) = 0, g = 1, 2, 3, we know that these three CPLPRs are acceptably multiplicatively consistent. Thus, the NPLPR B is acceptably multiplicatively consistent. In fact, these three CPLPRs are fully multiplicatively consistent following Eq. (18), by which we conclude that the NPLPR B is multiplicatively consistent.

Remark 3.3

From Examples 2.1 and 3.1, one can see that neither of Definitions 2.5 and 3.2 implies the other. An NPLPR that is multiplicatively consistent under Definition 2.5 may be inconsistent under Definition 3.2; conversely, an NPLPR that is multiplicatively consistent under Definition 3.2 need not be multiplicatively consistent under Definition 2.5.

4 InNPLPRs

This section discusses InNPLPRs, that is, NPLPRs with missing judgments. Given how PLPRs are constructed, missing information falls into three cases: (i) the probability is missing, (ii) the LV is missing, and (iii) both are missing.

Let B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\) be an InNPLPR, where Lij(p) = {\(L_{ij,l}\) (\(p_{ij, l}\))| \(L_{ij,l}\) \(\in\) S, \(p_{ij, l}\) ≥ 0, \(l\) = 1, 2, …, \(m_{ij}\), \(\sum\nolimits_{l = 1}^{{m_{ij} }} {p_{ij, l} } \) = 1} for all i, j = 1, 2, …, n. Furthermore, let UP(i, j) = {l | the probability of Lij,l (pij,l) is missing, l = 1, 2, …, mij}, let US(i, j) = {l | the LV of Lij,l (pij,l) is missing, l = 1, 2, …, mij}, and let UPS(i, j) = UP(i, j) \(\cap\) US(i, j). Let S = {si|i = 0, 1, …, 2t} be the ALS.

Now, we offer a common probability and multiplicative consistency based interactive algorithm to estimate missing information in the InNPLPR B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\).

4.1 Algorithm II. The procedure of ascertaining missing judgments in InNPLPRs

-

Step 1: Construct LPRs from InNPLPRs.

Let g = 1. For the InNPLPR \(B = (L_{ij}(p))_{n \times n}\), let \(L = (L_{ij})_{n \times n}\) be the corresponding incomplete LHPR (InLHPR), which is defined as

and

where \(\tau_{ij,l,\iota }\) is a 0–1 indicator variable such that \(\sum\nolimits_{\iota = 0}^{2t} {\tau_{ij,l,\iota } } = 1\) and \(\tau_{ij,l,\iota } = \tau_{{ji,m_{ij} + 1 - l,\iota }}\) for all ι = 0, 1, …, 2t and all \(l \in US\left( {i,j} \right) \cup UPS\left( {i,j} \right)\). The condition \(\sum\nolimits_{\iota = 0}^{2t} {\tau_{ij,l,\iota } } = 1\) ensures that exactly one term in the ALS S = {si|i = 0, 1, …, 2t} is chosen as the value of Lij,l, and \(\tau_{ij,l,\iota } = \tau_{{ji,m_{ij} + 1 - l,\iota }}\) ensures that \(L_{ij,l} \oplus L_{{ji,m_{ij} + 1 - l}} = s_{2t}\) for any \(l \in US\left( {i,j} \right) \cup UPS\left( {i,j} \right)\).

Remark 4.1

The first case in Eqs. (20) and (21) means that all LVs in the PLTS Lij(p) are known; the second case indicates that Lij(p) contains both known and unknown LVs; and the third case shows that all LVs in Lij(p) are unknown. Because an LPR takes exactly one LV from each NPLTS, we use \(\otimes_{\iota = 0}^{2t} (s_{\iota } )^{{\tau_{ij,l,\iota } }}\) to denote an unknown LV. Any LPR \(R^{g} = (r_{ij}^{g} )_{n \times n}\) obtained from the InLHPR \(L = (L_{ij})_{n \times n}\) can be expressed as:

and \(r_{ji}^{g} = s_{{2t - I(r_{ij}^{g} )}}\), where \(\gamma_{ij,l} \in \{ 0,1\}\) with \(\sum\nolimits_{l = 1}^{{m_{ij} }} {\gamma_{ij,l} } = 1\) for all i, j = 1, 2, …, n with i < j and all l = 1, 2, …, mij; \(\tau_{ij,\iota } \in \{ 0,1\}\) with \(\sum\nolimits_{\iota = 0}^{2t} {\tau_{ij,\iota } } = 1\) for all i, j = 1, 2, …, n with i < j and all ι = 0, 1, …, 2t; and the other notations are as in Eq. (20).

-

Step 2: Determine missing LVs based on the optimal model.

Missing judgments should be estimated so that the completed preference relation is as consistent as possible. Based on the common probability and the multiplicative consistency analysis, we build the following optimization model for the LPR \(R^{g} = (r_{ij}^{g} )_{n \times n}\):

where the first constraint is derived from Eq. (4) by taking base-10 logarithms and adding the nonnegative deviation variables \(\varepsilon_{ij}^{g, + }\) and \(\varepsilon_{ij}^{g, - }\) for each pair (i, j) with i < j; the second to fifth constraints are obtained from the first case in Eq. (22); the sixth to ninth constraints are obtained from the second case in Eq. (22); the tenth constraint is obtained from the third case in Eq. (22); the eleventh constraint is based on the concept of LPRs on the ALS S = {si|i = 0, 1, …, 2t}; and the other notations are as in Eq. (22).

Remark 4.2

Model (M-2) uses base-10 logarithms; any base greater than one could be used instead. Because \(\log_{10} \left( 0 \right)\) is undefined, the term \(\log_{10} \left( {\frac{{I(r_{ij}^{g} )}}{{2t - I(r_{ij}^{g} )}}} \right)\) is meaningless when \(I(r_{ij}^{g} ) = 0\), so we replace \(I(r_{ij}^{g} ) = 0\) with \(I(r_{ij}^{g} ) = 0.01\). Similarly, when \(I(r_{ij}^{g} ) = 2t\), we have \(I(r_{ji}^{g} ) = 2t - I(r_{ij}^{g} ) = 0\), and \(\log_{10} \left( {\frac{{I(r_{ji}^{g} )}}{{2t - I(r_{ji}^{g} )}}} \right)\) is meaningless; in this case, we let \(I(r_{ij}^{g} ) = 2t - 0.01\). For this purpose, we distinguish four cases for each of the first and second cases in Eq. (22).

According to the relationship of LVs in LPRs, we have \(I(r_{ji}^{g} ) = 2t - I(r_{ij}^{g} )\) for each pair of (i, j) such that i < j. Thus,

where

Equation (23) shows that only the upper-triangular LVs in the InLHPR \(L = \left( {L_{ij} } \right)_{n \times n}\) are needed to derive the LPR \(R^{g} = (r_{ij}^{g} )_{n \times n}\). Thus, model (M-2) can be equivalently converted into the following model:

where \(\Xi_{k = 1}^{i - 1}\), \(\Xi_{k = i + 1}^{j - 1}\) and \(\Xi_{k = j + 1}^{n}\) are as defined in Eq. (23), and all other constraints are the same as those in model (M-2).

Solving model (M-3) and reading off the optimal values of the 0–1 indicator variables, we obtain the LPR \(R^{g} = (r_{ij}^{g} )_{n \times n}\). Without loss of generality, let \(\gamma_{{ij,l_{g} }}^{*} = 1\), \(\tau_{{ij,\iota_{g} }}^{*} = 1\) and \(\tau_{{ij,l,\iota_{g} }}^{*} = 1\) for all i, j = 1, 2, …, n such that i < j, where lg ∈ {1, 2, …, mij} and ιg ∈ {0, 1, 2, …, 2t}. From \(\tau_{{ij,\iota_{g} }}^{*} = 1\), we obtain that \(s_{{\iota_{g} }}\) is the LV chosen for the PLTS Lij whose LVs are all unknown. Furthermore, if \(l \in US(i,j) \cup UPS(i,j)\), then \(\tau_{{ij,l,\iota_{g} }}^{*} = 1\) implies that the unknown LV Lij,l is \(s_{{\iota_{g} }}\). In particular, when \(I(r_{ij}^{g} ) = 0.01\), we set \(r_{ij}^{g} = s_{0}\) and \(r_{ji}^{g} = s_{2t}\); when \(I(r_{ij}^{g} ) = 2t - 0.01\), we set \(r_{ij}^{g} = s_{2t}\) and \(r_{ji}^{g} = s_{0}\).
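For very small problems, the idea behind Step 2 can be illustrated without an integer-programming solver. The Python sketch below is not model (M-3): it swaps in exhaustive search and assumes that inconsistency is measured by the total absolute log-deviation \(|\log_{10} b_{ik} + \log_{10} b_{kj} - \log_{10} b_{ij}|\) over all triples i < k < j, where \(b_{ij} = I(r_{ij})/(2t - I(r_{ij}))\). Endpoint indices are clipped to 0.01 and 2t − 0.01 as in Remark 4.2. Only upper-triangular entries (0-based pairs (i, j) with i < j) are stored, since \(I(r_{ji}) = 2t - I(r_{ij})\). All names are hypothetical.

```python
from itertools import combinations, product
from math import log10

def log_ratio(index, two_t, eps=0.01):
    """log10(I / (2t - I)), clipping I to [eps, 2t - eps] as in Remark 4.2."""
    index = min(max(index, eps), two_t - eps)
    return log10(index / (two_t - index))

def inconsistency(upper, n, two_t):
    """Total |log b_ik + log b_kj - log b_ij| over all triples i < k < j."""
    return sum(abs(log_ratio(upper[i, k], two_t) + log_ratio(upper[k, j], two_t)
                   - log_ratio(upper[i, j], two_t))
               for i, k, j in combinations(range(n), 3))

def complete_missing(known, candidates, n, two_t):
    """Exhaustively choose indices for the missing upper-triangular entries.

    known:      {(i, j): I(r_ij)} for the given entries with i < j
    candidates: {(i, j): iterable of admissible indices} for missing entries
    Returns the completed upper triangle and its inconsistency.
    """
    missing = sorted(candidates)
    best, best_cost = None, float("inf")
    for choice in product(*(list(candidates[pair]) for pair in missing)):
        upper = dict(known)
        upper.update(zip(missing, choice))
        cost = inconsistency(upper, n, two_t)
        if cost < best_cost:  # keep the first minimiser found
            best, best_cost = upper, cost
    return best, best_cost

# 2t = 8: r_12 = s_6 and r_23 = s_4 are known, r_13 is missing
best, cost = complete_missing({(0, 1): 6, (1, 2): 4}, {(0, 2): range(9)}, n=3, two_t=8)
print(best[(0, 2)], cost)  # 6 0.0
```

Exhaustive search grows exponentially with the number of missing entries, which is why a mixed 0–1 programming formulation such as model (M-3) is needed in practice.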

-

Step 3: Determine missing probabilities based on LPRs.

Based on the LPR \(R^{g} = (r_{ij}^{g} )_{n \times n}\), determine the common probability \(p^{g}\) of the LVs in \(R^{g}\), which is defined as

where \(\mathop {\min }\limits_{{{\kern 1pt} {\kern 1pt} 1 \le i < j \le n,l_{g} \notin UP\left( {i,j} \right) \cup UPS\left( {i,j} \right)}} p_{{ij,l_{g} }}\) is the minimum value of the probabilities of the LVs that construct the LPR \(R^{g}\),\(\mathop {\min }\limits_{{1 \le i < j \le n,l_{g} \in UP\left( {i,j} \right) \cup UPS\left( {i,j} \right)}} \left( {1 - \sum\nolimits_{{l = 1,l \notin UP\left( {i,j} \right) \cup UPS\left( {i,j} \right)}}^{{m_{ij} }} {p_{ij,l} } } \right)\) is the minimum value of the unknown probabilities of the LVs that construct the LPR \(R^{g}\), and \(1 - \sum\nolimits_{{l = 1,l \notin UP\left( {i,j} \right) \cup UPS\left( {i,j} \right)}}^{{m_{ij} }} {p_{ij,l} }\) ensures that the sum of the known probabilities and \(p^{g}\) is no bigger than 1. In this way, we know that each LV with unknown probability in \(R^{g}\) has a probability that is no smaller than \(p^{g}\). Furthermore, we get the CPLPR \(R^{g} (p^{g} ) = \left( {r_{ij}^{g} (p^{g} )} \right)_{n \times n}\).
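The rule described above for \(p^{g}\) can be sketched in a few lines of Python. This is a minimal illustration, assuming that the LVs have already been fixed by Step 2 and that each upper-triangular PLTS is stored as a list of (LV index, probability) pairs, with `None` marking a missing probability; the data layout and function name are hypothetical.

```python
def common_probability(pltss, selection):
    """Common probability p^g of the LVs selected for R^g.

    pltss:     {(i, j): [(lv_index, prob), ...]} for the pairs with i < j;
               prob is None when the probability is missing.
    selection: {(i, j): position l_g of the LV chosen for R^g}.
    """
    values = []
    for pair, items in pltss.items():
        prob = items[selection[pair]][1]
        if prob is not None:
            values.append(prob)  # known probability p_{ij,l_g}
        else:
            # mass left over after the known probabilities of this PLTS
            known = sum(p for _, p in items if p is not None)
            values.append(1.0 - known)
    return min(values)

pltss = {(0, 1): [(6, 0.6), (5, 0.4)],
         (0, 2): [(4, 0.3), (5, None)],
         (1, 2): [(3, 0.5), (4, None)]}
print(common_probability(pltss, {(0, 1): 0, (0, 2): 1, (1, 2): 0}))  # 0.5
```

Taking the minimum over both kinds of values guarantees that every selected LV can carry at least \(p^{g}\) without any PLTS exceeding a total probability of 1.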

-

Step 4: Construct InPLPRs by deleting CPLPRs.

Delete the CPLPR \(R^{g} (p^{g} ) = \left( {r_{ij}^{g} (p^{g} )} \right)_{n \times n}\) from the InNPLPR B = \(\left( {L_{ij} \left( p \right)} \right)_{n \times n}\), by which we derive the corresponding InPLPR \(B^{g} = \left( {L_{ij}^{g} \left( {p^{g} } \right)} \right)_{n \times n}\), where

for each pair (i, j), the first two cases concern LVs in the LPR \(R^{g}\) whose probabilities are known: when \(p_{{ij,l_{g} }} > p^{g}\), we replace the item \(L_{{ij,l_{g} }} \left( {p_{{ij,l_{g} }} } \right)\) with \(L_{{ij,l_{g} }} \left( {p_{{ij,l_{g} }} - p^{g} } \right)\); otherwise (that is, \(p_{{ij,l_{g} }} = p^{g}\)), we delete the item \(L_{{ij,l_{g} }} \left( {p_{{ij,l_{g} }} } \right)\) from Lij(p). The third and fourth cases concern LVs in \(R^{g}\) whose probabilities are unknown. When \(p^{g} + \sum\nolimits_{{l = 1,l \notin UP\left( {i,j} \right) \cup UPS\left( {i,j} \right)}}^{{m_{ij} }} {p_{ij,l} } < 1\), the probability of the LV \(r_{ij}^{g}\) is no smaller than \(p^{g}\), and we replace \(L_{{ij,l_{g} }} \left( {p_{{ij,l_{g} }} } \right)\) with \(L_{{ij,l_{g} }} \left( {p_{{ij,l_{g} }} - p^{g} } \right)\). However, if \(p^{g} + \sum\nolimits_{{l = 1,l \notin UP\left( {i,j} \right) \cup UPS\left( {i,j} \right)}}^{{m_{ij} }} {p_{ij,l} } = 1\), then the probability of the LV \(r_{ij}^{g}\) is exactly \(p^{g}\) and all other unknown probabilities of LVs in the PLTS Lij(p) are zero; in this case, we delete these items. In the fifth case, the item \(L_{{ij,l_{g} }} (p_{{ij,l_{g} }} )\) belongs to the PLTS \(L_{ij} (p)\) with \(p_{{ij,l_{g} }} \ge p^{g}\), where \(L_{{ij,l_{g} }} = r_{ij}^{g}\).
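The first two cases of the deletion rule, for LVs whose probabilities are known, can be sketched as follows. This Python sketch omits the unknown-probability cases and uses a hypothetical layout: each upper-triangular PLTS is a list of (LV index, probability) pairs, and `selection` gives the position \(l_g\) of the LV used in \(R^{g}\). A small tolerance treats floating-point remainders as zero.

```python
def delete_cplpr(pltss, selection, p_g, tol=1e-9):
    """Subtract p^g from the LV chosen for R^g in each PLTS (known probabilities only).

    pltss:     {(i, j): [(lv, prob), ...]} for the pairs with i < j
    selection: {(i, j): l_g}
    Items whose probability drops to zero are deleted.
    """
    result = {}
    for pair, items in pltss.items():
        updated = []
        for l, (lv, prob) in enumerate(items):
            if l == selection[pair]:
                prob -= p_g            # p_{ij,l_g} - p^g
                if prob <= tol:        # p_{ij,l_g} == p^g: drop the item
                    continue
            updated.append((lv, prob))
        result[pair] = updated
    return result

B = {(0, 1): [(6, 0.6), (5, 0.4)], (0, 2): [(4, 0.4), (3, 0.6)]}
B1 = delete_cplpr(B, {(0, 1): 0, (0, 2): 0}, p_g=0.4)
print(B1[(0, 2)])  # [(3, 0.6)]
```

Because \(p^{g}\) is a minimum over the selected probabilities, subtraction never produces a negative probability; it either shrinks an item or removes it.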

-

Step 5: Process the InPLPR \(B^{g} = \left( {L_{ij}^{g} \left( {p^{g} } \right)} \right)_{n \times n}\) in a similar way as the InPLPR \(B = \left( {L_{ij} \left( p \right)} \right)_{n \times n}\).

With respect to the InPLPR \(B^{g} = \left( {L_{ij}^{g} \left( {p^{g} } \right)} \right)_{n \times n}\), return to Step 1 and construct the LPR \(R^{g + 1} = (r_{ij}^{g + 1} )_{n \times n}\) in the same way as the LPR \(R^{g} = (r_{ij}^{g} )_{n \times n}\). Then, go to Step 2 and use model (M-3) to obtain the LPR \(R^{g + 1} = (r_{ij}^{g + 1} )_{n \times n}\). Furthermore, let \(\gamma_{{ij,l_{g + 1} }}^{*} = 1\), \(\tau_{{ij,\iota_{g + 1} }}^{*} = 1\) and \(\tau_{{ij,l,\iota_{g + 1} }}^{*} = 1\) for each pair (i, j) with i < j, where lg+1 ∈ {1, 2, …, mij} and ιg+1 ∈ {0, 1, 2, …, 2t}. Following Step 3 and the LPR \(R^{g + 1} = (r_{ij}^{g + 1} )_{n \times n}\), determine the common probability \(p^{g + 1}\) of the LVs in \(R^{g + 1}\), where

where \(p^{\kappa }\) is the probability of the CPLPR \(R^{\kappa } (p^{\kappa } )\) for all κ = 1, 2, …, g, \(1 - \sum\nolimits_{{l = 1,l \notin UP\left( {i,j} \right) \cup UPS\left( {i,j} \right)}}^{{m_{ij} }} {p_{ij,l}^{g} } - \sum\nolimits_{\kappa = 1}^{g} {p^{\kappa } }\) is the amount of uncertain probability, and all other notations are as in Eq. (24).

-

Step 6: Repeat Steps 4 and 5 until \(p^{1} + p^{2} + \cdots + p^{\pi} = 1\), where π is the number of iterations. Let \(R^{g} = (r_{ij}^{g} )_{n \times n}\) be the derived LPRs and \(R^{g} (p^{g} ) = \left( {r_{ij}^{g} (p^{g} )} \right)_{n \times n}\) the corresponding CPLPRs, g = 1, 2, …, π. From these CPLPRs, we derive the complete NPLPR \(B = \left( {L_{ij} \left( p \right)} \right)_{n \times n}\), where

for all i, j = 1, 2, …, n.
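Equation (27) is not reproduced here, so the Python sketch below rests on an assumption: for each pair (i, j), the completed PLTS collects \(r_{ij}^{g}\) with probability \(p^{g}\) over g = 1, 2, …, π, and identical LVs are merged by adding their probabilities. Each LPR is represented by its index matrix, and the function name is hypothetical.

```python
from collections import defaultdict
from math import isclose

def merge_cplprs(lprs, probs):
    """Collect r_ij^g (p^g), g = 1..pi, into one PLTS {lv_index: prob} per pair (i, j).

    lprs holds the index matrices I(r_ij^g); probs holds p^1, ..., p^pi.
    Identical LVs are merged by adding their probabilities (an assumption).
    """
    if not isclose(sum(probs), 1.0):
        raise ValueError("the common probabilities must sum to 1 (Step 6)")
    n = len(lprs[0])
    cells = [[defaultdict(float) for _ in range(n)] for _ in range(n)]
    for idx, p in zip(lprs, probs):
        for i in range(n):
            for j in range(n):
                cells[i][j][idx[i][j]] += p
    return [[dict(cell) for cell in row] for row in cells]

B = merge_cplprs([[[4, 6], [2, 4]], [[4, 5], [3, 4]]], [0.3, 0.7])
print(B[0][1], B[1][0])  # {6: 0.3, 5: 0.7} {2: 0.3, 3: 0.7}
```

The stopping rule of Step 6 appears as the guard on the sum of the common probabilities, so every merged PLTS is normalized.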

Remark 4.3

The principle of Algorithm II is simple. Missing LVs are determined by solving model (M-3), which is built from the LPRs derived from the corresponding InLHPRs and the multiplicative consistency condition. Missing probabilities are then determined from the common probability and the requirement that the probability distribution on each NPLTS be normalized.

To illustrate the concrete application of Algorithm II, we offer the following example.

Example 4.1

Let X = {x1, x2, x3, x4} be the object set, and let S = {si|i = 0, 1, 2,…, 8} be the ALS. An InNPLPR B on X for the ALS S may be defined as:

According to Algorithm II, the CPLPRs are derived as follows:

Furthermore, based on Eq. (27) we derive the following complete NPLPR

5 Optimal models for reaching the multiplicative consistency requirement

This section discusses another frequently encountered type of NPLPR: unacceptably multiplicatively consistent NPLPRs. Rankings of objects derived from such NPLPRs may be unreasonable. Therefore, this section studies how to derive acceptably multiplicatively consistent NPLPRs.

Definition 5.1

Let \(B = (L_{ij}(p))_{n \times n}\) be an NPLPR on the object set X = {x1, x2, …, xn} for the ALS S = {si|i = 0, 1, …, 2t}. B is unacceptably multiplicatively consistent if at least one CPLPR derived from Algorithm I is unacceptably multiplicatively consistent.

By Eq. (19), if an NPLPR is unacceptably multiplicatively consistent, then at least one LPR derived from Algorithm I is unacceptably multiplicatively consistent. Let \(R^{g} = \left( {r_{ij}^{g} } \right)_{n \times n}\), g = 1, 2, …, π, be the LPRs derived from Algorithm I for the NPLPR \(B = (L_{ij}(p))_{n \times n}\). Furthermore, let \(\Theta = \left\{ {R^{g} |\phi^{g, * } \ne 0,g = 1,2, \ldots ,\pi } \right\}\), where \(\phi^{g, * }\) is the objective function value of model (M-1) for \(R^{g}\). Then, \(\Theta\) is the set of unacceptably multiplicatively consistent LPRs.

According to Definition 3.2 and Eq. (19), the acceptably multiplicatively consistent NPLPR \(B^{*} = \left( {L_{ij}^{*} (p^{*} )} \right)_{n \times n}\) corresponding to the NPLPR B can be derived by adjusting the unacceptably multiplicatively consistent LPRs in \(\Theta\). When adjusting the LPRs in \(\Theta\), besides their consistency levels, the adjustment should be as small as possible so that the original information is retained. Considering these two aspects, and inspired by Dong et al.'s methods (Dong et al., 2008, 2013) for deriving additively consistent LPRs, we construct the following model to derive the acceptably multiplicatively consistent LPR \(R^{*g} = \left( {r_{ij}^{*g} } \right)_{n \times n}\) from the LPR \(R^{g}\):

where MCI* is the given multiplicative consistency threshold; the first two constraints are derived from Eq. (5) and ensure that the LPR \(R^{*g} = (r^{*g}_{ij})_{n \times n}\) satisfies the consistency requirement; the third constraint measures the deviation between the LVs \(r^{*g}_{ij}\) and \(r_{ij}^{g}\); and the fourth constraint indicates that \(r^{*g}_{ij}\) belongs to the ALS S = {si| i = 0, 1, …, 2t}. To keep the second constraint meaningful, we replace \(I(r^{*g}_{ij}) = 0\) or \(2t\) with \(I(r^{*g}_{ij}) = 0.001\) or \(2t - 0.001\), respectively. The fifth to eighth constraints make Δ equal to \(\max_{1 \le i < j \le n} \max \left( {\frac{{I\left( {r_{ij}^{*g} } \right)}}{{2t - I\left( {r_{ij}^{*g} } \right)}},\frac{{2t - I\left( {r_{ij}^{*g} } \right)}}{{I\left( {r_{ij}^{*g} } \right)}}} \right)\), and the eleventh constraint ensures that \(r_{ij}^{*g}\) takes only one linguistic term in the ALS S.

Considering the fact that all variables in model (M-4) only relate to the LPR \(R^{*g} = \left( {r_{ij}^{*g} } \right)_{n \times n}\), we can adjust all unacceptably multiplicatively consistent LPRs in \(\Theta\) simultaneously. Thus, we further build the following model:

where all constraints are the same as those in model (M-4).

Solving model (M-5), we derive all the acceptably multiplicatively consistent LPRs. According to Eq. (27), we then obtain the corresponding acceptably multiplicatively consistent NPLPR \(B^{*} = \left( {L_{ij}^{*} \left( p \right)} \right)_{n \times n}\).

6 Group decision making with NPLPRs

This section discusses GDM with NPLPRs based on the CPLPRs derived from Algorithm I. Assume that there are ς DMs, denoted by E = {1, 2, …, ς}. Let \(B^{o} = (L_{ij}^{o} (p^{o} ))_{n \times n}\) be the individual NPLPR (I-NPLPR) offered by the DM eo, o \(\in\) E.

For GDM, we usually need to calculate comprehensive preference relations and measure the consensus degree of individual opinions. Therefore, we next offer a similarity measure based method for determining the weights of DMs.

Definition 6.1

Let \(R^{1} (p^{1} ) = \left( {r_{ij}^{1} (p^{1} )} \right)_{n \times n}\) and \(R^{2} (p^{2} ) = \left( {r_{ij}^{2} (p^{2} )} \right)_{n \times n}\) be any two CPLPRs. Then, their similarity measure is defined as:

One can check that the similarity measure between the CPLPRs \(R^{1} (p^{1} )\) and \(R^{2} (p^{2} )\) has the following properties:

-

(i)

\(0 < Sim\left( {R^{1} (p^{1} ),R^{2} (p^{2} )} \right) \le 1\);

-

(ii)

\(Sim\left( {R^{1} (p^{1} ),R^{2} (p^{2} )} \right) = Sim\left( {R^{2} (p^{2} ),R^{1} (p^{1} )} \right)\);

-

(iii)

\(Sim\left( {R^{1} (p^{1} ),R^{2} (p^{2} )} \right) = 1\) if and only if \(R^{1} (p^{1} ) = R^{2} (p^{2} )\), namely,\(p^{1} = p^{2}\) and \(r_{ij}^{1} = r_{ij}^{2}\) for all i, j = 1, 2, …, n.

Based on Definition 6.1, we further offer the similarity measure between CPLPRs and NPLPRs as follows:

Definition 6.2

Let \(R(p) = \left( {r_{ij} (p)} \right)_{n \times n}\) be a CPLPR, and let \(B = \left( {L_{ij} (p)} \right)_{n \times n}\) be an NPLPR. Furthermore, let Ω(B) = {\(R_{1} (p_{1} ) = \left( {r_{1,ij} (p_{1} )} \right)_{n \times n}\), \(R_{2} (p_{2} ) = \left( {r_{2,ij} (p_{2} )} \right)_{n \times n}\), …, \(R_{\pi } (p_{\pi } ) = \left( {r_{\pi ,ij} (p_{\pi } )} \right)_{n \times n}\)} be the set of CPLPRs obtained from B. Then, the similarity measure between \(R(p)\) and B is defined as:

Based on the properties of Definition 6.1, one can verify that \(Sim\left( {R(p),B} \right) = 1\) if and only if \(R(p) \in\) Ω(B).

Following Definition 6.2, we further define the similarity measure between NPLPRs as follows:

Definition 6.3

Let \(B^{1} = \left( {L_{ij}^{1} (p^{1} )} \right)_{n \times n}\) and \(B^{2} = \left( {L_{ij}^{2} (p^{2} )} \right)_{n \times n}\) be any two NPLPRs. Furthermore, let Ω(Bo) = {\(R_{1}^{o} (p_{1}^{o} ) = \left( {r_{1,ij}^{o} (p_{1}^{o} )} \right)_{n \times n}\),\(R_{2}^{o} (p_{2}^{o} ) = \left( {r_{2,ij}^{o} (p_{2}^{o} )} \right)_{n \times n}\),…, \(R_{{\pi_{o} }}^{o} \left( {p_{{\pi_{o} }}^{o} } \right) = \left( {r_{{\pi_{o} ,ij}}^{o} \left( {p_{{\pi_{o} }}^{o} } \right)} \right)_{n \times n}\)} be the set of CPLPRs obtained from Bo, where o = 1, 2. Then, the similarity measure from B1 to B2 is defined as:

and the similarity measure from B2 to B1 is defined as:

Furthermore, the similarity measure between B1 and B2 is defined as:

According to Eq. (32), it is easy to verify that the above three properties for Eq. (28) are still true for Eq. (32). It should be noted that the similarity measure between NPLPRs is based on their CPLPRs obtained from Algorithm I.

Based on the similarity measure between NPLPRs, we provide the following formula to determine the weights of the DMs:

where o \(\in\) E.

Equation (33) shows that the more similar a DM's CPLPRs are to those of all other DMs, the larger the weight of that DM.

Based on the weights of the DMs, we next discuss how to measure the consensus of individual opinions. First, let us consider expect LPRs of NPLPRs.

Definition 6.4

Let \(B = (L_{ij} (p))_{n \times n}\) be an NPLPR, and let Ω(B) = {\(R_{1} (p_{1} ) = \left( {r_{1,ij} (p_{1} )} \right)_{n \times n}\), \(R_{2} (p_{2} ) = \left( {r_{2,ij} (p_{2} )} \right)_{n \times n}\), …, \(R_{\pi } (p_{\pi } ) = \left( {r_{\pi ,ij} (p_{\pi } )} \right)_{n \times n}\)} be the set of corresponding CPLPRs obtained from Algorithm I. Then, \(E(B) = \left( {E(L_{ij} (p))} \right)_{n \times n}\) is called the expect LPR of the NPLPR B, where

for all i, j = 1, 2, …, n.

One can check that the expect LPR defined by Eq. (34) is also an LPR on the ALS S = {si|i = 0, 1, …, 2t}. Based on the concept of expect LPRs, we further define the comprehensively expect LPR.

Definition 6.5

Let \(B^{o} = (L_{ij}^{o} (p^{o} ))_{n \times n}\) be the I-NPLPR offered by the DM eo, and \(E(B^{o} ) = \left( {E(L_{ij}^{o} (p^{o} ))} \right)_{n \times n}\) be its expect LPR as shown in Definition 6.4, where o = 1, 2, …, ς. Then, \(E(B) = \left( {E(L_{ij} (p))} \right)_{n \times n}\) is called the comprehensively expect LPR, where

for all i, j = 1, 2, …, n, \(\omega_{{e_{o} }}\) is the weight of the DM eo such that \(\sum\nolimits_{o = 1}^{\varsigma } {\omega_{{e_{o} }} } = 1\) and \(\omega_{{e_{o} }} \ge 0\) for all o = 1, 2, …, ς.

According to the comprehensively expect LPR, we offer the following consensus measure of I-NPLPRs:

Definition 6.6

Let \(B^{o} = (L_{ij}^{o} (p^{o} ))_{n \times n}\) be the I-NPLPR offered by the DM eo, where o = 1, 2, …, ς. The consensus degree of \(B^{o}\) is defined as:

where \(R_{1}^{o} (p_{1}^{o} ) = \left( {r_{1,ij}^{o} (p_{1}^{o} )} \right)_{n \times n}\),\(R_{2}^{o} (p_{2}^{o} ) = \left( {r_{2,ij}^{o} (p_{2}^{o} )} \right)_{n \times n}\),…, and \(R_{{\pi_{o} }}^{o} \left( {p_{{\pi_{o} }}^{o} } \right) = \left( {r_{{\pi_{o} ,ij}}^{o} \left( {p_{{\pi_{o} }}^{o} } \right)} \right)_{n \times n}\) are the CPLPRs obtained from Algorithm I for the NPLPR Bo, and \(E(B) = \left( {E(L_{ij} (p))} \right)_{n \times n}\) is the comprehensively expect LPR as defined by Eq. (35).

Similar to the similarity measure between NPLPRs, the consensus measure of the DMs is also based on the CPLPRs obtained from Algorithm I for I-NPLPRs.

If the I-NPLPR \(B^{o} = (L_{ij}^{o} (p^{o} ))_{n \times n}\) does not satisfy the consensus requirement, then at least one LPR \(R_{g}^{o} = \left( {r_{g,ij}^{o} } \right)_{n \times n}\) obtained from Algorithm I for \(B^{o}\) has a consensus level smaller than the given consensus threshold COI*, namely,

To obtain a representative ranking of the objects, we need to improve the consensus levels of such LPRs. To retain as much original information as possible, we adjust only the LPR with the lowest consensus level at each iteration. Without loss of generality, let \(COI\left( {R_{g}^{o} } \right) = \min_{h = 1}^{{\pi_{o} }} COI\left( {R_{h}^{o} } \right) < COI^{*}\). To make the LPR \(R_{g}^{o} = \left( {r_{g,ij}^{o} } \right)_{n \times n}\) reach the consensus requirement, we build the following model:

where the first two constraints are derived from Eq. (36) and ensure that the consensus level of the adjusted LPR \(R_{g}^{o,(1)} = \left( {r_{g,ij}^{o,(1)} } \right)_{n \times n}\) is no smaller than the given threshold COI*; the third constraint and the objective function ensure that the adjusted LPR \(R_{g}^{o,(1)}\) has the smallest total adjustment; the fourth and fifth constraints ensure that \(R_{g}^{o,(1)}\) is still an LPR on the ALS S = {si| i = 0, 1, 2,…, 2t}; and the constraints for the multiplicative consistency requirement are the same as those in model (M-4).

Solving model (M-6), we can obtain the adjusted LPR \(R_{g}^{o,(1)} = \left( {r_{g,ij}^{o,(1)} } \right)_{n \times n}\), which satisfies the multiplicative consistency and consensus requirements. Furthermore, according to \(R_{g}^{o,(1)} = \left( {r_{g,ij}^{o,(1)} } \right)_{n \times n}\), we can obtain the corresponding CPLPR \(R_{g}^{o,(1)} (p_{g}^{o} ) = \left( {r_{g,ij}^{o,(1)} (p_{g}^{o} )} \right)_{n \times n}\).

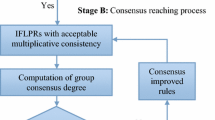

In view of the multiplicative consistency and consensus analysis, we offer the following algorithm for GDM with NPLPRs:

6.1 Algorithm III. A procedure for GDM with NPLPRs

-

Step 1: Let \(B^{o} = (L_{ij}^{o} (p^{o} ))_{n \times n}\), o = 1, 2, …, ς, be the given I-NPLPRs. If all of them are complete, go to Step 2. Otherwise, we adopt Algorithm II to derive the complete I-NPLPR for each incomplete one;

-

Step 2: With respect to each complete I-NPLPR \(B^{o} = (L_{ij}^{o} (p^{o} ))_{n \times n}\), we employ Algorithm I to obtain the associated LPRs \(\left\{ {R_{1}^{o} = \left( {r_{1,ij}^{o} } \right)_{n \times n} } \right.,R_{2}^{o} = \left( {r_{2,ij}^{o} } \right)_{n \times n} , \ldots ,\left. {R_{{\pi_{o} }}^{o} = \left( {r_{{\pi_{o} ,ij}}^{o} } \right)_{n \times n} } \right\}\) and their corresponding CPLPRs \(\left\{ {R_{1}^{o} (p_{1}^{o} ) = \left( {r_{1,ij}^{o} (p_{1}^{o} )} \right)_{n \times n} ,R_{2}^{o} \left( {p_{2}^{o} } \right) = \left( {r_{2,ij}^{o} (p_{2}^{o} )} \right)_{n \times n} , \ldots ,R_{{\pi_{o} }}^{o} (p_{{\pi_{o} }}^{o} ) = \left( {r_{{\pi_{o} ,ij}}^{o} (p_{{\pi_{o} }}^{o} )} \right)_{n \times n} } \right\}\). If each I-NPLPR is acceptably multiplicatively consistent, go to Step 4. Otherwise, turn to the next step;

-

Step 3: With respect to each unacceptably multiplicatively consistent I-NPLPR, we use model (M-5) to adjust all corresponding unacceptably multiplicatively consistent LPRs;

-

Step 4: According to acceptably multiplicatively consistent CPLPRs, we calculate the expect LPR \(E(B^{o} ) = \left( {E\left( {L_{ij}^{o} (p^{o} )} \right)} \right)_{n \times n}\) by Eq. (34), where o = 1, 2, …, ς. Furthermore, we determine the weights of the DMs by Eq. (33), where \(\omega = \left\{ {\omega_{{e_{1} }} ,\omega_{{e_{2} }} , \ldots ,\omega_{{e_{\varsigma } }} } \right\}\);

-

Step 5: Use Eq. (35) to calculate the comprehensively expect LPR \(E(B) = \left( {E(L_{ij} (p))} \right)_{n \times n}\). Then, measure the consensus degree of each I-NPLPR by Eq. (36). If all I-NPLPRs satisfy the consensus requirement, skip to Step 7; otherwise, go to Step 6;

-

Step 6: Let \(COI(B^{o} ) = \min_{\theta = 1}^{\varsigma } COI(B^{\theta } )\) and \(COI\left( {R_{g}^{o} } \right) = \min_{h = 1}^{{\pi_{o} }} COI\left( {R_{h}^{o} } \right)\). Adjust the LPR \(R_{g}^{o} = \left( {r_{g,ij}^{o} } \right)_{n \times n}\) by model (M-6) to obtain the adjusted LPR \(R_{g}^{o,(1)} = \left( {r_{g,ij}^{o,(1)} } \right)_{n \times n}\). Then, calculate the expect LPR \(E(B^{o,(1)} ) = \left( {E\left( {L_{ij}^{o,(1)} (p^{o,(1)} )} \right)} \right)_{n \times n}\) of the adjusted I-NPLPR \(B^{o,(1)} = (L_{ij}^{o,(1)} (p^{o,(1)} ))_{n \times n}\) and return to Step 5;

-

Step 7: With respect to each LPR \(R_{g}^{o} = \left( {r_{g,ij}^{o} } \right)_{n \times n}\), we calculate the priority linguistic value of each object xi by the following formula:

-

$$ s_{i,g}^{o} = s_{\frac{1}{\sum\nolimits_{j = 1}^{n} \frac{1}{I\left( r_{g,ij}^{o} \right)} - \frac{n}{2t}}} \qquad (38) $$

where i = 1, 2, …, n, g = 1, 2, …, πo, and o = 1, 2, …, ς.

-

Step 8: From the priority linguistic value of each object xi for each LPR \(R_{g}^{o} = \left( {r_{g,ij}^{o} } \right)_{n \times n}\), we calculate the expect priority linguistic value vector \(s^{o} = \left( {s_{1}^{o} ,s_{2}^{o} ,...,s_{n}^{o} } \right)\), where \(s_{i}^{o} = s_{{\frac{{2t\prod\nolimits_{g = 1}^{{\pi_{o} }} {I(s_{i,g}^{o} )^{{p_{g}^{o} }} } }}{{\sum\nolimits_{i = 1}^{n} {\prod\nolimits_{g = 1}^{{\pi_{o} }} {I(s_{i,g}^{o} )^{{p_{g}^{o} }} } } }}}}\), and \(p_{g}^{o}\) is the common probability of the LPR \(R_{g}^{o}\) for all i = 1, 2, …, n, and all o = 1, 2, …, ς;

-

Step 9: Based on the expect priority linguistic value vectors \(s^{o}\), o = 1, 2, …, ς, we further calculate the comprehensively expect priority linguistic value vector \(s = \left( {s_{1} ,s_{2} ,...,s_{n} } \right)\), where \(s_{i} = s_{{\frac{{2t\prod\nolimits_{o = 1}^{\varsigma } {I(s_{i}^{o} )^{{\omega_{{e_{o} }} }} } }}{{\sum\nolimits_{i = 1}^{n} {\prod\nolimits_{o = 1}^{\varsigma } {I(s_{i}^{o} )^{{\omega_{{e_{o} }} }} } } }}}}\) for all i = 1, 2, …, n. Finally, we rank the objects x1, x2, …, xn according to \(s = \left( {s_{1} ,s_{2} ,...,s_{n} } \right)\).
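Steps 7 to 9 reduce to elementary arithmetic once the LPRs, common probabilities and DM weights are available. The Python sketch below is a minimal illustration, not the authors' code, and its function names are hypothetical. It implements Eq. (38) with the summand taken as \(1/I(r_{g,ij}^{o})\): for a multiplicatively consistent LPR generated from a normalized weight vector w via \(I(r_{ij}) = 2t\,w_i/(w_i + w_j)\), this returns exactly \(2t\,w_i\). The same normalized weighted geometric mean serves Step 8 (exponents \(p_g^o\)) and Step 9 (exponents \(\omega_{e_o}\)). Zero indices are clipped to 0.01, by analogy with Remark 4.2.

```python
from math import isclose, prod

def priority_values(idx, two_t, eps=0.01):
    """Eq. (38): index of s_{i,g} = 1 / (sum_j 1/I(r_ij) - n/(2t)) for one LPR."""
    n = len(idx)
    return [1.0 / (sum(1.0 / max(v, eps) for v in row) - n / two_t)
            for row in idx]

def weighted_geometric(vectors, weights, two_t):
    """Steps 8 and 9: normalised weighted geometric mean, rescaled to sum to 2t."""
    n = len(vectors[0])
    raw = [prod(vec[i] ** w for vec, w in zip(vectors, weights))
           for i in range(n)]
    total = sum(raw)
    return [two_t * r / total for r in raw]

def ranking(values):
    """0-based object positions ordered from best to worst (stable for ties)."""
    return sorted(range(len(values)), key=lambda i: values[i], reverse=True)

# Adjusted LPR R_1^{3,(1)} from Section 7 (2t = 10), as indices I(r_ij)
R13 = [[5, 6, 5, 4], [4, 5, 4, 3], [5, 6, 5, 4], [6, 7, 6, 5]]
p = priority_values(R13, 10)
print([round(v, 2) for v in p], ranking(p))  # [2.4, 1.58, 2.4, 3.62] [3, 0, 2, 1]
```

Since each \(1/I(r_{ij}) \ge 1/(2t)\) and the diagonal contributes \(1/t\), the denominator in Eq. (38) is always positive, so the reciprocal is well defined.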

The above procedure shows that Algorithm III is based on acceptable multiplicative consistency and consensus analysis. Furthermore, it can handle incomplete and unacceptably multiplicatively consistent NPLPRs while using only, and fully considering, the information provided by the DMs.

7 An illustrative example

Nowadays, people pay increasing attention to the environmental pollution and energy consumption caused by economic activities. The green supply chain, an important component of strategies for achieving sustainable development, has emerged against this background. To achieve this goal, enterprises have been committed to developing new technologies, which determines their survival and development. The automotive industry is a representative example, and more and more car companies are developing new technologies. Recently, new energy vehicles, which integrate almost all of the latest advanced technologies in the automotive field, have come into public view. Owing to their merits, new energy vehicles have become the future development direction of automobile companies. A new energy vehicle company wants to update its production process to meet its development needs over the next 5 years. This is a complex decision-making problem that must consider various criteria such as cost, feasibility, reliability and maintainability. Based on market research and the status of the enterprise, four production processes are selected as preliminary options, denoted by x1, x2, x3 and x4. To select the most suitable one, the company forms three expert teams, e1, e2 and e3. Each expert team contains 8–10 experts, including engineers, technical R&D personnel, department managers, and front-line production workers. Each expert team is required to offer its judgments independently using the LVs in S = {s0: extremely bad; s1: quite bad; s2: very bad; s3: bad; s4: slightly bad; s5: fair; s6: slightly good; s7: good; s8: very good; s9: quite good; s10: extremely good}. Considering the heterogeneity among experts, more than one LV is permitted for a judgment on which they cannot reach agreement. In that case, they must also provide the probabilities of these LVs to discriminate among them.
However, when their divergences are too large, or when they are unwilling or unable to give some judgments, missing information is permitted. InNPLPRs can cope with all of the situations above and are therefore an effective tool for comparing these four production processes pairwise. Assume that the individual InNPLPRs (I-InNPLPRs) offered by the three expert teams are listed in Tables 1, 2, and 3:

To rank these four production processes from the above I-InNPLPRs, the following procedure is needed:

-

Step 1: With respect to each I-InNPLPR, according to Algorithm II, we derive complete I-NPLPRs as shown in Tables 4, 5, and 6:

-

Step 2: Let MCI* = 0.95. With respect to each I-NPLPR, following Algorithm I, the associated LPRs can be obtained. Taking the first I-NPLPR B1 for example, the associated LPRs are listed as follows:

Furthermore, their associated CPLPRs are

-

Step 3: For the LPRs obtained for each I-NPLPR, we judge whether their consistency is acceptable from the objective function value of model (M-1). When the objective function value of model (M-1) for an LPR is not zero, we employ model (M-5) to derive the associated acceptably multiplicatively consistent LPR. Taking the LPRs obtained from the I-NPLPR B1 as an example, the objective function values of model (M-1) for the LPRs \(R_{4}^{1}\) and \(R_{5}^{1}\) are not zero, so these two LPRs are unacceptably multiplicatively consistent. We therefore adopt model (M-5) to adjust them and derive the following acceptably multiplicatively consistent LPRs:

Furthermore, the corresponding CPLPRs are

Step 4: Based on the acceptably multiplicatively consistent LPRs for the I-NPLPRs, the expected LPRs are derived as follows:

Furthermore, according to Eq. (33), the weights of the DMs are \(\omega_{{e_{1} }} = \omega_{{e_{2} }} = 0.34,\omega_{{e_{3} }} = 0.32\).
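Steps 2 and 4 rest on two operations: splitting an NPLPR into LPRs that share a common probability (the CPLPRs) and averaging those LPRs into an expected LPR. Algorithm I is interactive and consistency driven, so it is not reproduced here. The Python sketch below substitutes a simple greedy split, assumed purely for illustration: within each upper-triangular entry the LVs are consumed in increasing order of subscript, and each LPR receives the largest probability still available in every entry at once. Because every entry keeps its own probability distribution, the probability-weighted average of the resulting LPRs (taken here as the expected LPR, also an assumption) equals the entry-wise mean subscript. The names `split_common_probability` and `expected_lpr` are hypothetical.

```python
from typing import Dict, List, Tuple

T2 = 10  # 2t for S = {s0, ..., s10}
Upper = Dict[Tuple[int, int], Dict[int, float]]  # (i, j), i < j -> {alpha: probability}


def split_common_probability(upper: Upper, n: int, tol: float = 1e-12):
    """Greedily split a complete NPLPR into (common probability, LPR) pairs.

    Hypothetical stand-in for Algorithm I. Returned LPRs hold subscripts,
    with r_ji = 2t - r_ij and r_ii = t.
    """
    queues = {k: [[a, p] for a, p in sorted(v.items())] for k, v in upper.items()}
    pos = {k: 0 for k in upper}
    parts: List[Tuple[float, List[List[int]]]] = []
    remaining = 1.0
    while remaining > tol:
        # Largest probability available simultaneously in every entry
        step = min(queues[k][pos[k]][1] for k in upper)
        if step <= tol:
            raise ValueError("entries are not normalized PLTSs")
        R = [[T2 // 2] * n for _ in range(n)]
        for (i, j), q in queues.items():
            cur = q[pos[(i, j)]]
            R[i][j], R[j][i] = cur[0], T2 - cur[0]
            cur[1] -= step
            if cur[1] <= tol and pos[(i, j)] < len(q) - 1:
                pos[(i, j)] += 1  # this LV is used up; move to the next one
        parts.append((step, R))
        remaining -= step
    return parts


def expected_lpr(parts, n: int) -> List[List[float]]:
    """Probability-weighted average of the LPR subscripts."""
    E = [[0.0] * n for _ in range(n)]
    for p, R in parts:
        for i in range(n):
            for j in range(n):
                E[i][j] += p * R[i][j]
    return E


upper = {(0, 1): {6: 0.6, 7: 0.4}, (0, 2): {4: 0.3, 5: 0.7}, (1, 2): {3: 1.0}}
parts = split_common_probability(upper, 3)  # three LPRs, probabilities about 0.3, 0.3, 0.4
E = expected_lpr(parts, 3)                  # E[0][1] is about 6.4, E[0][2] about 4.7
```

The greedy order keeps the number of LPRs small (at most one more than the total number of extra LVs across entries), but it ignores consistency entirely, which is exactly what the paper's Algorithm I is designed to take into account.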

Step 5: Following Eq. (35), the comprehensive expected LPR is

Let COI* = 0.95. Based on the acceptably multiplicatively consistent CPLPRs obtained from Steps 1 and 2, the consensus levels of the I-NPLPRs are \(COI(B^{1}) = 0.96\), \(COI(B^{2}) = 0.93\) and \(COI(B^{3}) = 0.91\).
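Steps 5 and 6 together form a feedback loop: aggregate, measure each DM's consensus, adjust the least consensual judgment, and repeat until every level reaches COI* = 0.95. The Python sketch below shows only the control flow, under stated assumptions: Eq. (35) is taken to be a weighted mean of expected-LPR subscripts, the consensus index is a hypothetical one (one minus the mean off-diagonal absolute deviation from the group matrix, scaled by 2t = 10) rather than the paper's CPLPR-based COI, and the adjustment simply moves the worst matrix a fixed fraction toward the group matrix instead of solving model (M-6). The matrices in the usage lines are hypothetical 2 × 2 examples; the weights (0.34, 0.34, 0.32) are those reported in Step 4.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
T2 = 10.0  # 2t for S = {s0, ..., s10}


def group_lpr(mats: Sequence[Matrix], w: Sequence[float]) -> Matrix:
    """Weighted mean of expected-LPR subscripts (assumed form of Eq. (35))."""
    n = len(mats[0])
    return [[sum(wk * M[i][j] for wk, M in zip(w, mats)) for j in range(n)]
            for i in range(n)]


def consensus(M: Matrix, G: Matrix) -> float:
    """Hypothetical index: 1 - mean off-diagonal |m_ij - g_ij| / 2t (not the paper's COI)."""
    n = len(M)
    dev = sum(abs(M[i][j] - G[i][j]) for i in range(n) for j in range(n) if i != j)
    return 1.0 - dev / (n * (n - 1) * T2)


def reach_consensus(mats: Sequence[Matrix], w: Sequence[float], threshold: float = 0.95,
                    step: float = 0.3, max_rounds: int = 50) -> Tuple[List[Matrix], int]:
    """Adjust only the least consensual matrix per round until all reach the threshold.

    The convex move toward the group matrix is a stand-in for model (M-6):
    it preserves reciprocity but ignores the consistency constraints.
    """
    mats = [[row[:] for row in M] for M in mats]  # work on copies
    for rounds in range(max_rounds + 1):
        G = group_lpr(mats, w)
        levels = [consensus(M, G) for M in mats]
        worst = min(range(len(mats)), key=levels.__getitem__)
        if levels[worst] >= threshold:
            return mats, rounds  # rounds = number of adjustments made
        n = len(G)
        mats[worst] = [[(1 - step) * mats[worst][i][j] + step * G[i][j] for j in range(n)]
                       for i in range(n)]
    raise RuntimeError("threshold not reached within max_rounds")


M1 = [[5.0, 6.0], [4.0, 5.0]]
M2 = [[5.0, 6.0], [4.0, 5.0]]
M3 = [[5.0, 2.0], [8.0, 5.0]]
adjusted, rounds = reach_consensus([M1, M2, M3], (0.34, 0.34, 0.32))
```

As in Step 6, only one matrix is changed per round, which keeps the other DMs' judgments intact at the cost of more iterations.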

Step 6: Because \(COI(B^{3} ) = \min_{\theta = 1}^{3} COI(B^{\theta } )\) and \(COI\left( {R_{1}^{3} } \right) = \min_{h = 1}^{6} COI\left( {R_{h}^{3} } \right) = 0.89\), we increase the consensus level of the LPR \(R_{1}^{3}\) by model (M-6) and get the following adjusted LPR

Furthermore, the corresponding CPLPR is \(R_{1}^{3,(1)}(0.3) = \begin{pmatrix} s_{5}(0.3) & s_{6}(0.3) & s_{5}(0.3) & s_{4}(0.3) \\ s_{4}(0.3) & s_{5}(0.3) & s_{4}(0.3) & s_{3}(0.3) \\ s_{5}(0.3) & s_{6}(0.3) & s_{5}(0.3) & s_{4}(0.3) \\ s_{6}(0.3) & s_{7}(0.3) & s_{6}(0.3) & s_{5}(0.3) \end{pmatrix}\). For the adjusted CPLPR \(R_{1}^{3,(1)}(0.3)\), the corresponding expected LPR is \(E(B^{3,(1)}) = \begin{pmatrix} s_{5} & s_{5.4} & s_{3.85} & s_{3.22} \\ s_{4.6} & s_{5} & s_{3.46} & s_{2.85} \\ s_{6.15} & s_{6.54} & s_{5} & s_{3.98} \\ s_{6.78} & s_{7.15} & s_{6.02} & s_{5} \end{pmatrix}\). Again using Eq. (35), the comprehensive expected LPR is \(E(B^{(1)}) = \begin{pmatrix} s_{5} & s_{4.55} & s_{5.47} & s_{3.68} \\ s_{5.45} & s_{5} & s_{5.87} & s_{4} \\ s_{4.53} & s_{4.13} & s_{5} & s_{3.02} \\ s_{6.32} & s_{6} & s_{6.98} & s_{5} \end{pmatrix}\). Moreover, the consensus levels of the I-NPLPRs are \(COI(B^{1}) = 0.97\), \(COI(B^{2}) = 0.94\) and \(COI(B^{3,(1)}) = 0.93\).

Repeating this process six times, we obtain the expected LPR \(E(B^{3,(6)}) = \begin{pmatrix} s_{5} & s_{5.3} & s_{4.7} & s_{3.22} \\ s_{4.7} & s_{5} & s_{4.39} & s_{2.94} \\ s_{5.3} & s_{5.61} & s_{5} & s_{3.22} \\ s_{6.78} & s_{7.06} & s_{6.78} & s_{5} \end{pmatrix}\) and the comprehensive expected LPR \(E(B^{(6)}) = \begin{pmatrix} s_{5} & s_{4.55} & s_{5.75} & s_{3.68} \\ s_{5.45} & s_{5} & s_{6.14} & s_{4.01} \\ s_{4.25} & s_{3.86} & s_{5} & s_{2.8} \\ s_{6.32} & s_{5.99} & s_{7.2} & s_{5} \end{pmatrix}\). The consensus levels of the I-NPLPRs are then \(COI(B^{1}) = 0.97\), \(COI(B^{2}) = 0.95\) and \(COI(B^{3,(6)}) = 0.95\), all of which reach COI* = 0.95.
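Model (M-1) is not reproduced in this section, but the property it tests can be illustrated. Assuming that multiplicative consistency of an LPR is the multiplicative transitivity condition of Tanino (1984) transferred to LV subscripts, r_ij r_jk r_ki = r_ik r_kj r_ji for all i, j, k, the Python sketch below computes the largest log-deviation from that condition over all triples. It is zero exactly when the condition holds; it is an illustrative measure only, not the MCI compared with MCI* = 0.95. Applied to the subscripts of the CPLPR R_1^{3,(1)}(0.3) reported in Step 6, it shows that this matrix is close to, but not exactly, multiplicatively consistent. The function name is hypothetical.

```python
import math
from itertools import permutations
from typing import List


def max_triple_deviation(R: List[List[float]]) -> float:
    """Largest |ln(r_ij r_jk r_ki) - ln(r_ik r_kj r_ji)| over distinct i, j, k.

    R holds LV subscripts, all positive so that the logarithms exist.
    """
    n = len(R)
    if any(R[i][j] <= 0 for i in range(n) for j in range(n)):
        raise ValueError("subscripts must be positive for the log form")
    worst = 0.0
    for i, j, k in permutations(range(n), 3):
        lhs = math.log(R[i][j]) + math.log(R[j][k]) + math.log(R[k][i])
        rhs = math.log(R[i][k]) + math.log(R[k][j]) + math.log(R[j][i])
        worst = max(worst, abs(lhs - rhs))
    return worst


# Subscripts of the CPLPR R_1^{3,(1)}(0.3) from Step 6
R = [[5, 6, 5, 4],
     [4, 5, 4, 3],
     [5, 6, 5, 4],
     [6, 7, 6, 5]]
dev = max_triple_deviation(R)
# Worst triple, e.g. (x1, x2, x4): 6*3*6 = 108 versus 4*7*4 = 112, so dev = ln(112/108)
```

For comparison, any matrix of the form r_ij = 10·w_i/(w_i + w_j) with positive weights w gives a deviation of zero up to rounding.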

Step 7: For each LPR, we calculate the priority linguistic value of each alternative. Taking the LPRs obtained from Steps 1 and 2 for the I-NPLPR B1 as an example, the priority linguistic value vectors are

Furthermore, the expected priority linguistic value vectors are \(s^{1} = (s_{2.18}, s_{2.95}, s_{1.30}, s_{3.37})\), \(s^{2} = (s_{2.00}, s_{3.24}, s_{0.93}, s_{3.83})\) and \(s^{3} = (s_{1.97}, s_{1.73}, s_{2.07}, s_{4.23})\).
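This section does not state how a priority linguistic value is computed from an LPR. As a hedged illustration only, the Python sketch below uses one standard way of extracting priorities from a multiplicatively consistent relation: if r_ij = 2t·w_i/(w_i + w_j), then r_ij / r_ji = w_i / w_j, so the normalized row geometric means of these ratios recover w, and scaling by 2t = 10 gives subscripts on S. This is an assumed method, not the paper's model, and it is not claimed to reproduce s^1, s^2 or s^3; the weights in the usage lines are hypothetical.

```python
import math
from typing import List

T2 = 10.0  # 2t for S = {s0, ..., s10}


def priority_subscripts(R: List[List[float]]) -> List[float]:
    """Assumed method: row geometric means of r_ij / r_ji, normalized, scaled by 2t."""
    n = len(R)
    g = [math.prod(R[i][j] / R[j][i] for j in range(n)) ** (1.0 / n) for i in range(n)]
    total = sum(g)
    return [T2 * x / total for x in g]


# A multiplicatively consistent LPR built from hypothetical weights w
w = [0.2, 0.3, 0.1, 0.4]
R = [[T2 * wi / (wi + wj) for wj in w] for wi in w]
print([round(x, 6) for x in priority_subscripts(R)])  # [2.0, 3.0, 1.0, 4.0]
```

For an LPR that is only acceptably consistent, the same computation still returns a vector, but it is then an approximation whose quality depends on how far the matrix is from consistency.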

Step 8: Based on the expected priority linguistic value vectors, the comprehensive expected priority linguistic value vector is \(s = (s_{2.05}, s_{2.57}, s_{1.35}, s_{3.86})\). Thus, the ranking is x4 \(\succ\) x2 \(\succ\) x1 \(\succ\) x3; namely, the fourth production process is the most suitable choice.
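The final ranking only orders the subscripts of the comprehensive expected priority linguistic value vector, since s_α ≻ s_β whenever α > β. A minimal Python check using the values reported in Step 8:

```python
# Subscripts of s = (s_2.05, s_2.57, s_1.35, s_3.86) reported in Step 8
scores = {"x1": 2.05, "x2": 2.57, "x3": 1.35, "x4": 3.86}
ranking = sorted(scores, key=scores.get, reverse=True)
print(" > ".join(ranking))  # x4 > x2 > x1 > x3
```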

Remark 7.1

There are only two methods (Gao et al., 2019b; Song & Hu, 2019) for decision making with PLPRs based on multiplicative consistency. However, neither of them studies GDM with PLPRs, and neither discusses InPLPRs. Therefore, none of the previous methods can be applied to this example, and the numerical comparison is omitted.

To show the differences between the new method and the two previous multiplicative consistency based methods (Gao et al., 2019b; Song & Hu, 2019), we further compare them in principle.

(i) Gao et al. (2019b) give a decision-making method with PLPRs that uses a score-based multiplicative consistency concept. The main issue with this type of consistency concept is that it causes information loss. Note that the score-based LPR may not coincide with any of the possible LPRs constructed from the LVs in the PLPR; for instance, an entry {s6(0.5), s8(0.5)} has the score s7, which is neither of the LVs actually offered. Therefore, it is unreasonable to employ this LPR to define the consistency of PLPRs. Just as the expected value of a random variable cannot reflect its randomness, the score-based multiplicative consistency concept cannot reflect the hesitancy of the DMs. For a given PLPR, Gao et al.'s method obtains only one exact numerical priority weight vector, which is also unreasonable, because this numerical weight vector reflects neither the qualitative recognitions nor the hesitancy of the DMs. Furthermore, Gao et al.'s method for improving the consistency level needs to adjust all LVs in a PLTS in the same proportion. However, according to model (22) in Gao et al. (2019b), we cannot determine which LV causes the inconsistency. Neither decision making with InPLPRs nor GDM with PLPRs is studied in Gao et al. (2019b).
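The point that a score-based LPR need not be one of the possible LPRs can be checked on a toy example. The Python sketch below uses a hypothetical 3 × 3 NPLPR and assumes that the score of a normalized PLTS is its probability-weighted mean subscript (the usual score of Pang et al. (2016) when the probabilities sum to one). It enumerates every LPR obtained by picking one LV in each upper-triangular entry and tests whether the score-based LPR is among them. The function names are hypothetical.

```python
from itertools import product
from typing import Dict, List, Tuple

T2 = 10  # 2t for S = {s0, ..., s10}
Upper = Dict[Tuple[int, int], Dict[int, float]]  # (i, j), i < j -> {alpha: probability}


def score_lpr(upper: Upper, n: int) -> List[List[float]]:
    """LPR of PLTS scores: each entry is the probability-weighted mean subscript."""
    R = [[T2 / 2] * n for _ in range(n)]
    for (i, j), plts in upper.items():
        R[i][j] = sum(a * p for a, p in plts.items())
        R[j][i] = T2 - R[i][j]
    return R


def possible_lprs(upper: Upper, n: int) -> List[List[List[float]]]:
    """All LPRs obtained by choosing one LV in every upper-triangular entry."""
    keys = sorted(upper)
    result = []
    for choice in product(*(sorted(upper[k]) for k in keys)):
        R = [[T2 / 2] * n for _ in range(n)]
        for (i, j), a in zip(keys, choice):
            R[i][j], R[j][i] = float(a), float(T2 - a)
        result.append(R)
    return result


upper = {(0, 1): {6: 0.5, 8: 0.5}, (0, 2): {7: 1.0}, (1, 2): {5: 0.5, 6: 0.5}}
S = score_lpr(upper, 3)      # entry (0, 1) is 7.0, not an LV offered for that entry
P = possible_lprs(upper, 3)  # 2 x 1 x 2 = 4 possible LPRs
print(S in P)                # False
```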

(ii) Song and Hu (2019) also study decision making with PLPRs based on multiplicative consistency. Their multiplicative consistency concept and their method for improving the consistency level are the same as those offered by Gao et al. (2019b). Thus, Song and Hu's method has the same issues as Gao et al.'s method (Gao et al., 2019b). Furthermore, Gao et al. (2019b) restrict their study to decision making with complete PLPRs and disregard decision making with InPLPRs and GDM with PLPRs.

It should be noted that the multiplicative consistency concepts for PLPRs in Gao et al. (2019b) and Song and Hu (2019) are based on NPLPRs. Therefore, we reviewed them directly in terms of NPLPRs in Definitions 2.5 and 2.6.

(iii) The new method avoids the issues of the previous multiplicative consistency concepts and of the previous methods for improving the consistency level. Furthermore, it addresses the determination of missing information in InNPLPRs and GDM with NPLPRs, following the acceptably multiplicative consistency and consensus analysis.

8 Conclusion

Since Pang et al. (2016) first introduced PLTSs, decision making with probability linguistic information has received much attention from scholars. However, most research focuses on decision making with probability linguistic matrices, and studies on decision making with PLPRs are relatively few. At present, we find only two references (Gao et al., 2019b; Song & Hu, 2019) on decision making with NPLPRs based on multiplicative consistency. However, these two methods are insufficient for coping with NPLPRs; in particular, they are ineffective for unacceptably multiplicatively consistent NPLPRs and InNPLPRs. Considering this, this paper introduces a new interactive algorithm for GDM with NPLPRs that is based on acceptably multiplicative consistency and consensus analysis. The new method fully considers the NPLTSs offered by the DMs. To show the application of the new algorithm, we employ it to evaluate production processes of new energy vehicles. When the consensus requirement is not reached, we adopt the built model to adjust the LPR with the lowest consensus level, one at a time. Although this procedure retains more of the original information offered by the DMs, it increases the number of interactions. Similar to the models for improving the consistency level, we could instead simultaneously adjust all LPRs whose consensus levels are below the given threshold. All main procedures of the new method are based on the built optimization models, which require the help of computers and associated software.

Owing to the powerful information expression of PLTSs, the new method can cope with more complex decision-making problems than previous preference relation based linguistic decision-making methods. The new method can be extended to other types of preference relations, such as probability hesitant fuzzy preference relations, probability multiplicative hesitant fuzzy preference relations and probability multiplicative linguistic preference relations. In addition, its application can be studied similarly in other fields, including the selection of PPP models, the evaluation of project management and the assessment of closed-loop supply chain recovery models.

References

Alonso, S., Cabrerizo, F. J., Chiclana, F., Herrera, F., & Herrera-Viedma, E. (2009). Group decision making with incomplete fuzzy linguistic preference relations. International Journal of Intelligent Systems, 24, 201–222.

Alonso, S., Chiclana, F., Herrera, F., Herrera-Viedma, E., Alcala-Fdez, J., & Porcel, C. (2008). A consistency-based procedure to estimate missing pairwise preference values. International Journal of Intelligent Systems, 23, 155–175.

Dong, Y. C., Hong, W. C., & Xu, Y. (2013). Measuring consistency of linguistic preference relations: A 2-tuple linguistic approach. Soft Computing, 17, 2117–2130.

Dong, Y. C., Xu, Y. F., & Li, H. Y. (2008). On consistency measures of linguistic preference relations. European Journal of Operational Research, 189, 430–444.

Gao, J., Xu, Z. S., Liang, Z. L., & Liao, H. C. (2019a). Expected consistency-based emergency decision making with incomplete probabilistic linguistic preference relations. Knowledge-Based Systems, 176, 15–28.

Gao, J., Xu, Z. S., Ren, P. J., & Liao, H. C. (2019b). An emergency decision making method based on the multiplicative consistency of probabilistic linguistic preference relations. International Journal of Machine Learning and Cybernetics, 10, 1613–1629.

Herrera, F., & Herrera-Viedma, E. (2000). Choice functions and mechanisms for linguistic preference relations. European Journal of Operational Research, 120, 144–161.

Herrera, F., Herrera-Viedma, E., & Verdegay, J. L. (1996). A model of consensus in group decision making under linguistic assessments. Fuzzy Sets and Systems, 78, 73–87.

Herrera-Viedma, E., Martinez, L., Mata, F., & Chiclana, F. (2005). A consensus support system model for group decision-making problems with multigranular linguistic preference relations. IEEE Transactions on Fuzzy Systems, 13, 644–658.

Liao, H. C., Jiang, L. S., Lev, B., & Fujita, H. (2019). Novel operations of PLTSs based on the disparity degrees of linguistic terms and their use in designing the probabilistic linguistic ELECTRE III method. Applied Soft Computing, 80, 450–464.

Liu, P. D., & Li, Y. (2019). An extended MULTIMOORA method for probabilistic linguistic multi-criteria group decision-making based on prospect theory. Computers & Industrial Engineering, 136, 528–545.

Meng, F. Y., Tang, J., & Zhang, Y. L. (2019). Programming model-based group decision making with multiplicative linguistic intuitionistic fuzzy preference relations. Computers & Industrial Engineering, 136, 212–224.

Pang, Q., Wang, H., & Xu, Z. S. (2016). Probabilistic linguistic term sets in multi-attribute group decision making. Information Sciences, 369, 128–143.

Rodriguez, R. M., Martinez, L., & Herrera, F. (2011). Hesitant fuzzy linguistic term sets for decision making. IEEE Transactions on Fuzzy Systems, 20, 109–119.

Song, Y. M., & Hu, J. (2019). Large-scale group decision making with multiple stakeholders based on probabilistic linguistic preference relation. Applied Soft Computing, 80, 712–722.

Tang, X. A., Zhang, Q., Peng, Z. L., Pedrycz, W., & Yang, S. L. (2020). Distribution linguistic preference relations with incomplete symbolic proportions for group decision making. Applied Soft Computing, 88, 106005.

Tanino, T. (1984). Fuzzy preference orderings in group decision making. Fuzzy Sets and Systems, 12, 117–131.

Wu, X. L., & Liao, H. C. (2018). An approach to quality function deployment based on probabilistic linguistic term sets and ORESTE method for multi-expert multi-criteria decision making. Information Fusion, 43, 13–26.

Xia, M. M., Xu, Z. S., & Wang, Z. (2014). Multiplicative consistency-based decision support system for incomplete linguistic preference relations. International Journal of Systems Science, 45, 625–636.

Xu, Z. S. (2004a). EOWA, EOWG operators for aggregating linguistic labels based on linguistic preference relations. International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, 12, 791–810.

Xu, Z. S. (2004b). Uncertain linguistic aggregation operators based approach to multiple attribute group decision making under uncertain linguistic environment. Information Sciences, 168, 171–184.

Zadeh, L. A. (1975). The concept of a linguistic variable, its application to approximate reasoning-part I. Information Sciences, 8, 199–249.

Zhang, G. Q., Dong, Y. C., & Xu, Y. F. (2014). Consistency and consensus measures for linguistic preference relations based on distribution assessments. Information Fusion, 17, 46–55.

Zhang, Y. X., Xu, Z. S., Wang, H., & Liao, H. C. (2016). Consistency-based risk assessment with probabilistic linguistic preference relation. Applied Soft Computing, 49, 817–833.

Zhu, B., & Xu, Z. S. (2014). Consistency measures for hesitant fuzzy linguistic preference relations. IEEE Transactions on Fuzzy Systems, 22, 35–45.

Acknowledgements

This work was supported by the Startup Foundation for Introducing Talent of NUIST (No. 2020r001), the National Natural Science Foundation of China (No. 71571192), and the Beijing Intelligent Logistics System Collaborative Innovation Center (No. 2019KF-09).
