Abstract

Navigation in complex and unknown environments is a major challenge for elderly blind people. Unfortunately, conventional navigation aids such as white canes and guide dogs provide only limited assistance to blind people with walking impairments, as they can hardly be combined with the walker these users require for walking assistance. Additionally, such navigation aids are constrained to the local vicinity only. We believe that technologies developed in the field of robotics have the potential to assist blind people with walking disabilities in complex navigation tasks, as they can provide information about obstacles and reason about both global and local aspects of the environment. The contribution of this article is a smart walker that navigates blind users safely by leveraging recent developments in robotics. Our walker can support the user in two ways, namely by providing information about the vicinity to avoid obstacles and by guiding the user to the designated target location. It includes vibro-tactile user interfaces and a controller that takes into account human motion behavior obtained from a user study. In extensive qualitative and quantitative experiments that also involved blind and age-matched participants, we demonstrate that our smart walker safely navigates users with limited vision.

1 Introduction

According to a study of the World Health Organization, 81.7 % of all 39 million blind people worldwide are at least 50 years old (Pascolini and Mariotti 2011). Elderly blind people in particular are at an inherent risk of walking disabilities. Also, genetic disorders such as Usher syndrome cause visual impairments that often coincide with hearing loss and balance difficulties (Smith et al. 1994). Most navigation aids for blind people are not designed for users with walking impairments and provide only limited physical assistance. For example, a conventional technique for a blind person who depends on a walker is to stop regularly and probe the environment with a cane. This is tedious, slow and stigmatizing, and it substantially restricts the radius of operation.

Users require different kinds of guidance depending on the task at hand and their preferences. For example, blind users navigating in well-known environments may only need spatial information about their vicinity to avoid colliding with nearby obstacles, since they may already know how to reach the desired destination. In contrast, a blind user negotiating an unknown environment such as an exhibition can benefit from global knowledge about the environment and guidance through it. In such a case, the navigation system can take over certain aspects and guide the user directly to the desired goal. In some situations, even a combination of both approaches might be beneficial for the user. For example, in crowded environments the navigation system can maintain the global plan while the user is responsible for local navigation decisions.

In this paper, we present a navigation system based on a smart walker that provides assistance for blind people with walking disabilities. It can either be used in the spatial information setting for sensing local obstacles or in the guided navigation setting for reaching the desired goal. The walker uses vibro-tactile signals to communicate obstacle information or navigation commands to the user. We rely on haptic feedback instead of auditory signals to allow the blind users to use their hearing for other purposes such as communication and orientation.

Our approach applies robotic techniques to give navigation guidance to human users. Compared to typical robotic use cases, the interaction between the navigation modules and the user requires additional considerations. For example, humans take longer to react: there is a delay between perceiving a navigation command in the form of a vibration signal and executing the resulting action. Also, humans follow commands less accurately than robots, and in particular they perform turns less accurately.

This article is a substantial extension of previous work (Reyes Adame et al. 2015; Wachaja et al. 2015). We outline the overall system of our smart walker and explain the different feedback modes and navigation settings. Furthermore, we derive a model of human motion with the walker from recorded trajectories and use this model to design a controller that considers human characteristics. Finally, we evaluate our controller and both navigation settings in combination with different feedback devices in extensive user studies, which also include blind and elderly participants. The results demonstrate that our smart walker is suitable for safely and reliably providing both spatial information and navigational guidance to blind users with walking disabilities. By considering human motion behavior, our prediction-based controller guides the user to the desired goal in less time and with a shorter travel distance.

2 The smart walker

Our smart walker is built by externally mounting sensors on an off-the-shelf walker. This ensures that the user can quickly adapt to the new system and that the ergonomics of the walker are maintained. We retrofit the walker with laser scanners for perception, a notebook for data processing, and one vibration motor attached to each handle for tactile feedback. The sensors and the data processing hardware are installed as a complete unit that can be easily mounted on the walker, as shown in Fig. 1. The walker is also connected to a vibro-tactile belt, illustrated in Fig. 2. The belt contains five vibration motors that are evenly distributed around the waist of the user in an angular range from \(-90^{\circ }\) to \(+90^{\circ }\). Furthermore, it incorporates a battery for power supply and a Bluetooth-enabled ArduinoBT microcontroller that receives data from the walker and controls the vibration motors. The belt weighs 385 g in total, so it can easily be worn for long periods.

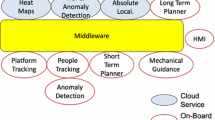

Hardware (dark blue) and software (light blue) architecture of the smart walker. Our system provides two basic settings. In the spatial information setting, the walker detects positive and negative obstacles in the vicinity of the user and relays them via vibration motors in the handles or a vibration belt. The guided navigation setting navigates the user to a desired goal, taking into account a map and the detected obstacles. Vibration signals from the belt or from the handles guide the user along the planned path. A third mode for guided navigation combines both feedback devices. In this mode, our walker guides the user along a path that is planned based on information from the static map only. Furthermore, the user perceives information about local dynamic obstacles through the vibration belt. In this way, the user is able to follow a global plan created by the walker but still has the freedom to make individual local navigation decisions, such as how to avoid dynamic obstacles.

The walker has two caster wheels at the front that can rotate freely in all directions. The two back wheels are mounted in parallel and cannot rotate around the yaw axis. Therefore, we can model the kinematics of our walker as a differential drive. As long as the wheels do not slip, the instantaneous center of rotation of the walker always lies on the line through the contact points of both back wheels with the ground. We define the reference frame with its origin midway between these contact points, the x-axis pointing forward and the z-axis perpendicular to the ground.

An overview of our system architecture is presented in Fig. 3. Our software is based on the ROS framework (Quigley et al. 2009). We use two 2D laser range finders for perception and for estimating the ego-motion. The first laser scanner, a Hokuyo UTM-X002S, is rigidly mounted on the walker and is used to compute the ego-motion by laser scan matching (Censi 2008). Additionally, we create a 3D model of the environment using a second laser scanner, a Hokuyo UTM-30LX, that is continuously tilted up and down by a servo motor.

Our approach leverages terrain classifiers to detect positive and negative obstacles, such as sidewalks and downward stairways, in point clouds. Specifically, we modified the “height-length-density” (HLD) classifier, which determines safe and traversable cells for robots (Morton and Olson 2011). Our modification makes it better suited to human motion with a walker in narrow indoor spaces. Reasoning about the full three-dimensional environment is important, as blind people often have difficulties avoiding head-high obstacles or negative obstacles. Such obstacles are typically hard to detect with standard blind guides, particularly when the user also relies on a walker.

The smart walker can adjust to the individual needs of the user both in terms of the desired mode of guidance and the form of vibro-tactile feedback. It can operate in two basic settings, spatial information and guided navigation. Each setting uses the vibration belt or the vibrating handles for relaying information.

2.1 Setting 1: spatial information

For the first setting, spatial information, the walker detects positive and negative obstacles in the environment and relays them to the user. Similar to navigating with a white cane, our system enables the user to perceive obstacles, but the user is responsible for all navigation decisions. The walker informs the user about all obstacles in its vicinity via the vibrating handles or the vibration belt. Each vibration motor is assigned to the closest obstacle within its angular region of \(45^{\circ }\). These regions are marked for the belt in Fig. 2. The handles provide a coarser spatial resolution of \(180^{\circ }\), as they can only relay whether an obstacle is on the left or the right side of the user. We use pulse-frequency modulation to encode distances in the vibration signal: shorter distances result in a higher repetition rate. A motor vibrates continuously as soon as the obstacle is closer than a critical distance threshold and is turned off completely whenever there is no obstacle within the range of interest, similar to the parking distance control systems of cars. One of the main requirements of blind users was that the obstacle feedback signal be easy to perceive. Therefore, the smart walker does not encode the obstacle type in the signal. We modulate the pulse frequency rather than the vibration intensity for two reasons. First, preliminary experiments indicated that the just-noticeable difference for vibration intensity is comparatively large and that the perceived intensity depends on external circumstances such as the thickness of the clothing worn under the belt. Second, pulse-frequency modulation lets the user clearly recognize obstacles below the critical distance threshold, as continuous vibration is easy to perceive.
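To make the encoding concrete, the following is a minimal Python sketch, not the authors' implementation, of how obstacles could be assigned to belt sectors or handle sides and how a distance could be turned into a pulse period. The numeric thresholds (`RANGE_OF_INTEREST_M`, `CRITICAL_DIST_M`, the period bounds), the linear distance-to-period mapping, the left-positive angle convention and all function names are hypothetical assumptions; the text above fixes only the \(45^{\circ }\) belt sectors, the \(180^{\circ }\) handle regions, continuous vibration below a critical distance and silence outside the range of interest.

```python
import math
from typing import List, Optional, Tuple

# Hypothetical parameters for illustration; the article does not state these values.
RANGE_OF_INTEREST_M = 1.5  # farther obstacles leave the motor switched off
CRITICAL_DIST_M = 0.4      # closer obstacles trigger continuous vibration
MIN_PERIOD_S = 0.15        # pulse period just outside the critical distance
MAX_PERIOD_S = 1.0         # pulse period at the edge of the range of interest

# Belt motors from -90 deg to +90 deg; each covers a 45 deg sector around its centre.
# Assumed frame: x forward, y to the user's left, angles positive to the left.
BELT_CENTRES_DEG = [-90.0, -45.0, 0.0, 45.0, 90.0]
SECTOR_DEG = 45.0


def closest_per_motor(obstacles: List[Tuple[float, float]]) -> List[Optional[float]]:
    """Distance of the closest obstacle in each belt sector (None if the sector is empty)."""
    closest: List[Optional[float]] = [None] * len(BELT_CENTRES_DEG)
    for x, y in obstacles:
        angle = math.degrees(math.atan2(y, x))
        dist = math.hypot(x, y)
        for i, centre in enumerate(BELT_CENTRES_DEG):
            if abs(angle - centre) <= SECTOR_DEG / 2:
                if closest[i] is None or dist < closest[i]:
                    closest[i] = dist
                break  # each obstacle belongs to at most one sector
    return closest


def closest_per_handle(obstacles: List[Tuple[float, float]]):
    """Handles: one 180 deg half-plane per side, left (y >= 0) and right (y < 0)."""
    left = [math.hypot(x, y) for x, y in obstacles if y >= 0]
    right = [math.hypot(x, y) for x, y in obstacles if y < 0]
    return (min(left) if left else None, min(right) if right else None)


def pulse_period(dist: Optional[float]) -> Optional[float]:
    """Pulse-frequency modulation: None = off, 0.0 = continuous, else period in seconds."""
    if dist is None or dist > RANGE_OF_INTEREST_M:
        return None
    if dist <= CRITICAL_DIST_M:
        return 0.0
    # Linear interpolation (an assumption): shorter distance -> shorter period -> higher rate.
    frac = (dist - CRITICAL_DIST_M) / (RANGE_OF_INTEREST_M - CRITICAL_DIST_M)
    return MIN_PERIOD_S + frac * (MAX_PERIOD_S - MIN_PERIOD_S)
```

Keeping the intensity fixed and varying only the period mirrors the design choice argued above: the one signal users must never miss, the critical-distance warning, becomes a continuous vibration.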

2.2 Setting 2: guided navigation

The second setting, guided navigation, guides the user to the desired destination. This setting depends on a global map of the environment. The walker computes a safe path and then sends navigation commands to the user through the vibro-tactile interface. The map is created with a Rao-Blackwellized particle filter (Grisetti et al. 2007). For large buildings such as offices and nursing homes, existing maps and points of interest could be stored on a cloud server, so that the walker can acquire this information autonomously via a Wi-Fi access point. The user could specify target locations via a speech interface. Both the distribution of maps and speech recognition are beyond the scope of this paper, as a wide range of solutions already exists. We use adaptive Monte Carlo localization to estimate the pose of the walker in the map (Fox 2003). As the smart walker is an unmotorized off-the-shelf walker without wheel odometry, we use the output of the laser scan matcher for pose prediction.

The planning module relies on both the occupancy map and the traversability information of the height-length-density classifier. It generates a safe path that guides the user to a desired destination. Figure 4 illustrates an example test course and the corresponding planned paths. The system provides low-level navigation commands to the user in the form of vibro-tactile feedback based on the planned path and the current position of the user.

We have three different interaction modes, namely handles, belt and a combination of both to guide the user on the computed path.

-

Handles The walker plans a path to the goal under consideration of static and dynamic obstacles. It guides the user on the path with navigation commands from the handles.

-

Belt The walker plans a path to the goal under consideration of static and dynamic obstacles. Instead of the handles, it uses the vibro-tactile belt to communicate navigation commands.

-

Handles and belt combined In this mode, our system uses the handles to guide the user along the desired path. In contrast to the first two modes, the planner only incorporates information from the static map. Dynamic obstacles are conveyed by the belt, which operates as described in the spatial information setting.

In the handles mode and the belt mode, the walker handles both local and global navigation, at the cost of reduced user autonomy. In the third mode, the walker still creates a global plan, but the user is responsible for avoiding dynamic obstacles. To support this, the obstacle feedback signals from the vibration belt increase the contextual knowledge of the user. We assume this mode may be preferred by users, as they are more involved in the decision-making process.

The walker guides the user for navigational tasks with four different vibration signals, namely go straight, turn left, turn right and goal reached. Each signal is repeated continuously until it is overwritten by another one. In order to create signals which are intuitive and easily distinguishable, we vary the signal pattern in addition to the location of the signal:

-

Go straight: Pulsed vibration on both motors (handles) or the front motor (belt). After an on-cycle of 100 ms, the motors are turned off for 400 ms.

-

Turn left / right

-

Handles Continuous vibration on the left or right vibration motor.

-

Belt We enable each motor for 250 ms sequentially in a cyclic manner. This results in a clockwise (right) or anticlockwise (left) vibration pattern on the belt that is similar to the SOLO ONCE rotational pattern described by Cosgun et al. (2014).

-

Goal reached All motors of either the belt or the handles are first enabled at 100 % vibration intensity, then at 50 %, and finally turned off. Each step lasts 500 ms.
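The four guidance signals can be written down as timed schedules of motor intensities. The Python sketch below is a hypothetical encoding: the step representation `(duration_ms, {motor: intensity})`, the belt motor indices, the assumed mapping from sweep direction to motor order, and the 500 ms step used to represent continuous handle vibration are illustrative assumptions, while the 100/400 ms, 250 ms and 500 ms timings come from the text.

```python
# Hypothetical encoding of the guidance patterns; a step is (duration_ms, {motor: intensity}).
BELT_ANGLES_DEG = [-90, -45, 0, 45, 90]  # belt motor i sits at BELT_ANGLES_DEG[i]
FRONT = 2                                # index of the front belt motor
# Assumption: positive angles are on the user's left, so a clockwise sweep (seen from
# above) runs from the leftmost motor (index 4) to the rightmost one (index 0).


def go_straight(device):
    motors = ["left", "right"] if device == "handles" else [FRONT]
    return [(100, {m: 1.0 for m in motors}), (400, {})]  # 100 ms on, 400 ms off


def turn(device, direction):
    if device == "handles":
        # Continuous vibration: a single "on" step that is simply repeated.
        return [(500, {direction: 1.0})]
    order = [4, 3, 2, 1, 0] if direction == "right" else [0, 1, 2, 3, 4]
    return [(250, {m: 1.0}) for m in order]  # each motor on for 250 ms in turn


def goal_reached(device):
    motors = ["left", "right"] if device == "handles" else list(range(len(BELT_ANGLES_DEG)))
    return [(500, {m: 1.0 for m in motors}), (500, {m: 0.5 for m in motors}), (500, {})]


def cycle_ms(pattern):
    return sum(duration for duration, _ in pattern)


def state_at(pattern, t_ms):
    """Motor intensities at time t_ms for a pattern that is repeated cyclically."""
    t = t_ms % cycle_ms(pattern)
    for duration, motors in pattern:
        if t < duration:
            return motors
        t -= duration
```

Because the command signals are repeated until overwritten, a driver loop would only need `state_at` with the time elapsed since the current command was issued.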

2.3 Prediction-based controller

Designing the controller for the guided navigation setting is challenging, as humans can only sense and interpret a limited number of commands and react with a delay. An important prerequisite for guiding users along a path is to understand how humans react to navigation signals. In particular, we identified the following important parameters for guiding humans with a walker along a path:

-

Reaction time \((t_{R})\) Time between the moment the system sends a navigation command and the moment the user executes it.

-

Rotational radius (r) Radius of curvature of the trajectory resulting from a turn command.

-

Velocities \((v_{ straight }, v_{ rot }):\) Velocities of the user while moving straight and while turning, respectively.

Carrot planner for controlling the walker without prediction (left) and with prediction (right). The controller computes a reference point on the desired trajectory at a fixed lookahead distance L from the walker. The navigation commands are sent to the user based on the angular deviation \(\alpha \) between the x-axis of the walker (red) and the line between the reference frame and the carrot position. The prediction-based controller considers the delay in the reaction time of the user. It computes the navigation command based on the predicted pose (box with dashed lines) (Color figure online)

The exact values of these parameters are obtained from a user study explained in the experimental section.

Our controller is based on a modified carrot-follower approach. We extend the approach described by Hogg et al. (2002) to incorporate the parameters described above. Additionally, our modification ensures that the path is not followed in the wrong direction if the walker is oriented the wrong way. The carrot-following algorithm determines a waypoint on the path at a fixed lookahead distance L from the walker. This waypoint is called the carrot position. It is calculated by intersecting all path segments with a circle of radius L centered at the reference frame (see also Fig. 5). For each intersection point, we examine the direction vector of the path at that point. The intersection point is considered a potential carrot position only if this direction vector points out of the circle, which ensures that the user does not follow the path in the wrong direction. A new carrot position is only accepted if its distance from the old carrot position, measured along the path, is below a threshold. As a result, the user can follow even a self-intersecting path all the way from beginning to end without the controller choosing a wrong carrot position at the intersection of two path segments.
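A minimal Python sketch of the carrot selection described above, assuming the path is a polyline of 2D points and the walker pose is known in the map frame. The function names, the `max_jump` parameter and the tie-breaking rule (choose the accepted candidate closest to the old carrot along the path) are assumptions for illustration, not details given in the article.

```python
import math


def carrot_point(path, pos, lookahead, prev_arc, max_jump):
    """Return ((x, y), arc_length) of the new carrot, or None if no candidate is accepted.

    path: list of (x, y) vertices; pos: (x, y) of the walker reference frame;
    prev_arc: arc length of the previous carrot; max_jump: allowed change in arc length.
    """
    cx, cy = pos
    candidates = []
    arc_start = 0.0
    for (x0, y0), (x1, y1) in zip(path[:-1], path[1:]):
        dx, dy = x1 - x0, y1 - y0
        seg_len = math.hypot(dx, dy)
        if seg_len == 0.0:
            continue
        # Solve |p0 + s*d - c|^2 = L^2 for s in [0, 1].
        fx, fy = x0 - cx, y0 - cy
        a = dx * dx + dy * dy
        b = 2.0 * (fx * dx + fy * dy)
        c = fx * fx + fy * fy - lookahead ** 2
        disc = b * b - 4.0 * a * c
        if disc >= 0.0:
            root = math.sqrt(disc)
            for s in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
                if 0.0 <= s <= 1.0:
                    qx, qy = x0 + s * dx, y0 + s * dy
                    # Keep the point only if the path direction leaves the circle here.
                    if dx * (qx - cx) + dy * (qy - cy) > 0.0:
                        candidates.append(((qx, qy), arc_start + s * seg_len))
        arc_start += seg_len
    # Continuity check: the carrot may not jump too far along the path.
    accepted = [cand for cand in candidates if abs(cand[1] - prev_arc) < max_jump]
    if not accepted:
        return None  # the caller could keep the old carrot in this case
    return min(accepted, key=lambda cand: abs(cand[1] - prev_arc))


def heading_error(pose, carrot):
    """Angle alpha between the walker x-axis and the line to the carrot, wrapped to [-pi, pi]."""
    x, y, theta = pose
    a = math.atan2(carrot[1] - y, carrot[0] - x) - theta
    return math.atan2(math.sin(a), math.cos(a))
```

On a path that crosses itself, both branches can produce an outward intersection, but only the one whose arc length is close to the previous carrot passes the continuity check, so the carrot stays on the part of the path the user is currently following.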

Four configurations of the test course (left) for the evaluation of the spatial information setting with elderly people. The number of collisions per run (middle) and the travel time (right), both averaged over two runs. Nearly all runs resulted in collisions in narrow passages but, with two exceptions, the participants could reach the goal. We had to cancel two runs with the belt because the test participants got disoriented and walked back towards the starting point. In these cases, the data refer only to the remaining completed run with the belt, and the corresponding bar is marked with an asterisk. In terms of both the number of collisions and travel time, neither feedback mode is clearly preferable

Our controller monitors the angular difference \(\alpha \) between the roll axis (x-axis) of the walker and the line between the reference frame and the carrot position. It sends a turn command to the user as soon as \(|\alpha | > \alpha _{ thresh }\). We introduce a hysteresis value \(\alpha _{ hyst }\) to avoid oscillation when \(\alpha \approx \alpha _{ thresh }\): after a turn command has been sent, the controller switches back to the go-straight command as soon as \(|\alpha | \le \alpha _{ thresh } - \alpha _{ hyst }\).
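The hysteresis rule can be sketched as a small state machine in Python. The sign convention (positive \(\alpha \) means the carrot lies to the left), the command names and the helper `command_sequence` are assumptions for illustration; the default thresholds are the \(14^{\circ }\) and \(5^{\circ }\) values reported in Sect. 3.3.

```python
import math


def next_command(alpha, prev_cmd, alpha_thresh, alpha_hyst):
    """Return "straight", "left" or "right" given the current angle alpha (radians).

    Assumed sign convention: alpha > 0 means the carrot lies to the left of the walker.
    """
    if prev_cmd == "straight":
        if alpha > alpha_thresh:
            return "left"
        if alpha < -alpha_thresh:
            return "right"
        return "straight"
    # A turn command is active: keep it until |alpha| falls to alpha_thresh - alpha_hyst.
    if abs(alpha) <= alpha_thresh - alpha_hyst:
        return "straight"
    return prev_cmd


def command_sequence(alphas_deg, thresh_deg=14.0, hyst_deg=5.0):
    """Run the controller over a sequence of angles given in degrees."""
    cmd, out = "straight", []
    for a in alphas_deg:
        cmd = next_command(math.radians(a), cmd, math.radians(thresh_deg), math.radians(hyst_deg))
        out.append(cmd)
    return out
```

For example, an angle of \(12^{\circ }\) produces no turn command when the user is going straight, but it keeps an already active turn command alive, since it is still above \(14^{\circ } - 5^{\circ } = 9^{\circ }\).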

We state the following three requirements to compute the lookahead distance L and the angular threshold \(\alpha _{ thresh }\):

1. The walker driving on a straight line parallel to a straight path segment commands the user to turn as soon as the distance between the two lines exceeds a maximum distance \(d_{ max }\):

$$\begin{aligned} L\sin \left( \alpha _{ thresh }\right) \le d_{ max } \end{aligned}$$ (1)

2. We consider a situation in which the user is walking along a straight path segment towards a right-angled corner of the path. Assuming that the user reacts without delay and turns the walker with a fixed curve radius r, the turn command has to be sent as soon as the distance between the user and the corner of the path equals r:

$$\begin{aligned} L \cos \left( \alpha _{ thresh }\right) = r \end{aligned}$$ (2)

3. The lookahead distance should be chosen as large as possible within the first two constraints in order to avoid oscillation.

From these requirements we can derive suitable values for the lookahead distance L and the angular threshold \(\alpha _{ thresh }\):
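A derivation consistent with these requirements, under the reading that requirement 3 turns (1), evaluated at \(\alpha = \alpha _{ thresh }\), into an equality: squaring and adding it to (2) yields L, and dividing it by (2) yields the threshold,

$$\begin{aligned} L&= \sqrt{r^{2} + d_{ max }^{2}}, \\ \alpha _{ thresh }&= \arctan \left( \frac{d_{ max }}{r}\right) . \end{aligned}$$

With \(d_{ max } = 0.09\) m and \(r = 0.36\) m, the values used in Sect. 3.3, the second expression gives \(\alpha _{ thresh } = \arctan (0.25) \approx 14.0^{\circ }\).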

In its basic version, the carrot planner does not consider the reaction time \(t_R\) of the user. As explained earlier, this delay can cause significant guidance errors. Therefore, we extend our carrot planner with a prediction module that predicts the pose of the walker at time \(t_0 + t_R\), where \(t_0\) is the current point in time. This technique is commonly used to handle delays in systems; for example, Engel et al. (2012) use it to handle delays caused by off-board computation. We use a constant velocity model together with the navigation commands sent in the time range \([t_{0}-t_{R}; t_{0}]\) to predict the pose of the walker that is relevant for the current navigation decision (see Fig. 5).
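The prediction step can be sketched in Python as a forward simulation of the differential-drive model described in Sect. 2. This is an illustration under stated assumptions, not the authors' code: the function names are hypothetical, the user is assumed to execute each command exactly \(t_R\) after it was sent, and the constant velocity model is interpreted as walking at \(v_{ straight }\) during "straight" and along an arc of radius r at \(v_{ rot }\) during "left"/"right".

```python
import math


def active_command(commands, t):
    """Last command sent at or before time t; commands is a time-sorted list of (t_sent, cmd).

    Falls back to the first command if none was sent before t.
    """
    cmd = commands[0][1]
    for t_sent, c in commands:
        if t_sent > t:
            break
        cmd = c
    return cmd


def predict_pose(pose, commands, t0, t_r, v_straight, v_rot, radius, dt=0.01):
    """Predict the walker pose (x, y, theta) at t0 + t_r from the pose at t0.

    Assumptions for this sketch: pure reaction delay t_r, speed v_straight while going
    straight, and an arc of the given radius at speed v_rot while turning.
    """
    x, y, theta = pose
    steps = max(1, int(round(t_r / dt)))
    h = t_r / steps
    for k in range(steps):
        t_exec = t0 + (k + 0.5) * h
        # Motion at t_exec follows the command sent at t_exec - t_r, inside [t0 - t_r, t0].
        cmd = active_command(commands, t_exec - t_r)
        if cmd == "straight":
            v, omega = v_straight, 0.0
        else:
            v = v_rot
            omega = (v_rot / radius) * (1.0 if cmd == "left" else -1.0)
        # Midpoint step of the unicycle (differential-drive) model.
        theta_mid = theta + 0.5 * omega * h
        x += v * math.cos(theta_mid) * h
        y += v * math.sin(theta_mid) * h
        theta += omega * h
    return x, y, math.atan2(math.sin(theta), math.cos(theta))
```

The controller would then evaluate the carrot position and \(\alpha \) from the predicted pose instead of the current one. A command issued exactly at \(t_0\) does not influence the prediction, because the user is not expected to react to it before \(t_0 + t_R\).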

3 Experiments

We evaluated the performance of our smart walker qualitatively and quantitatively in six experiments. We tested individual modules as well as the whole system in an end-to-end manner. The first experiment evaluates the spatial information setting with blindfolded age-matched participants. In the following three experiments, we determined the parameters of the prediction-based controller for guided navigation and evaluated the performance of this controller and of the different modes of application. These three experiments involved younger participants without visual impairments. The last two experiments evaluate our smart walker in both settings with blind test participants.

3.1 Spatial information for elderly people

We tested the capability of the smart walker to provide elderly people with spatial information about nearby obstacles. A further aim of these experiments was to assess the reliability of our positive and negative obstacle detector. The experiments allowed us to gain insights into whether elderly people are able to perceive the vibration signals distinctly and whether they prefer the handles or the belt as a tactile feedback device. We evaluated our system with ten participants, six female and four male, aged between 65 and 80 years. One participant was blind (number 10); the other nine participants were blindfolded during the experiments. None of the participants had prior experience with the walker.

The test environment with a head-high obstacle for the spatial information experiment (left), resulting point cloud captured by the tilting laser scanner and a model of our walker (middle left) and obstacle map created by the height-length-density classifier (middle right). The white areas in the map are classified as safe to traverse, the black areas are classified as obstacles and the medium gray areas are classified as unknown or uncertain terrain. The participants required a longer time to perceive the obstacle signal from the belt, which resulted in a higher number of collisions (right)

Test person in our spatial information experiment walking towards a downward stairway which is a typical negative obstacle (left). The height-length-density classifier uses the point cloud (middle left) to detect the hazardous areas and marks them in a map (middle right). The success rates for the recognition and avoidance of the negative obstacle are on the right

In the first experiment, we set up a 10 m long test course containing several boxes as obstacles (see Fig. 6a). After a short introduction to our system and 5–10 min of training, the participants traveled blindfolded from one end to the other, relying only on the haptic feedback. Each participant performed four runs, two with vibration feedback from the handles and two with vibration feedback from the belt. We randomized the order of the runs and varied the positions of the obstacles between runs to avoid bias.

Figure 6b shows the number of collisions for the belt and handles modes, averaged over both runs. Nearly all runs resulted in collisions in narrow passages but, with two exceptions, the participants could reach the end of the 10 m long course. We had to cancel two runs with the belt because the participants got disoriented and walked back towards the starting point. Both participants had one collision before their run was canceled. For these participants, we report only the result of the remaining completed run with the belt in Fig. 6b and c; an asterisk marks the corresponding bars. Figure 6c illustrates the average travel time per run. Based on these observations, neither feedback mode is clearly preferable. We attribute the slow walking speed mainly to the additional time the participants required to interpret the vibration signals. Therefore, we expect the travel time to decrease as users become more familiar with the system.

The purpose of the next two experiments was to assess the performance of the detector for positive and negative obstacles. We used barrier tape to create a head-high obstacle, as illustrated in Fig. 7, and a downward stairway as a negative obstacle (Fig. 8). The participants were asked to walk towards each of these obstacles and stop as soon as the vibration signal informed them that they were directly in front of it. Each obstacle was evaluated once with feedback from the handles and once with feedback from the belt. All participants completed these experiments.

Figures 7 and 8 illustrate that around half of the participants had trouble avoiding the obstacles with the tactile belt. In contrast, most participants could successfully avoid both obstacles with the vibrating handles. This was also confirmed by the questionnaire-based interview conducted after the participants had finished all three experiments (Fig. 9). Most of the participants stated that they had trouble sensing the vibration signals from the tactile belt. We believe this is because the hands are more sensitive to vibrations than the abdomen (Wilska 1954). Furthermore, the participants had difficulty determining the location of a vibration source in the runs with the belt. Although the vibration signals on the belt were more challenging for the users to sense (see Fig. 9), this did not result in a significant increase in the number of collisions on the test course. We are currently designing a belt with stronger vibration motors. An interesting topic for future work is the development of training methods that help users better understand the relationship between obstacle positions and vibration signals.

Results of the qualitative evaluation for the spatial information experiment. We asked the participants to rate three statements on a Likert scale at the end of the evaluation. Half of the participants stated that they had difficulty sensing the vibration signals of the belt (left). With the exception of one participant, all users found our system rather easy or very easy to handle (middle). More than 75 % of the users stated that they felt safe while traveling with our system (right) (Color figure online)

Results from one run of the experiments for obtaining the parameters of the prediction-based controller. We directed the participant to move straight and then turn when one of the handles vibrates. The point at which the turn signal is sent to the participant is marked with a cross in all figures. The left image illustrates that we compute the delay in reaction time of the user by measuring the difference in time between the signal sent (cross) and the change in orientation (intersection of the dotted lines). The center image illustrates the rotational radius computed by fitting a circle (dotted lines). The right image illustrates the two distinct velocities. The solid line depicts the actual user trajectory, while the dotted lines represent the best model fit based on k-means clustering and least-squares estimation

The participants indicated that, in case of walking disabilities and visual impairments, they would use the smart walker to maintain social contacts and increase their autonomy. Several participants emphasized that the smart walker should be affordable. While the choice of an unmotorized off-the-shelf platform addresses this concern, we are currently testing configurations that reduce the cost of the sensor setup while still working reliably indoors and outdoors.

This first experiment shows that our system successfully provides functionality beyond the capabilities of a classical white cane. It can inform users about head-high obstacles, and it minimizes the number of object contacts required to explore the environment. This is important in all environments where contact with obstacles can cause damage. Our experiments indicate that the vibration signals of the handles are easier to perceive and better suited for warning the user of dangerous obstacles.

So far, in all of our experiments, we started sending proximity warnings as soon as obstacles were within a fixed distance. This does not consider the delay in human reaction or the walking speed of the user. Such factors become even more important when the walker is used in the guided navigation mode. The experiments in the next section model these human-specific characteristics and incorporate them into our prediction-based controller.

3.2 Parameter estimation for guided navigation

The goal of our second experiment was to identify the parameters for the prediction-based controller outlined in Sect. 2.3. Ten test participants were blindfolded and asked to move straight until vibration feedback on one handle directed them to stop and turn on the spot to the left or right, depending on the signal. We instructed the participants to turn on the spot, rather than along trajectories with a large radius, in order to facilitate navigation in narrow passages. The participants were between 22 and 28 years old. All participants were male and did not have any walking disabilities. They were first allowed to become familiar with the walker and the vibration feedback. Once they were familiar with the setup, we collected ten evaluation runs per participant to obtain the parameters of the prediction-based controller. A motion capture system tracked the position and orientation of the walker over time, and we combined this data with the navigation signals. Figure 10 illustrates exemplary results from one run.

To calculate the reaction time for each run, we consider the orientation of the walker over time. We used k-means clustering to fit two lines whose slopes approximate the rotational velocity before and during the turning process. The intersection point of these lines marks the point in time at which the user reacts to the signal and actually turns. The reaction time is the difference between the time the signal is sent to the user and this point in time. This is further illustrated in Fig. 10a: the cross marks the time at which the turn signal was sent to the user, while the intersection of the dotted lines estimates when the user actually turned the walker.
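The reaction-time estimate can be sketched in Python as follows. This is one plausible reading of the procedure, not the authors' code: samples are clustered by their absolute angular rate with a two-cluster 1D k-means, an ordinary least-squares line is fitted to orientation over time in each cluster, and the lines are intersected. The function names are hypothetical, and the sketch assumes the recorded window covers only the approach and the turn (a later return to straight walking would be merged into the "before" cluster).

```python
def kmeans_1d(values, iters=50):
    """Two-cluster k-means on scalars; cluster 0 starts at the minimum, cluster 1 at the maximum."""
    centres = [min(values), max(values)]
    labels = [0] * len(values)
    for _ in range(iters):
        labels = [0 if abs(v - centres[0]) <= abs(v - centres[1]) else 1 for v in values]
        for j in (0, 1):
            members = [v for v, lab in zip(values, labels) if lab == j]
            if members:
                centres[j] = sum(members) / len(members)
    return labels, centres


def fit_line(ts, ys):
    """Ordinary least-squares line y = a + b*t; returns (a, b)."""
    n = len(ts)
    mt, my = sum(ts) / n, sum(ys) / n
    b = sum((t - mt) * (y - my) for t, y in zip(ts, ys)) / sum((t - mt) ** 2 for t in ts)
    return my - b * mt, b


def reaction_time(times, thetas, t_signal):
    """Time from the turn signal to the intersection of the 'before' and 'during' lines."""
    # Angular rate of each sample, taken from the interval that starts at it.
    rates = [abs(thetas[i + 1] - thetas[i]) / (times[i + 1] - times[i]) for i in range(len(times) - 1)]
    rates.append(rates[-1])
    labels, centres = kmeans_1d(rates)
    turning = 0 if centres[0] > centres[1] else 1
    before = [(t, th) for t, th, lab in zip(times, thetas, labels) if lab != turning]
    during = [(t, th) for t, th, lab in zip(times, thetas, labels) if lab == turning]
    a1, b1 = fit_line(*zip(*before))
    a2, b2 = fit_line(*zip(*during))
    t_turn = (a2 - a1) / (b1 - b2)  # intersection of the two fitted lines
    return t_turn - t_signal
```

With synthetic data in which the orientation stays constant until t = 1.0 s and then grows linearly, and a signal sent at t = 0.1 s, the function recovers a reaction time of 0.9 s.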

To calculate the rotational radius, we fit a circle to the rotational trajectory using least-squares minimization. The radius of the fitted circle approximates the rotational radius; Fig. 10b illustrates the circle fit to the trajectory. The velocity of the user in both situations, while going straight and while turning, is computed with a k-means method similar to the one used to estimate the reaction time. The dotted lines in Fig. 10c illustrate the straight and rotational velocities.
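The article does not say which least-squares circle fit it uses. The Python sketch below uses the algebraic Kåsa fit, a common closed-form choice that minimizes the algebraic residual \(x^2 + y^2 + Dx + Ey + F\) rather than the geometric distance to the circle. The function names are hypothetical, and the 3×3 normal equations are solved with Cramer's rule, which is adequate here provided the points are not collinear.

```python
import math


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a, b):
    """Solve the 3x3 system a @ x = b with Cramer's rule."""
    d = _det3(a)
    sol = []
    for j in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][j] = b[i]  # replace column j by the right-hand side
        sol.append(_det3(m) / d)
    return sol


def fit_circle(points):
    """Algebraic (Kasa) least-squares circle fit; returns (cx, cy, r)."""
    n = float(len(points))
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    syy = sum(y * y for _, y in points)
    sxy = sum(x * y for x, y in points)
    z = [x * x + y * y for x, y in points]
    sxz = sum(x * zi for (x, _), zi in zip(points, z))
    syz = sum(y * zi for (_, y), zi in zip(points, z))
    sz = sum(z)
    # Normal equations of min sum (x^2 + y^2 + D x + E y + F)^2 over D, E, F.
    d, e, f = _solve3([[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]], [-sxz, -syz, -sz])
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)
```

For noise-free points on an arc the fit is exact; on noisy, short arcs the Kåsa fit is known to underestimate the radius slightly, so a geometric fit could be substituted if that bias matters.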

The parameters computed from this experiment are listed in Table 1. The estimated reaction time is almost 1 s, which is much longer than in a typical robotic system. Hence, we believe that incorporating the reaction time of a human user is key to improving the guidance process. For example, if the reaction time is not considered, the user could overshoot the goal by more than 35 cm at the typical straight velocity of a participant. The rotational radius is also important for guiding a human along the desired path and for predicting the current position. Our experiments indicate that the estimated parameters vary between individuals, and we expect them to correlate with the age of the user, the level of visual impairment and the level of walking impairment. For example, elderly users tend to have longer reaction times (Der and Deary 2006). Therefore, we are currently working on a quick and easy-to-use calibration procedure that enables the walker to automatically detect and adjust the controller parameters to individual user behavior.
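The overshoot figure follows from a first-order estimate: under a constant-velocity assumption, the distance covered before the user reacts is

$$\begin{aligned} d_{ overshoot } \approx v_{ straight }\, t_{R}. \end{aligned}$$

With \(t_R = 0.87\) s, the value used in Sect. 3.3, an overshoot of more than 0.35 m corresponds to a straight velocity above roughly \(0.35 / 0.87 \approx 0.40\) m/s.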

3.3 Controller performance for guided navigation

We conducted a user study with eight blindfolded participants to evaluate the advantages of the prediction-based controller compared to a standard carrot-follower controller without any prediction. Our study included two female and six male participants, all aged between 22 and 30 years. We set the reaction time of the user \(t_{R}\) to 0.87 s and the rotational radius to 0.36 m based on the estimated system parameters in Table 1. These values were used to determine the parameters of the carrot planner. The hysteresis value \(\alpha _{ hyst }\) is set to \(5^{\circ }\) and \(d_{ max }\) to 0.09 m, which results in an \(\alpha _{ thresh }\) of \(14^{\circ }\). The lookahead distance L is rounded to 0.35 m.
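To make the roles of \(t_{R}\), L, \(\alpha _{ thresh }\) and \(\alpha _{ hyst }\) concrete, the following Python sketch shows one way a prediction-based carrot follower could turn a pose estimate into a discrete turn command. It is a hypothetical illustration, not the controller used in this work: the unicycle motion model, the forward search for the carrot point, the interpretation of the hysteresis (a running turn signal is kept until the heading error drops below \(\alpha _{ thresh } - \alpha _{ hyst }\)) and the command names are our assumptions, and \(d_{ max }\) is not modelled. Only the numerical defaults are taken from the text. In this model, turning at the measured rotational radius of 0.36 m corresponds to an angular velocity of roughly v / 0.36 m, and setting `t_r` to zero yields the carrot follower without prediction.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float
    y: float
    theta: float  # heading in radians


def predict_pose(pose, v, omega, t_r):
    """Propagate an (assumed) unicycle model over the reaction time t_r."""
    if abs(omega) < 1e-9:  # straight-line motion
        return Pose(pose.x + v * t_r * math.cos(pose.theta),
                    pose.y + v * t_r * math.sin(pose.theta), pose.theta)
    r = v / omega
    theta = pose.theta + omega * t_r
    return Pose(pose.x + r * (math.sin(theta) - math.sin(pose.theta)),
                pose.y - r * (math.cos(theta) - math.cos(pose.theta)), theta)


def carrot_point(path, pos, lookahead):
    """First path point at least `lookahead` away, searching forward from the closest point."""
    start = min(range(len(path)), key=lambda i: math.dist(path[i], pos))
    for p in path[start:]:
        if math.dist(p, pos) >= lookahead:
            return p
    return path[-1]


def turn_command(alpha, previous, alpha_thresh, alpha_hyst):
    """Map the signed angle to the carrot (positive = left) to a command, with hysteresis."""
    release = alpha_thresh - alpha_hyst  # a running turn signal is kept above this angle
    if previous == "left" and alpha > release:
        return "left"
    if previous == "right" and alpha < -release:
        return "right"
    if alpha > alpha_thresh:
        return "left"
    if alpha < -alpha_thresh:
        return "right"
    return "straight"


def controller_step(pose, v, omega, path, previous, t_r=0.87, lookahead=0.35,
                    alpha_thresh=math.radians(14), alpha_hyst=math.radians(5)):
    pred = predict_pose(pose, v, omega, t_r)  # where the user will be once they react
    cx, cy = carrot_point(path, (pred.x, pred.y), lookahead)
    alpha = math.atan2(cy - pred.y, cx - pred.x) - pred.theta
    alpha = math.atan2(math.sin(alpha), math.cos(alpha))  # wrap to [-pi, pi]
    return turn_command(alpha, previous, alpha_thresh, alpha_hyst)


# Example: 2 m straight along x, then a left turn onto the line x = 2 (points every 5 cm).
path = [(i * 0.05, 0.0) for i in range(41)] + [(2.0, j * 0.05) for j in range(1, 41)]
print(controller_step(Pose(1.5, 0.0, 0.0), 0.4, 0.0, path, "straight"))           # left
print(controller_step(Pose(1.5, 0.0, 0.0), 0.4, 0.0, path, "straight", t_r=0.0))  # straight
```

In the example, the prediction-based variant already issues the left-turn signal 0.5 m before the corner, whereas the variant without prediction still commands straight walking at the same position. The closest-point search scans the whole path; for paths that cross themselves it would need to be restricted to a window around the previous match.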

For this evaluation, we generated six random paths. Half of the paths consist of straight lines connected at right angles, simulating indoor environments. The other half contain lines and arcs connected at varying angles, simulating typical outdoor trajectories. Of the six paths, we used two to train the participants and the other four for evaluation. Figure 11 provides an overview of the paths, which were all 30 m long to make the results comparable.
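The orthogonal, indoor-style paths can be generated with a short routine like the following. This is a hypothetical sketch: the number of segments, the range of relative segment lengths and the random seed are our assumptions, only the 30 m total length comes from the text, and the paths with arcs are not covered. Segment lengths are drawn at random and rescaled so that they sum exactly to the target length, and every junction is a random left or right 90° turn.

```python
import math
import random


def random_orthogonal_path(total_length=30.0, n_segments=6, seed=None):
    """Polyline of n_segments straight segments joined at right angles, total_length long."""
    rng = random.Random(seed)
    weights = [rng.uniform(1.0, 2.0) for _ in range(n_segments)]
    scale = total_length / sum(weights)  # rescale so the lengths sum to total_length
    heading, x, y = 0.0, 0.0, 0.0
    points = [(x, y)]
    for i, w in enumerate(weights):
        if i > 0:
            heading += rng.choice((-1.0, 1.0)) * math.pi / 2  # random left or right turn
        x += w * scale * math.cos(heading)
        y += w * scale * math.sin(heading)
        points.append((x, y))
    return points


path = random_orthogonal_path(seed=1)
length = sum(math.dist(p, q) for p, q in zip(path, path[1:]))
print(round(length, 6))  # 30.0
```

The routine does not prevent self-intersections; in practice one would reject and redraw such paths.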

The four randomly generated paths which we used for the evaluation of our prediction-based controller. The start point is marked with x. Path 1 and Path 2 contain only straight lines joined at right angles. This simulates indoor environments. Path 3 and Path 4 have segments joining with arcs and lack an orthogonal structure to simulate complex outdoor environments. All units are in meters

The left figure shows the ground truth path (dashed line) and the trajectories of one participant with the different controllers. The start point is marked with a circle. The controller without prediction (dark purple line) results in a trajectory that oscillates around the ground truth path and overshoots at the corners. Both effects are reduced by our prediction-based controller (light turquoise line). The differences in trajectory length l (center) and in travel time t (right) show that the prediction-based controller (subscript p) yields shorter trajectories and reaches the goal faster than the standard controller (subscript np). Negative values indicate a shorter distance and less time for the prediction-based controller

We provided each participant with a short introduction to the smart walker and the guidance signals, followed by two training runs. After the training, every participant completed each of the remaining four paths twice, once with the prediction-based controller and once with the controller without prediction. The order of paths and controllers was randomized. For the quantitative evaluation, we tracked the trajectory of the participant using a motion capture system. Using motion capture eliminates evaluation errors caused by mistakes in the mapping and localization system. Additionally, after every run the participant rated the guidance style on a scale from 1 (very bad) to 10 (excellent).

Figure 12a illustrates the desired path that the participant is guided along and the trajectories resulting from the two controllers. The controller without pose prediction oscillates around the ground truth path and often overshoots at the corners. This is also reflected in Fig. 12b, where we compare the length of each trajectory pair per participant and path: the trajectories resulting from our controller with prediction, which considers the reaction time of the user, are shorter than those of the controller without prediction in 25 out of 32 cases. We conducted three paired-samples t-tests to compare the performance of both controllers based on the trajectory lengths, the travel time and the average path deviation. There is a highly significant difference between the trajectory lengths resulting from the controller with prediction (\(\mathrm {M}=30.1\, \hbox { m}\), \(\mathrm {SD}=1.55\, \hbox { m}\)) and those of the standard controller (\(\mathrm {M}=31.3\, \hbox { m}\), \(\mathrm {SD}=1.59\, \hbox { m}\)); \(t(31)=-3.51, p=0.0014\). The time required for the user to reach the goal along the desired path also differs significantly (prediction-based controller: \(\mathrm {M}=193.2\, \hbox { s}\), \(\mathrm {SD}=40.9\, \hbox { s}\), standard controller: \(\mathrm {M}=211.7\, \hbox { s}\), \(\mathrm {SD}=33.2\, \hbox { s}\)); \(t(31)=-3.16, p=0.0035\), see also Fig. 12c. These results suggest that the prediction-based controller enables users to reach their goal on a shorter path in less time.
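The paired-samples t statistic behind these numbers is the mean of the per-pair differences divided by its standard error, with n − 1 degrees of freedom (31 for the 32 participant-path pairs). The sketch below computes it with the Python standard library; the input values are made up for illustration and are not data from the study. The two-sided p-value then follows from the t distribution, for example via `scipy.stats.ttest_rel`, which returns both the statistic and the p-value for the same paired comparison.

```python
import math
import statistics


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for matched measurements a[i], b[i]."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)  # sample SD of the differences
    return statistics.mean(diffs) / standard_error, n - 1


# Hypothetical trajectory lengths in metres (prediction-based vs. standard controller).
with_prediction = [30.0, 29.5, 31.0, 30.2]
without_prediction = [31.0, 30.5, 31.5, 31.4]
t, df = paired_t(with_prediction, without_prediction)
print(df, round(t, 2))  # 3 -6.2
```

Because the test operates on the per-pair differences, the t values reported above cannot be recomputed from the group means and standard deviations alone.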

The mean deviation from the desired trajectory also helps to compare the performance of both controllers. The deviation was calculated by choosing equidistant points on the desired trajectory and computing how far the user was from each of these points with each controller. We fail to reject the null hypothesis that there is no difference between both controllers regarding the path deviation at a significance level of \(\alpha = 0.01\) (prediction-based controller: \(\mathrm {M}=0.065\, \hbox { m}\), \(\mathrm {SD}=0.020\, \hbox { m}\), standard controller: \(\mathrm {M}=0.055\, \hbox { m}\), \(\mathrm {SD}=0.022\, \hbox { m}\)); \(t(31)=2.42, p=0.021\). We believe that the slightly increased path deviation of the prediction-based controller stems from the fact that the user oscillates less around the ground truth path with this controller.
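The deviation metric can be sketched as follows. The text does not specify how the user's position is matched to each equidistant point, so this Python sketch assumes the distance to the closest motion-capture sample of the trajectory; the 0.1 m sampling step and all function names are hypothetical.

```python
import math
import statistics


def resample_polyline(path, step):
    """Points spaced `step` apart (by arc length) along a polyline, starting at path[0]."""
    points = [path[0]]
    carry = 0.0  # arc length covered since the last emitted point
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        seg = math.dist((x0, y0), (x1, y1))
        s = step - carry  # arc length along this segment of the next point
        while s <= seg:
            f = s / seg
            points.append((x0 + f * (x1 - x0), y0 + f * (y1 - y0)))
            s += step
        carry = seg - (s - step)
    return points


def mean_deviation(desired, trajectory, step=0.1):
    """Mean distance from equidistant points on the desired path to the closest trajectory sample."""
    samples = resample_polyline(desired, step)
    return statistics.mean(min(math.dist(p, q) for q in trajectory) for p in samples)


desired = [(0.0, 0.0), (1.0, 0.0)]
trajectory = [(i * 0.01, 0.05) for i in range(101)]  # user walks 5 cm to the side
print(round(mean_deviation(desired, trajectory), 3))  # 0.05
```

With closest-sample matching, the metric penalises a user who passes a point far away but not one who passes it late.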

Comparison of the prediction-based controller with the non-prediction-based controller. Left: mean number of navigation signal changes per second for the four different paths. The controller with prediction changes the navigation commands more frequently than the controller without prediction. Path 3 and Path 4 require a higher frequency of signal changes as a result of their more complex geometry. Right: number of trials in which the prediction-based controller performs better than the standard one in terms of traveled path length (shorter is better), travel time (quicker is better) and user preference (higher score is better)

The improved path guidance performance of the controller with prediction comes at the cost of a higher frequency of navigation signal changes, as can be seen in Fig. 13a. Also, the qualitative evaluation reveals that the participants preferred the controller without prediction (see Fig. 13b). As a few participants stated, this was mainly because the controller with prediction caused a high number of signal changes which could not always be interpreted clearly. This preference contrasts with the improved path guidance performance in terms of resulting path length and trajectory execution time: on average, the traveled path was 4 % shorter and the participants saved 9 % of travel time with our controller. We believe that these results, which we acquired with healthy participants, can also be transferred to elderly people with walking disabilities. On the one hand, these users tend to walk more slowly, but on the other hand they also suffer from increased reaction times (Der and Deary 2006), which motivates the need for the prediction-based controller. The results of this experiment indicate a correlation between user preference and the number of signal changes of a controller. We will consider this finding in future versions of the prediction-based controller.

Test environment (left) and its map (right) used for the guided navigation experiments. We defined three pairs of start (circle) and goal (cross) locations. The first pair \((S_0, G_0)\) is for training while the remaining two pairs are used for evaluation. The size of our environment is \(8 \hbox { m} \times 8.25 \hbox { m}\)

An exemplary evaluation run in the test environment for the guided navigation experiments. The participant starts from the lower left corner and is guided towards the goal position in the upper right corner. Our system navigates the user around a dynamic obstacle that blocks the direct path of the user

3.4 Application for guided navigation

In this section we evaluate the guidance provided by our smart walker in a complete end-to-end manner. The objective is to verify whether we can use the smart walker to navigate users safely to the desired goal. We also want to gain insights into the advantages and disadvantages of the three different guidance modes, namely handles, belt and a combination of both.

Logged paths of the training run (left), the first run (middle) and the second run (right) for one participant in the guided navigation experiments. The start locations are marked with a circle, the goal locations with a cross. Some of the deviations between the paths are caused by the human dynamic obstacle. For all three paths, the handles resulted in the smoothest trajectory. In the combined mode, the participant had trouble avoiding the dynamic obstacle in Path 0 and Path 2, as the participant sensed the obstacle but had to develop an obstacle avoidance strategy on his or her own

For the evaluation, we used a setup that resembles a complex indoor environment. It contained walls, objects on the floor and one dynamic obstacle in the form of a person walking around in the course. For these experiments we did not rely on the tilting laser, as the indoor test environment was free of negative obstacles. We defined three pairs of start and goal locations as shown in Fig. 14. The first pair of locations \((S_0, G_0)\) was used for training the participants, while the other two pairs were used for evaluation. During the training phase, each participant completed three runs from \(S_0\) to \(G_0\), one in each mode (handles, belt and combined). After the training, participants were navigated along the remaining two tracks once in each of the three modes. The order of tracks and modes was randomized to avoid any bias. After each run, the supervisor guided the participant to the next start position. In each run, we confronted the participants with one dynamic obstacle, a person who stepped into their way. This is important, as any realistic scenario will contain some form of dynamic obstacle. Ten people aged 22–30 years, all male, took part in this study. We blindfolded all participants and equipped them with acoustic earmuffs so that they could not hear noises caused by the dynamic obstacle. This ensured that the participants relied only on our system for obstacle avoidance, as might be necessary in noisy environments, e.g. when walking next to main streets. The prediction-based controller was used for all of these experiments. For each test run, we tracked the path of the participant to the goal and measured the time, the distance and the number of collisions encountered on the way.

A series of images in Fig. 15 shows one participant guided in the test environment avoiding both static and dynamic obstacles. The participant bypasses all obstacles safely and also avoids the dynamic obstacle successfully, as the planner incorporates it into an updated path.

Number of collisions per run for the guided navigation experiments. The majority of the collisions occurred in the combined mode. These collisions often resulted in uncontrolled correction movements which caused further collisions. The belt resulted in the highest number of collision-free runs but also required the longest travel time

The logged paths for one participant can be seen in Fig. 16. For all paths, using the handles resulted in the smoothest trajectory. The belt mode tended to cause abrupt and large changes in orientation, because most participants required more time to interpret the turn signal, which caused them to stop and turn on the spot. The majority of participants recognized the dynamic obstacle in the combined mode based on the belt signals but had trouble finding a detour on their own. For example, as illustrated in Fig. 16, the participant recognized the obstacle in Path 0, but the chosen path resulted in a collision. We anticipate that the combined mode may become more helpful with more training and when the user is familiar with the environment.

Figure 17 provides an overview of the number of collision-free runs. Both the belt and the handles mode resulted in a low number of collisions compared to the combined mode. Around 50 % of the runs in the combined mode had at least one collision. These collisions were caused by the change in the local navigation strategy, which required the user to carefully understand and interpret the obstacle vibration signals from the belt. The traveled distances are illustrated in Fig. 18. Except for a few outliers, there was no significant difference between the path lengths. This shows that all three guidance strategies were able to guide the user from start to goal without larger detours. The travel time per path reveals differences in the performance of the three modes (see Fig. 19). The handles mode guided all participants in the shortest time. The belt mode was slower than the combined mode in all but one case, which seems contrary to our observation that users typically required more time in the combined mode to detour around the dynamic obstacle. This can be explained by the fact that most participants required more time to correctly interpret the turn commands of the belt. The user rating (Fig. 20) reflects this result as well. All but one participant preferred the handles mode. The combined mode was preferred over the belt mode, even though it resulted in a higher number of collisions. As Fig. 21 shows, there is a correlation between the understandability of the navigation commands and user preference. Interestingly, feedback from the participants revealed that some of them liked the combined mode better, as it provided them with increased contextual knowledge of their surroundings.

The travel time for the first path (left), the second path (center) and combined (right) per participant and mode for the guided navigation experiment. For every participant, navigation with handles resulted in the shortest total travel time. Except for one participant, the travel time in the combined mode was lower than the travel time in belt mode

A summary of our findings is shown in Table 2. Both the handles and the belt mode guide the user to the goal with a low collision risk after minimal training. The belt mode resulted in increased travel times, as its navigation commands are harder to perceive. While the combined mode has a higher collision risk, the participants appreciated the contextual knowledge provided by the belt.

User rating from 1 (very bad) to 10 (excellent) per participant and mode for the guided navigation experiment. Each value is averaged over the participant’s rating for Path 1 and Path 2 in the same mode. With one exception, all participants preferred the navigation by handles. The majority of the participants rated the combined mode better than the belt mode even though this mode resulted in a higher number of collisions

Qualitative evaluation for the guided navigation experiment. At the end of the experiment, we asked the participants to rate three statements on a Likert scale. Several participants had trouble distinguishing between the different navigation commands in the belt mode (left). In contrast, the participants could clearly distinguish the navigation commands in the handles mode (left). They stated that handling the walker felt intuitive (middle) and that they felt safe while using our system (right)

3.5 Evaluation with blind users

While we evaluated basic technical functionality of the smart walker with blindfolded participants, we also continuously considered the feedback of blind users and mobility teachers in our development process. This is important, as there are differences between navigation methods used by sighted and blind users (Williams et al. 2014).

3.5.1 Exploratory evaluation

We presented a prototype of our smart walker at SightCity 2014 and SightCity 2015, Germany’s biggest exhibition about aids for the blind (sig). This was an exploratory evaluation in which around 30 visitors tested our system. Most of these visitors were either visually impaired or worked as mobility teachers. The visitors assessed our walker in the spatial information setting for a few minutes on the crowded exhibition floor. Afterwards, we asked them for feedback regarding the usability of the system, the quality of the vibration signals and potential improvements. Six visitors additionally answered a detailed anonymous questionnaire.

The results from this early evaluation indicate that our walker enabled the participants to successfully identify obstacles in their environment. One of the main goals of this evaluation was to analyze whether blind users of all ages could sense the vibration feedback signals. Most of the participants stated that they were able to identify the haptic signals clearly and felt safe while using our system. People who had problems identifying the vibration signals mostly stated that this was a sensitivity-related issue: either the vibration signals were considered too intense (e.g. when the participant tested the belt and was ticklish around the waist) or the participant could not sense the vibration signals well. Most problems were related to the vibration belt. The participants expressed that our smart walker was easy to adapt to, requiring only a few instructions. Additionally, the capability to robustly identify negative obstacles was highly appreciated, as available electronic aids for the blind are limited in this regard. Suggestions included the introduction of semantic obstacle feedback and the distinction between positive and negative obstacles. We are planning to incorporate this type of feedback in future versions of the smart walker. While the participants could avoid obstacles, they had trouble estimating the exact position and dimensions of obstacles. This was a particular drawback when navigating in narrow spaces and motivates our approach towards guided navigation. Overall, the outcome of this exploratory evaluation supports the results of our experiments with the age-matched user group in Section 3.1.

3.5.2 Pilot study

We designed a pilot study with the aim of understanding how well the overall system is accepted by blind people and how the smart walker compares to conventional navigation techniques. The participant of our pilot study was a 69-year-old man who is completely blind. He has used a white cane for fourteen years and did not have any prior experience with the smart walker. We set up a test course which resembles an office environment with one large main corridor, three small corridors and one room attached to each small corridor, as shown in Fig. 22. The test course has a size of 12.5 m \(\times \) 6.0 m. The smart walker setup was identical to the one described in Sect. 3.4. Initially, we introduced the participant to all navigation modes of the smart walker. As a first test, we asked the participant to explore the environment in the spatial information setting, first with the vibrating handles and then with the vibration belt. The main outcomes of this experiment are:

-

The participant was able to explore the main corridor safely and successfully using the spatial information setting. He had trouble finding the openings of the smaller corridors and the doors to the rooms in this setting.

-

The participant preferred the vibration handles over the vibration belt, as he felt that the vibration signals from the handles were easier to perceive.

In order to evaluate the guided navigation setting, the participant traveled four different routes from the main corridor to the rooms in randomized order, each route twice, once with the smart walker and once with his conventional white cane. In the runs with the walker, we used the vibrating handles, as the participant preferred this mode in the previous experiment. Before the runs with the white cane, the experimenter provided a sequence of oral direction instructions. In two evaluation runs, the experimenter had to repeat individual instructions because the participant could not remember them. The experimenter noted the remarks of the participant given during the experiment and in a final interview. The main findings are:

-

In all test runs, the participant reached the goal location successfully. On average, the participant took about two and a half times as long to reach the goal location with the walker as in the runs with his white cane (walker: \(\mathrm {M}=77.5\, {s}\), \(\mathrm {SD}=28.8\, {s}\), white cane: \(\mathrm {M}=31.5\, {s}\), \(\mathrm {SD}=12.2\, {s}\)). The travel time with the white cane does not include the additional time required for communicating the direction instructions (around 20 s), which were not required in the test runs with the walker. The travel time with the walker is higher because the user required additional time to interpret the navigation signals and walked more slowly. We anticipate that this difference in travel time will decrease with increasing familiarization. Moreover, the system is designed for blind people with walking disabilities who need a walker on a regular basis and generally have slower walking speeds.

-

In general, the participant preferred the guided navigation setting over the spatial information setting.

-

The participant remarked that one of the potential risks of the smart walker technology is a loss of orientation during guided navigation. The walker should provide semantic information about the current environment and inform about important guidelines such as curbs and typical landmarks.

The outcome of this experiment is that the spatial information setting is helpful for exploration in wide open spaces, but is of limited use for navigation in narrow indoor regions. In contrast, the guided navigation setting is able to guide blind users successfully to their goal, as already indicated in the previous experiment with blindfolded participants. An important scope for future work is the integration of semantic environmental feedback for better orientation. Overall, these experiments support the previous findings that we acquired in experiments with non-blind participants.

4 Related work

The area of elderly care and blind assistance has been researched extensively in recent years. Several researchers have presented novel robotic walkers, designed primarily for elderly non-blind people with walking disabilities. They provide structural support and navigational assistance at varying levels. Graf (2001) introduced Care-O-bot II, a multi-functional home care system for elderly people. The robot moves in the direction desired by the user based on forces measured at displacement transducers located at the handles. Yu et al. (2003) proposed a motorized walker which aims to guide elderly people with cognitive impairments using shared-autonomy control. The walker monitors the user and adjusts the autonomy level between user control and computer control according to the measured performance. The work of Morris et al. (2003) on navigating elderly people with cognitive disabilities is closely related to our smart walker. They guide people with a shared-control robot system in which a robot walker is built on top of a motorized omni-directional robot. The walker has two modes of operation: passive and active. In the passive mode, the robot walker moves in the direction the user wants it to move. In the active mode, the robot plans a trajectory to a goal and guides the user by driving along the trajectory, so that the user is pulled forward by the robot and reaches the goal in this way. Glover et al. (2003) presented a walker retrofitted with motors so that it is able to park itself and return to the user. The main focus of this work is to reduce the fall risk of an elderly user while walking to the walker. The guidance style is passive: the walker displays visual directional hints, but the user ultimately controls the travel direction. The goal can be selected from a list of potential places displayed in a graphical user interface.

MacNamara and Lacey (2000) presented a personal adaptive mobility aid (PAM-AID), an unmotorized walker that assists frail visually impaired people by guiding them away from obstacles through active control of the steering angle of the front wheels. The system detects the user’s intended walking direction from the turning angle of a handlebar. Additionally, it provides environment information via a speech interface. Compared to our approach, the PAM-AID is not able to plan a path in the global environment, and the authors evaluate the guidance performance based on qualitative observations only. The PAM-AID walker was later improved and renamed Guido. Rentschler et al. (2008) evaluated Guido in a clinical study with 45 visually impaired participants and showed that it did not have any significant advantage in terms of travel time or safety over a low-tech mobility aid. These findings may be ascribed to the fact that most of the participants were only partially visually impaired. Guido’s simple obstacle avoidance functionality is related to our spatial information setting. The fact that the local obstacle avoidance mode did not have a significant advantage over low-tech mobility aids further motivated us to provide navigational functionality beyond simple obstacle avoidance.

Rodriguez-Losada et al. (2005) extended the robotic walker Guido with map-based navigation techniques based on simultaneous localization and mapping methods. The front wheels of the walker are steered so that the user is guided on a computed trajectory to a goal location under the consideration of dynamic obstacles. They compare their approach against the previous obstacle avoidance mode of the walker and show that the path guidance technique performs better in terms of comfort, travel time and number of collisions. They also suggest an extension of the system to full 3D sensing for safer application.

In contrast to the majority of existing work, our system is unmotorized and built as a standalone module, so that it can easily be attached to a conventional walker. The lack of propulsion increases the autonomy of the user, who has full control over the locomotion. However, it also makes it harder to guide the user close to a computed trajectory, as the control commands are executed by a human rather than by motors. Therefore, we designed a specific controller that takes human motion behavior into account, as well as two different vibro-tactile interfaces that communicate navigation commands or obstacle positions to the user. One of our findings is that it is beneficial to consider the delay time of the user in the guidance process. This outcome is consistent with previous work in which a vibro-tactile belt was used to guide a user around obstacles in a virtual game environment (Moeller et al. 2009), where a high number of collisions could be traced back to an overshoot of motion caused by not considering the human delay time.

We designed our smart walker from the ground up to serve blind users with walking impairments. This results in stricter design requirements, as we have to ensure that all obstacles in the environment are detected by the walker and either communicated to the user or incorporated into the navigation process. Unlike all of the above systems, our sensor setup can identify both positive and negative obstacles such as downward stairways. Negative obstacles are a major danger for blind people, as they can lead to falls with serious injuries.

Dakopoulos and Bourbakis (2010) survey electronic travel aids that enable obstacle avoidance. Existing techniques can be categorized by their level of autonomy. High-level systems track the user position and plan complete paths in order to guide the user along a specific route (Kulyukin et al. 2006; Rodriguez-Losada et al. 2005). These high-level approaches provide functionality far beyond that of conventional electronic travel aids for the visually impaired, but they often reduce the autonomy of the user by incorporating the decision making process into the navigation process. This reduces the cognitive load, but the user is not part of the decision making and all navigation decisions are made by the system. Devices with a medium level of autonomy propose a direction to avoid nearby obstacles but do not guide a user to the desired goal over large distances (Ulrich and Borenstein 2001; Rentschler et al. 2008; MacNamara and Lacey 2000). Low-level approaches detect obstacles in the vicinity of the user and only inform about their positions (Rodríguez et al. 2012; Kay 1974). Feng et al. (2015) showed in a user study with a robot guide for blind people that blind users prefer systems that allow the navigation experience to be customized according to the users’ experiences and requirements. Our sensor setup allows us to operate in any of the above three modes, so that the walker can be used flexibly according to user preference and navigation situation. Furthermore, we introduced a hybrid mode, which combines low-level spatial information on obstacle positions with high-level path planning and guidance.

Common techniques to guide a user on a path or inform about hazardous areas rely on different feedback mechanisms, ranging from vibration signals (Cosgun et al. 2014; Tsukada and Yasumura 2004), through audio output (Rodríguez et al. 2012; Ran et al. 2004), to force feedback (Ulrich and Borenstein 2001; Morris et al. 2003). We use vibro-tactile signals for feedback because this method does not mask important sounds from the environment (Dakopoulos and Bourbakis 2010).

Vibro-tactile feedback has been used successfully in related contexts. For example, Bosman et al. (2003) propose a system which guides users without walking impairments in complex indoor environments with tactile cues from vibration motors mounted on the wrist. They show that their tactile guidance system can be helpful even for non-blind users. Azenkot et al. (2011) compare different types of vibro-tactile feedback for smartphone-based turn-by-turn navigation of blind users. The results of their user study indicate that blind people particularly prefer vibration patterns for the communication of navigation commands. Cosgun et al. (2014) use a vibration belt with distinct vibration patterns to communicate directional and rotational navigation commands. Their main focus is the comparison of different navigation signals and the guidance of the user to a pre-defined goal location. Our smart walker provides two different feedback devices. The vibration motors in the handles are easier for the user to perceive (Wilska 1954) and their locations are easy to distinguish. In contrast, the vibration belt provides a higher spatial resolution at the cost of a lower accuracy in localizing the currently active vibration motor (Cholewiak et al. 2004). Both devices enable the user to perceive vibration signals and steer the walker simultaneously, and they are unobtrusive, as the belt can be worn under clothing. Additionally, commercially available vibro-tactile feedback devices may easily be integrated into our walker (Elitac BV; feelSpace; Sensodrive GmbH).

5 Conclusions

In this paper we presented a smart walker designed to enable elderly blind people with walking disabilities to navigate safely in unknown and complex environments. The walker can operate in two different settings, where the first one enables the user to avoid collisions with obstacles in the environment and the second one is targeted towards navigating a user to a given goal location. To achieve this, our walker processes data acquired from on-board laser scanners to detect positive and negative obstacles and communicates with the user through vibration feedback on the handles and the belt.

Our experiments suggest that both settings of the smart walker work reliably and support blind users in their navigation tasks. While the collision avoidance mode is useful for exploring unknown or highly dynamic environments, the guided navigation mode supports the user when navigating complex environments. Our experiments showed that the walker is able to detect both positive and negative obstacles. They furthermore revealed that users preferred the vibration motors in the handles over the belt as the feedback channel in both settings. At the same time, the belt appears to offer a higher spatial resolution, which may be exploited for other tasks.

We also demonstrated that by incorporating typical human motion behavior, e.g., reaction time, into the controller, users can be guided more effectively along a given path. Compared to a standard controller, our controller guides the user along shorter trajectories and lets the user reach the goal faster. Our experiments on guided navigation with static and dynamic obstacles show that our system can successfully guide users to their destination. More than 90 % of the runs were free of collisions when the handles or the belt was used on its own, although combining both devices resulted in more collisions.
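
The benefit of accounting for the user's reaction time can be illustrated with a minimal sketch. It is an illustration, not the controller described in this article: it assumes a unicycle model of the walker, a hypothetical reaction time of 0.5 s, a pure-pursuit-style lookahead point on the path, and a three-valued command ("left", "right", "straight"). All names and parameter values are hypothetical. The key step is that the heading error is evaluated at the pose the user is predicted to reach once they react, rather than at the current pose.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float, float]  # x [m], y [m], heading theta [rad]


def predict_pose(pose: Pose, v: float, omega: float, dt: float) -> Pose:
    """Integrate a unicycle model exactly for dt seconds at constant v and omega."""
    x, y, th = pose
    if abs(omega) < 1e-9:  # straight-line motion
        return (x + v * dt * math.cos(th), y + v * dt * math.sin(th), th)
    th_new = th + omega * dt
    r = v / omega  # turning radius
    return (
        x + r * (math.sin(th_new) - math.sin(th)),
        y - r * (math.cos(th_new) - math.cos(th)),
        th_new,
    )


def lookahead_point(
    path: List[Tuple[float, float]], pos: Tuple[float, float], lookahead: float
) -> Tuple[float, float]:
    """First path point at least `lookahead` metres away, searching forward from the closest one."""
    px, py = pos

    def dist(q: Tuple[float, float]) -> float:
        return math.hypot(q[0] - px, q[1] - py)

    start = min(range(len(path)), key=lambda i: dist(path[i]))
    for q in path[start:]:
        if dist(q) >= lookahead:
            return q
    return path[-1]


def guidance_command(
    pose: Pose,
    v: float,
    omega: float,
    path: List[Tuple[float, float]],
    reaction_time: float = 0.5,  # hypothetical delay until the user reacts [s]
    lookahead: float = 0.8,
    tolerance: float = math.radians(15.0),
) -> str:
    """Choose 'left', 'right' or 'straight' for the pose the user will have when reacting."""
    x, y, th = predict_pose(pose, v, omega, reaction_time)
    gx, gy = lookahead_point(path, (x, y), lookahead)
    err = math.atan2(gy - y, gx - x) - th
    err = math.atan2(math.sin(err), math.cos(err))  # wrap to (-pi, pi]
    if err > tolerance:
        return "left"
    if err < -tolerance:
        return "right"
    return "straight"
```

For a straight path along the x-axis, `path = [(0.5 * i, 0.0) for i in range(11)]`, a user who is on the path but already turning left at 0.8 rad/s while walking at 0.5 m/s receives "right" from `guidance_command((0.0, 0.0, 0.0), 0.5, 0.8, path)`, whereas evaluating the current pose (`reaction_time=0.0`) would still report "straight". Anticipating the delay in this way is one way a controller can avoid overshooting the path.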

Our smart walker is a novel system that combines recent advances in robotic navigation and obstacle avoidance with a controller that incorporates human motion behavior. The vibro-tactile interfaces provide clear feedback without obstructing the auditory channel. We believe that the smart walker will substantially improve the mobility and self-reliance of elderly blind users and blind users with walking impairments. As next steps, we plan to investigate methods and output modalities that allow the walker to provide semantic information about the environment, and to develop algorithms that enable the walker to learn and adapt to individual user behavior. Additionally, we plan to evaluate our system with users from the target group in real-world everyday scenarios.

References

Azenkot, S., Ladner, R. E., & Wobbrock, J. O. (2011). Smartphone haptic feedback for nonvisual wayfinding. In The proceedings of the 13th international ACM SIGACCESS conference on Computers and accessibility.

Bosman, S., Groenendaal, B., Findlater, J.-W., Visser, T., de Graaf, M., & Markopoulos, P. (2003). GentleGuide: An exploration of haptic output for indoors pedestrian guidance. In Human–computer interaction with mobile devices and services. New York: Springer.

Censi, A. (2008). An ICP variant using a point-to-line metric. In Proceedings of the IEEE international conference on robotics and automation (ICRA).

Cholewiak, R. W., Brill, J. C., & Schwab, A. (2004). Vibrotactile localization on the abdomen: Effects of place and space. Perception & Psychophysics, 66(6), 970–987.

Cosgun, A., Sisbot, E. A., & Christensen, H. (2014). Guidance for human navigation using a vibro-tactile belt interface and robot-like motion planning. In International Conference on Robotics & Automation.

Dakopoulos, D., & Bourbakis, N. (2010). Wearable obstacle avoidance electronic travel aids for blind: A survey. IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, 40, 25–35.

Der, G., & Deary, I. (2006). Age and sex differences in reaction time in adulthood: Results from the United Kingdom health and lifestyle survey. Psychology and Aging, 21(1), 62.

Elitac BV. Science suit and tactile belt. http://www.elitac.nl/.

Engel, J., Sturm, J., & Cremers, D. (2012). Camera-based navigation of a low-cost quadrocopter. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (IROS).

feelSpace. feelSpace. http://feelspace.cogsci.uni-osnabrueck.de/.

Feng, C., Azenkot, S., & Cakmak, M. (2015). Designing a robot guide for blind people in indoor environments. In Proceedings of the tenth annual ACM/IEEE international conference on human–robot interaction extended abstracts.

Fox, D. (2003). Adapting the sample size in particle filters through KLD-sampling. International Journal of Robotics Research (IJRR), 22(12), 985–1003.

Glover, J., Holstius, D., Manojlovich, M., Montgomery, K., Powers, A., Wu, J., et al. (2003). A robotically-augmented walker for older adults. Technical report. Carnegie Mellon University, Computer Science Department.

Graf, B. (2001). Reactive navigation of an intelligent robotic walking aid. In Proceedings of 10th IEEE international workshop on robot and human interactive communication, 2001.

Grisetti, G., Stachniss, C., & Burgard, W. (2007). Improved techniques for grid mapping with Rao-Blackwellized particle filters. IEEE Transactions on Robotics (TRO), 23(1), 34–46.

Hogg, R. W., Rankin, A. L., Roumeliotis, S. I., McHenry, M. C., Helmick, D. M., Bergh, C. F., et al. (2002). Algorithms and sensors for small robot path following. In Proceedings of the IEEE international conference on robotics and automation (ICRA).

Kay, L. (1974). A sonar aid to enhance spatial perception of the blind: Engineering design and evaluation. Radio and Electronic Engineer, 44, ISSN 0033-7722.

Kulyukin, V., Gharpure, C., Nicholson, J., & Osborne, G. (2006). Robot-assisted wayfinding for the visually impaired in structured indoor environments. Autonomous Robots, 21(1), 29–41.

MacNamara, S., & Lacey, G. (2000). A smart walker for the frail visually impaired. In International Conference on Robotics & Automation (Vol. 2, pp. 1354–1359). IEEE.

Moeller, K., Toth, F., Wang, L., Moeller, J., Arras, K., Bach, M., et al. (2009). Enhanced perception for visually impaired people. In Proceedings of the 3rd International Conference on Bioinformatics and Biomedical Engineering (ICBBE 2009).

Morris, A., Donamukkala, R., Kapuria, A., Steinfeld, A., Matthews, J., Dunbar-Jacob, J., et al. (2003). A robotic walker that provides guidance. In International Conference on Robotics & Automation.

Morton, R. D., & Olson, E. (2011). Positive and negative obstacle detection using the HLD classifier. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (IROS).

Pascolini, D., & Mariotti, S. P. (2011). Global estimates of visual impairment: 2010. British Journal of Ophthalmology.

Quigley, M., Conley, K., Gerkey, B., Faust, J., Foote, T., Leibs, J., et al. (2009). ROS: an open-source Robot Operating System. In Proceedings of the IEEE international conference on robotics and automation (ICRA).

Ran, L., Helal, S., & Moore, S. (2004). Drishti: an integrated indoor/outdoor blind navigation system and service. In Annual conference on pervasive computing and communications.

Rentschler, A., Simpson, R., Cooper, R., & Boninger, M. (2008). Clinical evaluation of Guido robotic walker. Journal of Rehabilitation Research & Development, 45(9), 1281.

Reyes Adame, M., Kamperschroer, J., Winter, M. H.-J. & Moeller, K. (2015). A smart walking aid for visually impaired elderly people: Comparison of two vibrotactile feedback systems. In 49th DGBMT Annual Conference. Luebeck, Germany.

Rodriguez-Losada, D., Matia, F., Jimenez, A., Galan, R., & Lacey, G. (2005). Implementing map based navigation in Guido, the robotic smartwalker. In Proceedings of the IEEE international conference on robotics and automation (ICRA).

Rodríguez, A., Yebes, J. J., Alcantarilla, P., Bergasa, L., Almazán, J., & Cela, A. (2012). Assisting the visually impaired: obstacle detection and warning system by acoustic feedback. Sensors, 12, 17476–17496.

Sensodrive GmbH. Vibrotac. http://www.sensodrive.de/DE/Produkte/Vibrotac.php.

Sight City. http://www.sightcity.net/en/.

Smith, R., Berlin, C., Hejtmancik, J., Keats, B., Kimberling, W. J., Lewis, R., et al. (1994). Clinical diagnosis of the Usher syndromes. American Journal of Medical Genetics, 50(1), 32–38.

Tsukada, K., & Yasumura, M. (2004). Activebelt: Belt-type wearable tactile display for directional navigation. In K. Lyytinen & Y. Yoo (Eds.), Ubiquitous computing. New York: Springer.

Ulrich, I., & Borenstein, J. (2001). The GuideCane—Applying mobile robot technologies to assist the visually impaired. IEEE Transactions on Systems, Man and Cybernetics, Part A: Systems and Humans, 31, 131–136.

Wachaja, A., Agarwal, P., Zink, M., Reyes Adame, M., Möller, K., & Burgard, W. (2015). Navigating blind people with a smart walker. In International Conference on Intelligent Robots and Systems (IROS). Hamburg, Germany.

Williams, M. A., Galbraith, C., Kane, S. K., & Hurst, A. (2014). “Just let the cane hit it”: How the blind and sighted see navigation differently. In Proceedings of the 16th international ACM SIGACCESS conference on Computers & accessibility.

Wilska, A. (1954). On the vibrational sensitivity in different regions of the body surface. Acta Physiologica Scandinavica, 31(2–3), 285–289.

Yu, H., Spenko, M., & Dubowsky, S. (2003). An adaptive shared control system for an intelligent mobility aid for the elderly. Autonomous Robots, 15(1), 53–66.

Acknowledgments

We thank Professor Maik Winter, Barbara Weber-Fiori and Johannes Kamperschroer who were involved in the experiments with elderly people. We also thank R. Broer from RTB GmbH & Co. KG, Germany, for helpful comments and the exhibition space at SightCity. Sven Heinrich helped us with valuable information on human tactile sensitivity. Additionally, we thank Henrich Kolkhorst and three anonymous reviewers for their helpful comments. We are grateful to all our participants for their consent to publish all results. This work has been partially supported by the German Federal Ministry of Education and Research (BMBF), Contract Number 13EZ1129B-iVIEW and by a grant from the Ministry of Science, Research and the Arts of Baden-Württemberg (Az: 32-7545.24-9/1/1) for the Project ZAFH-AAL.

Additional information

This is one of several papers published in Autonomous Robots comprising the “Special Issue on Assistive and Rehabilitation Robotics”.

About this article

Cite this article

Wachaja, A., Agarwal, P., Zink, M. et al. Navigating blind people with walking impairments using a smart walker. Auton Robot 41, 555–573 (2017). https://doi.org/10.1007/s10514-016-9595-8

DOI: https://doi.org/10.1007/s10514-016-9595-8