Abstract

Large and crowded public places can easily disorientate elderly people. The EU FP7 project Devices for Assisted Living (DALi) aims at developing a robotic wheeled walker able to assist people with moderate cognitive problems in navigating complex indoor environments, where other people, obstacles and multiple points of interest may confuse or intimidate the users. The walking assistant, called c-Walker, is designed to monitor the space around the user, to detect possible hazards and to plan the best route towards a given point of interest. This chapter gives an overview of the system and describes some of its most important functions.

1 Introduction

Information and communication technologies (ICT) contribute to innovation in a variety of ways. At the European level, elderly care is recognised as one of the crucial societal challenges of the near future. With the median age in Europe projected to grow from 37.7 in 2003 to 52.3 in 2050, the population potentially afflicted by mobility problems is substantial. Reduced mobility is a serious concern, not only because it restricts people's social lives, but also because it limits their access to good nutrition, leisure and other activities. Several factors adversely affect mobility, the most obvious being physical impairment as well as loss or reduction of visual and auditory ability. A less recognised, but equally critical, problem is the decline of cognitive abilities, which reduces confidence when a person has to move in unfamiliar environments, where crowds and multiple points of interest (PoIs) may easily disorientate the user. In such situations, the afflicted person gradually perceives public places as intimidating and starts to withdraw.

In order to tackle this problem, the EU project Devices for Assisted Living (DALi) aims at developing a smart walking assistant called c-Walker able to support navigation and to improve confidence of senior users moving within large and unstructured spaces, such as shopping malls, railway stations or airports. The main features of the walker in terms of functions and usability have been defined in cooperation with groups of potential users in England and Spain under the supervision of a team of psychologists. A key innovation factor of the c-Walker is its ability to detect and to interpret human behavioural patterns in order to plan the route that minimises the level of anxiety for the user, while preserving naturalness of his/her navigation.

Robotic walkers have gained considerable popularity in the research community working in the field of Ambient Assisted Living (AAL). Closely related to DALi is the system developed within the project Assistants for Safe Mobility (ASSAM) [1]. However, ASSAM is focused on the seamless transition from indoors to outdoors, while DALi specifically considers large indoor environments and the interaction with other people around the user. Other projects, such as iWalkActive and E-NO-FALLS [2, 3], have complementary goals, i.e. supporting physical exercise and preventing falls, respectively. Although of interest, these aspects are different from those addressed in DALi. The rest of this chapter briefly summarises the main features of the first c-Walker prototype.

2 Functional and Architectural Overview

In a typical application, the assisted person (AP) selects a destination from a list of possible PoIs (e.g. the restrooms, a shop, or a ticket office) on a map of the chosen environment, which is preloaded on a tablet. After the PoI is selected using the tablet touch-screen, a safe route is established and shown to the user. The c-Walker is capable of tracking its own position on the map while approaching the destination point. If the AP wants to move along a different trajectory, he/she is free to do so. In this case, the c-Walker suggests a correction, not only by showing it on the screen, but also through a pair of Bluetooth-driven bracelets working as haptic interfaces. Such bracelets vibrate to inform the AP when a left or a right turn should be taken. Bracelets have been preferred to haptic interfaces integrated within the c-Walker handles for two reasons. First, installing haptic handles with a suitable form factor was quite complex from the mechanical point of view. Second, the pressure exerted on the handles while pushing the device could reduce the user's sensitivity to haptic stimulation. Alternatively, the route to follow can be indicated to the AP through suitable audio signals generated by Bluetooth earphones.

The sensing part of the system is composed of two CUI Inc. AMT10X encoders mounted on the rear wheels, an inertial measurement unit (IMU) equipped with an InvenSense IMU-3000 gyroscope, a Feig ISC.MR101-USB RFID reader and a front USB camera or a Kinect. These sensors are used for localisation purposes (see Sect. 3). The front Kinect is also used to detect possible hazardous situations and to track other people moving in the proximity of the device. A second IMU is mounted on the earphones to determine the AP's head attitude with respect to the direction of the walker (see Sect. 5).

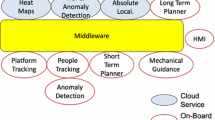

A picture of the first c-Walker prototype along with its main components is shown in Fig. 1. The block diagram depicted in Fig. 2 highlights instead the system architecture from a functional point of view. The higher-level functions (image processing, situation assessment, trajectory planning and guidance) are implemented on a small Intel Barebone mini desktop (11.7 cm × 11.2 cm × 3.9 cm) equipped with a 2.80-GHz Intel Core i5-3427U processor, 8 GB of DDR3 RAM and a 120-GB solid state drive. This platform is powered by an external rechargeable 118-Wh Li-ion battery. Communication with peripherals and subsystems relies on a USB 3.0 port, a Gigabit Ethernet link, WiFi (IEEE 802.11a/b/g/n) and Bluetooth 4.0. The USB link is used to control the front camera and to acquire images from it. WiFi provides fast communication between the Barebone platform and a Google Nexus 10 tablet running the graphical user interface (GUI). The Gigabit Ethernet connection is used to collect the results of the low-level functions (i.e. sensor data acquisition and preprocessing) as well as to control some of the output actuators (i.e. two Liedtke FAS21 brakes on the back wheels and two M1233031 pivoting motors by LAM Technologies located on the front caster wheels). Such low-level functions are implemented on a Beaglebone platform equipped with an ARM Cortex A8 processor running at 720 MHz, 256 MB of DDR2 RAM and a microSD memory for storage. The Beaglebone is connected to the RFID reader via USB. The input data from encoders and IMU, as well as the output control signals driving brakes and pivoting motors, are transferred to/from the Beaglebone via CAN bus.

3 Localisation and Position Tracking

Walker localisation is essential to support high-level functions such as route planning and guidance. Map construction and localisation techniques play a complementary role. A map of the chosen environment is required to define the allowed areas (namely where the user can move freely) and the forbidden areas (e.g. due to obstacles or boundaries such as walls). Once a map is available, a 2D Cartesian reference frame (given by the position of the origin and the direction of axes x and y) can be defined on it. The position tracking algorithm relies on an extended Kalman filter (EKF) built upon a simple five-state unicycle-like kinematic model. A qualitative description of the localisation and position tracking algorithm is shown in Fig. 3. The state vector includes the following variables: the (x, y) coordinates of the midpoint of the rear axle in the chosen Cartesian reference frame, the orientation angle θ with respect to the x-axis and, finally, the linear and angular velocity offsets (referred to as μ and δ, respectively) due to encoder nonidealities and left-side/right-side walker asymmetries. The encoders are used in the prediction step of the EKF to reconstruct the relative position and motion direction of the c-Walker with respect to the initial state. Although the data rate from such sensors (i.e. 250 Hz) is high enough to assure continuous position tracking, the open-loop estimation uncertainty tends to grow indefinitely, as is customary when dead-reckoning techniques are used.
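The dead-reckoning part of the prediction step can be illustrated with a minimal sketch. It propagates only the state estimate (not the covariance) and assumes that the offsets μ and δ are additive corrections to the encoder-derived linear and angular velocities; the chapter does not specify how the offsets enter the model, so this choice, the function name `predict_state` and the argument names are illustrative assumptions.

```python
import math


def predict_state(state, v_enc, w_enc, dt=1.0 / 250.0):
    """Propagate the five-state unicycle model over one encoder sample.

    state  : (x, y, theta, mu, delta) in metres, radians, m/s and rad/s
    v_enc  : linear velocity derived from the wheel encoders (m/s)
    w_enc  : angular velocity derived from the wheel encoders (rad/s)
    dt     : sampling period; 1/250 s matches the 250 Hz encoder rate
    """
    x, y, theta, mu, delta = state
    # Assumption: offsets are additive corrections to the measured velocities.
    v = v_enc + mu
    w = w_enc + delta
    x_new = x + v * dt * math.cos(theta)
    y_new = y + v * dt * math.sin(theta)
    theta_new = theta + w * dt
    # The offsets are modelled as constant between updates.
    return (x_new, y_new, theta_new, mu, delta)


# Example: one second of straight motion at 1 m/s along the x-axis.
state = (0.0, 0.0, 0.0, 0.0, 0.0)
for _ in range(250):
    state = predict_state(state, v_enc=1.0, w_enc=0.0)
```

Because no measurement corrects the estimate in this loop, any error in `v_enc` or `w_enc` accumulates at every step, which is the unbounded uncertainty growth mentioned above.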

In order to keep position uncertainty within the desired bounds (e.g. ±1 m with 99 % probability), a grid of low-cost passive RFID tags stuck on the floor at known locations is used. In this way, the position estimated by the c-Walker on the map can be updated by simply knowing the ID of a detected tag, with uncertainty no larger than the RFID reading range (i.e. about ±10 cm). Thanks to the fusion of odometry and RFID data, the grid can be coarser than in other solutions found in the literature [4]. Several simulations showed that a tag spacing of about 2–3 m can provide a reasonable trade-off between the desired accuracy and deployment costs [5]. Unfortunately, RFID tag detection does not provide precise information about the direction of motion.
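A simplified sketch of how a tag detection could correct the position estimate is given below. It is not the EKF update used on the c-Walker: it treats the x and y axes independently with scalar Kalman updates (a full EKF would use the joint covariance matrix), and it assumes that the tag-reading error is uniformly distributed within the ±10 cm reading range, which gives a variance of 0.1²/3 m². The tag ID, its coordinates and all function names are hypothetical.

```python
# Hypothetical tag map: tag ID -> known (x, y) floor coordinates in metres.
TAG_MAP = {"tag-07": (2.0, 4.0)}

READ_RANGE_M = 0.10
# Assumption: reading error uniform in [-0.10, +0.10] m, so variance = a^2 / 3.
MEAS_VAR = READ_RANGE_M ** 2 / 3.0


def scalar_update(estimate, variance, measurement, measurement_variance):
    """Standard scalar Kalman measurement update."""
    gain = variance / (variance + measurement_variance)
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance


def rfid_update(x, y, var_x, var_y, tag_id):
    """Correct the position estimate using the known location of a detected tag."""
    if tag_id not in TAG_MAP:
        return x, y, var_x, var_y  # unknown tag: leave the estimate unchanged
    tag_x, tag_y = TAG_MAP[tag_id]
    x, var_x = scalar_update(x, var_x, tag_x, MEAS_VAR)
    y, var_y = scalar_update(y, var_y, tag_y, MEAS_VAR)
    return x, y, var_x, var_y
```

When the odometry variance is much larger than the tag-reading variance, the gain is close to one and the estimate essentially snaps to the tag location, which is why sparse tags are enough to bound the drift.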

In order to update the estimated direction more effectively, the angular velocity values measured by the gyroscope inside the on-board IMU are integrated to update the state of the EKF any time the walker turns left or right. However, the gyroscope-based angle measurements are also affected by unbounded uncertainty growth. Therefore, sporadic absolute orientation values in the chosen reference frame are needed to reset the angular drift introduced by the gyroscope. Since compasses and magnetometers proved to be unreliable indoors, the absolute orientation values are obtained by measuring the angle between the direction of the walker at a given time and the x-axis direction indicated by a grid of visual markers (e.g. arrow-shaped stickers or QR-codes [6]) placed on the floor and recognised in the images collected from the front camera. Again, various simulations showed that a distance of a few metres between adjacent markers is enough to assure a good trade-off between estimation accuracy and deployment complexity [5]. It is worth emphasising that a localisation fully based on computer vision is not feasible in the case considered, as the camera also has to be used for trajectory planning and the available computational resources are limited. In fact, marker detection relies on low-rate image acquisition (e.g. at 10 Hz).
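The two heading sources described here can be sketched as follows. The sketch assumes angles measured counter-clockwise in radians and a marker whose arrow points along the map x-axis; under these assumptions, if the arrow is seen at angle α relative to the walker's forward direction, the walker heading is −α. The function names are illustrative and the image processing that extracts α is not shown.

```python
import math


def wrap_angle(angle):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def integrate_gyro(theta, angular_rate, dt):
    """Relative heading update: integrate the gyroscope rate over dt seconds."""
    return wrap_angle(theta + angular_rate * dt)


def heading_from_marker(marker_angle_in_walker_frame):
    """Absolute heading from a floor marker aligned with the map x-axis.

    Assumption: the argument is the arrow direction measured counter-clockwise
    from the walker's forward axis, as extracted from the camera image.
    """
    return wrap_angle(-marker_angle_in_walker_frame)
```

In a filter, `integrate_gyro` would run at the IMU rate, while the value returned by `heading_from_marker` would be used as a sporadic absolute measurement that removes the accumulated drift.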

4 Route Planning

Route planning is based on a two-level approach. The first level, called long-term planner, is based on a global view of the environment and computes a trajectory according to the objectives of the AP, while considering fixed obstacles and critical areas known a priori. The second level, called short-term planner, exploits the available on-board sensors (particularly the camera) to detect dynamic obstacles (e.g. other people [7]) and to update the trajectory, while assuring comfort and minimising the probability of collisions [8]. Figure 4 shows the main modules of the route planning algorithm running on the Barebone platform.

The long-term planner takes care of building a complete route between the current AP position and the selected PoI. Route construction takes into consideration static obstacles (e.g. walls, pieces of furniture) and temporarily forbidden areas including, but not limited to, crowded places and critical regions identified by appropriate signs (e.g. wet-floor or out-of-order signs). Such signs are detected by a vision algorithm that uses live images recorded by the front camera. The algorithm relies on both optical character recognition (OCR) and geometric shape recognition and exploits the depth information associated with the images to localise the corresponding signs on the map. The core of the planner is Dijkstra's algorithm executed on a Quadtree representation of the map of the environment [9]. From the technical point of view, the vectorial map and its representation are stored in a spatial database (Spatialite; see Note 1). The set and the weight of nodes and edges of the graph representing the map are periodically updated to reflect the status of the environment at a given time. For example, if a wet-floor sign is detected, the planner disables all the nodes of the graph that are located within a circular area of a few square metres around the position of the sign.
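The interplay between node disabling and shortest-path search can be illustrated with a small sketch. For brevity it runs Dijkstra's algorithm on a hand-written four-node graph rather than on a Quadtree decomposition, and the node names, coordinates, edge weights and the 0.5 m radius are made-up values chosen only for illustration.

```python
import heapq
import math

# Hypothetical map graph: node -> (x, y) in metres, and weighted adjacency lists.
NODES = {"A": (0.0, 0.0), "B": (1.0, 0.0), "C": (2.0, 0.0), "D": (1.0, 1.0)}
EDGES = {
    "A": [("B", 1.0), ("D", 1.5)],
    "B": [("A", 1.0), ("C", 1.0)],
    "C": [("B", 1.0), ("D", 1.5)],
    "D": [("A", 1.5), ("C", 1.5)],
}


def disable_nodes_near(nodes, sign_xy, radius):
    """Return the nodes lying within `radius` metres of a detected sign."""
    sx, sy = sign_xy
    return {n for n, (x, y) in nodes.items() if math.hypot(x - sx, y - sy) <= radius}


def dijkstra(edges, start, goal, disabled=frozenset()):
    """Return (path, cost) of the cheapest route avoiding disabled nodes, or None."""
    dist = {start: 0.0}
    prev = {}
    queue = [(0.0, start)]
    while queue:
        d, u = heapq.heappop(queue)
        if u == goal:
            path = [u]
            while path[-1] != start:
                path.append(prev[path[-1]])
            return path[::-1], d
        if d > dist.get(u, math.inf):
            continue  # stale queue entry
        for v, w in edges.get(u, []):
            if v in disabled:
                continue
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(queue, (nd, v))
    return None


blocked = disable_nodes_near(NODES, sign_xy=(1.0, 0.0), radius=0.5)
route_clear = dijkstra(EDGES, "A", "C")
route_wet_floor = dijkstra(EDGES, "A", "C", disabled=blocked)
```

With no sign detected the route goes through B; once the sign near B is detected, B is disabled and the planner falls back to the longer route through D.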

The short-term planner relies on a control algorithm based on a receding horizon approach, which exploits social rules driving the cohabitation of humans [10]. The algorithm uses the well-known Social Force Model (SFM) that represents people as 2D circles with attractive and repulsive forces [11]. Thus, it is possible to model the force that attracts people towards the same PoI, as well as the repulsive force that naturally prevents people from crashing into walls or other people. The control algorithm predicts the evolution of people moving in the environment and, consequently, it estimates the probability for the AP to reach the goal safely without any collision. In particular, the position of pedestrians is obtained using a people-tracker algorithm that relies on the images collected from the camera, while pedestrians' directions can be estimated from the vectors built using pairs of consecutive position values. The evolution of pedestrians moving in the environment is predicted by integrating the SFM over a limited time horizon. In principle, there is no upper limit to the number of pedestrians that can be handled by the algorithm. Further details on this algorithm are reported in [8]. The control algorithm exploits Statistical Model Checking techniques to verify the evolution of the SFM against temporal-logic high-level constraints [12, 13], such as, for instance, "reach the goal in a finite time, but no closer than 0.5 m from people and no closer than 1 m from obstacles". In order to estimate the probability of success, the possible evolution of the people moving around the c-Walker in a limited time horizon (a few seconds) is first simulated many times. Then, every predicted scenario is verified against the above temporal-logic constraints and, finally, the probability of success is estimated under the assumption that the AP slightly changes his/her initial direction. This step is repeated until the algorithm has tested all possible directions (e.g. 0°, ±25°, ±50°, ±75°, ±90°). Finally, the direction that maximises the probability of success is suggested to the AP through the guidance mechanism (see Sect. 5). If the AP ignores the suggested trajectory or if an unforeseen obstacle is detected, a new route is computed.
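The simulate-verify-select loop can be sketched in a few lines. This is a heavily simplified illustration, not the algorithm of [8]: pedestrians follow a constant-velocity model with Gaussian position noise instead of the SFM, the only constraint checked is the 0.5 m clearance from people, and the walker speed, horizon, noise level and number of runs are arbitrary assumptions. Coordinates are expressed in a frame centred on the walker, with the x-axis along its current heading.

```python
import math
import random

CANDIDATE_DEG = [0, 25, -25, 50, -50, 75, -75, 90, -90]


def simulate_once(direction_rad, pedestrians, rng, speed=1.0, horizon=3.0,
                  dt=0.1, min_dist=0.5, noise=0.02):
    """Simulate one random scenario; True if the clearance constraint always holds."""
    wx = wy = 0.0
    peds = [list(p) for p in pedestrians]  # each pedestrian: [x, y, vx, vy]
    for _ in range(int(round(horizon / dt))):
        wx += speed * dt * math.cos(direction_rad)
        wy += speed * dt * math.sin(direction_rad)
        for p in peds:
            # Stand-in for the SFM: constant velocity plus random perturbation.
            p[0] += p[2] * dt + rng.gauss(0.0, noise)
            p[1] += p[3] * dt + rng.gauss(0.0, noise)
            if math.hypot(p[0] - wx, p[1] - wy) < min_dist:
                return False
    return True


def success_probabilities(pedestrians, runs=200, seed=0):
    """Estimate, for each candidate direction, the fraction of safe scenarios."""
    rng = random.Random(seed)
    probs = {}
    for deg in CANDIDATE_DEG:
        safe = sum(simulate_once(math.radians(deg), pedestrians, rng)
                   for _ in range(runs))
        probs[deg] = safe / runs
    return probs


def best_direction(probs):
    """Return the candidate direction (in degrees) with the highest estimate."""
    return max(probs, key=probs.get)


# Example: one person standing 1.5 m straight ahead of the walker.
probs = success_probabilities([(1.5, 0.0, 0.0, 0.0)])
suggested = best_direction(probs)
```

In this example the straight-ahead direction violates the clearance constraint in essentially every simulated scenario, so a direction that deviates from 0° is suggested.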

5 Guidance Mechanism

The guidance system has the purpose of gently inviting the user to follow the planned route. In order to improve comfort during navigation, the force feedback has to be perceived as soft by the user and it should be properly modulated while he/she approaches the boundaries of an area of interest. Guidance is implemented using various complementary techniques, i.e. mechanical, haptic and audio-based.

Normally, the AP is free to push the c-Walker according to his/her needs. However, the two pivoting motors mounted on the front caster wheels can be selectively activated by the Beaglebone to steer the walker along the suggested path. As long as the user moves within a safety region around the desired path, only sporadic and soft corrective actions are exerted. The control becomes more authoritative when the walker deviates significantly from the path, thus approaching potentially dangerous areas.

Although the mechanical feedback is effective, it can sometimes be perceived as annoying. For this reason, other solutions (e.g. based on haptic guidance) have been investigated and implemented. Various studies have demonstrated that sensitivity to vibrations is particularly high on hairy skin and in bony areas [14]. Thus, two identical wearable haptic bracelets have been developed to invite the AP to turn right or left. In each bracelet, two cylindrical vibro-motors are controlled independently via Bluetooth. Each vibrotactile bracelet can be fitted to the arm, just below the elbow. This configuration has proved to be very effective in discriminating the haptic stimuli from the intrinsic vibrations of the c-Walker.

Another way to inform the AP about the best path to follow is based on the generation of appropriate sound stimuli. In daily life, humans are able to estimate the direction of arrival of sounds because the brain is able to recognise relative differences in amplitude and phase of the sound waves impinging on the two ears (binaural cues). Also, the brain can identify the spectral cues that originate at a single ear (monaural cues) because of the reflections generated by the pinna folds. These cues depend on the relative position between the listener and the sound source [15]. By modelling and reproducing these phenomena, it is possible to synthesise positional audio signals associating a sound source to the route suggested by the route planner. As a result, the user can be invited to move in the direction from which the perceived sound comes.

Each synthesised audio signal is obtained by means of a convolution between a monaural sound signal and an impulse response. These Head-Related Impulse Responses (HRIR) can be obtained through direct measurements, or can be synthesised using models that compute the response based on anthropometric measurements. To increase the sensation of spaciousness, the sound rendering algorithm includes the Image Source Method (ISM), which performs reverberation taking into account both the relative position of the virtual sound sources and the size of the environment. The sensation of distance is accentuated by introducing a proper amount of delay and attenuation into the sound signal [16, 17]. In order to ensure a correct placement of the spatial sound stimuli, the AP's head orientation with respect to the c-Walker direction of motion has to be monitored. For this reason, the headphones are equipped with an inertial platform. Moreover, the IMU allows the AP to exploit small head movements to resolve ambiguous situations. In fact, in real life humans also take advantage of small head movements to collect more cues on the position of the auditory event [18].
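The core of the rendering chain (convolution with a pair of HRIRs, followed by distance-dependent delay and attenuation) can be sketched as below. The sketch omits the ISM reverberation and head tracking, uses a direct-form convolution for clarity rather than speed, and assumes an inverse-distance attenuation law and a speed of sound of 343 m/s; the chapter does not state which attenuation law is used. Function names and the toy impulse responses are illustrative.

```python
def convolve(signal, kernel):
    """Direct-form discrete convolution of two sample sequences."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


def render_binaural(mono, hrir_left, hrir_right, distance_m,
                    sample_rate=44100, speed_of_sound=343.0):
    """Return (left, right) channels for a virtual source at the given distance."""
    # Propagation delay expressed in whole samples.
    delay = int(round(distance_m / speed_of_sound * sample_rate))
    # Assumption: inverse-distance attenuation, clamped below 1 m.
    gain = 1.0 / max(distance_m, 1.0)
    pad = [0.0] * delay
    left = pad + [gain * v for v in convolve(mono, hrir_left)]
    right = pad + [gain * v for v in convolve(mono, hrir_right)]
    return left, right


# Toy example: a unit impulse and one-tap "HRIRs" that make the left ear louder.
left, right = render_binaural([1.0], [1.0], [0.5], distance_m=2.0,
                              sample_rate=343, speed_of_sound=343.0)
```

In a real system the HRIR pair would be selected according to the direction of the virtual source relative to the listener's head, which is why the head orientation must be tracked.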

6 Experimental Results

The first experiments on the c-Walker prototype have been conducted at the University of Trento. Figure 5 shows the results of localisation and guidance in a simple case: an L-shaped path along a corridor towards an elevator. In Fig. 5a the planned route (dashed line) and the estimated trajectory (solid line) are compared. Figure 5b shows the difference, expressed in radians, between the planned and the measured directions of motion along the way. Any time the difference exceeds ±0.2 rad (dash-dotted horizontal lines), the actuators used to guide the AP (e.g. the haptic bracelets) are activated to correct the user's trajectory. Finally, the activation signals for the right-side (dashed line) and for the left-side (solid line) actuators are shown in Fig. 5c.
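The threshold rule described for Fig. 5 can be written as a short sketch. The sign convention is an assumption: angles are taken counter-clockwise, so a planned direction larger than the measured one is interpreted as a request to turn left. The function name and return values are illustrative.

```python
import math


def guidance_command(planned_heading, measured_heading, threshold=0.2):
    """Return which actuator to activate for a given heading error (radians)."""
    error = planned_heading - measured_heading
    # Wrap the error to [-pi, pi] so that headings near +/-pi compare correctly.
    error = math.atan2(math.sin(error), math.cos(error))
    if error > threshold:
        return "left"    # assumption: positive error means turn counter-clockwise
    if error < -threshold:
        return "right"
    return "none"        # within the +/-0.2 rad band: no correction
```

For instance, under this convention `guidance_command(0.5, 0.0)` requests a left turn, while an error of 0.1 rad stays inside the dead band and triggers no actuator.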

Table 1 shows the average mean value and the average standard deviation of the position and the heading errors, computed over about 40 routes of different length and shape in a room of 150 m². In all the experiments the distance between pairs of adjacent RFID tags and visual markers on the floor is about 2 m. The individual errors result from the difference between the coordinates and angles estimated by the EKF and the corresponding values (properly aligned in time) measured by a SICK S300 Expert laser scanner located at the origin of the chosen reference frame (i.e. one corner of the room). The results in Table 1 confirm that the worst-case 3σ positioning uncertainty is well below ±1 m, as desired.

7 Conclusions and Ongoing Work

The c-Walker system developed within the EU project Devices for Assisted Living (DALi) has the ambitious goal of assisting people with moderate cognitive impairments to navigate safely and with improved confidence in large and complex environments. The system consists of modules for localisation, route planning and user guidance and it is conceived to enhance the sensory and cognitive abilities of the user, without forcing him/her to act unwillingly. A first prototype of the c-Walker has been tested in the laboratories of the University of Trento. Extensive field experiments with potential end users are planned for the near future.

Notes

1. Spatialite: http://www.gaia-gis.it/gaia-sins/.

References

1. Assistants for Safe Mobility (ASSAM). http://assam.nmshost.de/

2. The E-NO-FALLS project. http://www.e-nofalls.eu

3. The iWalkActive project. http://www.iwalkactive.eu/

4. Nazari Shirehjini, A.A., Yassine, A., Shirmohammadi, S.: An RFID-based position and orientation measurement system for mobile objects in intelligent environments. IEEE Trans. Instrum. Measur. 61(6), 1664–1675 (2012)

5. Nazemzadeh, P., Fontanelli, D., Macii, D., Palopoli, L.: Indoor positioning of wheeled devices for ambient assisted living: a case study. In: Proceedings of IEEE International Instrumentation and Measurement Technology Conference (I2MTC), pp. 1421–1426. Montevideo, Uruguay, May 2014

6. Cimini, G., Ferracuti, F., Freddi, A., Iarlori, S., Monteriù, A.: An inertial and QR code landmarks-based navigation system for impaired wheelchair users. In: Longhi, S., Siciliano, P., Germani, M., Monteriù, A. (eds.) Ambient Assisted Living, pp. 205–214. Springer International Publishing (2014)

7. Padeleris, P., Zabulis, X., Argyros, A.A.: Multicamera tracking of multiple humans based on colored visual hulls. In: IEEE Conference on Emerging Technologies and Factory Automation (ETFA), pp. 1–8. Cagliari, Italy, Sep. 2013

8. Colombo, A., Fontanelli, D., Legay, A., Palopoli, L., Sedwards, S.: Motion planning in crowds using statistical model checking to enhance the social force model. In: Proceedings of the IEEE 52nd Annual Conference on Decision and Control (CDC), pp. 3602–3608. Florence, Italy, Dec. 2013

9. Finkel, R.A., Bentley, J.L.: Quad trees: a data structure for retrieval on composite keys. Acta Informatica 4(1), 1–9 (1974)

10. Colombo, A., Fontanelli, D., Gandhi, D., De Angeli, A., Palopoli, L., Sedwards, S., Legay, A.: Behavioural templates improve robot motion planning with social force model in human environments. In: IEEE Conference on Emerging Technologies and Factory Automation (ETFA), pp. 1–6. Cagliari, Italy, Sep. 2013

11. Helbing, D., Molnár, P.: Social force model for pedestrian dynamics. Phys. Rev. E 51, 4282–4286 (1995)

12. Jegourel, C., Legay, A., Sedwards, S.: A platform for high performance statistical model checking – PLASMA. In: Proceedings of the 18th International Conference on Tools and Algorithms for the Construction and Analysis of Systems (TACAS'12), pp. 498–503. Springer, Berlin (2012)

13. Legay, A., Delahaye, B., Bensalem, S.: Statistical model checking: an overview. In: Barringer, H., Falcone, Y., Finkbeiner, B., Havelund, K., Lee, I., Pace, G., Roşu, G., Sokolsky, O., Tillmann, N. (eds.) Runtime Verification. Lecture Notes in Computer Science, vol. 6418, pp. 122–135. Springer, Berlin Heidelberg (2010)

14. Gemperle, F., Ota, N., Siewiorek, D.: Design of a wearable tactile display. In: Proceedings of the Fifth International Symposium on Wearable Computers, pp. 5–12. Zurich, Switzerland, Oct. 2001

15. Blauert, J.: Spatial Hearing: The Psychophysics of Human Sound Localization. MIT Press (1997)

16. Rizzon, L., Passerone, R.: Embedded soundscape rendering for the visually impaired. In: IEEE International Symposium on Industrial Embedded Systems (SIES), pp. 101–104. Porto, Portugal, June 2013

17. Rizzon, L., Passerone, R.: Spatial sound rendering for assisted living on an embedded platform. In: de Gloria, A. (ed.) Applications in Electronics Pervading Industry, Environment and Society. Lecture Notes in Electrical Engineering, vol. 289, pp. 61–73. Springer International Publishing (2014)

18. Begault, D.R., Wenzel, E.M., Anderson, M.R.: Direct comparison of the impact of head tracking, reverberation, and individualized head-related transfer functions on the spatial perception of a virtual speech source. J. Audio Eng. Soc. 49(10), 904–916 (2001)

Acknowledgments

This work has been funded by the EU FP7 project Devices for Assisted Living (DALi), GA n° 288917. http://www.ict-dali.eu/dali

Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Aggravi, M. et al. (2015). A Smart Walking Assistant for Safe Navigation in Complex Indoor Environments. In: Andò, B., Siciliano, P., Marletta, V., Monteriù, A. (eds) Ambient Assisted Living. Biosystems & Biorobotics, vol 11. Springer, Cham. https://doi.org/10.1007/978-3-319-18374-9_45

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-18373-2

Online ISBN: 978-3-319-18374-9

eBook Packages: Engineering, Engineering (R0)