Abstract

This paper focuses on the design of a recurrent Takagi-Sugeno-Kang interval type-2 fuzzy neural network (RTSKIT2FNN) for the mobile robot trajectory tracking problem. The RTSKIT2FNN incorporates a recurrent frame of internal-feedback loops into an interval type-2 fuzzy neural network that uses simple interval type-2 fuzzy sets in the antecedent part and the Takagi-Sugeno-Kang (TSK) type in the consequent part of each fuzzy rule. The antecedent part forms a local internal feedback loop by feeding the membership function output of each node in the fuzzification layer back to itself. The rule base of the RTSKIT2FNN is initially empty; all rules are generated by online structure learning, and all parameters of the RTSKIT2FNN are updated online using a gradient descent algorithm with varied learning rates (VLR). Experimental results demonstrate the effectiveness of the proposed RTSKIT2FNN for mobile robot control.


1 Introduction

In recent years, mobile robots have been used extensively in industry and for tasks in dangerous and harsh environments, such as space exploration, military operations, rescue after natural disasters, and nuclear sites. The ability to track a planned path and perform tasks autonomously in these environments remains one of the main challenges for researchers in the field of intelligent mobile robots. Much recent research has focused on trajectory tracking control, and most of the proposed control strategies use the kinematic model, which produces a reference velocity from the position errors [1–5].

Recently, many studies have applied artificial intelligence techniques, such as fuzzy logic control (FLC) and neural networks (NNs), to mobile robot control. FLC uses linguistic information based on human expertise; as a result, it offers several advantages, such as stability, robustness, model-free design, the use of expert knowledge, and IF-THEN rule-based inference [6, 7]. FLC has therefore attracted considerable attention in mobile robot control research. In [8], Castillo et al. designed a dynamic fuzzy logic controller for mobile robot trajectory tracking whose membership functions are optimized with an ant colony optimization algorithm. In [9], Huq et al. proposed a behaviour-modulation technique that combines the state-based formalism of discrete event systems (DES) with deterministic fuzzy decision making. In [10], Hou et al. proposed an adaptive controller for mobile robots based on backstepping and a fuzzy approach. In [11], Bencherif and Chouireb presented a sensor data fusion strategy based on fuzzy logic for the navigation of a wheeled mobile robot in an unknown environment. In [7], Hagras presented a control architecture using a type-2 FLC for mobile robot navigation in dynamic unknown environments.

These studies achieved the main control objectives and have had a considerable impact on mobile robot control. However, fuzzy logic relies on human expert knowledge, has no learning ability, and fuzzy rule derivation is often difficult [12]. Recently, much research has addressed the development and application of neural networks (NNs) for control and system identification. In [13], Bencherif and Chouireb proposed a new neural network training method, combining the Levenberg-Marquardt optimization method with an iterated Kalman filter, for mobile robot trajectory tracking control. In [14], Yacine et al. presented the design of a kinematic controller for wheeled mobile robot trajectory tracking based on an adaptive linear neuron (ADALINE) network. In [15], Li et al. proposed a trajectory-tracking controller for mobile robots that incorporates a neural-dynamic optimized model predictive control approach. In [16], Shojaei presented neural adaptive output feedback formation control of type (m, s) wheeled mobile robots.

More recently, much research has addressed combining fuzzy logic systems (FLSs) and neural networks (NNs). Fuzzy neural networks (FNNs) obtain their learning ability from NNs and their inference mechanism from fuzzy systems, which makes them an effective tool for mobile robot control. Examples of FNNs include the adaptive network-based fuzzy inference system (ANFIS) [17, 18], which has a fixed structure, tunes all parameters with backpropagation or a hybrid learning algorithm, and uses a Takagi-Sugeno-Kang (TSK)-type consequent part; the self-constructing neural fuzzy inference network (SONFIN) [19], which uses a Mamdani-type consequent part; and the recurrent FNN (RFNN) [20, 21], which uses self-feedback connections as internal memories by feeding the output of each membership function (MF) back as an input.

Moreover, all of the aforementioned FNNs and RFNNs are based on type-1 fuzzy sets. Type-2 fuzzy sets were introduced by Zadeh [22], and in recent years type-2 fuzzy logic systems (FLSs) have drawn much attention. Studies of nonlinear dynamic systems subject to a variety of internal and external perturbations show that type-2 methods have more potential than type-1 methods. However, the theory of type-2 FLSs is more complicated than that of type-1 FLSs. Interval type-2 fuzzy sets were proposed in [23] to reduce this complexity and simplify type-2 fuzzy set theory. Interval type-2 FNNs (IT2FNNs) have attracted considerable attention [12, 24–33], including learning procedures for IT2FNNs with uncertain-mean Gaussian membership functions. In [24], Abiyev and Kaynak described the design of an IT2FNN trained with a gradient descent algorithm for the identification and control of time-varying systems. In [25, 26], the authors proposed self-learning IT2FNNs with a fixed structure that use sliding mode (SM) theory for parameter learning. In [27], Lin et al. used an IT2FNN with an adaptive control law for motion control. Castro et al. [28] proposed a hybrid learning algorithm for IT2FNNs. In [12, 29], the authors proposed self-evolving IT2FNNs (SEIT2FNN) that use interval type-2 fuzzy sets with uncertain-mean Gaussian MFs in the antecedent parts and TSK-type consequent parts. Structure learning lets the IT2FNN evolve its own structure, parameter learning tunes the antecedent parameters with a gradient descent algorithm, and the consequent parameters are learned with a rule-ordered Kalman filter. In [30], Juang et al. proposed a recurrent self-evolving IT2FNN (RSEIT2FNN) for dynamic nonlinear systems, whose antecedent part forms local internal feedback loops by feeding the firing strength of each rule back to itself.
In [31], Lin et al. applied a functional-link network (FLN) to a self-evolving IT2FNN for nonlinear system identification and control. In [32], Lin et al. designed a self-organizing IT2FNN for antilock braking systems, using gradient descent for parameter learning and particle swarm optimization (PSO) to find the optimal learning rates. In [33], Lin et al. proposed simplified interval type-2 fuzzy neural networks in which the design factors ql and qr are learned to weight the lower and upper values, avoiding the iterative Karnik-Mendel (K-M) method for finding the switch points L and R.

The motivation of this paper is to construct a self-evolving recurrent TSK interval type-2 FNN for mobile robot trajectory tracking. The self-evolving algorithm allows the RTSKIT2FNN to reach a suitable structure during online learning, and the varied learning rates (VLR) algorithm adapts the learning rates of the gradient descent algorithm to guarantee the stable convergence of the control system, which is proved using a discrete Lyapunov function. The major contributions of the proposed control are summarized as follows:

- (1) Successfully incorporating a recurrent frame into the interval type-2 FNN structure, using internal-feedback loops in the antecedent part that are formed by feeding the interval output of each membership function back to itself.

- (2) Performing the type-reduction process of the RTSKIT2FNN with fixed design factors ql and qr, which reduces the computational time.

- (3) Using online structure and parameter learning algorithms to improve the learning performance of the RTSKIT2FNN-VLR. The rule base of the RTSKIT2FNN is initially empty, and all rules are generated online by the structure learning algorithm. Concurrently with structure learning, all consequent and antecedent parameters of the RTSKIT2FNN are learned by a gradient descent algorithm.

- (4) Designing a varied learning rates (VLR) algorithm that adjusts the learning rates of the gradient descent algorithm to guarantee the robustness and stable convergence of the system.

- (5) Verifying the performance of the proposed RTSKIT2FNN-VLR control experimentally through a comprehensive comparison with a type-1 FNN, an RFNN and other interval type-2 FNNs.

The rest of this paper is organized as follows. Section 2 introduces the kinematic model of the mobile robot. Section 3 presents the structure of the RTSKIT2FNN. Section 4 describes the online structure and parameter learning of the RTSKIT2FNN in detail. Section 5 presents the experimental results, and Section 6 concludes the paper.

2 Kinematics model

The unicycle kinematic model underlies many types of nonholonomic wheeled mobile robots (WMRs); for this reason, it has attracted considerable theoretical attention from researchers.

Unicycle WMRs usually have two driving wheels, one mounted on each side of the robot's centre, and a free rolling wheel that supports the mechanical structure. The two driving wheels have the same radius r, are separated by 2R, and are driven by two electric actuators that provide motion and orientation, while the free rolling wheel is a self-adjusting support wheel. The mobile robot is assumed to consist of a rigid frame and non-deformable wheels moving on a horizontal 2D plane with the global coordinate frame (O, X, Y). Its configuration is represented by the vector q = [x, y, 𝜃], where (x, y) are the coordinates of the centre of the WMR in the fixed frame OXY and 𝜃 is its orientation angle, as shown in Fig. 1. The linear velocity of the robot is denoted by v and its angular velocity by w. The kinematic model (or equation of motion) of the mobile robot is then given by:

$$ \dot{x} = v\cos\theta, \quad \dot{y} = v\sin\theta, \quad \dot{\theta} = w $$

For the trajectory tracking problem, a reference trajectory must first be generated. The reference trajectory is:

The tracking errors expressed in world coordinates are:

Expressed in the moving (robot) coordinate frame, the tracking errors become:
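The kinematic model and the error transformations above can be illustrated with a short Python sketch. It assumes the standard unicycle model, a forward-Euler discretization with sampling time ts, and the common moving-frame error transformation that rotates the world-frame errors \((x_r - x, y_r - y, \theta_r - \theta)\) by the robot heading \(\theta\). Since the paper's error equations are not reproduced here, this transformation and the function names `unicycle_step` and `tracking_error` are assumptions for illustration.

```python
import math

def unicycle_step(x, y, theta, v, w, ts):
    """Advance x' = v cos(theta), y' = v sin(theta), theta' = w by one
    forward-Euler step of length ts (an assumed discretization)."""
    return (x + ts * v * math.cos(theta),
            y + ts * v * math.sin(theta),
            theta + ts * w)

def tracking_error(pose, ref):
    """Rotate world-frame errors into the robot (moving) frame.

    Assumes the common transformation
        [xe, ye, te] = R(theta) [xr - x, yr - y, thr - theta],
    with R(theta) = [[cos, sin, 0], [-sin, cos, 0], [0, 0, 1]].
    """
    x, y, theta = pose
    xr, yr, thr = ref
    dx, dy = xr - x, yr - y
    xe = math.cos(theta) * dx + math.sin(theta) * dy
    ye = -math.sin(theta) * dx + math.cos(theta) * dy
    # Wrap the orientation error into (-pi, pi] so it stays continuous.
    te = math.atan2(math.sin(thr - theta), math.cos(thr - theta))
    return xe, ye, te
```

For a robot at the origin heading along +Y and a reference point 1 m further along +Y with the same heading, the sketch gives xe ≈ 1 and ye ≈ 𝜃e ≈ 0, i.e. the reference lies straight ahead in the robot frame.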

3 Recurrent TSK interval type-2 fuzzy neural networks description

This section describes the structure of the RTSKIT2FNN, a multi-input single-output (MISO) system with n inputs and one output. Figure 2 shows the proposed six-layer network, which realizes an interval type-2 fuzzy neural network with a Takagi-Sugeno-Kang (TSK)-type consequent part. The function of each layer is described as follows.

- Layer 1:

(Input layer): Each node in this layer represents an input variable xj, j = 1,..., n and there are no weights to be modified in this layer.

- Layer 2:

(Fuzzification or membership function layer): Each node in this layer implements a recurrent interval type-2 MF to perform the fuzzification operation.

The input of the membership layer can be represented by:

where t denotes the iteration index, \({\alpha _{i}^{j}}\) represents the weight of the self-feedback loop, and \(\mu _{\overline {A}_{j}^{i} } (t-1)\) represents the output signal of layer 2 at time (t-1), which is defined with Gaussian membership functions as

Each MF of the premise part can be represented as a bounded interval in terms of an upper MF, \(\overline {\mu }_{j}^{i}\), and a lower MF, \(\underline {\mu }_{j}^{i}\), where

And

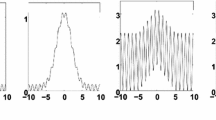

Thus, the output of this layer is the interval \([\underline {\mu }_{j}^{i}, \overline {\mu }_{j}^{i}]\), as shown in Fig. 3.
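The upper and lower MFs can be illustrated with the Gaussian MF with uncertain mean \([m_1, m_2]\) and width \(\sigma\) that is common in self-evolving IT2FNNs [12]; the learning rates ηm1, ηm2 and ησ of Section 4.2 point to these parameters. The sketch further assumes that the recurrent input is \(u = x + \alpha\,\mu(t-1)\), with the centre of the previous MF interval used as the fed-back scalar. The piecewise MF forms, this feedback choice and the names `it2_gaussian_mf` and `RecurrentIT2MF` are assumptions for illustration, not the paper's exact definitions.

```python
import math

def gaussian(u, m, sigma):
    return math.exp(-0.5 * ((u - m) / sigma) ** 2)

def it2_gaussian_mf(u, m1, m2, sigma):
    """Return (lower, upper) membership of u for a Gaussian with uncertain mean in [m1, m2]."""
    if u < m1:
        upper = gaussian(u, m1, sigma)
    elif u > m2:
        upper = gaussian(u, m2, sigma)
    else:
        upper = 1.0  # some mean in [m1, m2] coincides with u
    # The lower MF uses the mean that is farther from u.
    if u <= 0.5 * (m1 + m2):
        lower = gaussian(u, m2, sigma)
    else:
        lower = gaussian(u, m1, sigma)
    return lower, upper

class RecurrentIT2MF:
    """Hypothetical recurrent MF node with self-feedback weight alpha."""

    def __init__(self, m1, m2, sigma, alpha):
        self.m1, self.m2, self.sigma, self.alpha = m1, m2, sigma, alpha
        self.prev = 0.0  # feedback memory mu(t-1), initialised to zero

    def __call__(self, x):
        u = x + self.alpha * self.prev  # assumed recurrent input
        lower, upper = it2_gaussian_mf(u, self.m1, self.m2, self.sigma)
        self.prev = 0.5 * (lower + upper)  # assumed scalar fed back at t+1
        return lower, upper
```

With the Section 4.1 values Δx = 0.1, σfixed = 0.3 and αfixed = 0.1, a node centred at 0 returns an upper membership of 1 inside [−0.1, 0.1], and the self-feedback term shifts the effective input on later calls even when x is unchanged.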

- Layer 3:

(Firing layer): This layer contains M nodes, each representing one fuzzy rule. Each node computes the spatial firing strength \(F^{i}\) from the values it receives from layer 2, using the algebraic product as the fuzzy meet operation:

$$ \underline f^{i} = \prod_{j=1}^{n} \underline \mu_{j}^{i}, \quad \overline f^{i} = \prod_{j=1}^{n} \overline \mu_{j}^{i}, \quad F^{i} = [\underline f^{i}, \overline f^{i}] $$ (10)

- Layer 4:

(Consequent layer): Each node in this layer is a TSK-type consequent node; for each rule node in layer 3 there is a corresponding consequent node in layer 4. The node output is an interval type-1 set \([w_{l}^{i}, w_{r}^{i}]\), where the indices l and r denote the left and right limits, respectively, and is expressed as

$$ [w_{l}^{i}, w_{r}^{i}] = [c_{0}^{i} - s_{0}^{i}, c_{0}^{i} + s_{0}^{i}] + \sum_{j=1}^{n} [c_{j}^{i} - s_{j}^{i}, c_{j}^{i} + s_{j}^{i}]\, x_{j} $$ (11)

that is,

$$ w_{l}^{i} = \sum_{j=0}^{n} c_{j}^{i} x_{j} - \sum_{j=0}^{n} \left| x_{j} \right| s_{j}^{i} $$ (12)

and

$$ w_{r}^{i} = \sum_{j=0}^{n} c_{j}^{i} x_{j} + \sum_{j=0}^{n} \left| x_{j} \right| s_{j}^{i} $$ (13)

where \(x_{0} \equiv 1\).
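Equations (10), (12) and (13) can be checked with a short sketch. It assumes that a rule's layer-2 outputs are given as a list of (lower, upper) pairs and its consequent parameters as lists c = [c0, ..., cn] and s = [s0, ..., sn]; these data layouts and the function names `firing_interval` and `tsk_interval_consequent` are illustrative choices.

```python
import math

def firing_interval(mf_pairs):
    """Eq. (10): algebraic product of the lower and upper memberships of one rule."""
    f_lower = math.prod(lo for lo, _ in mf_pairs)
    f_upper = math.prod(up for _, up in mf_pairs)
    return f_lower, f_upper

def tsk_interval_consequent(x, c, s):
    """Eqs. (12)-(13): interval TSK consequent [w_l, w_r] with x0 = 1."""
    xs = [1.0] + list(x)  # prepend x0 = 1 for the bias terms c0, s0
    centre = sum(cj * xj for cj, xj in zip(c, xs))
    spread = sum(sj * abs(xj) for sj, xj in zip(s, xs))
    return centre - spread, centre + spread
```

For example, with x = [2, −1], all c_j = 0.05 and all s_j = 0.002, the consequent interval is [0.092, 0.108]; the negative input still widens the interval because the spread term uses \(|x_j|\).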

- Layer 5:

(Output processing layer): Each node in this layer computes an interval output [yl, yr] from the consequent outputs of layer 4 and the firing strengths \(F^{i}\) of layer 3, using the design factors [ql, qr] proposed in [33], which weight the lower and upper values without the iterative Karnik-Mendel method. In this paper, ql and qr are fixed (non-adjustable) and set to 0.5. The outputs of this layer are computed by

$$ y_{l} = \frac{(1-q_{l}) \sum_{i=1}^{M} \underline{f}^{i} w_{l}^{i} + q_{l} \sum_{i=1}^{M} \overline{f}^{i} w_{l}^{i}}{\sum_{i=1}^{M} (\underline{f}^{i} + \overline{f}^{i})} $$ (14)

and

$$ y_{r} = \frac{(1-q_{r}) \sum_{i=1}^{M} \overline{f}^{i} w_{r}^{i} + q_{r} \sum_{i=1}^{M} \underline{f}^{i} w_{r}^{i}}{\sum_{i=1}^{M} (\underline{f}^{i} + \overline{f}^{i})} $$ (15)

- Layer 6:

(Output layer): The node in this layer computes the output variable of the RTSKIT2FNN from the interval output [yl, yr] of layer 5. In this paper, the output is given by

$$ y = \frac{y_{l} + y_{r}}{2} $$ (16)
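Layers 5 and 6 reduce to a few weighted sums. The sketch below implements (14)–(16) as written, reading the denominator as the sum over rules of the lower plus upper firing strengths; per-rule quantities are passed as lists, and the names `type_reduce` and `network_output` are illustrative.

```python
def type_reduce(f_lower, f_upper, w_l, w_r, q_l=0.5, q_r=0.5):
    """Eqs. (14)-(15): closed-form type reduction with fixed design factors q_l, q_r."""
    den = sum(fl + fu for fl, fu in zip(f_lower, f_upper))
    y_l = ((1 - q_l) * sum(fl * w for fl, w in zip(f_lower, w_l))
           + q_l * sum(fu * w for fu, w in zip(f_upper, w_l))) / den
    y_r = ((1 - q_r) * sum(fu * w for fu, w in zip(f_upper, w_r))
           + q_r * sum(fl * w for fl, w in zip(f_lower, w_r))) / den
    return y_l, y_r

def network_output(y_l, y_r):
    """Eq. (16): output of layer 6."""
    return 0.5 * (y_l + y_r)
```

With ql = qr = 0.5, (14) and (15) as printed make yl and yr equal to half of the firing-weighted means of the \(w_l^i\) and \(w_r^i\). A single rule with firing interval [0.2, 0.8] and consequent interval [1, 3] therefore gives yl = 0.5, yr = 1.5 and y = 1.0.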

4 Online learning algorithm

This section presents the two-phase learning scheme of the RTSKIT2FNN. The first phase, structure learning, determines how the RTSKIT2FNN rules are constructed: learning starts with an empty rule base, and all fuzzy rules are evolved by an online structure learning scheme. The second phase, parameter learning, uses a gradient descent algorithm with varied learning rates to learn the parameters online.

4.1 Structure learning

Structure learning uses the rule firing strength as the criterion for rule generation. This idea was used for type-1 fuzzy rule generation in [19] and extended to type-2 fuzzy rule generation in [12, 33] by using the interval firing strength Fi in (10). The structure learning of the proposed RTSKIT2FNN is as follows.

The first incoming data point \(\overrightarrow x \) is used to generate the first fuzzy rule, with the uncertain means, width, centre and self-feedback weight of its recurrent interval type-2 fuzzy sets set as

where σfixed is a predefined constant width and Δx indicates the width of the uncertain mean region. If Δx is too small, the type-2 fuzzy set approximates a type-1 fuzzy set, and if Δx is too large, the type-2 fuzzy set covers most of the input domain. In this paper, Δx and σfixed are set to 0.1 and 0.3, respectively, and αfixed is set to 0.1.

For each subsequent incoming data point \(\overrightarrow x \), the firing strength interval in (10) is computed, and then the centre (mean) of the firing strength of each rule i is computed as

$$ f_{c}^{i} = \frac{\underline f^{i} + \overline f^{i}}{2} $$

The spatial firing strength centre \({f_{c}^{i}}\) serves as the criterion for generating a new rule, based on a predetermined threshold \(f_{th}\). For each new incoming data point,

$$ I = \arg\max_{1 \le i \le M(t)} f_{c}^{i} $$

where M(t) is the number of rules generated up to time t. If \({f_{c}^{I}}\le f_{th}\), a new fuzzy rule is generated and M(t+1) = M(t) + 1; this condition means that the data point is not matched well by any existing rule, so a new fuzzy rule is evolved. The uncertain means, width and centre of the type-2 fuzzy set of the new rule are defined in the same manner as for the first rule

and

where β > 0 determines the degree of overlap between fuzzy sets. Equation (21) states that the width is equal to the Euclidean distance between the average centres of the new fuzzy set and fuzzy set I, scaled by β. A large β produces high overlap between fuzzy sets, whereas a very small β produces almost no overlap. In this paper, β is set to 0.5, which means that the width is equal to half the distance between the fuzzy sets.

The initial consequent parameters \({c_{j}^{1}}\), j = 1, ..., n, of the first rule are set to the small value 0.05, and the parameters \({s_{j}^{1}}\), which define the initial output interval range, are set to the small value 0.002. For newly generated rules, the consequent parameters are assigned the same values as for the first rule.

The structure learning and fuzzy rule generation algorithm is as follows:

The parameters of each evolved rule are then tuned by the parameter learning algorithm introduced in the next section.
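The rule-generation logic of Section 4.1 can be sketched as follows. The values Δx = 0.1, σfixed = 0.3, αfixed = 0.1, β = 0.5 and the consequent initial values 0.05 and 0.002 come from the text. The rest is assumed: that a new rule has uncertain means \(x_j \pm \Delta x\) (as in the SEIT2FNN family [12]), that the width of a later rule is β times the per-input distance to the average centre of rule I, that the self-feedback term is ignored when computing the firing criterion, and that rules are stored as dictionaries. The function `maybe_add_rule`, the inclusion of c0 and s0, and the 1e-3 width floor are hypothetical.

```python
import math

def _gauss(u, m, sigma):
    return math.exp(-0.5 * ((u - m) / sigma) ** 2)

def _it2_mf(u, m1, m2, sigma):
    """(lower, upper) Gaussian MF with uncertain mean in [m1, m2]."""
    if u < m1:
        upper = _gauss(u, m1, sigma)
    elif u > m2:
        upper = _gauss(u, m2, sigma)
    else:
        upper = 1.0
    lower = _gauss(u, m2, sigma) if u <= 0.5 * (m1 + m2) else _gauss(u, m1, sigma)
    return lower, upper

def maybe_add_rule(rules, x, f_th, dx=0.1, sigma_fixed=0.3, alpha_fixed=0.1,
                   beta=0.5, c_init=0.05, s_init=0.002):
    """Hypothetical online rule generation; returns True if a rule was added."""
    n = len(x)
    if not rules:
        sigma = [sigma_fixed] * n  # first rule: fixed width
    else:
        centres = []
        for r in rules:
            pairs = [_it2_mf(xj, a, b, s)
                     for xj, a, b, s in zip(x, r["m1"], r["m2"], r["sigma"])]
            f_lo = math.prod(lo for lo, _ in pairs)
            f_up = math.prod(up for _, up in pairs)
            centres.append(0.5 * (f_lo + f_up))  # firing-strength centre f_c^i
        best = max(range(len(rules)), key=centres.__getitem__)  # rule I
        if centres[best] > f_th:
            return False  # x is already covered well enough by rule I
        # Width: beta times the distance to the average centre of rule I.
        # The 1e-3 floor is our own safeguard against a zero width.
        sigma = [max(beta * abs(xj - 0.5 * (a + b)), 1e-3)
                 for xj, a, b in zip(x, rules[best]["m1"], rules[best]["m2"])]
    rules.append({
        "m1": [xj - dx for xj in x],  # assumed uncertain-mean interval
        "m2": [xj + dx for xj in x],
        "sigma": sigma,
        "alpha": [alpha_fixed] * n,   # self-feedback weights
        "c": [c_init] * (n + 1),      # c_0..c_n (assumption: c_0 included)
        "s": [s_init] * (n + 1),      # s_0..s_n
    })
    return True
```

A repeated input is absorbed by the existing rule, while a distant input such as [2, 2] creates a second rule whose width is 0.5 × 2 = 1.0 in each dimension.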

4.2 Parameter learning

Parameter learning is performed concurrently with structure learning. It tunes all parameters of every fuzzy rule, including existing rules and newly generated ones. To describe parameter learning, we define the objective function as

where yd(t + 1) is the desired output and y(t + 1) is the output of the RTSKIT2FNN. The parameter updates based on the gradient descent algorithm are given by the following equations:

where ηm1, ηm2, ησ, ηα, ηc, ηs are the learning rates, j = 1, ..., n, and i = 1, ..., M, with n and M the numbers of inputs and rules, respectively. The derivatives for the premise parameters in (25)–(28) can be expressed as follows:

The derivative \(\frac {\partial y}{\partial f}\) is given by the following equations:

Next, we calculate the derivatives of the firing strengths with respect to the premise parameters:

The derivatives for the consequent parameters in (29)–(30) can then be expressed as follows:

The structure of the global online learning process is presented in the flowchart in Fig. 4.
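The consequent updates (29)–(30) can be illustrated under one assumption: because the objective function (24) is not reproduced above, the sketch takes the usual squared error \(E = \tfrac{1}{2}(y_d - y)^2\), so each parameter moves as \(\theta \leftarrow \theta + \eta\, e\, \partial y/\partial \theta\) with \(e = y_d - y\). Differentiating (12)–(16) gives \(\partial y/\partial c_j^i = \tfrac{1}{2} x_j (a_l^i + a_r^i)\) and \(\partial y/\partial s_j^i = \tfrac{1}{2}|x_j| (a_r^i - a_l^i)\), where \(a_l^i = [(1-q_l)\underline f^i + q_l \overline f^i]/D\), \(a_r^i = [(1-q_r)\overline f^i + q_r \underline f^i]/D\) and \(D = \sum_k (\underline f^k + \overline f^k)\). The function name `consequent_update`, its argument layout and the learning-rate values are hypothetical, and the premise updates (25)–(28) are omitted.

```python
def consequent_update(x, c, s, f_lower, f_upper, i, y, y_d,
                      eta_c=0.01, eta_s=0.01, q_l=0.5, q_r=0.5):
    """One gradient step on rule i's consequent parameters c and s (in place).

    Assumes E = 0.5 * (y_d - y)**2, so dE/dtheta = -(y_d - y) * dy/dtheta.
    """
    xs = [1.0] + list(x)  # x0 = 1
    den = sum(fl + fu for fl, fu in zip(f_lower, f_upper))
    a_l = ((1 - q_l) * f_lower[i] + q_l * f_upper[i]) / den  # dy_l / dw_l^i
    a_r = ((1 - q_r) * f_upper[i] + q_r * f_lower[i]) / den  # dy_r / dw_r^i
    e = y_d - y
    for j, xj in enumerate(xs):
        dy_dc = 0.5 * xj * (a_l + a_r)          # via dw_l/dc = dw_r/dc = x_j
        dy_ds = 0.5 * abs(xj) * (a_r - a_l)     # via dw_l/ds = -|x_j|, dw_r/ds = |x_j|
        c[j] += eta_c * e * dy_dc
        s[j] += eta_s * e * dy_ds
```

With the fixed ql = qr = 0.5 used in this paper, \(a_l^i\) and \(a_r^i\) coincide, so in this sketch the spread gradient is zero and only c changes; setting ql = 0.3 exercises the s branch.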

4.3 Convergence analysis

In this section, we derive varied learning rates for parameter learning that ensure the convergence of the tracking errors, based on the analysis of a discrete-type Lyapunov function. The objective function in (24) is taken as the discrete Lyapunov function.

The difference of the proposed discrete Lyapunov function can be written as

The linearized model of (49) can be calculated via (25)–(30) as follows:

Then E(t + 1) can be expressed as

where ηm1, ηm2, ησ, ηα, ηc, ηs are the learning-rate parameters. The derivatives of the objective function in (51) are calculated in Section 4.2. The learning-rate parameters of the RTSKIT2FNN are designed as

where ε is a positive constant. Then (51) can be rewritten as

Substituting the learning rates (52)–(57) into (58) yields

From (59), the error in (24) is guaranteed to converge to zero with the varied learning rates designed in (52)–(57). Consequently, the mobile robot with the proposed RTSKIT2FNN control system can track the reference path stably.
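The idea behind a varied learning rate can be seen in the simplest case: a parameter vector W that enters the output linearly, \(y = g^{T} W\), as the consequent parameters c do when the firing strengths are held fixed. A gradient step \(\Delta W = \eta\, e\, g\) then gives \(e(t+1) = e(t)(1 - \eta \|g\|^{2})\) exactly, so \(|e|\), and hence the Lyapunov function \(\tfrac{1}{2}e^{2}\), decreases whenever \(0 < \eta \|g\|^{2} < 2\). The normalized rate \(\eta = 1/(\varepsilon + \|g\|^{2})\) used below satisfies this for every ε > 0. It is one standard choice shown for illustration, not a reproduction of (52)–(57), and `vlr_step` is a hypothetical name.

```python
def vlr_step(W, g, y_d, eps=0.01):
    """One gradient step on a model y = g . W with a normalized (varied) learning rate.

    eta = 1 / (eps + ||g||^2) keeps 0 < eta * ||g||^2 < 2, so the error
    shrinks by the factor eps / (eps + ||g||^2) at every step.
    Returns the updated W and the error before the update.
    """
    y = sum(gi * wi for gi, wi in zip(g, W))
    e = y_d - y
    g2 = sum(gi * gi for gi in g)
    eta = 1.0 / (eps + g2)
    return [wi + eta * e * gi for wi, gi in zip(W, g)], e
```

Starting from W = [0, 0] with g = [1, 2] and yd = 1, the first error is 1 and the next is 0.01/5.01 ≈ 0.002, matching the contraction factor ε/(ε + ‖g‖²). A small ε makes the step nearly a full correction; a large ε makes it cautious.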

5 Experimental results

5.1 Robot description and control architecture

Herein, we present experimental results that validate the control performance of the proposed RTSKIT2FNN with online structure and parameter learning for stable path tracking of mobile robots. A Pioneer 3-DX mobile robot is used to verify the effectiveness of the proposed trajectory tracking control scheme, which is shown in Fig. 5.

The Pioneer 3-DX mobile robot is shown in Fig. 6. The platform is equipped with motors with 500-tick encoders, 19 cm wheels, a tough aluminum body, 8 forward-facing ultrasonic (sonar) sensors, 1, 2 or 3 hot-swappable batteries, and a complete software development kit. The base Pioneer 3-DX platform can reach speeds of 1.6 m/s. The Pioneer 3-DX is an all-purpose base used for research and applications involving mapping, teleoperation, localization, monitoring, reconnaissance, and other behaviors.

5.2 Results

For the two RTSKIT2FNNs, the parameters are chosen as follows. The RTSKIT2FNN for the linear velocity has two inputs \((x_{e}, \dot{x}_{e})\) and one output v, and its spatial firing strength threshold is set to fth = 0.6. The RTSKIT2FNN for the angular velocity has two inputs (ye, 𝜃e) and one output w, and its spatial firing strength threshold is fth = 0.3. The fixed learning rates (FLR) are set to ηv = 0.5 for the linear velocity and ηw = 0.01 for the angular velocity. For the varied learning rates (VLR), the positive constant ε is set to 0.01 and 0.00001 for the linear and angular velocities, respectively. The objective functions for parameter learning of the two RTSKIT2FNNs are chosen as follows:

The sampling time ts is 0.01 s. To demonstrate the superiority of the proposed RTSKIT2FNN control system, seven other network structures are considered: ANFIS [17], RFNN [34], IT2FNN [24], IT2FNN-SM [26], SOT2FNN-PSO [32], SEFT2FNN [31] and SIT2FNN [33]. SIT2FNN, SEFT2FNN and SOT2FNN-PSO are examined with the same structure and learning parameters as the RTSKIT2FNN with FLR. As a performance criterion, we use the root mean square error (RMSE)

$$ \mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{k=1}^{N} e^{2}(k)} $$

where e(k) is the tracking error at sample k and N is the number of samples.
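The RMSE criterion used in the comparison is a one-line computation over logged tracking errors. In the minimal sketch below, the name `rmse` and its application to separate error sequences are illustrative assumptions.

```python
import math

def rmse(errors):
    """Root mean square of a sequence of tracking errors."""
    errors = list(errors)
    if not errors:
        raise ValueError("rmse() needs at least one sample")
    return math.sqrt(sum(e * e for e in errors) / len(errors))
```

It can be applied separately to the logged xe, ye and 𝜃e sequences sampled every ts = 0.01 s.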

Experimental results are shown in Figs. 7–10 and Tables 1–4. Figures 7 and 8 show the lemniscate and sinusoidal reference paths, respectively, together with the actual path tracked by the mobile robot using the RTSKIT2FNN with varied learning rates (VLR); the dashed red line is the reference trajectory, and the solid blue line is the trajectory followed by the mobile robot with RTSKIT2FNN-VLR. Figure 9 presents the position coordinates x and y and the orientation 𝜃 of the mobile robot together with the reference coordinates for the lemniscate trajectory, and Fig. 10 shows the tracking errors xe, ye and 𝜃e. These figures show that the proposed RTSKIT2FNN-VLR control scheme tracks the reference trajectory with stable speed and high accuracy.

Table 1 compares the performance of the RTSKIT2FNN with varied and fixed learning rates for the two trajectories. Performance is evaluated using the RMSE of the position (x, y) and the orientation 𝜃. The results show that the RTSKIT2FNN with VLR performs better than the RTSKIT2FNN with FLR. The table illustrates the difficulty of ensuring good convergence and tracking stability during online learning with fixed learning rates (FLR): a fixed learning rate is not necessarily appropriate for all cases and conditions of real-time tracking control, and in some cases it may cause the system to diverge. In contrast, the VLR guaranteed the stability, robustness and good parameter adaptation of the RTSKIT2FNN during online learning, because it changes the learning rates according to the objective functions (60) and (61) to guarantee the convergence of the tracking errors.

Tables 2 and 3 show the influence of the firing strength thresholds fthv and fthw, for the linear and angular velocities respectively, on the performance of the RTSKIT2FNN. Table 2 shows the influence of fthv with fthw fixed, and Table 3 the opposite. Larger values of fthv or fthw generate more rules and generally improve the performance of the RTSKIT2FNN. However, when fthv or fthw is too large, the performance saturates because the online learning process reaches a local minimum. The number of rules also depends on the learning algorithm (VLR or FLR), as shown in Table 4 for the same network. Both tables show that the smallest RMS errors are obtained with fthv = 0.6 and fthw = 0.3.

Table 4 compares the RMSE values and the numbers of generated rules of the related networks. The T2FNNs have smaller RMSEs than the T1FNNs, represented by ANFIS and RFNN, which shows the advantage of T2FNN structures for mobile robot trajectory tracking. In terms of learning principle, the T2FNNs fall into two types: static and evolving. The static type comprises IT2FNN-SM, which uses sliding-mode learning, and IT2FNN, which uses gradient descent; the evolving type comprises SOT2FNN-PSO, SEFT2FNN, SIT2FNN, RTSKIT2FNN-FLR and RTSKIT2FNN-VLR. IT2FNN-SM has a smaller RMSE than IT2FNN with gradient descent, and the evolving algorithms have lower RMSEs than the static ones, demonstrating the advantage of the evolving algorithms. Among the evolving algorithms, the proposed RTSKIT2FNN-VLR has the smallest RMSE, which shows the effectiveness of the proposed algorithm. RTSKIT2FNN-VLR and SEFT2FNN also generate the largest numbers of rules and achieve the lowest RMSEs, which indicates a relationship between the number of generated rules and the effectiveness of the evolving algorithms.

6 Conclusion

This paper proposed a recurrent TSK interval type-2 fuzzy neural network with online structure and parameter learning as a control scheme for path tracking of mobile robots. The proposed RTSKIT2FNN successfully incorporates the recurrent frame of internal-feedback loops into interval type-2 fuzzy neural networks. With the online learning of the RTSKIT2FNN, there is no need to determine an initial network structure, because the structure learning algorithm enables the network to evolve online and generate the required structure and rules based on the rule firing strength. Structure learning is performed concurrently with parameter learning, which uses the proposed gradient descent algorithm with varied learning rates (VLR) to tune all parameters of the RTSKIT2FNN; the learning convergence and the stability of the control system are proved using a discrete-type Lyapunov function. The experimental results show the clear superiority of the proposed control method for mobile robot trajectory tracking compared with the other control methods considered.

References

Matraji I, Al Durra A, Haryono A, Al Wahedi K (2018) Trajectory tracking control of skid-steered mobile robot based on adaptive second order sliding mode control. Control Eng Practice 72:167–176

Saradagi A, Muralidharan V, Krishnan V, Menta S, Mahindrakar AD (2017) Formation control and trajectory tracking of nonholonomic mobile robots. IEEE Trans Control Syst Technol

Hao Y, Wang J, Chepinskiy SA, Krasnov AJ, Liu S (2017) Backstepping based trajectory tracking control for a four-wheel mobile robot with differential-drive steering. In: 2017 36th Chinese control conference (CCC). IEEE, pp 4918–4923

Rubagotti M, Della Vedova ML, Ferrara A (2011) Time-optimal sliding-mode control of a mobile robot in a dynamic environment. IET Control Theory Appl 5(16):1916–1924

Xin L, Wang Q, She J, Li Y (2016) Robust adaptive tracking control of wheeled mobile robot. Robot Auton Syst 78:36–48

Wai RJ, Liu CM (2009) Design of dynamic petri recurrent fuzzy neural network and its application to path-tracking control of nonholonomic mobile robot. IEEE Trans Ind Electron 56(7):2667–2683

Hagras HA (2004) A hierarchical type-2 fuzzy logic control architecture for autonomous mobile robots. IEEE Trans Fuzzy Syst 12(4):524–539

Castillo O, Neyoy H, Soria J, Melin P, Valdez F (2015) A new approach for dynamic fuzzy logic parameter tuning in ant colony optimization and its application in fuzzy control of a mobile robot. Appl Soft Comput 28:150–159

Huq R, Mann GK, Gosine RG (2006) Behavior-modulation technique in mobile robotics using fuzzy discrete event system. IEEE Trans Robot 22(5):903–916

Hou ZG, Zou AM, Cheng L, Tan M (2009) Adaptive control of an electrically driven nonholonomic mobile robot via backstepping and fuzzy approach. IEEE Trans Control Syst Technol 17(4):803–815

Aissa B, Fatima C, Yassine A (2017) Data fusion strategy for the navigation of a mobile robot in an unknown environment using fuzzy logic control. In: 2017 5th International conference on electrical engineering-boumerdes (ICEE-B). IEEE, pp 1–6

Juang CF, Tsao YW (2008) A self-evolving interval type-2 fuzzy neural network with online structure and parameter learning. IEEE Trans Fuzzy Syst 16(6):1411–1424

Aissa BC, Fatima C (2017) Neural networks trained with Levenberg-Marquardt-iterated extended Kalman filter for mobile robot trajectory tracking. J Eng Sci Technol Rev 10(4)

Yacine A, Fatima C, Aissa B (2015) Trajectory tracking control of a wheeled mobile robot using an ADALINE neural network. In: 2015 4th International conference on electrical engineering (ICEE). IEEE, pp 1–5

Li Z, Deng J, Lu R, Xu Y, Bai J, Su CY (2016) Trajectory-tracking control of mobile robot systems incorporating neural-dynamic optimized model predictive approach. IEEE Trans Syst Man Cybern Syst 46(6):740–749

Shojaei K (2016) Neural adaptive output feedback formation control of type (m, s) wheeled mobile robots. IET Control Theory Appl 11(4):504–515

Aissa BC, Fatima C (2015) Adaptive neuro-fuzzy control for trajectory tracking of a wheeled mobile robot. In: 2015 4th International conference on electrical engineering (ICEE). IEEE, pp 1–4

Jang JO (2011) Adaptive neuro-fuzzy network control for a mobile robot. J Intell Robot Syst 62(3–4):567–586

Juang CF, Lin CT (1998) An online self-constructing neural fuzzy inference network and its applications. IEEE Trans Fuzzy Syst 6(1):12–32

Lee CH, Chiu MH (2009) Recurrent neuro fuzzy control design for tracking of mobile robots via hybrid algorithm. Expert Syst Appl 36(5):8993–8999

Wai RJ, Lin YW (2013) Adaptive moving-target tracking control of a vision-based mobile robot via a dynamic petri recurrent fuzzy neural network. IEEE Trans Fuzzy Syst 21(4):688–701

Zadeh LA (1975) The concept of a linguistic variable and its application to approximate reasoning-I. Inf Sci 8(3):199–249

Mendel JM, John RB (2002) Type-2 fuzzy sets made simple. IEEE Trans Fuzzy Syst 10(2):117–127

Abiyev RH, Kaynak O (2010) Type 2 fuzzy neural structure for identification and control of time-varying plants. IEEE Trans Ind Electron 57(12):4147–4159

Lu X, Zhao Y, Liu M (2018) Self-learning interval type-2 fuzzy neural network controllers for trajectory control of a delta parallel robot. Neurocomputing 283:107–119

Kayacan E, Maslim R (2017) Type-2 fuzzy logic trajectory tracking control of quadrotor VTOL aircraft with elliptic membership functions. IEEE/ASME Trans Mechatron 22(1):339–348

Lin FJ, Chou PH, et al. (2009) Adaptive control of two-axis motion control system using interval type-2 fuzzy neural network. IEEE Trans Ind Electron 56(1):178–193

Castro JR, Castillo O, Melin P, Rodríguez Díaz A (2009) A hybrid learning algorithm for a class of interval type-2 fuzzy neural networks. Inform Sci 179(13):2175–2193

Lin YY, Chang JY, Lin CT (2014) A TSK-type-based self-evolving compensatory interval type-2 fuzzy neural network (TSCIT2FNN) and its applications. IEEE Trans Ind Electron 61(1):447–459

Juang CF, Huang RB, Lin YY (2009) A recurrent self-evolving interval type-2 fuzzy neural network for dynamic system processing. IEEE Trans Fuzzy Syst 17(5):1092–1105

Lin CM, Le TL, Huynh TT (2018) Self-evolving function-link interval type-2 fuzzy neural network for nonlinear system identification and control. Neurocomputing 275:2239–2250

Lin CM, Le TL (2017) PSO-self-organizing interval type-2 fuzzy neural network for antilock braking systems. Int J Fuzzy Syst 19(5):1362–1374

Lin YY, Liao SH, Chang JY, Lin CT (2014) Simplified interval type-2 fuzzy neural networks. IEEE Trans Neural Netw Learn Syst 25(5):959–969

Mon YJ, Lin CM, Leng CH (2008) Recurrent fuzzy neural network control for MIMO nonlinear systems. Intell Autom Soft Comput 14(4):395–415

Acknowledgments

The authors would like to thank the Telecommunications Signals and Systems Laboratory (TSS) for the support given to this research project.


Cite this article

Bencherif, A., Chouireb, F. A recurrent TSK interval type-2 fuzzy neural networks control with online structure and parameter learning for mobile robot trajectory tracking. Appl Intell 49, 3881–3893 (2019). https://doi.org/10.1007/s10489-019-01439-y
