Abstract

A novel dynamic multi-objective optimization evolutionary algorithm is proposed in this paper to track the Pareto-optimal set of time-changing multi-objective optimization problems. In the proposed algorithm, to initialize the new population when a change is detected, a modified prediction model utilizng the historical optimal sets obtained in the last two times is adopted. Meantime, to improve both convergence and diversity, a self-adaptive differential evolution crossover operator is used. We conducted two experiments: the first one compares the proposed algorithm with the other three dynamic multiobjective evolutionary algorithms, and the second one investigates the performance of the two proposed operators. The statistical results indicate that the proposed algorithm has better conergence speed and diversity and it is very promising for dealing with dynamic environment.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Many real-world industrial applications and scientific reserch present a time-dependent feature. The dynamic multi-objective optimization problem (DMOP) is characterized in that either the objective function and constraint or the associated parameters or both change over time with respect to static multi-objective optimization problem [1]. The difficulties of dealing with a DMOP lie in how to converge to the new true Pareto-optimal front (PF) rapidly and find a widely distributed set of the Pareto-optimal solutions (PS) when a environment change coming. A great success has been achieved since the evolutionary algorithms(EAs) were applied to the multi-objective optimization and more approximate optimal solutions widely distributed can be generated by the multi-objective optimization evolutionary algorithms (MOEAs) [2] compared to the traditional multi-objective optimization algorithm. So the dynamic multi-objective optimization evolutionary algorithm(DMOEA) would be promising for the multi-objective optimization in dynamic environments.

The Dynamic Orthogonal Multi-Objective Evolutionary Algorithm(DOMOEA)[3] applies an orthogonal design method proposed in the previous MOEA to enhance the fitness of the population during the static stages between two successive changes of environment. Goh et al. [4] proposed a new coevolutionary strategy that competitive and cooperative mechanisms are combined to solve MOPs and extended the strategy to deal with DMOPs. In this algorithm, through the iterative process of competition and cooperation, the subcomponents are optimized by different species subpopulations based on the optimization target of the particular time, which enabling the coevolutionary algorithm to handle both the static and dynamic multiobjective optimization problems. In the work of Wang et al. [5], during the static stages the static multi-objective optimization problem is changed into a two-objective optimization problem that deal with two re-defined objectives. Simultaneously, a new crossover operator and mutation operator that are suitable for the changing environment are adopted in the algorithm. Koo et al. [6] presented a new method to detect the environment changes, a new prediction strategy known as the predictive gradient, and a new memory technique suitable for solving DMOPs in a fast changing environment. The predictive strategy is easy to implement and solutions updated through the predictive gradient will remain in the vicinity of the new Pareto-optimal Set and pull the rest of the population to converge.

Artificial Immune Systems (AIS) inspired by the human immune system (HIS) have exhibited its adventages in terms of immune memory, diversity, robustness and other aspects. Since Coello Coello proposed an artificial immune system algorithm MISA [7] based on the clonal selection principle to handle MOPs, some successful multi-objective optimization immune algorithms have been proposed afterwards [8–10]. And the experiment results show that such kind of optimization techniques is one of the most promising strategies for dealing with the multi-objective optimization problem. Moreover, dynamic multi-objective optimization immune algorithms (DMOIAs) have also attracted a lot of attention, but few works have been reported at present. Shang et al. [11] proposed a clone selection algorithm (CSADMO) based on nonuniform mutation strategy and distance method to solve two DMOPs. The nonuniform mutation strategy makes the algorithm search in a large range in the early evolution and implement the local search in the latter stage. Zhang [12, 13] researched immune-based optimization techniques for DMOPs in which the dimension of the variable or objective space may be time-varying. And the algorithms has been successfully applied to greenhouse control and signal simulation. A hybrid dynamic multi-objective immune algorithm using the linear prediction model (HDMIO) was proposed to deal with four DMOPs by Ma et al. [14]. Zhang et al. [15] developed an artificial immune system to solve time-varying non-linear constrained multi-objective problems with changing variable dimensions. In this algorithm, T-module, B-module, and M-module are used to create an initial population by using the history information when the environment changes, search for the desired non-dominated front of a given environment, and store temporarily the non-dominated solutions to help create initial populations for the next environment, respectively.

In this paper, a well-known static multi-objective immune algorithm with non-dominated neighbor-based selection (NNIA) [10], which has been proved to be an effective algorithm for sloving static MOPs, is extended to solve DMOPs. Due to the fact that NNIA pays more attention to the less-crowded regions in the current trade-off front in the process of crossover, the diversity of the solutions obtained by NNIA may not be ideal. To remedy this, we use an improved simple self adaptive differential evolution crossover operator to improve the diversity of the solution in this paper. In our new dynamic MOEA (DMOEA), firstly, in the decision space, to take individuals closer to the PS at the beginning of a new environment, we propose a modified prediction model to take full advantage of search informations in the past two times. Secondly, in the objective space, to improve the convergence speed and the diversity of the population, we propose an improved adaptive differential evolution crossover operator to help the population evolution.

This paper is structured as following: Section 2 presents some related theoretical background including dynamic multiobjective optimization, forward-looking forecasting model, the original static multi-objective immune algorithm used in this paper, differential evolution crossover operator and several important terms throughout the paper. Section 3 presents the details of the proposed dynamic immune inspired multi-objective algorithm. Section 4 introduces the test instances and performance metics, and then carried out experiments to evaluate the effectiveness of our algorithm. Finally, Section 5 outlines some conclusions and proposes a few future research directions.

2 Related theoretical background

2.1 Dynamic multi-objective optimization

In the case of time changing, the objective functions and the constrained functions may be related to the time, so a static multi-objective problem extends to the following expression:

This is a DMOP, where t represents time. x = (x 1, … x l ) ∈ Ω is the decision vector, Ω is the feasible region in decision space, F(x, t) represents the set of m objective functions with respect to t, g i (x, t) and h j (x, t) represent the inequality and equality constraints, respectively.

When considering minimization problem, a vector x 1 is said to dominate vector x 2 (denoted as x 1 ≺ x 2) if and only if x 1 is partially less than x 2, ie,

For a given DMOP F(x, t), at a certain moment, the Pareto-optimal set P S t in the decision space is defined as:

That means the Pareto-optimal set in the decision space consists of the decision vectors that can not be dominated by any other decision vectors.

Accordingly, for a given DMOP F(x, t) and Pareto-optimal set P S t , at a certain moment, the Pareto-optimal front P F t in the objective space is defined as:

According to the PS t and the PF t whether change over time, Farina et al. [1] divided the DMOPs into four types:

- Type I:

-

: The PS t changes over time, the PF t does not change.

- Type II:

-

: Both the PS t and the PF t change over time.

- Type III:

-

: The PS t does not change over time, the PF t change over time,.

- Type IV:

-

: Both the PS t and the PF t do not change over time.

2.2 Summary of related terms

We describe the nomenclature of immunology and the terms throughout the paper in Table 1.

2.2.1 Antibody and antibody population

To put it simply, as we use the real-valued presentation like the NNIA, an antibody b i = (b i, 1, b i, 2, ..., b i, l ) is the coding of a decision variable x in the dynamic multi-objective optimization. So the antibody population is B = {b 1 ,b 2 ,...,b n }, b i ∈ Ω, 1 ≤ i ≤ n.

2.2.2 Dominant population, active population, and clone population

Based on the defination of domination in the 2.1, a dominant antibody represents an antibody which cannot be dominated by any other antibody, that is to say it is a good solution. The dominant population is denoted as D, a set of all dominant antibodies.

An active antibody represents a dominant antibody with greater crowding-distance [16] in the approximate Pareto-optimal front. Corresponding with the concept in artificial immune system, an active antibody means a competitive individual or a better solution in the dynamic multi-objective optimization. The active population is denoted as A, consisting of active antibodies. So during the evolution, the antibodies in the active population are more likely to enter the next generation.

During the evolution, copy the antibodies in the active population into the clone population, which is denoted as C, and carry out the genetic operation on C. So more active antibodies with greater crowding-distance will be evolved by the cloning operation.

2.2.3 Proportional cloning, recombination, and hypermutation

According to the interpretation of the terms above, the genetic operators corresponding to immunology will be easy to understand. The operation detail of the proportional cloning operator T C acts on the active population A = (a 1, a 2, … , a |A|)(|A| is the size of A) is as following:

where q i is the cloning number of the active antibody a i . q i is a self-adaptive value depends on the crowding-distance d i of a i , it is calculated as following:

where n C is an expectant value of the size of the clone population that is usually set as same as the initial population size.

And then the recombination operator T R acts on the clone population C = (c 1, c 2, … , c |C|)(|C| is the size of C) is as following:

And the hypermutation operator T R acts on the population R = (r 1, r 2, … , r |R|)(|R| is the size of R) is as following:

2.3 The used static MOEA

We describe the traditional static multi-objective immune algorithm used in this paper, the NNIA. Firstly,at a certain moment of t when the DMOPs is not changing, the dominant population, active population and clone population at the g-th evolution generation are denoted as D g , A g , C g , respectively. In NNIA, realize the recombination and the hypermutation with the simulated binary crossover (SBX) operator and polynomial mutation respectively. The main loop of NNIA is shown in Table 2.

2.4 Differential evolution crossover operator

DE is a promising evolutionary optimization method proposed by Storn and Price [17]. It introduces new parameter vectors by adding a weighted difference vector between two members in the population to the third member. The principle is simple and the process can be depicted as Fig. 2.

As shown in the figure above, at the g-th generation, three different individuals are randomly selected from the population for the i-th individual X i, g to be crossovered. And then a new intermediate individual V i, g is produced. With the crossover probability CR, X i, g and V i, g , the offspring U i, g + 1 is calculated as following:

Owing to the good performance of DE in solving single-objective optimization problems [17], some researchers have tried to extend it to MOPs. Abbass was the first to apply DE to MOPs in the Pareto Differential Evolution algorithm [18]. Many new Multiobjective Differential Evolution Algorithm being continuously has been published since then [19, 20].

2.5 The forward-looking predictive model

In DMOEA, a DMOP is usually considered as a continuous combination of unchanged static problems in a period of time, during which a static MOEA is used to guide the evolution. The search information of the optimal solutions in the last few moments might be useful for producing initial population in the new environment. The original and simplest framework is to save and deal with optimal solutions of the last time. Deb et al. [21] proposed two re-initialization techniques to replace a portion of the optimal solutions in the last time: randomly generate new individuals (DNSGAII-A) or generate mutated solutions of existing solutions (DNSGAII-B). The hyper-mutation operator and randomly generate individuals strategy are combined by Zheng [22]. Wei et al. [23] proposed a hyper rectangle search to predict m + 2 initial solutions (m is the number of objectives) for the next environment.

The other frameworks are based on the search information of the past several times and the information is saved to help the search process in the new environment. If the change of the optimal solutions’ location that obtained in the history moment presents some kind of fixed trend in the dynamic environment, it seems improtant to store the optimal solutions in the past several moments to generate a forward-looking model, which is used for acclerating the convergence speed. But if the changing trend is not obvious or stable, the predictive model will go wrong either. The forward-looking predictive model based on memory storage is firstly proposed by Hatzakis and Wallace in [24] to improve the performance of DMOEA. This predictive model utilizes the optimal solution sets obtained in the past t moments, that is from 0 moment to t − 1 moment, to procude the intial population of the next moment, that is the t moment. This model in the two-dimensional space is shown in Fig. 3.

Since then, some researchers have also proposed new predictive models. Zhou et al. [25] proposed a linear predictive model and four re-initialization methods were proposed with this model. The initial population at the new moment obtained by the four re-initialization method is near the PS location and converage to the true PS more quickly. Koo et al. [6] proposed a new predictive gradient strategy which is suitable for solving DMOPs in a rapidly changing environment.

As Hatzakis and Wallace mentioned [24], the design of predictive model is very important and it directly affects the accuracy of the initial solutions at the new moment. But in most cases, the design depends on the feature of the dynamic multi-objective problem. The fact that the only available information is the optimal solutions obtained in the past moments makes it diffcult to design a commonly used predictive model that can be used in different types of problems.

3 Description of the algorithm

In this section, we describe the main operators used in our dynamic immune inspired multi-objective algorithm and the whole flow of the proposed algorithm.

3.1 Environment change detection and modified prediction mechanism

3.1.1 Environment change detection

The detection operator is used to detect whether the environment has changed, or whether the change is big enough to regard it as a environment change rather than a noise. In this paper, the environment change detection operator [1] is calculated by the objective function values of the several individuals at two consecutive moment. It can be described as following:

where R(t) = (r 1, r 2, … , r m )T and U(t) = (u 1, u 2, … , u m )T are the maximum vector and minimum vector of the objective function value at the t moment, respectively. m is the number of objective functions. Variable r i is the maximum value of the i − th objective function in the population and variable u i is the minimum value of the i − th objective function in the population. n ε is the number of individuals chosen to detect the environment change. If the detective value ε(t) is greater than a predefined value \(\widetilde {\varepsilon }\), it means that a significant change has taken place, and then the modified predictive model is used to predict the initial population. In this paper, n ε and \(\widetilde {\varepsilon }\) are set to 5 and 2e-2, respectively.

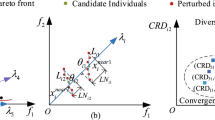

3.1.2 Modified prediction mechanism

When the environment changes, we use an improved predictive model to predict the initial population. The improved predictive model is based on the model proposed by Zhou et al. [25]. In [25], it is assumed that the PS at the past two moments is Q t − 1and Q t − 2, respectively, which are used to predict the initial population Q t at the t moment.The whole predictive model is inspired by the forward-looking predictive model in the Section 2.5 and it is shown in Fig. 4 (left ).

The predictive model in [25] is described as following:

where x t − 1 is an individual in Q t − 1, and x t − 2 is the individual in Q t − 2 and it is the nearest individual to x t − 1 according to the Euclidean distance:

That means the new initial individuals at the new moment depend on the shortest distance between the two points at the previous two moments and the predictive model is linear. Then we add a Gaussian noise σ ∼ N(0, I δ) to the predicted locations to increase the possibility of the new initial indiciduals falling on the true PS t at the new moment. Where I is an identity matrix and δis the standard deviation, which is calculated by the Euclidean distance of x t − 1 and x t − 2 as follows:

where n is the dimensions of the decision variables.

Although the predictive model proposed above can be commonly used and is particularly applicable for the problems with P S t linear changing over time. As demonstrated in [25], when the P S t is nonlinear changing over time, its performance is poor. Therefore, to make the predictive model more generic in more types of DMOPs, we propose a modified predictive model and it is shown in Fig. 4 (right ).

In the modified predictive model, half of the individuals are randomly selected to use in the linear formula (11), the remaining individuals are used in the new model. The new model expression is as following:

where \(x_{t-2}^{r1} \) and \(x_{t-2}^{r2} \) are two individuals randomly selected from Q t − 2, rand() returns an uniform random number in [0, 1]. Selecting two individuals to guide the new predictive individual can prevent the new individual moving toward the wrong direction when it is only guided by one individual, just like a PSO based crossover operator. Meanwhile, in the new model, only half of the individuals is calculated by distance, which means the computational complexity of the new model will reduce. It can be observed from the statistical experimental results in Section 4 that the modified predictive performs better than the original predictive model and it can obtain better results.

As the predictive model is based on the history search information, the model can not work at the early evolution with deficient information. We use a simple Gussian interference to generate the initial population. So the predictive strategy is showed as following:

3.2 Modified self-adaptive differential evolution crossover operator

The parameter F is important in the DE crossover operator. In the most literatures, there is no discussion about the influence of parameter F on the performance of multi-objective differential evolution algorithm, but take the value between 0.5 and 0.9 according to the experience. Qian et al. [26] proposed an adaptive method to make up for the defect above, the value of F is depend on the crowding distance in different Pareto levels, the sizes of the dominant population and the size of the whole population. This adaptive method makes the algorithm focus on the global search to find the feasible region in the early stage of evolution, and focus on the local search to speed up the converage speed in the late stage of evlution. The F is calculated as following:

where d i j is the crowding distance of the i − th solution in the j − th Pareto level; \(\overline d_{j} \) is the average value of crowding distances of the all solutions in the j − th Pareto level; \(\overline d \) is the average value of crowding distance of the all solutions in every iteration; |P| is the number of the dominant solutions; |Q| is the number of the population set; the parameter df represents the Euclidean distance between two boundary solutions in Q; l min is the minimu value for F. The results in [26] showed that the convergence and the diversity of the algorithm with this differential evolution crossover operator are significantly improved when dealing with different static multi-objective problems.

In our algorithm, because the DE crossover operator is implemented in the non-dominated population consistsing of individuals with greater crowding-distance, we can ignore the influence of boundary solutions on F and propose a more simple and effective model of F. The new F is calculated as following:

where d i is the crowding distance of the i − th antibody in the population, \(\overline d \) is the average value of crowding distances of the all antibodies in the population, k is the size of the population. It can be observed from the comparative trial results in Section 4 that the modified self-adaptive differential evolution crossover operator can achieve better results compared with the original self-adaptive differential evolution crossover operator.

3.3 The whole flow

In this paper, unlike the common strategy for dealing with the DMOP, at the beginning of a new moment, an environment change detection operator is used to detect whether the problem has really changed or not. Then the algorithm uses the modified forecasting model to get the new initial population. And finally, NNIA based on the modified self-adaptive differential evolution crossover is used to solve the static DMOPs.

The whole flow of the proposed algorithm is shown in Table 3:

Where g is the generation counter, τ T is the total number of generations at t moment. T m a x is the maximum number of moments, n D is maximum size of dominant Population. A g (t) is Active Population with the maximum size of n A . C g (t) is Clone Population with the maxmum size of n C. In PDNNIA, as the general method does, we assume that a dynamic problem remains unchanged within the t moment. That is, during the entire period of t moment, there is no change in the environment and thus a DMOP can be treated as a static MOP which can be solved by the modified NNIA which is improved with the modified self-adaptive differential evolution crossover operator.

The flowchart is shown in Fig. 5.

At the beginning of time t, Environment Change Detection and Modified Prediction Mechanism are applied to detect environment change and produce the initial population. After obtaining the dominant population D g (t) and the active population A g (t), evolution operators including proportional cloning, Modified Self-adaptive DE operator and polynomial mutation are used to guide the population to evolve until met g < τ T. In the process of forming the non-dominated population D g (t), if the number of non-dominated antibodies is greater than the maximum limitation n D , the algorithm uses the crowding-distance sorting strategy [16] to select n D antibodies with greater crowding-distance. Likewise, in the process of forming the active population A g (t), if the size of non-dominated population n D is greater than the limitation size of active population n A , the algorithm applies the crowding-distance sorting strategy as above.

4 Experimental results

In this section, we introduce six DMOPs and three metrics to test this algorithm and evaluate its performance. As a comparison, we test three other DMOEAs. And finally, we conducted two sets of comparative experiments to test the performance of the two mechanism, that is, the modified predictive model and adaptive differential evolution operator.

4.1 Test problems

In the introduction of the DMOP in Section 2.1, we do not introduct the time t in detail. In fact, the time t is controlled by the formula as following:

where n T and τ T are the severity and frequency of the environment change, respectively. τrepresents the current iterative counter or the current function evaluation counter and is added from zero to τ T . So τ T also corresponds to the total number of iteration or function evaluation when the environment remains unchanged. As this formula describes, a large value of n T will cause a small severity of change. Likewise, a large value of τ T will make the frequency of change slow.

Six different DMOPs are tested in this paper. The first four problems are DMOP1(FDA1), DMOP2(FDA3) [1], DMOP3(dMOP1), DMOP4(dMOP2) [4] and they are two objective functions. The last two problems are DMOP5(FDA4), DMOP6(FDA5) [1] and they are three objective functions. The details of all the six test problems are shown in Table 4.

4.2 Performance metrics

The Generational Distance (GD) [27] and the Spacing (SP)[28] are used for measuring the convergence and the distribution of solutions obtained by four algorithms, respectively, and the lower value of GD or SP represents the better performance. The two metrics are summarized as follows.

Convergence Metric: The Generational Distance (GD) represents the convergence of the solutions. GD t is calculated at the last generation of each t moment and is defined as following:

So the mean convergence indicators \(\overline {GD} \) is expressed as follows:

Let PF t be a set of uniformly distributed points on the true Pareto-optimal front at the t moment, and A t be the approximate Pareto-optimal set of dominant antibodies obtained by DMOEA at the t moment. Where d(v, PF t ) is the minimum Euclidean distance among all the distances between each vector v in A t and every point in PF t , T max is the maximum number of environmental change in a run.

Distribution Metric: The Spacing (SP) represents the distribution of the solutions. SP t is calculated at the last generation of each t moment and is defined as following:

where

So the mean Spacing \(\overline {SP} \) s expressed as follows:

Let PF t be a set of uniformly distributed points on the true Pareto-optimal front at the t moment, and A t be the approximate Pareto-optimal set of dominant antibodies obtained by DMOEA at the t moment. Where d(v, PF t ) is the minimum Euclidean distance among all the distances between each vector v in A t and every point in PF t , \(\overline d \) is the average value of all d i , and k is the number of the objective functions.

4.3 Comparison of different DMOEAs

The other three algorithms that are used to be compared with PDNNIA are DNSGAII-A [3], CSADMO [9], HDMIO [10]. In all the four algorithms, the population uses real number coding and the population size is set to be N = 100. In DNSGAII-A, set the crossover probability p c and the mutation prabability p m to be 1 and 1/n, respectively, where n is the dimensions of decision variables. In CSADMO, the clone proportion is set to be 3. In HDMIO the parameters are set as following: n D = n C = 100, n A = 20, in the DE, CR =0.1, F = 0.5. In PDNNIA, the parameters are set as following: n D = n C = 100, n A = 20, in the DE, CR =0.1. For each DMOP, we measure the performance of the algorithm in different environmental change combinations (τ T , n T ), that is the τ T can take a value of 15 or 25 and n T can take a value of 5 or 10. We run each algorithm 20 times for each test instance in each enrivonmental change combination independently. The algorithms stop when t > 50, i.e., there are 50 environmental changes in each run. Table 5 shows the means and variances of GD and SP obtained by the four algorithms, in which M represents the mean and V represents the variance.

As can be seen from Table 5, for all the six test problems and in all the environmental change combinations (τ T , n T ), PDNNIA can get the best means except for DMOP3 and one environmental change combination of DMOP2, and in most cases, the smallest variance values are also obtained by PDNNIA. This means that PDNNIA performs the best among the four algorithms and has stable performance. The results obtained by PDNNIA have the best the convergence and distribution.

The inverted generational distance(IGD t ) [29] reflects both the convergence and diversity. IGD t is used to evaluate the performance of the algorithms in all 50 iterations (0∼49). I G D t is calculated in the last generation of every time t and is defined as follows:

Let PF t be a set of uniformly distributed points on the true Pareto-optimal front at the t moment, and A t be the approximate Pareto-optimal set of dominant antibodies obtained by DMOEA at the t moment. Where d(v, PF t ) is the minimum Euclidean distance among all the distances between each vector v in PF t and every point in A t , T max is the maximum number of environmental changes in a run.

For each test problem, we compare the IGD t obtained by each algorithm in the whole process of the evolution with the second environmental change combination,i.e. (τ T , n T ) = (25,10), and the results are shown in Fig. 6. In the figure, for convenience, algorithm 1, algorithm 2, algorithm 3 and algorithm 4 represent DNSGAII-A, CSADMO, HDMIO and PDNNIA respectively.

From Fig. 6, we can see that IGD t obtained by PDNNIA always maintains the minimum value in the whole process of evolution for each DMOP. For DMOP1, DMOP2, DMOP4 and DMOP5, the proposed algorithm PDNNIA significantly outperforms the other three algorithms. For DMOP3 and DMOP6, the performance of PDNNIA as good as HDMIO and they get the best results together.

4.4 Experiental study on the modified predictive model and the modified adaptive DE

In the following experiments, we conducted two sets of comparative experiments to test the performance of the two modified operators, i.e., the modified predictive model and adaptive differential evolution operator.

4.4.1 Experiental study on the modified predictive model

The predictive model comes into action at the begining generation of a new environment that used to predict the initial population. In this experiment, the modified predictive model will compare with the other two predictive model, which are shown in Table 6. In Table 6, the predictive strategy of [25] is the original distance model. The predictive strategy proposed in this paper is described in Section 3.1.2. We combine the three preditive models with the same static MOP algorithm, that is the NNIA which based on the modified self-adaptive differential evolution crossover, and get three DMOP algorithm, which are denoted as Prediction 1, Prediction 2 and Prediction 3 respectively. Expect the predictive model, the other operators and parameters of all the three algorithms are set as same as those in Section 4.1.

With the three DMOP algorithm testing the six DMOPs respectively, We use the metric IGD t to evaluate the performance of the three predictive model. We run each algorithm 20 times for each test instance in the second enrivonmental change combination, i.e., (τ T , n T ) = (25,10), independently. There are 50 environmental changes in each run, i.e., T max = 50. The results are shown in Fig. 7.

Because the predictive model is based on the historical information, we only analyze the performance of the three algorithm when t ≥ 3. When 0 < t < 3, the predictive can not come into action, so the three algorithms use the same strategy to initialize the polulation.

We can see from Fig. 7 that, for DMOP1, DMOP2, DMOP4 and DMOP5, the Prediction 3 algorithm significantly outperforms the other two algorithms, this suggests that the model proposed in this paper is significantly better than the other two predictive models. For DMOP3 and DMOP6, the performances of the three predictive models are almost the same. So we can draw a conclusion that the predictive model proposed in this paper has advantages in predicting and tracking the environmental change.

4.4.2 Experiental study on the modified adaptive DE

In this experiment, the modified adaptive DE will compare with the other two DE operator, which are shown in Table 7. DE1 is the original differential evolution operator and the parameter F is set to be 0.5. The DE2 is the adaptive differential evolution operator in [26]. DE3 is the modified adaptive differential evolution operator proposed in this paper. Introduce the three DE operator to the same DMOP algorithm respectively and get three DMOP algorithm, which are denoted as Algorithm 1, Algorithm 2 and Algorithm 3 respectively. Except the DE operator, the other operators and parameters of all the three algorithms are set as same as those in Section 4.1.

With the three algorithms testing the six DMOPs respectively, we run each algorithm 20 times for each test instance in the second enrivonmental change combination, i.e., (τ T , n T ) = (25, 10), independently. There are 50 environmental changes in each run, i.e., T max = 50. We keep a record of the IGD t of each time in one run and evalute the average value of the 50 IGD t , which denoted by \(I\overline G D_{t} \). We figure out the boxplot of \(I\overline G D_{t} \) in 20 independent runs in Fig. 8.

We can see from Fig. 8 that our modified simpler adaptive differential evolution operator can obtain a relatively better values of \(I\overline G D_{t} \) with a better stability for the most problems except for DMOP6. In DMOP6, the algorithm 3 with the DE3 operator obtain the second best result. This shows that our modified simpler adaptive differential DE is effective though it is simple.

5 Conclusion

A novel DMOEA based on the improved predictive model and the modified adaptive differential evolution crossover operator is proposed in this paper. Two sets of comparative experiments are conducted to test the performances of the proposed algorithm and the effectiveness of the two modified operators. Experimental results show that the proposed algorithm is effective and generic for all types of DMOPs, and two modified operators can significantly obtain better performances with respect to the original versions. For the two-objective problems with unchanging PS t and changingPF t or the three-objective problems with changingPS t and changingPF t , the superiority of the proposed algorithm is not very obvious. In the next work, we will analyze which types of problems our algorithm is particularly applicable for and modify the algorithm further to improve the performance of the algorithm on these two types of DMOPs mentioned above.

References

Farina M, Amato P, Deb K (2004) Dynamic multi-objective optimization problems: Test cases, approximations and applications. IEEE Trans Evol Comput 8:425–442

Zitzler E, Deb K, Thiele L (2000) Comparison of multiobjective evolutionary algorithms: Empirical results. Evol Comput 8:173–195

Zeng SY, Chen G, Zheng L, Shi H, Garis HD, Ding LX, Kang LS (2006) A dynamic multi-objective evolutionary algorithm based on an orthogonal design. In: IEEE congress on evolutionary computation, pp 573–580

Goh CK, Tan KC (2009) A competitive–cooperative coevolutionary paradigm for dynamic multiobjective optimization. IEEE Trans Evol Comput 13:103–127

Wang YP, Dang CY (2008) An evolutionary algorithm for dynamic multi-objective optimization. Appl Math Comput 205:6–18

Koo WT, Goh CK, Tan KC (2010) A predictive gradient strategy for multiobjective evolutionary algorithms in a fast changing environment. Memetic Computing 2:87–110

Coello Coello CA, Cortes NC (2002) An approach to solve multiobjective optimization problems based on an artificial immune system, Proceedings of the First International Conference on Artificial Immune Systems, pp 212–221

Freschi F, Repetto M (2005) Multiobjective optimization by a modified artificial immune system algorithm, Proceedings of the Fourth International Conference on Artificial Immune Systems, ICARIS 2005, vol 3627 of Lecture Notes in Computer Science, pp 248–261

Cutello V, Narzisi G, Nicosia G (2005) A class of Pareto archived evolution strategy algorithms using immune inspired operators for ab-initio protein structure prediction, Third EuropeanWorkshop on Evolutionary Computation and Bioinformatics, EvoWorkshops 2005–EvoBio 2005, vol. 3449 of Lecture Notes in Computer Science, pp 54–63

Gong MG, Jiao LC, Du HF, Bo LF (2008) Multi-objective immune algorithm with nondominated neighbor-based selection. Evol Comput 16:225–255

Shang RH, Jiao LC, Gong MG, et al. (2005) Clonal Selection Algorithm for Dynamic Multiobjective Optimization. In: Hao Y. (ed) CIS 2005, Part I, LNCS(LNAI), vol 3801. Springer, Heidelberg, pp 846–851

Zhang ZH (2008) Multiobjective optimization immune algorithm in dynamic environments and its application to greenhouse control. Appl Soft Comput 8:959–971

Zhang ZH, Qian SQ (2009) Multi-objective immune optimization in dynamic environments and its application to signal simulation. In: 2009 International conference on measuring technology and mechatronics automation, vol 3. Hunan, China, pp 246–250

Ma YJ, Liu RC, Shang RH (2011) A Hybrid Dynamic Multi-objective Immune Optimization Algorithm Using Prediction Strategy and Improved Differential Evolution Crossover Operator. In: Lu B.L., Zhang L., Kwok J. (eds) ICONIP 2011, Part II, LNCS, vol 7063. Springer, Heidelberg, pp 435–444

Zhang ZH, Qian SQ (2011) Artificial immune system in dynamic environments solving time-varying non-linear constrained multi-objective problems. Soft Comput 15:1333–1349

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitistmultiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6:182–197

Storn R, Price K (1997) Differential Evolution–A Simple and Efficient Heuristic for global Optimization over Continuous Spaces. J Glob Optim 11:341–359

Abbass HA, Sarker R., Newton C. (2001) PDE, A Pareto-frontier differential evolution approach for multi-objective optimization problems, Proceedings of the Congress on Evolutionary Computation 2001 (CEC2001), vol 2. IEEE Service Center, Piscataway, pp 971–978

Xue F, Sanderson AC, Graves RJ Pareto-based multi-objective differential evolution, Proceedings of the 2003 Congress on Evolutionary Computation (CEC’2003), vol 2003. IEEE Press, Canberra, Australia, pp 862–869

Rolic T, Filipic B (2005) DEMO: Differential Evolution for Multiobjective Optimization. In: Coello CA et al. (eds) EMO 2005, LNCS 3410, pp 520–533

Deb K, Bhaskara UN, Karthik S (2007) Dynamic Multi-Objective Optimization and Decision-Making Using Modified NSGA-II: A Case Study on Hydro-Thermal Power Scheduling. In: Obayashi S et al. (eds) Proceedings of EMO 2007, LNCS, vol 4403. Springer, Verlag, pp 803–817

Zheng BJ (2007) A New Dynamic Multi-objective Optimization Evolutionary Algorithm, In: Third International Conference on Natural Computation (ICNC 2007), pp 565–570

Wei JX , Wang YP (2012) Hyper rectangle search based particle swarm algorithm for dynamic constrained multi-objective optimization problems, In: IEEE World Congress on Computational Intelligence (WCCI 2012), pp 1–8

Hatzakis I, Wallace D (2006) Dynamic multi-objective optimization with evolutionary algorithms: A forward-looking approach. In: Proceedings of Genetic and Evolutionary Computation Conference (GECCO 2006). Seattle, Washington, USA, pp 1201–1208

Zhou AM, Jin YC, Zhang QF, Sendhoff B, Tsang E (2007) Prediction–Based Population Re-Initialization for Evolutionary Dynamic Multi-Objective Optimization. In: Obayashi S. et al. (eds) EMO 2007, LNCS, vol 4403. Springer, Heidelberg, pp 832–846

Qian WY, Li AJ (2008) Adaptive differential evolution algorithm for multiobjective optimization problems. Appl Math Comput 201:431–440

Van V (1999) A. D, Multi-Objective evolutionary algorithms: Classification, analyzes, and new innovations, Ph.D. Thesis. Wright-Patterson AFB. Air Force Institute of Technology

Schott JR (1995) Fault tolerant design using single and multictiteria gentetic algorithm optimization, Master thesis. Massachusetts Institute of Technology

Zhang QF, Li H (2007) MOEA/D: A multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evol Comput 11:712–731

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Liu, R., Fan, J. & Jiao, L. Integration of improved predictive model and adaptive differential evolution based dynamic multi-objective evolutionary optimization algorithm. Appl Intell 43, 192–207 (2015). https://doi.org/10.1007/s10489-014-0625-y

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-014-0625-y