Abstract

Dynamic multiobjective optimization problems exist in daily life and industrial practice. The objectives of dynamic multiobjective optimization problems conflict with each other. In most dynamic multiobjective optimization algorithms, the decision variables are optimized in the same way, without considering the different characteristics of the decision variables. To better track Pareto-optimal front and Pareto-optimal set at different times, a dynamic multiobjective optimization algorithm based on decision variable relationship (DVR) is proposed. Firstly, the decision variables are divided into two categories based on the detection mechanism of the contribution of decision variables to diversity and convergence. Secondly, different optimization methods are used for different types of decision variables. And a diversity maintenance mechanism is proposed. Finally, the individuals generated by these two parts and the perturbed individuals are combined. The combination individuals are nondominated sorted to form a population in the new environment. To verify the performance of the proposed algorithm, DVR is compared with five state-of-the-art dynamic multiobjective optimization evolutionary algorithms on 15 benchmark instances. The experimental results show that the DVR algorithm obtains 24 inverse generation distance optimal values in 45 groups of test data.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Dynamic multiobjective optimization problems (DMOPs) [1, 2] have gotten extensive attention in theoretical research and engineering fields, such as production scheduling [3], machine learning [4, 5] and so on. DMOPs are time (environment)-dependent optimization problems in which the objective function and decision variables are time (environment)-dependent, and the optimal individual changes dynamically with time. Pareto-optimal front (PF) and Pareto-optimal set (PS) are dynamic or fixed with the change of environment. Due to the dynamic change of the environment, it is difficult to solve the dynamic multiobjective optimization problems [6].

At present, there are many optimization algorithms. Optimization algorithms include evolution algorithms (EA), swarm intelligence algorithms, human-based algorithms, and physic-based and chemistry-based algorithms. Evolutionary algorithm mainly simulates the evolution rule of survival of the fittest in nature to achieve the overall progress of the population and finally complete the solution of the optimal solution. Among them, genetic algorithm (GA) [7] and differential evolution (DE) [8] are the main representatives. The swarm intelligence optimization algorithm simulates the wisdom of the group to achieve the global optimal solution. In this algorithm, each population is a biological population. It mainly includes particle swarm optimization (PSO) [9], ant colony optimization (ACO) [10], etc. Human-based algorithms mainly include teaching learning-based optimization (TLBO) [11], harmony search (HS) [12], etc. The algorithms based on physics and chemistry mainly come from the physical rules and chemical reactions in the universe. It mainly includes: simulated annealing (SA) [13], gravitational search algorithm (GSA) [14], etc. Among many optimization algorithms, EA and PSO algorithm have been the consistent pursuit of researchers because of their high development efficiency, strong robustness and other advantages.

In order to solve DMOPs, many dynamic multiobjective evolutionary algorithms (DMOEAs) have been proposed in recent years. The majority of DMOEAs are divided into one of two categories: prediction method [15,16,17] or diversity method [18, 19]. The prediction-based approach makes use of a prediction mechanism to give the population evolution a direction after every environmental change, enabling the algorithm to react quickly to the new change. The main drawback of the prediction-based algorithms is that they completely depend on the training model, and the training data cannot accurately represent the real situation. The population’s diversity can be preserved or increased using the diversity-based approach, but if diversity is merely increased randomly, the speed of convergence will be slow. Therefore, the design using a mix of diversity introduction and prediction methods can further make up for the above-mentioned defects. Nowadays, the majority of DMOEAs evolve decision variables in the same way without taking into account their various characteristics, and they struggle to strike a good balance between population diversity and convergence [20]. Recently, there have been related article based on decision variable analysis (DVA), which the decision variables are divided into different types by determining the dominant relationship between perturbed individuals [21]. However, mixed decision variables are simply treated as diversity-related variables by DVA, and variable classification strategy cannot further distinguish them. Because some mixed decision variables contribute more to diversity than convergence while others do the opposite, it is still possible to further categorize them. Based on the above problem, a mechanism to detect the magnitude of contribution of decision variables to diversity and convergence is proposed. There are currently two drawbacks to the angle-based classification method for decision variables [22]. First, when calculating the angle, it uses only the normal of the hyperplane \(f_{1}+f_{2}+\cdots +f_{m}=1\) as the direction of convergence, which cannot accurately distinguish the mixed decision variables with complex shape frontier. Therefore, it is essential to take weight vector guidance into account when examining the relationship between decision variables. Second, it is impossible to further distinguish the magnitude of contribution to convergence when various dimensional decision variables have the same projection length or angle. By combining the angle and projection length between the fitting line and the weight vector, the decision variables are further categorized.

In this study, a dynamic multiobjective evolutionary algorithm based on decision variable relationship (DVR) is proposed. The algorithm classifies decision variables using the detection mechanism of the contribution of decision variables to diversity and convergence in combination with the guidance of reference vector, and uses various evolutionary strategies for various types of decision variables to better balance diversity and convergence. The decision variable classification, prediction methods, and diversity introduction methods are all combined in the DVR algorithm.

The main contributions of this study are stated below:

-

DVR approach uses the detection mechanism of the contribution to diversity and convergence, and divides the decision variables into two categories: convergence-related variables (CV) and diversity-related variables (DV). Combined with historical information, different types of decision variables adopt different evolutionary strategies to improve the convergence and diversity of the population.

-

For convergence-related variables, a multi-region prediction strategy that divides subregions based on guide individuals is proposed. New solutions are generated based on the evolutionary direction of the guide individuals.

-

To increase the diversity of individuals, a multi-regional diversity maintenance strategy is proposed. The ratio of the distance from the guide individuals to the upper and lower boundaries is calculated in the subregion to explore new individuals nearby.

Each section of this paper is described as follows. Some related work is introduced in Sect. 2. Section 3 describes the content of the proposed DVR algorithm. In Sect. 4, the test examples and performance metrics we used are described. Section 5 analyzed the experimental results of the proposed algorithm and the comparison algorithm. In Sect. 6, we summarize the whole article and give the next work.

2 Related work

In general, the minimization dynamic multiobjective optimization problems [23] are considered, which is defined as follows:

where \(x=\left\{ x_{1},x_{2}, \ldots ,x_{n} \right\}\) is the decision variable in decision space \(\Omega\) and n is the dimension of decision space; \(F(x,t)=\left( f_{1},f_{2},\cdots ,f_{m} \right)\) represents the objective function to be minimized and m is the dimension of objective space. \(g(x,t)\leqslant 0\) and \(h(x,t)=0\) are inequality constraints and equality constraints. The time or environment variable t is defined as: \(t=(1/n_{t})\left\lfloor \tau /\tau _{t} \right\rfloor\), where \(\tau\) is iteration counter, \(n_{t}\) is intensity of environmental change, and \(\tau _{t}\) is change frequency. There are some definitions of DMOPs as follows:

Definition 1

Pareto dominance, let p and q be any two solutions in decision space \(\Omega\), where solution p dominates solution q, if and only if \(f_{i}(p)\leqslant f_{i}(q)\), \(\forall i \in \left\{ 1,2,\ldots , m \right\}\) and \(\exists f_{j}(p)< f_{j}(q)\), \(j \in \left\{ 1,2,\ldots ,m\right\}\), written as \(f_{i}(p)\prec f_{i}(q)\).

Definition 2

The Pareto-optimal solution (PS), x is the decision vector; \(\Omega\) is the decision space. A solution \(x^{*} \in \Omega\) is Pareto-optimal solution if and only if there is no other \(x \in \Omega\), \(x \prec x^{*}\). At time t, the PS is the set of all Pareto-optimal solutions and can be defined as:

\(PS=\left\{ x^{*} \in \Omega \mid \lnot \exists x \in \Omega , x\prec x^{*} \right\}\).

Definition 3

The Pareto-optimal front (PF), the set of the objective vectors at time t, is denoted by \(PF=\{F(x) \mid x \in PS\}\).

The key to solving DMOPs is to achieve fast convergence and uniform distribution. Most of the existing DMOEA are built by combining the classic multiobjective evolutionary algorithm (MOEA) [24,25,26] with efficient dynamic technology, which can be roughly divided into the following three types.

-

(A)

Diversity strategy Depending on the potential for diversity enhancement, diversity-based methods can be divided into two categories [27]: diversity protection [28] and diversity introduction [29]. The diversity protection method only depends on the static evolution to generate a collection of optimal solutions, it lacks the convergence ability. The latter method takes into account the population’s potential diversity loss in a dynamic environment. Once a change in the environment has been detected, random or specific individuals are introduced. Based on a fast and elitist multiobjective genetic algorithm (NSGA-II) [24], Deb and Karthik [30] proposed two DMOEAs (Dynamic multiobjective optimization and decision-making using modified NSGA-II:DNSGA-II-A and DNSGA-II-B). When environmental changes are detected, some randomly reinitialized individuals will be generated in DNSGA-II-A, while a portion of the existing population will be replaced with mutants in DNSGA-II-B. Ruan et al. [31] proposed a hybrid method for maintaining diversity. Using the gradual search method between the minimum and maximum points, evenly distributed solutions are produced to increase the population’s diversity.

-

(B)

Memory strategy The memory-based method [32, 33] responds to the dynamic environment by recording the historical information and directly reusing the optimal solution in the historical information [34]. Chen et al. [35] proposed a dynamic double archive evolutionary algorithm, which maintains two coevolutionary populations at the same time. The two groups are complementary: one pays more attention to convergence and the other pays more attention to diversity. Once the environment changes, the composition of the two populations will be reconstructed adaptively. In addition, the two populations interact through mating selection mechanism. Zhang et al. [36] decomposed a dynamic multiobjective optimization problem into multiple dynamic scalar optimization subproblems and optimized these subproblems at the same time. Since neighboring subproblems have similar optimal solutions, each subproblem is optimized by using the current information of its neighboring subproblems. The strategy based on memory can transfer the information from the historical environment to the new environment, which can deal with the problem of periodic change; When the environment changes dramatically, the strategy will lead to inaccurate prediction results [37].

-

(C)

Prediction strategy After the environment changes, the prediction-based method is used to guide the population evolution [38, 39], and the history information is used to initialize the population in the new environments [5, 40, 41]. Zhou et al. [42] proposed a population-based prediction strategy (PPS) in 2014 that divides the Pareto solution set into two parts: central point and manifold. Through the autoregression (AR) model, the center point of the continuous time series is used to predict the center of the next time, and the manifold of the previous time is used to estimate the manifold of the next time. Therefore, when the environment changes, PPS obtains the population at the next time by combining the prediction center and estimation manifold. The quality of the prediction is not high as there is insufficient historical information about previous environmental changes. However, with the operation of the algorithm and the accumulation of historical information, the prediction accuracy will continue to improve. Rong et al. [43] proposed a multi-directional prediction strategy. This method selects representative individuals through clustering method, and determines the number of representative individuals depending on the severity of PF and PS changing with the environment. And it predicts new location of PS through the evolutionary direction of multiple representative individuals in decision space, so as to reinitialize the population. Ma et al. [44] proposed a multi-region prediction strategy. The number of decompositions of the objective space according to the severity of the changes in adjacent moments, and multiple evolutionary directions are determined according to the center point of the subregion. In the above references, the prediction method shows the ability to improve the convergence speed.

From the above three studies of dynamic multiobjective evolutionary optimization, we can see that all three approaches have their own advantages in solving DMOPs. In this paper, the decision variables are classified into different types and different optimization methods are used for the two decision variables. The combination of prediction-based and diversity-based methods further improves the quality of the solution. A specific description of the proposed algorithm will be given in Sect. 3.

3 Proposed DVR algorithm

A dynamic multiobjective optimization algorithm based on decision variable relationship is proposed to generate solution close to PF in a changing environment. The algorithm mainly analyzes the influence of each decision variable according to the magnitude of its contribution to convergence and diversity. The decision variables are divided into two categories: convergence-related variables and diversity-related variables, and adopts different prediction strategies for each of the two types of decision variables. In addition, a diversity maintenance approach is presented to further uniformize the individual distribution. The algorithm primarily consists of the following steps, which are described below: decision variable relationship, classification prediction strategy, and diversity maintenance strategy.

3.1 Decision variable relationship method

The convergence relevance degree (CRD) value is used to measure the contribution of each decision variable to convergence and diversity. The CRD value for each decision variable contains two factors: the angle \(\theta\) and projection length LN. First, the population P is re-initialized with N individuals, and n weight vectors \(\lambda =\left\{ \lambda _{1}, \lambda _{2}, \ldots , \lambda _{n}\right\}\) are generated uniformly in the objective space [45]. Weight vectors \(\lambda _{i}\) indicate the direction of convergence. Next, the Euclidean distances between individuals in the population and the n weight vectors are calculated separately. And the nSel candidate individuals \(x_{ij}, i\in \left\{ 1,2,\ldots ,n\right\} , j\in \left\{ 1,2,\ldots ,nSel \right\}\) with the smallest distance from each weight vector \(\lambda _{i}\) are selected. Therefore, the total number of candidate individuals is \(n*nSel\). Finally, perturbations are performed on the ith dimension of nSel candidate individuals respectively. The perturbation value is taken as a random value within the upper and lower boundaries, and the other dimensions remain unchanged. The perturbed individuals are normalized, as shown in Eq. 2, where \(Y_{ij}^{new}\) represents the normalized objective function value, \(Y_{ij}\) represents the current objective function value, \(Y_{\min }\) represents the minimum objective function value of this dimension, \(Y_{\max }\) represents the maximum, and \(Y_{mean}\) represents the average. As shown in Eq. 3, using the singular value decomposition method to calculate the direction vector of fitting line \(L_{ij}\). As shown in Eqs. 4 and 5, the angle \(\theta _{ij}\) and projection length \(LN_{ij}\) between the fitting line \(L_{ij}\) and the weight vector \(\lambda _{i}\) are calculated. \(CRD_{ij}\) corresponding to each dimensional decision variable is calculated. The number of \(CRD_{ij}\) is the same as the number of candidates. To calculate the contribution of each variable to convergence and diversity, the CRD contains two parts: the angle \(\theta\) and projection length LN. Specifically, the calculation of CRD value is shown in Eq. 6. Where, \(\theta _{ij}\) and \(LN_{ij}\) represent the angle and projection length between the fitted line \(LN_{ij}\) and weight vectors \(\lambda _{i}\). \(\theta _{max}\) and \(\theta _{min}\) represent the maximum and minimum values of the current generation.

As shown in Fig. 1a, in this example, for each weight vector, two nearest candidate individuals \(x_{i}^{near1}\) and \(x_{i}^{near2}\) are selected. As shown in Fig. 1b, the i-th dimension of two candidate individuals \(x_{i}^{near1}\) and \(x_{i}^{near2}\) are perturbed six times, and the perturbed individuals are normalized to produce the fitted straight lines \(L_{i1}\) and \(L_{i2}\). The angle \(\theta _{i1}\), the projection length \(L_{i1}\) and the angle \(\theta _{i2}\), the projection length \(L_{i2}\) between the fitted lines \(L_{i1}\) and \(L_{i2}\) and the weight vector \(\lambda _{i}\) are calculated, respectively. The CRD values of the two candidates (\(CRD_{i1}\) and \(CRD_{i2}\)) are calculated by Eq. 6.

A smaller CRD value represents a larger contribution to convergence than to diversity. The “smaller CRD value” refers to the cluster where the average CRD value of the two clusters is closer to the origin. This is because the angle reflects the search bias for the direction of convergence, and a smaller angle indicates a greater contribution of the decision variable to the direction of convergence. The projection length LN indicates the search intensity along the convergence direction, and a larger projection length means a larger perturbation in the convergence direction. Figure 1c presents the calculated CRD values when the dimension of the decision variable is five and two candidate individuals are selected for perturbation in each dimension. The CRD values are divided into two categories using the k-means clustering method: the category with the smaller CRD value, which decision variables contributes less to diversity than convergence, is classified as convergence-related variables, and the rest ones are classified as diversity-related variables. The perturbed individuals are stored in C. The number of individuals in C is \(n *nSel *nPer\). The pseudo-code of decision variable relationship method is given in Algorithm 1.

3.2 Classification prediction strategy

When an environmental change is noticed, the proposed prediction strategy finds solutions that approximate the true Pareto solution set. The difference with other prediction strategies is that the proposed prediction strategy is classified according to the type of decision variables in each dimension at the previous moment (t) and the current moment (\(t+1\)). If the ith dimensional decision variable is convergence decision variable at moment t and diversity decision variable at moment \(t+1\), or diversity decision variable at moment t and convergence decision variable at moment \(t+1\), the historical information is not useful for optimizing the population. Therefore, the ith dimensional decision variable uses a random initialization strategy. When both the t moment and the \(t+1\) moment are diversity decision variables, an adjustable precision mutation operator strategy [46, 47] is used. When both the t moment and the \(t+1\) moment are convergence decision variables, a multi-region-guided individual prediction strategy is proposed.

3.2.1 Adjustable precision mutation operator Strategy

When the decision variable \(x_{i}\) is a diversity decision variable at both the previous and current moments, an adjustable precision mutation operator prediction strategy is used to obtain new individuals with the required search precision for exploiting and exploring the decision space. For a population of an individual \(x=\left[ x_{1}, x_{2}, \ldots , x_{N}\right] ^{T}\), \(x_{i}\) is the ith decision variable of x. It is necessary to exploit individuals near x and explore individuals far from x.

where the regulation accuracy of the individuals around the exploit x is controlled by the parameter p. And when p is set to 1, the \(\frac{1}{10^{p}}\) equals 0.1. The value of random (9) function can generate a random integral number between 1 and 9, the difference between \(x_{i}^{{'}}\) and \(x_{i}\) ranges from 0.1 to 0.9. From Eq. 7, it can be concluded that this mutation operator can generate a little mutation in the initial value, in order to exploit the individuals near x.

where the regulation accuracy of the individuals around the explore x is controlled by the parameter q. When q is set to 1, the \(10^{q}\) equals 10, The value of random(9) function can generate a random integral number between 1 and 9, the difference between \(x_{i}^{{'}}\) and \(x_{i}\) ranges from 10 to 90. From Eq. 8, the mutation operator can produce a large mutation on the initial value, which is helpful for global search to jump out of the local optimum and increase the diversity of the population.

Equations 7 and 8 can generate individuals with values less than \(x_{i}\) as well as individuals with values greater than \(x_{i}\). To make the local search and global exploration random, a random number r from 1 to 4 is generated. \(Q_{dv}\) is equal to the population number of P, so the size of \(Q_{dv}\) is N. The pseudo-code is given in Algorithm 2.

3.2.2 Multi-regional prediction strategy

The multi-region prediction method is used when the decision variable \(x_{i}\) is a convergence decision variable at both the current and the previous moment. \(Q_{dv}\) is equal to the population number of P, so the size of \(Q_{dv}\) is N. The pseudo-code of multi-region prediction strategy is given in Algorithm 3.

-

(A)

Identify the guide individuals

Guide individuals to divide all individuals in the population into different groups can better represent the shape and distribution of PF. The extreme point of a dimension is the farthest in decision space, so it can well describe the position of PS; The sampling points combine the PS center point with the corresponding PF extreme points and equally spaced sampling points as the guide individual. Therefore, the guiding individual is composed of boundary point [48], center point [49] and sampling point. The following three parts are introduced in detail.

-

(1)

Boundary point

For the minimum problems, the boundary points are the individuals with the minimum value in a certain dimension of the objective space. If the dimension of the objective space is three or more, the number of boundary points will be three or more. As shown in Fig. 2, the blue individual represents the boundary individuals.

-

(2)

Center point

The following is the Eq. 9 for calculating the center point at time t:

where \(PS^{t}\) is the Pareto set at time t, \(\left| PS^{t} \right|\) is the number of intermediate solutions and \(x_{i}^{t}\) is the ith solution in \(PS^{t}\). The purple individual in Fig. 2 is the center point.

-

(3)

Sampling point

All sample values are sorted by the system sampling method in equal intervals from small to large. The ratio of the total number of individuals to the number of sampling points is rounded down to be equal to k, and the segmentation interval is determined to be k. In the first segment (1, k), the first sampling point is selected by simple random sampling, and then the samples are taken according to the step k for equal interval sampling. As shown in Fig. 2a, a dimension of the objective function is randomly selected, the objective function values are ranked from smallest to largest, and sampling points are selected among nondominated individuals using the systematic sampling method. In Fig. 2, the green points represent the sampling individuals. As the sampling point randomly selects a dimension in the objective space for sampling, the principle of bi-objective and tri-objective instance is the same.

-

(B)

Subregional division

As shown in Fig. 2b, the Euclidean distance between the nondominant individuals and the guide individuals is calculated, and the individuals are divided into the region where the guide individual is nearest. Due to the fact that guide individuals can represent the general distribution of PF in the subregion, they provide a better indication of the distribution of the data than the mean.

-

(C)

Determine the evolutionary steps

Using multiple guide individuals instead of just centroid for prediction is called multi-region prediction. The evolutionary direction of each individual represents the evolutionary direction of all individuals in the region. Considering both prediction accuracy and computational cost, multiple time-series models are constructed to predict the evolutionary direction of multiple subregions \(\Delta c_{i}^{t}\) according to the previous data supplied by the guided individuals in the first two moments. As shown in Fig. 3, the set of individual solutions \(C^{t}=\left\{ c_{1}^{t},c_{2}^{t},\cdots ,c_{k}^{t} \right\}\) and \(C^{t-1}=\left\{ c_{1}^{t-1},c_{2}^{t-1},\cdots ,c_{l}^{t-1} \right\}\), \(c_{i}^{t}\) is any individual in \(C^{t}\) and \(c_{j}^{t-1}\) is any individual in \(C^{t-1}\). First, we select individual \(c_{j}^{t-1}\) from all nondominated individuals at time \(t-1\), which is closest to the ith guide individual \(c_{i}^{t}\) at time t, and \(c_{j}^{t-1}\) is the parent of \(c_{j}^{t}\). Therefore, the evolutionary step size of each region guide individual is shown in Eq. 10.

where k denotes the number of guided individuals and the number of subregions. l denotes the number of nondominated solutions at moment \(t-1\).

-

(D)

Generation of the prediction individuals

As shown in Fig. 3, the positions of other individuals in the region at moment \(t+1\) are predicted by guiding the evolutionary step of individual \(C^{t}\) by Eq. 11.

where \(i=1,2,\ldots ,k\), \(\varepsilon ^{t}\sim N(0,\sigma ^{t})\), \(\varepsilon ^{t}\) is a normal distribution random number with mean value of 0 and variance of \(\sigma ^{t}\). The calculation of \(\sigma ^{t}\) is shown in Eq. 12:

where \(x_{i}^{t}\) is the ith guide individual at time t and \(x_{i}^{t+1}\) denotes the predicted individual for the \(t+1\)-th environmental change. The individuals in each subregion at moment \(t+1\) are generated according to the evolutionary direction of the guiding individuals in their subregion.

3.3 Multi-regional diversity maintenance strategy

Diversity maintenance strategy plays an important role in dynamic optimization. In a dynamic environment, the environment keeps changing over time. And at each period of environmental change, the population evolves toward adapting to the environment, which tends to lead to a local optimum. Therefore, a variety of individuals need to added to boost the diversity of the population. When the environment changes, the diversity maintenance strategy serves the role of introducing varied individuals. Therefore, we propose a multi-regional diversity maintenance strategy. Dividing the objective space into k subregions according to the guide individuals. As shown in Fig. 4, in the decision space, the distances to the upper and lower boundaries are calculated for each dimension of the guide individuals in each subregion by Eqs. 13, 14, where the ratio of the relatively smaller distance to the larger distance is calculated according to Eq. 15. The new individuals are generated by calculating each subregion individual by Eq. 16. The population number of R is N. The pseudo-code is given in Algorithm 4.

where \(x_{ij}^{t}\) is the jth dimensional decision variable of the ith individual at time t, \(x_{ij}^{t+1}\) denotes the predicted solution of the jth dimensional decision variable of the ith solution at time \(t+1\). \(N\left( 0, d_{ij}\right)\) is a normal distribution random number with mean value of 0 and variance of \(d_{ij}\).

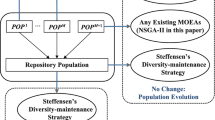

3.4 Framework of DVR

The MOEA/D framework is included into the classification prediction method and multi-regional diversity maintenance strategy, which are provided for Algorithm 5. Chebyshev [36] is the decomposition method. Each weight vector has equal weights to guarantee that they are spreading uniformly.

First, step 1 randomly initializes the individuals in the population. Second, when an environmental change is recognized and t is larger than 1, the population is reinitialized using the change response method of the DVR (steps 5–10). Step 5 is the classification of decision variables according to Algorithm 1. Step 6 represents the prediction of diversity-related decision variables. Step 7 is to generate a new solution Q using a multi-region prediction method for convergent decision variables. Step 8 is to generate new populations R using a multi-regional diversity maintenance strategy. For step 9, combined with the prediction strategy, the diversity method obtains the population and perturbed individuals, and sets the combined population as \(P_{ar}\). For step 10, generated individuals are mixed with the individuals, and the nondominated sort [24] is used to select N individuals with superior convergence and diversity to improve the prediction accuracy. Step 14 shows that the population is optimized using MOEA/D-DE if the environment has not changed. Step 16 is to check if the maximum number of iterations is reached, if not then continue the loop, if it is reached then stop the iteration and output the final population \(P_{new}\).

3.5 Complexity of the DVR framework

DVR algorithm is made up of two parts: static optimization and dynamic response. The computational complexity of static optimization of DVR algorithm is \(O(MN^{2})\), where M is the number of objectives space and N is the population size. The computational cost of the change response is mainly involved in decision variable relationship, classification prediction approach, and diversity maintenance mechanism. In Algorithm 5, the computational complexity of decision variable relationship (line5) is O(nN), where n is the dimension of decision space. It takes O(nN) to calculate the distance from the individual to the weight vector. The computational complexity of calculating the angle and projection length between the weight vector and the fitting line is \(O(n *nSel)\). The computational complexity of the computational complexity adjustable precision mutation operator strategy (line6) is O(N) and multi-regional prediction strategy (line7) is \(O(k *n_{cv})\). The diversity maintenance strategy requires O(kn) computations. As a result, the computational complexity of dynamic response is O(nN). To sum up, the overall computational complexity of DVR algorithm is \(O(MN^{2})\).

4 Benchmark instances and test indicators

4.1 Benchmark instances

15 typical test functions are used for experimental simulations to demonstrate the performance of the proposed algorithm. The test problems include F test set [49] (F1–F10) and DF test set [50] (DF1–DF5). F test set, which characterizes more complex real-world optimization problems that include linear and nonlinear correlation problems with decision variables. Among them, the decision variables of F1–F4 are linearly correlated and those of F5–F10 are nonlinearly correlated. F4 and F8 are three-dimensional objective functions, and the other F test problems are two-dimensional objective functions. In terms of environmental changes, F1–F8 are affected by continuously changing environments, and F9 and F10 are affected by sharp environmental changes. DF1–DF5 are test problems with two objectives. DF1 is a variant of dMOP2. The PS of DF1 undergoes a simple change, with the PF changing from concave to convex. The ability of the algorithm to follow concave and convex variations is evaluated using this test problem. DF1 is a variant of dMOP3. DF2 changes over PS, while PF does not change with time. DF3 is a variant of ZJZ. The concavity–convexity of DF3 varies over time, and the variables are correlated. The PS length and position of DF4 change with time, and the length and curvature of PF also change with time. The PS structure of DF5 is simple, but the shape of PF changes with time. As shown in Table 1, the above DMOPs can be classified into three types [51].

4.2 Test indicators

To determine whether an algorithm can solve DMOPs efficiently and assess the diversity and convergence of individuals, the performance of algorithm needs to be quantified with a performance metric function [52]. We use the mean inverse generation distance (MIGD) [42, 53], the mean hypervolume difference (MHVD) [54] and the Schott’s mean spacing metric (MSP) [41]. The details of these three performance indicators are as follows:

-

(1)

In addition to reflecting the convergence of MOEA, the inverse generation distance (IGD) indicator is also a good reflection of the uniformity and breadth of the distribution of MOEA. Let \(P^{t^{*}}\) represent a set of uniformly distributed Pareto-optimal points in the \(PF^{t}\). Let \(P^{t}\) be an approximate set of \(PF^{t}\). The definition of IGD metric is shown in Eq. 17:

$$\begin{aligned} {\text {IGD}}\left( P^{t^{*}}, P^{t}\right) =\frac{\sum _{j \in P^{t^{*}}} d \overline{(j)}}{\left| P^{t^{*}}\right| } \end{aligned}$$(17)where \(\left| P^{*}\right|\) is the cardinality of \(P^{t^{*}}\); \(d(\bar{j})=\min _{i \in P^{\prime }}\Vert \bar{j}-\bar{i}\Vert\) represents the minimum Euclidean distance from the point on approximate PF to the individual in the true PF. If the IGD value is small, it means that the solution set is well converged and uniformly distributed. Since IGD is mostly used to evaluate the static algorithm performance, the mean inverse generation distance is proposed by Zhou et al. [42]. MIGD is defined as follows:

$$\begin{aligned} {\text {MIGD}}\left( P^{t^{*}}, P^{t}\right) =\frac{1}{|T|} \sum _{t \in T} {\text {IGD}}\left( P^{t^{*}}, P^{t}\right) \end{aligned}$$(18)where \(t\in T,T=\left\{ 1,2,\ldots \right\}\) is a set of discrete time points in a run, and \(\left| T \right|\) is the cardinality of T.

-

(2)

The hypervolume difference (HVD) metric calculates the hypervolume difference in the objective space from the true PS to the approximate PS provided by the algorithm. The expressions are as follows:

$$\begin{aligned} {\text {HVD}}\left( P_{*}^{t}, P^{t}\right) =\left| {\text {HV}}\left( P_{*}^{t}\right) -{\text {HV}}\left( P^{t}\right) \right| \end{aligned}$$(19)where \(HV(P_{*}^{t})\) is a constant. A smaller HVD value indicates a better approximation. MHVD metric is the average value of the HVD at each generation in a certain run.

$$\begin{aligned} {\text {MHVD}}\left( P_{*}^{t}, P^{t}\right) =\frac{1}{|T|} \sum _{t \in T} {\text {HVD}}\left( P_{*}^{t}, P^{t}\right) \end{aligned}$$(20)where HV(S) is the hypervolume of the set S. \(z^{*}=\left( z_{1}^{*}, \cdots , z_{m}^{*}\right)\) is the reference point, m is the dimension of objective space. The reference point is set to \(\left( y_{1}^{t}+0.5, \cdots , y_{m}^{t}+0.5\right)\) to calculate the hypervolume. Herein, \(y_{i}^{t}\) is the maximum value of the ith objective function value of the true PF at time t.

-

(3)

The spacing metric (SP) metric is used to measure the distributivity of the approximate Pareto solution set obtained by the algorithm. SP is calculated as follows:

$$\begin{aligned} \rm{SP}=\sqrt{\frac{1}{\left| P^{t}-1\right| } \sum _{i=1}^{\left| P^{\prime }\right| }\left( D_{i}-\bar{D}\right) ^{2}} \end{aligned}$$(21)

where the Euclidean distance between the ith person in \(P^{t}\) is denoted by \(D_{i}\) and the closest solution to it. \(\bar{D}\) is the average of \(D_{i}\). \(D_{i}\) is calculated as shown below.

When SP = 0, the individuals in \(P^{t}\) are uniformly distributed on the Pareto front. The average of SP over a time period T is defined as MSP. MSP is calculated as shown below.

Just like the SP, a smaller MSP metric value means that the solution obtained by the algorithm is more uniformly distributed and the algorithm is better distributed.

5 Experiment setting and result analysis

5.1 Compared algorithm

The proposed algorithm DVR is compared to four existing state-of-the-art algorithms in this section.

-

(1)

PPS [42]: A population-based prediction strategy that divide a PS into two parts: center point and a manifold. Through the autoregression (AR) model, the center point of the continuous time series is used to predict the center of the next time, and the manifold of the previous time is used to estimate the manifold of the next time.

-

(2)

SVR [55]: Evolutionary dynamic multiobjective optimization assisted by a support vector is a nonlinear mapping of historical individuals to a high-dimensional feature space and linear regression in that space.

-

(3)

MDP [43]: Multidirectional prediction approach clusters populations into a number of representative groups, where the number of clusters is adapted depends on the intensity of the environmental change.

-

(4)

DMOES [47]: Multiobjective evolution strategy uses the simulated isotropic magnetic particles niching to guide individuals to maintain uniform distance and range, thus automatically approximating the entire Pareto front.

-

(5)

DVA [21]: The prediction strategy based on decision variable analysis divides decision variables into two categories. The decision variables are divided into convergence-related variables and diversity-related variables according to the dominance relationship between the perturbed individuals. But the mixed decision variables are simply classified as diversity convergence variables.

5.2 Parameter setting

In the experimental part, all algorithms use MOEA/D-DE as their optimizer, allowing us to compare their dynamic performance objectively. The decomposition method is Chebyshev decomposition method. Chebyshev method is a nonlinear multiobjective aggregation method. The method generates a group of weight vectors and reference points uniformly in the objective space, and divides the multiobjective optimization problem into multiple single objective subproblems, each of which is assigned an individual. The individuals in the neighborhood are updated by the size of the aggregation function value, and all Pareto-optimal solutions are saved. This method can not only ensure the convergence of the population, but also ensure the good diversity of the population. The number of individuals for test problems is \(N=100\). The dimensions of decision variables are \(n=\) 10. In addition, the crossover probability \(CR=\) 1 and mutation probability \(F=\) 0.5 are differential evolution parameters. The intensity of environmental change is \(n_{t}=10\). The change frequency is \(\tau _{t}=\) 5,10,20 in each test instances. The maximum number of generations in each run is set to be \(100*\tau _{t}\), ensuring that each experiment sees 100 environmental changes. To generate statistically significant results, we execute each algorithm runs 20 times on each test problem. The following are the detailed parameter settings for five algorithms.

-

(1)

PPS [42]: It uses AR (p) model and the order is \(p=\) 3 and the length of history mean point series is M = 23.

-

(2)

SVR [55]: Settings for the SVR-based predictor: the dimension of the input samples \(q=\) 4; regularization constant \(C=\) 1000; precision parameter representing the radius of the tube located around the regression function \(\varepsilon =\) 0.05.

-

(3)

MDP [43]: The number of subregions is K, the lower bound is M+1, and the upper bound is 3M. Where M is the dimension of the objective function.

-

(4)

DMOES [47]: The parameter \(p=\) 3 that controls the accuracy of the mining search and the parameter \(q=\) 1 that controls the exploration search accuracy.

-

(5)

DVA [21]: The number of solutions generated by Latin hypercube sampling method combined with central point prediction method \(N_{1}=\) 0.8 and the number of solutions generated by combined random sampling method and central point prediction method \(N_{2}=\) 0.2. Threshold value \(\sigma =\) 0.9998.

-

(6)

DVR: The number of perturbed individuals nSel for each dimension of the decision variable is set to 2 and the number of perturbations nPer is set to 4. The number of subregions is \(k=\) 6. The parameter settings of adjustment accuracy \(p=\) 3 and \(q=\) 1 are the same as those in DMOES [47].

5.3 Comparison of different parameter settings

The number of candidate individuals nSel, the number of perturbations nPer, and the number of subregions k, are determined from the following experimental results.

-

(1)

Setting different candidate numbers nSel result comparison: in order to determine whether the number of perturbed candidate individuals (nSel) has an impact on the classification of CRD calculated by the algorithm, F1 and F5 test functions are selected for experimental analysis when \(\tau _{t}=30\). Figures 5 and 6 show the classification results of two candidates (\(nSel=\) 2) and three candidates (\(nSel=\) 3), respectively. The specific classification results are as follows. It can be seen from Figs. 5 and 6 that in F1, the convergence decision variables are all \(x_{1},x_{2},x_{3},x_{7},x_{8},x_{9}\), and the diversity decision variables are all \(x_{4},x_{5},x_{6},x_{10}\); In F5, the convergence decision variables are all \(x_{5},x_{6}\), and the diversity decision variables are all \(x_{1},x_{2},x_{3},x_{4},x_{7},x_{8},x_{9},x_{10}\). It can be concluded that the number of perturbed candidate individuals has no effect on the classification of calculated CRD. Therefore, since the computational complexity of selecting two candidates is lower than that of three candidates, this paper selects two candidates.

Fig. 5 -

(2)

Comparison of nPer results with different disturbance times: in order to judge the influence of the disturbance number nPer on the performance of DVR algorithm, nPer is set to 2, 3, 4, 5, 6, 7, when \(\tau _{t}=\) 10, \(nSel=\) 2, \(k=\) 6, respectively. The settings of other parameters are the same as those in Sect. 5.2. Table 2 shows the MIGD values of DVR algorithm on test functions F1, F5, DF1 and DF2 when the disturbance number nPer is set with different parameters. Table 2 shows that when \(nPer=\) 4, the MIGD values of the DVR algorithm run 20 times on the test functions F1, F5 and DF2 are the minimum. The best results in the Table 2 are shown in bold. The smaller the MIGD value, the better the convergence and diversity of the algorithm. In DF1, the DVR algorithm performs best when the number of perturbations \(nPer=\) 3. Therefore, in order to achieve better convergence and diversity of the algorithm, the number of perturbations nPer is set to 4.

Table 2 MIGD values of DVR on four test instances with nPer is set to different values when \(\tau _{t}=10\), \(k=\) 6, \(nSel=\) 2, \(p=\) 3, \(q=\) 1 -

(3)

Set the number of different subregions k result comparison: the comparisons of different the number of subregions k has been discussed. we consider four problems F1, F5, DF1, and DF2. k is set to 4, 5, 6, 7, 8 and 9, when \(\tau _{t}=\) 10, \(nSel=\) 2, \(nPer=\) 4, respectively. The settings of other parameters are the same as those in Sect. 5.2. Table 3 shows the MIGD values of DVR algorithm on these test functions F1, F5, DF1 and DF2 when the number of subregions k is set with different parameters. Table 3 shows that when \(k=\) 6, the MIGD values of the DVR algorithm run 20 times on the test functions F1, F5 and DF2 are the minimum. The best results in the Table 3 are shown in bold. In DF1, the DVR algorithm performs best when the number of subregions \(k=\) 7. Therefore, in order to achieve better convergence and diversity of the algorithm, the number of different subregions k is set to 6.

Table 3 MIGD values of DVR on four test instances with k is set to different values when \(\tau _{t}=10\), \(nSel=\) 2, \(nPer=4\), \(p=\) 3, \(q=\) 1

5.4 Experimental results and analysis

The DVR is compared to four other state-of-the-art DMOEAs on F and DF test problems. Each algorithm is subjected to 100 environmental changes, with each test instances being executed 20 times separately. Tables 4, 5 and 6 show the statistical values of MHVD, MSP and MIGD indicators for the six algorithms on the 15 test instances, comparing the performance of the six algorithms in terms of convergence, diversity, respectively. The best results in terms of mean and standard deviation are marked in bold. Herein, the experimental results are subjected to the Wilcoxon rank sum test [56] at a significance level of 5%. (+) denotes a significant difference compared with the best result, (\(-\)) indicates no significant difference. The following is a detailed analysis of the experimental findings. The last row of the table indicates the total number of indicators ranked first, second and third.

-

(1)

MHVD performance metrics from Table 4: The MHVD metric is used to evaluate the convergence of the algorithm. As far as the MHVD metric is concerned, DVR obtained the best results in the majority of the test problems, which denotes that DVR has better convergence relative to the solutions implemented by the compared algorithms. Except for F4 and DF3 test instances, DVR algorithm has better MHVD value than other algorithms at \(\tau _{t}=5\), which indicates that DVR can better deal with the drastic changes of the environment. However, when \(\tau _{t}=20\), the effect of DVR is worse than other algorithms except F7 and F10. For DF4 and DF5 test instances with complex PF variations at \(\tau _{t}=5,10\), DVR gets the best MHVD value. Because DVR adopts the method of classifying decision variables and adopts multi-region prediction strategy for convergence-related variables, the convergence of the algorithm is further improved.

Table 4 Statistics of MHVD values of six algorithms -

(2)

MSP performance metrics from Table 5: MSP is used to evaluate the diversity of algorithms in different environments. The DVR obtained the best results on the most of the benchmark instances, indicating a more uniform distribution compared to other algorithms. This is due to the fact that the DVR adopts a diversity maintenance mechanism, which further increases the diversity of the algorithm. For F5-F10 and DF2, the DVR obtains the best distribution regardless of the frequency of environmental changes. For F1, F2, F3 and DF4, the MSP of DVR are the best when \(\tau _{t}=\) 5, but when \(\tau _{t}\) increased to 10 and 20, other algorithms are better than DVR. For F1, MDP is only a little better than DVR when \(\tau _{t}=\) 10,20, which indicates that the distributions of the individuals of two algorithms do not differ much. When compared with PPS, DVR performed worse on DF3 and DF5. For triple objective test instances F4 and F8, the DVR obtained the best results except for \(\tau _{t}=\) 10 on F4.

Table 5 Statistics of MSP values of six algorithms -

(3)

MIGD performance metrics from Table 6: MIGD is used to evaluate the diversity and convergence of the algorithms in different environments. We use MIGD in combination with MSP and MHVD to demonstrate the performance of the algorithm in depth and extensively on test instances. DVR outperforms the other five algorithms in most of the tested problems with MIGD, which is due to the use of different optimization strategies for convergence and diversity decision variables. However, for the F2, DF3 and DF4 test instances, it performs worse than the PPS algorithm. This is mainly because of the reuse of historical solutions by PPS algorithm. For DF4 at \(\tau _{t}=5\) and DF5 \(\tau _{t}=5,10\), the DVR is second only to the PPS algorithm. It can be concluded that DVR is suitable for solving test problems with drastic changes. For F4 and F8 at \(\tau _{t}=5,10\) and F9 at \(\tau _{t}=10,20\), the DVR algorithm is no better than other algorithm. Although the DVR achieves good MHVD values of DF5 at \(\tau _{t}=5\), its MIGD values are slightly higher than the PPS because of its poor performance in the diversity metric MSP. However, DVR achieves the best values at \(\tau _{t}=20\). It shows that for triple objective functions, DVR performs better without drastic environmental changes. For DF1, the MDP performs best regardless of the frequency of environmental changes, with the DVR in second place. Of note is the comparison between the DVR and the MDP, which use different subregional methods, with the DVR losing out to the MDP only on F4 and DF1.

The IGD plots of the six algorithms on the test instances F1, F3, F6, F7, DF2, DF5 at \(\tau _{t}=10,20\) are given in Figs. 7 and 8, respectively. In these six test instances, DVR obtains lower IGD values than the other comparative algorithms in most of the generations, indicating that the solution set produced by the DVR algorithm is closer to the true Pareto front. This is mainly due to the fact that DVR combines decision variable classification, multi-region prediction and diversity introduction strategies, which can lead to better convergence of populations to the Pareto frontier.

The analysis of the above three performance metrics shows the superiority of the proposed algorithm DVR. As shown in Figs. 9, 10, 11 and 12, four test problems F1, DF2, F5 and F7 are chosen to better show the distribution of Pareto solutions over PF generated by the six algorithms at different moments when \(\tau _{t}=30\). As shown in Figs. 9 and 10, the overall distribution of the final PF of the five algorithms for F1 and DF2 at \(t=\) 10, 20, 30, 40, 50. The PF of F1and DF2 do not change with time. For a more intuitive change of the optimal frontier and the true front, the fronts at different moments are shown in the same subplot, and both \(f_{1}\) and \(f_{2}\) take values in the range [0,1]. As shown in Figs. 11 and 12, the PF distributions of the other three test problems F5, and F7 at different moments. The PFs of these test problems change with time. The PF distributions of the solutions of test function F5 at moments t = 11, 15, 25, 32, 49 and test function F7 at moments t = 13, 22, 34, 41, 52 are selected. As can be seen from Figs. 11 and 12, due to the combination of decision variable classification, subregional prediction and diversity maintenance, the DVR algorithm ensures the convergence of the solution set along with good distribution, and the convergence and distribution of the other three algorithms are slightly worse than that of algorithm DVR.

5.5 Further discussion

This section mainly discusses the impact of decision variable relationship strategies, multi-regional prediction methods, and multi-regional diversity maintenance strategies on DVR. To investigate the effect of each component on the experimental results, we modified the DVR into three variants, where variant 1 did not classify the decision variables and all dimensional decision variables were predicted using a multi-regional approach. Variant 2 replaces the multi-regional prediction technique with a single center prediction strategy. Variant 3 does not use a multi-regional diversity maintenance strategy. The three variants are named DVR-V1, DVR-V2, and DVR-V3. MHVD, MSP, and MIGD metrics of the three variants are compared with those of DVR when \(n_{t}=10,\tau _{t}=10\), and the corresponding results are presented in Tables 7, 8 and 9. The best results in the Tables 7, 8 and 9 are shown in bold. The impact of each component is detailed below.

-

(1)

Decision variable classification strategy It is clear that DVR outperforms DVR-V1 on complex test instances. DVR applies different change response strategies to decision variables to increase the population diversity and convergence.

-

(2)

Multi-regional prediction strategy It is easy to see that the DVR outperforms the DVR-V2 on most of the test problems. The reason is that using a multi-region prediction strategy is more accurate than using a single centroid prediction strategy.

-

(3)

Multi-regional diversity maintenance strategy From the three tables, it can be concluded that the diversity maintenance strategy plays a significant role in the performance of the algorithm. The diversity maintenance strategy explores nearby individuals in decision space to enhance the diversity of population.

As can be seen from the three metrics, all three variants do not perform as well as the DVR algorithm in most test instances. It proves that all three strategies are effective and indispensable.

5.6 Running time

The computation time can be used to measure the computational complexity of the algorithm. Table 10 shows the running time of all algorithm test functions F1, F2, F4, F5, DF1 and DF5 to track 100 times of environment changes when \(\tau _{t}=10\). All algorithms run independently under the same configuration conditions. F1, F2, F5, DF1 and DF5 are bi-objective test functions, and F4 is tri-objective test functions. Table 10 shows that the running time of PPS and SVR is shorter than that of other algorithms. However, considering MIGD, MHVD and MSP, the performance of PPS and SVR algorithms is far worse than that of DVR algorithms. The running time of MDP and DVR algorithms is similar. DMOES algorithm takes the longest calculation time, probably because it is time-consuming to re-evaluate population individuals. The classification strategy of decision variables in DVR algorithm increases the computational complexity of the algorithm. The running time of DVR algorithm is medium among the five algorithms and has achieved good convergence and diversity.

5.7 Application of DVR algorithm in rolling load distribution

Dynamic multiobjective optimization algorithms exist in a large number of industrial fields and are applied to many practical problems. For example, rolling load distribution. The author proposes a multiobjective rolling optimization method, which is supported by multiobjective evolutionary algorithm [40]. In the rolling process, the quality of the load distribution scheme directly affects the product quality. In the past engineering calculations, more attention was usually paid to the optimization of the rolling constant speed stage, while the variable speed stage used the linearization of the optimization results of the adjacent constant speed stage. In order to improve the quality and control accuracy of rolled products, DVR algorithm and five comparison algorithms are used to solve the dynamic load distribution problem in the process of rolling speed change.

The continuous rolling device is composed of five stands. The objective function is to establish the comprehensive slip factor, equal power margin and minimum rolling force, the rolling speed is the environmental change factor, and the stand reduction rate, the inlet and outlet tension of each stand are the decision variables.

-

(1)

Objective function establishment

In order to maximize the ability of the motor, equal power margin is selected as the objective function. The expression of the objective function is as follows:

where \(NH_i\) represents the rated power of i rack; \(N_i\) represents the power of rack i.

In order to achieve the goal of energy saving and emission reduction in the rolling process, the minimum rolling energy consumption is selected as the objective function. The expression of the objective function is as follows:

In order to reduce the number of objective functions, five slip factor functions are integrated into one objective function to evaluate the slip of five racks. The expression of the objective function is as follows:

where \(W_i\) represents the slip factor of the ith rack, \(V_1\) represents the evenness of the slip factor; \(\mu _{i}\) is the friction coefficient of the ith rack; \(V_2\) represents the overall situation of the slip factor in all racks; \(T_{fi}\) represents the front tension of the ith rack; \(T_{bi}\) represents the rear tension of the ith rack; \(R_i^{\prime }\) stands for the flattening radius of the working roll of the ith rack; \(\Delta h_i\) is the absolute reduction of the ith rack, \(P_i\) represents the rolling force of the ith stand; a is the weighting coefficient, a = 0.4.

-

(2)

Experimental result

Figure 13 shows the front graph of power margin and comprehensive slip factor. Figure 14 shows the Pareto front of minimum energy consumption and comprehensive slip factor, where the individuals on the Pareto front are nondominated individuals. For ROCA1, the DVR algorithm has good distributivity, but poor convergence. For ROCA2, DVR algorithm has better distribution and convergence than other five algorithms.

6 Conclusion

To quickly respond to environmental changes and better adapt the population to the new environment, a dynamic multiobjective optimization algorithm based on the relationship of decision variables is proposed. The algorithm classifies decision variables into two categories based on the magnitude of their contribution to convergence and diversity, and adopts different evolutionary optimization strategies for different categories of decision variables. The systematic sampling method is used to determine the guide individuals, divide the population individuals into different subregions. By the evolution direction of guide individuals and guiding the evolution of individuals in this subregion, the convergence and distribution diversity of Pareto front are ensured. Based on the comparison experiment of 15 test instances with four state-of-the-art algorithms in different environments, the results reveal that the proposed DVR algorithm can better track the changing PF or PS with good distributivity when the environment changes.

However, the algorithm adopts perturbation strategy, which wastes computing resources and leads to slow computing speed. Therefore, it is required to devise appropriate methods to speed up the calculation. We will be devoted to using DVR to address the problem of variable number of objectives or many objective. In addition, we will use DVR to solve practical applications.

Data availability

All data generated or analyzed during this study are included in this published article (and its supplementary information files).

References

Huang W, Zhang W (2022) Multi-objective optimization based on an adaptive competitive swarm optimizer. Inf Sci 583:266–287

Gao W, Wang Y, Liu L, Huang L (2021) A gradient-based search method for multi-objective optimization problems. Inf Sci 578:129–146

Luo S, Zhang L, Fan Y (2021) Dynamic multi-objective scheduling for flexible job shop by deep reinforcement learning. Comput Ind Eng 159:107489

Han Y, Gong D, Jin Y, Pan Q (2019) Evolutionary multiobjective blocking lot-streaming flow shop scheduling with machine breakdowns. IEEE Trans Cybern 49(1):184–197

Jiang M, Huang Z, Qiu L, Huang W, Yen GG (2018) Transfer learning-based dynamic multiobjective optimization algorithms. IEEE Trans Evolut Comput 22(4):501–514

Hu Z, Yang J, Cui H, Wei L, Fan R (2019) MOEA3D: a MOEA based on dominance and decomposition with probability distribution model. Soft Comput 23(4):1219–1237

Morris GM, Goodsell DS, Halliday RS, Huey R, Hart WE, Belew RK, Olson AJ (2015) Automated docking using a Lamarckian genetic algorithm and an empirical binding free energy function. J Comput Chem 19(14):1639–1662

Rainer S, Kenneth P (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(5):341–359

Venter G, Sobieszczanski-Sobieski J (2002) Particle swarm optimization. AIAA J 41:1942–1948

Dorigo M, Blum C (2005) Ant Colony Optimization Theory: A Survey. Theoretical Computer Science 344:243–278

Rao RV, Savsani VJ, Vakharia DP (2011) Teaching-learning-based optimization: a novel method for constrained mechanical design optimization problems. Butterworth-Heinemann 43(3):303–315

Lee KS, Geem ZW (2005) A new meta-heuristic algorithm for continuous engineering optimization: harmony search theory and practice. Comput Methods Appl Mech Eng 194(36):3902–3933

Kirkpatrick S (1984) Optimization by simulated annealing: quantitative studies. J Stat Phys 34(5): 975–986

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179(13):2232–2248

Wang C, Yen GG, Jiang M (2020) A grey prediction-based evolutionary algorithm for dynamic multiobjective optimization. Swarm Evolut Comput 56:100695

Liu X-F, Zhou Y-R, Xue Yu (2020) Cooperative particle swarm optimization with reference-point-based prediction strategy for dynamic multiobjective optimization. Appl Soft Comput 87:105988

Sun H, Cao A, Ziyu H, Li X, Zhao Z (2021) A novel quantile-guided dual prediction strategies for dynamic multi-objective optimization. Inf Sci 579:751–775

Liu Y, Hu Y, Zhu N, Li K, Li M (2021) A decomposition-based multiobjective evolutionary algorithm with weights updated adaptively. Inf Sci 572(6):343–377

Li X, Yang J, Sun H, Ziyu H, Cao A (2021) A dual prediction strategy with inverse model for evolutionary dynamic multiobjective optimization. ISA Trans 117:196–209

Jiang M, Qiu L, Huang Z, Yen GG (2018) Dynamic multi-objective estimation of distribution algorithm based on domain adaptation and nonparametric estimation. Inf Sci 435:203–223

Zheng J, Zhou Y, Zou J, Yang S, Junwei O, Yaru H (2021) A prediction strategy based on decision variable analysis for dynamic multi-objective optimization. Swarm Evolut Comput 60:100786

Zhang X, Tian Y, Cheng R, Jin Y (2018) A decision variable clustering-based evolutionary algorithm for large-scale many-objective optimization. IEEE Trans Evolut Comput 22(1):97–112

Farina M, Deb K, Amato P (2004) Dynamic multiobjective optimization problems: test cases, approximations, and applications. IEEE Trans Evolut Comput 8(5):425–442

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evolut Comput 6(2):182–197

Jain H, Deb K (2014) An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, part II: handling constraints and extending to an adaptive approach. IEEE Trans Evolut Comput 18(4):602–622

Zhang H, Sun JY, Liu TL, Zhang K, Zhang QF (2019) Balancing exploration and exploitation in multiobjective evolutionary optimization. Inf Sci 497:129–148

Cui X, Li M, Fang T (2001) Study of population diversity of multiobjective evolutionary algorithm based on immune and entropy principles. In: Proceedings of the 2001 congress on evolutionary computation (IEEE Cat. No. 01TH8546), vol 2, pp 1316–1321

Liu R, Fan J, Jiao L (2015) Integration of improved predictive model and adaptive differential evolution based dynamic multi-objective evolutionary optimization algorithm. Appl Intell 43(1):192–207

Trojanowski K, Michalewicz Z (2000) Evolutionary optimization in non-stationary environments. J Comput Sci Technol 1(2):93–124

Deb K, Rao NUB, Karthik S (2007) Dynamic multi-objective optimization and decision-making using modified NSGA-II: a case study on hydro-thermal power scheduling. In: International conference on evolutionary multi-criterion optimization. Springer, pp 803–817

Ruan G, Guo Yu, Zheng J, Zou J, Yang S (2017) The effect of diversity maintenance on prediction in dynamic multi-objective optimization. Appl Soft Comput 58:631–647

Coello CA, Pulido GT, Lechuga MS (2004) Handling multiple objectives with particle swarm optimization. IEEE Trans Evolut Computat 8(3):256–279

Helbig M, Engelbrecht AP (2012) Analyses of guide update approaches for vector evaluated particle swarm optimisation on dynamic multi-objective optimisation problems. In: IEEE congress on evolutionary computation. IEEE, pp 1–8

Hu Z, Yang J, Sun H, Wei L, Zhao Z (2017) An improved multi-objective evolutionary algorithm based on environmental and history information. Neurocomputing 222:170–182

Chen R, Li K, Yao X (2018) Dynamic multiobjectives optimization with a changing number of objectives. IEEE Trans Evolut Comput 22(1):157–171

Zhang Q, Li H (2007) MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evolut Comput 11(6):712–731

Hu Z, Wei Z, Sun H, Yang J, Wei L (2021) Optimization of metal rolling control using soft computing approaches: a review. Arch Comput Methods Eng 28(2):405–421

Gee SB, Tan KC, Alippi C (2017) Solving multiobjective optimization problems in unknown dynamic environments: an inverse modeling approach. IEEE Trans Cybern 47(12):4223–4234

Peng Z, Zheng J, Zou J, Liu M (2015) Novel prediction and memory strategies for dynamic multiobjective optimization. Soft Comput 19(9):2633–2653

Hu Z, Wei Z, Ma X, Sun H, Yang JM (2020) Multi-parameter deep-perception and many-objective autonomous-control of rolling schedule on high speed cold tandem mill. ISA Trans 102:193–207

Liu RC, Chen YY, Ma WP, Mu CH, Jiao LC (2014) A novel cooperative coevolutionary dynamic multi-objective optimization algorithm using a new predictive model. Soft Comput 18(10):1913–1929

Zhou A, Jin Y, Zhang Q (2014) A population prediction strategy for evolutionary dynamic multiobjective optimization. IEEE Trans Cybern 44(1):40–53

Rong M, Gong D, Zhang Y, Jin Y, Pedrycz W (2019) Multidirectional prediction approach for dynamic multiobjective optimization problems. IEEE Trans Cybern 49(9):3362–3374

Ma X, Yang J, Sun H, Hu Z, Wei L (2021) Multiregional co-evolutionary algorithm for dynamic multiobjective optimization. Inf Sci 545:1–24

Zou J, Fu LW, Yang SX, Zheng JH, Ruan G, Pei TR, Wang L (2019) An adaptation reference-point-based multiobjective evolutionary algorithm. Inf Sci 488:41–57

Qu BY, Liang JJ, Suganthan PN (2012) Niching particle swarm optimization with local search for multi-modal optimization. Inf Sci 197:131–143

Zhang K, Shen C, Liu X, Yen GG (2020) Multiobjective evolution strategy for dynamic multiobjective optimization. IEEE Trans Evolut Comput 24(5):974–988

Li QY, Zou J, Yang SX, Zheng JH, Ruan G (2019) A predictive strategy based on special points for evolutionary dynamic multi-objective optimization. Soft Comput 23(11):3723–3739

Yan W, Jin Y, Liu X (2015) A directed search strategy for evolutionary dynamic multiobjective optimization. Soft Comput 19(11):3221–3235

Jiang S, Yang S, Yao X, Tan KC, Kaiser M, Krasnogor N (2017) Benchmark problems for cec2018 competition on dynamic multiobjective optimisation. Tech. rep

Chung PS, Jhon MS (2015) Film conformation and dynamic properties of atomistically architectured perfluoropolyethers on the carbon overcoated surfaces. IEEE Trans Magn 51(11):1–4

Helbig M, Engelbrecht A (2015) Benchmark functions for cec special session and competition on dynamic multi-objective optimization. Comput. Sci., Univ. Pretoria, Pretoria, South Africa, Rep, Dept

Muruganantham A, Tan KC, Vadakkepat P (2015) Evolutionary dynamic multiobjective optimization via Kalman filter prediction. IEEE Trans Cybern 46(12):2862–2873

Zhou A, Jin Y, Zhang Q, Sendhoff B, Tsang E (2007) Prediction-based population re-initialization for evolutionary dynamic multi-objective optimization. In: International conference on evolutionary multi-criterion optimization. Springer, pp 832–846

Cao L, Xu L, Goodman ED, Bao C, Zhu S (2019) Evolutionary dynamic multiobjective optimization assisted by a support vector regression predictor. IEEE Trans Evolut Comput 24(2):305–319

Wilcoxon F (1992) Individual comparisons by ranking methods. Springer, pp 196–202

Acknowledgements

This work was supported by Project supported by National Natural Science Foundation of China (Nos. 62003296, 62073276), National Key Research and Development Program of China (2022YFB3705504), the Natural Science Foundation of Hebei (No. F2020203031), Science and Technology Project of Hebei Education Department (No. QN2020225), Provincial Key Laboratory Performance Subsidy Project (22567612H).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hu, Z., Li, Z., Wei, L. et al. A dynamic multiobjective optimization algorithm based on decision variable relationship. Neural Comput & Applic 35, 17749–17775 (2023). https://doi.org/10.1007/s00521-023-08633-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-023-08633-7