Abstract

The simulation-driven metaheuristic algorithms have been successful in solving numerous problems compared to their deterministic counterparts. Despite this advantage, the stochastic nature of such algorithms resulted in a spectrum of solutions by a certain number of trials that may lead to the uncertainty of quality solutions. Therefore, it is of utmost importance to use a correct tool for measuring the performance of the diverse set of metaheuristic algorithms to derive an appropriate judgment on the superiority of the algorithms and also to validate the claims raised by researchers for their specific objectives. The performance of a randomized metaheuristic algorithm can be divided into efficiency and effectiveness measures. The efficiency relates to the algorithm’s speed of finding accurate solutions, convergence, and computation. On the other hand, effectiveness relates to the algorithm’s capability of finding quality solutions. Both scopes are crucial for continuous and discrete problems either in single- or multi-objectives. Each problem type has different formulation and methods of measurement within the scope of efficiency and effectiveness performance. One of the most decisive verdicts for the effectiveness measure is the statistical analysis that depends on the data distribution and appropriate tool for correct judgments.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The family of stochastic search and optimization algorithms has a unique characteristic of randomness, where an algorithm executes different paths towards the best solution by the same input. This attributed the applicability of the algorithms to a wide range of optimization problems. The stochastic algorithms can be further divided into two categories: heuristic and metaheuristic algorithms. Both methods are based on the same concept, which is to find the solution by some kind of guided trial and error (Yang 2010). Heuristics are mostly problem-dependent and for various problems, different heuristics can be defined. A metaheuristic method, on the other hand, makes almost no prior assumption about the problem, can integrate several heuristics inside, and is usually described in terms of a set (commonly known as a population) of candidate solutions to the problem. Thus, metaheuristics can be applied to a wide range of problems that they treat as black-boxes. Some examples of heuristic algorithms are nearest neighbor search and tabu search, whereas some well-known metaheuristic algorithms are Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Cuckoo Search (CS), and Harmony Search (HS) algorithms. The metaheuristic algorithms employ certain trade-offs between randomization and local search. Randomization offers a good alternative for the algorithm to escape from local optima and explore the search on the global scale, whereas the local search mechanism exploits the search towards finer search regions zeroing on an optimum. These properties are simplified in a definition of exploration (diversification of the search over a large search volume) and exploitation (intensification of the search in a smaller volume) mechanisms that favor the metaheuristic algorithms to be suited for most of the global optimization problems. However, due to their stochastic nature, metaheuristic algorithms do not guarantee the best solution (global optima in terms of optimization problems) and every trial may result in a spectrum of approximate or near-optimal solutions. A good metaheuristic algorithm is expected to find an acceptably good quality solution within a given computational budget on a wide spectrum of problems.

The quality of each metaheuristic algorithm is measured with criteria that reflect the exploration and exploitation abilities in finding a global optimum. There are numerous measurements of the algorithmic performance for metaheuristics in diverse optimization scenarios as can be found in the literature. Some pieces of literature such as Rardin and Uzsoy (2018), Ivkovic et al. (2016) and Memari et al. (2017) divided the assessments into two main concepts which are the solution quality and computation speed. Others include robustness as another criterion (Heliodore et al. 2017; Hamacher 2014). Despite these concepts, the performance measure of a metaheuristic algorithm can be generalized into two broad categories: efficiency and effectiveness measures. Several works also generalized the same concepts (Bartz-Beielstein 2005; Yaghini et al. 2011). The efficiency is referred to as the number of resources required by the algorithm to solve any specific problem. Some algorithms are more efficient than others for a specific problem. The efficiency is generally related to time and space, such as speed and the rate of convergence towards a global optimum. As a general example, two algorithms (algorithms A and B) performed equally well in terms of the final solution quality. However, algorithm A has a faster execution time due to a lesser amount of computations involved, compared to algorithm B that has more code segments, maybe with nested looping. Thus, in the perspective of algorithm complexity, algorithm A has a better performance compared to B. On the other hand, the effectiveness is a measure that relates to the solutions returned by an algorithm. The effectiveness reveals the capability of the stochastic algorithm within a given number of trials such as the number of optimal solutions, count of global or local optima, and the comparative statistical analysis to judge the significance of the results. In essence, the existing literature related to the quality of a metaheuristic algorithm performed various analyses to compare and validate their performance within the scope of efficiency and effectiveness. Nonetheless, numerous discussions on review papers related to performance metrics are mostly problem-specific and limited to specific criteria. Some of the review papers are briefly summarized in Table 1.

In addition to the reviews on specific problem type as in Table 1, there also exist several discussions and review papers related to the statistical evaluation on the performance analysis. Each paper specifies the analytical methodology and some of these proposed new approaches in comparison to the alternatives as shown in the following Table 2.

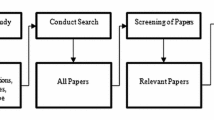

Despite each scope proposed by others, this paper covers and extends the review on important performance measures for each problem type that includes single- and multi-objective as well as continuous and discrete optimization problems. Furthermore, this paper also discusses the scope of applications as summarized in Table 1 and other recent problems discussed in the present literature on single-objective problems concerning the effectiveness and efficiency point of view. For the multi-objective problems, effectiveness and efficiency are described in more general terms. To ensure significant and qualitative metrics for each problem type, comprehensive surveys from reputed venues and well-cited publications are carried out, which include various journal articles, book chapters, and conferences. The reviewed publications have been selected mostly based on their effectiveness in the relevant areas.

This paper is further organized as follows: Sect. 2 discusses the scope of a single objective for continuous and discrete problems. Section 3 discusses the performance measures for multi-objective problems, followed by future challenges of performance measures in Sects. 4 and 5 with the conclusion.

2 Single-objective optimization problems

In essence, the goal of single-objective optimization is to find the best solution that corresponds to either minimum or maximum value of a single objective function. To date, numerous measures on single-objective problems for both continuous and discrete search spaces have been proposed in the literature. This section discusses the efficiency and effectiveness measures for both domains since some of the measures apply to both. Any specific metric related to only continuous or discrete problems is duly remarked in the section or discussed in individual sub-sections. The review for continuous problems is usually related to the constrained and unconstrained (bound-constrained) function optimization. In discrete domain, most of the algorithm comparisons are applied for the combinatorial problems that include such as the Assignment Problem (AP), Quadratic Assignment Problem (QAP), Travelling Salesman Problem (TSP), Travelling Thief Problem (TTP) (Bonyandi et al. 2013), Knapsack Problem (KP), Bin-Packing Problem (BP), Graph Coloring Problem, Scheduling Problem, and Orienteering Problem (OP). The performance measure for these problems may be similar in certain aspects such as the time measurement and convergence. Other metrics of relative performance may differ due to the nature of the objective function of each problem.

2.1 Efficiency measure

The efficiency measure is related to the algorithm’s response towards finding the optimal solution. It is heavily related to the computational speed, rate of convergence, and the time to find accepted optimal solutions. Rardin and Uzsoy (2018) highlighted that this criterion has attracted more attention in the literature than the quality of the solution. This has also driven more concerns in the field of parallel metaheuristic as the technology of parallel computing advanced (Nesmachnow 2014). The typical measurement of algorithmic efficiency is the graph representation of fitness convergence. Some other methods being used in the literature include the convergence rate (Senning 2015; Dhivyaprabha et al. 2018; Hamacher 2007; Paul et al. 2015; He 2016), algorithm complexity (Aspnes 2017) and several statistical measurements, see for example, from Chiarandini et al. (2007), Hoos (1998), Ribeiro et al. (2009), Hansen et al. (2016) and Derrac et al. (2014). In what follows, we discuss some basic measures related to the efficiency of the metaheuristic algorithms.

2.1.1 Rate of convergence

The rate of convergence is a measure of how fast the algorithm converges towards optimum per iteration or sequence. Some of the theoretical studies on convergence of the stochastic algorithms are based on the Markov chain process (Yang 2011) by estimating the eigenvalues of the state transition matrix or specifically the second largest eigenvalues of the matrix. Nonetheless, this method is complicated and difficult to estimate (Ming et al. 2006; Suzuki 1995). There is another method of evaluating the convergence, which is using an iterative function. In general, the function is equivalent to the rate of fitness change as expressed below (Senning 2015; Dhivyaprabha et al. 2018):

with \(f_{opt}\) as the optimum fitness value, \(f_{i}\) as the best value at ith iteration or step of function evaluation, and \(f_{i - 1}\) as the best value at previous ith iteration or step of function evaluation. Equation (1) is a basic representation of the convergence rate. The convergence rate is applicable for both continuous and discrete domains. Based on the review from numerous literature, there are mainly four representations of convergence pattern for test function optimization as summarized in Table 3.

The first metric is the fitness convergence that refers to the dynamic change of fitness value concerning the steps. The convergence is usually represented as a graph showing the variation of fitness/cost value with either time unit, the number of iterations, or function evaluations (denoted as FEVs). This method shows straightforward information on the algorithm performance and most literature added this as one of their performance indicators. Nonetheless, it is limited to the scale of a feasible solution to the problem, such as towards 0 for \(f_{opt} = 0\), or towards − 1 for \(f_{opt} = - 1\). An example of fitness convergence is in Fig. 1. For an unknown optimum value, which is usually true for practical cases, the convergence rate is characterized by the ratios of consecutive errors (Senning 2015) as follows:

with \(f_{i + 1} ,f_{i} ,\) and \(f_{i - 1}\) as the fitness of the next, current, and previous ith iteration. The second measure is the convergence progressive rate that is equivalent to the absolute difference \(\left| {f_{i} - f_{opt} } \right|\) as implemented by Liu et al. (2017a). Measurement without known \(f_{opt}\) can be expressed with \(\left| {f_{i} - f_{i - 1} } \right|\) that represents the relative change of error with respect to the iterations or FEVs. This method is suitable for dynamic optimization problems as the objective changes over time. An example of such an application is in De Sousa Santos et al. (2019) that used this metric as a convergence in the stopping criterion. The third measure is the logarithmic convergence rate (Salomon 1998; Mortazavi et al. 2019) that is defined by \(log\left| {f_{i} - f_{opt} } \right|\). The logarithmic convergence measures the dynamic fitness change throughout the iteration (He and Lin 2016). The curves of absolute and logarithmic convergence are depicted in Fig. 1. The figure compares convergence rate for Accelerated Particle Swarm Optimization (APSO) (Yang et al. 2011), Crow Search Algorithm (CSA) (Askarzadeh 2016), and Gravitational Search Algorithm (GSA) (Rashedi et al. 2009). As observed in the figure, the logarithmic convergence magnifies the absolute convergence pattern. As observed for CSA and GSA, both algorithms converged towards an optimum solution before 30th FEVs. However, the logarithmic convergence magnifies the pattern and reveals that GSA converged to its optimum solution on 86th FEVs, whereas CSA is still fine-tuning its convergence in 100th FEVs. The logarithmic convergence is also proposed by IEEE CEC (Liang et al. 2006) as a guideline for optimization algorithm competition by using the run-length distribution of \(\log \left( {f\left( x \right) - f_{opt} } \right)\) concerning FEVs. For an unknown global optimum, the global optimum \(f_{opt}\) is replaced with the best of the run error (Das 2018).

The fourth type of convergence representation is the average convergence rate that specifically measures the rate of fitness change as proposed by He and Lin (2016). The formulation is a geometric rate of fitness change between sequences of \(f_{i}\) to \(f_{opt}\) as defined in Eq. (3):

where \(f_{0}\) and \(f_{1}\) is the initial fitness and fitness by i = 1 respectively. The convergence rate \(R\left( i \right) = 1\) if \(f_{i} = f_{opt}\). This method was also implemented by Dhivyaprabha et al. (2018). An important point needs to be highlighted considering the observations in some papers related to convergence curve comparison. Usually, the convergence curves of a specific problem with n algorithms are compared and summarized in one chart. However, if the experiment is carried out with a huge number of FEVs or iterations, the presentation may look tedious and difficult to interpret. For this reason, a representable chart with a shorter number of evaluations or a zoomed version of the convergence curve is more appropriate. The objective is to compare and deduce the convergence trend of each algorithm. This shorter version chart can be attached at the side of the convergence with full FEVs. This applies to both continuous (such as constrained or unconstrained) and discrete such as combinatorial problems. An example of such a problem is shown in Fig. 2. The figure depicts the optimization comparison of 6 algorithms on berlin52 TSP problem as demonstrated by Halim and Ismail (2019). As observed in the figure, the highest convergence rate for all of the algorithms is in the first 500–700 FEVs, even though the experiments were run for 10,000 FEVs. Thus, the essential part to indicate which algorithms react faster towards a better solution is observed in this initial period. The latter period (say from 1000 to 10,000 FEVs) is for long term response of the algorithm and it is more appropriate to be represented in a tabulated solution. Some other examples are from Kiliç and Yüzgeç (2019) and Jalili et al. (2016) that superimposed the convergence curve of a shorter number of iterations into the original curve. Some examples of convergence curve with long FEVs that may need to be improved are from Degertekin et al. (2016) as well as El-Ghandour and Elbeltagi (2018).

Convergence curve of TSP (Halim and Ismail 2019).

The graphical representation of convergence is equivalent to the fitness rate of the algorithm and it is problem-dependent. In TSP, fitness is usually demonstrated by distance traveled over time that decreased towards an optimum solution. However, in TTP, the profit is increased towards the optimum solution. In Bin-packing problems, a graphical correlation between algorithms for initial and final solutions related to the number of items required (either in percentage or instance categories) with respect to the number of bins is one of the fruitful comparisons for relative efficiency, which is presented partly in Santos et al. (2019). Such graphical comparison can describe the relative performance of each algorithm (Grange et al. 2018) and is used for numerous practical Bin-packing problems such as the optimization of the last-mile distribution of nano-stores in large cities (Santos et al. 2019) and e-commerce logistic cost (Zhang et al. 2018).

Further enhancement of convergence rate comparison in continuous problems is using relative error (Hamacher 2007). The similar formulation is defined with a different name such as the index of error rate (Ray et al. 2007; Paul et al. 2015), RAE (He 2016) and the relative difference between best values as demonstrated by Agrawal and Kaur (2016) and Kaur and Murugappan (2008). The formulation at the ith generation is defined as follows.

with \(F_{i }\) being the fitness value of ith generation or FEVs and \(f_{opt}\) as the optimum solution. The values from RAE pretty much exhibit a similar trend with the absolute convergence rate as shown in Fig. 1. Another convergence related index is the percentage of average convergence (Paul et al. 2015) as follows:

with \(\bar{f}_{x}\) as the average fitness. The index is used to measure the quality of the initial population since a good average convergence of the initial population increases the convergence speed with better exploration (Kaur and Murugappan 2008).

Apart from the convergence curve, other problem-related convergence measures were also proposed for combinatorial problems such as for TTP and TOP (Thief Orienteering Problem): the convergence of an algorithm A over algorithm B can be formulated by a fraction of average value found by algorithm A to the best solution found by either algorithm as shown in Santos and Chagas (2018) as follows:

with \(\bar{A}\) as the average objective value of algorithm A, \(A_{best}\) and \(B_{best}\) as the best solution found by both the algorithms respectively. This average convergence can be categorized by the different number of items and item relation types from the knapsack problem. To compare the convergence of algorithms by different problem types, an approximate of convergence relation between current best generation (or FEVs, or iteration) with the total number of generation calculated as follows:

with \({\text{C}}_{\text{G}}\) as the current generation that corresponds to the best solution of an algorithm and \({\text{T}}_{\text{G}}\) as the total number of generations. Hence, lower \(C_{\text{relation}}\) reflects a faster convergence of the algorithm. An example of implementation can be found in Zhou et al. (2019). A similar method can be adopted concerning the number of dimensions. A trend of convergence behavior can be observed by plotting the number of \(succesful \;evaluation/dimension\) over the number of dimensions. A higher slope corresponds to faster convergence by each dimension (Nishida et al. 2018). Another metric related to the convergence in design optimization is proposed by Mora-Melia et al. (2015). The metric is a combination of two formulations of rates defined as follows:

where \(\upeta_{\text{quality}}\) is the effectiveness of success rate equivalent to the fraction of successful run over the total run represented as \(\upeta_{\text{quality}} = \left( {{\text{Successful run}}/{\text{total run}}} \right)\). The second term, \(\upeta_{\text{convergence}}\) is equivalent to the speed of convergence that is either time or number of FEVs to compute the final solution. An example of the application of this metric is observed in El-Ghandour and Elbeltagi (2018) that also used for the water distribution network problem, relatively similar problem application as Mora-Melia et al. (2015). This metric seems to be universal and can be applied to other problem types.

The convergence measure for the dynamic environment has different formulation due to the change of objective functions and constraints over time, which also reflects most of the real-world problems. These changes influence the optimization process throughout the measured time (Mavrovouniotis et al. 2017). There are several convergence measures proposed in the literature such as offline error (Yang and Li 2010), modified offline error and modified offline performance (Branke 2002), staged accuracy, and adaptability (Trojanowski and Michalewicz 1999). The offline error measures the average of differences between the best solution by an algorithm before the environment change and optimum value after the change defined as follows:

with \(f_{k}\) as the best solution found by the algorithm before the kth environmental change, \(h_{k}\) as the changed optimum value at the kth environment and K as the total number of environments. Examples of applications on this measure can be found in Zhang et al. (2020). The staged accuracy measures the difference between the current best of the population ‘before change ‘generation and the optimum value and averaged over the entire run, whereas the adaptability measure the difference between the current best individual of each generation and the optimum averaged value throughout the run. Both formulations are shown in (10) and (11) respectively.

where K is the number of changes, \(G_{k}\) is the number of generations within stage k, \(f_{opt}^{{\left( {G_{k} } \right)}}\) is the optimum value of each change or stage, and \(f_{i}^{{\left( {G_{k} } \right)}}\) is the current best individual value in the population of ith generation. Other distinguished measures for dynamic environments are the Recovery Rate (RR) and the Absolute Recover Rate (ARR) proposed by Nguyen and Yao (2012). RR is denoted as a response speed for an algorithm to recover from an environmental change towards converging to a new solution before the next change occurs as in Eq. (12):

where \(f_{best} \left( {i,j} \right)\) as the fitness of the best feasible solution since the last change discovered by the algorithm until the jth generation of change period i, m is the number of changes and \(p\left( i \right),i = 1:m\) as the number of generations by every change of period i. RR =1 if the algorithm able to recover and converges towards a solution immediately after the change. On the other hand, RR =0 if the algorithm is unable to recover after the environment change. Another measure, ARR is used to analyze the response speed of an algorithm to converge towards global optimum as in Eq. (13):

with \(f_{global}\) as the global optimum of the respective problem. The rating of ARR is similar to RR whereas ARR = 1 if the algorithm able to recover and converges towards the global optimum. Both RR and ARR can be further described in a graphical representation (RR–ARR diagram in Fig. 3) to understand the convergence/recovery behavior of the algorithm as illustrated by Nguyen and Yao (2012). The RR–ARR diagram consists of a diagonal line and points of RR/ARR scores by each algorithm. The score is represented in x and y coordination of the point below the diagonal line. Each point’s position corresponds to the characteristics of each algorithm. If the point lies on the diagonal line (such as algorithm A), it can recover from dynamic change and converged to a new global optimum. For other algorithms, such as algorithm B that lies closer to diagonal in more right side shows characteristics of faster recovery towards global optimum, whereas algorithm C is more likely converged to the local optimum with faster recovery and algorithm D is more likely not converged yet to an optimum solution with slower recovery (Nguyen and Yao 2012). In most cases, the RR–ARR measures can be used together to indicate if an algorithm can converge to the global optimum within a defined time frame between changes and also reveal how fast the algorithm requires to converge (Yang and Yao 2013).

An example of the RR–ARR diagram (Nguyen and Yao 2012)

2.1.2 Diversity

Another measure related to the convergence behavior of an algorithm is the diversity. Diversity is referred to as a distribution of the algorithm’s population in the search space and it reflects the information of exploration and exploitation throughout the iteration. Higher diversity shows the ability of exploration, whereas the lower diversity of the population indicates an exploitative tendency of the search. Therefore, diversity measure affects the convergence behavior of the algorithm significantly and a good diversity will avoid premature convergence. The diversity of a population-based algorithm is heavily related to the speed, thus it is an efficiency-related measure. To date, numerous diversity measures are introduced in the literature. Cheng et al. (2014) introduced dimension-wise population diversity as formulated in Eq. (14):

with D being the total number of dimensions and \(Div_{j}\) as the solution diversity based on the \(L_{1}\) norm for the jth dimension. Other measurements are the diversity of population-entropy (Yu et al. 2005), diversity of average distance around swarm center (Krink et al. 2002), diversity of normalized average-distance around swarm center (Tang et al. 2015), and the diversity based on average of the average distance around the particles in the swarm (Olorunda and Engelbrecht 2008). This method extends the concept of the distance around the swarm center, where each swarm particle is denoted as a center and the average over all these distances is calculated. The diversity measure based on entropy (Yu et al. 2005; Tang et al. 2015) divides the search space into Q areas of equal size with Zi search agents in a population of size N. The probability of search agents situated in ith area is then determined by \(Z_{i} /N\). The population-entropy diversity for the continuous optimization problem is then defined as:

In combinatorial problems such as TSP, the diversity measure based on entropy is formulated based on the number of edges, thus defined as the edge entropy. The edge entropy of a population is measured as (Tsai et al. 2003):

where \(X = E\left( {s_{1} } \right) \cup E\left( {s_{2} } \right) \cup \ldots \cup E\left( {s_{n} } \right)\) is the series of edges, \(F\left( e \right)\) is the number of edges, e is the current edge and N is the population size. Higher edge entropy implies higher population diversity. Another related measure is the edge similarity of a population is defined as (Tsai et al. 2003):

with \(T_{i,j}\) as the number of edges shared by pairs \(\left( {s_{i} ,s_{j} } \right)\). Both equations imply a diverse population by larger edge entropy and low edge similarity. Both equations can be represented with a curve versus the number of generations or iterations and plotted with the compared algorithms. A faster decrease of edge entropy from high value denotes a faster reduction of diversity towards the solution. Several examples of the metric application can be observed in Tsai et al. (2004) and Nagata (2006).

Another diversity measures for continuous problems highlights the diversity of search particles around the swarm center. The measure is based on the average distance around the swarm center (Krink et al. 2002) is shown as follows:

where S is the swarm, \(\left| S \right|\) is the swarm size, D is the dimensionality of the problem, \(p_{ij}\) is the jth value of the ith particle and \(\bar{p}_{j}\) is the jth value of the average point of particles \(\bar{p}\). A lower \(D_{Swarm\;center} \left( S \right)\) value shows convergence around the swarm center, whereas higher values indicate a higher dispersion of search agents away from the center.

Another concept similar to above was defined by Riget and Vesterstrøm (2002), but with further normalization concerning the swarm diameter, L. The formulation of the normalized average distance around the swarm center is shown in Eq. (19). This method is used in most of the literature such as by Mortazavi et al. (2019) and Aziz et al. (2014). Apart from swarm diameter, this normalization method can also be replaced with a swarm radius.

with \(p_{ij}\) as the jth value of the ith search agent and the dth value of the average point \(\bar{p}_{d}\) of all search agents. The value \(\bar{p}_{d}\) also denotes the center of the swarm in the dth dimension. N is the population size and L is the longest diagonal length in the search space or the swarm diameter. A graphical representation of diversity concerning the FEVs can be used for observing the diversity of the search agents during the attraction–repulsion phase as a countermeasure to avoid the premature convergence as depicted in (Riget and Vesterstrøm (2002). As a definition, the swarm diameter is equal to the maximum distance between any two search agents, whereas the swarm radius is defined by the distance between the swarm center and the furthest path of the search agent from the swarm center. The swarm diameter is calculated as follows:

where \(x_{ik}\) and \(x_{jk}\) are the kth dimension of the ith and jth search agent’s position respectively. The swarm radius is defined as shown in Eq. (21) with \(\bar{x}_{k }\) as the average of kth dimension from the swarm center. Both swarm diameter and radius can be used in diversity measures. Large values denoting highly dispersed search agents, whereas lower value showing convergence.

Another concept of diversity is the average of average distances around the swarm center (Olorunda and Engelbrecht 2008) that evaluates the average distance around each search agent in the swarm that is using each particle as a center, then calculating the average overall distances as formulated in Eq. (22):

where the second term inside the bracket denotes the average distance around search agent \(x_{i}\). This method indicates the average dispersion of all search agents in the swarm relative to each agent in the swarm. Another recently introduced diversity measurement is O-diversity (Chi et al. 2012). The method calculates the average distance around the global optimum point O without including the outliers that may affect the accuracy judgments of convergence or divergence at each specific state. The O-diversity is defined as follows:

where \(N_{0}\) is the number of outliers, \(S_{O}\) is the sum of outliers on dimension k and \(O_{k}\) is the optimal point at the kth dimension. The O-diversity \(D_{O}\) is also defined as the population position diversity (Chi et al. 2012). Declining value of \(D_{O}\) indicates better optimization performance as the algorithm reaches the global best value O.

The dynamics of the diversity move of each search agent also has been considered in the literature. These measures defined as swarm coherence (Hendtlass and Randall 2001), which is defined as:

with \(V_{S }\) as the speed of swarm center and \(\bar{V}\) as the average agent’s speed. The swarm coherence is a ratio of swarm center speed concerning the average speed of all agents in the swarm. The speed of the swarm center is defined as in the following equation (Hendtlass 2004).

with \(\vec{V}_{i}\) as the velocity of each agent over N number of swarm and \(x\) is a distance norm such as Euclidean distance. Larger \(S_{C}\) shows a higher center velocity and implies a high percentage of search agents that traverse with the same velocity vector (in the same direction) in high acceleration. Conversely, lower values of swarm center show either a high percentage of the search agents are traversing in the opposite direction or still traversing in the same direction but with a much slower speed. For the average agent’s speed, larger values show that the search agents on average are making large changes compared to current positions, which is also denoted with higher exploration. On the contrary, smaller values of average speed denote that the search agents are wandering around their relative proximity and the neighborhood bests, hence also exploitation. In a perspective of swarm coherence, a lower value shows either the agents are highly dispersed in the search space or they are traversing in relatively small direction. A higher swarm coherence denoting either the swarm is converging or they are traversing in the same resultant direction.

The diversity measure in discrete optimization shows the capability of an algorithm to converge to local optimum at early iterations. An example from Tsai et al. (2014) proposed the diversity measure as an average in a search space for TSP as follows:

where n is the number of cities and \(e_{i,j}^{t}\) as the edge between city i and j. \(e_{i,j}^{t} = 1\) if an edge between both cities exists and \(e_{i,j}^{t} = 0\) otherwise. The value \(\bar{S}^{t}\) represents the average path of all cities in t generations. As an overview comparison, a curve of diversity by different algorithms can be plotted against the number of generations. Usually, the diversity of an effective algorithm increases in the early step of generations, and later become smaller as it converging towards the optimum.

2.1.3 Combinatorial problem-specific metrics

Some of the efficiency metrics related to the combinatorial problems are discussed in this sub-section. The first metric is the sub-optimum solution. The speed of an algorithm to reach an optimal solution can be measured by summing up the sub-solution of optimal results (such as TSP, TTP, or OP). As an instance, \(,\varphi = \left\{ {\varphi_{1} ,\varphi_{2} , \ldots ,\varphi_{n} } \right\}\) are the optimal sub-solution found by an algorithm at a specific generation. Thus, the rate of edge optimum at generation t can be expressed as follows (Tsai et al. 2014):

with n being the number of instances, and \(O_{i,j}^{t}\) as the condition of sub-solution existence; \(O_{i,j}^{t} = 1\) refers to the existence of an optimum sub-solution edge between pairs i and j, whereas \(O_{i,j}^{t} = 0\) is otherwise. The second metric related to the combinatorial problem is tour improvement. The average improvements by an algorithm can be compared with other algorithms with Eq. (28) as follows:

where N is the population size, \(s_{i}\) as the ith individual in the population, \(f_{A}\) as the proposed algorithm and \(f_{B}\) as the benchmarked algorithm. The motivation for this measure is to observe any improvement of the proposed algorithm against a benchmarked algorithm. This comparison can also be made between the older and improved algorithms as used by Tsai et al. (2004). For the Bin-packing problem, a convergence of total cost with the number of bins for compared algorithms can be highlighted as showed by Zhang et al. (2018). Any algorithm that converged faster towards lower cost is considered as more efficient.

The efficiency measure related to the Travelling Thief Problem (TTP): TTP consists of the combination between Travelling Salesman Problem (TSP) and Knapsack Problem (KP) as proposed by Bonyandi et al. (2014). The optimization for TTP need a tradeoff between each sub-problem (TSP and KP) since higher picking item of KP resulted in the higher total value of items, but also resulted in reducing speed and increases the renting rate. The combination of each sub-problem leads to the variation of TTP instances such as TSP related routes and KP-types: uncorrelated, uncorrelated with similar weights, and bounded strongly correlated types. Other TTP instances are item factor (number of items per city in each TSP-KP combination) and renting rate that links between each sub-problem. The performance measures between algorithms can be described graphically by plotting the gain of tour time, f with the total value, g, and the best solution must be compromised between both gains as demonstrated by Bonyandi et al. (2013). Furthermore, the importance of TSP and KP can be dynamically interchanged throughout the process. Reducing the value of the renting rate might reduce the contribution of the TSP component to the final objective value, whereas increasing the renting rate might lead to less significant of the total profit items to the impact of final objective value (Bonyandi et al. 2014).

For Orienteering problem, OP, the significant performance measure is the computation time between nodes or also defined as service time (Li and Hu 2011), the set of visited nodes in a tour, and the score of visiting each node to maximize the fitness as in the following equation:

with \(S_{i}\) as the associate score of node i, and \(x_{ij} = 1\) if the tour visits node j immediately after visiting node i, \(x_{ij} = 0\) otherwise. The algorithms shall visit each prescribed node within a defined maximum time. Thus, an algorithm that fails to score within maximum time is considered an underperformer. A unique measure of the Lin-Kernighan (LK) algorithm for solving TSP is using the search effort. The LK algorithm and its variants consist of edge exchanges in a tour and this procedure consumes more than 98% of the algorithm’s run-time (Smith-Miles et al. 2010). The search effort of the LK-based algorithms is measured based on the count of edge exchange occurs during the search and it is independent of the hardware, compiler, and programming language used (Smith-Miles et al. 2010). Further readings on LK-based algorithms and its efficiency measures can be found in Van Hemert (2005) and Smith-Miles and Van Hemert (2011).

2.1.4 Computation cost

The computation cost of an algorithm is dependent mainly on the number of iterations and population size (Polap et al. 2018). Each algorithm is executed until exceeding the number of iterations or until no improvement is achieved (global optimum solution). Based on Rardin and Uzsoy (2018), computation time is crucial and increased more attention than the solution quality due to different hardware and software technology. Jackson et al. (1991) and Barr et al. (1995) discussed several factors that need to be considered to evaluate the computation time. The time measurement can be captured in mainly three parts of the algorithm execution: time to best-found solution, total computation time, and timing per phase (Barr et al. 1995). The time to best solution is referred to as the computation time required by the algorithm to find the global optimal solution. The total computation time is referred to as the run time of an algorithm until it is terminated due to its stopping criteria. Thus, the time to the best solution may be lesser than the total computation time, and this performance can be easily demonstrated in a classical convergence of fitness versus time curve. The third computation time (the time per phase) measurement is another quality option that is referred to as the timing of each defined phase such as initial population, improved version, the sequence of a collaborative hybrid algorithm, and percentage to the global optimum.

There are several methods proposed in the literature on capturing the runtime of algorithms in continuous and discrete problems. Some literature (Conn et al. 1996; Benson et al. 2000) proposed performance calculation based on the average and cumulative sum over all the problems. However, this may bias the results for small numbers of difficult problems as it may dominate the whole results (Dolan and Moré 2002). Another approach is by comparing the medians and quartiles between solver times (Bongartz et al. 1997). This method is superior to the average method since it did not bias the results, however, the information of trends between one quartile to the next may lead to uncertain assumptions (Dolan and Moré 2002). Some researchers capture the computation time descriptively. An example of such a method is the ratio of computation time to converge towards 5% of the best-found solution (Barr et al. 1995) as formulated in Eq. (30):

Another method of comparing the ratio of computation time with others is by rating the percentage of time consumed by an algorithm to the best runtime (Billups et al. 1997). The rating is categorized by competitive or very competitive. The rating is defined as competitive if the time consumed by the proposed algorithm is \(t \le 2T_{min}\) and very competitive if \(t \le \frac{4}{3}T_{min}\), with \(T_{min}\) as the minimum time obtained among all the algorithms on a specific problem. However the definition of classification limit (competitive or very competitive) is depending on the researcher’s arbitrary option that may have some tightness or looseness in question. Some pieces of literature compare computation cost via a ratio between an unsuccessful and a successful run that concerns on the runtime of the algorithm. The proposed method is defined with average runtime, aRT (Hansen et al. 2012), which is formulated as in the following equation:

with \(n_{u}\) as the number of unsuccessful runs and \(n_{s}\) as the number of successful runs. The runtime did not necessarily refer to the computation time; it can also be correlated with the number of Function Evaluations or FEVs. It is not recommended to use the number of iterations to benchmark the computation steps. The number of FEVs is usually taken as a reliable measure of the computation complexity, provided there is no hidden cost inside the algorithm. The number of iterations for different algorithms may perform different amounts of computation in their inner loops. Furthermore, comparing using CPU time is inappropriate and it is preferable to use the more abstract FEVs for comparing stochastic algorithms (Hellwig and Beyer 2019).

In discrete optimization problems, one of the most commonly used for runtime measure is the first hitting time (FHT) (Nishida et al. 2018) that is defined as the number of function evaluations required by the algorithm to reach the first hit of the optimum solution. Lehre and Witt (2011) defined the hitting time measurement by considering the time required to reach bound of optimum \(\varepsilon > 0\) for which \(\left| {f\left( x \right) - f\left( {x_{opt} } \right)} \right| < \varepsilon\) that denoted as the expected FHT with respect to \(\varepsilon\). The growth of expected FHT is also bounded by a polynomial in \(1/\varepsilon\) and the number of dimensions of the specific problem (Lehre and Witt 2011). The performance of FHT can be plotted against the number of dimensions, thus a comparison of FHT between algorithms with respect to dimensions can be analyzed graphically.

Another useful method of comparing the computation time between algorithms for both continuous and discrete domains is using a Box and Whisker plot as illustrated in Fig. 4. The Box and Whisker plot summarizes important features of the data and helps to demonstrate the comparison among the algorithms. The definition of each section (Fig. 4 right) on the box-whisker plot is summarized in Table 4. The analysis in the scope of computation time with the number of dimensions and variables is also another performance measure for algorithm efficiency. Ideally, the computation time increases with the number of algorithmic variables. Saad et al. (2017) demonstrate the relationship between the computation time in seconds and FEVs with the number of variables in various algorithms. This comparison was able to show which algorithm has a better performance for what number of variables.

During comparison among different algorithms, computing system between each comparison instance must be taken into careful consideration. Precise time measurement for algorithm comparison is crucial. Some literature mentioned the method of capturing the computation time in high resolution as demonstrated by Shukor et al. (2018), Ngamtawee and Wardkein (2014) and Bahameish (2014), where the elapsed time was captured in Nanoseconds using JAVA function code System.nanoTime(). Other literature such as from Pan et al. (2019) measured and presented the time in milliseconds.

2.1.5 Comparing different platforms

The combinatorial NP-hard problems usually consume higher computation cost and directly influence the computer CPU. Thus, it is not straightforward to distinguish the computational time of each method that being compared if it is executed from different computer configurations. Numerous researchers compared their algorithm’s computation with other published papers especially in the Vehicle Routing Problem and Orienteering Problem. The benchmarked comparison is the processor efficiency rate or CPU speed. There are plentiful benchmark programs available, but it is important to keep in mind that the performance of various processors depends on many external factors such as compiler efficiency and type of operation used for measurement. However, it is fairly enough to attach as a reference in the paper if it meant to indicate a rough estimation of the computational speed of different platforms. Some examples of benchmark comparisons are using a million of the floating-point operations per second, Mflop/s (Lo et al. 2010; Rouky et al. 2019). Other study benchmarked CPU speed using a million instructions per second, MIPS (Attiya et al. 2020; Jena et al. 2020). Another example is using System Performance Evaluation Cooperative (later is defined as the Standard Performance Evaluation Corporation), SPEC (Fenet and Solnon 2003; Crainic et al. 2011; Wu et al. 2018). Both MIPS and Mflop/s are relatively easy to understand and measurable. The MIPS metric measures the number of CPU instructions per unit time. It is usually used to compare the speed of different computer systems and to derive the execution time. It is mathematically defined as follows (Dawoud and Peplow 2010):

where CPU clocks denote the total number of clock cycles for program execution. The execution time can be derived with a known value of MIPS as follows: \(Execution \;time = Instruction \;count/\left( {MIPS * 10^{6} } \right)\). In contrast, the Mflops metric measures the floating-point operations per million of execution time or mathematically defined as follows (Dawoud and Peplow 2010).

The metric signifies floating point operations such as addition, subtraction, multiplication, and division that applied to numbers represented by single or double precision. The data are specified in program language using keywords such as float, real or double. It is necessary to keep in mind the drawbacks of these methods. The Mflop/s metric depends on the type of floating-point operations present in the processor and it treats the floating-point operation for addition, subtraction, multiplication, or division equally. Practically, the complexity of floating-point division is much higher and time-consuming than the floating-point addition. Some of the problems for MIPS are its nonlinearity behavior and inconsistent with the correlation of performance (Noergaard 2013). Furthermore, the metric can also be inconsistent even comparing processors of the same architecture due to the possibility of not measuring throughout processor performance for instance I/O or interrupt latency (Dawoud and Peplow 2010). The SPEC method is more rigorous than MIPS and Mflop/s. The SPEC method evaluates the rate metric by measuring the time required to execute each benchmark program on a tested system and normalized the time measured for each program by the required execution time. The normalized values are then averaged based on the geometric mean. Nonetheless, this method has shortcomings such that the geometric mean is not linearly related to the program’s actual execution time and it was shown unreliable metric where a given program executes faster on a lower SPEC rating (Dawoud and Peplow 2010). Another possible benchmark comparison is CoreMark (Ibrahim 2019). The method is mostly used to indicate the processor performance in microcontroller technology and it is more reliable than Mflop/s and MIPS (Embedded 2011). As far as our knowledge goes, there is no literature benchmarked with this method yet.

2.1.6 Algorithm complexity

The efficiency of the algorithm for the CPU time can also be measured by its complexity. The complexity of an algorithm’s performance is related to space and time (Aspnes 2017; Dawoud and Peplow 2010). Space and time complexities quantify the amount of memory and time taken by an algorithm to run as a function of the length of the input. Numerous factors can affect the time and space complexity such as hardware, operating system, processor, compiler software, and many more, which may not be included in the algorithm performance comparison. The main concern of complexity in algorithm performance is how the algorithm is executed. An excellent tool for comparing the asymptotic behavior of algorithms’ complexity is the Big-O notation (Brownlee 2011; Cormen et al. 2001). This method provides a problem independent way of characterizing an algorithms space and time complexity. As an example is a coding of nested-loop as follows.

In the worst case in Fig. 5a, the for loop runs n times, then the counter++ will run for \(0 + 1 + 2 + \cdots + \left( {n - 1} \right) = \frac{{n*\left( {n - 1} \right)}}{2}\). Therefore the time complexity for an asymptotic upper bound of the algorithm will be \({\text{O}}\left( {n^{2} } \right)\) with O denotes as the Big-O-notation. The computation under O-notation will ignore the lower order terms since it is insignificant for larger input. However, in Fig. 5b, the algorithm computes counter++ with \(n + {n \mathord{\left/ {\vphantom {n 2}} \right. \kern-0pt} 2} + {n \mathord{\left/ {\vphantom {n 4}} \right. \kern-0pt} 4} + \cdots + 1 = 2*n\). Thus, the time complexity of the algorithm will be \({\text{O}}\left( n \right)\) since higher order is ‘1’. Therefore the time complexity for the algorithm in Fig. 5b is more efficient. The simplification of the algorithm is not a recent topic and was discussed in numerous works of literature such as (McGeoh 1996) and to date such as from Zhan and Haslbeck (2018), Rakesh and Suganthan (2017). The complexity experiment shall be tested on a predefined function with several trials and the CPU time for each trial. Some standard guidelines of algorithm complexity comparison as presented in Hansen et al. (2012) for Black-Box Optimization Benchmarking (BBOB) and Suganthan et al. (2005), Awad et al. (2016) for the Congress on Evolutionary Computation (IEEE CEC). For BBOB competition, the participants shall run the complexity test on Rosenbrock function with defined dimensions. The setup, coding language, compiler, and computational architecture during experimenting also need to be recorded. For CEC competition (CEC17), the complexity analysis is shown in Fig. 6. The time for executing the program in Fig. 6 is denoted as T0. Then the same procedure is repeated to evaluate time T1 with 200,000 evaluations of the same D dimension of specific test function (Function 18 from CEC17). The procedure is repeated five times with the same function (Function 18 from CEC17) to evaluate time T2. The five values are then averaged to evaluate \(\hat{T}2\). All of the results \(\left( {T0, T1, \hat{T}2} \right)\) are tabulated and these procedures are calculated for 10, 30, and 50 dimensions to observe the algorithm complexity’s relationship with dimension.

The complexity measure is also widely used in discrete optimization. Different kinds of literature define the time complexity according to the expectation of the algorithm’s performance. As an example, Ambati et al. (1991) proposed time complexity of GA-based algorithms with \(O\left( {n\log n} \right)\), whereas Tseng and Yang (2001) shows the time complexity of GA is \(O\left( {n^{2} ml} \right)\) and Tsai et al. (2014) proposed with \(O\left( {nml} \right)\) with n as the number of cities, m as the number of chromosomes, and l as the number of generations.

2.1.7 Statistical analysis

There are several statistical analyses proposed in the literature for measuring the efficiency of algorithms. These are empirical cumulative distribution functions and ordered alternative tests.

2.1.7.1 Empirical cumulative distribution function, ECDF

The performance measure of T run-time from experiments of metaheuristic algorithms on a specific problem can be described by a probability that is equivalent to the cumulative distribution function (Chiarandini et al. 2007; Hoos 1998). The cumulative distribution of sampled data \(\varvec{T}_{{\mathbf{1}}} , \ldots ,\varvec{T}_{\varvec{n}}\) is then characterized by empirical cumulative distribution function (ECDF) that is equivalent to Eq. (34). The distribution is also denoted as a run time distribution.

where n is the number of sampled data and I(·) denotes the indicator function. This general formulation hold for both censored (if a time limit is defined before reaching the optimum solution) and uncensored data. An example of the ECDF curve of the SA algorithm for solving the combinatorial problem is depicted in Fig. 7 (top). The mid-line of ECDF represents the median of the overall solution. The ECDF also can be used to compare between algorithms as shown in Fig. 7 (bottom), where three algorithms (PSO, TPO, and DE) are superimposed in one chart and revealed that DE outperformed the other two algorithms, whereas TPO performed better than PSO due to the probability,\(P_{r} \left( {T_{DE} \le T_{TPO} \le T_{PSO} } \right)\). Some literature benchmarked ECDF as a performance indicator (Ribeiro et al. 2009; Hansen et al. 2016).

2.1.7.2 Ordered alternative test

The ordered alternative test consists of non-parametric multiple tests that assume the null hypothesis of equal trends or medians and the alternative hypothesis of series of treatments of unequal trends or medians. There are three types of ordered alternatives used in metaheuristic performance analysis that include Page test, Terpstra-Jonckheere test, and Match test as simplified in the following subsections.

Method construct order from k treatments on N samples and ranked from the best (with 1) to the worst (with k). The number of k treatments depends on the number of cut-points of N samples. Then the Page L statistic as in Eq. (35) is computed using the sum of ranks. An alternative ranks procedure is applied for algorithms that reached an optimum solution before the end of total cut-points (either algo1 or algo2 or both) (Derrac et al. 2014). Advantage: two graphical instances: (1) convergence in a discrete manner based on the cut-points, (2) deviation of ranks between two algorithms concerning the cut-points.

with \(R_{j}\) as the rank of jth of k measures from N samples. The sum of ranks values \(R_{j}\) will follow an increasing order of measured algorithm convergence. Thus for an increasing order, the null hypothesis will be rejected in favor of the alternative. Example of Page test from (Derrac et al. 2014) that compares two algorithms in 10 cut-points regular intervals on a minimization problem is shown in Fig. 8.

Page test for comparison between algorithms (Derrac et al. 2014)

Method Essentially based on Mann–Whitney U statistic, where U is obtained for each pair of samples and added. For k samples, the U statistic is calculated for each of \(\frac{{k\left( {k - 1} \right)}}{2}\) pairs and ordered. Then the test statistic is computed by summing each U statistic as in Eq. (36). Advantage: powerful alternative hypothesis with ordered medians of either decreasing or increasing pattern.

where U is the Mann–Whitney U statistic for an individual sample with \(j = \left[ {1,2, \ldots ,k} \right]\). For large sample sizes, the null distribution of \(W_{TJ }\) approaches a normal distribution. Thus, calculation of mean \(\left( \mu \right)\) and standard deviation \(\left( \sigma \right)\) is necessary to determine the critical value with \(W_{TJ} \le \mu - z\sigma - 1/2\). An example of metaheuristic algorithms analysis using the TJ test is by Obagbuwa and Adewumi (2014).

-

(c)

Match test (Neave and Worthington 1988)

Method Similar to Page and TJ test but the calculation is based on rank-sums. Determined by the number of matches of ranks with the expected ranks and half of near matches. Null hypothesis similar to other non-parametric methods and alternative hypotheses similar to the TJ test. The test is computed by ranking a row from 1 to k and ties are assigned as average ranks. Each rank is compared with the expected rank, defined as the column index. A match is counted if the rank equals the column index. Every non-match that lies between \(0.5 \le \left| {r_{i} } \right| \le 1.5\) is counted as a near match, with \(r_{i }\) as individual rank. The test statistic is calculated as in Eq. (37):

with \(L_{1 }\) as the number of matches and \(nm\) as the number of near matches. Similar to the TJ test, large sample sizes approach a normal distribution, thus the mean and standard deviation is calculated and the critical value is determined with \(L_{Match} \ge \mu + z\sigma + 1/2\) with z as the upper tail critical value from normal distribution and \(1/2\) as a continuity correction.

2.2 Effectiveness measure

2.2.1 The effectiveness rate of solution

Variants of rate measures to demonstrate the algorithm effectiveness are defined in the literature such as the successful convergence, feasible rate, FEVs by successful runs, success rate, and performance. These methods are clustered as the effectiveness rate of solution since they are based on the same foundation of rate measurement.

2.2.1.1 Successful convergence

In essence, the successful convergence can be presented by calculating either count or percentage of local or global optimum under a defined number of trials and can be represented in tabular or graphical form. The interpretation from this analysis is straightforward, which is to show which algorithm has a higher percentage and frequency of solutions towards the near optimum. This measure applies to both continuous and discrete domains. Some literature defined a threshold for a specific problem and the algorithm is accepted as successful if it converges to a lower or equal to the threshold (minimization); defined as a percentage of success rates (Kiran 2017).

Typical function optimization, especially for the benchmark functions in various CEC competitions like the CEC 2014 (Liang et al. 2013), requires the measures of error value such that \(\left( {f_{i} \left( x \right) - f_{opt} } \right)\) with \(f_{i} \left( x \right)\) as the current solution found by algorithm and \(f_{opt}\) as the optimum solution of the respective test function. Most of the papers presented their absolute results after the end of FEVs or after the maximum function of evaluation is elapsed. The obtained error values are then stored for n runs and the statistical indices (such as mean and standard deviation) are ranked and further analyzed with the statistical inference method. This process is denoted as the static comparison and some examples are in Chen et al. (2010) and Epitropakis et al. (2011). The main disadvantage of this type of static comparison and ranking is due to the fixed computational budget and the ranking might be different if another computational budget is used for the comparison. A superior method is calculated based on several rankings on several cut-points within a defined computational budget, which is also referred to as the dynamic comparison and ranking (Liu et al. 2017b). A good example of such a method applies to the CEC competitions (Liang et al. 2013) that require function values at several cut-points. In the CEC 2014 competition, the computational budget is limited to \(MaxFEV = 10000*D\) with D as the dimension. The dynamic ranking is calculated in 14 cut-points with \(\left( {0.01, 0.02, 0.03, 0.05, 0.1, 0.2, \ldots , 0.9, 1.0} \right)*MaxFEV\) for each run. From this dynamic, the algorithm performance can be identified with respect to the path of the cut-points. This method applies to both continuous and discrete domains.

In various combinatorial problems, the percentage of improvement is usually defined as the rate of differences between the best-known optimum found by algorithms and the global optimum solution. Most of the combinatorial problems are already set with the global optimum value. Examples for most of the TSPs are defined in http://comopt.ifi.uni-heidelberg.de/software/TSPLIB95/tsp/ and for TTPs in https://cs.adelaide.edu.au/~ec/research/combinatorial.php. Thus, the general algorithm effectiveness can be calculated easily by the difference between found solutions for the prescribed objective value as follows:

with \({\text{AE}}\%\) as the percentage of algorithm effectiveness, \(p_{\text{algorithm}}\) as the optimum solutions found by algorithm and \(p_{opt}\) as the prescribed optimum value of the particular problem. It is to highlight that some papers presented the effectiveness such as Zhou et al. (2019) with the deviation of the best solution found by the algorithm concerning the prescribed optimum value:

where \(p_{best}\) as the best solution found by the algorithm. This expression is straightforward, however, it did not represent the true algorithm characteristic as it only shows the best solution found by the algorithm and maybe the algorithm converges in large standard deviation within n number of runs. The absolute calculation of Eq. (39) is not wrong and can be inserted as one of the metrics; however, two other metrics that represent the overall algorithm effectiveness must also be presented. These are the average of converged solutions within n number of trials and the variation of solutions generated by the algorithm. Appropriate representation is by calculation of the difference between the averages of solution within n trials with the optimum result as expressed below:

with \(p_{average}\) as the average of the solutions of the algorithm in n many trials. Some examples of recent papers using such metrics are Ali et al. (2020) and Campuzano et al. (2020). A similar metric is also used for the Crew Scheduling Problems (CrSPs) that measure the percentage gap between solutions found by the algorithm with respect to the best-known solution (García et al. 2018). Most of the literature on combinatorial problems defined the difference between the proposed algorithm and the best value or another algorithm of the same portfolio as the gap percentage. In the TTP problem, the gap percentage can be divided into several categories such as the number of cities, number of picked items, and the ratio between numbers of items concerning the cities as demonstrated by Bonyandi et al. (2014). With these comparisons, more understanding of algorithm performance over each instance can be introduced. The same formulation is also applicable in the QAP problem, where it is defined as the percentage excess that is equivalent to the average of best-known solutions over n number of trials (Merz and Freisleben 2000). The second important metric is the variation or spread of the solutions, which will be discussed in Sect. 2.2.5. Another useful measure for both continuous and discrete problems is the relative effectiveness with respect to the benchmarked solution in either time or distance relation. The formulation is simplified as the following equation:

with p as either distance or computation time and \(p_{A}\) as the solution of the proposed algorithm and \(p_{B }\) as the benchmarked algorithm that has a better performing solution. A positive value of \(\Delta p\) denotes that the benchmarked algorithm is superior to the proposed algorithm. Some examples of literature using this feature are from Skidmore (2006), Tsai et al. (2014) and Silva et al. (2020) for TSP optimization and Wu et al. (2020) for QKP.

2.2.1.2 Asymptotic performance ratio

In combinatorial problems especially for Bin packing problem, such as Balogh et al. (2015) presented an asymptotic performance ratio denoted as the ratio of an optimal number of bins converge by an algorithm to the optimum solution as defined in the following equation:

where L as input number of bins used by algorithm A to pack and \(Opt\left( L \right)\) as the number of bins in an optimal solution. Zehmakan (2015) presented a graphical comparison of this ratio between algorithms over a defined number of problem instances. Thus, a better algorithm shows a lower ratio over the problem instances.

2.2.1.3 Feasible rate

The feasible rate is an independent run that generates at least one feasible solution. This metric applies to both continuous and discrete problems, where it is equivalent to the number of feasible trials divided by the total number of trials as shown in Eq. (43). A higher feasible rate shows that more solutions reached the feasible region of the search space, thus denoting a better performance. This metric is one of the standard procedures for CEC competition (Suganthan et al. 2005; Liang et al. 2006). Other literature defined this term as feasibility probability (Mezura-Montes et al. 2010).

2.2.1.4 Average number of function evaluation for optimality (AFESO)

AFESO is determined based on the average of FEV of each successful trial that reaches close to the neighborhood of \(f\left( {x_{opt} } \right)\) (Das 2018) with the formulation as:

with SuR as the number of successful runs and FEVsi as the function evaluation at the ith run. A lower AFESO has better performance since it denotes a lower average cost required by the algorithms to reach the near-optimum solution.

2.2.1.5 Success rate and performance

A successful run is an independent run with absolute difference between the best solutions \(f\left( x \right)\) and optimum \(f\left( {x_{opt} } \right)\) that less than a defined threshold value. Liang et al. (2006) suggests a success condition with \(f\left( x \right) - f\left( {x_{opt} } \right) \le 0.0001\). The success rate and success performance are defined as in (45) and (46). Note that FEV is the number of function evaluations. Both measures are standard procedure for CEC competition (Suganthan et al. 2005; Liang et al. 2006).

Note that SR is also used in the formulation of an efficiency metric (Mora-Melia et al. 2015) as defined in Eq. (8) that represents the effectiveness rate of SR over FEVs. The SP metric from Eq. (46) can also be evaluated with the combination of the probabilities of convergence with AFESO. The metric estimates the speed and reliability of the algorithm (Mezura-Montes et al. 2010), a lower SP denotes a better combination of speed and consistency, thus reliability of the algorithm as shown in the following equation.

with \(P\) as the probability of convergence, this is calculated by the ratio of the number of successful runs to the total number of runs.

2.2.1.6 Scoring rate of best to worst solution

A relatively similar measure as the success rate is the score of the best concerning the worst converged solution or simply \(best\;solution/worst\;solution\). This metric describes the improvement ratio of the algorithm and generally indicates the coverage of solutions generated by the algorithm in its specific problem space. Such a metric can be usually applied in the design and combinatorial optimization problems in order to compare the potential capability of the algorithm in solving the problem. The algorithm with a higher score is denoted as poorer compared to the lower ratio. Examples such as Adekanmbi and Green (2015) and Lee et al. (2019a) utilize a ratio of best to worst solution as an indicator of algorithm improvement in engineering optimization and water distribution problems. Another example is Santos et al. (2019) in the combinatorial Bin-packing problem that evaluates the ratio between the best and worst solutions of total bins. The average and variation of this ratio may also indicate the effectiveness of the algorithm over the others for n trials of solutions.

Another related quality indicator is the scaled median and scaled average performance as described by Wagner et al. (2018). In this method, the best and worst objective scores are defined as boundaries of solutions interval and the actual scores are mapped within [0, 1] with 1 associated as the highest score. The scaled performance is quite similar to the median plot that normalized within [0, 1]. This metric has a better overview when the comparison is carried out with many algorithms.

2.2.2 Measures of profile

This section discusses several performance metrics based on the profile of an algorithm. There are mainly six performance metrics based on characteristics or profiles proposed in the literature. Each method has an essentially similar perception of indicator but with slight differences.

2.2.2.1 Performance profile (Dolan and Moré 2002)

The performance profile is defined with computational cost \(t_{p,s}\) that obtained in each pair of problems and algorithms. The method illustrates a percentage of problems solved by computation cost that can be referred to as time, FEVs, or other cost-related units. Thus, larger \(t_{p,s}\) reflects the worst performance. Then a performance ratio that is proportional to the computation cost is defined as:

with s as the solver (or algorithm) and S as the set of algorithms. In other words, the performance ratio is identified by dividing the computation time of an algorithm by the minimum computation time returned from all algorithms. Then the performance profile of solver s is defined as:

with \(\left| P \right|\) as the number of elements of the test set P and \(\rho_{s} \left( \tau \right)\) as the portion of time that corresponds to the performance ratio \(r_{p,s}\) of algorithm \(s \in S\) within \(\tau \in {\mathbb{R}}\). Note that \(\rho_{s} \left( \tau \right)\) is the cumulative distribution function of the performance ratio. The best algorithm \(s \in S\) is represented by a higher value of \(\rho_{s} \left( \tau \right)\). The performance profile \(\rho_{s} \left( \tau \right)\) compares different algorithm versus the best algorithm that has the highest \(\rho_{s} \left( \tau \right)\). Some examples of performance profiles can be observed in Beiranvand et al. (2017), Monteiro et al. (2016) and Vaz and Vicente (2009). To derive the second best algorithm, a chart of performance profile needs to be drawn without the first best performer. Some drawbacks are that the criteria for passing and failing of convergence are flexible that may change the profile itself. Furthermore, the performance profile did not provide sufficient information for expensive optimization problems (Moré and Wild 2009). The main advantage of this method is it combines speed and success rate in one graphical form. The main information gained from the performance profiles is to show how the proportion of solved solution increases by increasing of performance ratio. Liu et al. (2017b) improved the profile with the confidence interval by adding the upper and lower bound of the confidence interval in the profile chart to observe the variances generated by the algorithms.

2.2.2.2 Operational characteristic and operational zones (Sergeyev et al. 2017)

The operational zone is a further development of performance profile. The number of successful solutions within several trials can be represented graphically using the operational zone (Sergeyev et al. 2017). The method was originated from the idea of operational characteristic for comparing deterministic algorithms introduced by Grishagin (1978). The operational characteristic is a non-decreasing function that indicates the number of problems solved after each FEV within the total number of trials. The operational zone consists of n operational characteristics performed by the metaheuristic algorithm with n as the total number of trials since a set of n operational characteristics resulted in n patterns or zone of the metaheuristic solutions. The zone is then extracted with upper- and lower bounds, representing the worst- and best case solutions and an average of operational characteristic for all runs can be estimated. The graphical construction of operational characteristics and the operational zone is shown in Fig. 9 (inspired by Sergeyev et al. 2018). The operational zone with average characteristics can be used to compare several algorithms in each chart. The example of operational zones in Fig. 9 consists of n number of trials from an algorithm that shaded between two red curves, these red curves represent the best (upper boundary) and the worst (lower boundary) trial of the measure algorithm. Then an average of the algorithm performance that relates the number of solved problems concerning the number of trials can be estimated as a middle line (represented in the blue curve). The quality of compared metaheuristic algorithms can be compared based on the average line and the size of the shaded area, which represents the total n trials of that particular algorithm. The lower the size denotes the lesser variance of solutions and better reliability of the algorithm. As an example, if the lower bound of algorithm b is higher than the operational zone of algorithm a, then it can be concluded that algorithm b outperformed algorithm a. The measurement of this method also shows no significant difference if the algorithms are executed with a different number of trials (Sergeyev et al. 2018).

2.2.2.3 Data profile (Moré and Wild 2009)

It is a modified version of the performance profile for a comparison of derivative-free algorithms (Moré and Wild 2009). The information from the data profile shows the percentage of problems solved in a given tolerance of τ time within the budget of k FEVs. It is assumed that the numbers of FEVs are increased by a higher number of variables to satisfy the convergence criteria. The data profile is suitable for optimization problems particularly with a high computational burden and is defined as follows:

where \(t_{p,s }\) as the number of FEVs required to satisfy the convergence test, \(n_{p}\) is the number of variables in the problem \(p \in P\), and \(d_{s}\) as the percentage of solved problems in \(k\left( {n_{p} + 1} \right)\) FEVs. The data profile approximates the operational characteristic if the term \(\frac{{t_{p,s} }}{{n_{p} + 1}}\) is replaced by the number of iterations. Some examples of a data profile can be observed in Beiranvand et al. (2017) and Hellwig and Beyer (2019). In a nutshell, the data profile shows how the proportion of solved solution increases by increasing the relative measure of the computational budget. Liu et al. (2017b) improved the data profile with a confidence interval to observe the variances generated by the algorithms.

2.2.2.4 Accuracy profile (Hare and Sagastizábal 2006)

The accuracy profile (Hare and Sagastizábal 2006) is designed for a fixed cost dataset. Fixed-cost is referred as a final optimization error \(f\left( x \right) - f\left( {x_{opt} } \right)\) that is fixed after running the algorithm for a certain period, the number of FEVs, or iterations. The accuracy profile is based on the accuracy measure of fixed-cost datasets as follows:

with \(\gamma_{p,s}\) as the accuracy measure,\(f_{acc}^{p,s} = \log_{10} \left( {f\left( {\bar{x}_{p,s} } \right) - f\left( {x_{p opt} } \right)} \right) - \log_{10} \left( {f\left( {x_{p}^{0} } \right) - f\left( {x_{p opt} } \right)} \right)\), \(\bar{x}_{p,s}\) is the solution obtained by algorithm s on problem p, \(x_{p opt}\) is the optimum solution and \(x_{p}^{0}\) is the initial point of problem p. The term \(f_{acc}^{p,s}\) in the equation above is interpreted as negative since the improvements from starting \(f\left( {x_{p}^{0} } \right)\) towards global optimum shall be decremented. The performance of the accuracy profile is then calculated as in Eq. (52).

The accuracy profile \(R_{s} \left( \tau \right)\) is a proportion of problems that algorithm \(s \in S\) able to solve within an accuracy of \(\tau\) of the best solution. Some examples of an accuracy profile can be observed in Beiranvand et al. (2017).

2.2.2.5 Function profile (Vaz and Vicente 2009)

It is a modified version of the data profile that shows the number of FEVs required to achieve some level of global optimum (Vaz and Vicente 2009). The reason for the modification is due to the characteristic of stochastic algorithms that did not necessarily produce a sequence monotonically decreasing towards best value (Vaz and Vicente 2009). The function profile is formulated as follows:

with \(r_{p,s}\) as the average number of FEVs taken by algorithm s to solve problem p for \(p \in P\) and \(s \in S\). The value of \(r_{p,s}\) is condition-based, \(r_{p,s} = + \infty\) (denote as failure) if the algorithm is unable to find a feasible solution of problem p within a defined relative error ε with \(\left( {f_{p,s} - f_{p,L} } \right)/\left| {f_{p,L} } \right| > \varepsilon\). The value \(f_{p,s}\) is the objective function obtained by algorithm s on problem p and \(f_{p,L}\) represents the best objective function obtained by all algorithms for problem p. The value \(\rho_{s} \left(\Upsilon \right)\) is equal to the function profile of algorithm or solver \(s \in S\) as a fraction of problems where the number of the objective function is lower than \(\Upsilon\).

2.2.2.6 Performance metric based on stochastic dominance (Chicco and Mazza 2019)

This metric is generally defined as the Optimization Performance Indicator based on Stochastic Dominance, OPISD. The method is based on the first-order stochastic dominance of CDF between two algorithms. The first-order stochastic dominance is defined as follows: the solutions obtained by an algorithm A has the first-order stochastic dominance over the solutions obtained by algorithm B if and only if the CDF of any solutions obtained by algorithm B lie on the right side of CDF from solutions of algorithm A as shown in Fig. 10 and expressed mathematically as follows:

with H as the number of solutions from each algorithm and F as the CDF constructed for each algorithm A and B sorted in ascending order of variable y.