Abstract

A spatial lattice model for binary data is constructed from two spatial scales linked through conditional probabilities. A coarse grid of lattice locations is specified, and all remaining locations (which we call the background) capture fine-scale spatial dependence. Binary data on the coarse grid are modelled with an autologistic distribution, conditional on the binary process on the background. The background behaviour is captured through a hidden Gaussian process after a logit transformation on its Bernoulli success probabilities. The likelihood is then the product of the (conditional) autologistic probability distribution and the hidden Gaussian–Bernoulli process. The parameters of the new model come from both spatial scales. A series of simulations illustrates the spatial-dependence properties of the model and likelihood-based methods are used to estimate its parameters. Presence–absence data of corn borers in the roots of corn plants are used to illustrate how the model is fitted.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Binary spatial data are involved in various domains such as economics, social sciences, ecology, image analysis, and epidemiology; see below for references. Considering the spatial framework, one common model for regularly spaced binary data is the auto-logistic model, which belongs to Besag’s auto-models class (Besag 1974); it is a particular case of a Markov Random Field, analogous to a classical logistic model, except that the explanatory variables are replaced by neighbouring values of the process. The auto-logistic model has seen a lot of use in the last 40 years and in various contexts; see, for example, Augustin et al. (1996), He et al. (2003) and Sanderson et al. (2005) in ecology, Gumpertz et al. (1997) in epidemiology, Koutsias (2003) in image analysis, and Jiang et al. (2015) and Moon and Russell (2008) in land-use. The auto-logistic model has been reparameterized by Caragea and Kaiser (2009), which helps with interpretation of the parameters, and this was extended recently to the spatio-temporal case by Wang and Zheng (2013).

In a hierarchical framework, when the data are noisy and missing, a Generalised Linear Model (McCullagh and Nelder 1989; McCulloch et al. 2001) can be implemented with a link appropriate for binary data. The logit link is canonical, but models involving other link functions have been developed; for example, in Marsh et al. (2000) and LeSage et al. (2011), a spatial probit model is applied to problems in agriculture and economics, and Roy et al. (2016) introduce a Bayesian spatial probit model that is more robust against extreme observations. When the spatial variable represents presence/absence of a rare event, Elkink and Calabrese (2015) suggest the quantile function of the Generalised Extreme Value (GEV) distribution as a link function.

In this paper, we focus on binary data on a spatial lattice with two spatial scales. For the sake of simplicity, we assume that the process is observed on a regular lattice, but the model can be extended to irregular lattices; see the discussion in Sect. 6. We specify a coarse regular grid of sites, say at resolution \(\varDelta >1,\) where the fine-scale lattice is at resolution 1. The locations on the coarse grid define what we call the Grid, and all remaining locations on the underlying lattice define what we call the Background.

The models on the Grid and the Background account respectively for large-scale and fine-scale spatial variation. We start with the Background model, which consists of a classical hierarchical logistic model, linked to a hidden Gaussian field \(\varvec{\varepsilon }\); clearly, the local spatial dependence relies on the covariance structure of this hidden field. Then, conditional on the Background observations, we consider an auto-logistic model on the Grid, where the large-scale spatial dependence is expressed via the parameters of the auto-logistic model. Thus, the final model is non-stationary, which allows us to capture spatial dependence at different scales, and it combines a geostatistical model with a Markov random field (MRF) model in a new approach.

Section 2 is devoted to the description of the model; we display its properties and behaviours by varying different values of the parameters in Sect. 3. The results of this section show how we can identify the Grid resolution \(\varDelta .\) We present parameter estimation in Sect. 4, where both geostatistical and MRF parameters are involved. Section 5 contains an application to modelling the occurrence of corn borer larvae in agricultural fields in Iowa, USA. Finally, in Sect. 6 we discuss further aspects of the construction of the model and give our conclusions, followed by a technical appendix.

2 Two-scale spatial modelling

2.1 The Background and the Grid

Let us consider a two-dimensional domain of spatial-process locations \( D\subset {\mathbb {R}}^{2}\); \( D=\{{\mathbf {s}}_{1},{{\mathbf {s}}}_{2}, \ldots ,{{\mathbf {s}}}_{n}\}\) is a finite set of sites, with \({{\mathbf {s}}} _{i}=(s_{i1},s_{i2})^{\text {T}}\) for \(i=1, \ldots ,n,\) and we denote P(D) as its perimeter (or boundary). We are thinking of D as having no missing locations, but the following definition for perimeter covers all cases:

For the sake of simplicity, we assume that D is a fine regular lattice, but the situation can be generalised to irregular lattices, as is discussed in Sect. 6.

Let \({\mathbf {Z=}}(Z({\mathbf {s}}):~{\mathbf {s}}\in D)^{\mathrm {T}}\) be the process on D, taking its values in the state space \(E=\{0,1\}^{n}.\) We consider two scales of spatial dependence, which occur locally at fine-scale resolution 1, and at a coarse-scale resolution \(\varDelta \), where \(\varDelta \in \{2,3, \ldots \}\). Here we assume that \( \varDelta \) is known; in practice, it may be obtained from a preliminary exploratory analysis of the data, or by subject-matter experts. We shall come back to this point in Sect. 5 but, in what follows, \(\varDelta \) is not a parameter of the model.

Let G be a Grid such that the nodes are equally spaced at distance \(\varDelta ; \) in order to avoid edge effects, we position the Grid in the domain, such that for each site \({\mathbf {s}}\in P(G),\) the four sites at distance \(\varDelta \) from \({\mathbf {s}}\) belong to D, see Fig. 1.

More precisely, define \(D^{0}\equiv \{{\mathbf {s}}:\parallel {\mathbf {s}}-\mathbf {u }\parallel \ge \varDelta ~;~{\mathbf {u}}\in P(D)\}\cap D;\) then we define the Grid

All remaining locations outside the Grid define the so-called Background,

For the sake of simplicity, we replace \(B(\varDelta )\) and \(G(\varDelta )\) with B and G, respectively, in all that follows. Thus, the fine-scale variation happens at the scale of the Background, while the large-scale variation happens at the scale of the Grid. Moreover, if \(n_{A}=|A|\) is the cardinality of set A, we have \(n=n_{D}=n_{G}+n_{B}.\)

With these notations established, we write \({\mathbf {Z}}=({\mathbf {Z}}_{G}^ {\mathrm {T}},{\mathbf {Z}}_{B}^{\mathrm {T}})^{\mathrm {T}},\) where \({\mathbf {Z}}_{G}=(Z_{G}( {\mathbf {s}}):~{\mathbf {s}}\in G)^{\mathrm {T}}\), \({\mathbf {Z}}_{B}=(Z_{B}({\mathbf {s}}):~{\mathbf {s}}\in B)^{\mathrm {T}},\) and in general \({\mathbf {Z}}_{A}\) denotes a spatial process on a set \(A\subset D,~\)such that \({\mathbf {Z}}_{A}=(Z_{A}({\mathbf {s}}):~ {\mathbf {s}}\in A)^{\mathrm {T}}.\)

We now turn to spatial-process modelling. We start with the Background in Sect. 2.2, which involves a conditional logistic model for \( {\mathbf {Z}}_{B}\); then, conditional on \({\mathbf {Z}}_{B}\), we define \({\mathbf {Z}} _{G}\) on the Grid in Sect. 2.3.

2.2 Fine-scale process on the Background

We consider a conditional model for binary spatial data on the Background. We model the binary variables using a Bernoulli distribution, where the mean depends on an underlying (and unobserved) spatial process \({\mathbf { \varepsilon }}\). Moreover, we assume conditional independence of the Bernoulli random variables given the hidden process.

Thus, denoting the Background locations as\(\ \{{{\mathbf {s}}_{i}}:~i=1, \ldots ,n_{B} \},~\) for each \({\mathbf {s}}_{i}\in B,\) we write the following independent conditional distributions for \(Z_{B}({\mathbf {s}}_{i})\) given \(\varvec{ \varepsilon }=(\varepsilon ({\mathbf {s}}_{1}), \ldots ,\varepsilon ({\mathbf {s}}_{n}))^{\mathrm {T }}\) as those given by Bernoulli random variables,

where

The hidden process \(\varvec{\varepsilon }\) is assumed to be Gaussian with mean \({\mathbf {0}}\) and spatial covariance matrix \(\Sigma .\) It is possible to incorporate explanatory variables in the mean, but we choose not to do so initially; see the discussion in Sect. 6. That is,

2.3 Coarse-scale process on the Grid

We define the model on the Grid conditional on the Background using a Markov random field (MRF) model with a neighborhood graph on the Grid, which recall has resolution \(\varDelta \). For the sake of simplicity we consider here the four nearest neighbours, but the model can be modified easily to account for extra spatial dependence.

For each site \({\mathbf {s}}\in G,~\)we define the four-nearest-neighbourhood set \(N_{G}({\mathbf {s}})=\{\mathbf {u}\in G:~{\mathbf {u}}={\mathbf {s}}\pm (\varDelta ,0),{\mathbf {s}}\pm (0,\varDelta )\}\), and let \(\langle {\mathbf {u}},\mathbf {v} \rangle _{G}\) denote the edge between \({\mathbf {u}}\) and \({\mathbf {v}}\), where \({\mathbf {u}} \in N_{G}({\mathbf {v}})\). Our conditional model for the Grid values is the spatial auto-logistic model:

where dependence on \({\mathbf {Z}}_{B}\) is captured in \(\alpha _{B}({\mathbf {s}})\) ; see below.

From Besag (1974), we know that these conditional distributions are compatible and the joint distribution is,

where the energy U is given by

and C is the normalising constant, \(\sum _{{\mathbf {Z}}_{G}\in \{0,1 \}^{n_{G}}}\exp U({\mathbf {Z}}_{G};{\mathbf {Z}}_{B}).\) Here, \(\beta \) is the spatial interaction parameter, which we assume to be constant over the Grid; \(\beta <0\) implies competitive behaviour, while \(\beta >0\) implies co-operative behaviour, and \(\beta =0\) corresponds to spatial conditional independence.

In the next paragraphs, we emphasize the role of \({\mathbf {Z}}_{B}\) in (3) and (4). Now, \(\alpha _{B}({\mathbf {s}})\) accounts for the underlying behaviour of the binary process on the Background “around” \({\mathbf {s}}\). We propose the following model:

where \(N_{B}({\mathbf {s}})\equiv \{\mathbf {u=(}u_{1},u_{2})\in B:~|u_{1}-s_{1}|\le \varDelta ,~|u_{2}-s_{2}|\le \varDelta \}\) is the set of the neighbour Background locations. Notice that \(N_{B}({\mathbf {s}})\cap G=\emptyset \) for all \({\mathbf {s}}\in G,\) since \(B\cap G=\emptyset .\)

To understand the role of the parameters, consider the following calibration. States 0 and 1 are equiprobable if \(\alpha _{B}({\mathbf {s}})+ \frac{\beta }{2}=0,~\) whereas \(\alpha _{B}({\mathbf {s}})+\frac{\beta }{2}>0\) favours state 1 and \(\alpha _{B}({\mathbf {s}})+\frac{\beta }{2}<0\) favours state 0. In building the model, we want to have the same type of equilibrium behaviour on the Grid as on the Background: We want equilibrium of states 0 and 1, or prevalence of the same state 0 or 1, on both B and G. For equal numbers of 0 and 1 in \(N_{B}({\mathbf {s}}),\) \( \dfrac{1}{|N_{B}({\mathbf {s}})|}\sum _{\mathbf {u}\in N_{B}({\mathbf {s}})}Z_{B}( \mathbf {u})=\frac{1}{2}\), in which case \(\alpha _{B}({\mathbf {s}})+\frac{\beta }{ 2}=\gamma +\frac{\alpha }{2}+\frac{\beta }{2},\) and hence we will have equilibrium on the Grid if \(\gamma =-\dfrac{\alpha +\beta }{2}.\) When \( \gamma =-\dfrac{\alpha +\beta }{2},\) we can write \(\alpha _{B}({\mathbf {s}} )+\frac{\beta }{2}=\alpha (\frac{1}{|N_{B}({\mathbf {s}})|}\sum _{\mathbf {u}\in N_{B}({\mathbf {s}})}Z_{B}(\mathbf {u})-\frac{1}{2})\), which depends only on \(\alpha .\) If \(\alpha >0\) and if we have a predominance of 1s in \( N_{B}({\mathbf {s}}),\) that is \(\frac{1}{2}<\frac{\sum _{\mathbf {u}\in N_{B}( {\mathbf {s}})}Z_{B}(\mathbf {u})}{|N_{B}({\mathbf {s}})|}<1\), we obtain \( \alpha _{B}({\mathbf {s}})+\frac{\beta }{2}>0,\) which reinforces state 1 on the Grid. The opposite happens if \(\alpha >0\) and \(0<\frac{\sum _{\mathbf {u}\in N_{B}({\mathbf {s}})}Z_{B}(\mathbf {u})}{|N_{B}({\mathbf {s}})|}<\frac{1}{2}.\) Thus, the general behaviour on the Background will be reflected in the Grid.

For the reason given above, we calibrate our model with \(\gamma =-\dfrac{\alpha +\beta }{2}\). Hence, we can re-write (4) as:

where the normalising constant C depends on \(\alpha \) and \(\beta .\) In (6), there are two terms that express the departure of the average in the neighbourhood from the equilibrium value of \(\frac{1}{2}\); one is for the Background and the other is for the Grid. The sum related to the Grid is divided by \(2|N_{G}({\mathbf {s}})|\) because each edge \(\langle \cdot ,\cdot \rangle _G\) is added twice in this expression.

Let [A|B] denote the conditional probability distribution of A given B. Then the model (1) and (2) for \([{\mathbf {Z}}_{B}]\) and the model (6) for \([{\mathbf {Z}}_{G}| {\mathbf {Z}}_{B}]\) defines the two-scale spatial model, \([{\mathbf {Z}}_{G},{\mathbf {Z}}_{B}]\). In Sect. 4, we discuss estimation of the model’s parameters \(\Sigma , \ \alpha \), and \(\beta \). The theoretical properties that would be obtained from \([{\mathbf {Z}}_G|{\mathbf {Z}}_{B}]\) are largely intractable, so in the following section, we illustrate a number of them through simulation.

3 The model’s properties through simulation

In this section, we show through simulations that the two-scale spatial model given by (1), (2), and (6) allows both competitive and co-operative behaviours. Further, we look for possible edge effects, and we examine measures that can better represent the spatial dependence. We introduce the spatial odds ratio, which accounts for dependence better than the spatial correlation when the data are binary.

3.1 Parameter settings

Let D be a square lattice of size \(53\times 53\); we fix \(\varDelta =4,\) and we overlay a \(12\times 12\) Grid G onto D, with an edge region of width \( \varDelta ,\) analogous to the scheme shown in Fig. 1. That is \( G=\{(s_{i1},s_{i2}):i_{1},i_{2}=5,9, \ldots ,49\}.\)

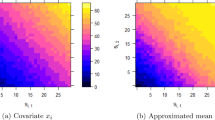

Following the model’s hierarchical description in Sect. 2, we simulated forward as follows: In Step 1, we simulated a Gaussian random field \(\varvec{\varepsilon }\) on the domain D with spatial covariance \( \Sigma ,\) using the the R package RandomFields (Schlather et al. 2015). In Step 2, we simulated independent Bernoulli random variables on the Background. Finally, in Step 3, we used a Gibbs sampler (with 2000 runs) to simulate the auto-logistic model on the Grid.

-

1.

The Gaussian random field \(\varvec{\varepsilon }\) is simulated on D with distribution \(N_{n}(\mathbf {0},\Sigma );\) we choose the exponential covariance function to characterize the spatial covariance matrix \(\Sigma ;\) that is, \(\varSigma =(\varSigma _{ij})\) with \(\varSigma _{ij}=C({\mathbf {s}}_{i}-{\mathbf {s}} _{j})\) and \(C({\mathbf {h}})=\sigma _{\varepsilon }^{2}e^{-||\mathbf {h}||/\theta }, \) for \({\mathbf {h}}\in {\mathbb {R}}^{2}.\) In order to obtain reasonable spatial dependence, we choose \(\theta =5\) and then \(\theta =20\), the latter value ensuring stronger spatial dependence\(.\ \) We set \(\sigma _{\varepsilon }^{2}=1.\)

-

2.

Conditional on the simulated \(\varvec{\varepsilon },\) we simulate independent Bernoulli random variables \(Z_{B}({\mathbf {s}})\) on the Background, with parameters \(p({\mathbf {s}})=\dfrac{e^{\varepsilon ({\mathbf {s}})} }{1+e^{\varepsilon ({\mathbf {s}})}};\) \({\mathbf {s}}\in B.\)

-

3.

For the standard auto-logistic model with constant \(\alpha \) and \(\beta , \) values of \(\beta \) that would give weak spatial dependence, stronger spatial dependence, and very strong spatial dependence would be around 3, 8, and 16, respectively. But for our two-scale model, we note a reinforcement of spatial interaction due to the Background effect, through \(\alpha _{B}( {\mathbf {s}});\) then, a choice of \(\beta =2\) is large enough to obtain strong positive spatial dependence. Similarly, a strong competitive behaviour can be obtained with \(\beta =-2.\) We ran simulations for different values of parameters \(\alpha \) and \(\beta \), both possibly negative and positive. Specifically, we ran simulations for \(\alpha \in \{-6,-5, \ldots ,-1,1, \ldots ,5,6\}\) and \(\beta \in \{-4,-3,-2,-1,1,2,3,4\}\).

Each model was simulated \(L=1600\) times, and we denote \({\mathbf {Z}}^{(l)}\) as the \(l-\)th realization of \({\mathbf {Z=(Z}}_{B}^{\mathrm {T}},{\mathbf {Z}}_{G}^{ \mathrm {T}})^{\mathrm {T}}.\) The properties of our model for \({\mathbf {Z}}\) are obtained by Monte Carlo averaging of \(\{{\mathbf {Z}}^{(l)}:l=1, \ldots ,L\}.\)

3.2 Simulation results

3.2.1 Visualization and edge effects

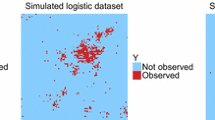

Figure 2 shows one realization of the process with parameters \( \sigma _{\varepsilon }^{2}=1,~\theta =5,~\alpha =2,~\beta =2.\) The whole process gives \({\mathbf {Z}}=\mathbf {(Z}_{B}^{\mathrm {T}},{\mathbf {Z}}_{G}^{\mathrm {T}})^{ \mathrm {T}},\) and Fig. 3 shows the corresponding \({\mathbf {Z}}_{G} \) used in the simulation of \({\mathbf {Z}}\). Looking at the Grid only, it is obvious that there is strong positive spatial dependence, despite the small value of \(\beta =2.\) Clearly, the Grid dependence is strengthened by the Background dependence. The proportion of 1s equals 0.5372 on D, and 0.5000 on the Grid.

In order to study edge effects, we considered the first, middle, and last columns of the lattice, and computed the average values (taken over the \( L=1600\) simulations), \(\dfrac{1}{L}\sum \nolimits _{l=1}^{L}Z^{(l)}(s_{1},s_{2}),\) for \(s_{2}=1,25,53\). Figure 4 plots these average values against \(s_{1}\), and we see similar behaviour whether in the middle or on the edge of the lattice. Similar results were obtained for all sets of parameters (and we would obviously obtain analogous results if we fix \(s_{2}\) and look at the average values functions of \(s_{1})\). Our conclusion is that there is no striking edge effect.

3.2.2 Covariance and spatial odds ratio

The spatial covariance or spatial correlation are the usual measures used to quantify spatial dependence; however, when the state space is \(\{0,1\}\), there is some doubt about the usefulness of the empirical covariance. In fact, when we consider pair-values \((z_{i},z_{j}),\) only (1, 1) contributes to the covariance. For this reason, we introduce a different characterization of spatial dependence that is more appropriate in the binary 0-1 context and incorporates all pair-values. The idea is to adapt the notions of relative risk and odds ratio to a binary spatial setting. Since we obtain very similar results for both measures, we only present here the spatial odds ratio. It is easy to derive and study the spatial relative risk in an analogous way.

For \({\mathbf {s}}\in D,\) the spatial odds ratio (SOR), at location \(\mathbf {s,} \) in the direction \({\mathbf {e}}\) and at distance h is defined by

where \(p_{jk}({\mathbf {s}},h,{\mathbf {e}})\) is the probability of the pair \((Z( {\mathbf {s}})=j,Z({\mathbf {s}}+h{\mathbf {e}})=k),~\) and the vector \({\mathbf {e}}\) defines the direction of interest. The quantity in (7) is a property of the model, which we study here via simulation. Based on the \(L=1600\) simulations, we can define Monte Carlo averages \(\hat{p}_{jk}\) that estimate \(p_{jk}\); these allow us to compute

where \(\hat{p}_{jk}({\mathbf {s}},h,{{\mathbf {e}}}_{i})\equiv \dfrac{1}{L}\sum \nolimits _{l=1}^{L}\varvec{1}_{\left\{ Z^{(l)}({\mathbf {s}})=j,~Z^{(l)}( {\mathbf {s}}+h{{\mathbf {e}}}_{i})=k\right\} }\), and \(\varvec{1}_{A}\) denotes the indicator function for the set A. Here, we shall study (8) in two directions, horizontal and diagonal, meaning that we set directional vectors \({\mathbf {e}}_{1}\) and \({{\mathbf {e}}}_{2}\) with coordinates \({\mathbf {e}} _{1}=(1,0)\) and \({{\mathbf {e}}}_{2}=(1,1).\)

To compare this spatial-dependence property to the spatial covariance \(\text {cov}(Z({\mathbf {s}}),Z({\mathbf {s}}+h{\mathbf {e}}_{i}))\equiv C_{{\mathbf {e}} _{i}}({\mathbf {s}},h)\), we computed it from Monte Carlo averages as follows:

where \(\overline{Z}({\mathbf {s}})=\dfrac{1}{L}\sum _{l=1}^{L}Z^{(l)}({\mathbf {s}} ).\) Then the global covariance and global SOR are spatial averages, respectively given by

and

where \(D_{{\mathbf {e}}_{i},h}=\{\mathbf {s\in }D:{\mathbf {s}}+{\mathbf {e}}_{i}h\in D\},\) for the i-th direction \({\mathbf {e}}_i, \ i =1,2.\)

In the case of a continuous spatial index, the global covariance, \(C_{ {\mathbf {e}}_{i}}(h),\) can be written as \(\int _{D_{{\mathbf {e}}_{i},h}}\text {cov}(Z( {\mathbf {s}}),Z(\mathbf {s+}h{\mathbf {e}}_{i}))d\mathbf {s}{/}\int _{D_{{\mathbf {e}} _{i},h}}d\mathbf {s,}\) and the global SOR, \(SOR_{{\mathbf {e}}_{i}}(h),\) can be written as \(\int _{D_{{\mathbf {e}}_{i},h}}SOR_{{\mathbf {e}}_{i}}(\mathbf {s,}h)d \mathbf {s}{/}\int _{D_{{\mathbf {e}}_{i},h}}d\mathbf {s.}\)

We plotted the values (9) versus \(h,~h=1, \ldots ,5\varDelta =20,~\) for different sets of parameters \((\theta ,\alpha ,\beta ).~\) We summarize the main results in the text below and present some illustrative plots for selective parameter values. These plots represent averages over 1600 simulations, and consequently the curves are smooth; if estimated from a single realization, the curves are much more irregular, and some features such as peaks and bumps used to determine \(\varDelta \) will be much harder to discern (e.g., see Sect. 5).

As expected, the covariance values as well as the SOR values increase with parameter \(\theta ;\) however, more interesting to see is the influence of the Grid. Its effect can be visually detected by the presence of a peak when \( \beta >0\) or a dip when \(\beta <0.\) In intermediate cases, it is hardly observable, or not at all; see for instance the left plot of Fig. 6. An important observation is that, in most cases, \(\varDelta \) is more easily detected from the SOR than from the covariance. Figure 5 displays the plots of the global covariance and SOR for \(\sigma _{\varepsilon }^{2}=1,~\theta =5,~\alpha =4,~\beta =4.\) We can see that both the covariance and SOR are decreasing with h. We can observe peaks in the horizontal direction \( {\mathbf {e}}_{1}\) and almost nothing in the diagonal direction \({\mathbf {e}} _{2};\) this is expected, because our model is simulated using the four nearest neighbours (i.e., no diagonal dependence) and \(\beta >0.\ \)

Furthermore, for the global SOR, we clearly observe a peak at lag \(h=4,\) which is the size of \(\varDelta ,\) and a smaller peak at lag 8; there is fainter evidence of peaks at lags 12 and 16. This periodic pattern is also present in the global covariance, but it is much less obvious. This underlines our recommendation that one use SOR rather than covariance to explore the behaviour of binary data.

Figure 5 demonstrates that peaks can be seen at lags \(\varDelta ,~2\varDelta ,~3\varDelta ,\) and maybe further if \(\alpha \) and \(\beta \) take large positive values. In fact, the presence and magnitude of the peaks is very sensitive to both Grid parameters, \(\alpha \) and \(\beta \). Figure 6 gives three plots of SOR for different values of the Markov-random-field parameters, where we hold \(\theta \) fixed. The plot on the left-hand side shows no apparent bumps or concavities. We observe a bump in the middle, at lag \( h=\varDelta =4,\) but none for higher lags. The plot on the right-hand side shows that when \(\beta \) is negative, we can also obtain a dip.

Finally, we observe that both the covariance and the magnitude of the possible peaks increase with \(\alpha >0,\) as shown in Fig. 7, and this is more obvious for larger values of the exponential parameter \(\theta \). From Fig. 8, we see that the magnitude of the peaks can be increased by increasing \(\beta .\)

We conducted other simulations to study the covariance and SOR values for different sites, belonging to the Grid or to the Background, located in the domain’s centre or in the border region. Our conclusion is that the results seen in Figs. 5, 6, 7 and 8 are essentially repeated for the other sites.

4 Parameter estimation

In this section, we consider the task of obtaining maximum likelihood estimates of the parameters \((\varphi _{\varepsilon },\alpha ,\beta )\), where \( \varphi _{\varepsilon }\equiv (\sigma _{\varepsilon }^{2},\theta )\) are parameters of the Background process \({\mathbf {Z}}_B\). We suppose that \( \varDelta \) is known; in practice, there is information about \(\varDelta \) in the empirical SOR (Sects. 3, 5).

The parameters come from two structures, the Grid and the Background. Because of the conditional manner in which the model is defined, the joint distribution of the process is the product of two terms, corresponding to the distribution on the Grid given the Background, times the distribution on the Background. Taking the logarithm of the likelihood, we see that estimation of the Grid parameters can be obtained separately from estimation of the Background parameters, which is an advantageous feature of our model. Due to the intractable normalising constant, the auto-model parameters \(\alpha \) and \(\beta \) are estimated by maximizing the (conditional on the Background) pseudo-likelihood introduced by Besag (1977). The second term involves the latent Gaussian field’s parameters \(\sigma ^2_\varepsilon \) and \(\theta \); their estimation in a hierarchical statistical model typically requires an EM algorithm; see Dempster et al. (1977) or McLachlan and Krishnan (2008). The E-step needs the expectation of the latent field \(\varvec{\varepsilon }\) given the observations, but we do not know in closed form the distribution over which the expectation is taken. There are several ways to overcome this issue: A common approach is to use Monte Carlo procedures; see for instance Robert and Casella (2004) and Cappé et al. (2005). Here, instead, we use Laplace approximations to approximate the intractable integrals. Recall that the notation [A|B] denotes the conditional probability distribution of A given B.

The likelihood is given by the distribution of \(\mathbf {Z=(Z}_{B}^{\mathrm {T}},{\mathbf {Z}}_{G}^{\mathrm {T}})^{\mathrm {T}}:\)

The first term in (10) is explicit, given by (6):

The normalising constant \(C(\alpha ,\beta )\) is potentially problematic, since it depends on \(\alpha \) and \(\beta \); for example, for the model we considered in the previous section on a \(12\times 12\) Grid, \( C(\alpha ,\beta )\) is given by the summation of \(2^{144}\) terms. Some bypass the problem by approximating C using efficient Monte Carlo methods. In the MRF context, another method is to replace maximizing the likelihood with maximizing the (conditional) pseudo likelihood (Besag 1974, 1977; Cressie 1993; Gaetan and Guyon 2010), which allows fast and easy computation. The pseudo likelihood here would be the product of conditional probabilities, \(\prod \nolimits _{{\mathbf {s}}\in G}\pi _{{\mathbf {s}}}(Z_{G}(\mathbf { s})\mid {\mathbf {Z}}_{B},\alpha ,\beta )\), expressed as a function of \(\alpha \) and \(\beta .\) Of course, maximum pseudo likelihood estimators are less efficient (Besag 1977).

The second term in (10) involves the Gaussian distribution of \( \varvec{\varepsilon }\) and the following conditional distribution of \(\mathbf { Z}_{B}\) given \(\varvec{\varepsilon ,}\)

Now, the underlying spatial process \(\varvec{\varepsilon }\) is not observed, but we can define the complete likelihood based on both \({\mathbf {Z}}\) and the missing data \(\varvec{\varepsilon }\).

Finally then, a quantity we call the pseudo complete log likelihood is given by

where

and

where recall that \([{\mathbf {Z}}_G(\cdot )|{\mathbf {Z}}_B,\alpha ,\beta ]\) and \(\Sigma \) are given in (3) and (2), respectively.

The first term, \(A_{1},\) concerns the estimation of the Grid parameters, while the second term, \(A_{2},\) is devoted to the estimation of the hidden Gaussian field via the observations \({\mathbf {Z}}_{B}\) on the Background \(B.\ \) The second term will be used to obtain an EM estimate of \( \varphi _{\varepsilon }=(\sigma ^2_\varepsilon ,\theta ).\)

4.1 Estimation of the Grid parameters

The goal is to obtain \(\widehat{\alpha }\) and \(\widehat{\beta }\) that maximize \(A_{1}(\alpha ,\beta )=\sum \limits _{{\mathbf {s}}\in G}\log [Z_{G}({\mathbf {s}})\mid {\mathbf {Z}}_{B},\alpha ,\beta ].\) It is straightforward to obtain

where \(V({\mathbf {s}})\equiv \dfrac{\sum _{\mathbf {u}\in N_{B}({\mathbf {s}})}Z_G( \mathbf {u})}{|N_{B}({\mathbf {s}})|}\).

The maximization of this pseudo likelihood is achieved via a standard optimization algorithm and is straightforward to implement.

4.2 Estimation of the Background parameters

4.2.1 The EM algorithm

We want to obtain estimates \(\widehat{\sigma _{\varepsilon }^{2}}\) and \( \widehat{\theta }\) from the relevant component of the pseudo complete log likelihood. Recall that \(\varphi _\varepsilon =(\sigma ^2_\varepsilon ,\theta )\) and

Since \(\varvec{\varepsilon }\) has not been observed, estimation is performed using the EM algorithm; see Dempster et al. (1977) and McLachlan and Krishnan (2008). Here we define

Starting with an initialization \(\hat{\varphi }_{\varepsilon }^{(0)},\) the k-th iteration of the algorithm is achieved in two steps. For \(k=1,2, \ldots ,\)

-

the E-step computes the expectation \(q(\varphi _{\varepsilon },\hat{ \varphi }_{\varepsilon }^{(k-1)})\), and

-

the M-step maximizes \(q(\varphi _{\varepsilon },\hat{\varphi } _{\varepsilon }^{(k-1)})\) with respect to \(\varphi _{\varepsilon };\) that is, \( \hat{\varphi }_{\varepsilon }^{(k)}=\arg \max _{\varphi _{\varepsilon }}q( \varphi _{\varepsilon },\hat{\varphi }_{\varepsilon }^{(k-1)}).\)

In our case, the E-step is problematic, since we do not have a closed-form expression for the conditional distribution of \(\varvec{\varepsilon }\) given the observations \({\mathbf {Z}}.\ \)There are several possible approaches, one being to implement a stochastic EM (SEM) algorithm (e.g., Robert and Casella 2004; McLachlan and Krishnan 2008), where the expectations are evaluated using Monte Carlo integration. The problem with this approach lies in the simulation, where a Metropolis algorithm is typically used to simulate \(\varvec{\varepsilon }. \) Choosing the “right” proposal density (see Chib and Greenberg 1995; Roberts and Rosenthal 2001) can be problematic, and when datasets are large, computations can be very slow.

4.2.2 Laplace approximations in the EM algorithm

We now derive Laplace approximations (LA) to approximate the E-step in (14), which are based on second-order Taylor series expansions of the logarithm of the integrands around their respective modes. This approach gives us a stable estimation procedure.

Write \(A_{2}(\varphi _{\varepsilon })\) more completely as \(A_{2}(\varphi _{\varepsilon };{\mathbf {Z}}_{B},\varvec{\varepsilon })\). Let us denote \(\varvec{ \varepsilon }_{m}\) as the vector maximizing \(A_{2}(\varphi _{\varepsilon }; {\mathbf {Z}}_{B},\varvec{\varepsilon });\) then, a second-order Taylor series expansion for \(A_{2}(\varphi _{\varepsilon };{\mathbf {Z}}_{B},\varvec{\varepsilon })\) around \(\varvec{\varepsilon }_{m}\) yields:

Now, looking at the right-hand side, the second term is zero, so we have the following approximation:

where \(H(\varvec{\varepsilon }_{m})=\dfrac{\partial ^{2}}{\partial \mathbf { \varepsilon }\partial \varvec{\varepsilon }^{\mathrm {T}}}A_{2}(\varphi _{ \varepsilon };{\mathbf {Z}}_{B},\varvec{\varepsilon })\Bigr |_{\varvec{\varepsilon }=\varvec{\varepsilon }_m}\).

Hence, the probability density function, \([\varvec{\varepsilon }\mid {\mathbf {Z}} _{B},\varphi _{\varepsilon }]\), is approximately proportional to \(\exp A_{2}(\varphi _{\varepsilon };{\mathbf {Z}}_{B},\varvec{\varepsilon }_{m})\times \exp \left[ -\frac{1}{2}(\varvec{\varepsilon }-\varvec{\varepsilon }_{m})^{ \mathrm {T}}(-H(\varvec{\varepsilon }_{m}))(\varvec{\varepsilon }-\varvec{ \varepsilon }_{m})\right] ;\) that is, \([\varvec{\varepsilon }\mid {\mathbf {Z}} _{B},\varphi _{\varepsilon }]\) is proportional to a Gaussian density. Moreover, one can evaluate the constant that ensures a probability density, resulting in:

We deduce from (15) that \(E\left[ \varvec{\varepsilon }\mid \mathbf { Z}_{B},\varphi _{\varepsilon }\right] \simeq \varvec{\varepsilon }_{m}\) and \( \text {var}(\varvec{\varepsilon }\mid {\mathbf {Z}}_{B},\varphi _{\varepsilon })\simeq -H( \varvec{\varepsilon }_{m})^{-1}\).

The remaining term in (13), for which we need an approximation of its expectation, is \(E[\ln (1+e^{\varepsilon ({\mathbf {s}})})~|~{\mathbf {Z}} _{B},\varphi _{\varepsilon }].\) We again use a second-order Taylor-series expansion, now of \(\ln (1+e^{\varepsilon ({\mathbf {s}})})\) around \(\varepsilon _{m}({\mathbf {s}}):\)

Taking the expectation, we obtain

where \(A_{\mathbf {ss}}\) denotes the diagonal element of the square matrix A corresponding to the spatial location \({\mathbf {s}}\).

Finally, we obtain the Laplace approximation of the expectation as:

where

Finally, starting with an initialization \(\hat{\varphi }_{\varepsilon }^{(0)}, \) the k-th iteration of the EM algorithm is achieved in the following two steps: at the E-step, we compute the mode, \(\varvec{\varepsilon }_{m}^{(k-1)}, \) of \(A_{2}(\hat{\varphi }_{\varepsilon }^{(k-1)};{\mathbf {Z}}_{B},\varvec{ \varepsilon }),\) the Hessian \(-H(\varvec{\varepsilon }_{m}^{(k-1)}),\) and \( \widetilde{q}(\varphi _{\varepsilon },\hat{\varphi }_{\varepsilon }^{(k-1)}).\) We give the details for the computation of the mode \(\varvec{\varepsilon }_{m}\) and the matrix \(H(\varvec{\varepsilon }_{m})\) in the Appendix.

At the M-step, we maximize \(q(\varphi _{\varepsilon },\hat{\varphi } _{\varepsilon }^{(k-1)})\). This is achieved by a simple minimization of a single variable function as we now demonstrate. Writing the covariance matrix as \( \Sigma (\varphi _{\varepsilon })=\sigma _{\varepsilon }^{2}Q(\theta ),\) we want to minimize:

with respect to \(\sigma _{\varepsilon }^{2}\) and \(\theta .\) The derivative with respect to \(\sigma _{\varepsilon }^{2}\) for a fixed \(\theta \) gives the following explicit solution:

Then the M-step is to minimize, with respect to \(\theta ,\)

4.3 Simulation experiments

We ran \(L=1600\) simulations, as described in Sect. 3 , with the values of the parameters given by \(\sigma _{\varepsilon }^{2}=1,~ \theta =5,~\alpha =2,~\beta =2.\ \)Then estimation is performed on each simulation based on the procedures outlined in Sects. 4.1 and . We present in Table 1 the means and standard deviations of the estimates, obtained from the 1600 simulations.

We observe a negative bias, especially for the Background parameters. This bias may come from the Laplace approximation and might be reduced using higher-order terms. Looking inside the EM algorithm, we observe that, at each iteration, very often the new value of the estimate \( \hat{\theta }^{(k)}\) is less than \(\hat{\theta }^{(k-1)}\). The initial bias for parameter \(\hat{\theta }\) transfers to a bias for \(\widehat{\sigma _{\varepsilon }^{2}}\), since the latter is obtained directly from \(\hat{\theta }\) by (17).

It is worth noticing that the EM procedure is not sensitive to the choice of starting values, and the number of iterations is often less than or equal to 8; when it is larger, we obtain a \(\hat{\theta }\) that is typically highly biased.

Estimation of the Grid parameters is obtained from the 144 observations in \( {\mathbf {Z}}_{G}\), which is not a large number. In spite of this, it is encouraging that our estimates are close to the true values.

We conducted other experiments with the two-scale-model parameters given by \( \sigma _{\varepsilon }^{2}=1,~\theta =20,~\alpha =2,~\beta =2\). The results we obtained were similar but with higher bias for the parameter estimate \(\hat{\theta }\). For lattice size \(53\times 53\), the parameter \(\theta =20\) induces high spatial dependence, and a typical realization on this lattice does not show enough contrast to estimate \(\theta \) accurately. Nevertheless, a model-based spatial prediction using this estimate can still be good.

5 Application to corn borers dataset

An extensive entomological field study of European corn borer larvae was conducted in northwest Iowa (McGuire et al. 1957). The original data are available in a 1954 technical report from the Iowa State Statistical Laboratory (“Uniformity Data from European Corn Borer, Pyrausta nubilalis (Hbn.)”). Lee et al. (2001) selected one dataset from this study, which they published, to examine whether the occurrence of corn borer larvae exhibited spatial-dependence structure. The data come from a (1/3)-acre square plot containing 36 rows, in which seeds had been planted in 36 equally spaced “hills” in each row, at an average rate of 3 seeds per hill. The area was divided into 324 regular subplots, each containing 4 hills. The response variables analyzed were defined as binary variables for the subplots, where the value 0 was obtained if corn borer larvae were absent, and the value 1 was obtained if one or more larvae were present. Now, let \(u_{i}\in \{1, \ldots ,18\}\) denote the E-W position and \( v_{i}\in \{1, \ldots ,18\}\) the N-S position of subplot i in a regular \( 18\times 18\) lattice S; define \({\mathbf {s}}_{i}=(u_{i},v_{i})\) and, for \( i=1, \ldots ,324\),

A figure showing the regular spatial lattice of subplots and observed values \(\{Z({\mathbf {s}}_{i}):i=1, \ldots ,324\}\) is presented in Fig. 9, where black illustrates the value 1, and white illustrates the value 0.

Corn borers data given in Lee et al. (2001)

In their paper, Lee et al. considered four Bernoulli conditional models with possible multi-way dependence. The first and second models are classic auto-logistic models with pairwise-only dependence, respectively associated with the four-nearest-neighbour system and the eight-nearest-neighbour system; the third and fourth models involve cliques of sizes 3 and 4 associated with the eight-nearest-neighbour system. They show that the extra cliques contained in Model 2, due to to the diagonally adjacent pairs of sites, does not bring much additional information to Model 1. Models 3 and 4 allow multi-way dependence and incorporate relative positional information in observed patterns of data. Lee et al. suggest that, for a given number of infested neighbours, the spread of infestation among spatial subplots is higher if infested neighbours occur in all directions rather than in just one direction. Having infested neighbours in all directions implies that biological conditions are favourable for infestation throughout the whole immediate region, as opposed to a situation in which conditions are favourable for infestation in one direction, but not in others.

We apply our model to this dataset; more precisely, we consider a centered Gaussian spatial field, \(\varvec{\varepsilon \sim }N_{n}(\mathbf {0},\Sigma ), \) with \(\Sigma _{ij}=\sigma _{\varepsilon }^{2}\exp (-||i-j||/\theta ),\) and conditionally independent Bernoulli variables on the Background as defined in (1). Then, for a given \(\varDelta ,\) we superimpose the Grid (cell size \(\varDelta )\) on S; we consider a Markov random field \( {\mathbf {Z}}_{G}\) on the Grid with a four-nearest-neighbour system (based on Lee et al.), defined by (4) and (5), and with parameters \(\alpha \) and \(\beta \). We estimate the parameters \( (\sigma _{\varepsilon }^{2},\theta ,\alpha ,\beta )\) using the procedure described in Sect. 4.

The resolution \(\varDelta \) of the Grid is chosen according to a preliminary exploratory step, by inspecting the spatial odds ratio at different lags. A first exploratory approach is to plot the SOR defined in (7) for different lags \(h=1\) to 10, in the directions \({\mathbf {e}}_{1}\) (E-W), \( {\mathbf {e}}_{2}\) (SW-NE), and \({\mathbf {e}}_{3}\) (N-S) with coordinates \(\mathbf { e}_{1}=(1,0)\), \({\mathbf {e}}_{2}=(1,1),\) and \({\mathbf {e}}_{3}=(0,1).\)

Since we have a single dataset, we compute the empirical SOR at lag h in direction \(\mathbf {e,}\) as follows:

where \(\hat{p}_{jk}(h,{\mathbf {e}})=\frac{1}{|D_{{\mathbf {e}},h}|}\sum \limits _{ {\mathbf {s}}\in D_{{\mathbf {e}},h}}\varvec{1}_{\left\{ Z({\mathbf {s}})=j,~Z( \mathbf {s+}h{\mathbf {e}})=k\right\} },\ j,k=0,1,\) and recall that \(D_{{\mathbf {e}},h}=\{ \mathbf {s\in }D:{\mathbf {s}}+{\mathbf {e}}h\in D\}.\)

In Fig. 10, we present the plots of the spatial odds ratios as a function of h in the three directions, \({\mathbf {e}}_{1},~{\mathbf {e}}_{2},\) and \({\mathbf {e}}_{3}\).

Empirical SOR in directions \({\mathbf {e}}_{1}\) (left), \({\mathbf {e}}_{2} \) (middle), and \({\mathbf {e}}_{3}\) (right), for lags \(h=1,\ldots ,10.\) The units of lag in direction \({\mathbf {e}}_{2}\) have to be multiplied by \(\sqrt{ 2}\) to compare to the units of lag in the directions \({\mathbf {e}}_{2}\) and \( {\mathbf {e}}_{3}\)

It is apparent that \(\varDelta _{E-W}=2\) is a good selection for the E-W direction, while we have possible choices of \(\varDelta _{N-S}=2\) and \(\varDelta _{N-S}=4\) in the N-S direction; we also notice a peak in the diagonal direction which likely comes from \(\varDelta _{E-W}=2\) and \(\varDelta _{N-S}=4.\) Based on this exploratory spatial data analysis, we consider two Grids, \(G_{1}\) with lags \(\varDelta _{E-W}=2\) and \( \varDelta _{N-S}=2,~\)and \(G_{2}\) with lags \(\varDelta _{E-W}=2\) and \(\varDelta _{N-S}=4\). Grids \(G_{1}\) and \(G_{2}\) involve, respectively, 64 and 32 sites.

Now we want to specify the location of the Grids \(G_{1}\) and \(G_{2}\); for that purpose, we again use the empirical SOR. We compute \(\widehat{SOR}_{ {\mathbf {e}}_{1}}(\varDelta _{E-W}),\ \widehat{SOR}_{{\mathbf {e}}_{3}}(\varDelta _{N-S})\), and

for different positionings. We specify each location of the Grid by giving the coordinates (in terms of row and column numbers) of its north-west corner. For both Grid \(G_{1}\) and Grid \(G_{2}\), the largest \(\widehat{SOR}_{G_{1}}(\varDelta _{E-W},\varDelta _{N-S})\) and \(\widehat{SOR }_{G_{2}}(\varDelta _{E-W},\varDelta _{N-S})\) values were obtained for positioning the Grid at (2, 2).

For each of the two Grids, we estimated the parameters using the procedure described in Sect. 4. We note that since the sizes of the Grids are small, we could use the true likelihood instead of the pseudo-likelihood for estimation of the Grid parameters, but we chose here to follow the general procedure given in Sect. 4. The results are summarized in Table 2. For each Grid, we give the estimates as well as the value of the log likelihood terms, \(A_{1}(\widehat{\alpha },\widehat{\beta })\) and \( \tilde{q}(\widehat{\varphi }_{\varepsilon }^{(k_f)},\widehat{\varphi } _{\varepsilon }^{(k_f)})\), defined by (12) and (16), where for the latter, \(\widehat{\varphi }_{\varepsilon }^{(k_f)}\) is the value of \(\widehat{\varphi }_{\varepsilon }\) obtained at the final iteration \(k_f\) of the EM algorithm.

First, we can see that strong spatial dependence is estimated for both models. Indeed, the scale parameter \(\theta \) of the spatial covariance is at least 21, which is quite large; and for both Grids, the interaction parameter \( \beta \) indicates strong spatial dependence. However, we can see that spatial dependence is much stronger in the model associated with Grid \(G_1\); indeed, both spatial-interaction parameters \(\theta \) (in the Background)and \(\beta \) (in the Grid) are greater than their values obtained for Grid \(G_2\). Grid \(G_2\) has a larger estimate of \(\alpha \) and a smaller estimate of \(\beta \). Finally, the values of \(\tilde{q}(\widehat{\varphi }_{\varepsilon }^{(k_f)},\widehat{\varphi } _{\varepsilon }^{(k_f)})\) indicate preference for the two-scale model with Grid \(G_{1}.\)

Now, since, Grid \(G_2\) has different scale on the E-W and N-S directions, it is interesting to consider different values for parameter \(\beta \). Thus, we adapt our model and consider the East-West two-nearest neighbourhood and North-South two-nearest neighbourhood, defined respectively by, for each \( {\mathbf {s}}\in G\),

and

We apply the procedure for both Grids \(G_1\) and \(G_2\). The results are summarized in Table 3. Estimates of the Background parameters are not impacted. In both cases (Grids \(G_1\) and \(G_2\)), the estimate value of \( \alpha \) in the anisotropic model is a bit lowered compared to the isotropic model, which goes with the fact that \(\widehat{\beta }_{E-W}+\widehat{\beta } _{N-S}\) is a bit larger than \(\widehat{\beta }\). The log-pseudo-likelihood values \(A_{1}(\widehat{\alpha },\widehat{\beta }_{E-W},\widehat{\beta }_{N-S})\) are quite similar to those obtained for the isotropic model. Finally, we compare \(\widehat{\beta }_{E-W}\) and \(\widehat{\beta }_{N-S}\); we have \( \widehat{\beta }_{E-W}<\widehat{\beta }_{N-S}\) for Grid \(G_1\), and \(\widehat{ \beta }_{E-W}>\widehat{\beta }_{N-S}\) for Grid \(G_2\); this is in accordance with the empirical Spatial Odds Ratio \(\widehat{SOR}_{{\mathbf {e}} _1}(\varDelta _{E-W})\) and \(\widehat{SOR}_{{\mathbf {e}}_3}(\varDelta _{N-S})\), equaling respectively 4.75 and 5.42 for Grid \(G_1\) and 3.17 and 3.00 for Grid \(G_2\).

6 Discussion and conclusions

In this paper, we have developed a hierarchical (in spatial scale) spatial statistical model for binary data. The process model is spatially dependent and is obtained from a hidden Gaussian spatial process. This fine-scale Background dependence is captured through Bernoulli random variables with success probabilities given by the logit transformation of the latent process. Then, conditional on this Background, binary data on a Grid at a coarser resolution is overlaid; the coarse-scale dependence is captured through a binary Markov random field.

Some extensions of our research could be considered. The estimation of parameters given in Sect. 4 requires computation of an inverse covariance matrix, which can be problematic for large data sets. In this case, we could consider modelling \(\varvec{\varepsilon }\) with a Spatial Random Effects (SRE) model, as described by Cressie and Johannesson (2008); see also Kang and Cressie (2011) and Sengupta and Cressie (2013a). Other reduced-rank approaches could also be used (e.g. Wikle and Hooten 2010), or indeed the inverse covariance matrix could be modelled directly (e.g., Lindgren et al. 2011).

The methodology presented here for regular lattices could be generalised to irregular lattices. The Background (representing fine-scale variation) would be irregular, but the Grid (representing coarse-scale variation) is regular and obtained by moving nearest locations on the irregular lattice to the Grid locations. In Cressie and Kornak (2003), it is shown that the effect on spatial variability is small.

Furthermore, covariates could be incorporated in the modelling through the latent Gaussian process as follows: In (1), write

where \(\mathbf {X}(\mathbf {.})\) denotes a p-dimensional vector of known covariates, and \(\mathbf {b}\) is a p-dimensional vector of regression coefficients. This model and the estimation of its parameters is currently under investigation.

In this paper, we considered binary data, although the approach is clearly generalisable to count data or other data arising from a generalised linear model. The binary Markov random field on the superimposed Grid could quite naturally be generalised to a spatial auto-model from the same member of the exponential family that is used to generate the Background.

Simple assumptions on spatial dependence on the Grid were made, namely the four-nearest-neighbour system and pairwise-only dependence. These assumptions can be easily extended to more neighbours and, indeed, the pairwise-only assumption can be relaxed in a manner similar to that given in Lee et al. (2001). This might be particularly useful within the context of image analysis, where higher-order interactions allow for improved image processing (e.g., see Descombes et al. 1995; Tjelmeland and Besag 1998).

7 Appendix

In Sect. 4, we use the EM algorithm where the E-step is to compute the Laplace approximation, \(\widetilde{q}(\varphi _{\varepsilon }, \hat{\varphi }_{\varepsilon }^{(k)}),\) given by (16); its expression depends on the mode \(\varvec{\varepsilon }_{m}\) and on the Hessian \(H(\varvec{\varepsilon }_{m})\) of \(A_{2}(\varphi _{\varepsilon };{\mathbf {Z}}_{B}, \varvec{\varepsilon }),\) computed at the mode. Recall that the process \( \varvec{\varepsilon }\) can be written as a vector, \(\varvec{\varepsilon }^{ \mathrm {T}}=(\varvec{\varepsilon }_{B}^{\mathrm {T}},\varvec{\varepsilon }_{G}^{ \mathrm {T}}),\) with covariance matrix, \(\Sigma =\left( \begin{array}{cc} \Sigma _{B} &{} \quad \Sigma _{BG} \\ \Sigma _{GB} &{} \quad \Sigma _{G} \end{array} \right) .\) We note \(\Sigma ^{-1}=\left( \begin{array}{cc} A &{} \quad C^{\mathrm {T}} \\ C &{} \quad B \end{array} \right) ,\) with \(A=(\Sigma _{B}-\Sigma _{BG}\Sigma _{G}^{-1} \Sigma _{GB})^{-1},~B=(\Sigma _{G}-\Sigma _{GB}\Sigma _{B}^{-1} \Sigma _{BG})^{-1},~\) and \(C=-\Sigma _{G}^{-1}\Sigma _{GB}A=-B\Sigma _{GB} \Sigma _{B}{}^{-1}.\) Then, with respect to this decomposition,

The gradient of this function is given by \(\frac{\partial }{\partial \varvec{ \varepsilon }}A_{2}(\varphi _{\varepsilon };{\mathbf {Z}}_{B},\varvec{\varepsilon } )=\left( \begin{array}{c} V(\varvec{\varepsilon }_{B}) \\ V(\varvec{\varepsilon }_{G}) \end{array} \right) ,~\)with \(V(\varvec{\varepsilon }_{B})={\mathbf {Z}}_{B}-vec(\dfrac{e^{ \varvec{\varepsilon }_{B}}}{1+e^{\varvec{\varepsilon }_{B}}})-C^{\mathrm {T}} \varvec{\varepsilon }_{G}-A\varvec{\varepsilon }_{B},\) and \(V(\varvec{ \varepsilon }_{G})=-C\varvec{\varepsilon }_{B}-B\varvec{\varepsilon }_{G}.\) Since \(V(\varvec{\varepsilon }_{G})=0,\) we have \(\varvec{\varepsilon } _{G}=-B^{-1}C\varvec{\varepsilon }_{B},\) which results in the equation,

This equation can be solved by a Newton-Raphson algorithm; from it, we obtain \(\varvec{\varepsilon }_{B,m},\) and then the mode is \(\varvec{ \varepsilon }_{m}^{\mathrm {T}}=(\varvec{\varepsilon }_{B,m}^{\mathrm {T}}, \varvec{\varepsilon }_{G,m}^{\mathrm {T}})\), where \(\varvec{\varepsilon } _{G,m}=-B^{-1}C\varvec{\varepsilon }_{B,m}.\)

A simple calculation gives the Hessian \(H(\varvec{\varepsilon }_{m});\) that is,

\(-H(\varvec{\varepsilon }_{m})=\left( \begin{array}{ccc} diag\left( \dfrac{e^{\varvec{\varepsilon }_{B,m}}}{(1+e^{\varvec{\varepsilon } _{B,m}})^{2}}\right) +A &{} &{} C^{\mathrm {T}} \\ C &{} &{} B \end{array} \right) .\)

References

Aldworth J, Cressie N (1999) Sampling designs and prediction methods for Gaussian spatial processes. In: Ghosh S (ed) Multivariate analysis, designs of experiments, and survey sampling. Marckel Dekker Inc, New York, pp 1–54

Aitchison J (1982) The statistical analysis of compositional data. J R Stat Soc Ser B 44(2):139–177

Albert JH, Chib S (1993) Bayesian analysis of binary and polychotomous response data. J Am Stat Assoc 88(422):669–679

Augustin NH, Mugglestone MA, Buckland ST (1996) An autologistic model for the spatial distribution of wildlife. J Appl Ecol 33(2):339–347

Besag J (1974) Spatial interaction and the statistical analysis of lattice systems. J R Stat Soc Ser B 36(2):192–236

Besag J (1977) Efficiency of pseudo likelihood estimation for simple Gaussian fields. Biometrika 64:616–618

Cappé O, Moulines E, Rydén T (2005) Inference in hidden Markov models. Springer series in statistics. Springer, New York

Caragea P, Kaiser M (2009) Autologistic models with interpretable parameters. J Agric Biol Environ Stat 14(3):281–300

Chen M-H, Shao Q-M, Ibrahim JG (2000) Monte Carlo methods in Bayesian computation. Springer series in statistics. Springer, New York

Chib S, Greenberg E (1995) Understanding the Metropolis algorithm. Am Stat 49(4):327–335

Chib S, Greenberg E (1996) Markov Chain Monte Carlo simulation methods in econometrics. Econom Theory 12:409–431

Cressie N (1993) Statistics for spatial data, rev edn. Wiley, New York

Cressie N, Johannesson G (2008) Fixed rank kriging for very large spatial data sets. J R Stat Soc Ser B 70:209–226

Cressie N, Kornak J (2003) Spatial statistics in the presence of location error with an application to remote sensing of the environment. Stat Sci 18(4):436–456

Cressie N, Wikle CK (2011) Statistics for spatio-temporal data. Wiley, Hoboken

Descombes X, Mangin J-F, Pechersky E, Sigelle M (1995) Fine structures preserving Markov model for image processing. In: Proceedings of the 9th scandinavian conference on image analysis, Uppsala, Sweden, pp 349-356

Dempster AP, Laird N, Rubin D (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B 39:1–38

Diggle PJ, Tawn JA, Moyeed RA (1988) Model-based geostatistics. J R Stat Soc Ser C 47:299–350

Elkink JA, Calabrese R (2015) Estimating binary spatial autoregressive models for rare events. In: The annual meeting of the american political science association, Washington DC, pp 28–31

Gaetan C, Guyon X (2010) Spatial statistics and modeling. Springer series in statistics. Springer, New York

Gumpertz ML, Graham JM, Ristaino JB (1997) Autologistic model of spatial pattern of Phytophthora epidemic in bell pepper: effects of soil variables on disease presence. J Agric Biol Environ Stat 2:131–156

Guyon X (1995) Random fields on a network: modeling. Statistics and applications. Springer, New York

He F, Zhou J, Zhu H (2003) Autologistic regression model for the distribution of vegetation. J Agric Biol Environ Stat 8(2):205–222

Jiang W, Chen Z, Lei X, Jia K, Wu Y (2015) Simulating urban land use change by incorporating an autologistic regression model into a CLUE-S model. J Geogr Sci 25(7):836–850

Kang EL, Cressie N (2011) Bayesian inference for the spatial random effects model. J Am Stat Assoc 106:972–983

Katzfuss M, Cressie N (2012) Bayesian hierarchical spatio-temporal smoothing for very large datasets. Environmetrics 23:94–107

Koutsias N (2003) An autologistic regression model for increasing the accuracy of burned surface mapping using landsat thematic mapper data. Int J Remote Sens 24:2199–2204

Lee J, Kaiser MS, Cressie N (2001) Multiway dependence in exponential family conditional distributions. J Multivar Anal 79:171–190

LeSage JP, Pace RK, Lam N, Campanella R, Liu X (2011) New Orleans business recovery in the aftermath of Hurricane Katrina. J R Stat Soc Ser A 174:1007–1027

Lindgren F, Rue H, Lindström J (2011) An explicit link between Gaussian fields and Gaussian Markov random fields: the stochastic partial differential equation approach. J R Stat Soc Ser B 73(4):423–498

Lindsay BG (1988) Composite likelihood methods. Contemp Math 80:221–239

Liu JS (2008) Monte Carlo strategies in scientific computing. Springer series in statistics. Springer, New York

Marsh L, Mittelhammer RC, Huffaker RG (2000) Probit with spatial correlation by field plot: Potato leafroll virus net necrosis in potatoes. J Agric Biol Environ Stat 5:22–36

McCullagh P, Nelder JA (1989) Generalized linear models, 2nd edn. Chapman and Hall, London

McCulloch CE, Searle SR, Neuhaus JM (2001) Generalized, linear, and mixed models. Wiley, New York

McGuire J, Brindley T, Bancroft T (1957) The distribution of European corn borer larvae Pyrausta nubi/alis (Hbn.) in field corn. Biometrics 13:65–78

McLachlan GJ, Krishnan T (2008) The EM algorithm and extensions, 2nd edn. Wiley, New York

Moon S, Russell GJ (2008) Predicting product purchase from inferred customer similarity: an autologistic model approach. Manag Sci 54(1):71–82

Robert CP, Casella G (2004) Monte Carlo statistical methods. Springer, New York

Roberts GO, Rosenthal JS (2001) Optimal scaling for various Metropolis-Hastings algorithms. Stat Sci 16(4):351–367

Roy V, Evangelou E, Zhu Z (2016) Efficient estimation and prediction for the Bayesian binary spatial model with flexible link functions. Biometrics 72(1):289–298

Rue H, Held L (2005) Gaussian Markov random fields: theory and applications. Chapman & Hall, London

Sanderson RA, Eyre MD, Rushton SP (2005) Distribution of selected macroinvertebrates in a mosaic of temporary and permanent freshwater ponds as explained by autologistic models. Ecography 28(3):55–362

Schlather M, Malinowski A, Menck PJ, Oesting M, Strokorb K (2015) Analysis, simulation and prediction of multivariate random fields with package RandomFields. J Stat Softw 63(8):1–25

Sengupta A, Cressie N (2013a) Empirical hierarchical modeling for count data using the Spatial Random Effects model. Spat Econ Anal 8(3):389–418

Sengupta A, Cressie N (2013b) Hierarchical statistical modeling of big spatial datasets using the exponential family of distributions. Spat Stat 4:14–44

Tjelmeland H, Besag J (1998) Markov random fields with higher-order interactions. Scand J Stat 25:415–433

Wang Z, Zheng Y (2013) Analysis of binary data via a centered spatial–temporal autologistic regression model. Environ Ecol Stat 20(1):37–57

Wikle CK, Hooten MB (2010) A general science-based framework for spatio-temporal dynamical models. Test 19:417–451

Acknowledgements

Hardouin’s research was conducted as part of the project Labex MME-DII (ANR11-LBX-0023-01). Cressie’s research was supported by a 2015–2017 ARC Discovery Project, DP 150104576.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Hardouin, C., Cressie, N. Two-scale spatial models for binary data. Stat Methods Appl 27, 1–24 (2018). https://doi.org/10.1007/s10260-017-0391-1

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10260-017-0391-1