Abstract

This work is concerned with the classical problem of finding a zero of a sum of maximal monotone operators. For the projective splitting framework recently proposed by Combettes and Eckstein, we show how to replace the fundamental subproblem calculation using a backward step with one based on two forward steps. The resulting algorithms have the same kind of coordination procedure and can be implemented in the same block-iterative and highly flexible manner, but may perform backward steps on some operators and forward steps on others. Prior algorithms in the projective splitting family have used only backward steps. Forward steps can be used for any Lipschitz-continuous operators provided the stepsize is bounded by the inverse of the Lipschitz constant. If the Lipschitz constant is unknown, a simple backtracking linesearch procedure may be used. For affine operators, the stepsize can be chosen adaptively without knowledge of the Lipschitz constant and without any additional forward steps. We close the paper by empirically studying the performance of several kinds of splitting algorithms on a large-scale rare feature selection problem.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

For a collection of real Hilbert spaces \(\{{\mathcal {H}}_i\}_{i=0}^{n}\), consider the problem of finding \(z\in {\mathcal {H}}_{0}\) such that

where \(G_i:{\mathcal {H}}_{0}\rightarrow {\mathcal {H}}_i\) are linear and bounded operators, \(T_i:{\mathcal {H}}_i \rightarrow 2^{{\mathcal {H}}_i}\) are maximal monotone operators and additionally there exists a subset \({\mathcal {I}}_{{{\,\mathrm{F}\,}}}\subseteq \{1,\ldots ,n\}\) such that for all \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) the operator \(T_i\) is Lipschitz continuous. An important instance of this problem is

where every \(f_i:{\mathcal {H}}_i\rightarrow {\mathbb {R}}\) is closed, proper and convex, with some subset of the functions also being differentiable with Lipschitz-continuous gradients. Under appropriate constraint qualifications, (1) and (2) are equivalent. Problem (2) arises in a host of applications such as machine learning, signal and image processing, inverse problems, and computer vision; see [4, 9, 12] for some examples. Operator splitting algorithms are now a common way to solve structured monotone inclusions such as (1). Until recently, there were three underlying classes of operator splitting algorithms: forward–backward [29], Douglas/Peaceman–Rachford [25], and forward–backward–forward [35]. In [14], Davis and Yin introduced a new operator splitting algorithm which does not reduce to any of these methods. Many algorithms for more complicated monotone inclusions and optimization problems involving many terms and constraints are in fact applications of one of these underlying techniques to a reduced monotone inclusion in an appropriately defined product space [5, 6, 11, 13, 22]. These four operator splitting techniques are, in turn, a special case of the Krasnoselskii-Mann (KM) iteration for finding a fixed point of a nonexpansive operator [24, 28].

A different, relatively recently proposed class of operator splitting algorithms is projective splitting: this class has a different convergence mechanism based on projection onto separating sets and does not in general reduce to the KM iteration. The root ideas underlying projective splitting can be found in [20, 32, 33], which dealt with monotone inclusions with a single operator. The algorithm of [16] significantly built on these ideas to address the case of two operators and was thus the original projective “splitting” method. This algorithm was generalized to more than two operators in [17]. The related algorithm in [1] introduced a technique for handling compositions of linear and monotone operators, and [8] proposed an extension to “block-iterative” and asynchronous operation—block-iterative operation meaning that only a subset of the operators making up the problem need to be considered at each iteration (this approach may be called “incremental” in the optimization literature). A restricted and simplified version of this framework appears in [15]. The potentially asynchronous and block-iterative nature of projective splitting as well as its ability to handle composition with linear operators gives it an unprecedented level of flexibility compared with prior classes of operator splitting methods. Further, in the projective splitting methods of [8, 15] the order with which operators can be processed is deterministic, variable, and highly flexible. It is not necessary that each operator be processed the same number of times either exactly or approximately; in fact, one operator may be processed much more often than another. The only constraint is that there is an upper bound on the number of iterations between the consecutive times that each operator is processed.

Projective splitting algorithms work by performing separate calculations on each individual operator to construct a separating hyperplane between the current iterate and the problem’s Kuhn–Tucker set (essentially the set of primal and dual solutions), and then projecting onto this hyperplane. In prior projective splitting algorithms, the only operation performed on the individual operators \(T_i\) is a proximal step (equivalently referred to as a resolvent or backward step), which consists of evaluating the operator resolvents \((I + \rho T_i)^{-1}\) for some scalar \(\rho > 0\). In this paper, we show how, for the Lipschitz continuous operators, the same kind of framework can also make use of forward steps on the individual operators, equivalent to applying \(I - \rho T_i\). Typically, such “explicit” steps are computationally much easier than “implicit”, proximal steps. Our procedure requires two forward steps each time it evaluates an operator, and in this sense is reminiscent of Tseng’s forward–backward–forward method [35] and Korpelevich’s extragradient method [23]. Indeed, for the special case of only one operator, projective splitting with the new procedure reduces to the variant of the extragradient method in [20] (see [21, Section 4] for the derivation). In our forward-step procedure, each stepsize must be bounded by the inverse of the Lipschitz constant of \(T_i\). However, a simple backtracking procedure can eliminate the need to estimate the Lipschitz constant, and other options are available for selecting the stepsize when \(T_i\) is affine.

1.1 Intuition and contributions: basic idea

We first provide some intuition into our fundamental idea of incorporating forward steps into projective splitting. For simplicity, consider (1) without the linear operators \(G_i\), that is, we want to find z such that \(0\in \sum _{i=1}^n T_i z\), where \(T_1,\ldots ,T_n: {\mathcal {H}}_0 \rightarrow 2^{{\mathcal {H}}_0}\) are maximal monotone operators on a single real Hilbert space \({\mathcal {H}}_0\). We formulate the Kuhn–Tucker solution set of this problem as

It is clear that \(z^*\) solves \(0\in \sum _{i=1}^n T_i z^*\) if and only if there exist \(w_1^*,\ldots ,w_{n-1}^*\) such that \((z^*,w_1^*,\ldots ,w_{n-1}^*)\in {\mathcal {S}}\). A separator-projection algorithm for finding a point in \({\mathcal {S}}\) finds, at each iteration k, a closed and convex set \(H_k\) which separates \({\mathcal {S}}\) from the current point, meaning \({\mathcal {S}}\) is entirely in the set and the current point is not. One can then move closer to the solution set by projecting the current point onto the set \(H_k\).

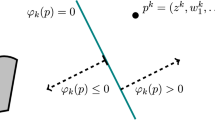

If we define \({\mathcal {S}}\) as in (3), then the separator formulation presented in [8] constructs the set \(H_k\) through the function

for some \(x_i^k,y_i^k \in {\mathcal {H}}_0\) such that \(y^k_i\in T_i x^k_i\), \(i \in 1,\ldots ,n\). From its expression in (5) it is clear that \(\varphi _k\) is an affine function on \({\mathcal {H}}^n_0\). Furthermore, it may easily be verified that for any \(p = (z,w_1,\ldots ,w_{n-1}) \in {\mathcal {S}}\), one has \(\varphi _k(p)\le 0\), so that the separator set \(H_k\) may be taken to be the halfspace \(\left\{ p \; \left| \;\; \varphi _k(p) \le 0 \right. \right\} \). The key idea of projective splitting is, given a current iterate \(p^k = (z^k,w_1^k,\ldots ,w_{n-1}^k) \in {\mathcal {H}}_0^n\), to pick \((x^k_i,y^k_i)\) so that \(\varphi _k(p^k)\) is positive if \(p^k \not \in {\mathcal {S}}\). Then, since the solution set is entirely on the other side of the hyperplane \(\left\{ p \; \left| \;\; \varphi _k(p)=0 \right. \right\} \), projecting the current point onto this hyperplane makes progress toward the solution. If it can be shown that this progress is sufficiently large, then it is possible to prove (weak) convergence.

Let the iterates of such an algorithm be \(p^k = (z^k,w_i^k,\ldots ,w_{n-1}^k) \in {\mathcal {H}}_0^n\). To simplify the subsequent analysis, define \(w_n^k \triangleq -\sum _{i=1}^{n-1} w_i^k\) at each iteration k, whence it is immediate from (4) that \(\varphi _k(p^k) = \varphi _k(z^k,w_1^k,\ldots ,w_{n-1}^k) = \sum _{i=1}^n \langle {z^k-x^k_i},{y^k_i-w^k_i}\rangle \). To construct a function \(\varphi _k\) of the form (4) such that \(\varphi _k(p^k) = \varphi _k(z^k,w_1^k,\ldots ,w_n^k) > 0\) whenever \(p^k \not \in {\mathcal {S}}\), it is sufficient to be able to perform the following calculation on each individual operator \(T_i\): for \((z^k,w_i^k)\in {\mathcal {H}}_0^2\), find \(x_i^k,y_i^k\in {\mathcal {H}}_0\) such that \(y_i^k\in T_i x^k_i\) and \(\langle {z^k - x_i^k},{y_i^k - w_i^k}\rangle \ge 0\), with \(\langle {z^k - x_i^k},{y_i^k - w_i^k}\rangle > 0\) if \(w_i^k \not \in T_i z^k\). In earlier work on projective splitting [1, 8, 16, 17], the calculation of such a \((x_i^k,y_i^k)\) is accomplished by a proximal (implicit) step on the operator \(T_i\): given a scalar \(\rho > 0\), we find the unique pair \((x_i^k,y_i^k)\in {\mathcal {H}}_0^2\) such that \(y_i^k \in T_i x^k_i\) and

We immediately conclude that

and furthermore that \(\langle {z^k - x_i^k},{y_i^k - w_i^k}\rangle > 0\) unless \(x_i^k = z^k\), which would in turn imply that \(y_i^k = w_i^k\) and \(w_i^k \in T_i z^k\). If we perform such a calculation for each \(i= 1,\ldots ,n\), we have constructed a separator of the form (4) which, in view of \(\varphi _k(p^k) = \sum _{i=1}^n \langle {z^k-x^k_i},{y^k_i-w_i^k}\rangle \), has \(\varphi _k(p^k) > 0\) if \(p^k \not \in {\mathcal {S}}\). This basic calculation on \(T_i\) is depicted in Fig. 1a for \({\mathcal {H}}_0 = {\mathbb {R}}^1\): because \(z^k-x_i^k = \rho (y_i^k-w_i^k)\), the line segment between \((z^k,w_i^k)\) and \((x_i^k,y_i^k)\) must have slope \(-1/\rho \), meaning that \(\langle {z^k - x_i^k},{w_i^k - y_i^k}\rangle \le 0\) and thus that \(\langle {z^k - x_i^k},{y_i^k - w_i^k}\rangle \ge 0\). It also bears mentioning that the relation (7) plays (in generalized form) a key role in the convergence proof.

Consider now the case that \(T_i\) is Lipschitz continuous with modulus \(L_i \ge 0\) (and hence single valued) and defined throughout \({\mathcal {H}}_0\). We now introduce a technique to accomplish something similar to the preceding calculation through two forward steps instead of a single backward step. We begin by evaluating \(T_i z^k\) and using this value in place of \(y_i^k\) in the right-hand equation in (6), yielding

and we use this value for \(x_i^k\). This calculation is depicted by the lower left point in Fig. 1b. We then calculate \(y_i^k = T_i x^k_i\), resulting in a pair \((x_i^k,y_i^k)\) on the graph of the operator; see the upper left point in Fig. 1b. For this choice of \((x_i^k,y_i^k)\), we next observe that

Here, (9) follows because \(T_i z^k - w_i^k = (1/\rho )(z^k - x_i^k)\) from (8) and because we let \(y_i^k = T_i x^k_i\). The inequality (10) then follows from the Cauchy-Schwarz inequality and the hypothesized Lipschitz continuity of \(T_i\). If we require that \(\rho < 1/L_i\), then we have \(1/\rho > L_i\) and (11) therefore establishes that \(\langle {z^k - x_i^k},{y_i^k - w_i^k}\rangle \ge 0\), with \(\langle {z^k - x_i^k},{y_i^k - w_i^k}\rangle > 0\) unless \(x_i^k = z^k\), which would imply that \(w_i^k = T_i z^k\). We thus obtain a conclusion very similar to (7) and the results immediately following from it, but using the constant \(1/\rho - L_i > 0\) in place of the positive constant \(1/\rho \).

Backward and forward operator calculations in \({\mathcal {H}}_0 = {\mathbb {R}}^1\). The goal is to find a point \((x_i^k,y_i^k)\) on the graph of the operator such that line segment connecting \((z^k,w_i^k)\) and \((x_i^k,y_i^k)\) has negative slope. Part a depicts a standard backward-step-based construction, while b depicts our new construction based on two forward steps

For \({\mathcal {H}}_0 = {\mathbb {R}}^1\), this process is depicted in Fig. 1b. By construction, the line segment between \(\big (z^k,T_i z^k\big )\) and \((x_i^k,w_i^k)\) has slope \(1/\rho \), which is “steeper” than the graph of the operator, which can have slope at most \(L_i\) by Lipschitz continuity. This guarantees that the line segment between \((z^k,w_i^k)\) and \((x_i^k,y_i^k)\) must have negative slope, which in \({\mathbb {R}}^1\) is equivalent to the claimed inner product property.

Using a backtracking line search, we will also be able to handle the situation in which the value of \(L_i\) is unknown. If we choose any positive constant \(\varDelta > 0\), then by elementary algebra the inequalities \((1/\rho ) - L_i \ge \varDelta \) and \(\rho \le 1/(L_i + \varDelta )\) are equivalent. Therefore, if we select some positive \(\rho \le 1/(L_i + \varDelta )\), we have from (11) that

which implies the key properties we need for the convergence proofs. Therefore we may start with any \(\rho = \rho ^0 > 0\), and repeatedly halve \(\rho \) until (12) holds; in Sect. 5.1 below, we bound the number of halving steps required. In general, each trial value of \(\rho \) requires one application of the Lipschitz continuous operator \(T_i\). However, for the case of affine operators \(T_i\), we will show that it is possible to compute a stepsize such that (12) holds with a total of only two applications of the operator. By contrast, most backtracking procedures in optimization algorithms require evaluating the objective function at each new candidate point, which in turn usually requires an additional matrix multiply operation in the quadratic case [3].

1.2 Summary of contributions

The main thrust of the remainder of this paper is to incorporate the second, forward-step construction of \((x_i^k,y_i^k)\) above into an algorithm resembling those of [8, 15], allowing some operators to use backward steps, and others to use forward steps. Thus, projective splitting may become useful in a broad range of applications in which computing forward steps is preferable to computing or approximating proximal steps. The resulting algorithm inherits the block-iterative features and flexible capabilities of [8, 15].

We will work with a slight restriction of problem (1), namely

In terms of problem (1), we are simply requiring that \(G_n\) be the identity operator and thus that \({\mathcal {H}}_n = {\mathcal {H}}_0\). This is not much of a restriction in practice, since one could redefine the last operator as \(T_{n}\leftarrow G^*_{n} \circ T_{n} \circ G_{n}\), or one could simply append a new operator \(T_n\) with \(T_n(z) = \{0\}\) everywhere.

The principle reason for adopting a formulation involving the linear operators \(G_i\) is that in many applications of (13) it may be relatively easy to compute the proximal step of \(T_i\) but difficult to compute the proximal step of \(G_i^*\circ T_i\circ G_i\). Our framework will include algorithms for (13) that may compute the proximal steps on \(T_i\), forward steps when \(T_i\) is Lipschitz continuous, and applications (“matrix multiplies”) of \(G_i\) and \(G_i^*\). An interesting feature of the forward steps in our method is that while the allowable stepsizes depend on the Lipschitz constants of the \(T_i\) for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\), they do not depend on the linear operator norms \(\Vert G_i\Vert \), in contrast with primal-dual methods [6, 13, 36]. Furthermore, as already mentioned, the stepsizes used for each operator can be chosen independently and may vary by iteration.

We also present a previously unpublished “greedy” heuristic for selecting operators in block-iterative splitting, based on a simple proxy. Augmenting this heuristic with a straightforward safeguard allows one to retain all of the convergence properties of the main algorithm. The heuristic is not specifically tied to the use of forward steps and also applies to the earlier algorithms in [8, 15]. The numerical experiments in Sect. 6 below attest to its usefulness.

The main contribution of this work is the new two-forward-step procedure. The main proposed algorithm is a block-iterative splitting method that performs well in our numerical experiments when combined with the greedy block selection strategy. However, the analysis also allows for the kind of asynchronous operation developed in [8, 15]. Empirically investigating such asynchronous implementations is beyond the scope of this work. Since allowing for asynchrony introduces little additional complexity into the convergence analysis, we have included it in the theoretical results.

After submitting this paper, we became aware of the preprint [34], which develops a similar two-forward-step procedure for projective splitting in a somewhat different setting than (13). The scheme is equivalent to ours when \(G_i=I\), but does not incorporate the backtracking linesearch or its simplification for affine operators. Their analysis also does not allow for asynchronous or block-iterative implementations.

2 Mathematical preliminaries

2.1 Notation

Summations of the form \(\sum _{i=1}^{n-1}a_i\) for some collection \(\{a_i\}\) will appear throughout this paper. To deal with the case \(n=1\), we use the standard convention that \( \sum _{i=1}^{0} a_i = 0. \) To simplify the presentation, we use the following notation throughout the rest of the paper, where I denotes the identity map on \({\mathcal {H}}_n\):

Note that when \(n=1\), \(w_1^k = 0\). We will use a boldface \(\mathbf{w}= (w_1,\ldots ,w_{n-1})\) for elements of \({\mathcal {H}}_1\times \ldots \times {\mathcal {H}}_{n-1}\).

Throughout, we will simply write \(\Vert \cdot \Vert _i = \Vert \cdot \Vert \) as the norm for \({\mathcal {H}}_i\) and let the subscript be inferred from the argument. In the same way, we will write \(\langle \cdot ,\cdot \rangle _i\) as \(\langle \cdot ,\cdot \rangle \) for the inner product of \({\mathcal {H}}_i\). For the collective primal-dual space defined in Sect. 2.2, we will use a special norm and inner product with its own subscript.

For any maximal monotone operator A we will use the notation \( {\text {prox}}_{\rho A} = (I+\rho A)^{-1}, \) for any scalar \(\rho > 0\), to denote the proximal operator, also known as the backward or implicit step with respect to A. This means that

The x and y satisfying this relation are unique. Furthermore, \({\text {prox}}_{\rho A}\) is defined everywhere and \(\text {range}({\text {prox}}_A) = \text {dom}(A)\) [2, Prop. 23.2].

We use the standard “\(\rightharpoonup \)” notation to denote weak convergence, which is of course equivalent to ordinary convergence in finite-dimensional settings.

The following basic result will be used several times in our proofs:

Lemma 1

For any vectors \(v_1,\ldots ,v_n\), \( \left\| \sum _{i=1}^n v_i\right\| ^2\le n\sum _{i=1}^n\left\| v_i\right\| ^2. \)

Proof

\(\left\| \sum _{i=1}^n v_i\right\| ^2 = n^2\left\| \frac{1}{n}\sum _{i=1}^n v_i\right\| ^2 \le n^2\cdot \frac{1}{n}\sum _{i=1}^n\Vert v_i\Vert ^2, \) where the inequality follows from the convexity of the function \(\Vert \cdot \Vert ^2\). \(\square \)

2.2 Main assumptions regarding problem (13)

Let \(\varvec{{\mathcal {H}}} = {\mathcal {H}}_0\times {\mathcal {H}}_1\times \cdots \times {\mathcal {H}}_{n-1}\) and \({\mathcal {H}}_n = {\mathcal {H}}_0\). Define the extended solution set or Kuhn–Tucker set of (13) to be

Clearly \(z\in {\mathcal {H}}_{0}\) solves (13) if and only if there exists \(\mathbf{w}\in {\mathcal {H}}_1 \times \cdots \times {\mathcal {H}}_{n-1}\) such that \((z,\mathbf{w})\in {\mathcal {S}}\). Our main assumptions regarding (13) are as follows:

Assumption 1

Problem (13) conforms to the following:

-

1.

\({\mathcal {H}}_0 = {\mathcal {H}}_n\) and \({\mathcal {H}}_1,\ldots ,{\mathcal {H}}_{n-1}\) are real Hilbert spaces.

-

2.

For \(i=1,\ldots ,n\), the operators \(T_i:{\mathcal {H}}_{i}\rightarrow 2^{{\mathcal {H}}_{i}}\) are monotone.

-

3.

For all i in some subset \({\mathcal {I}}_{{{\,\mathrm{F}\,}}}\subseteq \{1,\ldots ,n\}\), the operator \(T_i\) is \(L_i\)-Lipschitz continuous (and thus single-valued) and \(\text {dom}(T_i) = {\mathcal {H}}_i\).

-

4.

For \(i \in {\mathcal {I}}_{{{\,\mathrm{B}\,}}}\triangleq \{1,\ldots ,n\} \backslash {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\), the operator \(T_i\) is maximal and that the map \({\text {prox}}_{\rho T_i}:{\mathcal {H}}_i\rightarrow {\mathcal {H}}_i\) can be computed to within the error tolerance specified below in Assumption 4 (however, these operators are not precluded from also being Lipschitz continuous).

-

5.

Each \(G_i:{\mathcal {H}}_{0}\rightarrow {\mathcal {H}}_i\) for \(i=1,\ldots ,n-1\) is linear and bounded.

-

6.

The solution set \({\mathcal {S}}\) defined in (16) is nonempty.

Lemma 2

Suppose Assumption 1 holds. The set \({\mathcal {S}}\) defined in (16) is closed and convex.

Proof

We first remark that for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) the operators \(T_i\) are maximal by [2, Proposition 20.27], so \(T_1,\ldots ,T_n\) are all maximal monotone. The claimed result is then a special case of [5, Proposition 2.8(i)] with the following change of notation, where “MM” stands for “maximal monotone” and “BL” stands for “bounded linear”:

\(\square \)

2.3 A generic linear separator-projection method

Suppose that \(\varvec{{\mathcal {H}}}\) is a real Hilbert space with inner product \(\langle \cdot ,\cdot \rangle _{\varvec{{\mathcal {H}}}}\) and norm \(\Vert \cdot \Vert _{\varvec{{\mathcal {H}}}}\). A generic linear separator-projection method for finding a point in some closed and convex set \({\mathcal {S}}\subseteq \varvec{{\mathcal {H}}}\) is given in Algorithm 1.

The update on line 4 is the \(\beta _k\)-relaxed projection of \(p^k\) onto the halfspace \(\{p:\varphi _k(p) \le 0\}\) using the norm \(\Vert \cdot \Vert _{\varvec{{\mathcal {H}}}}\). In other words, if \({\hat{p}}^k\) is the projection onto this halfspace, then the update is \(p^{k+1} = (1-\beta _k)p^k + \beta _k{\hat{p}}^k\). Note that we define the gradient \(\nabla \varphi _k\) with respect to the inner product \(\langle \cdot ,\cdot \rangle _{\varvec{{\mathcal {H}}}}\), meaning we can write

We will use the following well-known properties of algorithms fitting the template of Algorithm 1; see for example [7, 16]:

Lemma 3

Suppose \({\mathcal {S}}\) is closed and convex. Then for Algorithm 1,

-

1.

The sequence \(\{p^k\}\) is bounded.

-

2.

\(\Vert p^k - p^{k+1}\Vert _{\varvec{{\mathcal {H}}}}\rightarrow 0\);

-

3.

If all weak limit points of \(\{p^k\}\) are in \({\mathcal {S}}\), then \(p^k\) converges weakly to some point in \({\mathcal {S}}\).

Note that we have not specified how to choose the affine function \(\varphi _k\). For our specific application of the separator-projection framework, we will do so in Sect. 2.4.

2.4 Our hyperplane

In this section, we define the affine function our algorithm uses to construct a separating hyperplane. Let \(p =(z,\mathbf{w}) = (z,w_1,\ldots , w_{n-1})\) be a generic point in \(\varvec{{\mathcal {H}}}\), the collective primal-dual space. For \(\varvec{{\mathcal {H}}}\), we adopt the following norm and inner product for some \(\gamma >0\):

Define the following function generalizing (4) at each iteration \(k\ge 1\):

where the \((x_i^k,y_i^k)\) are chosen so that \(y_i^k\in T_i x_i^k\) for \(i=1,\ldots ,n\) (recall that each inner product is for the corresponding Hilbert space \({\mathcal {H}}_i\)). This function is a special case of the separator function used in [8]. The following lemma proves some basic properties of \(\varphi _k\); similar results are in [1, 8, 15] in the case \(\gamma =1\).

Lemma 4

Let \(\varphi _k\) be defined as in (18). Then:

-

1.

\(\varphi _k\) is affine on \(\varvec{{\mathcal {H}}}\).

-

2.

With respect to inner product \(\langle \cdot ,\cdot \rangle _\gamma \) on \(\varvec{{\mathcal {H}}}\), the gradient of \(\varphi _k\) is

$$\begin{aligned} \nabla \varphi _k = \left( \frac{1}{\gamma }\left( \sum _{i=1}^{n-1} G_i^* y_i^k+ y_n^k\right) \!\!,\; x_1^k - G_1 x_{n}^k,\ldots ,x_{n-1}^k - G_{n-1} x_{n}^k\right) . \end{aligned}$$ -

3.

Suppose Assumption 1 holds and that \(y_i^k\in T_i x_i^k\) for \(i=1,\ldots ,n\). Then \(\varphi _k(p)\le 0\) for all \(p\in {\mathcal {S}}\) defined in (16).

-

4.

If Assumption 1 holds, \(y_i^k\in T_i x_i^k\) for \(i=1,\ldots ,n\), and \(\nabla \varphi _k = 0\), then \( (x_n^k,y_1^k,\ldots ,y_{n-1}^k)\in {\mathcal {S}}. \)

Proof

To see that \(\varphi _k\) is affine, rewrite (18) as

It is now clear that \(\varphi _k\) is an affine function of \(p = (z,\mathbf{w})\). Next, fix an arbitrary \({\tilde{p}}\in \varvec{{\mathcal {H}}}\). Using that \(\varphi _k\) is affine, we may write

Equating terms between this expression and (19) yields the claimed expression for the gradient.

Next, suppose Assumption 1 holds and \(y_i^k\in T_i x_i^k\) for \(i=1,\ldots ,n\). To prove the third claim, we need to consider \((z,\mathbf{w})\in {\mathcal {S}}\) and establish that \(\varphi _i(z,\mathbf{w}) \le 0\). We do so by showing that all n terms in (18) are nonpositive: first, for each \(i=1,\ldots ,n-1\), we have \(\langle {G_i z - x_i^k},{y_i^k - w_i}\rangle \le 0\) since \(T_i\) is monotone, \(w_i\in T_i(G_i z)\), and \(y_i^k\in T_i x_i^k\). The nonpositivity of the final term is established similarly by noting that \(y_{n}^k \in T_{n} x_{n}^k\), \(-\sum _{i=1}^{n-1} G_i^* w_i \in T_n z\), and that \(T_{n}\) is monotone.

Finally, suppose \(\nabla \varphi _k = 0\) for some \(k\ge 1\). Then \(y_n^k = -\sum _{i=1}^{n-1}G_i^* y_i^k\) and \(x_i^k - G_i x_n^k = 0\) for all \(i=1,\ldots ,n-1\). The latter implies that \(y_i^k\in T_i(G_i x_n^k)\) for all \(i=1,\ldots ,n-1\). Since we also have \(y_n^k\in T_n(x_n^k)\), we obtain that \((x_n^k,y_1^k,\ldots ,y_{n-1}^k)\in {\mathcal {S}}\). \(\square \)

3 Our algorithm

3.1 Algorithm definition

Algorithm 2 is our flexible block-iterative projective splitting algorithm with forward steps for solving (13). It is essentially a special case of the weakly convergent Algorithm of [8], except that we use the new forward-step procedure to deal with the Lipschitz continuous operators \(T_i\) for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\), instead of exclusively using proximal steps. For our separating hyperplane in (18), we use a special case of the formulation of [8], which is slightly different from the one used in [15]. Our method can be reformulated to use the same hyperplane as [15]; however, this requires that it be computationally feasible to project on the subspace given by the equation \(\sum _{i=1}^{n} G_i^*w_i = 0\).

Under appropriate conditions, Algorithm 2 is an instance of Algorithm 1 (see Lemma 6). Lines 12–26 of Algorithm 2 essentially implement the projection step on line 4 of Algorithm 1. Lines 2–11 construct the points \((x_i^k,y_i^k)\) used to define the affine function \(\varphi _k\) in (18), which defines the separating hyperplane.

The algorithm has the following parameters:

-

For each iteration \(k\ge 1\), a subset \(I_k\subseteq \{1,\ldots ,n\}\). These are the indices of the “active” operators that iteration k processes by either a backward step or two forward steps. The remaining, “inactive” operators simply have \((x_i^k,y_i^k)=(x_i^{k-1},y_i^{k-1})\).

-

For each iteration \(k\ge 1\) and \(i=1,\ldots ,n\), a delayed iteration index \(d(i,k)\in \{1,\ldots ,k\}\) which allows the subproblem calculations on lines 4–9 to use outdated information \((z^{d(i,k)}, w_i^{d(i,k)})\). In the most straightforward case of no delays, d(i, k) is simply k.

-

For each \(k\ge 1\) and \(i=1,\ldots ,n\), a positive scalar stepsize \(\rho _{i}^{k}\).

-

For each iteration \(k\ge 1\), an overrelaxation parameter \(\beta _k\in [{\underline{\beta }},{\overline{\beta }}]\) for some constants \(0<{\underline{\beta }}\le {\overline{\beta }}<2\).

-

A scalar \(\gamma >0\) which controls the relative emphasis on the primal and dual variables in the projection update in lines 24–25; see (17) in Sect. 2.4 for more details.

-

Sequences of errors \(\{e_i^k\}_{k\ge 1}\) for \(i\in {\mathcal {I}}_{{{\,\mathrm{B}\,}}}\) modeling inexact computation of the proximal steps.

In the form directly presented in Algorithm 2, the delay indices d(i, k) may seem unmotivated; it might seem best to always select \(d(i,k) = k\). However, these indices can play a critical role in modeling asynchronous parallel implementation. There are many ways in which Algorithm 2 could be implemented in various parallel computing environments; a specific suggestion for asynchronous implementation of a closely related class of algorithms is developed in [15, Section 3].

The error parameters \(e_i^k\) for the proximal steps would simply be zero for proximal steps that are calculated exactly. When nonzero, they would not typically in practice be explicitly chosen prior to calculating \(x_i^k\) and \(y_i^k\), but instead implicitly defined by some (likely iterative) procedure for approximating the \({\text {prox}}\) operation. We present the error parameters as shown in order to avoid cluttering the algorithm description with additional loops and abstractions as in [18, 19].

3.2 A block-iterative implementation

Before proceeding with the analysis of Algorithm 2, we present a somewhat simplified block-iterative version. This version eliminates the possibility of delays, setting \(d(i,k) \equiv k\). The strategy for deciding which operators \(I_k\) to select at each iteration is left open for the time being and is determined entirely by the algorithm implementer. We will propose one specific strategy for the case \(|{I_k}|\equiv 1\) in Sect. 5.3, but one may use any approach conforming to Assumption 2(1) below.

4 Convergence analysis

We now start our analysis of the weak convergence of the iterates of Algorithm 2 to a solution of problem (13). While the overall proof strategy is similar to [15], considerable innovation is required to incorporate the forward steps. Before the main proof, we will first state our assumptions on Algorithm 2 and its parameters, state the main convergence theorem, and sketch an outline of the proof.

4.1 Algorithm assumptions

We start with our assumptions about parameters of Algorithm 2. With the exception of (20), they are taken from [8, 15] and use the notation of [15].

Assumption 2

For Algorithm 2, assume:

-

1.

For some fixed integer \(M\ge 1\), each index i in \(1,\ldots ,n\) is in \(I_k\) at least once every M iterations, that is,

$$\begin{aligned} (\forall \,j\ge 1) \qquad \bigcup \limits _{k=j}^{j+M-1}I_k = \{1,\ldots ,n\}. \end{aligned}$$ -

2.

For some fixed integer \(D\ge 0\), we have \(k-d(i,k)\le D\) for all i, k with \(i\in I_k\). That is, there is a constant bound on the extent to which the information \(z^{d(i,k)}\) and \(w_i^{d(i,k)}\) used in lines 4 and 8 is out of date.

Assumption 3

The stepsize conditions for weak convergence of Algorithm 2 are:

Note that (20) allows the stepsize to be larger than the right hand side for a finite number of iterations.

The last assumption concerns the possible errors \(e_i^k\) in computing the proximal steps and requires some notation from [15]: for all i and k, define

In words, s(i, k) is the most recent iteration up to and including k in which the index-i information in the separator was updated, or 0 if index-i information has never been processed. Assumption 2 ensures that \(0\le k-s(i,k)< M\).

Next, for all \(i=1,\ldots ,n\) and iterations k, define \( l(i,k) = d\big (i,s(i,k)\big ). \) Thus, l(i, k) is the iteration in which the algorithm generated the information \(z^{l(i,k)}\) and \(w_{i}^{l(i,k)}\) used to compute the current point \((x_i^k,y_i^k)\). For initialization purposes, we set \(d(i,0) = 0\).

Assumption 4

The error sequences \(\{e_i^k\}\) are bounded for all \(i\in {\mathcal {I}}_{{{\,\mathrm{B}\,}}}\). For some \(\sigma \) with \(0\le \sigma <1\) the following hold for all \(k \ge 1\):

4.2 Main result

We now state the main technical result of the paper, asserting weak convergence of Algorithm 2 to a solution of (13).

Theorem 1

Suppose Assumptions 1–4 hold. If Algorithm 2 terminates at line 21, then its final iterate is a member of the extended solution set \({\mathcal {S}}\). Otherwise, the sequence \(\{(z^k,\mathbf{w}^k)\}\) generated by Algorithm 2 converges weakly to some point \((\bar{z},{\overline{\mathbf{w}}})\) in the extended solution set \({\mathcal {S}}\) of (13) defined in (16). Furthermore, \(x_i^k\rightharpoonup G_i {{\bar{z}}}\) and \(y_i^k\rightharpoonup {\overline{w}}_i\) for all \(i=1,\ldots ,n-1\), \(x_{n}^k\rightharpoonup {{\bar{z}}}\), and \(y_n^k\rightharpoonup -\sum _{i=1}^{n-1}G_i^* {\overline{w}}_i\).

Before establishing this result, we first outline the basic proof strategy: first, since it arises from a projection method, the sequence \(\{p^k\}\) has many desirable properties, as outlined in Lemma 3. In particular, Lemma 3(3) allows us to establish (weak) convergence of the entire sequence to a solution if we can prove that all its limit points must be elements of \({\mathcal {S}}\). To that end, we will establish that

By the definition of \(w_n^k\) on line 26, the iterates \((z^k,\mathbf{w}^k)\) always meet the linear relationship between the \(w_i\) implicit in the definition (16) of \({\mathcal {S}}\), whereas the \((x_i^k,y_i^k)\) iterates always meet its inclusion conditions. Therefore, if (23) holds, then one may expect all limit points of \((z^k,\mathbf{w}^k)\) to satisfy all the conditions in (16) and thus to to lie in \({\mathcal {S}}\). In finite dimension, this result is in fact fairly straightforward to establish. The general Hilbert space proof is more delicate, but was carried out in [1, Proposition 2.4].

In order to establish (23), we will first establish that the gradient of the affine function \(\varphi _k\) defined in (18) remains bounded. Then, consider the projection update as written on line 4 of Algorithm 1, which implies

If \(\Vert \nabla \varphi _k\Vert _{\varvec{{\mathcal {H}}}}\) remains bounded, then since Lemma 3(2) implies the left hand side goes to 0, \(\limsup \varphi _k(p^k)\le 0\).

The key to establishing (23) is then to show that the cut provided by the separating hyperplane is “sufficiently deep”. This will amount to proving (in simplified form)

for some \(C>0\). Then, using \(\limsup \varphi _k(p^k)\le 0\), the first part of (23) follows. The second part of (23) is then established by a similar argument.

4.3 Preliminary lemmas

To begin the proof of Theorem 1, we first deal with the situation in which Algorithm 2 terminates at line 21.

Lemma 5

For Algorithm 2:

-

1.

Suppose Assumption 1 holds. If the algorithm terminates via line 21, then \((z^{k+1},\mathbf{w}^{k+1})\in {\mathcal {S}}\). Furthermore \(x_i^k = G_i z^{k+1}\) and \(y_i^k = w_i^{k+1}\) for \(i=1,\ldots ,n-1\), and \(x_n^k = z^{k+1}\) and \(y_n^k = -\sum _{i=1}^{n-1}G_i^* w_i^{k+1}\).

-

2.

Additionally, suppose Assumption 2(1) holds. Then if \(\pi _k=0\) at some iteration \(k\ge M\), the algorithm terminates via line 21.

Proof

The condition \(\cup _{j=1}^k I_j=\{1,\ldots ,n\}\) on line 20 implies that \(y_i^k\in T_i x_i^k\) for \(i=1,\ldots ,n\). Let \(\varphi _k\) be the affine function defined in (18). Simple algebra verifies that for \(u^k\) and \(v^k\) defined on lines 12 and 13, \(u_{i}^k = \nabla _{w_i}\varphi _k\) for \(i=1,\ldots ,n-1\), \(v^k = \gamma \nabla _z\varphi _k\), and \(\pi _k = \Vert \nabla \varphi _k\Vert _\gamma ^2\).

If for any such k, \(\pi _k\) equals 0, then this implies \(\nabla \varphi _k = 0\). Then we can invoke Lemma 4(4) to conclude that \((x_n^k,y_1^k,\ldots ,y_{n-1}^k)\in {\mathcal {S}}\). Thus, the algorithm terminates with

Furthermore, when \(\nabla \varphi _k = 0\), Lemma 4(2) leads to

We immediately conclude that \(y_n^k = -\sum _{i=1}^{n-1} G_i^* y_i^k = -\sum _{i=1}^{n-1} G_i^* w_i^{k+1}\) and, for \(i=1,\ldots ,n-1\), that \(x_i^k = G_i x_n^k = G_i z^k\).

Finally, note that for any \(k\ge M\), \(\cup _{j=1}^k I_j=\{1,\ldots ,n\}\) by Assumption 2(1). Therefore whenever \(\pi _k = 0\) for \(k\ge M\), the algorithm terminates via line 21. \(\square \)

Lemma 5 asserts that if the algorithm terminates finitely, then the final iterate is a solution. For the rest of the analysis, we therefore assume that \(\pi _k \ne 0\) for all \(k\ge M\). Under Assumption 2, Algorithm 2 is a projection algorithm:

Lemma 6

Suppose that Assumption 1 holds for problem (13) and Assumption 2(1) holds for Algorithm 2. Then, for all \(k\ge M\) such that \(\pi _k\) defined on Line 14 is nonzero, Algorithm 2 is an instance of Algorithm 1 with \(\varvec{{\mathcal {H}}}= {\mathcal {H}}_0 \times \cdots \times {\mathcal {H}}_{n-1}\) and the inner product in (17), \({\mathcal {S}}\) as defined in (16), and \(\varphi _k\) as defined in (18). All the statements of Lemma 3 hold for the sequence \(\{p^k\} = \{(z^k,w_1^k,\ldots ,w_{n-1}^k)\}\) generated by Algorithm 2.

Proof

For \(k\ge M\) in Algorithm 2, by Assumption 2(1) all \((x_i^k,y_i^k)\) have been updated at least once using either lines 5–6 or lines 8–9, and thus \(y_i^k\in T_i x_i^k\) for \(i=1,\ldots ,n\). Therefore, Lemma 4 implies that \(\varphi _k(z,\mathbf{w})\le 0\).

Next we verify that lines 12–26 of Algorithm 2 are an instantiation of line 4 of Algorithm 1 using \(\varphi _k\) as defined in (18) and the norm defined in (17). As already shown, \(\pi _k = \Vert \nabla \varphi _k\Vert _\gamma ^2\). Considering the decomposition of \(\varphi _k\) in (19), it can then be seen that lines 14–25 of Algorithm 2 implement the projection on line 4 of Algorithm 1.

To conclude the proof, we note that Lemma 2 asserts that \({\mathcal {S}}\) is closed and convex, so all the results of Lemma 3 apply. \(\square \)

The next two lemmas concern the indices s(i, k) and l(i, k) defined in Sect. 2.

Lemma 7

Suppose Assumption 2(1) holds. For all iterations \(k\ge M\), if Algorithm 2 has not already terminated, then the updates may be written as

Proof

The proof follows from the definition of l(i, k) and s(i, k). After M iterations, all operators must have been in \(I_k\) at least once. Thus, after M iterations, every operator has been updated at least once using either the proximal step on lines 4–6 or the forward steps on lines 8–9 of Algorithm 2. Recall the variables defined to ease mathematical presentation, namely \(G_{n} = I\) and \(w_{n}^k\) defined in (14) and line 26. \(\square \)

We now derive some important properties of l(i, k). The following result was proved in Lemma 6 of [15] but since it is short we include the proof here.

Lemma 8

Under Assumption 2, \(k-l(i,k) < M+D\) for all \(i=1,\ldots ,n\) and iterations k.

Proof

From the definition, we know that \(0\le k-s(i,k) < M\). Part 2 of Assumption 2 ensures that \(s(i,k)-l(i,k)=s(i,k) - d(i,s(i,k))\le D\). Adding these two inequalities yields the desired result. \(\square \)

Lemma 9

Suppose Assumptions 1 and 2 hold and \(\pi _k>0\) for all \(k\ge M\). Then \(w_i^{l(i,k)}-w_i^k\rightarrow 0\) for all \(i=1,\ldots ,n\) and \(z^{l(i,k)}-z^k \rightarrow 0\).

Proof

For \(z^k\) and \(w_i^k\) for \(i=1,\ldots ,n-1\), the proof is identical to the proof of [15, Lemma 9]. For \(\{w_n^k\}\), we have from line 26 of the algorithm that

where final line uses Lemma 8. Since the operators \(G_i\) are bounded and Lemma 3(2) implies that \(w_i^{k+1}-w_i^k \rightarrow 0\) for all \(i=1,\ldots ,n-1\), we conclude that \(w_n^{l(n,k)}-w_n^k\rightarrow 0\). \(\square \)

Next, we define

Note that (27) simply expands the definition of the affine function in (18) and we may write \(\varphi _k(p^k) = \phi _k\).

Lemma 10

Suppose Assumptions 1 and 2 hold and \(\pi _k>0\) for all \(k\ge M\). Then \(\phi _{ik}-\psi _{ik}\rightarrow 0\) for all \(i=1,\ldots ,n\).

Proof

In view of Lemma 9, we may follow the same argument as given in [15, Lemma 12]. \(\square \)

4.4 Three technical lemmas

We now prove three technical lemmas which pave the way to establishing weak convergence of Algorithm 2 to a solution of (13). The first lemma upper bounds the norm of the gradient of \(\varphi _k\) at each iteration.

Lemma 11

Suppose Assumptions 1–4 hold. Suppose that \(\pi _k>0\) for all \(k\ge M\). Recall the affine function \(\varphi _k\) defined in (18). There exists \(\xi _1\ge 0\) such that \( \Vert \nabla \varphi _k\Vert _\gamma ^2 \le \xi _1 \) for all \(k \ge 1\).

Proof

For \(k< M\) the gradient can be trivially bounded by \(\max _{1\le k< M} \Vert \nabla \varphi _k\Vert _\gamma ^2\). Now fix any \(k \ge M\). Using Lemma 4,

Using Lemma 1, we begin by writing the second term on the right of (29) as

The linear operators \(G_i\) are bounded by Assumption 1. We now check the boundedness of sequences \(\{x_i^k\}\), \(i=1,\dots ,n\). For \(i\in {\mathcal {I}}_{{{\,\mathrm{B}\,}}}\), the boundedness of \(\{x_i^k\}\) follows from exactly the same argument as in [15, Lemma 10]. Now taking any \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\), we use the triangle inequality and Lemma 7 to obtain

Now the sequences \(\{\Vert z^k\Vert \}\) and \(\{\Vert w_i^k\Vert \}\) are bounded by Lemma 3, implying the boundedness of \(\{\Vert z^{l(i,k)}\Vert \}\) and \(\{\Vert w_i^{l(i,k)}\Vert \}\). Since \(\{z^{l(i,k)}\}\) is bounded, \(G_{i}\) is bounded, and \(T_i\) is Lipschitz continuous, \(\{T_iG_{i}z^{l(i,k)}\}\) is bounded. Finally, the stepsizes \(\rho _i^k\) are bounded by Assumption 3. Therefore, \(\{x_{i}^k\}\) is bounded for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\), and we may conclude that the second term in (29) is bounded.

We next consider the first term in (29). Rearranging the update equations for Algorithm 2 as given in Lemma 7, we may write

Using \(G_n = I\), the squared norm in the first term of (29) may be written as

In the above, (a) uses Lemma 1, while (b) is obtained by substituting (30)–(31) into the first squared norm and using \(y_i^k =T_i x_i^k\) for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) in the second, and then using Lemma 1 on both terms. Finally, (c) uses Lemma 1, the Lipschitz continuity of \(T_i\), and Assumption 3. For each \(i=1,\ldots ,n\), we have that \(G_i\) is a bounded operator, the sequences \(\{z^{l(i,k)}\}\), \(\{x_i^k\}\), and \(\{w_i^{l(i,k)}\}\) are already known to be bounded, \(\{\rho _i^{l(i,k)}\}\) is bounded by Assumption 3, and for \(i\in {\mathcal {I}}_{{{\,\mathrm{B}\,}}}\), \(\{e_i^{s(i,k)}\}\) is bounded by Asssumption 4. We conclude that the right hand side of (33) is bounded. Therefore, the first term in (29) is bounded and the sequence \(\{\nabla \varphi _k\}\) must be bounded. \(\square \)

The second technical lemma establishes a lower bound for the affine function \(\varphi _k\) evaluated at the current point which is similar to (24). This shows that the cut provided by the hyperplane is “deep enough” to guarantee weak convergence of the method. The lower bound applies to the quantity \(\psi _k\) defined in (28): this quantity is easier to analyze than \(\phi _k\) and Lemma 10 asserts that the difference between the two converges to zero.

Lemma 12

Suppose that Assumptions 1–4 hold. Suppose \(\pi _k>0\) for all \(k\ge M\). Then there exists \(\xi _2>0\) such that

Proof

For \(k\ge M\), we have

In the above derivation, (a) follows by substitution of (25) into the \({\mathcal {I}}_{{{\,\mathrm{B}\,}}}\) terms and algebraic manipulation of the \({\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) terms. Next, (b) follows by algebraic manipulation of the \({\mathcal {I}}_{{{\,\mathrm{B}\,}}}\) terms and substitution of (26) into the \({\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) terms. Finally, (c) is justified by using (21) in Assumption 4 and the Lipschitz continuity of \(T_i\) for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\).

Now consider any two sequences \(\{a_k\}\subset {\mathbb {R}}, \{b_k\} \subset {\mathbb {R}}_+\). We note that

Applying this fact to the expression in (34) yields the desired result with

and Assumption 3 guarantees that \(\xi _2>0\). \(\square \)

In the third technical lemma, we provide what is essentially a complementary lower bound for \(\psi _k\):

Lemma 13

Suppose Assumptions 1–4 hold. Suppose \(\pi _k>0\) for all \(k\ge M\). Then there exists \(\xi _3>0\) such that

Proof

For all \(k\ge M\), we have

In the above derivation, (a) follows by substition of (25) into the \({\mathcal {I}}_{{{\,\mathrm{B}\,}}}\) terms and algebraic manipulation of the \({\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) terms. Next (b) is obtained by algebraic simplification of the \({\mathcal {I}}_{{{\,\mathrm{B}\,}}}\) terms and substitution of (26) into the two groups of \({\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) terms. Finally, (c) is obtained by substituting the error criterion (22) from Assumption 4 for the \({\mathcal {I}}_{{{\,\mathrm{B}\,}}}\) terms and using the Lipschitz continuity of \(T_i\) for the \({\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) terms. Adding the last term in (37) to both sides yields

Assumption 4 requires that \(\sigma <1\) and Assumption 3 requires that \(\rho _i^k\ge {\underline{\rho }}>0\) for all i, so taking limits in the above inequality implies that (35) holds with \( \xi _3 = (1-\sigma ){\underline{\rho }}. \) \(\square \)

4.5 Proof of Theorem 1

We are now in a position to complete the proof. The assertion regarding termination at line 21 follows immediately from Lemma 5. For the remainder of the proof, we therefore consider only the case that the algorithm runs indefinitely and thus that \(\pi _k > 0\) for all \(k \ge M\).

The proof has three parts. The first part establishes that \(G_i z^k - x_i^k\rightarrow 0\) for all i and the second part proves that \(y_i^k - w_i^k\rightarrow 0\) for all i. Finally, the third part uses these results in conjunction with a result in [1] to show that any convergent subsequence of \(\{p^k\} = \{(z^k,\mathbf{w}^k)\}\) generated by the algorithm must converge to a point in \({\mathcal {S}}\), after which we may simply invoke Lemma 3.

\(\underline{\hbox {Part 1. Convergence of }G_i z^k - x_i^k\rightarrow 0}\)

Lemma 3(2) guarantees that \(p^k - p^{k+1} \rightarrow 0\), so it follows that

since \(\Vert \nabla \varphi _k\Vert _\gamma \le \sqrt{\xi _1}<\infty \) for all k by Lemma 11. Thus, \(\limsup _{k\rightarrow \infty } \phi _k\le 0\). Since Lemma 10 implies that \(\phi _k - \psi _k\rightarrow 0\), it follows that \(\limsup _{k\rightarrow \infty }\psi _k\le 0\). With (a) following from Lemma 12, we next obtain

Thus, \( G_i z^{l(i,k)}-x_i^k\rightarrow 0 \) for \(i=1,\ldots ,n\). Since \(z^k - z^{l(i,k)}\rightarrow 0\) and \(G_i\) is bounded, we obtain that \( G_iz^k - x_i^k\rightarrow 0 \) for \(i=1,\ldots ,n\).

\({\underline{\hbox {Part 2. Convergence of }y_i^k - w_i^k\rightarrow 0}}\)

From \(\limsup _{k\rightarrow \infty } \psi _k \le 0\) and \(G_iz^{l(i,k)} - x_i^k\rightarrow 0\), we obtain

Combining (38) with (35) in Lemma 13, we infer that

where the implications follow from Lemma 9, the Lipschitz continuity of \(T_i\) for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\), and the continuity of the linear operators \(G_{i}\). Finally, for each \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) and \(k\ge M\), we further reason that

Here, (a) uses (26) from Lemma 7, (b) uses the Lipschitz continuity of \(T_i\), and (c) relies on (39) and part 1 of this proof.

Part 3. Subsequential convergence

Consider any increasing sequence of indices \(\{q_k\}\) such that \((z^{q_k},\mathbf{w}^{q_k})\) weakly converges to some point \((z^\infty ,\mathbf{w}^\infty ) \in \varvec{{\mathcal {H}}}\). We claim that in any such situation, \((z^\infty ,\mathbf{w}^\infty )\in {\mathcal {S}}\).

By part 1, \(z^k - x^k_{n}\rightarrow 0\), so \(x_{n}^{q_k}\rightharpoonup z^\infty \). For any \(i=1,\ldots , n\), part 2 asserts that \(y_i^k-w^k_i\rightarrow 0\), so \(y^{q_k}_i\rightharpoonup w^\infty _i\). Furthermore, part 2, (14), and the boundedness of \(G_i\) imply that

Finally, part 1 and the boundedness of \(G_i\) yield

Next we apply [1, Proposition 2.4] with the following change of notation where “MM” stands for “maximal monotone” and “BL” stands for “bounded linear”:

We then conclude from [1, Proposition 2.4] that \((z^\infty ,\mathbf{w}^\infty )\in {\mathcal {S}}\), and the claim is established.

Invoking Lemma 3(3), we immediately conclude that \(\{(z^k,\mathbf{w}^k)\}\) converges weakly to some \(({{\bar{z}}},{\overline{\mathbf{w}}}) \in {\mathcal {S}}\). For each \(i=1,\ldots ,n\), we finally observe that since \(G_i z^k - x_i^k\rightarrow 0\) and \(y_i^k-w_i^k\rightarrow 0\), we also have \(x_i^k\rightharpoonup G_i {{\bar{z}}}\) and \(y_i^k\rightharpoonup {\overline{w}}_i\). \(\square \)

5 Extensions

5.1 Backtracking Linesearch

This section describes a backtracking linesearch procedure that may be used in the forward steps when the Lipschitz constant is unknown. The backtracking procedure is formalized in Algorithm 4, to be used in place of lines 8–9 of Algorithm 2.

We introduce the following notation: as suggested in line 8 of Algorithm 4, we set J(i, k) to be the number of iterations of the backtracking algorithm for operator \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) at outer iteration \(k\ge 1\); the subsequent theorem will show that J(i, k) can be upper bounded. As also suggested in line 8, we let \({\hat{\rho }}_i^{d(i,k)} = \rho _i^{(J(i,k),k)}\) for \(i \in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\cap {\mathrm {I}}_k\). When using the backtracking procedure for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\), it is important to note that the interpretation of \(\rho _i^{d(i,k)}\) changes: it is the initial trial stepsize value for the \(i^{\text {th}}\) operator at iteration k, and the actual stepsize used is \({\hat{\rho }}_i^{d(i,k)}\). When \(i\notin I_k\), we set \(J(i,k) = 0\) and \({\hat{\rho }}_i^{d(i,k)} = \rho _i^{d(i,k)}\).

Assumption 5

Lines 8–9 of Algorithm 2 are replaced with the procedure in Algorithm 4. Regarding stepsizes, we assume that

and either:

or

In words, (42) allows us to initialize the linesearch with a stepsize which is at least as large as the previously discovered stepsize, which is a common procedure in practice.

Theorem 2

Suppose that Assumptions 1, 2, 4, and 5 hold. Then all the conclusions of Theorem 1 follow. Specifically, either the algorithm terminates in a finite number of iterations at point in \({\mathcal {S}}\), or there exists \(({{\bar{z}}},\overline{\mathbf{w}})\in {\mathcal {S}}\) such that \((z^k,\mathbf{w}^k)\rightharpoonup ({{\bar{z}}},\overline{\mathbf{w}})\), \(x_i^k\rightharpoonup G_i {{\bar{z}}}\) and \(y_i^k\rightharpoonup {\overline{w}}_i\) for all \(i=1,\ldots ,n-1\), \(x_{n}^k\rightharpoonup {{\bar{z}}}\), and \(y_n^k\rightharpoonup -\sum _{i=1}^{n-1}G_i^* {\overline{w}}_i\),

Proof

The proof of finite termination at an optimal point follows as before, via Lemma 5. From now on, suppose \(\pi _k>0\) for all \(k\ge M\) implying that the algorithm runs indefinitely.

The proof proceeds along the following outline: first, we upper bound the number of iterations of the loop in Algorithm 4, implying that the stepsizes \({\hat{\rho }}_i^{d(i,k)}\) are bounded from above and below. We then argue that lemmas 6–10 hold as before. Then we show that lemmas 11–13 essentially still hold, but with different constants. The rest of the proof then proceeds identically to that of Theorem 1.

Regarding upper bounding the inner loop iterations, fix any \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\). For any \(k \ge 1\) such that \(i\in I_k\) and for any \(j\ge 1\), substituting the values just assigned to \(\theta _i^k\) and \(\zeta _i^k\) allows us to expand the forward step on line 5 of Algorithm 4 into

Following the arguments used to derive the \({\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) terms in (34), we have

Using that \(\rho _i^{(j,k)} = 2^{1-j}\rho _i^{d(i,k)}\), some elementary algebraic manipulations establish that once

one must have \( \varDelta \le \big (\rho _i^{(j,k)}\big )^{-1}-L_i, \) and by (43) the condition triggering the return statement in Algorithm 4 must be true. Therefore, for any \(k\ge 1\) we have

By the condition \({\overline{\rho }} < \infty \) in (40), we may now infer that \(\{J(i,k)\}_{k\in \mathbb {N}}\) is bounded. Furthermore, by substituting (44) into \({{\hat{\rho }}}_i^{d(i,k)} = 2^{1-J(i,k)}\rho _i^{d(i,k)}\), we may infer for all \(k\ge 1\) that

If (41) is enforced, then

On the other hand, if (42) is enforced, then for all k such that \(i\in {\mathcal {I}}_k\), we have

If \(k\notin {\mathcal {I}}_k\) then \({\hat{\rho }}_i^{d(i,k)} = \rho _i^{d(i,k)}\) and \(\rho _i^{d(i,k+1)}\ge \rho _i^{d(i,k)}\). Therefore, we may recurse (47) to yield

Finally since \({\hat{\rho }}_i^{d(i,k)}\le \rho _i^{d(i,k)}\le {\bar{\rho }}\) for all \(k \ge 1\), we must have

Since the choice of \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) was arbitrary, we know that \(\{{{\hat{\rho }}}_i^{d(i,k)}\}_{k\in \mathbb {N}}\) is bounded for all \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\), and the first phase of the proof is complete.

We now turn to lemmas 6–10. First, Lemma 6 still holds, since it remains true that \(y_i^k = T_i x_i^k\) for all \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) and \(k\ge M\). Next, a result like that of Lemma 7 holds, but with \(\rho _i^{l(i,k)}\) replaced by \({\hat{\rho }}_i^{l(i,k)}\) for all \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\). The arguments of Lemmas 8–10 remain completely unchanged.

Next we show that Lemma 11 holds with a different constant. The derivation leading up to (32) continues to apply if we incorporate the substitution in Lemma 7 specified in the previous paragraph. Therefore, we replace \(\rho _i^k\) by \({\hat{\rho }}_i^k\) in (32) for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\). Using (46)/(48) and the fact that \(\limsup _{k\rightarrow \infty } \{{\hat{\rho }}_i^{d(i,k)} \} \le {\bar{\rho }}\) we conclude that Lemma 11 still holds, with the constant \(\xi _1\) adjusted in light of (46)/(48).

Now we show that Lemma 12 holds with a different constant. For \(k\ge M\), we may use Lemma 7 and the termination criterion for Algorithm 4 to write

Here, the terms involving \({\mathcal {I}}_{{{\,\mathrm{B}\,}}}\) are dealt with the same way as before in Lemma 12. We conclude that Lemma 12 holds with \(\xi _2\) replaced by

Now we show that Lemma 13 holds with a different constant. The derivation up to (36) proceeds as before, but replacing \(\rho _i^{l(i,k)}\) with \({\hat{\rho }}_i^{l(i,k)}\) for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\). Using (46)–(48) and Assumption 4, it is clear that the conclusion of Lemma 13 follows with the constant \(\xi _3\) adjusted in light of (46)–(48).

Finally, the rest of the proof now follows in the same way as in the proof of Theorem 1. \(\square \)

5.2 Backtracking is unnecessary for affine operators

When \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) and \(T_i\) affine, it is not necessary to iteratively backtrack to find a valid stepsize. Instead, it is possible to directly solve for a stepsize \(\rho = \rho _i^{(j,k)}\) such that the condition on line 7 of Algorithm 4 is immediately satisfied. Thus, one can process an affine operator with only two forward steps, even without having estimated its Lipschitz constant.

From here on, we continue to use the notation \(\theta _i^k = G_i z^{d(i,k)}\) and \(\zeta _i^k = T_i\theta _i^k\) introduced in Algorithm 4. Fix \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) and suppose that \(T_i x = T_i^lx+c_i\) where \(c_i\in {\mathcal {H}}_i\) and \(T_i^l\) is linear. The loop termination condition on line 7 of Algorithm 4 may be written

Substituting the expressions for \({\tilde{x}}_i^{(j,k)}\) and \({\tilde{y}}_i^{(j,k)}\) from lines 5–6 of Algorithm 4 into the left-hand side of (49), replacing \(\rho _i^{(i,j)}\) with \(\rho \) for simplicity, and using the linearity of \(T_i^l\) yields

Substituting the expression for \({\tilde{x}}_i^{(i,j)}\) from line 5 of Algorithm 4, the right-hand side of (49) may be written

Substituting (50) and (51) into (49) and solving for \(\rho \) yields that the loop exit condition holds when

If \(\zeta _i^k - w_i^{d(i,k)} = 0\), then (52) is not defined, but in this case the step acceptance condition (49) holds trivially and lines 5–6 of the backtracking procedure yield \({\tilde{x}}_i^{(j,k)} = \theta _i^k\) and \({{\tilde{y}}}_i^{(j,k)} = \zeta _i^k\) for any stepsize \(\rho _i^{(j,k)}\).

We next show that \({\tilde{\rho }}_i^k\) as defined in (52) will behave in a bounded manner even as \(\zeta _i^k - w_i^{d(i,k)}\rightarrow 0\). Temporarily letting \(\xi = \zeta _i^k - w_i^{d(i,k)}\), we note that as long as \(\xi \ne 0\), we have

where the inclusion follows because \(T_i\) is monotone and thus \(T_i^l\) is positive semidefinite, and because \(T_i\) is \(L_i\)-Lipschitz continuous and therefore so is \(T_i^l\). Thus, choosing \({\tilde{\rho }}_i^k\) to take some arbitrary fixed value \({\bar{\rho }} > 0\) whenever \(\zeta _i^k - w_i^{d(i,k)}=0\), the sequence \(\{{\tilde{\rho }}_i^k\}\) is bounded from both above and below, and all of the arguments of Theorem 2 apply if we use \({\tilde{\rho }}_i^k\) in place of the results of the backtracking line search.

To calculate (52), one must compute \(\zeta _i^k = T_iG_i z^{d(i,k)}\) and \(T_i^l\big (\zeta _i^k - w_i^{d(i,k)}\big )\). Then \(x_i^k\) can be obtained via \(x_i^{k} = \theta _i^k - \rho \big (\zeta _i^k - w_i^{d(i,k)}\big )\) and

In total, this procedure requires one application of \(G_i\) and two of \(T_i^l\).

5.3 Greedy block selection

We now introduce a greedy block selection strategy which may be useful in some block-iterative implementations of Algorithm 2, such as Algorithm 3. In essence, this selection strategy provides a way to pick \(I_k\) at each iteration in Algorithm 3, and we have found it to improve performance on several empirical tests.

Consider Algorithm 3 with \(|I_k |= 1\) for all k (only one subproblem activated per iteration), and \(\beta _k = 1\) for all k (no overrelaxation of the projection step). Consider some particular iteration \(k \ge M\) and assume \(\Vert \nabla \varphi _k\Vert >0\) (otherwise the algorithm terminates at a solution). Ideally, one might like to maximize the length of the step \(p^{k+1} - p^k\) toward the solution set \({\mathcal {S}}\), and \(\left\| p^{k+1}-p^k\right\| _\gamma = \varphi _k(p^k)/\left\| \nabla \varphi _k\right\| _\gamma \).

Assuming that \(\beta _k=1\), the current point \(p^{k}\) computed on lines 24–25 of Algorithm 2 is the projection of \(p^{k-1}\) onto the halfspace \(\{p:\varphi _{k-1}(p)\le 0\}\). If \(p^{k-1}\) was not already in this halfspace, that is, \(\varphi _{k-1}(p^{k-1}) > 0\), then after the projection we have \(\varphi _{k-1}(p^k) = 0\).

Using the notation \(G_n = I\) and \(w_n^k\) defined in (14), \(\varphi _{k-1}(p^k) = 0\) is equivalent to

Suppose we select operator i to be processed next, that is, \(I_k=\{i\}\). After updating \((x_i^k,y_i^k)\), the corresponding term in the summation in (55) becomes bounded below by \(\xi \Vert G_i z^k - x_i^k\Vert ^2 \ge 0\), where \(\xi = (1-\sigma )/\rho _i^k\) for \(i\in {\mathcal {I}}_{{{\,\mathrm{B}\,}}}\), \(\xi = \varDelta \) for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) with backtracking, and \(\xi = {\bar{\rho }}_i^{-1} - L_i\) for \(i\in {\mathcal {I}}_{{{\,\mathrm{F}\,}}}\) without backtracking. In any case, processing operator i will cause the \(i^{\text {th}}\) term to become nonnegative while the other terms remain unchanged, so if we select an i with \(\langle G_i z^{k} - x_i^{k-1}, y_i^{k-1} - w_i^{k}\rangle < 0\), then the sum in (55) must increase by at least \(- \langle G_i z^{k} - x_i^{k-1}, y_i^{k-1} - w_i^{k}\rangle \), meaning that after processing subproblem i we will have

Choosing the i for which \(\langle G_i z^{k} - x_i^{k-1}, y_i^{k-1} - w_i^{k}\rangle \) is the most negative maximizes the above lower bound on \(\varphi _k(p^k)\) and would thus seem a promising heuristic for selecting i.

Note that this “greedy” procedure is only heuristic because it does not take into account the denominator in the projection operation, nor how much \(\langle G_i z^{k} - x_i^{k}, y_i^{k} - w_i^{k}\rangle \) might exceed zero after processing block i. Predicting this quantity for every block, however, might require essentially the same computation as evaluating a proximal or forward step for all blocks, after which we might as well update all blocks, that is, set \(I_k = \{1,\ldots ,n\}\).

In order to guarantee convergence under this block selection heuristic, we must include some safeguard to make sure that Assumption 2(1) holds. One straightforward option is as follows: if a block has not been processed for more than \(M>0\) iterations, we must process it immediately regardless of the value of \(\langle G_i z^{k} - x_i^{k-1}, y_i^{k-1} - w_i^{k}\rangle \).

5.4 Variable metrics

Looking at Lemmas 12 and 13, it can be seen that the update rules for \((x_i^k,y_i^k)\) can be abstracted. In fact any procedure that returns a pair \((x_i^k,y_i^k)\) in the graph of \(T_i\) satisfying, for some \(\xi _4>0\),

yields a convergent algorithm. As with lemmas 12 and 13, these inequalities need only hold in the limit.

An obvious way to make use of this abstraction is to introduce variable metrics. To simplify the following, we will ignore the error terms \(e_i^k\) and assume no delays, i.e. \(d(i,k)=k\). The updates on lines 4–6 and 7–9 of Algorithm 2 can be replaced with

where \(\{U_i^k:{\mathcal {H}}_i\rightarrow {\mathcal {H}}_i\}\) are a sequence of bounded linear self-adjoint operators such that

where \(0<\underline{\lambda },\overline{\lambda }<\infty \). In the finite dimensional case, (61) simply states that the eigenvalues of the set of matrices \(\{U_i^k\}\) can be uniformly bounded away from 0 and \(+\infty \). It can be shown that using (59)–(60) leads to the desired inequalities (56)–(58).

The new update (59) can be written as

It was shown in [11, Lemma 3.7] that this is a proximal step with respect to \(U_i^k T_i\) and that this operator is maximal monotone under an appropriate inner product. Thus the update (62) is single valued with full domain and hence well-defined. In the optimization context where \(T_i = \partial f_i\) for closed convex proper \(f_i\), solving (62) corresponds to the subproblem

where \(a = G_i z^{k}+\rho _i^{k}U_i^k w_i^{k}\). For the variable-metric forward step (60), the stepsize constraint (20) must be replaced by \(\rho _i^k < {1}/{\Vert U_i^k\Vert L_i}\).

6 Numerical experiments

We now present some preliminary numerical experiments with Algorithm 3, evaluating various strategies for selecting \(I_k\) and comparing efficiency of forward and (approximate) backward steps. All our numerical experiments were implemented in Python (using numpy and scipy) on an Intel Xeon workstation running Linux.

6.1 Rare feature selection

The work in [38] studies the problem of utilizing rare features in machine learning problems. In this context, a “rare feature” is one whose value is rarely nonzero, making it hard to estimate the corresponding model coefficients accurately. Despite this, such features can be highly informative, so the standard practice of discarding them is wasteful. The technique in [38] overcomes this difficulty by making use of an auxiliary tree data structure \({\mathcal {T}}\) describing feature relatedness. Each leaf of the tree is a feature and two features’ closeness on the tree measures how “related” they are. Closely related features can then be aggregated (summed) so that more samples are captured, increasing the accuracy of the coefficient estimate for a single coefficient for the aggregated features.

To formulate the resulting aggregation and fitting problem, [38] introduced the following generalized regression problem:

where \(\ell :\mathbb {R}^m\times \mathbb {R}^m \rightarrow \mathbb {R}\) is a loss function, \(X\in \mathbb {R}^{m\times d}\) is the data matrix, \(b\in \mathbb {R}^m\) is the target (response) vector, and \(\varvec{\beta }\in \mathbb {R}^d\) are the feature coefficients. Each \(\varvec{\gamma }_i\) is associated with a node of the similarity tree \({\mathcal {T}}\), and \(\varvec{\gamma }_{-r}\) denotes the subvector of \(\varvec{\gamma }\) corresponding to all nodes except the root node. The matrix \(H\in \mathbb {R}^{d\times |{\mathcal {T}}|}\) contains a 1 in positions i, j for those features i which correspond to a leaf of \({\mathcal {T}}\) that is descended from node j, and elsewhere contains zeroes. Due to the constraint \(\varvec{\beta }= H\varvec{\gamma }\), the coefficient \(\varvec{\gamma }_j\) of each tree node j contributes additively to the coefficient \(\varvec{\beta }_i\) of each feature descended from j. H thus fuses coefficients together in the following way: if \(\varvec{\gamma }_i\) is nonzero for a node i and all descendants of \(\varvec{\gamma }_i\) in \({\mathcal {T}}\) are 0, then all coefficients on the leaves which are descendant from \(\varvec{\gamma }_i\) are equal (see [38, Sec. 3.2] for more details). The \(\ell _1\) norm on \(\varvec{\gamma }\) enforces sparsity of \(\varvec{\gamma }\), which in turn fuses together coefficients in \(\varvec{\beta }\) associated with similar features. The \(\ell _1\) norm on \(\varvec{\beta }\) itself additionally enforces sparsity on these coefficients, which is also desirable. The model can allow for an offset variable by incorporating columns/rows of 1’s and 0’s in X and H, but for simplicity we omit the details.

6.2 TripAdvisor reviews

We apply this model to a dataset of TripAdvisor reviews of hotels from [38]. The response variable was the overall review of the hotel in the set \(\{1,2,3,4,5\}\). The features were the counts of certain adjectives in the review. Many adjectives were very rare, with \(95\%\) of the adjectives appearing in fewer than \(5\%\) of the reviews. The authors of [38] constructed a similarity tree using information from word embeddings and emotion lexicon labels; there are 7573 adjectives from the \(209,\!987\) reviews and the tree \({\mathcal {T}}\) had \(15,\!145\) nodes. A test set of \(40,\!000\) examples was withheld, leaving a sparse \(169,\!987\times 7573\) matrix X having only \(0.32\%\) nonzero entries. The \(7,\! 573\times 15,\!145 \) matrix H arising from the similarity tree \({\mathcal {T}}\) is also sparse, having \(0.15\%\) nonzero entries. In our implementation, we used the sparse matrix package sparse in scipy.

In [38], the elements of b are the review ratings and the loss function is given by the standard least-squares formula \(\ell (X\varvec{\beta },b)=(1/2m)\Vert X\varvec{\beta }-b\Vert _2^2.\) To emphasize the advantages of our new forward-step version of projective splitting over previous backward-step versions, we instead use the same data and regularizers to construct a classification problem with the logistic loss. We assigned the \(73,\! 987\) reviews with a rating of 5 a value of \(b_i=+1\), while we labeled the \(96,\!000\) reviews with value 4 or less with \(b_i=-1\). The loss is then

where \(x_j\) is the jth row of X. The classification problem is then to predict which reviews are associated with a rating of 5.

In practice, one typically would solve (63) for many values of \((\alpha ,\lambda )\) and then choose the final model based on cross validation. To assess the computational performance of the tested methods, we solve three representative examples corresponding to sparse, medium, and dense solutions. The corresponding values for \(\lambda \) were \(\{10^{-8},10^{-6},10^{-4}\}\). In preliminary experiements, we found that the value of \(\alpha \) had little effect on algorithm performance, so we fixed \(\alpha =0.5\) for simplicity.

6.3 Applying projective splitting

The work in [38] solves the problem (63), with \(\ell \) set to the least-squares loss, using a specialized application of the ADMM. The implementation involves precomputing the singular value decompositions (SVDs) of the (large) matrices X and H, and so does not fall within the scope of standard first-order methods. Instead, we solve (63) with the logistic loss by simply eliminating \(\varvec{\beta }\), so that the formulation becomes

To utilize block-iterative updates in Algorithm 3, we split up the loss function as follows: Let \({\mathcal {R}}= \{R_1,..,R_P\}\) be an arbitrary partition of \(\{1,\ldots ,m\}\). For \(i=1,\ldots ,P\), let \(X_i\in \mathbb {R}^{|R_i|\times d}\) be the submatrix of X with rows corresponding to indices in \(R_i\) and similarly let \(b^i\in \mathbb {R}^{|R_i|}\) be the corresponding subvector of b. Then (65) is equivalent to

There are several ways to formulate this problem as a special case of (2), leading to different realizations of Algorithm 3. The approach that we found to give the best empirical performance was to set \(n=P+3\) and

where

and the last element of \(\varvec{\gamma }\), \(\varvec{\gamma }_{|{\mathcal {T}}|}\), is the root of the tree. We append the trivial function \(f_n = 0\) in order to comply with the requirement that the final linear operator \(G_n\) be the identity; see (13). The functions \(f_{n-2}\) and \(f_{n-1}\) have easily-computed proximal operators, so we process them at every iteration. Further, the proximal operator of \(f_n\) has is simply the identity, so we also process it at each iteration. Therefore, \(\{n-2,n-1,n\}\subseteq I_k\) for all \(k\ge 1\). On the other hand, the functions \(f_i(t)\) for \(i=1,\ldots ,P\) are

where \(x_{ij}\) is the jth row of the submatrix \(X_i\) and \(b_j^i\) is the jth element of \(b^i\). These functions are Lipschitz differentiable and so may be processed by our new forward-step procedure. We use the backtracking procedure in Sect. 5.1 so that we do not need to estimate the Lipschitz constant of each \(\ell _i\), a potentially costly computation involving the SVD of each \(X_i\). The most time-consuming part of each gradient evaluation are two matrix multiplications, one by \(X_i\) and one by \(X_i^\top \). We will refer to the approach of setting \({\mathcal {I}}_{{{\,\mathrm{F}\,}}}= \{1,\ldots ,P\}\) and using backtracking as “Projective Splitting with Forward Steps” (psf).

On the other hand, even though the proximal operators of \(f_1,\ldots ,f_{P}\) lack a closed form, it is still possible to process these functions with an approximate backward step. The exact proximal map for \(f_i\) is the solution to

This is an unconstrained nonlinear convex program and there are many different ways one could approximately solve it. Since we are interested in scalable first-order approaches, we chose the L-BFGS method—see for example [30]—which has small memory footprint and only requires gradient and function evaluations. So, we choose some \(\sigma \in [0,1)\) and apply L-BFGS to solve (67) until the relative error criteria (21) and (22) are met.

For a given candidate solution \(x_{i}^k\), we have \(y_{i}^k = \nabla \ell (X_i x_i^k,b_i)\), and the error can be explicitly computed as \(e_{i}^k = x^k_{i} +\rho _{i} y^k_{i} -(H z^{k}+\rho _{i} w_{i}^{k})\). Every iteration of L-BFGS requires at least one gradient and function evaluation, which in turn requires two matrix multiplies, one by \(X_i\) and one by \(X_i^\top \). We “warm-start” L-BFGS by initializing it at \(x^{k-1}_i\). We will refer to this approach as “Projective Splitting with Backward Steps” (psb).

The coordination procedure (lines 12–26) is the same for psf and psb, requiring two multiplies by H, two by \(H^\top \), vector additions, inner products, and scalar multiplications.

We tried \(P=1\) and \(P=10\), with each block chosen to have the same number of elements (to within P, since m is not divisible by P) of contiguous rows from X. At each iteration, we selected one block from among \(1,\ldots ,P\) for a forward step in psf or backward step with L-BFGS in psb, and blocks \(P+1\), \(P+2\), and \(P+3\) for backward steps. Thus, \(I_k\) always has the form \(\{i,P+1,P+2,P+3\}\), with \(1 \le i\le P\). To select this i, we tested three strategies: the greedy block selection scheme described in Sect. 5.3, choosing blocks at random, and cycling through the blocks in a round-robin fashion. For the greedy scheme, we did not use the safeguard parameter M as in practice we found that every block was updated fairly regularly.

We refer to the greedy variants with \(P=10\) blocks as psf-g and psb-g, those with randomly selected blocks as psf-r and psb-r, and those with cyclically selected blocks as psf-c and psb-c. Finally, the versions with \(P=1\) are referred to as psf-1 and psb-1.

6.4 The competition

To compare with our proposed methods, we restricted our attention to algorithms with comparable features and benefits. In particular, we only considered first-order methods which do not requre computing Lipschitz constants of gradients and matrices. Very few such methods apply to (65). The presence of the matrix H in the term \(\Vert H\varvec{\gamma }\Vert _1\) makes it difficult to apply Davis-Yin three-operator splitting [14] and related methods [31], since the proximal operator of this function cannot be computed in a simple way. We compared our projective splitting methods with the following methods:

-

The backtracking linesearch variant of the Chambolle-Pock primal-dual splitting method [26], which we refer to as cp-bt.

-

The algorithm of [10]. This approach is based on the “monotone + skew” inclusion formulation obtained by first defining the monotone operators

$$\begin{aligned} T_1(\varvec{\beta })&= \lambda (1-\alpha )\partial \Vert \varvec{\beta }\Vert _1&T_2(\varvec{\gamma })&=\lambda \alpha \partial \Vert \varvec{\gamma }_{-r}\Vert _1&T_3(\varvec{\gamma })&= \nabla _{\varvec{\gamma }}\big [\ell (XH\varvec{\gamma },b)\big ], \end{aligned}$$and then formulating the problem as \(0\in \tilde{A}(z,w_1,w_2) + \tilde{B}(z,w_1,w_2)\), where \(\tilde{A}\) and \(\tilde{B}\) are defined by

$$\begin{aligned} \tilde{A}(z,w_1,w_2)&= \{0\} \times T_1^{-1}w_1 \times T_{2}^{-1}w_{2} \end{aligned}$$(68)$$\begin{aligned} \tilde{B}(z,w_1,w_2)&= \left[ \begin{array}{c} T_3(z)\\ 0\\ 0 \end{array} \right] + \left[ \begin{array}{ccc} 0&{}H^{\top }&{}I\\ -H&{}0&{} 0\\ -I &{} 0 &{} 0 \end{array} \right] \left[ \begin{array}{c} z\\ w_1\\ w_{2} \end{array} \right] . \end{aligned}$$(69)\(\tilde{A}\) is maximal monotone, while \(\tilde{B}\) is the sum of two Lipshitz monotone operators (the second being skew linear), and therefore is also Lipschitz monotone. The algorithm in [10] is essentially Tseng’s forward–backward–forward method [35] applied to this inclusion, using resolvent steps for \(\tilde{A}\) and forward steps for \(\tilde{B}\). Thus, we call this method tseng-pd. In order to achieve good performance with tseng-pd we had to incorporate a diagonal preconditioner as proposed in [37]. We used the following preconditioner:

$$\begin{aligned} U = \text {diag}(I_{d\times d},\gamma _{pd} I_{d\times d},\gamma _{pd} I_{d\times d}) \end{aligned}$$(70)where U is used as in [37, Eq. (3.2)] for tseng-pd.

-

The recently proposed forward-reflected-backward method [27], applied to this same primal-dual inclusion \(0\in \tilde{A}(z,w_1,w_2) + \tilde{B}(z,w_1,w_2)\) specified by (68)–(69). We call this method frb-pd. For this method, we used the same preconditioner given in (70), used as \(M^{-1}\) on [27, p. 7].

6.5 Algorithm parameter selection

For psf, we used the backtracking procedure of Sect. 5.1 with \(\varDelta =1\) to determine \(\rho _1^k,\ldots ,\rho _{n-3}^k\). For the stepsizes associated with the regularizers, we simply set \(\rho _{n-2}^k=\rho _{n-1}^k=\rho _n^k=1\). For backtracking in all methods, we set the trial stepsize equal to the previously discovered stepsize.

For psb, we used \(\rho _1^k=\ldots =\rho _{n}^k=1\) for simplicity. For the L-BFGS procedure in psb, we set the history parameter to be 10 (i.e. the past 10 variables and gradients were used to approximate the Hessian). We used a Wolfe linesearch with \(C_1=10^{-4}\) and \(C_2=0.9\).

Each tested method then had one additional tuning parameter: \(\beta \) given in line 2.a of Algorithm 4 of [26] for cp-bt, \(\gamma _{pd}\) given in (70) for tseng-pd and frb-pd, and \(\gamma \) for psf and psb. The values we used are given in Table 1. These values were chosen by running each method for 2000 iterations and picking the tuning parameter from \(\{10^{-6},10^{-5},\ldots ,10^5,10^6\}\) giving the smallest final function value. We then ran a longer experiment (about 10 minutes) for each method, using the chosen tuning parameter. The greedy, random, cyclic, and 1-block variants of psf and psb all used the same tuning parameter values.

6.6 Results

In Fig. 2 we plot the objective function values against elapsed wall-clock running time, excluding time to compute the plotted function values. For psf and psb, we computed function values for the primal variable \(z^k\). For cp-bt, we computed the objective at \(y^{k}\) as given in [26, Algorithm 4]. For tseng-pd and frb-pd, we computed the objective values for the primal iterate corresponding to z in (68)–(69).