Abstract

We present an improved algorithm for finding exact solutions to Max-Cut and the related binary quadratic programming problem, both classic problems of combinatorial optimization. The algorithm uses a branch-(and-cut-)and-bound paradigm, using standard valid inequalities and nonstandard semidefinite bounds. More specifically, we add a quadratic regularization term to the strengthened semidefinite relaxation in order to use a quasi-Newton method to compute the bounds. The ratio of the tightness of the bounds to the time required to compute them depends on two real parameters; we show how adjusting these parameters and the set of strengthening inequalities gives us a very efficient bounding procedure. Embedding our bounding procedure in a generic branch-and-bound platform, we get a competitive algorithm: extensive experiments show that our algorithm dominates the best existing method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Maximizing a quadratic function over the vertices of an hypercube is an important problem of discrete optimization. As far as formulation is concerned, it is the simplest problem of nonlinear (mixed-)integer programming. However, this problem is NP-hard [27] and it is considered a computational challenge to be solved to optimality, even for instances of moderate size.

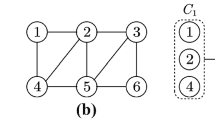

The Max-Cut problem is one of the most famous NP-hard problems of this type, as is evidenced by its consideration in such seminal works as [16] and [12], and in the survey chapter [25] of the recent handbook [2]. Given a graph \(G = (V,E)\) with edge weights \(w_{ij}\) for \(ij \in E\) and \(w_{ij} = 0\) for \(ij \not \in E\), Max-Cut is the problem of finding a bipartition of the nodes \(V\) such that the sum of the weights of the edges across the bipartition is maximized. Let \(n = |V|\) be the cardinality of \(V\); we can state Max-Cut as

We can rewrite the problem of Max-Cut as

where the matrix \(Q\) is defined as \(Q := \frac{1}{4}L\), and \(L\) is the Laplacian matrix of the weighted graph \(G\); see, e.g., [3].

There have been many methods for finding exact solutions of Max-Cut using semidefinite programming (SDP), including early efforts of [14], the QCR method [4], and most recently the state-of-the-art Biq Mac method [29]. This paper builds on this line of research: we present an improved branch-and-bound algorithm inspired by the above mentioned approaches and introducing new techniques. As shown by the extensive numerical experiments of this paper, our new algorithm dominates the best existing methods.

The algorithm uses a branch-and-(cut-and)-bound paradigm, using the standard valid triangle inequalities together with nonstandard semidefinite bounds. Bounds of the same type have been used recently in several papers (for general binary quadratic problems [20, 21] and specifically for the \(k\)-cluster problem [22]). More precisely, we apply here the bounds to the Max-Cut problem that have been introduced in [21] for general quadratic optimization problems with quadratic and linear constraints; however, we provide a different motivation for these bounds, looking at them from a quadratic penalty point of view (see Sect. 3). This reveals the key role of two positive parameters, \(\alpha \) and \(\varepsilon \), controlling the tightness of the bound.

Our main contribution is an improved bounding procedure obtained by reducing the \(\alpha \) and \(\varepsilon \) parameters to zero and iteratively adding triangle inequality cuts (see Sect. 4). We show that our bounding procedure converges to the classic reinforced semidefinite bound for the Max-Cut problem, in an efficient and robust way.

We embed our bounding procedure within a generic branch-and-bound platform for solving Max-Cut to optimality (see Sect. 5). We compare the overall algorithm with the best existing exact resolution method, the Biq Mac solver [29] on the 328 instances in the Biq Mac Library [31] with size \(n < 500\). To prove unambiguously that the new bounding procedure is of high practical value, we make a fair and complete computational study between the two codes: we have compiled and run both our code and the Biq Mac code (kindly provided by the authors of Biq Mac) on the same machine, and have used the same libraries and compilation flags for both codes.

We finish this section with a remark about our choice to focus on Max-Cut for presenting our approach. With the help of classic reformulation techniques, more general binary quadratic optimization problems can be recast as Max-Cut. We could, for example, add a linear term to the objective in problem (2), or use variables in \(\{0,1\}\). For instance, the binary homogeneous quadratic optimization problem

can be reformulated as Max-Cut by considering a change of variable and by increasing the size of the problem by one; see, e.g., [14, Section 2]. Therefore, to keep the presentation as simple as possible, we only present our developments for the Max-Cut problem (1). On the other hand, we consider both Max-Cut problems and binary quadratic optimization problems in our numerical experiments.

2 Semidefinite relaxations and Biq Mac

In this section, we introduce notation, briefly recall the classic semidefinite relaxation of Max-Cut (see, e.g., [19, 28]), and provide a sketch of the Biq Mac method [29], the method to which we will compare.

Semidefinite formulation and relaxation. The inner product of two matrices \(X\) and \(Y\) is defined and denoted by \(\left\langle X, Y \right\rangle =\,\text{ trace}\,(X^TY)\). The notation \(X \succeq 0\) means that \(X\) is symmetric positive semidefinite. Introducing the symmetric positive semidefinite rank-one matrix \(X=xx^T\), we observe that we can write the Max-Cut problem as

where \(\,\text{ diag}(X)\) is the vector of the diagonal entries of the matrix \(X\), and the vector of all ones is represented by \(e\)—for simplicity, we allow the size of the vector \(e\) to be determined from the context. The classic semidefinite relaxation is obtained by dropping the nonconvex rank-one constraint in problem (4):

Since the feasible set of problem (5) is strictly larger than that of problem (4), we can easily see that the optimal value of problem (5) provides an upper bound on the weight of the optimal cut:

There exist some theoretical results quantifying the tightness of the above inequality (see, in particular, the seminal work [12]). However, in practice, the ratio of the tightness of the bound to the time needed to compute the bound is too low to allow this bound be used efficiently in branch-and-bound schemes to solve Max-Cut problems to optimality (see, e.g., [14, 29, 30]).

Strengthening inequalities. In order to improve the performance of the bound, it is necessary to tighten the bounds by adding valid inequalities to the SDP relaxation (5). The most popular class of inequalities are the triangle inequalities, defined for \(1 \le i < j < k \le n\) by

(see [14, Section 4] and [29, Section 3.2]). They represent the fact that for any \(3\)-cycle of vertices in the graph, we can only have an even number of edges cut (i.e., either no edges are cut, or exactly two edges are cut). There are

triangle inequalities in total. Adding any subset of those valid inequalities can strengthen the SDP bound (5). In particular, let \({I}\) be a subset of the triangle inequalities, and \({A}_{I}:\mathbb S ^n \rightarrow \mathbb R ^{|{I}|}\) be the corresponding linear operator describing the inequalities in this subset. We define the \((\mathrm{SDP}_{I})\) problem as

Adding such valid constraints gives an improved bound on the value of the maximum cut:

Note that the dual of problem (6) is given by

where \(\,\text{ Diag}(y)\) is the diagonal matrix with the vector \(y\) along its diagonal, and \({A}_{I}^*\) is the adjoint linear operator of \({A}_{I}\).

Let us call \((\mathrm{SDP}_{{I}_\mathrm{all}})\) the problem with all the triangle inequalities added, which gives the best possible \((\mathrm{SDP}_{I})\) bound. Unfortunately, since there is a very large number of triangle inequalities, the \((\mathrm{SDP}_{{I}_\mathrm{all}})\) problem is intractable even for moderate values of \(n\). However, we can get this bound by using only the inequalities that are active at an optimal solution of \((\mathrm{SDP}_{{I}_\mathrm{all}})\). Such a set of active inequalities is obviously unknown, but we will see in Sect. 4 how we approximate it (see Algorithm 1 and Theorem 1). For the moment, we just note that in practice we have to select a (small) number of promising inequalities.

In addition to the triangle inequalities, other cutting planes could be used to tighten the relaxation: hypermetric inequalities [14], general gap inequalities [18], or even a sophisticated choice based on the objective function [9]. The nature of the valid inequalities has no impact on the theoretical developments of the next section; we will deal with a general set of inequalities \({I}\). However, in the computational experiments, as is done in [29], we only use triangle inequalities as it greatly simplifies the selection of inequalities and leads to satisfactory results.

Best existing method. Biq Mac [29, 30] is currently the best solver for solving Max-Cut problems to optimality. This method uses a branch-and-bound approach and solves the strengthened SDP relaxation \((\mathrm{SDP}_{I})\) in Eq. (6) with a nonsmooth optimization algorithm. By dualizing the triangle inequality constraints in problem (6), the authors of the Biq Mac solver obtain the nonsmooth convex dual function

where \(z\in \mathbb R ^{|{I}|}_+\) is the dual variable. The value \(\theta _{I}(z)\) provides an upper bound on the value of the maximum cut for each \(z \in \mathbb R ^{|{I}|}_+\); the goal is to minimize \(\theta _{I}(z)\) to obtain the tightest such upper bound. The two main ingredients of the bounding procedure of Biq Mac are as follows:

-

1.

the function value \(\theta _{I}(z)\) and a subgradient \(g \in \partial \theta _{I}(z)\) are evaluated by solving the semidefinite programming problem in Eq. (9) by a customized primal-dual interior-point method;

-

2.

the nonsmooth convex function \(\theta _{I}\) is minimized by a bundle method [15].

The extensive numerical experiments of [29, 30] show that Biq Mac dominates all other approaches (see the six tested approaches in [29, Section 7]).

3 Our semidefinite bounds

We present the family of semidefinite bounds that we will use in our branch-and-bound method for solving Max-Cut to optimality. By construction, these bounds are less tight than corresponding usual SDP bounds (see Sect. 3.1). On the other hand, Sect. 3.2 presents their useful properties that will lead us to the bounding procedure of the next section (Algorithm 1) whose bounds converge to the usual SDP bounds in the limit.

First we need some more notation. For any matrix \(A\), we denote by \(\left\Vert A \right\Vert_F\) the Frobenius norm of \(A\), which is defined by \(\left\Vert A \right\Vert_F = \sqrt{\left\langle A, A \right\rangle }\). For a real number \(a\), we denote its nonnegative part by \(a_+=\max \{a,0\}\). We extend this definition to vectors as follows: if \(x \in \mathbb R ^n\), then \((x_+)_i = (x_i)_+\), for \(i = 1,\ldots ,n\). For a given symmetric matrix \(A\), we denote its positive semidefinite part by \(A_ +\); more specifically, if the eigendecomposition of A is given by \(A=U\,\text{ Diag}(\lambda )U^T\), with the eigenvalues \(\lambda \in \mathbb R ^n\), and orthogonal matrix \(U \in \mathbb R ^{n \times n}\), then we have

We denote similarly \(a_-, x_-\), and \(A_-\).

3.1 The family of semidefinite bounds

We begin with the following simple fact.

Lemma 1

Let \(0\le \varepsilon \le 1\) and \(X \succeq 0\) such that all the diagonal entries \(X_{ii}\) lie in \([1-\varepsilon , 1+ \varepsilon ]\). Then \((1-\varepsilon )^2n \le \left\Vert X \right\Vert_F^2 \le (1+ \varepsilon )^2n^2\).

Proof

Let \(X \succeq 0\) as defined above. We have

Now take \((i,j)\) and extract the submatrix whose determinant is nonnegative

Thus \(X_{ij}^2\le X_{ii}X_{jj} \le (1+\varepsilon )^2\). Also, for each \(i\), we have \(X_{ii}^2 \le (1+\varepsilon )^2\), so that \(\left\Vert X \right\Vert_F^2 = \sum _{ij} X_{ij}^2 \le (1+\varepsilon )^2n^2\). \(\square \)

A particular case of the above result is when \(X\) is feasible in \((SDP_{I})\): we have \(\,\text{ diag}(X)=e\), so we take \(\varepsilon =0\) in Lemma 1 to get

Adding a multiple of this nonnegative term to the objective function, we obtain the regularized problem

Proposition1

The following holds: for all \({I}\) and for all \(\alpha \ge 0\),

If \(\alpha = 0\), the second inequality is an equality. If \(\alpha >0\), the two inequalities are equalities if and only if there exists a rank-one optimal solution of \((\mathrm{SDP}_{I})\) or \((\mathrm{SDP}^\alpha _{I})\). Moreover, we have

and

Proof

Inequality (13) clearly holds since the feasible set of \((\mathrm{SDP}_{I}^{\alpha })\) is included in that of \((\mathrm{SDP}_{{I}^{\prime }}^{\alpha })\). We now prove (12), from which we can deduce the remaining easily. For any feasible matrix \(X\) in problem (10), we have \(n^2-\left\Vert X \right\Vert_F^2\ge 0\). Therefore \(\alpha ^{\prime }\le \alpha \) yields

which gives \((\mathrm{SDP}_{I}^{\alpha ^{\prime }}) \le (\mathrm{SDP}_{I}^{\alpha })\). Taking now \(\alpha ^{\prime }=0\) gives the second inequality in (11); the first inequality comes from (7). For the case of equality, note first from problem (4) that \((\mathrm{MC}) = (\mathrm{SDP}_{I})\) if and only if there is a rank-one optimal solution of \((\mathrm{SDP}_{I})\). Finally, we use a corollary of [20, Theorem 1] which states that if \(X \succeq 0\) and \(\,\text{ diag}(X) = e\), then \(\left\Vert X \right\Vert_F = n\) if and only if \(\,\text{ rank}\,(X)=1\), completing the proof. \(\square \)

This proposition says, first, that the bound \((\mathrm{SDP}_{I}^\alpha )\) is strictly weaker than \((\mathrm{SDP}_{I})\) (except in the very special situation that \((\mathrm{SDP}_{I})\) has a rank-one optimal solution), and, second, that making \(\alpha \) smaller reduces the difference. We will come back to this second point when describing our algorithm where we gradually decrease \(\alpha \).

Let us now fix \(\alpha \) and \({I}\), and let us dualize the affine constraints of \((\mathrm{SDP}_{I}^ \alpha )\). We define the Lagrangian function with respect to the primal variable \(X\succeq 0\) and the dual variables \(y \in \mathbb R ^n\) and \(z\in \mathbb R _+^{|{I}|}\) as

The associated dual function is then defined by

By weak duality, we have an upper bound on \((\mathrm{MC})\) for given \(({I},\alpha ,y,z)\) with \(\alpha \ge 0\) and \(z \ge 0\). More precisely, we have

These \(F_{I}^\alpha (y,z)\) for given \(({I},\alpha ,y,z)\) are the bounds that we are going to use to solve Max-Cut to optimality; we study them in the next section.

3.2 Mathematical study of the bounds \(F_{I}^\alpha (y,z)\)

This first result gives us a useful explicit expression of the bound \(F_{I}^\alpha (y,z)\) for \(\alpha > 0\). Let us consider the positive semidefinite matrix

Proposition 2

Let \(\alpha > 0\) and let \({I}\) be a set of inequalities. Then, for \(F_{I}^\alpha \) and \(X_{I}\) defined in Eqs. (14) and (16), respectively, we have

Proof

Let \(y\) and \(z\) be given, and let \(M := Q-\,\text{ Diag}(y)+{A}_{I}^*(z)\) and \(f(X) := \left\langle M, X \right\rangle - \frac{\alpha }{2}\left\Vert X \right\Vert_F^2\). Then we have by Eq. (14) that

Since \(\alpha > 0\), we have that \(\max _{X \succeq 0} f(X)\) is a convex optimization problem. The dual problem of \(\max _{X \succeq 0} f(X)\) is given by

Since \(\max _{X \succeq 0} f(X)\) is strictly feasible, strong duality holds (see, e.g., [7]); therefore,

Let \(S \succeq 0\) be fixed. Since \(\nabla f(X) = M - \alpha X\), the maximum of \(f(X) + \left\langle S, X \right\rangle \) over all \(X\) is attained when \(X = \frac{1}{\alpha }(M+S)\). Therefore,

Simplifying the expression on the right-hand-side, we have

Therefore,

This last problem asks what is the nearest positive semidefinite matrix \(S\) to the matrix \(-M\). The answer to this question is \(S = (-M)_+\). Furthermore, it is not hard to show that \(M + (-M)_+ = M_+\), so we have that

Substituting this back into Eq. (18), we have the result in Eq. (17). \(\square \)

This proposition makes it clear that the bounds that we consider here correspond (up to a change of sign and notation) to the ones introduced in [21] for general 0-1 quadratic optimization problems (see \(\Theta (\lambda ,\mu ,\alpha )\) in Theorem 2 of [21]). The introduction of the bounds we give here is different though: it is more direct, as it does not rely on the reformulation of the initial problem with the so-called matrix spherical constraint (see [20]).

Fixing \(\alpha \) and \({I}\), we obtain the best bound \(F_{I}^\alpha (y,z)\) when \((y,z)\) is an optimal solution of the problem

which is the dual problem of \((\mathrm{SDP}_{I}^\alpha )\).

Observe that when \(\alpha =0\) the dual function has the usual expression

which leads us back to the dual problem (8). We see that the condition

is exactly \(X_{I}(y,z)=0\), which opens another view on the approach we consider. Indeed, from Eq. (17) the function \(F_{I}^{\alpha }(y,z)\) can be viewed as a penalization of the standard dual function \(F_{I}^0(y,z)\). The parameter \(\alpha \) is interpreted as a penalization parameter controlling the loss in the tightness of the bound.

In view of solving problem (19), the next proposition is crucial.

Proposition 3

Let \(\alpha > 0\). Then the dual function \(F_{I}^\alpha \) is convex and differentiable, with partial gradients given by

Proof

Let \(f :\mathbb R ^n \rightarrow \mathbb R \) be defined by \(f(x) = \frac{1}{2}\left\Vert x_+ \right\Vert_2^2\). Then \(f\) is a convex and differentiable function invariant under permutations of the entries of \(x\). The gradient of \(f\) is \(\nabla f(x) = x_+\). From [6, Sect. 5.2], we have that the function \(g :\mathbb S ^n \rightarrow \mathbb R \) defined by \(g(X) = f(\lambda (X))\) is convex and differentiable with gradient

where \(U\) is any \(n \times n\) orthogonal matrix satisfying \(X = U \,\text{ Diag}(\lambda (X)) U^T\); thus \(\nabla g(X) = X_+\). Now note that

so we immediately have that \(F_{I}^\alpha \) is convex and differentiable. Now we simply apply the chain rule, \(\nabla _x \left[ g({M}x) \right] = {M}^* \nabla g({M}x)\), where \({M} :\mathbb R ^p \rightarrow \mathbb S ^n\) is any linear operator, to get the desired result. \(\square \)

As expected, this proposition is in the same vein as [21, Theorem 2] establishing differentiability results for the corresponding bounds. The proof given here is original though, using the differentiability of spectral functions as a direct argument.

The final proposition considers what could happen to the value of the dual function \(F_{I}^\alpha \) when reducing the value of \(\alpha \) with fixed variables \(y\) and \(z\).

Proposition 4

Let \(0\!\!\le \!\! \varepsilon \!\!\le \!\! 1\) and \(0 \!\!<\!\! \alpha ^{\prime } \!\!<\!\! \alpha \). Let \((y, z)\) such that \(\left\Vert \nabla _y F_{I}^\alpha (y,z) \right\Vert_\infty \!\!\le \!\! \varepsilon \). Then

and

Proof

We have

We apply Lemma 1 to \(X = \frac{1}{\alpha } X_{I}( y, z)\), which satisfies \(\max _i|X_{ii}-1|\le \varepsilon \) by Proposition 3 and the assumption \(\left\Vert \nabla _y F_{I}^\alpha (y,z) \right\Vert_\infty \le \varepsilon \). This gives

We deduce the first desired bound, as

Using the other inequality, we get in a similar way the other bound. \(\square \)

This result tells us that \(F_{I}^{\alpha ^{\prime }}(y,z)\) may increase over \(F_{I}^{\alpha }(y,z)\), but not much if \(\alpha -\alpha ^{\prime }\) is small enough and \(\alpha ^{\prime }\) is not too small. This gives the intuition that we should not decrease \(\alpha \) too much or too quickly. In our numerical experiments, we take \(\alpha ^{\prime } = \frac{\alpha }{2}\) and never take \(\alpha \) smaller than \(10^{-5}\) (see Sect. 5.2). Later we will present Lemma 3 which will give us a more precise picture.

3.3 Computing the bounds

For a given set of inequalities \({I}\) and a tightness level \(\alpha >0\), the computation of the value of the bounding function \(F_{I}^\alpha \) and its gradient essentially corresponds to the computation of \(X_{I}(y,z)\), which, in turn, reduces to computing the positive eigenvalues and corresponding eigenvectors of the symmetric matrix \(Q - \,\text{ Diag}(y) + {A}_{I}^*(z)\) (see Eq. 16). To compute this partial eigenvalue decomposition, we use the LAPACK [1] routine DSYEVR—for improved performance we use the Intel Math Kernel Library (MKL) rather than the Automatically Tuned Linear Algebra Software (ATLAS) package. When requesting a partial eigenvalue decomposition of a matrix, DSYEVR first tridiagonalizes the matrix, then finds the positive eigenvalues using bisection, and finally uses the inverse iteration method to find the corresponding eigenvectors; see [13] for more information.

In view of the differentiability result (Proposition 3), we can solve problem (19) with any classic nonlinear optimization algorithm that can handle nonnegativity constraints. Among all possible algorithms and software, quasi-Newton methods are known to be efficient [5, 24]. In our experiments, we use the FORTRAN code L-BFGS-B [8, 32] which has been recently upgraded to version 3.0 [23]. We use the default parameters of the code. Note, though, that we do an initial scaling of the problem by normalizing the constraints we dualize.

In summary, the two main ingredients needed to use our bounds in practice are the following:

-

1.

the function value \(F_{I}^\alpha (y,z)\) and gradient \(\nabla F_{I}^\alpha (y,z)\) are evaluated by computing a single partial eigenvalue decomposition;

-

2.

the smooth convex function \(F_{I}^\alpha \) is minimized by a quasi-Newton method.

Thus, our method is built using reliable and efficient numerical codes: the eigensolver DSYEVR and the quasi-Newton solver L-BFGS-B.

4 Improved semidefinite bounding procedure

This section presents the bounding procedure using the bounds \(F^{\alpha }_{{I}}(y,z)\) introduced in the previous section, provides a theoretical analysis, and finally a numerical illustration.

4.1 Description of the bounding procedure

We have three ways to improve the tightness of the bound \(F_{I}^\alpha (y,z)\): adding triangle inequalities, reducing the parameter \(\alpha \), or reducing the tolerance \(\varepsilon \) in the stopping criteria of the quasi-Newton method:

-

1.

Adding inequalities. In view of (13), we can improve the bound by adding violated (triangle) inequalities to \({I}\); that is,

$$\begin{aligned} \text{ find}\, i\, \text{ such} \text{ that}\, {A}_i(X) + 1 < 0 \end{aligned}$$where \(X = \frac{1}{\alpha } X_{I}(y,z)\). Recall that we only need to add the triangle inequalities that are active at the optimal solution that satisfies all triangle inequalities. We do this by adding a predefined number of the most violated inequalities each time to improve the bound as quickly as possible. We add just a few inequalities at the beginning when we are far from the optimal solution, then we add more inequalities when we are closer to this optimal solution. Moreover, we borrow an idea from the description of Biq Mac in [30] where, instead of using the current iterate \(X_k\) to find violated triangle inequalities, a convex combination of the current iterate with the previous iterate is used: \(X^{\text{ test}} = \lambda X_{k-1} + (1-\lambda ) X_k\). However, while Biq Mac uses \(\lambda = 0.9\), we take the opposite strategy and use \(\lambda = 0.2\) since we found this works well in our code.

-

2.

Reducing \(\alpha \). We can reduce \(\alpha > 0\) and solve problem (19) again, warm-starting with the current \((y, z)\). In practice, we reduce \(\alpha \) if the number of violated triangle inequalities is small for the current value of \(\alpha \). In other words, our strategy consists of changing the target when we can no longer make good progress by adding inequalities.

-

3.

Reducing \(\varepsilon \). We can request more accuracy from the quasi-Newton method by reducing the tolerance \(\varepsilon > 0\) in the stopping test:

$$\begin{aligned} \max \left\{ \left\Vert \nabla _y F_{I}^\alpha (y,z) \right\Vert_{\infty },\; \left\Vert [\nabla _z F_{I}^\alpha (y,z)]_- \right\Vert_{\infty } \right\} < \varepsilon . \end{aligned}$$Note that the expressions of the gradients of \(F_{I}^{\alpha }\) (Proposition 3) gives

$$\begin{aligned} \max \left\{ \left\Vert \,\text{ diag}\left( \frac{1}{\alpha }X_{{I}}(y,z) \right) - e \right\Vert_{\infty }, \; \left\Vert \left[ {A}_{{I}}\left( \frac{1}{\alpha }X_{{I}}(y,z) \right) + e \right]_- \right\Vert_{\infty } \right\} <\varepsilon . \end{aligned}$$

How we control the decrease of \(\varepsilon \) and \(\alpha \), and how we add inequalities, is important for the overall efficiency. Our algorithm, described formally in Algorithm 1, can be viewed as a succession of cutting plane methods (alternating adding cuts and reducing \(\alpha \) and \(\varepsilon \)). We give details in Sect. 5.1 on the parameters we chose to efficiently interlace the decrease of \(\alpha \) and \(\varepsilon \) with the management of the set of enforced inequalities.

4.2 Convergence of the bounding procedure

We now analyze Algorithm 1, starting with the case when the set of triangle inequalities \({I}\) is fixed.

Lemma 2

Let \({I}\) be a (fixed) set of valid triangle inequalities. Consider five sequences indexed by \(k\): \(\alpha _k >0, \varepsilon _k >0, y_k \in \mathbb R ^{n}, z_k\in \mathbb R _+^{|{I}|}, X_k \succeq 0\) such that

and \(X_k := X_{{I}}(y_k,z_k)/\alpha _k\). If both \((\alpha _k)_k\) and \((\varepsilon _k)_k\) tend to \(0\), and if the sequence \((X_k,y_k,z_k)_k\) converges, then its limit is a primal-dual solution of \((\mathrm{SDP}_{I})\), and

Proof

First note that the feasible set of problem (6) is closed and bounded (by \(n\)), so there exists a primal optimal solution. Moreover, problem (6) satisfies Slater’s constraint qualification: the identity matrix satisfies the affine constraints (equalities and inequalities) and strictly satisfies the positive semidefinite constraint. Therefore (see, e.g., [7]), there is no duality gap between between primal-dual problems (6) and (8), and there exists a dual optimal solution. Thus the following conditions are necessary and sufficient for optimality of \((X,S,y,z)\):

Assume that \((X_k,y_k,z_k)_k\) converges to \((\bar{X}, \bar{y}, \bar{z})\). Let us show that the triple satisfies the above optimality conditions. By construction of \(X_k\),

where \(S_k := \left[ Q-\,\text{ Diag}(y_k)+{A}_{I}^*(z_k) \right]_-\). The sequence \((S_k)_k\) converges to

by continuity of the operator \([\cdot ]_-\). Passing to the limit shows that \((\bar{X}, \bar{S}, \bar{y}, \bar{z})\) satisfies (22) since \(\alpha _k\) vanishes. By construction we also have \(\left\langle S_k, X_k \right\rangle =0, X_k\succeq 0\), and \(S_k\preceq 0\). Since the cones of positive semidefinite and negative semidefinite matrices are closed, passing to the limit, we get that \(\bar{X}\) and \(\bar{S}\) satisfy (23).

By Proposition 3, condition (20) can be written as

Passing to the limit we have that \(\bar{X}\) satisfies (24). By assumption, we have that \(z_k \ge 0\) for all \(k\), so we also have that \(\bar{z} \ge 0\). Finally, we write

Since \(\varepsilon _k\) vanishes, at the limit, we have that \(\bar{z}\) and \(\bar{X}\) satisfy (25). Altogether, this shows that \((\bar{X}, \bar{y}, \bar{z})\) is indeed a primal-dual solution.

Finally (17) yields

Therefore we have \(\lim _{k\rightarrow +\infty } F_{I}^{\alpha _k}(y_k,z_k) = e^T \bar{y} + e^T \bar{z}\). Since there is no duality gap for the primal-dual problems (6) and (8), we conclude that (21) holds.\(\square \)

The parameters \(\alpha \) and \(\varepsilon \) control the level of tightness of the bound in that smaller values give tighter upper bounds. Furthermore, we can reach the usual SDP bound, \((\mathrm{SDP}_{I})\), in the limit as \(\alpha \) and \(\varepsilon \) both approach zero. In practice, we interlace the decrease of these control parameters with the process of adding violated inequalities; the overall convergence follows from the next lemma.

Lemma 3

Let the sequence \((\alpha _k, \varepsilon _k, X_k, y_k, \hat{z}_k, z_k, {I}_k, F_k)_k\) be generated by Algorithm 1. Then the following holds:

-

(i)

For all \(k = 1, 2,\ldots \), we have:

$$\begin{aligned} X_k = \frac{1}{\alpha _k}X_{{I}_{k}}(y_k,z_k) \qquad \text{ and} \qquad F_k = F^{\alpha _k}_{{I}_{k}}(y_k,z_k). \end{aligned}$$ -

(ii)

There exists \(\gamma > 0\) such that:

$$\begin{aligned} F_{k+\ell } \le F_k + \gamma \alpha _k , \quad \text{ for} \text{ all}\, k, \ell . \end{aligned}$$ -

(iii)

If \(\alpha _k \rightarrow 0\), then \((F_k)_k\) is a convergent sequence.

Proof

The first item follows from the observation that, based on the definition of \({I}_k\) and \(z_k\) in Algorithm 1, we have \({A}^*_{{I}_{k}}(z_k) = {A}^*_{{I}_{k-1}}(\hat{z}_k)\) and \(e^T z_k = e^T \hat{z}_k\); this follows from the fact that inequalities are removed only if they have a zero multiplier \((\hat{z}_k)_i\), and multipliers for added inequalities are initialized with \((z_k)_i = 0\).

For the second item, first let \(r := \mathtt{ScaleAlpha }^{-1} > 1\). Since we have the bound \(\Vert \nabla _y F_{{I}_{k-1}}^{\alpha _k}(y_k,\hat{z}_k)\Vert _\infty < \varepsilon _k\), Proposition 4 gives:

From the previous item, we have

where the inequality follows from the fact that \((y_{k+1},\hat{z}_{k+1})\) is generated using a descent method starting from \((y_{k},z_{k})\). Therefore, if \(\alpha _{k+1} = \mathtt{ScaleAlpha }\cdot \alpha _k = r^{-1}\alpha _k\), by inequality (26) we have

Since \(0 < \mathtt{ScaleEps } < 1\), we have \(\varepsilon _k \le \varepsilon _1\), for all \(k\). Letting

we have that

for all \(k\). Let \(\ell \) and \(k\) be given, and let \(k_1,\ldots ,k_p\) be the \(p\) indices \(k \le k_i < k+\ell \) such that \(\alpha _{k_i+1} = \mathtt{ScaleAlpha } \cdot \alpha _{k_i}\). Then, from repeated application of the inequalities (27), we obtain

Letting \(\gamma := \frac{\delta r}{r-1} > 0\), we obtain the desired result.

For the third item, suppose \(\alpha _k \rightarrow 0\). Then, by the previous item, we can conclude

so the sequence \((F_k)_k\) converges. \(\square \)

Theorem 1

Let the sequence \((\alpha _k, \varepsilon _k, X_k, y_k, \hat{z}_k, z_k, {I}_k, F_k)_k\) be generated by Algorithm 1. If \((\alpha _k)_k\) and \((\varepsilon _k)_k\) both converge to zero, and \((\bar{X}, \bar{y}, \bar{z}, \bar{I})\) is an accumulation point of the sequence \((X_k, y_k, z_k, {I}_k)_k\), then \((\bar{X}, \bar{y}, \bar{z})\) is a primal-dual solution of \((\mathrm{SDP}_{\bar{I}})\), and the sequence \(F_k\) converges to the classic semidefinite bound:

Proof

Consider a subsequence of \((X_k, y_k, z_k, {I}_k)_k\) that converges to \((\bar{X}, \bar{y}, \bar{z}, \bar{I})\). Since the \({I}_k\) are finite sets, the convergence of a subsequence to \(\bar{I}\) means that there are infinitely many indexes \(k_i\) of this subsequence such that \({I}_{k_i}=\bar{I}\). Observe now that the sequence \((X_{k_i}, y_{k_i}, z_{k_i})_i\) satisfies the assumptions of Lemma 2 with \({I}= \bar{I}\). Therefore,

Since \(\alpha _k \rightarrow 0\), from Lemma 3 we also have that the entire sequence \((F_k)_k\) converges, so we conclude that \(\lim _{k \rightarrow +\infty } F_k = (\mathrm{SDP}_{\bar{I}})\). \(\square \)

This result gives an important observation about our bounding procedure Algorithm 1. Although, by Proposition 1, the bounds \(F_{I}^\alpha (y,z)\), for \(\alpha > 0\), are weaker than the usual SDP bound \((\mathrm{SDP}_{I})\), we have, by Theorem 1, that we can get as close as we like using Algorithm 1.

Theorem 1 thus shows that, in the limit, the tightness of the bounds is only governed by the selection of promising inequalities \({I}_k\). In the next corollary, we look closely at a special case to show that Algorithm 1 can approximate an “optimal” set of inequalities.

Corollary 1

Let the assumptions of Theorem 1 hold. If the sequence \((X_k, y_k, z_k, {I}_k)_k\) converges to \((\bar{X}, \bar{y}, \bar{z}, \bar{I})\) and if \(\mathtt{ViolTol }_k\rightarrow 0\), then \((\mathrm{SDP}_{\bar{I}})\) is equal to the semidefinite bound \((\mathrm{SDP}_{{I}_{\mathrm{all}}})\) with all the inequalities, and \((\bar{X}, \bar{y}, \tilde{z})\) is a primal-dual solution of \((\mathrm{SDP}_{{I}_{\mathrm{all}}})\), where \(\tilde{z} \in \mathbb R ^{4{n \atopwithdelims ()3}}\) is obtained from \(\bar{z}\) by expanding with zeros the entries that are not indexed by \(\bar{I}\).

Proof

We use the assumption of the convergence of the whole sequence. For \(k\) large enough, the set \({I}_k\) is equal to \(\bar{I}\). Thus no more inequalities are added at iteration \(k\), which means

Since \((X_k^{\text{ test}})_k\) also converges to \(\bar{X}\), we pass to the limit to get \({A}_i(\bar{X})+1\ge 0\) for all \(i\not \in \bar{I}\). We also know by Theorem 1 that \(\bar{X}\) is optimal, hence feasible, for \((\mathrm{SDP}_{\bar{I}})\). Therefore we have that

and that \(\bar{X}\) is in fact feasible for \((\mathrm{SDP}_{{I}_{\mathrm{all}}})\). We observe now \((\bar{X}, \bar{y}, \tilde{z})\) satisfies the optimality conditions of \((\mathrm{SDP}_{{I}_{\mathrm{all}}})\) [i.e., conditions (22–25) from the proof of Lemma 2]. Indeed (29) implies that (24) is satisfied for \(X=\bar{X}\) and \({I}={I}_{\mathrm{all}}\), and so are (22) and (25) by construction of \(\tilde{z}\). Thus we have \((\mathrm{SDP}_{{I}}) = (\mathrm{SDP}_{{I}_{\mathrm{all}}})\) and the rest of the proof follows from Theorem 1.\(\square \)

4.3 Numerical illustration of the bounding procedure

This section presents a numerical comparison of our bounding procedure Algorithm 1 with the one of Biq Mac [29]. The code for each solver was compiled on the same machine using the same numerical libraries. We take one problem of the Biq Mac Library [31]—specifically Beasley bqp250.6, the problem highlighted in [29, Figure 1]—and we plot the convergence curve for the two bounding procedures in Fig. 1. For this particular problem, note that we have \((\mathrm{SDP}_{{I}_{\mathrm{all}}}) \approx 41178\) (see [29]) and \((\mathrm{MC}) = 41014\). In our test, our bounding procedure computes a bound of 41210 after 206 s while the Biq Mac bounding procedure is only able to attain a bound of 41251 after 604 s of computing time.

Comparison of the bounding procedure of Biq Mac [29] and our bounding procedure Algorithm 1 on the Beasley bqp250.6 problem—the behaviour shown here is typical

The plot of Fig. 1 is typical of the behaviour of the two solvers: our bounding procedure has a slower start since we take a large value of \(\alpha \) at the beginning, then the convergence curves intersect, after which we see that our bounding procedure is able to compute good bounds in less time than that of Biq Mac. Therefore, the computing time needed for our solver to get to a zone of “useful bounds” is smaller than the one used by Biq Mac—this is crucial for the relative efficiency of our branch-and-bound method and is the reason for the good numerical results of the next section.

Figure 1 also shows large decreases in the value of the dual function on the curve for our bounding procedure: this corresponds to the iterations when \(\alpha \) is decreased. We also note that our bounding procedure is able to attain a tighter bound than the one of Biq Mac. This may be due to a combination of: a different selection of inequalities, and the slow convergence of the bundle method near the end of the solving process.

5 Solving Max-Cut to optimality

In this section, we describe our implementation of a branch-and-bound method for solving Max-Cut to optimality. The scheme of our algorithm is simple and follows the usual branch-and(-cut-and)-bound paradigm—the novelty of our approach is essentially our bounding procedure that we described in Algorithm 1. Finally, we provide a complete numerical comparison to the leading Biq Mac solver [29].

5.1 Branch-and-bound implementation

While Biq Mac uses a dedicated code, we embed our bounding procedure in a generic code: we use the BOB Branch & Bound platform [10], which provides an easy and flexible way to implement a branch-and-bound algorithm. The BOB platform only requires the user to implement the following: (1) a bounding procedure, (2) a method for generating a feasible solution, and (3) a method for generating subproblems. Here we give some details about these points.

We have already described our bounding procedure in Sect. 4, but we would like to mention here how we decide when to stop our bounding procedure within the branch-and-bound method. Suppose the value of the best-known solution for the current subproblem is given by \(\beta \). If we are able to compute a bound that is below \(\beta \), we can stop our bounding procedure and prune this subproblem from the branch-and-bound tree. Indeed, in our method, stopping the run of the quasi-Newton solver at any time gives the valid bound \(F_{{I}_k}^{\alpha _k}(y_k,z_k)\). Similarly, Biq Mac can stop their feasible interior-point method at any time and get a valid bound; this is because Biq Mac uses the following form of their dual function

implying that \((MC) \le \theta _{I}(z) \le e^T z + e^T y\), for any feasible vector \(y\).

We can also stop the bounding procedure when we detect that we will not be able to reach the value of \(\beta \) in a reasonable time. Biq Mac estimates the time to reach \(\beta \) using a linear approximation to the time-bound curve [30]. In our method, we fit a hyperbolic function to the time-bound curve, given its hyperbolic nature that can be observed in Fig. 1. If this hyperbola has a horizontal asymptote that is far above the value of \(\beta \), we terminate the bounding procedure and add the current subproblem, together with its computed bound and fractional solution, to the list of subproblems on which we will branch. We also terminate the bounding procedure if the difference between consecutive bounds, \(F_{k-1} - F_k\), is less than one, we are still at least two away from the lower bound \(\beta \), and \(\alpha _k\) is very small (on the order of \(10^{-4}\)).

In all subproblems in the branch-and-bound tree, except the root problem, we wait until the termination of the bounding procedure to compute a feasible solution (upper-bound) using the Goemans-Williamson (GW) random hyperplane algorithm [12] then local swapping, as is done in Biq Mac. However, we do not use the technique used in Biq Mac of repeatedly running the GW algorithm (while progress is still made) on a matrix obtained by shifting the current matrix \(X\) in the direction \(\tilde{x}\tilde{x}^T\), where \(\tilde{x} \in \left\{ -1,1 \right\} ^n\) is the best cut found so far. In the root problem, to get an upper-bound \(\beta \), we run the GW procedure up to two times during the bounding procedure—this value of \(\beta \) is then used to inform us when we should terminate the bounding procedure in the root problem.

For generating subproblems, Biq Mac considers two branching strategies: “easy first” (R2), and “difficult first” (R3); for details, see [29]. We use two different but similar “easy first” and “difficult first” branching strategies in the \(\left\{ 0,1 \right\} \)-model: we branch on the variable in the first row/column of \(X\) with fractional value that is furthest from, or closest to, \(\frac{1}{2}\), respectively. For the sake of simplicity, we also refer to our “easy first” and “difficult first” branching strategies as (R2) and (R3), respectively. In our numerical results, we compare our method using (R2) branching and our method using (R3) branching to Biq Mac using (R2) branching and Biq Mac using (R3) branching—in total, we compare four methods.

Finally, we note that we have used a best-first exploration strategy in that we always take the subproblem from the current list of subproblems that has the greatest computed bound.

5.2 Description of the numerical tests

Comparison. There exist several efficient methods for solving Max-Cut and binary quadratic programming problems, such as [4] and [26]. However, the numerical tests of [29] show that the results of the other methods are dominated by Biq Mac, so we only compare our solution method to Biq Mac.

Test problems. We use the test problems in the Biq Mac Library [31], which consists of both Max-Cut problems and binary quadratic optimization problems. Some are randomly generated instances, others come from a statistical physics application. We refer to [29, Section 6] for a description of the data set. Since solving the instances in the Biq Mac Library with \(n = 500\) is beyond the reach of current solvers (including Biq Mac and our method) we restrict our tests to the 328 instances in the Biq Mac Library with \(n < 500\).

Machine. In our tests we used a Dell T-7500 (using a single core) with 4 GB of memory running the Linux operating system. We implemented our algorithm in C / FORTRAN and have used the Intel Math Kernel Library (MKL) for the eigenvalue computations. We have compiled and run both our code and the Biq Mac code (kindly provided by the authors) on the same machine, and have used the same libraries (i.e., MKL) and compilation flags for both codes.

Parameters. We used Biq Mac with its default parameters, except for using both the non-default (R2) and the default (R3) branching strategies; additionally, we changed the time limit to 100 h. We used the following values for Algorithm 1 in our tests:

-

1.

Reducing \(\alpha \). We use \(\mathtt{ScaleAlpha } = 0.5\) and start with \(\alpha _1 = 10\); however, if no inequalities are added after the first iteration in the root problem (i.e., \(|{I}_1| = 0\) or \(|{I}_2| = 0\)), we start with a smaller \(\alpha _1\). We continue to reduce \(\alpha _k\) until the minimum value of \(\alpha _k = 10^{-5}\).

-

2.

Reducing \(\varepsilon \). With (R3) branching, we use \(\varepsilon _1 = 0.08\) and \(\mathtt{ScaleEps } = 0.93\), with a minimum value of \(\varepsilon _k = 0.05\). When using (R2) branching, we found that it is better to be less aggressive in decreasing \(\varepsilon \), so we take \(\varepsilon _1 = 0.2\) and \(\mathtt{ScaleEps } = 0.95\), with a minimum value of \(\varepsilon _k = 0.1\).

-

3.

Inequalities. We typically set \(\mathtt{ViolTol }_k = -5 \times 10^{-2}\). We set \(\mathtt{MaxIneqAdded }_k = 20k\) (resp. \(30k\)) and \(\mathtt{MinIneqViol } = 30\) (resp. \(60\)) for \(n < 150\) (resp. for \(n \ge 150\)). This means that we usually add less inequalities than Biq Mac.

Performance profiles. We plot the results using performance profiles [11], that we briefly describe here. Let \({P}\) be the set of problems used to benchmark the solvers. For each problem \(p \in {P}\), we define \(t_p^{\min }\) as the minimum time required to solve \(p\) over all the solvers. Then, for each solver, we consider the performance profile function \(\theta \), which is defined as

where \(t_p\) is the time required for the solver to solve problem \(p\). The function \(\theta \) is therefore a cumulative distribution function, and \(\theta (\tau )\) represents the probability of the solver to solve a problem from \({P}\) within a multiple \(\tau \) of the minimum time required by all solvers considered.

5.3 Computational results

We report aggregated results from the 328 test-problems and almost 1,600 h of computing time: Fig. 2 gives the performance profile, Table 1 counts the number of times a solver attains the minimum solution time, and Table 2 summarizes the computing times. For the full tables of numerical results, see the detailed results available online at the link lipn.univ-paris13.fr/BiqCrunch/repository/papers/KMR2012-results.pdf.

Performance profile curves (30) for the four solvers on the 328 problems

Compared to Biq Mac, our algorithm performs well for a large part of the problems: \(241\) out of the \(328\) problems are solved strictly faster by using our solver, which is around 75 % of the test-problems. When considering only the “hard problems” (those that Biq Mac does not solve at the root node), this percentage increases to 85 % (226 out of 269).

Table 2 shows that, on some problems (e.g., g05 or w100) our method is roughly equivalent to Biq Mac, and on some others it is two times quicker (e.g., gk) or even 6 times quicker (e.g., be250.d10). In particular, we obtain substantial improvements, in both time and number of nodes, for large-sized problems, as can be seen in the detailed results available online at the web address mentioned above. Additionally, we note that both our methods are able to solve all test problems within the 100 h time limit (Biq Mac (R3) fails to solve 6 instances within this time limit).

The performance profile in Fig. 2 shows that both of our methods dominate both of the Biq Mac methods in terms of speed, and also robustness, since the curves for our methods are constantly above the ones for Biq Mac.

6 Conclusions

In this paper, we presented an improved semidefinite bounding procedure to efficiently solve Max-Cut and binary quadratic programming problems to optimality. We precisely analyzed its theoretical convergence properties, and we conducted a complete numerical comparison with the leading Biq Mac method. Our algorithm is shown to be often faster and more robust than Biq Mac; in particular, our solver was able to solve around 75 % of the problems of the Biq Mac Library (with \(n <500\)) in less time than Biq Mac.

A key point is that the two main ingredients of our method are eigenvalue decomposition to evaluate the function and its gradient, and a quasi-Newton method to minimize this smooth function; on the other hand, the two main ingredients of the Biq Mac method are an interior-point method to evaluate a function and its subgradient, and a bundle method to minimize this nonsmooth function.

There is still room for improvement in our current implementation. In particular, we would like to investigate how we can make the eigenvalue decomposition more efficient by somehow using prior information. We could also try decoupling the optimization of the \(y\) and \(z\) dual variables, as is done in Biq Mac, to possibly make the bound computation more efficient.

By focusing on Max-Cut and binary quadratic programming problems, we have shown unambiguously the interest of our semidefinite-based method to solve these classes of problems to optimality. Because our approach is built on the general bounds of [21], it can also be applied to any binary quadratic problems with additional linear or quadratic, equality or inequality, constraints. In our future work, such as [17], we will consider this generalization, which is nontrivial due the work required to make a generic code and to the complication of having semidefinite relaxations that may not be strictly feasible. Due to its inherent flexibility, we believe that our method has a strong potential to handle other classes of problems, even those for which semidefinite-based methods are not yet competitive.

References

Anderson, E., Bai, Z., Bischof, C., Blackford, S., Demmel, J., Dongarra, J., Du Croz, J., Greenbaum, A., Hammarling, S., McKenney, A., Sorensen, D.: LAPACK Users’ Guide, 3rd edn. Society for Industrial and Applied Mathematics, Philadelphia (1999)

Anjos, M.F., Lasserre, J.B. (eds.): Handbook on Semidefinite, Conic and Polynomial Optimization, International Series in Operations Research & Management Science, vol.166. Springer, USA (2012)

Anjos, M.F., Lasserre, J.B.: Introduction to semidefinite, conic and polynomial optimization. In: Anjos, M.F., Lasserre, J.B (eds.) Handbook on Semidefinite, Conic and Polynomial Optimization, International Series in Operations Research & Management Science, vol.166, pp. 1–22. Springer, USA (2012)

Billionnet, A., Elloumi, S.: Using a mixed integer quadratic programming solver for the unconstrained quadratic 0–1 problem. Math. Program. 109, 55–68 (2007)

Bonnans, J., Gilbert, J., Lemaréchal, C., Sagastizábal, C.: Numerical Optimization. Springer, Berlin (2003)

Borwein, J.M., Lewis, A.S.: Convex Analysis and Nonlinear Optimization: Theory and Examples. Springer, Berlin (2000)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2008)

Byrd, R.H., Lu, P., Nocedal, J., Zhu, C.: A limited memory algorithm for bound constrained optimization. SIAM J. Sci. Comput. 16(5), 1190–1208 (1995)

Cadoux, F., Lemaréchal, C.: Reflections on generating (disjunctive) cuts. EURO J. Comput. Optim. (Submitted, 2012)

Cun, B.L., Roucairol, C., The PNN Team: BOB: A Unified Platform for Implementing Branch-and-Bound Like Algorithms. Technical Report 95/16, University of Versailles Saint-Quentin-en-Yvelines (1995).

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Goemans, M.X., Williamson, D.P.: Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. ACM 42(6), 1115–1145 (1995)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. Johns Hopkins University Press, Baltimore (1996)

Helmberg, C., Rendl, F.: Solving quadratic (0,1)-problems by semidefinite programs and cutting planes. Math. Program. 82, 291–315 (1998)

Hiriart-Urruty, J.B., Lemaréchal, C.: Convex Analysis and Minimization Algorithms. Springer, Heidelberg (Two volumes) (1993)

Karp, R.M.: Reducibility among combinatorial problems. In: Complexity of Computer Computations (Proceedings of Symposium, IBM Thomas J. Watson Res. Center, Yorktown Heights, NY, 1972), pp. 85–103. Plenum, New York (1972)

Krislock, N., Malick, J., Roupin, F.: Improved semidefinite branch-and-bound algorithm for \(k\)-cluster (Submitted, 2012). Available online as preprint hal-00717212

Laurent, M., Poljak, S.: Gap inequalities for the cut polytope. Eur. J. Comb. 17(2–3), 233–254 (1996)

Lemaréchal, C., Oustry, F.: Semidefinite Relaxations and Lagrangian Duality with Application to Combinatorial Optimization. Rapport de Recherche 3710, INRIA (1999)

Malick, J.: Spherical constraint in Boolean quadratic programming. J. Glob. Optim. 39(4) (2007)

Malick, J., Roupin, F.: On the bridge between combinatorial optimization and nonlinear optimization: a family of semidefinite bounds leading to Newton-like methods. Math. Program. B (to appear, 2012). Available online as preprint hal-00662367

Malick, J., Roupin, F.: Solving k-cluster problems to optimality using adjustable semidefinite programming bounds. Math. Program. B Special Issue Mixed Integer Nonlinear Program (to appear, 2012)

Morales, J.L., Nocedal, J.: Remark on “Algorithm 778: L-BFGS-B: Fortran subroutines for large-scale bound constrained optimization”. ACM Trans. Math. Softw. 38(1), 1–4 (2011)

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer, Berlin (2006)

Palagi, L., Piccialli, V., Rendl, F., Rinaldi, G., Wiegele, A.: Computational approaches to Max-Cut. In: Anjos, M.F., Lasserre, J.B. (eds.) Handbook on Semidefinite, Conic and Polynomial Optimization, International Series in Operations Research& Management Science, vol.166, pp. 821–847. Springer, USA (2012)

Pardalos, P., Rodgers, G.: Computational aspects of a branch and bound algorithm for quadratic zero-one programming. Computing 45, 134–144 (1990)

Pardalos, P.M., Vavasis, S.A.: Quadratic programming with one negative eigenvalue is NP-hard. J. Glob. Optim. 1, 15–22 (1991)

Poljak, S., Rendl, F., Wolkowicz, H.: A recipe for semidefinite relaxation for (0,1)-quadratic programming. J. Glob. Optim. 7, 51–73 (1995)

Rendl, F., Rinaldi, G., Wiegele, A.: Solving Max-Cut to optimality by intersecting semidefinite and polyhedral relaxations. Math. Program. 121, 307–335 (2010)

Wiegele, A.: Nonlinear Optimization Techniques Applied to Combinatorial Optimization Problems. Ph.D. thesis, Alpen-Adria-Universität Klagenfurt (2006)

Wiegele, A.: Biq Mac Library–A collection of Max-Cut and quadratic 0–1 programming instances of medium size. Technical report, Alpen-Adria-Universität Klagenfurt, Klagenfurt, Austria (2007)

Zhu, C., Byrd, R.H., Lu, P., Nocedal, J.: Algorithm 778: L-BFGS-B: fortran subroutines for large-scale bound-constrained optimization. ACM Trans. Math. Softw. 23(4), 550–560 (1997)

Acknowledgments

We are grateful to Angelika Wiegele for the discussions we have had and for providing us with a copy of the Biq Mac solver for our numerical comparison.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Krislock, N., Malick, J. & Roupin, F. Improved semidefinite bounding procedure for solving Max-Cut problems to optimality. Math. Program. 143, 61–86 (2014). https://doi.org/10.1007/s10107-012-0594-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-012-0594-z