Abstract

In this paper, we develop a semidefinite relaxation-based branch-and-bound algorithm that exploits the chordal sparsity patterns of the max-cut problem. We first study how the chordal sparsity pattern affects the hardness of a max-cut problem. To do this, we derive a polyhedral relaxation based on the clique decomposition of the chordal sparsity patterns and prove some sufficient conditions for the tightness of this polyhedral relaxation. The theoretical results show that the max-cut problem is easy to solve when the sparsity pattern embedded in the problem has a small treewidth and the number of vertices in the intersection of maximal cliques is small. Based on the theoretical results, we propose a new branching rule called hierarchy branching rule, which utilizes the tree decomposition of the sparsity patterns. We also analyze how the proposed branching rule affects the chordal sparsity patterns embedded in the problem, and explain why it can be effective. The numerical experiments show that the proposed algorithm is superior to those known algorithms using classical branching rules and the state-of-the-art solver BiqCrunch on most instances with sparsity patterns arisen in practical applications.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Given an undirected graph with weights associated with edges, the max-cut problem concerns the partition of vertices into two subsets such that the sum of weights of the edges across all pair of vertices that lie in different subsets is maximized. The max-cut problem can be formulated as the following binary quadratic programming problem [16]:

where Q is the Laplace matrix of the given graph.

The max-cut problem is a classical optimization problem with many practical applications that has received great attention in the past. Besides, it is known that the linearly constrained quadratic 0–1 programming problem can also be formulated as a max-cut problem [27]. Hence, the max-cut problem is quite general in nature. It is known that the max-cut problem is NP-hard in general [14].

The literature for the max-cut problem consists of a large number of solution methods in two strands. One is to design efficient heuristic algorithms for finding sub-optimal solutions. Goemans and Williamson [16] proposed a semidefinite relaxation-based approximation method for the max-cut problem. Burer et al. [7] proposed an alternative rank-two relaxation and developed a specialized version of rounding technique, which outperforms the classical rounding algorithm proposed in [16]. Besides the rounding algorithms, some neighborhood search-based heuristic methods have also been proposed (for example, [11] and [29]). The readers may refer to [9] for a comprehensive review and systematic comparison of various types of approximation algorithms. However, these approximation algorithms are only designed for finding sub-optimal solutions of the max-cut problem in practically acceptable computational complexity [9], but cannot guarantee to find global solutions in general.

The other strand is to design branch-and-bound algorithms for finding exact solutions of the max-cut problem. Depending on the relaxation methods used, we may further classify these algorithms into four types: linear relaxation-based [4, 22], convex quadratic programming relaxation-based [6], second-order cone relaxation-based [23, 31], and semidefinite relaxation-based [20, 32] branch-and-bound algorithms. Rendl et al. [33] discussed the advantages and limitations of the above four types of algorithms. For the linear relaxation-based branch-and-bound algorithms, some max-cut problem arising in statistical physics can be solved for instances with up to 12,100 variables for Q having \(\pm 1\) entries only, and 22,500 variables for Q having entries following a Gaussian distribution. However, Rendl et al. [33] also showed that the linear relaxation-based algorithms may even fail to solve a general max-cut problem with only 100 variables when the density is over 20%. Since 2010, some semidefinite relaxation-based algorithms became capable of solving certain types of sparse max-cut problem with 100–200 variables [25, 26, 33]. The Biq Mac algorithm [33] applies a bundle method to solve the semidefinite relaxations enhanced by triangle inequalities and achieves a high performance on solving problems with hundreds of variables in different densities. Krislock et al. [25] proposed another semidefinite relaxation-based algorithm by using a quasi-Newton algorithm to solve the relaxation problems. The numerical results in [25] showed that their algorithm outperforms Biq Mac on \(75\%\) instances for a test set of 328 instances. They further improved the bounding procedure in [26] and published a semidefinite relaxation-based solver “BiqCrunch” for binary quadratic problems. As far as the authors know, BiqCrunch has achieved the state-of-the-art efficiency, and the numerical results in [26] showed that BiqCrunch runs faster than Biq Mac on most test instances in a large benchmark test set.

Sparsity is often utilized in designing efficient algorithms to solve the max-cut problem exactly. The existing algorithms generally exploit the edge sparsity of a graph to reduce the number of variables in a linear relaxations or to exploit the cycles in a graph to derive new valid inequalities [4, 22]. However, there lacks research on exploiting the “chordal sparsity patterns” of the max-cut problem to design efficient global algorithms. A sparsity pattern can be naturally interpreted as an undirected graph and the chordal sparsity pattern is then described as the clique decomposition of a sparsity pattern. Examples of matrices with chordal sparsity patterns include matrices with banded structure, overlapping block diagonal structure and block-arrow structure. These matrices are often observed in real-life applications [1, 17, 35, 37]. In recent years, many works started to explore the chordal sparsity pattern for solving semidefinite programming problems and sparse polynomial optimization problems [12, 15, 39,40,41,42,43,44]. The readers may refer to [38] for a review on the topic of chordal graph and its applications in optimization. However, as far as we know, in the area of global optimization, there is no work to study how to exploit the chordal sparsity pattern to design effective branching rules for a branch-and-bound algorithm.

This paper intends to design an efficient branch-and-bound algorithm for solving the max-cut problem with chordal sparsity patterns. In particular, we design a new branching rule based on the clique decomposition of the sparsity pattern of the max-cut problem to avoid exploring unpromising subproblems in order to achieve high efficiency. The contributions of this paper are twofold:

(1) In theory, in order to analyze how the chordal sparsity pattern affects the tightness of a convex relaxation of a max-cut problem, we derive a polyhedral relaxation and provide sufficient conditions for the tightness of the polyhedral relaxation. The sufficient conditions imply that the convex relaxation of a max-cut problem can be tight if the sparsity pattern of the problem has the following two properties: (i) the treewidth is small, and (ii) the number of vertices in the intersection of maximal cliques is small.

(2) Based on the theoretical results, we derive a new branching rule called “hierarchy branching rule,” which aims at reducing the treewidth of the sparsity pattern and reducing the number of vertices in the intersection of maximal cliques. The intuitive idea behind the proposed method is that the new rule aims to manipulate the sparsity pattern of max-cut problem toward the one of small treewidth. To the best of our knowledge, the proposed algorithm is the first one exploiting chordal sparsity pattern for designing a branching rule. We test the proposed algorithm on instances of the max-cut problem with five types of sparsity patterns. The numerical results clearly show the benefit of our new branching rule.

The rest of this paper is organized as follows: Section 2 reviews some useful definitions and theories in graph theory. Section 3 designs a polyhedral relaxation of the max-cut problem and studies the tightness of the proposed relaxation. Section 4 proposes a new branching rule and a cutting-plane selection scheme based on the clique decomposition of sparsity pattern embedded in the max-cut problem. Section 5 designs a branch-and-bound algorithm that adopts the new branching rule and cutting-plane selection scheme. Numerical results are presented in Sect. 6, while Sect. 7 concludes the paper.

The following notations are adopted in this paper. \(\mathbb {S}^n\) is the set of real symmetric matrices of size \(n\times n\) and \(\mathbb {S}^n_+\) is the set of real positive semidefinite matrices of size \(n\times n\). For a matrix \(X\in \mathbb {S}^n\) and an index set \(C\subseteq \{1,2,\ldots ,n\}\), X[C] represents the square submatrix of X with the rows and columns indexed by the set C. |C| is the cardinality of set C. \(X_{ij}\) denotes the ith row and jth column component of X, \(X_{i:}\) denotes the ith row of X, and \(X_{:j}\) denotes the jth column. \(X \succeq 0 \) means that the matrix X is positive semidefinite. \(Z=\mathcal {X}(P,C)\) represents the mapping that lifts a matrix \(P\in \mathbb {S}^{|C|}\) to the matrix \(Z \in \mathbb {S}^n\) where \(Z[C]=P\) and \(Z_{ij}=0\) if either \(i\notin C\) or \(j\notin C\). For any two matrices \(A, B\in \mathbb {S}^n\), their inner product is defined as \(A\bullet B = \sum _{i=1}^{m}\sum _{j=1}^{n} A_{ij}B_{ij}\).

2 Preliminary Results in Graph Theory

Since the sparsity pattern of a matrix \(Q\in \mathbb {S}^n\) can be represented by a graph, we review some related results in graph theory in this section.

An undirected graph \(\mathcal {G}=(\mathcal {V},\mathcal {E})\) is defined by its vertex set \(\mathcal {V}\) and edge set \(\mathcal {E}=\{[i,j]\,|\,i,j\in \mathcal {V},i\ne j\}\). For an undirected graph, both the two notations [i, j] and [j, i] represent the same edge. A cycle of length r in a graph is defined by a sequence of vertices \(i_1,\ldots ,i_r,i_{r+1}\) in \(\mathcal {V}\) such that \([i_{t},i_{t+1}]\in \mathcal {E}\) for all \(t=1,\ldots ,r\), \(i_{r+1}=i_{1}\) and \(i_1,\ldots ,i_r\) are different vertices in \(\mathcal {V}\). A chord in a cycle \(i_1,\ldots ,i_r,i_{r+1}\) of length \(r\ge 4\) is an edge \([i_s,i_t] \in \mathcal {E}\) with the pair of vertices \(i_s,i_t\) being two nonconsecutive vertices in the cycle.

To represent the sparsity pattern of a matrix \(Q\in \mathbb {S}^n\), we define the graph \(\mathcal {G}=(\mathcal {V},\mathcal {E})\) corresponding to Q, where \(\mathcal {V}=\{1,2,\ldots ,n\}\) and \(\mathcal {E}=\{[i,j]\,|\, Q_{ij}\ne 0,~i,j\in \mathcal {V},~i\ne j\}\). The extended set of edges is then defined as

The set of real symmetric matrices having a given sparsity pattern \(\mathcal {G}=(\mathcal {V}, \mathcal {E})\) is then denoted as

Similarly, the set of real positive semidefinite matrices with a given sparsity pattern \(\mathcal {G}=(\mathcal {V}, \mathcal {E})\) is denoted as

Some definitions in graph theory, including clique, tree decomposition, chordal graph and graph contraction, are important for deriving the branch-and-bound algorithm that will be proposed in Sect. 5. We introduce them in sequence.

Definition 2.1

(Clique, maximal clique and maximum clique) Given an undirected graph \(\mathcal {G}=(\mathcal {V},\mathcal {E})\), a clique of \(\mathcal {G}\) is a subset of vertices \(C\subseteq \mathcal {V}\), such that for any two different vertices \(i,j\in C\), we have \([i,j]\in \mathcal {E}\). A clique C is called maximal clique if \(C\bigcup \{i\}\) is not a clique of \(\mathcal {G}\) for any vertex \(i\notin C\). A clique C is called maximum clique if |C| achieves the largest value among all different cliques.

Definition 2.2

(Tree decomposition ([8], Section 12.3)) For an undirected graph \(\mathcal {G}=(\mathcal {V},\mathcal {E})\), a tree decomposition of \(\mathcal {G}\) is defined by a graph \(\mathcal {T}=(\mathcal {U},\mathcal {H})\), where vertex set \(\mathcal {U}=\{C_1,\ldots ,C_k\}\) is a family of nonempty subsets (sometimes called bags) of \(\mathcal {V}\), where \(k\le n\), and edge set \(\mathcal {H}\) is a tree on the k vertices, satisfying the Properties (P1), (P2) and (P3), or Properties (P1), (P2) and (P3’)Footnote 1:

-

(P1) For each \(i\in \mathcal {V}\), there exists a \(C_r\in \mathcal {U}\), such that \(i\in C_r\).

-

(P2) For each \([i, j]\in \mathcal {E}\), there exists a \(C_r\in \mathcal {U}\), such that \(i, j\in C_r\).

-

(P3) For two different vertices \(C_s,C_t\in \mathcal {U}\), each vertex \(C_r\) in the path that connects \(C_s\) and \(C_t\) in \(\mathcal {T}\) satisfies \(C_s\bigcap C_t \subseteq C_r\).

-

(P3’) For any \(i\in \mathcal {V}\), the set of vertices in \(\mathcal {T}\) that contain i, defined by \(\{C_r\,|\,i\in C_r,C_r\in \mathcal {U}\}\), forms a connected sub-tree of \(\mathcal {T}\).

The width of \(\mathcal {T}\) is defined by \(\max \{|C_1|,\ldots ,|C_k|\}-1\). The treewidth of \(\mathcal {G}\), denoted by \(\text {tw}(\mathcal {G})\), is the minimum width among all tree decompositions of \(\mathcal {G}\). Treewidth is an important parameter to describe how a graph looks like a tree. It can be shown that \(\mathcal {G}\) is a tree if and only if \(\text {tw}(\mathcal {G})=1\). Apparently, the tree decomposition of a graph is far from unique. For example, a trivial tree decomposition contains all vertices of the graph in its single root node and has width \(n-1\). Finding a tree decomposition of a graph with minimum width is not easy. It is shown that for an integer r, to check whether \(\mathcal {G}\) has a tree decomposition with width at most r is NP-complete, if r is part of the input [2].

Definition 2.3

(Chordal graph) A graph \(\mathcal {G}\) is chordal if every cycle of \(\mathcal {G}\) with length no less than four has at least one chord.

A matrix is said to have a chordal sparsity pattern if its sparsity pattern can be represented by a chordal graph. The interest of exploiting the chordal sparsity pattern of a matrix is motivated from a well-defined tree decomposition structure of a chordal graph. In detail, if a graph \(\mathcal {G}\) is chordal, then there exists a tree decomposition \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) such that \(\mathcal {U}\) is exactly the set of all maximal cliques of \(\mathcal {G}\). In this case, we call this tree decomposition \(\mathcal {T}\) as the clique decomposition of \(\mathcal {G}\) with \(\text {tw}(\mathcal {G})=|C^*|-1\) exactly, where \(C^*\) is one maximum clique of \(\mathcal {G}\). It has been shown that there exist linear-time algorithms (in terms of the number of vertices and edges) to test chordality of a graph and identify the maximal cliques of a chordal graph efficiently [36]. When \(\mathcal {G}\) is not chordal, there are heuristic algorithms to expand \(\mathcal {G}\) to a chordal graph by adding edges into \(\mathcal {G}\) [19]. Figure 1 illustrates an example of chordal extension, tree decomposition and clique decomposition of a graph.

Illustrations of the definitions in graph theory: a a ladder graph cited from Figure 4 in [28]; b the chordal extension of the ladder graph; c the tree decomposition of the ladder graph, which is also the clique decomposition of the chordal graph in b

Now we give the definition of vertex contraction, which will be an important graph operation in the branching rule to be proposed in Sect. 4.2.

Definition 2.4

(Vertex contraction) Let \(i,j\in \mathcal {V}\) be two different vertices in \(\mathcal {G}\). By \(\mathcal {G}/[i,j]\), we denote the graph obtained from \(\mathcal {G}\) by contracting the two vertices i, j into a new vertex e, which is adjacent to all the neighbors of i and of j.

In the next, we define a similar vertex contraction operation in a tree decomposition.

Definition 2.5

(Vertex contraction in a tree decomposition) Let \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) be a tree decomposition of \(\mathcal {G}\), \(C_s,C_t\in \mathcal {U}\) be two different vertices in \(\mathcal {T}\), and \([C_s,C_t]\in \mathcal {H}\). By \(\mathcal {T}/[C_s,C_t]\), we denote the graph obtained by contracting the two vertices \(C_s, ~ C_t\) into a new vertex \(C_e=C_s\bigcup C_t\), which is adjacent to all the neighbors of \(C_s\) and of \(C_t\).

Notice that, in Definition 2.4, the contracted vertices i and j are not required to be adjacent in \(\mathcal {G}\). They can be selected arbitrarily. However, in Definition 2.5, the pair of vertices \(C_s\) and \(C_t\) is required to be an edge in \(\mathcal {T}\). This requirement is necessary to guarantee that \(\mathcal {T}/[C_s,C_t]\) is still a tree. Following from Definition 2.5, we have the following result.

Lemma 2.1

Let \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) be a tree decomposition of \(\mathcal {G}\) that has k vertices with \(k\ge 2\). Then, for any \([C_s,C_t]\in \mathcal {H}\), \(\mathcal {T}':=\mathcal {T}/[C_s,C_t]\) is also a tree decomposition of \(\mathcal {G}\) with \(k-1\) vertices.

Proof

It is easy to check that \(\mathcal {T}'\) is a tree with \(k-1\) vertices that satisfies properties (P1) and (P2) in Definition 2.2. We show that \(\mathcal {T}'\) also satisfies property (P3). Denote \(C_e\) as the vertex contracted from \(C_s\) and \(C_t\). Let \(C_u,C_v\) be two different vertices in \(\mathcal {T}'\), and \(C_{i_1},\ldots ,C_{i_r}\) be the unique path in \(\mathcal {T}'\) that connects \(C_u\) and \(C_v\), where \(C_{i_1}=C_u\), \(C_{i_r}=C_v\), and r is the number of vertices in the path. Now consider the following three cases:

Case 1) If \(C_e\) is not in the path of \(C_{i_1},\ldots ,C_{i_r}\), then the path is also a path in \(\mathcal {T}\) and thus \(C_u\bigcap C_v\subseteq C_p\) for all \(C_p\in \{C_{i_1},C_{i_2},\ldots ,C_{i_r}\}\).

Case 2) If \(C_e\) is in the interior of the path, i.e., there exists a vertex \(C_{i_w}\), \(1<w<r\), such that \(C_e=C_{i_w}\), then one of the following four paths is the unique path in \(\mathcal {T}\) that connects \(C_u\) and \(C_v\):

Hence, \(C_u \bigcap C_v= C_{i_1} \bigcap C_{i_{r}}\subseteq C_{p}\) holds for each

and either \(C_u \bigcap C_v \subseteq C_{s}\) or \(C_u \bigcap C_v \subseteq C_{t}\) holds (or both). Then, for a vertex \(C'_{p}\) in the path of \(C_{i_1},\ldots ,C_{i_r}\) in \(\mathcal {T}'\), either \(C'_{p} \in \{C_{i_1},\ldots ,C_{i_{w-1}},C_{i_{w+1}},\ldots ,C_{i_{r}}\}\) or \(C'_{p}=C_s \bigcup C_t\) holds. In both situations, we have \(C_u \bigcap C_v \subseteq C'_p\).

Case 3) If \(C_e\) is an end point of the path, without loss of generality, we may assume that \(C_e=C_u\). Then, either \(C_s,C_t,C_{i_2},\ldots ,C_{i_r}\) or \(C_t,C_s,C_{i_2},\ldots ,C_{i_r}\) is a path in \(\mathcal {T}\). In both situations, we have \(C_s\bigcap C_{i_r} \subseteq C_p\) and \(C_t\bigcap C_{i_r} \subseteq C_p\) for all \(C_p \in \{C_{i_2},\ldots ,C_{i_{r-1}}\}\). Then, \(C_u\bigcap C_v=(C_s\bigcup C_t)\bigcap C_{i_r} \subseteq C_p\) for all \(C_p\in \{C_{i_1},C_{i_2},\ldots ,C_{i_r}\}\).

Based on the discussions on the above three cases, we have shown that \(\mathcal {T}'\) also satisfies property (P3) to be a tree decomposition of \(\mathcal {G}\) that has \(k-1\) vertices. \(\square \)

3 A Sparse Polyhedral Relaxation of the Max-Cut Problem

In this section, we analyze how the chordal sparsity pattern affects the hardness of a max-cut problem. To do this, we design a sparse polyhedral relaxation of the max-cut problem. Although this polyhedral relaxation is not computational trackable, it helps us to understand when a max-cut problem can be relaxed to a convex problem that has a small relaxation gap. Such a convex relaxation provides a new approach to designing an effective branching rule for a branch-and-bound algorithm.

By introducing \(X=xx^T\), problem (MC) can be reformulated as follows:

where \(\mathbb {B}^n=\left\{ X=xx^T\in \mathbb {S}^{n} \,|\, x_i\in \{-1,+1\},i=1,\ldots ,n\right\} \). Note that \(xx^T=(-x)(-x)^T\), hence there are \(2^{n-1}\) distinct points in \(\mathbb {B}^n\). Note that (RF1) has a linear objective function, thus the problem can be reformulated as

where \(\mathbb {P}^n\), called the max-cut polytope of order n, represents the convex hull of \(\mathbb {B}^n\). Because the number of extreme points in \(\mathbb {P}^n\) is \(2^{n-1}\), it is impractical to solve (RF2) directly unless n is small. However, when n is large and the problem has certain type of sparsity patterns, we may exploit the sparse patterns to design a tight polyhedral relaxation for (MC) from (RF2).

Assume that \(Q\in \mathbb {S}^n(\mathcal {G})\), where \(\mathcal {G}\) is a connected graph with vertices \(\{1,\ldots ,n\}\). Without loss of generality, we may also assume that \(\mathcal {G}\) is a chordal graph, otherwise we can expand \(\mathcal {G}\) to a chordal one by applying the heuristic algorithms in [19]. Let \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) be a clique decomposition of \(\mathcal {G}\) with \(\mathcal {U}=\{C_1,\ldots ,C_k\}\) being the set of all maximal cliques of \(\mathcal {G}\). If \(X\in \mathbb {P}^n\), then \(X[C_r] \in \mathbb {P}^{n_r}\) holds for each \(r=1,\ldots ,k\), where \(n_r=|C_r|\) and \(\mathbb {P}^{n_r}\) is the max-cut polytope of order \(n_r\) for \(r=1,\ldots ,k\). Hence, we define the following polyhedral relaxation of (RF2):

The constraint \(X[C_r] \in \mathbb {P}^{n_r}\) can be represented by using the exact subgraph constraint formulation [13] or approximated by the facet-defining inequalities [3, 4]. Here, we use the exact subgraph constraints to describe the constraint \(X[C_r] \in \mathbb {P}^{n_r}\). Let \(\mathbb {B}^{n_r}\) be the set of extreme points in \(\mathbb {P}^{n_r}\), i.e., \(\mathbb {B}^{n_r}=\{P^{n_r}_j\,|\,j=1,\ldots ,N_r\}\) with \(N_r=2^{n_r-1}\) and \(P^{n_r}_j\)’s being the extreme points of \(\mathbb {P}^{n_r}\). Then, \(X[C_r] \in \mathbb {P}^{n_r}\) can be decomposed as

A natural question is when the relaxation (PR) becomes tight. The following two lemmas are useful for deriving the conditions of the tightness of (PR).

Lemma 3.1

Assume that \(Q\in \mathbb {S}^n(\mathcal {G})\), \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) is a tree decomposition of \(\mathcal {G}\), and \(C_s\) and \(C_t\) are two adjacent vertices in the tree decomposition. If \(X[C_s] \in \mathbb {P}^{n_s}\), \(X[C_t] \in \mathbb {P}^{n_t}\), and \(|C_s \bigcap C_t|\le 3\), then there exists a matrix \(\bar{P} \in \mathbb {P}^{|C_s\bigcup C_t|}\) such that, for the matrix \(\bar{X}=\mathcal {X}(\bar{P},C_s\bigcup C_t)\), we have \(\bar{X}[C_s]=X[C_s]\) and \(\bar{X}[C_t]=X[C_t]\).

Proof

Denote \(n_{s,t}=|C_s\bigcap C_t|\) and \(N_{s,t}=2^{n_{s,t}-1}\). Let \(\mathbb {B}^{n_{s,t}}\) be the set of extreme points of \(\mathbb {P}^{n_{s,t}}\), represented by \(\mathbb {B}^{n_{s,t}}:=\{P^{n_{s,t}}_j\,|\,j=1,\ldots ,N_{s,t}\}\). Let \(r=s\) or t, we partition the set \(\{1,2,\ldots ,N_{r}\}\) into \(N_{s,t}\) disjoint sets defined by

Then, the decomposition of \(X[C_r]\) in (1) can be reformulated as

Based on the construction of \(\mathcal {D}^r_q\), \(r=s\) or t, we have

When \(n_{s,t}\le 3\), it is easy to check that the points in the set \(\mathbb {B}^{n_{s,t}}\) are affinely independent. Therefore,

For each \(q=1,\ldots ,N_{s,t}\), let \(\mu _q= \sum _{j\in \mathcal {D}^s_q} \lambda ^s_j= \sum _{j\in \mathcal {D}^t_q} \lambda ^t_j\). For each pair \(i\in \mathcal {D}^s_q\) and \(j\in \mathcal {D}^t_q\), there exists an extreme point in \(\mathbb {B}^{|C_s\bigcup C_t|}\), denoted by \(P^{|C_s\bigcup C_t|}_{i,j}\), such that

and

Then, we can construct

and \(\bar{X}=\mathcal {X}(\bar{P},C_s\bigcup C_t)\). It is not difficult to verify that \(\bar{P} \in \mathbb {P}^{|C_s\bigcup C_t|}\), \(\bar{X}[C_s]=X[C_s]\) and \(\bar{X}[C_t]=X[C_t]\). \(\square \)

Lemma 3.2

Assume that \(Q\in \mathbb {S}^n(\mathcal {G})\) and \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) is a tree decomposition of \(\mathcal {G}\). If \(|C_s \bigcap C_t|\le 3\) for all \(s,t\in \{1,\ldots ,k\}\) with \(s\ne t\), then for a matrix \(X\in \mathbb {S}^{n}\), the following two statements are equivalent:

-

(S1) There exists a matrix \(\bar{X} \in \mathbb {P}^{n}\) such that the equation \(\bar{X}[C_r]=X[C_r]\) holds for \(r=1,\ldots ,k\).

-

(S2) \(X[C_r] \in \mathbb {P}^{n_r}\) for \(r=1,\ldots ,k\).

Proof

If (S1) is true, then we naturally have \(X[C_r]=\bar{X}[C_r] \in \mathbb {P}^{n_r}\) for all \(r=1,\ldots ,k\), thus (S2) is true. We now show the reverse direction using the induction on k.

For \(k=2\), following Lemma 3.1, we can construct a matrix \(\bar{X}\in \mathbb {P}^{|C_1\bigcup C_2|}=\mathbb {P}^{n}\) such that \(\bar{X}[C_1]=X[C_1]\) and \(\bar{X}[C_2]=X[C_2]\). Hence (S1) holds for \(k=2\).

Now assume that (S1) can be derived from (S2) when \(k\le q\), where \(q \ge 2\), and consider the case of \(k=q+1\). We choose a leaf vertex \(C_s\) from the tree \(\mathcal {T}\) and let \(C_t\) be the unique neighborhood of \(C_s\). Using Lemma 3.1, we can construct a matrix \(\bar{P}\in \mathbb {P}^{|C_s\bigcup C_t|}\) such that, for \(\hat{X}=\mathcal {X}(\bar{P},C_s\bigcup C_t)\), the equations \(\hat{X}[C_s]=X[C_s]\) and \(\hat{X}[C_t]=X[C_t]\) hold. Then, we generate a new matrix \(\tilde{X}\) by assigning \(\tilde{X}[C_s\bigcup C_t]=\bar{P}\) and \(\tilde{X}_{ij}=X_{ij}\) if \(i\notin C_s\bigcup C_t\) or \(j\notin C_s\bigcup C_t\). Based on this construction, we know that if \(\tilde{X}_{ij}\ne X_{ij}\), then either \(i\in C_s\setminus C_t, j\in C_t\setminus C_s\) or \(i\in C_t{\setminus } C_s, j\in C_s{\setminus } C_t\) must hold. Now we analyze the block \(\tilde{X}[C_u]\) where \(u\notin \{s,t\}\). Since \(C_u \bigcap C_s \subseteq C_t\) (according to the running intersection property (P3) of tree decomposition), which implies \((C_u \bigcap C_s) {\setminus } C_t=\emptyset \), i.e., for any \(i\in C_u\), we have \(i\notin C_s\setminus C_t\). Hence, for any \(i,j\in C_u\), the equation \(\tilde{X}_{ij}=X_{ij}\) must hold, and thus \(\tilde{X}[C_u]=X[C_u]\in \mathbb {P}^{|C_u|}\). Then, \(\tilde{X}\) satisfies \(\tilde{X}[C_r] \in \mathbb {P}^{|C_r|}\) for all vertices \(C_r\) in \(\mathcal {T}/[C_s,C_t]\), and the contracted tree \(\mathcal {T}/[C_s,C_t]\) has \(q=k-1\) vertices. Moreover, let \(C_e\) be the vertex contracted from \([C_s,C_t]\). Then, for a neighbor \(C_r\) of \(C_e\), we have \(C_r\bigcap C_e=C_r \bigcap (C_s\bigcup C_t)=C_r \bigcap C_t\), where the last equality holds due to the property \(C_r \bigcap C_s \subseteq C_t\). Thus, we have shown that \(|C_r\bigcap C_e|=|C_r \bigcap C_t|\le 3\). Consequently, \(\mathcal {T}/[C_s,C_t]\) is another tree decomposition of \(\mathcal {G}\) such that for each pair of vertices \(C_s\) and \(C_t\) in \(\mathcal {T}/[C_s,C_t]\), we have \(|C_s \bigcap C_t|\le 3\). By induction, we know that (S1) is true. \(\square \)

Note that Lemma 2.1 shows that \(\mathcal {T}/[C_s, C_t]\) is a tree decomposition, but not necessary a clique decomposition, of the chordal graph \(\mathcal {G}\). In general, the set \(C_e = C_s \bigcup C_t\) may not be a clique in \(\mathcal {G}\). Hence, in Lemmas 3.1 and 3.2, we assume that \(\mathcal {T}\) is a tree decomposition, rather than a clique decomposition, of \(\mathcal {G}\). Furthermore, in the proof of Lemma 3.2, the induction procedure only requires \(\mathcal {T}/[C_s, C_t]\) to be a tree decomposition. Now, we are ready to prove the conditions for the tightness of the polyhedral relaxation (PR).

Theorem 3.1

Assume that \(Q\in \mathbb {S}^n(\mathcal {G})\) and \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) is a tree decomposition of \(\mathcal {G}\). If \(|C_s \bigcap C_t|\le 3\) for all \(s,t\in \{1,\ldots ,k\}\) with \(s\ne t\), then the polyhedral relaxation (PR) is tight.

Proof

Since (PR) is a relaxation of (RF2), we only need to show that, for every feasible solution X of (PR), there is a feasible solution \(\bar{X}\) of (RF2) such that both X and \(\bar{X}\) give the same objective value. Following Lemma 3.2, we know that X is feasible to (PR) if and only if there is a matrix \(\bar{X} \in \mathbb {P}^{n}\) such that \(\bar{X}[C_r]=X[C_r]\) for \(r=1,\ldots ,k\). Since \(Q\in \mathbb {S}^n(\mathcal {G})\), we have \(Q\bullet \bar{X}=\sum _{[i,j] \in \mathcal {E}} Q_{ij}\bar{X}_{ij}\) and \(Q\bullet X = \sum _{[i,j] \in \mathcal {E}}Q_{ij}X_{ij}\). Note that \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) is a tree decomposition of \(\mathcal {G}\), then, for any \([i,j]\in \mathcal {E}\), there is a \(C_r \in \mathcal {U}\), \(r\in \{1,\ldots ,k\}\), such that \(i, j\in C_r\). Therefore, we have \(Q\bullet \bar{X} =Q\bullet X\). Consequently, (PR) is equivalent to (RF2), and thus tight. \(\square \)

Corollary 3.1

Assume that \(Q\in \mathbb {S}^n(\mathcal {G})\) and \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) is a tree decomposition of \(\mathcal {G}\). If the width of \(\mathcal {T}\) is no more than 3, then the polyhedral relaxation (PR) is tight.

Proof

When the width of \(\mathcal {T}\) is no more than 3, we have that \(|C_s \bigcap C_t|\le 3\) for all \(s,t\in \{1,\ldots ,k\}\) with \(s\ne t\). The conclusion then follows from Theorem 3.1. \(\square \)

Based on Theorem 3.1 and Corollary 3.1, we can see that relaxation (PR) is tight if the treewidth of the sparsity pattern \(\mathcal {G}\) corresponding to Q is no more than three, or if the intersection set \(C_s \bigcap C_t\) among different maximal cliques has no more than three elements. These theoretical results give the sufficient conditions for the tightness of relaxation (PR).

4 The Hierarchy Branching Strategy

The sufficient conditions for the tightness of problem (PR) provide important insights on how to design an effective branch-and-bound rule—if the treewidth of the sparsity pattern is small, and the intersections of different cliques have very few vertices, then (PR) is likely to be a tight relaxation for problem (MC). Motivated by this intuitive idea, we exploit the clique decomposition structure in the sparsity pattern of problem (MC) to design a new branching rule, such that the sparsity patterns of subproblems in the deep level of the proposed branch-and-bound algorithm have small treewidth and small clique intersection. The new features of the proposed algorithm include:

-

A new branching rule, which aims to manipulate the sparsity pattern of the problem toward a sparsity pattern that has a low treewidth and few vertices in the intersections of different cliques.

-

A new cutting-plane selection scheme that only involves the valid inequalities on variables in \(X[C_r]\) for \(r= 1,\ldots ,k\).

To further explain the ideas of the proposed branch-and-bound techniques, we first analyze the relation between the proposed polyhedral relaxation and the classical semidefinite relaxations of the max-cut problem. Then, we develop a new semidefinite relaxation-based branch-and-bound algorithm

4.1 Semidefinite Relaxations

If there is a clique \(C_r\in \mathcal {U}\) with large \(n_r=|C_r|\), then it is computationally expensive to solve (PR) directly. A practical way is to further relax \(X[C_r]\in \mathbb {P}^{n_r}\) to a computationally efficient relaxation. One straightforward way is to relax \(X[C_r]\in \mathbb {P}^{n_r}\) to \(X[C_r]\succeq 0\), and then add valid inequalities on \(X[C_r]\). One set of such valid inequalities is \(X_{ii}=1\) for \(i=1,\ldots ,n\). Then, we arrive at the following semidefinite relaxation:

This relaxation has actually appeared in [12]. It is a compact reformulation of the following classical semidefinite relaxation for (MC):

To see the equivalence between (CSDR) and (SDR), we cite the following result of [18]:

Lemma 4.1

([18], Theorem 7) Assume that \(\mathcal {G}\) is a chordal graph and \(X\in \mathbb {S}^n(\mathcal {G})\). Let \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) be a clique decomposition of \(\mathcal {G}\), where \(\mathcal {U}=\{C_1,\ldots ,C_k\}\) is the set of all maximal cliques in \(\mathcal {G}\). Then, the following two statements are equivalent:

(S1) \(X[C_r]\succeq 0\) for \(r=1,\ldots ,k\).

(S2) There exists an \(\bar{X}\in \mathbb {S}^n_{+}\) such that \(\bar{X}[C_r]=X[C_r]\) for \(r=1,\ldots ,k\).

As indicated in Theorem 3.1 and Corollary 3.1, if the treewidth of sparsity pattern is no more than three or the intersection of any two cliques has no more than three vertices, then the relaxation (PR) is tight. Since (CSDR) is derived by further relaxing (PR), it can be regarded as an approximation of (PR). Hence, when the treewidth of the sparsity pattern is reduced, and the number of elements in the intersection of cliques is small, it is expected that the gap between (CSDR) and (MC) is small. Intuitively speaking, the gap between the polyhedral constraint \(X[C_r] \in \mathbb {P}^{n_r}\) and its semidefinite relaxation \(X[C_r]\succeq 0\) depends on the size of \(C_r\). When \(n_r\) is small, \(\mathbb {P}^{n_r}\) is a low-dimension polyhedral, and the gap between \(\mathbb {P}^{n_r}\) and its semidefinite relaxation is small [21]. In this sense, when the treewidth of the sparsity pattern is small, the semidefinite relaxation (CSDR) or (SDR) may involve a tight relaxation to each submatrix constraint \(X[C_r] \in \mathbb {P}^{n_r}\) such that it is likely to provide a tight bound.

This motivates us to design a new branching rule such that the treewidth of the sparsity pattern and the intersection of any two cliques are reduced as the branch-and-bound tree traverses deep. To achieve this purpose, we propose a new branching rule based on the clique decomposition of sparsity pattern in problem (MC) in the next section.

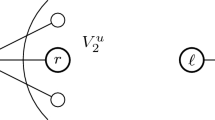

4.2 A Hierarchy Branching Rule

In this subsection, we will develop a new branching rule based on the clique decomposition of the sparsity pattern of problem (MC). In the existing three semidefinite relaxation-based branch-and-bound algorithms [20, 25, 33], two branching rules are widely adopted: the easy first rule (called rule R2) and the difficult first rule (called rule R3). For an optimal solution X of a semidefinite relaxation, rule R2 selects i and j such that their rows of X are closest to a \(\{-1,1\}\) vector, i.e., they are the minimum solution and the second minimum solution of \(\arg \min _{s}\sum _{t=1}^n (1-|X_{st}|)^2\), respectively. Rule R3 picks the one which minimizes \(|X_{ij}|\). One may refer to [20, 33] for more detailed descriptions of rules R2 and R3.

As we can see, both rules R2 and R3 are numerical-driven rules, which only consider the information of the optimal solution of current relaxation problem. However, the branching procedure has significant impacts on the sparsity pattern of the problem. Note that by assigning \(X_{ij}\) to either \(X_{ij}=1\) or \(X_{ij}=-1\), the constraint \(x_i = x_j\) or \(x_i =-x_j\) is added implicitly in the child nodes. This is equivalent as saying that the vertices i and j are contracted to a new vertex in the sparsity pattern of the child problems. Viewing from this perspective, the branching rules R2 and R3 are actually vertex contraction on the sparsity pattern of the problem. Here, different from the above two branching rules, we design a new rule that takes the sparsity pattern of the problem into consideration.

We first analyze how the branching procedure will affect the sparsity pattern of a problem. For a problem with \(Q\in \mathbb {S}^n(\mathcal {G})\), when we select a variable \(X_{ij}\) to branch, the branching procedure will eliminate the variable \(x_j\) by replacing it with \(x_i\) or \(-x_i\) in the child nodes, the dimension of the problem reduces by one, and the matrix Q in the objective function can be updated by Algorithm 1 as given below, where \(p=-1\) in case \(x_i=-x_j\), and \(p=1\) in case \(x_i=x_j\). The set \(\mathcal {V}_Q:= \{\ell _1,\ldots ,\ell _r\}\subseteq \{1,\ldots ,n\}\) in Algorithm 1 defines an ordered sequence, in which, for each \(s=1,\ldots ,r\), \(\ell _s\) represents the vertex in \(\mathcal {G}\) that the sth row (or column) of Q corresponds to. In a branch-and-bound algorithm, \(\mathcal {V}_Q\) is initialized as the set of all vertices in the root node. After some branching steps, some variables have been eliminated, and the number of undetermined variables in the objective function will be reduced to r with \(r<n\). In general, \(\mathcal {V}_Q\) in an enumeration node is a subset of \(\mathcal {V}\). Algorithm 1 provides a general procedure for the case \(Q\in \mathbb {S}^r\) that corresponds to a subset of the vertices. The new vertex generated by contracting i and j is denoted by e. Then, we update \(\mathcal {V}_Q\) to a new ordered sequence \(\mathcal {V}_{Q'}\) according to the following \(\mathcal {V}\)-Procedure:

\(\mathcal {V}\)-Procedure: Replace the vertex i in the ordered sequence \(\mathcal {V}_Q\) by e, and delete the vertex j from the sequence to generate a new sequence \(\mathcal {V}_{Q'}\).

To simplify the notations, we use \(Q_{\mathcal {V}_Q(s,t)}\) to represent the entry of Q in the row that corresponds to s and the column that corresponds to t, where s and t are two vertices in \(\mathcal {V}_{Q}\). The next lemma shows how the branching procedure affects the sparsity pattern of the problem.

Lemma 4.2

For \(Q\in \mathbb {S}^r(\mathcal {G})\), if \(Q'\) is the matrix updated by Algorithm 1 with a given pair of indices \(i,j \in \mathcal {V}_{Q}\), then \(Q'\in \mathbb {S}^{r-1}(\mathcal {G}/[i,j])\).

Proof

Let e denote the vertex contracted from i and j and \(\mathcal {G}'=\mathcal {G}/[i,j]\). For two vertices \(s,t\in \mathcal {V}_{Q'}\), if \(s\ne e\) and \(t\ne e\), then the entity \(Q'_{\mathcal {V}_Q'(s,t)}\) is nonzero if and only if \(Q_{\mathcal {V}_Q(s,t)}\) is a nonzero of Q because the row and column in Q, corresponding to s and t, respectively, do not change in this case. Since \(Q_{\mathcal {V}_Q(s,t)}\ne 0\), [s, t] is an edge of \(\mathcal {G}\). Therefore, it is also an edge of \(\mathcal {G}'\). On the other hand, when one of the two vertices is e, say, \(s=e\) and \(t\ne e\), then \(Q'_{\mathcal {V}_Q'(s,t)}\) is nonzero if either \(Q_{\mathcal {V}_Q(i,t)}\) or \(Q_{\mathcal {V}_Q(j,t)}\) is nonzero, i.e., either [i, t] or [j, t] is an edge of \(\mathcal {G}\). In this case, according to the definition of vertex contraction, [e, t] is an edge of \(\mathcal {G}'\). Consequently, we have \(Q'\in \mathbb {S}^{r-1}(\mathcal {G}')\). \(\square \)

Lemma 4.2 shows that the branching procedure is in fact a vertex contraction on the sparsity pattern of the problem. Thus, the sparsity pattern will be changed in general. However, the chordal structure in the sparsity pattern will be kept when it is a chordal graph and an appropriate variable is carefully selected to branch. We prove this result in the next theorem.

Theorem 4.1

Let \(\mathcal {G}=\{\mathcal {V},\mathcal {E}\}\) be a chordal graph and \([i,j]\in \mathcal {E}\), then the graph \(\mathcal {G}'=\mathcal {G}/[i,j]\) remains to be a chordal graph.

Proof

Let e represent the new vertex in \(\mathcal {G}'\) that is contracted from vertices i, j. Let \(\ell _1,\ldots ,\ell _r,\ell _1\) be a cycle in \(\mathcal {G}'\) of length \(r\ge 4\), we show that there is a chord in the cycle. Consider the following situations: If \(e\notin \{\ell _1,\ldots ,\ell _r\}\), then \(\ell _1,\ldots ,\ell _r,\ell _1\) is also a cycle in \(\mathcal {G}\) of length r, and the chord of the cycle in \(\mathcal {G}\) is also a chord of the same cycle in \(\mathcal {G}'\). Otherwise, when \(e\in \{\ell _1,\ldots ,\ell _r\}\), without loss of generality, the cycle can be represented by \(e,\ell _2,\ldots ,\ell _r,e\). There are four possible cases to be discussed.

Case 1: The sequence \(i,\ell _2,\ldots ,\ell _r,i\) is a cycle in \(\mathcal {G}\). In this case, there exists a chord of the cycle in graph \(\mathcal {G}\). If the chord is constructed by \([\ell _s,\ell _t]\) with \(\ell _s,\ell _t\in \{\ell _2,\ldots ,\ell _r\}\), then \([\ell _s,\ell _t]\) is also a chord of the cycle \(\ell _1,\ldots ,\ell _r,\ell _1\) in \(\mathcal {G}'\). Otherwise, the chord is constructed by \([i,\ell _t]\) for some \(\ell _t\in \{\ell _3,\ldots ,\ell _{r-1}\}\), then \([e,\ell _t]\) is a chord of the cycle \(\ell _1,\ldots ,\ell _r,\ell _1\) in \(\mathcal {G}'\).

Case 2: The sequence \(j,\ell _2,\ldots ,\ell _r,j\) is a cycle in \(\mathcal {G}\). Using the same arguments as Case 1, we can show that there exists a chord of the cycle \(\ell _1,\ldots ,\ell _r,\ell _1\) in \(\mathcal {G}'\).

Case 3: The sequence \(i,j,\ell _2,\ldots ,\ell _r,i\) is a cycle of length \(r+1\) in \(\mathcal {G}\). In this case, there exists a chord of the cycle in graph \(\mathcal {G}\). If the chord is constructed by \([\ell _s,\ell _t]\) with \(\ell _s,\ell _t\in \{\ell _2,\ldots ,\ell _r\}\), then \([\ell _s,\ell _t]\) is also a chord of the cycle \(\ell _1,\ldots ,\ell _r,\ell _1\) in \(\mathcal {G}'\). Otherwise, the chord is constructed by \([w,\ell _t]\) for \(w\in \{i,j\}\) and \(\ell _t\in \{\ell _2,\ldots ,\ell _{r}\}\). We further consider the three subcases: (i) \(\ell _t\in \{\ell _3,\ldots ,\ell _{r-1}\}\). In this subcase, \([e,\ell _t]\) is a chord of the cycle \(\ell _1,\ldots ,\ell _r,\ell _1\) in \(\mathcal {G}'\); (ii) \([w,\ell _t]=[i,\ell _2]\). In this subcase, \(i,\ell _2,\ldots ,\ell _r,i\) is another cycle in \(\mathcal {G}\), then this subcase is reduced to Case 1, in which we have shown that there exists a chord of \(\ell _1,\ldots ,\ell _r,\ell _1\) in \(\mathcal {G}'\); (iii) \([w,\ell _t]=[j,\ell _r]\). In this subcase, \(j,\ell _2,\ldots ,\ell _r,j\) becomes a cycle in \(\mathcal {G}\), and this subcase is reduced to Case 2, thus there exists a chord of \(\ell _1,\ldots ,\ell _r,\ell _1\) in \(\mathcal {G}'\).

Case 4: The sequence \(j,i,\ell _2,\ldots ,\ell _r,j\) is a cycle of length \(r+1\) in \(\mathcal {G}\). Using the same arguments as Case 3, we can show that there exists a chord of the cycle \(\ell _1,\ldots ,\ell _r,\ell _1\) in \(\mathcal {G}'\).

In all, we conclude that for any cycle in \(\mathcal {G}'\) of length \(r\ge 4\), there must exist a chord in the cycle. Hence \(\mathcal {G}'\) is chordal. \(\square \)

Theorem 4.1 shows that the chordal structure of the sparsity pattern will be kept if we only select the variables \(X_{ij}\) with \([i, j]\in \mathcal {E}\) as candidates for branching. As we have mentioned, the purpose of our new branching rule is to reduce the treewidth of sparsity pattern, if possible, after branching. To do this, we need the next theorem.

Theorem 4.2

Let \(\mathcal {G}=\{\mathcal {V}, \mathcal {E}\}\) be a chordal graph, for any \([i,j]\in \mathcal {E}\), if \(\mathcal {T}=(\mathcal {U},\mathcal {H})\) with \(\mathcal {U}=\{C_1,\ldots ,C_k\}\) being a clique decomposition of \(\mathcal {G}\), then \(\mathcal {T}'=(\mathcal {U}',\mathcal {H}')\) is a tree decomposition of \(\mathcal {G'}=\mathcal {G}/[i, j]\) with

where e is the new vertex generated by the contraction of the vertices i and j in \(\mathcal {G}\), and for two vertices \(C'_s,C'_t\in \mathcal {U}'\), \([C'_s,C'_t]\in \mathcal {H}'\) if and only if \([C_s,C_t]\in \mathcal {H}\).

Proof

It is easy to verify that \(\mathcal {T}'\) satisfies properties (P1) and (P2) in Definition 2.2, we only need to show that it satisfies property (P3’).

For a vertex w in \(\mathcal {G'}\), if \(w\ne e\), then the set of vertices \(\{C'_t\,|\, w\in C'_t, C'_t \in \mathcal {U}'\}\) is the same as the set of vertices \(\{C_t\,|\, w\in C_t, C_t \in \mathcal {U}\}\), thus property (P3’) holds automatically. Otherwise, we consider the case that \(w=e\). Since \([i,j]\in \mathcal {E}_\mathcal {G}\), there is a vertex \(C_r\in \mathcal {U}\) such that both i and j are in \(C_r\). Based on property (P3’) for \(\mathcal {T}\), the sets \(\mathcal {U}_i:=\{C_t\,|\,C_t\in \mathcal {U},i\in C_t\}\) and \(\mathcal {U}_j:=\{C_t\,|\,C_t\in \mathcal {U},j\in C_t\}\) induce connected subgraphs in \(\mathcal {T}\), denoted by \(\mathcal {T}[\mathcal {U}_i]\) and \(\mathcal {T}[\mathcal {U}_j]\), respectively. Since \(C_r\in \mathcal {U}_i \bigcap \mathcal {U}_j\), the intersection of the two connected subgraphs \(\mathcal {T}[\mathcal {U}_i]\) and \(\mathcal {T}[\mathcal {U}_j]\) is nonempty. Hence, the subgraph \(\mathcal {T}[\mathcal {U}_i \bigcup \mathcal {U}_j]\) is also a connected subgraph in \(\mathcal {T}\). Note that for each \(C'_t\in \mathcal {U}'\), we have \(e\in C'_t\) if and only if \(i\in C_t\) or \(j\in C_t\), i.e., \(C_t\in \mathcal {U}_i \bigcup \mathcal {U}_j\). Then, the set of vertices in \(\mathcal {U}'\) that contains e induces a connected subgraph of \(\mathcal {T}'\). Therefore, property (P3’) holds for \(\mathcal {T}'\). \(\square \)

Theorem 4.2 shows that it is possible to reduce the treewidth of the problem if the indices i and j of the selected variable \(X_{ij}\) are both in the same maximum clique of the \(\mathcal {G}\). Moreover, we have the following two observations:

-

(i)

If \(i,j\in C_r\) for some \(r\in \{1,\ldots ,k\}\), then \(|C'_r|=|C_r|-1\).

-

(ii)

If \(i,j\in C_s \bigcap C_t\) for some \(s,t\in {1,\ldots ,k}\), then \(|C'_s\bigcap C'_t |=|C_s \bigcap C_t|-1\).

Hence, if we select a suitable pair \([i,j]\in \mathcal {E}\), then we may reduce not only the number of vertices in some cliques, but also the number of vertices in the intersections of different maximal cliques.

On the other hand, if we arbitrarily select a pair \([i,j]\notin \mathcal {E}\) for contracting, then the chordal structure of the sparsity pattern may be destroyed and the treewidth of graph \(\mathcal {G}\) may even increase. For example, consider a simple graph \(\mathcal {G}\) with 5 vertices \(\{1,\ldots ,5\}\) and 4 edges

It is easy to see that \(\mathcal {G}\) is a tree (and also a chordal graph), with treewidth being 1. Let \(\mathcal {G}'=\mathcal {G}/[1,5]\). Then, \(\mathcal {G}'\) becomes a circle with vertices \(\{e,2,3,4\}\) and edges

It is straightforward to verify that \(\mathcal {G}'\) is not a chordal graph and the treewidth of \(\mathcal {G}'\) is increased to 2. Hence, the sparsity pattern of the problem may become more complicated if the pair i, j to be contracted is not well selected.

The above simple example indicates that we should carefully choose a variable to branch in the algorithm to keep the chordal structure intact. In our branch-and-bound algorithm, we only select the variables \(X_{ij}\) with \([i,j]\in \mathcal {E}\) as candidates to branch. An immediate question is whether such an entry exists when the relaxation (SDR) is not tight. The following theorem gives a positive answer to this question.

Theorem 4.3

Assume that \(\mathcal {G}=\{\mathcal {V},\mathcal {E}\}\) is a connected chordal graph. Let \(X^*\) be an optimal solution of (SDR). If \(|X^*_{ij}|=1\) for all \([i,j]\in \mathcal {E}\), then problem (SDR) is tight.

Proof

We prove the theorem by showing that \(X^*\) is a rank-one matrix. Since \(X^* \succeq 0\), it can be decomposed as \(X^* = V^\top V\), where \(V:=[v_1,\ldots , v_n]\in \mathbb {S}^n\) and \(v_i\in \mathbb {R}^n\) for \(i=1,\ldots ,n\). We have \(v_i^\top v_i = X_{ii}^* = 1\) for all \(i=1,\ldots ,n\), thus each column of V is a unit vector. For any entry \(X^*_{ij}\) with \([i,j]\in \mathcal {E}\), since \(|X^*_{ij}|=1\), we have \(|v_i^\top v_j| = |X^*_{ij}|=1\). Following the Cauchy–Schwartz inequality \(|v_i^T v_j|\le \Vert v_i\Vert \Vert v_j\Vert \), together with \(\Vert v_i\Vert =1\), \(\Vert v_j\Vert =1\), the equality \(|v_i^T v_j|=1\) holds if and only if \(v_i=v_j\) or \(v_i=-v_j\). Also note that \(\mathcal {G}\) is connected, then for any \(j\in \{2,\ldots ,n\}\), there exists a path \(i_0,\ldots ,i_r\) with \(i_0=1\) and \(i_r=j\), such that \((i_t,i_{t+1})\in \mathcal {E}\) for \(t=0,1,\ldots ,r-1\). Hence, \(v_1 = v_j\) or \(v_1 = -v_j\) for any \(j\in \{2,\ldots ,n\}\). It follows that V is a rank-one matrix, so is \(X^*\). \(\square \)

Theorem 4.3 shows that if the relaxation (SDR) is not tight, then there must exist an edge \([i, j]\in \mathcal {E}\) such that \(|X^*_{ij}|<1\), where \(X^*\) is an optimal solution of (SDR). Therefore, it is valid to design a branching rule that only selects variables \(X_{ij}\) with \([i, j]\in \mathcal {E}\) to branch.

Obviously, there could be multiple candidates \(X_{ij}\) with \([i,j]\in \mathcal {E}\). To pick the one that is effective in reducing the treewidth and the number of vertices in the intersections of maximal cliques, the proposed branching rule needs to consider the priorities of the edges [i, j] in \(\mathcal {E}\). That is, in order to reduce the treewidth of the sparsity pattern, the edges in the maximum cliques should have higher priorities. Similarly, in order to reduce the number of vertices in the intersections of cliques, the edges in many different maximal cliques will have higher priorities. For this purpose, we define two priority values for each \([i,j]\in \mathcal {E}\):

-

\(P_{ij}^1 = \max _{i,j \in C_r} {|C_r|}\) is the largest cardinality of the maximal cliques that contain both vertices i and j.

-

\(P^2_{ij}=\left| \{C_r \,|\, i, j \in C_r, ~r=1,\ldots ,k\}\right| \) is the number of different maximal cliques that contain both vertices i and j.

A good candidate \([i,j]\in \mathcal {E}\) should have large values in both \(P^1_{ij}\) and \(P^2_{ij}\). We then define the unified priority value \(P_{ij}\) of [i, j] as the cardinality of the set \(\mathcal {U}_{i,j}\), where

Note that \(\mathcal {G}\) is assumed to be a chordal graph (or have been expanded to a chordal graph) and the set \(\mathcal {U}_{i,j}\) consists of all vertices v that are adjacent to both i and j in \(\mathcal {G}\), \(P_{ij}\) actually denotes the number of vertices that are located in the same cliques with i and j. When \(P_{ij}\) is large for some \([i,j]\in \mathcal {E}\), there are many vertices v adjacent to both i and j. If these vertices v belong to one maximal clique, then the cardinality of this maximal clique is large and \(P_{ij}^1\) is large. On the other hand, if these vertices v belong to different maximal cliques, then \(P_{ij}^2\) is large. Therefore, if \(P_{ij}\) is large, then either \(P^1_{ij}\) or \(P^2_{ij}\) should be large. In this sense, \(P_{ij}\) simultaneously takes the priority values \(P^1_{ij}\) and \(P^2_{ij}\) into consideration. We prefer to select the variable \(X_{ij}\) with the largest priority value \(P_{ij}\) and \([i,j]\in \mathcal {E}\) as the one to branch.

Based on the above discussion, we now propose our new branching rule, referred to as hierarchy branching rule (rule HB in short): From the set \(\big \{ [i,j] \in \mathcal {E} \,\big |\,|X_{ij}|<1 \big \},\) select the pair [i, j] that achieves the largest priority value \(P_{ij}\) for branching. If there are multiple edges that achieve the largest priority, then we break the tie by using the conventional branching rule R3, i.e., we select the variable such that \(|X_{ij}|\) achieves the smallest value among the candidate edges [i, j] that have the largest priority. If there still exist more than one candidate, then we randomly select one among them.

4.3 A Sparsity-Pattern-Driven Cutting-Plane Scheme

In this subsection, we develop a cutting-plane selection scheme based on the sparsity pattern to further improve the efficiency of the branch-and-bound method for the max-cut problem.

Cutting planes are usually added into the relaxation problem (SDR) to obtain a tighter upper bound for problem (MC). One set of such cutting planes are the so-called triangle inequalities proposed in [20]:

Let \(X^*\) be an optimal solution of (SDR) before adding any triangle inequalities in (3). It is not difficult to find the most violated \(\gamma \) inequalities from (3) by \(X^*\) and add them into (SDR). We call this process as traditional cutting-plane (TCP in short) scheme.

To keep the algorithm efficient it is extremely important to select just a small number of promising inequalities from the vast set of violated triangle inequalities. Hence, we derive a heuristic criterion based on the clique decomposition to select the violated triangle inequalities from a subset of (3). By the equivalent formulation of (SDR), i.e., problem (CSDR), we can see that variable \(X_{ij}\) can be eliminated whenever i and j do not simultaneously belong to any clique \(C_r\), \(r=1,\ldots ,k\). In this case, the triangle inequalities involving \(X_{ij}\) would be expected to have little improvement in the bound. To avoid selecting these triangle inequalities, we only enumerate triangle inequalities from the following set:

for each clique \(C_r\), \(r=1,\ldots ,k\), and add no more than \(\gamma \) triangle inequalities that are most violated by \(X^*[C_r]\) into (SDR). We call this process as sparsity driven cutting-plane (SCP in short) scheme. Note that SCP is different from TCP in that the entries i, j and t are required to be in the same clique \(C_r\).

Following the SCP scheme, the added valid inequalities have the form \(A_i \bullet X\le b_i\), \(i=1,\ldots ,m\le \gamma \) with \(A_i \in \mathbb {S}^n(\mathcal {G}[C_r])\), and \(\mathcal {G}[C_r]\) being the subgraph of \(\mathcal {G}\) induced by \(C_r\). Thus, as a byproduct of SCP, the sparsity pattern of matrix \(A_i\) is in fact a subgraph of \(\mathcal {G}\). That is, SCP will not complicate the sparsity pattern of subproblems in the branch-and-bound tree.

We would like to point out that the redundancy of a variable \(X_{ij}\) in (CSDR) does not indicate that all triangle inequalities in (3) involving \(X_{ij}\) are ineffective in improving the tightness of (SDR). The effect of these triangle inequalities depends on the structure of the problem. More discussions on the effectiveness of the SCP and TCP schemes will be provided in Sect. 6.

5 A New Branch-and-Bound Algorithm

In this section, we describe the main steps of the proposed semidefinite relaxation-based branch-and-bound algorithm. The main framework of the proposed algorithm is similar to the ones in Biq Mac [33], except that the proposed one uses the new branching rule and cutting-plane selection scheme described in Sects. 4.2 and 4.3, respectively. The main steps and some implementation details of the proposed algorithm are provided as follows.

Preprocessing Before solving the problem, we first construct a graph to represent the sparsity pattern of the matrix Q and expand the graph to a chordal graph by using the greedy fill-in heuristic chordal extension algorithm in [24]. The expanded graph is denoted as \(\mathcal {G}\). We also obtain the clique decomposition of \(\mathcal {G}\) by using Algorithm 3.1 in [38, Section 3.5]. The details of Algorithm 3.1 can be referred to [38, Section 4].

Problem enumeration procedure The problem enumerated by the branch-and-bound algorithm is represented by a tree. In each enumeration step, we adopt the best-first search rule. In other words, we choose the leaf node in the tree that has the largest upper bound. If there are multiple leaf node that achieve the same largest upper bound, we break the tie arbitrary.

Iterative upper bound procedure We solve the relaxation (SDR) by Mosek [30], a commercial interior-point based solver. Meanwhile, an iterative cutting-plane method that is similar to the one in [20] is adopted as follows: Valid triangle inequalities are iteratively added into (SDR) after solving the semidefinite relaxation, and then the relaxation with newly added triangle inequalities is re-optimized by Mosek. Following the SCP scheme, we only select the violated triangle inequalities from (4). In our implementation, we add at most 250 valid triangle inequalities in each iteration, and the number of iterations is limited to 30. Moreover, we set an early-stop rule to terminate the iteration if one of the following two conditions holds: (i) Let \(\ell ^*\) be the best-known lower bound and \(u_j\) be the upper bound obtained in the jth iteration. Under the assumption that the entries in Q are all integers, the iteration terminates immediately when \(u_j<\ell ^*+0.99\). (ii) If the improvement in the successive iterations is marginal, then we terminate the iteration. In our implementation, when \(\frac{u_j-u_{j+1}}{u_0-\ell ^*}<0.1\) for some j, the iteration terminates, where \(u_0\) is the upper bound obtained by solving the initial relaxation (SDR) without adding any triangle inequality.

Lower bound procedure For each enumeration node, after obtaining the optimal solution of relaxation (SDR), we use the classical semidefinite relaxation-based rounding scheme in [16] to generate a feasible solution of problem (MC). The best-known feasible solution is recorded, and its corresponding objective value \(\ell ^*\) serves as a global lower bound of problem (MC).

Branching rule We compute the priority value \(P_{ij}\) for each \([i,j]\in \mathcal {E}\), and select the variable \(X_{ij}\) to branch following the rule HB described at the end of Sect. 4.2.

We would like to point out that the framework of the proposed branch-and-bound algorithm is flexible. For example, the SCP scheme can be replaced by TCP scheme in the iterative upper bound procedure, and other branching rules, such as rule R2, instead of rule HB, can be applied in branching step.

Since it is not always more efficient to solve (CSDR) than (SDR) by using an interior-point-based solver when the maximal cliques in the clique decomposition have large intersections with each other, we use (SDR) rather than (CSDR) for computing the upper bound. In fact, we introduce (CSDR) for analyzing its connection to (PR) and explaining how the chordal sparsity pattern affects the tightness of (SDR), rather than for computational purpose.

6 Computational Experiments

In this section, we test the proposed algorithm with different options on randomly generated instances. The algorithm is implemented using MATLAB R2017a on a personal computer with Intel Core i7-9700 CPU and 16 GB RAM. We adopt the commercial solver Mosek [30] (version 9.2) to solve the semidefinite relaxations in the proposed algorithm. We first describe different option settings of the proposed algorithm, and the datasets used in our experiment, and then present and analyze the numerical results. More experiments on larger instances and discussions on the feature of the proposed algorithm are presented at the end of this section.

6.1 Experiment Settings and Datasets

To study the effects of rule HB and SCP scheme, we implemented the proposed algorithm with three different variants as follows.

HB-SCP algorithm This is the exact algorithm described in Sect. 5, which applies both of rule HB and SCP scheme.

HB algorithm This algorithm uses TCP, instead of SCP, scheme in the upper bound procedure. The purpose here is to study the effectiveness of SCP scheme.

R2 algorithm This algorithm turns off both rule HB and SCP scheme, but applies rule R2 and TCP scheme. This option is similar to Biq Mac [33], except that we solve the semidefinite relaxations using Mosek while Biq Mac uses the conic bundle algorithm.

We have also implemented another variant called “R3 algorithm,” which uses rule R3 as the branching rule. However, our preliminary numerical results show that this variant performed worse than the R2 algorithm. This phenomenon has also been observed in other works, such as [20, 25] and [33]. Hence, we use R2 algorithm as a benchmark to study the effectiveness of rule HB and SCP scheme. Since all three algorithms use the same solver and run on the same computer, the comparison between these three algorithms is fair, and can reflect the effects of rule HB and SCP scheme.

Moreover, we have tested BiqCrunch [26] in our experiment. As introduced in Sect. 1, BiqCrunch is currently the best semidefinite relaxation-based branch-and-bound solver for solving the max-cut problemFootnote 2. The code of BiqCrunchFootnote 3 was compiled by GCC compiler and ran on the same computer.

Since most of the public benchmark test sets, such as Biq Mac Library,Footnote 4 do not have instances with any chordal sparsity pattern, we generate random instances of five different sparsity patterns with wide applications for our experiment.

Set A Ten instances with the overlapping block diagonal sparsity pattern are randomly generated in this set. In this sparsity pattern, there are k blocks, each of which contains w fully connected vertices. The two adjacent blocks are overlapped, with s vertices in their intersection. See Fig. 2a for an illustration. The vertices in the overlapping block diagonal sparsity pattern are local-clustered and graph-connected. Such a graph often appears in a social network, where each vertex is an individual and an edge represents the relation between two individuals. The individuals in a common community are connected with each other (locally clustered) while individuals in different communities are connected via others [5]. In our experiment, we set \(k=4\), \(w=30\) and \(s=10\). The generated instances are indexed by “Block_w_s_k_id,” where id\(\in \{1,\ldots ,10\}\) denotes the index of the instance. Given the sparsity pattern with the above parameters, the graph has 90 vertices and 1695 edges. The density of the graph is \(42.3\%\).

Set B Ten instances with the banded sparsity pattern are generated in this set. The banded sparsity often appears in the finite-difference and finite-element models for various practical problems [17, 37]. In this sparsity pattern, two vertices \(i,j\in \{1,\ldots ,n\}\) are adjacent if and only if \(|i-j|\le w\), where w is a positive integer that denotes the bandwidth. In our experiment, we set \(n=100\) and \(w=30\). The generated instances are indexed by “Band_n_w_id.” Each graph in this set has 100 vertices and 2635 edges with density being \(53.2\%\).

Set C Ten instances with the block-arrow sparsity pattern are generated in this set. The matrices of block-arrow sparsity pattern appear in Statistics [1] and power network [35]. In this sparsity pattern, there are n vertices in total, and the first k vertices are connected with each other while any vertex \(i\in \{k+1,\ldots ,n\}\) is adjacent to the first k vertices. We set \(n=120\) and \(k=40\) in our experiment. The generated instances are indexed by “Arrow_n_k_id.” Each graph in this set has 120 vertices and 4100 edges with density being \(57.4\%\).

Set D Ten instances with the sparsity pattern derived from a disk graph are generated in this set. The disk graph appears in communication networks [10]. For each instance, we first generate a random disk graph following the procedure in [10, Section 5.1]: We sample n points \(p_1,\ldots ,p_n \in \mathbb {R}^2\) uniformly on the unit square. Edges are created between pairs of vertices i and j if \(\Vert p_i-p_j\Vert \le d\), where \(d>0\) is a constant parameter. The graph is then expanded with edges between pairs of vertices which have a neighbor in common. We set \(n=100\) and \(d=0.3\). The generated instances are indexed by “DiskGraph_n_id.” Different from the previous three sparsity patterns that have deterministic structures in the positions of nonzero elements, the number and positions of nonzero elements in the disk-graph sparsity pattern are random. Hence, the density of different instances varies. Our Monte Carlo simulation shows that the expected density of the instances in this set is about \(56.8\%\).

Set E Ten instances with the sparsity pattern derived from a random graph are generated in this set. In a random graph with n vertices, each pair of vertices is adjacent with probability p. This type of sparsity pattern is a generic random sparsity pattern in which it does not have any deterministic structure. In our experiment, we set \(n=100\) and \(p=0.3\). The generated instances are indexed by “Rand_n_P_id,” where \(P=100p\). The expected number of edges in such a graph is 1485, and the density is expected to be \(30\%\).

Please refer to Fig. 2 for an illustration of the five sparsity patterns. In addition, we generate another set of test instances by using RUDY [34] as follows.

Set F Ten instances with the random-graph sparsity are generated in this set. The random graphs are generated by issuing the command “rudy -rnd_graph n d s1 -random -50 50 s2” in RUDY, where \(n=120\) is the number of vertices, \(d\in \{20,50\}\) represents the density, and s1 and s2 are two seeds that are both equal to id. Note that the first five instances in this set have a density of \(20\%\), and the other five instances have a density of \(50\%\). The generated instances are indexed by “Rudy_n_d_id.”

For every instance with a given sparsity pattern \(\mathcal {G}=(\mathcal {V},\mathcal {E})\), we uniformly sample the entry \(Q_{ij}\) of the symmetric matrix Q from \(\{-50,-49,\ldots ,50\}\) for each edge \([i,j]\in \mathcal {E}\). In total, we have 60 test instances, whose size ranges from 90 to 120 and density from \(20\%\) to \(57.4\%\). The sparsity patterns in Sets A-C have the deterministic structure. The aim of the experiments on these instances is to test the proposed algorithms on problems with special structures arisen in various practical applications. The sparsity patterns in Sets D-F are derived from random graphs. The sparsity pattern for Set D contains some geometrical information: the vertices are defined by a set of points in the unit square, and a point is only connected with its nearby points. In comparison, the sparsity patterns for Sets E and F are derived from generic random graphs that do not have any structural information. By evaluating the performance of the proposed algorithm on these random sparsity patterns, we could assess whether the proposed algorithm remains to be efficient on general sparsity patterns.

6.2 Numerical Results

The numerical results for Sets A-F are reported in Tables 1, 2, 3, 4, 5 and 6, respectively. All computational time is the wall clock time in seconds. The computation time for both HB-SCP algorithm and HB algorithm includes the time for chordal extension, clique decomposition and the branch-and-bound procedure. Since the time for applying chordal extension and clique decomposition is much less than the one for branch-and-bound procedure, we do not show them separately in the tables.

First, we evaluate the effectiveness of SCP scheme by comparing the results of HB-SCP and HB algorithms. The computational time in Tables 1 and 4 shows that HB-SCP runs faster than HB on all instances in Set A, and slightly faster on 9 out of 10 instances in Set D. However, Tables 2, 3, 5 and 6 show that HB-SCP performs worse than HB on almost all instances in Sets B, C, E and F. Based on this observation, we could conclude that the effectiveness of SCP scheme depends on the sparsity pattern. An intuitive reason for the above observation is that the sparsity patterns in Sets A and D are locally clustered while they are not in other sets. For example, in the overlapping block diagonal sparsity pattern in Set A, the vertices in the first block are not adjacent with the vertices in the third or fourth blocks. Specifically, for any two vertices i and j that are located in the first and fourth block, respectively, they do not have a common neighbor vertex. Therefore, the interaction between the two vertices is extremely weak. Adding valid triangle inequalities on vertices with weak interaction may lead to marginal improvement in the upper bound. Similarly, for the sparsity pattern in set D, a vertex in a disk graph is only adjacent to a small number of nearby vertices. On the other hand, the SCP scheme is less effective for the instances in Sets B, C, E and F because the vertices in these sets have strong connections with each other. Consider the block-arrow sparsity pattern in set C, each pair of vertices i and j in the graph is either adjacent, or has at least 40 common neighbor vertices. Based on the above analysis, we may conclude that the SCP scheme is effective on instances whose sparsity pattern is similar to a locally clustering graph. This is true when the intersections between different pairs of maximal cliques in a tree decomposition have only a few vertices. On the other hand, if the intersections between different pairs of maximal cliques have many vertices, then the SCP scheme may filter some promising valid triangle inequalities, thus lead to only a small improvement in upper bounds.

Second, we study the effects of the new branching rule by comparing the HB algorithm with R2 algorithm. Note that both HB and R2 algorithms use the same upper bound scheme, the only difference between the two algorithms is the branching rule. Hence, the comparison shows the effects of different branching rules. We first analyze the results for Sets A-C, whose instances have deterministic sparsity patterns. From Tables 1, 2 and 3, we can see that HB runs faster than R2 on most test instances, except two easy instances in Set B that can be solved within no more than 10 enumerations. Particularly, HB runs at least five times faster than R2 for the instances with the block-arrow sparsity pattern in Set C. These results show that, by exploiting the clique decomposition of sparsity pattern with a deterministic structure, the new branching rule could be very effective in improving the overall performance of a branch-and-bound algorithm. We then analyze the results of Sets D-F, whose sparsity patterns are random. In Table 4, we discover that HB performs better than R2 on all test instances, and the dominance is significant on several instances (including instances 1, 9 and 10 in set D). This result shows that rule HB is effective for test instances with disk-graph sparsity pattern. For the instances in Sets E and F, we observe from Tables 5 and 6 that HB runs faster than R2 on 9 out of 10 test instances in Set E, and 4 out of 5 instances with density \(20\%\) in Set F. This result indicates that rule HB is effective when the density of the random graph is relatively low. However, as shown in Table 6, HB performs worse than R2 for most of the instances with a density of \(50\%\). The reason is that when the density of a random graph becomes high, after the chordal extension, the graph is close to a complete graph, and rule HB becomes almost equivalent to rule R3 in this case. Since R3 performs worse than R2 in general, the proposed rule HB is dominant by rule R2 for the dense random sparsity patterns. Lastly, for all instances in Tables 1, 2, 3, 4, 5 and 6, HB enumerates fewer nodes than R2. This is reasonable because rule HB makes the sparsity pattern in the child nodes more likely to satisfy the sufficient conditions in Theorem 3.1 and Corollary 3.1. Consequently, the bounds of child nodes under rule HB are more likely to be tight, and the nodes can be pruned earlier under rule HB.

Finally, we compare HB-SCP and HB with BiqCrunch. By comparing the computational time in Tables 1, 2, 3, 4, 5 and 6, we discover that HB-SCP might be the best choice for Sets A and D, and HB performs best on most test instances in Sets B, C and E, and the instances with density \(20\%\) in Set F. Since HB-SCP, HB and BiqCrunch use different methods to solve their semidefinite relaxations, and these algorithms are implemented in different programming language, it might be hard to conclude that our new branching rule is the key factor that leads to the better performance. However, by comparing the computational time of the proposed algorithms with BiqCrunch, we may conclude that HB-SCP and HB algorithms do perform better than BiqCrunch on randomly generated test instances that have certain types of sparsity patterns.

Although we listed the number of nodes enumerated by all algorithms in the tables, it is not a major indicator of algorithmic performance in this case. It is quite possible that an algorithm runs faster, but needs more enumerations than another algorithm, because the time for computing upper bounds depends on the number of iterations when using the iterative upper bound scheme. One branching rule may generate nodes that can be easily pruned while others branching rules lead to vast nodes to explore. Therefore, the number of nodes does not reflect the overall performance of the proposed algorithm. Instead, we compare the computational time, which is directly related to the efficiency of every algorithm.

6.3 Larger Instances with Sparsity Patterns

According to the numerical results in Sect. 6.2, we see that HB algorithm performs best for the banded and block-arrow sparsity patterns, and HB-SCP algorithm is the best one for the overlapping block diagonal and disk-graph sparsity patterns. In this subsection, we further evaluate the proposed algorithms by stress testing them on “hard” instances with the above four sparsity patterns. That is, we generate largest random instances that can be solved by HB-SCP or HB within 3 h. The two sets of instances used in this subsection are as follows.

Set G: This set includes five instances of the banded sparsity pattern and five instances of the block-arrow sparsity pattern. For the instances of banded sparsity pattern indexed by “Band_n_w_id,” we set \(n=120\) and \(w=40\). For the instances of block-arrow sparsity pattern indexed by “Arrow_n_k_id,” we set \(n=180\) and \(k=5\). This set is used for testing algorithms HB, R2 and BiqCrunch.

Set H: This set includes five instances of the overlapping block diagonal sparsity pattern and five instances of the disk-graph sparsity pattern. For the instances of overlapping block diagonal sparsity pattern that are indexed by “Block_w_s_k_id,” we set \(w=30\), \(s=10\) and \(k=8\).Footnote 5 For the instances of disk-graph sparsity pattern indexed by “DiskGraph_n_id,” we set \(n=160\).Footnote 6 This set is used for testing algorithms HB-TIP, R2 and BiqCrunch.

The numerical results for Sets G and H are reported in Tables 7 and 8, respectively. We have the following two observations in this experiment:

-

(i)

Same as the results in Tables 1, 2, 3 and 4, HB and HB-SCP are still more efficient than R2 and BiqCrunch on all, except two, test instances. Especially for the block-arrow sparsity pattern, HB solves all the five instances while BiqCrunch fails to solve any.

-

(ii)

The efficiency of the proposed algorithms strongly depends on the types of sparsity patterns. The same observation also holds for BiqCrunch.

The reason for the second observation deserves a further explanation. For the instances of banded and block-arrow sparsity patterns, Table 7 shows that HB can only solve up to 120 and 180 dimensions within 3 h, respectively. In contrast, HB-SCP can easily solve the 180-dimensional instances of overlapping block diagonal sparsity pattern within 7 min as shown in Table 8. Similarly, BiqCrunch solves the instances of overlapping block diagonal sparsity pattern in at most 1308 s but fails to solve any instance of block-arrow sparsity pattern within 3 h. By comparing the structure of the above three sparsity patterns, we can see that the overlapping block diagonal sparsity pattern has two distinct features: the size of maximal clique is small (i.e., small cluster) and the intersection set between every pair of maximal clique has only a few vertices (i.e., small cluster intersection). These two features make the sufficient conditions in Theorem 3.1 and Corollary 3.1 easy to be satisfied; thus, the semidefinite relaxations provide tight bounds for problem (MC). In this case, both HB-SCP and BiqCrunch are efficient to solve the instances of overlapping block diagonal sparsity pattern. In comparison, for the banded and block-arrow sparsity patterns, the intersection sets between two cliques could have many vertices (up to the same size of the treewidth), thus the instances of these two sparsity patterns are relatively hard to solve for those algorithms.

Moreover, for the block-arrow sparsity pattern, we discover that all the intersection sets of any two different maximal cliques are the same, i.e., all the maximal cliques share the common intersection set. Then, according to the rule HB, the edge that connects two vertices in this common intersection set has a high priority. By contracting the two vertices in the common intersection set, all the maximal cliques will have one less vertex in the child nodes. Therefore, rule HB reduces the treewidth of the sparsity pattern and the number of vertices in the intersection set. As shown in Table 7, HB successfully solves all the five instances with block-arrow sparsity pattern while BiqCrunch fails to solve any of them within the time limit. In comparison, in the banded sparsity pattern, each pair of maximal cliques has a different intersection set, so the rule HB is not so effective as in the case of block-arrow sparsity pattern.

7 Conclusions

In this paper, we design a new semidefinite relaxation-based branch-and-bound algorithm for solving the classic max-cut problem. The main feature of the proposed algorithm lies in the utilization of the chordal sparsity patterns embedded in the max-cut problem. We first develop a polyhedral relaxation from the clique decomposition of the sparsity pattern and then derive two sufficient conditions for the tightness of the polyhedral relaxation. Then, based on the discussions on the relation between (PR), (CSDR) and (SDR), we investigate how the chordal sparsity pattern affects the tightness of a semidefinite relaxation of the max-cut problem. Motivated by the theoretical results, a hierarchy branching rule is proposed to manipulate the sparsity pattern of the problem in order to reduce the treewidth of the sparsity pattern and the number of elements in the intersections of different cliques.

The computational results show that the proposed hierarchy branching rule is more effective than the conventional numerical-driven rule R2 on test instances with various chordal sparsity patterns. The proposed algorithm also outperforms BiqCrunch in terms of computational time on most tested instances with sparsity patterns. Meanwhile, the sparsity-pattern-driven cutting-plane scheme is effective in improving the overall efficiency of the proposed algorithm when the instances have certain types of chordal sparsity patterns. These results indicate that the chordal sparsity pattern of the problem may provide important information that can be utilized to improve the efficiency of a branch-and-bound algorithm.

Data availability statement