Abstract

In this paper, a new procedure, called generalized shrinkage conjugate gradient (GSCG), is presented to solve the \(\ell _1\)-regularized convex minimization problem. In GSCG, we present a new descent condition. If such a condition holds, an efficient descent direction is presented by an attractive combination of a generalized form of the conjugate gradient direction and the ISTA descent direction. Otherwise, ISTA is improved by a new step-size of the shrinkage operator. The global convergence of GSCG is established under some assumptions and its sublinear (R-linear) convergence rate in the convex (strongly convex) case. In numerical results, the suitability of GSCG is evaluated for compressed sensing and image debluring problems on the set of randomly generated test problems with dimensions \(n\in \{2^{10},\ldots ,2^{17}\}\) and some images, respectively, in Matlab. These numerical results show that GSCG is efficient and robust for these problems in terms of the speed and ability of the sparse reconstruction in comparison with several state-of-the-art algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

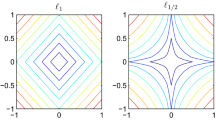

Consider the following unconstrained optimization problem for the sparse recovery

where \(f:\mathbb {R}^n\rightarrow \mathbb {R}\) is a smooth convex function, \(\Vert \cdot \Vert _1\) is the \(\ell _1\)-norm of a vector \(x\in \mathbb {R}^n\), usually called regularizer or regularization function, and \(\mu \in \mathbb {R^{+}}\) is a regularization parameter that can be interpreted as a trade-off parameter or relative weight between the objective terms. The problem (1) generalizes the well known basis pursuit denoising (BPDN) or \(\ell _2-\ell _1\) problem, found in the signal and image processing literature, as

In (2), \(\Vert \cdot \Vert \) stands for the standard Euclidean norm, \(A\in \mathbb {R}^{m\times n}\) \((m\ll n)\) and \(b\in \mathbb {R}^m\). As a basic idea, a sparse solution of the underdetermined linear system \(Ax=b\) may be obtained by the \(\ell _0-\)norm optimization problem. This nonconvex combinatorial problem is NP-hard [23] and hence is difficult to solve. Instead of solving it, Candès et al. [12] and Donoho [15] suggested the \(\ell _1\)-norm convex relaxation problem to recover the sparse solution under some conditions. However, the problem (2) is one of the most famous models in sparse recovery area which gives the sparsest solution of the aforementioned underdetermined linear system. This model is a robust version of reconstruction process when the measurements are contaminated with noise. Some popular applications for the problem (2) are the areas of compressed sensing (CS) and image debluring (ID) problems. For more information about these applications, readers can refer to [17, 18, 23].

Contribution This paper aims to present an innovative algorithm based on a new direction which is descendant under a descent condition and a new shrinkage step-size in order to accelerate ISTA. Such an algorithm generates a new generalized form of the CG direction and uses it under a mild norm condition. Moreover, it uses the ISTA descent direction with the improved shrinkage step-size, produced based on a pseudo CG idea, whenever the generated direction is not descendant or does not satisfy the norm condition.

Organization The remaining of this article is organized as follows. In Sect. 2, advantages and shortcomings of some algorithms and software are investigated. We have a review of ISTA, a gradient based method, in Sect. 3. Section 4 is dedicated to our method, which has three subsections: presenting the new GSCG approach in detail in the first subsection, presenting the acceptance criterion of the new method based on a modified nonmonotone Armijo line search strategy in the second subsection and finally presenting a flowchart and a pseudo code of the new algorithm in the third subsection. The global convergence and sublinear/R-linear convergence rate of the proposed algorithm are analyzed in Sect. 5. In Sect. 6, numerical results on CS and ID problems are reported. At the end, in Sect. 7, some conclusions are presented.

Preliminaries We first recall some definitions, which will play a key role in the convex optimization analysis.

Definition 1

A directional derivative of a function \(F:\mathbb {R}^n\rightarrow \mathbb {R}\) at point \(x\in \mathbb {R}^n\) along the direction \(d\in \mathbb {R}^n\), denoted by \(F'(x;d)\), is given by the following limit if it exists:

The directional derivative may be well defined even when F is not continuously differentiable. In fact, it is the most useful one in such a situation.

Definition 2

A point \(x^*\in \mathbb {R}^n\) is called a stationary point of a function \(F:\mathbb {R}^n\rightarrow \mathbb {R}\), if \(F'(x^*;d)\ge 0\) for all \(d\in \mathbb {R}^n\), see [38, page 394].

Definition 3

Suppose that \(F:\mathbb {R}^{n}\rightarrow \mathbb {R}\cup \{+\infty \}\) is a closed proper convex function and \(\varsigma \) is a positive parameter. A proximal operator \(\mathbf {Prox}_{\varsigma F}:\mathbb {R}^{n}\rightarrow \mathbb {R}^{n}\) of the scaled function \(\varsigma F\) at \(y\in \mathbb {R}^{n}\) is defined by

where parameter \(\varsigma \) controls the relative weight of the two terms. For more information about the proximal operator and its algorithms, we refer to [35] which is a monograph about this class of optimization algorithms.

Notation Throughout this paper, for the convenience of notation, write \(F_k:=F(x_k)\) and \(f_k:=f(x_k)\) and let \(\nabla f_k:=\nabla f(x_k)\) be the gradient of f at \(x_k\). The subscript k often represents the iteration number in an algorithm and \(D_{\mathrm {S}}\) denotes the diameter of a bounded set \(\mathrm {S}\).

2 Related algorithms

There are a lot of solvers for nonsmooth optimization. Here, we review only some of them, related to our study as tested in numerical results section. The objective function of problem (1) is convex but nonsmooth since it includes the \(\ell _1\)-norm term. This problem can be transformed to a linear optimization problem and solved via standard tools such as simplex or interior point methods [7, 34]. These methods have a high computational cost because of using a dense data structure, especially for large-scale problems. For large problems, there are gradient-based algorithms, as well, which calculate the direction approximately without computing or approximating the Hessian, using gradients only. Despite requiring little storage, they have so slow convergence properties; see [11, 13, 16, 19, 21, 27, 28, 40]. One popular method of this class is iterative shrinkage-thresholding algorithm (ISTA) [13, 16] with the general step

where \(\tau _k\) is the shrinkage step-size and \(\mathcal {S}_\nu :\mathbb {R}^n\rightarrow \mathbb {R}^n\) is the shrinkage operator, defined by

in which \(\nu >0\), \(\text {sgn}(\cdot )\) stands for the signum function and \(\odot \) denotes the component-wise product, i.e., \((x\odot y)_i:=x_iy_i\). The important characteristic of ISTA is its simple form. However, ISTA has been characterized as a slow method and its convergence analysis has been well studied in [8, 13, 16, 20], showing its sublinear convergence rate. Some methods have been proposed in order to accelerate ISTA in the past years. We consider some of them as follows:

-

(i)

Fixed-point continuation algorithm (fpc) [27] uses an operator-splitting technique to solve a sequence of problem (1), defined by a decreasing sequence of parameter \(\{\mu _j\}\) with the fixed shrinkage step-size. In fpc, based on the continuation (homotopy) strategy, the solution of the current problem is used as the initial estimate of the solution to the next problem.

-

(ii)

fpcbb [28] is a fixed-point continuation algorithm for solving (1) which constructs its shrinkage step-size by Barzilai–Borwein (BB) technique [6].

-

(iii)

NBBL1 [42] is a nonmonotone Barzilai–Borwein gradient algorithm for solving (1). At each step, such an algorithm generates the search direction by minimizing a local approximal quadratic model of (1) which contains an approximated form of the \(\ell _1\)-regularization term, due to its non-differentiability.

-

(iv)

TwIST [11] is a two-step ISTA which relies on computing the next iteration based on two previously computed steps instead of one previous step.

-

(v)

FISTA [8] is a fast iterative shrinkage-thresholding algorithm, employed on a special linear combination of the previous two iterations and this is its main difference from ISTA. This method keeps computational simplicity of ISTA and improves its global convergence rate to \(\mathcal {O}(\frac{1}{k^2})\), comparable to ISTA with \(\mathcal {O}(\frac{1}{k})\).

-

(vi)

SpaRSA [41] is a sparse reconstruction by separable approximation which solves (1) with separable structures. Improved practical performance of SpaRSA results in the variation of the shrinkage step-size.

3 Review of ISTA

The classical steepest descent method is the simplest approach to solve (1), in the case \(\mu =0\). This method produces a sequence \(\{x_k\}_{k\ge 0}\) via

for which \(\tau _k>0\) is a suitable step-size. The gradient iteration (4) can be converted into the following iterative scheme, known as the proximal regularization of the linearized function f at \(x_k\),

By applying the structure of (5) for (1) and setting \(d:=x-x_k\), we get

Having ignored the constant terms, we can rewrite (6) as

As shown in [35], the favorable structure of (7) adopts the explicit solution (3) which leads to the ISTA iteration for the problem (1). Both (3) and (7) yield to

called shrinkage direction or ISTA direction. The following lemma shows that the shrinkage direction, obtained by (8), is a descent direction whenever \(d_k^s\ne 0\).

Lemma 1

Suppose that \(\tau _k>0\) and \(d_k^s\) is the shrinkage direction (8). Then, we have

for all \(\alpha \in (0,1]\) and

Proof

The proof can be found in [38]. \(\square \)

The next lemma, the proof of which can be found in [38], characterizes the situation of \(d_k^s=0\).

Lemma 2

Suppose that \(\tau _k>0\) and \(d_k^s\) is the shrinkage direction (8). Then, \(x_k\) is a stationary point for the problem (1) if and only if \(d_k^s=0\).

It is worth noting that ISTA uses a more conservative choice of the shrinkage step-size \(\tau _k\), related to the Lipschitz constant of \(\nabla f\). Furthermore, in some fixed point methods like [27], \(\tau _k\) is considered to be fixed so that these methods could not produce a suitable step-size close to the optimizer or far away from it. Hence, a bad value of \(\tau _k\) leads to a slow convergence rate. To overcome this disadvantage, in [28, 40,41,42], \(\tau _k\) is chosen dynamically by the BB method. BB method is an accelerated form of the classical steepest descent method using two consecutive iterations to introduce the step-size of the next step, i.e., \(x_{k+1}:=x_k-\tau _k^{\mathrm{bb}}\nabla f_k\), where \(\tau _k^{\mathrm{bb}}\), called BB step-size, is presented as one of the following

where \(s_{k-1}:=x_k-x_{k-1}\), \(y_{k-1}:=\nabla f_k-\nabla f_{k-1}\). In (11), \(0<\tau _{\min }<\tau _{\max }<\infty \), known as the safeguard parameters, are used to prevent the production of a very small or large BB step-size. Such an improved BB step-size is presented by

where \(i=1,2\). To simplify our notation, we set \(\tau ^{\mathrm{sbb}}_k:=\tau ^{\mathrm{sbb},i}_k\).

4 Our method

In this section, an algorithmic framework of the new approach is presented to solve large-scale nonsmooth convex optimization problems. This section is followed in three subsections. In the first subsection, the new method is introduced in details. In the second subsection, determining the step-size \(\alpha _k\in (0,1]\), we utilize a modified nonmonotone Armijo line search strategy for nonsmooth convex optimization problems and in the third subsection, the flowchart and the detailed pseudo code of the new method are presented.

4.1 New generalized shrinkage conjugate gradient approach (GSCG)

Accelerating the proximal gradient methods such as ISTA, one can use the idea of momentum term \(\Theta _{k}(x_{k}-x_{k-1})\), through which the next step \(x_{k+1}\) depends on the two previous steps \(x_k\) and \(x_{k-1}\) with \(\Theta _{k}>0\). FISTA and TwIST, accelerated forms of ISTA, use this idea in order to solve (1), see [8, 11]. It is remarkable that CG methods [22, 26, 29, 36], the improved forms of the descent gradient method, have a momentum term in their common iterative schemes. In addition, in [33], a generalized form of the nonlinear CG methods has been presented where the negative gradient is replaced with a general descent direction. Our goal here is to accelerate ISTA by either strengthening its descent direction or improving the shrinkage step-size. In some iterations of the GSCG iterative scheme, a pseudo CG direction is produced where the negative gradient-based term of the CG direction is replaced with the descent direction \(d_k^s\) in (8) as follows,

where \(\alpha _k\) is a suitable step-size and \(\{\beta _k\}_{k\ge 0}\), named CG parameter, is a slowly diminishing constant sequence with \(\lim _{k\rightarrow \infty }\beta _k=0\). This CG parameter is defined by

where \(\lambda \in (0,1)\). Relation (14) is different from the conventional CG parameters and is similar to the \(\beta _k\) presented in [31, 32] for a convex optimization problem over a fixed-point set of a nonexpansive mapping.

The following remark shows the existence of a momentum term in some iterations of GSCG.

Remark 1

Substituting k with \(k- 1\) in (12) leads to

Then, by replacing (13) and (15) in (12), we obtain

Since (13) is not descendant, in general, we impose a mild descent condition on this direction, leading to its descent property. This condition is defined by

The following lemma shows that the direction defined by (13) is descendant whenever \(d_k\ne 0\).

Lemma 3

Suppose that \(\tau _k>0\), \(\beta _k\ge 0\) and \(d_k\) is generated by (13). If the condition (16) holds, then we have

and

Proof

The proof of (17) is similar to the first part of Lemma 1, presented in [38]. To prove (18), we consider three cases:

- (i)

-

(ii)

If \(\beta _k>0\) and \(d_k\ne 0\), then from the definition of \(d_k\), Lemma 1 and (16), we get

$$\begin{aligned} \begin{aligned} \varDelta ^{\mathrm{cg}}_k&=\langle \nabla f_k, d_k\rangle +\mu \Big (\Vert x_k+d_k\Vert _1-\Vert x_k\Vert _1\Big ) \\&\le \langle \nabla f_k, d_k^s\rangle +\mu \Big (\Vert x_k+d^s_k\Vert _1-\Vert x_k\Vert _1\Big )+\beta _k(\langle \nabla f_k, d_{k-1}\rangle \\&\quad +\mu \Vert d_{k-1}\Vert _1)<0, \end{aligned} \end{aligned}$$which shows that \(d_k\ne 0\) is a descent direction for F(x) at \(x_k\).

-

(iii)

If \(\beta _k>0\) and \(d_k=0\), then the proof is trivial. \(\square \)

Whenever the descent condition (16) is not satisfied, GSCG takes advantages of the descent direction \(d_k^s\) with a new form of \(\tau _{k}\), called CG step-size and introduced by

In (19), \(\tau _k^{\mathrm{sbb}}\) is computed using (11), \(\omega \in (0,1)\) is an impact factor for controlling the effect of \(\gamma _k\tau _{k-1}\) and diminishing constant sequence \(\{\gamma _k\}_{k\ge 0}\) is defined by

where \(\upsilon \in (0,1)\). Note that \(\tau _{k}\), generated by (19), is a special linear combination of \(\tau _k^{\mathrm{sbb}}\) and \(\tau _{k-1}\), and its structure is similar to that of the CG direction (13). It is clear that \(\tau ^{\mathrm{sbb}}_k+\omega \gamma _k\tau _{k-1}\ge \tau ^{\mathrm{sbb}}_k\). In addition, \(\Vert d_k^{s}\Vert \) is nondecreasing in \(\tau _k\) for each \(x_k\); see [40]. Thus, Lemma 1 guarantees the descent property of \(d_k^s\), produced by (19).

In GSCG, in order to investigate the convergence rate, we impose a mild norm condition on using (13) whenever (16) is satisfied as follows,

When (21) is not satisfied, the descent direction \(d_k^s\) is utilized, using (19). Let us define the event

Iteration k is said to be a CG iteration with the BB step-size (11) if the event \(\mathtt{cGrad}\) occurs; i.e., \(\mathtt{cGrad}:=1\). Otherwise, the iteration will be ISTA with the CG step-size (19) (\(\mathtt{cGrad}:=0\)). In other words, \(\mathtt{cGrad}\), \(\mathtt{dCon}\) and nCon are the integral parameters. According to the mentioned information, for the iterative scheme (12), we now represent

with

Based on Lemmas 1–3, whether the event \(\mathtt{cGrad}\) happens or not, we have the following inequality for \(d_k\ne 0\) in (22) which confirms its descent property,

where \(\varDelta _k\) is defined by

4.2 Acceptance criterion

Monotone methods (descent methods) generate a sequence of iterations such that the corresponding sequence of function values is monotonically decreasing in (1). Their strategies may trap the iterations to the bottom of a curved narrow valley of the objective function which makes the iterative algorithms lose their efficiency.

In contrast, in nonmonotone line search techniques, some growth in function value is permitted which overcomes the difficulty mentioned above and improves convergence speed, see [3,4,5, 24, 43]. Let us define l(k) as an integer satisfying \(k-m(k)\le l(k)\le k\), in which \(m(0)=0\) and \(0\le m(k)\le \min \{m(k-1)+1,N-1\}\), with \(N>0\). To increase the efficiency of the new algorithm and to guarantee its global convergence, we use a modified form of the nonmonotone Armijo scheme suggested in [4] as follows,

where

\(\eta _k\in [\eta _{\min },\eta _{\max }]\), \(\eta _{\min }\in [0,1]\), \(\eta _{\max }\in [\eta _{\min },1]\), and \(\sigma \in (0,1)\) is a constant, usually chosen to be close to zero. The step-size \(\alpha _k\) is the largest member of \(\{s,\varrho s, \ldots \}\), with \(s>0\), \(\varrho \in (0,1)\), and

This nonmonotone line search method, modified for the nonsmooth convex optimization problem (1), uses a stronger nonmonotone technique whenever iterations are far away from the optimizer and a weaker nonmonotone strategy whenever iterations are close to the optimizer by using an adaptive value for \(\eta _k\), which can improve convergence results.

The procedure of modified nonmonotone Armijo strategy (NMA) is described below.

In NMA, the while loop is usually called backtracking loop. Note that, based on Lemmas 1 and 3, NMA is well defined for the proposed approach.

It is worth mentioning that the combination of a nonmonotone line search strategy and the BB step-size with safeguards was originally introduced by Rayden [37] for unconstrained optimization problems, and by Birgin et al. [9, 10] for convex constrained optimization problems. In the new algorithm, we also somehow utilize this idea according to the above NMA technique.

4.3 New algorithm

Flow chart for GSCG in Fig. 1 is presented and then the GSCG algorithm is described.

In Algorithm 1, if (16) or (21) is not satisfied (\(\mathtt{cGrad}=0\)) then to compute \(d_k\), GSCG uses the CG step-size (Lines 10 and 13). Otherwise (\(\mathtt{cGrad}=1\)), it uses the BB step-size (Line 6). In addition, Lines 7–11 help us to establish the convergence rate.

5 Convergence analysis

This section is devoted to analyzing the convergence of GSCG. To do so, we use some tools for nonsmooth and smooth optimization which have been presented in [4, 24, 25, 30, 38], but have been extensively modified to allow for GSCG. In order to investigate convergence analysis, we utilize the following two assumptions:

-

(H1)

The level set \(L(x_0):=\{x\mid F(x)\le F_0\}\) is bounded, for any \(x_0\in \mathbb {R}^n\).

-

(H2)

The \(\nabla f(x)\) is Lipschitz continuous with constant L.

The following lemma characterizes a stationary point. Our approach in it follows that of Lemma 2 in [38].

Lemma 4

Suppose that \(\tau _k>0\), \(\beta _k\ge 0\) and \(d_k\) is a direction generated by GSCG. Then, \(x_k\) is a stationary point for the problem (1) if and only if \(d_k=0\).

Proof

If (16) or (21) is not satisfied, then \(d_k=d_k^s\). Thus, Lemma 2 gives the desired result. Otherwise, \(d_k=d_k^s+\beta _k d_{k-1}\). In the case \(d_k\ne 0\), Lemma 3 implies that \(F'(x_k;d_k)<0\), thus \(x_k\) is not a stationary point. Now, take \(d_k=0\). Since \(d_k^s=-\beta _k d_{k-1}\) is the solution of (7), the descent condition (16) results in

for any \(\alpha d\in \mathbb {R}^n\) with \(\alpha >0\). Hence, proceeding the same argument as Lemma 2 in [38], we get \(F'(x_k;d)\ge 0\), leading to this fact that \(x_k\) is a stationary point of F(x). \(\square \)

In the sequel, let us define the following index sets:

\(\mathcal {I}_1\) is the set of all iterations where cGrad=1 and \(\mathcal {I}_2\) includes all iterations where \(\mathtt{cGrad}=0\).

Lemma 5

Let \(\{\tau _k\}_{k\ge 0}\) be the sequence generated by GSCG. Then, \(\{\tau _k\}_{k\ge 0}\) is bounded.

Proof

The proof is done in two cases:

-

(i)

\(k\in \mathcal {I}_1\). Then, relation (11) gives the result.

-

(ii)

\(k\in \mathcal {I}_2\). In this case, the proof is by induction. Since \(\lim _{k\rightarrow \infty } \gamma _{k}=0\), there exists \(m\in \mathbb {N}\) such that \(\gamma _{k}\le \displaystyle \frac{1}{2}\), for all \(k\ge m\). Let \(T:=\max \{\tau _{\max },\tau _m\}\); it is clear that \(\tau _m\le 2T\). Suppose that \(\tau _k\le 2T\) for some \(k\ge m\). Then, by (19) and the induction hypothesis, for all \(k\ge m\), we have

$$\begin{aligned} \tau _{k+1}\le \tau _{\max }+\omega \gamma _{k+1}\tau _k\le T+\displaystyle \frac{1}{2}\tau _k\le 2T, \end{aligned}$$which results in the proof. \(\square \)

Lemma 6

Suppose that the sequence \(\{x_k\}_{k\ge 0}\) is generated by GSCG and Assumptions (H1) and (H2) hold. Then, there exists a constant \(\overline{\alpha }\in (0,1]\) such that the acceptance criterion (26) is satisfied for any \(\alpha \in (0,\overline{\alpha }]\). In addition, for all \(\alpha _k\) that satisfies (26), we can find a lower bound \(\underline{\alpha }\) such that \(\alpha _k\ge \underline{\alpha }\).

Proof

Lipcshitz continuity of \(\nabla f\), convexity of \(\Vert \cdot \Vert _1\) and the definition of \(\varDelta _k\) in (25) result in

From (24), it follows that

Therefore, the acceptance criterion (26) is satisfied whenever

Since \(x_k\) is not stationary, based on Lemma 4 and (24), \(\varDelta _k<0\). Thus, (30) yields to

where \(\overline{\alpha }:= \displaystyle \frac{1-\sigma }{\overline{\tau }_{\max }L}\) with

Proving the lower bound for \(\alpha _k\), we know that either \(\alpha _k=1\) or the acceptance criterion (26) will fail at least once; hence

This fact along with (29) leads to

so that

since \(\overline{\tau }_{\max }\ge \tau _k\) for all k. Thus, the proof is completed. \(\square \)

The proof of the next lemma has been inspired by the works of [4, 24] who analyzed a nonmonotone line search for smooth optimization problems, but it has been broadly modified to allow for the problem (1) with the acceptance criterion (26) which is analogous to that of ibid.

Lemma 7

Suppose that the sequence \(\{x_k\}_{k\ge 0}\) is generated by GSCG with acceptance criterion (26) and Assumptions (H1) and (H2) hold. Then,

- (a) :

-

the sequence \(\{F_{l(k)}\}_{k\ge 0}\) is convergent.

- (b) :

-

\(\lim _{k\rightarrow \infty }d_{k}=0\).

- (c) :

-

\(\lim _{k\rightarrow \infty } F_{k}=\lim _{k\rightarrow \infty } F_{l(k)}\).

- (d) :

-

\(\lim _{k\rightarrow \infty } R_{k}=\lim _{k\rightarrow \infty }F_{k}\).

Proof

-

(a)

We show that the sequence \(\{F_{l(k)}\}_{k\ge 0}\) is non-increasing. The inequality \(R_k\le F_{l(k)}\) implies that

$$\begin{aligned} F_{k+1}\le R_k\le F_{l(k)}. \end{aligned}$$(31)For the case \(k+1\ge N\), from (31) and \(m(k+1):=N-1\), we have

$$\begin{aligned} F_{l(k+1)}=\max _{0\le j\le N-1} \{F_{k-j+1}\}\le \max _{0\le j\le m(k)+1} \{F_{k-j+1}\} =\max \{F_{l(k)},F_{k+1}\}= F_{l(k)}. \end{aligned}$$Furthermore, for the case \(k+1<N\), we have \(m(k+1):=k+1\) and \(F_{l(k+1)}:=F_0\). These cases give the non-increasing property of the sequence \(\{F_{l(k)}\}_{k\ge 0}\). In addition,

$$\begin{aligned} F_{k+1}\le R_k{\le } F_{l(k)}\le F_{l(k-1)}\le \cdots \le F_{l(0)}=F_0, \end{aligned}$$(32)so that \(\{x_k\}_{k\ge 0}\) remains in \(L(x_0)\) for all k. Also, based on the Assumption (H1) and the definition of F(x), \(L(x_0)\) is compact. Thus, \(\{F_{l(k)}\}_{k\ge 0}\) assumes a limit, named \(\widetilde{F}\), for \(k\rightarrow \infty \).

-

(b)

Replacing k with \(l(k)-1\) in (26) and using (31), we get

$$\begin{aligned} F_{l(k)} \le F_{l({l(k)-1})}+\sigma \alpha _{l(k)-1}\varDelta _{l(k)-1}, \end{aligned}$$(33)which, along with part (a), implies that

$$\begin{aligned} \lim _{k\rightarrow \infty }\alpha _{l(k)-1}\varDelta _{l(k)-1}=0. \end{aligned}$$Hence, (24) gives

$$\begin{aligned} \lim _{k\rightarrow \infty }\alpha _{l(k)-1}\Vert d_{l(k)-1}\Vert =0. \end{aligned}$$(34)Based on Lemma 6, \(\alpha _r\ge \underline{\alpha }\) for all r. Thus, (34) implies that

$$\begin{aligned} \lim _{k\rightarrow \infty }d_{l(k)-1}=0. \end{aligned}$$(35)Now, by induction, for any \(j\ge 1\), we show that

$$\begin{aligned} \lim _{k\rightarrow \infty }d_{{l}(k)-j}=0, \end{aligned}$$(36)and

$$\begin{aligned} \lim _{k\rightarrow \infty } F_{{l}(k)-j}=\widetilde{F}. \end{aligned}$$(37)It has been already shown in (35) that (36) holds for \(j=1\); hence

$$\begin{aligned} \lim _{k\rightarrow \infty }\Vert x_{ l(k)}-x_{ {l}(k)-1}\Vert = 0. \end{aligned}$$(38)From (38) and the uniform continuity of F(x) on \(L(x_0)\), we get (37) for \(j=1\). Now, we assume that (36) and (37) hold for a given j. Setting \(l(k):=l(k)-j\) in (33), we get

$$\begin{aligned} F_{{l}(k)-j}&\le F_{l({{l}(k)-(j+1)})}+\sigma \alpha _{{l}(k)-(j+1)}\varDelta _{{{l}(k)-(j+1)}}, \end{aligned}$$where k is assumed to be large enough such that \(l(k)-(j+1)\ge 0\). By letting \(k\rightarrow \infty \), using the inductive hypothesis along with part (a) and following the same arguments employed for driving (35), we deduce

$$\begin{aligned} \lim _{k\rightarrow \infty }d_{{l}(k)-(j+1)}=0, \end{aligned}$$so that

$$\begin{aligned} \lim _{k\rightarrow \infty }\Vert x_{{l}(k)-j}-x_{{l}(k)-(j+1)}\Vert =0. \end{aligned}$$This fact and the uniform continuity of F(x) on \(L(x_0)\) result in

$$\begin{aligned} \lim _{k\rightarrow \infty } F_{{l}(k)-(j+1)}=\lim _{k\rightarrow \infty }F_{{l}(k)-j}=\widetilde{F}, \end{aligned}$$proving the inductive step. Based on (28), let \(l(k):=\arg \max \Big \{F_j\mid \max (0,k-N+1)\le j\le k\Big \}\). Thus, l(k) is one of the members of the index set \(\{k-N+1, k-N+2,\ldots ,k\}\). Hence, we can denote \(k-N=l(k)-j\), for some \(j=1, 2,\ldots , N\). Therefore, from (36) we deduce,

$$\begin{aligned} \lim _{k\rightarrow \infty }\alpha _{k}\Vert d_{k}\Vert =\lim _{k\rightarrow \infty } \alpha _{k-N}\Vert d_{k-N}\Vert =0, \end{aligned}$$(39)leading to

$$\begin{aligned} \lim _{k\rightarrow \infty }d_{k}=0, \end{aligned}$$by Lemma 6.

-

(c)

For any \(k\in \mathbb {N}\), we have

$$\begin{aligned} x_{k-N}:=x_{{l}(k)}-\sum _{j=1}^{{l}(k)-(k-N)}\alpha _{{l}(k)-j}d_{{l}(k)-j}. \end{aligned}$$(40)$$\begin{aligned} \lim _{k\rightarrow \infty }\Vert x_{k-N}-x_{{l}(k)}\Vert =0. \end{aligned}$$(41)Part (a), along with (41) and the uniform continuity of F(x) on \(L(x_0)\), implies that

$$\begin{aligned} \lim _{k\rightarrow \infty } F_{k}=\widetilde{F}. \end{aligned}$$ -

(d)

From \(F_{k}\le R_{k}\le F_{l(k)}\) and the previous part, we obtain the desired result. \(\square \)

We now prove the main global convergence theorems of the new approach in two sections.

5.1 Convergence rate for convex case

In this part a sublinear convergence estimate for the error in the objective function value F(x) is presented. The first theorem implies that GSCG converges to a global solution of the problem (1).

Theorem 1

Suppose that the Assumptions (H1) and (H2) hold and let \(\{x_k\}_{k\ge 0}\) be the sequence generated by GSCG. Then, any accumulation point of \(\{x_k\}_{k\ge 0}\) is a stationary point of the problem (1). In addition,

where \(F^*\) is the optimal value for the problem (1).

Proof

By (32), the sequence \(\{x_k\}_{k\ge 0}\) remains in \(L(x_0)\). In addition, since \(L(x_0)\) is compact, there exists an accumulation point. Furthermore, we get from (11) and Lemma 5

On the other hand, the sequence \(\{\nabla f_k\}_{k\ge 0}\) is bounded since Assumption (H2) holds and \(L(x_0)\) is compact. Lemma 7(b), (42), boundedness of \(\{\nabla f_k\}_{k\ge 0}\) and continuity of the shrinkage operator in (3) (see [27, Theorem 4.5]), result in

where

We consider the proof by contradiction. Let \(x^*\) be the accumulation point of the sequence \(\{x_k\}_{k\ge 0}\) that is not stationary. Hence, by Lemma 4, for all sufficiently large k, there is an \(\epsilon >0\) such that \(\Vert d_{k}\Vert \ge \epsilon >0\). Thus, (39) results in

which contradicts Lemma 6. By Lemma 7, \(\{F_k\}_{k\ge 0}\) approaches a limit denoted by \(\widetilde{F}\). Furthermore, from convexity of F(x), a stationary point is a global minimizer; hence \(\widetilde{F}=F^*\) which completes the proof. \(\square \)

In the sequel, we show the sublinear convergence of GSCG in a similar way as Theorem 3.2 in [25] and Theorem 2 in [30].

Theorem 2

Suppose that \(\{x_k\}_{k\ge 0}\) is a sequence generated by GSCG, and Assumptions (H1) and (H2) hold. Then, there exists a constant c such that for all sufficiently large k,

Proof

Convexity of F(x) and \(\alpha _k\in (0,1]\) result in

If (16) and (21) are satisfied, then \(d_k=d_k^s+\beta _k d_{k-1}\). Taking

and using Lipschitz continuity of \(\nabla f\), we get

Otherwise, \(d_k=d_k^s\). Again Lipschitz continuity of \(\nabla f\) results in

Since \(d_k^s\) is the minimizer of (7) and f(x) is convex, for \(\tau _k>0\), it follows that

Let us now denote \(x_k+d:=(1-\vartheta )x_k+\vartheta x^*\), where \(\vartheta \in [0, 1]\) and \(x^*\) is an optimal solution of the problem (1). Convexity of F(x) yields to

where \(\Phi _k:=\displaystyle \frac{1}{2\tau _{\min }}\Vert x_k-x^*\Vert ^2\). By (32) and Assumption (H1), \(x_{k}\) and \(x^*\) lie in \(L(x_0)\), so that \(\Phi _k\le c_1<\infty \), where \(c_1:=\displaystyle \frac{1}{2\tau _{\min }} {D^2_{L(x_0)}}\). We now get from (43) to (46)

Acceptance criterion (26) along with (24) results in

where \(c_2:=\displaystyle \frac{L\overline{\tau }_{\max }}{\sigma }\). Combining (47), (48) and \(F_k\le R_k\), it follows that

The right hand side of (49) reaches its minimum value when \(\vartheta _{\min }:=\displaystyle \min \Big \{1, \frac{R_k-F^*}{2 c_1}\Big \}\). As a consequence of Theorem 1 and Lemma 7, the sequence \(\{R_k\}_{k\ge 0}\) converges to \(F^*\). Hence, \(\vartheta _{\min }\) also approaches zero as \(k\rightarrow \infty \); hence there is an integer \(k_0\) with \(\vartheta _{\min }<1\) for all \(k>k_0\). Therefore

for all \(k>k_0\), where \(c_3:=\displaystyle \frac{1}{4 c_1}{\underline{\alpha }}\), with \(\underline{\alpha }\) given by Lemma 6. Let us define \(E_k:=F_k-F^*\) and \(E_{r(k)}:=R_k-F^*\). By subtracting \(F^*\) from the both side of (50), we have

so that

where \(c_4:=\displaystyle \frac{c_3}{1+c_2}\). We find after division by \(E_{r(k)}\ne 0\) that

yielding to

since \(E_{k+1}\le E_{r(k)}\), \(R_k\le F_{l(k)}\) and \(E_{l(k)}:=F_{l(k)}-F^*\). An integer \(i_0\) can be found such that \(k_0\in \Big ((i_0-1)N, i_0N-1 \Big ]\). For all \(k\in \Big ((i-1)N, iN-1\Big ]\) with \(i>i_0\), the definition of l(k) in (28) results in \(k-N+1\le l(k)\le k\). By applying (51) recursively, for all \(i>i_0\), we have

so that

for all \(k\in \Big ((i-1)N, iN-1\Big ]\) with \(i>2i_0\). Since there exists a finite number of integers \(k\in [1, 2i_0N]\), one finds \(c_5\) satisfying \(c_5>\frac{2}{c_4}\) and

By taking \(c:=c_5 N\), the proof is completed. \(\square \)

5.2 Convergence rate for strongly convex case

This part is dedicated to the R-linear convergence rate of GSCG, when F(x) satisfies

for all \(y\in \mathbb {R}^n\), with \(\xi >0\). The relation (52) holds, when f(x) is a strongly convex function and therefore \(x^*\) is a unique minimizer of F(x). The proof of the following theorem is similar to those of theorem 4.1 in [25] and Theorem 3 in [30].

Theorem 3

Suppose that \(\{x_k\}_{k\ge 0}\) is a sequence generated by GSCG, and Assumptions (H1) and (H2) and (52) hold. Given \(c_2\) and \(\underline{\alpha }\) satisfying Theorem 2 and Lemma 7, there are constants \(\psi \) and \(\varphi \in (0,1)\) such that for all sufficiently large k,

Proof

We first show that there exists \(\chi \in (0,1)\) such that

Assume that \(\varpi \) satisfies

We consider two cases:

-

(i)

If \(\Vert d_k\Vert ^2\ge \varpi (F_{l(k)}-F^*)\), then we get from (48)

$$\begin{aligned} \frac{\varpi \underline{\alpha }}{2}(F_{l(k)}-F^*)\le \frac{1}{2} \underline{\alpha }\Vert d_k\Vert ^2\le c_2(F_{l(k)}-F_{k+1}), \end{aligned}$$so that

$$\begin{aligned} F_{k+1}-F^*\le \chi (F_{l(k)}-F^*), \end{aligned}$$where \(\chi :=(1-\frac{\varpi \underline{\alpha }}{2c_2})\in (0,1)\); it can be obtained by (54).

-

(ii)

If \(\Vert d_k\Vert ^2<\varpi (F_{l(k)}-F^*)\), then (52) results in

$$\begin{aligned} \Phi _k=\frac{1}{2\tau _{\min }}\Vert x_k-x^*\Vert ^2\le \frac{1}{2\tau _{\min }\xi }(F_k-F^*)\le c_6(F_{l(k)}-F^*), \end{aligned}$$where \(c_6=\displaystyle \frac{1}{2\tau _{\min }\xi }\). We now get from (43) to (46),

$$\begin{aligned} F_{k+1}\le & {} (1-\alpha _k \vartheta )F_k+\alpha _k(\vartheta F^*+\Phi _k \vartheta ^2)+\frac{L\alpha _k}{2}\Vert d_k\Vert ^2\\\le & {} F_{l(k)}+\,\alpha _k\left( c_6\vartheta ^2-\vartheta +\frac{L\varpi }{2}\right) (F_{l(k)}-F^*), \end{aligned}$$so that

$$\begin{aligned} F_{k+1}-F^*\le \left[ 1+\alpha _k\left( c_6\vartheta ^2-\vartheta +\frac{L\varpi }{2}\right) \right] (F_{l(k)}-F^*),~~~~~~\forall ~\vartheta \in [0,1]. \end{aligned}$$Let \(\chi :=1+\alpha _k(c_6\vartheta ^2-\vartheta +\frac{L\varpi }{2})\); its minimum value is at

$$\begin{aligned} \widehat{\vartheta }=\left\{ 1, \displaystyle \frac{1}{2c_6}\right\} \in [0,1]. \end{aligned}$$(55)If \(\widehat{\vartheta }=1\), then (55) gives \(c_6<\frac{1}{2}\). Thus, \(\varpi \le \frac{1}{L}\), obtained from (54), leads to

$$\begin{aligned} \chi =1+\alpha _k\left( c_6-1+\frac{\varpi L}{2}\right) \le 1-\frac{1-\varpi L}{2}\alpha _k<1. \end{aligned}$$Otherwise, the inequality \(\varpi <\displaystyle \frac{\xi \tau _{\min }}{L}\), obtained from (54), gives

$$\begin{aligned} \chi =1+\alpha _k\left( \displaystyle \frac{1}{4c_6}-\displaystyle \frac{1}{2c_6}+\displaystyle \frac{\varpi L}{2}\right) = 1-\alpha _k\left( \displaystyle \frac{1}{4c_6}-\displaystyle \frac{\varpi L}{2}\right) <1. \end{aligned}$$Therefore, (53) holds for all \(k\ge 0\).

By replacing k with \(l(k)-1\) in (53), setting \(k\ge N\) and utilizing monotonicity of \(F_{l(k)}\), we have

There exists \(i\ge 1\) such that \(k\in \big ((i-1)N, iN-1]\) for any \(k\ge N\). Applying (56) recursively gives

Since \(F_{k+1}\le F_{l(k)}\) and \(l\big (k-(i-1)N\big )\in (0,N]\), we get

so that

which completes the proof with \(\psi =\displaystyle \frac{1}{\chi }\) and \(\varphi =\displaystyle \root N \of {\chi }\). \(\square \)

6 Numerical results

We give some details of the implemented algorithms on CS problems in Sect. 6.1 and ID problems in Sect. 6.2.

A comparison among fpcbb, SpaRSA, TwIST and GSCG for matrix A in item (1) with \(\sigma _1=\sigma _2=10^{-7}\) and \(\rho =\delta =0.1\). a Diagram of function values versus iterations. b Diagram of real errors versus iterations. c Diagram of the original signal. d Diagram of the observation (noisy measurement). e–h Diagrams of recovered signals by fpcbb, SpaRSA, TwIST and GSCG (red circle) versus the original signal (blue peaks) (color figure online), respectively

A comparison among fpcbb, SpaRSA, TwIST and GSCG for matrix A in item (2) with \(\sigma _1=\sigma _2=10^{-7}\) and \(\rho =\delta =0.1\). a Diagram of function values versus iterations. b Diagram of real errors versus iterations. c Diagram of the original signal. d Diagram of the observation (noisy measurement). e–h Diagrams of recovered signals by fpcbb, SpaRSA, TwIST and GSCG (red circle) versus the original signal (blue peaks) (color figure online), respectively

A comparison among fpcbb, SpaRSA, TwIST and GSCG for matrix A in item (3) with \(\sigma _1=\sigma _2=10^{-7}\) and \(\rho =\delta =0.1\). a Diagram of function values versus iterations. b Diagram of real errors versus iterations. c Diagram of the original signal. d Diagram of the observation (noisy measurement). e–h Diagrams of recovered signals by fpcbb, SpaRSA, TwIST and GSCG (red circle) versus the original signal (blue peaks) (color figure online), respectively

A comparison among fpcbb, SpaRSA, TwIST and GSCG for matrix A in item (4) with \(\sigma _1=\sigma _2=10^{-7}\) and \(\rho =\delta =0.1\). a Diagram of function values versus iterations. b Diagram of real errors versus iterations. c Diagram of the original signal. d Diagram of the observation (noisy measurement). e–h Diagrams of recovered signals by fpcbb, SpaRSA, TwIST and GSCG (red circle) versus the original signal (blue peaks) (color figure online), respectively

A comparison among fpcbb, SpaRSA, TwIST and GSCG for matrix A in item (5) with \(\sigma _1=\sigma _2=10^{-7}\) and \(\rho =\delta =0.1\). a Diagram of function values versus iterations. b Diagram of real errors versus iterations. c Diagram of the original signal. d Diagram of the observation (noisy measurement). e–h Diagrams of recovered signals by fpcbb, SpaRSA, TwIST and GSCG (red circle) versus the original signal (blue peaks) (color figure online), respectively

A comparison among fpcbb, SpaRSA, TwIST and GSCG for matrix A in item (6) with \(\sigma _1=\sigma _2=10^{-7}\) and \(\rho =\delta =0.1\). a Diagram of function values versus iterations. b Diagram of real errors versus iterations. c Diagram of the original signal. d Diagram of the observation (noisy measurement). e–h Diagrams of recovered signals by fpcbb, SpaRSA, TwIST and GSCG (red circle) versus the original signal (blue peaks) (color figure online), respectively

6.1 Quality of CS reconstruction

Here, the ability of GSCG for the sparse recovery in CS problems, a well-known application for (2), is evaluated. The field of CS, presented by Candès et al. [12] and Donoho [15], has grown considerably for the past few years. This fact that many real-world signals may be sparse or compressible in nature, well approximated by a sparse signal in a suitable basis or dictionary, is the motivation to create CS. Simply put it, CS refers to the idea of encoding a large sparse signal x through a relatively small number of linear measurements A and storing \(b=Ax\), instead. The main aspect is decoding the observation vector b to recover the original signal x.

We report the results obtained by running our algorithm (GSCG) in comparison with fixed point continuation method with Barzilai–Borwein (fpcbb) [28], two-step ISTA (TwIST) [11], sparse reconstruction by separable approximation (SpaRSA) [41], nonmonotone BB gradient algorithm (NBBL1) [42] and fast iterative shrinkage-thresholding algorithm (FISTA) [8] on some CS problems. The codes of compared algorithms are available in

-

(SpaRSA) http://www.lx.it.pt/~mtf/SpaRSA

-

(FISTA) http://iew3.technion.ac.il/~becka/papers/wavelet_FISTA.zip

Given the dimension of the signal n, according to the values of \(\delta \) and \(\rho \) presenting in Table 1, we produce the dimension of the observation m and the number of nonzero elements k in an exact solution, by \(m:=\lfloor \delta n\rfloor \) and \(k:=\lfloor \rho m\rfloor \). Now we present six types of matrix A, see [27, 28, 39], used to produce our test problems as follows:

-

(1)

Gaussian matrix (\(\text {randn(m,n)}\)) whose elements are pseudo random values drawn form the standard normal distribution \(\mathcal {N}(0,1)\);

-

(2)

Scaled Gaussian matrix whose columns are scaled to unit norm;

-

(3)

Orthogonalized Gaussian matrix whose rows are orthogonalized by QR decomposition;

-

(4)

Bernoulli matrix whose elements are \(+/- 1\) independently with equal probability;

-

(5)

Partial Hadamard matrix, a matrix of \(+/-1\) whose columns are orthogonal and whose m rows are chosen randomly from the \(n\times n\) Hadamard matrix;

-

(6)

Partial discrete cosine transform (PDCT) matrix whose m rows are chosen randomly from the \(n\times n\) DCT matrix.

The matrices in items (1)–(5) are stored explicitly whose signals with dimensions \(n\in \{2^{10},\ldots ,2^{15}\}\) are tested. The matrices in item (6) are stored implicitly whose signals with dimensions \(n\in \{2^{10},\ldots ,2^{17}\}\) are tested. Since real problems are usually ruined by noise, according to the Gaussian noise in [27, 28, 39], we explain how to contaminate \(\widetilde{x}\) and b by impulse noise in the following procedure:

In this procedure, the noise scenarios \((\sigma _1,\sigma _2)\), which are input arguments, belong to

The n-by-1 matrix containing zeros is returned in Line 2 and a random permutation of the integers from 1 to n is returned in Line 3. Line 4 returns the original signal of the test problems which is an n-by-1 matrix containing k nonzero pseudo random values drawn from the standard normal distribution and \(n-k\) zero elements. Both \(\widetilde{x}\) and b, respectively, contaminated by impulsive noise are returned in Line 5 and 7.

Now, we explain how to choose the other parameters of all algorithms as follows:

-

For fpcbb and GSCG, like that of [27, 28, 40], the parameter \(\tau _0\) is chosen by

$$\begin{aligned} \tau _0:=\min \{2.665-1.665m/n,1.999\}. \end{aligned}$$ -

For all algorithms, the initial point is selected by \(x_0:=\text {zeros}(n,1)\) and we set \(\mu =2^{-8}\) and \(\sigma =10^{-3}\).

-

For fpcbb and GSCG, according to [4], the parameter \(\eta _{k}\) is updated by

$$\begin{aligned} \eta _{k}:=\left\{ \begin{array}{ll} \frac{2}{3}\eta _{k-1}+0.01,\ \ &{} \hbox {if }\Vert \nabla f_k\Vert \le 10^{-2},\\ \max \{0.99\eta _{k-1},0.5\},\ \ &{} \hbox {else}, \\ \end{array} \right. \end{aligned}$$for which \(\eta _0=0.85\).

-

GSCG employs \(\alpha _0=s=1\), \(\lambda =0.25\), \(\upsilon =0.999\) and \(\omega =0.001\) and uses \(\tau _k^{\mathrm{sbb}}=\tau _k^{\mathrm{sbb},1}\).

-

For GSCG, fpcbb, NBBL1 and SpaRSA, \(\tau _{\min }=10^{-4}\) and \(\tau _{\max }=10^{4}\) are selected.

-

For GSCG, fpcbb and NBBL1, we set \(\alpha _0=s=1\).

Note that each algorithm is run five times. This fact and different choices of the above listed parameters lead to generate more than 1620 random test problems. In our numerical experiments, the algorithms are stopped whenever

where \(\mathtt{ftol}:=10^{-10}\) or the total number of iterations exceeds 10000.

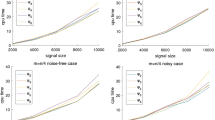

Having a more reliable comparison, demonstrating the total behavior of the new presented procedure and better understanding of the performance of the all compared algorithms, we evaluated the performance profiles of all codes based on a set of metrics such as the number of total iterations (\(\mathtt{ni}\)), the number of function evaluations (\(\mathtt{nf}\)) and time in seconds (\(\mathtt{sec}\)), from left to right in Fig. 2, by applying performance profiles MATLAB code proposed by Dolan and Morè in [14]. In subfigures a–c of Fig. 2, \(P(\zeta )\) designates the percentage of problems which are solved within a factor \(\zeta \) of the best solver.

In view of graphs depicted in the subfigures of Fig. 2, GSCG is clearly the winner of all performance metrics and attains most wins in terms of \(\mathtt{ni}\) for around 99%, \(\mathtt{nf}\) around 98% and \(\mathtt{sec}\) around 92%. Furthermore, Fig. 2 verifies the ability of GSCG to solve the set of all test problems for \(\zeta \ge 1\) in \(\mathtt{ni}\) and \(\mathtt{nf}\) and for \(\zeta \ge 2\) in \(\mathtt{sec}\).

Table 2 contains the average of \(\mathtt{ni}\), \(\mathtt{nf}\) and \(\mathtt{sec}\) of the reconstructions with respect to the original signal \(x_s\) over 5 runs for the algorithms tested; these values are rounded (towards zero) to integers. These results show that, in solving problem (1), GSCG is faster than TwIST, SpaRSA and fpcbb and much faster than FISTA with the clear lowest values of \(\mathtt{ni}\) and \(\mathtt{nf}\).

Let us first measure the quality of restoration \(x^*\) through the relative error to the original signal \(x_s\) by

Figures 3, 4, 5, 6, 7 and 8 are dedicated to the different six types of matrix A, respectively. These figures give some comparisons among GSCG, SpaRSA, TwIST and fpcbb in terms of function values versus iterations (subfigures a), relative error versus iterations (subfigures b) and the ability of reconstructions [subfigures c–h with recovered signal (red circles) versus original signal (blue peaks)]. These subfigures verify that GSCG is competitive with fpcbb, TwIST and SpaRSA in terms of compared values. In addition, comparing graphs and noticing to the real errors of the reconstructions, one get the efficiency and robustness of the GSCG process in recovering large sparse signals in comparisons with the other solvers.

6.2 ID problem

Here, we present an application of the GSCG method to ID problems in order to demonstrate another applicative side of it. An image is a signal that conveys information about the behavior or attributes of a physical object. Often the image is more or less blurry which may arise due to environmental effects, sensor imperfection, communication errors or poor illumination. In ID, the main goal is to recover the original, sharp image. There are many directions and tools explored in studying ID. One of them is the class of algorithms which uses sparse and redundant representation modeling. In many situations, the blur is indeed linear or at least well approximated by a linear model which leads us to study the following linear system:

where X is a finite-dimensional vector space, x is a clean image, A is a blurring operator, b is the observed image and \(\kappa \) is an impulsive noise. Since (57) is mostly underdetermined and \(\kappa \) is not commonly available, an approximate solution of it can be found by solving (2). For a quantitative evaluation of the results, we use the peak signal-to-noise ratio \(\mathtt{PSNR}\) defined as

where \(\Vert \cdot \Vert _F\) is the Frobenius norm, \(x_t\) denotes \(m\times n\) true image and pixel values are in [0, 1]. In general, a higher \(\mathtt{PSNR}\) points out that the reconstruction is of higher quality, see [1, 2]. The true images are available in http://homepage.univie.ac.at/masoud.ahookhosh/uploads/OSGA_v1.1.tar.gz.

Here, the parameter values are taken the same as those in the CS experiment. In our numerical experiments, all algorithms are stopped whenever the total number of iterations exceeds 50. Figures 9, 10 and 11 show that GSCG achieves the best \(\mathtt{PSNR}\) among fpcbb, FISTA and TwIST.

7 Conclusion

In this study, we have introduced and tested GSCG to solve the convex \(\ell _1\)-regularized optimization problem. The main goal here is to improve and accelerate ISTA by introducing a new descent condition, a new search direction and a new shrinkage step-size. GSCG takes advantages of the new generated direction when it satisfies descent property under the new descent condition and also meets a mild norm condition. Otherwise, it utilizes the ISTA descent direction which uses the new shrinkage step-size. Based on the CG idea, the new presented direction is a special linear combination of the ISTA descent direction and the previous direction. The new shrinkage step-size is a specific linear combination of the BB step-size and the previous step-size. The global convergence along with sublinear (R-linear) convergence rate of GSCG for the convex (strongly convex) \(\ell _1\)-regularized optimization problem is established. In a series of numerical experiments, we give the experimental evidences that show the efficiency and robustness of GSCG in comparison with some state-of-the-art solvers when applied to the CS and ID problems.

References

Ahookhosh, M.: High-dimensional nonsmooth convex optimization via optimal subgradient methods. Ph.D. Thesis, Faculty of Mathematics, University of Vienna (2015)

Ahookhosh, M.: User’s manual for OSGA (Optimal SubGradient Algorithm). http://homepage.univie.ac.at/masoud.ahookhosh/uploads/User’s_manual_for_OSGA.pdf (2014)

Ahokhosh, M., Amini, K.: An efficient nonmonotone trust-region method for unconstrained optimization. Numer. Algorithms 59(4), 523–540 (2012)

Amini, K., Ahookhosh, M., Nosratpour, H.: An inexact line search approach using modified nonmonotone strategy for unconstrained optimization. Numer. Algorithm 66, 49–78 (2014)

Amini, K., Shiker, M.A.K., Kimiaei, M.: A line search trust-region algorithm with nonmonotone adaptive radius for a system of nonlinear equations. 4OR-Q. J. Oper. Res. 14(2), 133–152 (2016)

Barzilai, J., Borwein, J.M.: Two point step size gradient method. IMA J. Numer. Anal. 8, 141–148 (1988)

Bazaraa, M.S., Sherali, S.D., Shetty, C.M.: Nonlinear Programming: Theory and Algorithms, 3rd edn. Wiley, New York (2006)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Birgin, E.G., Mart̀inez, J.M., Raydan, M.: Inexact spectral projected gradient methods on convex sets. IMA J. Numer. Anal. 23(4), 539–559 (2003)

Birgin, E.G., Mart̀inez, J.M., Raydan, M.: Nonmonotone spectral projected gradient methods on convex sets. SIAM J. Optim. 10(4), 1196–1211 (2000)

Bioucas-Dias, J., Figueiredo, M.: A new TwIST: two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 16, 2992–3004 (2007)

Candès, E., Romberg, J., Tao, T.: Robust uncertainty principles, exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory. 52(2), 489–509 (2006)

Combettes, P.L., Wajs, V.R.: Signal recovery by proximal forward–backward splitting. Multiscale Model. Simul. 4(4), 1168–1200 (2005)

Dolan, E., Morè, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Donoho, D.: Compressed sensing. IEEE Trans. Inf. Theory. 52(4), 1289–1306 (2006)

Daubechies, I., Defrise, M., Mol, C.D.: An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57(11), 1413–1457 (2004)

Elad, M.: Sparse and Redundant Representation from Theory to Application in Signal and Image Processing. Springer, Berlin (2010). ISBN 978-1-4419-7011-4

Eldar, C.Y., Kutyniok, G.: Compressed Sensing: Theory and Application. Cambridge University Press, New York (2012). ISBN 978-1-107-00558-7

Esmaeili, H., Rostami, M., Kimiaei, M.: Combining line search and trust-region methods for \(\ell _1\)-minimization. Int. J. Comput. Math. 95(10), 1950–1972 (2018)

Figueiredo, M.A., Nowak, R.D.: An EM algorithm for wavelet-based image restoration. IEEE Trans. Image Process. 12(8), 906–916 (2003)

Figueiredo, M.A., Nowak, R.D., Wright, S.J.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 1(4), 586–597 (2007)

Fletcher, R., Reeves, C.: Function minimization by conjugate gradients. Comput. J. 7, 149–154 (1964)

Foucart, S., Rauhut, H.: A Mathematical Introduction to Compressive Sensing. Springer, New York (2013)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23, 707–716 (1986)

Hager, W.W., Phan, D.T., Zhang, H.: Gradient based methods for sparse recovery. SIAM J. Imaging Sci. 41, 146–165 (2011)

Hager, W.W., Zhang, H.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2(1), 35–58 (2006)

Hale, E.T., Yin, W., Zhang, Y.: Fixed-point continuation for \(\ell _1\)-minimization: methodology and convergence. SIAM J. Optim. 19(3), 1107–1130 (2008)

Hale, E.T., Yin, W., Zhang, Y.: Fixed-point continuation applied to compressed sensing: implementation and numerical experiment. J. Comput. Math. 28(2), 170–194 (2010)

Hestenes, M.R., Stiefel, E.L.: Methods of conjugate gradients for solving linear systems. J. Res. Nat. Bur. Stand. 49, 409–436 (1952)

Huang, Y., Liu, H.: A Barzilai–Borwein type method for minimizing composite functions. Numer. Algorithm 69, 819–838 (2015)

Iiduka, H.: Hybrid conjugate gradient method for a convex optimization problem over the fixed-point set of a nonexpansive mapping. J. Optim. Theory Appl. 140, 463–475 (2009)

Iiduka, H., Yamada, I.: A use of conjugate gradient direction for the convex optimization problem over the fixed point set of a nonexpansive mapping. SIAM J. Optim. 19(4), 1881–1893 (2009)

Kowalski, R.M.: Proximal algorithm meets a conjugate descent. Pac. J. Optim. 12(3), 549–667 (2011)

Nocedal, J., Wright, S.: Numerical Optimization. Springer, Berlin (1999)

Parikh, N., Boyd, S.: Proximal algorithms. Found. Trend. Optim. 1(3), 123–231 (2013)

Polak, E., Ribière, G.: Note sur la convergence de directions conjugées. Rev. Fr. Inform. Rech. Opèrtionnelle 3e Année 16, 35–43 (1969)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7(1), 26–33 (1997)

Tseng, P., Yun, S.: A coordinate gradient descent method for nonsmooth separable minimization. Math. Program. 117(1), 387–423 (2009)

Wen, Z., Yin, W., Goldfarb, D., Zhang, Y.: A fast algorithm for sparse reconstruction based on shrinkage subspace optimization and continuation. SIAM J. Sci. Comput. 32(4), 1832–1857 (2010)

Wen, Z., Yin, W., Zhang, H., Goldfarb, D.: On the convergence of an active set method for \(\ell _1\)-minimization. Optim. Methods Softw. 27(6), 1127–1146 (2012)

Wright, S.J., Nowak, R.D., Figueiredo, M.A.T.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57(7), 2479–2493 (2009)

Xiao, Y., Wu, S.-Y., Qi, L.: Nonmonotone Barzilai–Borwein gradient algorithm for \(\ell _1\)-regularized nonsmooth minimization in compressive sensing. J. Sci. Comput. 61, 17–41 (2014)

Zhang, H.C., Hager, W.W.: A nonmonotone line search technique and its application to unconstrained optimization. SIAM J. Optim. 14(4), 1043–1056 (2004)

Acknowledgements

We would like to thank the high performance computing (HPC) center, a branch of institute for research in fundamental Physics and Mathematics (IPM), to help us to use HPC’s cluster for computing numerical results. The third author acknowledges the financial support of the Doctoral Program “Vienna Graduate School on Computational Optimization” funded by Austrian Science Foundation under Project No. W1260-N35.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Esmaeili, H., Shabani, S. & Kimiaei, M. A new generalized shrinkage conjugate gradient method for sparse recovery. Calcolo 56, 1 (2019). https://doi.org/10.1007/s10092-018-0296-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10092-018-0296-x

Keywords

- \(\ell _1\)-Minimization

- Compressed sensing

- Image debluring

- Shrinkage operator

- Generalized conjugate gradient method

- Nonmonotone technique

- Line search method

- Global convergence