Abstract

This study aims to minimize the sum of a smooth function and a nonsmooth \(\ell _{1}\)-regularized term. This problem as a special case includes the \(\ell _{1}\)-regularized convex minimization problem in signal processing, compressive sensing, machine learning, data mining, and so on. However, the non-differentiability of the \(\ell _{1}\)-norm causes more challenges especially in large problems encountered in many practical applications. This study proposes, analyzes, and tests a Barzilai–Borwein gradient algorithm. At each iteration, the generated search direction demonstrates descent property and can be easily derived by minimizing a local approximal quadratic model and simultaneously taking the favorable structure of the \(\ell _{1}\)-norm. A nonmonotone line search technique is incorporated to find a suitable stepsize along this direction. The algorithm is easily performed, where each iteration requiring the values of the objective function and the gradient of the smooth term. Under some conditions, the proposed algorithm appears globally convergent. The limited experiments using some nonconvex unconstrained problems from the CUTEr library with additive \(\ell _{1}\)-regularization illustrate that the proposed algorithm performs quite satisfactorily. Extensive experiments for \(\ell _{1}\)-regularized least squares problems in compressive sensing verify that our algorithm compares favorably with several state-of-the-art algorithms that have been specifically designed in recent years.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The focus of this paper is on the following structured minimization:

where \(f:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is a continuously differentiable (may be nonconvex) function that is bounded below, \(\Vert \cdot \Vert _1\) is the \(\ell _{1}\)-norm of a vector, and parameter \(\mu >0\) is used to trade off both terms for minimization. Given its structure, problem (1.1) covers a wide range of apparently related formulations in different scientific fields, including linear and logistic regression [45], compressive sensing [18], sparse inverse covariance estimation [46], and sparse principal component analysis [27, 42].

1.1 Problem Formulations

A special case of model (1.1) is the \(\ell _{1}\)-norm regularized least squares problem shown below

where \(A\in {\mathbb {R}}^{m\times n}\,(m\ll n)\) is a linear operator, and \(b\in {\mathbb {R}}^m\) is an observation. Model (1.2) mainly appears in compressive sensing, which is an emerging methodology in digital signal processing, and has attracted intensive research activities over the past years [8–11, 18]. Compressive sensing is based on the fact that if the original signal is sparse or approximately sparse in some orthogonal basis, an exact restoration can be produced by solving problem (1.2).

Another prevalent case of (1.1) that has attracted much interest in machine learning is the linear and logistic regression. Assume the training date \(A=[a_1,\ldots ,a_m]^\top \in {\mathbb {R}}^{m\times n}\) and class labels \(y\in \{-1,+1\}^m\). A linear classifier is a hyperplane \(\{w_i:x^\top a_i+b=0\}\), where \(x\in {\mathbb {R}}^n\) is a set of weights, and \(b\in {\mathbb {R}}\) is the intercept. A frequently used model is the \(\ell _{2}\)-loss support vector machine, which is given by

The \(\ell _{2}\)-lose function is continuous, but not differentiable because of the “max” operation. In the logistic model, the probability distribution of the classifier label \(y\), given \(a_i\), has the form

The weights \(x\) and the intercept \(b\) can be found by minimizing the average loss as

where \(\phi (z)=\log (1+e^{-z})\) is the logistic loss function. Finding the maximum likelihood estimate of \(x\) and \(b\) is called logistic regression. To derive a sparse vector \(x\), the \(\ell _{1}\)-regularized logistic regression can be formulated as

Obviously, the logistic loss function is twice differentiable.

Although the models of these problems have similar structures, they may be very different from a real-data point of view. For example, in compressive sensing, the length of measurement \(m\) is much smaller than that of the original signal \((m\ll n)\), and the encoding matrix \(A\) is dense. However, in machine learning, the numbers of instance \(m\) and features \(n\) are both large, and the data \(A\) is very sparse.

1.2 Existing Algorithms

As the \(\ell _{1}\)-regularized term is non-differentiable when \(x\) contains zero values, the use of the standard unconstrained smooth optimization tools are generally precluded. In the past decades, various approaches have been proposed, analyzed, and implemented in compressive sensing and machine learning literature. One approach involves a variety of algorithms for special cases where in \(f(x)\) has a specific functional form, such as the least squares (1.2), the square loss (1.3), and the logistic loss (1.4). In the following, we briefly review some of these approaches in the literature.

The first popular approach falls into the coordinate descent method. At the current iterate \(x_k\), the simple coordinate descent method updates one component at a time to generate \(x_k^j,\,j=1,\ldots ,n+1\) such that \(x_k^1=x_k,\,x_k^{n+1}=x_{k+1}\) and solves a one-dimensional subproblem

where \(e^j\) is defined as the \(j\)th column of an identity matrix. Clearly, the objective function has one variable and one non-differentiable point at \(z=-e^j\). To solve the logistic regression model (1.4), Bayesian binary regression (BBR) [21] solves the sub-problem approximately using the trust region method with Newton step; coordinate descent with Newton step (CDN)[12] improves the performance of BBR by applying a one-dimensional Newton method and a line search technique. Instead of cyclically updating one component at a time, the stochastic coordinate descent method [35] randomly selects the working components to achieve enhanced performance; the block Coordinate Gradient Descent (CGD) algorithm [37, 47] is based on the approximated convex quadratic model for \(f\) and selects the working variables according to some rules.

The second type of approach is to transform model (1.1) into an equivalent box-constrained optimization problem by variable splitting. Let \(x=u-v\) with \(u_i=\max \{0,x_i\}\) and \(v_i=\max \{0,-x_i\}\). Then, model (1.1) can be reformulated equivalently as

The objective function and constraints are smooth. Therefore, the model can be solved by any standard box-constrained optimization technique. However, an obvious drawback of this approach is that it doubles the number of variables. Gradient projection for sparse reconstruction (GPSR) [20] solves (1.6) and subsequently solves (1.2) using the Barzilai–Borwein gradient method [2] with an efficient nonmonotone line search [22]. The method is actually an application of the well-known spectral projection gradient [5] in compressive sensing. The trust region Newton algorithm [26, 45] minimizes (1.6) then solves the logistic regression model (1.4) and exhibits powerful vitality through a series of comparisons. To solve (1.3) and (1.4), the interior-point algorithm [24, 25] forms a sequence of unconstrained approximations by appending a “barrier” function to the objective function (1.6), thereby ensuring that \(u\) and \(v\) remain sufficiently positive. Moreover, truncated Newton steps and preconditioned conjugate gradient iterations are used to produce the search direction.

The third type of method approximates the \(\ell _{1}\)-regularized term with a differentiable function. The simple approach replaces the \(\ell _{1}\)-norm with a sum of multi-quadric functions

where \(\epsilon \) is a small positive scalar. This function is twice-differentiable, and \(\lim _{\epsilon \rightarrow 0^+}l(x)=\Vert x\Vert _1\). Subsequently, several smooth unconstrained optimization approaches can be applied based on this approximation. However, the performance of these algorithms is highly influenced by the parameter values, and the condition number of the corresponding Hessian matrix becomes large as \(\epsilon \) decreases. Nesterov’s smoothing technique [28] is used to construct smooth functions to approximate some specific structured convex nonsmooth function. Based on this technique, NESTA (Nesterov’s Algorithm) [4] solves problem (1.2) using first-order gradient information.

The fourth type of approach falls into the subgradient-based Newton-type algorithm. An important attempt in this class is that of Andrew and Gao [1], who extend the well-known limited memory BFGS method [30] to solve \(\ell _{1}\)-regularized logistic regression model (1.4) and propose an orthant-wise limited memory quasi-Newton method. At each iteration, this method computes a search direction over an orthant containing the previous point. The subspace BFGS method [44] involves an inner iteration approach to find the descent quasi-Newton direction and subgradient Wolfe conditions to determine the stepsize that ensures decreasing the objective functions. This method is globally convergent and capable of solving general nonsmooth convex minimization problems.

Aside from GPSR and NESTA, other specially designed solvers are available. Using an operator splitting technique, Hale, Yin, and Zhang derive the iterative shrinkage/thresholding (IST) fixed-point continuation algorithm (FPC) [23]. By combining the interior-point algorithm [24], FPC is also extended to solve large-scale \(\ell _{1}\)-regularized logistic regression [36]. A closely related algorithm to FPC is the fixed-point continuation and active set FPC_AS [39, 40], which solves a smooth subproblem to determine the magnitudes of the nonzero components of \(x\) based on an active set. Two-step IST algorithm (TwIST) [6] and fast IST algorithm (FISTA) [3] speed up the performance of IST algorithm and have virtually the same complexity but with better convergence properties. Another closely related method is the sparse reconstruction algorithm (SpaRSA) [41], which involves minimizing a non-smooth convex problem with separable structures. The spectral projected gradient algorithm named SPGL1 [38] solves the lasso model (1.2) by the spectral gradient projection method with an efficient Euclidean projection on \(\ell _{1}\)-norm ball. The alternating directions method called YALL1 [43] investigates \(\ell _{1}\)-norm problems from either the primal or the dual forms and solves \(\ell _{1}\)-regularized problems of different types.

All the reviewed algorithms differ in various aspects such as convergence speed, ease of implementation, and practical applicability. No evidence can verify that which algorithm outperforms the others under all scenarios.

1.3 Contributions and Organization

Although much progress has been achieved in solving problem (1.1), existing algorithms mainly deal with cases where \(f\) is a convex function and even least squares. In this study we propose a Barzilai–Borwein gradient algorithm to solve \(\ell _{1}\)-regularized nonsmooth minimization problems. At each iteration, we approximate \(f\) locally by a convex quadratic model, where the Hessian is replaced by the multiples of a spectral coefficient with an identity matrix. The search direction is determined by minimizing the quadratic model and maximizing the use of the \(\ell _{1}\)-norm structure. We show that the generated direction contains the one in FPC_AS [40] as a special case and is descent, which guarantees the existence of a positive stepsize along the direction. In our algorithm, we adopt the nonmonotone line search of Grippo, Lampariello, and Lucidi [22], which allows the function values to increase occasionally in some iteration but decrease in the whole iterative process. The nonmonotone line search is attractive because it saves a considerable number of function evaluations, which are the computational burden in large datasets. The method is easily performed, as only the value of objective function and the gradient of the smooth term are needed at each iteration. We show that each cluster of the iterates generated by this algorithm is a stationary point of \(F\). Although we mainly consider the \(\ell _{1}\)-regularizer in this study, the \(\ell _{2}\)-norm regularization problem and the matrix trace norm problems can also be readily included in our framework, thereby broadening the capability of the algorithm. We implement the algorithm to solve problem (1.1), where \(f\) is a nonconvex smooth function from the CUTEr library to show the efficiency of the algorithm. We also run the algorithm to solve \(\ell _{1}\)-regularized least squares and do performance comparisons with state-of-the-art algorithms namely NESTA, CGD, TwIST, FPC_BB, FPC_AS, and GPSR. The results of the comparisons show that the proposed algorithm is effective, comparable, and promising.

We organize the rest of this paper as follows. In Sect. 2, we briefly recall some preliminary results in optimization literature to describe our motivation for our work, construct the search direction, and present the steps of our algorithm along with some remarks. In Sect. 3, we establish the global convergence theorem under some mild conditions. In Sect. 4, we show how to extend the algorithm to solve \(\ell _{2}\)-norm and matrix trace norm minimization problems. In Sect. 5, we present experiments to show the efficiency of the algorithm in solving the \(\ell _{1}\)-regularized nonconvex problem and least squares problem. In Sect. 6, we conclude our paper.

2 Algorithm

2.1 Preliminary Results

First, consider the minimization of the smooth function without the \(\ell _{1}\)-norm regularization

The basic idea of Newton’s method for this problem is to iteratively use the quadratic approximation \(q_k\) to the objective function \(f(x)\) at the current iterate \(x_k\) and to minimize the approximation \(q_k\). Let \(f:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) be twice continuously differentiable, and let its Hessian \(G_k=\nabla ^2 f(x_k)\) be positive definite. Function \(f\) at the current \(x_k\) is modeled by the quadratic approximation \(q_k\) as

where \(s=x-x_k\). Minimizing \(q_k(s)\) yields

which is Newton’s formula, and \(s_k=x_{k+1}-x_k=-G_k^{-1}\nabla f(x_k)\) is the so-called Newton’s direction.

For the positive definite quadratic function, Newton’s method can reach the minimizer with one iteration. However, when the starting point is far away from the solution, the method cannot guarantee that \(G_k\) is positive definite and that Newton’s direction \(d_k\) is a descent direction. Let the quadratic model of \(f\) at \(x_{k+1}\) be

Finding the derivative yields

Setting \(x=x_k,\,s_k=x_{k+1}-x_k\), and \(y_k=\nabla f(x_{k+1})-\nabla f(x_k)\), we obtain

For various practical problems, either the computing efforts of the Hessian matrices are very expensive or the evaluation of the Hessian is difficult; the Hessian is not even available analytically. These challenges lead to the quasi-Newton method, which generates a series of Hessian approximations through the use of the gradient while maintaining a fast rate of convergence. Instead of computing the Hessian \(G_{k}\), the quasi-Newton method constructs the Hessian approximation \(B_{k}\), where the sequence \(\{B_k\}\) possesses positive definiteness and satisfies

In general, \(B_{k+1}\) is obtained by updating \(B_k\) with some typical and popular formulae, such as BFGS, DFP, and SR1.

Unfortunately, the standard quasi-Newton algorithm, or even its limited memory versions, does not scale well enough to train very large-scale models involving millions of variables and training instances, which are commonly encountered, for example, in natural language processing. The main computational burden of the Newton-type algorithm is the storage of a large matrix at per-iteration, which may exceed the memory capability of a personal computer (PC). Hence, a matrix-free algorithm that particularly deals with large-scale problems is urgently needed. For this purpose, the approximation Hessian \(B_k\) should be furthermore simplified as a diagonal matrix with positive components, i.e., \(B_k=\lambda _k I\) with an identity matrix \(I\) and \(\lambda _k>0\). Then, the quasi-Newton condition changes to the form

Multiplying both sides by \(s_k^\top \) yields

Similarly, multiplying both sides by \(y_k^\top \), yields

As indicated by both formulae, if \(s_k^\top y_k>0\), then the matrix \(\lambda _{k+1}I\) is positive definite, which ensures that the search direction \(-\lambda _{k}^{-1}\nabla f(x_k)\) is descent at current point.

The formulae (2.4) and (2.5) were first developed by Barzilai and Borwein [2] for the quadratic case of \(f\). This method essentially comprises the steepest descent method and adopts either (2.4) or (2.5) as the stepsize along a negative gradient direction. Barzilai and Borwein [2] showed that the corresponding iterative algorithm is R-superlinearly convergent for the quadratic case. Raydan [32] presented a globalization strategy based on nonmonotone line search [22] for the general non-quadratic case. Other developments of Barzilai–Borwein gradient algorithm can be found in [5, 13, 15–17, 31, 49].

2.2 Algorithm

Given its simplicity and numerical efficiency, the Barzilai–Borwein gradient method is very effective in dealing with large-scale smooth unconstrained minimization problems. However, the application of the Barilai-Borwein gradient algorithm in \(\ell _{1}\)-regularized nonsmooth optimization is problematic because the regularization is non-differentiable. In this subsection, we construct an iterative algorithm to solve the \(\ell _{1}\)-regularized structured nonconvex optimization problem. The algorithm can be described as the iterative form

where \(\alpha _k\) is a stepsize, and \(d_k\) is a search direction defined by minimizing a quadratic-approximated model of \(F\).

Now, we turn our attention to consider the original problem with \(\ell _{1}\)-regularizer. As \(\ell _{1}\)-term is non-differentiable, at \(x_k+d_k\), we use the approximated form

where \(h\) is a small positive number, and the case \(h=1\) is reduced to the equivalent form. Subsequently, the objective function \(F\) is approximated by the quadratic approximation \(Q_k\),

Minimizing (2.6) yields

where \(x_k^i,\,d^i\), and \(\nabla f^i(x_k)\) denote the \(i\)th component of \(x_k,\,d\), and \(\nabla f(x_k)\), respectively. The favorable structure of (2.7) adopts the explicit solution

Hence, the search direction at current point is

where \(|\cdot |\) and “\(\max \)” are interpreted as componentwise, and the convention \(0\cdot 0/0=0\) is followed. When \(\mu =0\), (2.8) is reduced to \(d_k=-\lambda _k^{-1}\nabla f(x_k)\), i.e., the traditional Barzilai–Borwein gradient algorithm in smooth optimization. The key motivation for this formulation is that the optimization problem in Eq. (2.7) can be easily solved by exploiting the structure of the \(\ell _{1}\)-norm.

For \(y\in {\mathbb {R}}^n\) and \(\tau \in {\mathbb {R}}\), the unique minimizer of \(\tau \Vert x\Vert _1+\frac{1}{2}\Vert x-y\Vert ^2\) is given explicitly by

As a result, in the case of \(h=1\), (2.8) is reduced to

which is essentially the search direction of the algorithm FPC_AS [39, 40]. Just because of this, in the following, we only consider the case where \(h\in (0,1)\). The important observation illustrates that our approach generalizes the definition of the search direction of FPC_AS using a small scalar \(h\). For the reason, we believe that the search direction should be generated by theoretically minimizing the quadratic approximation of both terms in \(F\), not just in the smooth function \(f\). Another advantage of our approach is that an appropriate value of \(h\) may result in an improved numerical performance, and that the approach is not restricted to the special case \(h=1\).

Lemma 2.1

For any real vectors \(a\in {\mathbb {R}}^n\) and \(b\in {\mathbb {R}}^n\), the following function \(L(x)\) is non-decreasing

Proof

Note that

hence, it is reduced to prove that \(l^i(x)\) is non-decreasing for each \(i\).

-

(a)

When \(a^i\ge 0\) and \(a^ix+b^i\ge 0,\,l^i(x)=b^i\).

-

(b)

When \(a^i\ge 0\) and \(a^ix+b^i\le 0\), we obtain

$$\begin{aligned} l^i(x)=\frac{-2a^i-b^ix}{x}=\frac{-2a^i}{x}-b^i. \end{aligned}$$ -

(c)

When \(a^i\le 0\) and \(a^ix+b^i\ge 0\), we obtain

$$\begin{aligned} l^i(x)=\frac{2a^i+b^ix}{x}=\frac{2a^i}{x}+b^i. \end{aligned}$$ -

(d)

When \(a^i\le 0\) and \(a^ix+b^i\ge 0\), we have \(l^i(x)=-b^i\).

Clearly \(l^i(x)\) is non-decreasing for each case. Hence, \(L(x)\) is non-decreasing. \(\square \)

The following lemma shows that the direction defined by (2.8) is descent if \(d_k\ne 0\).

Lemma 2.2

Suppose that \(\lambda _k>0\) and \(d_k\) is determined by (2.8). Then,

and

Proof

By the differentiability of \(f\) and the convexity of \(\Vert x\Vert _1\), we show that for any \(\theta \in (0,h]\,(\theta /h\in (0,1])\),

which is exactly (2.10).

Noting \(d_k\) is the minimizer of (2.6) and \(\theta \in (0,h]\), from (2.6) as well as the convexity of \(\Vert x\Vert _1\), we have

Hence,

The last three terms of the left hand side in (2.12) can be re-organized as

where the inequality is from Lemma 2.1. Combining (2.12) with (2.13), we obtain

Dividing both sides of (2.14) by \((1-\theta )\) and noting \(1-\theta >0\) when \(h\in (0,1)\), we arrive at the desired result (2.11). \(\square \)

When the search direction is determined, a suitable stepsize along this direction should be found to determine the next iterative point. In this study we pay particular attention to a nonmonotone line search strategy, which differs from the traditional Armijo line search or the Wolfe–Powell line search. The traditional Armijo line search requires the function value to decrease monotonically at each iteration. This requirement may cause the sequence of iterations to follow the bottom of a curved narrow valley, which commonly occurs in difficult nonlinear problems. To overcome this difficulty, a possible alternative is to allow an occasional increase in the objective function at each iteration. To clarify further the proposed algorithm, we briefly recall the earliest nonmonotone line search technique by Grippo, Lampariello, and Lucidi [22]. Let \(\delta \in (0,1),\,\rho \in (0,1)\), and \(\tilde{m}\) be a positive integer. The nonmonotone line search is used to choose the smallest nonnegative integer \(j_k\) such that the stepsize \(\alpha _k=\tilde{\alpha }\rho ^{j_k}\) satisfies

where

If \(m(k)=0\), the above nonmonotone line search reduces to the standard Armijo line search.

Based on Lemma 2.2, the inequality (2.15) should be modified as

where

As shown in (2.11) \(\Delta _k\le -\frac{\lambda _k}{2}\Vert d_k\Vert ^2_2<0\) whenever \(d_k\ne 0\). Hence, \(\alpha _k\) given by (2.16) is well-defined.

Given all the derivations above, we now describe the nonmonotone Barzilai–Borwein gradient algorithm (hereafter referred to NBBL1) as follows.

Remark 1

If \(\lambda _k>0\), then the generated direction is descent. However, the condition \(\lambda _k>0\) in this case may not be fulfilled and the hereditary descent property can no longer be guaranteed. To cope with this drawback, we should keep the sequence \(\{\lambda _k\}\) uniformly bounded; that is, for sufficiently small \(\lambda _{(\min )}>0\) and sufficiently large \(\lambda _{(\max )}>0\), the \(\lambda _k\) is forced as

This approach ensures that \(\lambda _k\) is bounded from zero and subsequently ensures that \(d_k\) is descent at per-iteration.

Remark 2

Lemma 2.2demonstrates the existence of a constant \(\theta \in (0,h]\) such that \(x_k+\theta d_k\) is a descent point. Hence, choosing the initial stepsize as \(\tilde{\alpha }=h\) is suggested in practical computation.

3 Convergence Analysis

Thfis section is devoted to presenting some favorable properties of the generated direction and establishing the global convergence of Algorithm 1. Our convergence result utilizes the following assumption:

Assumption 1

The level set \(\Omega =\{x:f(x)\le f(x_0)\}\) is bounded.

To easily understand the lemma given below, we present two frequently used definitions in convex analysis.

Definition 3.1

The directional derivative of a multivariate function \(f(x_1,\ldots ,x_n)\) along a given vector \(d=(d_1,\ldots ,d_n)\) at a given point \(x\) is defined by

Definition 3.2

A feasible point \(x\in {\mathbb {R}}^n\) is said to be a stationary point of \(f\) if \(f'(x;d)\ge 0\) for all \(d\in {\mathbb {R}}^n\).

Lemma 3.3

Suppose that \(\lambda _k>0\) and \(d_k\) is defined by (2.8) with \(h\in (0,1]\). Then, \(x_k\) is a stationary point of problem (1.1) if and only if \(d_k=0\).

Proof

If \(d_k\ne 0\), then Lemma 2.2 shows that \(d_k\) is descent direction at \(x_k\), which implies that \(x_k\) is not a stationary point of \(F\). If \(d_k=0\) is the solution of (2.7), we obtain the following for any \(\alpha d\in {\mathbb {R}}^n\) with \(\alpha >0\):

Combining \(f(x_k+\alpha d)-f(x_k)=\alpha \nabla f(x_k)^\top d+o(\alpha )\) with (3.1) yields

where the second inequality is from Lemma 2.1. Hence, \(x_k\) is a stationary point of \(F\). \(\square \)

The proof of the following lemma is similar to the Theorem in [22].

Lemma 3.4

Let \(l(k)\) be an integer such that

Then, the sequence \(\{F(x_{l(k)})\}\) is non-increasing, and the search direction \(d_{l(k)}\) satisfies

Proof

From the definition of \(m(k)\), we have \(m(k+1)\le m(k)+1\). Hence,

Based on (2.16), we obtain the following for all \(k>\tilde{m}\):

By assumption 1, the sequence \(\{F(x_{l(k)})\}\) admits a limit for \(k\rightarrow \infty \). Hence,

Given the definition of \(\Delta _k\) in (2.17) and the inequality (2.11), we can deduce that

Combining the previous equation with (3.3) yields

which shows the desired result (3.2). \(\square \)

Theorem 3.5

Let the sequences \(\{x_k\}\) and \(\{d_k\}\) be generated by Algorithm 1. Then, a subsequence \({\mathcal {K}}\) exists such that

Proof

As shown in [22], (3.2) also implies that

Now, let \(\bar{x}\) be a limit point of \(\{x_k\}\) and \(\{x_k\}_{{\mathcal {K}}_1}\) be a subsequence of \(\{x_k\}\) converging to \(\bar{x}\). Then, by (3.5), either \(\lim \limits _{k\rightarrow \infty ,k\in {\mathcal {K}}_1} \Vert d_k\Vert _2=0\), which implies \(\Vert \bar{d}\Vert _2=0\), or a subsequence \(\{x_k\}_{{\mathcal {K}}}\,({\mathcal {K}}\subset {\mathcal {K}}_1)\) exists such that

In this case, we assume that a constant \(\epsilon >0\) exists such that

As \(\alpha _k\) is the first value that satisfies (2.16), we can assume based on Step 3 in Algorithm 1 that an index \(\bar{k}\) exists such that for all \(k\ge \bar{k}\) and \(k\in {\mathcal {K}}\),

As \(f\) is continuously differentiable, we can find based on the mean-value theorem on \(f\) that a constant \(\theta _k\in (0,1)\) exists such that

By combining the previous equation with (3.8), we obtain

As \(\tilde{\alpha }=h\) and \(\alpha _k\rightarrow 0\) in (3.6), we obtain \(\alpha _k<\rho h\) as \(k\rightarrow \infty \). Based on Lemma 2.1,

Subtracting both sides of (3.9) by \(\Delta _k\) and noting the definition of \(\Delta _k\)

Taking the limit as \(k\in {\mathcal {K}},\,k\rightarrow \infty \) in both sides of (3.10) and using the smoothness of \(f\), we obtain

which implies that \(\Vert d_k\Vert _2\rightarrow 0\) as \(k\in {\mathcal {K}},\,k\rightarrow \infty \). This result yields a contradiction because (3.7) indicates that \(\Vert d_k\Vert _2\) is bounded away from zero. \(\square \)

4 Some Extensions

In this section, we show that our algorithm can be readily extended to solve \(\ell _{2}\)-norm and matrix trace norm minimization problems in machine learning, thereby broadening the applicable range of our approach significantly.

First, we consider the \(\ell _{2}\)-regularization problem

The search direction \(d_k\) is clearly determined by minimizing

Based on [19], the explicit solution is

i.e.,

Now, we consider the matrix trace norm minimization problem

where the functional \(\Vert X\Vert _*\) is the trace norm of matrix \(X\), which is defined as the sum of its singular values. That is, assume that \(X\) has \(r\) positive singular values of \(\sigma _1\ge \sigma _2\ge \cdots \ge \sigma _r\ge 0\); then, \(\Vert X\Vert _*=\sum _{i=1}^r\sigma _i\). The matrix trace norm is also known as the Schatten \(\ell _{1}\)-norm, Ky Fan norm, and nuclear norm [33]. This problem has received much attention because it is closely related to the affine rank minimization problem, which has appeared in many control applications, including controller design, realization theory, and model reduction.

Similar to the steps undertaken in previous sections, we can readily reformulate (2.6) as the following quadratic model to determine the search direction:

To obtain the exact solution of (4.2), we now consider the singular value decomposition (SVD) of a matrix \(Y\in {\mathbb {R}}^{m\times n}\) with rank \(r\) as

where \(U\) and \(V\) are \(m\times r\) and \(r\times n\) matrices, respectively, with orthonormal columns, and the singular value \(\sigma _i\) is positive. For each \(\tau >0\), let

where \([\cdot ]_+=\max \{0,\cdot \}\). \({\mathcal {D}}_{\tau }(Y)\) obeys the following nuclear norm minimization problem [7], i.e.,

Comparing (4.2) with (4.3), we deduce that

or, equivalently,

Subsequently, the NBBL1 framework to solve \(\ell _{2}\)-norm and matrix trace norm regularization problems is easily derived.

5 Numerical Experiments

In this section, we present numerical results to illustrate the feasibility and efficiency of NBBL1. We partition our experiments into three classes based on different types of \(f\). In the first class, we use our algorithm to solve \(\ell _{1}\)-regularized nonconvex problem. In the second class, we use our algorithm to solve \(\ell _{1}\)-regularized least squares, which mainly appear in compressive sensing. In the third class, we compare some state-of-the-art algorithms in compressive sensing to show the efficiency of our algorithm. All experiments are performed under Windows XP and Matlab 7.8 (2009a) running on a Lenovo laptop with an Intel Atom CPU at 1.6 GHz and 1 GB of memory.

5.1 Test on \(\ell _{1}\)-Regularized Nonconvex Problem

Our first test is performed on a set of nonconvex unconstrained problems from the CUTEr [14] library. The second-order derivatives of all the selected problems are available. Given our interest in large problems, we only consider the problems with a size of at least 100. For such problems, we use the dimensions that is admissible of the “double large” installation of CUTEr. The algorithm stops if the norm of the search direction is small enough; that is,

The iterative process is also stopped if the number of iterations exceeds 10,000 without achieving convergence.

In this experiment, we take \(tol_1=10^{-8},\,h=1,\,\lambda _{(\min )}=10^{-20}\), and \(\lambda _{(\max )}=10^{20}\). In the line search, we choose \(\tilde{\alpha }_0=1,\,\rho = 0.35,\,\delta =10^{-4}\), and \(\tilde{m}=5\). We test NBBL1 with different parameter values \(\mu =\{0, .25, 1, 1.5, 2\}\). The numerical results are presented in Tables 1 and 2, which contain the name of the problem (Problem), the dimensions of the problem (Dim), the number of iterations (Iter), the number of function evaluations (Nf), the CPU time required in seconds (Time), the final objective function values (Fun), the norm of the final gradient of \(f\) (Normg), or the number of nonzeros components of solutions (Nzero), and the norm of final direction (Normd).

As show in Tables 1 and 2, NBBL1 works successfully for all the test problems in each case. Particularly, NBBL1 consistently produces highly accurate solutions within a short amount of time. The proposed algorithm requires a large number of iterations for a number of special problems, such as problems FLETCHER, NONCVXU2, and BROYDN7D with parameter \(\mu =0\), problems FLETCHER and BROYDN7D with \(\mu =0.25\); problems FLETCHER and CHAIWOO with \(\mu =1\), problems VARDIM, FLETCHER and CHAIWOO with \(\mu =1.5\) and \(\mu =2\). It can also be observed that the number of iterations and function evaluations both decrease universally as \(\mu \) increase from 1 except for problem VARDIM. As illustrated at the third column on the right, the number of the non-zero components of final solutions decrease dramatically as \(\mu \) gets small, and reach zero when \(\mu =2\) for a coupe of problems. Moreover, if larger \(\mu \) is permitted, the number of non-zero components of final solutions are all zero for each test problem, which means that zero is the optimal solution. The phenomenon is not surprising once we note the separable structure of the original problem and the balanced parameter \(\mu \). From Table 2 we also note that for problems COSINE and GENROSE, the proposed algorithm requires only two steps to achieve convergence. From CUTEr [14], it is easy to see that the fundamental cause of the thing lies in the starting point near to zero, i.e. the solution. Meanwhile, the norm of the final direction is exactly zero for problems COSINE and GENROSE, because at the solution it has \(x_k=0\) and \(|x_k-\frac{h}{\lambda _k}\nabla f(x_k)|<\frac{\mu h}{\lambda _k}\) in (2.8) at this case. Table 1 presents the numerical results of NBBL1 after solving a smooth nonconvex minimization problem without any regularization. As shown in the second to the last column of this table, the norm of the final gradient is sufficiently small. The important observation verifies that the proposed algorithm is very efficient in solving unconstrained smooth minimization problems. This result is not surprising, because the proposed algorithm reduces to the effective nonmonotone Barzailai-Borwein gradient method of Raydan [32] in this case.

5.2 Test on \(\ell _{1}\)-Regularized Least Squares

Let \(\bar{x}\) be a sparse or a nearly sparse original signal, \(A\in {\mathbb {R}}^{m\times n}\, (m\ll n)\) be a linear operator, \(\omega \in {\mathbb {R}}^m\) be a zero-mean Gaussian white noise, and \(b\in {\mathbb {R}}^m\) be an observation that satisfies the relationship

Recent compressive sensing results show that under some technical conditions, the desired signal can be reconstructed almost exactly by solving the \(\ell _{1}\)-regularized least squares (1.2). In this subsection, we perform two classes of numerical experiments to solve (1.2) using the Gaussian matrices as the encoder. In the first class, we demonstrate that our algorithm effectively decodes a sparse signal. In the second class, we carry out a series of experiments with different \(h\) to choose the best one. We measure the quality of restoration \(x^*\) through the relative error to the original signal \(\bar{x}\); that is,

In the first test, we use a random matrix \(A\) with independent identically distributed Gaussian entries. The \(\omega \) is the additive Gaussian noise of zero mean and standard deviation \(\sigma \). Given the storage limitations of the PC, we test a small size signal with \(n=2^{11},\,m=2^{9}\). The original signal contains randomly \(p=2^6\) nonzero elements. We also choose the noise level \(\sigma =10^{-3}\). The proposed algorithm starts at a zero point and terminates when the relative change of two successive points are sufficiently small, i.e.,

In this experiment, we take \(tol=10^{-4},\,h=10^{-2},\,\lambda _{(\min )}=10^{-30}\), and \(\lambda _{(\max )}=10^{30}\). In the line search, we choose \(\tilde{\alpha }_0=10^{-2},\,\rho = 0.35,\,\delta =10^{-4}\), and \(\tilde{m}=5\). The original signal, the limited measurement, and the reconstructed signal are given in Fig. 1.

Comparing the left plot with the right one in Fig. 1, we clearly see that the original sparse signal is restored almost completely. All the blue peaks are encircled by the red circles, illustrating that the original signal has been found almost exactly. Overall, this simple experiment shows that the proposed algorithm performs quite well and provides an efficient approach to the recovery of large sparse non-negative signal.

The last term in the approximate quadratic model (2.6) is equivalent to \(\Vert x_k+d\Vert _1\) exactly when \(h=1\). Next, we provide evidence to show that other values can be potentially and dramatically better than \(h=1\). We conduct a series of experiments and compare the performance at each case. In our experiments, we set all the parameters values as in the previous test except for \(n=2^{10}\). We present in Fig. 2 the impact of the parameter \(h\) values on the total number of iterations, the computing time, and the quality of the recovered signal. In each plot, the level axis denotes the values of \(h\) from 0.01 to 1 in a log scale.

In Fig. 2, the number of iterations, the computing time, and the quality of restorations are greatly influenced by the \(h\) values. Generally, as \(h\) increases, NBBL1 consistently performs satisfactorily. On the other hand, the right plot clearly demonstrates that the relative error decreases dramatically at the very beginning and then decreases slightly after 0.2. However, the quality of restoration cannot be improved further after 0.7. On the other hand, the left and middle plots show that the number of iterations and the computing time slightly increase after \(h=0.8\). The these plots verify that the performance of NBBL1 is sensitive to the \(h\) values, and that the value of \(h\in [0.7,0.9]\) may be preferable.

5.3 Comparisons with NESTA_Ct, GPSR_BB, CGD, TwIST, FPC_BB, and FPC_AS

In the third class of the experiment we test the proposed algorithm against several state-of-the-art algorithms to solve \(\ell _{1}\)-regularized problems in compressive sensing or linear inverse problems. Compare each algorithm in a very fair way is difficult, because each algorithm is compiled with different parameter settings, such as the termination criterions, the starting points, or the continuation techniques. Hence, in our performance comparisons, we run each code from the same initial point, use all the default parameter values, and only observe the convergence behavior of each algorithm to attain a solution of similar accuracy. We provide below a brief review of each solver.

NESTAFootnote 1 uses Nesterov’s smoothing technique [28] and gradient method [29] to solve basis pursuit denoising problem. The current version can solve \(\ell _{1}\)-norm regularization problems with different types, including (1.2). In this experiment, we test NESTA with continuation (hereafter referred to as NESTA-Ct) for comparison. This algorithm solves a sequence of problems (1.2) using a decreasing sequence of \(\mu \) values. Additionally, NESTA-Ct initially uses the intermediated solution for the next problem. In running NESTA, all the parameters are taken as default except TolVar, which is set to \(1\hbox {e}-5\), to obtain solutions of similar quality with other solvers.

Gradient projections for sparse reconstruction (referred to as GPSR_BB Footnote 2 [20]) reformulates the original problem (1.2) as a box-constrained quadratic programming problem (1.6) by splitting \(x=u-v\). Figueiredo, Nowak, and Wright use a gradient projection method with Barziali-Borwein steplength [2] for its solution, the performance of which is improved using a nonmonotone line search [22]. For the comparison of the proposed algorithm with GPSR-BB, we use the former’s continuation variant and set all parameters as default.

The well-known CGDFootnote 3 uses a gradient algorithm to solve (1.5) and obtain the search direction \(d_k^i=ze^i\) in \(i\in {\mathcal {J}}\), where \({\mathcal {J}}\) is a nonempty subset of \(\{1,\ldots ,n\}\). Moreover, CGD chooses the index subset \({\mathcal {J}}\) following the Gauss-Southwell rule. The iterative process \(x_{k+1}=x_k+\alpha _kd_k\) continues until some termination criteria are met, (i.e., \(d_k^i=0\) with \(i\notin {\mathcal {J}}\)), and the stepsize \(\alpha _k\) using an Armijo rule. In running CGD, we use the code CGD according to its Matlab package and set all the parameters as default except for init=2 to start the iterative process at \(A^\top b\).

TwISTFootnote 4 is a two-step IST algorithm that is used to solve a class of linear inverse problems. Specifically, TwIST is designed to solve

where \(A\) is a linear operator, and \({\mathcal {J}}(\cdot )\) is a general regularizer, which can be either a \(\ell _{1}\)-norm or total variation [34]. The iteration framework of TwIST is

where \(\alpha ,\delta >0\) are parameters, \(\xi _k=u_k+A^\top (f-Au_k)\), and

We use the default parameters in TwIST and terminate the iteration process when the relative variation of function value falls below \(10^{-4}\).

FPCFootnote 5 is used to solve the general \(\ell _{1}\)-regularized minimization problem (1.1), where \(f\) is a continuously differentiable convex function. At current \(x_k\) and any scalar \(\tau >0\) (might be \(1/\lambda _k\)), the next iteration is produced by the so-called fixed point iteration

To obtain a good practical performance, FPC is augmented with a continuation approach. Moreover, FPC is further modified using Barzilai–Borwein stepsize (code FPC-BB in Matlab package FPC_v2), resulting in a significantly improved performance. In running FPC-BB, we use all the default parameter values except xtol = \(1\cdot \hbox {e}-5\) to stop the algorithm when the relative change between successive points is below xtol to derive solutions of similar quality with other solvers. The closely related algorithm FPC_AS Footnote 6 searches along

to estimate the support to the solution using the nonmonotone line search of Zhang and Hager [48]. Then, a smooth “subspace optimization” is solved to recover the magnitudes of the nonzero components of \(x\). In running FPC_AS, we use the latest version in its Matlab package and set all the parameters as default except for opts.init=2 to start at \(A^\top b\).

In this test, \(A\) is a partial discrete cosine transform (DCT) coefficient matrix, whose \(m\) rows are chosen randomly from the \(n\times n\) DCT matrix. This encoding matrix \(A\) does not require storage and allows fast matrix-vector multiplications involving \(A\) and \(A^\top \). Therefore, it can be used to test larger-size problems compared with those tested using Gaussian matrices. In NBBL1, we take \(tol_2=10^{-4},\,h=0.83,\,\lambda _{(\min )}=10^{-30}\), and \(\lambda _{(\max )}=10^{30}\). In the line search, we choose \(\tilde{\alpha }_0=h,\,\rho = 0.35,\,\delta =10^{-5}\), and \(\tilde{m}=5\). In this comparison, we let \(n= 2^{14},\,m = \mathtt{floor}(n/4)\), where “floor” is a Matlab command used to round off an element to the nearest integer toward minus infinity. The original signal \(\bar{x}\) contains \(p=\mathtt{floor}(m/8)\) number of nonzero components. Moreover, the observation \(b\) is contaminated by Gaussian noise with level \(\sigma = 1\hbox {e}-\)3. The goal is to use each algorithm to reconstruct \(\bar{x}\) from the observation \(b\) by solving (1.2) with \(\mu =2^{-8}\). All the tested algorithms start at \(x_0=A^\top b\) and terminate with different stopping criterions to produce resolutions of similar quality. Note that for the continuation technique used in some of the tested algorithms, the value of \(\mu \) may change occasionally. For this reason, we only observe the convergence behavior of the algorihtms with respect to relative error as the iteration numbers and computing time increase to specifically illustrate the performance of each algorithm.. The results of NBBL1, NESTA_Ct, CGD, TwIST, and GPSR_BB are presented in Fig. 3.

The left plot in Fig. 3 shows that NBBL1 usually decreases the relative errors faster than NESTA_Ct, CGD, GPSR_BB, and TwIST throughout the entire iteration process. Meanwhile, the right plot shows that NBBL1 requires lesser number of iterations than NESTA_Ct, CGD, and GPSR_BB. Notably, TwIST requires a nearly equal number of iterations as NBBL1 but with more computing time. The reason lies in solving the de-noising subproblem (5.5) per-iteration. Among the compared solvers, CGD performs the weakest. However, if CGD starts at zero, its performance should significantly improve (see [43]). In sum, the simple experiment verifies that NBBL1 is the fastest algorithm among those included inthis set of test.

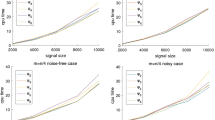

Now we proceed to testing the algorithm against two other related solvers, namely, PFC_BB and FPC_AS, while keeping the experiment settings as the same as previous test. The reason behind our choice to conduct this particular experiment is that the three categories of algorithms all use the Barzilai–Borwein coefficient and nonmonotone line search strategy but in different ways. The search direction of FPC_AS is clearly included in the one defined by (2.8) as a special case. Nevertheless, we still test NBBL1 when \(h=1\) [abbreviated as NBBL1(1.0)], the purpose of which is to demonstrate that the search direction (2.8) would greatly benefit the performance of the algorithm. The results of NBBL1, FPC_BB, and FPC_AS are presented in Fig. 4.

As shown in Fig. 4 all the tested algorithms eventually attain nearly equal relative errors at the end. FPC_BB is the most competitive with NBBL1(0.83), whereas NBBL1(1.0) and FPC_AS are much slower. FPC_AS is the slowest in decreasing relative errors throughout the entire iteration process, but requires the least number of iterations, because at each iteration, FPC_AS solves a subspace minimization problem. One fact that must be emphasized is that the performance of NBBL1 indeed improves dramatically using \(h=0.83\). In conclusion, the proposed algorithm is generally more efficient than FPC_AS and competes strongly with FPC_BB.

To further illustrate the benefit of NBBL1, we present the comparison results of each algorithm behind the previous two experiments in Table 3 at the end of this section. In this comparison, we only consider the iteration numbers (Iter), the computing time (Time), and the relative error (RelErr) and the function values (Fun) at the final solutions for each algorithm.

It can be seen from Table 3 that, each algorithm obtained solutions with comparable objective function values and comparable relative errors. From the results, once again we see that FPC_BB is most competitive with NBBL1(0.83), while others are relatively slow. Based on the experimental results, NBBL1(1.0) is appreciably slower than NBBL1(0.83). We also did a series of tests with different of experiments settings and observed consistent results. Specifically, FPC_BB is the most competitive with NBBL1(0.83). These results and observations sufficiently demonstrated the efficiency and stability of NBBL1.

6 Conclusions

In this study, we proposed, analyzed, and tested a new practical algorithm to solve the separable nonsmooth minimization problem consisting of an \(\ell _{1}\)-norm regularized term and a continuously differentiable term. This type of problem mainly appears in signal/image processing, compressive sensing, machine learning, and linear inverse problems. However, the problem is challenging because of the non-smoothness of the regularization term. Our approach minimizes an approximal local quadratic model to determine a search direction at each iteration. We showed that the search direction contains the one of FPC_AS as a special case, and is reduced to the classic Barzilai–Borwein gradient method when \(\mu =0\). We also proved that the objective function is descent along the direction provided that the initial stepsize does not exceed \(h\) in the nonmonotone line search step. We established the algorithm’s global convergence theorem by assuming that \(f\) is bounded below. Extensive experimental results illustrated that the proposed algorithm is an effective tool to solve \(\ell _{1}\)-regularized nonconvex problems from CUTEr library. Moreover, we ran our algorithm to recover a large sparse signal from its noisy measurement. The performance comparisons with several state-of-the-art solvers verified that our algorithm is competitive with FPC_BB and faster than FPC_AS, NESTA_Ct, CGD, GPSR, and TwIST.

Unlike all the existing algorithms in the literature, our approach uses a linear model to approximate \(\Vert x_k+d\Vert _1\) for computing the search direction with a small scalar \(h\); that is,

Although the equations may hold exactly when \(h=1\), a series of numerical experiments in this paper showed that an appropriate \(h\) may result in an improved performance with suitable experiment settings. This approach is distinctive and novel; therefore, it is one of the important contributions of this study. A natural question is whether the performance of FPC_AS, even its related variants, can be improved using the search direction defined in (2.8). The answer deserves a thorough investigation. The nonmonotone Barzilai–Borwein gradient algorithm of Raydan [32] is known to be very effective in smooth unconstrained minimization, and its equally remarkable effectiveness in signal reconstruction problems involving \(\ell _{1}\)-regularized problems has not been clearly explored. Hence, our approach can be considered as a modification or extension of the algorithm in [32]. Moreover, the numerical experiments illustrated that our approach is comparable with or even better than several state-of-the-art algorithms. This advantage certainly serves as the numerical contribution of this study. Our algorithm readily solves the \(\ell _{1}\)-regularized logistic regression, the \(\ell _{2}\)-norm, as well as matrix trace norm and \(\ell _{2,1}\)-norm minimization problems in machine learning. However, tests related to these problems were not conducted in the study. Further investigations are therefore necessary.

Notes

Available at http://www.acm.caltech.edu/~nesta.

Available at http://www.lx.it.pt/~mtf/GPSR.

Available at http://www.math.nus.edu.sg/~matys/.

Available at: http://www.lx.it.pt/~bioucas/TwIST/TwIST.htm.

Available at http://www.caam.rice.edu/~optimization/L1/fpc.

Available at http://www.caam.rice.edu/~optimization/L1/FPC_AS/.

References

Andrew, G., Gao, J.: Scalable training of \(\ell _{1}\)-regularized log-linear models. In: Proceedings of the Twenty Fourth International Conference on Machine Learning, (ICML) (2007)

Barzilai, J., Borwein, J.M.: Two point step size gradient method. IMA J. Numer. Anal. 8, 141–148 (1988)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2, 183–202 (2009)

Becker, S., Bobin, J., Candès, E.: NESTA: a fast and accurate first-order method for sparse recovery. SIAM J. Imaging Sci. 4, 1–39 (2011)

Birgin, E.G., Martínez, J.M., Raydan, M.: Nonmonotone spectral projected gradient methods on convex sets. SIAM J. Optim. 10, 1196–1211 (2000)

Bioucas-Dias, J.M., Figueiredo, M.: A new TwIST: two-step iterative shrinkage/thresholding algorithms for image restoratin. IEEE Trans. Image Process. 16, 2992–3004 (2007)

Cai, J.F., Candès, E., Shen, Z.: A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 20, 1956–1982 (2010)

Candès, E., Romberg, J.: Quantitative robust uncertainty principles and optimally sparse decompositions. Found. Comput. Math. 6, 227–254 (2006)

Candès, E., Romberg, J., Tao, T.: Stable signal recovery from imcomplete and inaccurate information. Commun. Pure Appl. Math. 59, 1207–1233 (2005)

Candès, E., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequence information. IEEE Trans. Inf. Theory 52, 489–509 (2006)

Candès, E., Tao, T.: Near optimal signal recovery from random projections: universal encoding strategies. IEEE Trans. Inf. Theory 52, 5406–5425 (2004)

Chang, K.W., Hsieh, C.J., Lin, C.J.: Coordinate descent method for large-scale L2-loss linear SVM. J. Mach. Learn. Res. 9, 1369–1398 (2008)

Cheng, W., Li, D.H.: A derivative-free nonmonotone line search and its application to the spectral residual method. IMA J. Numer. Anal. 29, 814–825 (2009)

Conn, A.R., Gould, N.I.M., Toint, PhL: CUTE: constrained and unconstrained testing environment. ACM Trans. Math. Softw. 21, 123–160 (1995)

Dai, Y.H., Fletcher, R.: On the asymptotic behaviour of some new gradient methods. Math. Program. 103, 541–559 (2005)

Dai, Y.H., Hager, W.W., Schittkowski, K., Zhang, H.C.: The cyclic Barzilai–Borwein method for unconstrained optimization. IMA J. Numer. Anal. 26, 604–627 (2006)

Dai, Y.H., Liao, L.Z.: R-linear convergence of the Barzilai and Borwein gradient method. IMA J. Numer. Anal. 26, 1–10 (2002)

Donoho, D.L.: Compressed sensing. IEEE Trans. Inf. Theory 52, 1289–1306 (2006)

Duchi, J., Singer, Y.: Efficient online and batch learning using forward backword splitting. J. Mach. Learn. Res. 10, 2899–2934 (2009)

Figueiredo, M., Nowak, R.D., Wright, S.J.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process 1, 586–597 (2007)

Genkin, A., Lewis, D.D., Madigan, D.: Large-scale Bayesian logistic regression for text categorization. Technometrices 49, 291–304 (2007)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23, 707–716 (1986)

Hale, E.T., Yin, W., Zhang, Y.: Fixed-point continuation for \(\ell _{1}\)-minimization: methodology and convergence. SIAM J. Optim. 19, 1107–1130 (2008)

Kim, S., Koh, K., Lustig, M., Boyd, S., Gorinevsky, D.: An interior-point method for large-scale \(\ell _{1}\)-regularized least squares. IEEE J. Sel. Top. Signal Process. 1, 606–617 (2007)

Koh, K., Kim, S., Boyd, S.: An interior-point method for large-scale \(\ell _{1}\)-regularized logistic regression. J. Mach. Learn. Res. 8, 1519–1555 (2007)

Lin, C.J., Moré, J.J.: Newton’s method for large-scale bound constrained problems. SIAM J. Optim. 9, 1100–1127 (1999)

Lu, Z., Zhang, Y.: An augmented Lagrangian approach for sparse principal component analysis. Math. Program. 135, 149–193 (2012)

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103, 127–152 (2005)

Nesterov, Y.: Gradient methods for minimizing composite objective function, ECORE Discussion Paper 2007/76. http://www.ecore.be/DPs/dp_1191313936.pdf (2007)

Nocedal, J.: Updating quasi-Newton matrices with limited storage. Math. Comput. 35, 773–782 (1980)

Raydan, M.: On the Barzilai and Borwein choice of steplength for the gradient method. IMA J. Numer. Anal. 13, 321–326 (1993)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7, 26–33 (1997)

Recht, B., Fazel, M., Parrilo, P.A.: Guaranteed minimum rank solutions of matrix equations via nuclear norm minimization. SIAM Rev. 52, 471–501 (2010)

Rudin, L., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 60, 259–268 (1992)

Shalev-Shwartz, S., Tewari, A.: Stochastic method for l1 regularized loss minimization. In: Proceedings of the Twenty Sixth International Conference on Machine Learning (ICML) (2009)

Shi, J., Yin, W., Osher, S., Sajda, P.: A fast hybrid algorithm for large-scale \(\ell _{1}\)-regularized logistic regression. J. Mach. Learn. Res. 11, 713–741 (2010)

Tseng, P., Yun, S.: A coordinate gradient descent method for nonsmooth separable minimization. Math. Program. 117, 387–423 (2009)

van den Berg, E., Friedlander, M.P.: Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 31, 890–912 (2008)

Wen, Z., Yin, W., Goldfarb, D., Zhang, Y.: A fast algorithm for sparse reconstruction based on shrinkage, subspace optimization, and continuation. SIAM J. Sci. Comput. 32, 1832–1857 (2010)

Wen, Z., Yin, W., Zhang, H., Goldfarb, D.: On the convergence of an active-set method for \(\ell _{1}\) minimization. Optim. Method Softw 27, 1127–1146 (2012)

Wright, S.J., Nowak, R.D., Figueiredo, M.A.T.: Sparse reconstruction by separable approximation. In: Proceedings of the International Conference on Acoustics, Speech, and Signal Processing, pp 3373–3376 (2008)

Wright, J., Ma, Y., Ganesh, A., Rao, S.: Robust principal component analysis: exact recovery of corrupted low-rank matrices via convex optimization. J. ACM 5, 1–44 (2009)

Yang, J., Zhang, Y.: Alternating direction algorithms for \(\ell _{1}\)-problems in compressive sensing. SIAM J. Sci. Comput. 33, 250–278 (2011)

Yu, J., Vishwanathan, S.V.N., Günter, S., Schraudolph, N.N.: A quasi-Newton approach to nonsmooth convex optimization problems in machine learning. J. Mach. Learn. Res. 11, 1145–1200 (2010)

Yuan, G.X., Chang, K.W., Hsieh, C.J., Lin, C.J.: A comparison of optimization methods and software for large-scale \(\ell _{1}\)-regularized linear classification. J. Mach. Learn. Res. 11, 3183–3234 (2010)

Yuan, X.: Alternating direction method for covariance selection models. J. Sci. Comput. 51, 261–273 (2012)

Yun, S., Toh, K.C.: A coordinate gradient descent method for \(\ell _{1}\)-regularized convex minimization. Comput. Optim. Appl. 48, 273–307 (2011)

Zhang, H., Hager, W.W.: A nonmonotone line search technique and its application to unconstrained optimization. SIAM J. Optim. 14, 1043–1056 (2004)

Zhang, Y., Sun, W., Qi, L.: A nonmonotone filter Barzilai-Borwein method for optimization. Asia Pac. J. Oper. Res. 27, 55–69 (2010)

Acknowledgments

We would like to thank two anonymous referees for their useful comments and suggestions which improved this paper greatly. The first version of the paper is finished during Y. Xiao’s stay as a postdoctoral research fellow in NCTS, National Cheng Kung University, Taiwan. The work of Y. Xiao is supported by Chinese Natural Science Foundation Grant 11001075, and the Natural Science Foundation of Henan Province Grant 2011GGJS030.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Xiao, Y., Wu, SY. & Qi, L. Nonmonotone Barzilai–Borwein Gradient Algorithm for \(\ell _{1}\)-Regularized Nonsmooth Minimization in Compressive Sensing. J Sci Comput 61, 17–41 (2014). https://doi.org/10.1007/s10915-013-9815-8

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-013-9815-8

Keywords

- Nonsmooth optimization

- Nonconvex optimization

- Barzilai–Borwein gradient algorithm

- Nonmonotone line search

- \(\ell _{1}\) regularization

- Compressive sensing