Abstract

In this paper, we consider a company whose surplus follows a rather general diffusion process and whose objective is to maximize expected discounted dividend payments. With each dividend payment, there are transaction costs and taxes, and it is shown in Paulsen (Adv. Appl. Probab. 39:669–689, 2007) that under some reasonable assumptions, optimality is achieved by using a lump sum dividend barrier strategy, i.e., there is an upper barrier \(\bar{u}^{*}\) and a lower barrier \(\underline{u}^{*}\) so that whenever the surplus reaches \(\bar{u}^{*}\), it is reduced to \(\underline{u}^{*}\) through a dividend payment. However, these optimal barriers may be unacceptably low from a solvency point of view. It is argued that, in that case, one should still look for a barrier strategy, but with barriers that satisfy a given constraint. We propose a solvency constraint similar to that in Paulsen (Finance Stoch. 4:457–474, 2003); whenever dividends are paid out, the probability of ruin within a fixed time T and with the same strategy in the future should not exceed a predetermined level ε. It is shown how optimality can be achieved under this constraint, and numerical examples are given.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Finding optimal dividend strategies is a classical problem in the financial and actuarial literature. The idea is that the company wants to pay some of its surplus as dividends, and the problem is to find a dividend strategy that maximizes the expected total discounted dividends received by the shareholders. The typical time horizon is until ruin occurs, i.e., until the surplus is negative for the first time.

However, left to their own, financial institutions may make decisions that can jeopardize their solvency, and those with a claim on the company, e.g. account holders of a bank or customers of an insurance company, have an unacceptably high probability of losing all or part of their claims. As a consequence, most countries impose some regulation on financial companies, and in addition the companies themselves will usually have their own, albeit sometimes lax, capital requirements.

The task for the management is therefore not to maximize expected discounted dividends as such, but to do it under proper solvency constraints. One such constraint was suggested in [6], and we shall apply the same idea in this paper. We also let the capital of the company follow the same diffusion process as in [6], originally presented in [11]. To explain this, in [11] it was proved that with their model, and provided there are no costs or taxes associated with dividend payments, an optimal policy, if it exists, is of barrier type, i.e., there is a barrier u ∗ so that whenever capital reaches u ∗, dividends are paid with infinitesimal amounts so that capital never exceeds u ∗. The resulting accumulated dividend process is a singular process, hence the name singular control. With the same setup, it was proved in [6] that when solvency requirements prohibit dividend payments unless capital is at least u 0>u ∗, then it is optimal to use a singular control at u 0. Therefore, it is natural to use u 0 as a barrier, and it was suggested that u 0 could be determined as follows: whenever capital is at u 0, ruin within a fixed time T by following the same policy should not exceed a small, predetermined number ε. We denote the corresponding u 0 by u ε . Thus the problem of optimal dividend payments was linked to the problem of calculating ruin probabilities, the latter being a key concept in risk theory. Clearly, increasing u 0 implies that the ruin probability is decreased, so the problem can be reduced to a one-dimensional search problem for u ε . Although in both [11] and [6] there were no transaction costs or taxes, proportional costs or taxes will not change the problem significantly. However, when each dividend payment carries a fixed cost, the problem changes from a singular control problem to an impulse control problem. It was shown in [7], using the same diffusion model as in [11], that if there is an optimal dividend strategy, it will be of a two-barrier type. To explain this, there is a lower barrier \(\underline{u}^{*}\geq0\) and an upper barrier \(\bar {u}^{*}\) so that whenever capital reaches \(\bar{u}^{*}\), dividends are paid bringing the capital down to \(\underline{u}^{*}\).

In this paper, we make the same assumptions as in [7], but slightly differently formulated. With each dividend payment, there is a fixed cost K and a tax rate 1−k with 0<k<1. We shall argue that if the optimal policy is too risky, one should look for a lower barrier \(\underline{u}_{\varepsilon}>0\) and an upper barrier \(\bar{u}_{\varepsilon}\) to maximize expected discounted dividends and at the same time satisfy the solvency constraint as presented in the above paragraph. This problem is more difficult than that in [6] since we must look for a pair \((\underline{u}_{\varepsilon},\bar{u}_{\varepsilon})\), not just a number u ε . One issue is to find a fast method to calculate the ruin probability for a given lower and upper barrier, and we shall show how we can adapt the Thomas algorithm for solving tridiagonal systems together with the Crank–Nicolson algorithm to solve the relevant partial differential equations. The paper ends with numerical examples.

2 The model and a general optimality result

Let \((\varOmega, \mathcal{F}, \{\mathcal{F}_{t}\}_{t\geq0},P)\) be a probability space satisfying the usual conditions, i.e., the filtration \(\{\mathcal{F}_{t}\}_{t\geq0}\) is right-continuous and P-complete. Assume that the uncontrolled surplus process follows the stochastic differential equation

where W is a Brownian motion on the probability space and μ(x) and σ(x) are Lipschitz-continuous. Let the company pay dividends to its shareholders, but at a fixed transaction cost K>0 and a tax rate 0<1−k<1. This means that if ξ>0 is the amount by which the capital is reduced, then the net amount of money the shareholders receive is kξ−K. Since every dividend payment results in a transaction cost K>0, the company should not pay out dividends continuously, but only at discrete time epochs. Therefore, a strategy can be described by

where \(\tau_{n}^{\pi}\) and \(\xi_{n}^{\pi}\) denote the times and amounts of dividends. For each π, we define the corresponding ruin time as

Thus, when applying the strategy π, the resulting surplus process X π is given by

The process X π is left-continuous with right limits, so when applying e.g. Itô’s formula, it will be on the right-continuous version with left limits {X t+}. Also, we define ΔX t =X t+−X t .

Sufficient conditions for existence and uniqueness of (2.2) are assumptions A1 and A2 below.

Definition 2.1

A strategy π is said to be admissible if we have the following.

-

(i)

\(0\leq\tau_{1}^{\pi}\) and for n≥1, \(\tau _{n+1}^{\pi }>\tau_{n}^{\pi}\) on \(\{\tau_{n}^{\pi}<\infty\}\).

-

(ii)

\(\tau_{n}^{\pi}\) is a stopping time with respect to \(\{\mathcal{F}_{t}\}_{t\geq0}\), n=1,2,….

-

(iii)

\(\xi_{n}^{\pi} \) is measurable with respect to \(\mathcal{F}_{\tau_{n}^{\pi}},\ n=1,2,\ldots{}\).

-

(iv)

\(P(\lim_{n\to\infty}\tau_{n}^{\pi}\leq T)=0\), ∀T≥0.

-

(v)

\(0<\xi_{n}^{\pi}\leq X_{\tau_{n}}^{\pi}\).

We denote the set of all admissible strategies by Π.

For each admissible strategy π, we define the performance function V π (x) as

where P x denotes the probability measure conditioned on X 0=x. The quantity V π (x) represents the expected total discounted dividends received by the shareholders until ruin when the initial reserve is x. Note that it follows from (2.2) and condition (v) above that for an admissible policy, \(X_{t}^{\pi}\equiv0\) for t>τ π.

Define the optimal return function

and the optimal strategy, if it exists, by π ∗. Then \(V_{\pi ^{*}}(x) =V^{*}(x)\).

Definition 2.2

A lump sum dividend barrier strategy \(\pi=\pi_{\bar{u},\underline{u}}\) with parameters \(\bar{u}\) and \(\underline{u}\) satisfies for \(X_{0}^{\pi}<\bar{u}\)

and for every n≥2

When \(X_{0}^{\pi}\geq\bar{u}\),

and for every n≥2, \(\tau_{n}^{\pi}\) is defined as above. For a given lump sum dividend barrier strategy \(\pi_{\bar{u},\underline {u}}\), the corresponding value function is denoted by \(V_{\bar{u},\underline{u}}(x)\).

The importance of lump sum dividend barrier strategies is exemplified in e.g. Theorem 2.3 below, proved in [7]. In order to present the theorem, we make a list of assumptions.

-

A1.

|μ(x)|+|σ(x)|≤C(1+x) for all x≥0 and some C>0.

-

A2.

μ(x) and σ(x) are continuously differentiable and Lipschitz-continuous, and the derivatives μ′(x) and σ′(x) are Lipschitz-continuous.

-

A3.

σ 2(x)>0 for all x≥0.

-

A4.

μ′(x)≤λ for all x≥0, where λ is the discounting rate.

For g∈C 2(0,∞), define the operator \(\mathcal{L}\) by

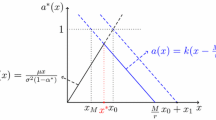

It is well known, see e.g. [5], Theorem 6.5.3, that under the assumptions A1–A3, any solution of \(\mathcal{L}g=0\) is in C 3(0,∞). Let g 1(x) and g 2(x) be two independent solutions of \(\mathcal{L}g(x)=0\), chosen so that g(x)=g 1(0)g 2(x)−g 2(0)g 1(x) has g′(0)>0. Any such solution will be called a canonical solution. Then any solution of \(\mathcal{L}V(x)=0\) with V(0)=0 and V′(0)>0 is of the form

Consider the following set of problems.

Note that k and K are equivalent to \(\frac{1}{1+d_{1}}\) and \(\frac{d_{0}}{1+d_{1}}\) in [7].

Theorem 2.3

(Theorem 2.1 in [7])

Assume that A1–A4 hold. Then exactly one of the following three cases will occur.

-

(i)

B1+B2 have a unique solution for unknown V(x), \(\bar{u}^{*}\) and \(\underline{u}^{*}\), and we have \(V^{*}(x)=V(x)=V_{\bar{u}^{*},\underline{u}^{*}}(x)\) for all x≥0. Thus the lump sum dividend barrier strategy \(\pi^{*}=\pi_{\bar{u}^{*},\underline{u}^{*}}\) is an optimal strategy.

-

(ii)

B1+B3 have a unique solution for unknown V(x), and it holds that \(V^{*}(x)=V(x)=V_{\bar{u}^{*},0}(x)\) for all x≥0. Thus the lump sum dividend barrier strategy \(\pi^{*}=\pi_{\bar{u}^{*},0}\) is an optimal strategy.

-

(iii)

There does not exist an optimal strategy, but

$$V^{*}(x)= \lim_{\bar{u}\rightarrow \infty}V_{\bar{u},\underline{u}(\bar{u})}(x)$$and this limit exists and is finite for every x≥0. In terms of a canonical solution,

$$V^{*}(x)=\frac{kg(x)}{\lim_{\bar{u}\rightarrow \infty}g'(\bar{u})}.$$Here \(V_{\bar{u},\underline{u}(\bar{u})}(x)=\sup_{\underline{u}\in[0,\bar{u})}V_{\bar{u},\underline{u}}(x)\).

Remark 2.4

As pointed out in Remark 2.2e in [7], if lim x→∞ g′(x)=∞, then either B1+B2 or B1+B3 apply, hence a solution exists. That lim x→∞ g′(x)=∞ is almost a necessary condition for existence of a solution can be shown as in Proposition 2.4 of [8]. Therefore, for simplicity we typically assume that lim x→∞ g′(x)=∞.

Here is a useful sufficient condition for lim x→∞ g′(x)=∞. The proof is given in Sect. 7.

Proposition 2.5

Assume A1–A4 and that there exist an x 0≥0 and an ε>0 so that

Then for any canonical solution g of \(\mathcal{L}g(x)=0\),

Remark 2.6

Arguing as in the end of the proof of Theorem 4.1, it follows that if there exists an x 0≥0 so that

then lim x→∞ g′(x)<∞. Therefore, Proposition 2.5 is quite sharp.

3 Optimality under payout restrictions

Consider e.g. an insurance company that wants to use the optimal barriers \(\bar{u}^{*}\) and \(\underline{u}^{*}\) for its dividend payments. However, when policyholders pay their premiums in advance, they expect to have their claims covered. It is therefore reasonable that the company should not be allowed to pay dividends if that makes the surplus too small. One natural condition is that the surplus is not allowed to be less than some \(\underline {u}_{0}>0\) after a dividend payment. Mathematically, such a restriction for a policy π can be written as

Let Π 0 denote the set of all admissible strategies satisfying (3.1). Define the new optimal return function \(V_{0}^{*}(x)\) as

Our aim is to find the optimal return function \(V_{0}^{*}(x)\) and the optimal strategy π 0∈Π 0 such that \(V_{\pi_{0}}(x) =V_{0}^{*}(x)\).

Following Remark 2.4, we assume that lim x→∞ g′(x)=∞ so that either B1+B2 or B1+B3 have a solution. Trivially, if B1+B2 have a solution V(x) for some c ∗, \(\bar{u}^{*}\) and \(\underline{u}^{*}\geq\underline{u}_{0}\), the optimal strategy in Theorem 2.3(i) is feasible under the constraint (3.1). Then \(V_{0}^{*}(x)=V(x)\) and the optimal strategy is as in Theorem 2.3(i).

Therefore we consider the cases when B1+B2 have a solution V(x) for some c ∗, \(\bar{u}^{*}\) and \(\underline{u}^{*}<\underline{u}_{0}\), or when B1+B3 have a solution V(x) for some c ∗, \(\bar{u}^{*}\) and \(\underline{u}^{*}=0\). In these cases, the optimal strategy given by Theorem 2.3 does not satisfy the constraint (3.1). Consequently, we need to look for the optimal return function and the optimal strategy again. To this end, consider the problem for unknown V and \(\bar{u}_{0}\):

The following result is proved in Sect. 7.

Theorem 3.1

Assume that A1–A4 hold and that lim x→∞ g′(x)=∞. Let \(\underline{u}_{0}>\underline{u}^{*}\), where \(\underline{u}^{*}\) is given in Theorem 2.3. Then Problem C has a unique solution for unknown V and \(\bar{u}_{0}\) and

where \(V_{0}^{*}(x)\) is defined in (3.2). Thus the lump sum dividend barrier strategy \(\pi_{\bar{u}_{0},\underline{u}_{0}} \) is an optimal strategy in Π 0. Also, for given \(\underline{u}_{1}\) so that \(\underline{u}^{*}<\underline{u}_{0}<\underline{u}_{1}\), it holds for the corresponding optimal upper barriers that \(\bar{u}^{*}<\bar{u}_{0}<\bar{u}_{1}\).

According to Theorems 2.3 and 3.1, for a given lower barrier \(\underline{u}_{0}\), the optimal strategy is the lump sum barrier strategy \(\pi_{\tilde{u},\underline{u}_{1}}\), where

Here \((\bar{u}^{*},\underline{u}^{*})\) is as in Theorem 2.3, while \(\bar{u}_{0}\) is as in Theorem 3.1. This addresses the problem of not being allowed to pay dividends that bring the capital too far down. The next result looks at the other end. What if the company cannot make a dividend payment when it wants, but has to postpone it until capital reaches a higher level? Let \(\bar{u}_{1}>\tilde{u}\) and let Π 1 be the set of all admissible policies satisfying

i.e., all policies so that paying dividends when capital is less than \(\bar{u}_{1}\) as well as reducing it below \(\underline{u}_{1}\) through a dividend payment are ruled out. Define the new optimal return function \(V_{1}^{*}(x)\) as

Consider the problem for unknown V:

We then have the following theorem. It is proved in Sect. 7.

Theorem 3.2

Assume that A1–A4 hold and that lim x→∞ g′(x)=∞. Let \(\underline{u}_{0}\) and \(\bar{u}_{1}>\tilde{u}\) be given, where \(\tilde{u}\) is defined in (3.3). Then Problem D has a unique solution for unknown V and

where \(V_{1}^{*}(x)\) is defined in (3.4) and \(\underline{u}_{1}\) in (3.3). Thus the lump sum dividend barrier strategy \(\pi_{\bar{u}_{1},\underline{u}_{1}}\) is an optimal strategy in Π 1.

The message of Theorems 3.1 and 3.2 is that if the optimal barriers are too small, it is still optimal to use lump sum barrier strategies with the barriers as close to the optimal ones as possible in some sense. Therefore, we should look for barrier strategies, but with barriers sufficiently large to satisfy solvency requirements. This is the topic of Sect. 4.

4 Optimality under a solvency constraint

Having argued in Sect. 3 that barrier strategies are optimal also under reasonable constraints, we show in this section how optimal barriers can be found that satisfy a natural solvency restriction. To describe this, let T<∞ be a fixed time horizon and define the survival probability as

where as before P x means that X 0=x and \(\pi_{\bar {u},\underline {u}}\) is the lump sum dividend strategy with barriers \(\bar{u}\) and \(\underline{u}\). For a given ruin tolerance ε, we say that the strategy \(\pi_{\bar {u},\underline{u}}\) is solvency admissible if

Note that \(\phi_{\bar{u},\underline{u}}(T,\underline{u})=\phi_{\bar{u},\underline{u}}(T,\bar{u})\). This means that for a solvency admissible strategy \(\pi_{\bar{u},\underline{u}}\), at the time of paying a dividend, the probability of survival during the next time interval of length T using the same strategy cannot be smaller than 1−ε.

Also note that even when Theorem 2.3(iii) applies, condition (4.1) in principle need not hold for any δ-optimal dividend strategy. The reason for this is that \(\underline{u}(\bar{u})\) may be bounded as \(\bar{u}\rightarrow \infty\). The following result shows that even in case (iii) there will exist a δ-optimal dividend strategy. It is proved in Sect. 7.

Theorem 4.1

Under the assumptions of Theorem 2.3(iii), for any b>0 and \(\bar{u}>0\), there exists a \(\tilde{\underline {u}}(\bar {u})<\bar{u}\) satisfying \(\tilde{\underline{u}}(\bar{u})\rightarrow\infty\) as \(\bar {u}\rightarrow\infty\) so that

By this result we can choose a \(\underline{u}\) so large that for any δ>0, there is a δ-optimal lump sum dividend barrier that satisfies the constraint (4.1). Consequently, from now on it is assumed that lim x→∞ g′(x)=∞ as in Remark 2.4.

As in [6] it can be proved that if there exists a \(C^{1,2}((0,T)\times(0,\bar{u}))\) function v that satisfies

with initial value

and boundary value for t>0

then \(v(T,x)=\phi_{\bar{u},\underline{u}}(T,x)\) is the survival probability. Here v t means the partial derivative with respect to t and so on. It is well known, see e.g. [12], Theorem 3.4.1, that if σ 2(x) and μ(x) are in \(C^{\infty}(0,\bar{u})\), then any weak solution of (4.2) is in \(C^{\infty}((0,T) \times(0,\bar {u}))\).

Let us discuss how the optimal solvency admissible strategy can be found. By definition, for \(\underline{u}>0\), clearly

Let ϕ(T,x)=P x (X t >0, ∀t∈[0,T]) be the survival probability when there is no control. If \(\phi(T,\underline{u})\leq1-\varepsilon \), then \(\underline{u}\) cannot be the lower barrier of a solvency admissible dividend strategy since paying dividends surely increases the ruin probability. However, if \(\phi(T,\underline{u})>1-\varepsilon\), then for sufficiently large \(\bar{u}\), \(\pi_{\bar{u},\underline{u}}\) will be a solvency admissible strategy. The lower bound \(\underline{u}_{m}\) for the lower barrier in a solvency admissible strategy is therefore of interest, and it is given by

It is easy to show that if there exists a C 1,2((0,T)×(0,∞)) function w that satisfies

with initial value

and boundary value for t>0

then we have w(T,x)=ϕ(T,x). Again, by [12], Theorem 3.4.1, if σ 2(x) and μ(x) are C ∞(0,∞), then any weak solution of (4.5) is C ∞((0,T)×(0,∞)).

We are now ready for the optimality algorithm. For that, it is assumed that lim x→∞ g′(x)=∞.

-

1.

Calculate the optimal V ∗(x) with corresponding barriers \(\bar{u}^{*}\) and \(\underline{u}^{*}\).

-

2.

Calculate \(\phi_{\bar{u}^{*},\underline{u}^{*}}(T,\underline{u}^{*})\). If \(\phi_{\bar{u}^{*},\underline{u}^{*}}(T,\underline{u}^{*})\geq 1-\varepsilon\), the optimal strategy satisfies the solvency constraint and we are done. If not, continue to Step 3.

-

3.

Find \(\underline{u}_{m}\) as the unique solution of \(\phi (T,\underline{u}_{m})=1-\varepsilon\). This can be done using a one-dimensional search.

-

4.

Let δ>0 be a small number, and set \(\underline{u}_{i}=\underline{u}_{m}+i\delta\), i=1,2,….

-

5.

For each \(\underline{u}_{i}\), find the corresponding optimal upper barrier by solving Problem C, and call this u i . Calculate \(\phi_{u_{i},\underline{u}_{i}}(T,\underline{u}_{i})\) and if \(\phi_{u_{i},\underline{u}_{i}}(T,\underline{u}_{i})\geq1-\varepsilon\), set \(\bar{u}_{i}=u_{i}\). Also let \(\bar{c}_{i}\) be the scaling factor so that the solution is \(V_{0}^{*}(x)=\bar{c}_{i}g(x)\) for \(x\leq\bar{u}_{i}\). On the other hand, if \(\phi_{u_{i},\underline{u}_{i}}(T,\underline{u}_{i})< 1-\varepsilon\), increase u i in steps of δ until the solvency constraint is satisfied. Let \(\bar{u}_{i}\) be the corresponding upper barrier and \(\bar{c}_{i}\) the scaling factor found by solving Problem D.

-

6.

Do this until \(\bar{c}_{i}\) falls significantly. Then let c ε be the highest \(\bar{c}_{i}\) and \(\bar{u}_{\varepsilon}\) and \(\underline {u}_{\varepsilon}\) the corresponding \(\bar{u}_{i}\) and \(\underline{u}_{i}\), respectively. The optimal solvency admissible strategy is then \(\pi_{\bar{u}_{\varepsilon},\underline {u}_{\varepsilon }}\), and the corresponding value function is

$$V_{\varepsilon}(x)=\begin{cases}c_{\varepsilon}g(x),&0\leq x\leq\bar{u}_{\varepsilon},\\V_{\varepsilon}(\bar{u}_{\varepsilon})+k(x-\bar{u}_{\varepsilon}),&x>\bar{u}_{\varepsilon}.\end{cases}$$

Equations (4.2) and (4.5) together with their respective initial and boundary conditions are not easily solvable, but taking the Laplace transform turns them into ordinary differential equations. To see how, consider (4.2) and define

Straightforward calculations, using (4.3), show that \(\tilde{v}\) satisfies

A particular solution is given by \(\tilde{v}_{p}(s,x)=s^{-1}\). Let \(\tilde{v}_{1}(s,x)\) and \(\tilde{v}_{2}(s,x)\) be independent solutions of the homogeneous equation in (4.7). Then

where a 1 and a 2 are determined from the initial and boundary conditions. Now v(t,0)=0 implies that \(\tilde{v}(s,0)=0\) as well, and \(v(t,\bar{u})=v(t,\underline{u})\) implies that \(\tilde{v}(s,\bar{u})=\tilde{v}(s,\underline{u})\). Therefore, after some straightforward calculations,

Let \(L_{h}^{-1}(t)\) be the inverse Laplace transform. Then \(L_{s^{-1}}(t)=1\), and using the Laplace transform property for integrals, we get

where

Therefore, \(P(\tau^{\pi}\in dt)=L_{h_{1}+h_{2}}^{-1}(t)\,dt\) when \(\pi =\pi _{\bar{u},\underline{u}}\).

Similarly, the function \(\tilde{w}(s,x)=L_{w}(s)\) also satisfies (4.7) with \(\tilde{w}(s,0)=0\) and \(\lim_{x\rightarrow \infty}\tilde{w}(s,x)=s^{-1}\). Thus, if we let \(\tilde{w}_{1}(s,x)\) and \(\tilde{w}_{2}(s,x)\) be two independent solutions of the homogeneous equation and assume that \(\hat{w}_{i}(s)=\lim_{x\rightarrow\infty}\tilde {w}_{i}(s,x)\), i=1,2 exist, then

where

Inversion formulas are similar to those above.

Example 4.2

Assume that μ and σ 2 are constants. Then it is easy to see that

where

Plugging this into (4.8) and (4.9) gives \(\tilde{v}(s,x)\). Inverting this Laplace transform is unfortunately not straightforward.

Also \(\hat{w}_{1}(s)=\infty\) and \(\hat{w}_{2}(s)=0\), hence b 1(s)=0 and b 2(s)=−s −1. Therefore,

This can be inverted using standard tables for the Laplace transform. However, the solution can also be obtained by other methods, see e.g. [3, p. 196], and is given by

Therefore, \(\underline{u}_{m}\) is given as the unique solution (in x) of

5 Numerical solutions

In order to provide a complete numerical solution to the problem, several differential equations, both ordinary and partial, have to be solved.

For problems B, C and D, it is necessary to find a canonical solution g, either analytically, or if that is not possible or practical, numerically. In the latter case, the Runge–Kutta method can be used, together with linear interpolation between the grid points, this for g, g′ and g″. In case the assumption of Proposition 2.5 does not hold, the numerical solution can be helpful to assess whether lim x→∞ g′(x)=∞ or not.

Problems B1+B2 or B1+B3

In [7], it is shown how this can be reduced to a one-dimensional search problem, but for completeness and since the notation is somewhat different, we include it here. This method will also reveal whether an optimum solution exists.

-

1.

Find x ∗∈(0,∞), if it exists, so that g″(x ∗)=0. If g is convex, we set x ∗=0, and if it is concave we set x ∗=∞. In the second case there is no solution, and by Lemma 7.2(b), x ∗=0 is equivalent to μ(0)≤0, so this case is easy to establish.

-

2.

Choose x<x ∗ and let \(c=\frac{k}{g'(x)}\) so that cg′(x)=k.

-

3.

Find (if possible) y>x ∗ so that \(g'(y)=\frac{k}{c}\). If this is not possible, try with a larger x until it is satisfied.

-

4.

Calculate k(y−x)−c(g(y)−g(x)). If this is larger than K, increase x. Otherwise decrease x.

-

5.

Repeat the process until a solution is obtained, or until it is clear that there is no solution. In case there is a solution, upon convergence \(\underline{u}^{*}=x\), \(\bar{u}^{*}=y\) and V ∗(x)=cg(x) for \(x\leq\bar{u}^{*}\).

Problem C

Assume it is clear that lim x→∞ g′(x)=∞. Then the following easy recipe works.

-

1.

Choose \(x>\underline{u}_{0}\) and let \(c=\frac{k}{g'(x)}\) so that cg′(x)=k.

-

2.

Calculate \(k(x-\underline{u}_{0})-c(g(x)-g(\underline{u}_{0}))\). If this is larger than K, decrease x, otherwise increase x.

-

3.

Repeat the process until convergence is obtained. Upon convergence, \(\bar{u}_{0}=x\) and V(x)=cg(x) for \(x\leq\bar{u}_{0}\).

Problem D

The unique solution is given in (7.14) in Sect. 7.

The function v(t,x) of (4.2)–(4.4)

This is a standard PDE, but with nonstandard boundary conditions. It turns out that the Crank–Nicolson algorithm together with an adaptation of the Thomas algorithm to solve tridiagonal systems is well suited for this problem. For more details on the Crank–Nicolson and the Thomas algorithms, the reader can consult [1], Chaps. 2.3 and 2.9.

To explain how this adaptation works, let h be the grid length and ih, with i=0,1,…,m, the gridpoints so that \(mh=\bar{u}\). Similarly, let k be the grid length and jk, with j=0,1,…,n, the gridpoints so that nk=T. Previously k was defined as the tax rate, but there should be no ambiguity so we follow the standard notation. Let \(\sigma_{i}^{2}=\sigma^{2}(ih)\) and μ i =μ(ih), i=0,1,…,m. With \(v_{i}^{j}\) an approximation to v(ih,jk), the Crank–Nicolson finite difference scheme is

Collecting terms, this can be written as

where, with r=k/h 2,

To start the iterations, we use the initial value v(0,x)=1, giving \(v_{i}^{0}=1\) as well, and so the \(d_{i}^{0}\), i=0,1,…,m, can be calculated.

Now to the Thomas algorithm. To use it, for numerical stability we should have

Let us check this condition:

-

1.

\(\sigma_{i}^{2}\geq\mu_{i}h\). Then \(|\alpha_{i}|+|\gamma_{i}|=\frac{1}{2} r\sigma_{i}^{2}<\beta_{i}\), so this case is unproblematic.

-

2.

\(\sigma_{i}^{2}< \mu_{i}h\). Then \(|\alpha_{i}|+|\gamma_{i}|=\frac{1}{2}r\mu _{i} h<\beta_{i}\) if and only if \(r<\frac{2}{\mu_{i} h-\sigma_{i}^{2}}\).

In order to have case 1 at all gridpoints, we can let

and then for good convergence, a typical choice of r is \(r=\frac{1}{2}\).

Assume that (5.2) is satisfied, and for simplicity write \(v_{i}=v_{i}^{j+1}\) and \(d_{i}=d_{i}^{j}\) in (5.1). The idea of the Thomas algorithm is to write

for unknown p i+1 and q i+1. Using this in (5.1) with i−1 instead of i, we get

Comparing (5.3) and (5.4) gives

The boundary condition v(t,0)=0 implies that 0=v 0=p 1 v 1+q 1, which is satisfied if p 1=q 1=0. We can now use (5.5) to recursively calculate (p i ,q i ), i=2,…,m. Then using (5.3) backwards yields

where

The boundary condition \(v(t,\bar{u})=v(t,\underline{u})\) implies that v m =v ℓ , where \(h\ell=\underline{u}\). Therefore,

We can now go backwards using (5.3) again.

Remark 5.1

Since k=rh 2 in the Crank–Nicolson method, the space grid is typically much coarser than the time grid. In our problem, we are searching for optimal points in the space variable, and therefore a fully implicit scheme with k=rh for some r may be more suitable, since this allows for a finer space grid with the same computation time. The relation (5.1) will still apply, but with different coefficients, and so the Thomas algorithm is again applicable. However, we have not tried this method.

The function w(t,x) of (4.5)–(4.6)

This is basically the same problem as that discussed above, except that we impose instead of the nonstandard boundary condition \(v(t,\bar{u})=v(t,\underline{u})\) the standard boundary condition \(w(t,\bar{u})=1\) for some large \(\bar{u}\). This will result in a slight overestimate of the survival probability, but if \(\bar{u}\) is chosen large enough, it should not be a real problem. Deciding when \(\bar{u}\) is large enough is not an obvious task, but one way may be to keep x fixed at a moderate value, and then try with increasing \(\bar{u}\) until the solution w(0,x) stabilizes. Given \(\bar{u}\), the Crank–Nicolson algorithm together with the standard Thomas algorithm should work well. Also, to find w analytically is easier than to find v, as we saw in Example 4.2.

6 Numerical examples

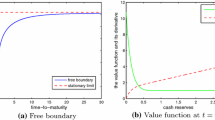

In this section, we give two numerical examples where optimal strategies with and without the solvency constraint are compared. In all plots, solid lines are for the case with the solvency constraint, while dashed lines are without solvency constraints. Each figure is split into three panels, where the first panel shows the optimal upper and lower barriers, both without and with the solvency constraint. The second panel shows the amount of dividends paid each time, i.e., \(\bar{u}_{\varepsilon}-\underline{u}_{\varepsilon}\) and \(\bar{u}^{*}-\underline{u}^{*}\). The third panel shows the constants c ε and c ∗ so that the value functions equal V ε (x)=c ε g(x), \(x\leq\bar{u}_{\varepsilon}\) and V ∗(x)=c ∗ g(x), \(x\leq\bar{u}^{*}\), where g is a canonical solution to be specified in each example. This means that for \(x\leq\bar{u}^{*}\), \(1-\frac{c_{\varepsilon}}{c^{*}}\) is the percentage loss of value due to the solvency constraint.

Before we give the examples, a few words on the numerics. All programs were written in R, but with subprograms in C for the number crunching. The simple algorithm described in Sect. 4 had to be modified. The reason is that the finite difference scheme (5.1) for solving (4.2) is accurate of order 2. However, a perturbation of size h in the boundary condition of a PDE will in general induce a change in the solution of order O(h). Experimentally, this seems to be the case here also for perturbations of \(\bar{u}\) and \(\underline{u}\), i.e., the most accurate numerical evaluations of the survival probability \(\phi_{\bar{u},\underline{u}}\) for a given lump sum strategy \(\pi_{\bar{u},\underline{u}}\) seem to come when \(\underline{u}\) and \(\bar{u}\) both are nodes on the PDE grid. This is especially true for \(\underline {u}\). The general idea behind the program is therefore to minimize the calculations of off-grid \(\underline{u}\) and \(\bar{u}\) by defining the grids so that \(\underline{u}\) is on the grid. To find the smallest solvency admissible \(\bar{u}\) for a fixed \(\underline{u} > u_{m}\), the program iterates as follows:

-

1.

Start with a fairly coarse grid and find two adjacent points \(\bar {v}_{1}<\bar{w}_{1}\) so that according to the numerical solution, \(\pi_{\bar{w}_{1},\underline{u}}\) is solvency admissible, while \(\pi_{\bar{v}_{1},\underline{u}}\) is not. Then use one iteration of the secant method to find \(\bar{u}_{1}\) between \(\bar{v}_{1}\) and \(\bar{w}_{1}\).

-

2.

Repeat the procedure with a finer grid, and find adjacent points \(\bar{v}_{2}<\bar{w}_{2}\) with the same properties as \(\bar{v}_{1}\) and \(\bar {w}_{1}\). Since the grid has changed, so has the numerical solution of the ruin probability, and frequently this results in \(\bar{v}_{2}>\bar{w}_{1}\).

-

3.

Repeat the process a certain number of times. We repeated it until there were about 100 million nodes, where we used \(k=\frac{1}{2} h^{2}\).

Although a bit circumstantial, this routine was in fact quite efficient in terms of total running time. As is seen from several of the figures below, the upper estimated values of \(\bar{u}\) are sometimes quite erratic. However, this does not matter much since the corresponding values of c ε do not vary much. When comparing different plots, it is important to note that the y-axis varies, and when the span on the y-axis is very small, the results may look more erratic than they actually are.

Example 6.1

Let μ(x)=μ and σ(x)=σ be constants, so that (2.1) becomes

By Proposition 2.5, lim x→∞ g′(x)=∞, hence an optimal strategy always exists.

In Figs. 1–5, μ=σ=1 and the canonical solution chosen is

with α=0.9636 (a bit arbitrary, admittedly) and

The other parameter values used are

In the figures, four of these are kept fixed, while one is varying. In the discussion below, \(\bar{u}\) is generic for both the unconstrained upper barrier \(\bar{u}^{*}\) and the constrained \(\bar{u}_{\varepsilon}\), and similarly with \(\underline{u}\).

In Fig. 1, the discounting factor λ is varied. When there is no solvency constraint, we see from the first panel that both upper and lower barriers decrease as λ increases, which reflects the fact that with large values of λ, early payments are important, since later payments are heavily discounted. When λ is small, the solvency constraint is not binding due to the long term perspective, and hence the necessity to avoid early ruin provides sufficiently large barriers. As λ increases, the constraint becomes binding, and the lower barrier even increases. The reason for this is that with a given constraint, there is more to gain by decreasing the upper barrier \(\bar{u}_{\varepsilon}\) a lot, even if that means a small increase in the lower barrier \(\underline{u}_{\varepsilon}\). However, it is interesting to see from the middle panel that the actual payout \(\bar {u}-\underline{u}\) is not much affected by the solvency constraint. From the right panel, we see that the relative impact of the solvency constraint on the values c ∗ and c ε increases quite a lot with λ, but for moderate values of λ it only causes small reductions in the value of the company.

In Fig. 2, the time horizon T varies. Without the solvency constraint, the optimum is independent of T, which is also seen from the figure. For small T, the optimiser gives sufficiently high survival probability, hence the solvency constraint is not binding. As T increases, the solvency constraint kicks in and both the lower and upper barriers increase, but it is seen from the middle panel that the actual dividend payout is again not much affected by the constraint. Why the payout first goes down and then increases, we cannot explain. The ruggednes of the graph in the middle panel is due to numerical issues as discussed above. However, looking at the scale on the y-axis, we see that the variations are not severe. From the right panel, it is seen that although the barriers are much influenced by the solvency constraint, the actual values c ε are far less so.

In Fig. 3, the retention rate k varies. As k increases, the amount received, \(k(\bar{u}-\underline{u})-K\), gets positive for lower amounts \(\bar{u}-\underline{u}\) paid, and so both barriers decrease with k, both in the unconstrained and the constrained case. The effect of the solvency constraint is just to increase the barriers, but from the middle panel we see that again the payout \(\bar{u}-\underline{u}\) is not much affected. From the right panel, it is seen that the actual value of the company is not much affected neither.

In Fig. 4, the fixed cost K is varied. Since for K large, the payout \(\bar{u}-\underline{u}\) must be large in order for the dividend received, \(k(\bar {u}-\underline {u})-K\), to be positive, the optimal payout must increase with K, which is confirmed in the middle panel. For the rest, the picture is much the same as before, with the solvency constrained barriers lying above those without the solvency constraint, but with the payout \(\bar{u}-\underline{u}\) rather unaffected. Also, as seen from the right panel, the solvency constraint does not reduce the value of the company by very much.

Finally, in Fig. 5 the ruin tolerance ε varies. For sufficiently large values of ε, the solvency constraint is not binding, but as soon as the constraint becomes binding (read the x-axis from right to left), the picture is much the same as before, with both lower and upper barriers increased due to the solvency constraint, but with payouts \(\bar{u}-\underline{u}\) almost the same, and values c ε moderately lower than the optimal c ∗. When the solvency constraint is binding, the somewhat rugged behavior of the curves in the first two panels is again due to numerical issues, but it is seen from the right panel that the optimal value c ε is not much influenced. Hence these numerical issues are rather unproblematic.

The tentative conclusion we can draw from this example is that the solvency constraint can have a quite large impact on the optimal barriers, but except in rather extreme cases, the impact on the actual payout \(\bar{u}-\underline{u}\) as well as on the value c ε versus c ∗ is much more modest. This is good news for the shareholders, since what counts for them is how much smaller c ε is than c ∗, i.e., their “loss” due to the solvency constraint.

Figure 6 shows the values of V ε (x) and V ∗(x) for the standard parameter choice. This gave \((\bar{u}_{\varepsilon},\underline {u}_{\varepsilon})=(4.65,3.13)\) and \((\bar{u}^{*},\underline {u}^{*})=(3.81,2.22)\). Although this is not so easy to see from the figure, V ∗(x) is concave up to x=2.82 and then convex. As to V ε (x), it is also concave up to x=2.82, and then convex up to \(\bar{u}_{\varepsilon}\). However, \(V_{\varepsilon }'(\bar{u}_{\varepsilon}-)=0.978>V_{\varepsilon}'(\bar{u}_{\varepsilon}+)=k=0.95\), and so V ε is not convex from x=2.82. That \(V_{\varepsilon }'(\bar{u}_{\varepsilon}-)\geq V_{\varepsilon}'(\bar{u}_{\varepsilon}+)\) is a general fact, proved in Lemma 7.6 in Sect. 7.

Example 6.2

Let the basic income process follow the linear Brownian motion

and assume that assets are invested in a risky investment so that the dynamics of the noncontrolled process is

We assume that R is a Black–Scholes investment generating process, meaning that R t =(λ−α)t+σ R W R,t , and that W P and W R are independent. Here λ can be seen as the market rate, also used for discounting, while α is a proportional cost associated with the investment.

Letting

it follows easily from Lévy’s theorem, see e.g. [3], Theorem 3.3.16, that W is a Brownian motion. This gives the representation

By Proposition 2.5, when α>0, we have lim x→∞ g′(x)=∞, hence an optimal strategy exists. Actually, using arguments similar to those in Sect. 3 in [7], together with the solutions given in the Appendix in [9], it can be proved that an optimal strategy exists if and only if α>0. Again using the solutions in that Appendix, a canonical solution can be found, but it is complicated; so we used the more convenient Runge–Kutta method to obtain a numerical solution of g(x), scaled so that g′(0)=1.

In Figs. 7–12, we have μ=σ P =1, σ R =0.25 and α=0.02. The other parameters used are the same as in Example 6.1, and in the figures five of these are kept fixed, while one is varying.

In Fig. 7, the discounting factor λ is varied. This is a somewhat different situation from that in Fig. 1. Ignoring the random elements, in Example 6.1, the only income is the linear μ, which is heavily deflated with an increasing λ. In the present example, there is in addition an investment income λ−α, which is exponential in nature and therefore partially offsets an increase in λ. When λ is small, the linear income μ dominates, but as λ increases, the exponential investment income takes over. This can explain the middle panel in Fig. 7 where for small λ, the payout decreases with λ as in Fig. 1, but as λ increases, it starts to increase again. From the left panel, we see that the upper barrier starts to increase when λ gets large, both in the unconstrained and in the constrained case. However, from the right panel, it is seen that the overall effect of increasing λ is somewhat smaller in Fig. 7 than in Fig. 1, which is to be expected.

Figures 8, 9, 10, 11 do not differ very much from Figs. 2–5, except that the effect of the solvency constraint seems even less serious here. In Figs. 8 and 11 (as well as in Fig. 7), the solvency constraint caused some ruggedness due to numerical issues, but again looking at the corresponding right panels shows that this is of no importance.

In Fig. 12, the effect of varying the cost factor α is shown. With small α, the investment return λ−α is almost as large as the discounting factor λ, and therefore there is no urgency to pay out dividends; hence the barriers can be set high, and the solvency constraint is not binding. As α increases, it is more urgent to pay dividends, and therefore the optimal unconstrained barriers will not satisfy the solvency constraint. Again the payouts \(\bar{u}-\underline {u}\) are almost unaffected by the solvency constraint, and from the right panel, we see that the reduction in value due to the solvency constraint is not very large.

The conclusion here is much the same as in Example 6.1; the solvency constraint can have a fairly large impact on the optimal policy, but the actual payout as well as the value of the company are only moderately affected.

We also tried with an “investment risk free” version, i.e., with σ R =0 so that

However, this gave much the same results, indicating that the results are quite robust.

7 Proofs

In this section, we prove Proposition 2.5, Theorems 3.1 and 3.2 and Theorem 4.1. To do so, we need the following lemmas, which are the same as Lemmas 2.1 and 2.2 in [7].

Lemma 7.1

Let μ(x) and σ(x) satisfy A2–A4 and let f be a solution of \(\mathcal{L}f(x)=0\). Consider the interval [0,∞).

-

(a)

If f has a zero on [0,∞), then f′ has no zero on [0,∞).

-

(b)

If \(f'(\tilde{x})>0\) and \(f^{\prime\prime}(\tilde {x})\leq0\) for some \(\tilde{x}\in[0,\infty)\), then f is a concave function on \([0,\tilde{x})\).

Lemma 7.2

Let μ(x) and σ(x) satisfy A2–A4 and let f satisfy \(\mathcal{L}f(x)=0\), f(0)=0 and \(f(\hat{x})>0\) for some \(\hat{x}>0\). Then:

-

(a)

f is strongly increasing.

-

(b)

There is an x ∗≥0 (possibly taking the value +∞) so that f is concave on (0,x ∗) and convex on (x ∗,∞). In particular, x ∗=0 if and only if μ(0)≤0, and trivially f′′(x ∗)=0 when 0<x ∗<∞.

Proof of Proposition 2.5

To keep fixed initial conditions, we restrict the definition of a canonical solution to mean that g(0)=0 and g′(0)=1. First note that for any δ>0,

and therefore it follows from Lemma 2.3 in [7] that it is sufficient to prove that for any a, a canonical solution of

satisfies lim x→∞ f′(x)=∞. By Lemma 7.2(b), such a canonical solution f is either ultimately convex or ultimately concave. In either case, there exists c≤∞ so that

Assume that c<∞. Then, since

there must exist a sequence {x n } with x n →∞ and \(f^{\prime\prime}(x_{n})=o(x_{n}^{-1})\). Also

Then, considering only the leading terms,

But this contradicts A1, hence c=∞, and we are done. □

The next step is to prove that Problem C really has a solution.

Lemma 7.3

Under the assumptions of Theorem 3.1, Problem C has exactly one solution and \(\bar{u}_{0}>x^{*}\), where x ∗ is given in Lemma 7.2.

Proof

We are looking for a solution \((\bar{c},\bar{u}_{0})\) of

Let

For given c>0, consider the equation

If \(\underline{u}_{0}\leq x^{*}\), since g′(x) is increasing on [x ∗,∞), it is easy to see that (7.3) has a solution if and only if \(c\leq \hat{c}\). A similar argument shows that this holds when \(\underline{u}_{0}>x^{*}\) as well. We can therefore define the function

Then (7.1) and (7.2) are equivalent to the existence of a c with I(c)=K. By the implicit function theorem, u c is continuously differentiable with respect to c and \(I'(c)=-\int_{\underline{u}_{0}}^{u_{c}}g'(y)\,dy<0\), i.e., I is continuous and strictly decreasing in \(c\in(0,\hat{c})\). Also, lim c→0 u c =∞, hence lim c→0 I(c)=∞ as well. Therefore, if we can prove that \(I(\hat{c})\leq0\), there must exist a unique \(\bar{c}\in(0,\hat{c})\) so that \(I(\bar{c})=K\).

To prove that \(I(\hat{c})\leq0\), assume first that \(\underline {u}_{0}\leq x^{*}\). Then since g′ has a minimum at x ∗,

and consequently \(I(\hat{c})\leq0\). If \(\underline{u}_{0}> x^{*}\), then g′ is increasing on \([\underline{u}_{0},\infty)\), hence \(\hat{c}g'(x)\geq k\) for \(x\in[\underline{u}_{0},\infty)\), and so \(I(\hat{c})\leq0\) again.

Denoting the corresponding \(u_{\bar{c}}\) by \(\bar{u}_{0}\) so that \(\bar{c}g'(\bar{u}_{0})=k\), we thus obtain

□

Lemma 7.4

Under the assumptions of Theorem 3.1, let V be as in Lemma 7.3. Then V′(x)<k for \(x\in[\underline {u}_{0},\bar{u}_{0})\).

Proof

By Lemma 7.2, it is sufficient to prove that \(V'(\underline{u}_{0})<k\). If \(\underline{u}_{0}\geq x^{*}\), the result is trivially true by convexity of g on [x ∗,∞). Assume therefore that \(\underline{u}_{0}<x^{*}\) and let V ∗(x)=c ∗ g(x) be the optimal value from Theorem 2.3. Assume that \(\bar{c}\geq c^{*}\). Then, since \(c^{*}g'(\bar{u}^{*})=\bar{c}g'(\bar{u}_{0})\), it is necessary that \(\bar{u}_{0}\leq\bar{u}^{*}\). But then

a contradiction. Therefore \(\bar{c}<c^{*}\) and by concavity of g on \([\underline{u}^{*},x^{*}]\),

□

For a function ϕ:[0,∞)↦[0,∞), define the maximum utility operator M by

Lemma 7.5

Let V be as in Lemma 7.3. Then V satisfies the quasi-variational inequalities

Furthermore, MV(x)<V(x) for \(x\in[0,\bar{u}_{0})\) and MV(x)=V(x) for \(x\in[\bar{u}_{0},\infty)\).

Proof

We first prove (7.5). Since \(\mathcal{L}V(x)=0\) when \(x\leq\bar{u}_{0}\), assume that \(x>\bar{u}_{0}\). Since \(\bar{u}_{0}>x^{*}\) by Lemma 7.3, \(V^{\prime\prime}(\bar{u}_{0}-)>0\) while trivially \(V''(\bar{u}_{0}+)=0\). Using that \(V(x)=V(\bar{u}_{0})+k(x-\bar{u}_{0})\), we get by assumption A4 that

We proceed to prove (7.6). For \(x\in[0,\underline{u}_{0}]\), MV(x)=−∞, hence the inequality is trivially satisfied. When \(x>\underline{u}_{0}\) by Lemma 7.4 and the definition of V(x), we have V′(x)<k when \(x\in[\underline{u}_{0},\bar{u}_{0})\) and V′(x)=k when \(x\in[\bar {u}_{0},\infty)\). Therefore the function V(x−η)+kη−K is increasing in η for nonnegative η and takes its maximum when \(\eta=x-\underline{u}_{0}\). Hence, for \(x\in[\underline{u}_{0},\bar{u}_{0})\),

For \(x\geq\bar{u}_{0}\), we have

This also proves (7.7) since \(\mathcal{L}V(x)=0\) for \(x\in (0,\bar {u}_{0})\) and MV(x)=V(x) for \(x\in[\bar{u}_{0},\infty)\). Finally (7.8) follows by the definition of V. □

Proof of Theorem 3.1

Let π∈Π 0 be an arbitrary strategy. By definition, V is continuously differentiable on (0,∞) and twice continuously differentiable on the set \((0,\bar{u}_{0})\cup(\bar{u}_{0},\infty)\). However, for \(x=\bar {u}_{0}\), the continuity of V″ might fail. Since the set \(\{0\leq t<\tau^{\pi}: X_{t}^{\pi}=\bar{u}_{0}\}\) has Lebesgue measure zero under each P x , we can use Itô’s formula, see e.g. [2, p. 460], together with (7.5) to get

Here we can let \(V''(\bar{u}_{0})=V''(\bar{u}_{0}-)\). Another argument for this formula would be to use Lemma 7.8 below, where now k=k 1.

Since V′ is bounded and the process satisfies assumptions A1–A4, it is fairly straightforward to show that

is a martingale. Taking expectations on both sides of (7.9) therefore yields

From (7.6) and the fact that \(X_{\tau_{n}^{\pi}}^{\pi}>X_{\tau _{n}^{\pi}+}^{\pi}\geq\underline{u}_{0}\), it follows that

on \(\{\tau_{n}^{\pi}\leq t\wedge\tau^{\pi}\}\). Then (7.10) and (7.11) together give

Letting t→∞ in (7.12), we have by nonnegativity of V that

which implies that \(V(x)\geq V_{0}^{*}(x)\).

Now consider the lump sum dividend barrier strategy \(\pi_{\bar {u}_{0},\underline{u}_{0}}\) given in Theorem 3.1. Since \(X_{s}^{\pi_{0}}\) does not exceed \(\bar{u}_{0}\), we have \(\mathcal{L}(X_{s}^{\pi_{0}})=0\) a.s. for \(0<s<\tau^{\pi_{0}}\). Therefore the inequality in (7.9) becomes an equality with the strategy π 0, i.e.,

Assume that \(x=X_{0}\geq\bar{u}_{0}\). Then

and

We can conclude that

and

Also by boundedness of \(X^{\pi_{0}}_{t\wedge\tau^{\pi_{0}}+}\) and the fact that \(P(\tau^{\pi_{0}}<\infty)=1\) and \(X^{\pi_{0}}_{\tau^{\pi_{0}}+}=0\), it follows from the bounded convergence theorem that

Therefore, taking expectations in (7.13) and then letting t→∞ gives

which implies that \(V(x)\leq V_{0}^{*}(x)\). In summary, we get \(V(x)=V_{0}^{*}(x)=V_{\bar{u}_{0},\underline{u}_{0}}(x)\).

When the initial reserve \(X_{0}=x<\bar{u}_{0}\), the result is proved similarly.

To prove the last part of the theorem, let \(\underline{u}^{*}\leq\underline{u}_{0}<\underline{u}_{1}\), and let \(V_{i}(x)=V_{\bar{u}_{i},\underline{u}_{i}}(x)\) be the two value functions. Write \(V_{i}(x)=\bar{c}_{i} g(x)\) for \(x\in[0,\bar{u}_{i}]\). By what we have just proved, V 0(x)>V 1(x), hence \(\bar{c}_{0}>\bar{c}_{1}\). Therefore, for \(V_{i}'(\bar{u}_{i})=k\) it is necessary that \(\bar {u}_{1}>\bar{u}_{0}\). □

Now we turn to the proof of Theorem 3.2. To prove that there is exactly one solution to the equations in Problem D, let \(V(x)=\bar{c}g(x)\) so that we get the equation

Solving for \(\bar{c}\) gives

Lemma 7.6

Let V be the solution of Problem D. Then there is \(\hat{u}\in[\underline{u}_{1},\bar{u}_{1}]\) so that V′(x)≤k on \([\underline{u}_{1},\hat{u}]\) and V′(x)≥k on \([\hat{u},\bar{u}_{1}]\).

Proof

Since \(\tilde{u}\) is an upper optimality point, see (3.3) and what follows there, we know by the previous analysis that the corresponding value function is

Since \(V_{0}^{*}(\tilde{u})=V_{0}^{*}(\underline{u}_{1})+k(\tilde {u}-\underline{u}_{1})-K\), we conclude that

Define the function G as

Since \(\bar{u}_{1}>\tilde{u}>x^{*}\), g′(x) is increasing on \([\tilde{u},\bar{u}_{1}]\). Therefore, G is a continuous and increasing function. Furthermore,

and

so that there must exist \(\hat{u}\in[\tilde{u},\bar{u}_{1}]\) such that \(G(\hat{u})=K\), that is,

Let \(\hat{V}\) be defined as

Then \(\mathcal{L} \hat{V}(x)=0\) for \(0<x<\bar{u}_{1}\) and by (7.15),

Using this together with (7.16) then gives for \(x> \bar{u}_{1}\) that

Therefore \(\hat{V}\) also solves Problem D, so by uniqueness \(\hat{V}=V\).

To finish the proof, let first \(x\in[\underline{u}_{1},\tilde {u}]\). We get from [7] when \((\tilde{u},\underline{u}_{1})=(\bar{u}^{*},\underline{u}^{*})\), and from Lemma 7.4 when \((\tilde{u},\underline{u}_{1})=(\bar{u}_{0},\underline{u}_{0})\), that \({V_{0}^{*}}'(x)=k\frac{g'(x)}{g'(\tilde{u})}\leq k\). Since \(\hat {u}\geq\tilde{u}> x^{*}\) and g′(x) is increasing on (x ∗,∞), \(V'(x)=k\frac{g'(x)}{g'(\hat{u})}\leq k\frac {g'(x)}{g'(\tilde{u})}\leq k\). Finally, let \(x\in[\tilde{u},\tilde{u}_{1}]\). Since \(V'(\tilde{u})\leq k\), \(V'(\hat {u})=k\) and \(V'(x)=k\frac{g'(x)}{g'(\hat{u})}\) is increasing on \([\tilde{u},\bar {u}_{1})\), we can conclude that V′(x)≤k on \([\tilde{u},\hat{u}]\) and V′(x)≥k on \([\hat{u},\bar{u}_{1})\). □

Note that V′(x) and V″(x) exist and are continuous except at \(x=\bar{u}_{1}\). Let \(V^{\prime-}(\bar{u}_{1})\) and \(V^{\prime +}(\bar{u}_{1})\) be the left and right derivatives of V(x) at \(\bar{u}_{1}\). From Lemma 7.6, we can see that \(V^{\prime-}(\bar{u}_{1})\geq k=V^{\prime+}(\bar{u}_{1})\). Therefore V(x) may fail to be differentiable at the point \(\bar{u}_{1}\) if \(V^{\prime-}(\bar{u}_{1})>k\). Thus, the classical Itô formula cannot be applied, but its generalization, the Meyer–Itô formula, is applicable. Since we are working with functions of the form e −λt f(Y t ), the standard Meyer–Itô formula needs a slight but straightforward modification.

Lemma 7.7

Let f be the difference of two convex functions and f ′− its left derivative. Let Y be a semimartingale and

where \(L_{t,0}^{a}\) is the local time of Y at a. Then

where μ is the signed measure (when restricted to compacts) which is the second derivative of f in the generalized function sense. Furthermore, for every bounded Borel measurable function v,

where \([Y,Y]_{s}^{c}\) is the quadratic variation of the continuous martingale part of Y.

Proof

The first part follows from Theorem IV.70 in [10], using that

and Fubini’s theorem on the local time term. Formula (7.17) follows from Corollary IV.1 in [10] and an application of Fubini’s theorem. □

Lemma 7.8

Let V be the solution of Problem D. Then we have for π∈Π 1 the equation

where k 1 is the left derivative of V(x) at \(\bar{u}_{1}\).

Proof

Since V′(x) and V″(x) exist and are continuous except at \(x=\bar{u}_{1}\), and \(V'^{\pm}(\bar{u}_{1})\), \(V^{\prime\prime\pm}(\bar{u}_{1})\) exist and are finite, fairly straightforward calculations show that V(x) can be written as the difference of the two convex functions

where x +=max(x,0) and x −=−min(x,0). By the property of V(x),

The identity (7.17) shows that

The result now follows from Lemma 7.7. □

Lemma 7.9

Let V be the solution of Problem D and define the operator \(\mathcal{L}^{-}\) by

Then V satisfies the quasi-variational inequalities

Here the operator M is as in (7.4), but with the lower limit \(\underline{u}_{0}\) there replaced by \(\underline{u}_{1}\).

Proof

By the construction of V(x), (7.18) holds. To prove (7.19), let \(x> \bar{u}_{1}\). Then

Since μ′(x)≤λ by assumption A4 and by the fact that V′(x)=k on \((\bar{u}_{1}, \infty)\), the function μ(x)k−λV(x) is decreasing on \((\bar{u}_{1}, \infty)\). Therefore,

If \(\mu(\bar{u}_{1})\leq0\), then clearly \(\mathcal{L^{-}}V(x)\leq 0\). If \(\mu(\bar{u}_{1})> 0\), then \(V^{\prime\prime-}(\bar{u}_{1})=k\frac {g''(\bar {u}_{1})}{g(\hat{u})}\geq0\) by \(\bar{u}_{1}>\bar{u}>x^{*}\). Then, since \(V^{\prime-}(\bar{u}_{1})\geq k\) and \(\mu(\bar{u}_{1})> 0\), we have

Finally, we prove (7.20). By Lemma 7.6, for \(x\geq \bar{u}_{1}\),

so optimality is achieved either by remaining at x or by going down all the way to \(\underline{u}_{1}\). This gives

□

Proof of Theorem 3.2

For π∈Π 1, we easily get from Lemma 7.8 that

Since π∈Π 1, it is necessary that \(X_{\tau_{n}^{\pi }}^{\pi }\geq\bar{u}_{1}\). Then by Lemma 7.9 and the fact that k 1≥k,

Taking expectations gives

Letting t→∞, we have by nonnegativity of V that

Taking the supremum over all strategies in Π 1 gives

Now consider the lump sum dividend barrier strategy \(\pi_{1}=\pi_{\bar{u}_{1},\underline{u}_{1}}\). By definition of that strategy, \({X}_{s}^{\pi_{1}}\leq\bar{u}_{1}\) for all s>0. Therefore \(\mathcal{L}^{-}({X}_{s}^{\pi_{1}})=L_{s}^{\bar{u}_{1}}=0\) for all s>0 and so

Furthermore, by (7.21),

Arguing as at the end of the proof of Theorem 3.1 now gives

Taking expectations and then letting t→∞ results in \(V(x)=V_{\bar{u}_{1}, \underline{u}_{1}}(x)\) which implies that \(V(x)\leq V_{1}^{*}(x)\). Together with (7.22), we can therefore conclude that \(V_{1}^{*}(x)=V(x)=V_{\bar{u}_{1},\underline{u}_{1}}(x)\). □

Proof of Theorem 4.1

By Theorem 2.3 and its proof in [7], \(V_{\bar{u},\underline{u}(\bar{u})}(x)\) is increasing in \(\bar{u}\). If \(\underline{u}(\bar{u})\rightarrow\infty\) as \(\bar{u}\rightarrow\infty\), there is nothing to prove, so assume that \(\underline{u}(\bar{u})\leq m\) for all \(\bar{u}\) for some positive m. Given δ>0, choose \(\bar{u}>b\) so large that we have \(V_{\bar {u},\underline{u}(\bar{u})}(x)>\allowbreak V^{*}(x)-\frac{\delta}{2}\), ∀x∈[0,b], and also so that \(\ln\bar{u}>m\). Consider the following two dividend barrier lump sum strategies:

-

1.

The strategy \(\pi_{0}=\pi_{\bar{u},\underline{u}(\bar{u})}\).

-

2.

The strategy \(\pi_{1}=\pi_{\bar{u},\ln\bar{u}}\).

The strategy π 1 clearly satisfies the conditions of the theorem. Let τ be the first time the process hits \(\bar{u}\) (with τ=∞ if it hits 0 before \(\bar{u}\)). By definition, τ is the same for both strategies when \(x\leq\bar{u}\). By the strong Markov property, we have for x∈[0,b]

Now since \(\ln\bar{u}>m\),

Therefore

Using this equation with \(x=\ln\bar{u}\) gives

and so

By assumption A4, μ(x)≤μ(0)+λx, so by letting τ′ be the same as τ, but with the drift μ(x) replaced by μ(0)+λx, it is clear that E x [e −λτ]≤E x [e −λτ′]. Define \(h_{\bar{u}}(x)=E_{x}[e^{-\lambda\tau'}]\) so that \(h_{\bar {u}}(0)=0\) and \(h_{\bar{u}}(\bar{u})=1\). Furthermore, by standard results, see e.g. [4, Chap. 15.3], \(h_{\bar {u}}\) satisfies

One solution of this equation is

Another solution is then given as, see e.g. [13, p. 31],

Here we used assumption A1 in the first inequality, where c is a suitable positive constant. Fitting the boundary conditions, we get

Therefore, \(h_{\bar{u}}(x)\sim(\lambda\bar{u})^{-1}\) as \(\bar{u}\) gets large and x is fixed. Consequently, in (7.23) we can choose \(\bar{u}\) so large that \(V_{\pi_{0}}(x)-V_{\pi_{1}}(x)\leq\frac{\delta}{2}\) for all x∈[0,b]. □

References

Ames, W.F.: Numerical Methods for Partial Differential Equations, 2nd edn. Academic Press, New York (1977)

Harrison, J.T., Sellke, T.M., Taylor, A.J.: Impulse control of Brownian motion. Math. Oper. Res. 8, 454–466 (1983)

Karatzas, I., Shreve, S.E.: Brownian Motion and Stochastic Calculus. Springer, New York (1988)

Karlin, S., Taylor, H.M.: A Second Course in Stochastic Processes. Academic Press, New York (1981)

Krylov, N.V.: Lectures on Elliptic and Parabolic Equations in Hölder Spaces. Graduate Studies in Mathematics. American Mathematical Society, Providence (1996)

Paulsen, J.: Optimal dividend payouts for diffusions with solvency constraints. Finance Stoch. 4, 457–474 (2003)

Paulsen, J.: Optimal dividend payments until ruin of diffusion processes when payments are subject to both fixed and proportional costs. Adv. Appl. Probab. 39, 669–689 (2007)

Paulsen, J.: Optimal dividend payments and reinvestments of diffusion processes when payments are subject to both fixed and proportional costs. SIAM J. Control Optim. 47, 2201–2226 (2008)

Paulsen, J., Gjessing, H.K.: Ruin theory with stochastic return on investments. Adv. Appl. Probab. 29, 965–985 (1997)

Protter, P.: Stochastic Integration and Differential Equations, 2nd edn. Springer, New York (2004)

Shreve, S.E., Lehoczky, J.P., Gaver, D.P.: Optimal consumption for general diffusions with absorbing and reflecting barriers. SIAM J. Control Optim. 22, 55–75 (1984)

Stroock, D.W.: Partial Differential Equations for Probabilists. Cambridge Studies in Advanced Mathematics, vol. 112. Cambridge University Press, Cambridge (2008)

Yosida, K.: Lectures on Differential and Integral Equations. Dover, New York (1990)

Acknowledgements

The research of Lihua Bai was supported by the National Natural Science Foundation of China (10871102) and (11001136) and the Fundamental Research Funds for the Central Universities (65010771). Also, financial support from the Department of Mathematics, University of Bergen, is appreciated. We should also like to thank the referees for some useful suggestions.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Bai, L., Hunting, M. & Paulsen, J. Optimal dividend policies for a class of growth-restricted diffusion processes under transaction costs and solvency constraints. Finance Stoch 16, 477–511 (2012). https://doi.org/10.1007/s00780-011-0169-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00780-011-0169-5

Keywords

- Optimal dividends

- General diffusion

- Solvency constraint

- Quasi-variational inequalities

- Lump sum dividend barrier strategy