Abstract

This study examined identification of emotional information in facial expression, prosody, and their combination in 23 adult patients with combined attention deficit-hyperactivity disorder (ADHD) versus 31 healthy controls (HC) matched for gender, age, and education. We employed a stimulus set which was carefully balanced for valence as well as recognizability of the expressed emotions, as determined in an independent sample of HC, to avoid potential biases due to different levels of task difficulty. ADHD patients were characterized by impaired recognition of all employed categories (neutral, happiness, eroticism, disgust, anger). Basic cognitive functions as assessed by neuropsychological testing, such as sustained attention, constancy of alertness, and verbal intelligence, partially explained lower recognition rates. Removal of the correlated variance by means of regression analyses did not abolish lower performance in ADHD, indicating deficits in social cognition independent of these neuropsychological factors (p < 0.05). Lower performance correlated with self-rated emotional intelligence (r = 0.38, p < 0.05), indicating that adults with ADHD are aware of their problems in emotion perception. ADHD patients could partly compensate for their deficit in unimodal emotion perception by audiovisual integration, as revealed by larger gains in emotion recognition accuracy during bimodal presentation (p < 0.05) compared to HC. These behavioral results can serve as a foundation for future neuroimaging studies and point towards sensory-specific regions rather than audiovisual integration areas in perception of emotional information in adult ADHD.

Introduction

Attention deficit hyperactivity disorder (ADHD) in adults is considered to be frequent and of high clinical relevance (Wender et al. 2001; Schmidt et al. 2012). Deficits in social interactions of ADHD patients present a central problem causing social, occupational, and emotional disadvantages (Barkley 1997; Barkley et al. 2006; Murphy and Barkley 1996; Sobanski and Alm 2004; Barkley and Murphy 2010). Several studies revealed alterations in subcomponents of “emotional intelligence” (Salovey et al. 1995) in ADHD, such as influencing one’s own emotions (Barkley 1997; Wender et al. 2001; Shaw et al. 2014) as well as perceiving and interpreting emotions of others (Uekermann et al. 2010; Sjowall et al. 2013). These alterations may be so prominent that some authors have argued for establishing a new core symptom in the diagnosis of ADHD, capable of explaining problems at the interpersonal level independently of inattention and hyperactive-impulsive behavior (Barkley and Murphy 2010; Uekermann et al. 2010; Shaw et al. 2014; Bunford et al. 2014). This symptom complex includes social cognition deficiencies consisting of more fundamental abilities like attending to and recognizing emotions as well as more complex social functions including theory of mind (Uekermann et al. 2010). Impaired emotion recognition is associated with behavioral problems already in preschool children (Chronaki et al. 2015). Therefore, a better understanding of emotion recognition as a fundamental function of social cognition in ADHD could contribute to the solution of some of the interpersonal problems of these patients.

There is a solid basis of behavioral studies evidencing deficits in facial emotion recognition in children and adolescents with ADHD (Singh et al. 1998; Corbett and Glidden 2000; Cadesky et al. 2000; Pelc et al. 2006; Yuill and Lyon 2007; Sinzig et al. 2008; Aspan et al. 2014; Boakes et al. 2008), which can to some extent be ameliorated by medication with methylphenidate (Beyer von Morgenstern et al. 2014). These impairments seem to diminish with age (Shapiro et al. 1993), but can still be present in adult ADHD patients (Rapport et al. 2002; Miller et al. 2011). While recognition of vocal emotions (prosody) in ADHD has received much less attention, lower recognition scores have also been described in children (Corbett and Glidden 2000; Kochel et al. 2015) and adults (Grabemann et al. 2013). Furthermore, comorbid ADHD tended to aggravate alterations of prosody processing in children with autism spectrum disorder (Oerlemans et al. 2014). So far, however, it is unclear whether ADHD patients exhibit a more general deficit in emotion recognition (Cadesky et al. 2000; Serrano et al. 2015; Corbett and Glidden 2000) or specific impairments for negative connotations like “anger” (Singh et al. 1998; Pelc et al. 2006; Rapport et al. 2002), “fear” (Rapport et al. 2002; Sinzig et al. 2008; Aspan et al. 2014; Miller et al. 2011), or “sadness” (Pelc et al. 2006; Aspan et al. 2014; Miller et al. 2011). Any interpretation of results on such specific deficits is limited by the fact that these studies typically comprised several negative emotions but only one positive emotion (happiness), which results in biases due to different levels of task difficulty for these two types of stimuli. Moreover, it has not been clarified whether these findings, which are usually based on judgment of static photographs, can be transferred to more realistic emotion displays with dynamic expressions of faces and voices as well as their combinations.

In the current study, we aimed to achieve a higher ecological validity by using dynamic video clips as stimulus material. Furthermore, we directly addressed the question to which extent deficits of ADHD patients during unimodal emotion perception can be compensated by audiovisual integration of simultaneously presented face-voice combinations or whether integration processes are additionally impaired in ADHD. To minimize potential biases of valence and task difficulty, we selected the same number of negative and positive emotions and carefully balanced the stimulus material based on recognition rates of the employed emotions in a pre-study. Regarding recruitment of ADHD patients and healthy controls (HC), we controlled for gender, age, and education as these factors can influence performance in social cognition tasks (Buitelaar et al. 1999; Sinzig et al. 2008; Ethofer et al. 2013). As results of some recent studies in ADHD children argue for a strong effect of comorbidities on emotion recognition (Schwenck et al. 2013; Noordermeer et al. 2015), we aimed to recruit patients without comorbid psychiatric disorders to avoid such confounds in our study.

Previous studies revealed a strong correlation between attention performance and recognition of facial emotions in children with ADHD (Sinzig et al. 2008) raising the question whether impairments of emotion recognition are a mere consequence of the lower performance in attention demanding tasks. To clarify this issue, all participants underwent neuropsychological testing and we determined if deficits in emotion recognition are still detectable if confounding factors due to lower attention or verbal intelligence (Sinzig et al. 2008) are regressed out. Additionally, we analyzed differences between arithmetic mean and median of the reaction time as task intrinsic measure for attentional lapses. Similarly, depression can also result in impaired emotion recognition (Schlipf et al. 2013), was found to influence judgment of facial emotions in childhood ADHD (Williams et al. 2008), is one of the most common comorbidities in adult ADHD (Sobanski et al. 2007) and was thus also considered as covariate in our analyses. Finally, we also examined to which extent the core features of ADHD (inattention, hyperactivity/impulsivity) and emotional intelligence as assessed by self-rating questionnaires are correlated with deficits in emotion recognition. This was done to clarify whether deficits in emotion recognition obtained under laboratory conditions are associated with daily life impairments of ADHD patients.

Considering the aforementioned previous studies we hypothesized that:

1. ADHD is associated with impaired emotion recognition and these deficits correlate with self-rating scores of emotional intelligence as well as the ADHD core symptoms inattention and hyperactivity/impulsivity (unidirectional hypothesis).

2. These impairments are correlated, but cannot be entirely explained by confounding factors such as attention in neuropsychological testing, verbal intelligence, or concurrent depressive symptoms. Thus, ADHD patients still reveal lower emotion recognition rates if the variance correlating with these confounding factors is removed by means of regression analysis (unidirectional hypothesis).

3. Audiovisual integration of nonverbal emotional stimuli is altered in ADHD. As there are no studies on integration of emotional information in ADHD so far, this can either result in stronger integration effects as partial compensation of impairments in unimodal perception or weaker integration effects if this process is additionally impaired (bidirectional hypothesis).

Methods

Participants

Twenty-three patients with ADHD [seven women, 16 men, mean age ± standard deviation (SD): 27.6 ± 9.3 years] and 31 HC (ten women and 21 men, 29.3 ± 8.2 years) were included in the study. ADHD patients were outpatients treated at the University Hospital for Psychiatry and Psychotherapy Tübingen. Control participants were recruited via advertisement. ADHD patients and HC were balanced for sex, age, and educational level assessed by years in the educational system (not counting repetition of classes or unfinished professional training). All patients were diagnosed by an experienced psychiatrist and fulfilled the criteria of the combined subtype according to DSM-IV (American Psychiatric Association 2000), without comorbid psychiatric diseases, such as dementia, addiction or substance abuse (except for nicotine) as well as anxiety, psychotic, posttraumatic stress, mood, or personality disorders. The diagnosis of childhood manifestation of ADHD was retrospectively assessed with the Wender Utah Rating Scale (Retz-Junginger et al. 2003). In order to improve diagnostic validity, additional information was obtained via third-party anamnesis (parents or other close relatives) and from school report certificates. To quantify deficits in attention, all participants underwent neuropsychological testing.

Patients were without medication for 24 h prior to testing. All subjects were German native speakers, right-handed according to the Edinburgh Handedness Inventory (Oldfield 1971), had normal or corrected to normal vision and did not suffer from any neurologic diseases. HC had no history of psychiatric illness. The study was approved by the Ethical Committee of the University of Tübingen and written informed consent was obtained from all participants. All study procedures were performed according to the Declaration of Helsinki.

Stimuli

Stimulus material consisted of short color video sequences (resolution 720 × 576 pixels, sound 48 kHz, duration 2 s) showing professional actors (two women, two men) who spoke one of 12 German words either in neutral, happy, alluring, angry, or disgusted intonation with congruent facial expressions. The stimulus material was balanced for the sex of the speakers as well as semantic valence and was selected from a pool of 600 stimuli (120 words × 5 categories) which were evaluated in a pre-study (31 healthy volunteers, mean age: 27.3 ± 6.0 years, 16 females). Careful stimulus selection ensured that all emotional categories of every stimulus were correctly identified during audiovisual presentation with a minimum hit rate of 80 %. Furthermore, we balanced our stimulus material for recognition rates across emotional categories (mean hit rates: neutral = 93.4 %; happiness = 95.1 %; eroticism = 94.1 %; anger = 95.1 %; disgust = 95.2 %). All video sequences were presented using three different conditions: auditory (A), visual (V), and audiovisual (AV). Thus, the complete stimulus set comprised 180 stimuli (12 words × 5 emotional categories × 3 modalities).

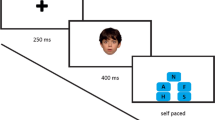

Experimental design

Participants were seated in a comfortable position approximately 60 cm from a 17-inch flat screen (LG Flatron L1953PM, resolution 800 × 600 pixels). Acoustic stimuli were presented through headphones (Sennheiser, HD515) at a volume individually adjusted to a comfortable level. During presentation of visual stimuli, the speakers’ faces were approximately life-sized. Presentation of the experiment and recording of the behavioural data were performed using the software “Presentation” (Neurobehavioral Systems, http://www.neurobs.com/). The stimuli were presented during three sessions with 60 trials each. In each session the order of stimulus presentation was fully randomized. Prior to the three sessions, all participants completed a short training consisting of ten trials under supervision to ensure that they understood their task.
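The trial structure described above can be illustrated with a minimal sketch. It enumerates the 12 × 5 × 3 = 180 stimuli and splits them into three randomized sessions of 60 trials. The word labels are placeholders, and the random assignment of stimuli to sessions is an assumption made for illustration only; the text states only that the order within each session was fully randomized.

```python
import itertools
import random

# Placeholder labels; the actual 12 German words are not listed in the text.
WORDS = [f"word_{i:02d}" for i in range(1, 13)]
CATEGORIES = ["neutral", "happiness", "eroticism", "anger", "disgust"]
MODALITIES = ["A", "V", "AV"]  # auditory, visual, audiovisual


def build_sessions(seed=0, n_sessions=3):
    """Return n_sessions lists of (word, category, modality) trials.

    Assumption: stimuli are assigned to sessions at random. Shuffling the
    full set and cutting it into equal parts also randomizes the order
    within each session.
    """
    stimuli = list(itertools.product(WORDS, CATEGORIES, MODALITIES))
    rng = random.Random(seed)
    rng.shuffle(stimuli)
    size = len(stimuli) // n_sessions
    return [stimuli[i * size:(i + 1) * size] for i in range(n_sessions)]


sessions = build_sessions()
print([len(s) for s in sessions])
```

Each stimulus appears exactly once across the three sessions in this sketch.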

At the beginning of each trial, one of the stimuli was presented for 2 s followed by a circular scale portraying the German words for “happiness”, “eroticism”, “neutral”, “anger”, and “disgust”. The word “neutral” was placed on top of the circle whereas the two positively connoted expressions “eroticism” and “happiness” were displayed on one side and the negatively connoted emotions on the other side. In order to avoid possible laterality effects, the scale was flipped horizontally for half of the group. A white dot was randomly placed at one of the five categories and participants had 5 s to choose the response which best fitted the expressed affect by moving the white dot clockwise or counter-clockwise by pressing one of two buttons on the keyboard. The time interval between stimulus onset and first button press was defined as reaction time. Trials which did not necessitate any button press (because the white dot was already located at the position intended by the participant) were excluded from analyses of reaction times. Afterwards the scale was shown for another second before a fixation cross shown for one second announced the beginning of the next trial.

Psychometric data

Depressive symptoms

The Beck Depression Inventory (BDI-II, Beck and Steer 1987), a self-rating test, was completed by all participants. Participants who scored higher than 19 points were excluded from the study. Lower scores were registered in order to analyse the influence of current mood on the performance in emotion recognition.

Verbal intelligence

Verbal intelligence was assessed with the Mehrfach-Wortschatz-Intelligenz-Test (MWT-B) which consists of 37 groups, each comprising one German word and four pseudowords. The task of the participants is to mark the word that exists in the German language. Scores on this test correlate relatively highly (r = 0.72) with other measures of global intelligence (Blaha and Pater 1979; Lehrl 1977; Lehrl et al. 1995).

Self-report emotional intelligence

The Self-Report Emotional Intelligence Test (SREIT, Schutte et al. 1998) estimates the abilities to recognize, to manage, and to use one’s own and other people’s emotions. This self-report measure, which was developed based on the model of emotional intelligence from Salovey and Mayer (Salovey et al. 1995), comprises 33 statements. A 5-point Likert scale (1 = strongly agree to 5 = strongly disagree) is used and thus, scores range between 33 and 165 with higher scores indicating higher levels of trait emotional intelligence.

Self-report inattention and hyperactivity/impulsivity

The ADHS-Selbstbeurteilungsskala (ADHS-SB, Rösler et al. 2004) is a self-report questionnaire used in clinical settings to rate the experienced level of inattention as well as hyperactivity/impulsivity in everyday life. The test contains 22 items concerning inattentive (items 1–9), hyperactive (items 10–14), and impulsive (items 15–18) symptoms as well as the duration and impact of these symptoms (items 19–22). Items are rated from 0 (does not apply) to 3 (fully applies) with higher scores indicating higher experienced levels of inattention, hyperactivity, and impulsivity in daily life situations.

Sustained attention and alertness

In order to obtain an objective measure of basic attentional functions, we applied the subtests “sustained attention” and “alertness” from the Testbatterie zur Aufmerksamkeitspruefung (TAP, Zimmermann and Fimm 2009). During the sustained attention subtest a sequence of several geometrical shapes of different colours, fillings, and sizes was presented on a monitor. The participants’ task was to press a button each time two shapes presented in direct sequence were identical in colour, filling, and size (one-back task). False alarms, misses, and reaction times were recorded. In the subtest “alertness” the participants had to respond by pressing a button as quickly as possible irrespective of whether the target was cued by a warning sound or not. Intrinsic alertness was estimated as reaction time without cueing stimulus, phasic alertness as reaction time without cue minus reaction time with cue, and alertness constancy as standard deviation of reaction times without cueing stimulus.
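The three alertness parameters defined above can be written out as a short sketch. The function name and the use of the median as the summary reaction time are assumptions made for illustration; the text only specifies "reaction time" and does not state which summary statistic was used.

```python
from statistics import median, stdev


def alertness_measures(rt_no_cue, rt_cue):
    """Compute alertness parameters from reaction times in milliseconds.

    rt_no_cue: reaction times on trials without a warning sound
    rt_cue:    reaction times on trials with a warning sound
    Assumption: the median serves as the per-condition summary.
    """
    intrinsic = median(rt_no_cue)                 # RT without cue
    phasic = median(rt_no_cue) - median(rt_cue)   # RT without cue minus RT with cue
    constancy = stdev(rt_no_cue)                  # SD of RTs without cue
    return {"intrinsic": intrinsic, "phasic": phasic, "constancy": constancy}


# Made-up reaction times for illustration only.
print(alertness_measures([250, 260, 240, 270, 255], [230, 235, 225, 240, 232]))
```

With these definitions, a larger phasic value means a larger speed-up from the warning sound, and a larger constancy value means more variable responding.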

Data analysis

Demographic values (age and number of educational years) are reported as mean ± standard deviation (SD). All other values are reported as mean ± standard error of the mean (SEM). Statistical analysis was performed employing SPSS statistics 22. To assess possible differences between the groups in sociodemographic and psychometric data (BDI, MWTB, SREIT, ADHS-SB, TAP subtests) independent two-sample t tests and Mann–Whitney U tests were used for normally and not normally distributed data, respectively. We expected higher scores for BDI and ADHS-SB, but lower scores for SREIT and neuropsychological testing (MWTB and TAP subtests) and thus applied one-tailed statistical tests for these comparisons between ADHD patients and HC. The unbiased hit rate described by Wagner (HU, Wagner 1993) was applied as measurement of performance in emotion recognition. By multiplying raw hit rate with the positive predictive value the HU does not only take account of the sensitivity, but also of the specificity of emotion recognition. Prior to statistical analysis, HUs were arcsine transformed.
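As an illustration of the measure described above, the following sketch computes unbiased hit rates from a confusion matrix and applies an arcsine transformation. The data structure and function names are hypothetical. The transformation is assumed to be the common form arcsin(√HU), since the exact variant is not specified in the text.

```python
import math


def unbiased_hit_rates(confusion):
    """Unbiased hit rate (HU) per category.

    confusion[stimulus][response] holds the number of trials on which
    `stimulus` was presented and `response` was chosen.
    HU = raw hit rate (hits / presented) * positive predictive value
    (hits / times the category was chosen).
    """
    categories = list(confusion)
    hu = {}
    for c in categories:
        hits = confusion[c].get(c, 0)
        n_presented = sum(confusion[c].values())
        n_chosen = sum(confusion[s].get(c, 0) for s in categories)
        if n_presented == 0 or n_chosen == 0:
            hu[c] = 0.0  # category never presented or never chosen
        else:
            hu[c] = (hits / n_presented) * (hits / n_chosen)
    return hu


def arcsine_transform(p):
    """Assumed variant: arcsin of the square root of a proportion."""
    return math.asin(math.sqrt(p))


# Made-up two-category example for illustration only.
confusion = {
    "anger": {"anger": 8, "disgust": 4},
    "disgust": {"anger": 2, "disgust": 10},
}
hu = unbiased_hit_rates(confusion)
print({c: round(arcsine_transform(v), 3) for c, v in hu.items()})
```

In this example, anger has a raw hit rate of 8/12 but was chosen 10 times in total, so its HU is (8/12) × (8/10), which penalizes the false alarms that a raw hit rate would ignore.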

2 × 3 × 5 repeated-measure analyses of variance (ANOVA) were conducted on the arcsine transformed HUs and mean reaction times with group as between-subjects factor (ADHD, HC) and modality (A, V, AV) and emotional category (neutral, happiness, eroticism, disgust, and anger) as within-subject factors. Non-sphericity was accounted for using the Greenhouse-Geisser correction (Geisser and Greenhouse 1958). Post-hoc paired t tests were Bonferroni-corrected for multiple comparisons. To assess potential attentional lapses during the experiment differences between arithmetic mean and median of reaction times were determined for each participant and compared between groups. In order to analyse performance changes across the experiment and if the two groups differ in terms of possible alterations between the three sessions, a 2 × 3 repeated-measure ANOVA was performed on the arcsine transformed HUs with group as between-subjects factor (ADHD, HC) and session (first, second, third) as within-subject factor. To investigate correlations between emotion recognition and depressive symptoms, verbal intelligence and basic attentional functions, Pearson and Spearman correlation analyses were conducted for normally and not normally distributed data, respectively. In case of significant correlations, the correlated variance was removed by regression analysis (single regression analysis for each parameter separately as well as multiple regression analysis for their conjoint effect) from the overall performance in emotion recognition (unbiased hit rate averaged across experimental conditions) and the resulting regression residuals were compared using one-tailed t tests in order to determine if group differences remain significant after accounting for differences in depressive symptoms, verbal intelligence, or basic attentional functions. 
Furthermore, we determined whether audiovisual emotion recognition correlates with SREIT values or inattentive and hyperactive/impulsive symptoms as measured by the ADHS-SB. Finally, audiovisual integration was estimated as the difference between the unbiased hit rate during audiovisual stimulation [HU(AV)] and the higher unbiased hit rate obtained for auditory and visual stimulation [max(HU(A), HU(V))] and compared between groups using a two-tailed t test.
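Three of the derived measures described in this section can be sketched compactly: the mean-minus-median index for attentional lapses, the removal of correlated variance by single regression, and the audiovisual integration gain. Function names are hypothetical. The regression is fitted here on whatever sample is passed in, and treating that as the pooled sample of both groups is our reading of the description rather than a stated detail.

```python
from statistics import mean, median


def lapse_index(reaction_times):
    """Arithmetic mean minus median of reaction times.

    Occasional very slow responses (lapses) pull the mean upwards more
    than the median, so larger values suggest more lapses.
    """
    return mean(reaction_times) - median(reaction_times)


def regression_residuals(y, x):
    """Residuals of y after ordinary least-squares regression on one predictor x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


def audiovisual_gain(hu_a, hu_v, hu_av):
    """HU(AV) minus the higher of the two unimodal unbiased hit rates."""
    return hu_av - max(hu_a, hu_v)


# Made-up values for illustration only.
print(lapse_index([1.0, 1.1, 1.2, 2.7]))
print(regression_residuals([2.0, 4.0, 6.0], [1.0, 2.0, 3.0]))
print(audiovisual_gain(0.5, 0.7, 0.85))
```

The residuals of the two groups would then be compared with a t test, so that any remaining group difference cannot be attributed to the predictor that was regressed out.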

Results

Demographic and psychometric data

Demographic and psychometric data of ADHD patients and healthy controls including statistical comparisons are presented in Table 1. The two groups did not differ regarding gender distribution [χ²(1) = 0.0; p = 0.89], age [U(52) = −0.9; p = 0.35], or educational level [U(52) = −0.4; p = 0.68]. Verbal intelligence scores, however, were significantly lower in ADHD patients (p < 0.01), reflecting lower academic success of ADHD patients in spite of a comparable number of educational years in both groups. Patients exhibited significantly higher self-rating scores than HC for inattention and hyperactivity/impulsivity as well as depressive symptoms (all p < 0.01), while significantly lower emotional intelligence scores (p < 0.01) were observed in the ADHD group than in HC. Neuropsychological testing revealed significantly more errors and misses in ADHD patients in the subtest “sustained attention” of the TAP and a higher variation of reaction times (alertness constancy) during the subtest “alertness” (both p < 0.01). Moreover, differences between reaction times without and with a cueing stimulus during the subtest “alertness” were larger in ADHD, representing a higher “phasic alertness”. Intrinsic alertness in the subtest “alertness” did not differ significantly between groups.

Unbiased hit rates

Mean unbiased hit rates depending on emotional category and sensory modality are shown in Fig. 1 for ADHD patients and HC (grey and white bars, respectively). The repeated-measures 2 × 3 × 5 ANOVA with group (ADHD, HC) as a between-subjects factor and emotion (neutral, happiness, eroticism, disgust, anger) as well as sensory modality (acoustic, visual, audiovisual) as within-subjects factors revealed a significant main effect of group [F(1, 52) = 16.6; p < 0.01]. This main effect of group reflected significantly lower unbiased hit rates (averaged across categories and modalities) in the ADHD group (66 ± 2 %) than in HC [76 ± 1 %; t(52) = 4.4; p < 0.01]. Furthermore, we found a significant main effect of modality [F(2, 104) = 195.6; p < 0.01]. Pairwise comparisons with Bonferroni correction revealed that emotion recognition was easier during bimodal than during unimodal acoustic or visual presentation, and easier during visual than during acoustic presentation (all p < 0.01). There was also a significant main effect of emotion [F(4, 208) = 19.6; p < 0.01] which was due to significantly lower unbiased hit rates for neutral and angry stimuli than for happy, alluring, or disgusted cues (all p < 0.01). In agreement with the results of our pre-study, HC achieved similar unbiased hit rates across categories (90.6–96.2 %) during audiovisual stimulation. During unimodal presentation a larger variability across categories was observed with unbiased hit rates ranging from 76.3 to 88.3 % during visual and 52.4–75.1 % during acoustic stimulation. In line with these differences across modalities, we found a significant interaction between sensory modality and category [F(8, 416) = 4.3; p < 0.01]. Post-hoc t tests comparing recognition rates separately within the three modalities revealed that alluring and disgusted voices were better recognized than all other voice categories (all p < 0.05), while happiness was the emotion which was best recognized in unimodal visual stimuli (all p < 0.05).
Importantly, however, these effects occurred to a similar extent in both groups and consequently, there was no significant interaction between group and modality [F(2, 104) = 0.8; p = 0.42], group and emotion [F(4, 208) = 0.5; p = 0.65], or group and modality and emotion [F(8, 416) = 0.1; p = 0.59]. A second 2 × 3 repeated-measure ANOVA with session (first, second, third) as within-subject and group (ADHD, HC) as between-subjects factor again revealed a significant main effect of group [F(1, 52) = 15.9; p < 0.01] and a significant main effect of session [F(2, 104) = 29.7; p < 0.01]. Post hoc t tests showed that the overall performance for the first (average performance ± standard error: 74 ± 3 %) and second session (75 ± 3 %) was lower than for the last one [89 ± 3 %; both t(53) > 6.0, both p < 0.01]. There was no significant interaction between group and session [F(2, 104) = 0.6; p = 0.56].
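The degrees of freedom reported for the ANOVAs follow directly from the design (54 participants, two groups, three modalities, five emotional categories). A minimal sketch of that arithmetic is given below. The function name is hypothetical, and the values are the uncorrected degrees of freedom, i.e. before any Greenhouse-Geisser adjustment.

```python
from itertools import combinations
from math import prod


def mixed_anova_dfs(n_subjects, n_groups, within_levels):
    """Uncorrected (effect df, error df) pairs for a mixed-design ANOVA.

    within_levels maps each within-subject factor name to its number of
    levels, e.g. {"modality": 3, "emotion": 5}.
    """
    df_error_between = n_subjects - n_groups
    dfs = {"group": (n_groups - 1, df_error_between)}
    names = list(within_levels)
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            df_effect = prod(within_levels[name] - 1 for name in combo)
            df_error = df_effect * df_error_between
            label = " x ".join(combo)
            dfs[label] = (df_effect, df_error)
            dfs["group x " + label] = ((n_groups - 1) * df_effect, df_error)
    return dfs


for effect, df in mixed_anova_dfs(54, 2, {"modality": 3, "emotion": 5}).items():
    print(effect, df)
```

For the 2 × 3 × 5 design this gives (1, 52) for group, (2, 104) for modality, (4, 208) for emotion, and (8, 416) for the modality by emotion interaction, matching the values reported above.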

Fig. 1 Performance in emotion recognition (unbiased hit rate ± standard error of the mean) of healthy controls (white bars) and patients (grey bars) for neutral (N), happy (H), erotic (E), disgusted (D), and angry (A) stimuli presented in unimodal acoustic (left panel), unimodal visual (center panel), and audiovisual (right panel) modality

Reaction times

The repeated-measure 2 × 3 × 5 ANOVA on reaction times showed no significant main effect of group [F(1, 52) = 1.9; p = 0.18], but a significant main effect of modality was found [F(2, 104) = 28.2, p < 0.01]. Pairwise comparisons with Bonferroni correction revealed that participants reacted faster during bimodal (1.1 ± 0.05 s) and unimodal visual (1.1 ± 0.04 s) than during unimodal acoustic presentation (1.3 ± 0.04 s, both p < 0.01). There was also a significant main effect of emotion [F(4, 208) = 7.6; p < 0.01] due to significantly shorter reaction times for happy cues than for angry, disgusted, or neutral stimuli (all p < 0.03). Mirroring the results of the ANOVA on unbiased hit rates, a significant interaction between modality and category was revealed for reaction times [F(8, 416) = 11.2; p < 0.01]. There was no significant interaction between group and emotion [F(4, 208) = 1.9; p > 0.12], group and modality [F(2, 104) = 3.0; p > 0.05] or group, modality and emotion [F(8, 416) = 0.6 respective 0.7; both p > 0.68]. A 2 × 3 repeated-measure ANOVA on mean reaction time with session as within-subject and group as between-subjects factor revealed a significant main effect of session [F(2, 104) = 6.5; p < 0.01]. Post hoc t tests showed that reaction times during the first session (average reaction time ± standard error: 1.2 ± 0.04 s) were longer than during the second (1.1 ± 0.03 s) and third session (1.1 ± 0.04 s, both p < 0.01). There was neither a significant main effect of group [F(1, 52) = 1.9; p = 0.17] nor a significant interaction between group and session [F(2, 104) = 0.5; p = 0.55]. t tests for independent samples did not show significant differences between groups for the differences between arithmetic mean and median of reaction times [t(52) < 0.8; p > 0.40].

Correlation analyses

Bivariate Pearson correlation analysis revealed a significant positive correlation (r = 0.33; p = 0.01) between verbal intelligence quotient and mean unbiased hit rates (averaged across sensory modalities and emotional categories). In the same way, significant inverse relationships were found between the latter and deficits in sustained attention (r = −0.43; p < 0.01) as well as alertness constancy (r = −0.46; p < 0.01). No such correlation was found for performance in emotion perception and depressive symptoms (r = −0.09; p = 0.45) or phasic alertness (r = −0.13; p = 0.33). After regressing out the correlated variances of verbal intelligence, sustained attention, and alertness constancy from the overall performance in affect recognition, group differences in emotion recognition were still significant [all t(52) > 2.0; all p < 0.05].

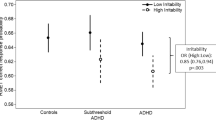

Within the group of ADHD patients, SREIT scores correlated significantly with audiovisual emotion recognition (r = 0.38; p = 0.04). No such significant correlation was found between the latter and the extent of inattentive (r = 0.30; p = 0.16) and hyperactive-impulsive symptoms (r = 0.09; p = 0.60). Within the group of HC, no significant correlations with SREIT (r = 0.17; p = 0.36), inattentive (r = −0.04; p = 0.85), or hyperactivity/impulsivity symptoms (r = −0.10; p = 0.59) were found. The difference between the mean HU during audiovisual presentation and the higher HU obtained during unimodal presentations [max(HU(A), HU(V))] was significantly larger [t(52) = 2.2; p = 0.04] in ADHD patients (15 ± 2 %) than in HC (11 ± 1 %).

Discussion

The current study investigated recognition of emotions in voices, faces, and their combination in patients with ADHD of combined subtype and HC balanced for gender, age, and education. We aimed to extend previous findings on affect recognition with respect to the following issues: First, we aimed to overcome limitations of previous studies and clarify whether deficits regarding specific emotions can be identified if a stimulus set is used which is balanced for task difficulty across emotional categories or whether adult ADHD patients display a more general impairment that occurs irrespective of the expressed emotion and is also present for neutral stimuli. Here, we found a stimulus-independent deficit of ADHD patients in an emotion recognition task, independent of the expressed category or valence. Second, we examined to which extent deficits in affect recognition can be explained by general differences between ADHD patients and HC in verbal intelligence and attention levels as obtained during neuropsychological testing. Third, we wanted to clarify whether audiovisual integration can partially compensate for deficits in affect recognition or whether this process is additionally compromised in adult ADHD.

Our results indicate lower performance of ADHD patients which was observed for all five categories and all sensory modalities. Thus, our findings clearly argue for a general deficit in encoding of emotional cues which occurs independent of emotion type, valence or modality. A possible explanation could be a disturbance in earlier stages of emotion perception which would be in line with previous suggestions including e.g. altered viewing patterns of facial emotional cues as observed in children with ADHD (Serrano et al. 2015). However, the latter has been shown to be differently pronounced for the type of emotion and would not account for the deficits in prosody perception. Our results are in line with experiments showing general deficits in emotion recognition (Cadesky et al. 2000; Corbett and Glidden 2000), but inconsistent with other studies indicating specific impairments for certain negative emotions (Sinzig et al. 2008; Singh et al. 1998; Aspan et al. 2014; Miller et al. 2011; Pelc et al. 2006; Rapport et al. 2002). However, it should be noted that most of the aforementioned studies were conducted in childhood ADHD and it cannot be excluded that deficits regarding specific emotions can only be found in children, but not adults with ADHD. In some of these previous studies, task difficulty varied considerably across emotions with nearly perfect recognition of some categories (usually including happiness) while other emotions were identified with much lower accuracy (or even at chance level in ADHD patients and controls). We aimed to overcome this problem by choosing the same number of positive and negative emotions and balanced our stimulus set for recognition rates across categories. 
We selected our stimuli based on identification rates obtained in an independent sample of HC during audiovisual presentation which presents the ecologically most valid signal encountered in many daily situations while affect recognition based on only acoustic or visual information occurs much less frequently (e.g. telephone). It should be noted that balancing for affect recognition during audiovisual presentation does not automatically result in similar identification rates of emotions of unimodal acoustic or visual stimuli as vocal and facial cues contribute differently depending on the expressed emotion (Lambrecht et al. 2012, 2014). Such differential contributions explain the significant main effect of category and the interaction of category and modality in our results. However, these effects occurred similarly in ADHD patients and HC and thus did not influence comparisons between the two groups. Significantly increased performance in the last session and faster reaction times during the last two sessions in both groups indicate that there was a learning effect across the experiment. The lack of significant interactions between group and session shows that this effect was not pronounced differently for the two groups. Analysis of reaction times did not reveal a significant main effect of group or any interactions between group and the other factors. There were also no significant differences between the two groups regarding the difference between the arithmetic mean and median of reaction times as indicator of potential attentional lapses. These findings argue for a deficit in emotion recognition in ADHD which cannot be explained by confounds due to decreases in attention during the task. To further substantiate this line of argumentation we additionally included classical neuropsychological tests to measure attention parameters. In line with previous results (Sinzig et al. 
2008), deficits in emotion recognition in ADHD correlated with neuropsychological parameters with variance explanation of 21 % for sustained attention, 18 % for alertness constancy, and 11 % for verbal intelligence. Removal of this correlated variance for each parameter separately or in combination, however, did not extinguish the difference in task performance between ADHD patients and HC indicating that such differences in neuropsychological functions contribute to but are not the sole explanation for deficits in emotion recognition. ADHD patients were characterized by slightly higher BDI scores than HC. No significant effect of depressive symptoms on emotion recognition, however, was found. The most likely explanation for this negative result is the explicit exclusion of participants with major depressive disorder as we aimed to recruit patients without comorbid mood disorders. This represents a strength of the current study, as the recruitment of patients without considerable comorbidities allowed us to assess the specific ADHD-related deficits in emotion recognition rather than secondary effects due to other disorders. As ADHD without comorbidities is rather the exception than the rule in clinical populations, however, it might be also interesting to assess the contributions of comorbid disorders which might further deteriorate affect recognition. Thus, future studies should address this issue by also including ADHD patients with depression or other important comorbid disorders (e.g. substance abuse) and quantify their impact on affect recognition by means of correlation analysis.
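The logic of removing correlated variance before comparing groups can be illustrated with a minimal sketch. It assumes a simple linear regression of recognition accuracy on a single neuropsychological score, fitted across all participants, followed by a group comparison of the residuals; the analysis reported here may have been set up differently. All data values and names (`fit_line`, `residualize`, `r_squared`, `attention`, `accuracy`) are hypothetical and chosen for illustration only. As a point of orientation, an explained variance of 21 % corresponds to a correlation of roughly |r| = 0.46.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

def residualize(x, y):
    """Return y after removing the part linearly predicted by x."""
    intercept, slope = fit_line(x, y)
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

def r_squared(x, y):
    """Share of variance in y explained by x (squared Pearson correlation)."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy ** 2 / (sxx * syy)

# Hypothetical values, NOT study data: one attention score and one
# recognition accuracy per participant; the first five are "patients".
attention = [40, 45, 50, 55, 60, 50, 55, 60, 65, 70]
accuracy = [0.55, 0.60, 0.58, 0.66, 0.65, 0.70, 0.72, 0.78, 0.76, 0.84]
is_adhd = [True] * 5 + [False] * 5

# Remove the variance shared with attention, then compare group means.
residuals = residualize(attention, accuracy)
adhd_mean = mean(r for r, g in zip(residuals, is_adhd) if g)
hc_mean = mean(r for r, g in zip(residuals, is_adhd) if not g)
group_gap_after_adjustment = hc_mean - adhd_mean
```

With these invented numbers the adjusted group difference is smaller than the raw difference but remains positive, which mirrors the structure of the argument, not its actual magnitude.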

Performance in audiovisual affect recognition significantly correlated with self-rated emotional intelligence scores, indicating that adult ADHD patients are well aware of their deficit. This concurs with previous results showing correlations between affect recognition deficits and pathologically increased intensity of experienced emotions in adult ADHD (Rapport et al. 2002). These findings, however, contrast with results obtained in childhood ADHD, where ratings of task difficulty during emotional face recognition suggested a lack of awareness of impaired emotion recognition (Pelc et al. 2006) in spite of the fact that altered emotion processing correlated with parents’ ratings of emotional lability (Williams et al. 2008) and self-assessments of interpersonal problems (Pelc et al. 2006). These results obtained by us and others (Rapport et al. 2002) in adult ADHD on the one hand, and in children with ADHD on the other hand (Pelc et al. 2006; Williams et al. 2008), point to an increasing awareness of such deficits in the course of the disease.

No such significant relationship was found between performance in affect recognition and self-rated inattention or hyperactive-impulsive symptoms. At first glance, this seems to contradict our neuropsychological test results, which clearly showed a correlation between basic attentional functions and performance in emotion identification, as well as data obtained in children with ADHD indicating correlations between impaired affect recognition and parent ratings of inattentive as well as hyperactive-impulsive behavior (Williams et al. 2008; Boakes et al. 2008; Aspan et al. 2014). The most likely explanation for this finding is that inattentive symptoms assessed by the ADHS-SB cover much broader areas of daily life, including organizational problems. It is also possible that correlations between hyperactive-impulsive symptoms and social dysfunctioning diminish with age (Zoromski et al. 2015) and were therefore absent in our study on adults. Furthermore, it is possible that adult ADHD patients can better discriminate whether problems arise from the ADHD core symptoms or from additional deficits in social cognition.

Finally, our data suggest that participants with ADHD can partly compensate for their deficits in emotion recognition in unimodal stimuli by means of audiovisual integration of emotional cues. Our stimulus selection aimed to achieve a high recognizability of all employed emotional categories in the audiovisual condition, which also guaranteed sufficient emotion recognition in the unimodal condition and helped to avoid floor effects. This methodological approach, however, can result in ceiling effects (i.e. the unbiased hit rate cannot exceed 100 %). As only four of 31 healthy controls achieved an unbiased hit rate of 100 %, a ceiling effect seems to be an improbable explanation for the larger gains of ADHD patients during audiovisual presentation. So far, little is known about audiovisual integration processes in ADHD, but a recent study observed that ADHD patients benefitted less than HC from concurrent presentation of visual stimuli by lip-reading during (non-emotional) speech comprehension (Michalek et al. 2014). These opposing findings for emotional and non-emotional stimulus materials point to distinct mechanisms of audiovisual integration in ADHD.
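For readers less familiar with the measure, the unbiased hit rate (Wagner 1993) for one category is the squared number of correct responses divided by the product of the number of stimuli of that category and the number of times that response was chosen. It therefore reaches 100 % only when a category is always recognized and never chosen falsely. The sketch below illustrates the computation; the confusion matrix is invented for illustration and is not data from this study, and the function name `unbiased_hit_rates` is hypothetical.

```python
def unbiased_hit_rates(confusion):
    """Unbiased hit rate (Wagner 1993) per category.

    confusion[i][j] is the number of stimuli of category i
    that were answered with response category j.
    """
    n = len(confusion)
    row_totals = [sum(row) for row in confusion]  # stimuli per category
    col_totals = [sum(confusion[i][j] for i in range(n)) for j in range(n)]  # uses of each response
    rates = []
    for k in range(n):
        denom = row_totals[k] * col_totals[k]
        # Squared hits over (stimuli shown) * (times this response was given).
        rates.append(confusion[k][k] ** 2 / denom if denom else 0.0)
    return rates

categories = ["neutral", "happiness", "eroticism", "disgust", "anger"]

# Hypothetical responses of one participant, 10 stimuli per category.
confusion = [
    [8, 1, 0, 1, 0],   # neutral stimuli
    [1, 9, 0, 0, 0],   # happiness stimuli
    [0, 2, 8, 0, 0],   # eroticism stimuli
    [1, 0, 0, 7, 2],   # disgust stimuli
    [0, 0, 0, 0, 10],  # anger stimuli
]

hu = dict(zip(categories, unbiased_hit_rates(confusion)))
```

In this invented example every anger stimulus is recognized, yet the unbiased hit rate for anger stays below 100 % because "anger" was also chosen twice for disgust stimuli.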

Taken together, our data indicate general deficits in affect recognition of unimodal stimuli which are partially compensated by bimodal presentation. These results also provide the groundwork for hypotheses in neuroimaging studies and point to altered neural activity within sensory-specific cortices for face and voice processing, such as the fusiform face area (Vuilleumier et al. 2001) or emotional voice area (Ethofer et al. 2012), rather than within audiovisual integration areas in the superior temporal sulcus (Kreifelts et al. 2007; Ethofer et al. 2013).

References

American Psychiatric Association (2000) Diagnostic and statistical manual of mental disorders, 4th edn, text revision (DSM-IV-TR). American Psychiatric Association. doi:10.1176/appi.books.9780890423349

Aspan N, Bozsik C, Gadoros J, Nagy P, Inantsy-Pap J, Vida P, Halasz J (2014) Emotion recognition pattern in adolescent boys with attention-deficit/hyperactivity disorder. Biomed Res Int 2014:761340

Barkley RA (1997) Behavioral inhibition, sustained attention, and executive functions: constructing a unifying theory of ADHD. Psychol Bull 121(1):65–94

Barkley RA, Murphy KR (2010) Deficient emotional self-regulation in adults with attention-deficit/hyperactivity disorder: the relative contributions of emotional impulsiveness and ADHD symptoms to adaptive impairments in major life activities. J ADHD Relat Disord 1(4):5–28

Barkley RA, Fischer M, Smallish L, Fletcher K (2006) Young adult outcome of hyperactive children: adaptive functioning in major life activities. J Am Acad Child Adolesc Psychiatry 45(2):192–202

Beck AT, Steer RA (1987) Beck depression inventory: manual. The Psychological Corporation, San Antonio

Beyer von Morgenstern S, Becker I, Sinzig J (2014) Improvement of facial affect recognition in children and adolescents with attention-deficit/hyperactivity disorder under methylphenidate. Acta Neuropsychiatrica 26(4):202–208

Blaha L, Pater W (1979) Zur Stabilität und Wiederholbarkeit (Reliabilität) des Intelligenz-Kurztestes MWT-B bei Langzeithospitalisierten. Der Nervenarzt 50(3):196–198

Boakes J, Chapman E, Houghton S, West J (2008) Facial affect interpretation in boys with attention deficit/hyperactivity disorder. Child Neuropsychol 14(1):82–96

Buitelaar JK, van der Wees M, Swaab-Barneveld H, van der Gaag RJ (1999) Verbal memory and Performance IQ predict theory of mind and emotion recognition ability in children with autistic spectrum disorders and in psychiatric control children. J Child Psychol Psychiatry 40(6):869–881

Bunford N, Evans SW, Langberg JM (2014) Emotion dysregulation is associated with social impairment among young adolescents with ADHD. J Atten Disord 18:1–17

Cadesky EB, Mota VL, Schachar RJ (2000) Beyond words: how do children with ADHD and/or conduct problems process nonverbal information about affect? J Am Acad Child Adolesc Psychiatry 39(9):1160–1167

Chronaki G, Garner M, Hadwin JA, Thompson MJ, Chin CY, Sonuga-Barke EJ (2015) Emotion-recognition abilities and behavior problem dimensions in preschoolers: evidence for a specific role for childhood hyperactivity. Child Neuropsychol 21(1):25–40

Corbett B, Glidden H (2000) Processing affective stimuli in children with attention-deficit hyperactivity disorder. Child Neuropsychol 6(2):144–155. doi:10.1076/chin.6.2.144.7056

Ethofer T, Bretscher J, Gschwind M, Kreifelts B, Wildgruber D, Vuilleumier P (2012) Emotional voice areas: anatomic location, functional properties, and structural connections revealed by combined fMRI/DTI. Cereb Cortex 22(1):191–200

Ethofer T, Bretscher J, Wiethoff S, Bisch J, Schlipf S, Wildgruber D, Kreifelts B (2013) Functional responses and structural connections of cortical areas for processing faces and voices in the superior temporal sulcus. Neuroimage 76:45–56

Geisser S, Greenhouse SW (1958) An extension of Box’s results on the use of the F distribution in multivariate analysis. Ann Math Statist 29:885–891

Grabemann M, Mette C, Zimmermann M, Heinrich V, Uekermann J, Wiltfang J, Abdel-Hamid M, Zepf FD, Kis B (2013) No clear effects of acute tryptophan depletion on processing affective prosody in male adults with ADHD. Acta Psychiatr Scand 128(2):142–148

Kochel A, Schongassner F, Feierl-Gsodam S, Schienle A (2015) Processing of affective prosody in boys suffering from attention deficit hyperactivity disorder: a near-infrared spectroscopy study. Soc Neurosci 10(6):583–591

Kreifelts B, Ethofer T, Grodd W, Erb M, Wildgruber D (2007) Audiovisual integration of emotional signals in voice and face: an event-related fMRI study. Neuroimage 37(4):1445–1456

Lambrecht L, Kreifelts B, Wildgruber D (2012) Age-related decrease in recognition of emotional facial and prosodic expressions. Emotion (Washington, DC) 12(3):529–539

Lambrecht L, Kreifelts B, Wildgruber D (2014) Gender differences in emotion recognition: impact of sensory modality and emotional category. Cogn Emot 28(3):452–469

Lehrl S (1977) Mehrfachwahl-Wortschatz-Intelligenztest: MWT-B. Perimed-Verlag Straube, Erlangen

Lehrl S, Triebig G, Fischer B (1995) Multiple choice vocabulary test MWT as a valid and short test to estimate premorbid intelligence. Acta Neurologica Scandinavica 91(5):335–345

Michalek AM, Watson SM, Ash I, Ringleb S, Raymer A (2014) Effects of noise and audiovisual cues on speech processing in adults with and without ADHD. Int J Audiol 53(3):145–152

Miller M, Hanford RB, Fassbender C, Duke M, Schweitzer JB (2011) Affect recognition in adults with ADHD. J Atten Disord 15(6):452–460

Murphy K, Barkley RA (1996) Attention deficit hyperactivity disorder adults: comorbidities and adaptive impairments. Compr Psychiatry 37(6):393–401

Noordermeer SDS, Luman M, Buitelaar JK, Hartman CA, Hoekstra PJ, Franke B, Faraone SV, Heslenfeld DJ, Oosterlaan J (2015) Neurocognitive deficits in attention-deficit/hyperactivity disorder with and without comorbid oppositional defiant disorder. J Atten Disord. doi:10.1177/1087054715606216

Oerlemans A, van der Meer JJ, van Steijn D, de Ruiter S, de Bruijn YE, de Sonneville LJ, Buitelaar J, Rommelse NJ (2014) Recognition of facial emotion and affective prosody in children with ASD (+ADHD) and their unaffected siblings. Eur Child Adolesc Psychiatry 23(5):257–271

Oldfield RC (1971) The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9(1):97–113

Pelc K, Kornreich C, Foisy M-L, Dan B (2006) Recognition of emotional facial expressions in attention-deficit hyperactivity disorder. Pediatr Neurol 35(2):93–97

Rapport LJ, Friedman SL, Tzelepis A, Van Voorhis A (2002) Experienced emotion and affect recognition in adult attention-deficit hyperactivity disorder. Neuropsychology 16(1):102–110

Retz-Junginger P, Retz W, Blocher D, Stieglitz RD, Georg T, Supprian T, Wender PH, Rösler M (2003) Reliabilität und Validität der Wender-Utah-Rating-Scale-Kurzform. Der Nervenarzt 74(11):987–993

Rösler M, Retz W, Retz-Junginger P, Thome J, Supprian T, Nissen T, Stieglitz RD, Blocher D, Hengesch G, Trott GE (2004) Instrumente zur Diagnostik der Aufmerksamkeitsdefizit-/Hyperaktivitätsstörung (ADHS) im Erwachsenenalter. Der Nervenarzt 75(9):888–895

Salovey P, Mayer JD, Goldman SL, Turvey C, Palfai TP (1995) Emotional attention, clarity, and repair: exploring emotional intelligence using the trait meta-mood scale. In: Emotion, disclosure, and health. American Psychological Association, Washington, DC, US, pp 125–154

Schlipf S, Batra A, Walter G, Zeep C, Wildgruber D, Fallgatter A, Ethofer T (2013) Judgment of emotional information expressed by prosody and semantics in patients with unipolar depression. Front Psychol 4:461

Schmidt S, Schussler G, Petermann F (2012) ADHD across the lifespan—an update on research and practice. Z Psychosom Med Psychother 58(3):236–256

Schutte NS, Malouff JM, Hall LE, Haggerty DJ, Cooper JT, Golden CJ, Dornheim L (1998) Development and validation of a measure of emotional intelligence. Personal Individ Differ 25(2):167–177

Schwenck C, Schneider T, Schreckenbach J, Zenglein Y, Gensthaler A, Taurines R, Freitag CM, Schneider W, Romanos M (2013) Emotion recognition in children and adolescents with attention-deficit/hyperactivity disorder (ADHD). Atten Defic Hyperact Disord 5(3):295–302

Serrano VJ, Owens JS, Hallowell B (2015) Where children with ADHD direct visual attention during emotion knowledge tasks: relationships to accuracy, response time, and ADHD symptoms. J Atten Disord. doi:10.1177/1087054715593632

Shapiro EG, Hughes SJ, August GJ, Bloomquist ML (1993) Processing of emotional information in children with attention-deficit hyperactivity disorder. Dev Neuropsychol 9(3–4):207–224

Shaw P, Stringaris A, Nigg J, Leibenluft E (2014) Emotion dysregulation in attention deficit hyperactivity disorder. Am J Psychiatry 171(3):276–293

Singh SD, Ellis CR, Winton ASW, Singh NN, Leung JP, Oswald DP (1998) Recognition of facial expressions of emotion by children with attention-deficit hyperactivity disorder. Behav Modif 22(2):128–142

Sinzig J, Morsch D, Lehmkuhl G (2008) Do hyperactivity, impulsivity and inattention have an impact on the ability of facial affect recognition in children with autism and ADHD? Eur Child Adolesc Psychiatry 17(2):63–72

Sjowall D, Roth L, Lindqvist S, Thorell LB (2013) Multiple deficits in ADHD: executive dysfunction, delay aversion, reaction time variability, and emotional deficits. J Child Psychol Psychiatry 54(6):619–627

Sobanski E, Alm B (2004) Attention deficit hyperactivity disorder in adults. An overview. Nervenarzt 75(7):697–715

Sobanski E, Bruggemann D, Alm B, Kern S, Deschner M, Schubert T, Philipsen A, Rietschel M (2007) Psychiatric comorbidity and functional impairment in a clinically referred sample of adults with attention-deficit/hyperactivity disorder (ADHD). Eur Arch Psychiatry Clin Neurosci 257(7):371–377

Uekermann J, Kraemer M, Abdel-Hamid M, Schimmelmann BG, Hebebrand J, Daum I, Wiltfang J, Kis B (2010) Social cognition in attention-deficit hyperactivity disorder (ADHD). Neurosci Biobehav Rev 34(5):734–743

Vuilleumier P, Armony JL, Driver J, Dolan RJ (2001) Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron 30(3):829–841

Wagner HL (1993) On measuring performance in category judgment studies of nonverbal behavior. J Nonverbal Behav 17(1):3–28

Wender PH, Wolf LE, Wasserstein J (2001) Adults with ADHD. An overview. Ann N Y Acad Sci 931:1–16

Williams LM, Hermens DF, Palmer D, Kohn M, Clarke S, Keage H, Clark CR, Gordon E (2008) Misinterpreting emotional expressions in attention-deficit/hyperactivity disorder: evidence for a neural marker and stimulant effects. Biol Psychiatry 63(10):917–926

Yuill N, Lyon J (2007) Selective difficulty in recognising facial expressions of emotion in boys with ADHD. General performance impairments or specific problems in social cognition? Eur Child Adolesc Psychiatry 16(6):398–404

Zimmermann P, Fimm B (2009) Testbatterie zur Aufmerksamkeitsprüfung (TAP) Version 2.2. Psytest, Herzogenrath

Zoromski A, Owens J, Evans S, Brady C (2015) Identifying ADHD symptoms most associated with impairment in early childhood, middle childhood, and adolescence using teacher report. J Abnorm Child Psychol 43(7):1243–1255

Acknowledgments

This study was supported by the University of Tübingen (Fortüne 2000-0-0) and the Deutsche Forschungsgemeinschaft (DFG ET 112/5-1).

Cite this article

Bisch, J., Kreifelts, B., Bretscher, J. et al. Emotion perception in adult attention-deficit hyperactivity disorder. J Neural Transm 123, 961–970 (2016). https://doi.org/10.1007/s00702-016-1513-x
