Abstract

The shear strength criterion for rock discontinuities is of great importance to most geoengineering analyses and designs. This paper aims to develop criteria and to carry out a comparative study of statistical, lazy, and ensemble learning methods for rapidly predicting the shear strength of rock discontinuities. To this end, simple linear regression (SLR), multiple linear regression (MLR), least median squared regression (LMSR), isotonic regression (IR), pace regression (PR), k-nearest neighbors (kNN), and extreme gradient boosting (XGBoost) learning models are developed using experimental data compiled from direct shear tests, based on variables commonly used in existing criteria. Statistical indices (RMSE, \({R}^{2}\), and MAE) and comparative analyses indicate that the adopted XGBoost model has better generalization performance than the other models. However, the linear equations derived with MLR and PR, with \({R}^{2}\) = 0.98 and 0.96 for the training and testing datasets, respectively, are also promising new practical criteria. The interpretability and explainability of the proposed XGBoost model are demonstrated using feature importance ranking, partial dependence plots (PDPs), feature interaction, and local interpretable model-agnostic explanations (LIME) techniques. A Taylor diagram is also included to substantiate the capability of the developed data-driven surrogate models. Moreover, the proposed models provide satisfactory performance and results comparable to those of existing prediction models. The findings of this study will assist geoengineers in estimating the shear strength of rock discontinuities.

Highlights

- Criteria developed to predict shear strength of rock discontinuities using statistical, lazy, and ensemble learning methods.
- XGBoost showed best generalization performance, while MLR and PR-based equations were also promising practical criteria with high \({R}^{2}\) values.
- XGBoost model's interpretability and explainability shown using various techniques.
- Proposed models provide satisfactory performance and comparable results to existing prediction models.


1 Introduction

Rock mass behavior and, therefore, the stability of slopes and underground cavities are strongly affected by the shear strength of the joints in the rock mass. Precisely predicting the shear strength of rock joints is not straightforward because it depends on a variety of complex variables. Various methods, such as empirical (Patton 1966; Jaeger 1971; Barton 1973; Barton and Choubey 1977; Maksimovic 1996; Kulatilake et al. 1995; Zhao 1997; Grasselli 2001; Tatone 2009; Xia et al. 2014; Yang et al. 2016; Tang et al. 2016; Tian et al. 2018), semi-theoretical (Ladanyi and Archambault 1969; Seidel and Haberfield 1995; Johansson and Stille 2014), and theoretical (Lanaro and Stephansson 2003), have been applied over the years to describe the shear strength of rock discontinuities. Comparative investigations of shear strength models of rock discontinuities have also been reported in the literature (e.g., Singh and Basu 2018; Tian et al. 2018; Li et al. 2020).

With the processing capability of current computers, conventional regression methods can be improved to better capture and represent the multi-variable, nonlinear, and complex constitutive responses of systems such as the shear behavior of rock discontinuities. Data-driven methods are widely used in geoengineering (Fathipour-Azar and Torabi 2014; Fathipour-Azar et al. 2017, 2020; Zhang et al. 2020a, b, c; Fathipour-Azar 2021a, b, 2022a, b, c, d, e, f, 2023). Based on a literature review, previously published machine learning methods for predicting the shear strength of rock discontinuities are summarized in Table 1.

The shear strength of a joint varies depending on parameters such as rock type, joint material, normal stress level, and morphology characteristics (e.g., Tang et al. 2016; Wang and Li 2018; Xia et al. 2019). Although some current machine-learning-based surrogate models and criteria are well suited to the shear behavior of rock joints, there is still a need for a simple, readily adoptable model that takes into consideration all the influential factors that affect the nonlinear and complicated nature of joint strength. Moreover, there is a need to use visualization tools to explain and interpret the black-box models developed by these techniques. Notably, Fathipour-Azar (2022a) proposed an effective shear strength criterion (\({R}^{2}\) = 0.98) for rock mechanics applications with fewer input variables using the interpretable multivariate adaptive regression splines (MARS) method.

The purpose of this study is to construct shear strength criteria for rock discontinuities based on data-driven models using the simple linear regression (SLR), multiple linear regression (MLR), least median squared regression (LMSR), isotonic regression (IR), pace regression (PR), k-nearest neighbors (kNN), and extreme gradient boosting (XGBoost) learning models. The applicability of the developed models is validated against measured shear strength data of rock discontinuities from earlier studies, previous machine-learning-based models, and several well-known shear strength criteria. To do this, \({R}^{2}\) (coefficient of determination), root mean square error (RMSE), and mean absolute error (MAE) are used to assess the validity of the predictive models, and a sensitivity analysis is carried out on the established XGBoost model to analyze the relationship between shear strength and the influencing factors. This study can serve as a comparative analysis of different surrogate-based methodologies for estimating the shear strength of rock discontinuities.

2 Data-Driven Modeling Methodologies

Data-driven surrogate-based methodologies that have been used to construct the failure criteria and predict the shear strength of rock discontinuities are briefly outlined in this section.

2.1 Simple Linear Regression (SLR)

Linear regression is a method of mathematically modeling the relationship between a response variable (also called the outcome or dependent variable) and one or more input variables (also called the predictor, explanatory, or independent variables). A linear regression model with a satisfactory fit may be used to predict future values of the output variable. Simple linear regression (SLR) selects the single feature that results in the lowest squared error. For a single explanatory variable, the SLR can be written as:

\[y={\beta }_{0}+{\beta }_{1}x+\varepsilon\]

where \(y\) is the response variable, \(x\) is the explanatory variable, \({\beta }_{0}\) and \({\beta }_{1}\) are the regression parameters to be estimated, and \(\varepsilon\) is the error term.

2.2 Multiple Linear Regression (MLR)

Multiple linear regression (MLR) is the approach used when there is more than one predictor variable. In this study, MLR is based on standard least squares linear regression. To handle ill-posed problems and control potential over-fitting, a regularized version of MLR, namely ridge regression, is used (Witten and Frank 2005). In the linear regression model, the least squares method determines the estimates \({b}_{0}\) and \({b}_{1}\) of \({\beta }_{0}\) and \({\beta }_{1}\) such that the sum of squared distances between the measured responses \({y}_{i}\) and the fitted values \(\widehat{y}={b}_{0}+{b}_{1}{x}_{i}\) is the lowest among all feasible regression coefficients.
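
As a hedged illustration of the ridge-regularized fit described above, the following uses matrix notation that is an assumption introduced here and is not taken from the original text: \(X\) is the matrix of predictor values with a leading column of ones, \(y\) is the vector of measured responses, and \(\lambda\) is the ridge parameter. A common form of the ridge estimate is:

\[\widehat{\beta }=\underset{\beta }{\mathrm{arg\,min}}\;{\Vert y-X\beta \Vert }^{2}+\lambda {\Vert \beta \Vert }^{2}={\left({X}^{T}X+\lambda I\right)}^{-1}{X}^{T}y\]

With \(\lambda =0\) this reduces to ordinary least squares, while any positive \(\lambda\) keeps \({X}^{T}X+\lambda I\) invertible even when predictors are nearly collinear. Whether the intercept is penalized depends on the software implementation, and this sketch does not claim to reproduce the one used in the study.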

2.3 Least Median Squared Regression (LMSR)

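Least median squared regression (LMSR) builds on MLR. Least squares regression functions are fitted to random subsamples of the data, and the final model is the least squares regression with the lowest median squared error (Rousseeuw 1984), that is:

\[\underset{\beta }{\mathrm{min}}\;\underset{i}{\mathrm{med}}\;{r}_{i}^{2}\]

where \({r}_{i}\) is the residual of the \(i\)th observation with respect to the fitted regression.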
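
The subsampling idea can be sketched in a few lines of Python. This is a minimal illustration for a single predictor, not the implementation used in the study; the function names, subsample size, number of trials, and seed are all assumptions chosen for the example.

```python
import random
import statistics


def ols_line(xs, ys):
    """Ordinary least squares fit y = b0 + b1 * x for one predictor."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    return mean_y - b1 * mean_x, b1


def lms_line(xs, ys, subsample_size=3, trials=200, seed=0):
    """Keep the subsample fit with the lowest median squared residual.

    Hypothetical helper: each trial fits a least squares line to a random
    subsample and scores it on the full dataset.
    """
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        idx = rng.sample(range(len(xs)), subsample_size)
        sub_x = [xs[i] for i in idx]
        if len(set(sub_x)) < 2:
            continue  # a line cannot be fitted to identical x values
        b0, b1 = ols_line(sub_x, [ys[i] for i in idx])
        med = statistics.median((y - b0 - b1 * x) ** 2 for x, y in zip(xs, ys))
        if best is None or med < best[0]:
            best = (med, b0, b1)
    return best  # (median squared residual, intercept, slope)
```

Because the score is a median rather than a sum, a single gross outlier has little influence on which fit is selected, which is the robustness property the method is known for.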

2.4 Isotonic Regression (IR)

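Isotonic regression (IR) is a nonparametric method for fitting a free-form line to a sequence of observations under the following conditions: the fitted line must be monotonic and as close to the observed values as possible (Salanti 2003; De Leeuw et al. 2010). It selects the attribute with the lowest squared error and bases its isotonic regression model on this attribute. For a non-decreasing fit, the IR optimization formula is as follows:

\[\mathrm{min}\sum_{i=1}^{n}{w}_{i}{\left({y}_{i}-{\widehat{y}}_{i}\right)}^{2}\quad \text{subject to}\quad {\widehat{y}}_{i}\le {\widehat{y}}_{j}\;\text{whenever}\;{x}_{i}\le {x}_{j}\]

where \({y}_{i}\) and \({\widehat{y}}_{i}\) are the observed and fitted values, respectively, and \({w}_{i}\) are the chosen weights.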
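
One standard way to solve this weighted, order-constrained least squares problem is the pool-adjacent-violators algorithm (PAVA) discussed by De Leeuw et al. (2010). The sketch below is an illustrative Python version for a non-decreasing fit; it assumes the observations are already sorted by the predictor, and the function name is hypothetical. It is not claimed to be the routine used in the study.

```python
def isotonic_fit(y, w=None):
    """Non-decreasing weighted least squares fit via pool-adjacent-violators.

    `y` must be ordered by increasing predictor value; `w` holds the
    optional positive weights (defaults to equal weights).
    """
    if w is None:
        w = [1.0] * len(y)
    blocks = []  # each block: [weighted mean, total weight, point count]
    for yi, wi in zip(y, w):
        blocks.append([yi, wi, 1])
        # Merge backwards while the monotonicity constraint is violated.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, n2 = blocks.pop()
            m1, w1, n1 = blocks.pop()
            total = w1 + w2
            blocks.append([(m1 * w1 + m2 * w2) / total, total, n1 + n2])
    fitted = []
    for mean, _, count in blocks:
        fitted.extend([mean] * count)
    return fitted
```

For example, the sequence 1, 3, 2, 4 violates monotonicity at the middle pair, so those two points are pooled to their mean of 2.5 while the end points are left unchanged.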

2.5 Pace Regression (PR)

Projection adjustment by contribution estimation (pace) regression (PR) improves on classical MLR by assessing the influence of each variable and using clustering analysis to improve the statistical basis for determining its contribution to the overall regression. PR is an approach to fitting linear models in high-dimensional spaces, and it is provably optimal under regularity conditions when the number of coefficients approaches infinity. It consists of a set of estimators that are either overall or conditionally optimal (Wang 2000; Wang and Witten 2002).

2.6 k-Nearest Neighbors (kNN) Model

k-nearest neighbors (kNN) is a nonparametric, instance-based, lazy learner algorithm (Aha et al. 1991). The target of a test data sample is predicted by searching the entire training set for the \(k\) most similar samples (neighbors) and averaging the values of these k-nearest neighbors as the output variable. In this study, the brute force search algorithm is used to find the nearest neighbors and the Chebyshev distance is used to measure the distance. If \(D\) is a dataset consisting of \({\left({x}_{i}\right)}_{i\in \left[1,n\right]}\) training instances (where \(n=\left|D\right|\)) with a set of features \(F\) and the value of an unknown instance \(p\) is to be predicted, the Chebyshev distance between \(p\) and \({x}_{i}\) is (Cunningham and Delany 2021):

\[d\left(p,{x}_{i}\right)=\underset{f\in F}{\mathrm{max}}\left|{p}_{f}-{x}_{if}\right|\]

where \({p}_{f}\) and \({x}_{if}\) are the values of feature \(f\) for \(p\) and \({x}_{i}\), respectively.
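
A brute force kNN regressor with the Chebyshev distance (the largest absolute difference over all features) can be sketched as follows. This is a minimal illustration; the function names and the toy data in the usage note are assumptions and do not come from the study's dataset.

```python
def chebyshev(p, x):
    """Chebyshev distance: largest absolute feature-wise difference."""
    return max(abs(a - b) for a, b in zip(p, x))


def knn_predict(train_X, train_y, p, k=1):
    """Brute force kNN regression: average the targets of the k closest rows."""
    # Rank every training instance by its distance to the query point.
    ranked = sorted(range(len(train_X)), key=lambda i: chebyshev(p, train_X[i]))
    nearest = ranked[:k]
    return sum(train_y[i] for i in nearest) / k
```

With `k=1` the prediction is simply the target of the single closest training instance, so the model reproduces the training data exactly and relies entirely on how densely the training set covers the feature space.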

2.7 Extreme Gradient Boosting (XGBoost) Model

The extreme gradient boosting (XGBoost) model is an advanced ensemble tree boosting algorithm (Chen and Guestrin 2016). It is an improvement on Friedman's gradient boosting method (Friedman 2001). XGBoost works by training an initial tree, constructing a second tree that is combined with the initial tree, and repeating the second step until the stopping condition (i.e., the required number of trees) is met (Zhang et al. 2020c). At step \(t\), the newly generated tree is fitted to the residual of the previous prediction. The sum of all trees' predictions yields the model's final prediction. The general function for approximating the system response at step \(t\) is:

\[{\widehat{y}}_{i}^{(t)}=\sum_{k=1}^{t}{f}_{k}({x}_{i})={\widehat{y}}_{i}^{(t-1)}+{f}_{t}({x}_{i})\]

where \({f}_{t}({x}_{i})\) is the learner at step \(t\), \({\widehat{y}}_{i}^{(t)}\) and \({\widehat{y}}_{i}^{(t-1)}\) are the estimations at steps \(t\) and \(t\) − 1, and \({x}_{i}\) is the input variable.

To fit the model while preventing over-fitting, XGBoost minimizes the following regularized objective function:

\[{Obj}^{(t)}=\sum_{i=1}^{n}l\left({y}_{i},{\widehat{y}}_{i}^{(t)}\right)+\sum_{k=1}^{t}\Omega \left({f}_{k}\right)\]

where \(l\) is a convex function (i.e., loss function) that measures the difference between the exact and computed values, \({y}_{i}\) is a measured value, \(n\) is the number of observations used, and \(\Omega\) is the penalty factor (regularization term), defined as:

\[\Omega \left(f\right)=\gamma T+\frac{1}{2}\lambda {\Vert \omega \Vert }^{2}\]

where \(T\) is the number of leaves in the tree, \(\omega\) is the vector of scores in the leaves, \(\lambda\) is the regularization parameter, and \(\gamma\) is the minimum loss needed to further partition the leaf node.

Using a greedy algorithm, the XGBoost technique examines all candidate split points of all features, adopting the objective function value as the evaluation function. The objective function value after a split is compared with that of the unsplit leaf node, and the split is executed only when the resulting gain exceeds a preset threshold that restricts tree growth. As a consequence, the best features and splitting points for constructing the tree structure can be found (Zhang et al. 2020a).
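
The split decision can be made concrete with the gain expression given by Chen and Guestrin (2016), which is written in terms of the sums of first- and second-order loss derivatives (here called `g` and `h`) over the instances in each child. These symbols and the function below are not part of the text above; they are introduced only for this sketch, which assumes a squared-error loss in its usage example.

```python
def split_gain(g_left, h_left, g_right, h_right, lam=1.0, gamma=0.0):
    """Gain of splitting a leaf into left and right children.

    g_* and h_* are sums of first and second derivatives of the loss over
    the instances in each child; lam is the leaf-score regularization
    parameter and gamma is the minimum loss reduction required to split.
    """
    def score(g, h):
        return g * g / (h + lam)

    parent = score(g_left + g_right, h_left + h_right)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right) - parent) - gamma


# Toy example with loss 0.5 * (y - y_hat)^2, so g = y_hat - y and h = 1.
y = [1.0, 1.0, 5.0, 5.0]
y_hat = 3.0
g = [y_hat - yi for yi in y]
gain = split_gain(sum(g[:2]), 2.0, sum(g[2:]), 2.0, lam=1.0, gamma=0.0)
```

A positive `gain` means the split lowers the regularized objective; raising `gamma` makes the same split less attractive, and the split is rejected once the gain becomes non-positive.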

3 Database

To predict peak shear strength of discontinuities, the results of direct shear tests from published literature (Grasselli 2001; Tatone 2009; Xia et al. 2014; Yang et al. 2015, 2016) are used for training and testing the proposed SLR, MLR, LMSR, IR, PR, kNN, and XGBoost techniques. A total of 83 tests were conducted on 6 different material types of discontinuities (granite, sandstone, limestone, marble, serpentinite, mortar).

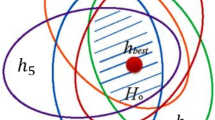

Based on a literature review of the parameters considered by previous machine-learning-based models and common criteria for predicting the shear strength of rock discontinuities, eight main influencing input parameters are employed in the analyses: sampling interval \((l)\), maximum contact area ratio \({(A}_{0})\), distribution parameter \((C)\), maximum apparent dip angle \(({\theta }_{max}^{*})\), basic friction angle \(({\varphi }_{b})\), tensile strength (\({\sigma }_{t}\)), uniaxial compressive strength \({(\sigma }_{c})\), and normal stress \(({\sigma }_{n})\). The dataset of 83 instances has been divided by random sampling into two parts: an 80% training dataset (66 experimental data points) and a 20% testing dataset (17 experimental data points). The training dataset is used for model construction, and the testing dataset is used for evaluating the prediction performance of the developed models.

Figure 1 presents a plot matrix for the variables considered. The lower triangle of the matrix shows scatter plots of each pair of variables, while the upper triangle shows the correlations between the variables. In the lower triangle, each material type is represented by a different color. It is seen that \({\tau }_{p}\) is highly correlated with \({\sigma }_{n}\).

In this study, several statistical indices are computed to assess the performance of the adopted SLR, MLR, LMSR, IR, PR, kNN, and XGBoost models. The three most frequently used metrics for model assessment in regression problems are \({R}^{2}\) (coefficient of determination), root mean square error (RMSE), and mean absolute error (MAE). The following equations express these indicators:

\[{R}^{2}=1-\frac{\sum_{i=1}^{n}{\left({y}_{i}-{y}_{i}^{\prime}\right)}^{2}}{\sum_{i=1}^{n}{\left({y}_{i}-\overline{y}\right)}^{2}}\]

\[\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}{\left({y}_{i}-{y}_{i}^{\prime}\right)}^{2}}\]

\[\mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\left|{y}_{i}-{y}_{i}^{\prime}\right|\]

where \(y_{i}\) and \(y_{i}^{\prime}\) are the measured and predicted values, respectively, \(\overline{y}\) is the mean of the measured values, and \(n\) is the total number of data points. A predictive technique is ideal if \({R}^{2}=1\), RMSE = 0, and MAE = 0.
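
The three indices are straightforward to compute. The following Python sketch uses the usual definitions (one minus the ratio of residual to total sum of squares for the coefficient of determination); the function names are illustrative, and the numbers in the usage lines are made up and are not results from this study.

```python
import math


def r2_score(y, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, y_pred))
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot


def rmse(y, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, y_pred)) / len(y))


def mae(y, y_pred):
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y, y_pred)) / len(y)


# Made-up measured and predicted values, for illustration only.
measured = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 3.0, 3.5]
scores = (r2_score(measured, predicted), rmse(measured, predicted), mae(measured, predicted))
```

RMSE penalizes large individual errors more strongly than MAE, so reporting both gives a sense of whether the error is spread evenly or dominated by a few poorly predicted tests.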

4 Results and Discussion

4.1 Comparison Analysis of Models

Different surrogate models are constructed based on seven data-driven modeling techniques, viz., SLR, MLR, LMSR, IR, PR, kNN, and XGBoost, and used to predict the shear strength of rock discontinuities. A tuning phase was performed using grid search and tenfold cross-validation to determine suitable values for the parameters that characterize the considered models. A ridge parameter of \(1\times {10}^{-8}\) is used in the MLR model. For the kNN model, the optimal number of closest training instances used to predict the value of a test instance is 1. For the XGBoost model, trees (XGBtree) are used as the base learners. The following parameters were found to be optimal for the model: boosting iterations = 972, maximum depth of tree = 1, minimum loss reduction = 0, subsample ratio of columns = 0.7, minimum sum of instance weight (node size) = 1, subsample percentage = 0.71, and booster learning rate (shrinkage) = 0.1.
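
For readers who wish to reproduce a similar configuration, the reported XGBoost settings can be written as a plain parameter dictionary. The keys below are the parameter names used by the XGBoost library's scikit-learn interface; mapping the reported settings onto these names is an assumption of this sketch, since the text does not state which software interface was used.

```python
# Reported optimal settings, keyed by XGBoost parameter names (assumed mapping).
xgb_params = {
    "n_estimators": 972,       # boosting iterations
    "max_depth": 1,            # maximum depth of each tree
    "gamma": 0.0,              # minimum loss reduction to make a split
    "colsample_bytree": 0.7,   # subsample ratio of columns
    "min_child_weight": 1,     # minimum sum of instance weight in a node
    "subsample": 0.71,         # fraction of training rows sampled per tree
    "learning_rate": 0.1,      # shrinkage
}

# If the xgboost package is installed, the dictionary could be passed as
# xgboost.XGBRegressor(**xgb_params); it is not imported here so that the
# snippet runs without third-party dependencies.
```

A maximum depth of 1 means every tree is a single-split stump, so the fitted ensemble is a sum of one-feature functions and cannot represent interactions between features by construction.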

The regression equations obtained by applying the statistical algorithms with tenfold cross-validation on the training dataset are given below:

To verify the reliability and accuracy of the models established, statistical indices, i.e., RMSE, \({R}^{2}\), and MAE are used. Table 2 presents the results of all data-driven criteria performances during the training and testing stages. It is seen from Table 2 that the XGBoost model demonstrates the highest prediction accuracy and generalization capability by achieving the highest \({R}^{2}\) and lowest RMSE and MAE compared with SLR, MLR, LMSR, IR, PR, and kNN models in both the training and testing phases.

As shown in Fig. 2, the measured data are plotted against predicted data to provide a better insight into the prediction success of the criteria. It can be seen that the data predicted by the XGBoost-based criterion match the measured experimental data perfectly on the regression line for the training and testing dataset.

The performance of the established models is compared with that of existing shear strength criteria and of other machine learning techniques applied to the same estimation problem. The shear strength criteria are given in Table 3, and the machine learning paradigms considered in this comparison are MARS, Gaussian process (GP), alternating model tree (AMT), Cubist, radial basis function (RBF) networks, and elastic net (EN) (Fathipour-Azar 2022a). Figure 3 compares the criteria, the previous machine-learning-based models, and the data-driven surrogate models developed in this study for the same training and testing datasets. Generally, Fig. 3 demonstrates that the surrogate models established in this study perform satisfactorily and comparably to those criteria and previous machine-learning-based models. A good correlation between measured and predicted data is seen for the proposed data-driven models, particularly the XGBoost model, which has a lower error. However, the kNN-based model performs poorly.

Generally, data-driven surrogate-based methodologies do not require a predefined functional representation of the model. Such data-driven modeling gains information from training data and can then represent the complex and nonlinear constitutive behavior of rock discontinuities more effectively. Moreover, the developed surrogate models are computationally inexpensive. Statistical learning methods express the relationship between the input and response variables as a mathematical equation. The SLR, MLR, LMSR, IR, and PR techniques fall into this group, and their regression equations are determined by minimizing the sum or median of squared residuals. kNN is an instance-based lazy learner: it stores the training data in memory and uses it when predicting a new test instance. XGBoost is an ensemble machine learning algorithm: it creates a weak prediction model at each stage, which is then weighted and incorporated into the overall model, reducing variance and bias and thereby improving model performance. Therefore, the results of this study demonstrate that the proposed techniques can assist scholars in comprehensively considering the parameters that influence shear behavior and in making fast and accurate shear failure evaluations of rock discontinuities without the need for a computationally complex mathematical equation or expensive and specialized laboratory facilities.

A Taylor diagram (Taylor 2001) is a graphical means of comparing the outcomes of various models with measured data. The diagram depicts the standard deviation, RMSE, and correlation coefficient (R) between the different models and the measurements. It is plotted for shear strength in Fig. 4. The location of each model in the diagram indicates how closely the predicted pattern matches the measurements. According to this figure, the point for the developed XGBoost-based model lies close to the measured point, which makes it a promising technique for estimating shear strength, whereas the point for the kNN-based model lies far from the measured point.
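
The three quantities plotted in a Taylor diagram can be computed as sketched below. Following Taylor (2001), the RMS difference used in the diagram is the centered one (means removed), which ties the three statistics together through the law of cosines. The function name and the sample values are assumptions for illustration and are not data from this study.

```python
import math
import statistics


def taylor_stats(measured, predicted):
    """Return (sd_measured, sd_predicted, correlation, centered RMS difference)."""
    n = len(measured)
    mean_m = statistics.fmean(measured)
    mean_p = statistics.fmean(predicted)
    sd_m = statistics.pstdev(measured)   # population standard deviations
    sd_p = statistics.pstdev(predicted)
    cov = sum((m - mean_m) * (p - mean_p) for m, p in zip(measured, predicted)) / n
    r = cov / (sd_m * sd_p)
    crmsd = math.sqrt(
        sum(((p - mean_p) - (m - mean_m)) ** 2 for m, p in zip(measured, predicted)) / n
    )
    return sd_m, sd_p, r, crmsd


# Made-up values, for illustration only.
sd_m, sd_p, r, crmsd = taylor_stats([1.0, 2.0, 3.0, 4.0, 5.0], [1.1, 1.9, 3.2, 3.8, 5.3])
```

Because the squared centered RMS difference equals `sd_m**2 + sd_p**2 - 2 * sd_m * sd_p * r`, a single point on the diagram encodes all three statistics, and its distance from the measured point is the centered RMS difference.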

4.2 Sensitivity Analysis

The feature importance score is a strategy for determining the relevance of features and the interpretability of models. Figure 5 depicts the relative importance of the features for the XGBoost model, illustrating the influence of the features on the shear strength of rock discontinuities, \({\tau }_{p}\). A trained XGBoost model can automatically compute feature importance based on criteria such as gain, cover, and frequency. The importance values of all features sum to 1. Gain denotes the contribution of each feature, across all trees, to the improvement in model performance. Cover indicates the relative number of observations related to a feature. Frequency is the percentage representing the relative number of times a feature is used for a split in the trees. A higher value of these indices for one feature than for another implies that the feature is more important for making an estimation. Figure 5 presents the average relative importance (%) of the eight predictor features under tenfold cross-validation. The figure shows that \({\sigma }_{n}\), \({A}_{0}\), \(C\), and \({\theta }_{max}^{*}\) affect the shear strength more than the other variables.

Figure 6 depicts one-way partial dependence plots (PDPs) (Friedman 2001) for the eight predictor features. PDPs demonstrate how varying one or more variables across their marginal distributions affects the average predicted value (Goldstein et al. 2015). Each plot in Fig. 6 represents the influence of one variable while the effects of the other variables are averaged out. Positive associations with shear strength are seen for the \({\sigma }_{n}\), \({\sigma }_{t}\), \({\theta }_{max}^{*}\), and \(l\) features, whereas a negative correlation is seen for \(C\). In general, the shear strength increases with all features except \(C\). The strength of the association differs from feature to feature. A wider variation range of the average estimated shear strength value is seen for \({\sigma }_{n}\) (0.839–11.590) than for the other features, which lie in the range 2.607–3.642. Sharp changes in shear strength occur particularly for \({\sigma }_{c}\), \({\varphi }_{b}\), and \({A}_{0}\). It can also be seen that only specific ranges of each feature affect the estimated shear strength value. Outside these ranges, the features are less important and the variation of the average estimated shear strength is insignificant.
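
A one-way partial dependence curve can be computed for any fitted model with a few lines of code: the feature of interest is forced to each grid value in every row of the data, and the resulting predictions are averaged. The sketch below illustrates this; the function name, the toy model standing in for a trained predictor, and the data rows are all assumptions for the example.

```python
def partial_dependence(predict, rows, feature_index, grid):
    """Average prediction when one feature is forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature_index] = value  # override the feature of interest
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


def toy_model(row):
    """Hypothetical stand-in for a trained model: increases with x0, decreases with x1."""
    return 2.0 * row[0] - row[1]


rows = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
pd_curve = partial_dependence(toy_model, rows, 0, [0.0, 1.0])
```

The other features keep their observed values in every row, so the curve reflects the average effect of the chosen feature over the data rather than its effect at a single fixed setting of the remaining features.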

The H-statistic (Friedman and Popescu 2008) is used to determine how much of the variation in the shear strength value is attributable to feature interaction. Figure 7 depicts the strength of the interaction of each feature with all other features in predicting the shear strength. Overall, the interaction of each variable explains less than \(4.5\times {10}^{-5}\)% of the variance, implying that the interaction effects between the features are quite weak.

Finally, local interpretable model-agnostic explanations (LIME) (Ribeiro et al. 2016) is used to investigate and explain the relevance of each feature in the testing dataset for each shear strength estimation by approximating the model locally with an interpretable model. The results are shown in Fig. 8 as a heat map, with the rock joint profile numbers on the \(x\) axis and the categorized features on the \(y\) axis. Feature weights are represented by colors, and the color of each cell reflects the importance of the feature in determining the associated shear strength value. A feature with a positive (blue) weight supports the estimated shear strength, whereas a feature with a negative (red) weight contradicts it. In addition, the LIME algorithm divides each feature into four bins (except for \(l\), which has three) within which the model is fitted to the local region. Therefore, similar to the PDPs in Fig. 6, the influential range of each of the eight features that supports shear strength can be identified. In general, it can be seen that the \({\sigma }_{n}\) feature is highly relevant to the estimated shear strength value. This result is consistent with the feature importance obtained with the gain, cover, and frequency approaches for the training phase. This figure is useful for understanding how machine learning techniques, in this case the XGBoost model, estimate shear strength.

5 Conclusion

Shear strength estimation of rock discontinuities is crucial in geoengineering analysis and applications. The present study proposed a new approach to predict \({\tau }_{p}\) from the \(l\), \({A}_{0}\), \(C\), \({\theta }_{max}^{*}\), \({\varphi }_{b}\), \({\sigma }_{t}\), \({\sigma }_{c}\), and \({\sigma }_{n}\) data. Using a dataset compiled from direct shear tests, SLR, MLR, LMSR, IR, PR, kNN, and XGBoost models are introduced to establish the relationship between \({\tau }_{p}\) and the various indicators. According to the statistical indices, the XGBoost model outperforms all other techniques in predicting the shear strength of rock discontinuities, with the highest \({R}^{2}\) and lowest error values, indicating that the model has superior generalization performance for the provided dataset. Based on the trained XGBoost model, a feature importance ranking is provided using the gain, cover, and frequency indices. PDPs are used to demonstrate the effect of the features on the average predicted value in the training phase. The H-statistic is used to evaluate how much of the variation in the shear strength value is explained by feature interaction. Moreover, the LIME algorithm is employed to indicate the effect of the variables on the shear strength using the testing dataset. The shear strength models were then compared with several criteria and previous machine-learning-based surrogate models; the comparable performance achieved demonstrates the effectiveness of the established data-driven models in evaluating the shear strength of rock discontinuities. It is worth highlighting that the predictive accuracy of the proposed MLR- and PR-based formulas (\({R}^{2}\) = 0.98 and 0.96 for the training and testing datasets, respectively) is better than or comparable to that of conventional criteria.

Further studies based on more datasets are required to improve the accuracy of the developed models. The promising results of this paper certainly give hope that with sufficient amounts of experimental data, the underlying strength model describing datasets can be much more efficiently identified.

Data Availability

All data are provided within the manuscript.

References

Aha DW, Kibler D, Albert MK (1991) Instance-based learning algorithms. Mach Learn 6(1):37–66. https://doi.org/10.1007/BF00153759

Babanouri N, Fattahi H (2018) Constitutive modeling of rock fractures by improved support vector regression. Environ Earth Sci 77(6):1–13. https://doi.org/10.1007/s12665-018-7421-7

Babanouri N, Fattahi H (2020) An ANFIS–TLBO criterion for shear failure of rock joints. Soft Comput 24(7):4759–4773. https://doi.org/10.1007/s00500-019-04230-w

Barton N (1973) Review of a new shear-strength criterion for rock joints. Eng Geol 7(4):287–332. https://doi.org/10.1016/0013-7952(73)90013-6

Barton N, Choubey V (1977) The shear strength of rock joints in theory and practice. Rock Mech 10(1):1–54. https://doi.org/10.1007/BF01261801

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pp 785–794

Cunningham P, Delany SJ (2021) k-nearest neighbour classifiers-A tutorial. ACM Comput Surv (CSUR) 54(6):1–25. https://doi.org/10.1145/3459665

De Leeuw J, Hornik K, Mair P (2010) Isotone optimization in R: pool-adjacent-violators algorithm (PAVA) and active set methods. J Stat Softw 32:1–24

Fathipour-Azar H, Torabi SR (2014) Estimating fracture toughness of rock (KIC) using artificial neural networks (ANNs) and linear multivariable regression (LMR) models. In: 5th Iranian Rock Mechanics Conference

Fathipour-Azar H (2021a) Machine learning assisted distinct element models calibration: ANFIS, SVM, GPR, and MARS approaches. Acta Geotech. https://doi.org/10.1007/s11440-021-01303-9

Fathipour-Azar H (2021b) Data-driven estimation of joint roughness coefficient (JRC). J Rock Mech Geotech Eng 13(6):1428–1437. https://doi.org/10.1016/j.jrmge.2021.09.003

Fathipour-Azar H (2022a) New interpretable shear strength criterion for rock joints. Acta Geotech 17:1327–1341. https://doi.org/10.1007/s11440-021-01442-z

Fathipour-Azar H (2022b) Polyaxial rock failure criteria: Insights from explainable and interpretable data driven models. Rock Mech Rock Eng 55:2071–2089. https://doi.org/10.1007/s00603-021-02758-8

Fathipour-Azar H (2022c) Hybrid machine learning-based triaxial jointed rock mass strength. Environ Earth Sci. https://doi.org/10.1007/s12665-022-10253-8

Fathipour-Azar H (2022d) Stacking ensemble machine learning-based shear strength model for rock discontinuity. Geotech Geol Eng 40:3091–3106. https://doi.org/10.1007/s10706-022-02081-1

Fathipour-Azar H (2022e) Data-oriented prediction of rocks’ Mohr-Coulomb parameters. Arch Appl Mech 92(8):2483–2494. https://doi.org/10.1007/s00419-022-02190-6

Fathipour-Azar H (2022f) Multi-level machine learning-driven tunnel squeezing prediction: review and new insights. Arch Comput Methods Eng 29:5493–5509. https://doi.org/10.1007/s11831-022-09774-z

Fathipour-Azar H (2023) Mean cutting force prediction of conical picks using ensemble learning paradigm. Rock Mech Rock Eng 56:221–236. https://doi.org/10.1007/s00603-022-03095-0

Fathipour-Azar H, Saksala T, Jalali SME (2017) Artificial neural networks models for rate of penetration prediction in rock drilling. J Struct Mech 50(3):252–255. https://doi.org/10.23998/rm.64969

Fathipour-Azar H, Wang J, Jalali SME, Torabi SR (2020) Numerical modeling of geomaterial fracture using a cohesive crack model in grain-based DEM. Comput Part Mech 7:645–654. https://doi.org/10.1007/s40571-019-00295-4

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat. https://doi.org/10.1214/aos/1013203451

Friedman JH, Popescu BE (2008) Predictive learning via rule ensembles. Ann Appl Stat 2(3):916–954. https://doi.org/10.1214/07-AOAS148

Goldstein A, Kapelner A, Bleich J, Pitkin E (2015) Peeking inside the black box: visualizing statistical learning with plots of individual conditional expectation. J Comput Graph Statist 24(1):44–65. https://doi.org/10.1080/10618600.2014.907095

Grasselli G (2001) Shear strength of rock joints based on quantified surface description. Dissertation, Swiss Federal Institute of Technology.

Hasanipanah M, Meng D, Keshtegar B, Trung NT, Thai DK (2020) Nonlinear models based on enhanced Kriging interpolation for prediction of rock joint shear strength. Neural Comput Appl. https://doi.org/10.1007/s00521-020-05252-4

Huang J, Zhang J, Gao Y (2021) Intelligently predict the rock joint shear strength using the support vector regression and firefly algorithm. Lithosphere. https://doi.org/10.2113/2021/2467126

Jaeger JC (1971) Friction of rocks and stability of rock slopes. Geotechnique 21(2):97–134. https://doi.org/10.1680/geot.1971.21.2.97

Johansson F, Stille H (2014) A conceptual model for the peak shear strength of fresh and unweathered rock joints. Int J Rock Mech Min Sci 69:31–38. https://doi.org/10.1016/j.ijrmms.2014.03.005

Kulatilake PHSW, Shou G, Huang TH, Morgan RM (1995) New peak shear strength criteria for anisotropic rock joints. Int J Rock Mech Min Sci Geomech Abstr 32(7):673–697. https://doi.org/10.1016/0148-9062(95)00022-9

Ladanyi B, Archambault G (1969) Simulation of shear behavior of a jointed rock mass. In: Proceedings of the 11th US symposium on rock mechanics (USRMS), Berkeley, CA, pp 105–125.

Lanaro F, Stephansson O (2003) A unified model for characterisation and mechanical behaviour of rock fractures. Pure Appl Geophys 160(5):989–998. https://doi.org/10.1007/PL00012577

Li Y, Tang CA, Li D, Wu C (2020) A new shear strength criterion of three-dimensional rock joints. Rock Mech Rock Eng 53(3):1477–1483. https://doi.org/10.1007/s00603-019-01976-5

Maksimović M (1996) The shear strength components of a rough rock joint. Int J Rock Mech Min Sci Geomech Abstr 33(8):769–783. https://doi.org/10.1016/0148-9062(95)00005-4

Patton FD (1966) Multiple modes of shear failure in rock. In: Proceedings of the 1st ISRM Congress, Lisbon, pp 509–513.

Peng K, Amar MN, Ouaer H, Motahari MR, Hasanipanah M (2020) Automated design of a new integrated intelligent computing paradigm for constructing a constitutive model applicable to predicting rock fractures. Eng Comput. https://doi.org/10.1007/s00366-020-01173-x

Ribeiro MT, Singh S, Guestrin C (2016) “Why should I trust you?” Explaining the predictions of any classifier. Proc ACM SIGKDD Int Conf Knowl Discov Data Min 5:5. https://doi.org/10.1145/2939672.2939778

Rousseeuw PJ (1984) Least median of squares regression. J Am Stat Assoc 79(388):871–880

Salanti G (2003) The isotonic regression framework: estimating and testing under order restrictions. Doctoral dissertation, Ludwig-Maximilians University of Munich, Munich, Germany

Seidel JP, Haberfield CM (1995) The application of energy principles to the determination of the sliding resistance of rock joints. Rock Mech Rock Eng 28(4):211–226. https://doi.org/10.1007/BF01020227

Singh HK, Basu A (2018) Evaluation of existing criteria in estimating shear strength of natural rock discontinuities. Eng Geol 232:171–181. https://doi.org/10.1016/j.enggeo.2017.11.023

Tang ZC, Jiao YY, Wong LNY, Wang XC (2016) Choosing appropriate parameters for developing empirical shear strength criterion of rock joint: review and new insights. Rock Mech Rock Eng 49(11):4479–4490. https://doi.org/10.1007/s00603-016-1014-0

Tatone BS (2009) Quantitative characterization of natural rock discontinuity roughness in-situ and in the laboratory. Master’s thesis, Department of Civil Engineering, University of Toronto, Canada

Taylor KE (2001) Summarizing multiple aspects of model performance in a single diagram. J Geophys Res 106(D7):7183–7192. https://doi.org/10.1029/2000JD900719

Tian Y, Liu Q, Liu D, Kang Y, Deng P, He F (2018) Updates to Grasselli’s peak shear strength model. Rock Mech Rock Eng 51(7):2115–2133. https://doi.org/10.1007/s00603-018-1469-2

Wang Y, Witten IH (2002) Modeling for optimal probability prediction. In: Proceedings of the Nineteenth International Conference on Machine Learning, Sydney, Australia, pp 650–657

Wang H, Lin H (2018) Non-linear shear strength criterion for a rock joint with consideration of friction variation. Geotech Geol Eng 36(6):3731–3741. https://doi.org/10.1007/s10706-018-0567-y

Wang Y (2000) A new approach to fitting linear models in high dimensional spaces. Doctoral dissertation, University of Waikato, Hamilton, New Zealand

Witten IH, Frank E (2005) Data mining: practical machine learning tools and techniques. Morgan Kaufmann Pub, San Francisco

Xia CC, Tang ZC, Xiao WM, Song YL (2014) New peak shear strength criterion of rock joints based on quantified surface description. Rock Mech Rock Eng 47(2):387–400. https://doi.org/10.1007/s00603-013-0395-6

Xia C, Huang M, Qian X, Hong C, Luo Z, Du S (2019) Novel intelligent approach for peak shear strength assessment of rock joints on the basis of the relevance vector machine. Math Probl Eng. https://doi.org/10.1155/2019/3182736

Yang J, Rong G, Cheng L, Hou D, Wang X (2015) Experimental study of peak shear strength of rock joints. Chin J Rock Mech Eng 34(5):884–894

Yang J, Rong G, Hou D, Peng J, Zhou C (2016) Experimental study on peak shear strength criterion for rock joints. Rock Mech Rock Eng 49(3):821–835. https://doi.org/10.1007/s00603-015-0791-1

Zhang WG, Li HR, Wu CZ, Li YQ, Liu ZQ, Liu HL (2020a) Soft computing approach for prediction of surface settlement induced by earth pressure balance shield tunneling. Undergr Space. https://doi.org/10.1016/j.undsp.2019.12.003

Zhang W, Zhang R, Wu C, Goh ATC, Lacasse S, Liu Z, Liu H (2020b) State-of-the-art review of soft computing applications in underground excavations. Geosci Front 11(4):1095–1106. https://doi.org/10.1016/j.gsf.2019.12.003

Zhang W, Zhang R, Wu C, Goh AT, Wang L (2020c) Assessment of basal heave stability for braced excavations in anisotropic clay using extreme gradient boosting and random forest regression. Undergr Space. https://doi.org/10.1016/j.undsp.2020.03.001

Zhao J (1997) Joint surface matching and shear strength part B: JRC-JMC shear strength criterion. Int J Rock Mech Min Sci 34(2):179–185. https://doi.org/10.1016/S0148-9062(96)00063-0

About this article

Cite this article

Fathipour-Azar, H. Shear Strength Criterion for Rock Discontinuities: A Comparative Study of Regression Approaches. Rock Mech Rock Eng 56, 4715–4725 (2023). https://doi.org/10.1007/s00603-023-03302-6

DOI: https://doi.org/10.1007/s00603-023-03302-6