Abstract

Owing to the promising performance of distribution linguistic preference relations (DLPRs) in eliciting comparison information from decision makers (DMs), linguistic decision problems with this type of preference relation have attracted considerable research interest in recent years. However, to the best of our knowledge, little research has addressed the personalized individual semantics of linguistic terms when dealing with computing with words (CWW) in solving linguistic decision problems with DLPRs. As is well known, one statement about CWW in linguistic decisions is that words might exhibit different meanings for different people, so words need to be individually quantified when dealing with CWW. Hence, the objective of this study is to fill this gap by personalizing the numerical scales of linguistic terms for different DMs in linguistic decision making with DLPRs. First, this study connects DLPRs to fuzzy preference relations and multiplicative preference relations by using different types of numerical scales. Then, definitions of expected consistency for DLPRs are presented. On the basis of expected consistency, goal programming models are built to derive personalized numerical scales for linguistic terms from DLPRs. Finally, a numerical study concerning football player evaluation is analyzed with the proposed method to demonstrate its applicability in practical decision scenarios. A discussion and a comparative study highlight the validity of the proposed method.

1 Introduction

Preference relations provide a useful tool for decision makers (DMs) to record their preference information over decision alternatives. There are two typical forms of preference relations: fuzzy preference relations (FPRs) [35] and multiplicative preference relations (MPRs) [17, 33], both of which belong to the category of numerical preference relations. Because decision problems may present qualitative aspects for which it is difficult for DMs to give numerical degrees of preference of one alternative over another, DMs are generally more inclined to express their preferences with linguistic variables (terms) [15] than with numerical values. Thus, linguistic decision problems with preference relations such as linguistic preference relations (LPRs) [15] and hesitant fuzzy linguistic preference relations (HFLPRs) [11, 24, 32, 39, 52] have attracted considerable research interest [1, 3, 14, 26, 40, 42, 44].

With HFLPRs, DMs can use multiple consecutive linguistic terms to represent the outcomes of their pairwise comparisons. Recently, by considering the different proportions of the multiple linguistic terms in HFLPRs, Zhang et al. [47] and Zhang et al. [48] presented the concepts of distribution LPRs (DLPRs) and probabilistic LPRs, respectively. When the proportional information is complete, the two forms of preference relations are mathematically equivalent [31]. For this reason, we uniformly call them DLPRs in this paper. Compared with LPRs and HFLPRs, DLPRs not only allow DMs to describe their outcomes using multiple linguistic terms, but also reveal the proportional information of those terms. Owing to the good performance of DLPRs in representing the outcomes of pairwise comparisons provided by DMs, this study focuses on linguistic decision problems with this kind of preference relation.

Solving linguistic decision problems requires invoking the principles of computing with words (CWW) [19, 27]. As is well known, a key point about CWW is that words might exhibit different meanings for different people [16, 28]. The existing methods presented in Refs. [18, 27, 29] are quite useful for handling multiple meanings of words, but they cannot represent the specific semantics of each individual. Different DMs may understand the same word differently. For example, two teachers evaluating a student's academic performance may both be sure that the student is “Good”, yet the term “Good” often carries different numerical meanings for the two teachers. Moreover, even for the same teacher, the understanding of this term may change with the decision problem, the decision environment, and so on. It is therefore natural that a word should be individually quantified when dealing with CWW. To address this point, several personalized individual semantics (PIS) models [5, 6, 21, 23] have been studied on the basis of numerical scale models [4, 8].

Although many studies [10, 20, 49, 50] have discussed decision making with DLPRs [47, 48], to our knowledge, none considers the PIS of linguistic terms among DMs when preferences are recorded as DLPRs. Hence, the objective of this study is to address the point about CWW by setting personalized numerical scales (PNSs) of linguistic terms for different DMs in decision problems with DLPRs. To this end, the proposal of this study consists of two steps:

(a) Definitions of expected consistency for DLPRs are presented. With the use of numerical scales, the numerical expectation of linguistic distribution preferences is introduced, thereby linking DLPRs to numerical preference relations (i.e., FPRs and MPRs). Following the consistency definitions for these numerical preference relations, the expected consistency definitions for DLPRs are then presented.

(b) Based on the expected consistency definitions, goal programming models are proposed to derive PNSs from DLPRs by improving their expected consistency.

The use of the proposed models to set PNSs of linguistic terms for different DMs is then investigated. PNSs capture the differences among individuals in their understanding of the meaning of words. Overall, this study offers two original contributions: the expected consistency definitions for DLPRs, and goal programming approaches to derive the PIS of linguistic terms from DLPRs.

The remainder of this paper is organized as follows. Section 2 gives a compact review of the CWW literature. Section 3 reviews some basic knowledge. Section 4 introduces the expected consistency of DLPRs, based on which goal programming models for deriving PNSs from DLPRs are constructed in Sect. 5. Section 6 illustrates the application of these theoretical results to group decision-making scenarios with a numerical example, and also reports a discussion and a comparative analysis that demonstrate the validity of the proposed method. Finally, Sect. 7 concludes the study.

2 Literature review

Over the past few decades, various linguistic computational models have been proposed for CWW [19, 27]. Representative models include the two-tuple linguistic representation model [16, 25], the linguistic hierarchy model [9, 18] and the proportional two-tuple linguistic model [36, 37]. In particular, the model proposed in [16] provides an effective computational technique for linguistic terms that are symmetrically and uniformly distributed. Since linguistic terms are not always symmetrically and uniformly distributed, the linguistic hierarchy model [18] and the proportional two-tuple linguistic model [36, 37] were developed to deal with such situations. Subsequently, the numerical scale model, which integrates the above two classic models, was investigated in [4, 8]. These linguistic computational models have been successfully applied to handle CWW in decision making with linguistic information.

As is well known, one statement about CWW in linguistic decisions is that words might exhibit different meanings for different people [16, 28]. That is, the semantics of linguistic terms are usually specific and personalized for each DM. Although many existing methods [18, 27, 29] provide useful ways of addressing multiple meanings of words, they fail to manage the specific semantics of each individual in linguistic decision problems. Since the numerical scale model [4, 8] can yield the above linguistic computational models by setting different numerical scales [6, 8], several PIS models [5, 6, 21, 23] have recently been built on this model to address the statement about CWW. For example, Li et al. [22, 23] developed consistency-driven models to set PNSs for linguistic terms in decision making with HFLPRs. With the use of an interval numerical scale, Dong and Herrera-Viedma [6] and Li et al. [21] presented, respectively, a consistency-driven automatic methodology and a PIS model to personalize numerical scales in group decision making with LPRs.

Because of the promising performance of DLPRs in expressing DMs' preference information, linguistic decision problems with this type of preference relation have attracted considerable research interest in recent years [49, 50]. For instance, Gao et al. [10] used DLPRs to develop an emergency decision support method for enhancing emergency management. Zhang et al. [47] explored the consensus process in group decision problems with DLPRs, and Zhang et al. [48] studied an investment risk evaluation problem by means of DLPRs. Although many studies have discussed decision making with DLPRs, to our knowledge, none considers the PIS of linguistic terms among DMs when preferences are recorded as DLPRs. How to quantify the linguistic terms used in DLPRs individually when dealing with CWW therefore remains an open question.

To fill this gap, this study develops an expected consistency-based goal programming approach to derive PIS for linguistic terms in decision making with DLPRs. This study extends the current literature in three ways. First, when addressing the statement about CWW, the comparative linguistic expressions take the form of DLPRs instead of LPRs or HFLPRs. Second, by using numerical scales, DLPRs are connected to numerical preference relations (i.e., FPRs and MPRs), which facilitates the presentation of the expected consistency definitions for DLPRs. Third, expected consistency-based models are proposed to derive PNSs from DLPRs by improving their expected consistency.

3 Preliminaries

The relevant knowledge used in the following discussion will be briefly reviewed in this section.

3.1 Linguistic variables and linguistic preference relations

Fuzzy linguistic approaches model the preference information of DMs using linguistic variables [15, 45]. Suppose L = {lα|α = − T, …, − 1, 0, 1, …, T} is a finite linguistic term set with odd cardinality 2T + 1; then the possible values lα of a linguistic variable should satisfy the following conditions:

1. L is defined in an ordered structure: lα < lβ if and only if α < β.
2. There is a negation operator: Neg(lα) = l−α.

Clearly, the middle term l0 represents a preference of "indifference", and the other terms are distributed symmetrically around it. Note that the set L is discrete; it can be extended to the continuous set \( \bar{L} = \{ l_{\alpha} | \alpha \in [-q, q] \} \), where q ≥ T [42]. The operational laws of linguistic terms are as follows [42]:

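1. \( l_{\alpha} \oplus l_{\beta} = l_{\alpha + \beta} \);
2. \( l_{\alpha} \oplus l_{\beta} = l_{\beta} \oplus l_{\alpha} \);
3. \( \lambda l_{\alpha} = l_{\lambda \alpha} \);
4. \( (\lambda_{1} + \lambda_{2}) l_{\alpha} = \lambda_{1} l_{\alpha} \oplus \lambda_{2} l_{\alpha} \);
5. \( \lambda (l_{\alpha} \oplus l_{\beta}) = \lambda l_{\alpha} \oplus \lambda l_{\beta} \),

where lα and lβ belong to the continuous term set and λ, λ1, λ2 ∈ [0, 1].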
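Because laws 1 and 3 act only on subscripts, all five laws reduce to plain arithmetic on α. A minimal sketch, assuming each term of the continuous set is represented by its subscript α alone (the class `Term` is hypothetical, introduced only for illustration):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Term:
    """A continuous linguistic term l_alpha, represented by its subscript alpha."""
    alpha: float

    def __add__(self, other: "Term") -> "Term":
        # Law 1: l_alpha (+) l_beta = l_{alpha + beta}
        return Term(self.alpha + other.alpha)

    def __rmul__(self, lam: float) -> "Term":
        # Law 3: lambda * l_alpha = l_{lambda * alpha}
        return Term(lam * self.alpha)


a, b = Term(2), Term(-1)
lam, lam1, lam2 = 0.5, 0.25, 0.5

assert a + b == b + a                               # law 2 (commutativity)
assert (lam1 + lam2) * a == lam1 * a + lam2 * a     # law 4
assert lam * (a + b) == lam * a + lam * b           # law 5
print(a + b, lam * a)   # Term(alpha=1) Term(alpha=1.0)
```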

When DMs make pairwise comparisons of alternatives using linguistic terms to express their own outcomes, LPRs can be constructed.

Definition 1

[1, 3, 44] Given an alternative set X = {x1, x2,…, xn}, an LPR on X is a matrix A ⊂ X × X, A = (aij)n×n, whose element aij indicates the preference degree of alternative xi over xj and satisfies aii = l0 and Neg(aij) = aji for all i, j ∈ {1, 2,…, n}. If aij ∈ L, then A is called a discrete LPR; if aij belongs to the continuous extension of L, then A is called a continuous LPR.

3.2 Distribution linguistic preference relations (DLPRs)

Analogous to LPRs, if DMs are allowed to express the outcomes of their pairwise comparisons as linguistic distribution assessments, the concept of a DLPR arises [47].

Definition 2

[47] Given an alternative set X = {x1, x2,…, xn} and a linguistic term set L = {lα|α = − T, …, − 1, 0, 1, …, T}, a DLPR on X is a matrix D ⊂ X × X, D = (dij)n×n, where each element dij = {(lα, pij(lα)), α = − T, …, − 1, 0, 1, …, T}, called a linguistic distribution preference of L, indicates the preference degree of alternative xi over xj and satisfies dii = {(l0, 1)} and Neg(dij) = {(lα, pij(l−α)), α = − T, …, − 1, 0, 1, …, T} = dji = {(lα, pji(lα)), α = − T, …, − 1, 0, 1, …, T}, i.e., pij(lα) = pji(l−α) for all i, j ∈ {1, 2,…, n}. Here pij(lα) is the symbolic proportion associated with the linguistic term lα in the relation between xi and xj, with 0 ≤ pij(lα) ≤ 1 and \( \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } )} = 1 \).
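The conditions of Definition 2 can be checked mechanically. The following sketch assumes a DLPR is stored as an n × n nested list whose entry [i][j] maps each subscript α to pij(lα), with absent subscripts taken as proportion 0; the helper names `neg` and `is_dlpr` and the tolerance are hypothetical choices:

```python
import math


def neg(d):
    """Neg(d): the proportion attached to l_alpha moves to l_-alpha."""
    return {-alpha: p for alpha, p in d.items()}


def is_dlpr(D, T, tol=1e-9):
    """Check the conditions of Definition 2 for an n x n matrix of distributions."""
    n = len(D)
    for i in range(n):
        if abs(D[i][i].get(0, 0.0) - 1.0) > tol:          # d_ii = {(l_0, 1)}
            return False
        for j in range(n):
            d = D[i][j]
            if any(abs(alpha) > T for alpha in d):          # subscripts must lie in L
                return False
            if any(p < -tol or p > 1 + tol for p in d.values()):
                return False                                # 0 <= p_ij(l_alpha) <= 1
            if not math.isclose(sum(d.values()), 1.0, abs_tol=tol):
                return False                                # proportions sum to 1
            for alpha in range(-T, T + 1):                  # p_ij(l_alpha) = p_ji(l_-alpha)
                if abs(d.get(alpha, 0.0) - D[j][i].get(-alpha, 0.0)) > tol:
                    return False
    return True


d12 = {1: 0.6, 2: 0.4}
D_ok = [[{0: 1.0}, d12], [neg(d12), {0: 1.0}]]
D_bad = [[{0: 1.0}, d12], [{-1: 0.4, -2: 0.6}, {0: 1.0}]]   # violates reciprocity
print(is_dlpr(D_ok, T=2), is_dlpr(D_bad, T=2))   # True False
```

Storing only the nonzero proportions keeps sparse assessments compact; the reciprocity loop still scans every subscript of L.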

Note that the linguistic distribution preference not only can be used to model the linguistic preference of an individual but also can be employed to portray the preference information of a group [51]. To aggregate multiple linguistic distribution assessments, two aggregation operators along with their desirable properties have been discussed in [47].

Definition 3

[47] Given an alternative set X = {x1, x2,…, xn}, a linguistic term set L = {lα|α = − T, …, − 1, 0, 1, …, T} and a linguistic distribution preference of L, namely dij = {(lα, pij(lα)), α = − T, …, − 1, 0, 1, …, T}, where 0 ≤ pij(lα) ≤ 1 and \( \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } )} \) = 1, as defined before, the expectation of dij is defined as

\( E(d_{ij} ) = \bigoplus\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } )\, l_{\alpha } } = l_{\sum\nolimits_{\alpha = - T}^{T} {\alpha \, p_{ij} (l_{\alpha } )} } \)    (1)

It is apparent that the expectation of dij is a linguistic term. Herein, we refer to it as linguistic expectation. For two linguistic distribution preferences \( d_{{ij}}^{1}\) and \( d_{{ij}}^{2}\), if \( E(d_{{ij}}^{1} ) < E(d_{{ij}}^{2} ) \), then \( d_{{ij}}^{1}\) is smaller than \( d_{{ij}}^{2}\), and vice versa; if \( E(d_{{ij}}^{1} ) = E(d_{{ij}}^{2} )\), then the linguistic expectation of \( d_{{ij}}^{1}\) is identical to that of \( d_{{ij}}^{2}\).
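As a sketch of this comparison rule, assuming (as is usual for distributions over the continuous term set) that E(dij) is the term whose subscript is the proportion-weighted mean of α, only that subscript needs to be computed (helper names hypothetical):

```python
def expectation_index(d):
    """Subscript of E(d), assuming E(d) = l_{sum_alpha alpha * p(l_alpha)}."""
    return sum(alpha * p for alpha, p in d.items())


def compare_by_expectation(d1, d2, tol=1e-12):
    """Return -1, 0 or 1 as d1 is smaller than, tied with, or larger than d2."""
    diff = expectation_index(d1) - expectation_index(d2)
    if abs(diff) <= tol:
        return 0
    return 1 if diff > 0 else -1


d1 = {1: 0.5, 2: 0.5}      # E(d1) = l_1.5
d2 = {0: 0.2, 3: 0.8}      # E(d2) = l_2.4
print(expectation_index(d1), compare_by_expectation(d1, d2))   # 1.5 -1
```

The small tolerance treats expectations that differ only by floating-point noise as equal.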

Theorem 1

[47] Suppose that D = (dij)n×n with dij = {(lα, pij(lα)), α = −T, …, −1, 0, 1, …, T} is a DLPR and E(dij) is the linguistic expectation of dij, as defined before. Then, E = (E(dij))n×n is an LPR.

This theorem has been proved in [47].

3.3 Numerical scale model

Quantifying such qualitative information as linguistic assessments is an open research issue in fuzzy linguistic decision making. The numerical scale model introduced in [4] is a useful tool to facilitate the transformations between linguistic terms and real numbers.

Definition 4

[4] Given a linguistic term set L = {lα|α = − T, …, − 1, 0, 1, …, T} and the set R of real numbers, a monotonically increasing function NS: L → R is called a numerical scale of L, and NS(lα) is called the numerical index of lα.

Definition 4 implies that the numerical scale NS on L is ordered. By setting an appropriate NS for L, linguistic preference information can be quantified; in other words, linguistic pairwise comparisons can be converted into numerical ones. Two commonly used types of scale functions are additive scale functions and multiplicative scale functions.

Definition 5

[3] Given a linguistic term set L = {lα|α = − T, …, − 1, 0, 1, …, T} and a monotonically increasing function g: L → [0, 1] as defined before, the function g is called an additive scale function if g(lα) + g(l−α) = 1, where g(lα) ≥ 0.

For example, the linear scale presented in [41] is a typical additive numerical scale, whose scale function is

\( g(l_{\alpha } ) = \frac{\alpha + T}{2T}, \quad \alpha = - T, …, - 1, 0, 1, …, T. \)

By choosing an additive scale function to quantify an LPR [3, 41], one obtains a numerical pairwise comparison matrix, namely an FPR [35].
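A minimal sketch of this quantification, assuming the linear scale takes the common form g(lα) = (α + T)/(2T) and storing an LPR as a matrix of subscripts (helper names hypothetical); the assertion checks the reciprocity rij + rji = 1 required of an FPR:

```python
def linear_scale(alpha, T):
    """Assumed linear additive scale g(l_alpha) = (alpha + T) / (2T)."""
    return (alpha + T) / (2 * T)


def lpr_to_fpr(A, T, g=linear_scale):
    """Quantify an LPR, stored as a matrix of subscripts, into an FPR via g."""
    return [[g(alpha, T) for alpha in row] for row in A]


T = 4
A = [[0, 2, 3],        # a_ij = l_{A[i][j]}; Neg(a_ij) = a_ji gives A[j][i] = -A[i][j]
     [-2, 0, 1],
     [-3, -1, 0]]
R = lpr_to_fpr(A, T)
n = len(R)
assert all(abs(R[i][j] + R[j][i] - 1.0) < 1e-12 for i in range(n) for j in range(n))
print(R[0])   # [0.5, 0.75, 0.875]
```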

Definition 6

[35] Given an alternative set X = {x1, x2,…, xn}, an FPR on X is a matrix R ⊂ X × X, R = (rij)n×n, with rij ∈ [0, 1] representing the preference degree of alternative xi over xj and satisfying rij + rji = 1 for all i, j ∈ {1, 2,…, n}.

Definition 7

[2, 3] Given a linguistic term set L = {lα|α = − T, …, − 1, 0, 1, …, T} and a monotonically increasing function q: L → R+ as defined before, the function q is called a multiplicative scale function if q(lα) × q(l−α) = 1, where q(lα) > 0.

Several representative multiplicative scale functions have been reported in [33, 34]. By selecting a suitable q to characterize an LPR, one obtains a numerical pairwise comparison matrix, namely an MPR [17, 33].

Definition 8

[17, 33] Given an alternative set X = {x1, x2,…, xn}, an MPR on X is a matrix F ⊂ X × X, F = (fij)n×n, with fij > 0 representing the preference degree of alternative xi over xj and satisfying fij × fji = 1 for all i, j ∈ {1, 2,…, n}.
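The multiplicative counterpart can be sketched the same way. The scale q(lα) = 9^(α/T) used here is an illustrative choice of ours that satisfies Definition 7 (it is increasing, positive, and q(lα)·q(l−α) = 9^0 = 1); it is not claimed to be one of the functions in [33, 34]:

```python
import math


def exp_scale(alpha, T, base=9.0):
    """Illustrative multiplicative scale q(l_alpha) = base ** (alpha / T)."""
    return base ** (alpha / T)


def lpr_to_mpr(A, T, q=exp_scale):
    """Quantify an LPR, stored as a matrix of subscripts, into an MPR via q."""
    return [[q(alpha, T) for alpha in row] for row in A]


T = 4
A = [[0, 2, 4],
     [-2, 0, 2],
     [-4, -2, 0]]
F = lpr_to_mpr(A, T)
n = len(F)
# Reciprocity f_ij * f_ji = 1 (Definition 8) follows from q(l_a) * q(l_-a) = 1.
assert all(math.isclose(F[i][j] * F[j][i], 1.0) for i in range(n) for j in range(n))
print(round(F[0][1], 4), round(F[0][2], 4))   # 3.0 9.0
```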

4 Expected consistency of DLPRs

The basic components of a DLPR are linguistic distribution assessments, which makes direct consistency analysis difficult. Hence, the consistency of a DLPR is usually analyzed indirectly by means of other, transformed preference relations. In [47], the consistency of a DLPR is defined through the consistency of its associated LPR (cf. Theorem 1). Based on the numerical scale model, this section analyzes the consistency of a DLPR through its associated numerical preference relations, namely FPRs and MPRs.

4.1 Expected consistency based on fuzzy preference relation

Before presenting the definition of expected consistency for the DLPRs, we first introduce the numerical expectation of linguistic distribution preference.

Definition 9

Given an alternative set X = {x1, x2,…, xn}, a linguistic term set L = {lα|α = − T, …, − 1, 0, 1, …, T} and a linguistic distribution preference of L, namely dij = {(lα, pij(lα)), α = − T, …, − 1, 0, 1, …, T}, where 0 ≤ pij(lα) ≤ 1 and \( \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } )} \) = 1, the numerical expectation of dij is defined as

\( {\text{NE}}(d_{ij} ) = \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot {\text{NS}}(l_{\alpha } )} \)    (2)

in which NS(lα) denotes the numerical index of lα.

For convenience, NE(dij) is referred to simply as NEij in this paper. It is obvious from this definition that the value of NEij is a real number, which differs from the linguistic expectation defined in [47] that is a linguistic term. Thus, the linguistic distribution preference dij can be quantified as a numerical preference NEij with the use of certain numerical scale NS. Of course, by using different types of numerical scales, a linguistic distribution preference can be transformed into different numerical preferences. This will be discussed in detail later.
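A minimal sketch of the numerical expectation in Definition 9, assuming the linear scale g(lα) = (α + T)/(2T) and, for comparison, the personalized additive scale reported in Example 1 (Sect. 5.1). Under the linear scale, NE(dij) = 0.5 + ε/(2T), where ε is the subscript of the linguistic expectation, so NE is an affine function of it; the personalized scale gives a different value for the same dij (helper names hypothetical):

```python
def numerical_expectation(d, ns):
    """NE(d) = sum over alpha of p(l_alpha) * NS(l_alpha) (Definition 9)."""
    return sum(p * ns(alpha) for alpha, p in d.items())


T = 4


def linear(alpha):
    """Assumed linear additive scale g(l_alpha) = (alpha + T) / (2T)."""
    return (alpha + T) / (2 * T)


# Additive scale reported in Example 1 (Sect. 5.1), keyed by subscript alpha.
g_ex1 = {-4: 0.0, -3: 0.2375, -2: 0.2875, -1: 0.3708, 0: 0.5,
         1: 0.6292, 2: 0.7125, 3: 0.7625, 4: 1.0}

d = {1: 0.6, 2: 0.4}
e_index = sum(alpha * p for alpha, p in d.items())    # subscript of E(d)
print(round(numerical_expectation(d, linear), 4))     # 0.675, i.e. 0.5 + e_index / (2T)
print(round(numerical_expectation(d, g_ex1.get), 4))  # 0.6625
```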

Based on the concept of NE(dij), different linguistic distribution preferences can also be compared. Namely, for two linguistic distribution preferences \( d_{ij}^{1} \) and \( d_{ij}^{2} \), if \( {\text{NE}}_{ij}^{1} \) < \( {\text{NE}}_{ij}^{2} \), then \( d_{ij}^{1} \) is smaller than \( d_{ij}^{2} \), and vice versa; if \( {\text{NE}}_{ij}^{1} \) = \( {\text{NE}}_{ij}^{2} \), then the numerical expectation of \( d_{ij}^{1} \) equals that of \( d_{ij}^{2} \). Because NS is monotonically increasing, the numerical and linguistic expectations rank single-term assessments in the same order. For general distributions, the two rankings coincide when NS is an affine function of α (e.g., the linear scale), but they may differ under a nonlinear NS, since a proportion-weighted mean of NS(lα) need not preserve the order of the proportion-weighted mean of α.

If the function NS in (2) is an additive scale function, namely g: L → [0, 1] as defined in Definition 5, DLPRs can be transformed into FPRs.

Theorem 2

Suppose that D = (dij)n×n with dij = {(lα, pij(lα)), α = −T, …, −1, 0, 1, …, T} is a DLPR, g is an additive scale function and NEij is the numerical expectation of dij defined based on g. Then, NE = (NEij)n×n is an FPR.

Proof

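From Definitions 5 and 9, since 0 ≤ g(lα) ≤ 1, 0 ≤ pij(lα) ≤ 1 and \( \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } )} = 1 \), we can reason that

\( 0 \le {\text{NE}}_{ij} = \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} \le 1. \)

In virtue of Neg(dij) = {(lα, pij(l−α)), α = − T, …, − 1, 0, 1, …, T} = dji = {(lα, pji(lα)), α = − T, …, − 1, 0, 1, …, T}, i.e., pji(lα) = pij(l−α), and of g(lα) + g(l−α) = 1, we have

\( {\text{NE}}_{ij} + {\text{NE}}_{ji} = \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} + \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{ - \alpha } ) \cdot g(l_{\alpha } )} = \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot \left( {g(l_{\alpha } ) + g(l_{ - \alpha } )} \right)} = 1. \)

Consequently, according to Definition 6, NE is an FPR. □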
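Theorem 2 can also be sanity-checked numerically. The sketch below works under our own assumptions (a randomly generated DLPR and a random increasing additive scale; all helper names hypothetical) and verifies NEij + NEji = 1 for every pair:

```python
import random


def random_additive_scale(T, rng):
    """Random increasing scale with g(l_0) = 0.5 and g(l_a) + g(l_-a) = 1."""
    lower = sorted(rng.uniform(0.0, 0.5) for _ in range(T))  # g(l_-T), ..., g(l_-1)
    g = {0: 0.5}
    for k, value in enumerate(lower):
        alpha = -T + k
        g[alpha], g[-alpha] = value, 1.0 - value
    return g


def random_dlpr(n, T, rng):
    """Random DLPR: random proportions above the diagonal, Neg(d_ij) below it."""
    D = [[{0: 1.0} for _ in range(n)] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            weights = [rng.random() for _ in range(2 * T + 1)]
            total = sum(weights)
            d = {alpha: weights[alpha + T] / total for alpha in range(-T, T + 1)}
            D[i][j] = d
            D[j][i] = {-alpha: p for alpha, p in d.items()}
    return D


rng = random.Random(0)
T, n = 4, 5
g = random_additive_scale(T, rng)
D = random_dlpr(n, T, rng)
NE = [[sum(p * g[alpha] for alpha, p in D[i][j].items()) for j in range(n)]
      for i in range(n)]
print(all(abs(NE[i][j] + NE[j][i] - 1.0) < 1e-12
          for i in range(n) for j in range(n)))   # True
```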

DMs are expected to be neither illogical nor random in their pairwise comparisons. In other words, DLPRs offered by the DMs should meet some pre-established transitive properties, such as additive transitivity and multiplicative transitivity [35]. However, due to a variety of reasons, DMs are not necessarily logical. They may offer inconsistent preference relations. Consistency analysis for verifying the logicality of their preference relations therefore needs to be done. In what follows, we first recall the concept of additive consistency [13, 35, 43] as the basis for the consistency analysis associated with the DLPRs.

Definition 10

[13, 35, 43] Given an alternative set X = {x1, x2,…, xn} and an FPR R = (rij)n×n with rij ∈ [0, 1] on X, as defined before, R is called additively consistent if the additive transitivity, namely rij + rjk + rki = rkj + rji + rik for all i, j, k ∈ {1, 2,…, n}, is satisfied, and such an FPR can be expressed as

\( r_{ij} = 0.5(\omega_{i} - \omega_{j} ) + 0.5, \quad i, j = 1, 2, …, n \)    (3)

where ω = (ω1, ω2,…, ωn)T is the priority vector of R, satisfying 0 ≤ ωi ≤ 1 and \( \sum\nolimits_{i = 1}^{n} {\omega_{i} } = 1 \).

Based on Theorem 2 and Definition 10, the expected additive consistency for DLPRs is defined.

Definition 11

Given an alternative set X = {x1, x2,…, xn} and a DLPR D = (dij)n×n with dij = {(lα, pij(lα)), α = − T, …, − 1, 0, 1, …, T} on X, as defined before, D is called expectedly additive consistent if the additive transitivity, namely NEij + NEjk + NEki = NEkj + NEji + NEik for all i, j, k ∈ {1, 2,…, n}, is fulfilled, in which case it can be expressed as

\( {\text{NE}}_{ij} = 0.5(\omega_{i} - \omega_{j} ) + 0.5, \quad i, j = 1, 2, …, n \)    (4)

where NEij is the numerical expectation of dij defined based on an additive scale function g and ω is the corresponding priority vector of D.
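Checking Definition 11 amounts to computing the NE matrix and testing all triples. A sketch, assuming the linear scale g(lα) = (α + T)/(2T) and a small hand-made DLPR (helper names hypothetical); under this scale the first DLPR passes because the subscripts of its linguistic expectations add up (2.0 = 1.5 + 0.5), while the second does not:

```python
def ne_matrix(D, g):
    """Matrix of numerical expectations NE_ij (Definition 9) under scale g."""
    return [[sum(p * g(alpha) for alpha, p in dij.items()) for dij in row] for row in D]


def build_dlpr(upper, n):
    """Complete a DLPR from its entries above the diagonal, given as {(i, j): d_ij}."""
    D = [[{0: 1.0} for _ in range(n)] for _ in range(n)]
    for (i, j), d in upper.items():
        D[i][j] = d
        D[j][i] = {-alpha: p for alpha, p in d.items()}   # d_ji = Neg(d_ij)
    return D


def is_expectedly_additive_consistent(D, g, tol=1e-9):
    """Test NE_ij + NE_jk + NE_ki = NE_kj + NE_ji + NE_ik for all triples."""
    NE = ne_matrix(D, g)
    n = len(NE)
    return all(
        abs(NE[i][j] + NE[j][k] + NE[k][i] - NE[k][j] - NE[j][i] - NE[i][k]) <= tol
        for i in range(n) for j in range(n) for k in range(n)
    )


T = 4


def linear(alpha):
    """Assumed linear additive scale g(l_alpha) = (alpha + T) / (2T)."""
    return (alpha + T) / (2 * T)


D_ok = build_dlpr({(0, 1): {1: 0.5, 2: 0.5}, (1, 2): {0: 0.5, 1: 0.5},
                   (0, 2): {1: 0.5, 3: 0.5}}, n=3)
D_bad = build_dlpr({(0, 1): {1: 0.5, 2: 0.5}, (1, 2): {0: 0.5, 1: 0.5},
                    (0, 2): {3: 1.0}}, n=3)
print(is_expectedly_additive_consistent(D_ok, linear),
      is_expectedly_additive_consistent(D_bad, linear))   # True False
```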

In a way similar to the presentation of the expected additive consistency for DLPRs, the definition of expected multiplicative consistency for DLPRs can be deduced based on the concept of multiplicative consistency [13, 35, 43].

Definition 12

[13, 35, 43] Given an alternative set X = {x1, x2,…, xn} and an FPR R = (rij)n×n with rij ∈ [0, 1] on X, as defined before, R is called multiplicatively consistent if the multiplicative transitivity, namely rij ⋅ rjk ⋅ rki = rkj ⋅ rji ⋅ rik for all i, j, k ∈ {1, 2,…, n}, is satisfied, and such an FPR can be expressed as

\( r_{ij} = \frac{\omega_{i} }{\omega_{i} + \omega_{j} }, \quad i, j = 1, 2, …, n \)    (5)

where ω = (ω1, ω2,…, ωn)T is the priority vector of R, satisfying 0 ≤ ωi ≤ 1 and \( \sum\nolimits_{i = 1}^{n} {\omega_{i} } = 1 \).

Based on the description of multiplicative consistent FPRs, the definition of expected multiplicative consistency for DLPRs is therefore presented.

Definition 13

Given an alternative set X = {x1, x2,…, xn} and a DLPR D = (dij)n×n with dij = {(lα, pij(lα)), α = − T, …, − 1, 0, 1, …, T} on X, as defined before, D is called expectedly multiplicative consistent if the multiplicative transitivity, namely NEij ⋅ NEjk ⋅ NEki = NEkj ⋅ NEji ⋅ NEik for all i, j, k ∈ {1, 2,…, n}, is fulfilled, in which case it can be expressed as

\( {\text{NE}}_{ij} = \frac{\omega_{i} }{\omega_{i} + \omega_{j} }, \quad i, j = 1, 2, …, n \)    (6)

where NEij is the numerical expectation of dij defined based on an additive scale function g and ω is the corresponding priority vector of D.
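An analogous sketch for Definition 13, again assuming the linear scale and a hand-made DLPR whose single-term entries are chosen so that NEij = ωi/(ωi + ωj) for ω = (0.6, 0.2, 0.2)T. Besides the triple test, it reports the largest residual |(ωi + ωj)·NEij − ωi|, which vanishes when this representation holds (helper names hypothetical):

```python
def ne_matrix(D, g):
    """Matrix of numerical expectations NE_ij (Definition 9) under scale g."""
    return [[sum(p * g(alpha) for alpha, p in dij.items()) for dij in row] for row in D]


def build_dlpr(upper, n):
    """Complete a DLPR from its entries above the diagonal, given as {(i, j): d_ij}."""
    D = [[{0: 1.0} for _ in range(n)] for _ in range(n)]
    for (i, j), d in upper.items():
        D[i][j] = d
        D[j][i] = {-alpha: p for alpha, p in d.items()}   # d_ji = Neg(d_ij)
    return D


def is_expectedly_multiplicative_consistent(D, g, tol=1e-9):
    """Test NE_ij * NE_jk * NE_ki = NE_kj * NE_ji * NE_ik for all triples."""
    NE = ne_matrix(D, g)
    n = len(NE)
    return all(
        abs(NE[i][j] * NE[j][k] * NE[k][i] - NE[k][j] * NE[j][i] * NE[i][k]) <= tol
        for i in range(n) for j in range(n) for k in range(n)
    )


def multiplicative_residual(D, g, omega):
    """Largest |(omega_i + omega_j) * NE_ij - omega_i| over all pairs (i, j)."""
    NE = ne_matrix(D, g)
    n = len(NE)
    return max(abs((omega[i] + omega[j]) * NE[i][j] - omega[i])
               for i in range(n) for j in range(n))


T = 4


def linear(alpha):
    """Assumed linear additive scale g(l_alpha) = (alpha + T) / (2T)."""
    return (alpha + T) / (2 * T)


omega = [0.6, 0.2, 0.2]
D = build_dlpr({(0, 1): {2: 1.0}, (0, 2): {2: 1.0}, (1, 2): {0: 1.0}}, n=3)
print(is_expectedly_multiplicative_consistent(D, linear),
      multiplicative_residual(D, linear, omega) < 1e-12)   # True True
```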

Note that the expected consistency of a DLPR defined above is based on the consistency of the corresponding FPR.

4.2 Expected consistency based on multiplicative preference relation

Section 4.1 discusses the case where the function NS in (2) is an additive scale function, which establishes the connection between DLPRs and FPRs. This section considers the case where a multiplicative scale function is selected to connect DLPRs with MPRs, and then defines the expected consistency of DLPRs on that basis.

If the function NS in (2) is a multiplicative scale function, namely q: L → R+, as defined in Definition 7, DLPRs can be transformed into MPRs.

Theorem 3

Suppose that D = (dij)n×n with dij = {(lα, pij(lα)), α = −T, …, −1, 0, 1, …, T} is a DLPR, q is a multiplicative scale function and NEij is the numerical expectation of dij defined based on q. Then, NE = (NEij)n×n is an MPR.

Proof

As per Definitions 8 and 9, this theorem can be easily proved and thus omitted here.□

Before defining the expected consistency of DLPRs, we recall a consistency definition for MPRs [33].

Definition 14

[33] Given an alternative set X = {x1, x2,…, xn} and an MPR F = (fij)n×n with fij > 0 on X, as defined before, F is said to be consistent if fik = fij ⋅ fjk holds for all i, j, k ∈ {1, 2,…, n}, and such an MPR can be expressed as

\( f_{ij} = \frac{\omega_{i} }{\omega_{j} }, \quad i, j = 1, 2, …, n \)    (7)

where ω = (ω1, ω2,…, ωn)T is the priority vector of F, satisfying 0 < ωi and \( \sum\nolimits_{i = 1}^{n} {\omega_{i} } = 1 \).

Analogous to the above definition of consistency, the following expected consistency definition for DLPRs is proposed.

Definition 15

Given an alternative set X = {x1, x2,…, xn} and a DLPR D = (dij)n×n with dij = {(lα, pij(lα)), α = − T, …, − 1, 0, 1, …, T} on X, as defined before, D is said to be expectedly consistent if NEik = NEij ⋅ NEjk holds for all i, j, k ∈ {1, 2,…, n}, in which case it can be expressed as

\( {\text{NE}}_{ij} = \frac{\omega_{i} }{\omega_{j} }, \quad i, j = 1, 2, …, n \)    (8)

where NEij is the numerical expectation of dij defined based on a multiplicative scale function q and ω is the corresponding priority vector of D.
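For a consistent MPR, fij = ωi/ωj, so the normalized geometric means of the rows recover ω (each row product equals ωi^n divided by the product of all weights). The sketch below checks consistency in the sense of Definition 14 and recovers ω; under our reading, the same two functions could be applied to the NE matrix in Definition 15 (helper names hypothetical):

```python
import math


def is_consistent_mpr(F, rel_tol=1e-9):
    """Definition 14: f_ik = f_ij * f_jk for all i, j, k."""
    n = len(F)
    return all(math.isclose(F[i][k], F[i][j] * F[j][k], rel_tol=rel_tol)
               for i in range(n) for j in range(n) for k in range(n))


def priority_from_consistent_mpr(F):
    """Normalized row geometric means; equal to omega when F is consistent."""
    n = len(F)
    gm = [math.prod(row) ** (1.0 / n) for row in F]
    total = sum(gm)
    return [x / total for x in gm]


omega = [0.5, 0.3, 0.2]
F = [[wi / wj for wj in omega] for wi in omega]      # f_ij = omega_i / omega_j
print(is_consistent_mpr(F))                                     # True
print([round(w, 4) for w in priority_from_consistent_mpr(F)])   # [0.5, 0.3, 0.2]
```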

In accordance with the earlier consistency definitions for FPRs and MPRs, this section defines the expected consistency of DLPRs.

5 Goal programming models to derive personalized numerical scales from DLPRs

From the above analysis, quantifying DLPRs requires setting certain numerical scales. Although several common numerical scales have been reported in previous studies [3, 33, 34, 41], setting a numerical scale is still an open research issue. In practical fuzzy linguistic decision situations, the linguistic term set may have different numerical meanings for different DMs, which cannot be fully characterized by those common numerical scale functions. Therefore, this section establishes programming models for deriving PNSs based on the expected consistency of DLPRs.

5.1 Programming model construction using expected additive consistency of DLPRs

As analyzed in the previous sections, owing to objective and subjective limitations, DMs may provide inconsistent DLPRs. Accordingly, PNSs can be derived by improving the expected consistency of the DLPRs. Given that g(lα) ∈ [(α + T − 1)/(2T), (α + T + 1)/(2T)] for α = − T + 1, …, − 1, 0, 1, …, T − 1, and assuming that the personalized scale function is additive but unknown, the following programming model can be constructed with the use of the expected additive consistency of DLPRs:

where g is an additive scale function as described in Definition 5 and δ is a constant used to restrict the distance between g(lα) and g(lα+1), and 0 < δ < 1. In this model, the first four constraints guarantee g is an additive scale function and the middle one is the normalization constraint on the priority vector ω.

One can reason from Definition 2 and Theorem 2 that \( \left| {0.5(\omega_{i} - \omega_{j} ) + 0.5 - \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} } \right| \) = \( \left| {0.5(\omega_{j} - \omega_{i} ) + 0.5 - \sum\nolimits_{\alpha = - T}^{T} {p_{ji} (l_{\alpha } ) \cdot g(l_{\alpha } )} } \right| \) for i, j = 1, 2,…, n, i ≠ j, and that \( \left| {0.5(\omega_{i} - \omega_{j} ) + 0.5 - \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} } \right| \) = 0 if i = j. Hence, it suffices to examine the deviations of the elements above the main diagonal of the DLPR, and (9) can be simplified into the following model:

Model (10) is a multi-objective programming model. To simplify its computation, let \( \pi_{{ij}}^{-}\) and \( \pi_{{ij}}^{+}\) denote, respectively, the negative and positive deviations with respect to the goal Jij, where \( \pi_{{ij}}^{-}\) ⋅ \( \pi_{{ij}}^{+}\) = 0 for i, j = 1, 2,…, n, j > i, and let ζij and εij be their corresponding weights. A solution to model (10) can then be obtained by solving the following optimization model:

In the case where all goal functions Jij (i = 1, 2,…, n − 1, j = i + 1,…, n) are equally important, (11) can be equivalently expressed as:

By solving this linear model, one can obtain a PNS for the given set of linguistic terms, together with a priority vector ω = (ω1, ω2,…, ωn)T. In individual decision making with DLPRs, the vector ω can be used to rank the alternatives.
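Solving (12) requires a linear programming solver, which is not shown here. As a lighter sketch, the hypothetical helpers below only evaluate the objective J for a candidate scale g and priority vector ω, assuming J is the unweighted sum of all deviations (consistent with J = π14− + π24+ in Example 1) and assuming the sign convention stated in the docstring. The DLPR and the linear scale are illustrative:

```python
def additive_goal_deviations(upper, g, omega):
    """Deviations (pi_minus, pi_plus) of the goals J_ij, j > i (hypothetical helper).

    Assumed sign convention: gap = NE_ij - (0.5 * (omega_i - omega_j) + 0.5),
    pi_plus = max(gap, 0), pi_minus = max(-gap, 0), so pi_minus * pi_plus = 0.
    """
    dev = {}
    for (i, j), d in upper.items():
        ne = sum(p * g[alpha] for alpha, p in d.items())
        gap = ne - (0.5 * (omega[i] - omega[j]) + 0.5)
        dev[(i, j)] = (max(-gap, 0.0), max(gap, 0.0))
    return dev


def objective_J(upper, g, omega):
    """Unweighted sum of all deviations, as in the equal-importance case."""
    return sum(m + p for m, p in additive_goal_deviations(upper, g, omega).values())


T = 4
g_lin = {a: (a + T) / (2 * T) for a in range(-T, T + 1)}   # assumed linear scale
upper = {(0, 1): {1: 0.5, 2: 0.5}, (1, 2): {0: 0.5, 1: 0.5}, (0, 2): {1: 0.5, 3: 0.5}}
print(objective_J(upper, g_lin, [0.625, 0.25, 0.125]))   # 0.0
print(objective_J(upper, g_lin, [1 / 3, 1 / 3, 1 / 3]))  # 0.5
```

For this expectedly additive consistent DLPR, the first candidate pair (g, ω) attains J = 0, the situation described in Theorem 4.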

Theorem 4

For the linear programming model (12), if J=0, then the DLPR is expectedly additive consistent.

Proof

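If J = 0 in (12), then \( \pi_{ij}^{-} = \pi_{ij}^{+} = 0 \) for i, j = 1, 2,…, n, j > i. Namely,

\( \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} = 0.5(\omega_{i} - \omega_{j} ) + 0.5, \quad i, j = 1, 2, …, n, \; j > i. \)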

Further, we can reason from Definition 2 and Theorem 2 that \( \left| {0.5(\omega_{i} - \omega_{j} ) + 0.5 - \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} } \right| \) = \( \left| {0.5(\omega_{j} - \omega_{i} ) + 0.5 - \sum\nolimits_{\alpha = - T}^{T} {p_{ji} (l_{\alpha } ) \cdot g(l_{\alpha } )} } \right| \) for i, j = 1, 2,…, n, i ≠ j; and \( \left| {0.5(\omega_{i} - \omega_{j} ) + 0.5 - \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} } \right| \) = 0 if i = j.

Thus, we have \( \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} \) = 0.5(ωi − ωj) + 0.5 for all i, j ∈ {1, 2,…, n}, which shows that the DLPR can be represented by (4).

Consequently, we can conclude that the DLPR is expectedly additive consistent according to Definition 11. □

Example 1

Consider a DLPR D = (dij)4×4 based on the set of nine linguistic terms L = {lα|α = − 4, …, − 1, 0, 1, …, 4}:

By (12), we can build the following goal programming model:

Solving the above model, we obtain

\( \pi_{12}^{ - } = \pi_{12}^{ + } = \pi_{13}^{ - } = \pi_{13}^{ + } = \pi_{14}^{ + } = \pi_{23}^{ - } = \pi_{23}^{ + } = \pi_{24}^{ - } = \pi_{34}^{ - } = \pi_{34}^{ + } = 0,\pi_{14}^{ - } = 0.0504,\pi_{24}^{ + } = 0.0313 \), J = 0.0817; ω = (ω1, ω2,…, ωn)T = (0.5167, 0.3617, 0.1217, 0.0000)T; and g(l−4) = 0, g(l−3) = 0.2375, g(l−2) = 0.2875, g(l−1) = 0.3708, g(l0) = 0.5, g(l1) = 0.6292, g(l2) = 0.7125, g(l3) = 0.7625, g(l4) = 1.
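The reported scale can be cross-checked against the constraints of Sect. 5.1. The sketch below uses only the values listed above: it checks that g is additive and increasing, that each g(lα) lies within [(α + T − 1)/(2T), (α + T + 1)/(2T)], and that J equals the sum of the two nonzero deviations. It also prints the smallest gap between consecutive scale values, which is informative about δ but does not determine it:

```python
T = 4
g = {-4: 0.0, -3: 0.2375, -2: 0.2875, -1: 0.3708, 0: 0.5,
     1: 0.6292, 2: 0.7125, 3: 0.7625, 4: 1.0}      # scale reported in Example 1
nonzero_deviations = [0.0504, 0.0313]               # pi_14^- and pi_24^+

additive = all(abs(g[a] + g[-a] - 1.0) < 1e-9 for a in range(-T, T + 1))
increasing = all(g[a] < g[a + 1] for a in range(-T, T))
within_bounds = all((a + T - 1) / (2 * T) - 1e-9 <= g[a] <= (a + T + 1) / (2 * T) + 1e-9
                    for a in range(-T + 1, T))
min_gap = min(g[a + 1] - g[a] for a in range(-T, T))
print(additive, increasing, within_bounds)                     # True True True
print(round(sum(nonzero_deviations), 4), round(min_gap, 4))    # 0.0817 0.05
```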

Based on the resulting numerical scales, the FPR R, associated with D, is obtained:

5.2 Programming model construction using expected multiplicative consistency of DLPRs

As per Definition 13, DLPRs are expectedly multiplicative consistent if they can be expressed by (6). However, DMs may provide inconsistent DLPRs because of the limitation of objective and subjective conditions. That is to say, Eq. (6) does not always hold in practical decision contexts. As such, the personalized scale function can be obtained by improving the expected multiplicative consistency of the DLPRs. By relaxing Eq. (6), the following programming model can be built:

where g is an additive scale function as described in Definition 5 and δ is a constant used to restrict the distance between g(lα) and g(lα+1), and 0 < δ < 1. In the above model, the first four constraints guarantee g is an additive scale function and the middle one is the normalization constraint on the priority vector ω.

As per Definition 2 and Theorem 2, we have that:\( \left| {(\omega_{i} + \omega_{j} ) \cdot \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} - \omega_{i} } \right| \) = \( \left| {(\omega_{j} + \omega_{i} ) \cdot \sum\nolimits_{\alpha = - T}^{T} {p_{ji} (l_{\alpha } ) \cdot g(l_{\alpha } )} - \omega_{j} } \right| \) for i, j = 1, 2,…, n, i ≠ j; and \( \left| {(\omega_{i} + \omega_{j} ) \cdot \sum\nolimits_{\alpha = - T}^{T} {p_{ij} (l_{\alpha } ) \cdot g(l_{\alpha } )} - \omega_{i} } \right| \) = 0 if i = j. For this reason, (14) can be simplified into the model below:

Analogous to Sect. 5.1, let \( \eta_{ij}^{-} \) and \( \eta_{ij}^{+} \) denote, respectively, the negative and positive deviations with regard to the goal Zij, where \( \eta_{ij}^{-} \cdot \eta_{ij}^{+} = 0 \) for i, j = 1, 2,…, n, j > i. Model (15) can then be rewritten as the following linear optimization model:

By solving the model above, one obtains a PNS for the given set of linguistic terms together with a priority vector ω = (ω1, ω2,…, ωn)T associated with the given DLPR. Moreover, if Z = 0, the DLPR is expectedly multiplicative consistent, as stated in the following theorem.

Theorem 5

For the linear programming model (16), if Z = 0, then the DLPR is expectedly multiplicative consistent.

Proof

The proof follows directly from Definitions 2 and 13 and Theorem 2 and is therefore omitted.□
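The identity used earlier in this section to reduce (14) to the upper triangular pairs can be checked numerically. The sketch below assumes two things: DLPR reciprocity (Definition 2) means p_ji(lα) = p_ij(l−α), and the additive scale is symmetric, g(l−α) = 1 − g(lα), as all scales reported in this section are. The entry d12 is hypothetical. The scale and the two weights are borrowed from Example 1 purely as numbers, and the function names are illustrative.

```python
def expectation(dist, scale):
    """Numerical expectation: sum over alpha of p(l_alpha) * scale(l_alpha)."""
    return sum(p * scale[alpha] for alpha, p in dist.items())


def reciprocal(dist):
    """Assumed DLPR reciprocity: p_ji(l_alpha) = p_ij(l_-alpha)."""
    return {-alpha: p for alpha, p in dist.items()}


def goal_gap(w_i, w_j, dist, g):
    """|(w_i + w_j) * E_ij - w_i| for one DLPR entry under additive scale g."""
    return abs((w_i + w_j) * expectation(dist, g) - w_i)


# Symmetric additive scale reported in Example 1
g = {-4: 0, -3: 0.2375, -2: 0.2875, -1: 0.3708, 0: 0.5,
     1: 0.6292, 2: 0.7125, 3: 0.7625, 4: 1}
d_12 = {2: 0.6, 3: 0.4}          # hypothetical entry d_12
w_1, w_2 = 0.5167, 0.3617        # two weights from Example 1

upper = goal_gap(w_1, w_2, d_12, g)               # pair (1, 2)
lower = goal_gap(w_2, w_1, reciprocal(d_12), g)   # pair (2, 1)
diagonal = goal_gap(w_1, w_1, {0: 1.0}, g)        # d_11 = {(l_0, 1)}
print(upper, lower, diagonal)
```

Both gaps come out equal (about 0.1267) and the diagonal gap is zero, which is why only the pairs with j > i need to enter the model.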

Example 2

Consider again the DLPR D = (dij)4×4 given in Example 1. Using (16), one can construct the following goal programming model:

By solving the model above, we get

\( \eta_{12}^{ - } = \eta_{12}^{ + } = \eta_{13}^{ - } = \eta_{13}^{ + } = \eta_{14}^{ - } = \eta_{23}^{ - } = \eta_{23}^{ + } = \eta_{24}^{ + } = \eta_{34}^{ - } = \eta_{34}^{ + } = 0,\eta_{14}^{ + } = 0.0638,\eta_{24}^{ - } = 0.0181 \), Z = 0.0245; ω = (ω1, ω2,…, ωn)T = (0.5342, 0.2876, 0.1125, 0.0657)T; and g(l−4) = 0, g(l−3) = 0.1056, g(l−2) = 0.1556, g(l−1) = 0.25, g(l0) = 0.5, g(l1) = 0.75, g(l2) = 0.8444, g(l3) = 0.8944, g(l4) = 1.

As in Example 1, the FPR R associated with D is then derived from the resulting numerical scales.

5.3 Programming model construction using multiplicative preference-based expected consistency of DLPRs

Sections 5.1 and 5.2 present programming models that personalize the numerical scales of the linguistic term sets used in DLPRs under the assumption that the unknown scale functions are additive. Following the same approach, this section develops programming models for the case in which the scale functions are multiplicative.

As per Definition 15, consider a DLPR D = (dij)n×n with dij = {(lα, pij(lα)), α = − T, …, − 1, 0, 1, …, T}. If D can be expressed by

\[ NE_{ij} = \frac{\omega_{i}}{\omega_{j}}, \quad i, j = 1, 2, \ldots, n, \qquad (18) \]

where \( NE_{ij} = \sum\nolimits_{\alpha = -T}^{T} p_{ij}(l_{\alpha}) \cdot q(l_{\alpha}) \) is the numerical expectation of dij defined based on a multiplicative scale function q, and ω = (ω1, ω2,…, ωn)T is the priority vector of D, satisfying 0 < ωi and \( \sum\nolimits_{i = 1}^{n} \omega_{i} = 1 \), then D is expectedly consistent. Because the preference information that DMs express as DLPRs is not always consistent, Eq. (18) may not hold. Relaxing (18) by allowing some deviation, and thereby improving the expected consistency of D, one can build a multiobjective programming model to derive a PNS from D. Unless otherwise specified, it is given that q(l−T) = 1/(T + 1), q(lT) = T + 1, q(lα) ∈ [α, α + 2] for α = 1, …, T − 1, and q(lα) ∈ [1/(2 − α), 1/(− α)] for α = − T + 1, …, − 1. The multiobjective programming model reads as follows:

where q is a multiplicative scale function as described in Definition 7, and δ > 1 is a constant. In this model, the first six constraints guarantee that q is a multiplicative scale function, and the seventh is the normalization constraint on the priority vector ω.
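The default bounds on q stated above are easy to check mechanically. The sketch below tests them against the multiplicative scales reported later for Example 3 and for D3 in Sect. 6.1, using a tolerance that absorbs four-decimal rounding. The helper name `satisfies_q_bounds` is illustrative, and the constraints involving δ are not checked because their exact form is not restated in the text.

```python
def satisfies_q_bounds(q, T, tol=1e-3):
    """Check the default bounds stated for a multiplicative scale q.

    q maps alpha -> q(l_alpha); tol absorbs the rounding of reported values.
    """
    # End points: q(l_-T) = 1/(T+1) and q(l_T) = T+1
    if abs(q[-T] - 1 / (T + 1)) > tol or abs(q[T] - (T + 1)) > tol:
        return False
    # Positive terms: q(l_a) in [a, a+2] for a = 1, ..., T-1
    for a in range(1, T):
        if not a - tol <= q[a] <= a + 2 + tol:
            return False
    # Negative terms: q(l_a) in [1/(2-a), 1/(-a)] for a = -T+1, ..., -1
    for a in range(-T + 1, 0):
        if not 1 / (2 - a) - tol <= q[a] <= 1 / (-a) + tol:
            return False
    return True


q_example3 = {-4: 0.2, -3: 0.3308, -2: 0.3521, -1: 0.3697, 0: 1,
              1: 2.7047, 2: 2.84, 3: 3.0231, 4: 5}
q_coach3 = {-5: 0.1667, -4: 0.175, -3: 0.2879, -2: 0.5, -1: 0.8188, 0: 1,
            1: 1.2212, 2: 2, 3: 3.4732, 4: 5.7131, 5: 6}
print(satisfies_q_bounds(q_example3, 4), satisfies_q_bounds(q_coach3, 5))  # True True
```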

Reasoning from Definition 2 and Theorem 3, \( \left| \omega_{j} \cdot \sum\nolimits_{\alpha = -T}^{T} p_{ij}(l_{\alpha}) \cdot q(l_{\alpha}) - \omega_{i} \right| = \left| \omega_{i} \cdot \sum\nolimits_{\alpha = -T}^{T} p_{ji}(l_{\alpha}) \cdot q(l_{\alpha}) - \omega_{j} \right| \) for i, j = 1, 2,…, n, i ≠ j, and \( \left| \omega_{j} \cdot \sum\nolimits_{\alpha = -T}^{T} p_{ij}(l_{\alpha}) \cdot q(l_{\alpha}) - \omega_{i} \right| = 0 \) if i = j. Therefore, model (19) can be simplified into the following model by considering only the upper triangular elements of the DLPR:

To simplify the calculation of (20), let \( \lambda_{ij}^{-} \) and \( \lambda_{ij}^{+} \) denote, respectively, the negative and positive deviations with regard to the goal Mij, where \( \lambda_{ij}^{-} \cdot \lambda_{ij}^{+} = 0 \) for i, j = 1, 2,…, n, j > i, and assume that all goals are fair (equally weighted). The solution to model (20) can then be found by solving the optimization model (21):

Finally, by solving (21), a PNS together with a priority vector ω = (ω1, ω2,…, ωn)T of the given DLPR can be obtained.
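For orientation, a goal program of the kind just described is commonly written as shown below. This is a hedged sketch, not a reproduction of model (21). It assumes that the goal Mij is ωj·Σα pij(lα)q(lα) − ωi, the quantity whose absolute value appears in the simplification of (19). It also assumes that a negative goal value is absorbed by λij−, and that q(l0) = 1 as in all reported multiplicative scales. The constraints involving δ are omitted because their exact form is not restated here.

```latex
\begin{aligned}
\min\quad & M = \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} \left( \lambda_{ij}^{-} + \lambda_{ij}^{+} \right) \\
\text{s.t.}\quad & \omega_j \sum_{\alpha=-T}^{T} p_{ij}(l_\alpha)\, q(l_\alpha) - \omega_i + \lambda_{ij}^{-} - \lambda_{ij}^{+} = 0, \quad 1 \le i < j \le n, \\
& q(l_{-T}) = \tfrac{1}{T+1}, \quad q(l_0) = 1, \quad q(l_T) = T + 1, \\
& \alpha \le q(l_\alpha) \le \alpha + 2, \quad \alpha = 1, \ldots, T-1, \\
& \tfrac{1}{2-\alpha} \le q(l_\alpha) \le \tfrac{1}{-\alpha}, \quad \alpha = -T+1, \ldots, -1, \\
& \sum_{i=1}^{n} \omega_i = 1, \quad \omega_i > 0, \quad \lambda_{ij}^{-}, \lambda_{ij}^{+} \ge 0, \quad \lambda_{ij}^{-} \cdot \lambda_{ij}^{+} = 0.
\end{aligned}
```

The equal unit coefficients in the objective reflect the assumption that all goals are equally important. Because of them, an optimal solution never keeps both deviations of the same goal positive: subtracting the smaller one from both stays feasible and lowers M. The complementarity condition is therefore typically satisfied automatically.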

Theorem 6

For the linear programming model (21), if M = 0, then the DLPR is expectedly consistent.

Proof

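If M = 0 in (21), then \( \lambda_{ij}^{-} = \lambda_{ij}^{+} = 0 \) for i, j = 1, 2,…, n, j > i. That is to say,

\[ \omega_{j} \cdot \sum\nolimits_{\alpha = -T}^{T} p_{ij}(l_{\alpha}) \cdot q(l_{\alpha}) - \omega_{i} = 0, \quad i, j = 1, 2, \ldots, n, \; j > i. \]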

As per Definition 2 and Theorem 3, \( \left| \omega_{j} \cdot \sum\nolimits_{\alpha = -T}^{T} p_{ij}(l_{\alpha}) \cdot q(l_{\alpha}) - \omega_{i} \right| = \left| \omega_{i} \cdot \sum\nolimits_{\alpha = -T}^{T} p_{ji}(l_{\alpha}) \cdot q(l_{\alpha}) - \omega_{j} \right| \) for i, j = 1, 2,…, n, i ≠ j, and \( \left| \omega_{j} \cdot \sum\nolimits_{\alpha = -T}^{T} p_{ij}(l_{\alpha}) \cdot q(l_{\alpha}) - \omega_{i} \right| = 0 \) if i = j.

Moreover, 0 < ωi and \( \sum\nolimits_{i = 1}^{n} {\omega_{i} } = 1 \). Hence, the following conclusion is obtained:

\( \sum\nolimits_{\alpha = -T}^{T} p_{ij}(l_{\alpha}) \cdot q(l_{\alpha}) = \omega_{i} / \omega_{j} \) for all i, j ∈ {1, 2,…, n}, which shows that D can be represented by (8).

Therefore, we can conclude from Definition 15 that the DLPR is expectedly consistent. □
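Conversely, once the expectations satisfy Σα pij(lα)q(lα) = ωi/ωj, the priority vector can be read back from them. The sketch below illustrates this with the row geometric mean method. This standard prioritization technique for multiplicative preference relations is swapped in here for illustration and is not the goal programming model of this section. For an exactly consistent matrix fij = ωi/ωj, it returns ω normalized to sum to one. The vector used is the one reported in Example 3, whose components sum to 1.0001 because of rounding. The helper names are illustrative.

```python
import math


def consistent_mpr(w):
    """Build f_ij = w_i / w_j, the form the expectations take when M = 0."""
    return [[wi / wj for wj in w] for wi in w]


def row_geometric_mean(F):
    """Priority vector via normalized row geometric means (a standard AHP
    technique used here for illustration, not the model of Sect. 5.3)."""
    n = len(F)
    gm = [math.prod(row) ** (1 / n) for row in F]
    total = sum(gm)
    return [x / total for x in gm]


w = [0.4886, 0.2342, 0.1712, 0.1061]          # priority vector from Example 3
recovered = row_geometric_mean(consistent_mpr(w))
print([round(x, 4) for x in recovered])
```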

Example 3

Consider another DLPR D = (dij)4×4, defined on the nine-term linguistic term set L = {lα | α = − 4, …, − 1, 0, 1, …, 4}.

As per (21), one can build the following model:

By solving (22), one can obtain

\( \lambda_{12}^{ - } = \lambda_{12}^{ + } = \lambda_{13}^{ - } = \lambda_{13}^{ + } = \lambda_{14}^{ - } = \lambda_{14}^{ + } = \lambda_{23}^{ - } = \lambda_{23}^{ + } = \lambda_{24}^{ - } = \lambda_{24}^{ + } = \lambda_{34}^{ - } = 0,\lambda_{34}^{ + } = 0.0073 \), M = 0.0073; ω = (ω1, ω2,…, ωn)T = (0.4886, 0.2342, 0.1712, 0.1061)T; and q(l−4) = 0.2, q(l−3) = 0.3308, q(l−2) = 0.3521, q(l−1) = 0.3697, q(l0) = 1, q(l1) = 2.7047, q(l2) = 2.84, q(l3) = 3.0231, q(l4) = 5.

Based on the resulting numerical scales, the MPR F, associated with D, is obtained:

6 An application of the proposal and analysis

This section first reconsiders the football player evaluation problem investigated in [47] to show how the proposed models work in practice. A discussion and a comparative analysis are then conducted to demonstrate the validity of the developed method.

6.1 Application to the football player evaluation problem

Evaluating football players is one of the most important activities in building an excellent football team [7, 38, 46, 47]. Suppose that three football coaches (t1, t2, t3) are invited to assess the overall level of four football players (x1, x2, x3, x4). Because the players' past performances are uncertain, the coaches express their opinions over the players using DLPRs based on the following linguistic term set: L = {l−5 = absolutely poorer, l−4 = very much poorer, l−3 = much poorer, l−2 = moderately poorer, l−1 = slightly poorer, l0 = indifferent, l1 = slightly better, l2 = moderately better, l3 = much better, l4 = very much better, l5 = absolutely better}.

The original DLPRs D1, D2 and D3 over the players are listed in Matrices 1–3. For example, when comparing the performances of player x1 with those of x3 over their past ten matches, Coach t1 judges x1 to be moderately better than x3 in eight matches and very much better than x3 in the remaining two. This comparison is therefore recorded as {(l2, 0.8), (l4, 0.2)}, the entry d13 of Matrix D1:

Suppose that the scale functions of D1 and D2 are additive and the scale function of D3 is multiplicative, but all of them are personalized and unknown. In the case of the first DLPR D1, one can establish the following optimization model using (12):

By solving (23), one can get that:

\( \pi_{12}^{ - } = \pi_{12}^{ + } = \pi_{13}^{ - } = \pi_{13}^{ + } = \pi_{14}^{ + } = \pi_{23}^{ - } = \pi_{23}^{ + } = \pi_{24}^{ - } = \pi_{24}^{ + } = \pi_{34}^{ - } = 0,\pi_{14}^{ - } = 0.1725,\pi_{34}^{ + } = 0.1229 \), J1 = 0.2954; ω1 = (ω1, ω2,…, ωn)T = (0.4475, 0.2992, 0.1608, 0.0925)T, and g(l−5) = 0, g(l−4) = 0.2, g(l−3) = 0.3, g(l−2) = 0.3958, g(l−1) = 0.4458, g(l0) = 0.5, g(l1) = 0.5542, g(l2) = 0.6042, g(l3) = 0.7, g(l4) = 0.8, g(l5) = 1.

For the second DLPR D2, one can build a similar optimization model using (12) and get that:

\( \pi_{12}^{ - } = \pi_{12}^{ + } = \pi_{13}^{ - } = \pi_{13}^{ + } = \pi_{14}^{ + } = \pi_{23}^{ - } = \pi_{23}^{ + } = \pi_{24}^{ - } = \pi_{24}^{ + } = \pi_{34}^{ - } = \pi_{34}^{ + } = 0,\pi_{14}^{ - } = 0.1898 \), J2 = 0.1898; ω2 = (ω1, ω2,…, ωn)T = (0.5074, 0.3451, 0.1475, 0.0000)T; and g(l−5) = 0, g(l−4) = 0.1885, g(l−3) = 0.2571, g(l−2) = 0.3578, g(l−1) = 0.4078, g(l0) = 0.5, g(l1) = 0.5922, g(l2) = 0.6422, g(l3) = 0.7429, g(l4) = 0.8115, g(l5) = 1.

In the case of the third DLPR D3, one can build the following optimization model using (21):

By solving (24), one can obtain that:

\( \lambda_{12}^{ - } = \lambda_{12}^{ + } = \lambda_{13}^{ - } = \lambda_{13}^{ + } = \lambda_{14}^{ - } = \lambda_{14}^{ + } = \lambda_{23}^{ - } = \lambda_{23}^{ + } = \lambda_{24}^{ - } = \lambda_{24}^{ + } = \lambda_{34}^{ + } = 0,\lambda_{34}^{ - } = 0.029 \), M3 = 0.029; ω3 = (ω1, ω2,…, ωn)T = (0.5519, 0.2268, 0.1284, 0.0929)T; and q(l−5) = 0.1667, q(l−4) = 0.175, q(l−3) = 0.2879, q(l−2) = 0.5, q(l−1) = 0.8188, q(l0) = 1, q(l1) = 1.2212, q(l2) = 2, q(l3) = 3.4732, q(l4) = 5.7131, q(l5) = 6.

Based on the resulting numerical scales, the FPRs R1 and R2 and the MPR F3, associated with D1, D2 and D3, respectively, are obtained.
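To make the link between distribution assessments and FPR entries concrete, the sketch below rebuilds the entry d13 of D1 from the match counts described above and evaluates it under coach t1's PNS. It assumes that each FPR entry is the numerical expectation Σα p13(lα)g(lα) of the corresponding DLPR entry. It also assumes that reciprocity gives d31 = {(l−2, 0.8), (l−4, 0.2)}. The helper names are illustrative.

```python
from fractions import Fraction


def distribution_from_counts(counts):
    """{alpha: matches judged as l_alpha} -> {alpha: proportion of matches}."""
    total = sum(counts.values())
    return {alpha: Fraction(c, total) for alpha, c in sorted(counts.items())}


def reciprocal(dist):
    """Assumed DLPR reciprocity: d_ji puts mass p_ij(l_alpha) on l_-alpha."""
    return {-alpha: p for alpha, p in dist.items()}


def expectation(dist, scale):
    """Numerical expectation of a distribution assessment under a scale."""
    return sum(float(p) * scale[alpha] for alpha, p in dist.items())


# PNS of coach t1 derived from D1 with model (12)
g1 = {-5: 0, -4: 0.2, -3: 0.3, -2: 0.3958, -1: 0.4458, 0: 0.5,
      1: 0.5542, 2: 0.6042, 3: 0.7, 4: 0.8, 5: 1}

# Coach t1 on x1 vs x3: l2 in eight of ten matches, l4 in the other two
d13 = distribution_from_counts({2: 8, 4: 2})   # {2: 4/5, 4: 1/5}
r13 = expectation(d13, g1)
r31 = expectation(reciprocal(d13), g1)
print(round(r13, 4), round(r31, 4))  # 0.6434 0.3566
```

Because the scale is symmetric, r13 + r31 = 1, so the derived matrix is additively reciprocal as an FPR should be.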

Suppose that by using the direct assessment method in [30], the relative weights of the coaches are determined as W = (w1, w2, w3)T = (0.25, 0.35, 0.40)T. Then, the following ranking matrix is obtained:

In accordance with the collective priority vector ωc = (0.5102, 0.2863, 0.1432, 0.0603)T, a final ranking of the players is generated as x1 ≻ x2 ≻ x3 ≻ x4, signifying that x1 is the best football player and x4 the worst one.

6.2 Further discussion

In the above analysis, (12) is employed for the DLPRs D1 and D2 to derive the PNSs and the corresponding priority vectors when solving the football player evaluation problem. We call this the first situation. In what follows, three other situations are considered.

In the second situation, (16) is used for both D1 and D2. For D1, the following optimization model is constructed by using (16):

By solving (26), one can obtain that:

\( \eta_{12}^{ - } = \eta_{12}^{ + } = \eta_{13}^{ + } = \eta_{14}^{ - } = \eta_{23}^{ - } = \eta_{23}^{ + } = \eta_{24}^{ - } = \eta_{24}^{ + } = \eta_{34}^{ + } = 0,\eta_{13}^{ - } = 0.0463,\eta_{14}^{ + } = 0.0139,\eta_{34}^{ - } = 0.0464 \), Z1 = 0.1066; ω1 = (ω1, ω2,…, ωn)T = (0.6304, 0.2215, 0.0819, 0.0662)T; and g(l−5) = 0, g(l−4) = 0.1, g(l−3) = 0.15, g(l−2) = 0.2, g(l−1) = 0.3, g(l0) = 0.5, g(l1) = 0.7, g(l2) = 0.8, g(l3) = 0.85, g(l4) = 0.9, g(l5) = 1.
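The reported deviation η13− can be cross-checked by hand. The sketch assumes that the goal of (16) for the pair (1, 3) is Z13 = (ω1 + ω3)·Σα p13(lα)g(lα) − ω1, the quantity whose absolute value is simplified in Sect. 5.2. It also assumes that a negative goal value is absorbed by the negative deviation. The entry d13 = {(l2, 0.8), (l4, 0.2)} of D1 is evaluated with the scale and priority vector just reported. The function names are illustrative.

```python
def expectation(dist, scale):
    """Numerical expectation of a distribution assessment under a scale."""
    return sum(p * scale[alpha] for alpha, p in dist.items())


def goal_value(w, i, j, dist, g):
    """Assumed goal Z_ij = (w_i + w_j) * E_ij - w_i (1-based indices)."""
    return (w[i - 1] + w[j - 1]) * expectation(dist, g) - w[i - 1]


# Scale and priority vector of D1 obtained with (16) in the second situation
g1 = {-5: 0, -4: 0.1, -3: 0.15, -2: 0.2, -1: 0.3, 0: 0.5,
      1: 0.7, 2: 0.8, 3: 0.85, 4: 0.9, 5: 1}
w1 = [0.6304, 0.2215, 0.0819, 0.0662]

z13 = goal_value(w1, 1, 3, {2: 0.8, 4: 0.2}, g1)
# Split into deviation variables with eta_minus * eta_plus = 0
eta_minus, eta_plus = max(0.0, -z13), max(0.0, z13)
print(round(eta_minus, 4), eta_plus)  # 0.0463 0.0
```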

Similarly, for the DLPR D2, one can construct the corresponding optimization model using (16) and obtain:

\( \eta_{12}^{ - } = \eta_{12}^{ + } = \eta_{13}^{ - } = \eta_{13}^{ + } = \eta_{14}^{ - } = \eta_{23}^{ - } = \eta_{23}^{ + } = \eta_{24}^{ + } = \eta_{34}^{ - } = \eta_{34}^{ + } = 0,\eta_{14}^{ + } = 0.0579,\eta_{24}^{ - } = 0.002 \), Z2 = 0.0599; ω2 = (ω1, ω2,…, ωn)T = (0.5173, 0.2868, 0.1293, 0.0666)T; and g(l−5) = 0, g(l−4) = 0.05, g(l−3) = 0.1, g(l−2) = 0.2416, g(l−1) = 0.3, g(l0) = 0.5, g(l1) = 0.7, g(l2) = 0.7584, g(l3) = 0.9, g(l4) = 0.95, g(l5) = 1.

According to the resulting numerical scales, the FPRs R1 and R2, associated with D1 and D2, respectively, are obtained.

As a result, one can get the following ranking matrix:

As per the collective priority vector ωc = (0.5594, 0.2465, 0.1171, 0.0770)T, a final ranking of the players is generated as x1 ≻ x2 ≻ x3 ≻ x4, showing that x1 is still the best choice and x4 the worst one.

In the third situation, (12) is used for the DLPR D1 while (16) is employed for the DLPR D2 to derive the PNSs and the corresponding priority vectors. The resulting ranking matrix is as follows:

Based on the collective priority vector ωc = (0.5137, 0.2659, 0.1368, 0.0836)T, one can get a final ranking of the players as x1 ≻ x2 ≻ x3 ≻ x4 which indicates that x1 is the best choice and x4 the worst one.

In the fourth situation, (16) is applied to the DLPR D1 while (12) is used for the DLPR D2. The associated ranking matrix is as follows:

In accordance with the collective priority vector ωc = (0.5560, 0.2669, 0.1235, 0.0537)T, the final ranking of the players is x1 ≻ x2 ≻ x3 ≻ x4, which is the same as the rankings generated in the first three situations.
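The four collective vectors agree, to four decimal places, with a weighted arithmetic mean of the individual priority vectors under W = (0.25, 0.35, 0.40)T. The aggregation step is not restated in this section, so the sketch below treats that mean as an inference from the reported numbers rather than as the paper's stated operator. It recomputes ωc for all four situations and the ranking each induces. The helper names are illustrative.

```python
W = [0.25, 0.35, 0.40]                       # coach weights from Sect. 6.1

# Individual priority vectors reported in Sects. 6.1 and 6.2
w1_m12 = [0.4475, 0.2992, 0.1608, 0.0925]    # D1 via (12)
w1_m16 = [0.6304, 0.2215, 0.0819, 0.0662]    # D1 via (16)
w2_m12 = [0.5074, 0.3451, 0.1475, 0.0000]    # D2 via (12)
w2_m16 = [0.5173, 0.2868, 0.1293, 0.0666]    # D2 via (16)
w3 = [0.5519, 0.2268, 0.1284, 0.0929]        # D3 via (21)

situations = {
    1: (w1_m12, w2_m12), 2: (w1_m16, w2_m16),
    3: (w1_m12, w2_m16), 4: (w1_m16, w2_m12),
}


def collective(vectors, weights):
    """Weighted arithmetic mean of individual priority vectors (inferred)."""
    return [sum(wk * v[i] for wk, v in zip(weights, vectors))
            for i in range(len(vectors[0]))]


def ranking(vec):
    """Player indices (1-based) sorted from best to worst."""
    return sorted(range(1, len(vec) + 1), key=lambda i: -vec[i - 1])


for s, (a, b) in situations.items():
    wc = collective([a, b, w3], W)
    print(s, [round(x, 4) for x in wc], ranking(wc))
```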

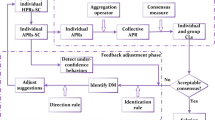

Comparing the first situation with the second, one finds that the numerical scales derived from D1 (or D2) by using (12) differ markedly from those derived by using (16). This is because (12) is developed based on the expected additive consistency of DLPRs, whereas (16) is based on the expected multiplicative consistency. In addition, to show clearly how the three coaches differ in their understanding of the linguistic terms, their PNSs are shown in Table 1 and Fig. 1.

Table 1 and Fig. 1 make it clear that each coach has an individualized numerical scale for the linguistic terms, so the same words have different meanings for different coaches.
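The individual differences can also be quantified. The sketch below compares, term by term, the additive PNSs that model (12) yields for coaches t1 and t2. The multiplicative PNS of t3 lives on a different scale and is left out. The largest gap, about 0.0429, occurs at both l−3 and l3, and the gap is zero at l−5, l0 and l5, where the scales are fixed.

```python
# Additive PNSs of coaches t1 and t2 obtained with model (12) in Sect. 6.1
g_t1 = {-5: 0, -4: 0.2, -3: 0.3, -2: 0.3958, -1: 0.4458, 0: 0.5,
        1: 0.5542, 2: 0.6042, 3: 0.7, 4: 0.8, 5: 1}
g_t2 = {-5: 0, -4: 0.1885, -3: 0.2571, -2: 0.3578, -1: 0.4078, 0: 0.5,
        1: 0.5922, 2: 0.6422, 3: 0.7429, 4: 0.8115, 5: 1}

# Absolute difference in the numerical meaning of each linguistic term
gaps = {a: abs(g_t1[a] - g_t2[a]) for a in g_t1}
largest = max(gaps.values())
print({a: round(d, 4) for a, d in gaps.items()})
print(round(largest, 4))
```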

6.3 Comparative study

Now the proposed method is compared with the method developed by Zhang et al. [47] to highlight its feasibility and validity. The method in [47] is based on a consensus model that includes two rules over DLPRs, namely an identification rule (IR) and an adjustment rule (AR). Below, that method is used to analyze the football player evaluation problem.

Following the procedure of that method, the consensus level is obtained:

Given a pre-established consensus level CL = 0.8, since CL{D1, D2, D3} = 0.7555 < 0.8, we identify the coach whose DLPR needs to be adjusted by calculating the index IR:

Thus, min{IR1, IR2, IR3} = IR1; that is, Coach t1 needs to adjust his/her DLPR D1. With the adjustment parameter set to 0.55, the adjusted DLPR D1 is as presented in Table 2.

The consensus level is then recalculated:

Because CL{D1, D2, D3} = 0.814 > CL = 0.8, the consensus reaching process ends.

The DLPRs D1, D2 and D3 are combined by utilizing the aggregation operator in [47] to form a collective one. The resulting collective DLPR Dc is presented in Table 3.

By using (1), the following expectation matrix Ec associated with Dc is obtained:

Next, the following expectation values are obtained:

Finally, according to the expectation values, a ranking of the players is generated as x1 ≻ x2 ≻ x3 ≻ x4, showing that x1 is the best alternative and x4 the worst one.

The proposed method and the one in [47] generate the same ranking of the four players, which supports the validity of the developed method. However, the method in [47] is suitable only for situations in which linguistic terms have identical numerical scales for different DMs, whereas the proposed method allows DMs to retain their individual differences in understanding the linguistic terms used in DLPRs. In this sense, the proposed method is more flexible than the one in [47]. Furthermore, the computational complexity of the developed approach is lower than that of the approach of Zhang et al.

7 Conclusions

This paper applies the idea of setting PNSs of linguistic terms for different DMs in decision making with DLPRs to manage the CWW statement that words might exhibit different meanings for different people. First, using different types of numerical scales, this paper connects DLPRs to FPRs and MPRs. This link allows the expected consistency of a DLPR to be defined from the perspective of its associated numerical preference relations. Then, based on the expected consistency, goal programming models are proposed to derive PNSs from DLPRs. Finally, the theoretical results are applied to a practical problem of evaluating and selecting football players. The results show that people can differ in how they understand the meaning of words.

Overall, this study fills a gap: no previous study considers the PIS of linguistic terms among DMs when dealing with CWW in the process of solving linguistic decision problems with DLPRs. Its novelty is twofold: (1) the definition of expected consistency for DLPRs and (2) the exploration of goal programming approaches to derive the PIS of linguistic terms from DLPRs. In the future, personalizing individual semantics in large-scale group decision problems [12, 51] with multigranular linguistic information [16, 29] and unbalanced linguistic information [7, 8] remains worth examining to enrich the related studies in group decision making.

References

Alonso S, Cabrerizo FJ, Chiclana F, Herrera F, Herrera-Viedma E (2009) Group decision making with incomplete fuzzy linguistic preference relations. Int J Intell Syst 24:201–222

Dong YC, Xu YF, Li HY, Dai M (2008) A comparative study of the numerical scales and the prioritization methods in AHP. Eur J Oper Res 186:229–242

Dong YC, Xu YF, Yu S (2009) Linguistic multiperson decision making based on the use of multiple preference relations. Fuzzy Set Syst 160:603–623

Dong YC, Xu YF, Yu S (2009) Computing the numerical scale of the linguistic term set for the 2-tuple fuzzy linguistic representation model. IEEE Trans Fuzzy Syst 17:1366–1378

Dong YC, Zhang GQ, Hong WC, Yu S (2013) Linguistic computational model based on 2-tuples and intervals. IEEE Trans Fuzzy Syst 21:1006–1018

Dong YC, Herrera-Viedma E (2015) Consistency-driven automatic methodology to set interval numerical scales of 2-tuple linguistic term sets and its use in the linguistic GDM with preference relation. IEEE Trans Cybern 45:780–792

Dong YC, Wu YZ, Zhang HJ, Zhang GG (2015) Multi-granular unbalanced linguistic distribution assessments with interval symbolic proportions. Knowl Based Syst 82:139–151

Dong YC, Li CC, Herrera F (2016) Connecting the linguistic hierarchy and the numerical scale for the 2-tuple linguistic model and its use to deal with hesitant unbalanced linguistic information. Inf Sci 367:259–278

Dutta B, Guha D, Mesiar R (2015) A model based on linguistic 2-tuples for dealing with heterogeneous relationship among attributes in multi-expert decision making. IEEE Trans Fuzzy Syst 23:1817–1831

Gao J, Xu ZS, Ren PJ, Liao HC (2018) An emergency decision making method based on the multiplicative consistency of probabilistic linguistic preference relations. Int J Mach Learn Cybern 10:1–17

Ghadikolaei AS, Madhoushi M, Divsalar M (2018) Extension of the VIKOR method for group decision making with extended hesitant fuzzy linguistic information. Neural Comput Appl 30:3589–3602

Gou XJ, Xu ZS, Herrera F (2018) Consensus reaching process for large-scale group decision making with double hierarchy hesitant fuzzy linguistic preference relations. Knowl Based Syst 157:20–33

Herrera-Viedma E, Herrera F, Chiclana F, Luque M (2004) Some issues on consistency of fuzzy preference relations. Eur J Oper Res 154:98–109

Herrera-Viedma E, Martinez L, Mata F, Chiclana F (2005) A consensus support system model for group decision-making problems with multigranular linguistic preference relations. IEEE Trans Fuzzy Syst 13:644–658

Herrera F, Herrera-Viedma E, Verdegay JL (1996) A model of consensus in group decision making under linguistic assessments. Fuzzy Set Syst 78:73–87

Herrera F, Herrera-Viedma E, Martinez L (2000) A fusion approach for managing multi-granularity linguistic term sets in decision making. Fuzzy Set Syst 114:43–58

Herrera F, Herrera-Viedma E, Chiclana F (2001) Multiperson decision-making based on multiplicative preference relations. Eur J Oper Res 129:372–385

Herrera F, Martinez L (2001) A model based on linguistic 2-tuples for dealing with multigranular hierarchical linguistic contexts in multi-expert decision-making. IEEE Trans Syst Man Cybern B Cybern 31:227–234

Herrera F, Alonso S, Chiclana F, Herrera-Viedma E (2009) Computing with words in decision making: foundations, trends and prospects. Fuzzy Optim Decis Mak 8:337–364

Krishankumar R, Ravichandran KS, Ahmed MI, Kar S, Tyagi SK (2019) Probabilistic linguistic preference relation-based decision framework for multi-attribute group decision making. Symmetry 11:2

Li CC, Dong YC, Herrera F, Herrera-Viedma E, Martinez L (2017) Personalized individual semantics in computing with words for supporting linguistic group decision making. An application on consensus reaching. Inf Fusion 33:29–40

Li CC, Rodríguez RM, Herrera F, Martinez L, Dong YC (2017) A consistency-driven approach to set personalized numerical scales for hesitant fuzzy linguistic preference relations. In: IEEE international conference on fuzzy systems (FUZZ-IEEE), Naples, Italy. IEEE, pp 1–5

Li CC, Rodriguez RM, Martinez L, Dong YC, Herrera F (2018) Personalized individual semantics based on consistency in hesitant linguistic group decision making with comparative linguistic expressions. Knowl Based Syst 145:156–165

Liu HB, Ma Y, Jiang L (2019) Managing incomplete preferences and consistency improvement in hesitant fuzzy linguistic preference relations with applications in group decision making. Inf Fusion 51:19–29

Martinez L, Herrera F (2012) An overview on the 2-tuple linguistic model for computing with words in decision making: extensions, applications and challenges. Inf Sci 207:1–18

Massanet S, Riera JV, Torrens J, Herrera-Viedma E (2016) A model based on subjective linguistic preference relations for group decision making problems. Inf Sci 355:249–264

Mendel JM, Wu D (2010) Perceptual computing: aiding people in making subjective judgments. IEEE Press and John Wiley, New Jersey

Mendel JM, Zadeh LA, Trillas E, Yager R, Lawry J, Hagras H, Guadarrama S (2010) What computing with words means to me. IEEE Comput Intell Mag 5:20–26

Morente-Molinera JA, Perez IJ, Urena MR, Herrera-Viedma E (2015) On multi-granular fuzzy linguistic modeling in group decision making problems: a systematic review and future trends. Knowl Based Syst 74:49–60

Ölçer Aİ, Odabaşi AY (2005) A new fuzzy multiple attributive group decision making methodology and its application to propulsion/manoeuvring system selection problem. Eur J Oper Res 166:93–114

Pang Q, Wang H, Xu ZS (2016) Probabilistic linguistic term sets in multi-attribute group decision making. Inf Sci 369:128–143

Rodriguez RM, Martinez L, Herrera F (2013) A group decision making model dealing with comparative linguistic expressions based on hesitant fuzzy linguistic term sets. Inf Sci 241:28–42

Saaty TL (1980) The analytic hierarchy process. McGraw-Hill, New York

Salo AA, Hämäläinen RP (1997) On the measurement of preferences in the analytic hierarchy process. J Multi-Criteria Decis Anal 6:309–319

Tanino T (1984) Fuzzy preference orderings in group decision-making. Fuzzy Set Syst 12:117–131

Wang JH, Hao JY (2006) A new version of 2-tuple fuzzy linguistic representation model for computing with words. IEEE Trans Fuzzy Syst 14:435–445

Wang JH, Hao J (2007) An approach to computing with words based on canonical characteristic values of linguistic labels. IEEE Trans Fuzzy Syst 15:593–604

Wu YZ, Li CC, Chen X, Dong YC (2018) Group decision making based on linguistic distributions and hesitant assessments: maximizing the support degree with an accuracy constraint. Inf Fusion 41:151–160

Wu ZB, Xu JP (2016) Managing consistency and consensus in group decision making with hesitant fuzzy linguistic preference relations. Omega Int J Manag S 65:28–40

Xu JP, Wu ZB (2013) A maximizing consensus approach for alternative selection based on uncertain linguistic preference relations. Comput Ind Eng 64:999–1008

Xu ZS (1999) Study on the relation between two classes of scales in AHP. Syst Eng Theory Pract 19:311–314

Xu ZS (2004) EOWA and EOWG operators for aggregating linguistic labels based on linguistic preference relations. Int J Uncertain Fuzz 12:791–810

Xu ZS (2007) A survey of preference relations. Int J Gen Syst 36:179–203

Xu ZS (2008) Group decision making based on multiple types of linguistic preference relations. Inf Sci 178:452–467

Zadeh LA (1975) The concept of a linguistic variable and its application to approximate reasoning-I. Inf Sci 8:199–249

Zhang BW, Liang HM, Gao Y, Zhang GQ (2018) The optimization-based aggregation and consensus with minimum-cost in group decision making under incomplete linguistic distribution context. Knowl Based Syst 162:92–102

Zhang GQ, Dong YC, Xu YF (2014) Consistency and consensus measures for linguistic preference relations based on distribution assessments. Inf Fusion 17:46–55

Zhang YX, Xu ZS, Wang H, Liao HC (2016) Consistency-based risk assessment with probabilistic linguistic preference relation. Appl Soft Comput 49:817–833

Zhang YX, Xu ZS, Liao HC (2017) A consensus process for group decision making with probabilistic linguistic preference relations. Inf Sci 414:260–275

Zhang YX, Xu ZS, Liao HC (2018) An ordinal consistency-based group decision making process with probabilistic linguistic preference relation. Inf Sci 467:179–198

Zhang Z, Guo CH, Martinez L (2017) Managing multigranular linguistic distribution assessments in large-scale multiattribute group decision making. IEEE Trans Syst Man Cybern Syst 47:3063–3076

Zhang ZM, Wu C (2014) On the use of multiplicative consistency in hesitant fuzzy linguistic preference relations. Knowl Based Syst 72:13–27

Acknowledgements

This research was supported by the State Scholarship Fund of China (No. 201706690025), the Foundation for Innovative Research Groups of the Natural Science Foundation of China (No. 71521001) and the National Natural Science Foundation of China (Nos. 71690230, 71690235, 71501056, 71601066, 71501055, 71501054 and 71571166).


Ethics declarations

Conflicts of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.


About this article

Cite this article

Tang, X., Zhang, Q., Peng, Z. et al. Derivation of personalized numerical scales from distribution linguistic preference relations: an expected consistency-based goal programming approach. Neural Comput & Applic 31, 8769–8786 (2019). https://doi.org/10.1007/s00521-019-04466-5


DOI: https://doi.org/10.1007/s00521-019-04466-5