Abstract

Estimating the uniaxial compressive strength (UCS) of travertine rocks with an indirect modeling approach and machine learning algorithms is useful as models can reduce the cost and time required to obtain accurate measurements of UCS, which is important for the prediction of rock failure. This approach can also address the limitations encountered in preparing detailed measured samples using direct measurements. The current paper developed and compared the performance of three standalone tree-based machine learning models (random forest (RF), M5 model tree, and multivariate adaptive regression splines (MARS)) for the prediction of UCS in travertine rocks from the Azarshahr area of northwestern Iran. Additionally, an ensemble committee-based artificial neural network (ANN) model was developed to integrate the advantages of the three standalone models and obtain further accuracy in UCS prediction. To date, an ensemble approach for estimating UCS has not been explored. To construct and validate the models, a set of rock test data including p-wave velocity (Vp (Km/s)), Schmidt Hammer (Rn), porosity (n%), point load index (Is (MPa)), and UCS (MPa) were acquired from 93 travertine core samples. To develop the ensemble tree-based machine learning model, the input matrix representing Vp, Rn, n%, and Is data with the corresponding target variable (i.e., UCS) was incorporated with a ratio of 70:15:15 (train: validate: test). Results indicated that a standalone MARS model outperformed all other standalone tree-based models in predicting UCS. The ANN-committee model, however, obtained the best performance with an r-value of approximately 0.890, an RMSE of 3.980 MPa, an MAE of 3.225 MPa, a WI of 0.931, and an LMI of 0.537, demonstrating the improved accuracy of the ensemble model for the prediction of UCS relative to the standalone models. The results suggest that the proposed ensemble committee-based model is a useful approach for predicting the UCS of travertine rocks with a limited set of model-designed datasets.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Structural rock characteristics are important for geological studies, mining, petroleum engineering, and geotechnical surveys. Lithology and uniaxial compressive strength (UCS) are two variables used in the prediction of general rock failure. Therefore, measuring and estimating the UCS of rock materials is important for intact rock mass classification, foundation construction, tunneling, slope stability issues, and other rock failure criteria [1]. Accurate estimation of UCS, however, remains a challenging, yet important problem in a range of engineering disciplines.

The UCS of rocks can be measured in both the field and laboratory, through direct and indirect approaches. The International Society for Rock Mechanics (ISRM) has standardized the procedure for direct measurement, which involves producing high-quality rock core samples in engineering laboratories. The procedure requires destructive tests and is time-consuming and expensive [1]. Indirect determination of UCS can be carried out through various methods including the point load strength index test, block punch strength index test, and the Schmidt hammer test. These methods generally require many core samples, expensive devices, as well as significant time and funding [2]. Extraction of rock samples with the specific dimensions required for laboratory testing purposes is not always possible. In such cases, it is important to develop other methods to define the correlation of physio-mechanical features of materials, where one attribute can be predicted from another. In the preliminary stage of planning and design activities, understanding property interdependencies is useful [3]. Many studies have investigated the possibility of estimating UCS according to other material features, since the methods used to measure other features are relatively rapid, incur low costs, and are easy to execute in comparison with the ISRM UCS test.

The indirect estimation of UCS can be performed using conventional regression methods, which propose a functional relationship between underlying variables related to UCS. However, due to the evolution of computers, new techniques based on artificial intelligence (AI) that employ machine learning have been utilized to create predictive models that can estimate essential parameters [4,5,6,7]. Many studies have focused on the indirect estimation of rock UCS with AI. Table 1 lists the previous studies that have applied AI models to the prediction of UCS.

Most of the above-mentioned AI models, however, are complex and computationally costly during the training processes. As such, tree-based machine learning models have become increasingly explored for regression problems because they are relatively simple, and have relatively lower computational costs [17, 18]. Although the aforementioned research studies confirmed that AI models showed significant advantages for UCS estimation, a comparative study among tree-based machine learning models (e.g., random forest (RF), M5 model tree, and multivariate adaptive regression splines (MARS)) has been not carried out. Moreover, developing an ensemble-based multi-model approach is useful as it integrates the advantages of different models. Thus, the current study developed an artificial neural network (ANN)-based committee ensemble model with three tree-based models. To the best of the authors’ knowledge, this study represents the first time such an ensemble model has been developed in this field.

In this study, data samples from travertine rocks were collected from different quarries in the Azarshahr area of northwest Iran, and the relationships between UCS (i.e., the target variable) and other rock properties (i.e., the predictor variables) were investigated. The goals of this research included: (1) development of single (or standalone) nonlinear tree-based machine learning models that utilized the RF, the M5 model tree, and MARS algorithms to model UCS, (2) comparison of the aforementioned models’ performances to select the best approach, and (3) integration of the advantages of the standalone tree-based machine learning models to construct and evaluate an ensemble committee-based model for USC prediction through application of an ANN algorithm. The purpose of this research was to propose a new ensemble-based model that could potentially lead to improved prediction accuracy, relative to standalone models, when applied to the prediction of UCS of travertine rock.

2 Materials and methods

2.1 Study area and data collection

Travertine, which is characterized by its high porosity, fine grain, and banded structure, is a particular form of carbonate deposit (also referred to as a type of limestone). It is deposited by hot spring water containing Ca2+ and CO32− and is mainly found in fault zones, karstic caves, and around spring cones. The travertine in the Azarshahr area is a fissure-ridge type, which extends to the eastern part of Urmia Lake in northwestern Iran [7].

A geological map of the study area and location of travertine formations is illustrated in Fig. 1. The active quaternary Sahand volcanic complex, near the travertine ridges, has had a significant impact on the Jurassic and Cretaceous carbonate solution, as well as the stained travertine layers [19]. Different colored travertine, aragonite, and onyx quarries exist in the area, which contain up to approximately 560 million m3 of travertine.

Travertine samples, for modeling purposes, were obtained from ten quarries in four different provinces in the area. Overall, 30 travertine blocks were collected, each with the approximate dimension of 40 × 40 × 20 cm. Samples were then transferred to the Rock Mechanics Laboratory at Tarbiat Modarres University, where rock cores were prepared with a core-drilling machine. The ratio of the length to the diameter of core samples was found to be approximately two. The edges of the cores were subsequently cut parallel and smoothed. Rock mechanics tests were carried out on the 93 core samples prepared from the travertine rock blocks. Porosity (n%), P-wave velocity [Vp (Km/s)], Schmidt rebound hardness (Rn), Is (MPa), and UCS (MPa) were the primary physical and mechanical properties measured by the laboratory tests, in accordance with the methods recommended by the ISRM [20].

Histogram plots for each test are presented in Fig. 2. The UCS values ranged between 37.5 and 67.8 MPa (with a median value of 54.07 MPa). Based on the ISRM [20] UCS classification, the rock samples were categorized as medium (25–50 MPa) to high (50–100 MPa) strength rocks.

2.2 Multiple linear and nonlinear regression models

In order to identify the relationships between multiple independent or explanatory variables (Xi) and a dependent variable (Yi), multiple linear regression (MLR) was used, based on the assumption of linearity between Xi and Yi. The MLR equation is shown in Eq. (1) [21, 22].

where k denotes the number of observed values, \(a\) represents the intercept, and \(\beta\) is the slope or coefficient.

A multiple nonlinear regression (MNLR) model was also used. In contrast to MLR, MNLR assumes a nonlinear association between the dependent variable Yi and the explanatory variables Xi. The MNLR formula is shown in Eq. (2) [22, 23].

where \(a\) denotes the intercept, \(\beta\) represents the slope (also called the regression coefficient), and k is the number of observed values. For predictions, fitted multiple regression equations can be used to estimate the value of Y with new known values of X.

2.3 Objective model 1: Random Forest

The first objective algorithm used in this study was the random forest (RF), a model created by Ho [24]. An extension of the algorithm was later developed by Breiman [25]. The RF, known as an ensemble method, produces a set of repeated predictions of the same phenomenon by combining multiple decision tree algorithms. The RF includes a set of tree-structured classifiers {h(x, k), k = 1,…}, where {k} represents independent and identically distributed random vectors, in which each tree casts a unit vote for the most widespread class at input x [25].

Decision trees can be grouped into classification or regression types. For classification purposes, an RF considers a class vote from each tree and subsequently classifies the features that receive the majority of votes. In contrast, for regression purposes, the predicted values from each tree at a target point, x, are averaged [26]. A set of conditions form a regression tree (RT), which is organized from a root to a terminal node [27, 28]. An RT is introduced through recursive splitting and by performing multiple regressions on the model’s training dataset. For each internal node of a rule (of the tree), data partitioning is performed repeatedly until a previously-specified stop condition is reached. Each terminal node, or leaf, has a simple regression model attachment applied specifically to that node section. Upon completion of the tree’s induction process, pruning action is used to ameliorate the tree’s generalization capacity by minimizing its structural complexity. In each node, the number of cases can be considered as the pruning criteria [29].

According to Breiman et al. [27], the RT’s induction process needs to select the optimal splitting measurement vectors at an initial stage. Binary splits are created by dependent variables or the parent node (root), in which the child nodes are purer than the roots. Throughout this step, the RT explores all candidate splits to discover the best split, s, which maximizes the purity of the produced tree (as indicated by the largest reduction in the impurity). The RF model structure used for the prediction of UCS is shown in Fig. 3. The equation for the reduction in impurity that results from a given split is shown in Eq. (3).

where s represents a particular split at node t, in which node t is divided by s into two different child nodes; the left child node (tL) and the right child node (tR). The pL and pR are the proportion of data cases in t partitioned to the tL and tR, respectively. The impurity measure at node t is defined by i(t) and the impurity for the left and right child nodes are defined by (tR) and i(tL), respectively. Δi(s,t) is the difference between the impurity measure for node t and the impurity measures for the tR and tL.

2.4 Objective model 2: M5 Model Tree

The second objective algorithm used in the current study, the M5 model tree, was first proposed by Quinlan [30]. This model, with linear regression functions at the terminal (leaf) nodes, has been used for continuous-class learning purposes and more recently for engineering problems [29,30,31]. The M5 model tree is a type of binary decision tree, which is generally applied to categorical datasets. Furthermore, the algorithm can be applied to quantitative data, which is an advantage in comparison with other tree-based regression models [30, 33].

The M5 model tree is developed in two steps [34, 35]. In the first step, the input-target data are divided into subcategories, and a decision tree is created. The division of the data is carried out based on two factors; first, the treatment of the standard deviation of the class values, and second, the calculation of the expected decrease in this error as a consequence of testing each attribute at that node [36]. The standard deviation reduction (SDR) is computed, as shown in Eq. (4) [37].

where T expresses a set of examples that reach the node, Ti denotes the subset of examples that have the ith outcome of the potential set, and sd represents the standard deviation of the class values [34, 38].

As a result of the division process, the data in the child nodes have a smaller standard deviation than that of the parent nodes, and therefore, provide purer nodes. Next, the M5 model tree selects nodes with the highest expected error reduction after scanning all of the possible divisions in the resulting tree structure. As a result of this division, a large tree-like structure is frequently produced, which in turn, can cause over-fitting. The overgrown tree can be pruned by replacing sub-trees by linear regression functions in the second step of the modeling to avoid overfitting. By pruning, the accuracy of estimation can be significantly increased. Overall, the input space is divided into areas (i.e., the subspaces) and a linear regression model is created for each area [39]. The M5 model tree structure used for the prediction of UCS is provided in Fig. 4.

2.5 Objective model 3: Multivariate Adaptive Regression Splines

The third objective model used in this study, the multivariate adaptive regression splines (MARS) model, was initially proposed by Friedman [40]. MARS is a multivariate nonparametric technique used to predict continuous numeric results. MARS is a flexible technique for organizing relationships that contain interactions between a few variables; it can lead to a significant degree of accuracy in solving engineering problems (e.g., [31, 32]). Furthermore, the MARS model can estimate the basic practical relationship between input and output variables without any set assumptions [40, 41].

The MARS model aims to divide the solution space (i.e., the input-target matrix) into various intervals that indicate the feature space of the indicator variables. The individual splines are then fit to each interval [40]. Subsequently, for each data interval, a unique mathematical regression equation is determined. For each interval of the independent variable, a relationship to the output of the modeled system is developed according to the established mathematical equations. Every spline function is considered on a given interval, and the endpoints of the interval are called knots. This process can be carried out in two stepwise methods, forward and backward. A set of appropriate inputs are selected in the forward stepwise approach and consequently split, generating an over-fitted model with a high number of knots. In the backward procedure, which aims to improve prediction accuracy, the unnecessary variables are then removed from the previously selected set [42]. In order to uproot the repetitive knots, a pruning technique is used. The “basis function” (BF) is used to demonstrate each distinct interval of predictors, which are formed, as shown in Eqs. (5) and (6) [43].

where X is a predictor variable and c is a threshold value. In order to maintain the coherency of the BFs, two close splines are intersected at a knot. Accordingly, the function is connected to every input variable, which is used to characterize the location of the knots [42, 44]. The MARS model employs a two-sided truncated power function as the spline BFs, as shown in Eqs. (7) and (8) [45].

where \(q \left( { \ge 0} \right)\) represents the power to which the splines are raised, along with the degree of evenness of the resulting function. A schematic view of the MARS model structure for the prediction of UCS is shown in Fig. 5.

2.6 Ensemble model: the ANN-committee-based model

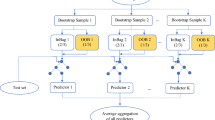

Previous studies (e.g., [7, 43, 46]) that designed and evaluated ensemble-based models revealed their improved performances in comparison with individual (standalone) machine learning models in a number of engineering problems. To develop an ensemble model for UCS prediction in the present study, the ANN-committee-based model, which is a multi-model ensemble framework, was used. A feed-forward multilayer perceptron (MLP) was employed to construct the ANN-committee model. The MLP was organized into three layers, including an input, one or more hidden layers, and an output layer, as shown in Eq. (9) [7, 46, 47].

where \(f_{\text{h}}\) is the activation function of the hidden neuron, \(f_{\text{o}}\) is the activation function of the output neuron, \(\hat{y}_{k}\) are the computed output variables, \(N_{N}\) is the number of neurons in the input layer, \(M_{N}\) is the number of neurons in the hidden layer, \(W_{ji}\) is a weight in the hidden layer connecting the \(i\) th neuron in the input layer and the jth neuron in the hidden layer, \(W_{j0}\) is the bias for the jth hidden neuron, \(W_{kj}\) is a weight in the output layer connecting the jth neuron in the hidden layer and the kth neuron in the output layer, \(W_{k0}\) is the bias for the kth output neuron, and \(X_{i}\) is the ith input variable for the input layer.

The structure of the developed ANN ensemble committee-based model utilizing the input, hidden layer, and output layer neurons, and used for the prediction of UCS, is shown in Fig. 6.

2.7 Model design framework

In this study, three different machine learning regression models, including the M5 model tree, MARS, and RF, were explored for the prediction of travertine UCS, with data obtained from the Azarshahr area in northwestern Iran. The input matrix (x) defined by Vp, Rn, n%, and Is datasets represented the predictor variables, and the target variable (y), defined by UCS, were used in each tree-based model. Seventy percent of the original dataset was randomly selected for the training phase, and the remainder of the dataset was partitioned for the validation (15%) and testing (15%) phases. Before developing the machine learning models, all variables were normalized to a value between zero and one by a scaling factor to guarantee that all input-target variables received equal attention during the training phase.

All models were implemented using the MATLAB software on an Intel(R) core i7-4470CPU @ quad-core 3.74 GHz computer system. To develop the RF model, the initial number of weak learners (i.e., regression trees) was set to 800, and the initial number of leaves in each tree was set to five, the default of the Bagger algorithm [48]. Notably, no universal mathematical formula is used to set the optimum number of trees [49]. Generally, a larger number of trees generates more accurate results but increases computational cost.

The M5 model tree was constructed using a set of tuning parameters for model initialization. A minimum tree split value of five, a smoothing value of 15, and a split threshold value of 0.05 were selected, as suggested by Yaseen et al. [32] and Deo et al. [50, 51]. The model was pruned to prevent over-fitting of the model to the data, which has the dual purpose of implementing the “divide-and-conquer rule” in which the problem is broken down by splitting it into several smaller problems [34,35,36,37,38,39,40,41,42,43,44]. In order to improve prediction accuracy, the sharp discontinuities generated as a result of joining multiple piece-wise linear regression functions were eliminated during the smoothing process [38]. The model found the optimum number of decision trees (or ‘rules’) to be seven, as this value attained the smallest RMSE in the training step.

The MARS model was constructed using the ARESLab toolbox and followed the approach of earlier studies [31, 32, 43, 50, 51]. Generally, the MARS modeling process consisted of two stages: the forward and backward stages. In the forward stage, the reflected pair(s) of the BFs were added and the potential knots were identified to obtain the greatest decrease in the training error (RMSE). The approximate number of available knot locations, which are controlled using midspan and endspan, were found to be ten, eight, ten, and ten for x1 (Vp (km/s)), x2 (Rn), x3 (n%), and x4 (Is (MPa)) inputs, respectively. The number of BFs in the model after the forward stage was found to be 21. It is important to note that over-fitting of the modeled data is a risk when a large model is generated at the end of the forward phase. Therefore, by deleting a set of redundant BFs through a backward procedure, the MARS model was pruned to achieve a model that only included the intercept term [32]. The total effective number of parameters after the backward stage was found to be 23. The BFs and the optimal prediction functions of the developed MARS model are provided in Table 2.

Following the construction and evaluation of the standalone (i.e., MARS, RF, M5 tree) models, integration of the predicted UCS values from the three developed machine learning models was performed to improve the prediction accuracy of the UCS data, and for subsequent use in an ANN model. The predicted UCS values simulated from the standalone models were employed as the ANN-committee-based model’s inputs, and the measured UCS values were given as the target (output) of the ANN model. In accordance with earlier studies [43], the utilization of the output of each standalone model in the final predictive model (i.e., the ANN) was done to better assimilate data features present in the predictor variables, as some of these features may not have been fully identifiable by the individual standalone models. To develop an optimal ANN-committee-based model, the Levenberg–Marquardt (LM) training algorithm was used to design a three-layered feed-forward neural network model. The LM minimizes the mean square error (MSE) between the predicted and measured UCS values by applying a computationally efficient second-order training technique. The optimal number of hidden neurons was selected by considering a value set by log (N) and (2n + 1), where N and n are the numbers of training samples and input neurons, respectively, as recommended by Wanas et al. [52], Mishra and Desai [53], and Barzegar et al. [7, 46, 54]. In this case, the number of neurons in the hidden layer was set to four. The sigmoid and linear functions were selected as the hidden transfer and output functions between layer two and three, respectively, with a learning rate and a momentum factor of 0.1. Through an iterative modeling process, the best validation performance was attained at 10 epochs, based on an MSE of approximately 9.551 × 10−1 MPa. After training and validation of the ANN ensemble committee-based model, the testing phase, in which an independent test dataset was used to evaluate the final predictive model, was established.

2.8 Statistical performance evaluation

Statistical metrics were used to assess the performance of the models in this study. These metrics are comprised of the correlation coefficient (r), root mean square error (RMSE), mean absolute error (MAE), and their normalized equivalents expressed in percentages (RRMSE and RMAE). The basic equations of these metrics are shown in Eqs. (10) to (14).

where N is the number of data points, \({\text{UCS}}_{i}^{\text{meas}}\) and \({\text{UCS}}_{i}^{\text{pred}}\) are the \(i\)th measured and predicted UCS values, respectively, and \(\overline{\text{UCS}}^{\text{meas}}\) and \(\overline{\text{UCS}}^{\text{pred}}\) are the mean of measured and predicted UCS values, respectively.

The covariance of the observed data, which is explained by the prediction model, was described by r. RMSE and MAE, which are represented in their absolute units, show the accuracy of the models as described by their goodness-of-fit. Moreover, RRMSE, which compares the percentage of deviation between the predicted and measured data, was used to evaluate the models’ precision. The RMAE was used to determine the average magnitude of total absolute bias error (in percent) between predicted and measured data.

The previously described statistical metrics demonstrate the linear agreement between the measured and predicted values in a modeling system. However, these metrics can be excessively sensitive to outliers in the measured data, while showing insensitivity to the additive or relative differences between predictions and measurements [32, 55, 56]. To overcome these challenges, two normalized performance indicators, the Willmott’s Index (WI) and Legates and McCabe Index (LMI), were used. The mathematical formulations associated with these metrics are given in Eqs. (15) and (16).

Note that the LMI has an advantage over WI when relatively high predicted values are expected, even for a poorly fitted model.

2.9 Results and discussion

Simple regression analysis was used to determine the relationships between the predicted (i.e., UCS) and predictor variables (i.e., Vp, Rn, n% and Is) (Table 3). The relationships between UCS and the predictor variables were analyzed using linear, exponential, power, and logarithmic functions. A meaningful relationship between UCS and Is (i.e., 0.7715 < r < 0.7726) was found, which was in accordance with several studies [53, 57, 58]. Conversely, weaker relationships were observed between UCS and the other input variables; Vp yielded a value of 0.4917 < r < 0.5078, Rn yielded a value of 0.5930 < r < 0.6093, and n% yielded a value of 0.5463 < r < 0.5842. These poor relationships may have been due to the use of different travertine rocks with diverse characteristics from the study area.

In addition to the simple regression analysis, a correlation analysis was carried out between the predicted and predictor variables. In previous studies, it has been demonstrated that n% is the main control factor for the durability and strength of rock, and can thus influence Rn, Is, and UCS [59,60,61]. It has also been reported that increasing porosity was linked with decreasing UCS [61,62,63]. However, the results (Table 4) of the present study showed weak correlations between Vp and n% (r = − 0.003), and Rn and n% (r = − 0.162). Additionally, moderate correlations were found between Vp and Is (r = 0.458), Rn and Is (r = 0.511), and n% and Is (r = − 0.419), reinforcing the study’s objective to employ models based on multiple input parameters for the prediction of UCS, namely multiple regression and machine learning models.

In the execution of multiple regression modeling, which included the MLR and MNLR procedures, the same datasets as the machine learning models were used. Implementation of the MLR and MNLR procedures yielded Eqs. (17) and (18), respectively, for the prediction of UCS. The MLR model obtained an r of 0.626, an RMSE of 7.527 MPa, and an MAE of 5.580 MPa. The MNLR yielded an r of 0.721, an RMSE of 6.172 MPa, and an MAE of 4.570 MPa, which highlighted the greater accuracy of nonlinear models, in comparison with linear models, for the prediction of UCS.

Sensitivity analysis was used to evaluate the relative influence of input variables on the models’ output variable using the relative strength of effect (RSE) method. In this method, all data pairs were used to construct a data array X [64,65,66].

where the variable \(x_{i}\) in the array X is the vector of length m, shown as:

The RSE for the input unit “i” on the output unit “j”, between dataset \(X_{i}\) and \(X_{j}\) is calculated using Eq. (19).

Figure 7 shows the calculated RSE values for the four input variables, with Is (MPa) (with an RSE of 0.993) having the highest impact on UCS prediction modeling. The variable n% had the lowest impact on UCS prediction modeling.

Table 5 shows a comparison of the statistical performances of the RF, M5 tree and MARS models, as well as the ANN-based ensemble model, in predicting UCS in travertine rocks. Statistical performance metrics (r, RMSE, and MAE) are provided for both the training and validation phases of each model. All standalone machine learning models that were trained and validated showed high values of r and low values of RMSE and MAE.

Following the training and validation phases of the standalone machine learning models, the viability of the models for the prediction of UCS data was established through a testing phase. The statistical performance of the developed models, in terms of UCS prediction during the testing phase, is given in Table 6. Based on the calculated statistical indicators, the MARS model (r = 0.830, RMSE = 5.588 MPa, MAE = 4.461 MPa, WI = 0.997, and LMI = 0.359) obtained superior performance followed by the M5 tree (r = 0.572, RMSE = 8.147 Mpa, MAE = 5.745 MPa, WI = 0.993, and LMI = 0.175) and RF (r = 0.488, RMSE = 8.071 MPa, MAE = 6.436 MPa, WI = 0.600, and LMI = 0.076) models. The LMI has greater robustness than the WI and is thus always lower than the WI. In the current study, LMI was very low for the M5 tree and RF models, but relatively good for the MARS model. Additionally, the relative errors (i.e., RRMSE and RMAE) were low for all the single models (MARS = 11.24%, 8.99% vs. M5 tree = 16.68%, 12.38% and RF = 16.24%, 14.29%). It was also observed that the predicted values of UCS obtained using the MARS model were closer to the measured UCS values than in the M5 tree and RF models. The MARS model also showed the highest r-value, as well as fit by the linear regression equation (UCSpred = 1.032 UCSmeas + 0.013). This indicated, along with the other metrics used, that the MARS model outperformed the other standalone models. The superior performance of the MARS model may be the result of more effective feature identification resulting from the use of several cubic splines at different intervals in the input-target dataset.

The ensemble model was then developed to integrate the advantages of each standalone machine learning model. After training and validation of the ANN-based committee model, the testing phase was executed. Based on the results given in Table 6, the ensemble model yielded an r of 0.890, an RMSE of 3.980 MPa, an MAE of 3.225 MPa, a WI of 0.931, and an LMI of 0.237, with a lower scatter than each of the standalone models, and a linear regression equation of UCSpred = 0.899 UCSmeas + 6.696.

Generally, results showed that the ensemble model improved the performance of the standalone machine learning models. Measured and predicted values of UCS by the RF, M5 tree, MARS, and ensemble models in the testing phase are compared in Fig. 8. It was observed that the ensemble model was the most efficient in predicting low and high USC values. The comparison between the simple multiple variable regression models and machine learning models for predicting UCS indicated that the performance of the RF and M5 tree models were relatively poor and that the MARS and ensemble models were superior. The improved performance of the AI models over the statistical approaches was confirmed, supporting the results of other studies [9, 11, 64, 67].

2.10 Conclusions

In this study, 93 core samples were collected from travertine rocks in the Azarshahr area of northwestern Iran. Laboratory tests, including P-wave velocity (Vp (Km/s)), Schmidt Hammer (Rn), porosity (n%), point load index (Is (MPa)), and UCS (MPa), were carried out on the samples according to the ISRM. Simple regression analysis demonstrated a meaningful relationship between UCS and Is and a relatively weak relationship between USC and the other measured parameters, which may have been the result of the use of different travertine rocks with diverse characteristics from the study area. Furthermore, poor to moderate correlations existed between the input variables. Therefore, nonlinear machine learning models based on multi-input parameters were required to accurately predict UCS. In this case, the dataset used included Vp, Rn, n%, and Is as the input variables and UCS as the target variable for the tree-based machine learning models (e.g., MARS, RF, and M5 tree).

After training and validation, the MARS model (r = 0.830, RMSE = 5.588 MPa, MAE = 4.461 MPa, WI = 0.997, and LMI = 0.359) showed superior performance in the testing phase for prediction of UCS, followed by the M5 tree and RF models. An ensemble model based on an ANN-committee was implemented to integrate the advantages of each single machine learning model. The ensemble model yielded an r of 0.890, RMSE of 3.980 MPa, MAE of 3.225 MPa, WI of 0.931, and LMI of 0.237, which improved the prediction of UCS in comparison to the standalone models. In addition, the superiority of the machine learning models over the multiple linear and nonlinear models was confirmed. Sensitivity analysis was also applied to assess the relative influence of input variables on the models’ output variable using the relative strength of effect (RSE) method. The calculated RSE values showed that the Is, with an RSE of 0.993, and n%, with an RSE value of 0.8, had the highest and lowest impacts, respectively, on predicting UCS.

Future research should use the models proposed in the current study to predict the UCS of other rock types, such as sedimentary, volcanic, and metamorphic, in different parts of the world. Applying and comparing other ensemble techniques, for example, bagging, boosting, and voting, provides an additional opportunity for future research. Furthermore, other machine learning models (e.g., extreme learning machine and deep learning models) could be applied for modeling the UCS of different rock types.

References

Dehghan S, Sattari GH, Chehreh-Chelgani S, Aliabadi MA (2010) Prediction of uniaxial compressive strength and modulus of elasticity for Travertine samples using regression and artificial neural networks. Min Sci Technol 20:41–46

Ozbek A, Unsal M, Dikec A (2013) Estimating uniaxial compressive strength of rocks using genetic expression programming. Rock Mech Geotech Eng 5(4):325–329

Briševac Z, Hrzenjak P, Buljan R (2016) Models for estimating uniaxial compressive strength and elastic modulus. Gradevinar 68(1):19–28

Karakus M, Tutmez B (2006) Fuzzy and multiple regression modeling for evaluation of intact rock strength based on point load, Schmidt hammer and sonic velocity. Rock Mech Rock Eng 39(1):45–57

Yilmaz I, Yuksek AG (2008) An example of artificial neural network (ANN) application for indirect estimation of rock parameters. Rock Mech Rock Eng 41:781–795

Tiryaki B (2008) Predicting intact rock strength for mechanical excavation using multivariate statistics, artificial neural networks and regression trees. Eng Geol 99(1–2):51–60

Barzegar R, Sattarpour M, Nikudel MR, Asghari-Moghaddam A (2016) Comparative evaluation of artificial intelligence models for prediction of uniaxial compressive strength of travertine rocks, Case study: Azarshahr area, NW Iran. Model Earth Sys Environ 2:76

Gokceoglu C (2002) A fuzzy triangular chart to predict the uniaxial compressive strength of the Ankara agglomerates from their petrographic composition. Eng Geol 66(1–2):39–51

Gokceoglu C, Zorlu K (2004) A fuzzy model to predict the uniaxial compressive strength and the modulus of elasticity of a problematic rock. Eng Appl Artif Intell 17:61–72

Liu Z, Shao J, Xu W, Wu Q (2015) Indirect estimation of unconfined compressive strength of carbonate rocks using extreme learning machine. Acta Geotech 10:651–663

Beiki M, Majdi A, Givshad AD (2013) Application of genetic programming to predict the uniaxial compressive strength and elastic modulus of carbonate rocks. Int J Rock Mech Min Sci 63:159–169

Ghasemi E, Kalhori H, Bagherpour R, Yagiz S (2018) Model tree approach for predicting uniaxial compressive strength and Young’s modulus of carbonate rocks. Bull Eng Geol Environ 77(1):331–343

Ceyran N (2014) Application of support vector machines and relevance vector machines in predicting uniaxial compressive strength of volcanic rocks. J Afr Earth Sci 100:634–644

Momeni E, Jahed Armaghani D, Hajihassani M, Amin MFM (2015) Prediction of uniaxial compressive strength of rock samples using hybrid particle swarm optimization-based artificial neural networks. Measurement 60:50–63

Saedi B, Mohammadi SD, Shahbazi H (2019) Application of fuzzy inference system to predict uniaxial compressive strength and elastic modulus of migmatites. Environ Earth Sci 78(6):208

Çelik SB (2019) Prediction of uniaxial compressive strength of carbonate rocks from nondestructive tests using multivariate regression and LS-SVM methods. Arab J Geosci 12(6):193

Hassan MA, Khalil A, Kaseb S, Kassem MA (2017) Exploring the potential of tree-based ensemble methods in solar radiation modeling. Appl Energy 203:897–916

Fan J, Yue W, Wu L, Zhang F, Cai H, Wang X, Lu X, Xiang Y (2018) Evaluation of SVM, ELM and four tree-based ensemble models for predicting daily reference evapotranspiration using limited meteorological data in different climates of China. Agric For Meteorol 263:225–241

Taghipour K, Mohajjel M (2013) Structure and generation mode of travertine fissure-ridges in Azarshahr area, Azarbaydjan, NW Iran. Iran J Geol 7(25):15–33

ISRM (1981) Rock characterization, testing and monitoring, ISRM suggested methods. ET Brown (ed.), Pergamon Press, Oxford

Pedhazur EJ (1982) Multiple regression in behavioral research: explanation and prediction. Holt Rinehart and Winston, New York

Adamowski J, Chan HF, Prasher SO, Ozga-Zielinski B, Sliusarieva A (2012) Comparison of multiple linear and nonlinear regression, autoregressive integrated moving average, artificial neural network, and wavelet artificial neural network methods for urban water demand forecasting in Montreal, Canada. Water Resour Res 48:W01528. https://doi.org/10.1029/2010WR009945

Ivakhnenko AG (1970) Heuristic self-organization in problems of engineering cybernetics. Automatica 6(2):207–219

Ho TK (1995) Random decision forests. In: Proceedings of the third international conference on document analysis and recognition, pp 278–282

Breiman L (2001) Random forests. Mach Learn 45(1):5–32

Hastie T, Tibshirani R, Friedman J (2009) The elements of statistical learning—data mining, inference and prediction. Springer, New York

Breiman L, Friedman JH, Olshen R, Stone CJ (1984) Classification and regression trees. Wadsworth, Belmont

Quinlan JR (1993) C4.5 programs for machine learning. Morgan Kaurmann, SanMateo, p 303

Rodriguez-Galiano V, Mendes MP, Garcia-Soldado MJ, Chica-Olmo M, Ribeiro L (2014) Predictive modeling of groundwater nitrate pollution using random forest and multisource variables related to intrinsic and specific vulnerability: a case study in an agricultural setting (Southern Spain). Sci Total Environ 476–477:189–206

Quinlan JR (1992) Learning with continuous classes. In: 5th Australian joint conference on artificial intelligence singapore, pp 343–348

Al-Musaylh MS, Deo RC, Adamowski JF, Li Y (2018) Short-term electricity demand forecasting with MARS, SVR and ARIMA models using aggregated demand data in Queensland, Australia. Adv Eng Info 35:1–16

Yaseen ZM, Deo RC, Hilal A, Abd AM, Bueno LC, Salcedo-Sanz S, Nehdi ML (2018) Predicting compressive strength of lightweight foamed concrete using extreme learning machine model. Adv Eng Softw 115:112–125

Mitchell TM (1997) Machine learning. Computer science series. McGraw-Hill, Burr Ridge, MATH

Rahimikhoob A, Asadi M, Mashal M (2013) A comparison between conventional and M5 model tree methods for converting pan evaporation to reference evapotranspiration for semi-arid region. Water Resour Manag 27:4815–4826

Solomatine DP, Xue Y (2004) M5 model trees compared to neural networks: application to flood forecasting in the upper reach of the Huai River in China. J Hydrol Eng 9:491–501

García Nieto PJ, García-Gonzalo E, Bové J, Arbat G, Duran-Ros M, Puig-Bargués J (2017) Modeling pressure drop produced by different filtering media in microirrigation sand filters using the hybrid ABC-MARS-based approach, MLP neural network and M5 model tree. Comput Electron Agr 139:65–74

Pal M, Deswal S (2009) M5 model tree based modelling of reference evapotranspiration. Hydrol Process 23(10):1437–1443

Wang YW, Witten IH (1997) Inducing model trees for predicting continuous classes. In: Proceedings of European conference on machine learning. University of Economics Prague

Pal M (2005) Random Forest classifier for remote sensing classification. Int J Remote Sens 26(1):217–222

Friedman JH (1991) Multivariate adaptive regression splines. Ann Stat 19:1–67

Samui P (2012) Slope stability analysis using multivariate adaptive regression spline. Metaheuristics in Water, Geotechnical and Transport Engineering: 327

Adamowski J, Chan HF, Prasher SO, Sharda VN (2012) Comparison of multivariate adaptive regression splines with coupled wavelet transform artificial neural networks for runoff forecasting in Himalayan micro-watersheds with limited data. J Hydroinf 14(3):731–744

Barzegar R, Asghari-Moghaddam A, Deo R, Fijani E, Tziritis E (2018) Mapping groundwater contamination risk of multiple aquifers using multi-model ensemble of machine learning algorithms. Sci Total Environ 621:697–712

Kisi O (2015) Pan evaporation modeling using least square support vector machine, multivariate adaptive regression splines and M5 model tree. J Hydro 528:312–320

Friedman JH, Roosen CB (1995) An introduction to multivariate adaptive regression splines. Stat Methods Med Res 4:197–217

Barzegar R, Asghari-Moghaddam A (2016) Combining the advantages of neural networks using the concept of committee machine in the groundwater salinity prediction. Model Earth Syst Environ. 2:26. https://doi.org/10.1007/s40808-015-0072-8

Barzegar R, Asghari-Moghaddam A, Baghban H (2016) A supervised committee machine artificial intelligent for improving DRASTIC method to assess groundwater contamination risk: a case study fromTabriz plain aquifer, Iran. Stoch Environ Res Risk Assess 30(3):883–899

MATLAB (2016) TreeBagger. mathworks. Available at http://www.mathworks.com/help/stats/treebagger. html (Accessed 28 Aug 2016)

Liaw A, Wiener M (2002) Classification and regression by random forest. R News 2(3):18–22

Deo RC, Downs N, Parisi A, Adamowski J, Quilty J (2017) Very short-term reactive forecasting of the solar ultraviolet index using an extreme learning machine integrated with the solar zenith angle. Environ 155:141–166

Deo RC, Kisi O, Singh VP (2017) Drought forecasting in eastern Australia using multivariate adaptive regression spline, least square support vector machine and M5Tree model. Atmos Res 184:149–175

Wanas N, Auda G, Kamel MS, Karray F (1998) On the optimal number of hidden nodes in a neural network. Proc IEEE Can Conf Electr Comput Eng 2:918–921

Mishra DA, Basu A (2013) Estimation of uniaxial compressive strength of rock materials by index tests using regression analysis and fuzzy inference system. Eng Geol 160:54–68

Barzegar R, Asghari-Moghaddam A, Adamowski J, Fijani E (2017) Comparison of machine learning models for predicting fluoride contamination in groundwater. Stoch Environ Res Risk Assess 31(10):2705–2718

Legates DR, McCabe GJ (1999) Evaluating the use of “goodness of fit” measures in hydrologic and hydroclimatic model validation. Water Resour Res 35(2):33–41

Willmott CJ (1981) On the validation of models. Phys Geogr 2:184–194

Diamantis K, Gartzos E, Migiros G (2009) Study on uniaxial compressive strength, point load strength index, dynamic and physical properties of serpentinites from Central Greece: test results and empirical relations. Eng Geol 108:199–207

Kohno M, Maeda H (2012) Relationship between point load strength index and uniaxial compressive strength of hydrothermally altered soft rocks. Int J Rock Mech Min Sci 50:147–157

Demirdag S, Tufekci K, Kayacan R, Yavuz H, Altindag R (2010) Dynamic mechanical behavior of some carbonate rocks. Int J Rock Mech Min Sci 47:307–312

Akin M, Ozsan A (2011) Evaluation of the long-term durability of yellow travertine using accelerated weathering tests. Bull Eng Geol Environ 70:101–114

Matin SS, Farahzadi L, Makaremi S, Chehreh-Chelgani S, Sattari GH (2018) Variable selection and prediction of uniaxial compressive strength and modulus of elasticity by Random Forest. Appl Soft Comput 70:980–987

Molina E, Cultrone G, Sebastian EJ, Alonso F (2013) Evaluation of stone durability using a combination of ultrasound, mechanical and accelerated aging tests. J Geophys Eng 10:1–18

Chentout M, Alloul B, Rezouk A, Belhai D (2015) Experimental study to evaluate the effect of travertine structure on the physical and mechanical properties of the material. Arab J Geosci 8:8975–8985

Jalali SH, Heidari M, Mohseni H (2017) Comparison of models for estimating uniaxial compressive strength of some sedimentary rocks from Qom Formation. Environ Earth Sci 76:753

Yang Y, Zang O (1997) A hierarchical analysis for rock engineering using artificial neural networks. Rock Mech Rock Eng 30:207–222

Jahed-Armaghani D, Tonnizam-Mohamad E, Momeni E, Monjezi M, Narayanasamy MS (2016) Prediction of the strength and elasticity modulus of granite through an expert artificial neural network. Arab J Geosci 9:48. https://doi.org/10.1007/s12517-015-2057-3

Jahed-Armaghani D, Mohammad ED, Hajihassani M, Yagiz S, Motaghedi H (2016) Application of several non-linear prediction tools for estimating uniaxial compressive strength of granitic rocks and comparison of their performances. Eng Comput 32(2):189–206

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Barzegar, R., Sattarpour, M., Deo, R. et al. An ensemble tree-based machine learning model for predicting the uniaxial compressive strength of travertine rocks. Neural Comput & Applic 32, 9065–9080 (2020). https://doi.org/10.1007/s00521-019-04418-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-019-04418-z