Abstract

Twin support vector regression (TSVR) aims at finding 𝜖-insensitive up- and down-bound functions for the training points by solving a pair of smaller-sized quadratic programming problems (QPPs) rather than a single large one as in the conventional SVR. So TSVR works faster than SVR in theory. However, TSVR gives equal emphasis to the points above the up-bound and below the down-bound, which leads to the same influences on the regression function. In fact, points in different positions have different effects on the regressor. Inspired by it, we propose an asymmetric ν-twin support vector regression based on pinball loss function (Asy- ν-TSVR). The new algorithm can effectively control the fitting error by tuning the parameters ν and p. Therefore, it enhances the generalization ability. Moreover, we study the distribution of samples and give the upper bounds for the samples locating in different positions. Numerical experiments on one artificial dataset, eleven benchmark datasets and a real wheat dataset demonstrate the validity of our proposed algorithm.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The support vector machine (SVM), introduced by Vapnik, is a successful model and prediction tool for classification and regression, which has spread to many fields [1, 2]. Compared with other machine learning approaches like artificial neural networks, SVM has many advantages. First, SVM solves a quadratic programming problem (QPP), assuring that once an optimal solution is obtained, it is the unique solution. Second, by maximizing the margin between two classes of samples, SVM derives a sparse and robust solution. Third, SVM implements the structural risk minimization principle rather than the empirical risk minimization principle, which minimizes the upper bound of the generalization error. The introduction of kernel function extends the linear case to the nonlinear case, and effectively overcomes the “curse of dimension” [3, 4]. Because of its great generalization performance, SVM has been successfully applied in various aspects ranging from pattern recognition, text categorization, and financial regression.

The ν-support vector regression (ν-SVR) [5], which is based on statistical learning theory, has become a standard tool in regression tasks. The ν-SVR extends standard SVR [2] techniques by Vapnik via enforcing a fraction of the data samples to lie inside an 𝜖-tube, as well as minimizing the width of this tube [6]. It introduces a new parameter ν to control the fitting error. ν-SVR has better generalization performance than the traditional techniques. In the ν-SVR, the 𝜖-insensitive loss function is defined as follows

ν-SVR owns better generalization ability compared with other machine learning methods. However, one of the main challenges for it is high computational complexity. In order to improve the computational speed, Peng proposed an efficient twin support vector machine for regression problem (TSVR) [7,8,9] based on twin support vector machine (TSVM) [11]. It aims at generating two nonparallel bound functions [10] by solving two smaller-sized QPPs such that each function determines the 𝜖-insensitive up- or down-bounds of the unknown regressor. Each QPP involves only one group of constraints for all samples, which makes TSVR work faster than the standard SVR in theory [7, 12]. Then, it receives many attentions, and many variants have also been proposed in literatures [13,14,15,16].

Recently, Huang extends 𝜖-insensitive loss L 𝜖 (u) to the pinball loss \(L_{\epsilon }^{p}(u)\). Samples locating in different positions are given different penalties [17,18,19,20], and then it yields better generalization performance. Inspired by it, we propose an asymmetric ν-twin support vector regression (Asy- ν-TSVR) in this paper. Where the asymmetric tube is used, and then Asy- ν-TSVR produces good generalization performance. Asy- ν-TSVR is especially suitable for dealing with the asymmetric noise [21,22,23,24].

Asy- ν-TSVR aims at finding two nonparallel functions: 𝜖 1-insensitive down function \(f_{1}(x)={w_{1}^{T}}x+b_{1}\) and 𝜖 2-insensitive up function \(f_{2}(x)={w_{2}^{T}}x+b_{2}\) [25, 26]. Similar to the TSVR [7], Asy- ν-TSVR also solves two smaller-sized QPPs rather than a larger one, and each involves only one group of constraints for all samples. By introducing the pinball loss [27] into it, the samples lying above and below the bounds are given different punishments [29, 30]. To verify the validity of our proposed algorithm, an artificial experiment, eleven benchmark experiments and a real wheat dataset have been performed. Compared with ν-SVR, Asy- ν-SVR, least squares for support vector regression (LS-SVR) [28], and TSVR, our proposed Asy- ν-TSVR has better generalization ability.

The paper is organized as follows: Section 2 briefly dwells on ν-SVR, Asy- ν-SVR, and TSVR. Asy- ν-TSVR is proposed in Section 3, which includes both the linear and nonlinear cases. The bounds are discussed in Section 4. Section 5 performs experiments on three kinds of datasets to investigate the feasibility and validity of our proposed algorithm. Section 6 ends the paper with concluding remarks.

2 Related works

In this section, we give a brief description of the ν-SVR, Asy- ν-SVR, and TSVR. Given a training set T = {(x 1,y 1),(x 2,y 2),⋯ ,(x l ,y l )}, where x i ∈ R d and y i ∈ R. For the sake of conciseness, let matrix A = (x 1,x 2,⋯ ,x l )T, and matrix Y = (y 1,y 2,⋯ ,y l )T. e is a vector of ones of appropriate dimensions.

2.1 ν-support vector regression

The nonlinear ν-SVR seeks to find a regression function f(x) = w T ϕ(x) + b in a high-dimensional feature space tolerating the small error in fitting the given data points. This can be achieved by utilizing the 𝜖-insensitive loss function L 𝜖 (u) that sets an 𝜖-insensitive “tube” as small as possible, within which errors are discarded. The ν-SVR can be obtained by solving the following QPP,

where C,ν are parameters chosen a priori. Parameter C controls the trade-off between the fitting errors and flatness of the regression function. ν has its theoretical interpretation that controls the fractions of the support vectors and the margin errors. To be more precise, ν is an upper bound on the fraction of errors and a lower bound on the fraction of support vectors [31,32,33]. ξ i and \(\xi _{i}^{*}\) are the slack vectors reflecting whether the samples locate into the 𝜖-tube or not.

By introducing the Lagrangian multiplies α and \(\alpha _{i}^{*}\), we can derive the dual problem of the ν-SVR as follows

Once the QPP (3) is solved, we can achieve its solution \(\alpha ^{(*)} =(\alpha _{1},\alpha _{1}^{*},\alpha _{2},\alpha _{2}^{*},\cdots ,\alpha _{l},\alpha _{l}^{*})\) and the threshold b, and then obtain the regression function,

Here K(x i ,x) represents a kernel function which gives the dot product in the high-dimensional feature space.

2.2 Asymmetric ν-support vector regression

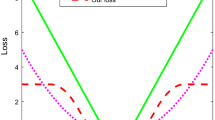

The ν-SVR considers only one possible location of the 𝜖-tube: it imposes that the numbers of samples above and below the tube are equal. To further improve the computational accuracy, Huang imposes that those outliers can be divided asymmetrically over both regions. To pursue an asymmetric tube, he introduces the following asymmetric loss function

where p is a parameter related to asymmetry. As we can learn that \(L_{\epsilon }^{p}(u)\) can reduce to L 𝜖 when p = 0.5.

The Asy- ν-SVR solves the following QPP,

The coefficients γ and ν control the trade-off among the margin, the size of the slack variables and the width of 𝜖-tube. The 𝜖 as unknown controls the width of the 𝜖-insensitive zone, which is used to fit the training data, and it can affect the number of support vectors. ξ i and \(\xi _{i}^{*}\) are the slack vectors reflecting whether the samples locating into the 𝜖-tube or not. Parameter p is related to the asymmetry. Where parameters γ,ν and p are chosen in advance. Apparently Asy- ν-SVR reduces to ν-SVR when p = 0.5. So Asy- ν-SVR is an extension of the ν-SVR.

We can derive the dual formulation of the Asy- ν-SVR as follows

Once the QPP (7) is solved, we can achieve its solution \(\lambda ^{(*)} =(\lambda _{1},\lambda _{1}^{*},\lambda _{2},\lambda _{2}^{*},\cdots ,\lambda _{l},\lambda _{l}^{*})\) and threshold b, and then obtain the following regressor

This extension gives an effective way to deal with skewed noise in regression problems.

2.3 Twin support vector regression

To improve the computational speed, Peng [7] proposed an efficient TSVR for the regression problem. TSVR generates an 𝜖-insensitive down-bound function \(f_{1}(x)={w_{1}^{T}}x+b_{1}\) and an 𝜖-insensitive up-bound function \(f_{2}(x)={w_{2}^{T}}x+b_{2}\). TSVR is illustrated in Fig. 1.

The final regressor f(x) is decided by the mean of these two bound functions, i.e.,

For the nonlinear case, TSVR solves the following pair of smaller-sized QPPs,

and

In the objective function of (10) or (11), the first term minimizes the squared distances from the training points to f 1(x) + 𝜖 1 and f 2(x) − 𝜖 2, the second term aims to minimize the sum of error variables. Where parameters C 1 and C 2 chosen in advance determines the trade-off between above goals. The constraints require the training points should be larger than the function f 1(x) at least 𝜖 1, at the same time they should be smaller than the function f 2(x) at least 𝜖 2. ξ and η are slack vectors. For the outliers, the same penalty is given to them in TSVR.

After introducing the Lagrangian function L, and differentiating L with respect to variables, we can derive their dual formulations of (10) and (11) as follows

and

where H = [K(A,A T) e], f = Y − e 𝜖 1, and h = Y + e 𝜖 2.

Once the dual QPPs (12) and (13) are solved, we can get

and

Note that TSVR is comprised of a pair of QPPs such that each QPP determines one of up- or down-bound function by using only one group of constraints compared with the standard SVR. Hence, TSVR solves two smaller-sized QPPs rather than a single large one, which implies that TSVR works faster than the standard SVR in theory.

3 Asymmetric ν-twin support vector regression

As we know that the same penalties are given to the points above the up-bound and below the down-bound in TSVR. In fact, they have different effects on the regression function. Motivated by studies above, we propose the following asymmetric ν-twin support vector regression based on the pinball loss function.

3.1 Linear case

We extend TSVR to the asymmetric case, where p is the parameter related to asymmetric. Asy- ν-TSVR generates an 𝜖 1-insensitive down-bound function \(f_{1}(x)={w_{1}^{T}}x+b_{1}\) and an 𝜖 2-insensitive up-bound function \(f_{2}(x)={w_{2}^{T}}x+b_{2}\), and they are nonparallel. Asy- ν-TSVR is illustrated in Fig. 2.

The final regressor f(x) is decided by the mean of these two bound functions, i.e.

In TSVR, after replacing the 𝜖-insensitive loss function L 𝜖 by the pinball loss \(L_{\epsilon }^{p}(u)\), Asy- ν-TSVR solves the following pair of smaller-sized QPPs,

and

where C 1, C 2, ν 1, ν 2 are parameters chosen in advance, ξ and η are slack vectors.

The first term in the objective function of (16) or (17) minimizes the sum of squared distances from the estimated function \(f_{1}(x)={w_{1}^{T}}x+b_{1}\) or \(f_{2}(x)={w_{2}^{T}}x+b_{2}\) to the training points. The second term means the 𝜖 1-tube and 𝜖 2-tube are as narrow as possible. The third term minimizes the sum of error variables. The constraints require the training points lie above \(f_{1}(x)-\epsilon _{1}={w_{1}^{T}}x+b_{1}-\epsilon _{1}\) or below \(f_{2}(x)+\epsilon _{2}={w_{2}^{T}}x+b_{2}+\epsilon _{2}\) as possible. The slack vector ξ or η is introduced to measure the error wherever the distance is closer than 𝜖 1 or 𝜖 2. Note that the equal emphasis is given to ξ,η in TSVR. Here, for the outliers, we apply a slightly different penalty to them with the parameter p, i.e., pinball loss. Moreover, it degrades into 𝜖-intensive loss when p = 0.5.

To derive the dual formulations of Asy- ν-TSVR, we first introduce the following Lagrangian function for the problem (16), which is

where α, β, and γ are nonnegative and the Lagrangian multipliers. After differentiating L in (18) with respect to variables w 1,b 1,𝜖 1 and ξ, we have

Combining (19) and (20) leads to

Define G = [A e], and μ 1 = [w 1,b 1]T, then we have

From (24), we can get

Then,

We can get

From (21) and (22), we can obtain the following constraints,

Finally, we can derive the dual formulation of (16) as follows

Similary, we can obtain the dual formulation of (17) as

Once (30) is solved, we can obtain

3.2 Nonlinear case

In order to extend our model to the nonlinear case, we consider the following kernel-generated functions instead of linear functions,

The corresponding formulations are designed as follows

and

where C 1, C 2, ν 1, and ν 2 are parameters chosen in advance, ξ and η are slack vectors. The dual formulations of (33) and (34) can be derived as follows

and

where H = [K(A,A T) e]. Once (35) and (36) are solved, we can get the augmented vectors

and

After the optimal values w 1,w 2 and b 1,b 2 are calculated, the regression function is expressed as

Compared with other algorithms, Asy- ν-TSVR owns following characteristics: First, it considers the sum of squared distances from the training points to the shifted functions f 1(x) and f 2(x) instead of others. Second, Asy- ν-TSVR introduces the asymmetric loss function \(L_{\epsilon }^{p}(u)\) not 𝜖-insensitive loss L 𝜖 , and different penalty is proposed to give the samples locating in different positions. Third, Asy- ν-TSVR degrades into the TSVR when p = 0.5, so Asy- ν-TSVR is an extension of TSVR.

4 Discussion about the bound

In this section, we discuss the bounds of three algorithms, i.e., ν-SVR, Asy- ν-SVR, and Asy- ν-TSVR. In ν-SVR, there are same upper bounds for the points lying above and below the ν-tube. However, in Asy- ν-SVR and Asy- ν-TSVR, there are different upper bounds for the points above the hyper-plane w T x + b + 𝜖 = 0 and below the hyper-plane w T x + b − 𝜖 = 0.

Proposition 1

The optimal solution in ν -SVR satisfies:

where L(a)stands for an indicator function, which is equal to one when a is true and is zero otherwise.

Please refer to literature [5] for proof of this proposition.

Proposition 2

The optimal solution in Asy- ν -SVR satisfies:

where p is the parameter related to asymmetric. It means that the points lying above and below the ν -tube have different upper bounds. And when p=0.5, it has the same upper bound as ν -SVR.

The proof of this proposition is in [18]. We can find that there are different upper bounds for the samples lying above and below the 𝜖-tube in the Asy- ν-SVR.

Proposition 3

The optimal solution in Asy- ν -TSVR satisfies:

where L(a)stands for an indicator function, which is equal to one when a is true and is zero otherwise.

Proof

Any point below the line \({w_{1}^{T}}x+b_{1}-\epsilon _{1}=0\) satisfies \(({w_{1}^{T}}x_{i}+b_{1}-\epsilon _{1})-y_{i}=2(1-p)\xi _{i}\), and ξ i > 0. According to the complementary slackness condition, we have γ i = 0. We can further get \(\alpha _{i}=\frac {C_{1}}{2(1-p)l}\) from (22). Since β ≥ 0, we get e T α ≤ C 1 ν 1 from (21), it means that \(\displaystyle \sum \limits _{i=1}^{l}\alpha _{i} \leq C_{1}\nu _{1}\). From above, we get

Similarly, the points lying above the line \({w_{2}^{T}}x+b_{2}+\epsilon _{2}=0\) satisfy

Combining (43) and (44), we can get

Note that there are different upper bounds for the samples lying above the upper bound and below the down-bound in the Asy- ν-TSVR.

□

5 Numerical experiments

To demonstrate the validity of our Asy- ν-TSVR, we compare it with other four algorithms, i.e., ν-SVR, LS-SVR, TSVR, and Asy- ν-SVR using one artificial dataset, eleven benchmark datasets, and a real wheat dataset. For the experiment on each dataset, we use 5-fold cross-validation to evaluate the performance of five algorithms. That is to say, the dataset is split randomly into five subsets; one of those sets is reserved as a test set, and the rest is training set. This process is repeated five times, and the average of five testing results is used as the performance measure.

5.1 Evaluation criteria

In order to evaluate the performance of our Asy- ν-TSVR, the evaluation criteria are specified before presenting the experimental results. The size of testing set is denoted by l, while y i denotes the real value of a sample point x i , \(\widehat {y_{i}}\) denotes the predicted value of x i , \(\displaystyle \overline {y}=\frac {1}{l}\sum \limits _{i=1}^{l}y_{i}\) is the mean of y 1,y 2,⋯ ,y l . We use the following criteria for algorithm evaluation [34].

MAE: Mean absolute error, defined as

MAE is also a popular deviation measurement between the real and predicted values.

RMSE: Root mean squared error, defined as

SSE/SST: Ratio between sum of squared error and sum of squared deviation of testing samples, defined as

SSR/SST: Ratio between interpretable sum of squared deviation and real sum of squared deviation of testing samples, defined as

In most cases, small SSE/SST means there is good agreement between the estimates and the real values, and decreasing SSE/SST is usually accompanied by an increase in SSR/SST.

Time: The total training time and testing time.

5.2 Parameter selection

The performance of these five algorithms depends heavily on the choices of parameters. In our experiments, we choose optimal values for the parameters by the grid search method. In five algorithms, the Gaussian kernel parameter σ is selected from the set {2i|i = −4,−3,⋯ ,8}. In TSVR and Asy- ν-TSVR, we set C 1= C 2, ν 1= ν 2 and 𝜖 1= 𝜖 2 to degrade the computational complexity of parameter selection. The parameter C is searched from the set {2i|i = −3,−2,⋯ ,8}. The optimal values for ν in four algorithms are chosen from the set {0.1,0.2,⋯ ,0.9}. The optimal values for 𝜖 in algorithms are selected from the set {0.1,0.2,⋯ ,0.9}. Parameter p is searched from the set {0.2,0.4,0.45,0.5,0.55,0.6,0.8}.

5.3 Experiment on artificial dataset with noises

To evaluate the performance of Asy- ν-TSVR, we carry out an artificial experiment under different cases. We firstly generate 100 points (X i ,i = 1,⋯ ,100) following a uniform distribution in [0,1]5, linear function \(Y(X)=w^{T}X+b+(\delta _{\chi ^{2}}-4)\) with w = [1;0.5;−0.5;−1;2], b = −3 is used to calculate their values, here \(\delta _{\chi ^{2}}\) follows a chi-squared distribution with 4 degrees of freedom. The generated points (X i ,Y i ),i = 1,⋯ ,100 are regarded as samples. Subsequently we randomly select 5% of the samples and replace their observed results by random values following a uniform distribution in the range of [−15,15]; thus, the new samples (X i ,Y i ),i = 1,⋯ ,100 are produced. Finally, a uniform distribution in the range of [−30,30] is used to build another samples. Fivefold cross-validation is applied as before, the experimental results of five algorithms are listed in Table 1. Apparently, our proposed algorithm Asy- ν-TSVR always performs the lowest MAE among five algorithms on this artificial dataset with different noises. The reason may lie in that our Asy- ν-TSVR adopts the pinball loss function but ν-SVR, LS-SVR, and TSVR adopt 𝜖-insensitive loss. It makes Asy- ν-TSVR less sensitive to noises and has better generalization ability since samples from different positions are given different punishments. The Gaussian kernel function k(x i ,x j ) = exp(−||x i − x j ||2/σ 2) is used on this artificial dataset. The optimal parameters used in the experiment are listed in the last column of Table 1.

5.4 Experiments on benchmark datasets

To further verify the efficiency of our algorithm, we conduct experiments on eleven benchmark datasets from the UCI machine learning repositoryFootnote 1. The datasets are Auto Price, Bodyfat, Chwirut, Con. S, Diabetes, Machine-Cpu, Pyrimidibes, Triazines, Housing, Istanbul Stock Exchange, and Yacht Hydrodynamics. Both linear kernel and Gaussian kernel are considered in five algorithms. Moreover, statistical tests, including the Wilcoxon signed-rank test and Friedman test, are also used to demonstrate the validity of our proposed method. At last, we also study the relationship between the efficiency of our Asy- ν-TSVR and the asymmetry of the datasets in Section 5.4.4.

5.4.1 Result comparison and discussion

The experimental results of five algorithms are summarized in Table 2 when linear kernel is employed, and in Table 3 when Gaussian kernel is used. In the error items, the first item denotes the mean value of five times testing results, and the second item stands for plus or minus the standard deviation. Time denotes the mean value of time taken by five time experiments, and each experimental time consists of training time and testing time.

In terms of MAE criterion, from Table 2, we can find that Asy- ν-SVR produces the lowest testing error among five algorithms in most cases when linear kernel is employed, followed by Asy- ν-TSVR. Both of them employ the pinball loss, which implies that pinball loss is more suitable than 𝜖-insensitive loss for these datasets. In addition, in terms of RMSE criterion, Asy- ν-TSVR yields the comparable testing error with Asy- ν-SVR and TSVR. It further shows that pinball loss is suitable for these datasets. Meanwhile, we can find that small MAE and RMSE corresponds to small SSE/SST and large SSR/SST in most cases.

No matter from MAE criterion or RMSE criterion, from Table 3, we can find that Asy- ν-TSVR yields the lowest testing errors among five algorithms on most datasets. Followed by TSVR. On dataset Pyrimidibes, although Asy- ν-TSVR produces lightly higher MAE than ν-SVR and Asy- ν-SVR. Asy- ν-TSVR produces the lowest RMSE among five algorithms. In addition, we can also find that Asy- ν-SVR yields slightly lower MAE than ν-SVR. It further testifies that the pinball loss function is effective in the Asy- ν-TSVR. Meanwhile, we can find that small MAE and RMSE corresponds to small SSE/SST and large SSR/SST in most cases.

In terms of computational time, from Tables 2 and 3, we can really find that TSVR costs more running time than Asy- ν-TSVR for most cases. It means that the introduction of pinball loss function does not increase computational cost of Asy- ν-TSVR. In addition, ν-SVR and Asy- ν-SVR cost larger running time than TSVR and Asy- ν-TSVR. The main reason lies in that they solve a larger-sized QPP but TSVR and Asy- ν-TSVR solve a pair of smaller-sized QPPs. LS-SVR costs the least running time among five algorithms since it solves a system of linear equations rather than a QPP.

To further verify the computational burden with different p values in our Asy- ν-TSVR, we analyze the experiments on 11 benchmark datasets with different p values. The average values of computational time on each dataset are summarized in Table 4, and the MAE of Asy- ν-SVR and Asy- ν-TSVR are displayed in Table 5.

From Table 4, we can find that Asy- ν-TSVR costs less time when p value is near to 0.5. That is, smaller or larger p value will increase the computational burden. From Table 5, we can see that the MAE of both Asy- ν-SVR and Asy- ν-TSVR are monotonic as p varies from 0.2 to 0.8 for most datasets. For example, the MAE is monotonically decreasing on Auto Price and Machine-Cpu. However, it is monotonically increasing on Pyrimidibes. The p value controls the imbalance ratio of punishments on different samples. The experimental results in Table 5 verify that the introduction of pinball loss can actually improve the performance of the model for Asymmetric datasets. And different datasets fit for different p values.

5.4.2 Wilcoxon signed-rank test

In these benchmark experiments, both linear kernel and nonlinear kernel are employed. To verify that which is more effective, the Wilcoxon signed-rank test [35] is used.

The Wilcoxon signed-rank test is a nonparametric statistical hypothesis test used when comparing two related samples, matched samples, or repeated measurements on a single sample to assess whether their population mean ranks differ (i.e., it is a paired difference test). Here, we use the “signrank” function in Matlab to do the Wilcoxon signed-rank test. For each algorithm, the null hypothesis H 0 demonstrates that the MAE in linear case is larger than that in the nonlinear case.

Table 6 lists the p values of a right-sided Wilcoxon signed-rank test, the test statistic W and a logical value indicating the test decision. h = 1 indicates a rejection of the null hypothesis, and h = 0 indicates a failure to reject the null hypothesis at the 5% significance level. We can see that the results are h = 0 for all five algorithms, which means that the nonlinear case is superior to the linear case.

5.4.3 Paired t test

From Table 3, one can easily observe that our proposed Asy- ν-TSVR does not outperform four other algorithms for all datasets in the nonlinear case. We use five times testing results corresponding to the lowest error to perform the paired t test. The null hypothesis H 0 demonstrates that there is no significant difference between the two algorithms tested. The hypothesis H 0 is rejected if the p value is less than 0.05 under the significance level α = 0.05. We compute the p values between Asy- ν-TSVR and other algorithms. From the experimental results, we can find that there are significant differences between Asy- ν-TSVR and other algorithms on datasets Chwirut, Housing, and Yacht Hydrodynamics. However, there is no significant difference on other datasets.

5.4.4 Friedman test

To further demonstrate the validity of our proposed Asy- ν-TSVR in the nonlinear case, Friedman test [36, 37] is employed. We assume Friedman test with the corresponding post hoc tests which is considered to be a simple, nonparametric yet safe test. For this, the average ranks of five algorithms on MAE for all datasets are calculated and listed in Table 7. Under the null hypothesis that all algorithms are equivalent, one can compute the Friedman statistic according to (50),

where \(\displaystyle R_{j}=\frac {1}{N}\sum \limits _{i}{r_{i}^{j}}\), and \({r_{i}^{j}}\) denotes the j-th of k algorithms on the i-th of N datasets. Friedman’s \({\chi _{F}^{2}}\) is undesirably conservative and derives a better statistic

which is distributed according to the F-distribution with k-1 and (k − 1)(N − 1) degrees of freedom.

We can obtain \({\chi _{F}^{2}}=12.09\) and F F = 3.789 according to (50) and (51), where F F is distributed according to F-distribution with (4, 40) degrees of freedom. The critical value of F(4, 40) is 2.61 for the significance level α = 0.05, and similarly it is 3.13 for α = 0.025 and 3.83 for α = 0.01. Since the value of F F is much larger than the critical value, there is significant difference between five algorithms. Note that the average rank of Asy- ν-TSVR is far lower than the remaining algorithms. It means that our Asy- ν-TSVR is more valid than other four algorithms.

5.4.5 Analyze the asymmetry of two datasets

In order to analyze the asymmetry of datasets, the kernel function-based method is used to estimate the p.d.f. of \(y-\widehat f(x)\). In the following, the p.d.f. of \(y-\widehat f(x)\) obtained by the ν-SVR and the Asy- ν-TSVR are listed. Where the results of Chwirut dataset are shown in Fig. 3, and Bodyfat dataset in Fig. 4.

Apparently, Figs. 3 and 4 imply that the asymmetry of Bodyfat dataset is not obvious. On the contrary, the Chwirut dataset shows noticeable asymmetry. However, we can find from Tables 2 and 3 that our proposed algorithm Asy- ν-TSVR outperforms the rest algorithms on these two datasets. This is because the pinball loss function enhances the generalization ability of our Asy- ν-TSVR for Asymmetric datasets. And the symmetric datasets can be regarded as the special case of the Asymmetric datasets.

5.5 Experiment on wheat dataset

There are 210 wheat samples from all over China in this real data experiments [34]. The protein content of wheat ranges from 9.83 to 20.26%, and the wet gluten of wheat ranges from 14.8 to 44.6%. They are provided by Heilongjiang research institute of agricultural science. Each sample has 1193 spectral features. They were scanned in transmission mode using a commercial spectrometer MATRIX-I. Samples were acquired in a rectangular quartz cuvette of 1-mm path length with air as reference at room temperature (20–24 ∘C). The reference spectrum was subtracted from the sample spectra to remove background noise. The rectangular quartz cuvette was cleaned after each sample was scanned to minimize cross-contamination.

In this high-dimensional data experiment, we predict the content of protein and the wet gluten of wheat using 1193 spectral features of wheat. Now that Gaussian kernel yields better generalization performance than linear kernel, we only consider the former, the prediction errors of five algorithms are summed in Tables 8 and 9.

From Tables 8 and 9, we can learn that Asy- ν-TSVR yields the lowest prediction errors (0.9122% for protein content and 2.2957% for wet gluten) among five algorithms. The prediction errors of TSVR follow. LS-SVR outperforms ν-SVR and Asy- ν-SVR either on the prediction of protein content or the prediction of wet gluten. ν-SVR and Asy- ν-SVR produce the comparable testing errors. In addition, Asy- ν-SVR produces lightly lower testing errors than ν-SVR. It implies that the pinball loss is effective in ν-SVR and TSVR. In terms of the computational time, LS-SVR costs the shortest running time since it solves a system of linear equations, but other four algorithms solve a pair of smaller-sized QPPs or a larger-sized QPP.

6 Conclusion

In this paper, we propose an Asy- ν-TSVR for the regression problem. Asy- ν-TSVR solves a pair of smaller-sized QPPs instead of a larger-sized one as in the traditional ν-SVR. So it works faster than Asy- ν-SVR. Asy- ν-TSVR employs the pinball loss function \(L_{\epsilon }^{p}(u)\) as opposed to the 𝜖-insensitive loss function L 𝜖 (u); then, it can effectively reduce the disturbance of the noise and improve the generalization performance. Three kinds of experiments demonstrate the validity of our proposed Asy- ν-TSVR. Asy- ν-TSVR degrades into the ν-TSVR when p = 0.5, so our Asy- ν-TSVR is an extended version of the ν-TSVR, and it is applicable to the symmetric and asymmetric datasets. How to apply the pinball loss function to other TSVMs is our future work.

References

Steinwart I, Christmann A (2008) Support vector machines. Springer, New York

Vapnik V (1995) The nature of statistical learning theory. Springer, New York

Shawe-Taylor J, Cristianini N (2004) Kernel methods for pattern analysis. Cambridge University Press

Cristianini N, Shawe-Taylor J (2000) An introduction to support vector machines and other kernel-based learning methods. Cambridge University Press

Schölkopf B, Smola A, Williamson RC, Bartlett PL (2000) New support vector algorithms. Neural Comput 12:1207–1245

Bi J, Bennett KP (2003) A geometric approach to support vector regression. Neurocomputing 55:79–108

Peng XJ (2010) TSVR: an efficient twin support vector machine for regression. Neural Netw 23:365–372

Peng XJ (2012) Efficient twin parametric insensitive support vector regression model. Neurocomputing 79:26–38

Peng XJ, Xu D, Shen JD (2014) A twin projection support vector machine for data regression. Neurocomputing 138:131–141

Santanu G, Mukherjee A, Dutta PK (2009) Nonparallel plane proximal classifier. Signal Process 89:510–522

Jayadeva KR, Chandra S (2007) Khemchandani Twin support vector machines for pattern classification. IEEE Trans Pattern Anal Mach Intell 29:905–910

Xu YT, Xi WW, Lv X (2012) An improved least squares twin support vector machine. J Info Comput Sci 9:1063–1071

Singh M, Chadha J, Ahuja P, Jayadeva S (2011) Chandra Reduced twin support vector regression. Neurocomputing 74:1474– 1477

Zhao YP, Zhao J, Zhao M (2013) Twin least squares support vector regression. Neurocomputing 118:225–236

Kumar MA, Gopal M (2009) Least squares twin support vector machines for pattern classification. Expert Syst Appl 36:7535–7543

Tomar D, Agarwal S (2015) Twin Support Vector Machine: a review from 2007 to 2014. Egyptian Info J 16:55–69

Huang XL, Shi L, Suykens JAK (2014) Support vector machine classifier with pinball loss. IEEE Trans Pattern Anal Mach Intell 36:984–997

Huang XL, Shi L, Pelckmans K, Suykens JAK (2014) Asymmetric ν-tube support vector regression. Comput Stat Data Anal 77:371–382

Xu YT, Yang ZJ, Zhang YQ, Pan XL, Wang LS (2016) A maximum margin and minimum volume hyper-spheres machine with pinball loss for imbalanced data classification. Knowl-Based Syst 95:75–85

Xu YT, Yang ZJ, Pan XL (2016) A novel twin support vector machine with pinball loss. IEEE Trans Neural Netw Learn Syst 28(2):359–370

Hao PY (2010) New support vector algorithms with parametric insensitive/margin model. Neural Netw 23:60–73

Le Masne Q, Pothier H, Birge NO, Urbina C, Esteve D (2009) Asymmetric noise probed with a josephson junction. Phys Rev Lett 102:067002

Yu K, Moyeed RA (2001) Bayesian quantile regression. Stat Prob Lett 54:437–447

Sengupta RN (2008) Use of asymmetric loss functions in sequential estimation problems for multiple linear regression. J Appl Stat 35:245–261

Xu YT, Guo R (2014) An improved ν-twin support vector machine. Appl Intell 41:42–54

Xu YT, Wang L, Zhong P (2012) A rough margin-based ν-twin support vector machine. Neural Comput Applic 21:1307–1317

Steinwart I, Christmann A (2011) Estimating conditional quantiles with the help of the pinball loss. Bernoulli 17:211–225

Suykens JAK, Tony VG, Jos DB et al (2002) Least squares support vector machines. World Scientific Pub Co, Singapore

Xu YT (2012) A rough margin-based linear ν support vector regression. Stat Prob Lett 82:528–534

Xu YT, Wang LS (2012) A weighted twin support vector regression. Knowl-Based Syst 33:92–101

Navia-Vzquez F, Prez-Cruz A, Arts-Rodrguezand A, Figueiras-Vidal R (2001) Weighted least squares training of support vectors classifiers which leads to compact and adaptive schemes. IEEE Trans Neural Netw 12 (5):1047–1059

Prez-Cruz J, Herrmann DJL, Scholkopf B (2003) Weston Weston Extension of the nu-SVM range for classification. In: Prez-Cruz J, Herrmann DJL, Scholkopf B (eds) Advances in learning theory: methods, models and applications. IOS Press, pp 179–196

Scholkopf B, Smola A, Bartlett P, Williamson R (2000) New support vector algorithms. Neural Comput 12(5):1207–1245

Xu YT, Wang LS (2014) k-nearest neighbor-based weighted twin support vector regression. Appl Intell 41:299–309

Gibbons JD, Chakraborti S (2011) Nonparametric statistical inference, 5th Ed. Chapman & Hall CRC Press, Taylor & Francis Group, Boca Raton

Dems̆ar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

García S, Fernández A, Luengo J, Herrera F (2010) Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining. Experimental analysis of power. Info Sci 180:2044–2064

Acknowledgments

The authors gratefully acknowledge the helpful comments and suggestions of the reviewers, which have improved the presentation. This work was supported in part by the National Natural Science Foundation of China (No. 11671010) and Natural Science Foundation of Beijing Municipality (No. 4172035).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Rights and permissions

About this article

Cite this article

Xu, Y., Li, X., Pan, X. et al. Asymmetric ν-twin support vector regression. Neural Comput & Applic 30, 3799–3814 (2018). https://doi.org/10.1007/s00521-017-2966-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-017-2966-z