Abstract

This paper considers the exponential synchronization problem for chaotic neural networks with mixed delays and impulsive effects. The mixed delays include time-varying delays and unbounded distributed delays. Delay-dependent schemes are designed to guarantee the exponential synchronization of the addressed systems by constructing a suitable Lyapunov–Krasovskii functional and employing stability theory. The synchronization conditions are given in terms of LMIs, which can be easily checked via the MATLAB LMI toolbox. Moreover, the synchronization conditions obtained are mild and more general than previously known criteria. Finally, two numerical examples and their simulations are given to show the effectiveness of the proposed chaos synchronization schemes.

1 Introduction

During the past several years, the dynamics of delayed neural networks have been extensively studied because of their important applications in many areas such as associative memory, pattern recognition and nonlinear optimization, see [1–5]. Many researchers have contributed to these subjects. However, most previous works on delayed neural networks have concentrated on stability analysis and periodic oscillations, see [6–11]. It is well known that chaos synchronization of dynamical systems has important applications in many fields including biological systems, parallel image processing, neural networks and information science, see [12–16]. Moreover, it has been shown that if the network’s parameters and time delays are appropriately chosen, delayed neural networks can exhibit complicated dynamics and even chaotic behavior [17, 18]. Hence, the synchronization of chaotic delayed neural networks has attracted many scholars, and many excellent papers and monographs dealing with synchronization of chaotic systems have been published [19–23, 27–33, 38–41]. For instance, Cheng et al. [20] investigated the exponential synchronization problem for a class of chaotic neural networks with or without constant delays via the Lyapunov stability method and the Halanay inequality. Xia et al. [22] studied the asymptotical synchronization for a class of coupled identical Yang–Yang type fuzzy cellular neural networks with time-varying delays via a Lyapunov–Krasovskii functional and the linear matrix inequality (LMI) approach.

It is well known that, due to the finite speeds of the switching and transmission of signals [7, 35], time delays, which can cause instability and oscillations in a system, do exist in a working network and thus should be incorporated into the models. Most of the existing works on delayed neural networks have dealt with neural networks with discrete delays, see for example Refs. [5, 7, 9, 18] and the references therein. As is well known, neural networks have a spatial nature due to the presence of parallel pathways with a variety of axon sizes and lengths [35], so it is desirable to model them by introducing unbounded distributed delays. In other words, unbounded distributed delays should be taken into account in neural network models alongside discrete delays [10, 11, 24–26]. Recently, some works dealing with synchronization phenomena in chaotic neural networks with discrete delays and distributed delays have appeared [27–33]. In [29–31], Li et al. investigated the exponential synchronization of neural networks with time-varying delays and finite distributed delays by using the drive–response concept, the LMI approach and the Lyapunov stability theorem. However, the time-varying delays in [29–31] are continuously differentiable and their derivatives have finite upper bounds. In [32, 33], Song further obtained asymptotical and exponential LMI-based synchronization schemes for neural networks with time-varying delays and finite distributed delays by constructing a proper Lyapunov–Krasovskii functional and using inequality techniques, which removed those restrictions on the time-varying delays. However, all the synchronization problems in [27–33] deal with chaotic neural networks with time-varying delays or finite distributed delays, and the results cannot be applied to models with unbounded distributed delays.

On the other hand, many evolutionary processes, particularly some biological systems such as biological neural networks and bursting rhythm models in pathology, undergo abrupt changes at certain moments of time due to impulsive inputs, that is, they exhibit impulsive effects [34, 35]. Neural networks as artificial electronic systems are often subject to impulsive perturbations that in turn affect the dynamical behavior of the systems. According to Haykin [35] and Arbib [36], when stimuli from the body or the external environment are received by receptors, electrical impulses are conveyed to the neural net and impulsive effects arise naturally. Moreover, impulses can also be introduced as a control mechanism to stabilize some otherwise unstable neural networks, see [37]. Hence, it is important, and in fact necessary, to investigate the dynamics of neural networks with mixed delays and impulsive effects. To date, a large number of results on the dynamics of neural networks with mixed delays and impulsive effects have been derived in the literature, see [10, 11] and the references cited therein. Recently, several results on synchronization problems of chaotic neural networks with delays and impulsive effects have appeared [23, 38–41]. In particular, Yang and Cao [39] investigated the exponential synchronization of neural networks with delays and impulsive effects by employing the Lyapunov stability theory. However, the time delays addressed there are constants. Sheng and Yang [40] further studied the exponential synchronization for a class of neural networks with unbounded distributed delays and impulsive effects by using the Lyapunov functional method. However, the results obtained in [40] not only ignore the information of the delay kernels for synchronization of chaotic neural networks but also impose certain unnecessary restrictions on the impulses.
In addition, it is widely known that results based on LMIs have advantages not only in that they can be easily verified via the MATLAB LMI toolbox, but also in that they take into consideration the neuron’s inhibitory and excitatory effects on neural networks [42]. Despite all this, the existing results have some serious drawbacks: (a) the results for the neural networks considered in [38–41] are not expressed in terms of LMIs; (b) some of the imposed conditions are restrictive; (c) they do not allow the existence of large-scale impulsive effects. Obviously, these drawbacks restrict their availability for applications.

The purpose of this paper is to consider a class of chaotic neural networks with mixed delays and impulsive effects. By constructing a suitable Lyapunov–Krasovskii functional and employing stability theory, we present delay-dependent schemes, which contain all the information in the chaotic neural networks, to guarantee the exponential synchronization of the addressed systems. The synchronization conditions are given in terms of LMIs, which can be easily checked via the MATLAB LMI toolbox. Moreover, we not only essentially drop the requirement of the traditional Lipschitz condition on the activation functions but also remove the restrictions on differentiability of the time-varying delays and the boundedness of their derivatives.

The rest of the paper is organized as follows. In Sect. 2, problem formulations and some preliminaries are introduced. In Sect. 3, we present some exponential synchronization schemes for chaotic neural networks with mixed delays and impulsive effects by constructing different suitable Lyapunov–Krasovskii functionals. Two numerical examples are given to illustrate the proposed results in Sect. 4. Finally, conclusions are given in Sect. 5.

2 Problem formulations

Let \({\mathbb {R}}\) denote the set of real numbers, \({\mathbb {Z}}_+\) the set of positive integers and \({\mathbb {R}}^n\) the n-dimensional real space equipped with the Euclidean norm \(||\cdot ||.\) \(\mathscr {A}> 0\) or \(\mathscr {A}<0\) denotes that the matrix \(\mathscr {A}\) is a symmetric and positive definite or negative definite matrix. The notations \(\mathscr {A}^T\) and \(\mathscr {A}^{-1}\) denote the transpose of \(\mathscr {A}\) and the inverse of a square matrix \(\mathscr {A}\), respectively. If \(\mathscr {A}, \mathscr {B}\) are symmetric matrices, \(\mathscr {A}>\mathscr {B}\) means that \(\mathscr {A}-\mathscr {B}\) is a positive definite matrix. I denotes the identity matrix with appropriate dimensions and \(\Lambda =\{1,2,\ldots ,n\}\). Moreover, the notation \(\star\) always denotes the symmetric block in a symmetric matrix.

Denote \({\mathbb {PC}}_b^1((-\infty ,0], {\mathbb {R}}^{n}) =\{\psi :(-\infty ,0]\rightarrow {\mathbb {R}}^{n}\) is bounded and continuously differentiable everywhere except at a finite number of points t, at which \(\psi (t^{+}), \psi (t^{-}),\psi ^{'}(t^{+})\) and \(\psi ^{'}(t^{-})\) exist, \(\psi (t^{+})=\psi (t),\) \(\psi ^{'}(t^{+})=\psi ^{'}(t)\}\), where \(\psi ^{'}\) denotes the derivative of \(\psi\). For brevity, let \({\mathbb {PC}}_b^1\doteq {\mathbb {PC}}_b^1((-\infty ,0], {\mathbb {R}}^{n}).\)

For any \(\psi \in {\mathbb {PC}}^{1}_b,\) we introduce the following norm:

Consider the following chaotic neural networks with mixed delays and impulsive effects:

where the impulse times \(t_{k}\) satisfy \(0= t_{0}<t_{1}<\ldots <t_{k}<\ldots , \lim _{k\rightarrow \infty }t_{k}=+\infty\), \(x(t)=(x_1(t),\cdots ,x_n(t))^T\) is the neuron state vector and \(f_i(x(\cdot ))=(f_{i1}(x_1(\cdot )),\cdots ,f_{in}(x_n(\cdot )))^T\), \(i=1,2,3,\) represents neuron activation functions, \(C=\hbox {diag}(c_1,\cdots ,c_n)\) is a diagonal matrix with \(c_i>0\), A, B, W are the connection weight matrix, the delayed weight matrix and the distributively delayed connection weight matrix, respectively; I(t) is a time-varying input vector, \(\tau (t)\) is the time-varying delay of the neural networks satisfying \(0\le \tau (t)\le \tau\); \(h(\cdot )=\hbox {diag}(h_1(\cdot ),\cdots ,h_n(\cdot ))\) is the delay kernel and \(D_k=\hbox {diag}(d^{(1)}_k,\cdots ,d^{(n)}_k)\) is the impulsive matrix, \(\phi (\cdot )\in {\mathbb {C}},\ {\mathbb {C}}\) is an open set in \({\mathbb {PC}}^{1}_b\).

We remark that the model formulation above implies that the neuron state x undergoes sudden changes at the discontinuity points \(t_k\) due to stimuli from the internal or external environment, that is, \(x(t_k)=(I-D_k)x(t^{-}_k),\) where \(x(t^{-}_k)\) denotes the state before the jump at \(t_k\) and \(x(t_k)=x(t^{+}_k)\) the state after it.
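For orientation, the symbol definitions and the jump relation above are consistent with a model of the following standard form. This is only a sketch assembled from those definitions; the exact arrangement of the terms, and writing the distributed delay as a convolution over \((-\infty ,t]\), are assumptions:

$$\begin{aligned} \dot{x}(t)&=-Cx(t)+Af_1(x(t))+Bf_2(x(t-\tau (t)))+W\int _{-\infty }^{t}h(t-s)f_3(x(s)){\text{d}}s+I(t),\quad t\ne t_k,\\ x(t_k)&=(I-D_k)x(t^{-}_k),\quad k\in {\mathbb {Z}}_+,\\ x(s)&=\phi (s),\quad s\in (-\infty ,0]. \end{aligned}$$

Read this way, \(D_k=0\) removes the impulses and \(W=0\) removes the distributed delay, which matches the special cases discussed later in Remark 3.3.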

For the sake of simplicity, we give the following assumptions:

\((H_1)\) The neuron activation functions \(f_{1j},\,f_{2j},\,f_{3j}\) are bounded and satisfy the following conditions:

$$\begin{aligned} \sigma ^{-}_j\le & \frac{f_{1j}(u)-f_{1j}(v)}{u-v}\le \sigma ^{+}_j,\\ \zeta ^{-}_j\le & \frac{f_{2j}(u)-f_{2j}(v)}{u-v}\le \zeta ^{+}_j,\\ \rho ^{-}_j\le & \frac{f_{3j}(u)-f_{3j}(v)}{u-v}\le \rho ^{+}_j,\quad \forall \ u,v\in {\mathbb {R}},\ u\ne v,\,j\in \Lambda , \end{aligned}$$where \(\sigma ^{-}_j,\sigma ^{+}_j,\zeta ^{-}_j,\zeta ^{+}_j,\rho ^{-}_j,\rho ^{+}_j\) are some real constants.

\((H_2)\) The delay kernels \(h_{j},j\in \Lambda ,\) are real-valued nonnegative continuous functions defined on \([0,\infty),\) and there exists a constant \(\eta >0\) such that

$$\begin{aligned} \int _{0}^{\infty }h_{j}(s){\text{d}}s\doteq {\mathbbm {h}}_{j},\quad \int _{0}^{\infty }h_{j}(s)e^{\eta s}{\text{d}}s\doteq {\mathbbm {h}}^\star _{j}<\infty ,\quad j\in \Lambda , \end{aligned}$$where \({\mathbbm {h}}_{j},{\mathbbm {h}}^\star _{j}\) denote some positive constants.
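As a quick sanity check of assumption \((H_2)\), the sketch below approximates the two integrals for the kernel \(h(s)=0.2e^{-s}\) with \(\eta =0.5\), the values used in Example 4.2. The helper name `kernel_moments`, the truncation point and the step count are illustrative choices, not part of the paper.

```python
import math


def kernel_moments(h, eta, upper=40.0, steps=200_000):
    """Approximate int_0^inf h(s) ds and int_0^inf h(s) e^{eta s} ds.

    Uses the trapezoidal rule on [0, upper]; the tail beyond `upper`
    is assumed to be negligible (true for fast-decaying kernels).
    """
    dt = upper / steps
    plain = weighted = 0.0
    for i in range(steps + 1):
        s = i * dt
        w = 0.5 if i in (0, steps) else 1.0  # trapezoid end-point weights
        plain += w * h(s) * dt
        weighted += w * h(s) * math.exp(eta * s) * dt
    return plain, weighted


def h_example(s):
    """Delay kernel from Example 4.2."""
    return 0.2 * math.exp(-s)


h_bar, h_star = kernel_moments(h_example, eta=0.5)
print(h_bar, h_star)
```

For this kernel the integrals have the closed forms \(0.2\) and \(0.2/(1-\eta )=0.4\), so both constants are finite and positive; any \(\eta \ge 1\) would make the weighted integral diverge.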

Remark 2.1

It should be noted that, as discussed in [4, 43], in many electronic circuits the input–output functions of amplifiers may be neither monotonically increasing nor continuously differentiable; thus non-monotonic functions can be more appropriate to describe the neuron activation in designing and implementing an artificial neural network. Hence, assumption \((H_1)\), in which the constants \(\sigma ^{-}_j,\sigma ^{+}_j,\zeta ^{-}_j,\zeta ^{+}_j,\rho ^{-}_j,\) \(\rho ^{+}_j\) are allowed to be positive, negative or zero, is more general than the previously used Lipschitz conditions, see, for example, [21, 39, 40]. Assumption \((H_2)\) provides information on the delay kernels which can be used in the stability criteria, and therefore more precise synchronization conditions can be obtained in the following section.
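To illustrate how the constants in \((H_1)\) can be read off for a concrete activation, the sketch below samples the difference quotient of the piecewise-linear function \(0.5(|x+1|-|x-1|)\) used in the examples of Sect. 4. The helper name `slope_range` and the sampling grid are illustrative assumptions; a finite grid only estimates the bounds.

```python
import itertools


def f(x):
    """Piecewise-linear activation used in Examples 4.1 and 4.2."""
    return 0.5 * (abs(x + 1) - abs(x - 1))


def slope_range(func, points):
    """Smallest and largest difference quotient over all pairs of distinct points."""
    slopes = [
        (func(u) - func(v)) / (u - v)
        for u, v in itertools.combinations(points, 2)
    ]
    return min(slopes), max(slopes)


# Sample points on [-5, 5] with step 0.1 (all distinct, so u != v for every pair).
grid = [i / 10 for i in range(-50, 51)]
sigma_minus, sigma_plus = slope_range(f, grid)
print(sigma_minus, sigma_plus)
```

For this activation the quotients lie in \([0,1]\) up to rounding, so one admissible choice is \(\sigma ^{-}_j=0\) and \(\sigma ^{+}_j=1\). A non-monotonic activation would produce a negative lower bound, which \((H_1)\) also allows.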

Now we consider system (1) as the master/drive system, and the slave/response system is taken as follows:

where \(\varphi (\cdot )\in {\mathbb {C}},\,u(t)\) is the control input to be designed in order to synchronize the drive system (1) and the controlled response system (2). The other notations and conditions are the same as in system (1).

For the synchronization scheme, the synchronization error is defined as

and the control input in the response system is designed as follows (inspired by the ideas in [29–33]):

where \(K_1,K_2\) are the gain matrices. Then the error dynamics between (1) and (2) can be expressed by

where \(g_i(e(\cdot ))=f_i(e(\cdot )+x(\cdot ))-f_i(x(\cdot )).\) Obviously, \(g_i(0)=0, i=1,2,3.\) Moreover, by \((H_1)\) we note that the following conditions hold:
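To see what \((H_1)\) gives for the error nonlinearities, substitute \(u=e_j+x_j\) and \(v=x_j\) into the difference quotients of \((H_1)\). Writing \(g_{ij}\) for the j-th component of \(g_i\) (a notation assumed here), this yields, for every \(e_j\ne 0\) and \(j\in \Lambda\),

$$\begin{aligned} \sigma ^{-}_j\le \frac{g_{1j}(e_j)}{e_j}\le \sigma ^{+}_j,\quad \zeta ^{-}_j\le \frac{g_{2j}(e_j)}{e_j}\le \zeta ^{+}_j,\quad \rho ^{-}_j\le \frac{g_{3j}(e_j)}{e_j}\le \rho ^{+}_j. \end{aligned}$$

Multiplying through by \(e_j^2\) gives the equivalent quadratic form, which also holds at \(e_j=0\) and is the form usually combined with diagonal multipliers in LMI-based proofs:

$$\begin{aligned} \big (g_{1j}(e_j)-\sigma ^{-}_je_j\big )\big (g_{1j}(e_j)-\sigma ^{+}_je_j\big )\le 0, \end{aligned}$$

and likewise for \(g_{2j}\) with \(\zeta ^{\pm }_j\) and \(g_{3j}\) with \(\rho ^{\pm }_j\).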

In addition, we give the following definitions:

Definition 2.1

([22]) Systems (1) and (2) are said to be exponentially synchronized if there exist constants \(\lambda >0\) and \(\mathscr {M}\ge 1\) such that \(||e(t)||\le \mathscr {M}||\varphi -\phi ||_\tau e^{-\lambda t}\) for any \(t>0.\) Constant \(\lambda\) is said to be the degree of exponential synchronization.

Definition 2.2

([23]) Systems (1) and (2) are said to be globally asymptotically synchronized if the synchronization error system (3) is globally asymptotically stable.

3 Synchronization schemes

In this section, we investigate the exponential synchronization and global asymptotical synchronization of systems (1) and (2) with or without impulsive effects by constructing suitable Lyapunov–Krasovskii functionals.

Theorem 3.1

Assume that assumptions \((H_1)\) and \((H_2)\) hold. Then systems (1) and (2) are exponentially synchronized if there exist four constants \(\alpha \in (0,\eta ),\gamma >0,\delta \in [0,\alpha ), {\mathbb {M}}\ge 1\), an \(n\times n\) matrix \(P>0,\) an \(n\times n\) invertible matrix \(Q_1\), four \(n\times n\) diagonal matrices \(Q_2>0,U_i>0,i=1,2,3,\) and a \(2n\times 2n\) matrix \(\left( \begin{array}{ll} T_{11}&T_{12}\\ \star &T_{22} \\ \end{array}\right) >0\) such that

and

where

\(\lambda ^{k}_{\max }\) denotes the maximum eigenvalue of matrix \(P^{-1}(I-D_k)P(I-D_k),k\in {\mathbb {Z}}_+\).

Proof

Consider the following Lyapunov–Krasovskii functional:

where

Calculating the time derivative of \(V_1,V_2,V_3,V_4\) along the solution of (3) at the continuous interval \([t_{k-1},t_k),k\in {\mathbb {Z}}_+\), we get

and

On the other hand, for any \(n \times n\) diagonal matrices \(U_i>0,i=1,2,3,\) it follows that

Thus, by (5)–(9), we can obtain

where

Since (4) holds, the matrix \(\Xi\) is negative definite, and then

On the other hand, we note that for any \(k\in {\mathbb {Z}}_+\)

Moreover, we know

Together with (11), it follows that for any \(k\in {\mathbb {Z}}_+\)

By simple induction, from (10) and (12) we get for \(t\in [t_m,t_{m+1}),m\in {\mathbb {Z}}_+\)

which implies that

i.e.,

In addition, it can be deduced that

where \(\rho _j=\max \{|\rho ^{-}_j|,\ |\rho ^{+}_j|\},j\in \Lambda ,\mu _{\max }\) and \(\lambda _{\max }\) denote the maximum eigenvalues of matrix \(T_{22}\) and P, respectively.

Substituting (14) into (13), we finally obtain

where

Hence, the origin of the synchronization error system (3) is globally exponentially stable, i.e., the networks (1) and (2) achieve global exponential synchronization. This completes the proof. \(\square\)

Remark 3.1

Theorem 3.1 provides sufficient conditions to ensure the exponential synchronization of systems (1) and (2). Although the derivation is lengthy, the conditions are given in terms of LMIs and are therefore easy to check. In order to show how the gain matrices \(K_1\) and \(K_2\) can be designed, a simple transformation is made to obtain the following result.

Corollary 3.1

Assume that assumptions \((H_1)\) and \((H_2)\) hold. Then systems (1) and (2) are exponentially synchronized if there exist four constants \(\alpha \in (0,\eta ),\gamma >0,\delta \in [0,\alpha ), {\mathbb {M}}\ge 1\), three \(n\times n\) matrices \(P>0, Y_1,Y_2\), an \(n\times n\) invertible matrix \(Q_1,\) four \(n\times n\) diagonal matrices \(Q_2>0,U_i>0,i=1,2,3,\) and a \(2n\times 2n\) matrix \(\left( \begin{array}{ll} T_{11} & T_{12} \\ \star &T_{22}\\ \end{array}\right) >0\) such that

and

where

\(\lambda ^{k}_{\max }\) denotes the maximum eigenvalue of matrix \(P^{-1}(I-D_k)P(I-D_k),k\in {\mathbb {Z}}_+\).

Remark 3.2

Let \(K_i=Q_1^{-1}Y_i\) in Theorem 3.1; then the above result follows immediately. In particular, if P is a positive definite diagonal matrix, then by Theorem 3.1 the following result can be obtained:

Corollary 3.2

Assume that assumptions \((H_1)\) and \((H_2)\) hold. Then systems (1) and (2) are exponentially synchronized if there exist four constants \(\alpha \in (0,\eta ),\gamma >0,\delta \in [0,\alpha ), {\mathbb {M}}\ge 1\), an \(n\times n\) invertible matrix \(Q_1\), five \(n\times n\) diagonal matrices \(P>0,Q_2>0,U_i>0,i=1,2,3,\) and a \(2n\times 2n\) matrix \(\left( \begin{array}{ll} T_{11}& T_{12} \\ \star & T_{22}\\ \end{array}\right) >0\) such that

and

where

To compare Corollary 3.2 with some previous results (e.g., [21, 38–40]), we make the following remark:

Remark 3.3

In the literature [38–40], the impulsive condition is assumed to be \(d^{(j)}_k\in [0,2]\) (or (0,2)),\(j\in \Lambda ,\ k\in {\mathbb {Z}}_+.\) Obviously, this is just a special case of Corollary 3.2, and our results can also be applied to neural networks with large impulses. In addition, consider the special case \(D_k=0\) and \(W=0,\) in which systems (1) and (2) reduce to the chaotic neural networks studied in [21] with control input \(u(t)=K e(t)\). The authors of [21] gave sufficient conditions guaranteeing the chaotic synchronization of neural networks under the assumption that the neuron activation functions are monotonically nondecreasing and the time-varying delay is differentiable. This implies that the results developed here have a wider range of applicability.
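The link with the interval \([0,2]\) can be made explicit with a short calculation, under the assumption that the impulsive condition of Corollary 3.2 is governed by the same matrix \(P^{-1}(I-D_k)P(I-D_k)\) that appears in Theorem 3.1. When P is diagonal it commutes with the diagonal matrix \(I-D_k\), so

$$\begin{aligned} P^{-1}(I-D_k)P(I-D_k)=(I-D_k)^2,\qquad \lambda ^{k}_{\max }=\max _{j\in \Lambda }\big (1-d^{(j)}_k\big )^2. \end{aligned}$$

The restriction \(d^{(j)}_k\in [0,2]\) is equivalent to \((1-d^{(j)}_k)^2\le 1\), that is, to impulses that never enlarge any error component at a jump. Values outside \([0,2]\) give \(\lambda ^{k}_{\max }>1\), which is the large-impulse case that the constants \({\mathbb {M}}\) and \(\delta\) appear intended to accommodate.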

Next, we consider the asymptotic synchronization of systems (1) and (2). First, condition \((H_2)\) in Sect. 2 is relaxed to

\((H_2^{'})\) The delay kernels \(h_{j},j\in \Lambda ,\) are real-valued nonnegative continuous functions defined on \([0,\infty )\) and satisfy

where \({\mathbbm {h}}_{j}\) denotes a positive constant.

Theorem 3.2

Assume that assumptions \((H_1)\) and \((H_2^{'})\) hold. Then systems (1) and (2) are asymptotically synchronized if there exist a constant \(\gamma >0,\) an \(n\times n\) matrix \(P>0\), an \(n\times n\) invertible matrix \(Q_1,\) four \(n\times n\) diagonal matrices \(Q_2>0,U_i>0,i=1,2,3,\) and a \(2n\times 2n\) matrix \(\left( \begin{array}{ll} T_{11} & T_{12} \\ \star &T_{22}\\ \end{array}\right) >0\) such that

and

where

Proof

Consider the following Lyapunov–Krasovskii functional:

where

The rest of the proof is similar to that of Theorem 3.1 and is omitted here.

Remark 3.4

So far, numerous synchronization schemes for chaotic neural networks have been established in the literature, see the recent papers [19–23, 27–33, 38–41]. However, the time-varying delays appearing in [21, 22, 29, 30] are required to be differentiable with derivatives that are not greater than 1 or are finite, and the delay kernels need to satisfy (i)–(iii) in [40]. Obviously, these requirements are relaxed in our results. Moreover, the sufficient conditions established in [40] ignore the information of the delay kernels for synchronization of chaotic neural networks.

Remark 3.5

Li and Bohner [23] investigated the exponential synchronization of chaotic neural networks with mixed delays and impulsive effects via output coupling with delay feedback. Their approach can be applied to the case in which only output signals can be measured in the neural networks. In the present paper, we investigate the synchronization problem of chaotic neural networks via state coupling. The two coupling strategies, state coupling and output coupling, lead to different synchronization schemes that complement each other.

4 Numerical examples

In this section, we will give two numerical examples showing the effectiveness of the results obtained. First, we consider a simple chaotic neural network with impulses, see [40].

Example 4.1

Consider a two-dimensional chaotic neural network with impulses ([40]):

where the initial condition \(\phi (s)=(-0.5,0.8)^T, s\in [-0.85,0],\, f_1=f_2=0.5(|x+1|-|x-1|),I(t)=(0,0)^T,\ D_k=\)diag\((0.1,0.1),\, t_k=2k,\,k\in {\mathbb {Z}}_+\), and parameter matrices C, A and B as follows:

To achieve synchronization, the response system is designed as follows:

where the initial condition \(\varphi (s)=(0.3,-0.2)^T, s\in [-0.85,0], \,u(t)=K_1 e(t)+K_2e(t-0.85),\,K_1\) and \(K_2\) are the controller gain matrices.

Fig. 1a–d shows the state trajectories x, y and the synchronization errors \(e_1,e_2\) between drive system (15) and response system (16) without control input (i.e., \(u(t)=(0,0)^T\)); the errors do not approach zero.

Let \(\eta =0.5, \alpha =0.49\) and \(\gamma =2.\) Using the LMI toolbox, we find that the LMIs in Corollary 3.1 have a feasible solution. Consequently, the controller gain matrices \(K_1\) and \(K_2\) are designed as follows:

Note that

Hence, one may choose \({\mathbb {M}}=1,\delta =0\) in Corollary 3.1, and systems (15) and (16) are exponentially synchronized. The simulation results are illustrated in Fig. 2a–d, in which the controller designed in (17) is applied.

Remark 4.1

In fact, one may observe that matrices \(Q_2>0,U_3>0\) and constant \(\alpha \ge 0\) in Example 4.1 can be chosen arbitrarily since the distributed delays are not involved in system (15).

Remark 4.2

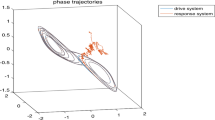

In [40], the authors studied the chaotic synchronization between drive system (15) and response system (16) with control input \(u(t)=M(f(y(t))-f(x(t))).\) In Example 4.1, a different scheme is given, which achieves chaotic synchronization with control input \(u(t)=K_1 e(t)+K_2e(t-0.85).\) Moreover, for the impulsive condition \(D_k=\)diag\((-0.5,2.5), k\in {\mathbb {Z}}_+\) (abbreviated \(D^{\star }_k\)), let \({\mathbb {M}}=1,\delta =0.46(<0.49).\) By simple calculation, we get \(\lambda ^{k}_{\max } \approx 2.2957<e^{2\delta },\) which implies by Theorem 3.1 that systems (15) and (16) are still exponentially synchronized under control input \(u(t)=K_1 e(t)+K_2e(t-0.85)\) (see Figs. 3a, b, 2c, d). However, the sufficient conditions in [40] are not satisfied, so chaotic synchronization cannot be guaranteed by [40] for the case \(D^{\star }_k\).
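The impulse check in this remark can be sketched numerically for the two-dimensional case. The function below computes the largest eigenvalue of \(P^{-1}(I-D_k)P(I-D_k)\) for a symmetric \(2\times 2\) matrix \(P>0\) and a diagonal \(D_k\). The function name `lam_max` and both matrices P used here are hypothetical: the P obtained from the LMI solution is not reproduced, so the reported value 2.2957 is not expected to come out of this sketch.

```python
import math


def lam_max(P, d):
    """Largest eigenvalue of P^{-1} (I - D) P (I - D).

    P is a symmetric positive definite 2x2 matrix given as nested lists,
    d = (d1, d2) holds the diagonal entries of the impulsive matrix D.
    """
    (p11, p12), (p21, p22) = P
    s1, s2 = 1.0 - d[0], 1.0 - d[1]
    det_p = p11 * p22 - p12 * p21
    # N = (I - D) P (I - D): entry (i, j) is s_i * p_ij * s_j.
    n11, n12 = s1 * p11 * s1, s1 * p12 * s2
    n21, n22 = s2 * p21 * s1, s2 * p22 * s2
    # M = P^{-1} N, using the closed-form inverse of a 2x2 matrix.
    m11 = (p22 * n11 - p12 * n21) / det_p
    m12 = (p22 * n12 - p12 * n22) / det_p
    m21 = (-p21 * n11 + p11 * n21) / det_p
    m22 = (-p21 * n12 + p11 * n22) / det_p
    trace, det_m = m11 + m22, m11 * m22 - m12 * m21
    # M is similar to a symmetric positive semidefinite matrix, so its
    # eigenvalues are real; clamp tiny negative rounding errors to zero.
    disc = max(trace * trace / 4.0 - det_m, 0.0)
    return trace / 2.0 + math.sqrt(disc)


P_full = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical non-diagonal P > 0
P_diag = [[3.0, 0.0], [0.0, 1.0]]   # hypothetical diagonal P > 0

lam_small = lam_max(P_full, (0.1, 0.1))     # impulses of Example 4.1
lam_large = lam_max(P_diag, (-0.5, 2.5))    # impulses D*_k of this remark
bound = math.exp(2 * 0.46)                  # e^{2*delta} with delta = 0.46
print(lam_small, lam_large, bound)
```

For \(D_k=\)diag\((0.1,0.1)\) the matrix \(I-D_k\) is a multiple of the identity, so the result is \(0.81\) for any P, which is consistent with choosing \({\mathbb {M}}=1,\delta =0\) in Example 4.1. For \(D^{\star }_k\) with a diagonal P the value is \(1.5^2=2.25\); a non-diagonal P can only increase it, so whether the bound \(e^{2\delta }\) holds depends on the particular P returned by the LMI solver.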

Remark 4.3

It should be noted that for the case \(D^{\star }_k,\) the corresponding error trajectories are the same as Fig. 2c, d since the impulsive interval \(t_k-t_{k-1}=2,k\in {\mathbb {Z}}_+\).

Example 4.2

Consider the following chaotic neural networks with mixed delays and impulsive effects:

where the initial condition \(\phi (s)=(0.5,-0.5)^T, s\in (-\infty ,0], \,f_i=0.5(|x+1|-|x-1|),i=1,2,3,\,\tau (t)=0.4,\ h(s)=0.2e^{-s},\ I(t)=(0,0)^T,\ D_k=\)diag\((0.1,0.2),\,t_k=3k,\ k\in {\mathbb {Z}}_+,\) and parameter matrices C, A, B and W as follows:

To achieve synchronization, the response system is designed as follows:

where the initial condition \(\varphi (s)=(-0.8,0.2)^T, s\in (-\infty ,0],\,u(t)=K_1 e(t)+K_2e(t-0.4),\,K_1\) and \(K_2\) are the controller gain matrices.

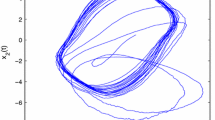

Fig. 4a–d shows the state trajectories x, y and the synchronization errors \(e_1,e_2\) between drive system (18) and response system (19) without control input (i.e., \(u(t)=(0,0)^T\)); the errors do not approach zero.

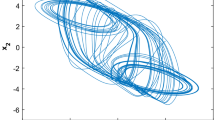

Let \(\eta =0.5,\alpha =0.48\) and \(\gamma =3.\) Using the LMI toolbox, we find that the LMIs in Corollary 3.1 have a feasible solution. The controller gain matrices \(K_1\) and \(K_2\) are designed as follows:

Note that

Hence, one may choose \({\mathbb {M}}=1,\delta =0\) in Corollary 3.1, and systems (18) and (19) are exponentially synchronized. The simulation results are illustrated in Fig. 5a–d, in which the controller designed in (20) is applied.

5 Conclusions

In this paper, chaotic neural networks with mixed delays and impulsive effects have been studied. By constructing a suitable Lyapunov–Krasovskii functional and employing stability theory, delay-dependent schemes are designed to guarantee the exponential synchronization of neural networks; these schemes differ from the existing ones and can be applied to a wider range of applications. Moreover, the obtained results are given in terms of LMIs, which can be easily checked via the MATLAB LMI toolbox. Finally, two numerical examples and their simulations have been given to verify the theoretical results. The idea in this paper can be extended to the study of synchronization control of complex-valued neural networks, but it is difficult to apply to impulsive neural networks with state delay. More methods and tools should be explored and developed in this direction.

References

Shen Y, Wang M (2008) Broadcast scheduling in wireless sensor networks using fuzzy Hopfield neural network. Expert Syst Appl 34:900–907

Marcu T, Köppen-Seliger B, Stucher R (2008) Design of fault detection for a hydraulic looper using dynamic neural networks. Control Eng Pract 16:192–213

Maeda Y, Wakamura M (2005) Bidirectional associative memory with learning capability using simultaneous perturbation. Neurocomputing 69:182–197

Liao X, Chen G, Sanchez E (2002) LMI-based approach for asymptotically stability analysis of delayed neural networks. IEEE Trans Circuits Syst I 49:1033–1039

Liu X, Dickson R (2001) Stability analysis of Hopfield neural networks with uncertainty. Math Comput Model 34:353–363

Cao J, Dong M (2003) Exponential stability of delayed bi-directional associative memory networks. Appl Math Comput 135:105–112

Park J, Kwon O (2009) Global stability for neural networks of neutral-type with interval time-varying delays. Chaos Solitons Fractals 41:1174–1181

Xia Y, Huang Z, Han M (2008) Exponential \(p\)-stability of delayed Cohen–Grossberg-type BAM neural networks with impulses. Chaos Solitons Fractals 38:806–818

Liu X, Teo K, Xu B (2005) Exponential stability of impulsive high-order Hopfield-type neural networks with time-varying delays. IEEE Trans Neural Netw 16:1329–1339

Pan J, Liu X, Zhong S (2010) Stability criteria for impulsive reaction–diffusion Cohen–Grossberg neural networks with time-varying delays. Math Comput Model 51:1037–1050

Li X (2009) Existence and global exponential stability of periodic solution for impulsive Cohen–Grossberg-type BAM neural networks with continuously distributed delays. Appl Math Comput 215:292–307

Feki M (2003) An adaptive chaos synchronization scheme applied to secure communication. Chaos Solitons Fractals 18:141–148

Li C, Liao X, Wong K (2004) Chaotic lag synchronization of coupled time-delayed systems and its applications in secure communication. Phys D 194:187–202

Pecora L, Carroll T (1990) Synchronization in chaotic systems. Phys Rev Lett 64(24):821–824

Carroll T, Pecora L (1991) Synchronizing chaotic circuits. IEEE Trans Circuits Syst 38(4):453–456

Sundar S, Minai A (2000) Synchronization of randomly multiplexed chaotic systems with application to communication. Phys Rev Lett 85:5456–5459

Lu H (2002) Chaotic attractors in delayed neural networks. Phys Lett A 298:109–116

Yu W, Cao J (2007) Synchronization control of stochastic delayed neural networks. Phys A 373:252–260

Liu M (2009) Optimal exponential synchronization of general chaotic delayed neural networks: an LMI approach. Neural Netw 22:949–957

Cheng C, Liao T, Hwang C (2005) Exponential synchronization of a class of chaotic neural networks. Chaos Solitons Fractals 24:197–206

Gao X, Zhong S, Gao F (2009) Exponential synchronization of neural networks with time-varying delays. Nonlinear Anal Theory Methods Appl 71:2003–2011

Xia Y, Yang Z, Han M (2009) Synchronization schemes for coupled identical Yang–Yang type fuzzy cellular neural networks. Commun Nonlinear Sci Numer Simul 14:3645–3659

Li X, Bohner M (2010) Exponential synchronization of chaotic neural networks with mixed delays and impulsive effects via output coupling with delay feedback. Math Comput Model 52:643–653

Liu Y, Wang Z, Liu X (2006) Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw 19:667–675

Song Q, Cao J (2006) Stability analysis of Cohen–Grossberg neural network with both time-varying and continuously distributed delays. J Comput Appl Math 197:188–203

Rakkiyappan R, Balasubramaniam P, Lakshmanan S (2008) Robust stability results for uncertain stochastic neural networks with discrete interval and distributed time-varying delays. Phys Lett A 372:5290–5298

Wang K, Teng Z, Jiang H (2008) Adaptive synchronization of neural networks with time-varying delay and distributed delay. Phys A 387:631–642

Tang Y et al (2008) Adaptive lag synchronization in unknown stochastic chaotic neural networks with discrete and distributed time-varying delays. Phys Lett A 372:4425–4433

Li T et al (2008) Exponential synchronization of chaotic neural networks with mixed delays. Neurocomputing 71:3005–3019

Li T et al (2009) Synchronization control of chaotic neural networks with time-varying and distributed delays. Nonlinear Anal Theory Methods Appl 71:2372–2384

Li T, Fei S, Zhang K (2008) Synchronization control of recurrent neural networks with distributed delays. Phys A 387:982–996

Song Q (2009) Design of controller on synchronization of chaotic neural networks with mixed time-varying delays. Neurocomputing 72:3288–3295

Song Q (2009) Synchronization analysis of coupled connected neural networks with mixed time delays. Neurocomputing 72:3907–3914

Lakshmikantham V, Bainov D, Simeonov P (1989) Theory of impulsive differential equations. World Scientific, Singapore

Haykin S (1998) Neural networks: a comprehensive foundation. Prentice-Hall, Englewood Cliffs

Arbib M (1987) Brains, machines, and mathematics. Springer, New York

Liu X, Wang Q (2008) Impulsive stabilization of high-order Hopfield-type neural networks with time varying delays. IEEE Trans Neural Netw 19:71–79

Ding W, Han M, Li M (2009) Exponential lag synchronization of delayed fuzzy cellular neural networks with impulses. Phys Lett A 373:832–837

Yang Y, Cao J (2007) Exponential lag synchronization of a class of chaotic delayed neural networks with impulsive effects. Phys A 386:492–502

Sheng L, Yang H (2008) Exponential synchronization of a class of neural networks with mixed time-varying delays and impulsive effects. Neurocomputing 71:3666–3674

Zhou J, Xiang L, Liu Z (2007) Synchronization in complex delayed dynamical networks with impulsive effects. Phys A 384:684–692

Gahinet P, Nemirovski A, Laub A, Chilali M (1995) LMI control toolbox user’s guide. The MathWorks, Natick

Wang Z, Shu H, Liu Y, Ho DWC, Liu X (2006) Robust stability analysis of generalized neural networks with discrete and distributed time delays. Chaos Solitons Fractals 30:886–896

Acknowledgments

This work was supported by National Natural Science Foundation of China (No. 11301308), China PSFF (2014M561956, 2015T80737), the Research Fund for International Cooperation Training Programme of Excellent Young Teachers of Shandong Normal University (201411201711) and the Fund of the University of Dammam College of Science (2015296).

Cite this article

Alzahrani, E.A., Akca, H. & Li, X. New synchronization schemes for delayed chaotic neural networks with impulses. Neural Comput & Applic 28, 2823–2837 (2017). https://doi.org/10.1007/s00521-016-2218-7

DOI: https://doi.org/10.1007/s00521-016-2218-7