Abstract

We apply a new analytical technique to solve nonlinear fractional partial differential equations arising in biological population dynamics. We present in detail the stability, convergence, and uniqueness analysis by constructing a suitable Hilbert space. Some exact analytical solutions are given, and some of their properties indicate a biologically realistic dependence on the parameter values. The uniformity of the procedure and the reduced amount of computation give it wider applicability. In all examples, the series solution yields the exact solution in the limit of infinitely many terms.

1 Introduction

In recent years, fractional calculus has been applied in many areas, including electrical networks, control theory of dynamical systems, probability and statistics, electrochemistry of corrosion, chemical physics, optics, engineering, acoustics, viscoelasticity, materials science, and signal processing, where phenomena can be successfully modeled by linear or nonlinear fractional-order differential equations [1–9]. However, exact solutions of nonlinear fractional differential equations are difficult to obtain [10].

Late eighteenth-century biologists began to develop techniques in population modeling in order to understand the dynamics of growing and shrinking populations of living organisms. While contemplating the fate of humankind, Thomas Malthus was one of the first to note that populations grow geometrically [11]. One of the most basic and influential models of population growth is the logistic model formulated by Pierre François Verhulst in 1838. The logistic model has the shape of a sigmoid curve: it describes population growth that is initially exponential, then slows down, and is bounded by a carrying capacity due to environmental pressures [12]. Population modeling became of particular interest to biologists in the twentieth century, when biologists such as Raymond Pearl noticed the pressure that increasing human populations in parts of Europe placed on limited means of sustenance. In 1921, Pearl invited the physicist Alfred J. Lotka to assist him in his laboratory. Lotka developed paired differential equations that showed the effect of a parasite on its prey. The mathematician Vito Volterra, independently of Lotka, derived equations for the relationship between two species. Together, their work gave the Lotka–Volterra competition model, which applies the logistic equation to two species and describes competition, predation, and parasitism interactions between species [3]. In 1939, Patrick Leslie contributed to population modeling as he began work in biomathematics.

In this paper, we apply a modified iteration method to the time-fractional biological population model [13]. A representative biological population diffusion equation is \( u_{t}(x, y, t) = (u^{2})_{xx} + (u^{2})_{yy} + \sigma(u) \), where u(x, y, t) denotes the population density and σ(u) represents the population supply due to births and deaths. Here, we consider a generalized time-fractional nonlinear biological population diffusion equation of the form

\( \frac{\partial^{\alpha} u}{\partial t^{\alpha}} = \frac{\partial^{2} (u^{2})}{\partial x^{2}} + \frac{\partial^{2} (u^{2})}{\partial y^{2}} + \sigma(u), \quad t > 0, \; 0 < \alpha \le 1, \; (x, y) \in \mathbb{R}^{2}, \)   (1)

subject to the initial condition

\( u(x, y, 0) = u_{0}(x, y). \)   (2)

Following the Malthusian and Verhulst laws, we consider the more general form of σ(u)

\( \sigma(u) = h u^{a} (1 - r u^{b}), \)   (3)

where a, b, r, and h are real numbers.
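A minimal sketch of the supply term, assuming the generalized form \( \sigma(u) = h u^{a}(1 - r u^{b}) \); the Malthusian law corresponds to r = 0 and a = 1, and the Verhulst (logistic) law to a = b = 1. The function name and its default arguments are illustrative only.

```python
def sigma(u, h, a=1.0, r=0.0, b=1.0):
    """Population supply sigma(u) = h * u**a * (1 - r * u**b) (assumed generalized form).

    r = 0, a = 1 gives the Malthusian law sigma(u) = h*u;
    a = b = 1 gives the Verhulst (logistic) law sigma(u) = h*u*(1 - r*u).
    """
    return h * u**a * (1.0 - r * u**b)


print(sigma(2.0, h=0.5))                        # Malthusian: 1.0
print(sigma(4.0, h=0.5, a=1.0, r=0.25, b=1.0))  # Verhulst at the carrying capacity 1/r: 0.0
```

In the Verhulst case the supply vanishes at u = 1/r, which plays the role of the carrying capacity.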

The derivative in Eq. (1) is the Caputo derivative. Linear and nonlinear population systems were solved in [13] and [14] using the variational iteration method (VIM) and the Adomian decomposition method (ADM). However, ADM requires the often laborious calculation of Adomian polynomials, and VIM requires the identification of a Lagrange multiplier and the construction of a so-called correction functional. The homotopy decomposition method (HDM) was recently proposed by Atangana [15–17] to solve equations with fractional derivatives. In this paper, we extend the applicability of HDM to the fractional population system (1). To demonstrate the effectiveness of the HDM algorithm, several examples of fractional biological population systems are presented.

2 Basic idea of the homotopy decomposition method

To illustrate the basic idea of the method, we consider a general nonlinear nonhomogeneous fractional partial differential equation with initial conditions of the following form [15, 16]

Subject to the initial condition

where \( \frac{\partial^{\alpha}}{\partial t^{\alpha}} \) denotes the Caputo or Riemann–Liouville fractional derivative operator, f is a known function, N is the general nonlinear fractional differential operator, and L represents a linear fractional differential operator. The first step of the method is to convert the fractional partial differential equation into a fractional partial integral equation by applying the inverse operator of \( \frac{\partial^{\alpha}}{\partial t^{\alpha}} \) to both sides of Eq. (4). In the case of the Riemann–Liouville fractional derivative, we obtain

In the case of the Caputo fractional derivative, we obtain

or, in general, by setting

We obtain the following:
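In both cases the integral operator involved is the Riemann–Liouville fractional integral \( I^{\alpha} f(t) = \frac{1}{\Gamma(\alpha)} \int_0^t (t-\tau)^{\alpha-1} f(\tau)\,d\tau \). As a minimal numerical sketch (standard library only; the function names and the substitution-plus-midpoint quadrature are our own choices, not part of the HDM), it can be evaluated and checked against the power rule \( I^{\alpha} t^{\beta} = \frac{\Gamma(\beta+1)}{\Gamma(\beta+\alpha+1)} t^{\beta+\alpha} \), which is what makes the HDM iterates computable in closed form for power-type data.

```python
import math


def frac_integral(f, t, alpha, n=20000):
    """Approximate the Riemann-Liouville integral I^alpha f(t) for alpha > 0.

    Substituting s = (t - tau)**alpha / alpha turns
        I^alpha f(t) = 1/Gamma(alpha) * int_0^t (t - tau)**(alpha - 1) f(tau) dtau
    into 1/Gamma(alpha) * int_0^{t**alpha/alpha} f(t - (alpha*s)**(1/alpha)) ds,
    which has no weak singularity and is evaluated with the composite midpoint rule.
    """
    upper = t**alpha / alpha
    ds = upper / n
    total = 0.0
    for k in range(n):
        s = (k + 0.5) * ds
        total += f(t - (alpha * s) ** (1.0 / alpha))
    return total * ds / math.gamma(alpha)


def power_rule(beta, alpha, t):
    """Closed form I^alpha t^beta = Gamma(beta+1)/Gamma(beta+alpha+1) * t^(beta+alpha)."""
    return math.gamma(beta + 1) / math.gamma(beta + alpha + 1) * t ** (beta + alpha)


# Compare the quadrature with the power rule for f(tau) = tau**2 at t = 1.
for alpha in (0.5, 0.75, 1.0):
    approx = frac_integral(lambda tau: tau**2, 1.0, alpha)
    print(alpha, approx, power_rule(2, alpha, 1.0))
```

For α = 1 the substitution reduces to s = t − τ and the routine returns an ordinary integral.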

In the HDM, the basic assumption is that the solutions can be written as a power series in p

and the nonlinear term can be decomposed as

where \( p \in (0, 1] \) is an embedding parameter, and \( {\mathcal{H}}_{n} (U) \) are He’s polynomials [21, 22], which can be generated by

The HDM is obtained by combining the homotopy technique with He’s polynomials and is given by

Comparing the terms of the same powers of p gives solutions of various orders, the first term being
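He’s polynomials are the coefficients of \( p^{n} \) in the expansion of \( N\left(\sum_{k} p^{k} U_{k}\right) \), that is, \( \mathcal{H}_{n} = \frac{1}{n!} \frac{\partial^{n}}{\partial p^{n}} N\left(\sum_{k} p^{k} U_{k}\right)\big|_{p=0} \). For a polynomial nonlinearity such as the \( u^{2} \) inside Eq. (1), they can be obtained by truncated power-series multiplication, as in this sketch. The function names are illustrative, and the components are the numerical values of the \( U_{k} \) at a single point (x, y, t).

```python
def series_mul(a, b, order):
    """Product of two truncated power series in p (coefficient lists), kept up to p**order."""
    out = [0.0] * (order + 1)
    for i, ai in enumerate(a[: order + 1]):
        for j, bj in enumerate(b[: order + 1 - i]):
            out[i + j] += ai * bj
    return out


def he_polynomials(components, power):
    """He's polynomials H_0..H_n for the monomial nonlinearity N(U) = U**power.

    H_n is the coefficient of p**n in N(sum_k p**k U_k); `components` = [U_0, ..., U_n].
    """
    order = len(components) - 1
    result = [1.0] + [0.0] * order  # the constant series "1"
    for _ in range(power):
        result = series_mul(result, components, order)
    return result


# For N(U) = U**2: H_0 = U0^2, H_1 = 2 U0 U1, H_2 = 2 U0 U2 + U1^2.
print(he_polynomials([1.0, 2.0, 3.0], 2))  # [1.0, 4.0, 10.0]
```

For Eq. (1), the spatial derivatives \( (\cdot)_{xx} + (\cdot)_{yy} \) are then applied to each \( \mathcal{H}_{n} \) of \( u^{2} \).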
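For the Caputo case, and assuming Eq. (4) is written as \( \frac{\partial^{\alpha} U}{\partial t^{\alpha}} + L(U) + N(U) = f(r, t) \) with \( m - 1 < \alpha \le m \) (r standing for the spatial variables), the construction leads to the following scheme. It is a sketch of the usual HDM formulation under these assumptions; T(r, t) collects the initial data and the source term.

```latex
\begin{aligned}
T(r,t) &= \sum_{j=0}^{m-1} \frac{t^{j}}{j!}\,\frac{\partial^{j} U}{\partial t^{j}}(r,0)
        + \frac{1}{\Gamma(\alpha)} \int_{0}^{t} (t-\tau)^{\alpha-1} f(r,\tau)\,d\tau, \\
\sum_{n=0}^{\infty} p^{n} U_{n} &= T(r,t) - \frac{p}{\Gamma(\alpha)} \int_{0}^{t} (t-\tau)^{\alpha-1}
        \Big[ L\Big(\sum_{n=0}^{\infty} p^{n} U_{n}\Big) + \sum_{n=0}^{\infty} p^{n} \mathcal{H}_{n}(U) \Big] d\tau, \\
U_{0}(r,t) &= T(r,t), \\
U_{n}(r,t) &= -\frac{1}{\Gamma(\alpha)} \int_{0}^{t} (t-\tau)^{\alpha-1}
        \big[ L(U_{n-1}) + \mathcal{H}_{n-1}(U) \big]\,d\tau, \qquad n \ge 1, \\
U(r,t) &= \lim_{p \to 1} \sum_{n=0}^{\infty} p^{n} U_{n}(r,t) = \sum_{n=0}^{\infty} U_{n}(r,t).
\end{aligned}
```

Each \( U_{n} \) is thus obtained from \( U_{n-1} \) and \( \mathcal{H}_{n-1} \) by one fractional integration.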

3 Convergence and uniqueness analysis

In the literature, there are many papers dealing with solutions of nonlinear differential equations. However, many of them do not present a stability or uniqueness analysis; they consist mainly of worked examples and figures, with little mathematical justification of the method. It is important to point out that the most difficult task after presenting such examples is to prove the stability of the method. We devote this section to the analysis of the convergence of the method for the fractional biological population equation and of the uniqueness of the special solution obtained with it. We begin with the following definition (Fig. 1).

Definition 1

Let \( \varOmega = [a, b] \) \( (-\infty \le a < b \le \infty) \) be a finite or infinite interval of the real axis \( \mathbb{R} = (-\infty, \infty) \). We denote by \( L_{p}(a, b) \) \( (1 \le p \le \infty) \) the set of Lebesgue measurable complex-valued functions f on Ω for which \( \| f \|_{p} < \infty \), where

\( \| f \|_{p} = \left( \int_{a}^{b} | f(t) |^{p} \, dt \right)^{1/p} \quad (1 \le p < \infty), \qquad \| f \|_{\infty} = \operatorname{ess\,sup}_{a \le t \le b} | f(t) |. \)

In addition to the above definition, we recall the following theorem.

Theorem 1

If \( h(t) \in L_{1} \left( {\mathbb{R}} \right) \) and \( h_{1} (t) \in L_{p} \left( {\mathbb{R}} \right) \), then their convolution \( \left( {h*h_{1} } \right)\left( x \right) \in L_{p} \left( {\mathbb{R}} \right)\left( {1 \le p \le \infty } \right) \), and the following inequality holds [3]:

\( \| h * h_{1} \|_{p} \le \| h \|_{1} \, \| h_{1} \|_{p}. \)

In particular, if \( h(t) \in L_{1} \left( {\mathbb{R}} \right) \) and \( h_{1} (t) \in L_{2} \left( {\mathbb{R}} \right) \), then their convolution \( \left( {h*h_{1} } \right)\left( x \right) \in L_{2} \left( {\mathbb{R}} \right) \) and \( \| h * h_{1} \|_{2} \le \| h \|_{1} \, \| h_{1} \|_{2} \).
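Theorem 1 is Young's convolution inequality. A quick numerical sanity check is possible on its discrete analogue for finite sequences (counting measure on the integers instead of Lebesgue measure on \( \mathbb{R} \)), where the same bound \( \|h * h_{1}\|_{p} \le \|h\|_{1} \|h_{1}\|_{p} \) holds. This sketch uses randomly generated test data and is an illustration, not a proof.

```python
import math
import random


def convolve(h, g):
    """Full discrete convolution (h * g)[n] = sum_k h[k] * g[n - k]."""
    out = [0.0] * (len(h) + len(g) - 1)
    for i, hi in enumerate(h):
        for j, gj in enumerate(g):
            out[i + j] += hi * gj
    return out


def lp_norm(v, p):
    """Discrete l^p norm of a finite sequence; p = math.inf gives the maximum modulus."""
    if p == math.inf:
        return max(abs(x) for x in v)
    return sum(abs(x) ** p for x in v) ** (1.0 / p)


random.seed(1)  # hypothetical test data
h = [random.uniform(-1.0, 1.0) for _ in range(50)]
h1 = [random.uniform(-1.0, 1.0) for _ in range(80)]
for p in (1, 2, 3.5, math.inf):
    lhs = lp_norm(convolve(h, h1), p)
    rhs = lp_norm(h, 1) * lp_norm(h1, p)
    print(f"p={p}: ||h*h1||_p = {lhs:.4f} <= ||h||_1 ||h1||_p = {rhs:.4f}")
```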

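There is a vast literature on different definitions of fractional derivatives. The most popular ones are the Riemann–Liouville and the Caputo derivatives. For the Caputo derivative, we have

\( {}^{C}D_{t}^{\alpha} f(t) = \frac{1}{\Gamma(n - \alpha)} \int_{0}^{t} (t - \tau)^{n - \alpha - 1} f^{(n)}(\tau) \, d\tau, \quad n - 1 < \alpha < n. \)

For the Riemann–Liouville derivative, we have the following definition:

\( D_{t}^{\alpha} f(t) = \frac{1}{\Gamma(n - \alpha)} \frac{d^{n}}{dt^{n}} \int_{0}^{t} (t - \tau)^{n - \alpha - 1} f(\tau) \, d\tau, \quad n - 1 < \alpha < n. \)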

Each of these fractional derivatives has its advantages and weaknesses [2, 6]. The Riemann–Liouville derivative of a constant is not zero, while the Caputo derivative of a constant is zero, but the Caputo derivative requires higher regularity [2, 6]: to compute the fractional derivative of a function in the Caputo sense, we must first calculate its derivative. Caputo derivatives are therefore defined only for differentiable functions, whereas functions that have no first-order derivative may have Riemann–Liouville fractional derivatives of all orders less than one [18, 19]. Recently, Guy Jumarie (see [20]) proposed a simple alternative definition of the Riemann–Liouville derivative.

His modified Riemann–Liouville derivative seems to combine the advantages of the standard Riemann–Liouville and Caputo fractional derivatives: it is defined for arbitrary continuous (nondifferentiable) functions, and the fractional derivative of a constant is zero. However, by its definition, it does not give the fractional derivative of a function f(x) itself but that of f(x) − f(0), and it is therefore not defined for functions that do not exist at the origin.
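To make the contrast concrete, the sketch below approximates the Riemann–Liouville derivative with the Grünwald–Letnikov sum (a standard discretization that converges to the Riemann–Liouville derivative for suitably smooth f; it is our choice here and not part of the HDM). For 0 < α < 1 and differentiable f, the Caputo derivative is obtained from the identity \( {}^{C}D^{\alpha} f = D^{\alpha}[f - f(0)] \); the same expression is Jumarie's modified derivative, which is why both annihilate constants. The function names and the step count n are illustrative.

```python
import math


def rl_derivative_gl(f, t, alpha, n=2000):
    """Grunwald-Letnikov approximation of the Riemann-Liouville derivative D^alpha f(t), base point 0.

    D^alpha f(t) ~ step**(-alpha) * sum_{k=0}^{n} w_k * f(t - k*step), step = t/n,
    where w_k = (-1)**k * binom(alpha, k) is built by w_k = w_{k-1} * (1 - (alpha + 1)/k).
    """
    step = t / n
    weight = 1.0
    total = f(t)
    for k in range(1, n + 1):
        weight *= 1.0 - (alpha + 1.0) / k
        total += weight * f(t - k * step)
    return total / step**alpha


def caputo_derivative(f, t, alpha, n=2000):
    """Caputo derivative (0 < alpha < 1, differentiable f) via D_RL^alpha [f - f(0)].

    The same expression defines Jumarie's modified Riemann-Liouville derivative.
    """
    f0 = f(0.0)
    return rl_derivative_gl(lambda s: f(s) - f0, t, alpha, n)


alpha, t = 0.5, 1.0
# Riemann-Liouville derivative of the constant 1 is t**(-alpha)/Gamma(1-alpha), not zero.
print(rl_derivative_gl(lambda s: 1.0, t, alpha), t**-alpha / math.gamma(1 - alpha))
# Caputo derivative of a constant vanishes identically.
print(caputo_derivative(lambda s: 1.0, t, alpha))  # 0.0
```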

Lemma 1

[3] The fractional integration operators with \( \Re (\alpha ) > 0 \) are bounded in \( L_{p} \left( {a, b} \right)\left( {1 \le p \le \infty } \right) \).

To prove convergence and uniqueness, we consider Eq. (1) in the Hilbert space \( {\mathcal{H}} = L^{2} \left( {\left( {\eta , \lambda } \right) \times \left[ {0, T} \right]} \right) \), defined as
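Lemma 1 can be read as a consequence of Theorem 1: for real α > 0 and f extended by zero outside (a, b), \( I^{\alpha} f \) on (a, b) is the convolution of f with the kernel \( k(t) = t^{\alpha-1}/\Gamma(\alpha) \) restricted to \( (0, b - a) \), whose \( L_{1} \) norm is \( (b-a)^{\alpha}/\Gamma(\alpha+1) \), so \( \|I^{\alpha} f\|_{p} \le \frac{(b-a)^{\alpha}}{\Gamma(\alpha+1)} \|f\|_{p} \). The sketch below checks this bound in \( L_{2}(0, 1) \) for power functions \( f(t) = t^{\beta} \), using closed forms only; the test functions and names are illustrative choices.

```python
import math


def l2_ratio(alpha, beta):
    """||I^alpha f||_2 / ||f||_2 on (0, 1) for f(t) = t**beta, beta > -1/2.

    Uses I^alpha t^beta = Gamma(beta+1)/Gamma(beta+alpha+1) * t^(beta+alpha)
    and ||t^g||_2 = 1/sqrt(2g + 1) on (0, 1).
    """
    c = math.gamma(beta + 1) / math.gamma(beta + alpha + 1)
    return c * math.sqrt(2 * beta + 1) / math.sqrt(2 * (beta + alpha) + 1)


def young_constant(alpha, a=0.0, b=1.0):
    """L1 norm of the kernel t^(alpha-1)/Gamma(alpha) on (0, b - a)."""
    return (b - a) ** alpha / math.gamma(alpha + 1)


for alpha in (0.3, 0.5, 1.0, 2.5):
    worst = max(l2_ratio(alpha, beta) for beta in (-0.25, 0.0, 0.5, 2.0, 7.0))
    print(f"alpha={alpha}: max ratio {worst:.4f} <= K = {young_constant(alpha):.4f}")
```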

Then, the operator is of the form

The proposed method is convergent if the following requirements are satisfied.

Proposition 1

There exists a positive constant β such that the following inner-product inequality holds in \( {\mathbf{\mathcal{H}}} \)

Proposition 2

For all \( u, v \in {\mathcal{H}} \) that are bounded, that is, for which there is a positive constant χ such that \( \left\| u \right\|,\left\| v \right\| \le \chi \), there exists \( \varPhi \left( \chi \right) > 0 \) such that

We can therefore state the following theorem, which gives a sufficient condition for the convergence of the method for Eq. (1).
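In the convergence framework usually employed for decomposition methods, the two requirements take the following form, where T denotes the operator introduced above and (·, ·) and ‖·‖ are the inner product and norm of \( \mathcal{H} \). This is the customary statement of the two conditions, given here as an assumed reference form.

```latex
\begin{aligned}
&\text{Proposition 1:} && \big( T(u) - T(v),\, u - v \big) \ge \beta \, \| u - v \|^{2}
  \quad \text{for all } u, v \in \mathcal{H}, \\
&\text{Proposition 2:} && \big( T(u) - T(v),\, w \big) \le \Phi(\chi)\, \| u - v \| \, \| w \|
  \quad \text{for all } w \in \mathcal{H},\ \|u\|, \|v\| \le \chi .
\end{aligned}
```

Proposition 1 is a strong monotonicity condition and Proposition 2 a local Lipschitz-type bound on T.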

Theorem 2

Let us consider

together with the initial and boundary conditions for Eq. (1). Then the proposed method leads to a special solution of Eq. (1). We prove this theorem by verifying Propositions 1 and 2.

Proof 1

Using the defined operator, we obtain the following

With this reduction, we can evaluate the following inner product

We assume that \( u, v \) are bounded, so that we can find a positive constant l bounding their inner products: \( (u, v), (v, v), (u, u) < l \). Using the Cauchy–Schwarz inequality, we first obtain

Since we can find two positive constants \( \rho_{1}, \rho_{2} \) such that \( \left\| {\left( {u^{2} - v^{2} } \right)_{xx} } \right\| \le \rho_{1} \rho_{2} \left\| {u^{2} - v^{2} } \right\| \), and since (u, u) < l, we have

So that

In the same manner, we obtain that

Let us now consider the term \( (h[u^{a} - v^{a}], u - v) \). Using the Cauchy–Schwarz inequality again, we obtain

Note that,

Therefore, using the fact that \( (u, v), (v, v), (u, u) < l \), we obtain the following inequality

In a similar manner, we obtain

We therefore conclude that

Now take

We conclude that

Hence, Proposition 1 is verified. We now turn to Proposition 2. To do this, we compute

with

Hence, Proposition 2 is also verified. In light of Theorem 1, Propositions 1 and 2, and Lemma 1, we conclude that the decomposition method used here converges for the fractional biological population equation.

Theorem 3

Given the initial conditions for Eq. (1), the special solution \( u_{\text{esp}} \) of Eq. (1) to which u converges is unique.

Proof 3

Suppose that there is another special solution, say \( v_{\text{esp}} \). Using the inner product together with Proposition 1, we have

Since the method converges, for any small positive number ε we can find a natural number \( m_{1} \) such that the following inequality holds

Similarly, we can find another natural number \( m_{2} \) such that

Taking \( m = \max(m_{1}, m_{2}) \), we have

Using the triangle inequality, we obtain

It therefore follows that

By the properties of the inner product, the above equation implies that

This concludes the uniqueness of our special solution.
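Written out, the triangle-inequality step typically runs as follows, where \( u_{m} \) (an illustrative symbol) denotes the m-th partial sum of the HDM series and the two bounds come from the choices of \( m_{1} \) and \( m_{2} \); this is a sketch of the standard argument under those assumptions.

```latex
\begin{aligned}
&\| u_{\text{esp}} - u_{m} \| < \varepsilon \quad (m > m_{1}), \qquad
 \| v_{\text{esp}} - u_{m} \| < \varepsilon \quad (m > m_{2}), \\
&\| u_{\text{esp}} - v_{\text{esp}} \| \le \| u_{\text{esp}} - u_{m} \| + \| u_{m} - v_{\text{esp}} \|
 < 2\varepsilon \quad \big(m > \max(m_{1}, m_{2})\big).
\end{aligned}
```

Since ε > 0 is arbitrary, \( \| u_{\text{esp}} - v_{\text{esp}} \| = 0 \), so \( (u_{\text{esp}} - v_{\text{esp}}, u_{\text{esp}} - v_{\text{esp}}) = 0 \) and hence \( u_{\text{esp}} = v_{\text{esp}} \).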

4 Application of algorithm

In this section, we apply the method to solve the fractional biological population equation with time- and space-fractional derivatives.

Example 1

Consider Eq. (1) with a = 1 and r = 0, corresponding to the Malthusian law. We obtain the following fractional biological population equation:

Subject to the initial condition

Following the HDM presented earlier in Sect. 2, we obtain the following equation.

Comparing the terms of the same power of p yields:

The following solutions are obtained

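It follows that, for N = n, the approximate solution given by HDM is

Therefore,

Here, \( E_{\alpha}(h t^{\alpha}) \) is the Mittag–Leffler function, defined as \( E_{\alpha}(z) = \sum_{k=0}^{\infty} \frac{z^{k}}{\Gamma(\alpha k + 1)} \).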

Now, notice that if α = 1, Eq. (46) is reduced to the following:

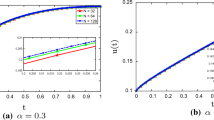

This is the exact solution for Example 1 when α = 1. The following figures show the approximate solution (45) and the exact solution for different values of α. They indicate that the approximate solution of the fractional biological population model depends continuously on α and increases as α decreases (Fig. 2).
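For the Malthusian case the HDM iterates can be generated mechanically. A minimal sketch, assuming an initial profile \( u_{0}(x, y) \) whose square is harmonic (for instance \( u_{0} = \sqrt{xy} \); an assumed choice), so that \( (u^{2})_{xx} + (u^{2})_{yy} \) vanishes at every order and the recursion reduces to \( U_{n} = h I^{\alpha} U_{n-1} \). Writing \( U_{n} = c_{n} u_{0}(x, y) t^{n\alpha} \), the power rule gives \( c_{n} = h^{n}/\Gamma(n\alpha + 1) \), so the partial sums approach \( u_{0}(x, y) E_{\alpha}(h t^{\alpha}) \). The code compares them with the closed forms \( E_{1}(z) = e^{z} \) and \( E_{1/2}(z) = e^{z^{2}} \operatorname{erfc}(-z) \); the function names are illustrative.

```python
import math


def malthus_coefficients(alpha, h, n_terms):
    """c_0..c_{n_terms-1} with U_n = c_n * u0(x, y) * t**(n*alpha).

    Assumes (u0**2)_xx + (u0**2)_yy = 0, so each HDM step is U_n = h * I^alpha U_{n-1};
    the power rule I^alpha t^b = Gamma(b+1)/Gamma(b+alpha+1) t^(b+alpha) updates c_n.
    """
    coeffs = [1.0]
    for n in range(1, n_terms):
        b = (n - 1) * alpha  # exponent of t in U_{n-1}
        coeffs.append(h * coeffs[-1] * math.gamma(b + 1) / math.gamma(b + alpha + 1))
    return coeffs


def hdm_partial_sum(alpha, h, t, n_terms=60):
    """Sum of the first n_terms HDM terms divided by u0(x, y); tends to E_alpha(h t^alpha)."""
    coeffs = malthus_coefficients(alpha, h, n_terms)
    return sum(c * t ** (n * alpha) for n, c in enumerate(coeffs))


h, t = 0.5, 1.0
print(hdm_partial_sum(1.0, h, t), math.exp(h * t))  # E_1(z) = exp(z)
print(hdm_partial_sum(0.5, h, t), math.exp(h**2 * t) * math.erfc(-h * t**0.5))  # E_1/2
```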

Example 2

Let us consider the following fractional biological population model

Subject to the initial condition

Following the discussion presented earlier in Sect. 2, we arrive at the following:

The following solutions are obtained

It follows that, for N = n, the approximate solution given by HDM is

Therefore,

Now, notice that if α = 1, Eq. (4) is reduced to the following:

This is the exact solution for example 2 when α = 1.

Example 3

Consider Eq. (1) with \( a = 1 \), \( r = 0 \), and \( h = 1 \), corresponding to the Malthusian law. We obtain the following fractional biological population equation:

Subject to the initial condition

Following the HDM presented earlier in Sect. 2, we obtain the following equation.

Comparing the terms of the same power of p yields:

The following solutions are obtained

It follows that, for N = n, the approximate solution given by HDM is

Therefore,

Now, notice that if α = 1, Eq. (1) is reduced to the following:

This is the exact solution for Example 3 when α = 1. The following figure shows the graphical representation of the approximate solution (62) when α = 1 (Fig. 3).

Example 4

Consider Eq. (1) with a = 1 and b = 1, corresponding to the Verhulst law. We obtain the following fractional biological population equation:

Subject to the initial condition

Following the discussion presented earlier in Sect. 2, we arrive at the following:

It follows that, for N = n, the approximate solution given by HDM is

Therefore,

Now, notice that if α = 1, Eq. (4) is reduced to the following:

This is the exact solution for example 4 when α = 1.

5 Conclusion

Although many analytical techniques have been proposed in the last decade to deal with nonlinear equations, most of them have weaknesses and limitations. In this paper, we applied a new analytical technique, the HDM, to solve nonlinear fractional partial differential equations arising in biological population dynamics. Population biology is the study of populations of organisms, especially the regulation of population size, life-history traits such as clutch size, and extinction. The term population biology is often used interchangeably with population ecology, although population biology is more commonly used when studying diseases, viruses, and microbes, and population ecology is used more frequently when studying plants and animals.

The most challenging part of using an iteration method is, on the one hand, to establish the stability of the method and, on the other, to show in detail the convergence and the uniqueness of the solution. In this paper, we have carefully presented the stability, convergence, and uniqueness analysis. We have given some exact analytical solutions, and some of their properties indicate a biologically realistic dependence on the parameter values. The uniformity of the procedure and the reduced amount of computation give it wider applicability. In all examples, the series solution yields the exact solution in the limit of infinitely many terms.

References

Oldham KB, Spanier J (1974) The fractional calculus. Academic Press, New York

Podlubny I (1999) Fractional differential equations. Academic Press, New York

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier, Amsterdam

Caputo M (1967) Linear models of dissipation whose Q is almost frequency independent, part II. Geophys J Int 13(5):529–539

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier, Amsterdam

Miller KS, Ross B (1993) An introduction to the fractional calculus and fractional differential equations. Wiley, New York

Samko SG, Kilbas AA, Marichev OI (1993) Fractional integrals and derivatives: theory and applications. Gordon and Breach, Yverdon

Zaslavsky GM (2005) Hamiltonian chaos and fractional dynamics. Oxford University Press, Oxford

Merdan M, Yıldırım A, Gökdoğan A (in press) Numerical solution of time-fraction modified equal width wave equation. Eng Comput

Wang SW, Xu MY (2009) Axial couette flow of two kinds of fractional viscoelastic fluids in an annulus. Nonlinear Anal: Real World Appl 10:1087–1096

McIntosh R (1985) The background of ecology. Cambridge University Press, Cambridge, pp 171–198

Renshaw E (1991) Modeling biological populations in space and time. Cambridge University Press, Cambridge, pp 6–9

Shakeri E, Dehghan M (2007) Numerical solutions of a biological population model using He’s variational iteration method. Comput Math Appl 54:1197–1207

Rida SZ, Arafa AAM (2009) Exact solutions of fractional-order biological population model. Commun Theor Phys 52:992–996

Atangana A, Secer A (2013) The time-fractional coupled-Korteweg-de-Vries equations. Abstr Appl Anal 2013:947986, 8 pages. doi:10.1155/2013/947986

Atangana A, Alabaraoye E (2013) Solving system of fractional partial differential equations arisen in the model of HIV infection of CD4+ cells and attractor one-dimensional Keller–Segel equations. Adv Differ Equ 2013:94

Atangana A, Kılıçman A (2013) Analytical solutions of boundary values problem of 2D and 3D Poisson and biharmonic equations by homotopy decomposition method. Abstr Appl Anal 2013:380484, 9 pages. doi:10.1155/2013/380484

Samko SG, Kilbas AA, Marichev OI (1993) Fractional integrals and derivatives, translated from the 1987 Russian original. Gordon and Breach, Yverdon

Jumarie G (2005) On the representation of fractional Brownian motion as an integral with respect to (dt)^a. Appl Math Lett 18(7):739–748

Jumarie G (2006) Modified Riemann–Liouville derivative and fractional Taylor series of nondifferentiable functions further results. Comput Math Appl 51(9–10):1367–1376

He JH (1999) Homotopy perturbation technique. Comput Methods Appl Mech Eng 178(3–4):257–262

Ganji DD (2006) The application of He’s homotopy perturbation method to nonlinear equations arising in heat transfer. Phys Lett A 355(4–5):337–341

Conflict of interest

There is no conflict of interest for this paper.

Cite this article

Atangana, A. Convergence and stability analysis of a novel iteration method for fractional biological population equation. Neural Comput & Applic 25, 1021–1030 (2014). https://doi.org/10.1007/s00521-014-1586-0
