Abstract

This paper investigates the problem of exponential synchronization of switched stochastic competitive neural networks (SSCNNs) with both interval time-varying delays and distributed delays. The distributed delays can be unbounded or bounded; the stochastic perturbation takes the form of multi-dimensional Brownian motion, and the networks are governed by switching signals with average dwell time. Based on new multiple Lyapunov-Krasovskii functionals, the free-weighting matrix method, the Newton-Leibniz formula, and the invariance principle of stochastic differential equations, two sufficient conditions ensuring the exponential synchronization of drive-response SSCNNs are developed. The provided conditions are expressed in terms of linear matrix inequalities, which depend not only on both the lower and upper bounds of the interval time-varying delays but also on the delay kernel of unbounded distributed delays or the upper bounds of bounded distributed delays. Control gains and average dwell time are restricted by additional conditions so that the resulting designs are applicable in practice. Numerical simulations are given to show the effectiveness of the theoretical results.

1 Introduction

Neural networks have attracted the attention of many researchers from different areas since they have been fruitfully applied in signal and image processing, associative memories, combinatorial optimization, automatic control, and so on [1–4]. In 1983, Cohen and Grossberg [5] proposed competitive neural networks (CNNs). Recently, Meyer-Bäse et al. [6–8] proposed the so-called CNNs with different time scales, which can be seen as an extension of Hopfield neural networks [9], cellular networks [10], Cohen and Grossberg’s CNNs [5], and Amari’s model for primitive neuronal competition [11]. In the CNN model, there are two types of state variables: the short-term memory (STM) variables describing the fast neural activity and the long-term memory (LTM) variables describing the slow unsupervised synaptic modifications. Global exponential stability of CNNs with or without delays was investigated in [6–8, 12–15].

In the past decades, since the concept of drive-response synchronization for coupled chaotic systems was proposed in [16], much attention has been drawn to control and chaos synchronization [17] due to its potential applications in secure communication, biological systems, information science, etc. [18]. In [16], a chaotic system, called the driver (or master), generates a signal sent over a channel to a responder (or slave), which uses this signal to synchronize itself with the driver. In other words, in drive-response (or master-slave) systems, the response (or slave) system is influenced by the behavior of the drive (or master) system, but the drive (or master) system is independent of the response (or slave) one. The principle of using chaos to secure communication is as follows: the useful signal is masked by a chaotic signal and transmitted from the driver to the responder, and the useful signal is recovered once synchronization between the states of the driver and the responder is realized. It was found in [19] that some delayed neural networks can exhibit chaotic dynamics. In [20], Lou and Cui studied the exponential synchronization of a class of CNNs with time-varying delays by an instantaneous state feedback control scheme. The synchronization criteria were given in terms of linear matrix inequalities (LMIs). By combining an adaptive control scheme with the LMI approach, Gu [21] investigated synchronization for CNNs with stochastic perturbations. However, the authors of [20] and [21] did not consider distributed delays.

Since neural networks usually have a spatial extent due to the presence of a multitude of parallel pathways with a variety of axon sizes and lengths [22], it is more practical to model them by introducing distributed delays. An unbounded distributed delay implies that the distant past has less influence than the recent behavior of the state [22], while a bounded distributed delay means that there is a distribution of propagation delays only over a finite period of time [25–28]. We note that most existing results on stability or synchronization of neural networks with bounded distributed delays obtained by the LMI approach cannot be directly extended to those with unbounded distributed delays (see Remark 5). Although there are some results on the exponential stability or synchronization of neural networks with unbounded distributed delays, they were all obtained by algebraic approaches [2, 15, 29]. As is well known, algebraic criteria are more conservative than LMI-based ones, and criteria in terms of LMIs can be easily checked by using the Matlab LMI toolbox. This motivates us to investigate the exponential synchronization of CNNs with unbounded distributed delays based on the LMI approach. At the same time, the LMI approach of this paper is applicable to exponential synchronization of CNNs with bounded distributed delays.

Switched systems have attracted much research attention in the control theory field in recent years. A switched system is composed of several dynamical subsystems and a switching law that specifies the active subsystem at each instant of time. Switched systems have numerous applications in communication systems, control of mechanical systems, the automotive industry, aircraft and air traffic control, electric power systems [30–33], and many other fields. In [30, 31, 33], asymptotic synchronization of switched systems and its application in communication are investigated. In this paper, we shall investigate exponential synchronization of switched competitive neural networks with both interval time-varying delays and distributed delays. In order to guarantee exponential synchronization, switches are required not to happen too frequently [34, 35]. The concept of average dwell time [34] is therefore introduced in this paper to quantify this requirement. Inspired by the multiple Lyapunov function approach in [36], a new multiple Lyapunov-Krasovskii functional will be designed to cope with the switching problem.

Recently, the research on stochastic models has received considerable interest, since stochastic perturbations have come to play an important role in many real systems [4, 21, 23, 24, 29, 37, 38]. However, to the best of our knowledge, no published paper concerning exponential synchronization of stochastic competitive neural networks (SCNNs) with both interval time-varying delays and distributed delays is reported in the literature. Since CNNs are extensions of neural networks, we further study SCNNs.

Based on the above discussions, in this paper, we aim to investigate exponential synchronization of SSCNNs with interval time-varying delays and distributed delays. Both unbounded and bounded distributed delays are considered. The switching rule is described by introducing the concept of average dwell time. By constructing new Lyapunov-Krasovskii functionals and employing a combination of the free-weighting matrix method, the Newton-Leibniz formula, and inequality techniques, a controller is designed to achieve exponential synchronization of the considered systems. The provided conditions are expressed in terms of LMIs, which depend not only on both the lower and upper bounds of the interval time-varying delays but also on the delay kernel for unbounded distributed delays or the upper bounds for bounded distributed delays, and which can be easily checked by the LMI toolbox in Matlab. Some restrictions are imposed on the control gains and the average dwell time for practical application in engineering and secure communication. Some useful lemmas can be easily obtained from our results, and some existing results are extended and improved. Numerical simulations are given to demonstrate the effectiveness of the new results.

The rest of this paper is organized as follows. In Sect. 2, models of considered SSCNNs are presented. Some necessary assumptions, definitions, and lemmas are also given in this section. Our main results and their rigorous proofs are described in Sect. 3. In Sect. 4, one example with numerical simulations is offered to show the effectiveness of our results. In Sect. 5, conclusions are given. At last, acknowledgments.

2 Preliminaries

The CNNs with time-varying delays and unbounded distributed delays are described as:

where \({x(t)=(x_{1}(t),\ldots,x_{n}(t))^{T}\in\mathbb{R}^{n}}\) is the state vector of the CNNs; \(f(x(t))=(f^{1}(x_{1}(t)),\ldots,f^{n}(x_{n}(t)))^{T}\) denotes the neuron activation function; \(M(t)=(m_{il})_{n\times p}\) is the synaptic efficiency; \(\Upxi={\rm diag}(\alpha_{1},\alpha_{2},\ldots,\alpha_{n})\) and \(\Upupsilon={\rm diag}(\beta_{1},\beta_{2},\ldots,\beta_{n})\) denote disposable scaling constants with \(\alpha_{i}>0; C={\rm diag}(c_{1},c_{2},\ldots,c_{n})^{T}\) with \(c_{i}>0\) represents the time constant of the neuron; \(A=(a_{ij})_{n\times n}\), \(B=(b_{ij})_{n\times n}\), and \(D=(d_{ij})_{n\times n}\) are the connection weight matrix, time-delayed weight matrix, and the distributively time-delayed weight matrix, respectively; \(w=(w_{1},w_{2},\ldots,w_{p})^{T}\) is the constant external stimulus; \(E={\rm diag}(E^{1},E^{2},\ldots,E^{n})\) is the strength of the external stimulus. \(\varepsilon\) is the time scale of STM state. Here, \(\tau(t)\) denotes the time-varying delay of the CNNs. \(k(\cdot)\) is the delay kernel.

Let \(S(t)=(S_{1}(t),S_{2}(t),\ldots,S_{n}(t))^{T}\) and \(S_{i}(t)=\sum_{l=1}^{p}m_{il}(t)w_{l}=m_{i}^{T}(t)w, i=1,2,\ldots,n. \) Then the CNNs (1) can be rewritten in the following form:

where \(|w|^{2}=w_{1}^{2}+w_{2}^{2}+\cdots+w_{p}^{2}\) is a constant. Without loss of generality, the input stimulus vector w is assumed to be normalized with unit magnitude, i.e., \(|w|^{2}=1\), and the fast time-scale parameter \(\varepsilon\) is also assumed to be unity. Then the system (2) reduces to the following networks:

or

By introducing switching signal into the system (4), one can obtain the following switched CNNs:

A switching signal is simply a piecewise constant signal taking values in an index set. In model (5), \(\sigma:[0,+\infty)\rightarrow\mathcal{M}=\{1,2,\ldots,m\}\) is a switching signal. Then \(\{C_{\sigma},A_{\sigma},B_{\sigma},D_{\sigma},E_{\sigma},\Upxi_{\sigma},\Upupsilon_{\sigma},f_{\sigma}(\cdot),\tau_{\sigma}(\cdot),k_{\sigma}(\cdot)\}\) is a family of matrices, activation functions, time-varying delays, and delay kernels parametrized by the index set \(\mathcal{M}. \) We always assume that the value of σ is unknown in advance, but its instantaneous value is available in real time.

Based on the concept of drive-response synchronization, we design the following response CNNs:

where \(U_{\sigma}\) is the state feedback controller designed to achieve exponential synchronization between the drive and response systems, which is of the form (we assume that information on the size of \(\tau_{\sigma}(t)\) is available):

Here, \(K_{\sigma,1}\) and \(K_{\sigma,2}\) are feedback gain parameters to be scheduled. Moreover, \(\omega(t)=(\omega_{1}(t),\ldots,\omega_{n}(t))^{T}\) is an n-dimensional Brownian motion defined on a complete probability space \((\Upomega,\mathcal{F},\mathcal{P})\) with a natural filtration \({\{\mathcal{F}_{t}\}_{t\geq0}}\) generated by {ω(s): 0 ≤ s ≤ t}. Here, the white noise \(\mathrm{d}\omega_{i}(t)\) is independent of \(\mathrm{d}\omega_{j}(t)\) for i ≠ j, and \({h_{\sigma}:\mathbb{R}^{+}\times\mathbb{R}^{n}\times\mathbb{R}^{n}\rightarrow\mathbb{R}^{n\times n}}\) is called the noise intensity function matrix. This type of stochastic perturbation can be regarded as the result of random uncertainties occurring during the process of transmission. We assume that the output signals of (5) can be received by (6).

Throughout this paper, we make the following assumptions:

(H1) There exist constants \(\tau_{\sigma,1}\), \(\tau_{\sigma,2}\), and \(\mu_{\sigma}\) such that \(0\leq\tau_{\sigma,1}\leq\tau_{\sigma}(t)\leq\tau_{\sigma,2}, \dot{\tau}_{\sigma}(t)\leq\mu_{\sigma}.\)

(H2) The delay kernel \(k_{\sigma}:[0,+\infty)\rightarrow[0,+\infty)\) is a real-valued non-negative continuous function that satisfies

(1) there exists a positive number \(\varsigma_{\sigma}\) such that \(\int_{0}^{+\infty}k_{\sigma}(s)\,{\rm d}s=\varsigma_{\sigma}, \)

(2) there exist positive numbers a and \(H_{\sigma}\) such that \(\int_{0}^{+\infty}e^{as}k_{\sigma}(s)\,{\rm d}s\leq H_{\sigma}. \)

(H3) There exist constants \(f^{i}_{\sigma}\) and \(F^{i}_{\sigma}, i=1,2,\ldots,n,\) such that

$$ f^{i}_{\sigma}\leq\frac{f^{i}_{\sigma}(x)-f^{i}_{\sigma}(y)}{x-y}\leq F^{i}_{\sigma},\quad \forall x,y\in {\mathbb{R}},x\neq y. $$

(H4) \(h_{\sigma}(t,0,0)=0\) and there exist symmetric positive-definite matrices \(J_{\sigma,1}\) and \(J_{\sigma,2}\) such that

$$ \begin{aligned} &{\rm trace}(h_{\sigma}^{T}(t,e(t),e(t-\tau_{\sigma}(t))) h_{\sigma}(t,e(t),e(t-\tau_{\sigma}(t))))\\ &\quad\leq e^{T}(t)J_{\sigma,1}e(t)+e^{T}(t-\tau_{\sigma}(t)) J_{\sigma,2}e(t-\tau_{\sigma}(t)). \end{aligned} $$
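Assumption (H3) is a sector (slope) condition on each activation function. The following minimal sketch samples difference quotients of a candidate activation and reports their range; the helper name `sector_slopes`, the sampling interval, and the sample count are illustrative choices, not part of the paper. For the hyperbolic tangent used in Sect. 4, the sampled range is expected to lie within the sector given by \(f^{i}_{l}=0\) and \(F^{i}_{l}=1\). Sampling can only refute the condition numerically; it does not prove it.

```python
import math
import random


def sector_slopes(f, samples=10000, span=10.0, seed=0):
    """Return the smallest and largest sampled difference quotient of f."""
    rng = random.Random(seed)
    lo, hi = float("inf"), float("-inf")
    for _ in range(samples):
        x = rng.uniform(-span, span)
        y = rng.uniform(-span, span)
        if abs(x - y) < 1e-6:
            # (H3) only constrains x != y; near-equal pairs are skipped
            # because the quotient is dominated by round-off there.
            continue
        q = (f(x) - f(y)) / (x - y)
        lo, hi = min(lo, q), max(hi, q)
    return lo, hi


# tanh is the activation of the numerical example in Sect. 4.
lo, hi = sector_slopes(math.tanh)
print("sampled slope range:", lo, hi)
```

The same helper can be pointed at any other scalar activation to obtain a rough numerical guess for the constants in (H3) before setting up the LMIs.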

In order to investigate the problem of exponential synchronization between (5) and (6), we define the error states e(t) = y(t) − x(t) and z(t) = R(t) − S(t). Subtracting (5) from (6) yields the following switched error system:

where \(g_{\sigma}(e(t))=f_{\sigma}(y(t))-f_{\sigma}(x(t))\), \(g_{\sigma}(e(t-\tau_{\sigma}(t)))=f_{\sigma}(y(t-\tau_{\sigma}(t)))-f_{\sigma}(x(t-\tau_{\sigma}(t)))\).

The initial condition associated with the switched error system (8) is given in the following form:

for any \({\varphi,\phi\in L_{\mathcal{F}_{0}}^{2}((-\infty,0],\mathbb{R}^{n}), }\) where \({L_{\mathcal{F}_{0}}^{2}((-\infty,0],\mathbb{R}^{n})}\) is the family of all \(\mathcal{F}_{0}\)-measurable \({\wp((-\infty,0],\mathbb{R}^{n})}\)-valued random variables satisfying \({\sup_{s\leq0}\mathbb{E}\|\varphi(s)\|^{2}<+\infty}\) and \({\sup_{s\leq0}\mathbb{E}\|\phi(s)\|^{2}<+\infty, }\) and \({\wp((-\infty,0],\mathbb{R}^{n})}\) denotes the family of all continuous \({\mathbb{R}^{n}}\)-valued functions \(\varphi(s),\phi(s)\) on \((-\infty,0]\) with the norm \(\|\varphi(s)\|=\max_{1\leq i\leq n}\sup_{s\leq0}|\varphi_{i}(s)|\) and \(\|\phi(s)\|=\max_{1\leq i\leq n}\sup_{s\leq0}|\phi_{i}(s)|.\)

Definition 1

[34] A switching signal σ is said to have average dwell time \(T_{a}\) if there exist two positive constants \(N_{0}\) and \(T_{a}\) such that

where \(N_{\sigma}(T,t)\) denotes the number of discontinuities of the switching signal σ on the interval (t, T).
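As an illustration of Definition 1, the sketch below checks a list of switching instants against an average dwell time bound. It assumes the standard form of the bound, \(N_{\sigma}(T,t)\leq N_{0}+\frac{T-t}{T_{a}}\), which is consistent with the estimate \(k=N_{\sigma}(t,0)\leq N_{0}+\frac{t}{T_{a}}\) used in the proof of Theorem 1. The function names and the two switching sequences are hypothetical, and the check runs over a finite grid of interval endpoints, so it is a necessary test only.

```python
def count_switches(switch_times, t, T):
    """N_sigma(T, t): number of switching instants strictly inside (t, T)."""
    return sum(1 for s in switch_times if t < s < T)


def has_average_dwell_time(switch_times, horizon, T_a, N_0, step=0.25):
    """Check N_sigma(T, t) <= N_0 + (T - t) / T_a for grid points 0 <= t <= T <= horizon."""
    grid = [i * step for i in range(int(horizon / step) + 1)]
    for i, t in enumerate(grid):
        for T in grid[i:]:
            if count_switches(switch_times, t, T) > N_0 + (T - t) / T_a:
                return False
    return True


# Hypothetical switching signal with one switch every 2 time units.
periodic = [2.0 * k for k in range(1, 11)]
# Hypothetical burst of three switches in quick succession.
burst = [1.0, 1.1, 1.2]

print(has_average_dwell_time(periodic, 20.0, T_a=2.0, N_0=1))
print(has_average_dwell_time(burst, 5.0, T_a=2.0, N_0=1))
print(has_average_dwell_time(burst, 5.0, T_a=2.0, N_0=3))
```

The burst example shows the role of \(N_{0}\): a few fast switches are tolerated as long as the switching is slow enough on average.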

Definition 2

The zero solution of (8) is said to be exponentially stable in mean square if for any initial condition \((e^{T}(t_{0}),z^{T}(t_{0}))^{T}\), there exist some positive constants \(M_{0}\) and η such that \({\mathbb{E}(\|e(t)\|^{2}+\|z(t)\|^{2})\leq M_{0}e^{-\eta(t-t_{0})}.}\)

Lemma 1

[39]. (Schur Complement). The linear matrix inequality (LMI)

is equivalent to any one of the following two conditions:

where \(S_{11}=S_{11}^{T}\), \(S_{22}=S_{22}^{T}\).
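The equivalence in Lemma 1 can be checked numerically on small matrices. The sketch below assumes the standard statement of the Schur complement for a symmetric block matrix with blocks \(S_{11}\), \(S_{12}\), \(S_{22}\): the block matrix is negative definite if and only if \(S_{22}<0\) and \(S_{11}-S_{12}S_{22}^{-1}S_{12}^{T}<0\). To stay dependency-free it takes \(S_{22}\) to be a scalar and tests definiteness by attempting a Cholesky factorization; all function names and the numerical data are illustrative.

```python
import math


def is_positive_definite(M):
    """Cholesky attempt: succeeds iff the symmetric matrix M is positive definite."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True


def is_negative_definite(M):
    return is_positive_definite([[-v for v in row] for row in M])


def assemble(S11, S12, S22):
    """Build the block matrix [[S11, S12], [S12^T, S22]] for a column S12 and scalar S22."""
    rows = [list(S11[i]) + [S12[i]] for i in range(len(S11))]
    return rows + [list(S12) + [S22]]


def schur_condition(S11, S12, S22):
    """S22 < 0 and S11 - S12 * S22^{-1} * S12^T < 0 (scalar S22)."""
    if S22 >= 0.0:
        return False
    n = len(S11)
    reduced = [[S11[i][j] - S12[i] * S12[j] / S22 for j in range(n)]
               for i in range(n)]
    return is_negative_definite(reduced)


S11 = [[-3.0, 1.0], [1.0, -2.0]]
for S12 in ([1.0, 0.5], [4.0, 0.5]):
    full = is_negative_definite(assemble(S11, S12, -4.0))
    reduced = schur_condition(S11, S12, -4.0)
    print(S12, full, reduced)
```

In both cases the direct test on the full matrix and the test on the reduced matrix agree, which is how the lemma is used in the proofs: a nonlinear matrix inequality is traded for a larger linear one.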

According to assumptions \((\mathrm{H}_{3})\) and \((\mathrm{H}_{4}),\) the system (8) admits a trivial solution. Obviously, if the trivial solution of (8) is exponentially stable in mean square for any given initial condition, then the global exponential synchronization between SSCNN (5) and (6) is achieved.

3 Main results

For any t > 0, let \(t_{i}\ (i=0,1,2,\ldots,k)\) be the switching points of σ over the interval (0, t) satisfying \(0=t_{0}<t_{1}<t_{2}<\cdots<t_{k}<t. \) Suppose that the \(\sigma(t_{i})\)th subsystem of (8), denoted as the lth subsystem, is activated when \(t\in[t_{i},t_{i+1})\) for \(i=0,1,2,\ldots,k, \) where \(l\in\mathcal{M}=\{1,2,\ldots,m\}. \) Then, for \(t\in[t_{i},t_{i+1}), \) we get

For simplicity, we denote

Then system (10) can be rewritten as

The following theorem is the main result of this paper, which states the exponential mean square stabilization conditions for the error system (8).

Theorem 1

Suppose that the assumptions \((\mathrm{H}_{1})\)–\((\mathrm{H}_{4})\) hold and σ is a switching signal with average dwell time \(T_{a}\). Then the trivial solution of system (8) is exponentially stable in mean square if, for a given scalar a, there exist symmetric positive-definite matrices \(R_{l,i}\), i = 1, 2, \(P_{l,j}\), j = 1, 2, 3, 4, \(Q_{l,k}\), k = 1, 2, 3, positive-definite diagonal matrices \(\Upphi_{l}, \Uppsi_{l}, \) matrices \(N_{l,i}, S_{l,i}, M_{l,i}, Y_{l,i}\), i = 1, 2, an invertible matrix \(O_{l}\), and positive scalars \(\rho_{l}\) and θ, for each \(l\in\mathcal{M}\), such that the following LMIs hold:

Furthermore, the convergence rate can be estimated as \(a-\frac{1}{T_{a}}\ln \theta, \) where \(\Updelta_{l}=(H_{l,21}S_{l},H_{l,20}N_{l},H_{l,21}M_{l},H_{l}\widehat{O}_{l} D_{l}), S_{l}^{T}=(S^{T}_{l,1},0,S^{T}_{l,2},0,0,0,0,0), M_{l}^{T}=(M^{T}_{l,1},0,M^{T}_{l,2},0,0,0,0,0), N_{l}^{T}=(N^{T}_{l,1},0,N^{T}_{l,2},0,0,0,0,0), \widehat{O}_{l}^{T}=(O^{T}_{l},0,0,0,0,O^{T}_{l},0,0), H_{l,21}=\frac{e^{a\tau_{l,2}}-e^{a\tau_{l,1}}}{a}, H_{l,20}=\frac{e^{a\tau_{l,2}}-1}{a}, \int_{0}^{+\infty}e^{as}k_{l}(s)\,{\rm d}s\leq H_{l}, \tau_{l,21}=\tau_{l,2}-\tau_{l,1}, \overline{F}_{l}={\rm diag}(F^{1}_{l}f^{1}_{l},F^{2}_{l}f^{2}_{l},\ldots,F^{n}_{l}f^{n}_{l}),\, \overline{f}_{l}={\rm diag}(\frac{1}{2}(F^{1}_{l}+f^{1}_{l}),\frac{1}{2}(F^{2}_{l}+f^{2}_{l}),\ldots,\frac{1}{2}(F^{n}_{l}+f^{n}_{l})),\)

Moreover, the control gains are given by \(K_{l,i}=O_{l}^{-1}Y_{l,i}\), i = 1, 2.

Proof

Consider the following multiple Lyapunov-Krasovskii functional candidate:

where

Calculating the time derivative of V(l, e(t)) along the trajectory of system (13) by using Itô’s differential formula, one can obtain that

where

Now define a new vector \(\xi_{l}(t)\) as

For any matrices \(N_{l,i}, S_{l,i}, M_{l,i}\), i = 1, 2, and any invertible matrix \(O_{l}\) with appropriate dimensions, from the Newton-Leibniz formula and (11)–(13), we have

On the other hand, one can derive the following inequality:

where \(\widetilde{S}^{T}_{l}=\xi_{l}^{T}S_{l}+G_{l}^{T}(s)e^{a(s-t)}(Q_{l,1}+Q_{l,2}).\) Similarly, one has

where \(\widetilde{N}^{T}_{l}=\xi_{l}^{T}N_{l}+G_{l}^{T}(s)e^{a(s-t)}Q_{l,1}, \widetilde{M}^{T}_{l}=\xi_{l}^{T}M_{l}+G_{l}^{T}(s)e^{a(s-t)}Q_{l,2}, \widetilde{O}^{T}_{l}=\xi_{l}^{T}\widehat{O}_{l} D_{l}+g_{l}^{T}(e(s))e^{a(s-t)}Q_{l,3}.\)

In view of assumption \((\mathrm{H}_{3}),\) for any positive diagonal matrices \(\Upphi_{l}={\rm diag}(\phi^{1}_{l},\phi^{2}_{l},\ldots,\phi^{n}_{l})\) and \(\Uppsi_{l}={\rm diag}(\psi^{1}_{l},\psi^{2}_{l},\ldots,\psi^{n}_{l}),\) the following two inequalities hold [40]:

Substituting (20)–(23) into (19), adding (24)–(33) to the right side of (19), and letting \(Y_{l,i}=O_{l}K_{l,i}\), we obtain

where

From \(e^{a(s-t)}Q_{l,i}>0\), i = 1, 2, 3, and \(k_{l}^{-1}(t-s)\geq0\), we get \(\Upsigma_{l}>0. \) By virtue of Lemma 1, (14) is equivalent to \(\Uptheta_{l}<0. \) Therefore, by integrating both sides of (34) from \(t_{0}\) to t (\(t_{0}<t\)) and taking expectation, one can obtain that

Particularly, when \(t\in[t_{k},t_{k+1}), \) it follows from (35) that

By (15), at the switching instant t k , one gets

where \(t_{k}^{-}\) denotes the left limit at \(t_{k}\).

According to Definition 1, there exists a positive constant \(N_{0}\) such that the number k of discontinuities of the switching signal σ on the interval (0, t) satisfies \(k=N_{\sigma}(t,0)\leq N_{0}+\frac{t}{T_{a}}. \) By induction, it follows from (36) and (37) that

Let \(\lambda=\min_{l\in\mathcal{M}}\{\lambda_{\min}R_{l,1},\lambda_{\min}R_{l,2}\}.\) One has from (18) that

It follows from (38) and (39) that

Since \(a-\frac{1}{T_{a}}\ln \theta>0, \) in view of Definition 2, the trivial solution of system (8) is exponentially stable in mean square. This completes the proof. \(\square\)
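The way the average dwell time enters the rate can be sketched as follows. This is a sketch under stated assumptions rather than a restatement of the displayed estimates: it assumes \(\theta\geq1\) and \(t_{0}=0\), that the expectation of the functional decays like \(e^{-a(t-t_{k})}\) between switches, and that it grows by at most the factor θ at each switching instant. The symbol \(W(t)\) is shorthand introduced here for the expectation of the multiple Lyapunov-Krasovskii functional along the error trajectory.

$$ \begin{aligned} W(t)&\leq e^{-a(t-t_{k})}W(t_{k})\leq\theta e^{-a(t-t_{k})}W(t_{k}^{-})\leq\cdots\leq\theta^{k}e^{-at}W(0)\\ &\leq\theta^{N_{0}+\frac{t}{T_{a}}}e^{-at}W(0)=\theta^{N_{0}}e^{-\left(a-\frac{\ln\theta}{T_{a}}\right)t}W(0). \end{aligned} $$

The exponent is negative exactly when \(T_{a}>\frac{\ln\theta}{a}\), which is the dwell-time requirement evaluated in Sect. 4.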

Next we shall consider synchronization of drive-response SSCNNs with time-varying delays and bounded distributed delays, which are described as:

where (40) is the drive system and (41) is the response system, \(0<\vartheta_{\sigma}(t)\leq\vartheta_{\sigma}\), \(\vartheta_{\sigma}\) is a positive constant, and \(U_{\sigma}\) has the same form as (7).

By the same method as that used in the proof of Theorem 1, we can also derive sufficient conditions under which (41) exponentially synchronizes with (40).

Subtracting (40) from (41) yields

Similarly, we get the following error system when \(t\in[t_{i},t_{i+1})\) for \(i=0,1,2,\ldots,k, \) and the lth subsystem is activated:

For simplicity, we also denote

Then system (43) can be rewritten as

Theorem 2

Suppose that the assumptions \((\mathrm{H}_{1}), (\mathrm{H}_{3})\), and \((\mathrm{H}_{4})\) hold and σ is a switching signal with average dwell time \(T_{a}\). The trivial solution of error system (42) is exponentially stable in mean square if, for a given scalar a, there exist symmetric positive-definite matrices \(R_{l,i}\), i = 1, 2, \(P_{l,j}\), j = 1, 2, 3, 4, \(Q_{l,k}\), k = 1, 2, 3, positive-definite diagonal matrices \(\Upphi_{l}, \Uppsi_{l}, \) matrices \(N_{l,i}, S_{l,i}, M_{l,i}, Y_{l,i}\), i = 1, 2, an invertible matrix \(O_{l}\), and positive scalars \(\rho_{l}\) and θ, for each \(l\in\mathcal{M}\), such that the following LMIs hold:

Furthermore, the convergence rate can be estimated as \(a-\frac{1}{T_{a}}\ln \theta, \) where \(\Updelta_{l}=(H_{l,21}S_{l},H_{l,20}N_{l},H_{l,21}M_{l},H_{l}\widehat{O}_{l} D_{l}), S_{l}^{T}=(S^{T}_{l,1},0,S^{T}_{l,2},0,0,0,0,0), M_{l}^{T}=(M^{T}_{l,1},0,M^{T}_{l,2},0,0,0,0,0), N_{l}^{T}=(N^{T}_{l,1},0,N^{T}_{l,2},0,0,0,0,0), \widehat{O}_{l}^{T}=(O^{T}_{l},0,0,0,0,O^{T}_{l},0,0), H_{l,21}=\frac{e^{a\tau_{l,2}}-e^{a\tau_{l,1}}}{a}, H_{l,20}=\frac{e^{a\tau_{l,2}}-1}{a}, \overline{H}_{l}=\frac{e^{a \vartheta}-1}{a}, \tau_{l,21}=\tau_{l,2}-\tau_{l,1}, \overline{F}_{l}={\rm diag}(F^{1}_{l}f^{1}_{l},F^{2}_{l}f^{2}_{l},\ldots,F^{n}_{l}f^{n}_{l}), \overline{f}_{l}={\rm diag}(\frac{1}{2}(F^{1}_{l}+f^{1}_{l}),\frac{1}{2}(F^{2}_{l}+f^{2}_{l}),\ldots,\frac{1}{2}(F^{n}_{l}+f^{n}_{l})),\)

Moreover, the control gains are given by \(K_{l,i}=O_{l}^{-1}Y_{l,i}\), i = 1, 2.

Proof

Consider another multiple Lyapunov-Krasovskii functional candidate as:

where \(V_{i}(l,e(t))\), i = 1, 2, 3, are defined as in the proof of Theorem 1, while

Calculating the time derivative of \(\overline{V}(l,e(t))\) along the trajectory of system (46) by using Itô’s differential formula derives that

where

From the Newton-Leibniz formula and (44), one has

Similar to (31), it is obvious that

The rest of the proof is the same as that of Theorem 1. This completes the proof. \(\square\)

Note that, for a given a > 0, the feasible solution obtained by using the LMI toolbox in Matlab is sometimes not applicable in practice. For example, the magnitude of the feedback gains \(K_{l,i},i=1,2, l\in\mathcal{M}\) may be too large to implement, or the scalar θ may be so large that the required average dwell time \(T_{a}\) is also large. From the point of view of engineering applications, the magnitude of the feedback gains \(K_{l,i},i=1,2, l\in\mathcal{M}\) should not be very large. From the point of view of secure communication, if \(T_{a}\) is too large, which means that the system stays in one subsystem for too long after switching to it, it is possible for an intruder to recover the digital binary signal [30]. Therefore, it is necessary to impose restrictions on the magnitude of the feedback gains \(K_{l,1}\), \(K_{l,2}\), and on θ (or \(T_{a}\)). Since \(K_{l,i}=O^{-1}_{l}Y_{l,i},i=1,2, l\in\mathcal{M}, \) the magnitude of the feedback gains \(K_{l,i}\) can be restricted by bounding the norms of \(O_{l}^{-1}\) and \(Y_{l,i}\), i.e. [41]:

where \(\delta_{l}\), \(\lambda_{l,i}\) are positive scalars. According to Lemma 1, (51) is equivalent to

It is easy to see that if θ < ω, then \(\frac{\ln \theta}{a}<\frac{\ln \omega}{a}.\) From (16) and (49), we know \(T_{a}^{\theta}<T_{a}^{\omega}\), where \(T_{a}^{\theta}\) and \(T_{a}^{\omega}\) are the minimum values of the average dwell time corresponding to θ and ω, respectively.

Remark 1

If the following inequalities hold

then (15) and (48) hold. Hence, the θ in (15) and (48) can be replaced by

where

Remark 2

In this paper, the upper bound \(\mu_{\sigma}\) of the derivative of the time-varying delays is not necessarily less than 1. Both the lower and upper bounds of the time-varying delays are considered in the delay-dependent LMIs, which are therefore less conservative than those (see [26, 27, 42]) that include only the upper bounds of the time-varying delays when the delays are interval ones. Moreover, the main results of [42] are a special case of our Theorem 1 when m = 1 and S(t) = 0. Furthermore, if \(\tau_{\sigma}(t)\) is not differentiable, then Theorems 1 and 2 still hold by letting \(P_{l,1}=P_{l,2}=0\).

Remark 3

When m = 1, the models of this paper become competitive neural networks with time-varying delays and distributed delays, and the results of this paper are also new. When \(c_{i}x_{i}(t)\) is replaced by \(\alpha_{i}(x_{i})\) and \((\mathrm{H}1)\) in [20] is assumed to hold, our results still hold after the corresponding changes are made. In addition, assumption \((\mathrm{H}_{3}),\) which was first proposed in [40], is weaker than the assumptions \((\mathrm{H}2)\) and \((\mathrm{H}2^{*})\) in [20]. Furthermore, the conditions \((\mathrm{H}3)\) and \((\mathrm{H}3^{*})\) in [20], which require the time-varying delay to be differentiable, are necessary there; however, as pointed out in Remark 2, these conditions can be removed from our results. It is worth mentioning that, in this paper, the distributed delays are also considered and the LMIs are related to both the lower and upper bounds of the time-varying delays. Hence, our model is more general and the synchronization criteria are less conservative than those in [20]. In summary, the results of this paper extend and improve those in [20].

Remark 4

If θ = 1, then \(R_{l,i}= R_{i},i=1,2,P_{l,j}=P_{j},j=1,2,3,4,Q_{l,k}=Q_{k},k=1,2,3, \forall l\in\mathcal{M}.\) Hence, there is one common Lyapunov-Krasovskii functional for (13) or (46), which implies that the global exponential synchronization between SSCNNs (5) and (6) or (40) and (41) can be achieved under arbitrary switching.

Remark 5

When m = 1 and S(t) = 0, (5) and (40) reduce to general stochastic neural networks with time-varying and distributed delays. Our LMI conditions are also applicable to exponential synchronization of stochastic neural networks with unbounded or bounded distributed delays. In [25–28], synchronization of neural networks with mixed delays was investigated by the LMI approach, and the following lemma is indispensable:

Lemma

[43]. For any constant matrix \({D\in \mathbb{R}^{n\times n}, D^{T}=D>0,}\) scalar τ > 0 and vector function \({\omega:[t-\tau,t]\rightarrow\mathbb{R}^{n},}\) one has

provided that the integrals are all well defined, where T denotes transpose and D > 0 denotes that D is positive definite matrix.

One can see that the above lemma does not necessarily hold when \(\tau\rightarrow\infty.\) Therefore, the results of this paper cannot be derived by simply extending the results in [25–28], replacing bounded distributed delays with unbounded distributed delays. On the other hand, in addition to time-varying and unbounded distributed delays, one can consider impulsive effects and obtain exponential synchronization criteria in terms of LMIs, which are also less conservative than the algebraic criteria in [2]. Hence, the results of this paper extend and improve those in [25–28] and [2].

Remark 6

In this paper, exponential synchronization of SSCNNs with time-varying delays and unbounded or bounded distributed delays is studied, and two theorems formulated in terms of LMIs are obtained. Moreover, several lemmas can be easily obtained from our results. For example, (1) from Remarks 1–5, one can get some useful lemmas by taking some special parameters; (2) if a = 0 and θ = 1 in Theorems 1 and 2, then global asymptotic synchronization between SSCNNs (5) and (6) or (40) and (41), respectively, can be achieved under arbitrary switching. To the best of our knowledge, similar problems have seldom been considered in the literature. Therefore, to some extent, the results of this paper are new.

4 A numerical example

In this section, we provide one example with its simulations to show that our theoretical results obtained above are effective and practical.

Consider the switched competitive neural networks with two switched subsystems as follows:

where \(x(t)=(x_{1}(t),x_{2}(t))^{T}, S(t)=(S_{1}(t),S_{2}(t))^{T}, \sigma:[0,+\infty)\rightarrow\mathcal{M}=\{1,2\}, k_{1}(\nu)=k_{2}(\nu)=e^{-2\nu}, f_{1}(x)=f_{2}(x)=(\tanh(x_{1}),\tanh(x_{2}))^{T}, \tau_{1}(t)=\tau_{2}(t)=1.5-|\sin t|,\)

In the case that initial values are chosen as \(x(t)=(5,2)^{T}, S(t)=(1.5,0.5)^{T}, \forall t\in[-5, 0],\) and x(t) = 0, S(t) = 0 for t < −5, chaotic-like trajectories of subsystems 1 and 2 are depicted in Figs. 1 and 2, respectively.

Let system (53) be the driver network and design the response SSCNNs with the same two switched subsystems as

where e(t) = y(t) − x(t), z(t) = R(t) − S(t),

It is easy to see that \(\tau_{l,1}=0.5, \tau_{l,2}=1.5, \tau_{l,21}=1, \varsigma_{l}=0.5, J_{l,i}={\rm diag}(0.5,0.5), f^{i}_{l}=0, F^{i}_{l}=1, i,l=1,2.\)

It is obvious that \(\tau_{1}(t)\) and \(\tau_{2}(t)\) are interval delays that are not differentiable everywhere, and \(\overline{F}_{l}=0, \overline{f}_{l}={\rm diag}(0.5,0.5), l=1,2. \) Given a = 0.5, we have \(H_{l,21}=1.6659\), \(H_{l,20}=2.2340\), \(H_{l}=0.6667\). By letting \(P_{l,1}=P_{l,2}=0\), l = 1, 2, and solving LMIs (14), (15), (17) by using the Matlab LMI toolbox, we get the following feasible solutions
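The scalar constants quoted above can be reproduced directly from the formulas in Theorem 1 and assumption (H2). The following sketch does so for a = 0.5, the delay \(\tau_{l}(t)=1.5-|\sin t|\), and the kernel \(k_{l}(\nu)=e^{-2\nu}\); the helper `kernel_integral`, its truncation point, and its step count are illustrative choices, and the improper integrals are approximated by a trapezoidal rule on a truncated interval.

```python
import math

a = 0.5                  # decay parameter chosen in the example
tau_1, tau_2 = 0.5, 1.5  # lower and upper bounds of tau(t) = 1.5 - |sin t|

# Constants H_{l,21} and H_{l,20} from Theorem 1.
H_21 = (math.exp(a * tau_2) - math.exp(a * tau_1)) / a
H_20 = (math.exp(a * tau_2) - 1.0) / a


def kernel_integral(rate, growth=0.0, upper=60.0, steps=200000):
    """Trapezoidal approximation of the integral of e^{growth*s} e^{-rate*s} over [0, upper]."""
    h = upper / steps
    total = 0.5 * (1.0 + math.exp((growth - rate) * upper))
    total += sum(math.exp((growth - rate) * i * h) for i in range(1, steps))
    return total * h


varsigma = kernel_integral(2.0)        # integral of k(s) = e^{-2s}
H = kernel_integral(2.0, growth=a)     # integral of e^{as} k(s)

# Sample the delay to confirm that it stays inside [tau_1, tau_2].
samples = [1.5 - abs(math.sin(0.001 * i)) for i in range(20000)]

print(round(H_21, 4), round(H_20, 4), round(varsigma, 4), round(H, 4))
print(min(samples), max(samples))
```

Because the kernel is a pure exponential, the two integrals also have the closed forms 1/2 and 1/(2 − a), which gives an independent check on the numerical values.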

θ = 7.5514. Therefore, the average dwell time should satisfy \(T_{a}>\frac{\ln7.5514}{0.5}=4.0435,\) and the feedback gains are

The largest norm of the control gains is 337.8669, and the average dwell time should satisfy \(T_{a}>4.0435\). Both seem too large to implement in practice. Hence, we can impose restrictions on the feedback gains \(K_{i,j}\), i, j = 1, 2, and on θ to get more suitable feasible solutions. Letting \(P_{l,1}=P_{l,2}=0\), l = 1, 2, and solving LMIs (14), (15), (17), and (52) with \(\delta_{1}=\delta_{2}=\lambda_{1,1}=\lambda_{1,2}=\lambda_{2,1}=\lambda_{2,2}=20\) and θ < ω = 2.5 by using the Matlab LMI toolbox, we get the following feasible solutions

θ = 2.4231. Therefore, the average dwell time only needs to satisfy \(T_{a}>\frac{\ln2.4231}{0.5}=1.7701, \) and the feedback gains are

The largest norm of control gains is 85.56, which is much smaller than 337.1340.

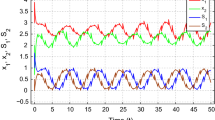

Let \(T_{a}=2\), let (55) and (56) be the control gains of subsystems 1 and 2, respectively, and let subsystem 1 be activated at t = 0. By virtue of Theorem 1, (53) and (54) can be exponentially synchronized with the estimated exponential synchronization rate index \(0.5-\frac{\ln 2.4231}{2}=0.0575. \) The initial conditions of the numerical simulations are taken as: \(x(t)=(5,2)^{T}, S(t)=(1.5,0.5)^{T}, y(t)=(-2,-1)^{T}, R(t)=(-2.5,-5)^{T}, \forall t\in[-5, 0].\) Figure 3 shows the phase trajectory of response system (54). Figure 4 presents state trajectories of the error system between (53) and (54).
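The dwell-time bounds and the rate index quoted in this section follow from two one-line formulas, \(T_{a}>\frac{\ln\theta}{a}\) and \(a-\frac{\ln\theta}{T_{a}}\). The short sketch below recomputes them for the two values of θ reported above; the function names are illustrative and not part of the paper.

```python
import math


def min_average_dwell_time(theta, a):
    """Lower bound ln(theta)/a that the average dwell time T_a must exceed."""
    return math.log(theta) / a


def convergence_rate(theta, a, T_a):
    """Estimated exponential rate a - ln(theta)/T_a from Theorems 1 and 2."""
    return a - math.log(theta) / T_a


a = 0.5
bound_unrestricted = min_average_dwell_time(7.5514, a)  # theta without gain restrictions
bound_restricted = min_average_dwell_time(2.4231, a)    # theta with restrictions (52)
rate = convergence_rate(2.4231, a, T_a=2.0)

print(round(bound_unrestricted, 4), round(bound_restricted, 4), round(rate, 4))
```

The comparison makes the trade-off explicit: lowering θ shortens the admissible dwell time, and for a fixed \(T_{a}\) it also raises the guaranteed rate.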

Phase trajectories of y(t) and R(t) for the response system (54)

5 Conclusions

In this paper, exponential synchronization of SSCNNs with both interval time-varying delays and distributed delays is studied. In particular, the distributed delays can be unbounded. Stochastic perturbation in the form of multi-dimensional Brownian motion is included. New multiple Lyapunov-Krasovskii functionals are designed to obtain new sufficient conditions guaranteeing the exponential synchronization. The derived conditions are expressed in terms of LMIs, which are less conservative than algebraic results and easy to solve by using the Matlab LMI toolbox. Some useful lemmas can be easily deduced from the new results, and some existing results are extended and improved. Moreover, some restrictions are imposed on the control gains and the average dwell time so that the obtained feasible solutions are applicable in practice. Finally, numerical simulations show the effectiveness of the theoretical results.

References

Samidurai R, Marshal Anthoni S, Balachandran K (2010) Global exponential stability of neutral-type impulsive neural networks with discrete and distributed delays. Nonlinear Anal Hybrid Syst 4:103–112

Sheng L, Yang H (2008) Exponential synchronization of a class of neural networks with mixed time-varying delays and impulsive effects. Neurocomput 71:3666–3674

Hou Y, Lien C, Yan J (2007) Stability analysis of neural networks with interval time-varying delays. Chaos 17:033120

Yang X, Cao J (2009) Stochastic synchronization of coupled neural networks with intermittent control. Phys Lett A 373:3259–3272

Cohen MA, Grossberg S (1983) Absolute stability of global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans Syst Man Cybern 13(5):815–825

Meyer-Bäse A, Scheich F (1996) Singular perturbation analysis of competitive neural networks with different time-scales. Neural Comput 8:1731–1742

Meyer-Bäse A, Pilyugin S, Chen Y (2003) Global exponential stability of competitive neural networks with different time scales. IEEE Trans Neural Netw 14(3):716–719

Meyer-Bäse A, Pilyugin S, Wismüller A, Foo S (2004) Local exponential stability of competitive neural networks with different time scales. Eng Appl Artif Intell 17:227–232

Alonso H, Mendonca T, Rocha P (2009) Hopfield neural networks for on-line parameter estimation. Neural Netw 22:450–462

Ding W (2009) Synchronization of delayed fuzzy cellular neural networks with impulsive effects. Commun Nonlinear Sci Numer Simulat 14:3945–3952

Amari S (1983) Field theory of self-organizing neural net. IEEE Trans Syst Man Cybern 13:741–748

Lu H, He Z (2005) Global exponential stability of delayed competitive neural networks with different time scales. Neural Netw 18:243–250

He H, Chen G (2005) Global exponential convergence of multi-time scale competitive neural networks. IEEE Trans Circuits Syst II 52:761–765

He H, Shun-ichi A (2006) Global exponential stability of multitime scale competitive neural networks with nonsmooth functions. IEEE Trans Neural Netw 17:1152–1164

Nie X, Cao J (2009) Multistability of competitive neural networks with time-varying and distributed delays. Nonlinear Anal Real World Appl 10:928–942

Pecora L, Carroll T (1990) Synchronization in chaotic systems. Phys Rev Lett 64:821–824

Chopra N, Spong MK (2009) On exponential synchronization of Kuramoto oscillators. IEEE Trans Autom Control 54(2):353–357

Sundar S, Minai A (2000) Synchronization of randomly multiplexed chaotic systems with application to communication. Phys Rev Lett 85:5456–5459

Gilli M (1993) Strange attractors in delayed cellular neural networks. IEEE Trans Circuits Syst I 40(11):849–853

Lou X, Cui B (2007) Synchronization of competitive neural networks with different time scale. Phys A 380:563–576

Gu H (2009) Adaptive synchronization for competitive neural networks with different time scales and stochastic perturbation. Neurocomputing 73(1–3):350–356

Gopalsamy K, He XZ (1994) Stability in asymmetric Hopfield nets with transmission delays. Phys D 76:344–358

Wen F, Yang X (2009) Skewness of return distribution and coefficient of risk premium. J Syst Sci Complex 22:360–371

Wen F, Liu Z (2009) A copula-based correlation measure and its application in Chinese stock market. Int J Inf Technol Decis Making 8:1–15

Yu W, Cao J, Chen G (2009) Local synchronization of a complex network model. IEEE Trans Syst Man Cybern 39(1):230–241

Song Q (2009) Design of controller on synchronization of chaotic neural networks with mixed time-varying delays. Neurocomputing 72:3288–3295

Li T, Fei SM, Zhang KJ (2008) Synchronization control of recurrent neural networks with distributed delays. Phys A 387(4):982–996

Li T, Fei SM, Guo YQ (2009) Synchronization control of chaotic neural networks with time-varying and distributed delays. Nonlinear Anal 71:2372–2384

Huang C, Cao J (2009) Almost sure exponential stability of stochastic cellular neural networks with unbounded distributed delays. Neurocomputing 72:3352–3356

Yu W, Cao J, Yuan K (2008) Synchronization of switched system and application in communication. Phys Lett A 372:4438–4445

Xia W, Cao J (2008) Adaptive synchronization of a switched system and its applications to secure communications. Chaos 18:023128

Maia C, Goncalves M (2008) Application of switched adaptive system to load forecasting. Electr Power Syst Res 78:721–727

Yu W, Cao J, Lu W (2010) Synchronization control of switched linearly coupled neural networks with delay. Neurocomputing 73(4–6):858–866

Hespanha JP, Liberzon D, Morse AS (1999) Stability of switched systems with average dwell time. In: Proceedings of 38th conference on decision and control, pp 2655–2660

Lu J, Ho DWC, Wu L (2009) Exponential stabilization of switched stochastic dynamical networks. Nonlinearity 22:889–911

Branicky MS (1999) Multiple Lyapunov functions and other analysis tools for switched and hybrid systems. IEEE Trans Autom Control 43:475–482

Sun Y, Cao J, Wang Z (2007) Exponential synchronization of stochastic perturbated chaotic delayed neural networks. Neurocomputing 70:2477–2485

Hassan S, Aria A (2009) Adaptive synchronization of two chaotic systems with stochastic unknown parameters. Commun Nonlinear Sci Numer Simulat 14:508–519

Boyd S, El Ghaoui L, Feron E, Balakrishnan V (1994) Linear matrix inequalities in system and control theory. SIAM, Philadelphia

Liu YR, Wang ZD, Liu XH (2006) Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw 19:667–675

Liu M (2009) Optimal exponential synchronization of general chaotic delayed neural networks: an LMI approach. Neural Netw 22:949–957

Li X, Ding C, Zhu Q (2010) Synchronization of stochastic perturbed chaotic neural networks with mixed delays. J Franklin Inst 347(7):1266–1280

Gu KQ, Kharitonov VL, Chen J (2003) Stability of time-delay systems. Birkhäuser, Boston

Acknowledgments

This work was jointly supported by the National Natural Science Foundation of China (60874088), the Natural Science Foundation of Jiangsu Province of China (BK2009271), the National Natural Science Foundation of China (10801056), the Foundation of Chinese Society for Electrical Engineering (2008), the Excellent Youth Foundation of the Educational Committee of Hunan Province (10B002), the Key Project of Chinese Ministry of Education (211118), and the Scientific Research Fund of Yunnan Province (2010ZC150).

Cite this article

Yang, X., Huang, C. & Cao, J. An LMI approach for exponential synchronization of switched stochastic competitive neural networks with mixed delays. Neural Comput & Applic 21, 2033–2047 (2012). https://doi.org/10.1007/s00521-011-0626-2

DOI: https://doi.org/10.1007/s00521-011-0626-2