Abstract

Big data (BD) approach has significantly impacted on the development and expansion of supply chain network management and design. The available problems in the supply chain network (SCN) include production, distribution, transportation, ordering, and inventory holding problems. These problems under the BD environment are challenging and considerably affect the efficiency of the SCN. The drastic environmental and regulatory changes around the world and the rising concerns about carbon emissions have increased the awareness of customers regarding the carbon footprint of the products they are consuming. This has enforced supply chain managers to change strategies to reframe carbon emissions.. The decisions such as an optimization of the suitable network of the proper lot sizes can play a crucial role in minimizing the whole carbon emissions in the SCN. In this paper, a new integrated production–transportation–ordering–inventory holding problem for SCN is developed. In this regard, a mixed-integer nonlinear programming (MINLP) model in the multi-product, multi-level, and multi-period SCN is formulated based on the minimization of the total costs and the related cost of carbon emissions. The research also uses a chance-constrained programming approach. The proposed model needs a range of real-time parameters from capacities, carbon caps, and costs. These parameters along with the various sizes of BD, namely velocity, variety, and volume, have been illustrated. A lot-sizing policy along with carbon emissions is also provided in the proposed model. One of the important contributions of this paper is the three various carbon regulation policies that include carbon capacity-and-trade, the strict capacity on emission, and the carbon tax on emissions in order to assess the carbon emissions. As there is no benchmark available in the literature, this study contributes toward this aspect by proposing two hybrid novel meta-heuristics (H-1) and (H-2) to optimize the large-scale problems with the complex structure containing BD. Hence, a generated random dataset possessing the necessary parameters of BD, namely velocity, variety, and volume, is provided to validate and solve the suggested model. The parameters of the proposed algorithms are calibrated and controlled using the Taguchi approach. In order to evaluate hybrid algorithms and find optimal solutions, the study uses 15 randomly generated data examples having necessary features of BD and T test significance. Finally, the effectiveness and performance of the presented model are analyzed by a set of sensitivity analyses. The outcome of our study shows that H-2 is of higher efficiency.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the current digital era, global data are increasing rapidly along with the swift development of the Internet of Things (IoT) and the Internet (Mousavi et al. 2020). Likewise, the development of virtual storage and cloud technology provides an opportunity for the development and application of big data (BD). The data as the necessary raw material in the information economy society have penetrated each industry, and this has led to the development of an industry under the context of BD (Duan et al. 2019). In recent years, several companies have started to exploit the potential of BD. Current studies have investigated the application and advantages of BD, and findings show that employing BD can support industries to modify their business and benefit models. BD utilization can assist industries to improve the capacity of production and performance (Cui et al. 2020; Sangaiah et al. 2020). However, not all industries have enough qualifications to deal with such a large and complex data set. Thus, many industries and enterprises have started outsourcing their BD projects. The current way is to purchase relevant Big Data Information (BDI) from the Data Firm (Wang et al. 2015). Hence, the Data Firms’ condition in a supply chain network (SCN) has become increasingly significant and this compels the modification of the SCN framework. In other words, Data Firm should be contained in an SCN as a main supply chain element. SCN creates a further volume of data in various cases under different business scenarios with the swift development of new technologies (Dev et al. 2019; Zhan and Tan 2020). Moreover, it has proven that 3Vs of BD characteristics including velocity, volume, and variety are beneficial and useful for SCN models (Günther et al. 2017).

The global concern over environmental menaces reasoned by different business activities has led practitioners and researchers to explore different methods to reduce the whole carbon footprint of a company and industry. Moreover, the businesses and industries have begun changing their operational and strategic policies to extend the environmental efficiency of whole manufacturing processes and/or products beginning from the preparation of commodities until the transfer of finished commodities. This requires successful coordination and complete integration between all the members of the supply chain including producers, distributors, retailers, and customers (Günther et al. 2017). The approach toward slow carbon has started to affect the world economy trend. In this regard, the policies of the carbon emission regulation include trade, carbon tax, carbon offset, carbon capacity that are gradually being considered by different companies and industries across the globe The globalization has led to raised demand of services and commodities all over the globe. As a result, transportation with the increasing demand for products, production, and warehouse of commodities cause to effect on increasing environmental effects.

These days data is attained from both the producer/manufacturer and customer sides in this information era. Therefore, decisions of the supply chain do not merge with the big data features in the operation research, decision-making, and optimization models. Moreover, it is significant to consider the communication and link between BD and supply chain problems. Available data from the producer/manufacturer and customer's sides are often voluminous as well as have velocity and variety features of BD. In this regard, according to the BD, these available data must be used during mathematical model formulation for effectual and useful decision-making. Thus, the mathematical modeling of the supply chain using BD properties provides a competitive advantage for the businesses and industry and improves the sustainability and resilience of supply chain network (Wang et al. 2015; Dev et al. 2019; Zhan and Tan 2020; Günther et al. 2017). While BD is necessary for optimization and decision-making in competitive and unstable markets, it is equally challenging to analyze BD. Since there is a massive scope of big data in different fields, there are very few attempts made so far to formulate and design optimization models utilizing BD in supply chain problems.

In this paper, a new production, transportation, and inventory holding problem for a multi-period and multi-product SCN model along with different carbon emission regulations using big data is developed. One of the important contributions in this paper is the three various carbon regulation policies containing carbon capacity-and-trade, the strict capacity on emission, and the carbon tax on emissions to assess the carbon emissions which are considered for the first time, simultaneously. The released carbon emissions during production, transportation, ordering, and inventory holding in the proposed model are considered. In this regard, an MINLP mathematical model for SCN under uncertainty is formulated. Then, the SCN model seeks to attain decreasing carbon emissions cost and total cost, simultaneously. To cope with the uncertain parameters, a chance-constrained programming approach (CCP) is employed that demands parameter as an uncertain parameter in this paper. The second contribution of this paper is a theoretical framework to further link with a supply chain model along with BD characteristics. The last important and significant contribution of this paper is about solution methodologies. This study proposes two hybrid meta-heuristic algorithms to solve the large-sized problems with BD characteristics, namely hybrid genetic algorithm and simulated annealing (H-1) and hybrid genetic algorithm and particle swarm optimization (H-2). Then, the hybrid algorithms are compared with the basic genetic algorithm (GA), simulated annealing (SA), and particle swarm optimization (PSO) in three sizes (small, medium, and large). The small-sized problems are considered to validate and evaluate the SCN model, and medium- and large-sized problems are provided based on big data characteristics. Finally, to validate the efficiency and performance of the developed model, T test significance has been carried out between methodology solutions together with a set of sensitivity analyses and the Taguchi method. Generally, the important contributions of this paper are summarized as follows:

-

Designing a new production–transportation–ordering–inventory holding problem for a new multi-period and multi-product supply chain network.

-

Formulating MINLP model along with chance-constrained programming.

-

Considering three different carbon emission regulations for the first time, simultaneously.

-

Employing chance-constrained programming (CCP) method.

-

Developing two new hybrid meta-heuristic algorithms called H-1 and H-2 to solve the SCN model for the first time in this paper.

-

Providing T test significance for statistical significance among GA, SA, PSO, H-1, and H-2 algorithms.

-

Presenting the Taguchi method and sensitivity analyses to validate the proposed model.

This paper is structured as follows: the literature review related to the SCN and BD is reviewed in Sect. 2. In Sect. 3, problem description, mathematical model formulating, and linking between BD and SCN model are stated. In Sect. 4, solution methods include three meta-heuristic algorithms (GA, SA, and PSO), and hybrid two algorithms to solve the SCN model are explained. Then, the computational experiments, the results for a set of designed problems with various scales, T test significance, sensitivity analysis of the proposed model, and managerial insight are proposed in Sect. 5. Finally, the conclusions and suggestions for further works are provided in Sect. 6.

2 Literature review

The literature review is categorized into three parts related to the suggested study. They are divided into the application of meta-heuristic algorithms in supply chain networks, BD, and BD in the SCN.

2.1 Application of meta-heuristic algorithms in supply chain networks

In terms of the SCN field, the application of meta-heuristic algorithms has been widely considered. For example, Khalifehzadeh et al. suggested various transportation systems with different reliability rates and shortages in four-level SCN. They considered two aims including minimizing the total operating costs of all the supply chain elements and maximizing the reliability of the system. A mixed-integer linear programming (MILP) model for the production–distribution problem was designed and formulated. A new comparative particle swarm optimization (CPSO) meta-heuristic algorithm was introduced to solve the model (Khalifehzadeh et al. 2015). Mousavi et al. introduced a location, allocation, and inventory problem in a bi-level SCN problem. A distributer–retailer network for a location allocation-inventory control problem was derived. A mixed-integer nonlinear programming (MINLP) model of the problem was then formulated in their paper. Their goal was to find the optimal number of packets and the distributors’ locations. They also proposed a modified fruit fly optimization algorithm (MOFA) to find the Pareto solutions and compared it with particle swarm optimization (PSO) and simulated annealing (SA) algorithms where the MFOA has a better performance than the PSO and SA (Mousavi et al. 2015). Sarrafha et al. presented an integrated procurement, production, and distribution problem for a multi-period multi-level SCN and formulated a bi-objective MINLP model. Their two objectives were to minimize the total costs and minimize the average tardiness of product to DCs. To find a near-optimum solution, a new multi-objective biogeography-based optimization (MBBO) algorithm was developed, and multi-objective SA and nondominated sorting genetic algorithm (NSGA-II) were employed. Finally, it was shown that MBBO has better efficiency than multi-objective SA and NSGA-II (Sarrafha et al. 2015). Maghsoudlou et al. offered a new bi-objective three-level multi-server supply chain problem for congested systems. A MILP mathematical model was formulated in this paper in which cross-dock facilities to transport the products are modeled as a M/M/m queuing system. An Epsilon constraint approach to solving small-scale problems was used, and a multi-objective particle swarm optimization (MOPSO), imperialist competition algorithm (MOICA), NSGA-II, and nondominated ranking genetic algorithm s(NRGA) were utilized to find the Pareto solutions in different size problems. The parameters of the solution algorithms were calibrated using the Taguchi technique. The comparison results according to five multi-objective performance metrics utilized in the AHP-TOPSIS approach indicated that the parameter-tuned MOPSO acts better than the other parameter-tuned algorithms to solve small-, medium-, and large-size problems (Maghsoudlou et al. 2016). Mahmoodirad and Sanei developed a multistage multi-product SCN problem and formulated a MILP model in which the objective was the minimization of the total costs of the supply chain. Their problem considered determining the optimal transportation network to satisfy the customer demands of products by using several kinds of conveyance with minimum costs. To solve their model, differential evolution (DE), PSO, and gravitational search algorithm (GSA) have been used. Additionally, the Taguchi experimental design technique is employed to tune the operators and parameters of the suggested algorithms (Mahmoodirad and Sanei 2016). Afrouzy et al. proposed to design a tri-objective multi-echelon multi-product multi-period supply chain model and formulated the MILP model, which incorporates product development and new product production and their effects on supply chain configuration. Their goals of the model were to maximize the total profit, maximize the satisfaction level of customer demands, and to maximize the production of developed and new products. To solve their model, they used NSGA-II and NRGA algorithms in different size problems (Afrouzy et al. 2017). In the same year, Khalifehzadeh et al. developed a multi-objective production–distribution system, in which the objectives were to minimize total costs and maximize the reliability of the transportations system. The problem has formulated using a MILP model. Ranking genetic algorithm (RGA) and concessive variable neighborhood search (CVNS) algorithms were proposed to solve the model (Khalifehzadeh et al. 2017). Mousavi et al. developed a modified particle swarm optimization (MPSO) for solving the integrated location and inventory control problems in a two-stage distribution supply chain. The main objective of their research was to find out the optimal locations of the potential vendors in addition to the quantity ordered (allocation) by the buyers so that the total costs were minimized. Additionally, a genetic algorithm (GA) was used to compare with the MPSO to validate the model. Finally, some computational examples are generated to evaluate the algorithms’ efficiency where MPSO indicated a better performance in comparison with the GA (Mousavi et al. 2017). Koc investigated an evolutionary algorithm (EA) for SCN design with assembly line balancing. Their main aim was to minimize the sum of transportation costs and fixed costs of stations in assemblers (Koç 2017). However, Saghaeeian and Ramezanian formulated a MINLP model for multi-product competitive SCN while developing a price-dependent demand function considering the complementary and substitutable relationship between products. Furthermore, a hybrid algorithm the genetic algorithm and the firefly algorithm was suggested to solve the model (Saghaeeian and Ramezanian 2018). Tavana et al. addressed a three-stage single-product SCN design. The overall aim of their problem was to decrease the total cost, which is composed of the opening and transportation costs in all three stages. Likewise, a hybrid genetic algorithm-variable neighborhood search (GA-VNS) and variable neighborhood search-simulated annealing (VNS-SA) were proposed to solve the model. A robust parameter and operator setting is implemented utilizing the Taguchi experimental design approach with several random test problems. The efficiency of these algorithms is evaluated and compared for different problem sizes. The experimental results indicated that the GA-VNS is robust and superior to the other competing algorithms (Tavana et al. 2018). Memari et al. proposed a novel modified firefly algorithm to solve multi-echelon SCN problems as well as designed an integer linear programming (ILP) model (Memari et al. 2019). Alavidoost et al. formulated a new biobjective MINLP model for multi-product three-level SCN, which they aimed to determine the optimum service level so that total cost is minimized. NSGA-II, NRGA, and PESA-II algorithms are applied to solve the model. The Taguchi approach was also used for controlling and calibrating the parameters of the employed triple algorithms (Alavidoost et al. 2018). Jiang et al. designed a multi-objective Pareto optimization three-echelon SCN problem to minimize cost and lead-time based on a complex network. Then, an artificial bee colony (ABC) algorithm in order to solve the model was proposed (Jiang et al. 2019a). More recently, Mohammed and Duffuaa formulated a MILP model for the multi-product, multi-objective, and multi-stage SCN problem. An improved augmented epsilon constraint method for the small-sized problems was utilized, and an effective Tabu search algorithm was developed and designed for small-scale, medium-scale, and large-scale examples of multi-objective supply chain problems (Mohammed and Duffuaa 2020). Bank et al. considered an integrated production–distribution problem in a two-level SCN and then formulated a mixed-integer programming (MIP) model. A hybrid simulated annealing (HSA) and a genetic algorithm (GA) with mixed repair and penalize strategies are introduced to solve their model. Computational results of HSA are compared with those of the GA algorithm as the current best algorithm for solving similar problems in their paper (Bank et al. 2020).

For interested scholars and more details on SCN along with meta-heuristic algorithms, studies by Mousavi et al. (2020), Sangaiah et al. (2020), Kazemi et al. (2015), Fattahi et al. (2015), Jamili et al. (2016), Ghasemiyeh et al. (2017), Woo and Kim (2019), Tamannaei and Rasti-Barzoki (2019), Shoja et al. (2019), Jiang et al. (2019b), Liu et al. (2020), Amiri et al. (2020), Gholizadeh et al. (2020a, c), Gholizadeh and Fazlollahtabar (2020) can be referred.

2.2 Big data

This subsection tries to summarize some fundamental categorization issues that were utilized to review the existing literature already available on the ‘BD’ field. Singh and Reddy surveyed the structure of BD analytics and evaluated the drawbacks and advantages of these frameworks according to the different metrics like data I/O rate, scalability, real-time processing, fault tolerance, interactive task support, and data size supported. Then, a k-means clustering algorithm on different structures in order to present more insights into BD analytics was proposed (Singh and Reddy 2015). Lee and Kang explored the opportunities and challenges of BD using several case studies BD (Lee and Kang 2015). Yaqoob et al. investigated functionalism and structuralism paradigms to analyze the origins of BD applications. Furthermore, they analyzed processing technologies, approaches, and analysis techniques for BD and then presented major challenges with BD and discussed several opportunities (Yaqoob et al. 2016). Sivarajah et al. addressed the analysis of BD challenges and analytical approaches. They presented a literature review of the BD challenges and proposed/employed/theorized the BD analytic approaches (Sivarajah et al. 2017). Lee explained the application of data analytics utilizing merchant review data and evaluated the impacts of BD on key business performances. In this regard, six technical and managerial challenges are stated (Lee 2017). Gunther et al. identified six debates on how organizations realize value from BD and cross-level interactions influence BD value realization. An integrated model of BD value realization was also offered (Günther et al. 2017). Oussous et al. reviewed recent technologies of BD that purpose to support choosing the right combination of various BD analytics based on their technological requirements (Oussous et al. 2018).

For more details on BD, studies by Duan et al. (2019), Cui et al. (2020), Gandomi and Haider (2015), Jin et al. (2015), Tsai et al. (2015), Hilbert (2016), Lv et al. (2017), Mikalef et al. (2018), Akter and Wamba (2019), Dai et al. (2019), Mohamed et al. (2020) can be referred.

2.3 Big data in supply chain network

Choi et al. examined the existing BD relevant analytics techniques, weaknesses and identified their strengths and also major functionalities. Then, they discussed various sorts of BD application into various operations management issues such as supply chain management, transportation management, risk analysis, inventory management, etc. (Choi et al. 2018). Kaur and Singh formulated a MILP model for SCN using the BD characteristics. Moreover, to find optimal solutions, a heuristic approach was developed. It is also indicated that large-scale problems have necessary 3Vs of BD, namely variety, velocity, and volume, which consume nonpolynomial time and cannot be solved optimally (Kaur and Singh 2018). Tiwari et al. analyzed and reviewed BD analytics in SCN between 1010 and 2016 (Tiwari et al. 2018). Liu introduced pricing policies and coordination of low-carbon SCN according to the targeted advertisement and carbon emission reduction costs using BD (Liu 2019).

There are many review papers published recently on BD in the supply chain fields. Then, for more details, we recommend to interested readers' references about BD in SCN (Zhan and Tan 2020; Addo-Tenkorang and Helo 2016; Wang et al. 2016; Nguyen et al. 2018; Lamba and Singh 2018; Gupta et al. 2019; Ben-Daya et al. 2019; Baryannis et al. 2019; Kamble and Gunasekaran 2020; Gholizadeh et al. 2020b; Giudice et al. 2020). The summary of the examined papers is reported in Table 1.

The examined papers (see Cui et al. 2020; Günther et al. 2017; Khalifehzadeh et al. 2015, 2017; Mousavi et al. 2015, 2017; Sarrafha et al. 2015; Maghsoudlou et al. 2016; Mahmoodirad and Sanei 2016; Afrouzy et al. 2017; Koç 2017; Saghaeeian and Ramezanian 2018; Tavana et al. 2018; Memari et al. 2019; Alavidoost et al. 2018; Jiang et al. 2019a, b; Mohammed and Duffuaa 2020; Bank et al. 2020; Kazemi et al. 2015; Fattahi et al. 2015; Jamili et al. 2016; Ghasemiyeh et al. 2017; Woo and Kim 2019; Tamannaei and Rasti-Barzoki 2019; Shoja et al. 2019; Fakhrzad et al. 2019; Liu et al. 2020; Amiri et al. 2020; Singh and Reddy 2015; Lee and Kang 2015; Yaqoob et al. 2016; Sivarajah et al. 2017; Lee 2017; Oussous et al. 2018; Gandomi and Haider 2015; Jin et al. 2015; Tsai et al. 2015; Hilbert 2016; Lv et al. 2017; Mikalef et al. 2018; Akter and Wamba 2019; Dai et al. 2019; Mohamed et al. 2020; Choi et al. 2018; Kaur and Singh 2018; Tiwari et al. 2018; Liu 2019; Addo-Tenkorang and Helo 2016; Wang et al. 2016; Nguyen et al. 2018; Lamba and Singh 2018; Gupta et al. 2019; Ben-Daya et al. 2019; Baryannis et al. 2019; Kamble and Gunasekaran 2020) indicated that the study on supply chain network considering carbon emissions regulation policies utilizing BD characteristics under chance-constrained programming is very finite. The previously studied papers mostly use the original meta-heuristic algorithms to solve the model address the single aspect of BD, namely variety or volume. Hence, to address this gaps, in this paper, a new mathematical model for the supply chain network considering carbon emissions regulation policies utilizing BD characteristics under chance-constrained programming is designed. Three carbon emissions regulation policies including carbon capacity-and-trade, the strict capacity on emission, and the carbon tax on emissions in the SCN problem are considered for the first time simultaneously. According to the rising trend in the usage of BD, it has become important to improve a supply chain model using the BD characteristics for useful and effectual decisions. Big data is the suggested model that provides 3 Vs called variety, velocity, and volume characteristics. Therefore, it has proven that 3Vs of BD characteristics are effective and useful for SCN models (Günther et al. 2017). In terms of the variety, a variety of products through multi-product is considered in the suggested model. Under volume characteristics, the number of manufacturers and the value of the products in the proposed model are provided. Additionally, in terms of the velocity aspect, capacity, demand, and costs with time are considered in the presented model. In order to solve the presented model, utilizing the 3 Vs characteristics of BD causes the SCN model to have the ability to control fluctuations. Further, the model solution is capable of absorbing the fluctuations by combining BD with supply chain modeling. A new production, transportation, ordering, and inventory holding problem is designed and a new MINLP model is formulated; hybrid two meta-heuristic algorithms are developed for the first time in this paper to solve this model. Hybrid meta-heuristic algorithms have been hybridized with GA, SA, and PSO algorithms called hybrid SA-GA (H-1) and PSO-GA (H-2) algorithms. To tune and control the parameters of the algorithms, the Taguchi method is used, and to validate the proposed model, T test significance and sensitivity analyses are employed.

3 Mathematical modeling for SCN problem

3.1 Problem description and model hypothesis

The SCM problem proposed in this study is for a multi-manufacturer, multi-product, multi-vehicle, and multi-period. Besides, the emission caused during transporting, ordering, and inventory holding through vehicles is considered in the presented problem. The study considers three various carbon emissions regularity policies, known as the strict cap on emission, carbon tax, and carbon cap-and-trade. In terms of the cap-and-trade policy, an obliging capacity is used over the total carbon emissions. Likewise, carbon emissions exceeded or saved according to the carbon capacity can be exchanged. The strict capacity of carbon emissions policy includes a maximum capacity of carbon emissions coming out from different supply chain operations such as transportation, production, inventory holding, ordering, etc. After that, in terms of the carbon tax, it does not put any limitation on carbon emission but offers to tax each unit of carbon evicted. The aim is to allocate manufacturers each period to minimize the total costs of containing transportation, production, carbon emission, and inventory holding costs.

The model is formulated under the following assumptions:

-

The demand for products, manufacturer and vehicle capacities, and transportation, production, inventory holding, and ordering costs parameters has the main BD traits in terms of volume, velocity, and variety. They have been hypothesized and computed utilizing real-time data related to the demand, capacities, and costs.

-

The cost of ordering is known for each product purchased from each manufacturer for the whole planning horizon.

-

Only one sort of vehicle is accessible with a specified volume. It is further hypothesized that the various products are so packaged that all of the volumes of the container can be used.

-

If a product is transported forward to the next period in the planning horizon, the cost of inventory holding is suitable.

-

Late deliveries and shortages are not permitted.

-

The cost of transportation is dependent on the whole covered distance by the vehicle.

-

Transportation, ordering, and inventory holding processes of products make the emission of carbon that are linear in nature.

According to the assumptions and some previous papers in the literature, some of the indices, parameters, and decision variables have been used in this paper. For instance, the parameters and variable decisions related to production and inventory holding are taken from the studies of Saghaeeian and Ramezanian (2018), Memari et al. (2019), respectively. Likewise, the parameters and variable decisions relevant to transportation are utilized in this paper from some references such as Khalifehzadeh et al. (2017), Mousavi et al. (2017), Alavidoost et al. (2018). The rest of the proposed indices, parameters, and decision variables are provided according to the current research assumptions to are shown the suggested mathematical model more realistic.

3.2 Linking big data with the proposed model

The proposed production, ordering, transportation, and inventory model in the SCN considering different carbon emission regulations is linked with BD utilizing the presented structure. The suggested structure proposes several ways to contain both inter and intra heterogeneity in the data to make it emphasize the fundamental 3 Vs requirements of BD, i.e., variety, velocity, and volume. Our reasons for selecting 3 Vs of the big data characteristics include the amount and size of the generated data that is collected and stored in large volumes (in terms of the value). Data variety is in the form of numbers and traditional information that can be categorized in the management, supply chain, decision-making issues. This variety of data helps managers and industries to achieve targeted results with proper use (in terms of the variety). Regarding velocity, to meet the demands and challenges ahead on the path of development and growth, the velocity of production and processing of data (products) is faced with the volume of information from the production and storage of data (products) which poses a significant challenge for all organizations and analysts. The structure has been changed to contain the whole parameters related to the model presented in this study and depicted on BD dimensions as follows.

-

Variety The presented model is a multi-manufacturer, multi-product, and multi-period, enveloping different parameters like carbon emission, costs, demand, and capacities as a function of time, so causing the diversity of viewpoints in the suggested model.

-

Velocity Velocity is relevant to the trend of the data to modify the variation in real/near real time. The parameters presented in the suggested model are modified according to each manufacturer, period, and product, to obtain the velocity viewpoint.

-

Volume To obtain the volume feature of BD as per the structure (Fig. 1), the presented model attends the ample number of manufacturers, the time periods, and products.

3.3 Mathematical formulation

The list of indices, parameters, decision variables utilized for the proposed model formulation is as follows.

3.3.1 Indices

\(p\) | Index for products |

\(m\) | Index for manufacturers |

\(v\) | Index for vehicles |

\(t\) | Index for time periods |

3.3.2 Parameters

\({\alpha }_{pm}^{t}\) | Production cost of product \(p\) from manufacturer \(m\) at the period \(t\) |

\({\beta }_{pm}^{t}\) | Ordering cost of product \(p\) produced from manufacturer \(m\) at the period \(t\) |

\({\delta }_{mv}^{t}\) | Transportation cost from manufacturer \(m\) using vehicle \(v\) at the period \(t\) |

\({\varphi }_{p}^{t}\) | Inventory holding cost of product \(p\) at the period \(t\) |

\({\theta }_{mp}^{t}\) | Manufacturer capacity \(m\) for product \(p\) at the period \(t\) |

\({n}_{v}\) | Vehicle capacity \(v\) (volume) |

\(f\) | Carbon emissions capacity for the whole planning horizon (in tons) |

\({\mu }_{p}^{t}\) | The demand of product \(p\) at the period \(t\) |

\({k}_{p}\) | Packaged volume of product \(p\) |

\(h\) | Carbon price per unit (tons) |

\(\rho \) | The rate of the carbon tax |

\({q}^{t}\) | The emission of carbon in placing order at the period \(t\) |

\({r}^{t}\) | The emission of carbon in placing inventory holding at the period \(t\) |

\({c}^{t}\) | The emission carbon of variable per unit each order at the period \(t\) |

\({d}_{m}\) | The distance of manufacturer \(m\) from the purchaser |

\({\gamma }_{v}\) | Mileage (\(\mathrm{kms}\)/1) of vehicle \(v\) |

\(\omega \) | The factor of carbon emission for transportation |

3.3.3 Decision variables

\({L}_{pmv}^{t}\) | Lot-size of product \(p\) manufactured from manufacturer \(m\) using vehicle \(v\) at the period \(t\) |

\({Y}_{p}^{t}\) | Inventory for product \(p\) transported from period \(t\) to \(t+1\) |

\({N}_{mv}^{t}\) | The number of vehicles \(v\) needed by manufacturer \(m\) at the period \(t\) |

\({z}^{+}/{z}^{-}\) | Carbon validity sold/bought over the whole emissions planning horizon |

\({W}_{m}^{t}\) | 1 if manufacturer \(m\) is chosen at the period \(t\); otherwise 0 |

3.3.4 Mathematical model

The objective function (1) shows the MINLP model under the three various carbon regulations. The objective as stated is to minimize the total cost over the whole planning horizon under carbon capacity-and-trade, the strict capacity on emission scenario, and the carbon emission tax regulation. Carbon capacity-and-trade contains four parts: the transportation cost which is the first term in the objective function representing the cost of ordering whereas the second term is the cost of transportation. The cost of ordering and the cost of transportation are caused only if a specific manufacturer opted in a certain period. Hence, both of these have been multiplied by a positive and binary variable (\({N}_{mv}^{t} \,\mathrm{and}\, {W}_{m}^{t}\)), respectively. The cost of purchasing, the cost of inventory holding, and the cost of excess carbon sold/ brought to cover the whole planning horizon are also considered. After that, the strict capacity through carbon emission scenarios where the purchaser requires to minimize the total cost related to ordering, procurement, inventory holding, and transportation and keeps the carbon emissions within the determined maximum allowable capacity. Afterward, the tax per unit of carbon emission and transportation costs is also considered.

Constraint (2) shows the inventory balance. It states that the inventory transported from the prior time period (t − 1), added to the lot size of the current period (\(\sum_{m}{L}_{pmv}^{t}\)) and purchased from all manufacturers is to be balanced by the current period inventory (\({Y}_{p}^{t}\)) and current demand (\({\mu }_{p}^{t}\)). Constraint (3) represents \({W}_{m}^{t}\)=1, while \({L}_{pmv}^{t}>0\). Constraint (4) displays the size of the order as per the capacity of the manufacturer. Constraint (5) depicts the number of vehicles/travels needed by the manufacturer for transportation. It is clear that this value can be a logical number that shows the use of the capacity of the vehicle. In order to calculate the cost of transportation, this value has been rounded to the next integer value, while keeping the same for the emission of carbon computing because of transportation. Constraint (6) indicates the emission of carbon constraint that balances the whole carbon emission related to the production, inventory holding, ordering, and handling of the products and transportation over the whole planning horizon versus the maximum allowed capacity of carbon and the surplus carbon sold or brought. Constraint (7) ensures the emission of carbon under the determined capacity. Integer and nonnegativity values of the inventory and lot size are considered in constraint (8), whereas Eq. (9) is the manufacturer allocation constraint that can consider only binary values. Equation (10) certifies the positive surplus carbon sold or bought values.

3.3.5 A chance-constrained problem approach

The CCP method is one of the most widely utilized methods that consider uncertain parameters in mathematical models, which has been used in many different papers (Shaw et al. 2016). Therefore, the CCP approach introduced by Shaw et al. (2016) is employed in the presented model. This approach satisfies the chosen constraints with a defined confidence level. According to the presented model, its efforts to determine the product demand at a confidence level above \(\alpha \%\). The converted constraints are attained as follows:

It is supposed that the amounts of demand pursue a normal distribution function and, therewith, the probabilities of constraint (11) are equal to zero. Indeed, when the operator of the probabilistic constraints is inequal, it could utilize the rules of CCP. If the operator is equal, some changes should be made according to the concept of the constraint to transform the equality to the inequality constraint. Therefore, constraint (11) is converted to constraint (13) as stated.

According to constraint (13), the inventory amount should be specified so that the lost demand is avoided at the level of confidence above \(\alpha \%\).

4 Meta-heuristic algorithms

Meta-heuristic algorithms are a type of random algorithm that is used to find the optimal solution. Optimization methods and algorithms are divided into two categories: exact algorithms and approximate algorithms. Exact algorithms can find the optimal solution accurately, but they are not efficient enough in the case of NP-hard optimization problems, and their implementation time increases exponentially according to the sizes of the problems. Approximate algorithms can find good (near-optimal) solutions in a short time for NP-hard optimization problems. Approximate algorithms are divided into three categories: heuristic, meta-heuristic, and hyper-heuristic algorithms. The two main problems with heuristic algorithms include they get stuck at optimal local points and precocious convergence to these points. Meta-heuristic algorithms have been developed and introduced to solve heuristic method problems. In fact, meta-heuristic algorithms are one of the types of approximation optimization algorithms that have solutions for exiting local optimal points and can be used in a wide range of problems. It has been proven that SCN models are an NP-hard problem. The literature review has shown that some meta-heuristic algorithms exist to solve these NP-hard problems. In this regard, simulated annealing (SA) as a famous single-solution algorithm surpasses most of the alike ones (Goodarzian and Hosseini-Nasab 2019). This advantage causes us to utilize it again in our study. In addition, the ‘No Free Lunch’ theory says that there is no algorithm to indicate robust efficiency for the total optimization models (Sahebjamnia et al. 2020). In this paper, three nature-inspired meta-heuristic algorithms, namely simulated annealing (SA) algorithm, particle swarm optimization (PSO) algorithm, and genetic algorithm (GA), as population-based algorithms are used. The main reason for the use of the suggested algorithms is based on single-solution. Then, these algorithms according to one solution change a solution during the search process, while population-based algorithms consider a population of solutions during the search. Another main novelty of this study is to develop and to suggest a set of hybridizations and modifications of these three algorithms for the first time in the supply chain problem using BD characteristics. In the next sections, solution representation and meta-heuristic algorithms will be explained, respectively.

4.1 Solution presentation of meta-heuristic algorithms

A pattern should be proposed to encode the problem for the implementation of the meta-heuristic algorithms (Fakhrzad et al. 2019; Goodarzian and Hosseini-Nasab 2019). According to this aim, a two-phase procedure, namely Random-Key (RK), is employed (Fakhrzad and Goodarzian 2019). This procedure changes an unfeasible solution to a feasible one by some techniques in two steps (Fakhrzad et al. 2018). In recent years, scholars have used this procedure in some subjects of engineering design, e.g., cross-docking systems (Fonseca et al. 2019) and Supply Chain Network Design (Sahebjamnia et al. 2020), etc. This paper, first of all, utilizes this procedure in the literature review for the SCN model using BD extended.

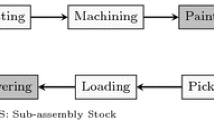

A numerical example is depicted to encode the solution presentation as follows. Consider that there are six manufacturers (\(m\)) with 12 products (\(p\)) and four vehicles (\(v\)). Firstly, the sort of utilized transportation system for each manufacturer should be determined. In the following, an array by a length of \(M\) is created by a uniform distribution: \(U\) (0, \(v\)). Hence, the sort of transportation system devoting to each manufacturer should be specified. After that, a set of processes is shown in Fig. 2. It is clear that the third sort of transportation system is used for manufacturers 3 and 4. Besides, the second and fourth vehicle types are employed for manufacturers 1, 6, and 5, respectively, whereas the first vehicle sorts are used for manufacturer 2.

An array by a length of \(M\) to determine the manufacturer routs is distributed by \(U(\mathrm{0,1})\). Secondly, these numbers are arranged. Then, these numbers are specified to schedule the visits of manufacturers and purchasers. Moreover, an instance of the utilized arrays of this pattern for meta-heuristics is indicated in Fig. 3. In particular, based on the distance of the manufacturer from the purchaser and vehicle capacity (volume), the route of three utilized manufacturers and purchasers is investigated and determined. According to this instance, the fourth manufacturer is not needed for the routes. These routes are generated as follows:

4.2 Simulated annealing algorithm

Simulated annealing (SA) was proposed by Kirkpatrick et al. as a sort of local search optimization meta-heuristic algorithm (Kirkpatrick et al. 1983). A single-objective version of SA is utilized according to the developed SCN model considering different Carbon Emission Regulations using BD in this paper. Similar to other algorithms, SA starts with a random solution that at the first iteration and it is considered the best solution. Then, a neighbor solution near its position is created based on a method. Besides, three neighboring methods containing Swap, Reversion, and Insertion are used in this paper. SA algorithm proposed an approach based on the gradual freezing technique to solve the NP-hard problems. The gradual freezing technique is an approach to achieve a condition in which the energy of solid material is well and uniformly minimizing. This technique involves keeping the material at a high temperature and then gradually reducing this temperature. To explain more, the interested researchers can investigate the recent papers, e.g., Fakhrzad et al. (2019), Sitek et al. (2017), Fang et al. (2020), and other relevant papers that these methods were illustrated as well.

4.3 Genetic algorithm

Genetic algorithm (GA), known as one of the random optimization methods, was introduced by Goldberg et al., this algorithm found its place, and today, due to its capabilities, is well-positioned among other algorithms (Goldberg et al. 1989). In the following, the problem to be solved is as input, and the solutions are coded according to a pattern called the fitness function and evaluates each candidate solution, most of which are randomly selected. A solution to the problem is shown by a list of parameters, namely chromosomes. Chromosomes are generally represented as a simple string of data, although other data structure types can also be used. First of all, several characteristics are randomly generated to create the first generation. During each generation, each characteristic is evaluated, and the value of fitness is measured by the function of fitness. The next step is the creation of the second generation of the population, based on selection operators, based on the selected traits with genetic operators: linking chromosomes to each other and changing. For each individual, a pair of parents is selected. Choices are designed to select the most appropriate elements so that even the weakest elements have the chance of being selected to avoid approaching the local solution. There are several selection patterns: Roulette wheel, Tournament selection, etc. Interested scholars can refer to recent papers such as Fakhrzad et al. (2019), Chung et al. (2015), Ko et al. (2017).

4.4 Particle swarm optimization algorithm

Particle Swarm Optimization (PSO) algorithm was introduced by Eberhart and Kennedy (1995). The PSO as an uncertain search method for functional optimization has been stated, while the algorithm is inspired by the group movement of birds looking for food. Then, a group of birds in the space are looking for food at random. There is only one piece of food in the search space. Each solution is named as the particle, while each particle has a merit value that is calculated by a merit function. The closer the particle in the search space to the objective, the more merit it is. Then, each particle has a speed that the movement guides of the particle are undertaken as well as each particle continues to move through the problem space by following the optimum particles in the current state. A group of particles is created at random at the start of the task and tries to find an optimal solution by updating generations. At each step, each particle is updated using the two best values. Firstly, it is the best situation the successful particle has ever achieved. This situation is known as the best value for that particle that we represent with \(pbest\). The next best value used by the algorithm is the best situation ever achieved by the particle population, namely \(gbest\). After finding the best values, the speed and location of each particle are updated. Hence, to details and information more, interested readers can survey recent studies. For instance, Goodarzian and Hosseini-Nasab (2019), Fakhrzad and Goodarzian (2019), Cheng et al. (2016), Yan (2017).

4.5 Hybrid SA-GA (H-1)

First of all, the proposed algorithm behaves like a GA algorithm, but if the best chromosome is found, the SA algorithm becomes active. For this aim, the best solution of the GA algorithm is selected as the first solution of the SA algorithm and with the same characteristics of the SA algorithm, other calculations are performed. The pseudo-code of the H-1 algorithm is described in Fig. 4.

4.6 Hybrid PSO-GA (H-2)

In this subsection, firstly, we set up parameters of the presented algorithm containing: Maximum Number of Iterations, Maximum Number of Sub-Iterations for GA, Maximum Number of Sub-Iterations for PSO, Swarm (Population) Size, Definition of Constriction Coefficients, Crossover Percentage, Number of Parents (Offspring), Mutation Percentage and Number of Mutants. Secondly, the range of searching for \(z\) is adjusted. The domain will affect the searching velocity. Then, start each particle randomly with an initial position, \({Z}_{jk}\), in the predetermined domain and velocity,\({V}_{jk}\), within the domain of maximum velocity, Smax. Moreover, the float coding procedure to create random numbers for the upper-level variables is utilized. Therefore, variable \(w\) solves at a lower level. The position of each particle \({Z}_{jk}\), is shown in Eq. (14). After that, the value of fitness for the use of each particle based on Eq. (15) is calculated.

Then, all the particles into two clusters are divided based on the efficiency of fitness value. The first group is particles with a better value of fitness that is formulated based on Eq. (16), while the second group is the particles with the worse value of fitness that is designed according to Eq. (17):

Likewise, the mutation and crossover for the first group are updated to calculate the corresponding value of fitness. The equation of crossover is in Eqs. (18) and (19), while the equation of mutation is in Eq. (20). In this regard, the value of fitness for each new particle utilizing Eq. (21) is computed.

In the next step, the current local best position,\({P}_{jk}\), and global best position, \({P}_{gk}\) and also all the particles, \(Z_{jk} = \left( {z_{j1} , \ldots ,z_{jn} ,w_{j1} , \ldots ,w_{jm} } \right)\), attained based on Eqs. (22) and (23) are updated.

Each particle’s speed is limited by the prespecified maximum speed, \({S}_{\text{max}}\), and each position of particle, \({Z}_{jk}\) which should be in the determined domain:\(l\le {x}_{i}\le u\). Finally, stop condition, if the determined number of generations is satisfied. The pseudo-code of the H-2 algorithm is indicated in Fig. 5.

The validation stage is the most significant part of suggesting an algorithm. A full validation process according to the proposed model is accessible. The process has three phases: small-, medium-, and large-scaled samples explained in the next section.

5 Experimental results

In this section, the experimental results of the proposed integrated SCN considering different carbon emission regulations are presented. The presented model contains capacity, emission, and cost parameters in real time that have necessary BD features (3 Vs). In this regard, the proof of the presented structure for real applications is not presented. In this section, a theoretical structure to further join it with the mathematical model of the SCN utilizing BD characteristics is provided. The proof of the presented structure is indicated through 15 (small-, medium-, and large-sized problems) data sets. These data sets of the medium- and large-sized problems are reported using the 3Vs of BD features. First of all, a small-scale example is presented because of its capability to compare with the global optimum. This proposed model is coded by GAMS CPLEX 24.1 software to attain the global optimum. The presented model is solved employing the CPLEX method which is not able to present a solution in a sensible computational time for problems having BD traits. Moreover, hybrid meta-heuristic algorithms are presented and developed to achieve a solution for the proposed integrated SCN considering different Carbon Emission Regulations models for BD features. After that, as the presented model is MINLP, moreover for solving the problem in real time, five other approaches (SA, GA, PSO, H-1, and H-2) are proposed and also are coded using Python 3.7 software. All computations are implemented on a Core i5-4200 M CPU 2.50 GHz 6 GB processor laptop. To set the appropriate values for the algorithm’s parameters, the Taguchi procedure is used. Hence, to investigate the validation of the developed model, T test significance between algorithms for statistical validation and several sensitivity analyses is applied. The structure of the solution methodology is indicated in Fig. 6. Parameters are indicated utilizing uniformly distributed data as proposed in Table 2. The size of test problems for 15 various randomly created problem instances for products are (p) = 4–600, time (t) = 1–24, manufacturer (m) = 4–200, vehicle (v) = 2–300, and all indexes include velocity, variety, and volume characteristics. Table 3 presents the problem sizes of samples.

5.1 Taguchi method

The meta-heuristic algorithms used and suggested in this study have a control parameter set; hence, a scheme should be evaluated to set the meta-heuristics’ parameters (Fakhrzad and Goodarzian 2019; Goodarzian et al. 2020a). The behavior of algorithms would be inefficient if the algorithms are not tuned well (Fakhrzad et al. 2019; Goodarzian and Hosseini-Nasab 2019; Fathollahi-Fard et al. 2020; Goodarzian et al. 2020c; Fakhrzad and Goodarzian 2020). In this regard, the Taguchi technique is utilized to perform this. Taguchi introduced this technique to decrease experiment numbers for engineering issues. Several recent and significant studies are stated to observe the application of this technique. Interested readers can follow the work of Fakhrzad et al. (2019), Rao et al. (2020), Goodarzian et al. (2020b) for better understanding. Taguchi technique categorizes the traits of comparison into two principal phases: noise and control factors. To tune the meta-heuristics, the technique considers computing the value of answer changes according to the signal to the noise portion. Therefore, the mechanism of the Taguchi technique relates to the sort of answer. In other words, the suggested answer relevant to each category of Taguchi categorization phases is: larger the better sort, the nominal is better, and the smaller is better. Since the suggested answer of this paper is a minimization sort, “the smaller is better” is utilized for the gradation of each metaheuristic parameters. Equation (24) indicates the chosen value of the signal-to-noise proportion in this paper:

where \({Y}_{i}\) is the value of answer for \(i\) th the array of orthogonal and \(n\) is the orthogonal array number.

Here, GA, PSO, and SA along with two hybrid algorithms, i.e., H-1 and H-2, are presented. The presented levels along with the factors are shown in Table 4. Therefore, the maximum of three levels is stated to algorithms’ factors. According to this problem, the Taguchi technique reduces all number of tests by presenting several arrays of orthogonal to set up the meta-heuristics in a sensible time. The Taguchi technique proposes L9 for GA in Table 12, and also L27 is used for SA, PSO, H-1, and H-2 algorithms in Tables 13, 14, 15, and 16. Figures 7, 8, 9, 10, and 11 show the S/N ratios of the algorithms. In this regard, Tables 12, 13, 14, 15, and 16 are presented in “Appendix”.

5.2 Samples analysis

To assess the performance of the presented algorithms, three small- (S1–S5), medium- (M6–M10), and large-scaled (L11–L15) categories that include five units of each group are created. In this stage of validation, 15 different kinds of examples (small-, medium-, and large-sized) are designed and the objective functions of CPLEX (global optimum), developed (H-1), and (H-2) algorithms, and SA, GA, and PSO algorithms are tested. Outcomes are indicated in Tables 5, 6, and 7 to analyze the values of the objective function and to analyze the computational (CPU) time of attaining optimal spots, respectively.

Therefore, Figs. 12 and 13 display the algorithm behaviors of the different sizes based on the objective function and CPU time, respectively.

The outcomes of Figs. 12 and 13 and Tables 5, 6, and 7, in terms of small-sized, show that the variation between the CPLEX and the presented algorithm and developed hybrid algorithm is large and small, respectively. Since the outcomes are investigated, just a 0.06% variation from the CPLEX is allowed to encourage the merit of the suggested algorithm’s efficiency. It should be noted that the SA devotes a normal efficiency with a 38.6% average distance to the CPLEX. Then, GA and PSO, with a 62.6% and 56.8% variation to the CPLEX, respectively, assign worst performances compared with the other algorithms, while H-2 with 0.06% differences to the CPLEX proposes robust performances compared with the other algorithms. Besides, the H-1 provides better performances with a 14% variation to the CPLEX compared with the SA, GA, and PSO. However, three SA, GA, and PSO algorithms could not attain solutions near the H-1 and H-2 algorithms. These outcomes can demonstrate the robust capabilities of the H-1 and H-2 algorithms in attaining better solutions. In Fig. 12, the H-2 algorithm shows better performance than the other algorithms in all samples without any exceptions. According to Table 7 and Fig. 12, to CPU time analysis, evidence the behaviors of sensible of the SA, GA, PSO, H-1, and H-2 algorithms with an average of 35.12, 43.28, 38, 33.04, and 30.38 s to attain the reasonable spots, respectively.

In terms of medium- and large-sized instances, the H-1 algorithm indicates a better efficiency than the other algorithms. From Tables 5 and 6, the outcomes display a total of 0.09% in medium- and large-scale the values of the better objective function of the developed algorithms. In this regard, the H-1 algorithm indicates better performance than the other algorithms in all ten samples according to the schematic view of Fig. 13. As a result, more reliable outcomes can be expected from the developed algorithms in larger cases. In Table 7, the acceptable performance of the H-2 algorithm based on the average time for achieving outcomes is proved, whereas attains reasonable spots after processing all iterations in around 94 and 164 s in medium- and large-sized, respectively. Finally, the efficiency of the two developed algorithms is better than the SA, GA, and PSO according to the presented analysis.

Consequently, total outcomes determine that the developed hybrid algorithms maximize the potential of attaining more reasonable solutions. The most important advantage of this algorithm in terms of the solution quality, both H-1 and H-2 algorithms propose a robust solution, and in terms of CPU time, both of the developed algorithms prove robust and powerful efficiencies. As the scale of the samples become larger, the CPLEX method could not attain reasonable solutions and using existing algorithms (SA, GA, and PSO) and hence the need for the developed algorithms is inevitable. In this regard, the presented algorithms demonstrate sensible capabilities. As a result, investigating the reliable and robust efficiency of both the H-1 and H-2 algorithms proves the validation process in comparison with the CPLEX.

5.3 T test significance

In this subsection, the T test is performed between the proposed algorithms for statistical comparison. The considered zero hypotheses in the T test show that there is no significant variation among the solution of the proposed algorithms (SA, GA, PSO, H-1, and H-2). Then, for the small-sized and medium- and large-sized problem instances possessing big data characteristics, the t test is reported separately. The CPLEX model and the proposed meta-heuristic algorithms for five small-, medium-, and large-scale examples are solved, respectively. Moreover, for the small-scale problem samples and medium- and large-scale problems examples generated utilizing BD characteristics, the T test significance is performed separately. Then, the two methods are not markedly different, if the attained t value is less than the first t value from the table. Thus, zero hypothesis is accepted that indicates that the presented algorithms can be utilized for problems in which MINLP (CPLEX) is unable to solve the medium- and large-sized problems in a reasonable time. The results of the T test for all problems in different sizes on 95% and 99% confidence intervals are reported in Table 8.

5.4 Sensitivity analysis

In this subsection, a set of sensitivity analyses have been carried out on the significant parameters of the presented model to recognize the behavior of the model of Integrated SCN considering different carbon emission regulations using BD along with the real savings of the presented problem more efficiently. Moreover, a large experiment instance such as L12 considering 14 products, 12 manufacturers, ten vehicles, and six periods of times is elected. To investigate the model, all the algorithms in this paper are considered. A number of variations containing the rate of the carbon tax (\(\rho \)) and production (\({\alpha }_{pm}^{t})\), ordering (\({\beta }_{pm}^{t}\)), transportation (\({\delta }_{mv}^{t}\)), and inventory holding (\({\varphi }_{p}^{t}\)) costs for the proposed SCN model are considered. Each analysis is categorized into five instances numbered as I1 to I5. Eventually, all outcomes are shown in Figs. 14 and 15 and Tables 9 and 10.

By increasing the rate of the carbon tax (\(\rho \)), sensitivity analyses have been presented. In Table 9, details are provided. To recognize the behavior of objective functions for a total cost, values are depicted in Fig. 14. As a result, by the increasing amount of this parameter, the objective function is decreased.

The costs of production, ordering, transportation, and inventory holding are analyzed. The outcomes of the objective function are indicated in Table 10, and the behavior of total cost is shown in Fig. 15. First of all, the behavior of the objective function is increased by increasing the number of costs.

The last sensitivity analysis is on two important factors to include crossover percentage (\(pCrossove\) r) and mutation percentage (\(pMutation\)) of the best meta-heuristic algorithm (H-2). Each sensitivity analysis is classified into five instances numbered as I1–I5. Then, all results of the H-2 algorithm are indicated in Figs. 16 and 17 and Table 11. According to Figs. 16 and 17, the value of the objective function increases slightly by raising two factors of the H-2 algorithm.

5.5 Discussion

In this paper, a new supply chain network problem considering carbon emissions regulation policies utilizing BD characteristics under chance-constrained programming is designed. Three carbon emissions regulation policies including carbon capacity-and-trade, the strict capacity on emission, and the carbon tax on emissions in the SCN problem are provided for the first time simultaneously. Therefore, according to the assumptions in the proposed problem, a new MINLP mathematical model is formulated. To solve the model, five meta-algorithms include SA, GA, PSO, H-1, and H-2 algorithms were utilized. The reason for choosing these meta-heuristic algorithms is that these algorithms are single-solution and population-based algorithms in the field of approximation optimization. GA and SA are easy to implement and have the ability to handle random types of objectives and constraints. GA and SA can be used independently to solve a given problem as it does not depend on other algorithms or heuristics. GA and PSO can be used to handle problems whose constraints and objective functions are nonlinear or discontinuous, and they use simple operators that can be used to solve problems that have high computational complexity. The calculation in PSO, SA, GA, and also hybrid algorithms is simple and useful in scientific research and in engineering. On the other hand, these algorithms are known as a very powerful and efficient algorithm in the literature. The ability of these proposed algorithms to search and exploit simultaneously provides a theoretical rationale, and successful application to real-world problems reinforces the conclusion that the proposed algorithms are very robust and coherent methods of optimization. SA, GA, and PSO algorithms are better than other methods based on artificial intelligence because of their greater strength and durability. Unlike older artificial intelligence systems, SA, GA, and PSO algorithms are not easily interrupted by small changes in input values or significant amounts of noise in the system. In addition, in the search for a large state space, the use of SA, GA, and PSO algorithms has many greater advantages over conventional search methods in other optimization techniques such as linear/nonlinear programming and random search. The idea of hybrid algorithms has been formed due to the functional and structural differences of different optimization algorithms. Its purpose is to create hybrid algorithms that can use the strengths of hybrid algorithms to perform better in solving various optimization problems. Both of the developed hybrid algorithms in this research are the hybrid SA and GA algorithms and also hybrid SA and PSO algorithms. This hybridization has improved the performance and efficiency of these developed algorithms. Compared to other existing algorithms in the literature, the speed of convergence and avoidance of the algorithm's inclination toward optimal local solutions have been significantly improved. Comparison of these algorithms can test the performance and efficiency of the hybrid new meta-heuristic algorithm very well.

As a result, the tuning of meta-heuristic algorithms according to the Taguchi method was discussed. In order to study the features of these algorithms in more detail, 15 numerical examples were generated and comparisons between these algorithms were performed. The statistical analysis of these algorithms shows that the H-2 algorithm has a significant advantage and the best efficiency in CPU time and T test significance. In terms of the CPU time and T test significance, GA and PSO algorithms presented almost the same results, whereas GA has the worst performance than other proposed algorithms. All in all, it can be argued that the H-2 algorithm has a high convergence speed than other presented algorithms that can be propped for solving single-objective optimization problems with BD characteristics.

5.6 Managerial and theoretical insights

In this study, the integration of three carbon regulations including the strict capacity on carbon emissions, a carbon tax on emissions, and capacity-and-trade has been discussed in the multi-product and multi-period supply chain problems and operation research models. The sensitivity analysis results show that operational decisions can be decrease carbon emissions without a considerable rise in the total costs. In terms of the sensitivity analysis on the parameters of the transportation, ordering, inventory holding, and production costs, it is specified that a gradual increase in costs can slightly increase the presented objective function in this paper. The results of the sensitivity analysis can help industries and companies to manage the increase in the carbon tax rate without causing any extra costs. This can hence generate a competitive advantage for them. It is clear that increasing available costs in the supply chain models leads to the increase in total cost. Hence, in order to limit generated carbon emissions from different supply chain operations such as production, ordering, and transportation, there need to be adequate processes that should be incorporating carbon emission parameters and use energy-efficient devices and equipment in the operational decisions. The SCN model seeks to integrate these three carbon emission regulations into a single model. Additionally, the parameters of the model use the 3 V (volume, velocity, and variety) of BD characteristics. The voluminous data being created in the SCN can be highly insightful. Accordingly, it can lead to increased profitability and better and more robust decision-making for all the industries, stakeholders, and companies. On the other hand, very little study has been performed in the literature that integrates the main characteristics of BD in the supply chain network models. In this regard, the suggested MINLP model is a novel integration of the supply chain problems along with BD and carbon emissions. The developed integrated mathematical modeling presents production, ordering, transportation, and inventory holding of the products in each time period in order to minimize the costs of the relevant carbon emission under three various carbon emission regulations. The proposed model can be further improved to combine more parameters to cope with large-sized problems. Finally, it opens up opportunities for scholars to provide a further contribution toward the integration of the BD characteristics in large-sized problems. The hybrid meta-heuristic algorithms have been developed due to possessing BD characteristics of the parameters and complexity of the SCN model. As a result, the hybrid algorithms have less CPU time and high convergence speed than the original meta-heuristics, while managers in industries and companies can employ the proposed hybrid meta-heuristic algorithms to solve the ordering–transportation–inventory holding–production problem in the supply chain network.

6 Conclusion and future works

In this research, firstly, a new SCN model for production, ordering, transportation, and inventory holding problem has been developed. In this regard, an MINLP mathematical model for the proposed problem under carbon emissions has been formulated. In the following, we will explain completely about findings of this research based on research novelty. Then, it is as follows:

-

The first novelty of this study is that it proposes a new production, ordering, transportation, and inventory holding problem for a supply chain network based on different carbon emission regulations using BD concepts. Also, a MINLP model for SCN problem has been formulated. A chance-constrained programming-based SCN model has been suggested. The carbon trading policy to manage and compute whole emissions incurred during production, inventory holding, and transportation, and sold/bought emissions is related to the objective function in terms of the cost of carbon in the SCN model. In this regard, three different carbon emission regulations including carbon capacity-and-trade, the strict capacity on emission, and the carbon tax on emissions simultaneously have been used to assess the carbon emissions and minimize total cost along with emission cost for the first time. Hence, to manage and recognize the whole carbon emissions through transportation, inventory holding, and production, the strict capacity on emission scenarios, the carbon emission tax regulation, and the carbon capacity-and-trade technique are employed in the mathematical model. Besides, the cost of carbon emission through transportation, ordering, production, and inventory holding is introduced.

-

The second contribution of this paper is that this study uses parameters possessing big data characteristics (3 Vs) to solve the proposed model, while BD characteristics in the SCN model are also examined. In terms of the fluctuations of capacities, demand, and costs, BD obtained a real-time modification in the parameters. Moreover, by combining BD into the supply chain network models, the optimal solution of the model can investigate the fluctuations. Our study indicated that combining BD in the mathematical model of the SCN problem increases the CPU time and the model cannot find optimal solutions. To fill this gap, two hybrid meta-heuristic algorithms for medium- and large-sized problems using BD characteristics were proposed to find a near-optimal solution. Medium- and large-sized problems possessing BD characteristics are solved in a fraction of a minute utilizing developed meta-heuristic algorithms (H-1 and H-2).

-

The next novelty of this paper, both hybrid meta-heuristic algorithms, namely hybrid GA and SA (H-1) and hybrid SA and PSO (H-2), is developed for three different scale (i.e., small-, medium-, large-scale) problems utilizing BD characteristics, and these algorithms provide very close to optimal solutions. In this regard, to validate the developed hybrid algorithms, the performance of these algorithms is compared with SA, GA, and PSO algorithms. Additionally, to evaluate and tune the parameters of the suggested algorithms, the Taguchi method has been used. T-test significance for statistical analysis between the proposed algorithms has been used. Also, to validate the proposed model, a set of sensitivity analyses on important parameters are provided in sect. 5. Our finding shows that the hybrid meta-heuristic algorithm has a high convergence speed and is more robust than the original algorithms based on three criteria including CPU time, T test significance, and sensitivity analysis.

This paper demonstrates a step forward in the planning of BD application to SCNs that covers the research gaps related to the most recent studies in the field. For example, Afrouzy et al. addressed a three-objective SCN model and propose NSGA and NRGA to solve the problem (Afrouzy et al. 2017). In one of the two approaches most similar to ours, Saghaeeian and Ramezanian developed a hybrid algorithm combining the genetic algorithm and firefly algorithm which was suggested to solve the model (Saghaeeian and Ramezanian 2018). Tavana et al. proposed two hybrid meta-heuristic algorithms including genetic algorithm with variable neighborhood search and variable neighborhood search with simulated annealing (Tavana et al. 2018), whereas Kaur and Singh provided the BD concepts in supply chain management (Kaur and Singh 2018). However, the reviewed papers do not consider a mathematical model based on various carbon emission regulation policies including the strict cap on emission, carbon tax, and carbon cap-and-trade using BD features. In this study, two new hybrid meta-heuristic algorithms have been proposed.

In terms of future works, due to uncertainty parameters such as cost, capacity, and demand in this paper, researchers can consider stochastic, robust, or fuzzy strategies to tackle uncertain parameters. Additionally, members having a risk appetite in a supply chain network should be discussed. Interested scholars can also explore relevant questions in various competitive environments. Hence, in the future, the coordination policies, the pricing laws of the low-carbon, and investment decision-making laws of an SCN can be suggested along with a multistage or a multi-channel SCN. Therefore, the risk superiority of supply chain members also should be addressed when scholars stated the coordination policies, the pricing rules of the low-carbon supply chain, and investment decision-making laws. Furthermore, the proposed problem utilizing BD features can also apply various meta-heuristic algorithms or new meta-heuristics to further enhance and compare the developed hybrid meta-heuristics in this paper.

References

Addo-Tenkorang R, Helo PT (2016) BD applications in operations/supply-chain management: a literature review. Comput Ind Eng 101:528–543

Afrouzy ZA, Paydar MM, Nasseri SH, Mahdavi I (2017) A meta-heuristic approach supported by NSGA-II for the design and plan of supply chain networks considering new product development. J Ind Eng Int 14(1):95–109

Akter S, Wamba SF (2019) BD and disaster management: a systematic review and agenda for future research. Ann Oper Res 283(1–2):939–959

Alavidoost MH, Tarimoradi M, Zarandi MF (2018) Bi-objective mixed-integer nonlinear programming for multi-commodity tri-echelon supply chain networks. J Intell Manuf 29(4):809–826

Amiri SAHS, Zahedi A, Kazemi M, Soroor J, Hajiaghaei-Keshteli M (2020) Determination of the optimal sales level of perishable goods in a two-echelon supply chain network. Comput Ind Eng 139:106156

Bank M, Mazdeh M, Heydari M (2020) Applying meta-heuristic algorithms for an integrated production–distribution problem in a two level supply chain. Uncertain Supply Chain Manag 8(1):77–92

Baryannis G, Validi S, Dani S, Antoniou G (2019) Supply chain risk management and artificial intelligence: state of the art and future research directions. Int J Prod Res 57(7):2179–2202

Ben-Daya M, Hassini E, Bahroun Z (2019) Internet of things and supply chain management: a literature review. Int J Prod Res 57(15–16):4719–4742

Cheng S, Zhang Q, Qin Q (2016) BD analytics with swarm intelligence. Ind Manag Data Syst 116(4):646–666

Choi TM, Wallace SW, Wang Y (2018) BD analytics in operations management. Prod Oper Manag 27(10):1868–1883

Chung SH, Tse YK, Choi TM (2015) Managing disruption risk in express logistics via proactive planning. Ind Manag Data Syst 115(8):1481–1509

Cui Y, Kara S, Chan KC (2020) Manufacturing BD ecosystem: a systematic literature review. Robot Comput Integr Manuf 62:101861

Dai HN, Wang H, Xu G, Wan J, Imran M (2020) Big data analytics for manufacturing internet of things: opportunities, challenges and enabling technologies. Enterp Inf Syst 14(9-10):1279–1303

Del Giudice M, Chierici R, Mazzucchelli A, Fiano F (2020) Supply chain management in the era of circular economy: the moderating effect of big data. Int J Logist Manag. https://doi.org/10.1108/IJLM-03-2020-0119

Dev NK, Shankar R, Gupta R, Dong J (2019) Multi-criteria evaluation of real-time key performance indicators of supply chain with consideration of BD architecture. Comput Ind Eng 128:1076–1087

Duan Y, Edwards JS, Dwivedi YK (2019) Artificial intelligence for decision making in the era of BD—evolution, challenges and research agenda. Int J Inf Manag 48:63–71

Eberhart R, Kennedy J (1995) A new optimizer using particle swarm theory. In: MHS'95. Proceedings of the sixth international symposium on micro machine and human science. IEEE, pp 39–43

Fakhrzad MB, Goodarzian F (2019) A fuzzy multi-objective programming approach to develop a green closed-loop supply chain network design problem under uncertainty: modifications of imperialist competitive algorithm. RAIRO Oper Res 53(3):963–990

Fakhrzad MB, Goodarzian F (2020) A new multi-objective mathematical model for a citrus supply chain network design: metaheuristic algorithms. J Optim Ind Eng 14(2):127–144

Fakhrzad MB, Goodarzian F, Golmohammadi AM (2019) Addressing a fixed charge transportation problem with multi-route and different capacities by novel hybrid meta-heuristics. J Ind Syst Eng 12(1):167–184

Fakhrzad MB, Talebzadeh P, Goodarzian F (2018) Mathematical formulation and solving of green closed-loop supply chain planning problem with production, distribution and transportation reliability. Int J Eng 31(12):2059–2067

Fang Y, Ming H, Li M, Liu Q, Pham DT (2020) Multi-objective evolutionary simulated annealing optimization for mixed-model multi-robotic disassembly line balancing with interval processing time. Int J Prod Res 58(3):846–862

Fathollahi-Fard AM, Ahmadi A, Goodarzian F, Cheikhrouhou N (2020) A bi-objective home healthcare routing and scheduling problem considering patients’ satisfaction in a fuzzy environment. Appl Soft Comput 93:106385

Fattahi M, Mahootchi M, Govindan K, Husseini SMM (2015) Dynamic supply chain network design with capacity planning and multi-period pricing. Transp Res Part E Logist Transp Rev 81:169–202

Fonseca GB, Nogueira TH, Ravetti MG (2019) A hybrid Lagrangian metaheuristic for the cross-docking flow shop scheduling problem. Eur J Oper Res 275(1):139–154

Gandomi A, Haider M (2015) Beyond the hype: BD concepts, methods, and analytics. Int J Inf Manag 35(2):137–144