Abstract

Clustering by fast search and find of density peaks (CFDP) is a popular density-based algorithm. However, it is criticized because it is inefficient and applicable only to some types of data, and requires the manual setting of the key parameter. In this paper, we propose the two-stage density clustering algorithm, which takes advantage of granular computing to address the aforementioned issues. The new algorithm is highly efficient, adaptive to various types of data, and requires minimal parameter setting. The first stage uses the two-round-means algorithm to obtain \(\sqrt{n}\) small blocks, where n is the number of instances. This stage decreases the data size directly from n to \(\sqrt{n}\). The second stage constructs the master tree and obtains the final blocks. This stage borrows the structure of CFDP, while the cutoff distance parameter is not required. The time complexity of the algorithm is \(O(mn^\frac{3}{2})\), which is lower than \(O (mn^2)\) for CFDP. We report the results of some experiments performed on 21 datasets from various domains to compare a new clustering algorithm with some state-of-the-art clustering algorithms. The results demonstrated that the new algorithm is adaptive to different types of datasets. It is two or more orders of magnitude faster than CFDP.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Clustering is a fundamental approach used to organize data into distinct groups to find intrinsic hidden patterns in the data. Its applications include image processing (Pappas 1992; Leong and Ong 2017), bioinformatics (Shuji et al. 2015), social networks (Chang et al. 2014) and pattern recognition (Guo et al. 2009). Popular clustering algorithms include partition-based (MacQueen et al. 1967; Kaufman 2008), density-based (Kriegel et al. 2011; Rodriguez and Laio 2014) and hierarchical (Johnson 1967; Dasgupta 2002). Partition-based clustering algorithms typically include the k-means (MacQueen et al. 1967) and k-medoid algorithms (Kaufman 2008). They construct a single partition of the dataset based on the distance between instances. Among them, the k-medoid algorithm always requires that each cluster center is an existing instance. By contrast, the k-means algorithm uses the average value of the cluster to build the virtual center. Because an instance is always assigned to the nearest center, these approaches are unable to detect non-spherical clusters.

Density clustering (Ester et al. 1996; Rodriguez and Laio 2014) performs well on data with circular, arc and some other irregular shapes. CFDP (Rodriguez and Laio 2014) has attracted much attention because of its simplicity and good results, and it can automatically find the clustering centers. However, it is criticized because it is inefficient and applicable only to some types of data, and requires the manual setting of the key parameter. First, the time complexity of the algorithm is \(O(mn^2)\), where m and n are the number of attributes and instances, respectively. Hence, the algorithm is inapplicable to data with millions of instances. Second, for many datasets, the clustering results are often unsatisfactory in terms of purity, the Jaccard coefficient (JC), Fowlkes and Mallows index (FMI) and Rand index (RI). Third, the quality of the results depends substantially on cutoff distance threshold \(d_c\). It is difficult, if not impossible, for the user to make an optimal setting in practice.

One solution to these issues is to introduce the granular computing (Hu et al. 2016; Li et al. 2016; Qian et al. 2015; Yao and Yao 2002; Chen et al. 2015) methodology. This methodology is widely used to manage many machine learning tasks, such as classification (Wang and Musa 2014), clustering (Wilderjans and Cariou 2016; Sarma et al. 2013), recommendation (Zhang et al. 2019, 2017), active learning (Wang et al. 2017), attribute reduction (Min et al. 2011; Li et al. 2011) and three-way decisions (Huang et al. 2017; Zhao et al. 2016a, b; Li et al. 2017; Yu et al. 2016; Liu and Liang 2017). For the clustering task, each instance can be considered as the finest granule, the entire dataset can be considered as the coarsest granule, and the clustering result has the granule level between the finest and coarsest. We may construct other granule levels to address the aforementioned issues.

In this paper, we propose the two-stage density clustering (TSD) algorithm, which is highly efficient, adaptive to various types of data, and requires minimal parameter setting. Figure 1 illustrates our new algorithm through a running example. Figure 1a describes a dataset of 100 instances. The first stage is pre-clustering, as shown in Fig. 1b. We divide the dataset into ten blocks and obtain ten virtual centers \([c_1, c_2, \dots , c_{10}]\). Block size array \(\rho = [17, 11, 9, 6, 12, 11, 9, 9, 6, 10]\) is obtained for the next stage. Pre-clustering ensures the local distribution of data and decreases the data size. The second stage is the density clustering of virtual centers, as shown in Fig. 1c. First, we obtain density \(\rho _i\) of each instance and calculate its minimum distance \(\delta _i\). Second, we construct the master tree according to (\(\rho _i\), \(\delta _i\)). Finally, we cluster ten virtual centers into three blocks and obtain their cluster indices \(cl = [1, 1, 1, 3, 3, 2, 2, 2, 3, 3]\). Figure 1d shows the cluster results. All instances in each block have the same cluster indices as the virtual centers.

Running example of the TSD algorithm. a describes the input, and b shows the first stage of TSD, namely pre-clustering. The pre-clustering stage obtains the virtual centers c and block density \(\rho \), and c shows the second stage of TSD, namely the density clustering of virtual centers. Finally, d shows the cluster results

The granular computing methodology is used to design the TSD algorithm. In the pre-clustering stage, approximately \(\sqrt{n}\) small local granules are obtained using a two-round-means subroutine, where n is the number of instances. This stage does not change the distribution of the data. In the density clustering stage, both the inner-granule size and inter-granule distance are used to construct the master tree. Then, local granules are accumulated to form the final clusters.

The two-stage density clustering (TSD) algorithm has the following advantages. First, the number of instances required for density clustering is reduced to \(\sqrt{n}\). Thus, the time complexity is \(O(mn^\frac{3}{2})\), which is much lower than \(O(mn^2)\) for clustering by fast search and find of density peaks (CFDP). Second, the TSD algorithm has the advantages of the k-means algorithm and CFDP and has good adaptability. It has good clustering performance for datasets containing data of various types. Third, the density \(\rho \) is set to the number of instances in each block. The method is simple and effective and avoids manually setting the cutoff distance \(d_c\). Therefore, the main problem solved is the efficiency and parameter setting in the CFDP algorithm. The new algorithm is \(\sqrt{n}\) times faster than CFDP. It does not require the value of \(\rho \) to be set.

Experiments were performed on 21 datasets to quantify the performance of the TSD algorithm. These datasets were chosen from different applications, such as botany, materials science and games, with different data distributions. The largest dataset, Poker (Cattral and Oppacher 2007), contains 1,025,009 instances. We compared the TSD algorithm with five types of clustering algorithms: partition clustering (MacQueen et al. 1967), peak density clustering (Rodriguez and Laio 2014; Xie et al. 2016; Liu et al. 2018; Xu et al. 2016), maximum margin clustering (MMC) (Li et al. 2009), spectral clustering (Wang et al. 2011) and balanced clustering (Liu et al. 2017a). Four external evaluation functions were used to evaluate the clustering results. The time complexity was verified on seven large datasets. The experimental results demonstrated that the TSD algorithm had good clustering performance on various types of datasets. It was two or more orders of magnitude faster than CFDP.

The remainder of this paper is organized as follows: In Sect. 2, we review five types of clustering algorithms. In Sect. 3, we present the TSD clustering algorithm. We describe experiments on 21 datasets in Sect. 4. Finally, we draw a conclusion in Sect. 5.

2 Related work

In this section, we review five types of clustering algorithms: partition-based clustering (MacQueen et al. 1967; Kaufman 2008), density-based clustering (Kriegel et al. 2011; Rodriguez and Laio 2014; Xie et al. 2016; Liu et al. 2018; Xu et al. 2016), maximum margin clustering (MMC) (Li et al. 2009), spectral clustering (Chen and Cai 2011; Wang et al. 2011) and balanced clustering (Liu et al. 2017a).

2.1 Partition-based clustering

Partition-based clustering algorithms, such as the k-means (MacQueen et al. 1967) and k-medoid (Kaufman 2008) algorithms, are classic and efficient. The k-means algorithm calculates cluster centers iteratively as follows:

-

Step 1. Initialize k centers \(c_1\) ...\(c_k\) using random sampling.

-

Step 2. Each instance belongs to the block of the nearest center.

-

Step 3. Each new center takes the mean values of all instances of its block.

-

Step 4. Repeat Steps 2 and 3 until the cluster centers do not change.

Because the Euclidean distance is typically used as a similarity measure, partition-based clustering algorithms cannot detect non-spherical clusters.

2.2 Peak density clustering

Density clustering (Ester et al. 1996; Rodriguez and Laio 2014) explores clusters with different shapes based on the data density. DBSCAN (Ester et al. 1996) can find clusters with various shapes and manage noise. It controls class growth based on a density threshold. However, it does not perform well for overlapping densities.

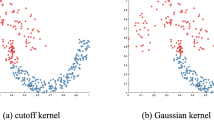

Similar to DBSCAN, CFDP (Rodriguez and Laio 2014) aims to detect non-spherical clusters. Cluster centers are characterized by a higher density than their neighbors and by a relatively large distance from instances with higher densities. For each instance i, CFDP computes two quantities: its density \(\rho _i\) and its minimum distance \(\delta _i\) from instances of a higher density. Density \(\rho _i\) of instance i is defined as

where \(\chi (x) = 1\) if \(x < 0\) and \(\chi (x) = 0\) otherwise, and \(d_c\) is a cutoff distance. \(\delta _i\) is measured by computing the minimum distance between instance i and any other instance with a higher density:

For the instance with the highest density, we conventionally take \({\delta _i} = {\max }(d_{ij})\).

CFDP detects non-spherical clusters and automatically finds the correct number of clusters. However, it encounters the following drawbacks in practice:

-

(1)

High time complexity: The time complexity of the algorithm is \(O(mn^2)\), where m and n are the number of attributes and instances, respectively. The high time complexity makes it impossible to use the algorithm for large-scale data clustering.

-

(2)

Difficult to set \(d_c\) and accurately select the cluster centers. The performance of the algorithm depends on user-specified cutoff distance \(d_c\). No specific method was presented in (Rodriguez and Laio 2014).

Liu et al. (2017b) also discovered this problem. He has the following description in the abstract of the paper. “However, the improper selection of its parameter cutoff distance \(d_c\) will lead to the wrong selection of initial cluster centers, but the CFDP cannot correct it in the subsequent assignment process. Furthermore, in some cases, even the proper value of \(d_c\) was set, initial cluster centers are still difficult to be selected from the decision graph.” Chen et al. (2016) described this problem in his paper. “But is has two big challenges when selecting cluster centers. The first challenge is it needs to manually select cluster centers. Even so, on some datasets, the number of cluster centers it generates will be either more or less than the right number. The second one is it is unable to group data points correctly when a cluster has more than one centers.”

Various methods have been proposed to further improve the CFDP algorithm. Liu et al. (2017b, 2018), Chen et al. (2016), Xie et al. (2016), Du et al. (2016) introduced k-nearest neighbors (KNN) ideas into the CFDP algorithm to improve its adaptive ability and performance. Xu et al. (2016), Liang and Chen (2016), Lu and Zhu (2017) combined the idea of hierarchy with the CFDP algorithm to improve efficiency.

Xie et al. (2016) proposed a new robust fuzzy KNN density peak clustering (FKNN-DPC) algorithm. The proposed algorithm introduced a uniform metric to calculate the local density and developed two assignment strategies to detect the true distribution of a dataset. Liu et al. (2018) proposed a shared-nearest-neighbor-based clustering by fast search and find of density peaks (SNN-DPC) algorithm. They presented three new definitions: SNN similarity, the local density \(\rho \) and the distance from the nearest larger density point \(\delta \). These definitions take the information about nearest neighbors and shared neighbors into account. Xu et al. (2016) proposed a density peak-based hierarchical clustering method (DenPEHC) algorithm that directly generates clusters on each possible clustering layer and introduced a grid granulation framework to enable DenPEHC to cluster large-scale and high-dimensional datasets.

As opposed to the above methods, TSD uses two-stage clustering. The first stage uses a two-round-means algorithm to better handle spherical datasets. The second stage uses the improved CFDP algorithm to efficiently process non-spherical datasets. Thus, TSD effectively integrates the idea of hierarchical clustering, which can improve the adaptability of the algorithm.

2.3 Maximum margin clustering

MMC algorithms (Li et al. 2009) aim to find an optimal (maximum) hyperplane in high-dimensional feature space. Specifically, the hyperplane and labeled sample can be obtained by optimizing the following objective function:

where \(\phi (\cdot )\) denotes a nonlinear mapping from the original space to high-dimensional space. \(\xi _i \ge 0\) is the relaxation variable that corresponds to \(x_i\). l is the constant controlling the balance between classes. \(\omega \) and b uniquely determine the hyperplane. Naturally, the clustering label is optimized by the objective function.

MMC is limited to small to medium-sized datasets because of the semidefinite program. The LGMMC algorithm (Li et al. 2009) improves efficiency and scalability by maximizing the margin of opposite clusters using label generation.

2.4 Spectral clustering

Spectral clustering (Chen and Cai 2011; Wang et al. 2011) is evolved from graph theory. It mainly includes three steps:

-

Step 1. Construct a new matrix to represent the original dataset.

-

Step 2. Compute the eigenvalues and eigenvectors of the matrix. Map each instance to a low-dimensional representation based on the eigenvectors.

-

Step 3. Assign cluster indices according to the new representation.

Spectral clustering has advantages in managing sparse and high-dimensional datasets. The disadvantage is that the time complexity is too high and cannot manage intersections. Chen and Cai (2011) proposed the landmark-based spectral clustering algorithm to improve efficiency. Wang et al. (2011) proposed the spectral multi-manifold clustering (SMMC) algorithm to manage intersections.

2.5 Balanced clustering

Balanced clustering (Liu et al. 2017a) is required in a variety of applications, such as photo query systems (Dengel et al. 2011) and wireless sensor networks (Chuang et al. 2009). These balanced algorithms can be categorized into two types: hard-balanced and soft-balanced. Liu et al. (2017a) proposed a soft-balanced BCLS algorithm based on least square linear regression. It considers a balance constraint to regularize the clustering model. The purpose is to minimize

The algorithm achieves balanced clustering by minimizing the square sum of instances in each cluster.

3 Proposed algorithm

In this section, we present the TSD algorithm with time complexity analysis.

3.1 Algorithm description

Figure 2 shows our TSD clustering framework. Table 1 is the symbols and variables used in Fig. 2. Stage I is pre-clustering. The dataset is divided into e clusters using the two-round-means algorithm (Algorithm 1), \(e = \sqrt{n}\). \(\sqrt{n}\) is an empirical value used in many studies. In Yu and Cheng (2001), the authors have provided a theoretical explanation for this rule. Therefore, we set \(\sqrt{n}\) as the block number in Algorithm 1. This stage computes block information \(b_{1 \times e}\) and determines virtual centers \(c_{1 \times e}\). Simultaneously, we obtain block size array \(\rho = [\left| b_1 \right| ,\left| b_2 \right| , \dots , \left| b_e \right| ]\) for the next stage. We do not use the k-means algorithm directly. Instead, we control the iteration to exactly two to save runtime because more iterations require more time, but the performance of the clustering cannot be substantially improved. We discuss this issue in Sect. 4.

Stage II is the density clustering of all virtual centers (Algorithm 2). This stage acquires cluster indices \(cl_{1 \times e}\). First, density \(\rho _i\) of each virtual center is obtained directly from the first stage. Second, we construct master tree \(ms_{1 \times e}\) based on density \(\rho \) and minimal distance \(\delta \). Finally, we cluster the virtual centers according to the master tree. The algorithm has the following advantages compared with CFDP. First, we only need to cluster e virtual centers. This is the major technique that reduces the time complexity. Second, density \(\rho _i\) is redefined as the size of \(b_i\) without setting cutoff distance \(d_c\).

Finally, all remaining instances in each block have the same cluster indices as the virtual centers. They are assigned cluster indices \(l = [l_1, l_2, \dots , l_n]\), that is, \(\forall x \in {b_i}, l_x = c{l_i}\).

3.1.1 Stage I: Two-round-means

Algorithm 1 lists the two-round-means algorithm. It mainly includes three steps:

-

Step 1. Initialization. Line 1 initializes \(b_1 = b_2 = \dots = b_e = \emptyset \). Line 2 initializes virtual centers \(cl_{1 \times e}\) using random sampling.

-

Step 2. Compute block information. Lines 6–11 find the nearest center of each instance and assign it to the corresponding block. Line 9 finds the nearest center \(c_j\) for \(x_i\), denoted as m. Line 12 adds the instance \(x_i\) to block \(b_m\).

-

Step 3. Recalculate the virtual centers. The virtual centers need to be updated based on the generated block information. Line 14 recalculates the virtual center \(c_k\) of block k according to Eq. (5).

$$\begin{aligned} {c_k} = \frac{{\sum \nolimits _{x_i \in {b_k}} x_i}}{{\left| {{b_k}} \right| }}. \end{aligned}$$(5)

The loop terminates after two iterations for the following reason: For the k-means algorithm, k is often a small integer that corresponds to the number of clusters. Hence, the algorithm may run many iterations to converge to k clusters. By contrast, in Algorithm 1, the number of blocks is e, which is quite large. These e local blocks influence, but do not determine, the final clusters.

3.1.2 Stage II: Density clustering

Algorithm 2 lists the density clustering algorithm. It mainly includes the following two steps:

Step 1. Construct the master tree.

Lines 2–3 obtain density \(\rho \) and sort. In CFDP, density \(\rho \) is computed based on Eq. (1). However, it requires the manual setting of cutoff parameter \(d_c\). Considering the local distribution characteristics and nonparametric properties, the density \(\rho _i\) is redefined as

where \(|b_i|\) is the size of block i. It can be obtained directly from the first stage of pre-clustering.

Lines 4–12 calculate minimum distance \(\delta \) and construct the master tree. From lines 5–12, \(\delta \) is computed based on Eq. (2). \(\delta _i\) is the minimum distance between instance i and any other instance with a higher density. Line 6 indicates that the search ranges from \(c_{q_1}\) to \(c_{q_{i-1}}\), where \(q = [q_1, \dots , q_e]\) is the index array according to \(\rho \) in descending order, \(\rho _{q_1} \ge \rho _{q_2} \ge \dots \ge \rho _{q_e}\). In line 8, closest distance \(d(c_{q_i}, c_{q_j})\) is determined, and termed \(\delta _{q_i}\). According to Definition 1, a master is the nearest neighbor with a higher density. Line 9 updates the master of \(q_i\) as \( q_j\). Thus, the master tree is built. When there are multiple masters, we choose that with the smallest index.

Definition 1

(Wang et al. 2017) Let \(x_i, x_j \in U\) and \(d(x_i, x_j)\) be the distance between \(x_i\) and \(x_j\). \(x_j \in U\) is called a master of \(x_i\) iff

-

1.

\(\rho (x_j) > \rho (x_i)\); and

-

2.

\(\forall x_l \in U\), \(\rho (x_l) > \rho (x_i) \Rightarrow d(x_i, x_j) \le d(x_i, x_l)\).

Step 2. Cluster and assign cluster indices to all virtual centers.

The cluster centers are characterized by a higher density than their neighbors and by a relatively large distance from instances with higher densities (Rodriguez and Laio 2014). To consider both density and distance, we introduce the importance measure:

Lines 13–14 compute \(\gamma \) and sort. \(p = [p_1, \dots , p_e]\) is the index array according to \(\gamma \) in descending order, and \(\gamma _{p_1} \ge \gamma _{p_2} \ge \dots \ge \gamma _{p_e}\). Lines 15–17 select k centers in turn according to \(p = [p_1, \dots , p_e]\). k is given by the user, and it is usually set to the actual number of clusters. Line 16 assigns the cluster index i to the ith center. Lines 18–22 assign cluster indices to non-center instances. The cluster assignment is performed in a single step (line 20), in contrast to other clustering algorithms, where an objective function is optimized iteratively (MacQueen et al. 1967; Kaufman 2008). We prove that each block is assigned a real label.

Property 1

On the completion of Algorithm 2, \(\forall 1 \le i \le e\), \(cl_i \ne -1\).

Proof

We prove the property using mathematical induction.

(Basis) From Eq. (6) and line 3, we know that \(q_1\) corresponds to the instance with the maximal density. Because it also has the maximal distance, according to Eq. (7) and Line 14, \(q_1 = p_1\).

Line 16 assigns \(cl_{p_1} = 1\), which also indicates that \(cl_{q_1} = 1 \ne -1\).

(Induction) Suppose that \(cl_{q_k} \ne -1\) for \(1 \le k \le i - 1\) while executing line 20.

Because \(ms_{q_i}\) is the master of \(q_i\), we have \(\rho _{ms_{q_i}} > \rho _{q_i}\). Moreover, blocks are sorted in line 3. Let \(q_j = ms_{q_i}\), then naturally \(j < i\). According to the hypothesis, \(cl_{q_j} \ne -1\), and \(cl_{q_i} \ne -1\) after executing line 20.

Combining the basis and induction, the property holds. This completes the proof. \(\square \)

3.2 Complexity analysis

We analyze the space and time complexities of the algorithm.

Proposition 1

Let m and n be the number of attributes and instances, respectively. For Algorithm TSD, the space and time complexity are O(n) and \(O(mn^\frac{3}{2})\), respectively.

Proof

Table 2 lists the space complexity. \(\rho \), \(\delta \) and \(\gamma \) require \(O(n^\frac{1}{2})\) of space. Virtual center c and its cluster index cl also require \(O(n^\frac{1}{2})\) of space. Block information b and cluster index l require O(n) of space. Thus, the total space complexity is

Table 3 lists the time complexity. Algorithm 1 takes \(O(mn^\frac{3}{2})\) of time. Algorithm 2 takes O(mn) of time. The final step for cluster index sharing takes O(n) of time. Therefore, the time complexity of Algorithm TSD is

This completes the proof. \(\square \)

4 Experiments

We conduct experiments to analyze the adaptability, clustering performance and efficiency of the TSD algorithm and to answer the following question:

-

(1)

Does the TSD algorithm have better clustering performance than classical and state-of-the-art clustering algorithms, such as k-means, CFDP, CFSFDP+A and SNN-DPC?

-

(2)

Is the TSD algorithm efficient?

The computations are performed on a Windows 10 64-bit operating system with 8 GB RAM and Intel (R) Core(TM) i5-8300H CPU @2.30 GHz processors, using Java and MATLAB software. The TSD source code is available at www.fansmale.com/software.html and https://github.com/FanSmale/TSD.

4.1 Datasets

We chose different types, shapes and sizes of datasets for the experiments. These included six synthetic datasets obtained from the literature (Rodriguez and Laio 2014), 12 from the University of California at Irvine (UCI) ML repository (Blake and Merz 1998) and one from the literature (Stenger 2011). The six synthetic datasets had typical shape distributions. The number of instances ranged from 150 to 1,025,009, the number of attributes ranged from 2 to 40, and the number of classes ranged from 2 to 17. These datasets are listed in Table 4.

Note that the class attributes of the datasets were not used in the clustering processing. We first removed the labels for all instances, then predicted the cluster indices and finally measured the clustering performance according to the true label.

Figure 3 illustrates the six synthetic datasets with two-dimensional visualization graphs. The six synthetic datasets had different data distributions, shapes, cluster sizes and numbers of clusters. Figure 3a shows the typical non-spherical dataset, which was divided into three classes, thereby forming three semicircular rings. Table 4 (lines 7–11) lists the selected five typical small UCI datasets. It is perhaps the best-known database in the clustering, classification literature (Chiroma et al. 2014). Table 4 (lines 12–21) lists ten large datasets of different types and different domains. The Poker (Cattral and Oppacher 2007) dataset contained 1,025,009 instances and 10 attributes, and the DLA (Ugulino et al. 2012) dataset contained 165,633 instances and 17 attributes.

4.2 Evaluation measure

We use four external evaluation functions to evaluate the clustering algorithm, including purity, JC, FMI and RI.

We assume that the clusters given by the clustering algorithm were divided into \(C = {(C_1, C_2, \dots , C_k)}\) and the clusters given by the reference model were divided into \(C^* = {(C_1^*, C_2^*, \dots , C_s^*)}\). \(\lambda \) and \(\lambda ^*\) denote the cluster index vectors that correspond to C and \(C^*\), respectively. Accordingly, we compute the following four parameters:

According to Eqs. (10)–(13), we obtain the external evaluation functions as follows:

where n is the number of instances. Obviously, the results of the above performance metrics were all between zero and one, the bigger the better.

4.3 Algorithm adaptability

In this section, we design our experiments to observe the effect of the preporcessing technique for different shapes of datasets and elaborated the parameter settings.

4.3.1 Impact of parameter settings

In this subsection, we mainly answer the following two questions through experiments:

-

(1)

Is it appropriate to set the number of iterations to two?

-

(2)

Is it appropriate to divide the dataset into e clusters?

We chose seven different sizes and different types of datasets, including Iris and Seeds. Figure 4a shows the change of purity when the number of iterations r varied from 2 to 19. For Twonorm, Magic, Glass and Ring, the purity variation was small. Increasing the number of iterations cannot improve its performance. For Seeds, the highest purity was achieved by iterating twice. For Jain and Iris, the difference in optimal performance was less than 10%. Therefore, it was appropriate to set number of iterations r to two. The efficiency of clustering is greatly improved while the clustering performance is guaranteed.

Figure 4b shows the change of purity when number of clusters k varied from 0.5e to 1.5e. The performance is the best when \(k = e\). If the number of clusters continued to increase, purity no longer increased. Therefore, it was appropriate to divide the dataset into e clusters.

4.3.2 Effect of the preprocessing technique for different shapes of datasets

Figure 5 shows the pre-clustering and sampling process of the original dataset. We choose synthetic datasets with typical shape distributions, such as R15, Jain, Aggregation and Compound. Figure 5a, d, g, j shows the original distribution of datasets. Jain is two semicircles; Aggregation is seven clusters with different shapes and sizes; Compound is petals and scatter patterns; R15 was three rings formed by 15 evenly distributed clusters.

Figure 5b, e, h, k shows the e clusters formed by two-round-means. Figure 5c, f, i, l shows the e virtual centers. Figure 5 shows that local blocks b and virtual centers c maintained good data distribution characteristics. The pre-clustering process did not destroy the data structure.

4.4 Comparison with state-of-the-art clustering algorithms

Because a considerable amount of work has been conducted on clustering, it is interesting and meaningful to compare our proposed TSD algorithm with these state-of-the-art methods. We compare the TSD algorithm with state-of-the-art eight clustering approaches, including partition-based clustering (k-means algorithm) (MacQueen et al. 1967) and density-based clustering (CFDP (Rodriguez and Laio 2014)Footnote 1), balanced clustering (BCLS) (Liu et al. 2017a)Footnote 2, SNN-DPC (Liu et al. 2018)Footnote 3, FKNN-DPC (Xie et al. 2016), DenPEHC (Xu et al. 2016)Footnote 4, CFSFDP+A and CFSFDP+DE (Bai et al. 2017).Footnote 5

k-means is tested using Weka’s built-in codes. CFDP, SNN-DPC, FKNN-DPC, DenPEHC, BCLS, CFSFDP+A and CFSFDP+DE use the source code provided by the algorithm authors. We list the URLs of the source code provided by the author. For SNN-DPC, we adopt the original results in the paper to avoid parameter adjustment. For datasets not available in the original paper, we choose k = 11 for testing. (For k values, the recommended range in the original paper is 5-30.) The CFDP algorithm uses the optimal parameter setting and the Gaussian distance described in the original paper. The TSD algorithm is run ten times, and the average purity is calculated. At the same time, we list the optimal results of the TSD algorithm. This result is for reference only and is referred to as TSD-H for short.

Comprehensive evaluations of clustering performance are performed on 21 datasets, which cover a wide range of properties. For CFDP, SNN-DPC, FKNN-DPC, DenPEHC and BCLS, the code runs on the MATLAB platform. Memory overflows when testing large datasets. Therefore, for the Penbased, Magic and Kr-vs-k datasets, we sample 10% of the instances for each class and formed new datasets. For the ConfLongDemo, DLA, Poker and Skin datasets, we sample 1% of the instances for each class and formed new datasets.

4.4.1 Comparison on synthetic datasets

Tables 5, 6, 7 and 8 compare the purity, JC, FMI and RI of TSD with that of the eight state-of-the-art clustering algorithms on synthetic datasets. The mean ranks of algorithms are listed from a statistical point of view. The best and second-best results are highlighted in boldface and italic, respectively. For TSD, the mean ranks are 5.83, 5.33, 6.42 and 4.67, respectively.

4.4.2 Comparison on benchmark datasets

Tables 9, 10, 11 and 12 compare the purity, JC, FMI and RI of TSD with that of the eight state-of-the-art clustering algorithms on benchmark datasets. We perform statistical analysis of the experiments using the methods described in Reyes et al. (2018) and Zhou (2016). The average ranks are obtained by applying a Friedman test Reyes et al. (2018), which is the most well-known nonparametric test. The Friedman test analyzes whether there are significant differences among the algorithms. TSD is generally superior to the eight clustering algorithms. For purity, the average rank is 3.30. The average rankings of other eight algorithms are 4.13, 4.37, 7.70, 3.33, 5.17, 8.17, 4.43 and 4.40, respectively. In particular, the TSD algorithm has the highest purity on three datasets.

4.4.3 Comparison on domain datasets

We adopt actual line loss data to further verify the performance of TSD. Line loss data contain 2585 instances, i.e., 2585 electrical transformer districts. The five attributes are wire size, wire length, active power, reactive power and energy indication. Decision attributes are five types of districts.

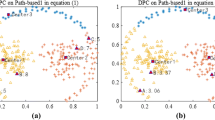

Figure 6a illustrates the purity of TSD and the eight clustering algorithms. Figure 6b–d illustrates the comparison of JC, FMI and RI, respectively. For TSD, purity, JC, FMI and RI are 0.9280, 0.8109, 0.8955 and 0.9058, respectively. TSD ranks first among all four indicators. The purity of the other eight clustering algorithms is 0.6572, 0.8300, 0.6112, 0.6274, 0.6112, 0.6112, 0.6758 and 0.6271. The JC of the other eight clustering algorithms is 0.5533, 0.3325, 0.4509, 0.1951, 0.2252, 0.4509, 0.4786 and 0.4478. The FMI of the other eight clustering algorithms is 0.7417, 0.5240, 0.6715, 0.3448, 0.3738, 0.6715, 0.6879 and 0.6516. The RI of the other eight clustering algorithms is 0.7973, 0.6538, 0.4509, 0.5404, 0.5175, 0.4509, 0.7623 and 0.7389. TSD has good performance in the actual line loss prediction.

4.4.4 Comparison with CFDP optimization algorithm

Finally, we compare TSD with three CFDP optimization algorithms: DPCG (Xu et al. 2018a), GDPC (Xu et al. 2018b) and CDPC (Xu et al. 2018b). DPCG (Xu et al. 2018a) is an improved grid-based density peak clustering algorithm. GDPC (Xu et al. 2018b) and CDPC (Xu et al. 2018b) are two improved density peak clustering algorithms that quickly find cluster centers. For these three algorithms, the author did not provide source code. To ensure optimal performance, we adopt the datasets and results provided in these references for comparison. There is no need to run the program and adjust parameters.

Table 13 compares the purity of TSD and DPCG. For six datasets, TSD performed better than DPCG on four datasets (e.g., Soybean, Ionosphere). The mean rank of TSD and DPCG are 1.33 and 1.67, respectively. Table 14 compares the purity of TSD, GDPC and CDPC. For six datasets, TSD performed better than DPCG on four datasets. The average rankings of GDPC, CDPC and TSD are 2.33, 2.00 and 1.67, respectively.

4.5 Runtime comparison

First, we compare the TSD algorithm with several state-of-the-art density peak clustering algorithms on the Matlab platform, including SNN-DPC, FKNN-DPC, DenPEHC, CFSFDP+A and CFSFDP+DE. CFSFDP+A and CFSFDP+DE are two fast optimization algorithms for CFDP (Bai et al. 2017). For the SNN-DPC algorithm, memory overflow occurred when computing datasets with more than 30,000 instances. Therefore, we select three datasets, DLA0.2, Magic and Penbased, to quantify the efficiency of the TSD algorithm, where DLA0.2 is a 20% random sample of DLA.

Figure 7 shows the relationship between the size n of the training dataset and the runtime. Note that we use logarithmic coordinates for the runtime. The results show that the TSD algorithm effectively improves efficiency and reduces running time. It is at least two orders of magnitude faster than SNN-DPC, DenPHEC and FKNN-DPC. For example, on the DLA0.2 dataset with \(n = 3.3 \times 10^4\), the runtime for TSD is 1891 ms, compared with \(1.7 \times 10^6\) ms for SNN-DPC, \(6.1 \times 10^5\) ms for FKNN-DPC, \(2.4 \times 10^7\) ms for DenPEHC, 21,561 ms for CFSFDP+A and 624 ms for CFSFDP+DE. That is, TSD is 12,728 times faster than DenPEHC, 923 times faster than SNN-DPC, 323 times faster than FKNN-DPC and 11 times faster than CFSFDP+A. TSD is slightly slower than CFSFDP+DE algorithm.

Second, we use some larger datasets to compare the TSD algorithm with the classical CFDP and k-means algorithms on the JAVA platform. Figure 8 shows the runtime comparison for four large datasets. We use four datasets with more than 160,000 instances, Poker0.5, Skin, DLA and ConfLongDemo, to quantify the efficiency of the TSD algorithm, where Poker0.5 is a 50% random sample of Poker. The number of instances in Poker0.5, Skin, DLA and ConfLongDemo were 512,504, 245,057, 165,633 and 164,860, respectively. The TSD algorithm is two or more orders of magnitude faster than CFDP and is able to process millions of datasets within an acceptable runtime. For example, on the Poker0.5 dataset, with \(n = 5.1 \times 10^5\), the runtimes for CFDP and TSD are \(2.2 \times 10^7\) ms and \(7.4 \times 10^3\) ms, respectively. That is, TSD is 2692 times faster than CFDP.

The experimental results demonstrate that the TSD algorithm was slightly slower than the k-means algorithm. For example, for the Poker0.5 dataset, with \(n = 5.1 \times 10^5\), the runtimes for TSD and k-means are \(7.4 \times 10^3\) ms and \(2.9 \times 10^3\) ms, respectively. Thus, k-means is 3 times faster than CFDP. Also, for the DLA dataset, with \(n = 1.6 \times 10^5\), k-means is 8 times faster than TSD.

According to Table 3, the time complexity of the TSD algorithm is \(O(mn^\frac{3}{2})\), while the time complexity for the k-means algorithm is O(mn). The experimental results demonstrate that the theoretical analysis is correct.

Finally, we compare the TSD algorithm on the MATLAB platform with three CFDP optimization algorithms adopting the original results in the reference paper (Xu et al. 2018a, b). Table 15 compares the running time of the TSD and DPCG algorithms. For the 14 datasets list in reference (Xu et al. 2018a), TSD is faster than DPCG algorithm. Table 16 compares the running time of the TSD and GDPC, CDPC algorithms. Similarly, for 12 datasets, TSD is faster than GDPC and CDPC algorithms.

4.6 Optimization of the TSD algorithm

Essentially, TSD is a two-stage hierarchy clustering algorithm. Therefore, we will further optimize the TSD algorithm from two aspects of time and performance. For time optimization, it is important to reduce the complexity of two-round-means. We adopt the idea of grid or wavelet clustering to optimize the two-round-means to reduce the complexity to O(n). In this way, the complexity of the optimized TSD algorithm is O(n).

For performance optimization, the selection of virtual centers is crucial. If initial centers are well adapted to the distribution of the data, we will obtain better performances. Therefore, we design a preprocessing module to make a preliminary estimation on the dataset. The two-stage clustering algorithms will be selected based on preprocessing judgments. In this way, we design an optimization algorithm for TSD, namely TSD-Improved (TSD-I).

Table 17 compares TSD-I with the TSD and CFDP algorithms on synthetic datasets. TSD-I effectively improves the performance of TSD on synthetic datasets. For six synthetic datasets, TSD-I achieved performance improvements in four of them. Table 18 compares TSD-I with the TSD and CFDP algorithms on benchmark datasets. For fifteen synthetic datasets, TSD-I achieved performance improvements in eleven of them.

4.7 Discussions

We are now able to answer the questions posed at the beginning of this work.

-

(1)

The TSD algorithm is more adaptive than popular clustering algorithms. It is effective for datasets of different types, shapes and sizes.

-

(2)

The TSD algorithm is more accurate than classical and state-of-the-art clustering algorithms on benchmark and domain data.

-

(3)

The TSD algorithm is efficient and scalable.

5 Conclusions and future works

In this paper, we proposed a TSD clustering algorithm that was highly efficient, and had good adaptability to multiple types of datasets, with minimal parameter setting. The new algorithm explored the use of two-round-means for pre-clustering so that the time and space complexity could be minimized. The time complexity of the algorithm was \(O(mn^\frac{3}{2})\), which was lower than \(O (mn^2)\) for CFDP. Experiments on 21 datasets demonstrated that the new algorithm was more accurate than state-of-the-art algorithms. It was two or more orders of magnitude faster than CFDP.

From the viewpoint of algorithms and applications, the following research problems merit further investigation:

-

(1)

Revising the TSD algorithm for active learning. The TSD algorithm can greatly reduce the time complexity. Naturally, it can be applied to clustering-based active learning algorithms to improve efficiency.

-

(2)

Nonparametric setting. The TSD algorithm requires the user to provide the final number of clusters. A better solution is to avoid the parameter setting altogether without sacrificing clustering performance.

-

(3)

Adopting other clustering algorithms. We proposed a specific algorithm under the new local–global granule idea. We can replace the two-stage clustering algorithm with others (e.g., DBSCAN (Ester et al. 1996), EM (Langford et al. 2010) and HierarchicalCluster(HC) (Johnson 1967)) under this framework. A comparison study on this issue may be interesting.

References

Bai L, Cheng XQ, Liang JY, Shen HW, Guo YK (2017) Fast density clustering strategies based on the \(k\)-means algorithm. Pattern Recogn 71:375–386

Blake C, Merz CJ (1998) UCI repository of machine learning databases. http://www.ics.uci.edu/mlearn/MLRepository.html

Cattral R, Oppacher F (2007) Discovering rules in the poker hand dataset. In: GECCO, pp 1870–1870

Chang MS, Chen LH, Hung LJ, Rossmanith P, Wu GH (2014) Exact algorithms for problems related to the densest \(k\)-set problem. Inf Process Lett 114(9):510–513

Chen XL, Cai D (2011) Large scale spectral clustering with landmark-based representation. In: AAAI, p 14

Chen DG, Zhang XX, Li WL (2015) On measurements of covering rough sets based on granules and evidence theory. Inf Sci 317:329–348

Chen M, Li LJ, Wang B, Chen JJ, Pan LN, Chen XY (2016) Effectively clustering by finding density backbone based-on KNN. Pattern Recongn 60:486–498

Chiroma H, Gital AY, Abubakar A, Zeki A (2014) Comparing performances of markov blanket and tree augmented Naïve Bayes on the iris dataset. Lect Notes Eng Comput Sci 2209(1):328–331

Chuang PJ, Yang SH, shin Lin C (2009) An energy-efficient balanced clustering algorithm for wireless sensor networks. In: WiCOM, pp 1–4

Dasgupta S (2002) Performance guarantees for hierarchical clustering. Comput Learn Theory 2375:351–363

Dengel A, Althoff T, Ulges A (2011) Balanced clustering for content-based image browsing. In: Gi-Informatiktage

Du MJ, Ding SF, Jia HJ (2016) Study on density peaks clustering based on k-nearest neighbors and principal component analysis. Knowl Based Syst 99:135–145

Ester M, Kriegel HP, Sander J, Xu X (1996) A density-based algorithm for discovering clusters in large spatial databases with noise. In: KDD, pp 226–231

Guo LJ, Ai CY, Wang XM, Cai ZP (2009) Real time clustering of sensory data in wireless sensor networks. In: Performance computing & communications conference, pp 33–40

Hu BQ, Wong H, Yiu KFC (2016) The aggregation of multiple three-way decision spaces. Knowl Based Syst 98:241–249

Huang CC, Li JH, Mei CL, Wu WZ (2017) Three-way concept learning based on cognitive operators: an information fusion viewpoint. Int J Approx Reason 84(1):1–20

Johnson CS (1967) Hierarchical clustering schemes. Psychometrika 32(3):241–254

Kaufman LJP (2008) Rousseeuw: finding groups in data: an introduction to cluster analysis. Wiley, London

Kriegel HP, Kröger P, Sander J, Zimek A (2011) Density-based clustering. Wiley interdisciplinary reviews: data mining and knowledge discovery 1(3):231–240

Langford J, Zhang XH, Brown G, Bhattacharya I, Getoor L, Zeugmann T (2010) EM clustering. Springer, Boston, MA

Leong SH, Ong SH (2017) Similarity measure and domain adaptation in multiple mixture model clustering: an application to image processing. PLOS ONE 12(7):e0180307

Li YFW, Tsang I, Zhou ZH (2009) Tighter and convex maximum margin clustering. AISTATS 5:344–351

Li JH, Mei CL, Lv YJ (2011) A heuristic knowledge-reduction method for decision formal contexts. Comput Math Appl 61(4):1096–1106

Li JH, Huang CC, Qi JJ, Qian YH, Liu WQ (2016) Three-way cognitive concept learning via multi-granularity. Inf Sci 361(1):1–15

Li XN, Sun BZ, She YH (2017) Generalized matroids based on three-way decision models. Int J Approx Reason 90:192–207

Liang Z, Chen P (2016) Delta-density based clustering with a divide-and-conquer strategy. Elsevier Science Inc., New York

Liu D, Liang DC (2017) Three-way decisions in ordered decision system. Knowl Based Syst 137:182–195

Liu HY, Han JW, Nie FP, Li XL (2017a) Balanced clustering with least square regression. AAA I:2231–2237

Liu YH, Ma ZM, Yu F (2017b) Adaptive density peak clustering based on k-nearest neighbors with aggregating strategy. Knowl Based Syst 133:208–220

Liu R, Wang H, Yu XM (2018) Shared-nearest-neighbor-based clustering by fast search and find of density peaks. Inf Sci 450:200–226

Lu JY, Zhu QS (2017) An effective algorithm based on density clustering framework. IEEE Access 5(99):4991–5000

MacQueen J et al (1967) Some methods for classification and analysis of multivariate observations. In: Proceedings of the fifth Berkeley symposium on mathematical statistics and probability, pp 281–297

Min F, He HP, Qian YH, Zhu W (2011) Test-cost-sensitive attribute reduction. Inf Sci 181:4928–4942

Pappas NT (1992) An adaptive clustering algorithm for image segmentation. IEEE Trans Signal Process 40(4):901–914

Qian J, Lv P, Yue XD, Liu CH, Jing ZJ (2015) Hierarchical attribute reduction algorithms for big data using mapreduce. Knowl Based Syst 73:18–31

Reyes O, Altalhi AH, Ventura S (2018) Statistical comparisons of active learning strategies over multiple datasets. Knowl Based Syst 145:274–288

Rodriguez A, Laio A (2014) Machine learning clustering by fast search and find of density peaks. Science 344(6191):1492–1496

Sarma TH, Viswanath P, Reddy BE (2013) A hybrid approach to speed-up the k-means clustering method. Int J Mach Learn Cybern 4(2):107–117

Shuji S, Masanori K, Takashi I, Yutaka A (2015) Faster sequence homology searches by clustering subsequences. Bioinformatics 31(8):1183–1190

Stenger J (2011) Disability living allowance. Mental Health Today 1(6):277

Ugulino W, Vega K, Velloso E (2012) Wearable computing: accelerometers data classification of body postures and movements. Adv Artif Intell SBIA 2012:52–61

Wang XZ, Musa AB (2014) Advances in neural network based learning. Int J Mach Learn Cybern 5(1):1–2

Wang Y, Jiang Y, Wu Y, Zhou ZH (2011) Spectral clustering on multiple manifolds. IEEE Trans Neural Netw 22(7):1149–1161

Wang M, Min F, Wu YX, Zhang ZH (2017) Active learning through density clustering. Exp Syst Appl 85:305–317

Wilderjans TF, Cariou V (2016) Clv3w : a clustering around latent variables approach to detect panel disagreement in three-way conventional sensory profiling data. Food Qual Prefer 47:45–53

Xie JY, Gao HC, Xie WX, Liu XH, Grant PW (2016) Robust clustering by detecting density peaks and assigning points based on fuzzy weighted k-nearest neighbors. Inf Sci 354:19–40

Xu J, Wang GY, Deng WH (2016) Denpehc: density peak based efficient hierarchical clustering. Inf Sci 373(12):200–218

Xu X, Ding SF, Du MJ, Xue Y (2018a) DPCG: an efficient density peaks clustering algorithm based on grid. Int J Mach Learn Cybern 9(5):743–754

Xu X, Ding SF, Shi ZZ (2018b) An improved density peaks clustering algorithm with fast finding cluster centers. Knowl Based Syst 158:65–74

Yao JT, Yao YY (2002) Induction of classification rules by granular computing. Springer, Berlin

Yu J, Cheng QS (2001) The upper bound of the optimal number of clusters in fuzzy clustering. Inf Sci 44(2):119–125

Yu H, Jiao P, Yao YY, Wang GY (2016) Detecting and refining overlapping regions in complex networks with three-way decisions. Inf Sci 373:21–41

Zhang HR, Min F, Shi B (2017) Regression-based three-way recommendation. Inf Sci 378:444–461

Zhang HR, Min F, Zhang ZH, Wang S (2019) Efficient collaborative filtering recommendations with multi-channel feature vectors. Int J Mach Learn Cybern 10(5):1165–1172

Zhao H, Wang P, Hu QH (2016a) Cost-sensitive feature selection based on adaptive neighborhood granularity with multi-level confidence. Inf Sci 366:134–149

Zhao YX, Li JH, Liu WQ, Xu WH (2016b) Cognitive concept learning from incomplete information. Int J Mach Learn Cybern 7(4):1–12

Zhou ZH (2016) Machine learning. Tsinghua Press, Beijing

Acknowledgements

This work was supported by the National Natural Science Foundation of China (41604114); the Sichuan Province Youth Science and Technology Innovation Team (2019JDTD0017).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declared that they have no conflicts of interest to this work.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Wang, M., Zhang, YY., Min, F. et al. A two-stage density clustering algorithm. Soft Comput 24, 17797–17819 (2020). https://doi.org/10.1007/s00500-020-05028-x

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-020-05028-x