Abstract

An accurate estimation of deep foundation bearing capacity in different types of soils with the aid of the field experiments results is taken into account as the most important problems in geotechnical engineering. In recent decade, applying a broad range of artificial intelligence (AI) models has become widespread to solve various types of complicated problems in geotechnics. In this way, this study presents an application of two improved adaptive neuro-fuzzy inference system (ANFIS) techniques to estimate ultimate piles bearing capacity on the basis of cone penetration test (CPT) results basically used in analysis of pile foundations. The first model was combination of ANFIS and group method of data handling (GMDH) and the second one was related to the integration of fuzzy polynomial (FP) and GMDH model. Furthermore, in the ANFIS–GMDH, constant coefficients of ANFIS model were optimized using gravitational search algorithm (GSA). To improve the proposed AI models for carrying out training and testing stages, a reliable database in form of four input variables included information about different properties of soils and driven piles obtained from CPTs results. Performance of the proposed approaches indicated that FP–GMDH had better performance (RMSE = 0.0647 and SI = 0.378) in comparison with ANFIS–GMDH–GSA (RMSE = 0.084 and SI = 0.412). The use of multiple linear regression and multiple nonlinear regression equations showed lower level of precision in prediction of axial-bearing capacity of driven piles compared to the ANFIS–GMDH–GSA and FP–GMDH techniques.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Deep foundations were widely utilized in civil engineering projects because of their relatively higher level of performance both in ascending of bearing capacity and plummeting settlement. Axial-bearing capacity of piles has two main elements including shaft friction and toe bearing (e.g., Alkroosh and Nikraz 2011a, b; Ebrahimian and Movahed 2017). There are different research works in terms of analytical and conventional models in order to evaluate bearing capacity of different types of piles (e.g., Long and Wysockey 1999; Józefiak et al. 2015; Xie et al. 2017). Hence, traditional equations were drawn attention-stricken by geotechnical experts. This maybe due to the fact that empirical equations do not take much time to estimate bearing capacity of piles and consequently, in some cases, numerical models giving an exact solution suffer from not only lack of accuracy, but also being lower of uncertainty level rater than traditional equations. Furthermore, some parameters leading to these shortcomings are at the mercy of varying physical behaviors in terms of soil–structure interaction when piles are installed. Basically, all empirical equations were extracted from applying linear and nonlinear regression techniques. In fact, general mathematical shapes of traditional equations remained constant and weighting coefficients of them are fixed within regression analysis. Lowering precision level of bearing capacity of pile maybe lied in the fact that there are no reliable pieces of information about a rational relationship among input and output variables (e.g., Alkroosh and Nikraz 2011a, b; Fatehnia et al. 2015; Ebrahimian and Movahed 2017).

With the advent of various soft computing tools, all the sorts of problems in different fields of civil engineering have been efficiently solved (e.g., Cevik 2007, 2011; Tanyildizi and Cevik 2010; Gandomi et al. 2010; Alavi et al. 2011; Alavi and Gandomi 2012; Najafzadeh et al. 2016a, b; Zahiri and Najafzadeh 2018; Moayedi and Armaghani 2018; Khandelwal et al. 2018). Furthermore, soft computing techniques, in terms of evolutionary computing models, have the capability of overcoming complicated nature of problems along with perception of physical meaning, mentioning knowledge extraction of a relationship for a given input–output system. From previous experience in geotechnical engineering, the vast range of artificial intelligence (AI) approaches such as artificial neural network (ANNs), gene-expression programming (GEP), evolutionary polynomial regression (EPR), and support vector machine (SVM) were employed successfully to predict pile bearing capacity in different soils and pile installation conditions. These AI models were fed by databases extracted from cone peneteration test (CPT) (e.g., Lee and Lee 1996; Alkroosh and Nikraz 2011a; Ahangar-Asr et al. 2014; Kordjazi et al. 2014). Taking apart from applications of AI models in solving geotechnical problems, group method of data handling (GMDH), as a predictive tool, has been successfully utilized in prediction of different parameters in various fields of civil engineering, as evaluation of mechanical properties of soil layers (Kalantary et al. 2009), prediction of scour depth at hydraulic structures (Najafzadeh and Barani 2011; Najafzadeh et al. 2013a, b, c, 2017), and design of stable open channels for water conveyance (Shaghaghi et al. 2017). From previous investigations, it was found that all the aspects of GMDH model has not been fully employed to predict bearing capacity of axial piles.

For this reason, in the current study, two new hybrid models of GMDH technique are developed to estimate axial-bearing capacity of pile. The first hybrid model is a combination of adaptive neuro-fuzzy inference system (ANFIS) and GMDH approaches which will be optimized by gravitational search algorithm (GSA). The second one includes an application of fuzzy polynomial (FP) in general structure of traditional GMDH model. Performance of ANFIS–GMDH and FP–GMDH is evaluated quantitatively and qualitatively. Moreover, results of the models development are compared with those obtained using multiple linear and nonlinear regression techniques.

2 A survey of AI applications into pile bearing capacity

Over the two decades, applications of AI models have become widespread through civil engineering projects in order to predict bearing capacity of piles in different soils conditions and physical properties of piles. In this way, a large number of experts in the field of geotechnical engineering have made rigorous efforts to employ various AI approaches to generate general traditional equation-based evolutionary computing so as to obtain more accurate prediction bearing capacity of piles rather than conventional models based on CPT databases.

Lee and Lee (1996) estimated ultimate bearing capacity (UBC) using 28 datasets extracted from pile load tests in in situ conditions. They used feed forward neural network based on back-propagation algorithm in order to obtain permissible level of accuracy for estimation of UBC. Abu-Kiefa (1998) presented an accurate prediction of bearing capacity of driven piles embedded in cohesionless soils using general regression neural network (GRNN).

Also, Shahin (2010) provided a good estimation of UBC for driven piles using the ANN model.

Alkroosh and Nikraz (2011a) applied GEP model for prediction of axial-bearing capacity of pile on the basis of CPT results. They used 58 series datasets which 28 and 30 series of all the data were related to the concrete and steel piles, respectively. From their study, GEP has produced an empirical equation with the most level of accuracy. Alkroosh and Nikraz (2012) utilized GEP model-based equation to obtain an acceptable level of precision by means of datasets corresponded to the 25 piles load tests. Furthermore, they indicated a good performance of the GEP approach so as to predict lateral bearing capacity of pile embedded in cohesive soils (Alkroosh and Nikraz 2013).

Ahangar-Asr et al. (2014) used EPR technique based equation for precise prediction of lateral load bearing in cohesive soils and additionally undrained conditions. Momeni et al. (2014) applied hybrid models of ANN on the basis of GA to estimate bearing capacity of driven piles. They utilized information about five dynamic tests to extract databases for modeling the ANN–GA. Results of ANN–GA technique provided more accurate estimation than empirical equations.

Also, Samui and Shahin (2014) obtained an accurate prediction of UBC for the driven piles using RVM and MARS. In Alkroosh and Nikraz (2014) investigation, GEP model produced higher precision level for pile dynamic bearing capacity in comparison with ANN models. From Kordjazi et al. (2014) study, SVM approach was developed to predict ultimate axial load-bearing capacity of piles on the basis of CPT databases. Results of their study indicated that SVM had good performance rather than traditional methods. Alkroosh et al. (2015) utilized SVM and GEP models for prediction of bearing capacity of bored piles. They employed 50 datasets extracted from CPT databases. Ultimately, results given by the proposed GEP were in good agreement with the observation rather than SVM approach. Through Shahin (2015) study, EPR and ANN techniques were applied for prediction of bearing capacity on the basis of 79 series datasets of load tests related to the driven piles in in situ circumstances and consequently leading perfect performances of EPR and ANN approaches. Fatehnia et al. (2015) applied the GEP model to predict UBC of driven piles with respect to the flap number. They found that results of GEP model stood at the highest level of accuracy rather than ANN and regression equations. From Ebrahimian and Movahed (2017) research, EPR model produced a precise equation for prediction of axial piles bearing capacity. The databases used in their study were related to the coarse and fine grain soils. Results of their investigation indicated that EPR approach provided more accurate estimation rather than empirical equations. Also, Armaghani et al. (2017) concluded that hybrid model of ANN and PSO (particle swarm optimization) presented an accurate prediction of UBC for rock-socketed piles. Kohestani et al. (2017) demonstrated that RF model presented permissible level of precision for estimation of UBC on the basis of 112 datasets extracted from results of in situ pile tests. Maizir (2017) applied ANN model, as a reliable predictive tool, to evaluate shaft bearing capacity based on information obtained from test results of pile driving analyzer (PDA). Moreover, Mohanty et al. (2018) concluded that MARS and GP techniques had a successful performance for prediction of UBC of driven pile embedded in non-cohesive soils.

3 Description of database

In this investigation, fully reliable datasets were collected from different sources. In fact, the datasets used in this study are related to the local precious work experience carried out by various researchers. All the datasets are on the basis of CPT for reaching an accurate prediction of bearing capacity of axial piles. Overall, it can said that all the variables applied in this study can be classified into two main groups which can have influences on pile bearing capacity extracted from pile load test (Qt) in in situ circumstances (Ebrahimian and Movahed 2017). The first category is corresponded to soil properties which might be cohesive and non-cohesive. Moreover, these properties contain pieces of information is provided by means of CPT, including cone tip resistance (qc) and sleeve friction (fs). The second one is related to pile geometric properties in terms of length (L) and diameter (D). Hence, to use the proposed intelligence approaches, four parameters of qc, fs, L, and D are considered as input variables. In this study, 72 pile load tests related to the in situ conditions were used to develop the proposed models. Details of these datasets are given in Table 1.

4 Database allocation

In this study, with the aid of K-fold conceptions, data allocation was performed. In fact, to use K-fold approach, the datasets are randomly divided into K subdivisions whose sizes have the same. From K subdivisions, one subdivision is considered for performance of testing stage, and the rest of subdivisions which have K − 1 number employed to train model. Afterward, this process is repeated K times, introducing with K iterations. In fact, datasets are divided randomly K times. Through every iteration, error value for testing stage is computed and ultimately average of errors for various iteration is computed (McLachlan et al. 2004). In the current study, to develop the proposed AI, there is a set of 72 series data. In this way, performance of AI models is investigated by means of various numbers of K-fold including 3, 4, 6, and 8. With respect to four K-fold numbers, 72 datasets were partitioned into four classes to develop the AI techniques, as seen in Table 2.

5 Framework of the proposed artificial intelligence models

In this section, in the first place, descriptions of the conventional GMDH model are presented. Next, ANFIS technique and GSA are introduced separately. As mentioned in introduction section, in the present study, two hybrid models are investigated. The first one is representation of an improved ANFIS–GMDH model using GSA. The second hybrid intelligence approach is related to the FP–GMDH.

5.1 Framework of GMDH model

The GMDH, whose general structure is on the basis of self-organized systems, is firstly proposed by Ivahnenko (1971). This type of artificial intelligence has several operations such as formation of quadratic polynomial in each neuron, selection of neurons with good fitness values, filtering partial description (or neuron), assigning error criterion for training stage termination, and driving a tree-like structure for solution of complicated problems (e.g., Amanifard et al. 2008; Mehrara et al. 2009; Najafzadeh et al. 2013a, b, c, d, e; Najafzadeh and Saberi-Movahed 2018).

Recent investigations indicated that GMDH network can be categorized as flexible intelligence approach in a way that it can be efficiently combined by other evolutionary algorithms including genetic algorithm (e.g., Nariman-Zadeh et al. 2003; Amanifard et al. 2008; Mehrara et al. 2009; Taherkhani et al. 2018), genetic programming (Iba and deGaris 1996; Najafzadeh et al. 2014a; Najafzadeh and Saberi-Movahed 2018), particle swarm optimization (Onwubolu 2008; Najafzadeh et al. 2014a; Najafzadeh and Tafarojnoruz 2016), and back propagations (Sakaguchi and Yamamoto 2000; Srinivasan 2008; Najafzadeh et al. 2014a, b, c).

To find an accurate solution for system identification problem, a function of \( \hat{f} \) which can be roughly utilized instead of actual function f, so as to estimate the final output of a complicated system, \( \hat{y} \), for a given input vector (or variable) \( X = \left( {x_{1} ,x_{2} ,x_{3} , \ldots ,x_{n} } \right) \) as close as possible to its actual (or observed) output y. Thus, for a given n observations of multivariables (or inputs), there is single-output as (Amanifard et al. 2008; Mehrara et al. 2009),

In the current status, it is feasible to train the GMDH model to prognosticate the final values of output, \( \hat{y}_{i} \), for every given input vector \( X = \left( {x_{i1} ,x_{i2} ,x_{i3} , \ldots ,x_{in} } \right) \). In fact, the following function is expressed to define a relationship connecting the final output to input variables as,

From the GMDH network, the following relationship notes error values given by the actual (or observed) final output and the predicted (or estimated) one as,

In the GMDH model, general relationship for given an input–output system is presented by the following mathematical expression, as a complicated discrete form of the Volterra function,

Furthermore, Eq. (4) is introduced as the Kolmogorov–Gabor polynomial (e.g., Ivahnenko 1971; Farlow 1984; Sanchez et al. 1997; Najafzadeh and Barani 2011). On the basis of previous experience, over the past decades, results of studies have demonstrated that applying quadratic polynomial, expressed as follows, provided relatively lower error of prediction for various problems rather than other types of polynomials (e.g., Amanifard et al. 2008; Mehrara et al. 2009; Najafzadeh et al. 2013a, b, c, d; Najafzadeh and Saberi-Movahed 2018).

The weighting coefficients related to Eq. (5) are computing by means of leas square technique so that the error value between actual (or observed) output, y, and the calculated (or computed) one, \( \hat{y} \), for each pair of \( x_{i} \) and \( x_{j} \), as input variables, is required to be minimized. Moreover, this error function which can evaluate performance of quadratic polynomial, \( G_{i} \), by virtue of least-square technique in order to optimally eliminate some of neurons in each layer, is noted as,

From general structure of the GMDH approach, all the possibilities of two independent variables (or inputs) out of total n input variables are considered for construction of the regression quadratic polynomial in the form of Eq. (5) whose weighting coefficients are extracted from a least-square method. Basically, number of neurons (or partial descriptions) in each (or current) layer can be computed as, \( C_{n}^{2} = n\left( {n - 1} \right)/2 \), which n is the number of inputs of previous layer. On the other hand, partial descriptions would be generated in the first layer of the from observations \( \left\{ {\left( {y_{i} ,x_{ip} ,x_{iq} } \right);\left( {i = 1,2, \ldots M} \right)} \right\} \) for various pair of \( p,q \in \left\{ {1,2, \ldots n} \right\} \). To put it another way, this is currently feasible to create M triples \( \left\{ {\left( {y_{i} ,x_{ip} ,x_{iq} } \right);\left( {i = 1,2, \ldots M} \right)} \right\} \), as input–output systems, from n observation (or event) by means of \( p,q \in \left\{ {1,2, \ldots n} \right\} \), written as in the following form (Amanifard et al. 2008; Mehrara et al. 2009):

From each row of M data series triples, applying quadratic polynomial, as seen in Eq. (5), can lead to form mathematical matrix equation as,

where W is the vector including six weighting coefficients of the quadratic polynomial as,

The superscript T is indicative of matrix transpose. Also, the vector of observed (or actual) output is obtained as,

From Eq. (8), A matrix is indicative of combination of two input variables being constructed in each neuron. Therefore, for all the number of data series, A can be extracted as follows:

As written in Eq. (12), the vector of coefficients is given by means of the least-squares approach as (Taherkhani et al. 2018),

which defines the vector of the six weighting coefficients of quadratic polynomial [Eq. (5)] for all the sets of M data series triples. Conceivably, it should be absolutely considered that this step-by-step way of learning the GMDH is repeated for each neuron of the next layers. The rest of description of the traditional GMDH model is given by relevant literature (e.g., Nariman-Zadeh et al. 2003; Amanifard et al. 2008; Mehrara et al. 2009).

5.2 Description of ANFIS model

Firstly, general structure of ANFIS model is introduced by Jang (1993) for an accurate approximation of system modeling. In fact, this technique combines the back propagation (BP) and the least-squares model (LSM), as learning models, extracting properties of structure such as number of fuzzy rules, coefficients of membership functions, and number of linear and nonlinear parameters by adaptive network (Qin et al. 2015). As written in the following, typical structure of a first order Takagi–Sugeno (TS) model is expressed as,

Then \( y = b_{i0} + b_{i1x1} + b_{2x2i} + \cdots + b \, \;{\text{in}}\; \, x_{n} \).where x1, x2, …, xn are the system inputs, y is the final output of system. Furthermore, Ai1, Ai2, …, Ain are fuzzy sets, bi0, bi1, …, bin are output parameters of fuzzy system.

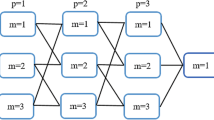

The schematic diagram of a simple ANFIS structure for TS fuzzy system is depicted in Fig. 1. Accordingly, Fig. 1 reflects that ANFIS model has two input variables and one output variable. From Fig. 1, the basic structure of ANFIS has five layers. Each node in the same layer indicates the same membership function and additionally considers that the square-shaped nodes are indicative of parameters, introduced as an adaptive node, varying within training stages. Fixed nodes are symbolized by means of circle. The function of each layer can be illustrated as follows.

In the first Layer 1, known as system fuzzification, all of the input variables are transformed in terms of meaning as linguistic labels in the current layer, and the node number of individual input variables demonstrates the number of membership functions (MFs). Moreover, reliable shape of MFs is selected on the basis of trial-and-error process. Generally, it can be said that, the parameters in the first layer are introduced as premise parameters.

In the second layer, firing strength calculation is carried out. Each node in the second layer multiplies input signals (i.e., the MFs) and outputs the product. Every output of individual node indicates the firing strength related to the fuzzy rule. Through the third layer, firing strength is normalized. Every node pertained to this layer outputs the ratio between the ith firing strength and the summation of all the firing strengths, and is known as normalized firing strength. In the next layer, system is defuzzificated. Every node in the fourth layer, outputs the crisp value of the corresponding fuzzy rule, and additionally the output is computed by virtue of the product between output of the third layer and linear combination of input variables. The parameters of this layer are introduced as consequent parameters.

Ultimately, in the fifth layer, final output of the ANFIS model is obtained. In fact, the current layer includes merely one node and outputs the overall crisp output value by means of summing all of the outputs related to the fourth layer. The way of training ANFIS model has two main steps of forward and backward pass. Detailed descriptions of ANFIS model were mentioned in relevant literature (e.g., Jang 1993; Qin et al. 2015).

5.3 Description of fuzzy c-means clustering (FCM)

Through data clustering approach, the datasets are categorized into many groups and datasets including the same properties are in connection with the same clusters and non-similar data sets to various clusters. The FCM (Bezdek 1973), introduced as a development and modification of K-means clustering, applies a data sample to determine C clusters by means of minimizing the objective function (U) (Yaseen et al. 2018). In fact, performance of FCM has four steps. For two given input variables, xi and xj, in the first step, centers of clusters are randomly determined using N data samples. The second step is to calculate membership matrix (wij) as,

where p is the fuzzifier exponent (between 0 and 1), C is the number of clusters.

In the third step, an objective function (U) which should be minimized, being expressed as,

Ultimately, new fuzzy cluster centers are calculated as follows:

In which ci is the centers of clusters.

5.4 Definition of GSA

Gravitational search algorithm (GSA) is relatively new heuristic search algorithm based on gravitational and motion laws and has been proposed by Rashedi et al. (2009).

In the case of GSA, a set of agents being introduced as masses are assigned to extract the optimal solution from applying gravity and motion Newtonian laws (Rashedi et al. 2009, 2010). The GSA can be indicated as a system with s masses in which the position of the ith mass is noted as,

Which x d i is the ith position of mass in the dimension of dth and n is the dimension of search space. Fitness of population is computed using Eq. (17) as,

and consequently the mass of each agent is computed by means of following equation:

In which fiti(t) and Mi(t) are indicative of the fitness and mass value of the agent i at t, respectively. Furthermore, to reach an optimal solution of a particular problem, values of best(t) and worst(t) are expressed as (Rashedi et al. 2011),

In order to calculate acceleration of agents, total forces from a set of agents which have higher masses is required to be considered on the basis of gravity law [Eq. (21)].

Afterward, acceleration of agents is calculated with help of motion law, as written in Eq. (22):

In which Rij(t) is known as the Euclidian distance between two agents i and j, computed by \( R_{ij} \left( t \right)\, = \,\left\| {X_{i} \left( t \right), X_{j} \left( t \right)} \right\|_{2} \) and additionally value of ɛ is remarkably low. kbest is a time function whose initial value is K0 at the beginning of time period and this value decreases with passing the time. After that, the velocity related to the next agent is calculated using Eq. (23) in form of a fraction of its current value of velocity added to its current value of acceleration (Rashedi et al. 2011),

and Eq. (24) is applied to calculate its new position of agent as,

In which randi and randj (observed in Eq. 22) are a pair of randomly uniform values being ranged between 0 and 1. Through GSA, the gravitational constant, G, depends on an initial value of G0 and time, expressing as,

Moreover, G(t) will have a downward trend with respect to t. In the current study, Eq. (26) has been used to evaluate the gravitational constant as a time-dependent function,

In this study, step-by-step way of applying GSA into the ANFIS–GMDH is mentioned in the following section.

5.5 Development of ANFIS–GMDH using GSA

The ANFIS–GMDH model is one of the newest hybrid approaches which was proposed firstly by Qin et al. (2015). In this section, definition of the ANFIS–GMDH is given briefly then combination mechanism of GSA with the ANFIS–GMDH technique is presented.

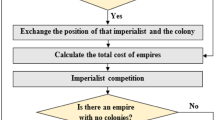

In the first place, it should be noted that the ANFIS–GMDH is a combination of ANFIS and GMDH in a way that final output layer of ANFIS model is considered as input variables for development of the convectional GMDH approach in order to reach higher level of precision within training and testing stages. A schematic diagram of general structure related to the proposed ANFIS–GMDH model is illustrated in Fig. 2. From Fig. 2, training performance of the ANFIS–GMDH can be categorized into seven steps. In the first step, datasets are classified into two phases of training and testing. From the second to the fourth steps, the ANFIS model is performed whereas rests of steps (fifth to seventh) are related performance of the GMDH. This mechanism is expressed step-by-step as follows.

The first step corresponds to datasets allocation to performing of training and testing stages. Next, in the second step, all the input variables are fuzzificated in the first layer of conventional ANFIS approach. In this way, a process of mapping, introduced as grid partition, is used to transform input parameters (attributes or variables) into fuzzy sets. Furthermore, the number and particular shape of membership functions (MFs) are required to be defined. Through the third step, known as fuzzy implication, general structure and number of fuzzy rules used in the ANFIS model are obtained. For instance, as seen in Fig. 2, nine fuzzy rules are constructed in this step. In the fourth step, all the parameters related to the ANFIS model are identified. This step pertains to the applying hybrid training algorithm in the ANFIS structure. The premise parameters and the consequent ones given in the second and third steps are computed by means of LSE and back-propagation (BP) gradient descent from the training datasets.

From the fifth step, number of input variables for the GMDH is determined. As depicted in Fig. 2, the output of the fourth step related to the ANFIS model is considered as input variables for the GMDH. As mentioned in the GMDH descriptions, in this step, all the possible quadratic polynomials are generated. Then, weighting coefficients of PDs are obtained by virtue of least squared method. As noticeable example, in Fig. 3, with respect to nine linear descriptions in the fourth layer of the ANFIS model, 36 partial descriptions will be generated in the first layer of the GMDH. In the sixth step, the best models with the lowest computational error [Eq. (6)] are selected to produce partial descriptions related to the next layer of the GMDH. Through the seventh step, an external value of error criterion is considered for evaluation of the GMDH performance in the training stage. In this way, the lowest value of external error criterion corresponded to PDs of the current layer and previous ones are compared with together. If the value of current error criterion is higher level than the previous one, the proposed GMDH network with permissible level of complexity is yielded. If not, fifth and seventh steps require to be performed again until the lowest error criterion value is minimized.

In the current investigation, GSA was applied in the general structure of the ANFIS model to optimize coefficients of MFs, as mentioned in the second step. Hence, Gaussian membership function was assigned to set fuzzy models. The controlling parameters of the GSA such as maximum number, agents number, G0 and α are shown in Table 3. In fact, ANFIS–GMDH–GSA was developed for different K-fold values and additionally computational error values for each K-fold number are presented in Table 4. Equation (6) is considered as an error function to evaluate performance of various K-fold number. From Table 4, results of assigning various K-fold numbers indicated that ANFIS–GMDH–GSA had the same performance for K-fold values of 3 and 4. Similarly, using the K = 6 and 8, average of computational errors had the same values. Generally, it can be said that ANFIS–GMSH–GSA with K-fold of 6 has provided the lowest level of error value (Average E = 0.01) in comparison with other K-fold numbers.

As mentioned in FCM section, FCM model was employed to developed the ANFIS approach. In this way, 10 clusters were used and consequently 10 fuzzy rules were applied for carrying out fuzzy implication process and additionally 50 linear descriptions were generated in the fourth step. In this way, traditional GMDH has 10 input variables and then 45 partial descriptions in the first layer. Due to value of error criterion in the first layer of the GMDH, three PDs were selected to create the second layer and consequently one PD has been generated in the second layer as final output of GMDH network.

5.6 Improvement in GMDH using fuzzy polynomial neuron (FPN)

The FPN was basically composed of a pair of steps. The first step, FPN is constructed being composed of fuzzy sets that generate a connection between the input variables and the processing part perceived by the neuron (Oh and Pedrycz 2006). The second part of FP–GMDH technique is related to the process of constructing quadratic polynomials as discussed in principle of the GMDH. In the current, FP–GMDH has been developed for different K-fold values. As a result, average of E values related to every K-fold number has been given in Table 4. Table 4 shows that FP–GMDH had the best performance (Average Error = 0.00368) in terms of accuracy for K = 8 compared with other performances. In contrast, for K = 3, results of FP–GMDH had relatively lower level of precision in comparison with performance of the FP–GMDH for other K-fold numbers. In this study, to develop a fuzzy polynomial system, again FCM was used with 10 clusters including 10 fuzzy rules. After that, a two-layer GMDH model was generated by three neurons in the first layer and one neuron as final output.

6 Multiple regression-based equations

In this section, multiple linear and nonlinear regression equations have been developed using least squared method for the training datasets. All the calculations related to the regression analysis have been performed in the MATLAB programming language. Multiple linear regression (MLR) equation for prediction of COD was acquired as,

and for multiple nonlinear regression analysis, equation was expressed as,

7 Results and discussions

The comparative evaluation of the proposed models performance is investigated in this section. In this way, coefficient of correlation (R), root mean square error (RMSE), mean absolute percentage error (MAPE), BIAS were employed in order to appraise precision level of ANFIS–GMDH and FP–GMDH techniques in both training and testing datasets, as follows:

in which \( \left( {Q_{t} } \right)_{P}^{{}} \) and (Qt)O are the predicted bearing capacity of driven pile and observed one, respectively, and N is the number of data sample.

Statistical results of the proposed models are given in Table 5. In the training stage, FP–GMDH approach provided more accurate prediction of bearing capacity of driven pile in terms of R (0.97) and RMSE (0.0594) compared with the ANFIS–GMDH–GSA (R = 0.965 and RMSE = 0.065). Furthermore, in the case of BIAS and SI values, FP–GMDH (BIAS = 0.0001 and SI = 0.302) has better performance than combination of the ANFIS–GMDH with GSA (BIAS = − 0.0001 and SI = 0.326). In terms of MAPE comparison, FP–GMDH model (MAPE = 0.49) had relatively better performance than ANFIS–GMDH–GSA (MAPE = 0.55). Overall, statistical parameters presented in Table 5 were indicative of highering level of accuracy in estimation of Qt for FP–GMDH rather than ANFIS–GMDH model. Qualitative results of the proposed artificial intelligence approaches are illustrated in Fig. 3. All the data points in Fig. 3 were normalized between 0 and 1.

Through the testing phase, FP–GMDH model has provided precise prediction of Qt with R of 0.96 and RMSE of 0.0647 in comparison with those yielded by the ANFIS–GMDH (R = 0.94 and RMSE = 0.082). Moreover, BIAS and SI values extracted from testing stage demonstrated that FP–GMDH model (BIAS = − 0.0005 and SI = 0.387) has provided ultimate bearing capacity of driven pile at higher level of precision rather than ANFIS–GMDH–GSA (BIAS = − 0.00285 and SI = 0.412). Similarly, according to Table 5, the proposed FP–GMDH model had superiority to the ANFIS–GMDH–GSA network in terms of MAPE values. Illustrative comparisons of the proposed techniques for the testing stage have been shown in Fig. 4. In the present study, with respect to the general structure of the two proposed models, it can be inferred that configuration of FP–GMDH network is simpler than ANFIS–GMDH–GSA model. In other words, volume of computations in the ANFIS–GMDH–GSA is much more than FP–GMDH model. Through development of the proposed AI approaches, it can be found that ANFIS–GMDH–GSA model is more time-consuming than FP–GMDH network due to application of GSA in the ANFIS–GMDH structure.

Furthermore, performance of the FP–ANFIS and ANFIS–GMDH models were compared with MLR [Eq. (27)] and MNLR [Eq. (28)]. Table 5 indicates that MLR given by Eq. (27) has produced larger computational error of Qt prediction in terms of RMSE (0.163), MAPE (2.61), and BIAS (0.00195) than ANFIS–GMDH and FP–GMDH models. Equation (28) extracted from MNLR technique has produced more accurate estimation (RMSE = 0.132 and MAPE = 2.146) rather than those obtained using Eq. (27) (RMSE = 0.163 and MAPE = 1.81). Also, BIAS (− 0.0105) and SI (0.492) values given by MNLR method were indicative of being lower error of Qt prediction in comparison with MLR (BIAS = 0.00195 and SI = 0.523). Performance of the regression-based techniques for the testing stage is demonstrated in Fig. 5. As seen in Fig. 5, both predicted Qt values and observed ones have been normalized scaling between 0 and 1.

8 Sensitivity analysis

To determine the comparative impact of every input variable on the axial-bearing capacity of driven pile, the FP–GMDH model was selected to carry out a sensitivity analysis technique. This approach has been performed such that, one of effective parameters including L, D, qc, and fs has been removed each time to evaluate the impact of that input on Qt. In this way, FP–GMDH model was redeveloped four times using three inputs. Quantitative results of sensitivity analysis indicated that pile diameter (D) is the most effective parameter on the Qt with R of 0.89 and RMSE of 0.122. Furthermore, with respect to other statistical parameters, FP–GMDH approach produced a significant large error in terms of MAPE (0.902) and BIAS (− 0.0056) by neglecting D from developing FP–GMDH technique. In contrast, sleeve friction (fs) has relatively lower level of influence on the Qt due to R of 0.524 and RMSE of 0.0513. On the other hand, FP–GMDH network developed by three inputs of D, L, and qc indicated the highest level of precision (MAPE = 0.65 and BIAS = − 0.0021), obtaining fs as the most insignificant parameter. The other influential parameters on the Qt include D and L being ranked from higher impacts to lower ones, respectively. The statistical analysis through the sensitivity analysis technique is summarized in Table 6.

9 Conclusion

In this study, two developed ANFIS models including FP–GMDH and ANFIS–GMDH techniques were applied to predict bearing capacity of driven piles. In this way, 72 datasets in form of an input–output system was composed of four input variables includes both coarse and fine grain soils, cone tip resistance and sleeve friction of CPTs, and geometric characterizations of piles. In the first place, general structure of ANFIS–GMDH model was optimized using GSA. In fact, the best ratio of datasets allocation was defined using K-folds technique in a way that K-folds of 8 and 6 have provided the most accurate prediction for the FP–GMDH and ANFIS–GMDH–GSA models. Through training phase, both proposed models were developed using 10 fuzzy rules. In the both training and testing stages, it can be concluded that FP–GMDH approach has provided relatively lower level of computational error when compared with an improved ANFIS–GMDH–GSA model. Furthermore, for the training datasets, MLR and MNLR techniques were fitted. Statistical results demonstrated that FP–GDHM and ANFIS–GMDH models have provided better performance than regression-based equations. On the other hand, Qt values predicted by MNLR approach had higher level of precision than those obtained using MLR model. Beside, results of sensitivity analysis showed that diameter of driven plie (D) and sleeve friction (fs) parameters had the highest and lowest impacts on the bearing capacity of driven pile.

Generally, performance of integrated models of ANFIS indicated that these soft computing tools can be employed efficiently to solve one of the most important subjects in geotechnical engineering.

References

Abu-Kiefa M (1998) General regression neural networks for driven piles in cohesionless soils. J Geotech Geoenviron Eng 124(12):1177–1185

Ahangar-Asr A, Javadi AA, Khalili N (2014) A new approach to thermo-mechanical modelling of the behaviour of unsaturated soils. Int J Numer Anal Methods Geomech 39:539–557

Alavi AH, Gandomi AH (2012) A new multi-gene genetic programming approach to nonlinear system modeling. Part I: materials and structural engineering problems. Neural Comput Appl 21(1):171–187

Alavi AH, Ameri M, Gandomi AH, Mirzahosseini MR (2011) Formulation of flow number of asphalt mixes using a hybrid computational method. Constr Build Mater 25(3):1338–1355

Alkroosh IS, Nikraz H (2011a) Correlation of pile axial capacity and CPT data using gene expression programming. Geotech Geol Eng 29(5):725–748

Alkroosh IS, Nikraz H (2011b) Predicting axial capacity of driven piles in cohesive soils using intelligent computing. Eng Appl Artif Intell 25(3):618–627

Alkroosh IS, Nikraz H (2012) Predicting axial capacity of driven piles in cohesive soils using intelligent computing. Eng Appl Artif Intell 25(3):618–627

Alkroosh IS, Nikraz I (2013) Evaluation of pile lateral capacity in clay applying evolutionary approach. Int J Geomath 4(1):462–465

Alkroosh I, Nikraz H (2014) Predicting pile dynamic capacity via application of an evolutionary algorithm. Soils Found 54(2):233–242

Alkroosh IS, Bahadori M, Nikraz H, Bahadori A (2015) Regressive approach for predicting bearing capacity of bored piles from cone penetration test data. J Rock Mech Geotech Eng 7(5):584–592

Amanifard N, Nariman-Zadeh N, Farahani MH, Khalkhali A (2008) Modelling of multiple short-length-scale stall cells in an axial compressor using evolved GMDH neural networks. J Energy Convers Manag 49(10):2588–2594

Armaghani DJ, Raja RSNSB, Faizi K, Rashid ASA (2017) Developing a hybrid PSO–ANN model for estimating the ultimate bearing capacity of rock-socketed piles. Neural Comput Appl 28(2):391–405

Bezdek JC (1973) Cluster validity with fuzzy sets. J Cybern 3:58–73. https://doi.org/10.1080/01969727308546047

Cevik A (2007) Unified formulation for web crippling strength of cold-formed steel sheeting using stepwise regression. J Constr Steel Res 63(10):1305–1316

Cevik A (2011) Modeling strength enhancement of FRP confined concrete cylinders using soft computing. Expert Syst Appl 38(5):5662–5673

Ebrahimian B, Movahed V (2017) Application of an evolutionary-based approach in evaluating pile bearing capacity using CPT results. Ships Offshore Struct 12(7):937–953

Farlow SJ (ed) (1984) Self-organizing method in modelling: GMDH type algorithm. Marcel Dekker Inc, New York

Fatehnia M, Tawfiq K, Hataf N, Ozguven EE (2015) New method for predicting the ultimate bearing capacity of driven piles by using Flap number. KSCE J Civil Eng 19(3):611–620

Gandomi AH, Alavi AH, Sahab MG (2010) New formulation for compressive strength of CFRP confined concrete cylinders using linear genetic programming. Mater Struct 43(7):963–983

Iba H, deGaris H (1996) Extending genetic programming with recombinative guidance. In: Angeline P, Kinnear K (eds) Advances in genetic programming, vol 2. MIT Press, Cambridge

Ivahnenko AG (1971) Polynomial theory of complex systems. IEEE Trans Syst Man Cybern 1(4):364–378

Jang JSR (1993) ANFIS: adaptive-network-based fuzzy inference system. IEEE Trans Syst Man Cybern 23(3):665–685

Józefiak K, Zbiciak A, Maślakowski M, Piotrowski T (2015) Numerical modelling and bearing capacity analysis of pile foundation. Procedia Eng 111:356–363

Kalantary F, Ardalan H, Nariman-Zadeh N (2009) An investigation on the Su–NSPT correlation using GMDH type neural networks and genetic algorithms. Eng Geol 104(1–2):144–155

Khandelwal M, Marto A, Fatemi SA, Ghoroqi M, Armaghani DJ, Singh TN, Tabrizi O (2018) Implementing an ANN model optimized by genetic algorithm for estimating cohesion of limestone samples. Eng Comput 34(2):307–317

Kohestani VR, Vosoughi M, Hassanlourad M, Fallahnia M (2017) Bearing capacity of shallow foundations on cohesionless soils: a random forest based approach. Civil Eng Infrastruct J 50(1):35–49

Kordjazi A, Nejad FP, Jaksa M (2014) Prediction of ultimate axial load-carrying capacity of piles using a support vector machine based on CPT data. Comput Geotech 55:91–102

Lee IM, Lee JH (1996) Prediction of pile bearing capacity using artificial neural networks. Comput Geotech 18(3):189–200

Long JH, Wysockey MH (1999) Accuracy of methods for predicting axial capacity of deep foundations. In: Proceedings of OTRC 99 conference: analysis, design, construction, and testing of deep foundation ASCE, Austin, TX, 29–30 April, GSP 88, pp 190–195

Maizir H (2017) Evaluation of shaft bearing capacity of single driven pile using neural network. In: Proceedings of the international multiconference of engineers and computer scientists, vol I, IMECS, March 15–17, Hong Kong

McLachlan GJ, Do K-A, Ambroise C (2004) Analyzing microarray gene expression data. Wiley, Hoboken

Mehrara M, Moeini A, Ahrari M, Erfanifard A (2009) Investigating the efficiency in oil futures market based on GMDH approach. Expert Syst Appl 36(4):7479–7483

Moayedi H, Armaghani DJ (2018) Optimizing an ANN model with ICA for estimating bearing capacity of driven pile in cohesionless soil. Eng Comput 34(2):347–356

Mohanty R, Suman S, Das SK (2018) Prediction of vertical pile capacity of driven pile in cohesionless soil using artificial intelligence techniques. Int J Geotech Eng 12(2):209–216

Momeni E, Nazir R, Jahed Armaghani D, Maizir H (2014) Prediction of pile bearing capacity using a hybrid genetic algorithm-based ANN. Measurement 57:122–131

Najafzadeh M, Barani GA (2011) Comparison of group method of data handling based genetic programming and back propagation systems to predict scour depth around bridge pier. Sci Iran Trans A 18(6):1207–1213

Najafzadeh M, Saberi-Movahed F (2018) GMDH-GEP to predict free span expansion rates below pipelines under waves. Mar Georesour Geotechnol. https://doi.org/10.1080/1064119X.2018.1443355

Najafzadeh M, Tafarojnoruz A (2016) Evaluation of neuro-fuzzy GMDH-based particle swarm optimization to predict longitudinal dispersion coefficient in rivers. Environ Earth Sci 75(2):157

Najafzadeh M, Barani GA, Hessami-Kermani MR (2013a) Abutment scour in live-bed and clear-water using GMDH network. Water Sci Technol IWA 67(5):1121–1128

Najafzadeh M, Barani GA, Azamathulla HMd (2013b) GMDH to predict scour depth around vertical piers in cohesive soils. Appl Ocean Res 40:35–41

Najafzadeh M, Barani GA, Hessami Kermani MR (2013c) GMDH network based back propagation algorithm to predict abutment scour in cohesive soils. Ocean Eng 59:100–106

Najafzadeh M, Barani GA, Hessami-Kermani MR (2013d) Group method of data handling to predict scour depth around vertical piles under regular waves. Sci Iran 30(3):406–413

Najafzadeh M, Barani GA, Hessami-Kermani M-R (2013e) Group method of data handling to predict scour depth around vertical piles under regular waves. Sci Iran 20(3):406–413

Najafzadeh M, Barani GA, Hessami-Kermani MR (2014a) GMDH networks to predict scour at downstream of a ski-jump bucket. Earth Sci Inf 7(4):231–248

Najafzadeh M, Barani GA, Azamathulla HMd (2014b) Prediction of pipeline scour depth in clear-water and live-bed conditions using GMDH. Neural Compu Appl 24(3–4):629–635

Najafzadeh M, Barani GA, Hessami-Kermani MR (2014c) Estimation of pipeline scour due to waves by the group method of data handling. J Pipeline Syst Eng Pract ASCE 5(3):06014002

Najafzadeh M, Rezaie-Balf M, Rashedi E (2016a) Prediction of maximum scour depth around piers with debris accumulation using EPR, MT, and GEP models. J Hydroinf 18(5):867–884

Najafzadeh M, Etemad-Shahidi A, Lim SY (2016b) Scour prediction in long contractions using ANFIS and SVM. Ocean Eng 111:128–135

Najafzadeh M, Saberi-Movahed F, Sarkamaryan S (2017) NF-GMDH-Based self-organized systems to predict bridge pier scour depth under debris flow effects. Mar Georesour Geotechnol. https://doi.org/10.1080/1064119x.2017.1355944

Nariman-Zadeh N, Darvizeh A, Ahmad-Zadeh GR (2003) Hybrid genetic design of GMDH-type neural networks using singular value decomposition for modelling and prediction of the explosive cutting process. Proc Inst Mech Eng Part B J Eng Manuf 217(6):779–790

Oh S, Pedrycz W (2006) The design of self-organizing neural networks based on PNs and FPNs with the aid of genetic optimization and extended GMDH method. Int J Approx Reason 43:26–58

Onwubolu GC (2008) Design of hybrid differential evolution and group method in data handling networks for modeling and prediction. Inf Sci 178:3618–3634

Qin Y, Langari R, Gu L (2015) A new modeling algorithm based on ANFIS and GMDH. J Intell Fuzzy Syst 29(4):1321–1329

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) GSA: a gravitational search algorithm. Inf Sci 179(13):2232–2248

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2010) BGSA: binary gravitational search algorithm. Nat Comput 9(3):727–745

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2011) Filter modeling using gravitational search algorithm. Eng Appl Artif Intell 24(1):117–122

Sakaguchi A, Yamamoto T (2000) A GMDH network using back propagation and its application to a controller design. J IEEE 4:2691–2697

Samui P, Shahin M (2014) Relevance vector machine and multivariate adaptive regression spline for modelling ultimate capacity of pile foundation. J Numer Methods Civil Eng 1(1):37–45

Sanchez E, Shibata T, Zadeh LA (1997) Genetic algorithms and fuzzy logic systems. World Scientific, Singapore

Shaghaghi S, Bonakdari H, Gholami A, Ebtehaj I, Zeinolabedini M (2017) Comparative analysis of GMDH neural network based on genetic algorithm and particle swarm optimization in stable channel design. Appl Math Comput 313:271–286

Shahin MA (2010) Intelligent computing for modeling axial capacity of pile foundations. Can Geotech J 47(2):230–243

Shahin MA (2015) Use of evolutionary computing for modelling some complex problems in geotechnical engineering. Geomech Geoeng 10(2):109–125

Srinivasan D (2008) Energy demand prediction using GMDH networks. Neuro Comput 72(1–3):625–629

Taherkhani A, Basti A, Nariman-Zadeh N, Jamali A (2018) Achieving maximum dimensional accuracy and surface quality at the shortest possible time in single-point incremental forming via multi-objective optimization. Proc Inst Mech Eng Part B J Eng Manuf. https://doi.org/10.1177/0954405418755822

Tanyildizi H, Cevik A (2010) Modeling mechanical performance of lightweight concrete containing silica fume exposed to high temperature using genetic programming. Constr Build Mater 24(12):2612–2618

Xie Y, Liu C, Gao S, Tang J, Chen Y (2017) Lateral load bearing capacity of offshore high-piled wharf with batter piles. Ocean Eng 142:377–387

Yaseen ZM, Ramal MM, Diop L, Jaafar O, Demir V, Kisi O (2018) Hybrid adaptive neuro-fuzzy models for water quality index estimation. Water Resour Manag. https://doi.org/10.1007/s11269-018-1915-7

Zahiri A, Najafzadeh M (2018) Optimized expressions to evaluate the flow discharge in main channels and floodplains using evolutionary computing and model classification. Int J River Basin Manag 16(1):123–132

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by V. Loia.

Rights and permissions

About this article

Cite this article

Harandizadeh, H., Toufigh, M.M. & Toufigh, V. Application of improved ANFIS approaches to estimate bearing capacity of piles. Soft Comput 23, 9537–9549 (2019). https://doi.org/10.1007/s00500-018-3517-y

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-018-3517-y