Abstract

The objective of this study is to present a novel method of level-2 uncertainty analysis in risk assessment by means of uncertainty theory. In the proposed method, aleatory uncertainty is characterized by probability distributions, whose parameters are affected by epistemic uncertainty. These parameters are described as uncertain variables. For monotone risk models, such as fault trees or event trees, the uncertainty is propagated analytically based on the operational rules of uncertain variables. For non-monotone risk models, we propose a simulation-based method for uncertainty propagation. Three indexes, i.e., average risk, value-at-risk and bounded value-at-risk, are defined for risk-informed decision making in the level-2 uncertainty setting. Two numerical studies and an application on a real example from literature are worked out to illustrate the developed method. A comparison is made to some commonly used uncertainty analysis methods, e.g., the ones based on probability theory and evidence theory.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Uncertainty modeling and analysis is an essential part of probabilistic risk assessment (PRA) and has drawn numerous attentions since 1980s (Apostolakis 1990; Parry and Winter 1981). Two types of uncertainty are usually distinguished: aleatory uncertainty, which refers to the uncertainty inherent in the physical behavior of a system, and epistemic uncertainty, which refers to the uncertainty in the modeling caused by lack of knowledge on the system behavior (Kiureghian and Ditlevsen 2009). In practice, uncertainty modeling and analysis involving both aleatory and epistemic uncertainty is often formulated in a level-2 setting: aleatory uncertainty is considered by developing probabilistic models for risk assessment, while the parameters in the probabilistic models might subject to epistemic uncertainty (Aven et al. 2014).

In general, it has been well acknowledged that aleatory uncertainty should be modeled using probability theory. However, there appears to be no consensus on which mathematical framework should be used to describe epistemic uncertainty, since its modeling usually involves subjective information from human judgements. Indeed, various mathematical frameworks have been proposed in the literature to model the epistemically uncertain variables, e.g., probability theory (subjective interpretation), evidence theory, possibility theory (Aven 2013; Aven and Zio 2011; Helton et al. 2010). As a result, different methods for level-2 uncertainty analysis are developed. Aven et al. (2014) systematically elaborate on level-2 uncertainty analysis methods and developed a purely probabilistic for level-2 uncertainty analysis. Limbourg and Rocquigny (2010) apply evidence theory to both level-1 and level-2 uncertainty modeling and analysis, and the two settings were compared through a benchmark problem. Some explanations of the results are discussed in the context of evidence theory. Considering the large calculation cost for level-2 uncertainty analysis, Limbourg et al. (2010) develop an accelerated method for monotonous problems using the monotonous reliability method (MRM). Pedroni et al. (2013) and Pedroni and Zio (2012) model the epistemic uncertainty using possibility distributions and develop a level-2 Monte Carlo simulation for uncertainty analysis, which is then compared to a purely probabilistic approach and an evidence theory-based (ETB) approach. Pasanisi et al. (2012) reinterpret the level-2 purely probabilistic frameworks in the light of Bayesian decision theory and apply the approach to risk analysis. Hybrid methods based on probability theory and evidence theory are also presented (Aven et al. 2014). Baraldi et al. (2013) introduce the hybrid level-2 uncertainty models to consider maintenance policy performance assessment.

In this paper, we enrich the research of level-2 uncertainty analysis by introducing a new mathematical framework, the uncertainty theory, to model the epistemically uncertain variables. Uncertainty theory has been founded in 2007 by Liu (2007) as an axiomatic mathematical framework to model subjective belief degrees. It is viewed as a reasonable and effective approach to describe epistemic uncertainty (Kang et al. 2016). To simulate the evolution of an uncertain phenomenon with time, concepts of uncertain process (Liu 2015) and uncertain random process (Gao and Yao 2015) are proposed. The uncertain differential equation is also developed as an effective tool to model events affected by epistemic uncertainty (Yang and Yao 2016). After these years of development, uncertainty theory has been applied in various areas, including finance (Chen and Gao 2013; Guo and Gao 2017), decision making under uncertain environment (Wen et al. 2015a, b), game theory (Yang and Gao 2013, 2016; Gao et al. 2017; Yang and Gao 2014). There are also considerable real applications in reliability analysis and risk assessment considering epistemic uncertainties. For example, Zeng et al. (2013) propose a new concept of belief reliability based on uncertainty theory accounting for both aleatory and epistemic uncertainties. Wen et al. (2017) develop an uncertain optimization model of spare parts inventory for equipment system, where the subjective belief degree is adopted to compensate the data deficiency. Ke and Yao (2016) apply uncertainty theory to optimize scheduled replacement time under block replacement policy considering human uncertainty. Wen and Kang (2016) model the reliability of systems with both random components and uncertain components. Wang et al. (2017) develop a new structural reliability index based on uncertainty theory.

To the best of our knowledge, in this paper, it is the first time that uncertainty theory is applied to level-2 uncertainty analysis. Through comparisons to some commonly used level-2 uncertainty analysis methods, new insights are brought with respect to strength and limitations of the developed method.

The remainder of the paper is structured as follows. Section 2 recalls some basic concepts of uncertainty theory. Level-2 uncertainty analysis method is developed in Sect. 3, for monotone and non-monotone risk models. Numerical case studies and applications are presented in Sect. 4. The paper is concluded in Sect. 5.

2 Preliminaries

In this section, we briefly review some basic knowledge on uncertainty theory. Uncertainty theory is a new branch of axiomatic mathematics built on four axioms, i.e., normality, duality, subadditivity and product axioms. Founded by Liu (2007) and refined by Liu (2010) , uncertainty theory has been widely applied as a new tool for modeling subjective (especially human) uncertainties. In uncertainty theory, belief degrees of events are quantified by defining uncertain measures:

Definition 1

(Uncertain measure Liu 2007) Let \(\varGamma \) be a nonempty set, and  be a \(\sigma \)-algebra over \(\varGamma \). A set function

be a \(\sigma \)-algebra over \(\varGamma \). A set function  is called an uncertain measure if it satisfies the following three axioms,

is called an uncertain measure if it satisfies the following three axioms,

Axiom 1

(Normality Axiom)  for the universal set \(\varGamma \).

for the universal set \(\varGamma \).

Axiom 2

(Duality Axiom)  for any event

for any event  .

.

Axiom 3

(Subadditivity Axiom) For every countable sequence of events \(\varLambda _1, \varLambda _2,\ldots \), we have

Uncertain measures of product events are calculated following the product axiom (Liu 2009):

Axiom 4

(Product Axiom) Let  be uncertainty spaces for \(k=1,2,\ldots \). The product uncertain measure

be uncertainty spaces for \(k=1,2,\ldots \). The product uncertain measure  is an uncertain measure satisfying

is an uncertain measure satisfying

where  are \(\sigma \)-algebras over \(\varGamma _k\), and \(\varLambda _k\) are arbitrarily chosen events from

are \(\sigma \)-algebras over \(\varGamma _k\), and \(\varLambda _k\) are arbitrarily chosen events from  for \(k=1,2,\ldots \), respectively.

for \(k=1,2,\ldots \), respectively.

In uncertainty theory, if an uncertain measure of one event can take multiple reasonable values, a value as close to 0.5 as possible is assigned to the event so as to maximize the uncertainty (maximum uncertainty principle) (Liu 2009). Hence, the uncertain measure of an arbitrary event in the product \(\sigma \)-algebra  is calculated by

is calculated by

Definition 2

(Uncertain variable Liu 2007) An uncertain variable is a function \(\xi \) from an uncertainty space  to the set of real numbers such that \(\left\{ \xi \in {\mathcal {B}} \right\} \) is an event for any Borel set \({\mathcal {B}}\) of real numbers.

to the set of real numbers such that \(\left\{ \xi \in {\mathcal {B}} \right\} \) is an event for any Borel set \({\mathcal {B}}\) of real numbers.

Definition 3

(Uncertainty distribution Liu 2007) The uncertainty distribution \(\varPhi \) of an uncertain variable \(\xi \) is defined by  for any real number x.

for any real number x.

For example, a linear uncertain variable  has an uncertainty distribution

has an uncertainty distribution

and a normal uncertain variable \(\xi \sim {\mathcal {N}}(e,\sigma ) \) has an uncertainty distribution

An uncertainty distribution \(\varPhi \) is said to be regular if it is a continuous and strictly increasing with respect to x, with \(0<\varPhi (x)<1 \), and \(\displaystyle \lim _{x \rightarrow -\infty } \varPhi (x)=0 \), \(\displaystyle \lim _{x \rightarrow +\infty } \varPhi (x)=1 \). A regular uncertainty distribution has an inverse function, and this inverse function is defined as the inverse uncertainty distribution, denoted by \(\varPhi ^{-1}(\alpha ) \), \(\alpha \in (0,1) \). It is clear that linear uncertain variables and normal uncertain variables are regular, and their inverse uncertainty distributions are written as:

Inverse uncertainty distributions play a central role in uncertainty theory, since the uncertainty distribution of a function of uncertain variables is calculated using the inverse uncertainty distributions:

Theorem 1

(Operational law Liu 2010 Let \(\xi _1,\) \(\xi _2,\ldots ,\xi _n \) be independent uncertain variables with regular uncertainty distributions \(\varPhi _1,\varPhi _2,\ldots ,\varPhi _n \), respectively. If \(f(\xi _1,\xi _2,\ldots ,\xi _n) \) is strictly increasing with respect to \(\xi _1,\xi _2,\ldots ,\xi _m \) and strictly decreasing with respect to \(\xi _{m+1},\) \(\xi _{m+2},\ldots ,\xi _n \), then \(\xi =f(\xi _1,\xi _2,\ldots ,\xi _n) \) has an inverse uncertainty distribution

Definition 4

(Expected value Liu 2007) Let \(\xi \) be an uncertain variable. Then the expected value of \(\xi \) is defined by

It is clear that, if \(\xi \) has an uncertainty distribution \(\varPhi (x) \), the expected value of \(\xi \) can be calculated by (Liu 2015):

For \(\xi \) with a regular uncertainty distribution, the expected value \(E\left[ \xi \right] \) is given by (Liu 2015)

3 Level-2 uncertainty analysis based on uncertainty theory

In this section, a new method for level-2 uncertainty analysis is presented based on uncertainty theory. Sect. 3.1 formally defines the problem of level-2 uncertainty analysis. Then, the uncertainty analysis method is introduced for monotone and non-monotone models in Sects. 3.2 and 3.3, respectively.

3.1 Problem definition

Conceptually, uncertainty analysis of a risk model can be represented as:

where z is the safety variable of the system of interest, \({\mathbf {x}}=(x_1,x_2,\ldots ,x_n) \) is a vector of input parameters, p is the risk indicator expressed in probabilistic terms and calculated by a distance function \(h(\cdot ) \) between the value of z and safety threshold \(z_{th} \):

In practice, \(g(\cdot ) \) could be logical models, e.g., fault trees, event trees, Bayesian networks, or physical models of failure dynamics, e.g., see Baraldi and Zio (2008) and Ripamonti et al. (2013).

Uncertainty in (10) is assumed to come from the input parameters \({\mathbf {x}} \), i.e., model uncertainty (e.g., see Nilsen and Aven (2003)) is not considered in the present paper. Aleatory and epistemic uncertainty are considered separately. Depending on the ways the uncertainty in the model parameters is handled, level-1 and level-2 uncertainty models are distinguished.

Level-1 uncertainty models separate the input vector into \({\mathbf {x}}=({\mathbf {a}}, {\mathbf {e}}) \), where \({\mathbf {a}}=(x_1,x_2,\ldots ,x_m) \) represents the parameters affected by aleatory uncertainty while \({\mathbf {e}}=(x_{m+1},x_{m+2},\ldots , x_{n}) \) represents the parameters that are affected by epistemic uncertainty (Limbourg and Rocquigny 2010). In level-1 uncertainty models, probability theory is used to model the aleatory uncertainty in \({\mathbf {a}}=(x_1,x_2,\ldots ,x_m) \) by identifying their probability density functions (PDF) \(f(x_i|\theta _i) \). These PDFs are assumed to be known, i.e., the parameters in the PDFs, denoted by \({\varvec{\Theta }}=(\theta _1,\theta _2,\ldots ,\theta _n)\), are assumed to have precise values. In practice, however, \({\varvec{\Theta }}=(\theta _1,\theta _2,\ldots ,\theta _n) \), are subject to epistemic uncertainty, and the corresponding uncertainty model is called level-2 uncertainty model.

In this paper, we consider the generic model in (10) and develop a new method for level-2 uncertainty analysis, based on uncertainty theory. More specifically, it is assumed that:

-

(1)

The aleatory uncertainty in the input parameters is described by the PDFs \(f(x_i|\theta _i), \quad i=1,2,\ldots ,n \).

-

(2)

\({\varvec{\Theta }}=(\theta _1,\theta _2,\ldots ,\theta _n) \) are modeled as independent uncertain variables with regular uncertainty distributions \(\varPhi _1,\varPhi _2,\ldots ,\varPhi _n \).

The uncertainty distributions \(\varPhi _1,\varPhi _2,\ldots ,\varPhi _n \) describe the epistemic uncertainty in the parameter values of \({\varvec{\Theta }}=(\theta _1,\theta _2,\ldots ,\theta _n) \) and can be determined based on expert knowledge, using uncertain statistical methods such as interpolation (Liu 2015), optimization (Hou 2014) and the method of moments (Wang and Peng 2014). The problem, then, becomes: given \(\varPhi _1,\varPhi _2,\ldots ,\varPhi _n \), how to assess the epistemic uncertainty in the risk index of interest p. In the following sections, we first develop the uncertainty analysis method for monotone models in Sect. 3.2, where p is a monotone function of the parameters \({\varvec{\Theta }} \), and, then, discuss a more general case in Sect. 3.3, where there are no requirements on the monotony of the risk model.

3.2 Monotone risk model

3.2.1 Uncertainty analysis using operational laws

In monotone uncertainty models, the risk index of interest can be explicitly expressed as:

where \({\varvec{\Theta }}=(\theta _1,\theta _2,\ldots ,\theta _n) \) is the vector of the parameters in the PDFs whose values are subject to epistemic uncertainty and h is a strictly monotone function with respect to \({\varvec{\Theta }} \). According to Assumption (2) in Sect. 3.1, the risk index of interest p is also an uncertain variable. Given regular uncertainty distributions \(\varPhi _1,\varPhi _2,\ldots ,\varPhi _n \) for \(\theta _1,\theta _2,\ldots ,\theta _n \), the epistemic uncertainty in p can be represented by an uncertainty distribution \(\varPsi (p) \). Without loss of generality, we assume h is strictly increasing with respect to \(\theta _1,\theta _2,\ldots ,\theta _m \), and strictly decreasing with respect to \(\theta _{m+1},\theta _{m+2},\ldots ,\theta _n \). Then, the inverse uncertainty distribution of p can be calculated based on Theorem 1, i.e.,

The uncertainty distribution \(\varPsi (p) \) can be obtained from the inverse function \(\varPsi _p^{-1}(\alpha ) \).

Two risk indexes are defined for risk-informed decision making, considering the level-2 uncertainty settings presented.

Definition 5

Let p represent a probabilistic risk index and \(\varPsi (p) \) be the uncertainty distribution of p. Then

is defined as the average risk, and

is defined as the value-at-risk.

It should be noted that the average risk can be also calculated using the inverse distribution of p:

and the value-at-risk can also be calculated by

According to Definition 5, the average risk is the expected value of the uncertain variable p, which reflects our average belief degree of the risk index p. A greater value of the average risk indicates that we believe the risk is more severe. The physical meaning of value-at-risk is that, with belief degree \(\gamma \), we believe that the value of the risk index is p. It is clear that, for a fixed value of \(\gamma \), a greater \(\text {VaR}(\gamma ) \) means that the risk is more severe.

3.2.2 Numerical case study

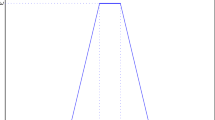

We take a simple fault tree (shown in Fig. 1) as a numerical case study to demonstrate the application of the developed method. The fault tree represents a top event A as the union (logic gate OR) of the two basic events \(B_1\) and \(B_2\). The risk index of interest is the probability that event A occurs before time \(t_0\), determined by

where \(t_A\) denotes the occurrence time of A. Let \(t_{B1} \) and \(t_{B2} \) be the occurrence times of events \(B_1\) and \(B_2\), respectively. Then, \(t_A=\min (t_{B1},t_{B2}) \). Assume that \(t_{B1} \) and \(t_{B2} \) follow exponential distributions with parameters \(\lambda _1\) and \(\lambda _2\), respectively. Thus, (5) can be further expressed as:

It is assumed that \(\lambda _1\) and \(\lambda _2\) are subject to epistemic uncertainty. The developed methods in Sect. 3.2.1 are used for level-2 uncertainty analysis based on uncertainty theory. In accordance with expert experience, linear uncertainty distributions are used to model the epistemic uncertainty in \(\lambda _1\) and \(\lambda _2\), i.e.,  and

and  . From (13), the inverse uncertainty distribution of the risk index p is calculated as

. From (13), the inverse uncertainty distribution of the risk index p is calculated as

and the uncertainty distribution of p is

According to (16) and (17), \(\overline{p}\) and VaR can be calculated by

Assuming the parameter values in Table 1, we have \(\overline{p}=0.1519 \) and \(\text {VaR}(0.9)=0.1755 \). The results are compared to those from a similar method based on probability theory, hereafter indicated as probability-based (PB) method, whereby the belief degrees on \(\lambda _1\), \(\lambda _2\) and p are modeled by random variables. In this paper, we assume that \(\lambda _1\) and \(\lambda _2\) follow uniform distributions whose parameter values are given in Table 1. Monte Carlo (MC) sampling is used to generate samples from the probability distribution of p. Average risk and value-at-risk can, then, be calculated using the MC samples:

where \(p_i,i=1,2,\ldots ,n \) are the samples obtained by MC simulation.

Figure 2 compares the distributions of the risk indexes obtained from the two methods. Both distributions have the same supports, but the uncertainty distribution has more weights on high values of the risk index than the probability theory. This means that the uncertainty theory-based (UTB) method is more conservative than the PB method, since it tends to evaluate a higher risk. This is obtained also from the values in Table 2: although both methods have roughly the same \(\overline{p} \), the UTB method yields a higher VaR(0.9), which indicates a high risk.

3.3 Non-monotone risk model

3.3.1 Uncertainty analysis using uncertain simulation

In many practical situations, the risk index of interest cannot be expressed as a strictly monotone function of the level-2 uncertain parameters. For such cases, we cannot obtain the exact uncertainty distributions for p by directly applying the operational laws. Rather, the maximum uncertainty principle needs to be used to derive the upper and lower bounds for the uncertainty distribution based on an uncertain simulation method developed by (Zhu 2012). The uncertain simulation can provide a reasonable uncertainty distribution of a function of uncertain variables and does not require the monotonicity of the function with respect to the variables. In this section, the method is extended to calculate the upper and lower bounds of an uncertainty distribution for risk assessment.

Definition 6

(Zhu 2012) An uncertain variable \(\xi \) is common if it is from the uncertain space  to \(\mathfrak {R}\) defined by \(\xi (\gamma )=\gamma \), where \({\mathcal {B}} \) is the Borel algebra over \(\mathfrak {R}\). An uncertain vector \(\varvec{\xi }=(\xi _1,\xi _2,\ldots ,\xi _n) \) is common if all the elements of \(\varvec{\xi }\) are common.

to \(\mathfrak {R}\) defined by \(\xi (\gamma )=\gamma \), where \({\mathcal {B}} \) is the Borel algebra over \(\mathfrak {R}\). An uncertain vector \(\varvec{\xi }=(\xi _1,\xi _2,\ldots ,\xi _n) \) is common if all the elements of \(\varvec{\xi }\) are common.

Theorem 2

(Zhu 2012) Let \(f:\mathfrak {R}^n\rightarrow \mathfrak {R}\) be a Borel function, and \(\varvec{\xi }=(\xi _1,\xi _2,\ldots ,\xi _n) \) be a common uncertain vector. Then the uncertainty distribution of f is:

In (26), \(\varLambda =f^{-1}(-\infty ,x) \), \(\{A_i\} \) denotes a collection of all intervals of the form \((-\infty ,a] \), \([b,+\infty ) \), \(\varnothing \) and \(\mathfrak {R}\), and each  is derived based on (27):

is derived based on (27):

where \(B\in {\mathcal {B}} \), and \(B\subset \bigcup \nolimits _{i=1}^{\infty }A_i \).

From Theorem 2, it can be seen that (27) gives a theoretical bound of each  in (26). Let

in (26). Let  ,

,  . It is clear that any values within m and \(1-n\) is a reasonable value for

. It is clear that any values within m and \(1-n\) is a reasonable value for  . Hence, we use m as the upper bound and \(1-n\) as the lower bound of

. Hence, we use m as the upper bound and \(1-n\) as the lower bound of  and develop a numerical algorithm for level-2 uncertainty analysis.

and develop a numerical algorithm for level-2 uncertainty analysis.

Algorithm 1. (Level-2 uncertainty analysis for non-monotone models)

- step 1:

-

Set \(m_1(i)=0 \) and \(m_2(i)=0 \), \(i=1,2,\ldots ,n \).

- step 2:

-

Randomly generate \(u_k=\left( \gamma _k^{(1)},\gamma _k^{(2)},\ldots ,\gamma _k^{(n)} \right) \) with \(0<\varPhi _i\left( \gamma _k^{(i)}\right) <1 \), \(i=1,2,\ldots ,n \), \(k=1,2,\ldots ,N \).

- step 3:

-

From \(k=1\) to \(k=N\), if \(f(u_k)\le c \), \(m_1(i)=m_1(i)+1 \), denote \(x_{m_1(i)}^{(i)}=\gamma _k^{(i)} \);

otherwise, \(m_2(i)=m_2(i)+1 \), denote \(y_{m_2(i)}^{(i)}=\gamma _k^{(i)} \), \(i=1,2,\ldots ,n \).

- step 4:

-

Rank \(x_{m_1}^{(i)} \) and \(y_{m_2}^{(i)} \) from small to large, respectively.

- step 5:

-

Set

$$\begin{aligned} a^{(i)}=&\,\,\varPhi \left( x_{m_1(i)}^{(i)}\right) \wedge \left( 1-\varPhi \left( x_1^{(i)}\right) \right) \wedge \\&\left( \varPhi \left( x_1^{(i)}\right) +1-\varPhi \left( x_2^{(i)}\right) \right) \wedge \\&\left( \varPhi \left( x_{m_1(i)-1}^{(i)}\right) +1 -\varPhi \left( x_{m_1(i)}^{(i)}\right) \right) ;\\ b^{(i)}=&\,\,\varPhi \left( y_{m_2(i)}^{(i)}\right) \wedge \left( 1-\varPhi \left( y_1^{(i)}\right) \right) \wedge \\&\left( \varPhi \left( y_1^{(i)}\right) +1-\varPhi \left( y_2^{(i)}\right) \right) \wedge \\&\left( \varPhi \left( y_{m_2(i)-1}^{(i)}\right) +1 -\varPhi \left( y_{m_2(i)}^{(i)}\right) \right) . \end{aligned}$$ - step 6:

-

\(L_{1U}^{(i)}=a^{(i)},L_{1L}^{(i)}=1-b^{(i)},L_{2U}^{(i)}=b^{(i)},L_{2L}^{(i)}=1-a^{(i)}\).

- step 7:

-

If \(a_U=L_{1U}^{(1)}\wedge L_{1U}^{(2)}\wedge \cdots \wedge L_{1U}^{(n)}>0.5 \), \(L_U=a_U \); if \(b_U=L_{2L}^{(1)}\wedge L_{2L}^{(2)} \wedge \cdots \wedge L_{2L}^{(n)}>0.5 \), \(L_U=1-b_U \); otherwise, \(L_U=0.5 \).

If \(a_L=L_{1L}^{(1)}\wedge L_{1L}^{(2)}\wedge \cdots \wedge L_{1L}^{(n)}>0.5 \), \(L_L=a_L \); if \(b_L=L_{2U}^{(1)}\wedge L_{2U}^{(2)} \wedge \cdots \wedge L_{2U}^{(n)}>0.5 \), \(L_L=1-b_L \); otherwise, \(L_L=0.5 \).

Through this algorithm, the upper and lower bounds for the uncertainty distribution of p can be constructed, denoted by \(\left[ \varPsi _L(p),\varPsi _U(p)\right] \). Similar to the monotone case, we define two risk indexes considering the level-2 uncertainty:

Definition 7

Let p described by (11) be the probability that a hazardous event will happen, and let \(\varPsi _L(p) \) and \(\varPsi _U(p) \) be the lower bound and upper bound of the uncertainty distribution of p, respectively. Then

is defined as average risk, and

is defined as bounded value-at-risk.

The defined average risk is a reflection of the average belief degree of the risk index p, and a greater value of \(\overline{p} \) means more severe risk that we believe we will suffer. The meaning of the bounded value-at-risk is that, with belief degree \(\gamma \), we believe that the value of risk index is within the interval \(\left[ \text {VaR}_L,\text {VaR}_U\right] (\gamma ) \). Obviously, if we fix the value of \(\gamma \), a wider bounded value-at-risk means a more conservative assessment result. Meanwhile, we believe a greater \(\text {VaR}_U(\gamma ) \) reflects that the risk is more severe.

3.3.2 Numerical case study

We consider a problem of structural reliability in Choi et al. (2007) to further elaborate on the developed method. Let the limit-state function of a structure be

where \(x_1\) and \(x_2\) are random variables, and the risk index of interest is the probability that the structure fails, which can be written as

Assume that \(x_1\) and \(x_2\) follow normal distributions with parameters \((\mu _1,\sigma _1) \) and \((\mu _2,\sigma _2) \), respectively. The parameters \(\mu _1\) and \(\mu _2\) are not precisely known due to the epistemic uncertainty, whereas \(\sigma _1\) and \(\sigma _2\) are known as crisp values. Based on experts knowledge, the belief degree of \(\mu _1\) is modeled by a linear uncertainty distribution and \(\mu _2\) is described by a normal uncertainty distribution (see Table 3). The bounded uncertainty distribution can, then, be obtained through Algorithm 1.

The solid line and dashed line in Fig. 3 show the upper and lower uncertainty distributions of the risk index \(p_f\), respectively. Average risk and bounded value-at-risk are calculated using the numerical method based on (28) and (29), i.e., \(\overline{p}=0.001980 \) and \(\left[ \text {VaR}_L,\text {VaR}_U\right] (0.9)=[0.001689, 0.003548] \).

Since the developed method offers a bounded uncertainty distribution of \(p_f\), it is then compared with an evidence theory-based (ETB) method, in which the belief degree of \(p_f\) is also given as upper and lower distributions called plausibility (Pl) and belief (Bel) function, respectively. In this paper, the ETB method models the belief degrees of \(\mu _1\) and \(\mu _2\) using probability distributions (see Table 3). A double loop Monte Carlo simulation combined with a discretization method for getting basic probability assignments (BPAs) is used to obtain \(Bel\left( p_f\right) \) and \(Pl\left( p_f\right) \) (Limbourg and Rocquigny 2010; Tonon 2004). In Fig. 3, the dotted line and dot–dash line represent Bel and Pl, respectively. It should be noted that although we use Bel and Pl as mathematical constructs, they are not strictly the concepts of belief and plausibility defined by Shafer (i.e., the degree of truth of a proposition Shafer 1976). The two functions only represent bounds on a true quantity. To illustrate this, a cumulative density function (CDF) of \(p_f\) is calculated via a double loop MC simulation method, shown as the crossed line in Fig. 3. It is seen that the CDF is covered by the area enclosed by Bel and Pl. In this sense, the CDF obtained in PB method is a special case of the ETB model, and the \(Bel\left( p_f\right) \) and \(Pl\left( p_f\right) \) give a reasonable bound of the probability distribution of \(p_f\).

Given \(Bel\left( p_f\right) \) and \(Pl\left( p_f\right) \), the two risk indexes can be calculated by

and

and the results are tabulated in Table 4.

Figure 3 shows a comparison of the distributions of belief degrees on \(p_f\) in UTB method and ETB method. The distributions have the same supports, whereas the upper and lower uncertainty distributions fully cover the CDF and the area enclosed by Bel and Pl, which indicates that the developed method is more conservative. This is because the subjective belief described by uncertainty distributions usually tends to be more conservative and is more easily affected by epistemic uncertainty. This phenomenon is also reflected by the two defined risk indexes: the average risk \(\overline{p}\)s are nearly the same on different theory basis, while the bounded value-at-risk of ETB method is within that of the UTB method.

We also find that the bounded value-at-risk obtained by the developed UTB method may be too wide for some decision makers. This may be a shortcoming of the proposed method. Therefore, when choosing a method for risk analysis from the PB method, ETB method and UTB method, we need to consider the attitude of decision maker. For a conservative decision maker, the bounded uncertainty distribution is an alternative choice.

4 Application

In this section, the developed level-2 uncertainty analysis method is applied to a real application of flood risk assessment. In Sect. 4.1, we briefly introduce the system of interest. Sections 4.2 and 4.3 show the process of level-2 uncertainty analysis based on uncertainty theory, to illustrate the effectiveness of the method.

Flooding risk system (Limbourg and Rocquigny 2010)

4.1 System description

In this case study, we consider a residential area located near a river, which is subject to potential risks of floods, as shown in Fig. 4. As a mitigation and prevention measure, a dike is constructed to protect this area. The final goal is to calculate the risk of floods to determine whether the dike needs to be heightened. A mathematical model is develop in (Limbourg and Rocquigny 2010) which calculates the maximal water level \(Z_c\):

where \(Z_m\) denotes the riverbed level at the upstream part of the river, \(Z_v\) denotes the riverbed level at the downstream part of the river, \(K_s\) denotes the friction coefficient of the riverbed, Q denotes the yearly maximal water flow, l denotes the length of river, and b denotes the width of river (Limbourg et al. 2010). The risk of floods can, then, be calculated as the probability that the annual maximum water level exceeds the dike height:

4.2 Parameter setting

The input variables in 34 are assumed to be random variables and the form of their PDFs are assumed to be known, as shown in Table 5 (Limbourg and Rocquigny 2010). However, due to limited statistical data, the distribution parameters of these PDFs cannot be precisely estimated using statistic methods and, therefore, are affected by epistemic uncertainty, which should be evaluated based on experts knowledge. In this paper, the experts knowledge on the distribution of these parameters is obtained by asking the experts to give the uncertainty distributions of the parameters, as shown in Table 5. For example, the yearly maximal water flow, denoted by Q, follows a Gumbel distribution \(Gum(\alpha ,\beta ) \), and according to expert judgements, \(\alpha \) and \(\beta \) follow normal uncertainty distributions \({\mathcal {N}}_\alpha (1013,48) \) and \({\mathcal {N}}_\beta (558,36) \), respectively. In addition, considering some physical constraints, the input quantities also have theoretical bounds, as given in Table 5.

4.3 Results and discussion

Uncertain simulation method is used to propagate the level-2 uncertainty using Algorithm 1. The theoretical bounds in Table 5 are considered by truncating the probability distributions at these bounds. The lower and upper bounds for the uncertainty distributions of \(p_{\text {flood}}\) are shown in Fig. 5, which represents the belief degrees on \(p_{\text {flood}}\) considering the level-2 uncertainty. Average risk and bounded value-at-risk are calculated based on (28) and (29) and presented in Table 6.

It follows that the average yearly risk is \(p_{\text {flood}}\), which corresponds to an average return period of 62 years. This is unacceptable in practice, because it is too short when compared to a commonly required 100-year-return period. To solve this problem, one measure is to heighten the dike for a more reliable protection. Another solution might be increasing the friction coefficient of the riverbed \(K_s\), noting from 34 that \(Z_c\) decreases with \(K_s\).

The bounded value-at-risk is relatively wide, which indicates that due to the presence of epistemic uncertainty, we cannot be too confirmed on the calculated risk index. The same conclusion is also drawn from Fig. 5: the difference between the upper and lower bounds of the uncertainty distributions are large, indicating great epistemic uncertainty. To reduce the effect of epistemic uncertainty, more historical data need to be collected to support a more precise estimation of the distribution parameters in the level-1 probability distributions.

5 Conclusions

In this paper, a new level-2 uncertainty analysis method is developed based on uncertainty theory. The method is discussed in two respects: for monotone risk models, where the risk index of interest is expressed as an explicit monotone function of the uncertain parameters, and level-2 uncertainty analysis is conducted based on operational laws of uncertainty variables; for non-monotone risk models, an uncertain simulation-based method is developed for level-2 uncertainty analysis. Three indexes, i.e., average risk, value-at-risk and bounded value-at-risk, are defined for risk-informed decision making in the level-2 uncertainty setting. Two numerical studies and an application on a real example from literature are worked out to illustrate the developed method. The developed method is also compared to some commonly used level-2 uncertainty analysis methods, e.g., PB method and ETB method. The comparisons show that, in general, the UTB method is more conservative than the methods based on probability theory and evidence theory.

References

Apostolakis G (1990) The concept of probability in safety assessments of technological systems. Science 250(4986):1359–1364

Aven T (2013) On the meaning of a black swan in a risk context. Saf Sci 57(8):44–51

Aven T, Zio E (2011) Some considerations on the treatment of uncertainties in risk assessment for practical decision making. Reliab Eng Syst Saf 96(1):64–74

Aven T, Baraldi P, Flage R, Zio E (2014) Uncertainty in risk assessment: the representation and treatment of uncertainties by probabilistic and non-probabilistic methods. Wiley, Chichester

Baraldi P, Zio E (2008) A combined monte carlo and possibilistic approach to uncertainty propagation in event tree analysis. Risk Anal 28(5):1309–1326

Baraldi P, Compare M, Zio E (2013) Maintenance policy performance assessment in presence of imprecision based on dempstercshafer theory of evidence. Inf Sci 245(24):112–131

Chen X, Gao J (2013) Uncertain term structure model of interest rate. Soft Comput 17(4):597–604

Choi SK, Canfield RA, Grandhi RV (2007) Reliability-based structural design. Springer, London

Gao J, Yao K (2015) Some concepts and theorems of uncertain random process. Int J Intell Syst 30(1):52–65

Gao J, Yang X, Liu D (2017) Uncertain shapley value of coalitional game with application to supply chain alliance. Appl Soft Comput 56:551–556

Guo C, Gao J (2017) Optimal dealer pricing under transaction uncertainty. J Intell Manuf 28(3):657–665

Helton JC, Johnson JD, Oberkampf WL, Sallaberry CJ (2010) Representation of analysis results involving aleatory and epistemic uncertainty. Int J Gen Syst 39(6):605–646

Hou Y (2014) Optimization model for uncertain statistics based on an analytic hierarchy process. Math Probl Eng 2014:1–6

Kang R, Zhang Q, Zeng Z, Zio E, Li X (2016) Measuring reliability under epistemic uncertainty: review on non-probabilistic reliability metrics. Chin J Aeronaut 29(3):571–579

Ke H, Yao K (2016) Block replacement policy with uncertain lifetimes. Reliab Eng Syst Saf 148:119–124

Kiureghian AD, Ditlevsen O (2009) Aleatory or epistemic? Does it matter? Struct Saf 31(2):105–112

Limbourg P, Rocquigny ED (2010) Uncertainty analysis using evidence theory c confronting level-1 and level-2 approaches with data availability and computational constraints. Reliab Eng Syst Saf 95(5):550–564

Limbourg P, Rocquigny ED, Andrianov G (2010) Accelerated uncertainty propagation in two-level probabilistic studies under monotony. Reliab Eng Syst Saf 95(9):998–1010

Liu B (2007) Uncertainty theory, 2nd edn. Springer, Berlin

Liu B (2009) Some research problems in uncertainty theory. J Uncertain Syst 3(1):3–10

Liu B (2010) Uncertainty theory: a branch of mathematics for modeling human uncertainty. Springer, Berlin

Liu B (2015) Uncertainty theory, 5th edn. Springer, Berlin

Nilsen T, Aven T (2003) Models and model uncertainty in the context of risk analysis. Reliab Eng Syst Saf 79(3):309–317

Parry GW, Winter PW (1981) Characterization and evaluation of uncertainty in probabilistic risk analysis. Nucl Saf 22:1(1):28–42

Pasanisi A, Keller M, Parent E (2012) Estimation of a quantity of interest in uncertainty analysis: some help from bayesian decision theory. Reliab Eng Syst Saf 100(3):93–101

Pedroni N, Zio E (2012) Empirical comparison of methods for the hierarchical propagation of hybrid uncertainty in risk assessment in presence of dependences. Int J Uncertain Fuzziness Knowl Based Syst 20(4):509–557

Pedroni N, Zio E, Ferrario E, Pasanisi A, Couplet M (2013) Hierarchical propagation of probabilistic and non-probabilistic uncertainty in the parameters of a risk model. Comput Struct 126(2):199–213

Ripamonti G, Lonati G, Baraldi P, Cadini F, Zio E (2013) Uncertainty propagation in a model for the estimation of the ground level concentration of dioxin/furans emitted from a waste gasification plant. Reliab Eng Syst Saf 120(6):98–105

Shafer G (1976) A mathematical theory of evidence. Princeton University Press, Princeton

Tonon F (2004) Using random set theory to propagate epistemic uncertainty through a mechanical system. Reliab Eng Syst Saf 85(1):169–181

Wang X, Peng Z (2014) Method of moments for estimating uncertainty distributions. J Uncertain Anal Appl 2(1):5

Wang P, Zhang J, Zhai H, Qiu J (2017) A new structural reliability index based on uncertainty theory. Chin J Aeronaut 30(4):1451–1458

Wen M, Kang R (2016) Reliability analysis in uncertain random system. Fuzzy Optim Decis Mak 15(4):491–506

Wen M, Qin Z, Kang R, Yang Y (2015a) The capacitated facility location–allocation problem under uncertain environment. J Intell Fuzzy Syst 29(5):2217–2226

Wen M, Qin Z, Kang R, Yang Y (2015b) Sensitivity and stability analysis of the additive model in uncertain data envelopment analysis. Soft Comput 19(7):1987–1996

Wen M, Han Q, Yang Y, Kang R (2017) Uncertain optimization model for multi-echelon spare parts supply system. Appl Soft Comput 56:646–654

Yang X, Gao J (2013) Uncertain differential games with application to capitalism. J Uncertain Anal Appl 1(17):1–11

Yang X, Gao J (2014) Bayesian equilibria for uncertain bimatrix game with asymmetric information. J Intell Manuf 28(3):515–525

Yang X, Gao J (2016) Linear\(-\)quadratic uncertain differential game with application to resource extraction problem. IEEE Trans Fuzzy Syst 24(4):819–826

Yang X, Yao K (2016) Uncertain partial differential equation with application to heat conduction. Fuzzy Optim Decis Mak 16(3):379–403

Zeng Z, Wen M, Kang R (2013) Belief reliability: a new metrics for products reliability. Fuzzy Optim Decis Mak 12(1):15–27

Zhu Y (2012) Functions of uncertain variables and uncertain programming. J Uncertain Syst 6(4):278–288

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 61573043, 71671009).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Human and animal rights

This article does not contain any studies with human participants performed by any of the authors.

Additional information

Communicated by Y. Ni.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Zhang, Q., Kang, R. & Wen, M. A new method of level-2 uncertainty analysis in risk assessment based on uncertainty theory. Soft Comput 22, 5867–5877 (2018). https://doi.org/10.1007/s00500-018-3337-0

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-018-3337-0