Abstract

We consider tessellations of the Euclidean \((d-1)\)-sphere by \((d-2)\)-dimensional great subspheres or, equivalently, tessellations of Euclidean d-space by hyperplanes through the origin; these we call conical tessellations. For random polyhedral cones defined as typical cones in a conical tessellation by random hyperplanes, and for random cones which are dual to these in distribution, we study expectations for a general class of geometric functionals. They include combinatorial quantities, such as face numbers, as well as, for example, conical intrinsic volumes. For isotropic conical tessellations (those generated by random hyperplanes with spherically symmetric distribution), we determine the complete covariance structure of the random vector whose components are the k-face contents of the induced spherical random polytopes. This result can be considered as a spherical counterpart of a classical result due to Roger Miles.


1 Introduction

A major theme of stochastic geometry, since the seminal work of Rényi and Sulanke in 1963/64, has always been the investigation of geometric functionals of random convex polytopes. The survey articles [19, 33, 39] give an impressive picture of the progress in recent years. They also reveal that, as far as expectations and higher moments, a prerequisite for the study of limit theorems, are concerned, one generally has to be satisfied with asymptotic results and estimates, whereas explicit results are very rare.

Most of the random polytopes studied so far live in Euclidean spaces. In other spaces of constant curvature, several results may have parallel versions, but also new phenomena are to be expected, in particular in spherical space due to its compactness. A recent study [7] of spherically convex hulls of random points in \({\mathbb S}^{d-1}\) already exhibited some phenomena which cannot be observed in Euclidean spaces. The present paper is devoted to random polytopes in the unit sphere \({\mathbb S}^{d-1}\) of Euclidean space \({\mathbb R}^d\). For basic classes of random convex polytopes in \({\mathbb S}^{d-1}\), we find explicit formulas for the first and mixed second moments of a series of quite general geometric functionals. The spherically convex polytopes in \({\mathbb S}^{d-1}\) are in one-to-one correspondence with their positive hulls, which are convex polyhedral cones in \({\mathbb R}^d\). Thus, the study of random polytopes in the sphere is equivalent to the study of random polyhedral convex cones in Euclidean space. The geometry of polyhedral cones has recently found increased interest, due to applications in convex optimization and compressed sensing (see, e.g., [2, 3, 5, 11, 16, 24]).

Let us first describe the random polytopes in \({\mathbb S}^{d-1}\) and the geometric functionals that we consider. First, take \(n\ge d\) independent, identically distributed random points in \({\mathbb S}^{d-1}\). Their distribution need only satisfy some mild requirements: besides evenness, it must guarantee general position with probability one. The spherically convex hull of the random points, under the condition that it is not the whole sphere, defines a random polytope. It was first studied by Cover and Efron [9]. Therefore, we call its positive hull a Cover–Efron cone. In distribution, this random cone is dual to the random Schläfli cone, which we define as follows. For the given random vectors in the unit sphere, we consider the orthogonal hyperplanes through the origin. They induce a random tessellation of \({\mathbb R}^d\) into convex cones. Among its d-dimensional cones, we choose one at random, with equal chances. This defines what we call a random Schläfli cone. Its intersection with \({\mathbb S}^{d-1}\) yields the second type of spherical random polytope that we consider, again following Cover and Efron.

For a spherical polytope P, contained in an open hemisphere, the jth quermassintegral \(U_j(P)\) is, up to a normalizing factor, the total invariant measure of the set of \((d-j)\)-flats through the origin that meet P. Then, we define \(Y_{k,j}(P)\) as the sum of \(U_j(F)\) over all \((k-1)\)-faces F of P (or correspondingly for polyhedral cones). These general functionals comprise combinatorial functionals, such as numbers of k-faces, as well as metric functionals, such as total k-face contents, and they allow one to express the kth conical intrinsic volume. These conical, or spherical, intrinsic volumes appeared first, with different terminology, in Santaló’s work on integral geometry and the Gauss–Bonnet formula in spherical spaces, for example, in [34, 35]. McMullen [25] later found, in the case of polyhedral cones, a new combinatorial approach to the linear relations between the spherical intrinsic volumes listed in [34]. For later appearances of the spherical intrinsic volumes in spherical geometry, we refer to [14, 15], [40, Sect. 6.5], [13]. More recently, the conical intrinsic volumes, and also their integral geometry, have found very interesting applications in convex optimization and compressed sensing. We refer to [2, 5, 16, 24]. As a sequel to this, new approaches to, and new perspectives on, conical intrinsic volumes of polyhedral cones came forward, with relations to combinatorial aspects being in the foreground; see [1, 4]. We emphasize, however, that the following is meant as a contribution to stochastic geometry, where first and higher moments of geometric random variables are in the focus of interest, often as a first step towards more sophisticated distribution and limit results.

In the following, after introducing the announced random cones and geometric functionals and some of their properties, we first extend the work of Cover and Efron by determining the expectations of the functionals \(Y_{k,j}\) for random Schläfli cones. By specialization, this yields the results of Cover and Efron on face numbers, and also new results, such as for the conical intrinsic volumes. By dualization, corresponding results for the Cover–Efron cones are obtained. The major part of this paper is devoted to the functionals \({\varLambda }_k= Y_{k,k-1}\) of a polyhedral cone. For a spherical polytope \(P\subset {\mathbb S}^{d-1}\), the value \({\varLambda }_{k+1}(\mathrm{pos}\,P)\) is the total k-face content, that is, the sum of the k-dimensional normalized Lebesgue measures of the k-faces of P, in other words, the k-dimensional normalized Hausdorff measure of its k-skeleton. As examples, for \(k=0,1,d-2,d-1\) we get, respectively, the vertex number and, up to constant factors, the total edge length, the surface area and the volume of P. Thus, these functionals interpolate, in a natural way, between vertex number and volume. Recently, Amelunxen ([1], with different notation) has proved kinematic formulas for these functionals in the case of polyhedral cones. The expectations of the \({\varLambda }_k\) for a random Schläfli cone are special cases of our results mentioned above.

Our main result is the determination of the complete covariance structure of the sequence \({\varLambda }_1(S),\dots ,{\varLambda }_d(S)\) for an isotropic random Schläfli cone S. This is a conical counterpart to a result of Miles, who in [26] considered the typical cell of a stationary, isotropic Poisson hyperplane mosaic in \({\mathbb R}^d\) and determined all mixed moments of its total face contents. Miles presented his result also in [30, Formula (63)]. As remarked in [38], the proof given by Miles in [26] makes heavy use of ergodic theory and is not explicitly carried out in all details. A simpler proof was given in [38], where the result of Miles was extended to the non-isotropic case and to typical faces of lower dimensions. Our proof in the following carries over an idea of Miles to the conical case, but is essentially different in the details.

Since our random Schläfli cones are induced by random hyperplanes through the origin, this paper is also a contribution to random conical tessellations (which explains the title), or equivalently to tessellations of the sphere by random great subspheres, yielding special spherical mosaics. Random mosaics in Euclidean spaces are an intensively studied topic of stochastic geometry. We refer the reader to Chapter 10 in the book [40] and to the more recent survey articles [8, 19, 32, 41]. A much investigated particular class, besides the Voronoi tessellations, are hyperplane tessellations, in particular those generated by stationary Poisson processes of hyperplanes, initiated by the seminal work of Miles [26–30] and Matheron [22, 23]. Relatively little has been done on random tessellations of spaces other than the Euclidean. Tessellations of the sphere of arbitrary dimension by great subspheres (of codimension 1) were briefly considered by Cover and Efron [9], and those of the two-dimensional sphere in more detail by Miles [31]; see in particular Theorem 6.3 on some mixed second moments, which is widely generalized by our result. Relations between various densities of random mosaics in spherical spaces were studied by Arbeiter and Zähle [6].

In Sect. 2 we introduce the geometric functionals of polyhedral cones that will be studied, and in Sect. 3 the two types of random cones for which we investigate first and second moments of these functionals. Expectation results for the functionals \(Y_{k,j}\), which extend formulas of Cover and Efron, are derived in Sect. 4. Sections 5, 6 and 7 are then preparatory to our main result on mixed second moments, which is finally obtained in Sect. 8. Hints to the proof strategy are given at the beginning of Sects. 6 and 8.

2 Geometric Functionals of Convex Cones

We work in d-dimensional Euclidean space \({\mathbb R}^d\) (\(d\ge 2\)), with scalar product \(\langle \cdot \,,\cdot \rangle \), and denote by \({\mathbb S}^{d-1}\) its unit sphere. Let \(\sigma _m\), \(m\in \mathbb {N}_0\), be the m-dimensional spherical Lebesgue measure (i.e., the m-dimensional Hausdorff measure) on m-dimensional great subspheres of \({\mathbb S}^{d-1}\). For \(n\in \mathbb {N}\) we put

Let \({\mathcal C}^d\) denote the set of (nonempty) closed convex cones in \({\mathbb R}^d\), which includes k-dimensional linear subspaces, \(k\in \{0,\ldots ,d\}\). We equip \({\mathcal C}^d\) with the topology induced by the Fell topology (see [40, Sect. 12.2]), or equivalently, with the topology induced by the Euclidean Hausdorff distance restricted to the intersections of the cones in \({\mathcal C}^d\) with the unit ball centered at the origin. A cone \(C\in {\mathcal C}^d\) is called pointed if it does not contain a line. We write \({\mathcal {PC}}^d\) for the set of polyhedral cones in \({\mathcal C}^d\). This set is a Borel subset of \({\mathcal C}^d\). For \(C\in {\mathcal {PC}}^d\) and for \(k\in \{0,\dots ,d\}\), we denote by \({\mathcal F}_k(C)\) the set of k-dimensional faces of C.

For \(C\in {\mathcal C}^d\), the dual cone is defined by

\[C^\circ :=\{x\in {\mathbb R}^d:\langle x,y\rangle \le 0 \text{ for all } y\in C\}.\]

This is again a cone in \({\mathcal C}^d\), and \(C^{\circ \circ }:= (C^\circ )^\circ =C\). If C is pointed and d-dimensional, then \(C^\circ \) has the same properties. If \(C\in {\mathcal {PC}}^d\) and \(F\in {\mathcal F}_k(C)\) for \(k\in \{0,\dots ,d\}\), then the normal cone N(C, F) of C at F is a \((d-k)\)-face of the polyhedral cone \(C^\circ \), also called the conjugate face (of F with respect to C) and denoted by \(\widehat{F}_C\). If \(\widehat{F}_C=G\), then \(\widehat{G}_{C^\circ }=F\).

The following fact is occasionally useful. We give a proof for convenience.

Lemma 2.1

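Suppose that \(C\in {\mathcal C}^d\) is pointed, and let \(L\subset {\mathbb R}^d\) be a linear subspace. Then

\[L\cap C=\{0\}\quad \Longleftrightarrow \quad L^\perp \cap \mathrm{int}\,C^\circ \ne \emptyset .\]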

Proof

Suppose that \(L\cap C\not =\{0\}\). Choose \(v\in L\cap C\), \(v\not = 0\). Suppose there exists \(y\in L^\perp \cap \mathrm{int}\,C^\circ \). Since \(y\in L^\perp \), we have \(\langle y,v\rangle =0\). Since \(y\in \mathrm{int}\,C^\circ \), the points \(y'\) in some neighbourhood of y belong to \(C^\circ \) and hence satisfy \(\langle y',v\rangle \le 0\). But since \(\langle y,v\rangle =0\) and \(v\not =0\), this is impossible.

Suppose that \(L^\perp \cap \mathrm{int}\,C^\circ =\emptyset \). The disjoint convex sets \(L^\perp \) and \(\mathrm{int}\,C^\circ \) can be separated by a hyperplane, hence there is a vector \(v\not =0\) with \(\langle v, y\rangle \le 0\) for all \(y\in \mathrm{int}\,C^\circ \) and \(\langle v,z\rangle \ge 0\) for all \(z\in L^\perp \); the latter implies \(\langle v,z\rangle = 0\) for \(z\in L^\perp \) and thus \(v\in L\). Since C does not contain a line, \(\mathrm{int}\,C^\circ \not =\emptyset \), hence \(\langle v, y\rangle \le 0\) holds for all \(y\in C^\circ \). Therefore, \(v\in C^{\circ \circ }=C\). Thus, \(v\in L\cap C\). \(\square \)

A set \(M\subset {\mathbb S}^{d-1}\) is spherically convex if \(\mathrm{pos}\,M\) is convex; here \(\mathrm{pos}\) denotes the positive hull. To include some degenerate cases in the following, we define \(\mathrm{pos}\,\emptyset :=\{0\}\). If \(C\in {\mathcal C}^d\), the set \(K=C\cap {\mathbb S}^{d-1}\) is called a convex body in \({\mathbb S}^{d-1}\), and we have \(C=\mathrm{pos}\, K\). In particular, the empty set and k-dimensional great subspheres, that is, intersections of \((k+1)\)-dimensional linear subspaces with \({\mathbb S}^{d-1}\), for \(k\in \{0,\ldots ,d-1\}\) (and thus including \({\mathbb S}^{d-1}\)), are convex bodies in \({\mathbb S}^{d-1}\). The set of convex bodies in \({\mathbb S}^{d-1}\) is denoted by \({\mathcal K}_s\) (this notation, as well as the term ‘convex body’, differs from the usage in [40, Sect. 6.5], where the empty set is excluded). For \(K\in {\mathcal K}_s\), the dual convex body \(K^\circ \) is defined by

To introduce the conical quermassintegrals and the conical intrinsic volumes, we make use of the correspondence between convex cones in \({\mathbb R}^d\) and spherically convex sets in \({\mathbb S}^{d-1}\). For the latter, the functionals to be considered were already introduced by Santaló, see [36, Part IV], with different notation. We follow here the approach of Glasauer [14] and refer to [40, Sect. 6.5] for further details.

Let G(d, k) denote the Grassmannian of k-dimensional linear subspaces of \({\mathbb R}^d\), and let \(\nu _k\) be its normalized Haar measure (the unique rotation invariant Borel probability measure on G(d, k)), \(k=0,\dots ,d\). For \(K\in {\mathcal K}_s\), the spherical quermassintegrals are defined by

\[U_j(K):=\frac{1}{2}\int _{G(d,d-j)}\chi (K\cap L)\,\nu _{d-j}(\mathrm{d}L),\quad j=0,\dots ,d, \tag{1}\]

where \(\chi \) denotes the Euler characteristic. (Of course, \(U_0(K)=\tfrac{1}{2}\chi (K)\) and \(U_d(K)=0\), but this is included for formal reasons.) These are, essentially, the ‘Grassmann angles’ of Grünbaum [18], who derived for them various polyhedral relations. We recall from [40, p. 262] that if K is a convex body in \({\mathbb S}^{d-1}\) and not a great subsphere, then \(\chi (K\cap L)=\mathbf {1}\{K\cap L\ne \emptyset \}\) for \(\nu _{d-j}\)-almost all \(L\in G(d,d-j)\). Hence, in this case \(2\,U_j(K)\) is the total invariant probability measure of the set of all \((d-j)\)-dimensional linear subspaces hitting K. Since \(\chi ({\mathbb S}^k)=1+(-1)^k\) for a great subsphere \({\mathbb S}^k\) of dimension \(k\in \{0, \dots , d-1\}\), we have

For cones \(C\in {\mathcal C}^d\), we now define

\[U_j(C):=U_j(C\cap {\mathbb S}^{d-1}),\quad j=0,\dots ,d. \tag{2}\]

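If \(C\in {\mathcal C}^d\) is not a linear subspace, then

\[U_j(C)=\frac{1}{2}\int _{G(d,d-j)}\mathbf {1}\{C\cap L\ne \{0\}\}\,\nu _{d-j}(\mathrm{d}L). \tag{3}\]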

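If \(L^k\subset {\mathbb R}^d\) is a linear subspace of dimension k, then

\[U_j(L^k)={\left\{ \begin{array}{ll} 1, &{} \text{if } k-j>0 \text{ and } k-j \text{ is odd},\\ 0, &{} \text{if } k-j\le 0 \text{ or } k-j \text{ is even}. \end{array}\right. } \tag{4}\]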

Let \(0\le j\le m\le d-1\), let \(M\subset {\mathbb R}^d\) be an m-dimensional linear subspace and \(C\in {\mathcal C}^d\) a cone with \(C\subset M\). The image measure of \(\nu _{d-j}\) under the map \(L\mapsto L\cap M\) from \(G(d,d-j)\) to the Grassmannian of \((m-j)\)-subspaces in M is the normalized Haar measure on the latter space. Here (and subsequently) we tacitly use the fact that \(\nu _{d-j}(\{L\in G(d,d-j):L\cap M\notin G(d,m-j)\})=0\); see [40, Lem. 13.2.1]. Therefore, it follows from (1), (2) that \(U_j(C)\) does not depend on whether it is computed in \({\mathbb R}^d\) or in M.

In particular, for \(C\in {\mathcal C}^d\) and \(m\in \{1,\dots ,d\}\), we have

If \(C\in {\mathcal C}^d\) is not a linear subspace, the duality relation

\[U_j(C)+U_{d-j}(C^\circ )=\frac{1}{2} \tag{5}\]

holds for \(j=0,\dots ,d\). If C is pointed and d-dimensional, this follows from (3) and Lemma 2.1. If \(C\in {\mathcal C}^d\) is not a subspace, the assertion can be obtained from the previous case by approximation, using easily established continuity properties. If C is a subspace, duality is of little interest, in view of (4).

We now recall the spherical intrinsic volumes and refer to [40, Sect. 6.5] for details. Let \(d_s\) be the spherical distance on \({\mathbb S}^{d-1}\); thus, for \(x,y\in {\mathbb S}^{d-1}\), \(d_s(x,y)=\arccos \,\langle x,y\rangle \). For \(K\in {\mathcal K}_s\setminus \{\emptyset \}\) and \(x\in {\mathbb S}^{d-1}\), the distance of x from K is \(d_s(K,x):=\min \{d_s(y,x):y\in K\}\). For \(0<\varepsilon <\pi /2\), the (outer) parallel set of K at distance \(\varepsilon \) is defined by

\[K_\varepsilon :=\{x\in {\mathbb S}^{d-1}:d_s(K,x)\le \varepsilon \}.\]

By the spherical Steiner formula, the measure of this set can be written in the form

with

for \(0\le \varepsilon <\pi /2\). This defines the numbers \(v_0(K),\dots ,v_{d-2}(K)\) uniquely. The definition is supplemented by setting \(v_m(\emptyset ):=0\),

and

Note that \(v_m({\mathbb S}^{d-1})=0\) for \(m=0,\ldots ,d-2\) and \(v_{d-1}({\mathbb S}^{d-1})=1\). The numbers \(v_i(K)\) are the spherical intrinsic volumes of K. In particular, for \(K\in {\mathcal K}_s\) and \(m=0,\ldots ,d-1\),

For spherical polytopes, the spherical intrinsic volumes have representations in terms of angles, similarly to the Euclidean case. For a spherical polytope P and for \(k\in \{0,\dots ,d-2\}\), we denote by \({\mathcal F}_k(P)\) the set of k-faces of P. Let P be a spherical polytope and \(F\in {\mathcal F}_k(P)\). The external angle \(\gamma (F,P)\) of P at F is defined by

With these notations, we have

For cones \(C \in {\mathcal C}^d\), the conical intrinsic volumes are now defined by

The shift in the index has the advantage that the highest occurring index is equal to the maximal possible dimension of C. Since C is a cone, there is no danger of confusion with the intrinsic volumes of compact convex bodies.

For a cone \(C\in {\mathcal C}^d\) with \(\dim C=k\), the internal angle of C at 0 is defined by

Then, for an arbitrary polyhedral cone \(C\in \mathcal {PC}^d\) and for \(m=1,\dots ,d-1\), we have

In particular, if \( \dim C= m\), then \(V_m(C)= \beta (0,C)\).

In contrast to the quermassintegrals and intrinsic volumes of convex bodies in Euclidean space, which differ only by their normalizations, the conical quermassintegrals and conical intrinsic volumes are essentially different functionals. However, they are closely related. A spherical integral-geometric formula of Crofton type (see [40, (6.63)]) implies that

for \(C\in {\mathcal C}^d\) and \(j=0,\dots ,d-1\). From (6) it follows that

The duality relation

holds for \(C\in {\mathcal C}^d\). For \(m\in \{0,d\}\) it holds by definition. For \(m\in \{1,\dots ,d-1\}\), it follows from (5) and (7) if C is not a subspace, and from

(Kronecker symbol) if \(C=L^k\) is a k-dimensional subspace; here (9) follows from (4) and (7).

As did Miles [26, Sect. 5.8] for convex polytopes in \({\mathbb R}^d\), we use the conical quermassintegrals to define a more general series of functionals for polyhedral cones, which comprises the geometrically most interesting functionals as special cases. For \(C\in \mathcal {PC}^d\), \(k=1,\dots ,d\) and \(j=0,\dots ,k-1\), let

\[Y_{k,j}(C):=\sum _{F\in {\mathcal F}_k(C)}U_j(F). \tag{10}\]

Then, in particular,

According to (7), also the conical intrinsic volumes can be expressed in terms of suitable functions \(Y_{k,j}\).

If \(C\in \mathcal {PC}^d\) is such that the k-faces of C are not linear subspaces, then

\[Y_{k,0}(C)=\frac{1}{2}f_k(C),\]

where \(f_k(C)\) denotes the number of k-faces of C.

We see that, for a d-dimensional pointed polyhedral cone, both the combinatorial functionals given by the face numbers and the metric functionals given by the conical intrinsic volumes can be expressed in terms of suitable functionals \(Y_{k,j}\).

Further, for \(C\in {\mathcal PC}^d\) and \(k\in \{1,\dots ,d\}\), we define the functional \({\varLambda }_k\) by

As explained in the introduction, \({\varLambda }_k\) can be considered as the total k-face content, also for a polyhedral cone, if ‘content’ is interpreted properly. Since the conical intrinsic volumes and the conical quermassintegrals are intrinsically defined, it follows from (7) that

3 Conical Tessellations and the Cover–Efron Model

In this section, we introduce random conical tessellations and the two basic types of random polyhedral cones that they induce. These random cones were first considered by Cover and Efron [9]. We slightly modify and formalize the approach of [9], to meet our later requirements.

Recall that \(G(d,d-1)\) denotes the Grassmannian of \((d-1)\)-dimensional linear subspaces of \({\mathbb R}^d\). We say that hyperplanes \(H_1,\dots ,H_n\in G(d,d-1)\) are in general position if any \(k\le d\) of them have an intersection of dimension \(d-k\). For a vector \(x\in {\mathbb R}^d\setminus \{0\}\), let

\[x^\perp :=\{y\in {\mathbb R}^d:\langle y,x\rangle =0\},\qquad x^-:=\{y\in {\mathbb R}^d:\langle y,x\rangle \le 0\}.\]

We shall repeatedly make use of the duality

\[(\mathrm{pos}\{x_1,\dots ,x_n\})^\circ =\bigcap _{i=1}^n x_i^- \tag{13}\]

for \(x_1,\dots ,x_n\in {\mathbb R}^d\).

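Vectors \(x_1,\dots ,x_n\in {\mathbb R}^d\) are said to be in general position if any d or fewer of these vectors are linearly independent. Thus, the hyperplanes \(x_1^\perp ,\dots ,x_n^\perp \) are in general position if and only if \(x_1,\dots ,x_n\) are in general position. If this is the case, then

\[\mathrm{pos}\{x_1,\dots ,x_n\}\ne {\mathbb R}^d\;\Longleftrightarrow \;\bigcap _{i=1}^n x_i^-\ne \{0\}\;\Longrightarrow \;\dim \bigcap _{i=1}^n x_i^-=d, \tag{14}\]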

where the last implication \(\Rightarrow \) follows from general position. In fact, suppose that \(C:=\bigcap _{i=1}^n x^-_i\) satisfies \(0<k=\dim C<d\). Let \(L_k=\mathrm{lin}\,C\). Choose \(p\in \text {relint}\, C\) and define \(I:=\{i\in \{1,\ldots ,n\}:p\in x_i^\perp \}\), hence \(p\in \text {int}\, x_j^-\) for \(j\in \{1,\ldots ,n\}\setminus I\). Then \(C\subset \bigcap _{i\in I}x_i^\perp \) implies that \(L_k\subset \bigcap _{i\in I}x_i^\perp \subset \bigcap _{i\in I}x_i^-\). Since \(p\in \text {int}\, x_j^-\) for \(j\in \{1,\ldots ,n\}\setminus I\), we also have \(\bigcap _{i\in I}x_i^-\subset L_k\), and thus \(L_k=\bigcap _{i\in I}x_i^\perp =\bigcap _{i\in I}x_i^-\) and \(L_k^\perp =\text {pos}\{x_i:i\in I\}\), by (13). But then necessarily \(|I|\ge d-k\). The assumption of general position implies that \(|I|=d-k\), which is a contradiction to \(L_k^\perp =\text {pos}\{x_i:i\in I\}\).
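
The general-position condition for vectors can be checked mechanically. The following is a minimal sketch, assuming the vectors have integer or rational coordinates so that exact arithmetic is possible; the helper names `rank` and `in_general_position` are hypothetical and introduced only for this illustration.

```python
from fractions import Fraction
from itertools import combinations


def rank(rows):
    """Rank of a list of vectors, by exact Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in rows]
    ncols = len(m[0]) if m else 0
    r = 0  # number of pivots found so far
    for col in range(ncols):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def in_general_position(vectors, d):
    """True if any d or fewer of the given vectors in R^d are linearly independent."""
    size = min(d, len(vectors))
    # It suffices to test the subsets of the largest admissible size,
    # since every smaller subset is contained in one of them.
    return all(rank(list(s)) == size for s in combinations(vectors, size))
```

For instance, the three vectors (1,0), (0,1), (1,1) in the plane are in general position, whereas (1,0), (2,0), (0,1) are not, because the first two are parallel.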

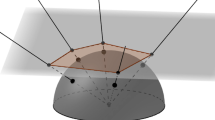

Suppose that \(H_1,\dots ,H_n\in G(d,d-1)\) are in general position. Then the hyperplanes \(H_1,\dots ,H_n\) induce a tessellation \({\mathcal T}\) of \({\mathbb R}^d\) into d-dimensional polyhedral cones. We call \({\mathcal T}\) a conical tessellation of \({\mathbb R}^d\). For \(k\in \{1,\ldots ,d\}\), the set of k-faces of \(\mathcal {T}\) is defined as the union of the sets of k-faces of these polyhedral cones (the d-dimensional cones are the d-faces). We write \({\mathcal F}_k(H_1,\dots ,H_n)\) for the set of k-faces of the tessellation \({\mathcal T}\). Later, we shall often abbreviate \((H_1,\dots ,H_n)=:\eta _n\) and then write \(\mathcal F_k(\eta _n)\) for \({\mathcal F}_k(H_1,\dots ,H_n)\). By \(f_k({\mathcal T})\) we denote the number of k-faces of the tessellation \({\mathcal T}\).

The spherical polytopes \(C\cap {\mathbb S}^{d-1}\), where C is a cone of \({\mathcal T}\), form a tessellation of the sphere \({\mathbb S}^{d-1}\), or spherical tessellation. In the following, it will be more convenient to work with convex cones than with their intersections with \({\mathbb S}^{d-1}\).

If we denote by \(H^-\) one of the two closed halfspaces bounded by the hyperplane H, then it follows from (14) that the d-dimensional cones of the tessellation \({\mathcal T}\) induced by \(H_1,\dots ,H_n\) are precisely the cones different from \(\{0\}\) of the form

\[\bigcap _{i=1}^n\varepsilon _iH_i^-,\quad \varepsilon _i=\pm 1.\]

We call these cones the Schläfli cones induced by \(H_1,\dots ,H_n\), \(n\ge 1\), because Schläfli (generalizing a result of Steiner) has shown that there are exactly

\[C(n,d):=2\sum _{i=0}^{d-1}\binom{n-1}{i} \tag{15}\]

of them (the simple inductive proof is reproduced in [40, Lem. 8.2.1]; also references are found there). We consistently define \(C(0,d):=1\) (where the only cone is \({\mathbb R}^d\) itself) and \(C(n,d):=0\) for \(n<0\).
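
As a small numerical companion to Schläfli's count, the sketch below evaluates the classical formula \(C(n,d)=2\sum _{i=0}^{d-1}\binom{n-1}{i}\) together with the conventions \(C(0,d)=1\) and \(C(n,d)=0\) for \(n<0\). The function name `schlaefli_count` is a hypothetical choice made for this illustration.

```python
from math import comb


def schlaefli_count(n, d):
    """Number C(n, d) of d-dimensional cones cut out of R^d by n hyperplanes
    through the origin in general position."""
    if n < 0:
        return 0  # convention C(n, d) := 0 for n < 0
    if n == 0:
        return 1  # convention C(0, d) := 1 (the only cone is R^d itself)
    # math.comb(n - 1, i) is 0 whenever i > n - 1, so no extra bounds are needed.
    return 2 * sum(comb(n - 1, i) for i in range(d))
```

In the plane, n lines through the origin produce `schlaefli_count(n, 2) == 2 * n` sectors, and for \(n\le d\) the count is \(2^n\), since then every sign pattern is realized. The values also satisfy the recursion \(C(n,d)=C(n-1,d)+C(n-1,d-1)\), which underlies the inductive proof.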

Each choice of \(d-k\) indices \(1\le i_1<\dots < i_{d-k}\le n\) determines a k-dimensional subspace \(L= H_{i_1}\cap \dots \cap H_{i_{d-k}}\). For \(i\in \{1,\dots ,n\}\setminus \{i_1,\dots ,i_{d-k}\}\), the intersections of L with the hyperplanes \(H_i\) are in general position in L and hence determine \(C(n-d+k,k)\) Schläfli cones with respect to L. Each of these is a k-face of the tessellation \({\mathcal T}\), and each k-face of \({\mathcal T}\) is obtained in this way. Thus, the total number of k-faces is given by

\[f_k({\mathcal T})=\binom{n}{d-k}C(n-d+k,k)=:C(n,d,k) \tag{16}\]

for \(k=1,\dots ,d\). In particular, \(f_k(\mathcal {T})=1\) if \(n=d-k\) and \(f_k(\mathcal {T})=0\) if \(n<d-k\).
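
The counting argument above (choose \(d-k\) of the n hyperplanes, then count the Schläfli cones inside their k-dimensional intersection) translates directly into code. The following is a sketch under that reading; the names `schlaefli_count` and `face_count` are hypothetical. As a sanity check that is not taken from the text, for \(n\ge d\) the alternating sum of the face numbers should equal the Euler characteristic \(1+(-1)^{d-1}\) of \({\mathbb S}^{d-1}\), because the k-faces of the conical tessellation correspond to the \((k-1)\)-faces of the induced spherical tessellation.

```python
from math import comb


def schlaefli_count(n, d):
    """Schlaefli's number C(n, d), with C(0, d) = 1 and C(n, d) = 0 for n < 0."""
    if n < 0:
        return 0
    if n == 0:
        return 1
    return 2 * sum(comb(n - 1, i) for i in range(d))


def face_count(n, d, k):
    """Number of k-faces of a conical tessellation of R^d by n hyperplanes
    in general position: binom(n, d - k) * C(n - d + k, k)."""
    if n < d - k:
        return 0  # too few hyperplanes to cut out a k-dimensional subspace
    return comb(n, d - k) * schlaefli_count(n - d + k, k)


def euler_sum(n, d):
    """Alternating sum f_1 - f_2 + ... +/- f_d of the face numbers."""
    return sum((-1) ** (k - 1) * face_count(n, d, k) for k in range(1, d + 1))
```

For four planes through the origin in \({\mathbb R}^3\) this gives 12 rays, 24 two-dimensional faces and 14 cells, and indeed 12 - 24 + 14 = 2.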

Now we turn to random cones. The random vectors appearing in the following can be assumed to be unit vectors, since only their spanned rays are relevant. All measures on \({\mathbb S}^{d-1}\) or \(G(d,d-1)\) appearing in the following are Borel measures. Generally, we denote by \({\mathcal B}(T)\) the \(\sigma \)-algebra of Borel sets of a given topological space T. Let \(\phi \) be a probability measure on \({\mathbb S}^{d-1}\) which is symmetric with respect to 0 (also called even) and assigns measure zero to each \((d-2)\)-dimensional great subsphere. Let \(X_1,\dots ,X_n\) be independent random points in \({\mathbb S}^{d-1}\) with distribution \(\phi \). With probability 1, they are in general position. In the following, we denote probabilities by \({\mathbb P}\) and expectations by \({\mathbb E}\).

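From Schläfli’s result (15), Wendel has deduced that

\[{\mathbb P}\left( \mathrm{pos}\{X_1,\dots ,X_n\}\ne {\mathbb R}^d\right) =\frac{C(n,d)}{2^n} \tag{17}\]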

(see [40, Thm. 8.2.1]). This result, having an essentially geometric core, does not depend on the choice of the distribution \(\phi \), as long as the latter has the specified properties.
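
Wendel's probability, \(C(n,d)/2^n\), is easy to evaluate exactly. The sketch below does so with rational arithmetic; the function names are hypothetical, and the code only evaluates the closed formula rather than simulating random points.

```python
from fractions import Fraction
from math import comb


def schlaefli_count(n, d):
    """Schlaefli's number C(n, d), with C(0, d) = 1 and C(n, d) = 0 for n < 0."""
    if n < 0:
        return 0
    if n == 0:
        return 1
    return 2 * sum(comb(n - 1, i) for i in range(d))


def wendel_probability(n, d):
    """Probability that the positive hull of n random points (even distribution,
    general position almost surely) in R^d is not all of R^d."""
    return Fraction(schlaefli_count(n, d), 2 ** n)
```

For three points on the circle the value is 3/4, the familiar probability that they lie in a common half-plane; for \(n\le d\) the value is 1, and for \(n=2d\) it is exactly 1/2.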

Cover and Efron [9] have considered the spherically convex hull of \(X_1,\dots ,X_n\), under the condition that this convex hull is different from the whole sphere. We talk of the Cover–Efron model if a spherically convex random polytope or its spanned cone is generated in this way.

Definition 3.1

Let \(\phi \) be as above. Let \(n\in {\mathbb N}\) and let \(X_1,\dots ,X_n\) be independent random points with distribution \(\phi \). The \((\phi ,n)\)-Cover–Efron cone \(C_n\) is the random cone defined as the positive hull of \(X_1,\dots ,X_n\) under the condition that this is different from \({\mathbb R}^d\).

Thus, \(C_n\) is a random convex cone with distribution given by \({\mathbb P}(C_n={\mathbb R}^d)=0\) and

for \(B\in {\mathcal B}(\mathcal {PC}^d_p)\), where \(\mathcal {PC}^d_p:=\mathcal {PC}^d\setminus \{{\mathbb R}^d\}\). Hence, \(C\in B \subset \mathcal {PC}^d_p\) implies \(C\not ={\mathbb R}^d\).

By duality, the Cover–Efron model is connected to random conical tessellations, as we now explain.

Let \(\phi ^*\) be the image measure of \(\phi \) under the mapping \(x\mapsto x^\perp \) from the sphere \({\mathbb S}^{d-1}\) to the Grassmannian \(G(d,d-1)\). Every probability measure \(\phi ^*\) on \(G(d,d-1)\) that assigns measure zero to each set of hyperplanes in \(G(d,d-1)\) containing a fixed line is obtained in this way. Let \(\mathcal H_1,\dots ,\mathcal H_n\) be independent random hyperplanes in \(G(d,d-1)\) with distribution \(\phi ^*\). With probability 1, they are in general position.

Definition 3.2

Let \(\phi ^*\) be as above. Let \(n\in {\mathbb N}\) and let \(\mathcal H_1,\dots ,\mathcal H_n\) be independent random hyperplanes with distribution \(\phi ^*\). The \((\phi ^*,n)\)-Schläfli cone \(S_n\) is obtained by picking at random (with equal chances) one of the Schläfli cones induced by \(\mathcal H_1,\dots ,\mathcal H_n\).

Since consecutive random constructions, of which this is an example, will also appear later, we indicate, once and for all, how such a procedure can be formalized. Let \({\varOmega }_1^n:= G(d,d-1)_*^n\) be the set of n-tuples of \((d-1)\)-subspaces in general position. The probability measure \(P_n\) on \({\varOmega }_1^n\) is defined by \(P_n:=(\phi ^*)^{n}\,\llcorner \,{\varOmega }_1^n\) (where \(\llcorner \) denotes the restriction of a measure). We interpret the choice described in Definition 3.2 as a two-step experiment and define a kernel \( K_2^1: {\varOmega }_1^n\times {\mathcal B}(\mathcal {PC}^d)\rightarrow [0,1]\) by

for \(\eta _n\in {\varOmega }_1^n\) and \(B\in {\mathcal B}(\mathcal {PC}^d)\). Then (following, e.g., [12, Satz 1.8.10]), we define a probability measure \(P_n\times K_2^1\) on \({\mathcal B}({\varOmega }_1^n) \otimes {\mathcal B}(\mathcal {PC}^d)\) by

for \(A\in {\mathcal B}({\varOmega }_1^n) \otimes {\mathcal B}(\mathcal {PC}^d)\). Now \(S_n\) is defined as the random cone whose distribution is equal to \((P_n\times K_2^1)({\varOmega }_1^n\times \cdot )\). Thus,

for \(B\in {\mathcal B}(\mathcal {PC}^d)\).
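
The two-step experiment (first draw the hyperplanes, then pick one of the induced cones with equal chances) can be made concrete in the simplest case \(d=2\). The following is a minimal sketch, assuming an isotropic distribution, so that each random line through the origin is described by a direction angle that is uniform in \([0,\pi )\); the function names are hypothetical.

```python
import math
import random


def sector_angles(line_angles):
    """Opening angles of the 2n sectors cut out of R^2 by n distinct lines
    through the origin, each line given by a direction angle."""
    thetas = sorted(a % math.pi for a in line_angles)
    gaps = [thetas[i + 1] - thetas[i] for i in range(len(thetas) - 1)]
    gaps.append(math.pi - thetas[-1] + thetas[0])  # wrap-around gap
    return gaps + gaps  # every sector has an antipodal copy


def sample_schlaefli_angle(n, rng):
    """Two-step experiment: draw n isotropic lines, then pick one sector uniformly."""
    line_angles = [rng.uniform(0.0, math.pi) for _ in range(n)]  # step 1
    return rng.choice(sector_angles(line_angles))                # step 2
```

Since the 2n sectors always cover the plane, the average of their normalized angles (angle divided by \(2\pi \)) over the cells of any realization is exactly \(1/(2n)=1/C(n,2)\). A call such as `sample_schlaefli_angle(5, random.Random(0))` returns the opening angle of one random Schläfli cone.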

To relate \(S_n\) and \(C_n\), we rewrite Eq. (18), using the symmetry of \(\phi \) and then (17) and (13). For \(B\in {\mathcal B}(\mathcal {PC}^d_p)\), we obtain

where (19) was used in the last step. Since also \({\mathbb P}(S_n^\circ ={\mathbb R}^d) = {\mathbb P}(S_n=\{0\})=0\), we can formulate the following.

Theorem 3.1

Let \(\phi \) be an even probability measure on \({\mathbb S}^{d-1}\) which assigns measure zero to each \((d-2)\)-dimensional great subsphere, let \(n\in {\mathbb N}\). Then the \((\phi ,n)\)-Cover–Efron cone \(C_n\) and the dual of the \((\phi ^*,n)\)-Schläfli cone, \(S_n^\circ \), are stochastically equivalent,

4 Expectations for Random Schläfli and Cover–Efron Cones

In this section, \(\phi ^*\) is a probability measure on the Grassmannian \(G(d,d-1)\) with the property that it is zero on each set of hyperplanes containing a fixed line through 0. For \(n\in {\mathbb N}\), we consider the \((\phi ^*,n)\)-Schläfli cone and want to compute the expectations of the geometric functionals \(Y_{k,j}\), defined by (10), for this random cone.

In his study of Poisson hyperplane tessellations in Euclidean spaces, Miles [26, Chap. 11] has employed the idea of defining, by means of combinatorial selection procedures, different weighted random polytopes, which could then be combined to give results about first and second moments. In this and subsequent sections, we adapt this approach to conical tessellations.

First we describe a combinatorial random choice. Let \(H_1,\dots ,H_n \in G(d,d-1)\) be hyperplanes in general position, and let \(L\in G(d,k)\), for \(k\in \{1,\dots ,d\}\), be a k-dimensional linear subspace in general position with respect to \(H_1,\dots ,H_n\), which means that \(H_1\cap L,\dots ,H_n\cap L\) are \((k-1)\)-dimensional subspaces of L which are in general position in L. Let \(j\in \{1,\dots ,k\}\). The tessellation \({\mathcal T}_L\) induced in L by \(H_1\cap L,\dots ,H_n\cap L\) has C(n, k, j) faces of dimension j, by (16). If \(n<k-j\), then clearly \(C(n,k,j)=0\). The following is an immediate consequence of general position.

Lemma 4.1

Let \(j\ge 1\). To each j-face \(F_j\) of \({\mathcal T}_L\), there is a unique \((d-k+j)\)-face F of the tessellation \({\mathcal T}\) induced by \(H_1,\dots ,H_n\), such that \(F_j=F\cap L\).

Conversely, if \(F\in {\mathcal F}_{d-k+j}({\mathcal T})\) and \(F\cap L\not =\{0\}\), then \(F\cap L\) is a j-face of \({\mathcal T}_L\).

In the following, we assume that \(n\ge k-j\). We choose one of the j-faces of \({\mathcal T}_L\) at random (with equal chances) and denote it by \(F_j\). Then \(F_j=L\cap F\) with a unique face \(F\in {\mathcal F}_{d-k+j}({\mathcal T})\). The face \(F_j\) is contained in \(2^{k-j}\) Schläfli cones of \({\mathcal T}_L\) and thus in \(2^{k-j}\) Schläfli cones of \({\mathcal T}\). These are precisely the Schläfli cones of \({\mathcal T}\) that contain F. We select one of these at random (with equal chances) and call it \(C^{[k,j]}(H_1,\dots ,H_n,L)\).

Let \(\mathcal H_1,\dots ,\mathcal H_n\) be independent random hyperplanes with distribution \(\phi ^*\). We apply the described procedure to these hyperplanes and to a random k-dimensional subspace. This random subspace will here be chosen as explained below, and in a different way in Sect. 6.

Let \(\mathcal L\in G(d,k)\) be a random subspace with distribution \(\nu _k\), which is independent of \(\mathcal H_1,\dots ,\mathcal H_n\); for \(k=d\), \(\mathcal L={\mathbb R}^d\) is deterministic. We may assume, since this happens with probability 1, that \(\mathcal H_1,\dots ,\mathcal H_n\) and \(\mathcal L\) are in general position. Then we define

\[ C_n^{[k,j]} := C^{[k,j]}(\mathcal H_1,\dots ,\mathcal H_n,\mathcal L). \tag{21} \]

More formally, \(C_n^{[k,j]}\) is a random polyhedral cone with distribution given by

for \(B\in {\mathcal B}(\mathcal {PC}^d)\) and \(n\ge k-j\) (recall that \(\eta _n\) is a shorthand notation for \((H_1,\dots ,H_n)\)).

If \(n>k-j\), then almost surely \(F\in {\mathcal F}_{d-k+j}(\eta _n)\) is not a linear subspace. Thus, (3) implies that the inner integral in (22), up to the combinatorial factors, can be written as

according to (10). Therefore, we obtain

From (19) and (23) (both formulated for expectations) we get, for every nonnegative, measurable function g on \(\mathcal {PC}^d\) and \(n> k-j\), the equation

Choosing \(g=1\) in (24), we obtain the following theorem.

Theorem 4.1

The expected size functionals \({\mathbb E}\,Y_{i,j}\) of the \((\phi ^*,n)\)-Schläfli cone \(S_n\) are given by

for \(1\le j\le k\le d\) and \(n> k-j\).

As a consequence, we can also write

Thus, the distribution of \(C_n^{[k,j]}\) is obtained from the distribution of \(S_n\) by weighting it with the function \(Y_{d-k+j,d-k}\). This is the conical counterpart to [26, Sect. 11.3, Lemma]. In analogy to [26, Sect. 11.3], we point out some special cases.

If \(k=j=1\), the procedure described above is equivalent to choosing a uniform random point in \({\mathbb S}^{d-1}\), independent of \(\mathcal H_1,\dots ,\mathcal H_n\), and taking for \(C_n^{[1,1]}\) the Schläfli cone containing it. The weight function satisfies \(Y_{d,d-1}(C) = V_d(C)\).

If \(k=d\), the procedure is equivalent to choosing a j-face of the tessellation \({\mathcal T}\) at random (with equal chances) and then choosing at random (with equal chances) one of the Schläfli cones containing it, which gives \(C_n^{[d,j]}\). The weight function satisfies \(Y_{j,0}(C) =\tfrac{1}{2} f_j(C)\), since the assumption \(n>d-j\) implies that the j-faces of C are not linear subspaces. In particular, for \(j=d\) it is constant, and \(C_n^{[d,d]}=S_n\) in distribution.

By specialization, Eq. (25) includes the following results, which were obtained by Cover and Efron [9].

Corollary 4.1

For \(k=1,\dots ,d\),

and for \(k=0,\dots ,d-1\),

Equation (26) is formula (3.1) in [9], after correction of misprints. This equation is obtained from (25) by choosing \(k=d\) and then replacing j by k (and observing (11) and (16)), if \(n> d-k\). For \(n=d-k\), both sides are equal to 1, and for \(n<d-k\) both sides are zero. The duality (20) gives (27), which is formula (3.3) in [9].
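The double-counting behind the expected face numbers can be checked numerically. The sketch below assumes that (26) amounts to the identity \({\mathbb E}\,f_k(S_n)=2^{d-k}\binom{n}{d-k}C(n-d+k,k)/C(n,d)\), which follows from the fact that each k-face lies in \(2^{d-k}\) Schläfli cones; this explicit form, the formula used for \(C(n,d)\), and all function names are assumptions made for illustration. For \(d=3\) and \(n\ge 3\), the cones are enumerated by the sign vectors of the four cones around each ray.

```python
import itertools
import random
from math import comb


def num_cones(n, d):
    """Assumed count of d-cones for n hyperplanes in general position."""
    if n == 0:
        return 1
    return 2 * sum(comb(n - 1, i) for i in range(d))


def expected_face_number(n, d, k):
    """Average number of k-faces per cone, by double counting."""
    if n < d - k:
        return 0.0
    total_faces = comb(n, d - k) * num_cones(n - d + k, k)
    return 2 ** (d - k) * total_faces / num_cones(n, d)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def average_ray_count_3d(normals):
    """Brute force for d = 3, n >= 3: each ray of the tessellation spans a
    line H_a ∩ H_b and lies in the four cones obtained by choosing the
    signs for H_a and H_b freely. Returns (mean rays per cone, #cones)."""
    rays_of_cone = {}
    for a, b in itertools.combinations(range(len(normals)), 2):
        direction = cross(normals[a], normals[b])
        for s in (1, -1):
            v = tuple(s * c for c in direction)
            base = [1 if dot(u, v) > 0 else -1 for u in normals]
            for ea, eb in itertools.product((1, -1), repeat=2):
                signs = list(base)
                signs[a], signs[b] = ea, eb
                key = tuple(signs)
                rays_of_cone[key] = rays_of_cone.get(key, 0) + 1
    return sum(rays_of_cone.values()) / len(rays_of_cone), len(rays_of_cone)


if __name__ == "__main__":
    random.seed(0)
    normals = [tuple(random.gauss(0.0, 1.0) for _ in range(3)) for _ in range(5)]
    print(average_ray_count_3d(normals), expected_face_number(5, 3, 1))
```

Since the average is taken over all cones of a fixed arrangement in general position, the brute-force value does not depend on the distribution of the normals, in line with the combinatorial character of the result.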

The following expectations do not appear in [9].

Corollary 4.2

The expected conical quermassintegrals of the \((\phi ^*,n)\)-Schläfli cone \(S_n\) and the \((\phi ,n)\)-Cover–Efron cone \(C_n\) are given by

for \( k=0,\dots , d-1\), and by

for \(k=1,\dots ,d-1\).

Equation (28) is obtained by replacing k and j in (25) both by \(d-k\). Note that if \(n\le d-k\), then both sides of the equation are equal to 1/2. Since \(C_n\) is almost surely pointed, the dualities (5) and (20) yield (29), where both sides of the equation are equal to 0 if \(n<k\).

We can now apply (7) for \(j=1,\dots ,d\) together with (28), and (8) for \(j=0\) together with (20) and (29), to obtain (30) below. The duality relations (8) and (20) then yield (31).

Corollary 4.3

and

Remark. After a first version of this manuscript had been posted on the arXiv, Martin Lotz kindly pointed out to the authors that relation (30) can also be deduced from a result of Klivans and Swartz [21], for which he sketched a simpler proof. Let \({\mathcal A}\) be an arrangement of n hyperplanes through 0 in \({\mathbb R}^d\). The main result of [21] connects the polynomial \(\sum _{k=0}^d \sum V_k(C)t^k\), where the inner sum extends over the d-cones of the tessellation induced by \({\mathcal A}\), with the characteristic polynomial of \({\mathcal A}\) and thus with the Möbius function of the intersection poset of \({\mathcal A}\). Under our assumption of general position, this Möbius function is easily determined; therefore, the result of [21] yields (30) (though with a less direct proof). Meanwhile, a short proof of the Klivans–Swartz formula has independently been given by Kabluchko et al. in [20, Thm. 4.1], and Amelunxen and Lotz [4, Thm. 6.1] have generalized that formula to faces of all dimensions.

In the summary of their paper [9], Cover and Efron also announced results on the ‘expected natural measure of the set of k-faces’. As such a natural measure one can consider the total k-face content \({\varLambda }_k\) defined by (12) for polyhedral cones (or its natural analogue in the case of spherical polytopes). The following can be stated.

Proposition 4.1

For the functionals defined by \({\varLambda }_k(C)=\sum _{F\in {\mathcal F}_k(C)} V_k(F)\), the expectations for random Schläfli cones are given by

for \(k=1,\dots ,d\), and for Cover–Efron cones by

for \(k=1,\dots ,d-1\).

In contrast to (33), relation (32) holds also for \(k=d\), by (25). Cover and Efron did not formulate these results; however, some arguments leading to them are contained in the proofs of their Theorems 2 and 4. We note that (32) is the special case of (25) which is obtained by replacing k by \(d-k+1\) and setting \(j=1\). Here we use that for \(n>d-k\), the k-faces of \(S_n\) are not in G(d, k). For \(n\le d-k\), the equation is easily seen to hold as well.

For (33), we extend and complete the arguments given in [9]. For the proof, we can assume that \(n\ge k\). Let \(k\in \{1,\dots ,d-1\}\). By (19) and (20),

Let \(\eta _n=(H_1,\dots ,H_n)\), where \(H_1,\dots ,H_n\in G(d,d-1)\) are in general position. Let \(F\in {\mathcal F}_{d-k}(\eta _n)\). Then there are indices \(1\le i_1<\dots < i_k\le n\) such that

Let \({\mathcal C}_F\) be the set of Schläfli cones \(C\in {\mathcal F}_d(\eta _n)\) with \(F\subset C\). Let \(u_j\) be a unit normal vector of \(H_{i_j}\), \(j=1,\dots ,k\). Then the cones \(C\in {\mathcal C}_F\) are in one-to-one correspondence with the choices \(\varepsilon _1,\dots ,\varepsilon _k\in \{-1,1\}\) such that

The face of \(C^\circ \) conjugate to F (with respect to C) is then given by

It follows that the faces \(\widehat{F}_C\), \(C\in {\mathcal C}_F\), form a tiling of \(L_{i_1,\dots ,i_k}^\perp \), and therefore

The faces \(F\in {\mathcal F}_{d-k}(\eta _n)\) with \(F\subset L_{i_1,\dots ,i_k}\) are the Schläfli cones of the tessellation induced in \(L_{i_1,\dots ,i_k}\), hence there are precisely \(C(n-k,d-k)\) of them. Now we obtain, using (34) and the latter remark,

which yields (33).

We point out that the results obtained so far hold for general distributions \(\phi ^*\), as specified at the beginning of this section (which exhibits their essentially combinatorial character).

5 Some First and Second Order Moments

We have defined the random Schläfli cone by picking at random, with equal chances, one of the d-cones generated by a finite number of i.i.d. random hyperplanes through 0 (with a suitable distribution). A different model of a random cone is obtained by taking the (almost surely unique) cone that contains a fixed given ray. This is in analogy to the Euclidean case, where, for a stationary random mosaic, the typical cell and the zero cell (containing the origin) are classical examples of random polytopes. In that case, it is known (e.g., [40, Thm. 10.4.1]) that the distribution of the zero cell is, up to translations, the volume-weighted distribution of the typical cell. In this section, we derive an analogous statement for conical tessellations generated by hyperplanes with rotation invariant distribution (Lemma 5.2), and also some expectation results in analogy to the Euclidean case. While this is of independent interest, our main goal is to derive from this, together with the expectation (39), the mixed second moment (41), because this is an essential prerequisite for the proof of our main result, Theorem 8.1.

Recall that \(\nu _{d-1}\) denotes the unique rotation invariant probability measure on the Grassmannian \(G(d,d-1)\). The subsequent results require this special distribution for the considered random hyperplanes, instead of the general distribution \(\phi ^*\) of the previous sections.

First we formulate a simple lemma.

Lemma 5.1

If \(A\in {\mathcal B}({\mathbb S}^{d-1})\) and \(k\in \{1,\dots ,d-1\}\), then

Proof

As a function of A, the left-hand side of (35) is a finite measure, which, due to the rotation invariance of \(\nu _{d-1}\) and of \(\sigma _{d-k-1}\), must be invariant under rotations. Up to a constant factor, there is only one such measure on \({\mathcal B} ({\mathbb S}^{d-1})\), namely \(\sigma _{d-1}\). The choice \(A={\mathbb S}^{d-1}\) then reveals the factor. \(\square \)

Now let \(\mathcal H_1,\dots ,\mathcal H_n\) be independent random hyperplanes through 0 with distribution \(\nu _{d-1}\). Before treating the \((\nu _{d-1},n)\)-Schläfli cone, we consider a different random cone, which corresponds to the zero cell in the theory of Euclidean tessellations. Let \(e\in {\mathbb S}^{d-1}\) be a fixed vector. With probability 1, the vector e is contained in a unique Schläfli cone induced by \(\mathcal H_1,\dots ,\mathcal H_n\), and we denote this cone by \(S_n^e\). If \(e\notin H\in G(d,d-1)\), we denote by \(H^e\) the closed halfspace bounded by H that contains e.

Let \(k\in \{0,\dots ,d-1\}\). Almost surely, each \((d-k)\)-face of \(S_n^e\) is the intersection of \(S_n^e\) with exactly k of the hyperplanes \(\mathcal H_1,\dots ,\mathcal H_n\). Conversely, each intersection of k distinct hyperplanes from \(\mathcal H_1,\dots ,\mathcal H_n\) a.s. intersects \(S_n^e\) either in a \((d-k)\)-face or in \(\{0\}\). Observing this, we compute

If \(n=k\), the outer integration does not appear, and \(\mathcal H_{k+1}^e\cap \dots \cap \mathcal H_n^e\) has to be interpreted as \({\mathbb R}^d\). For \(n<k\), both sides of the equation are zero.

By Lemma 5.1, the inner integral is equal to

hence we obtain

for \(k=0,\ldots ,d-1\). Here both sides of the equation are zero if \(n<k\), and they are equal to 1 for \(n=k\).

We next derive a similar formula for \({\mathbb E}\,f_{d-k}(S_n^e)\) (in analogy to [37, Sect. 5]). Let \(k\in \{0,\dots ,d-1\}\) and \(n>k\). As above, we obtain

Let \(G(d,d-1)^k_*\) denote the set of all k-tuples of \((d-1)\)-dimensional linear subspaces with linearly independent normal vectors. The image measure of \(\nu _{d-1}^k\) under the mapping \((H_1,\dots ,H_k)\mapsto H_1\cap \dots \cap H_k\) from \(G(d,d-1)^k_*\) to \(G(d,d-k)\) is the invariant measure \(\nu _{d-k}\), hence

for \(C=H_{k+1}^e\cap \dots \cap H_n^e\in {\mathcal C}^d\) and \(\nu _{d-1}^{n-k}\) almost all \((H_{k+1},\dots ,H_n)\in G(d,d-1)^{n-k}\). We conclude that

for \(k\in \{0,\ldots ,d-1\}\) and \(n>k\). If \(n=k\), then \({\mathbb E}\,f_{d-k}(S_n^e)=1\), and the expectation is zero for \(n<k\).

To compute \({\mathbb E}\,V_d(S^e_n)\), let \(P\subset {\mathbb S}^{d-1}\) be a closed spherically convex set containing e. Writing \(u\in {\mathbb S}^{d-1}\) in the form \(u=te+\sqrt{1-t^2}\,\overline{u}\) with \(\overline{u}\in e^\perp \cap {\mathbb S}^{d-1}\), we have

with

Let \(Z_n^e:= S_n^e\cap {\mathbb S}^{d-1}\). For fixed \(\overline{u}\in e^\perp \cap {\mathbb S}^{d-1}\), the distribution function of the random variable \(\rho (Z_n^e,\overline{u})\) is given by

since \(\rho (Z_n^e,\overline{u}) >x\) holds if and only if none of the hyperplanes \(\mathcal H_1,\dots ,\mathcal H_n\) intersects the great circular arc connecting e and \((\cos x)e+(\sin x)\overline{u}\). Let

From (37) we have \(G(\pi )=\omega _d/\omega _{d-1}\). Since the distribution of the random variable \(\rho (Z_n^e, \overline{u})\) does not depend on \(\overline{u}\), we obtain

After using the binomial theorem, the integral can be evaluated by using recursion formulas and known definite integrals; e.g., see [17, p. 117]. (The evaluation of the integral for \(d=3\) in [31, (6.16)] is corrected in [10].)
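The separation argument for the radial function \(\rho (Z_n^e,\overline{u})\) lends itself to a simulation check. A great circular arc of length \(x\in [0,\pi ]\) is hit by an isotropic random hyperplane with probability \(x/\pi \), so the distribution function should be \(1-(1-x/\pi )^n\); this closed form is our reading of the argument and is treated as an assumption in the Python sketch below, whose function names are hypothetical. The hyperplanes are generated from standard Gaussian normal vectors, and only the signs of inner products are used.

```python
import math
import random


def radial_cdf(x, n):
    """Assumed distribution function P(rho <= x) for n isotropic hyperplanes."""
    return 1.0 - (1.0 - x / math.pi) ** n


def simulate_radial_cdf(x, n, d, trials, rng):
    """Monte Carlo estimate of P(rho <= x): some hyperplane separates e from
    the endpoint (cos x) e + (sin x) u_bar of the arc. Here e and u_bar are
    taken as the first two standard basis vectors of R^d (d >= 2)."""
    endpoint = (math.cos(x), math.sin(x))
    hits = 0
    for _ in range(trials):
        for _ in range(n):
            # Gaussian normal vector; its direction is uniform on the sphere.
            u = [rng.gauss(0.0, 1.0) for _ in range(d)]
            side_e = u[0]
            side_p = u[0] * endpoint[0] + u[1] * endpoint[1]
            if side_e * side_p < 0:  # opposite sides: the arc is cut
                hits += 1
                break
    return hits / trials


if __name__ == "__main__":
    rng = random.Random(1)
    print(simulate_radial_cdf(1.0, 3, 4, 20000, rng), radial_cdf(1.0, 3))
```

The estimate does not depend on the dimension d, in agreement with the fact that only the planar angle between e and the endpoint of the arc enters.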

Defining the constant \(\theta (n,d)\) by

and by \(\theta (n,d):=0\) for \(n<0\), and recalling that \(V_d(S_n^e)=\sigma _{d-1}(Z_n^e)/ \omega _d\), we can write the result as

Note that \(\theta (0,d)=1\). As a corollary, we obtain from (36) that

for \(k\in \{0,\ldots ,d-1\}\). For \(n=k\) both sides are equal to 1, and they are zero for \(n<k\).
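The constant \(\theta (n,d)\) is easy to evaluate numerically. The Python sketch below assumes that (38) can be written as \(\theta (n,d)=(\omega _{d-1}/\omega _d)\int _0^\pi (1-x/\pi )^n\sin ^{d-2}x\,\mathrm{d}x\), which is what the polar coordinate argument above suggests, and that (40) reads \({\mathbb E}\,{\varLambda }_{d-k}(S_n^e)=\binom{n}{k}\theta (n-k,d)\); both forms are assumptions, since the displayed formulas are not reproduced here. The integral is approximated by a composite Simpson rule, and the function names are hypothetical.

```python
import math
from math import comb


def sphere_area(d):
    """Total measure omega_d of the unit sphere S^(d-1) in R^d."""
    return 2.0 * math.pi ** (d / 2) / math.gamma(d / 2)


def theta(n, d, steps=2000):
    """Assumed form of theta(n, d), for d >= 2, via composite Simpson
    integration on [0, pi] with an even number of subintervals."""
    if n < 0:
        return 0.0

    def integrand(x):
        return (1.0 - x / math.pi) ** n * math.sin(x) ** (d - 2)

    h = math.pi / steps
    total = integrand(0.0) + integrand(math.pi)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(i * h)
    return sphere_area(d - 1) / sphere_area(d) * total * h / 3.0


def expected_zero_cone_face_content(n, d, k):
    """Assumed form of (40): binom(n, k) * theta(n - k, d)."""
    return comb(n, k) * theta(n - k, d)


if __name__ == "__main__":
    print([round(theta(n, 3), 6) for n in range(5)])
```

Two sanity checks are built into these assumptions: \(\theta (0,d)=1\), as noted above, and \(\theta (1,d)=1/2\), since a single hyperplane leaves e in a halfspace. For \(d=2\) the integral gives \(\theta (n,2)=1/(n+1)\).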

The following lemma relates the distribution of \(S_n^e\) to that of the random \((\nu _{d-1},n)\)-Schläfli cone \(S_n\).

Lemma 5.2

Let \( \mathcal H_1,\dots ,\mathcal H_n\) be independent random hyperplanes with distribution \(\nu _{d-1}\), and let \(S_n^e\) be the induced Schläfli cone containing the fixed given vector \(e\in {\mathbb S}^{d-1}\).

Let f be a nonnegative measurable function on \(\mathcal {PC}^d\) which is invariant under rotations. Then

Proof

In the following, we denote by \(\nu \) the invariant probability measure on the rotation group \(\mathrm{SO}(d)\), and we make use of the fact that

for every nonnegative measurable function g on \({\mathbb S}^{d-1}\). Using the rotation invariance of the function f and of the probability distribution \(\nu _{d-1}\), we obtain, with \(\vartheta \in \mathrm{SO}(d)\),

by (19) (with \(\phi ^*=\nu _{d-1}\)). \(\square \)

From Lemma 5.2 and (40) we get

for \(k=0,\dots ,d-1\). The case \(k=0\) reads

Equation (41) is a conical counterpart to Miles [26, Thm. 11.1.1]. The special case \(d=3\) of (41) is contained in Miles [31, Thm. 6.3].
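In the plane, the weighting relation of Lemma 5.2 and the second moment of \(V_d\) can be tested by simulation. For \(d=2\), n isotropic lines through 0 cut the circle into 2n arcs, and under the assumed forms \(\theta (n,2)=1/(n+1)\) and \(C(n,2)=2n\) one expects \({\mathbb E}\,V_2(S_n^e)=1/(n+1)\) and \({\mathbb E}\,V_2^2(S_n)=1/(2n(n+1))\). The Python sketch below estimates both quantities; the closed forms and the function name are assumptions made for illustration.

```python
import math
import random


def simulate_planar_cones(n, trials, rng):
    """Monte Carlo estimates of E V_2(S_n^e) and E V_2(S_n)^2 for d = 2,
    with e = (1, 0) and lines given by angles uniform in [0, pi)."""
    sum_zero_cone = 0.0
    sum_squares = 0.0
    for _ in range(trials):
        angles = sorted(rng.uniform(0.0, math.pi) for _ in range(n))
        # Angular widths of the n cones on a half-turn; each width occurs
        # twice among the 2n cones of the full tessellation.
        gaps = [b - a for a, b in zip(angles, angles[1:])]
        gaps.append(angles[0] + math.pi - angles[-1])  # cone containing e
        fractions = [g / (2.0 * math.pi) for g in gaps]
        sum_zero_cone += fractions[-1]
        # Uniform choice among 2n cones: mean of V_2^2 is sum(v^2) / n.
        sum_squares += sum(v * v for v in fractions) / n
    return sum_zero_cone / trials, sum_squares / trials


if __name__ == "__main__":
    rng = random.Random(7)
    n = 4
    zero_cone, second_moment = simulate_planar_cones(n, 40000, rng)
    print(zero_cone, 1.0 / (n + 1))
    print(second_moment, 1.0 / (2 * n * (n + 1)))
```

The two estimates are linked by the factor \(C(n,2)=2n\), which is the planar instance of the statement that \(S_n^e\) has the \(V_d\)-weighted distribution of \(S_n\).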

6 Another Selection Procedure

In this section, we begin with the proof of our main result, Theorem 8.1, which will yield all the mixed moments \({\mathbb E}\,({\varLambda }_s{\varLambda }_r)(S_n)\). Before that, we sketch the proof strategy. The principal idea can already be seen from the way the mixed second moment (41) for the random Schläfli cone \(S_n\) was obtained. We had defined another random cone, \(S_n^e\), with the property (expressed in Lemma 5.2) that its distribution is the \(V_d\)-weighted distribution of \(S_n\). Since the expectation \({\mathbb E}\,{\varLambda }_{d-k}(S_n^e)\) (see (40)) could be determined by a direct geometric argument, we thus obtained the expectation \({\mathbb E}\,({\varLambda }_{d-k}V_d)(S_n)\).

A more sophisticated version of this argument will finally allow us to determine explicitly the mixed moments \({\mathbb E}\,({\varLambda }_s{\varLambda }_r)(S_n)\). In the present section, we use successive random choices to define a random cone \(D_n^{[k,j]}\), for which we show in (43) that its distribution is the \(Y_{d-k+j,d-k}\)-weighted distribution of \(S_{n-d+k}\). The expectation \({\mathbb E}\,{\varLambda }_r (D_n^{[k,j]})\) is expressed in (48) in terms of expectations for certain Schläfli cones. To obtain this, a geometric decomposition argument is needed, which is provided in Sect. 7. Both results together yield the expectation \({\mathbb E}\,({\varLambda }_r Y_{d-k+j,d-k})(S_{n-d+k})\), which we can specialize and simplify to obtain \({\mathbb E}\,({\varLambda }_s{\varLambda }_r)(S_n)\).

In Sect. 4, we have used a selection procedure to define a random cone \(C_n^{[k,j]}\). This selection procedure will now be modified. The assumptions are the same as in Sect. 5: \(\mathcal H_1,\dots ,\mathcal H_n\) are independent random hyperplanes through 0, each with distribution \(\nu _{d-1}\), the rotation invariant probability measure on \(G(d,d-1)\).

The second selection procedure is equivalent to a conical analogue of the one in [26, Sect. 11.4], though we describe it in a different way. We assume again that \( 1\le j\le k\le d\) and \(n\ge d-j\) (that is, \(n-(d-k)\ge k-j\)). Now a subspace \({\mathcal L}\in G(d,k)\) is chosen at random (with equal chances) from the k-dimensional intersections of the hyperplanes \(\mathcal H_1, \dots , \mathcal H_n\). (If \(k=d\), then \({\mathcal L}={\mathbb R}^d\) is deterministic. Corresponding adjustments can be made below.) There are indices \(i_1,\dots ,i_{d-k}\in \{1,\dots ,n\}\) such that

since \(n\ge d-j\ge d-k\). In the following, if \(\eta _n=(H_1,\dots ,H_n)\), we denote by \(\eta _n\langle i_1,\dots , i_{d-k} \rangle \) the \((n-d+k)\)-tuple that remains when \(H_{i_1},\dots ,H_{i_{d-k}}\) have been removed from \((H_1,\dots ,H_n)\). Similarly, \(\mathsf{H}_n\langle i_1,\dots ,i_{d-k}\rangle \) is obtained from \(\mathsf{H}_n=(\mathcal H_1,\dots ,\mathcal H_n)\). Then, employing the definition (21), we define

(Note that the indices \(i_1,\dots ,i_{d-k}\) are determined by \({\mathcal L}\).)

Let \(B\in {\mathcal B}(\mathcal {PC}^d)\). According to the definition of \(D_n^{[k,j]}\), we have

For \(k=d\), the condition \(F\cap H_{i_1}\cap \dots \cap H_{i_{d-k}} \not =\{0\}\) is empty and can be deleted. Moreover, if \(n=d-k\), then \(j=k\), \(F=C={\mathbb R}^d\) and \(D_{n}^{[k,j]}=D_{d-k}^{[k,k]}={\mathbb R}^d\) almost surely. After interchanging the integration and the first summation, the summands of the sum \(\sum _{1\le i_1<\dots < i_{d-k}\le n}\) are all the same. Therefore, we obtain

If \(n=d-k\), then the outer integration is omitted and \(F=C={\mathbb R}^d\). We have split the n-fold integration, since the image measure of the measure \(\nu _{d-1}^{d-k}\) under the map \((H_1,\dots ,H_{d-k})\mapsto H_1\cap \dots \cap H_{d-k}\) from \(G(d,d-1)_*^{d-k}\) to G(d, k) is (for reasons of rotation invariance) the Haar probability measure \(\nu _k\) on G(d, k). Therefore, for the inner integral we obtain

Assume now that \(n>d-j\). Then, arguing as in the derivation of (23), we see that the latter is equal to

We conclude that

Together with (19) (for \(\phi ^*=\nu _{d-1}\)) this yields the following.

Lemma 6.1

For every nonnegative, measurable function g on \(\mathcal {PC}^d\) and for \(n>d-j\),

As a consequence, we have

This is the conical counterpart to [26, Thm. 11.5.1] (but in contrast to that, we have no equivalence here: n on the left side and \(n-d+k\) on the right side).

For later application, we note the special case \(k=j\). From (42) and (43) we obtain

for \(n> d-j\).

7 A Geometric Identity

To draw conclusions from the previous results, we need a geometric identity, given by (46), in analogy to [26, Sect. 11.6]. Let \(\eta _n=(H_1,\dots ,H_n)\in G(d,d-1)^n_*\), let \(j\in \{1,\dots ,d-1\}\), \(n> d-j\) and

Let \(F_j\in {\mathcal F}_j(\eta _n)\) be a j-face such that \(F_j\subset L_j\). Let \(k\in \{j,\dots ,d\}\). We delete the hyperplanes \(H_{k-j+1},\dots ,H_{d-j}\). From the tessellation induced by the remaining hyperplanes, we collect the d-cones containing \(F_j\) and then classify their r-faces for fixed r. Thus, we define

Let \(r\in \{1,\dots ,d\}\). For \(p\in {\mathbb N}\) with \(r\le p\le d\) and \(d-p\le k-j\), let

We recall that \({\varLambda }_r(C)\), defined for \(C\in \mathcal {PC}^d\) by (12), is the normalized spherical \((r-1)\)-volume of the \((r-1)\)-skeleton of \(C\cap {\mathbb S}^{d-1}\), that is,

We have

since each \(F\in {\mathcal F}_{r,p}\) belongs to precisely \(2^{d-p}\) cones \(C\in {\mathcal F}_d(\eta _n,F_j,k)\).

Let Q be the unique cone in \({\mathcal F}_d(\eta _n\langle 1,\dots , d-j \rangle )\) with \(F_j\subset Q\), and define

Thus, \({\mathcal C}_p\) is a set of p-dimensional cones, and \({\mathcal C}_d=\{Q\}\). Each r-face \(F\in {\mathcal F}_{r,p}\) satisfies \(F\subset G\in {\mathcal F}_r(D)\) for a unique \(D\in {\mathcal C}_p\) and a unique \(G\in {\mathcal F}_r(D)\). Conversely, for \(D\in {\mathcal C}_p\) and \(G\in {\mathcal F}_r(D)\), the r-face G is the union of r-faces from \({\mathcal F}_{r,p}\), which pairwise have no relatively interior points in common. It follows that

We conclude that

Relation (45) was derived for any \(F_j\in {\mathcal F}_j(\eta _n)\) with \(F_j\subset L_j\). We sum over all such j-faces and note that \(C\in {\mathcal F}_d(\eta _n\langle k-j+1,\dots ,d-j\rangle )\) satisfies \(F_j\subset C\) for some j-face \(F_j\in {\mathcal F}_j(\eta _n)\) with \(F_j\subset L_j\) if and only if \(C\cap L_j\not =\{0\}\). (Recall that \(\eta _n\langle i_1,\dots ,i_{d-k}\rangle \) was defined early in Sect. 6.) Concerning the set \(\mathcal {C}_p\) appearing on the right-hand side of (45), we note that \(Q\in {\mathcal F}_d(\eta _n\langle 1,\dots ,d-j\rangle )\) satisfies \(F_j\subset Q\) for some j-face \(F_j\in {\mathcal F}_j(\eta _n)\) with \(F_j\subset L_j\) if and only if \(Q\cap L_j\not =\{0\}\). Therefore, we obtain the geometric identity

which will be required in Sect. 8. (For \(k=j\), the middle sum on the right-hand side has to be deleted, and the equation becomes a tautology.) This holds for \(\eta _n=(H_1,\dots ,H_n )\in G(d,d-1)^n_*\), \(j\in \{1,\dots ,d-1\}\), with \( L_j:= H_1\cap \dots \cap H_{d-j}\), \(r=1,\dots ,d\), \(k\in \{j,\ldots ,d\}\) and for \(n>d-j\).

8 A Covariance Matrix

We are now in a position to combine the preceding results, in order to finish the proof of Theorem 8.1. The crucial task is to compute the expectation \({\mathbb E}\,{\varLambda }_r(D_n^{[k,j]})\) (Formula (48)). To do this, we use the explicit representation (42) of the distribution of \(D_n^{[k,j]}\) and employ the geometric decomposition result (46) obtained in Sect. 7, together with properties of invariant measures.

We use (42), extended to expectations and then applied to the expectation of \({\varLambda }_r\), for given \(r\in \{1,\dots ,d\}\). However, it will be convenient to replace the index tuple \((1,\dots ,d-k)\) by \((k-j+1,\dots ,d-j)\), for given \(j\in \{1,\dots ,d-1\}\) and \(k\in \{j,\dots ,d\}\). As before we assume that \(n> d-j\). Then we have (splitting the multiple integral appropriately)

(Recall that, for \(k=d\), the condition \(F\cap H_{k-j+1}\cap \dots \cap H_{d-j}\not = \{0\}\) is empty and can be deleted.) If \(k>j\), we split the first sum above in the form

Then, after interchanging in (47) the first summation on the right side and integration, the outer sum has \(\binom{n-d+k}{k-j}\) equal terms, hence we obtain (again regrouping the integrals)

(If \(k=j\), the condition \(F\subset H_{1}\cap \dots \cap H_{k-j}\) is empty and can be deleted.) For fixed subspaces \(H_1,\dots ,H_{k-j}\), we consider the inner integral

A cone \(C\in {\mathcal F}_d(\eta _n\langle k-j+1,\dots ,d-j\rangle )\) has a face \( F \in {\mathcal F}_{d-k+j} (\eta _n\langle k-j+1,\dots ,d-j\rangle )\) satisfying

if and only if

and it can have at most one such face. Using this and (46), we obtain

We conclude that

To evaluate the inner integral above, we fix \(H_1,\dots ,H_{d-p}\) in general position and write \(H_1\cap \dots \cap H_{d-p} =: L_p\). The image measure of \(\nu _{d-1}\) under the (\(\nu _{d-1}\) almost everywhere well defined) map \(H\mapsto H\cap L_p\) from \(G(d,d-1)\) to the Grassmannian \(G(L_p,p-1)\) of \((p-1)\)-dimensional subspaces of \(L_p\) is the invariant probability measure \(\mu _{p-1}\) on \(G(L_p,p-1)\). Therefore, the inner integral can be written as

Note that \(p\ge j\). If \(p=j\), then the second condition under the last sum is empty and can be deleted. Here \({\mathcal F}_p(h_{d-j+1},\dots ,h_n)\) denotes the set of Schläfli cones in \(L_p\) that are generated by the \((p-1)\)-planes \(h_{d-j+1},\dots ,h_n\) in \(L_p\). Identifying \(L_p\) with \({\mathbb R}^p\), we can apply (44) in \(L_p\). For this, we replace d by p, the number n by \(n-d+p\), and raise the indices of the integration variables in (44) by \(d-p\). Then (44), with \(g={\varLambda }_r\), reads

where \(S^{(p)}_m\) denotes the \((\mu _{p-1},m)\)-Schläfli cone in \(L_p\). We conclude that

Comparing (43) and (48), and recalling that \(n>d-j\), we arrive at

Here we substitute \(d-k+j=s\) and \(d-k=t\). Then we replace n by \(n+t\) and assume that \(n> d-s\). The result is

This is the conical (or spherical) counterpart to [26, Thm. 11.7.1]. (The result is also true for \(n<d-s\), since then both sides of the equation are zero.)

We specialize the latter to \(t=s-1\). We have \(Y_{s,s-1}={\varLambda }_s\). Further, \(U_{p-s+t}=U_{p-1}= V_p\) in a space of dimension p. The value of \({\mathbb E}(V_p{\varLambda }_r)(S^{(p)}_{n-d+s})\) is seen from (41). In this way, we obtain the following result.

Theorem 8.1

The face contents of the \((\nu _{d-1},n)\)-Schläfli cone \(S_n\) satisfy

for \(r,s=1,\dots ,d\), where \(\theta \) is defined by (38).

An alternative formulation of (49), which exhibits the symmetry in r and s, is given by

Theorem 8.1 is the conical counterpart to [26, Corollary to Thm. 11.7.1]. It holds for all \(n\in \mathbb {N}\). In fact, if \(n<d-r\) (or \(n<d-s\)), then both sides of (49) are zero. For \(n=d-r\) (or \(n=d-s\)) Eq. (49) is equivalent to (32). Also note that (41) is obtained as the special case \(s=d-k\) and \(r=d\) of (49).

Since the expectations \({\mathbb E} {\varLambda }_r(S_n)\) are known by (32), Theorem 8.1 allows us to write down the complete covariance matrix for the random vector \(({\varLambda }_1(S_n),\dots ,{\varLambda }_d(S_n))\).

For the Cover–Efron cone \(C_n\), there is only one second moment that we can obtain from Theorem 8.1 by dualization, namely \({\mathbb E}f_{d-1}^2(C_n)= {\mathbb E} f_1^2(S_n)= 4{\mathbb E} {\varLambda }_1^2(S_n)\) for \(n\ge d\).

References

Amelunxen, D.: Measures on polyhedral cones: characterizations and kinematic formulas (2015). http://arxiv.org/abs/1412.1569v3

Amelunxen, D., Bürgisser, P.: Intrinsic volumes of symmetric cones and applications in convex programming. Math. Program. 149(1–2), 105–130 (2015)

Amelunxen, D., Lotz, M.: Gordon’s inequality and condition numbers in conic optimization. http://arxiv.org/abs/1408.3016

Amelunxen, D., Lotz, M.: Intrinsic volumes of polyhedral cones: a combinatorial perspective (2015). http://arxiv.org/abs/1512.06033

Amelunxen, D., Lotz, M., McCoy, M.B., Tropp, J.A.: Living on the edge: phase transitions in convex programs with random data. Inf. Inference 3, 224–294 (2014)

Arbeiter, E., Zähle, M.: Geometric measures for random mosaics in spherical spaces. Stochastics Stochastics Rep. 46(1–2), 63–77 (1994)

Bárány, I., Hug, D., Reitzner, M., Schneider, R.: Random points in halfspheres. Random structures algorithms (2016), to appear. http://arxiv.org/abs/1505.04672

Calka, P.: Asymptotic methods for random tessellations. In: Spodarev, E. (ed.) Stochastic Geometry, Spatial Statistics and Random Fields, Asymptotic Methods. Lecture Notes in Mathematics, vol. 2068, pp. 183–204. Springer, Berlin (2013)

Cover, T.M., Efron, B.: Geometrical probability and random points on a hypersphere. Ann. Math. Stat. 38, 213–220 (1967)

Cowan, R., Miles, R.E.: Letter to the Editor. Adv. Appl. Probab. (SGSA) 41(4), 1002–1004 (2009)

Donoho, D.L., Tanner, J.: Counting the faces of randomly-projected hypercubes and orthants, with applications. Discrete Comput. Geom. 43, 522–541 (2010)

Gänssler, P., Stute, W.: Wahrscheinlichkeitstheorie. Springer, Berlin (1977)

Gao, F., Hug, D., Schneider, R.: Intrinsic volumes and polar sets in spherical space. Homage to Luis Santaló, vol 1. Math. Notae 41(2001/02), 159–176 (2003)

Glasauer, S.: Integralgeometrie konvexer Körper im sphärischen Raum. Doctoral Thesis, Albert-Ludwigs-Universität, Freiburg i. Br. (1995)

Glasauer, S.: Integral geometry of spherically convex bodies. Diss. Summ. Math. 1, 219–226 (1996)

Goldstein, L., Nourdin, I., Peccati, G.: Gaussian phase transitions and conic intrinsic volumes: Steining the Steiner formula (2014). http://arxiv.org/abs/1411.6265

Gröbner, W., Hofreiter, N.: Integraltafel. Zweiter Teil, Bestimmte Integrale. Springer, Wien (1950)

Grünbaum, B.: Grassmann angles of convex polytopes. Acta Math. 121, 293–302 (1968)

Hug, D.: Random polytopes. In: Spodarev, E. (ed.) Stochastic Geometry, Spatial Statistics and Random Fields. Lecture Notes in Mathematics, vol. 2068, pp. 205–238. Springer, Berlin (2013)

Kabluchko, Z., Vysotsky, V., Zaporozhets, D.: Convex hulls of random walks, hyperplane arrangements, and Weyl chambers (2015). http://arxiv.org/abs/1510.04073

Klivans, C.E., Swartz, E.: Projection volumes of hyperplane arrangements. Discrete Comput. Geom. 46, 417–426 (2011)

Matheron, G.: Hyperplans Poissoniens et compact de Steiner. Adv. Appl. Probab. 6, 563–579 (1974)

Matheron, G.: Random Sets and Integral Geometry. Wiley, New York (1975)

McCoy, M.B., Tropp, J.A.: From Steiner formulas for cones to concentration of intrinsic volumes. Discrete Comput. Geom. 51(4), 926–963 (2014)

McMullen, P.: Non-linear angle sum relations for polyhedral cones and polytopes. Math. Proc. Camb. Philos. Soc. 78, 247–261 (1975)

Miles, R.E.: Random polytopes: the generalisation to \(n\) dimensions of the intervals of a Poisson process. Ph.D. Thesis, Cambridge University (1961)

Miles, R.E.: Random polygons determined by random lines in a plane. Proc. Natl Acad. Sci. USA 52, 901–907 (1964)

Miles, R.E.: Random polygons determined by random lines in a plane II. Proc. Natl Acad. Sci. USA 52, 1157–1160 (1964)

Miles, R.E.: Poisson flats in Euclidean spaces. I: A finite number of random uniform flats. Adv. Appl. Probab. 1, 211–237 (1969)

Miles, R.E.: A synopsis of ‘Poisson flats in Euclidean spaces’. Izv. Akad. Nauk Arm. SSR, Mat. 5, pp. 263–285 (1970). Reprinted in: Harding, E.F., Kendall, D.G. (eds.), Stochastic Geometry, pp. 202–227, Wiley, New York (1974)

Miles, R.E.: Random points, sets and tessellations on the surface of a sphere. Sankhyā Ser. A 33, 145–174 (1971)

Redenbach, C., Liebscher, A.: Random tessellations and their application to the modelling of cellular materials. In: Schmidt, V. (ed.) Stochastic Geometry, Spatial Statistics and Random Fields, Models and Algorithms. Lecture Notes in Mathematics, vol. 2120, pp. 73–93. Springer, Cham (2015)

Reitzner, M.: Random polytopes. In: Kendall, W.S., Molchanov, I. (eds.) New Perspectives in Stochastic Geometry, pp. 45–76. Oxford University Press, Oxford (2010)

Santaló, L.A.: Sobre la formula de Gauss-Bonnet para poliedros en espacios de curvatura constante. Revista Un. Mat. Argentina 20, 79–91 (1962)

Santaló, L.A.: Sobre la formula fundamental cinematica de la geometria integral en espacios de curvatura constante. Math. Notae 18, 79–94 (1962)

Santaló, L.A.: Integral Geometry and Geometric Probability. Encyclopedia of Mathematics and Its Applications, vol. 1. Addison-Wesley, Reading (1976)

Schneider, R.: Weighted faces of Poisson hyperplane tessellations. Adv. Appl. Probab. (SGSA) 41(3), 682–694 (2009)

Schneider, R.: Second moments related to Poisson hyperplane tessellations. J. Math. Anal. Appl. 434, 1365–1375 (2016). doi:10.1016/j.jmaa.2015.10.005

Schneider, R.: Discrete aspects of stochastic geometry. In: Toth, C.S., et al. (eds.), Handbook of Discrete and Computational Geometry, 3rd edn, to appear. http://home.mathematik.uni-freiburg.de/rschnei/DCG.Chapter13.pdf

Schneider, R., Weil, W.: Stochastic and Integral Geometry. Springer, Berlin (2008)

Voss, F., Gloaguen, C., Schmidt, V.: Random tessellations and Cox processes. In: Spodarev, E. (ed.) Stochastic Geometry, Spatial Statistics and Random Fields, Asymptotic Methods. Lecture Notes in Mathematics, vol. 2068, pp. 151–182. Springer, Berlin (2013)

Acknowledgments

Supported in part by DFG Grants FOR 1548 and HU 1874/4-2.

Additional information

Editor in Charge: Günter M. Ziegler

Cite this article

Hug, D., Schneider, R. Random Conical Tessellations. Discrete Comput Geom 56, 395–426 (2016). https://doi.org/10.1007/s00454-016-9788-0

DOI: https://doi.org/10.1007/s00454-016-9788-0

Keywords

- Conical tessellation

- Spherical tessellation

- Random polyhedral cones

- Conical quermassintegrals

- Conical intrinsic volumes

- Number of k-faces

- First and second order moments