Abstract

We discuss subordination of random compact \({\mathbb R}\)-trees. We focus on the case of the Brownian tree, where the subordination function is given by the past maximum process of Brownian motion indexed by the tree. In that particular case, the subordinate tree is identified as a stable Lévy tree with index 3/2. As a more precise alternative formulation, we show that the maximum process of the Brownian snake is a time change of the height process coding the Lévy tree. We then apply our results to properties of the Brownian map. In particular, we recover, in a more precise form, a recent result of Miller and Sheffield identifying the metric net associated with the Brownian map.

1 Introduction

Subordination is a powerful tool in the study of random processes, see in particular Feller [11, Chapter X], and Sato [26, Chapter 6] in the context of Lévy processes. In the present work, we investigate subordination of random trees, and we apply our results to properties of the random metric space called the Brownian map, which has been proved to be the universal scaling limit of many different classes of random planar maps (see in particular [2, 17, 20]). These applications have been motivated by the work of Miller and Sheffield [21], which is part of a program aiming at the construction of a conformal structure on the Brownian map (see [22,23,24] for recent developments in this direction).

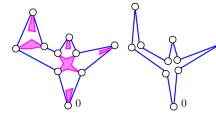

To explain our starting point, let us consider a compact \({\mathbb R}\)-tree \({\mathcal T}\). This means that \({\mathcal T}\) is a compact metric space such that, for every \(a,b\in {\mathcal T}\), there exists a unique (continuous injective) path from a to b, up to reparameterization, and the range of this path, which is called the geodesic segment between a and b and denoted by \(\llbracket a,b \rrbracket \), is isometric to a compact interval of the real line. We assume that \({\mathcal T}\) is rooted, so that there is a distinguished point \(\rho \) in \({\mathcal T}\). This allows us to define a genealogical order on \({\mathcal T}\), by saying that \(a\prec b\) if and only if \(a\in \llbracket \rho ,b\rrbracket \). Consider then a continuous function \(g:{\mathcal T}\longrightarrow {\mathbb R}_+\), such that \(g(\rho )=0\) and g is nondecreasing for the genealogical order. The basic idea of subordination is to identify a and b if g is constant on the geodesic segment \(\llbracket a,b \rrbracket \). So, for every \(a\in {\mathcal T}\), the set of all points that are identified with a is a closed connected subset of \({\mathcal T}\). This gluing operation yields another compact \({\mathbb R}\)-tree \(\widetilde{\mathcal T}\), called the subordinate tree of \({\mathcal T}\) with respect to g, which is equipped with a metric such that the distance between the root and (the equivalence class of) a is g(a) (see Fig. 1 for an illustration). Furthermore, if our initial tree \({\mathcal T}\) is given as the tree coded by a continuous function \(h:[0,\sigma ]\longrightarrow {\mathbb R}_+\) (see [8] or Sect. 2 below), the subordinate tree \(\widetilde{\mathcal T}\) is coded by \(g\circ p_h\), where \(p_h\) is the canonical projection from \([0,\sigma ]\) onto \({\mathcal T}\).

Our main interest is in the case where \({\mathcal T}\) is random, and more precisely \({\mathcal T}={\mathcal T}_\zeta \) is the “Brownian tree” coded by a positive Brownian excursion \(\zeta =(\zeta _s)_{0\le s\le \sigma }\) under the Itô excursion measure. One may view \({\mathcal T}_\zeta \) as a variant of Aldous’ Brownian CRT, for which the total mass is not equal to 1, but is distributed according to an infinite measure on \((0,\infty )\). As previously, we write \(p_\zeta \) for the canonical projection from \([0,\sigma ]\) onto \({\mathcal T}_\zeta \). Next, to define the subordination function, we let \((Z_a)_{a\in {\mathcal T}_\zeta }\) be (linear) Brownian motion indexed by \({\mathcal T}_\zeta \), starting from 0 at the root \(\rho \). A simple way to construct this process is to use the Brownian snake approach, letting \(Z_a=\widehat{W}_s\) if \(a=p_\zeta (s)\), where \(\widehat{W}_s\) is the “tip” of the random path \(W_s\), which is the value at time s of the Brownian snake driven by \(\zeta \). Since \(\zeta \) is distributed according to the Itô measure, W follows the Brownian snake excursion measure away from 0, which we denote by \({\mathbb N}_0\) (see [14, Chapter IV]). We then set \(\overline{Z}_a=\max \{Z_b : b\in \llbracket \rho ,a \rrbracket \}\). In terms of the Brownian snake, we have \(\overline{Z}_a=\overline{W}_s:=\max \{ W_s(t):0\le t\le \zeta _s\}\) whenever \(a=p_\zeta (s)\). We also use the notation \(\underline{W}_s:=\min \{ W_s(t):0\le t\le \zeta _s\}\).

Theorem 1

Let \(\widetilde{\mathcal T}_\zeta \) stand for the subordinate tree of \({\mathcal T}_\zeta \) with respect to the (continuous nondecreasing) function \(a\mapsto \overline{Z}_a\). Under the Brownian snake excursion measure \({\mathbb N}_0\), the tree \(\widetilde{\mathcal T}_\zeta \) is a Lévy tree with branching mechanism

$$\psi _0(r)=\sqrt{8/3}\,r^{3/2}.$$

Recall that Lévy trees represent the genealogy of continuous-state branching processes [8], and can be characterized by a regenerative property analogous to the branching property of Galton–Watson trees [27]. Our identification of the distribution of \(\widetilde{\mathcal T}_\zeta \) is reminiscent of the classical result stating that the right-continuous inverse of the maximum process of a standard linear Brownian motion is a stable subordinator with index 1/2.

In view of our applications, it turns out that it is important to have more information than the mere identification of \(\widetilde{\mathcal T}_\zeta \) as a random compact \({\mathbb R}\)-tree. As mentioned above, \(\widetilde{\mathcal T}_\zeta \) can be viewed as the tree coded by the random function \(s\mapsto \overline{Z}_{p_\zeta (s)}=\overline{W}_s\). This coding induces a “lexicographical” order structure on \(\widetilde{\mathcal T}_\zeta \) (see [6] for a thorough discussion of order structures on \({\mathbb R}\)-trees). Somewhat surprisingly, it is not immediately clear that the order structure on \(\widetilde{\mathcal T}_\zeta \) induced by the coding from the function \(s\mapsto \overline{W}_s\) coincides with the usual order structure on Lévy trees, corresponding to the uniform random shuffling at every node in the terminology of [6]. To obtain this property, we relate the function \(s\mapsto \overline{W}_s\) to the height process of [7, 8]. We recall that, from a Lévy process with Laplace exponent \(\psi _0\), we can construct a continuous random process \((H_t)_{t\ge 0}\) called the height process, which codes the \(\psi _0\)-Lévy tree (here and below we say \(\psi _0\)-Lévy tree rather than Lévy tree with branching mechanism \(\psi _0\) for simplicity). See Sect. 3 below for more details.

Theorem 2

There exists a process H, which is distributed under \({\mathbb N}_0\) as the height process of a Lévy tree with branching mechanism \(\psi _0(r)=\sqrt{8/3}\,r^{3/2}\), and a continuous random process \(\Gamma \) with nondecreasing sample paths, such that we have, \({\mathbb N}_0\) a.e. for every \(s\ge 0\),

$$\overline{W}_s=H_{\Gamma _s}.$$

Both H and \(\Gamma \) will be constructed in the proof of Theorem 2, and are measurable functions of \((W_s)_{s\ge 0}\). It is possible to identify the random process \(\Gamma \) as a continuous additive functional of the Brownian snake, but we do not need this fact in the subsequent applications, and we do not discuss this matter in the present work.

Theorem 2 implies that the tree coded by \(\overline{W}_s\) is isometric to the tree coded by \(H_s\), and we recover Theorem 1. But Theorem 2 gives much more, namely that the order structure induced by the coding via \(s\mapsto \overline{W}_s\) is the same as the order structure induced by the usual height function of the Lévy tree. Order structures are crucial for our applications to the Brownian map. As in [21], we deal with a version of the Brownian map with randomized volume, which is constructed as a quotient space of the tree \({\mathcal T}_\zeta \): two points a and b of \({\mathcal T}_\zeta \) are identified if \(Z_a=Z_b\) and if \(Z_c\ge Z_a\) for every \(c\in [a,b]\), where [a, b] is the set of all points that are visited when going from a to b around the tree in “clockwise” order (for this to make sense, it is essential that \({\mathcal T}_\zeta \) has been equipped with a lexicographical order structure). We write \(\mathbf{m}\) for the resulting quotient space (the Brownian map) and \(D^*\) for the metric on the Brownian map (see [16, 17] for more details). The space \(\mathbf{m}\) comes with two distinguished points, namely the root \(\rho \) of \({\mathcal T}_\zeta \) and the unique point \(\rho _*\) where Z attains its minimal value; in a sense that can be made precise, these two points are independent and uniformly distributed over \(\mathbf{m}\). For every \(r\ge 0\), let B(r) be the closed ball of radius r centered at \(\rho _*\) in \(\mathbf{m}\). For \(r\in [0,D^*(\rho _*,\rho ))\), define the hull \(B^\bullet (r)\) as the complement of the connected component of the complement of B(r) that contains \(\rho \) (informally, \(B^\bullet (r)\) is obtained by filling in all holes of B(r) except for the one containing \(\rho \)). In particular \(B^\bullet (0)=\{\rho _*\}\). Following [21], we define the metric net \(\mathcal {M}\) as the closure of

$$\bigcup _{0\le r<D^*(\rho _*,\rho )}\partial B^\bullet (r).$$

(This definition is in fact a little different from [21] which does not take the closure of the union in the last display.) We can equip the set \(\mathcal {M}\) with an “intrinsic” metric \(\Delta ^*\) derived from the Brownian map metric \(D^*\).

It is not hard to verify that, in the construction of \(\mathbf{m}\) as a quotient space of \({\mathcal T}_\zeta \), points of \(\mathcal {M}\) exactly correspond to points a of \({\mathcal T}_\zeta \) such that \(Z_a=\underline{Z}_a:=\min \{Z_b:b\in \llbracket \rho ,a\rrbracket \}\) (Proposition 10). This suggests that the metric net \(\mathcal {M}\) is closely related to the subordinate tree of \({\mathcal T}_\zeta \) with respect to the function \(a\mapsto -\underline{Z}_a\), which is a \(\psi _0\)-Lévy tree by the preceding results. To make this relation precise, we need the notion of the looptree introduced by Curien and Kortchemski [4] in a more general setting. Informally, the looptree associated with a Lévy tree is constructed by replacing each point a of infinite multiplicity by a loop of “length” equal to the “weight” of a, in such a way that the subtrees corresponding to the connected components of the complement of a are grafted along this loop in an order determined by the coding function (see Fig. 3 below for an illustration). To give a more precise definition, first note that Theorem 2 and an obvious symmetry argument allow us to find a process \((H'_s)_{s\ge 0}\) distributed as the height process of a \(\psi _0\)-Lévy tree, and a continuous random process \((\Gamma '_s)_{s\ge 0}\) with nondecreasing sample paths such that \(-\underline{W}_s= H'_{\Gamma '_s}\). Then let \(\chi :=\sup \{s\ge 0:H'_s>0\}\), and let \(\sim \) be the equivalence relation on \([0,\chi ]\) whose graph is the smallest closed symmetric subset of \([0,\chi ]^2\) that contains all pairs (s, t) with \(s\le t\), \(H'_s=H'_t\) and \(H'_r>H'_s\) for every \(r\in (s,t)\). The looptree \({\mathcal L}\) is defined as the quotient space \([0,\chi ]{/}\sim \) equipped with an appropriate metric (see [4] for a definition of this metric). Clearly, \(H'_\alpha \) makes sense for any \(\alpha \in {\mathcal L}\) and is interpreted as the height of \(\alpha \).
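We observe that this height is not directly related to the metric of the looptree. The latter metric is in fact not relevant for us, since we consider instead the pseudo-metric \(\mathcal {D}^\circ \) defined for \(\alpha ,\beta \in {\mathcal L}\) by

$$\mathcal {D}^\circ (\alpha ,\beta )=2\min \Big (\max _{\gamma \in [\alpha ,\beta ]}H'_\gamma ,\,\max _{\gamma \in [\beta ,\alpha ]}H'_\gamma \Big )-H'_\alpha -H'_\beta ,$$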

where \([\alpha ,\beta ]\) corresponds to the subset of \({\mathcal L}\) visited when going from \(\alpha \) to \(\beta \) “around” \({\mathcal L}\) in “clockwise order” (see Sect. 7 for a more formal definition). We write \(\alpha \simeq \beta \) if \(\mathcal {D}^\circ (\alpha ,\beta )=0\) (informally this means that \(\alpha \) and \(\beta \) “face each other” in the tree, in the sense that they are at the same height, and that points “between” \(\alpha \) and \(\beta \) are at a smaller height). It turns out that this defines an equivalence relation on \({\mathcal L}\). Finally, we let \(\mathcal {D}^*\) be the largest symmetric function on \({\mathcal L}\times {\mathcal L}\) that is bounded above by \(\mathcal {D}^\circ \) and satisfies the triangle inequality.

Theorem 3

The metric net \((\mathcal {M}, \Delta ^*)\) is a.s. isometric to the quotient space \({\mathcal L}{/}\simeq \) equipped with the metric induced by \(\mathcal {D}^*\).

See Theorem 12 in Sect. 7 for a more precise formulation. Theorem 3 is closely related to Proposition 4.4 in [21], where, however, the metric net is not identified as a metric space. The description of the metric net is an important ingredient of the axiomatic characterization of the Brownian map discussed in [21].

Let us briefly comment on the motivations for studying the metric net. Roughly speaking, the Brownian map \(\mathbf{m}\) can be recovered from the metric net \(\mathcal {M}\) by filling in the “holes”. To make this precise, we observe that the connected components of \(\mathbf{m}\backslash \mathcal {M}\) are bounded by Jordan curves (Proposition 14) and that these components are in one-to-one correspondence with connected components of \({\mathcal T}_\zeta \backslash \Theta \), where \(\Theta :=\{a\in {\mathcal T}_\zeta : Z_a=\underline{Z}_a\}\). Each of the latter components is associated with an excursion of the Brownian snake above its minimum, in the terminology of [1], and the distribution of such an excursion only depends on its boundary size as defined in [1] (this boundary size can be interpreted as a generalized length of the Jordan curve bounding the corresponding component of \(\mathbf{m}\backslash \mathcal {M}\)). Theorem 40 in [1] shows that, conditionally on their boundary sizes, these excursions are independent and distributed according to a certain “excursion measure”. In the Brownian map setting, this means that the holes in the metric net are filled in independently, conditionally on the lengths of their boundaries (see [18, Section 11] for a precise statement).

The paper is organized as follows. Section 2 gives a brief discussion of subordination for deterministic trees, and Sect. 3 recalls the basic facts about Lévy trees that we need. After a short presentation of the Brownian snake, Sect. 4 gives the distribution of the subordinate tree \(\widetilde{\mathcal T}_\zeta \) (Theorem 1). In view of identifying the order structure of this subordinate tree, Sect. 5 provides a technical result showing that the height process coding a Lévy tree is the limit in a strong sense of the discrete height functions coding embedded Galton–Watson trees. This result is related to the general limit theorems of [7, Chapter 2] proving that Lévy trees are weak limits of Galton–Watson trees, but the fact that we get a strong approximation is crucial for our applications. In Sect. 6, we prove that the Brownian snake maximum process \(s\mapsto \overline{W}_s\) is a time change of the height process associated with a \(\psi _0\)-Lévy tree (Theorem 2). This result is a key ingredient of the developments of Sect. 7, where we identify the metric net of the Brownian map (Theorem 3). Section 8 discusses the connected components of the complement of the metric net, showing in particular that they are in one-to-one correspondence with the points of infinite multiplicity of the associated Lévy tree, and that the boundary of each component is a Jordan curve. Section 9, which is mostly independent of the preceding sections, discusses more general subordinations of the Brownian tree \({\mathcal T}_\zeta \), which lead to stable Lévy trees with an arbitrary index. This section is related to our previous article [3], which dealt with subordination for spatial branching processes, but the latter work did not consider the associated genealogical structures as we do here, and the subordination method, based on the so-called residual lifetime process, was also different.
Finally, the appendix presents a more general and more precise version of the special Markov property of the Brownian snake (first established in [13]), which plays an important role in several proofs.

2 Subordination of deterministic trees

In this short section, we present a few elementary considerations about deterministic \({\mathbb R}\)-trees. We refer to [10] for the basic facts about \({\mathbb R}\)-trees that we will need, and to [6] for a thorough study of the coding of compact \({\mathbb R}\)-trees by functions.

Let us consider a compact \({\mathbb R}\)-tree \(({\mathcal T},d)\). We will always assume that \({\mathcal T}\) is rooted, meaning that there is a distinguished point \(\rho \in {\mathcal T}\). If \(a,b\in {\mathcal T}\), the geodesic segment between a and b (the range of the unique geodesic from a to b) is denoted by \(\llbracket a,b \rrbracket \). The point \(a\wedge b\) is then defined by \(\llbracket \rho ,a\wedge b \rrbracket =\llbracket \rho , a \rrbracket \cap \llbracket \rho ,b \rrbracket \). The genealogical partial order on \({\mathcal T}\) is denoted by \(\prec \;\): we have \(a\prec b\) if and only if \(a\in \llbracket \rho ,b\rrbracket \), and we then say that a is an ancestor of b, or b is a descendant of a. Finally, the height of \({\mathcal T}\) is defined by

$$\mathcal {H}({\mathcal T})=\max \{d(\rho ,a):a\in {\mathcal T}\}.$$

Let \(g:{\mathcal T}\longrightarrow {\mathbb R}_+\) be a nonnegative continuous function on \({\mathcal T}\). Assume that \(g(\rho )=0\) and that g is nondecreasing with respect to the genealogical order (\(a\prec b\) implies that \(g(a)\le g(b)\)). We then define, for every \(a,b\in {\mathcal T}\),

$$d^{(g)}(a,b)=g(a)+g(b)-2\,g(a\wedge b).$$

Notice that \(d^{(g)}(a,b)\) is a symmetric function of a and b and satisfies the triangle inequality. We can thus consider the equivalence relation

$$a\approx _{g} b\quad \hbox{if and only if}\quad d^{(g)}(a,b)=0.$$

Thus \(a\approx _{g} b\) if and only if \(g(a)=g(b)=g(a\wedge b)\), and this is also equivalent to saying that \(g(c)=g(a)\) for every \(c\in \llbracket a,b\rrbracket \). Write \({\mathcal T}^{(g)}\) for the quotient \({\mathcal T}{/}\approx _{g}\), and \(\pi ^{(g)}\) for the canonical projection from \({\mathcal T}\) onto \({\mathcal T}^{(g)}\). We equip \({\mathcal T}^{(g)}\) with the distance induced by \(d^{(g)}\), for which we keep the same notation \(d^{(g)}\).
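The relation \(\approx _g\) can be tested by hand on a finite rooted tree. The following Python sketch is our own illustration, not part of the construction above: the tree is stored through hypothetical parent pointers, g is an arbitrary labelling of the vertices that vanishes at the root and is nondecreasing along ancestral lines, and \(d^{(g)}(a,b)=g(a)+g(b)-2g(a\wedge b)\) is computed with \(a\wedge b\) the most recent common ancestor. The helper names are ours.

```python
def ancestors(parent, a):
    """Ancestral line [a, parent(a), ..., root] of vertex a."""
    line = [a]
    while parent[line[-1]] is not None:
        line.append(parent[line[-1]])
    return line


def meet(parent, a, b):
    """Most recent common ancestor of a and b (discrete analogue of a ∧ b)."""
    anc_a = set(ancestors(parent, a))
    for c in ancestors(parent, b):
        if c in anc_a:
            return c
    raise ValueError("a and b do not belong to the same tree")


def d_g(parent, g, a, b):
    """d^(g)(a, b) = g(a) + g(b) - 2 g(a ∧ b)."""
    return g[a] + g[b] - 2 * g[meet(parent, a, b)]


def subordinate_classes(parent, g):
    """Group the vertices into classes of the relation d^(g)(a, b) = 0."""
    classes = []
    for v in parent:
        for cls in classes:
            if d_g(parent, g, v, cls[0]) == 0:
                cls.append(v)
                break
        else:
            classes.append([v])
    return classes


# Hypothetical tree: root 0 with children 1, 2; vertex 1 has children 3, 4; vertex 2 has child 5.
parent = {0: None, 1: 0, 2: 0, 3: 1, 4: 1, 5: 2}
g = {0: 0, 1: 0, 2: 1, 3: 0, 4: 2, 5: 1}  # g(root) = 0, nondecreasing along ancestral lines
print(subordinate_classes(parent, g))     # [[0, 1, 3], [2, 5], [4]]
```

Each class is a connected set of vertices on which g is constant, and in the subordinate tree the class of vertex 4 sits at distance \(g(4)=2\) from the root.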

Proposition 4

The metric space \(({\mathcal T}^{(g)},d^{(g)})\) is again a compact \({\mathbb R}\)-tree.

Proof

We first note that \(\pi ^{(g)}\) is continuous because, if \((a_n)_{n\ge 0}\) is a sequence in \({\mathcal T}\) that converges to \(a\in {\mathcal T}\), the continuity of g implies that \(d^{(g)}(a_n,a)\) tends to 0. It follows that the metric space \(({\mathcal T}^{(g)},d^{(g)})\) is compact. Then one easily verifies that, for every \(a\in {\mathcal T}\), \(\pi ^{(g)}(\llbracket \rho ,a \rrbracket )\) is a segment in \(({\mathcal T}^{(g)},d^{(g)})\) with endpoints \(\pi ^{(g)}(\rho )\) and \(\pi ^{(g)}(a)\). From Lemma 3.36 in [10], to verify that \(({\mathcal T}^{(g)},d^{(g)})\) is an \({\mathbb R}\)-tree, it suffices to check that the four-point condition

$$d^{(g)}(a_1,a_2)+d^{(g)}(a_3,a_4)\le \max \big (d^{(g)}(a_1,a_3)+d^{(g)}(a_2,a_4),\,d^{(g)}(a_1,a_4)+d^{(g)}(a_2,a_3)\big )$$

holds for every \(a_1,a_2,a_3,a_4\in {\mathcal T}\). This is straightforward and we omit the details. \(\square \)
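To see the four-point condition at work on finite examples, here is a short Python sketch (our own illustration; the function names are hypothetical). It checks the condition for the distance \(h(s)+h(t)-2\min \{h(r):s\wedge t\le r\le s\vee t\}\) computed from the samples of an integer-valued coding function, which is a tree (pseudo-)metric, and for the graph metric of a 4-cycle, which is not a tree metric.

```python
from itertools import combinations


def coded_distance(h, s, t):
    """h(s) + h(t) - 2 min{h(r) : s ∧ t <= r <= s ∨ t} for a sampled function h."""
    lo, hi = min(s, t), max(s, t)
    return h[s] + h[t] - 2 * min(h[lo:hi + 1])


def four_point_holds(dist, points, tol=1e-12):
    """Check the four-point condition on every 4-element subset of `points`.

    For a fixed set {a1, a2, a3, a4}, the condition for all orderings is equivalent to:
    the largest of the three pairing sums is attained at least twice.
    """
    for a1, a2, a3, a4 in combinations(points, 4):
        sums = sorted([dist(a1, a2) + dist(a3, a4),
                       dist(a1, a3) + dist(a2, a4),
                       dist(a1, a4) + dist(a2, a3)])
        if sums[2] - sums[1] > tol:
            return False
    return True


def cycle_metric(i, j):
    """Graph distance on a cycle with 4 vertices."""
    return min(abs(i - j), 4 - abs(i - j))


h = [0, 1, 2, 1, 3, 1, 0, 2, 0]  # samples of a coding function with h(0) = h(sigma) = 0
print(four_point_holds(lambda s, t: coded_distance(h, s, t), range(len(h))))  # True
print(four_point_holds(cycle_metric, range(4)))                               # False
```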

We call \(({\mathcal T}^{(g)},d^{(g)})\) the subordinate tree of \(({\mathcal T},d)\) with respect to the function g. By convention, \({\mathcal T}^{(g)}\) is rooted at \(\pi ^{(g)}(\rho )\). Since \(d^{(g)}(\rho ,a)= g(a)\) for every \(a\in {\mathcal T}\), we have \(\mathcal {H}({\mathcal T}^{(g)})=\max \{g(a):a\in {\mathcal T}\}\).

Consider now a continuous function \(h:[0,\sigma ]\longrightarrow {\mathbb R}_+\), where \(\sigma \ge 0\), such that \(h(0)=h(\sigma )=0\), and assume that \(({\mathcal T},d)\) is the tree coded by h in the sense of [8] or [6]. This means that \({\mathcal T}={\mathcal T}_h\) is the quotient space \([0,\sigma ]{/}\sim _h\), where the equivalence relation \(\sim _h\) is defined on \([0,\sigma ]\) by

$$s\sim _h t\quad \hbox{if and only if}\quad h(s)=h(t)=\min _{r\in [s\wedge t,s\vee t]}h(r),$$

and \(d=d_h\) is the distance induced on the quotient space by

$$d_h(s,t)=h(s)+h(t)-2\min _{r\in [s\wedge t,s\vee t]}h(r).$$

Notice that the topology of \(({\mathcal T}_h,d_h)\) coincides with the quotient topology on \({\mathcal T}_h\).

The canonical projection from \([0,\sigma ]\) onto \({\mathcal T}_h\) is denoted by \(p_h\), and \({\mathcal T}_h\) is rooted at \(\rho _h=p_h(0)\). For \(s\in [0,\sigma ]\), the quantity \(h(s)=d_h(0,s)\) is interpreted as the height of \(p_h(s)\) in the tree. One easily verifies that, for every \(s,t\in [0,\sigma ]\), the property \(p_h(s)\prec p_h(t)\) holds if and only if \(h(s)=\min \{h(r):s\wedge t\le r\le s\vee t\}\).
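The two criteria above, for \(p_h(s)=p_h(t)\) and for \(p_h(s)\prec p_h(t)\), only involve minima of h, so they are easy to evaluate at sample times. The Python sketch below is our own illustration, with hypothetical names. It assumes that h is the piecewise-linear interpolation of integer-valued samples, in which case the minimum over \([s\wedge t,s\vee t]\) with integer endpoints is attained at a sample and the tests are exact at sample times.

```python
def is_ancestor(h, s, t):
    """p_h(s) ≺ p_h(t)  iff  h(s) = min{h(r) : s ∧ t <= r <= s ∨ t}."""
    lo, hi = min(s, t), max(s, t)
    return h[s] == min(h[lo:hi + 1])


def same_point(h, s, t):
    """s ~_h t  iff  h(s) = h(t) = min{h(r) : s ∧ t <= r <= s ∨ t}."""
    return is_ancestor(h, s, t) and is_ancestor(h, t, s)


# Contour of a root with one child, which itself has two children (unit edge lengths).
h = [0, 1, 2, 1, 2, 1, 0]
print(is_ancestor(h, 1, 4))  # True: the vertex visited at times 1, 3, 5 is an ancestor of the leaf at time 4
print(is_ancestor(h, 2, 4))  # False: the leaves visited at times 2 and 4 are not comparable
print(same_point(h, 1, 5))   # True
```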

Remark

The function h is not determined by \({\mathcal T}_h\). In particular, if \(\phi :[0,\sigma ']\rightarrow [0,\sigma ]\) is continuous and nondecreasing, and such that \(\phi (0)=0\) and \(\phi (\sigma ')=\sigma \), the tree coded by \(h\circ \phi \) is isometric to the tree coded by h. This simple observation will be useful later.
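A discrete version of this observation can be checked directly. In the Python sketch below (our own illustration, with hypothetical names), a continuous nondecreasing \(\phi \) with \(\phi (0)=0\) and \(\phi (\sigma ')=\sigma \) is replaced by a nondecreasing index map that never skips an index; the check confirms that the coded distance of \(h\circ \phi \) at \((s',t')\) equals the coded distance of h at \((\phi (s'),\phi (t'))\), so the two coded trees are isometric.

```python
def coded_distance(h, s, t):
    """h(s) + h(t) - 2 min{h(r) : s ∧ t <= r <= s ∨ t} for a sampled function h."""
    lo, hi = min(s, t), max(s, t)
    return h[s] + h[t] - 2 * min(h[lo:hi + 1])


def distances_preserved(h, phi):
    """Check that d_{h∘phi}(s', t') == d_h(phi(s'), phi(t')) for all sample indices.

    phi is a discrete stand-in for a continuous nondecreasing map with phi(0) = 0 and
    phi(sigma') = sigma: it must be nondecreasing and never skip an index of h.
    """
    if phi[0] != 0 or phi[-1] != len(h) - 1:
        raise ValueError("phi must map the endpoints onto the endpoints")
    if any(b - a not in (0, 1) for a, b in zip(phi, phi[1:])):
        raise ValueError("phi must be nondecreasing with increments 0 or 1")
    k = [h[i] for i in phi]  # samples of h∘phi
    n = len(phi)
    return all(coded_distance(k, s, t) == coded_distance(h, phi[s], phi[t])
               for s in range(n) for t in range(n))


h = [0, 1, 2, 1, 0]
phi = [0, 0, 1, 2, 2, 2, 3, 4]       # pauses at times 0 and 2, so h∘phi = [0, 0, 1, 2, 2, 2, 1, 0]
print(distances_preserved(h, phi))   # True
```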

Proposition 5

Under the preceding assumptions, if g is a nonnegative continuous function on \({\mathcal T}_h\) such that \(g(\rho _h)=0\) and g is nondecreasing with respect to the genealogical order on \({\mathcal T}_h\), the subordinate tree \(({\mathcal T}_h^{(g)},d_h^{(g)})\) of \(({\mathcal T}_h,d_h)\) with respect to the function g is isometric to the tree \(({\mathcal T}_G,d_G)\) coded by the function \(G=g\circ p_h\).

Proof

Note that the function G is nonnegative and continuous on \([0,\sigma ]\), and \(G(0)=G(\sigma )=0\). We can therefore make sense of the tree \(({\mathcal T}_G,d_G)\) and as above we denote the canonical projection from \([0,\sigma ]\) onto \({\mathcal T}_G\) by \(p_G\). We first notice that, for every \(s,t\in [0,\sigma ]\), the property \(p_h(s)=p_h(t)\) implies \(p_G(s)=p_G(t)\). Indeed, if \(p_h(s)=p_h(t)\), then, for every \(r\in [s\wedge t,s\vee t]\), we have \(p_h(s)\prec p_h(r)\) and therefore \(g(p_h(s))\le g(p_h(r))\), so that

$$G(s)=G(t)=\min _{r\in [s\wedge t,s\vee t]}G(r)$$

and \(p_G(s)=p_G(t)\). We can thus write \(p_G=\mathbf{p}\circ p_h\), where the function \(\mathbf{p}:{\mathcal T}_h \longrightarrow {\mathcal T}_G\) is continuous and onto.

Then, let \(a,b\in {\mathcal T}_h\) and write \(a=p_h(s)\) and \(b=p_h(t)\), with \(s,t\in [0,\sigma ]\). We note that, for every \(r\in [s\wedge t,s\vee t]\), \(p_h(s)\wedge p_h(t)\prec p_h(r)\), and furthermore \(p_h(s)\wedge p_h(t)=p_h(r_0)\), if \(r_0\) is any element of \([s\wedge t,s\vee t]\) at which h attains its minimum over \([s\wedge t,s\vee t]\). It follows that

$$g(a\wedge b)=g(p_h(r_0))=\min _{r\in [s\wedge t,s\vee t]}G(r).$$

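Hence,

$$d_h^{(g)}(a,b)=g(a)+g(b)-2\,g(a\wedge b)=G(s)+G(t)-2\min _{r\in [s\wedge t,s\vee t]}G(r)=d_G(s,t).$$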

If \(\pi ^{(g)}\) is the projection from \({\mathcal T}_h\) onto the subordinate tree \({\mathcal T}_h^{(g)}\), we see in particular that the condition \(\pi ^{(g)}(a)=\pi ^{(g)}(b)\) implies \(p_G(s)=p_G(t)\) and therefore \(\mathbf{p}(a)=\mathbf{p}(b)\).

It follows that \(\mathbf{p}= I \circ \pi ^{(g)}\), where \(I:{\mathcal T}_h^{(g)} \longrightarrow {\mathcal T}_G\) is onto. It remains to verify that I is isometric, but this is immediate from the identities in the last display. \(\square \)
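A brute-force check of the identity \(d_h^{(g)}(a,b)=d_G(s,t)\) on a finite example may help. The Python sketch below is our own illustration, with hypothetical names: it builds the depth-first contour sequence of a small plane tree (so that the depths along the contour play the role of h), sets \(G=g\circ p_h\) at the contour times, and compares \(g(a)+g(b)-2g(a\wedge b)\), with \(a\wedge b\) computed from parent pointers, against \(G(s)+G(t)-2\min G\). Only sample times are compared. The identity uses the monotonicity of g, and the second example shows it failing when that assumption is dropped.

```python
def contour(children, root=0):
    """Depth-first contour sequence of vertices; each edge is traversed twice."""
    seq = [root]

    def visit(v):
        for c in children.get(v, []):
            seq.append(c)
            visit(c)
            seq.append(v)

    visit(root)
    return seq


def meet(parent, a, b):
    """Most recent common ancestor of a and b."""
    line = [a]
    while parent[line[-1]] is not None:
        line.append(parent[line[-1]])
    anc_a = set(line)
    while b not in anc_a:
        b = parent[b]
    return b


def check_proposition_5(children, g, root=0):
    """Compare g(a) + g(b) - 2 g(a ∧ b) with d_G(s, t) at all pairs of contour times."""
    parent = {root: None}
    for v, cs in children.items():
        for c in cs:
            parent[c] = v
    seq = contour(children, root)
    G = [g[v] for v in seq]  # G = g∘p_h at the contour times
    for s in range(len(seq)):
        for t in range(len(seq)):
            a, b = seq[s], seq[t]
            lhs = g[a] + g[b] - 2 * g[meet(parent, a, b)]
            lo, hi = min(s, t), max(s, t)
            rhs = G[s] + G[t] - 2 * min(G[lo:hi + 1])
            if lhs != rhs:
                return False
    return True


children = {0: [1, 2], 1: [3, 4], 2: [5]}
g = {0: 0, 1: 0, 2: 1, 3: 0, 4: 2, 5: 1}           # nondecreasing along ancestral lines
print(contour(children))                           # [0, 1, 3, 1, 4, 1, 0, 2, 5, 2, 0]
print(check_proposition_5(children, g))            # True
g_bad = {0: 0, 1: 2, 2: 0, 3: 0, 4: 0, 5: 0}       # decreases from vertex 1 to vertex 3
print(check_proposition_5(children, g_bad))        # False
```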

Remark

It is known that any compact \({\mathbb R}\)-tree is isometric to \({\mathcal T}_h\) for some function h (see [6, Corollary 1.2]). Thus Proposition 5 provides an alternative proof of Proposition 4.

3 Lévy trees

In the next sections, we will consider the case where \({\mathcal T}\) is the (random) tree coded by a Brownian excursion distributed under the Itô excursion measure \(\mathbf{n}(\cdot )\), and we will identify certain subordinate trees as Lévy trees. In this section, we recall the basic facts about Lévy trees that will be needed later. We refer to [7, 8] for more details.

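We consider a nonnegative function \(\psi \) defined on \([0,\infty )\) of the type

$$\psi (r)=\alpha r+\beta r^2+\int _{(0,\infty )}\pi (\mathrm {d}u)\,\big (e^{-ru}-1+ru\big ),\qquad (1)$$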

where \(\alpha ,\beta \ge 0\), and \(\pi (\mathrm {d}u)\) is a \(\sigma \)-finite measure on \((0,\infty )\) such that \(\int (u\,{\wedge }\,u^2)\,\pi (\mathrm {d}u) <\infty \). With any such function \(\psi \), we can associate a continuous-state branching process (see [12] and references therein), and \(\psi \) is then called the branching mechanism function of this process. Notice that the conditions on \(\psi \) are not the most general ones, because we restrict our attention to the critical or subcritical case. Additionally, we will assume that

$$\int _1^\infty \frac{\mathrm {d}r}{\psi (r)}<\infty .\qquad (2)$$

This condition, which implies that at least one of the two properties \(\beta >0\) or \(\int u\,\pi (\mathrm {d}u)=\infty \) holds, is equivalent to the a.s. extinction of the continuous-state branching process with branching mechanism \(\psi \) [12]. Special cases include \(\psi (r)=r^\gamma \) for \(1<\gamma \le 2\).
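For instance, \(\psi (r)=r^\gamma \) with \(1<\gamma <2\) is of the form (1) with \(\alpha =\beta =0\) and \(\pi (\mathrm {d}u)=c_\gamma u^{-1-\gamma }\mathrm {d}u\), where \(c_\gamma =\gamma (\gamma -1)/\Gamma (2-\gamma )\), because \(\int _0^\infty (e^{-ru}-1+ru)\,u^{-1-\gamma }\mathrm {d}u=\Gamma (-\gamma )\,r^\gamma \) and \(\Gamma (-\gamma )=\Gamma (2-\gamma )/(\gamma (\gamma -1))\). In particular \(\psi _0(r)=\sqrt{8/3}\,r^{3/2}\) corresponds to \(\pi (\mathrm {d}u)=\sqrt{3/(2\pi )}\,u^{-5/2}\mathrm {d}u\). The Python sketch below checks this numerically; the quadrature (substitution \(u=e^x\) and trapezoidal rule) and the function name are our own choices.

```python
import math


def stable_psi_numeric(r, gamma, step=0.01, x_max=60.0):
    """Evaluate the integral in (1) for alpha = beta = 0 and pi(du) = c u^(-1-gamma) du.

    c = gamma (gamma - 1) / Gamma(2 - gamma). Substituting u = e^x turns u^(-1-gamma) du
    into e^(-gamma x) dx; the integrand then decays exponentially at both ends, and we use
    the trapezoidal rule on [-x_max, x_max]. x_max must be increased when gamma is close to 1 or 2.
    """
    c = gamma * (gamma - 1) / math.gamma(2 - gamma)
    n = int(round(2 * x_max / step))
    total = 0.0
    for i in range(n + 1):
        x = -x_max + i * step
        y = r * math.exp(x)                          # y = r u
        if y < 1e-4:                                 # e^(-y) - 1 + y by its Taylor series (avoids cancellation)
            f = y * y / 2 - y ** 3 / 6 + y ** 4 / 24
        else:
            f = math.expm1(-y) + y
        weight = 0.5 if i in (0, n) else 1.0
        total += weight * f * math.exp(-gamma * x)
    return c * total * step


for r in (0.5, 1.0, 2.0):
    print(r, stable_psi_numeric(r, 1.5), r ** 1.5)   # the last two columns agree closely
```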

Under the preceding assumptions, one can make sense of the Lévy tree that describes the genealogy of the continuous-state branching process with branching mechanism \(\psi \). We consider, under a probability measure \(\mathbf {P}\), a spectrally positive Lévy process \(X=(X_t)_{t\ge 0}\) with Laplace exponent \(\psi \), meaning that \(\mathbf {E}[\exp (-\lambda X_t)]=\exp (t\psi (\lambda ))\) for every \(t\ge 0\) and \(\lambda >0\). Writing \(I^s_t:=\inf \{X_r:s\le r\le t\}\) for \(0\le s\le t\), we define the associated height process by setting, for every \(t\ge 0\),

$$H_t=\lim _{\varepsilon \rightarrow 0}\frac{1}{\varepsilon }\int _0^t \mathbf {1}_{\{X_s<I^s_t+\varepsilon \}}\,\mathrm {d}s,$$

where the limit holds in probability under \(\mathbf {P}\). Then [7, Theorem 1.4.3] the process \((H_t)_{t\ge 0}\) has a continuous modification, which we consider from now on. We have \(H_t=0\) if and only if \(X_t=I_t\), where \(I_t=\inf \{X_s:0\le s\le t\}\) is the past minimum process of X.
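This definition has a transparent discrete counterpart, in the spirit of the Galton–Watson approximations of [7]. If a plane tree is listed in depth-first order and \(k_n\) is the number of children of the n-th vertex, its Łukasiewicz path is \(X_0=0\), \(X_{n+1}=X_n+k_n-1\), and the generation of the n-th vertex is \(H_n=\#\{k\in \{0,\ldots ,n-1\}: X_k=\min \{X_j:k\le j\le n\}\}\): the time spent close to the future infimum is replaced by a count of the times at which the future infimum is attained. The Python sketch below is our own illustration of this discrete formula, with hypothetical names.

```python
def lukasiewicz_path(offspring):
    """X_0 = 0 and X_{n+1} = X_n + k_n - 1, where k_n is the number of children of the
    n-th vertex of a plane tree listed in depth-first (lexicographical) order."""
    x = [0]
    for k in offspring:
        x.append(x[-1] + k - 1)
    return x


def discrete_height(x, n):
    """H_n = #{k in {0, ..., n-1} : X_k = min(X_k, ..., X_n)}, the generation of vertex n."""
    return sum(1 for k in range(n) if x[k] == min(x[k:n + 1]))


# Plane tree: root with two children, the first of which has one child.
offspring = [2, 1, 0, 0]              # depth-first order: root, child 1, grandchild, child 2
x = lukasiewicz_path(offspring)       # [0, 1, 1, 0, -1]
print([discrete_height(x, n) for n in range(len(offspring))])  # [0, 1, 2, 1]
```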

Let \(\mathbf {N}\) stand for the (infinite) excursion measure of \(X-I\). Here the normalization of \(\mathbf {N}\) is fixed by saying that the local time at 0 of \(X-I\) is the process \(-I\). Let \(\chi \) stand for the duration of the excursion under \(\mathbf {N}\). The height process H is well defined (and has continuous paths) under \(\mathbf {N}\), and we have \(H_0=H_\chi =0\), \(\mathbf {N}\) a.e. To simplify notation, we will write \(\max H=\max \{H_s:0\le s\le \chi \}\) under \(\mathbf {N}\).

By definition (see [8, Definition 4.1]), the Lévy tree with branching mechanism \(\psi \) (or in short the \(\psi \)-Lévy tree) is the random compact \({\mathbb R}\)-tree \({\mathcal T}_H\) coded by the function \((H_t)_{0\le t\le \chi }\) under \(\mathbf {N}\), or more generally any random tree with the same distribution—note that the distribution of the Lévy tree is an infinite measure. We refer to [7, 8] for several results explaining in which sense the Lévy tree codes the genealogy of the continuous-state branching process with branching mechanism \(\psi \). In the special case \(\psi (r)=r^2{/}2\), X is just a standard linear Brownian motion, \(H_t=2(X_t-I_t)\) is twice a reflected Brownian motion, and the Lévy tree is the tree coded by (twice) a positive Brownian excursion under the (suitably normalized) Itô measure. Conditioning on \(\chi =1\) then yields the Brownian continuum random tree. When \(\psi (r)=r^\gamma \) with \(1<\gamma <2\), one gets the stable tree with index \(\gamma \).

The distribution of the height of a Lévy tree is given as follows. For every \(h>0\),

$$\mathbf {N}\big (\mathcal {H}({\mathcal T}_H)>h\big )=\mathbf {N}(\max H>h)=v(h),$$

where the function \(v:(0,\infty )\rightarrow (0,\infty )\) is determined by

$$\int _{v(h)}^\infty \frac{\mathrm {d}r}{\psi (r)}=h.$$
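For the stable mechanism \(\psi (r)=c\,r^\gamma \) the equation for v can be solved explicitly: \(\int _v^\infty \mathrm {d}r/(c\,r^\gamma )=v^{1-\gamma }/(c(\gamma -1))\), so \(v(h)=(c(\gamma -1)h)^{-1/(\gamma -1)}\). For \(\psi _0(r)=\sqrt{8/3}\,r^{3/2}\) this gives \(v(h)=3/(2h^2)\), which is consistent with the classical formula for the \({\mathbb N}_0\)-measure of Brownian snake paths whose range reaches level h, as expected from Theorem 1. The Python sketch below (our own, with hypothetical names) solves the equation numerically for a general \(\psi \), assuming \(r/\psi (r)\) decays exponentially in \(\log r\), and compares with the closed form.

```python
import math


def tail_integral(psi, v, x_max=80.0, step=0.02):
    """Approximate the integral of 1/psi(r) over [v, infinity) with Simpson's rule.

    Substituting r = v e^x gives the integral of v e^x / psi(v e^x) over [0, infinity),
    truncated at x_max.
    """
    n = int(round(x_max / step))
    n += n % 2                                   # Simpson's rule needs an even number of steps
    total = 0.0
    for i in range(n + 1):
        r = v * math.exp(i * step)
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * r / psi(r)
    return total * step / 3


def v_of_h(psi, h, lo=1e-8, hi=1e8, iters=60):
    """Solve int_v^infinity dr / psi(r) = h for v by bisection on a log scale."""
    for _ in range(iters):
        mid = math.sqrt(lo * hi)
        if tail_integral(psi, mid) > h:
            lo = mid                             # integral too large: v must be larger
        else:
            hi = mid
    return math.sqrt(lo * hi)


def psi0(r):
    return math.sqrt(8 / 3) * r ** 1.5


for h in (0.5, 1.0, 2.0):
    print(h, v_of_h(psi0, h), 3 / (2 * h ** 2))  # numerical root vs. closed form 3/(2h^2)
```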

Remark

In the preceding considerations, the normalization of the infinite measure \(\mathbf {N}\) is fixed by our choice of the local time at 0 of \(X-I\), and we can recover \(\psi \) from \(\mathbf {N}\) by the formulas for the distribution of \(\mathcal {H}({\mathcal T}_H)\) under \(\mathbf {N}\). What happens if we multiply \(\mathbf {N}\) by a constant \(\lambda >0\)? The tree \({\mathcal T}_H\) under \(\lambda \mathbf {N}\) is still a Lévy tree in the previous sense, but the associated branching mechanism is now \(\widetilde{\psi }(r)=\lambda \psi (r{/}\lambda )\). To see this, consider the Lévy process \(X'_t=\frac{1}{\lambda }X_{\lambda t}\), whose Laplace exponent is \(\widetilde{\psi }\), since \(\mathbf {E}[\exp (-\mu X'_t)]=\exp (\lambda t\,\psi (\mu {/}\lambda ))=\exp (t\widetilde{\psi }(\mu ))\) for \(\mu >0\). It is not hard to verify that the height process corresponding to \(X'\) is \(H'_t=H_{\lambda t}\). Furthermore, if \(\mathbf {N}'\) is the excursion measure of \(X'\) above its past minimum process, one also checks that the distribution of \((H'_{t{/}\lambda })_{t\ge 0}\) under \(\mathbf {N}'\) is the distribution of \((H_t)_{t\ge 0}\) under \(\lambda \mathbf {N}\). However, the tree coded by \((H'_{t{/}\lambda })_{t\ge 0}\) is the same as the tree coded by \((H'_{t})_{t\ge 0}\). This shows that \({\mathcal T}_H\) under \(\lambda \mathbf {N}\) is a Lévy tree with branching mechanism \(\widetilde{\psi }\). Note that this is consistent with the formula for \(\mathbf {N}(\mathcal {H}({\mathcal T}_H)>h)\).
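The consistency with the height distribution can be made explicit in the stable case \(\psi (r)=c\,r^\gamma \): then \(\widetilde{\psi }(r)=\lambda ^{1-\gamma }c\,r^\gamma \), and the closed form \(v(h)=(c(\gamma -1)h)^{-1/(\gamma -1)}\), obtained by integrating \(1/\psi \) explicitly, gives \(v_{\widetilde{\psi }}(h)=\lambda \,v_\psi (h)\), which is the height tail of \({\mathcal T}_H\) under \(\lambda \mathbf {N}\). A short Python check of this identity (our own, with hypothetical names):

```python
import math


def v_stable(c, gamma, h):
    """v(h) for psi(r) = c r^gamma: int_v^inf dr/(c r^gamma) = h gives (c(gamma-1)h)^(-1/(gamma-1))."""
    return (c * (gamma - 1) * h) ** (-1.0 / (gamma - 1))


def rescaled_constant(c, gamma, lam):
    """Constant of psi~(r) = lam psi(r / lam) = lam^(1-gamma) c r^gamma."""
    return lam ** (1 - gamma) * c


c, gamma, h = math.sqrt(8 / 3), 1.5, 1.3
for lam in (0.5, 2.0, 10.0):
    lhs = v_stable(rescaled_constant(c, gamma, lam), gamma, h)  # height tail computed from psi~
    rhs = lam * v_stable(c, gamma, h)                           # lam times the height tail under N
    print(lam, lhs, rhs)
```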

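We now state two results that will be important for our purposes. We first mention that, for every \(h\ge 0\), one can define under \(\mathbf {P}\) a local time process of H at level h, which is denoted by \((L^h_t)_{t\ge 0}\) and is such that the following approximation holds for every \(t>0\),

$$\lim _{\varepsilon \rightarrow 0}\mathbf {E}\Big [\sup _{0\le s\le t}\Big |\frac{1}{\varepsilon }\int _0^s \mathbf {1}_{\{h<H_r\le h+\varepsilon \}}\,\mathrm {d}r-L^h_s\Big |\Big ]=0,$$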

and the latter convergence is uniform in h (see [7, Proposition 1.3.3]). When \(h=0\) we have simply \(L^0_t=-I_t\). The definition of \((L^h_t)_{t\ge 0}\) also makes sense under \(\mathbf {N}\), with a similar approximation.

We fix \(h>0\), and let \((u_j,v_j)_{j\in J}\) be the collection of all excursion intervals of H above level h: these are all intervals (u, v), with \(0\le u<v\), such that \(H_u=H_v=h\) and \(H_r>h\) for every \(r\in (u,v)\). For each such excursion interval \((u_j,v_j)\), we define the corresponding excursion by \(H^{(j)}_s=H_{(u_j+s)\wedge v_j} -h\), for every \(s\ge 0\). Then \(H^{(j)}\) is a random element of the space \(C({\mathbb R}_+,{\mathbb R}_+)\) of all continuous functions from \({\mathbb R}_+\) into \({\mathbb R}_+\). We also let \(\mathbf {N}^\circ \) be the \(\sigma \)-finite measure on \(C({\mathbb R}_+,{\mathbb R}_+)\) which is the “law” of \((H_s)_{s\ge 0}\) under \(\mathbf {N}\).
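For a sampled path, excursion intervals above level h are easy to extract. The Python sketch below is our own illustration, with hypothetical names; it assumes an integer-valued path that starts and ends at or below level h. For a tree coded by H, distinct excursions above h correspond to distinct subtrees above level h (two points visited during different excursions have their most recent common ancestor at height at most h), so counting the excursions whose maximum exceeds \(h+\varepsilon \) gives the quantity \(M(h,h+\varepsilon )\) that appears in Proposition 7.

```python
def excursions_above(H, h):
    """Index intervals (u, v) with H[u] <= h, H[v] <= h and H[r] > h for u < r < v."""
    if H[0] > h or H[-1] > h:
        raise ValueError("the path must start and end at or below level h")
    intervals, start = [], None
    for i, value in enumerate(H):
        if value > h and start is None:
            start = i - 1                        # last sample at or below level h
        elif value <= h and start is not None:
            intervals.append((start, i))
            start = None
    return intervals


def tall_subtrees(H, h, eps):
    """Number of excursions above h whose maximum exceeds h + eps."""
    return sum(1 for u, v in excursions_above(H, h) if max(H[u:v + 1]) > h + eps)


H = [0, 1, 2, 1, 2, 3, 2, 1, 0, 1, 2, 1, 0]    # integer-valued contour of a small plane tree
print(excursions_above(H, 1))                  # [(1, 3), (3, 7), (9, 11)]
print(tall_subtrees(H, 1, 1), tall_subtrees(H, 1, 0.5))  # 1 3
```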

Proposition 6

(i) Under the probability measure \(\mathbf {P}\), the point measure

$$\begin{aligned} \sum _{j\in J} \delta _{(L^h_{u_j},H^{(j)})}(\mathrm {d}\ell ,\mathrm {d}\omega ) \end{aligned}$$

is Poisson with intensity \(\mathbf {1}_{[0,\infty )}(\ell )\,\mathrm {d}\ell \,\mathbf {N}^\circ (\mathrm {d}\omega )\).

(ii) Under the probability measure \(\mathbf {N}(\cdot \mid \max H >h)\) and conditionally on \(L^h_\chi \), the point measure

$$\begin{aligned} \sum _{j\in J} \delta _{(L^h_{u_j},H^{(j)})}(\mathrm {d}\ell ,\mathrm {d}\omega ) \end{aligned}$$

is Poisson with intensity \(\mathbf {1}_{[0,L^h_\chi ]}(\ell )\,\mathrm {d}\ell \,\mathbf {N}^\circ (\mathrm {d}\omega )\).

See [8, Proposition 3.1, Corollary 3.2] for a slightly more precise version of this proposition. It follows from the preceding proposition that Lévy trees satisfy a branching property analogous to the classical branching property of Galton–Watson trees. To state this property, we introduce some notation. If \(({\mathcal T},d)\) is a (deterministic) compact \({\mathbb R}\)-tree rooted at \(\rho \), and \(0<h< \mathcal {H}({\mathcal T})\), we can consider the subtrees of \({\mathcal T}\) above level h. Here, a subtree above level h is just the closure of a connected component of \(\{a\in {\mathcal T}:d(\rho ,a)> h\}\). Such a subtree is itself viewed as a rooted \({\mathbb R}\)-tree (the root is obviously the unique point at height h in the subtree).

Proposition 7

Let \({\mathcal T}\) be a random compact \({\mathbb R}\)-tree defined under an infinite measure \(\mathcal {N}\), such that \(\mathcal {N}(\mathcal {H}({\mathcal T})=0)=0\) and \(0<\mathcal {N}(\mathcal {H}({\mathcal T})>h)<\infty \) for every \(h>0\). For every \(h,\varepsilon >0\), write \(M(h,h+\varepsilon )\) for the number of subtrees of \({\mathcal T}\) above level h with height greater than \(\varepsilon \).

(i) Suppose that \({\mathcal T}\) is a Lévy tree. Then, for every \(h,\varepsilon >0\), for every integer \(p\ge 1\), the distribution under \(\mathcal {N}(\cdot \mid M(h,h+\varepsilon )=p)\) of the unordered collection formed by the p subtrees of \({\mathcal T}\) above level h with height greater than \(\varepsilon \) is the same as that of the unordered collection of p independent copies of \({\mathcal T}\) under \(\mathcal {N}(\cdot \mid \mathcal {H}({\mathcal T})>\varepsilon )\).

(ii) Conversely, if the property stated in (i) holds, then \({\mathcal T}\) is a Lévy tree.

The property stated in (i) is called the branching property. The fact that it holds for Lévy trees is a straightforward consequence of Proposition 6 (ii). For the converse, we refer to [27, Theorem 1.1]. Note that the branching property remains valid if we multiply the underlying measure \(\mathcal {N}\) by a positive constant, which is consistent with the remark above.
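For a tree coded by a continuous function g (with g vanishing at both endpoints), the subtrees above level h with height greater than \(\varepsilon \) correspond to the excursions of g above h whose maximum exceeds \(h+\varepsilon \); this is the correspondence used for \(\widetilde{\mathcal T}_\zeta \) in the proof of Theorem 1 below. As a rough illustration only, the following Python sketch counts such excursions for a function sampled on a finite grid, which gives a discrete stand-in for \(M(h,h+\varepsilon )\). The function name and the sampling are our own assumptions, and excursions occurring between grid points are missed.

```python
def count_excursions_above(g, h, eps):
    """Count maximal runs of samples with g > h whose maximum exceeds h + eps.

    g is a list of samples of a continuous coding function. Each such run is a
    discrete stand-in for an excursion of g above level h, i.e. for a subtree of
    the coded tree above level h; its maximum minus h approximates the height.
    """
    count = 0
    in_run = False
    peak = float("-inf")
    for x in g:
        if x > h:
            # inside an excursion above h: track its maximum
            peak = x if not in_run else max(peak, x)
            in_run = True
        else:
            # the current excursion (if any) has just ended
            if in_run and peak > h + eps:
                count += 1
            in_run = False
    if in_run and peak > h + eps:  # excursion still open at the last sample
        count += 1
    return count
```

For instance, with samples `[0, 1, 3, 1, 0.5, 2.5, 0.5, 1.2, 0]`, level `h=1` and `eps=1`, two of the three excursions above 1 are counted.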

To conclude this section, let us briefly comment on points of infinite multiplicity of the Lévy tree \({\mathcal T}_H\). The multiplicity of a point a of \({\mathcal T}_H\) is the number of connected components of \({\mathcal T}_H\backslash \{a\}\), and a is called a leaf if it has multiplicity one. Suppose that there is no quadratic part in \(\psi \), meaning that the constant \(\beta \) in (1) is 0 (note that condition (2) then implies that \(\pi \) has infinite mass). Then, by [8, Theorem 4.6], all points of \({\mathcal T}_H\) have multiplicity 1, 2 or \(\infty \). The set of all points of infinite multiplicity is a countable dense subset of \({\mathcal T}_H\), and these points are in one-to-one correspondence with local minima of H, or with jump times of X. More precisely, let a be a point of infinite multiplicity of \({\mathcal T}_H\). Then, if \(s_1=\min p_H^{-1}(a)\) and \(s_2=\max p_H^{-1}(a)\), we have \(s_1<s_2\), \(H_{s_1}=H_{s_2}=\min \{H_s:s_1\le s\le s_2\}\), and \(p_H^{-1}(a)=\{s\in [s_1,s_2]: H_s= H_{s_1}\}\) is a Cantor set contained in \([s_1,s_2]\). In terms of the Lévy process X, \(s_1\) is a jump time of X and \(s_2=\inf \{s\ge s_1: X_s\le X_{s_1-}\}\), and \(p_H^{-1}(a)\) consists exactly of those \(s\in [s_1,s_2]\) such that \(\inf \{X_r:r\in [s_1,s]\}=X_s\). Furthermore, if for every \(r\in [0,\Delta X_{s_1}]\) we set \(\eta _r=\inf \{s\ge s_1: X_{s}<X_{s_1}-r\}\), the points of \(p_H^{-1}(a)\) are all of the form \(\eta _r\) or \(\eta _{r-}\). The quantity \(\Delta X_{s_1}\) is the “weight” of the point of infinite multiplicity a. See [8] for more details.

4 Subordination by the Brownian snake maximum

In this section, we prove Theorem 1. We start with a brief presentation of the Brownian snake. We refer to [14, Chapter IV] for more details.

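We let \(\mathcal {W}\) be the space of all finite paths in \({\mathbb R}\). Here a finite path is simply a continuous mapping \(\mathrm {w}:[0,\zeta ]\longrightarrow {\mathbb R}\), where \(\zeta =\zeta _{(\mathrm {w})}\) is a nonnegative real number called the lifetime of \(\mathrm {w}\). The set \(\mathcal {W}\) is a Polish space when equipped with the distance

$$\begin{aligned} d(\mathrm {w},\mathrm {w}')=|\zeta _{(\mathrm {w})}-\zeta _{(\mathrm {w}')}|+\sup _{t\ge 0}\big |\mathrm {w}(t\wedge \zeta _{(\mathrm {w})})-\mathrm {w}'(t\wedge \zeta _{(\mathrm {w}')})\big |. \end{aligned}$$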

The endpoint (or tip) of the path \(\mathrm {w}\) is denoted by \(\widehat{\mathrm {w}}=\mathrm {w}(\zeta _{(\mathrm {w})})\). For every \(x\in {\mathbb R}\), we set \(\mathcal {W}_x=\{\mathrm {w}\in \mathcal {W}:\mathrm {w}(0)=x\}\). We also identify the trivial path of \(\mathcal {W}_x\) with zero lifetime with the point x.
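In the usual convention for the Brownian snake, which we assume here, the distance on \(\mathcal {W}\) is \(d(\mathrm {w},\mathrm {w}')=|\zeta _{(\mathrm {w})}-\zeta _{(\mathrm {w}')}|+\sup _{t\ge 0}|\mathrm {w}(t\wedge \zeta _{(\mathrm {w})})-\mathrm {w}'(t\wedge \zeta _{(\mathrm {w}')})|\): each path is frozen at its tip after its lifetime. A minimal Python sketch of this formula, for paths sampled on a common grid of mesh `dt` (a discretization chosen only for illustration; the function name is hypothetical), is:

```python
def snake_path_distance(w1, w2, dt):
    """Approximate d(w, w') for two paths sampled on a common grid of mesh dt.

    w1[i] (resp. w2[i]) is the value of the path at time i * dt, so the
    lifetime is (len - 1) * dt. After its lifetime, each path is frozen at its
    tip, which implements w(t ^ zeta).
    """
    zeta1 = (len(w1) - 1) * dt
    zeta2 = (len(w2) - 1) * dt
    sup = 0.0
    for i in range(max(len(w1), len(w2))):
        a = w1[min(i, len(w1) - 1)]  # w(t ^ zeta) at t = i * dt
        b = w2[min(i, len(w2) - 1)]  # w'(t ^ zeta') at t = i * dt
        sup = max(sup, abs(a - b))
    return abs(zeta1 - zeta2) + sup
```

For example, `snake_path_distance([0, 1, 2], [0, 1], 0.5)` adds the lifetime gap 0.5 to the uniform gap 1 between the frozen paths.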

The standard (one-dimensional) Brownian snake with initial point x is the continuous Markov process \((W_s)_{s\ge 0}\) taking values in \(\mathcal {W}_x\), whose distribution is characterized by the following properties:

(a) The process \((\zeta _{(W_s)})_{s\ge 0}\) is a reflected Brownian motion in \({\mathbb R}_+\) started from 0. To simplify notation, we write \(\zeta _s=\zeta _{(W_s)}\) for every \(s\ge 0\).

(b) Conditionally on \((\zeta _{s})_{s\ge 0}\), the process \((W_s)_{s\ge 0}\) is time-inhomogeneous Markov, and its transition kernels are specified as follows. If \(0\le s\le s'\),

- \(W_{s'}(t)=W_{s}(t)\) for every \(t\le m_\zeta (s,s'):=\min \{\zeta _r:s\le r\le s'\}\);
- the random path \((W_{s'}(m_\zeta (s,s')+t)-W_{s'}(m_\zeta (s,s')))_{0\le t\le \zeta _{s'}- m_\zeta (s,s')}\) is independent of \(W_s\) and distributed as a real Brownian motion started at 0 and stopped at time \(\zeta _{s'}- m_\zeta (s,s')\).

Informally, the value \(W_s\) of the Brownian snake at time s is a one-dimensional Brownian path started from x, with lifetime \(\zeta _s\). As s varies, the lifetime \(\zeta _s\) evolves like reflected Brownian motion in \({\mathbb R}_+\). When \(\zeta _s\) decreases, the path is erased from its tip, and when \(\zeta _s\) increases, the path is extended by adding “little pieces” of Brownian paths at its tip.
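The informal description above has a simple discrete analog, which we sketch here under our own assumptions: the lifetime is replaced by a lattice path with steps \(\pm 1\) (for instance a Dyck path), a step up appends a Gaussian increment of variance `dt` at the tip, and a step down erases the tip. This is only a caricature of the Brownian snake, convenient for experimenting with the property \(W_{s'}(t)=W_s(t)\) for \(t\le m_\zeta (s,s')\); the name `discrete_snake` is hypothetical.

```python
import math
import random


def discrete_snake(lifetimes, dt, x=0.0, rng=None):
    """Snapshots W_0, W_1, ... of a discrete analog of the Brownian snake.

    lifetimes: integers starting at 0, consecutive values differing by +1 or -1.
    The k-th snapshot is a list of lifetimes[k] + 1 samples (grid mesh dt)
    of a path started at x.
    """
    if not lifetimes or lifetimes[0] != 0:
        raise ValueError("lifetimes must start at 0")
    rng = rng if rng is not None else random.Random()
    path = [x]
    snapshots = [list(path)]
    for prev, cur in zip(lifetimes, lifetimes[1:]):
        if cur < 0:
            raise ValueError("lifetimes must be nonnegative")
        if cur == prev + 1:
            # lifetime increases: extend the path at its tip by a Gaussian step
            path.append(path[-1] + rng.gauss(0.0, math.sqrt(dt)))
        elif cur == prev - 1:
            # lifetime decreases: erase the path from its tip
            path.pop()
        else:
            raise ValueError("lifetime steps must be +1 or -1")
        snapshots.append(list(path))
    return snapshots
```

The tips \(\widehat{W}\) and the maxima \(\overline{W}\) of the snapshots are then `snaps[k][-1]` and `max(snaps[k])`.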

For every \(x\in {\mathbb R}\), we write \({\mathbb P}_x\) for the probability measure under which \(W_0=x\), and \({\mathbb N}_x\) for the (infinite) excursion measure of W away from x. Also \(\sigma :=\sup \{s>0: \zeta _s> 0\}\) stands for the duration of the excursion under \({\mathbb N}_x\). Under \({\mathbb N}_x\), \((\zeta _s)_{s\ge 0}\) is distributed according to the Itô excursion measure \(\mathbf{n}(\cdot )\), and the normalization is fixed by the formula

$$\begin{aligned} {\mathbb N}_x\Big (\sup _{s\ge 0}\zeta _s>\varepsilon \Big )=\frac{1}{2\varepsilon },\qquad \varepsilon >0. \end{aligned}$$

The following property of the Brownian snake will be used in several places below. Recall that \(\widehat{W}_s=W_s(\zeta _s)\) is the tip of the path \(W_s\). We say that \(r\in [0,\sigma )\) is a time of right increase of \(s\mapsto \zeta _s\), resp. of \(s\mapsto \widehat{W}_s\), if there exists \(\varepsilon \in (0,\sigma -r]\) such that \(\zeta _u\ge \zeta _r\), resp. \(\widehat{W}_u\ge \widehat{W}_r\), for every \(u\in [r,r+\varepsilon ]\). Times of left increase are defined similarly. Then, according to [19, Lemma 3.2], \({\mathbb N}_x\) a.e., no time \(r\in (0,\sigma )\) can be simultaneously a time of (left or right) increase of \(s\mapsto \zeta _s\) and a time of (left or right) increase of \(s\mapsto \widehat{W}_s\).

Let us fix \(x=0\) and argue under \({\mathbb N}_0\). As previously, we write \({\mathcal T}_\zeta \) for the (random) tree coded by the function \((\zeta _s)_{0\le s\le \sigma }\), \(p_\zeta :[0,\sigma ]\longrightarrow {\mathcal T}_\zeta \) for the canonical projection, and \(\rho _\zeta =p_\zeta (0)\) for the root of \({\mathcal T}_\zeta \). Properties of the Brownian snake show that the condition \(p_\zeta (s)=p_\zeta (s')\) implies \(W_s=W_{s'}\), and thus we can define \(W_a\) for every \(a\in {\mathcal T}_\zeta \) by setting \(W_a=W_s\) if \(a=p_\zeta (s)\). We note that, if \(a=p_\zeta (s)\) and \(t\in [0,\zeta _s]\), \(W_s(t)\) coincides with \(\widehat{W}_b\) where b is the unique point of \(\llbracket \rho _\zeta ,a\rrbracket \) at distance t from \(\rho _\zeta \). For every \(\mathrm {w}\in \mathcal {W}\), set

$$\begin{aligned} \overline{\mathrm {w}}:=\max \{\mathrm {w}(t):0\le t\le \zeta _{(\mathrm {w})}\}, \end{aligned}$$

and write \(\overline{W}_s:=\overline{W_s}\) for \(s\in [0,\sigma ]\), and \(\overline{W}_a:=\overline{W}_s\) if \(a=p_\zeta (s)\).

Then, the function \(a\mapsto \overline{W}_a\) is continuous and nondecreasing on \({\mathcal T}_\zeta \) (if \(a=p_\zeta (s)\) and \(b=p_\zeta (s')\), the condition \(a\prec b\) implies that \(\zeta _s\le \zeta _{s'}\) and that \(W_a\) is the restriction of \(W_b\) to the interval \([0,\zeta _s]\), so that obviously \(\overline{W}_a\le \overline{W}_b\)). As in Theorem 1, we write \(\widetilde{\mathcal T}_\zeta \) for the subordinate tree of \({\mathcal T}_\zeta \) with respect to the function \(a\mapsto \overline{W}_a\).

Proof of Theorem 1

We first verify that the branching property stated in Proposition 7 holds for \(\widetilde{\mathcal T}_\zeta \) under \({\mathbb N}_0\), and to this end we rely on the special Markov property of the Brownian snake.

Let \(h>0\), and, for every \(\mathrm {w}\in \mathcal {W}\), set \(\tau _h(\mathrm {w})=\inf \{t\in [0,\zeta _{(\mathrm {w})}]:\mathrm {w}(t)\ge h\}\). Let \((a_i,b_i)_{i\in I}\) be the connected components of the open set \(\{s\ge 0: \tau _h(W_s)<\zeta _s\}\). For every such connected component \((a_i,b_i)\), for every \(s\in [a_i,b_i]\), the path \(W_s\) coincides with \(W_{a_i}=W_{b_i}\) up to time \(\tau _h(W_{a_i})=\zeta _{a_i}=\zeta _{b_i}=\tau _h(W_{b_i})\) (these assertions are straightforward consequences of the properties of the Brownian snake, and we omit the details). We then set, for every \(s\ge 0\),

$$\begin{aligned} W^{(i)}_s(t):=W_{(a_i+s)\wedge b_i}(\zeta _{a_i}+t),\qquad 0\le t\le \zeta _{(a_i+s)\wedge b_i}-\zeta _{a_i}. \end{aligned}$$

We view \(W^{(i)}\) as a random element of the space of all continuous functions from \({\mathbb R}_+\) into \(\mathcal {W}_h\), and the \(W^{(i)}\)’s are called the excursions of W outside the domain \((-\infty ,h)\) (see the appendix below for further details in a more general setting).

By a compactness argument, only finitely many of the excursions \(W^{(i)}\) hit \((h+\varepsilon ,\infty )\). Let \(M_{h,\varepsilon }\) be the number of these excursions. It follows from Corollary 22 in the appendix that, for every \(p\ge 1\), conditionally on \(\{M_{h,\varepsilon }=p\}\), the unordered collection formed by the excursions of W outside \((-\infty ,h)\) that hit \((h+\varepsilon ,\infty )\) is distributed as the unordered collection of p independent copies of W under \({\mathbb N}_h(\cdot \mid \sup \{\widehat{W}_s:s\ge 0\} > h+\varepsilon )\). On the other hand, noting that \(\widetilde{\mathcal T}_\zeta \) is the tree coded by the function \([0,\sigma ]\ni s\mapsto \overline{W}_s\) (by Proposition 5), we also see that subtrees of \(\widetilde{\mathcal T}_\zeta \) above level h with height greater than \(\varepsilon \) are in one-to-one correspondence with excursions of W outside \((-\infty ,h)\) that hit \((h+\varepsilon ,\infty )\), and if a subtree \(\widetilde{\mathcal T}^{(i)}\) corresponds to an excursion \(W^{(i)}\), \(\widetilde{\mathcal T}^{(i)}\) is obtained from the excursion \(W^{(i)}\) (shifted so that it starts from 0) by exactly the same procedure that allows us to construct \(\widetilde{\mathcal T}_\zeta \) from W under \({\mathbb N}_0\): To be specific, \(\widetilde{\mathcal T}^{(i)}\) is coded by the function \(s\mapsto \overline{W}^{(i)}_s-h\) just as \(\widetilde{\mathcal T}_\zeta \) is coded by \(s\mapsto \overline{W}_s\). The preceding considerations show that \(\widetilde{\mathcal T}_\zeta \) satisfies the branching property, and therefore is a Lévy tree.

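To get the formula for \(\psi _0\), we note that the distribution of the height of \(\widetilde{\mathcal T}_\zeta \) is given by

$$\begin{aligned} {\mathbb N}_0\big (\mathcal {H}(\widetilde{\mathcal T}_\zeta )>r\big )={\mathbb N}_0\Big (\max _{0\le s\le \sigma }\overline{W}_s>r\Big )={\mathbb N}_0\Big (\max _{0\le s\le \sigma }\widehat{W}_s>r\Big )=\frac{3}{2r^2},\qquad r>0, \end{aligned}$$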

where the last equality can be found in [14, Section VI.1]. Since we also know that the function \(v(r)={\mathbb N}_0(\mathcal {H}(\widetilde{\mathcal T}_\zeta )>r)\) solves \(v'(r)=-\psi _0(v(r))\), the formula for \(\psi _0\) follows. \(\square \)
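The last step of this proof can be checked by hand. Using the value \({\mathbb N}_0(\max \widehat{W}_s>r)=3/(2r^2)\) quoted from [14, Section VI.1], we have \(v(r)=3/(2r^2)\) and \(v'(r)=-3/r^3\); since \(r=(3/(2v))^{1/2}\), this gives \(3/r^3=3\,(2v/3)^{3/2}=\sqrt{8/3}\,v^{3/2}\), so that \(\psi _0(u)=\sqrt{8/3}\,u^{3/2}\). The Python snippet below is only a numerical sanity check of this computation (the function names are ours): it compares a central finite-difference approximation of \(v'\) with \(-\psi _0(v)\).

```python
import math


def psi0(u):
    """Candidate branching mechanism psi_0(u) = sqrt(8/3) * u^(3/2)."""
    return math.sqrt(8.0 / 3.0) * u ** 1.5


def v(r):
    """v(r) = 3 / (2 r^2), the tail of the height of the subordinate tree."""
    return 3.0 / (2.0 * r ** 2)


def ode_residual(r, h=1e-6):
    """v'(r) + psi_0(v(r)), with v' estimated by a central difference."""
    dv = (v(r + h) - v(r - h)) / (2.0 * h)
    return dv + psi0(v(r))
```

The residual is of the order of rounding error for moderate values of r, as expected if \(v'=-\psi _0(v)\) holds exactly.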

5 Approximating a Lévy tree by embedded Galton–Watson trees

In this section, we come back to the general setting of Sect. 3. Our goal is to prove that the Lévy tree \({\mathcal T}_H\) is (under the probability measure \(\mathbf {N}(\cdot \mid \mathcal {H}({\mathcal T}_H)>h)\) for some \(h>0\)) the almost sure limit of a sequence of embedded Galton–Watson trees, and that this limit is consistent with the order structure of the Lévy tree. We refer to [15] for basic facts about Galton–Watson trees. A key property for us is the fact that Galton–Watson trees are rooted ordered (discrete) trees, also called plane trees, so that there is a lexicographical ordering on vertices.

In what follows, we argue under the probability measure \(\mathbf {P}\). Recall that X is under \(\mathbf {P}\) a Lévy process with Laplace exponent \(\psi \), and that H is the associated height process. We fix an integer \(n\ge 1\), and, for every integer \(j\ge 0\), we consider the sequence of all excursions of H above level \(j\,2^{-n}\) that hit level \((j+1)2^{-n}\). We let

$$\begin{aligned} \alpha ^n_0<\alpha ^n_1<\alpha ^n_2<\cdots \end{aligned}$$

be the ordered sequence consisting of all the initial times of these excursions, for all values of the integer \(j\ge 0\) (so, \(\alpha ^n_0\) corresponds to the beginning of an excursion of H above 0 that hits \(2^{-n}\), \(\alpha ^n_1\) may be either the beginning of an excursion of H above 0 that hits \(2^{-n}\) or the beginning of an excursion of H above \(2^{-n}\) that hits \(2\times 2^{-n}\), and so on). For every \(j\ge 0\), we also let \(\beta ^n_j\) be the terminal time of the excursion starting at time \(\alpha ^n_j\).

We then set, for every integer \(k\ge 0\),

$$\begin{aligned} H^n_k:=2^n\,H_{\alpha ^n_k}. \end{aligned}$$
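To make the construction of \((\alpha ^n_k)\) and \((H^n_k)\) concrete, here is a rough Python sketch that applies it to a function sampled on a grid rather than to the height process itself. The grid, the parameter `delta` (playing the role of \(2^{-n}\)) and the name `embedded_height_sequence` are assumptions for illustration: an excursion above \(j\delta \) is approximated by a maximal run of samples exceeding \(j\delta \), and its initial time by the sample just before the run. Ties at the same sample are broken by increasing level, consistent with the continuous case, where an excursion above \(j\delta \) starts before the excursions above \((j+1)\delta \) that it contains.

```python
def embedded_height_sequence(h, delta):
    """Approximate (H^n_k) from samples h[0..N] of a nonnegative continuous function.

    For each j >= 0, every maximal run of samples with h > j * delta whose
    maximum is >= (j + 1) * delta is treated as an excursion above j * delta
    that hits (j + 1) * delta. Returns the levels j ordered by (initial index, j).
    """
    top = max(h)
    starts = []  # pairs (approximate initial index, level j)
    j = 0
    while (j + 1) * delta <= top:
        level = j * delta
        i = 0
        while i < len(h):
            if h[i] > level:
                start, peak = i, h[i]
                while i < len(h) and h[i] > level:  # scan the whole run
                    peak = max(peak, h[i])
                    i += 1
                if peak >= (j + 1) * delta:
                    starts.append((max(start - 1, 0), j))
            else:
                i += 1
        j += 1
    starts.sort()
    return [level_index for _, level_index in starts]
```

On `[0, 1.5, 2.5, 1.5, 0.5, 2.2, 0.5, 0]` with `delta=1`, the sketch finds one excursion above 0 and two above 1, so the discrete height sequence is that of a root with two children.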

Proposition 8

The process \((H^n_k)_{k\ge 0}\) is the discrete height process of a sequence of independent Galton–Watson trees with the same offspring distribution \(\mu _n\).

Recall that the discrete height process of a sequence of Galton–Watson trees gives the generation of the successive vertices in the trees, assuming that these vertices are listed in lexicographical order in each tree and one tree after another. See [15] or [7, Section 0.2]. The (finite) height sequence of a single tree is defined analogously.
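As a small illustration of this definition, the sketch below computes the height sequence of a plane tree. It uses a representation of our own choosing, in which a vertex is the Python list of its children ordered from left to right; the lexicographical order is then the depth-first (preorder) traversal, and the height process of a sequence of trees is obtained by concatenation. The function names are hypothetical.

```python
def height_sequence(tree, depth=0):
    """Generations of the vertices of a plane tree, in lexicographical order.

    A vertex is represented by the list of its children, from left to right.
    """
    seq = [depth]
    for child in tree:  # children visited from left to right
        seq.extend(height_sequence(child, depth + 1))
    return seq


def forest_height_process(trees):
    """Height process of a finite sequence of plane trees, one tree after another."""
    out = []
    for t in trees:
        out.extend(height_sequence(t))
    return out
```

For instance, a root whose first child has two children and whose second child is a leaf is encoded as `[[[], []], []]`, with height sequence `[0, 1, 2, 2, 1]`.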

Proof

By construction, \(\alpha ^n_0\) is the initial time of the first excursion of H above 0 that hits \(2^{-n}\). Notice that this excursion is distributed as H under \(\mathbf{N}(\cdot \mid \max H \ge 2^{-n})\). Let \(K\ge 1\) be the (random) integer such that \(\alpha ^n_{K-1}<\beta ^n_0\le \alpha ^n_K\), so that \( \alpha ^n_K\) is the initial time of the second excursion of H above 0 that hits \(2^{-n}\).

With the excursion of H during the interval \([\alpha ^n_0,\beta ^n_0]\), we can associate a (plane) tree \({\mathcal T}^n_0\) constructed as follows. The children of the ancestor correspond to the excursions of H above level \(2^{-n}\), during the time interval \([\alpha ^n_0,\beta ^n_0]\), that hit \(2\times 2^{-n}\) and the order on these children is obviously given by the chronological order. Equivalently, the children of the ancestor correspond to the indices \(i\in \{1,\ldots ,K-1\}\) such that \(H^n_i=1\). Then, assuming that the ancestor has at least one child (equivalently that \(K\ge 2\)), the children of the first child of the ancestor correspond to the excursions of H above level \(2\times 2^{-n}\), during the time interval \([\alpha ^n_1,\beta ^n_1]\), that hit \(3\times 2^{-n}\), and so on. See Fig. 2 for an illustration.

Write \(N^n_0\) for the number of children of the ancestor in \({\mathcal T}^n_0\). It follows from Proposition 6 (ii) that, conditionally on \(N^n_0\), the successive excursions of H above level \(2^{-n}\), during the time interval \([\alpha ^n_0,\beta ^n_0]\), that hit \(2\times 2^{-n}\) are independent and distributed as H under \(\mathbf{N}(\cdot \mid \max H \ge 2^{-n})\) (recall that our definition shifts excursions above a level h so that they start from 0). Recalling the construction of the tree \({\mathcal T}^n_0\), we now obtain that, conditionally on \(N^n_0\), the subtrees of \({\mathcal T}^n_0\) originating from the children of the ancestor are independent and distributed according to \({\mathcal T}^n_0\). This just means that \({\mathcal T}^n_0\) is a Galton–Watson tree, and its offspring distribution \(\mu _n\) is the law under \(\mathbf{N}(\cdot \mid \max H \ge 2^{-n})\) of the number of excursions of H above level \(2^{-n}\) that hit \(2\times 2^{-n}\).

With the second excursion of H above 0 that hits \(2^{-n}\), we can similarly associate a Galton–Watson tree \({\mathcal T}^n_1\) with offspring distribution \(\mu _n\), and so on. The trees \({\mathcal T}^n_0,{\mathcal T}^n_1,\ldots \) are independent as a consequence of the strong Markov property of the Lévy process X. By construction, the process \((H^n_k)_{k\ge 0}\) is the discrete height process of the sequence \({\mathcal T}^n_0,{\mathcal T}^n_1,\ldots \). \(\square \)
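Conversely, a sequence with the structure described in Proposition 8 can be simulated directly from an offspring distribution. The sketch below, with hypothetical names and an arbitrary size cap, generates one Galton–Watson tree lazily in lexicographical order: each vertex draws its number of children when it is visited, and the children are pushed on a stack so that they are visited next. Concatenating independent outputs gives the discrete height process of a sequence of independent trees. It does not compute \(\mu _n\), which depends on \(\psi \); the offspring sampler is left to the user.

```python
import random


def gw_height_sequence(offspring, rng, max_vertices=10_000):
    """Height sequence of one Galton-Watson tree, vertices in lexicographical order.

    offspring(rng) must return a random number of children drawn from the
    offspring distribution. Returns None if the tree has more than max_vertices
    vertices (a truncation guard, since critical trees can be very large).
    """
    heights = []
    stack = [0]  # generations of the vertices still to visit; next vertex on top
    while stack:
        g = stack.pop()
        heights.append(g)
        if len(heights) > max_vertices:
            return None
        k = offspring(rng)  # children of the current vertex, drawn when it is visited
        # The k children all have generation g + 1 and are visited right after
        # the current vertex (depth-first), which is the lexicographical order.
        stack.extend([g + 1] * k)
    return heights


def gw_forest_height_process(offspring, rng, num_trees, max_vertices=10_000):
    """Concatenated height sequences of num_trees independent Galton-Watson trees."""
    out = []
    for _ in range(num_trees):
        hs = gw_height_sequence(offspring, rng, max_vertices)
        if hs is None:
            raise RuntimeError("a tree exceeded max_vertices")
        out.extend(hs)
    return out
```

For example, `gw_height_sequence(lambda r: 2 if r.random() < 0.5 else 0, random.Random(0))` samples a critical binary tree (or returns `None` if it is too large).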

Proposition 9

For every \(n\ge 1\), set \(v_n:= 2^nv(2^{-n})=2^n \mathbf{N}(\max H \ge 2^{-n})\). Then, for every \(A>0\),

in probability under \(\mathbf {P}\).

Proof

Recall that, for every \(h\ge 0\), \((L^h_t)_{t\ge 0}\) denotes the local time of H at level h. It will be convenient to introduce, for every \(n\ge 1\) and every \(j\ge 0\), the increasing process

As a consequence of Proposition 6(i) applied with \(h=j 2^{-n}\), we get that \(\mathcal {N}^n_{(j)}(t)\) is a Poisson process with parameter \(v(2^{-n})=\mathbf{N}(\max H \ge 2^{-n})\).

We claim that, for every \(A>0\),

To see this, first observe that, for every \(s>0\),

and then write

Then, if \(\mathcal {N}(t)\) stands for a standard Poisson process, we have by a classical martingale inequality

It follows that, for every \(j\ge 0\),

and the proof of (4) is completed by noting that \(v(2^{-n})\longrightarrow \infty \) as \(n\rightarrow \infty \), and that

because \(L^{j2^{-n}}_A\) is stochastically dominated by \(L^0_A\) (cf. Definition 1.3.1 in [7]), and we know that \(L^0_A=-I_A\) has exponential moments.

Let \(\ell \ge 1\) be an integer. By summing the convergence in (4) over possible choices of \(0\le j< \ell 2^n\), we also obtain that

On the other hand, we have, for every \(s\ge 0\),

and it follows from (3) that

By combining (5) and (6), we get

where \(v_n=2^nv(2^{-n})\). Since \(\mathbf {P}(\max \{H_s:0\le s\le A\}\ge \ell )\) can be made arbitrarily small by choosing \(\ell \) large, we have obtained that

in probability. Elementary arguments show that this implies

in probability, and therefore also

in probability. This completes the proof. \(\square \)

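In what follows, we will need an analog of the preceding two propositions for the height process under its excursion measure \(\mathbf{N}\). Let us fix \(n\ge 1\). Under the measure \(\mathbf{N}(\cdot \cap \{ \max H\ge 2^{-n}\})\), we define

$$\begin{aligned} 0=\bar{\alpha }^n_0<\bar{\alpha }^n_1<\bar{\alpha }^n_2<\cdots <\bar{\alpha }^n_{\bar{K}_n-1} \end{aligned}$$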

as the ordered sequence consisting of the initial times of all excursions of H above level \(j\,2^{-n}\) that hit level \((j+1)2^{-n}\), for all values of the integer \(j\ge 0\). The analog of Proposition 8 says that, under \(\mathbf{N}(\cdot \mid \max H\ge 2^{-n})\), the finite sequence

$$\begin{aligned} \bar{H}^n_k:=2^n\,H_{\bar{\alpha }^n_k},\qquad 0\le k<\bar{K}_n, \end{aligned}$$

is distributed as the height sequence of a Galton–Watson tree with offspring distribution \(\mu _n\). This is immediate from the fact that an excursion with distribution \(\mathbf{N}(\cdot \mid \{ \max H\ge 2^{-n}\})\) is obtained by taking (under \(\mathbf {P}\)) the first excursion of H with height greater than \(2^{-n}\). By convention, we take \(\bar{\alpha }^n_k=\chi \) and \(\bar{H}^n_k=0\) if \(k\ge \bar{K}_n\).

We next fix a sequence \((n_p)_{p\ge 1}\) such that both (7) and the convergence of Proposition 9 hold \(\mathbf {P}\) a.s. along this sequence, for each \(A>0\). From now on, we consider only values of n belonging to this sequence. We claim that we have then also

To see this, note that it suffices to argue under \(\mathbf{N}(\cdot \mid \max H >\delta )\) for some \(\delta >0\), and then to consider the first excursion (under \(\mathbf {P}\)) of H away from 0 with height greater than \(\delta \). We abuse notation by still writing \(0= \bar{\alpha }^n_0<\bar{\alpha }^n_1<\bar{\alpha }^n_2<\cdots <\bar{\alpha }^n_{\bar{K}_n-1}\) for the finite sequence of times defined as explained above, now relative to this first excursion with height greater than \(\delta \).

We observe that, provided n is large enough so that \(2^{-n}<\delta \), we have

where \(d_n\) is the index such that \(\alpha ^n_{d_n}=:d_{(\delta )}\) is the initial time of the first excursion of H away from 0 with height greater than \(\delta \), and \(r_n\) is the first index \(k>d_n\) such that \(\alpha ^n_k\) does not belong to the interval \([d_{(\delta )},r_{(\delta )}]\) associated with this excursion. Notice that \(\alpha ^n_{r_n}\) decreases to \(r_{(\delta )}\) as \(n\rightarrow \infty \). Our claim (8) then reduces to verifying that

which follows from Proposition 9 and (7), recalling that both these convergences hold a.s. on the sequence of values of n that we consider.

6 The coding function of the subordinate tree as a time-changed height process

In this section, we prove Theorem 2. We consider the Brownian snake under its excursion measure \({\mathbb N}_0\), and we recall the notation \(\overline{W}_s=\max \{W_s(t):0\le t\le \zeta _s\}\), and \(\overline{W}_a=\overline{W}_s\) if \(a=p_\zeta (s)\). As in Theorem 1, we write \(\widetilde{\mathcal T}_\zeta \) for the subordinate tree of the Brownian tree \({\mathcal T}_\zeta \) with respect to the function \(a\mapsto \overline{W}_a\).

Proof of Theorem 2

We set \(W^*=\max \{\overline{W}_s:0\le s\le \sigma \}\). Fix \(n\ge 1\) and argue under the probability measure \({\mathbb N}_0(\cdot \mid W^*\ge 2^{-n})\). We define a discrete plane tree \({\mathcal T}^{(n)}\) which is constructed from \((\overline{W}_s)_{0\le s\le \sigma }\) in exactly the same way as \({\mathcal T}^n_0\) was constructed from the excursion of H during \([\alpha ^n_0,\beta ^n_0]\) in the proof of Proposition 8 (see Fig. 2). In this construction, the children of the root correspond to the excursions of the process \(s\mapsto \overline{W}_s\) above level \(2^{-n}\) that hit \(2\times 2^{-n}\), or equivalently to the excursions of W outside \((-\infty ,2^{-n})\) that hit \(2\times 2^{-n}\) (recall the definition of these excursions from the proof of Theorem 1). The point is that, if (u, v) is the time interval associated with an excursion of W outside \((-\infty ,2^{-n})\), the process \(s\mapsto \overline{W}_s\) remains strictly above \(2^{-n}\) on the whole interval (u, v). As in the previous section, the children of the root are ordered according to the chronological order of the Brownian snake.

By the special Markov property, in the form given in Corollary 21 in the appendix below, conditionally on the number of excursions of W outside \((-\infty ,2^{-n})\) that hit \(2\times 2^{-n}\), these excursions listed in chronological order are independent and distributed according to \({\mathbb N}_0(\cdot \mid W^*\ge 2^{-n})\), modulo the obvious translation by \(-2^{-n}\). This shows that the random plane tree \({\mathcal T}^{(n)}\) satisfies the branching property at the first generation and therefore \({\mathcal T}^{(n)}\) is a Galton–Watson tree.

Let \(\mu _n\) be the offspring distribution found in Proposition 8 in the case where \(\psi (r)=\psi _0(r)\). We claim that \(\mu _n\) is also the offspring distribution of \({\mathcal T}^{(n)}\). To see this, observe that \(\mu _n\) is, by definition, the distribution of the number of points of a \(\psi _0\)-Lévy tree at height \(2^{-n}\) that have descendants at height \(2\times 2^{-n}\) (conditionally on the event that the height of the tree is at least \(2^{-n}\)). Thanks to Theorem 1 and to the fact that \(\widetilde{\mathcal T}_\zeta \) is the tree coded by the function \(s\mapsto \overline{W}_s\), we know that this is the same as the conditional distribution of the number of excursions of \(s\mapsto \overline{W}_s\) above level \(2^{-n}\) that hit \(2\times 2^{-n}\), under \({\mathbb N}_0(\cdot \mid W^*\ge 2^{-n})\).

Let

$$\begin{aligned} \xi ^n_0<\xi ^n_1<\cdots <\xi ^n_{K_n-1} \end{aligned}$$

be the ordered sequence consisting of the initial times of all excursions of \(s\mapsto \overline{W}_s\) above level \(j\,2^{-n}\) that hit level \((j+1)2^{-n}\), for all values of the integer \(j\ge 0\). Note that each such excursion corresponds to a vertex of the tree \({\mathcal T}^{(n)}\), and so \( K_n\) is just the total progeny of \({\mathcal T}^{(n)}\). By convention, we also define \(\xi ^n_{K_n}=\sigma \). Set

$$\begin{aligned} \widetilde{H}^n_k:=2^n\,\overline{W}_{\xi ^n_k},\qquad 0\le k<K_n, \end{aligned}$$

and \(\widetilde{H}^n_k=0\) if \(k\ge K_n\). Then \((\widetilde{H}^n_k,0\le k< K_n)\) is the height sequence of \({\mathcal T}^{(n)}\) (note that the lexicographical ordering on vertices of \({\mathcal T}^{(n)}\) corresponds to the chronological order on the associated excursion initial times). Hence \((\widetilde{H}^n_k)_{k\ge 0}\) has the same distribution as the sequence \((\bar{H}^n_k)_{k\ge 0}\) which was defined at the end of the preceding section from the height process H under \(\mathbf{N}(\cdot \mid \max H \ge 2^{-n})\).

But in fact more is true: the whole collection of the discrete sequences \((\widetilde{H}^n_k)_{k\ge 0}\) for all \(n\ge 1\) has the same distribution under \({\mathbb N}_0\) as the similar collection of sequences \((\bar{H}^n_k)_{k\ge 0}\) constructed from the height process H under \(\mathbf{N}\) (the reason is that, in both constructions, the tree at step n can be obtained from the tree at step \(n+1\) by the deterministic operation consisting in keeping only those vertices at even generation that have at least one child, and viewing that set of vertices as a plane tree in the obvious manner). The convergence (8) now allows us to set, for every \(t\ge 0\),
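The deterministic operation described in the parenthesis above can be written down explicitly. In the following sketch (the nested-list representation, where a vertex is the list of its children from left to right, and the name `coarsen` are our assumptions), a vertex of \({\mathcal T}^{(n+1)}\) at even generation 2j is kept if it has at least one child, and the kept vertices at generation \(2j+2\) below it, listed in lexicographical order, become its children in \({\mathcal T}^{(n)}\).

```python
def coarsen(tree):
    """Tree at step n obtained from the tree at step n + 1.

    Keeps the vertices at even generation that have at least one child; the
    parent of a kept vertex is its (kept) grandparent. The root is assumed to
    have at least one child, as under the conditioning on W* >= 2^{-n}.
    """
    new_children = []
    for child in tree:            # vertices at the next (odd) generation
        for grandchild in child:  # even generation, in lexicographical order
            if grandchild:        # kept only if it has at least one child
                new_children.append(coarsen(grandchild))
    return new_children
```

For instance, a root with a single child having two non-leaf children, `[[[[]], [[]]]]`, is mapped to a root with two leaf children, `[[], []]`.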

and the process \((\widetilde{H}_t)_{t\ge 0}\) is distributed as the height process of the Lévy tree with branching mechanism \(\psi _0(r)=\sqrt{8/3}\,r^{3/2}\). The limit in the preceding display holds uniformly in t, \({\mathbb N}_0\) a.e., provided we argue along the subsequence of values of n introduced at the end of the preceding section. We set \(\widetilde{\chi }=\sup \{s\ge 0:\widetilde{H}_s>0\}\).

We observe that the distribution of \((\widetilde{H}, (\widetilde{H}^n_k)_{n\ge 1,k\ge 0})\) under \({\mathbb N}_0\) is the same as that of \((H, (\bar{H}^n_k)_{n\ge 1,k\ge 0})\) under \(\mathbf {N}\), and so we must have, for every \(n\ge 1\) and \(k\ge 0\),

$$\begin{aligned} \widetilde{H}^n_k=2^n\,\widetilde{H}_{\widetilde{\alpha }^n_k}, \end{aligned}$$

where \(\widetilde{\alpha }^n_0<\widetilde{\alpha }^n_1<\cdots <\widetilde{\alpha }^n_{\widetilde{K}_n-1}\) are the initial times of the excursions of \(s\mapsto \widetilde{H}_s\) above level \(j\,2^{-n}\) that hit level \((j+1)2^{-n}\), for all values of the integer \(j\ge 0\), and \(\widetilde{\alpha }^n_k=\widetilde{\chi }\) if \(k\ge \widetilde{K}_n\). Notice that \(\widetilde{K}_n=K_n\) because the height sequence of the tree \({\mathcal T}^{(n)}\) is \((\widetilde{H}^n_k)_{0\le k\le K_n-1}\). Also, if \(n<m\) and \(k\in \{0,1,\ldots ,K_n-1\}\), \(k'\in \{0,1,\ldots ,K_m-1\}\), the property \(\widetilde{\alpha }^n_k= \widetilde{\alpha }^m_{k'}\) holds if and only if \(\xi ^n_k=\xi ^m_{k'}\): indeed, both properties hold if and only if the vertex with index k in the (lexicographical) ordering of \({\mathcal T}^{(n)}\) coincides with the vertex with index \(k'\) in the ordering of \({\mathcal T}^{(m)}\), modulo the identification of the vertex set of \({\mathcal T}^{(n)}\) as a subset of the vertex set of \({\mathcal T}^{(m)}\), in the way explained above.

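We need to verify that \(\overline{W}_s\) can be written as a time change of \(\widetilde{H}\). As a first step, we notice that, for every \(0\le k< K_n\),

$$\begin{aligned} 2^n\,\overline{W}_{\xi ^n_k}=\widetilde{H}^n_k=2^n\,\widetilde{H}_{\widetilde{\alpha }^n_k}, \end{aligned}$$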

and so \(\overline{W}_{\xi ^n_k}=\widetilde{H}_{\widetilde{\alpha }^n_k}\). This suggests that the process \(\Gamma \) in the statement of the theorem should be such that \(\Gamma _{\xi ^n_k}=\widetilde{\alpha }^n_k\), for every \(k\in \{0,1,\ldots ,K_n-1\}\) and every n.

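At this point, we observe that

$$\begin{aligned} \lim _{n\rightarrow \infty }\,\max _{0\le k<K_n}\big (\widetilde{\alpha }^n_{k+1}-\widetilde{\alpha }^n_k\big )=0. \qquad (9) \end{aligned}$$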

Indeed, if this property fails, a compactness argument gives two times \(u,v\in [0,\widetilde{\chi }]\) with \(u<v\) such that \(t\rightarrow \widetilde{H}_t\) is monotone nonincreasing on [u, v]. To see that this cannot occur, we may replace \(\widetilde{H}\) by the process H constructed from a Lévy process excursion X as explained in Sect. 3. We then note that jumps of X are dense in \([0,\chi ]\), and the strong Markov property shows that, for any jump time s of X, for any \(\varepsilon >0\), we can find \(s',s''\in [s,s+\varepsilon ]\), with \(s''>s'\), such that \(H_{s''}>H_{s'}\) (use formula (20) in [7], or see the comments at the end of Sect. 3).

Let \(s\in [0,\sigma )\) and, for every integer \(n\ge 1\), let \(k_n(s)\in \{0,1,\ldots ,K_n-1\}\) be the unique integer such that \(\xi ^n_{k_n(s)}\le s<\xi ^n_{k_n(s)+1}\). We note that the sequence \(\xi ^n_{k_n(s)}\) is monotone nondecreasing (this is obvious since \((\xi ^n_0,\ldots ,\xi ^n_{K_n})\) is a subset of \((\xi ^{n+1}_0,\ldots ,\xi ^{n+1}_{K_{n+1}})\)). It follows that the sequence \(\widetilde{\alpha }^n_{k_n(s)}\) is also monotone nondecreasing: Indeed, if \(n<m\) and \(k\in \{0,1,\ldots ,K_n-1\}\), \(k'\in \{0,1,\ldots ,K_m-1\}\) are such that \(\xi ^n_k\le \xi ^m_{k'}\), we have automatically \(\widetilde{\alpha }^n_k\le \widetilde{\alpha }^m_{k'}\) since, writing \(\xi ^n_k=\xi ^m_{k^*}\), the fact that \(k^*\le k'\) implies that \(\widetilde{\alpha }^n_k=\widetilde{\alpha }^m_{k^*}\le \widetilde{\alpha }^m_{k'}\).

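We can now set

$$\begin{aligned} \Gamma _s:=\lim _{n\rightarrow \infty }\uparrow \widetilde{\alpha }^n_{k_n(s)}. \qquad (10) \end{aligned}$$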

Note that this limit exists simultaneously for all \(s\in [0,\sigma )\) outside a set of \({\mathbb N}_0\)-measure 0. We also take \(\Gamma _s=\widetilde{\chi }\) for all \(s\ge \sigma \). Clearly \(s\mapsto \Gamma _s\) is nondecreasing and, by construction, the property \(\Gamma _{\xi ^n_k}=\widetilde{\alpha }^n_k\) holds for every \(k\in \{0,1,\ldots ,K_n\}\) and every n, and we have \(\overline{W}_s=\widetilde{H}_{\Gamma _s}\) when s is of the form \(\xi ^n_k\). We also note that \(s\mapsto \Gamma _s\) is continuous as a consequence of property (9). To check right-continuity, observe that, if \(s\in [0,\sigma )\) is fixed, and \(s'\) is such that \(\xi ^n_{k_n(s)}<s'<\xi ^n_{k_n(s)+1}\) then, for every \(m\ge n\), the property \(\xi ^m_{k_m(s')}\le s'<\xi ^n_{k_n(s)+1}\) forces \(\widetilde{\alpha }^m_{k_m(s')}\le \widetilde{\alpha }^n_{k_n(s)+1}\), hence (letting m tend to \(\infty \)) \(\Gamma _{s'}\le \widetilde{\alpha }^n_{k_n(s)+1}\), and use (9) and the definition (10) of \(\Gamma _s\). Left-continuity is derived by a similar argument.

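For \(s\in [0,\sigma )\), set \(s'=\lim \uparrow \xi ^n_{k_n(s)}\) and \(s''=\lim \downarrow \xi ^n_{k_n(s)+1}\). Note that \(s'\le s\le s''\). On one hand, by passing to the limit \(n\rightarrow \infty \) in the equality

$$\begin{aligned} \overline{W}_{\xi ^n_{k_n(s)}}=\widetilde{H}_{\widetilde{\alpha }^n_{k_n(s)}}, \end{aligned}$$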

we obtain that \(\overline{W}_{s'}=\widetilde{H}_{\Gamma _s}\). On the other hand, \(\overline{W}\) must be constant on the interval \([s',s'']\). To see this, we first observe that \(\overline{W}\) must be nonincreasing on \([s',s'']\) (otherwise there would be some n and some \(k\in \{0,1,\ldots ,K_n-1\}\) such that \(\xi ^n_{k_n(s)}<\xi ^n_k <\xi ^n_{k_n(s)+1}\), which is absurd). So we need to verify that there is no nontrivial interval \([s_1,s_2]\) such that \(s\mapsto \overline{W}_s\) is both nonincreasing and nonconstant on \([s_1,s_2]\), and, to prove this, we may replace nonincreasing by nondecreasing thanks to the invariance of \({\mathbb N}_0\) under time-reversal. Argue by contradiction, and suppose that \(s_1<s_2\) are such that the event where \(0<s_1<s_2<\sigma \) and \(s\mapsto \overline{W}_s\) is both nondecreasing and nonconstant on \([s_1,s_2]\) has positive \({\mathbb N}_0\)-measure. We can then find a stopping time T such that, with positive \({\mathbb N}_0\)-measure on the latter event, we have \(s_1<T<s_2\), \(\overline{W}_T=\widehat{W}_T\) and \(\max \{W_T(s):0\le s \le \zeta _T\}\) is attained only at \(\zeta _T\) (take \(T=\inf \{s>s_1: \widehat{W}_s\ge \overline{W}_{s_1}+\delta \}\), with \(\delta >0\) small enough). Using the strong Markov property of the Brownian snake, we then find \(r\in (T,s_2)\) such that \(W_r\) is the restriction of \(W_T\) to \([0,\zeta _T-\varepsilon ]\), for some \(\varepsilon >0\), which implies \(\overline{W}_r<\overline{W}_T\) and contradicts the fact that \(s\mapsto \overline{W}_s\) is nondecreasing on \([s_1,s_2]\).

Finally, since \(\overline{W}\) is constant on \([s',s'']\), we have \(\overline{W}_s=\overline{W}_{s'}=\widetilde{H}_{\Gamma _s}\), which was the desired result. This completes the proof of Theorem 2. \(\square \)

7 Applications to the Brownian map

In this section, we discuss applications of the previous results to the Brownian map. Analogously to [21], we consider a version of the Brownian map with “randomized volume”, which may be constructed under the Brownian snake excursion measure \({\mathbb N}_0\) as follows. Recall that \(({\mathcal T}_\zeta ,d_\zeta )\) stands for the tree coded by \((\zeta _s)_{0\le s\le \sigma }\) and \(p_\zeta \) is the canonical projection from \([0,\sigma ]\) onto \({\mathcal T}_\zeta \). For \(a,b\in {\mathcal T}_\zeta \), the “lexicographical interval” [a, b] stands for the image under \(p_\zeta \) of the smallest interval [s, t] (\(s,t\in [0,\sigma ]\)) such that \(p_\zeta (s)=a\) and \(p_\zeta (t)=b\) (here we make the convention that if \(s>t\) the interval [s, t] is equal to \([s,\sigma ]\cup [0,t]\)).

For every \(a\in {\mathcal T}_\zeta \), we set \(Z_a=\widehat{W}_s\), where s is such that \(p_\zeta (s)=a\). In particular \(Z_{\rho _\zeta }=0\). The random mapping \({\mathcal T}_\zeta \ni a \mapsto Z_a\) is interpreted as Brownian motion indexed by the “Brownian tree” \({\mathcal T}_\zeta \).

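We then define a mapping \(D^\circ :{\mathcal T}_\zeta \times {\mathcal T}_\zeta \longrightarrow {\mathbb R}_+\) by setting

$$\begin{aligned} D^\circ (a,b)=Z_a+Z_b-2\max \Big (\min _{c\in [a,b]}Z_c,\,\min _{c\in [b,a]}Z_c\Big ). \end{aligned}$$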

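For \(a,b\in {\mathcal T}_\zeta \), we set \(a\approx b\) if and only if \(D^\circ (a,b)=0\), or equivalently

$$\begin{aligned} Z_a=Z_b=\max \Big (\min _{c\in [a,b]}Z_c,\,\min _{c\in [b,a]}Z_c\Big ). \end{aligned}$$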

One can verify that if \(a\approx a'\) then \(D^\circ (a,b)=D^\circ (a',b)\) for any \(b\in {\mathcal T}_\zeta \) (the point is that, if \(a\approx a'\) with \(a\not =a'\), then necessarily a and \(a'\) are leaves of \({\mathcal T}_\zeta \), and the reals \(t,t'\in [0,\sigma )\) such that \(p_\zeta (t)=a\) and \(p_\zeta (t')=a'\) are unique, which implies that \([a,b]\subset [a,a']\cup [a',b]\) and \([b,a]\subset [b,a']\cup [a',a]\)).
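As an illustration only, the following sketch evaluates a discrete analogue of \(D^\circ \) directly on contour times, assuming labels `Z` given as a Python list on times \(0,\ldots ,n\) (a toy stand-in for \(s\mapsto \widehat{W}_s\), with equal labels at times visiting the same vertex). The function names are hypothetical. Working with times rather than tree points is harmless here: minimizing over all pairs of representatives of a and b yields the smallest intervals [a, b] and [b, a].

```python
def d_circ(Z, s, t):
    """Discrete analogue of D°(s, t) for labels Z (a list) on times 0..n:
    Z[s] + Z[t] - 2 * max(min of Z over [s, t], min over the cyclic [t, s])."""
    lo, hi = min(s, t), max(s, t)
    inner = min(Z[lo:hi + 1])           # Z over [lo, hi]
    outer = min(Z[hi:] + Z[:lo + 1])    # Z over [hi, n] U [0, lo]
    return Z[s] + Z[t] - 2 * max(inner, outer)


def equivalent(Z, s, t):
    """Discrete analogue of the relation a ≈ b, i.e. D° vanishes."""
    return d_circ(Z, s, t) == 0


# Toy labels on the contour 0,1,2,1,2,1,0 (vertices r, u, v, u, w, u, r);
# times visiting the same vertex carry the same label.
Z = [0, -1, 1, -1, -2, -1, 0]
print(d_circ(Z, 2, 4))       # 3
print(equivalent(Z, 1, 3))   # True: both times visit the vertex u
```

Since both intervals contain s and t, each minimum is at most \(\min (Z_s,Z_t)\), so the output always satisfies \(D^\circ \ge |Z_s-Z_t|\).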

We also set

$$D^*(a,b):=\inf \Big \{\sum _{i=1}^{p}D^\circ (a_{i-1},a_i)\Big \},\qquad a_0=a,\ a_p=b,$$

where the infimum is over all choices of the integer \(p\ge 1\) and of the points \(a_1,\ldots ,a_{p-1}\) of \({\mathcal T}_\zeta \). If \(a\approx a'\) then \(D^*(a,b)=D^*(a',b)\) for any \(b\in {\mathcal T}_\zeta \). Furthermore one can also prove that \(D^*(a,b)=0\) if and only if \(a\approx b\). Since \(D^*\) satisfies the triangle inequality, it follows that \(\approx \) is an equivalence relation on \({\mathcal T}_\zeta \). The Brownian map (with randomized volume) is the quotient space \(\mathbf{m}:={\mathcal T}_\zeta {/}\approx \), which is equipped with the distance induced by the function \(D^*\) (with a slight abuse of notation, we still denote the induced distance by \(D^*\)). We write \(\Pi \) for the canonical projection from \({\mathcal T}_\zeta \) onto \(\mathbf{m}\), and \(\mathbf{p}=\Pi \circ p_\zeta \). We also write \(D^\circ (x,y)=D^\circ (a,b)\) if \(x=\Pi (a)\) and \(y=\Pi (b)\).
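On a finite set of points, the infimum over chains defining \(D^*\) is an all-pairs shortest-path problem. The sketch below, under our own discretization (finitely many contour times and toy labels, with hypothetical helper names), computes it with the Floyd–Warshall algorithm. The continuum \(D^*\) allows chains through arbitrary points of \({\mathcal T}_\zeta \), so this only illustrates the construction.

```python
def d_circ(Z, s, t):
    """Discrete analogue of D° on contour times (Z is a list of labels)."""
    lo, hi = min(s, t), max(s, t)
    inner = min(Z[lo:hi + 1])
    outer = min(Z[hi:] + Z[:lo + 1])
    return Z[s] + Z[t] - 2 * max(inner, outer)


def chain_infimum(D):
    """Infimum of sum D[a_{i-1}][a_i] over chains a_0 = i, ..., a_p = j,
    computed by Floyd-Warshall on a finite matrix of costs."""
    n = len(D)
    d = [row[:] for row in D]
    for k in range(n):                  # allow k as an intermediate point
        for i in range(n):
            for j in range(n):
                if d[i][k] + d[k][j] < d[i][j]:
                    d[i][j] = d[i][k] + d[k][j]
    return d


Z = [0, -1, 1, -1, -2, -1, 0]           # toy labels on contour times 0..6
n = len(Z)
D_circ = [[d_circ(Z, s, t) for t in range(n)] for s in range(n)]
D_star = chain_infimum(D_circ)
print(D_star[4])                        # distances from the label minimizer
```

In this toy example time 4 carries the minimal label, and its row of `D_star` equals `Z[t] - min(Z)`, a discrete counterpart of the simple expression for distances from \(\rho _*\).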

In the usual construction of the Brownian map, one deals with the conditioned measure \({\mathbb N}_0(\cdot \mid \sigma =1)\) instead of \({\mathbb N}_0\), but otherwise the construction is exactly the same and we refer to [16, 17] for more details.

The Brownian map \(\mathbf{m}\) comes with two distinguished points. The first one \(\rho =\mathbf{p}(0)=\Pi (\rho _\zeta )\) corresponds to the root \(\rho _\zeta \) of \({\mathcal T}_\zeta \). The second distinguished point is \(\rho _*=\Pi (a_*)\), where \(a_*\) is the (unique) point of \({\mathcal T}_\zeta \) at which Z attains its minimum:

$$Z_{a_*}=\min _{a\in {\mathcal T}_\zeta }Z_a.$$

We will write \(Z_*=Z_{a_*}\) to simplify notation. The reason for considering \(\rho _*\) is that distances from \(\rho _*\) have a simple expression. For any \(a\in {\mathcal T}_\zeta \),

$$D^*(\rho _*,\Pi (a))=D^\circ (a_*,a)=Z_a-Z_*.\tag{11}$$

The following “cactus bound” [16, Proposition 3.1] also plays an important role. Let \(a,b\in {\mathcal T}_\zeta \), and let \(\gamma :[0,1]\rightarrow \mathbf{m}\) be a continuous path in \(\mathbf{m}\) such that \(\gamma (0)=\Pi (a)\) and \(\gamma (1)=\Pi (b)\). Then,

$$\min _{0\le t\le 1}D^*(\rho _*,\gamma (t))\le \min _{c\in \llbracket a,b\rrbracket }Z_c-Z_*,\tag{12}$$

where we recall that \(\llbracket a,b \rrbracket \) is the geodesic segment between a and b in \({\mathcal T}_\zeta \), not to be confused with the interval [a, b]. In other words, any continuous path from \(\Pi (a)\) to \(\Pi (b)\) must come at least as close to \(\rho _*\) as the (image under \(\Pi \) of the) geodesic segment from a to b in \({\mathcal T}_\zeta \).
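The identity \(D^*(\rho _*,\Pi (a))=Z_a-Z_*\) for distances from \(\rho _*\) can be checked directly from the definitions of \(D^\circ \) and \(D^*\); the short derivation below is our own sketch, not a quotation of [16]. Both [a, b] and [b, a] contain a and b, so each minimum in the definition of \(D^\circ \) is at most \(\min (Z_a,Z_b)\), whereas if one endpoint is \(a_*\) both minima are equal to \(Z_*\):

$$
\begin{aligned}
D^\circ (a,b)&\ge Z_a+Z_b-2\min (Z_a,Z_b)=|Z_a-Z_b|,\\
D^*(a,b)&\ge \inf \Big \{\sum _{i=1}^{p}|Z_{a_{i-1}}-Z_{a_i}|\Big \}\ge |Z_a-Z_b|,\\
D^\circ (a_*,a)&=Z_{a_*}+Z_a-2Z_*=Z_a-Z_*.
\end{aligned}
$$

Hence \(Z_a-Z_*\le D^*(a_*,a)\le D^\circ (a_*,a)=Z_a-Z_*\), and \(D^*(\rho _*,\Pi (a))=D^*(a_*,a)\) by the definition of the induced distance.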

We now introduce the metric net, in the terminology of [21]. For every \(r\ge 0\), we consider the ball B(r) defined by

$$B(r):=\{x\in \mathbf{m}:D^*(\rho _*,x)\le r\}.$$

For \(0\le r<D^*(\rho _*,\rho )=-Z_*\), we define the hull \(B^\bullet (r)\) as the complement of the connected component of \(\mathbf{m}\setminus B(r)\) that contains \(\rho \). Informally, \(B^\bullet (r)\) is obtained from B(r) by “filling in” the holes of B(r) except for the one containing \(\rho \). Write \(\partial B^\bullet (r)\) for the topological boundary of \(B^\bullet (r)\). We define the metric net \(\mathcal {M}\) as the closure in \(\mathbf{m}\) of the union

$$\bigcup _{0\le r<-Z_*}\partial B^\bullet (r).$$

Our goal is to investigate the structure of \(\mathcal {M}\).
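The hull construction is purely topological, so it can be mimicked on a finite graph, which is an assumption of this sketch and not the continuum object: the ball is a set of vertices, the complement is explored from the vertex playing the role of \(\rho \), and whatever is not reached forms the hull. The graph, the choice of vertex 0 for \(\rho _*\) and vertex 5 for \(\rho \), and the helper names are all hypothetical; the discrete "boundary" is taken as the hull vertices adjacent to the explored component.

```python
from collections import deque


def bfs_distances(adj, source):
    """Graph distances from source in an unweighted graph."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for w in adj[v]:
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return dist


def hull_and_boundary(adj, dist, rho, r):
    """Discrete analogue of the hull: complement of the connected component
    of {dist > r} containing rho, plus the hull vertices adjacent to it."""
    if dist[rho] <= r:
        raise ValueError("rho must lie outside the ball B(r)")
    outside = {rho}
    queue = deque([rho])
    while queue:                        # explore the complement of B(r) from rho
        v = queue.popleft()
        for w in adj[v]:
            if dist[w] > r and w not in outside:
                outside.add(w)
                queue.append(w)
    hull = set(adj) - outside
    boundary = {v for v in hull if any(w in outside for w in adj[v])}
    return hull, boundary


edges = [(0, 1), (1, 2), (1, 3), (2, 4), (3, 4), (4, 5), (2, 6), (3, 6)]
adj = {v: [] for e in edges for v in e}
for u, v in edges:
    adj[u].append(v)
    adj[v].append(u)
dist = bfs_distances(adj, 0)            # vertex 0 plays the role of rho_*
hull, boundary = hull_and_boundary(adj, dist, rho=5, r=2)
print(sorted(hull), sorted(boundary))   # [0, 1, 2, 3, 6] [2, 3]
```

Vertex 6 lies outside the ball but in a "hole" that does not contain vertex 5, so it is filled in, and every boundary vertex sits at distance exactly r from vertex 0.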

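If \(a\in {\mathcal T}_\zeta \) and \(s\in [0,\sigma ]\) are such that \(p_\zeta (s)=a\), we write \(W_a=W_s\) (as previously) and we use the notation

$$\underline{W}_a:=\min \{W_a(t):0\le t\le \zeta _s\}=\min \{Z_b:b\in \llbracket \rho _\zeta ,a\rrbracket \},$$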

where the last equality holds because, as already mentioned, the quantities \(W_s(t)\) for \(0\le t\le \zeta _s\) correspond to the values of \(Z_b=\widehat{W}_b\) along the geodesic segment \(\llbracket \rho _\zeta ,a\rrbracket \).

We then introduce the closed subset of \({\mathcal T}_\zeta \) defined by

$$\Theta :=\{a\in {\mathcal T}_\zeta :\widehat{W}_a=\underline{W}_a\}.$$

We note that points of \(\Theta \) have multiplicity either 1 or 2 in \({\mathcal T}_\zeta \). Indeed, there are only countably many points of multiplicity 3, and it is not hard to see that these points do not belong to \(\Theta \).
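For a finite tree, both \(\underline{W}_a\) and \(\Theta \) reduce to a running minimum of labels along ancestral lines. A minimal sketch, assuming the tree is given by a hypothetical `parent` dictionary (the root maps to `None`) and labels `Z` on vertices; the names are illustrative, and the sketch says nothing about multiplicities, which have no meaning in this finite setting.

```python
def ancestral_minima(parent, Z):
    """under[v] = min of Z over the ancestral line from the root to v
    (v included); parent[root] is None."""
    under = {}
    for start in parent:
        path, v = [], start
        while v is not None and v not in under:   # climb until a known vertex
            path.append(v)
            v = parent[v]
        m = under[v] if v is not None else float("inf")
        for u in reversed(path):                  # walk back down the line
            m = min(m, Z[u])
            under[u] = m
    return under


def theta(parent, Z):
    """Discrete analogue of Theta: vertices whose label equals the minimum
    of the labels along their ancestral line."""
    under = ancestral_minima(parent, Z)
    return {v for v in parent if Z[v] == under[v]}


parent = {"r": None, "u": "r", "v": "u", "w": "u"}
Z = {"r": 0, "u": -1, "v": 1, "w": -2}
print(sorted(theta(parent, Z)))   # ['r', 'u', 'w']
```

Vertex v is excluded because its label 1 exceeds the label -1 of its ancestor u, which is exactly the failure of \(\widehat{W}_a=\underline{W}_a\).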

Proposition 10

Let \(x\in \mathbf{m}\). Then \(x\in \mathcal {M}\) if and only if \(x=\Pi (a)\) for some \(a\in \Theta \).

Proof

Fix \(r\in [0,-Z_*)\) and \(x\in \mathbf{m}\). We claim that \(x\in \partial B^\bullet (r)\) if and only if we can write \(x=\Pi (a)\) with both \(\widehat{W}_a=Z_*+r\) and

$$Z_c>Z_*+r\quad \text{for every } c\in \llbracket \rho _\zeta ,a\rrbracket \setminus \{a\}.$$

Indeed, if these conditions hold, we have \(D^*(\rho _*,x)=r\) by (11), and the image under \(\Pi \) of the geodesic segment from a to \(\rho _\zeta \) provides a path from x to \(\rho \) that stays outside B(r) except at the initial point x. It follows that \(\Pi (a)\) belongs to \(\partial B^\bullet (r)\).

Conversely, if \(x\in \partial B^\bullet (r)\), then clearly \(D^*(\rho _*,x)=r\), which gives \(\widehat{W}_a=Z_*+r\) for any a such that \(\Pi (a)=x\). Write \(x=\lim x_n\), where \(x_n \notin B^\bullet (r)\), and, for every n, let \(a_n\in {\mathcal T}_\zeta \) be such that \(\Pi (a_n)=x_n\). The fact that \(x_n \notin B^\bullet (r)\) implies that, for every c belonging to the geodesic segment between \(a_n\) and \(\rho _\zeta \), we have \(Z_c> Z_*+r\) (otherwise the cactus bound (12) would imply that any path between \(x_n\) and \(\rho \) visits B(r), which is a contradiction). By compactness, we may assume that \(a_n\) converges to a as \(n\rightarrow \infty \), and we have \(\Pi (a)=x\). We then get that the property \(Z_c> Z_*+r\) holds for c belonging to the geodesic segment between a and \(\rho _\zeta \), except possibly for \(c=a\). This completes the proof of our claim.

It follows from the claim that the property \(x=\Pi (a)\) for some \(a\in {\mathcal T}_\zeta \) such that \(\widehat{W}_a = \underline{W}_a\) holds for every \(x\in \mathcal {M}\) (this property holds if \(x\in \partial B^\bullet (r)\) for some \(0\le r<-Z_*\) and is preserved under passage to the limit, using the compactness of \({\mathcal T}_\zeta \)). Conversely, suppose that this property holds, with \(a\not =\rho _\zeta \) to discard a trivial case. If the path \(W_a\) hits its minimum only at its terminal point, the first part of the proof shows that \(x\in \partial B^\bullet (r)\) for \(r= \widehat{W}_a-Z_*\). If the path \(W_a\) hits its minimum both at its terminal time and at another time, then Lemma 16 in [1] shows that \(a=\lim a_n\), where \(\widehat{W}_{a_n}<\widehat{W}_a\) for every n. Let \(b_n\) be the first point (i.e. the closest point to \(\rho _\zeta \)) on the ancestral line of \(a_n\) such that \(\widehat{W}_{b_n}= \widehat{W}_{a_n}\). Then, by the first part of the proof, \(\Pi (b_n)\) belongs to \(\partial B^\bullet (r_n)\), with \(r_n= \widehat{W}_{a_n}-Z_*\). Noting that \(b_n\) must lie on the geodesic segment between a and \(a_n\) in the tree \({\mathcal T}_\zeta \), we see that we have also \(a=\lim b_n\), so that we get that \(x=\Pi (a)=\lim \Pi (b_n)\) belongs to \(\mathcal {M}\). \(\square \)

Remark

The preceding arguments are closely related to [5, Section 3] (see in particular formula (16) in [5]), which deals with the slightly different setting of the Brownian plane.

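If \(x\in \mathcal {M}\) and \(a\in \Theta \) is such that \(x=\Pi (a)\), the image under \(\Pi \) of the geodesic segment from a to \(\rho _\zeta \) provides a path in \(\mathbf{m}\) that stays in the complement of B(r) for every \(0\le r< D^*(\rho _*,x)=Z_a-Z_*\) (indeed the values of \(Z_b\) for b belonging to this segment are the numbers \(W_a(t)\ge \underline{W}_a=\widehat{W}_a=Z_a\)). It follows that all points belonging to a geodesic from x to \(\rho _*\) also belong to \(\mathcal {M}\): if y lies on such a geodesic and \(r:=D^*(\rho _*,y)<D^*(\rho _*,x)\), then following the geodesic from y back to x, and then the preceding path from x to \(\rho \), gives a path from y to \(\rho \) whose points other than y lie outside B(r), so that \(y\in \partial B^\bullet (r)\).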

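We note that we can define an “intrinsic” metric on \(\mathcal {M}\) by setting, for every \(x,y\in \mathcal {M}\),

$$\Delta ^*(x,y):=\inf \Big \{\sum _{i=1}^{p}D^\circ (x_{i-1},x_i):p\ge 1,\ x_0=x,\ x_p=y,\ x_1,\ldots ,x_{p-1}\in \mathcal {M}\Big \}.$$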

Since \(D^*\le D^\circ \) and \(D^*\) satisfies the triangle inequality, we have \(\Delta ^*(x,y)\ge D^*(x,y)\). In particular, \(\Delta ^*(x,y)=0\) implies \(x=y\), and it follows that \(\Delta ^*\) is a metric on \(\mathcal {M}\). The quantity \(\Delta ^*(x,y)\) corresponds to the infimum of the lengths (computed with respect to \(D^*\)) of paths from x to y that are obtained by the concatenation of pieces of geodesics from points of \(\mathcal {M}\) to \(\rho _*\) (we already noticed that these geodesics stay in \(\mathcal {M}\)). Clearly, \(\Delta ^*(\rho _*,x)=D^*(\rho _*,x)=D^\circ (\rho _*,x)\) for every \(x\in \mathcal {M}\), and \(\Delta ^*\)-geodesics from x to \(\rho _*\) coincide with \(D^*\)-geodesics from x to \(\rho _*\) (if \(x,y\in \mathcal {M}\) and x, y belong to the same \(D^*\)-geodesic to \(\rho _*\), the results of [16] imply that \(D^*(x,y)=D^\circ (x,y)=\Delta ^*(x,y)\)).
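The only difference between \(D^*\) and \(\Delta ^*\) is where the intermediate points of a chain may lie. In a finite setting this is Floyd–Warshall with the pivots restricted to a subset, which still computes shortest paths because the algorithm's correctness does not depend on the order or set of pivots processed. The three-point matrix below is hypothetical toy data (not values of \(D^\circ \) from the Brownian map), with point 2 playing the role of a point outside \(\mathcal {M}\).

```python
def restricted_chain_infimum(D, pivots):
    """Infimum of sum D[x_{i-1}][x_i] over chains x_0 = i, ..., x_p = j whose
    intermediate points lie in `pivots` (Floyd-Warshall, pivots restricted)."""
    n = len(D)
    d = [row[:] for row in D]
    for k in pivots:
        for i in range(n):
            for j in range(n):
                if d[i][k] + d[k][j] < d[i][j]:
                    d[i][j] = d[i][k] + d[k][j]
    return d


# Hypothetical D°-values between three points; points 0 and 1 play the role
# of points of the metric net, point 2 of a point outside it.
D = [[0, 5, 1],
     [5, 0, 1],
     [1, 1, 0]]
net = [0, 1]
D_star = restricted_chain_infimum(D, range(3))   # chains through any point
Delta_star = restricted_chain_infimum(D, net)    # chains through the net only
print(D_star[0][1], Delta_star[0][1])            # 2 5
```

Restricting the admissible chains can only increase the infimum, which is the finite counterpart of the inequality \(\Delta ^*\ge D^*\).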

Remark

The topology induced by \(\Delta ^*\) on \(\mathcal {M}\) coincides with the topology induced by \(D^*\). Since \(\Delta ^*\ge D^*\) and \((\mathcal {M},D^*)\) is compact, it is enough to prove that \((\mathcal {M},\Delta ^*)\) is also compact (the identity map from \((\mathcal {M},\Delta ^*)\) onto \((\mathcal {M},D^*)\) is then a continuous bijection from a compact space onto a Hausdorff space, hence a homeomorphism). To this end, let \((x_n)_{n\ge 1}\) be a sequence in \(\mathcal {M}\). We may write \(x_n=\Pi (a_n)\), with \(a_n\in \Theta \), and then extract a subsequence \((a_{n_k})\) that converges to \(a_\infty \) in \({\mathcal T}_\zeta \). We have \(a_\infty \in \Theta \) because \(\Theta \) is closed. Furthermore the fact that \(a_{n_k}\) converges to \(a_\infty \) implies that \(D^\circ (a_{n_k},a_\infty )\) tends to 0, and therefore \(\Delta ^*(x_{n_k},\Pi (a_\infty ))\) also tends to 0, showing that \((x_n)_{n\ge 1}\) has a convergent subsequence in \((\mathcal {M},\Delta ^*)\).

The preceding proposition shows that the metric net \(\mathcal {M}\) has close connections with the subset \(\Theta \) of \({\mathcal T}_\zeta \). The latter set is itself related to the subordinate tree of \({\mathcal T}_\zeta \) with respect to the function \(a\mapsto -\underline{W}_a\). By Theorem 2 (and an obvious symmetry argument) we can construct a process \((H_t)_{0\le t\le \chi }\) distributed as the height process of the Lévy tree with branching mechanism \(\psi _0(r)=\sqrt{8/3}\,r^{3/2}\), and a continuous random process \((\Gamma _s)_{s\ge 0}\) with nondecreasing sample paths such that \(\Gamma _0=0\), \(\Gamma _\sigma =\chi \), and for every \(s\in [0,\sigma ]\),

$$\underline{W}_s=-H_{\Gamma _s}.\tag{14}$$

Formula (14) obviously implies that, if \(\Gamma \) is constant on some interval, then \(\underline{W}\) is constant on the same interval. The converse is also true since H is not constant on any nontrivial interval.
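The pathwise content of (14), namely that \(\Gamma \) stops exactly when \(\underline{W}\) is flat, can be illustrated on a sampled path by deleting flat steps. This sketch is only an analogy: the time change of Theorem 2 is not obtained by counting steps, and the sample values and the helper name `remove_flat_steps` are hypothetical. It merely shows a nondecreasing clock `gamma` and a path `H` with no flat steps such that `w[k] == -H[gamma[k]]`.

```python
def remove_flat_steps(w):
    """Delete the flat steps of a sampled path w. Returns (gamma, H) with
    gamma nondecreasing, gamma[0] == 0 and w[k] == -H[gamma[k]] for all k."""
    gamma, H = [0], [-w[0]]
    for i in range(len(w) - 1):
        if w[i + 1] != w[i]:       # a non-flat step: the clock advances
            H.append(-w[i + 1])
            gamma.append(gamma[-1] + 1)
        else:                      # a flat step: the clock stops
            gamma.append(gamma[-1])
    return gamma, H


w = [0, -1, -1, 0, 0, -2, -1]      # toy samples standing in for the path s -> W_s underlined
gamma, H = remove_flat_steps(w)
print(gamma, H)                    # [0, 1, 1, 2, 2, 3, 4] [0, 1, 0, 2, 1]
```

By construction consecutive values of `H` differ, mirroring the fact that H is not constant on any nontrivial interval.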

We define a random equivalence relation \(\sim \) on \([0,\chi ]\), by requiring that the graph of \(\sim \) is the smallest closed symmetric subset of \([0,\chi ]^2\) that contains all pairs (s, t) with \(s\le t\), \(H_s=H_t\), and \(H_r>H_s\) for all \(r\in (s,t)\). We leave it to the reader to check that this set is indeed the graph of an equivalence relation (use the comments at the end of Sect. 3). In addition to the pairs (s, t) satisfying the previous relation, the graph of \(\sim \) contains a countable collection of pairs (u, v), each of them associated with a point of infinite multiplicity a of the tree \({\mathcal T}_H\) by the relations \(u=\min p_H^{-1}(a)\) and \(v=\max p_H^{-1}(a)\).
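For a finite sequence of heights, the generating pairs of \(\sim \) can be listed directly from the definition: for each s, scan forward to the first time at which the path returns to level \(H_s\) or below, and keep the pair only if the return is exactly at level \(H_s\). This is a sketch on hypothetical toy data; it lists only the generating pairs and neither takes the closure nor produces the pairs attached to points of infinite multiplicity, which have no counterpart for a finite sequence.

```python
def generating_pairs(H):
    """Pairs (s, t), s < t, with H[s] == H[t] and H[r] > H[s] for s < r < t."""
    pairs = []
    for s in range(len(H)):
        for t in range(s + 1, len(H)):
            if H[t] <= H[s]:        # first return to (or below) level H[s]
                if H[t] == H[s]:
                    pairs.append((s, t))
                break               # any later t would violate H[r] > H[s]
    return pairs


H = [0, 2, 1, 3, 1, 2, 0]           # toy heights
print(generating_pairs(H))          # [(0, 6), (2, 4)]
```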

Fig. 3. A simulation of a looptree (simulation by Igor Kortchemski). For technical reasons, some of the trees branching off a loop are pictured inside this loop, but, from the point of view of the present work, it is better to think of these trees as growing outside the loop, so that the space inside the loop may be “filled in” appropriately.

We denote the quotient space \([0,\chi ]{/}\sim \) by \({\mathcal L}\). Then \({\mathcal L}\) can be identified with the “looptree” associated with H. Roughly speaking (see [4] for more details) the looptree is obtained by replacing each point of infinite multiplicity a of the tree \({\mathcal T}_H\) by a loop of “length” equal to the weight of a, so that the subtrees that are the connected components of the complement of a in the tree branch along this loop in an order determined by the coding function H (see Fig. 3). Note that the looptree associated with H is equipped in [4] with a particular metric. Here we avoid introducing this metric on \({\mathcal L}\), because it will be more relevant to our applications to introduce a pseudo-metric that will be described below.

Let us introduce the right-continuous inverse of \(\Gamma \). For every \(u\in [0,\chi )\), we set

$$\tau _u:=\inf \{s\ge 0:\Gamma _s>u\}.$$

By convention, we also set \(\tau _\chi =\sigma \). The left limit \(\tau _{u-}\) is equal to \(\inf \{s\ge 0:\Gamma _s=u\}\). Note that \(\Gamma \) (and therefore also \(\underline{W}\)) is constant on every interval \([\tau _{u-},\tau _u]\). We also observe that \(\Gamma _{\tau _u}=u\) and thus \(-\underline{W}_{\tau _u}=H_u\). Furthermore, for every \(\varepsilon >0\), \(\Gamma _{\tau _u+\varepsilon }>\Gamma _{\tau _u}\), and therefore \(\underline{W}\) is not constant on \([\tau _u,\tau _u+\varepsilon ]\) [recall (14)], which implies \(\underline{W}_{\tau _u}=\widehat{W}_{\tau _u}\). We have thus \(p_\zeta (\tau _u)\in \Theta \) and a similar argument gives \(p_\zeta (\tau _{u-})\in \Theta \).
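Numerically, \(\tau _u\) and \(\tau _{u-}\) are the last and first times at which a continuous nondecreasing path sits at level u. A minimal sketch, assuming \(\Gamma \) is known only through samples and is linearly interpolated between them (our assumption), using `bisect_right` and `bisect_left` from Python's standard `bisect` module; the helper names and sample values are hypothetical.

```python
from bisect import bisect_left, bisect_right


def _crossing(times, gamma, i, u):
    """Time at which the linear piece between samples i and i + 1 reaches u,
    assuming gamma[i] < u < gamma[i + 1]."""
    frac = (u - gamma[i]) / (gamma[i + 1] - gamma[i])
    return times[i] + frac * (times[i + 1] - times[i])


def tau(times, gamma, u):
    """Right-continuous inverse inf{s : Gamma_s > u} of the interpolated path;
    returns times[-1] (playing the role of sigma) at u = gamma[-1]."""
    assert gamma[0] <= u <= gamma[-1]
    i = bisect_right(gamma, u) - 1      # last sample with gamma[i] <= u
    if gamma[i] == u:
        return times[i]
    return _crossing(times, gamma, i, u)


def tau_minus(times, gamma, u):
    """Left limit tau_{u-} = inf{s : Gamma_s = u} of the interpolated path."""
    assert gamma[0] <= u <= gamma[-1]
    j = bisect_left(gamma, u)           # first sample with gamma[j] >= u
    if gamma[j] == u:
        return times[j]
    return _crossing(times, gamma, j - 1, u)


times = [0, 1, 2, 3, 4]
gamma = [0.0, 1.0, 1.0, 1.0, 2.0]       # flat at level 1 on [1, 3]
print(tau_minus(times, gamma, 1.0), tau(times, gamma, 1.0))   # 1 3
```

The flat piece of the toy path is recovered as the interval \([\tau _{1-},\tau _1]=[1,3]\), on which \(\Gamma \) (and hence the running minimum) is constant.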

We next consider the subsets \(\Theta ^\circ \) and \(\Theta ^1\) of \(\Theta \) defined as follows. We let \(\mathcal {S}^1\) be the countable subset of \([0,\sigma ]\) that consists of all times \(\tau _{u-}\) for \(u\in (0,\chi ]\) such that \(\tau _{u-}<\tau _u\) and we set \(\Theta ^1=p_\zeta (\mathcal {S}^1)\). By the preceding remarks, \(\Theta ^1\subset \Theta \), and we also set \(\Theta ^\circ =\Theta \backslash \Theta ^1\). We note that \(s\in [0,\sigma )\) belongs to \(\mathcal {S}^1\) if and only if s is the left end of a maximal open interval on which \(\underline{W}\) (or equivalently \(\Gamma \)) is constant. Alternatively, s belongs to \(\mathcal {S}^1\) if and only if \(\underline{W}_s=\widehat{W}_s\) and \(\underline{W}\) is constant on \([s,s+\varepsilon ]\) for some \(\varepsilon >0\). Indeed, if the latter properties hold then \(\underline{W}\) is not constant on any interval \([s-\delta ,s]\), \(\delta >0\) (thus implying that \(s=\tau _{u-}\) with \(u=\Gamma _s\)) because otherwise, using the fact that s cannot be a time of left increase of \(\zeta \), this would mean that \(W_s\) attains its minimum both at \(\zeta _s\) and at a time \(r<\zeta _s\), leading to a contradiction with [1, Lemma 16].
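On a sampled path, the characterization of \(\mathcal {S}^1\) as the set of left ends of maximal intervals of constancy can be mirrored by scanning for maximal runs of equal consecutive samples. This is a heuristic sketch under our own assumptions: sampling cannot detect whether \(\underline{W}_s=\widehat{W}_s\) at the left end, and equality of consecutive samples stands in for constancy on an interval. The data and helper name are hypothetical.

```python
def maximal_flat_intervals(w):
    """(left, right) index pairs of the maximal runs on which w is constant,
    ignoring runs of length one."""
    runs, i, n = [], 0, len(w)
    while i < n - 1:
        if w[i + 1] == w[i]:
            j = i + 1
            while j + 1 < n and w[j + 1] == w[i]:
                j += 1
            runs.append((i, j))
            i = j
        else:
            i += 1
    return runs


w = [0, -1, -1, 0, 0, -2, -1]     # toy samples of the running minimum
runs = maximal_flat_intervals(w)
S1 = [left for left, _ in runs]   # discrete stand-in for the set S^1
print(runs, S1)                   # [(1, 2), (3, 4)] [1, 3]
```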