Abstract

We study the random metric space called the Brownian plane, which is closely related to the Brownian map and is conjectured to be the universal scaling limit of many discrete random lattices such as the uniform infinite planar triangulation. We obtain a number of explicit distributions for the Brownian plane. In particular, we consider, for every \(r>0\), the hull of radius r, which is obtained by “filling in the holes” in the ball of radius r centered at the root. We introduce a quantity \(Z_r\) which is interpreted as the (generalized) length of the boundary of the hull of radius r. We identify the law of the process \((Z_r)_{r>0}\) as the time-reversal of a continuous-state branching process starting from \(+\infty \) at time \(-\infty \) and conditioned to hit 0 at time 0, and we give an explicit description of the process of hull volumes given the process \((Z_r)_{r>0}\). We obtain an explicit formula for the Laplace transform of the volume of the hull of radius r, and we also determine the conditional distribution of this volume given the length of the boundary. Our proofs involve certain new formulas for super-Brownian motion and the Brownian snake in dimension one, which are of independent interest.


1 Introduction

Much recent work has been devoted to understanding continuous limits of random graphs drawn on the two-dimensional sphere or in the plane, which are called random planar maps. A fundamental object is the random compact metric space known as the Brownian map, which has been proved to be the universal scaling limit of several important classes of random planar maps conditioned to have a large size (see in particular [1, 3, 6, 23, 30]). The main goal of this work is to study the random (non-compact) metric space called the Brownian plane, which may be viewed as an infinite-volume version of the Brownian map. The Brownian plane was first introduced and studied in [9], where it was shown to be the scaling limit in distribution of the uniform infinite planar quadrangulation (UIPQ) in the local Gromov–Hausdorff sense. The Brownian plane is in fact conjectured to be the universal scaling limit of many discrete random lattices including the uniform infinite planar triangulation (UIPT) introduced by Angel and Schramm [5] and subsequently studied by several authors. It was proved in [9] that the Brownian plane is locally isometric to the Brownian map, in the following sense. Recalling that both the Brownian map and the Brownian plane are equipped with a distinguished point called the root, one can couple these two random metric spaces in such a way that, for every \(\delta >0\), there exists \(\varepsilon >0\) such that the balls of radius \(\varepsilon \) centered at the root in the two spaces are isometric with probability at least \(1-\delta \). As a consequence, the Brownian plane shares many properties of the Brownian map. On the other hand, the Brownian plane also enjoys the important additional property of invariance under scaling: multiplying the distance by a constant factor \(\lambda >0\) does not change the distribution of the Brownian plane. This property suggests that the Brownian plane should be more tractable for calculations than the Brownian map, for which very few explicit distributions are known. Our purpose is to obtain such explicit distributions for the Brownian plane, and in particular to give a detailed probabilistic description of the growth of “hulls” centered at the root.

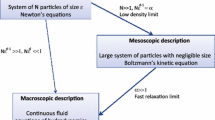

In order to give a more precise presentation of our results, let us introduce some notation. As in [9], we write \(({\mathcal {P}}_\infty , D_\infty )\) for the Brownian plane, and we let \(\rho _\infty \) stand for the distinguished point of \({\mathcal {P}}_\infty \) called the root. We recall that \({\mathcal {P}}_\infty \) is equipped with a volume measure, and we write |A| for the volume of a measurable subset A of \({\mathcal {P}}_\infty \). For every \(r> 0\), the closed ball of radius r centered at \(\rho _\infty \) in \({\mathcal {P}}_\infty \) is denoted by \(B_r({\mathcal {P}}_\infty )\). In contrast with the case of Euclidean space, the complement of \(B_r({\mathcal {P}}_\infty )\) will have infinitely many connected components (see [24] for a detailed discussion of these components in the slightly different setting of the Brownian map) but only one unbounded connected component. We then define the hull of radius r as the complement of the unbounded component of the complement of \(B_r({\mathcal {P}}_\infty )\), and we denote this hull by \(B^\bullet _r({\mathcal {P}}_\infty )\). Informally, \(B^\bullet _r({\mathcal {P}}_\infty )\) is obtained by “filling in the holes” of \(B_r({\mathcal {P}}_\infty )\); see Fig. 1 below, and Fig. 3 in Sect. 5 for a discrete version of the hull.

In what follows, we give a complete description of the law of the process \((|B^\bullet _r({\mathcal {P}}_\infty )|)_{r>0} \). To formulate this description, it is convenient to introduce another process \((Z_r)_{r>0}\) which gives for every \(r>0\) the size of the boundary of \(B^\bullet _r({\mathcal {P}}_\infty )\).

Proposition 1.1

Let \(r>0\). There exists a positive random variable \(Z_r\) such that

in probability.

Fig. 1 Illustration of the geometric meaning of the processes \((Z_r)_{r\ge 0}\) and \((|B_r^\bullet ({\mathcal {P}}_\infty )|)_{r\ge 0}\). The Brownian plane is represented as a two-dimensional “cactus” where the height of each point is equal to its distance to the root. The shaded part represents the hull \(B_r^\bullet ({\mathcal {P}}_\infty )\) and \(Z_r\) corresponds to the (generalized) length of its boundary. At time s, both processes \(Z_\cdot \) and \( |B_{\cdot }^\bullet ( {\mathcal {P}}_\infty )|\) have a jump. Geometrically this corresponds to the creation of a “bubble” above height s.

In view of this proposition, one interprets \(Z_r\) as the (generalized) length of the boundary of the hull of radius r (this boundary is expected to be a fractal curve of dimension 2). A key intermediate step in the derivation of our main results is to identify the process \((Z_r)_{r>0}\) as a time-reversed continuous-state branching process. For every \(u\ge 0\), set \(\psi (u)=\sqrt{8/3}\,u^{3/2}\). The continuous-state branching process with branching mechanism \(\psi \) is the Feller Markov process \((X_t)_{t\ge 0}\) with values in \({\mathbb {R}}_+\), whose semigroup is characterized as follows: for every \(x,t\ge 0\) and every \(\lambda > 0\),

$$\begin{aligned} E\big [ e^{-\lambda X_t}\,\big |\, X_0=x\big ]=\exp \Big (-x\Big (\lambda ^{-1/2}+t\sqrt{2/3}\Big )^{-2}\Big ). \end{aligned}$$

See Sect. 2.1 for a brief discussion of this process. Note that X gets absorbed at 0 in finite time. It is easy to construct a process \((\widetilde{X}_t)_{t\le 0}\) indexed by the time interval \((-\infty ,0]\) and which is distributed as the process X “started from \(+\infty \)” at time \(-\infty \) and conditioned to hit zero at time 0 (see Sect. 2.1 for a more rigorous presentation).

Proposition 1.2

(i) For every \(r>0\), we have for every \(\lambda \ge 0\),

$$\begin{aligned} E\bigg [ \exp (-\lambda Z_r)\bigg ]= \bigg (1+\frac{2\lambda r^2}{3}\bigg )^{-3/2}. \end{aligned}$$

Equivalently, \(Z_r\) follows a Gamma distribution with parameter \(\frac{3}{2}\) and mean \(r^2\).

(ii) The two processes \((Z_r)_{r>0}\) and \((\widetilde{X}_{-r})_{r>0}\) have the same finite-dimensional marginals.
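
The equivalence stated in part (i) can be checked numerically. The following Python sketch is ours and not part of the original argument: it assumes that "parameter 3/2 and mean \(r^2\)" means a Gamma law with shape 3/2 and scale \(2r^2/3\), and compares the Laplace transform of that law, computed by a midpoint rule, with the closed form of Proposition 1.2 (i). The function names and the integration parameters are illustrative choices.

```python
import math


def laplace_closed_form(lam: float, r: float) -> float:
    """Right-hand side of Proposition 1.2 (i): (1 + 2*lam*r^2/3)^(-3/2)."""
    return (1.0 + 2.0 * lam * r * r / 3.0) ** (-1.5)


def gamma_moment(lam: float, r: float, power: int = 0,
                 y_max: float = 10.0, n: int = 20_000) -> float:
    """Approximate E[Z^power * exp(-lam*Z)] for Z ~ Gamma(shape 3/2, scale 2r^2/3).

    Substituting x = scale * y^2 turns the Gamma density into
    2 y^2 exp(-y^2) / Gamma(3/2) dy, which is smooth on [0, infinity),
    so a plain midpoint rule on [0, y_max] is accurate.
    """
    scale = 2.0 * r * r / 3.0
    h = y_max / n
    total = 0.0
    for i in range(n):
        y = (i + 0.5) * h
        x = scale * y * y
        total += (x ** power) * math.exp(-lam * x) * 2.0 * y * y * math.exp(-y * y)
    return total * h / math.gamma(1.5)


if __name__ == "__main__":
    r, lam = 1.3, 0.7
    print("numerical Laplace transform:", gamma_moment(lam, r))
    print("closed form                :", laplace_closed_form(lam, r))
    print("numerical mean vs r^2      :", gamma_moment(0.0, r, power=1), r * r)
```

Since the scale is proportional to \(r^2\), the same computation also illustrates the scaling relation: \(Z_r\) has the same law as \(r^2 Z_1\).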

We observe that results closely related to Proposition 1.2 have been obtained by Krikun [16, 17] in the discrete setting of the UIPT and the UIPQ.

Part (ii) of the preceding proposition implies that the process \((Z_r)_{r>0}\) has a càdlàg modification, with only negative jumps, and from now on we deal with this modification. We can now state the main results of the present work. For every \(r>0\), we write \(\Delta Z_r\) for the jump of Z at time r.

Theorem 1.3

Let \(s_1,s_2,\ldots \) be a measurable enumeration of the jumps of Z, and let \(\xi _1,\xi _2,\ldots \) be a sequence of i.i.d. real random variables with density

which is independent of the process \((Z_r)_{r> 0}\). The following identity in distribution of random processes holds:

This theorem identifies the conditional distribution of the process of hull volumes knowing the process of hull boundary lengths, whose distribution is given by the preceding proposition. Informally, each jump time r of Z corresponds to the creation of a new connected component of the complement of the ball \(B_r({\mathcal {P}}_\infty )\), which is “swallowed” by the hull, leading to a negative jump for the boundary of the hull and a positive jump for its volume. The common distribution of the variables \(\xi _i\) should then be interpreted as the law of the volume of a newly created connected component knowing that the “length” of its boundary is equal to 1 (see [4, Proposition 6.4] and especially [10, Proposition 9] for related results about the asymptotic distribution of the volume of a triangulation with a boundary of size tending to infinity). This heuristic discussion is made much more precise in the companion paper [10], where many of the results of the present work are interpreted in terms of asymptotics for the so-called “peeling process” studied by Angel [4] for the UIPT.

The proof of Theorem 1.3 depends on certain explicit calculations of distributions, which are of independent interest.

Theorem 1.4

Let \(r>0\). For every \(\mu >0\),

Furthermore, for every \(\ell >0\),

In view of the first assertion of the theorem, one may ask whether a similar formula holds for the volume \(|B_r({\mathcal {P}}_\infty )|\) of the ball of radius r. In principle our methods should also be applicable to this problem, but our calculations did not lead to a tractable expression. One may still compare the expected volumes of the hull and the ball. From the first formula of the theorem, one easily gets that \(E[|B^\bullet _r({\mathcal {P}}_\infty )|]=r^4/3\). On the other hand, using the method of the proof of [26, Proposition 5], one can verify that \(E[|B_r({\mathcal {P}}_\infty )|]=2r^4/21\).
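
The comparison of the two expected volumes is plain arithmetic and can be made exact. The short Python sketch below is ours (the function names are illustrative): it encodes the two expectations quoted above and computes their ratio with rational arithmetic.

```python
from fractions import Fraction


def expected_hull_volume(r: Fraction) -> Fraction:
    """E[|hull of radius r|] = r^4 / 3, as stated in the text."""
    return r ** 4 / 3


def expected_ball_volume(r: Fraction) -> Fraction:
    """E[|ball of radius r|] = 2 r^4 / 21, as stated in the text."""
    return 2 * r ** 4 / 21


if __name__ == "__main__":
    r = Fraction(5, 3)
    ratio = expected_hull_volume(r) / expected_ball_volume(r)
    # The ratio does not depend on r, in line with scale invariance.
    print("E[hull] / E[ball] =", ratio)
```

The ratio equals 7/2 for every r, so in expectation the ball accounts for 2/7 of the volume of the hull, the remaining 5/7 being carried by the filled-in holes. This is a statement about the ratio of expectations only, not about the expectation of the ratio.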

We also note that there is an interesting analogy between the second formula of Theorem 1.4 and classical formulas for Bessel processes (see Corollary 1.8 and Corollary 3.3 in [31, Chapter XI]), which also involve hyperbolic functions—in special cases these formulas can be restated in terms of linear Brownian motion via the Ray–Knight theorems.

The preceding results can also be interpreted in terms of asymptotics for the UIPQ. In the last section of this article, we prove that the process of hull volumes of the UIPQ converges in distribution, modulo a suitable rescaling, to the process \((|B^\bullet _r({\mathcal {P}}_\infty )|)_{r>0}\). A similar invariance principle should hold for the UIPT and for more general random lattices such as the ones constructed by Addario-Berry [2] and Stephenson [32].

Our proofs depend on a new representation of the Brownian plane, which is different from the one used in [9]. Roughly speaking, this representation is a continuous analog of the construction of the UIPQ that was given by Chassaing and Durhuus in [7], whereas [9] used a continuous version of the construction in [12]. As in [9], the representation of the Brownian plane in the present work uses a random infinite real tree \({\mathcal {T}}_\infty \) whose vertices are assigned real labels. The probabilistic structure of the real tree \({\mathcal {T}}_\infty \) is more complicated than in [9], but the labels are now nonnegative and correspond to distances from the root in \({\mathcal {P}}_\infty \) (whereas in [9] labels corresponded in some sense to “distances from infinity”). This is of course similar to the well-known Schaeffer bijection between rooted quadrangulations and well-labeled trees [8]. The fact that labels are distances from the root is important for our purposes, since it allows us to give a simple representation of the hull of radius r: the complement of this hull corresponds to the set of all points a in \({\mathcal {T}}_\infty \) such that labels stay greater than r along the (tree) geodesic from a to infinity. See formula (16) below. There is a similar interpretation for the boundary of the hull, and a key observation is the fact that the “boundary length” \(Z_r\) can be obtained in terms of exit measures from \((r,\infty )\) associated with the “subtrees” branching off the spine of the infinite tree \({\mathcal {T}}_\infty \) at a level greater than the last occurrence of label r on the spine [see formula (18) below].

The construction of the infinite tree \({\mathcal {T}}_\infty \) and of the labels assigned to its vertices, as well as the subsequent calculations, make heavy use of the Brownian snake and its properties. In particular the special Markov property of the Brownian snake [18] and its connections with partial differential equations play an important role. Because of the close relation between super-Brownian motion and the Brownian snake, some of the results that follow can be written as statements about super-Brownian motion, which may be of independent interest. In particular, Corollary 4.7, which is essentially equivalent to the second formula of Theorem 1.4, gives the Laplace transform of the total integrated mass of a super-Brownian motion started from \(u\delta _a\) (for some \(u,a>0\)) knowing that the minimum of the range is equal to 0. Similarly, Corollary 4.9 determines for a super-Brownian motion starting from \(\delta _0\) the law of the process whose value at time \(r>0\) is the pair consisting of the exit measure from \((-r,\infty )\) and the mass of those historical paths that do not hit level \(-r\).

The paper is organized as follows. Section 2 presents a number of preliminaries. In particular, we recall basic facts about the (one-dimensional) Brownian snake including exit measures and the special Markov property, and its connections with super-Brownian motion. We also state a recent result from [25] giving a decomposition of the Brownian snake knowing its minimal spatial position. The latter result is especially useful in Sect. 3, where we derive our new representation of the Brownian plane. In order to show that this new construction is equivalent to the one in [9], we use the fact that the distribution of the Brownian plane is characterized by the invariance under scaling and the above-mentioned property stating that the Brownian plane is locally isometric to the Brownian map. Section 4 contains the proof of our main results: Propositions 1.1 and 1.2 are proved in Sect. 4.1, Theorem 1.4 is derived in Sect. 4.2, and Theorem 1.3 is proved in Sect. 4.3. Finally, Sect. 5 is devoted to our invariance principle relating the hull process of the UIPQ to the process \((|B^\bullet _r({\mathcal {P}}_\infty )|)_{r>0}\).

2 Preliminaries

2.1 A continuous-state branching process

An important role in this work will be played by a particular continuous-state branching process, which was already mentioned in the introduction. We refer to [19, Chapter 2] and references therein for the general theory of continuous-state branching processes, and content ourselves with a brief exposition of the case of interest in this work. We fix a constant \(c>0\). The continuous-state branching process with branching mechanism \(\psi (u)=c\,u^{3/2}\) is the Feller Markov process \((X_t)_{t\ge 0}\) with values in \({\mathbb {R}}_+\), càdlàg paths and no negative jumps, whose semigroup is characterized as follows. If \(P_x\) stands for the probability measure under which X starts from \(X_0=x\), then, for every \(x,t\ge 0\) and every \(\lambda > 0\),

$$\begin{aligned} E_x\big [e^{-\lambda X_t}\big ]=\exp (-x\,u_t(\lambda )), \end{aligned}$$

where the function \(u_t(\lambda )\) is determined by the differential equation

$$\begin{aligned} \frac{{\mathrm {d}}u_t(\lambda )}{{\mathrm {d}}t}=-\psi (u_t(\lambda )),\qquad u_0(\lambda )=\lambda . \end{aligned}$$

It follows that \(u_t(\lambda )=(\lambda ^{-1/2} + \frac{c}{2}\,t)^{-2}\), and thus,

$$\begin{aligned} E_x\big [e^{-\lambda X_t}\big ]=\exp \Big (-x\Big (\lambda ^{-1/2}+\frac{c}{2}\,t\Big )^{-2}\Big ). \end{aligned}\tag{1}$$

By differentiating with respect to \(\lambda \), we have also

$$\begin{aligned} E_x\big [X_t\,e^{-\lambda X_t}\big ]=x\Big (1+\frac{c}{2}\,t\,\lambda ^{1/2}\Big )^{-3}\exp \Big (-x\Big (\lambda ^{-1/2}+\frac{c}{2}\,t\Big )^{-2}\Big ). \end{aligned}\tag{2}$$

Let \(T:=\inf \{t\ge 0:X_t=0\}\), and note that \(X_t=0\) for every \(t\ge T\), a.s. Since \(P_x(T\le t)=P_x(X_t=0)=\exp (-\frac{4x}{c^2t^2})\), we readily obtain that the density of T under \(P_x\) is (when \(x>0\)) the function

$$\begin{aligned} t\mapsto \phi _t(x):=\frac{8x}{c^2t^3}\,\exp \Big (-\frac{4x}{c^2t^2}\Big ),\qquad t>0. \end{aligned}$$

For future purposes, it will be useful to introduce the process X conditioned on extinction at a fixed time. To this end, we write \(q_t(x,{\mathrm {d}}y)\) for the transition kernels of X. We fix \(\rho >0\) and define the process X “conditioned on extinction at time \(\rho \)” as the time-inhomogeneous Markov process indexed by the interval \([0,\rho ]\) with values in \((0,\infty )\) (with 0 serving as a cemetery point) whose transition kernel between times s and t is

$$\begin{aligned} \pi _{s,t}(x,{\mathrm {d}}y)=\frac{\phi _{\rho -t}(y)}{\phi _{\rho -s}(x)}\,q_{t-s}(x,{\mathrm {d}}y) \end{aligned}$$

if \(0\le s<t<\rho \) and \(x>0\), and

$$\begin{aligned} \pi _{s,\rho }(x,{\mathrm {d}}y)=\delta _0({\mathrm {d}}y) \end{aligned}$$

if \(s\in [0,\rho )\) and \(x>0\). This is just a standard h-transform in a time-inhomogeneous setting, and the interpretation can be justified by the fact that, for every choice of \(0<s_1<\cdots <s_p<\rho \), the conditional distribution of \((X_{s_1},\ldots ,X_{s_p})\) under \(P_x(\cdot \mid \rho \le T<\rho +\varepsilon )\) converges to \(\pi _{0,s_1}(x,{\mathrm {d}}y_1)\pi _{s_1,s_2}(y_1,{\mathrm {d}}y_2)\ldots \pi _{s_{p-1},s_p}(y_{p-1},{\mathrm {d}}y_p)\) as \(\varepsilon \downarrow 0\).

If \(0\le s<t<\rho \) and \(x>0\), the Laplace transform of \(\pi _{s,t}(x,{\mathrm {d}}y)\) is

$$\begin{aligned} \int \pi _{s,t}(x,{\mathrm {d}}y)\,e^{-\lambda y}&=\frac{1}{\phi _{\rho -s}(x)}\,E_x\Big [\phi _{\rho -t}(X_{t-s})\,e^{-\lambda X_{t-s}}\Big ]\\ &=\Big (\frac{\rho -s}{\rho -t}\Big )^{3}\Big (1+\frac{c}{2}(t-s)\Big (\lambda +\frac{4}{c^2(\rho -t)^2}\Big )^{1/2}\Big )^{-3}\\ &\quad \times \exp \Big (\frac{4x}{c^2(\rho -s)^2}-x\Big (\Big (\lambda +\frac{4}{c^2(\rho -t)^2}\Big )^{-1/2}+\frac{c}{2}(t-s)\Big )^{-2}\Big ), \end{aligned}$$

where the second equality follows from the explicit expression of \(\phi _{\rho -s}\) and formula (2).

Finally, let us briefly discuss the process \(\widetilde{X}\) which was introduced in Sect. 1. Simple arguments give the existence of a process \((\widetilde{X}_t)_{t\in (-\infty ,0]}\) with càdlàg paths and no negative jumps, which is indexed by the time interval \((-\infty ,0]\) and such that:

- \(\widetilde{X}_t>0\) for every \(t<0\), and \(\widetilde{X}_0=0\), a.s.;
- \(\widetilde{X}_t\longrightarrow +\infty \) as \(t\downarrow -\infty \), a.s.;
- for every \(x>0\), if \(\widetilde{T}_x:=\inf \{t\in (-\infty ,0]: \widetilde{X}_t\le x\}\), the process \((\widetilde{X}_{(\widetilde{T}_x+t)\wedge 0})_{t\ge 0}\) has the same distribution as X started from x.

To get an explicit construction of \(\widetilde{X}\), one may concatenate independent copies of the process X started at n and stopped at the hitting time of \(n-1\), for every integer \(n\ge 1\). We omit the details.
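
The explicit formulas of this subsection lend themselves to a quick numerical sanity check. The Python sketch below is ours and only illustrative: it integrates the equation \({\mathrm {d}}u/{\mathrm {d}}t=-c\,u^{3/2}\), \(u_0=\lambda \), with a classical Runge–Kutta scheme (our choice of method) and compares the result with the closed form \(u_t(\lambda )=(\lambda ^{-1/2}+\frac{c}{2}t)^{-2}\). It also evaluates the extinction probability \(\exp (-4x/(c^2t^2))\) and its derivative in t, which we obtained by direct differentiation. All function names are hypothetical.

```python
import math


def u_closed(t: float, lam: float, c: float) -> float:
    """Closed form u_t(lam) = (lam^(-1/2) + c*t/2)^(-2) quoted in Sect. 2.1."""
    return (lam ** (-0.5) + 0.5 * c * t) ** (-2)


def u_rk4(t: float, lam: float, c: float, steps: int = 2000) -> float:
    """Integrate du/dt = -c * u^(3/2), u(0) = lam, with the classical RK4 scheme."""
    def f(u: float) -> float:
        return -c * u ** 1.5

    h = t / steps
    u = lam
    for _ in range(steps):
        k1 = f(u)
        k2 = f(u + 0.5 * h * k1)
        k3 = f(u + 0.5 * h * k2)
        k4 = f(u + h * k3)
        u += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return u


def extinction_cdf(t: float, x: float, c: float) -> float:
    """P_x(T <= t) = exp(-4x / (c^2 t^2)), as stated in the text."""
    return math.exp(-4.0 * x / (c * c * t * t))


def extinction_density(t: float, x: float, c: float) -> float:
    """Derivative in t of extinction_cdf (our own differentiation)."""
    return 8.0 * x / (c * c * t ** 3) * extinction_cdf(t, x, c)


if __name__ == "__main__":
    c = math.sqrt(8.0 / 3.0)  # the constant used in the introduction
    print("RK4 vs closed form:", u_rk4(1.5, 2.0, c), u_closed(1.5, 2.0, c))
    # Semigroup property u_{t+s}(lam) = u_t(u_s(lam)).
    print("semigroup check   :", u_closed(1.1, 2.0, c),
          u_closed(0.7, u_closed(0.4, 2.0, c), c))
    print("density at t=1.2  :", extinction_density(1.2, 1.0, c))
```

The semigroup identity printed in the second line is what makes \(\exp (-x\,u_t(\lambda ))\) consistent with the Markov property of X.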

2.2 Preliminaries about the Brownian snake

We give below a brief presentation of the Brownian snake, referring to the book [19] for more details. We write \({\mathcal {W}}\) for the set of all finite paths in \({\mathbb {R}}\). An element of \({\mathcal {W}}\) is a continuous mapping \({\mathrm {w}}:[0,\zeta ]\longrightarrow {\mathbb {R}}\), where \(\zeta =\zeta _{({\mathrm {w}})}\ge 0\) depends on \({\mathrm {w}}\) and is called the lifetime of \({\mathrm {w}}\). We write \(\widehat{\mathrm {w}}={\mathrm {w}}(\zeta _{({\mathrm {w}})})\) for the endpoint of \({\mathrm {w}}\). For \(x\in {\mathbb {R}}\), we set \({\mathcal {W}}_x:=\{{\mathrm {w}}\in {\mathcal {W}}:{\mathrm {w}}(0)=x\}\). The trivial path \({\mathrm {w}}\) such that \({\mathrm {w}}(0)=x\) and \(\zeta _{({\mathrm {w}})}=0\) is identified with the point x of \({\mathbb {R}}\), so that we can view \({\mathbb {R}}\) as a subset of \({\mathcal {W}}\). The space \({\mathcal {W}}\) is equipped with the distance

$$\begin{aligned} d({\mathrm {w}},{\mathrm {w}}')=|\zeta _{({\mathrm {w}})}-\zeta _{({\mathrm {w}}')}|+\sup _{t\ge 0}|{\mathrm {w}}(t\wedge \zeta _{({\mathrm {w}})})-{\mathrm {w}}'(t\wedge \zeta _{({\mathrm {w}}')})|. \end{aligned}$$

The Brownian snake \((W_s)_{s\ge 0}\) is a continuous Markov process with values in \({\mathcal {W}}\). We will write \(\zeta _s=\zeta _{(W_s)}\) for the lifetime process of \(W_s\). The process \((\zeta _s)_{s\ge 0}\) evolves like a reflecting Brownian motion in \({\mathbb {R}}_+\). Conditionally on \((\zeta _s)_{s\ge 0}\), the evolution of \((W_s)_{s\ge 0}\) can be described informally as follows: When \(\zeta _s\) decreases, the path \(W_s\) is shortened from its tip, and when \(\zeta _s\) increases the path \(W_s\) is extended by adding “little pieces of linear Brownian motion” at its tip. We refer to [19, Chapter IV] for a more rigorous presentation.

It is convenient to assume that the Brownian snake is defined on the canonical space \(C({\mathbb {R}}_+,{\mathcal {W}})\) of all continuous functions from \({\mathbb {R}}_+\) into \({\mathcal {W}}\), in such a way that, for \(\omega =(\omega _s)_{s\ge 0}\in C({\mathbb {R}}_+,{\mathcal {W}})\), we have \(W_s(\omega )=\omega _s\). The notation \({\mathbb {P}}_{\mathrm {w}}\) then stands for the law of the Brownian snake started from \({\mathrm {w}}\).

For every \(x\in {\mathbb {R}}\), the trivial path x is a regular recurrent point for the Brownian snake, and so we can make sense of the excursion measure \({\mathbb {N}}_x\) away from x, which is a \(\sigma \)-finite measure on \(C({\mathbb {R}}_+,{\mathcal {W}})\). Under \({\mathbb {N}}_x\), the process \((\zeta _s)_{s\ge 0}\) is distributed according to the Itô measure of positive excursions of linear Brownian motion, which is normalized so that, for every \(\varepsilon >0\),

$$\begin{aligned} {\mathbb {N}}_x\Big (\sup _{s\ge 0}\zeta _s>\varepsilon \Big )=\frac{1}{2\varepsilon }. \end{aligned}$$

We write \(\sigma :=\sup \{s\ge 0: \zeta _s>0\}\) for the duration of the excursion under \({\mathbb {N}}_x\). For every \(\ell >0\), we will also use the notation \({\mathbb {N}}_0^{(\ell )}:={\mathbb {N}}_0(\cdot \mid \sigma =\ell )\).

We set

$$\begin{aligned} {\mathcal {R}}:=\{W_s(t):s\ge 0,\,0\le t\le \zeta _s\},\qquad W_*:=\min {\mathcal {R}}. \end{aligned}$$

We will consider \({\mathcal {R}}\) and \(W_*\) under the excursion measures \({\mathbb {N}}_x\), and we note that we have also \({\mathcal {R}}=\{\widehat{W}_s:0\le s\le \sigma \}\) and \(W_*=\min \{\widehat{W}_s:0\le s\le \sigma \}\), \({\mathbb {N}}_x\) a.e. Occasionally we also write \(\omega _*=W_*(\omega )\) for \(\omega \in C({\mathbb {R}}_+,{\mathcal {W}})\).

If \(x,y\in {\mathbb {R}}\) and \(y<x\), we have

$$\begin{aligned} {\mathbb {N}}_x(W_*<y)=\frac{3}{2(x-y)^2} \end{aligned}$$

(see e.g. [19, Section VI.1]).

It is known (see e.g. [27, Proposition 2.5]) that \({\mathbb {N}}_x\) a.e. there is a unique instant \(s_{\mathbf{m}}\in [0,\sigma ]\) such that \(\widehat{W}_{s_{\mathbf{m}}}=W_*\).

2.2.1 Decomposing the Brownian snake at its minimum

We will now recall a key result of [25] that plays an important role in what follows. This result identifies the law of the minimizing path \(W_{s_\mathbf{m}}\) under \({\mathbb {N}}_0\), together with the distribution of the “subtrees” that branch off the minimizing path. Let us define these subtrees in a more precise way.

For every \(s\ge 0\), we set

$$\begin{aligned} \hat{\zeta }_s:=\zeta _{(s_{\mathbf{m}}+s)\wedge \sigma },\qquad \check{\zeta }_s:=\zeta _{(s_{\mathbf{m}}-s)\vee 0}. \end{aligned}$$

We let \((\hat{a}_i,\hat{b}_i)\), \(i\in \hat{I}\) be the excursion intervals of \(\hat{\zeta }_s\) above its past minimum. Equivalently, the intervals \((\hat{a}_i,\hat{b}_i)\), \(i\in \hat{I}\) are the connected components of the set

$$\begin{aligned} \Big \{s\ge 0:\hat{\zeta }_s>\min _{0\le r\le s}\hat{\zeta }_r\Big \}. \end{aligned}$$

Similarly, we let \((\check{a}_j,\check{b}_j)\), \(j\in \check{I}\) be the excursion intervals of \(\check{\zeta }_s\) above its past minimum. We may assume that the indexing sets \(\hat{I}\) and \(\check{I}\) are disjoint. In terms of the tree \({\mathcal {T}}_\zeta \) coded by the excursion \((\zeta _s)_{0\le s\le \sigma }\) under \({\mathbb {N}}_0\) (see e.g. [20, Section 2]), each interval \((\hat{a}_i,\hat{b}_i)\) or \((\check{a}_j,\check{b}_j)\) corresponds to a subtree of \({\mathcal {T}}_\zeta \) branching off the ancestral line of the vertex associated with \(s_{\mathbf{m}}\). We next consider the spatial displacements corresponding to these subtrees. The properties of the Brownian snake imply that, for every \(i\in \hat{I}\), the paths \(W_{s_\mathbf{m}+s}\), \(s\in [\hat{a}_i,\hat{b}_i]\), are the same up to time \(\zeta _{s_\mathbf{m}+\hat{a}_i}=\zeta _{s_\mathbf{m}+\hat{b}_i}\), and similarly for the paths \(W_{s_\mathbf{m}-s}\), \(s\in [\check{a}_j,\check{b}_j]\), for every \(j\in \check{I}\). Then, for every \(i\in \hat{I}\), we let \(W^{[i]}\in C({\mathbb {R}}_+,{\mathcal {W}})\) be defined by

$$\begin{aligned} W^{[i]}_s(t):=W_{s_{\mathbf{m}}+(\hat{a}_i+s)\wedge \hat{b}_i}\big (\zeta _{s_{\mathbf{m}}+\hat{a}_i}+t\big ),\qquad 0\le t\le \zeta _{s_{\mathbf{m}}+(\hat{a}_i+s)\wedge \hat{b}_i}-\zeta _{s_{\mathbf{m}}+\hat{a}_i}. \end{aligned}$$

Similarly, for every \(j\in \check{I}\),

$$\begin{aligned} W^{[j]}_s(t):=W_{s_{\mathbf{m}}-(\check{a}_j+s)\wedge \check{b}_j}\big (\zeta _{s_{\mathbf{m}}-\check{a}_j}+t\big ),\qquad 0\le t\le \zeta _{s_{\mathbf{m}}-(\check{a}_j+s)\wedge \check{b}_j}-\zeta _{s_{\mathbf{m}}-\check{a}_j}. \end{aligned}$$

We finally introduce the point measures on \({\mathbb {R}}_+\times C({\mathbb {R}}_+,{\mathcal {W}})\) defined by

$$\begin{aligned} \hat{\mathcal {N}}({\mathrm {d}}t,{\mathrm {d}}\omega )=\sum _{i\in \hat{I}}\delta _{(\zeta _{s_{\mathbf{m}}+\hat{a}_i},W^{[i]})}({\mathrm {d}}t,{\mathrm {d}}\omega ),\qquad \check{\mathcal {N}}({\mathrm {d}}t,{\mathrm {d}}\omega )=\sum _{j\in \check{I}}\delta _{(\zeta _{s_{\mathbf{m}}-\check{a}_j},W^{[j]})}({\mathrm {d}}t,{\mathrm {d}}\omega ). \end{aligned}$$

Theorem 2.1

(i) Let \(a>0\). Under the excursion measure \({\mathbb {N}}_0\) and conditionally on \(W_*=-a\), the random path \((a+W_{s_{\mathbf{m}}}(\zeta _{s_{\mathbf{m}}}-t))_{0\le t\le \zeta _{s_{\mathbf{m}}}}\) is distributed as a nine-dimensional Bessel process started from 0 and stopped at its last passage time at level a.

(ii) Under \({\mathbb {N}}_0\), conditionally on the minimizing path \(W_{s_{\mathbf{m}}}\), the point measures \(\hat{\mathcal {N}}({\mathrm {d}}t,{\mathrm {d}}\omega )\) and \( \check{\mathcal {N}}({\mathrm {d}}t,{\mathrm {d}}\omega )\) are independent and their common conditional distribution is that of a Poisson point measure with intensity

$$\begin{aligned} 2\,{\mathbf {1}}_{[0,\zeta _{s_{\mathbf{m}}}]}(t)\,{\mathbf {1}}_{\{\omega _*> \widehat{W}_{s_\mathbf{m}}\}}\,{\mathrm {d}}t\,{\mathbb {N}}_{W_{s_{\mathbf{m}}}(t)}({\mathrm {d}}\omega ). \end{aligned}$$

Parts (i) and (ii) of the theorem correspond respectively to Theorem 5 and Theorem 6 of [25]. Note that when applying Theorem 5 of [25], we also use the fact that the time-reversal of a Bessel process of dimension \(-5\) started from a and stopped when hitting 0 is a nine-dimensional Bessel process started from 0 and stopped at its last passage time at level a (see e.g. [31, Exercise XI.1.23]). We refer to [31, Chapter XI] for basic facts about Bessel processes.

2.2.2 Exit measures and the special Markov property

Let D be a nonempty open interval of \({\mathbb {R}}\), such that \(D\not ={\mathbb {R}}\). We fix \(x\in D\) and, for every \({\mathrm {w}}\in {\mathcal {W}}_x\), set

$$\begin{aligned} \tau _D({\mathrm {w}}):=\inf \{t\in [0,\zeta _{({\mathrm {w}})}]:{\mathrm {w}}(t)\notin D\}, \end{aligned}$$

with the usual convention \(\inf \varnothing =\infty \). The exit measure \({\mathcal {Z}}^D\) from D (see [19, Chapter 5]) is a random measure on \(\partial D\), which is defined under \({\mathbb {N}}_x\) and is supported on the set of all exit points \(W_s(\tau _D(W_s))\) for the paths \(W_s\) such that \(\tau _D(W_s)<\infty \) (note that here \(\partial D\) has at most two points, but the following discussion remains valid for the d-dimensional Brownian snake and an arbitrary subdomain D of \({\mathbb {R}}^d\)). Note that \({\mathbb {N}}_x({\mathcal {Z}}^D\not =0)<\infty \). It is easy to prove, for instance by using Proposition 2.2 below, that

$$\begin{aligned} \{{\mathcal {Z}}^D\not =0\}=\{{\mathcal {R}}\cap D^c\not =\varnothing \},\qquad {\mathbb {N}}_x\hbox { a.e.} \end{aligned}$$

A crucial ingredient of our study is the special Markov property of the Brownian snake [18]. In order to state this property, we first observe that, \({\mathbb {N}}_x\) a.e., the set

$$\begin{aligned} \{s\ge 0:\tau _D(W_s)<\zeta _s\} \end{aligned}$$

is open and thus can be written as a union of disjoint open intervals \((a_i,b_i)\), \(i\in I\), where I may be empty. From the properties of the Brownian snake, one has, \({\mathbb {N}}_x\) a.e. for every \(i\in I\) and every \(s\in [a_i,b_i]\),

$$\begin{aligned} \tau _D(W_s)=\tau _D(W_{a_i})=\zeta _{a_i}, \end{aligned}$$

and more precisely all paths \(W_s\), \(s\in [a_i,b_i]\) coincide up to their exit time from D. For every \(i\in I\), we then define an element \(W^{(i)}\) of \(C({\mathbb {R}}_+,{\mathcal {W}})\) by setting, for every \(s\ge 0\),

$$\begin{aligned} W^{(i)}_s(t):=W_{(a_i+s)\wedge b_i}(\zeta _{a_i}+t),\qquad 0\le t\le \zeta _{(a_i+s)\wedge b_i}-\zeta _{a_i}. \end{aligned}$$

Informally, the \(W^{(i)}\)’s represent the “excursions” of the Brownian snake outside D (the word “outside” is a little misleading here, because although these excursions start from a point of \(\partial D\), they will typically come back inside D).

We also need to introduce a \(\sigma \)-field that contains the information about the paths \(W_s\) before they exit D. To this end, we set, for every \(s\ge 0\),

$$\begin{aligned} \eta ^D_s:=\inf \Big \{r\ge 0:\int _0^r{\mathrm {d}}u\,{\mathbf {1}}_{\{\zeta _u\le \tau _D(W_u)\}}>s\Big \}, \end{aligned}$$

and we let \({\mathcal {E}}^D\) be the \(\sigma \)-field generated by the process \((W_{\eta ^D_s})_{s\ge 0}\) and the class of all sets that are \({\mathbb {N}}_x\)-negligible. The random measure \({\mathcal {Z}}^D\) is measurable with respect to \({\mathcal {E}}^D\) (see [18, Proposition 2.3]).

We now state the special Markov property [18, Theorem 2.4].

Proposition 2.2

Under \({\mathbb {N}}_x\), conditionally on \({\mathcal {E}}^D\), the point measure

$$\begin{aligned} \sum _{i\in I}\delta _{W^{(i)}}({\mathrm {d}}\omega ) \end{aligned}$$

is Poisson with intensity

$$\begin{aligned} \int {\mathcal {Z}}^D({\mathrm {d}}y)\,{\mathbb {N}}_y({\mathrm {d}}\omega ). \end{aligned}$$

Remarks

(i) Since on the event \(\{{\mathcal {Z}}^D=0\}\) there are no excursions outside D, the previous proposition is equivalent to the same statement where \({\mathbb {N}}_x\) is replaced by the probability measure \({\mathbb {N}}_x(\cdot \mid {\mathcal {Z}}^D\not =0)\).

(ii) In what follows we will apply the special Markov property in a conditional form. Suppose that \(D=(a,\infty )\) for some \(a>0\) and that \(x>a\). Then the preceding statement remains valid if we replace \({\mathbb {N}}_x\) by \({\mathbb {N}}_x(\cdot \cap \{ {\mathcal {R}}\subset (0,\infty )\})\), provided we also replace \(\int {\mathcal {Z}}^D({\mathrm {d}}y)\,{\mathbb {N}}_y\) by \(\int {\mathcal {Z}}^D({\mathrm {d}}y)\,{\mathbb {N}}_y(\cdot \cap \{{\mathcal {R}}\subset (0,\infty )\})\). This follows from the fact that conditioning a Poisson point measure on having no point on a set of finite intensity is equivalent to removing the points that fall into this set. We omit the details.

For \(a<x\), we write \({\mathcal {Z}}_{a}:=\langle {\mathcal {Z}}^{(a,\infty )},1 \rangle \) for the total mass of the exit measure outside \((a,\infty )\). We will use the Laplace transform of \({\mathcal {Z}}_a\) under \({\mathbb {N}}_x\), which is given by

$$\begin{aligned} {\mathbb {N}}_x\big (1-\exp (-\mu {\mathcal {Z}}_a)\big )=\Big (\mu ^{-1/2}+\sqrt{2/3}\,(x-a)\Big )^{-2} \end{aligned}\tag{6}$$

for every \(\mu \ge 0\). This formula is easily derived from the fact that the (nonnegative) function \(u(x)={\mathbb {N}}_x(1-\exp (-\mu {\mathcal {Z}}_a))\) defined for \(x\in (a,\infty )\) solves the differential equation \(u''=4u^2\) with boundary conditions \(u(a)=\mu \) and \(u(\infty )=0\) (see [19, Chapter V]). On the other hand, an application of the special Markov property shows that, for every \(b<a<x\),

$$\begin{aligned} {\mathbb {N}}_x\Big (\exp (-\mu {\mathcal {Z}}_b)\,\Big |\,{\mathcal {E}}^{(a,\infty )}\Big )=\exp \Big (-{\mathcal {Z}}_a\,{\mathbb {N}}_a\big (1-\exp (-\mu {\mathcal {Z}}_b)\big )\Big ). \end{aligned}$$

If we substitute formula (6) in the last display, and compare with (1), we easily get that the process \(({\mathcal {Z}}_{x-a})_{a> 0}\) is Markov under \({\mathbb {N}}_x\), with the transition kernels of the continuous-state branching process with branching mechanism \(\psi (u)=\sqrt{8/3} \,u^{3/2}\). Although \({\mathbb {N}}_x\) is an infinite measure, the preceding assertion makes sense, simply because we can restrict our attention to the finite measure event \(\{{\mathcal {Z}}_{x-\varepsilon }>0\}\), for any choice of \(\varepsilon >0\). It follows that \(({\mathcal {Z}}_{x-a})_{a>0}\) has a càdlàg modification under \({\mathbb {N}}_x\), which we consider from now on.
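
The differential equation and the identification with the continuous-state branching process can be checked numerically. The Python sketch below is ours: it takes as candidate the function \(u(x)=(\mu ^{-1/2}+\sqrt{2/3}\,(x-a))^{-2}\), which we obtained by solving \(u''=4u^2\) with \(u(a)=\mu \) and \(u(\infty )=0\) (so it should coincide with formula (6), but this is our derivation), verifies the equation by finite differences, and compares it with \(u_t(\lambda )\) of Sect. 2.1 for \(c=\sqrt{8/3}\). Function names and step sizes are illustrative assumptions.

```python
import math

SQRT_2_3 = math.sqrt(2.0 / 3.0)


def exit_laplace_exponent(x: float, a: float, mu: float) -> float:
    """Candidate for N_x(1 - exp(-mu * Z_a)), x >= a, mu > 0.

    Decaying solution of u'' = 4 u^2 with u(a) = mu (our derivation).
    """
    return (mu ** (-0.5) + SQRT_2_3 * (x - a)) ** (-2)


def csbp_exponent(t: float, lam: float, c: float = math.sqrt(8.0 / 3.0)) -> float:
    """u_t(lam) = (lam^(-1/2) + c*t/2)^(-2) from Sect. 2.1."""
    return (lam ** (-0.5) + 0.5 * c * t) ** (-2)


def second_derivative(f, x: float, h: float = 1e-3) -> float:
    """Central finite-difference approximation of f''(x)."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)


if __name__ == "__main__":
    a, mu, x = 0.5, 1.7, 2.0

    def u(y: float) -> float:
        return exit_laplace_exponent(y, a, mu)

    print("u''(x) vs 4 u(x)^2 :", second_derivative(u, x), 4.0 * u(x) ** 2)
    print("boundary value u(a):", u(a), "expected", mu)
    # Moving the level from x down to a plays the role of time t = x - a.
    print("CSBP exponent      :", csbp_exponent(x - a, mu), "vs", u(x))
```

The last comparison reflects the fact that \(\sqrt{8/3}/2=\sqrt{2/3}\): the distance \(x-a\) to the exit level plays the role of time for the branching process.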

We finally explain an extension of the special Markov property where we consider excursions outside a random domain. For definiteness, we fix \(x=0\), and for every \(a>0\), we set \({\mathcal {E}}_a={\mathcal {E}}^{(-a,\infty )}\). Let H be a random variable with values in \((0,\infty ]\), such that \({\mathbb {N}}_0(H<\infty )<\infty \), and assume that H is a stopping time of the filtration \(({\mathcal {E}}_a)_{a> 0}\) in the sense that, for every \(a>0\), the event \(\{H\le a\}\) is \({\mathcal {E}}_a\)-measurable. As usual we can define the \(\sigma \)-field \({\mathcal {E}}_H\) that consists of all events A such that \(A\cap \{H\le a\}\) is \({\mathcal {E}}_a\)-measurable, for every \(a>0\). Since \({\mathcal {Z}}_{-a}\) is \({\mathcal {E}}_a\)-measurable for every \(a>0\), it follows by standard arguments that the random variable \({\mathcal {Z}}_{-H}\) is \({\mathcal {E}}_H\)-measurable (at this point it is important that we have taken a càdlàg modification of the process \(({\mathcal {Z}}_{-a})_{a>0}\)).

We may consider the excursions \((W^{H,(i)})_{i\in I}\) of the Brownian snake outside \((-H,\infty )\). These excursions are defined in exactly the same way as in the case where H is deterministic, considering now the connected components of the open set \(\{s\ge 0: W_s(t)<-H\hbox { for some }t\in [0,\zeta _s]\}\). We define \(\widetilde{W}^{H,(i)}\) by shifting \(W^{H,(i)}\) so that it starts from 0.

Proposition 2.3

Under the probability measure \({\mathbb {N}}_0(\cdot \mid H<\infty )\), conditionally on the \(\sigma \)-field \({\mathcal {E}}_{H}\), the point measure

$$\begin{aligned} \sum _{i\in I}\delta _{\widetilde{W}^{H,(i)}}({\mathrm {d}}\omega ) \end{aligned}$$

is Poisson with intensity

$$\begin{aligned} {\mathcal {Z}}_{-H}\,{\mathbb {N}}_0({\mathrm {d}}\omega ). \end{aligned}$$

This proposition can be obtained by arguments very similar to the derivation of the strong Markov property of Brownian motion from the simple Markov property: we approximate H with stopping times greater than H that take only countably many values, then use Proposition 2.2 and finally perform a suitable passage to the limit. We leave the details to the reader.

2.2.3 The Brownian snake and super-Brownian motion

The initial motivation for studying the Brownian snake came from its connection with super-Brownian motion, which we briefly recall. Under the excursion measure \({\mathbb {N}}_x({\mathrm {d}}\omega )\), the lifetime process \((\zeta _s(\omega ))_{s\ge 0}\) is distributed as a Brownian excursion, and so we can define for every \(t\ge 0\) the local time process \((\ell ^t_s(\omega ))_{s\ge 0}\) of this excursion at level t. Next let \(\mu \) be a finite measure on \({\mathbb {R}}\), and let

$$\begin{aligned} {\mathcal {N}}=\sum _{i\in I}\delta _{\omega _i} \end{aligned}$$

be a Poisson measure on \(C({\mathbb {R}}_+,{\mathcal {W}})\) with intensity \(\int \mu ({\mathrm {d}}x)\,{\mathbb {N}}_x({\mathrm {d}}\omega )\). For every \(t>0\), let \({\mathcal {X}}_t\) be the random measure on \({\mathbb {R}}\) defined by setting, for every nonnegative measurable function \(\varphi \) on \({\mathbb {R}}\),

$$\begin{aligned} \langle {\mathcal {X}}_t,\varphi \rangle := \sum _{i\in I} \int _0^\infty {\mathrm {d}}\ell ^t_s(\omega _i)\,\varphi \big (\widehat{W}_s(\omega _i)\big ). \end{aligned}$$ (7)

If we also set \({\mathcal {X}}_0=\mu \), the process \(({\mathcal {X}}_t)_{t\ge 0}\) is then a super-Brownian motion with branching mechanism \(\psi _0(u)=2u^2\) started from \(\mu \) (see [19, Theorem IV.4]). A nice feature of this construction is the fact that it also gives the associated historical process: Just consider for every \(t>0\) the random measure \({\mathbf {X}}_t\) defined by setting

$$\begin{aligned} \langle {\mathbf {X}}_t,\Phi \rangle := \sum _{i\in I} \int _0^\infty {\mathrm {d}}\ell ^t_s(\omega _i)\,\Phi \big (W_s(\omega _i)\big ) \end{aligned}$$ (8)

for every nonnegative measurable function \(\Phi \) on \({\mathcal {W}}\). Some of the forthcoming results are stated in terms of super-Brownian motion and its historical process. Without loss of generality we may and will assume that these processes are obtained by formulas (7) and (8) of the previous construction. This also means that we consider the special branching mechanism \(\psi _0(u)=2u^2\), but of course the case of a general quadratic branching mechanism can then be handled via scaling arguments.

3 The Brownian plane

3.1 The Brownian plane as a random metric space

We start by giving a characterization of the Brownian plane as a random pointed metric space satisfying appropriate properties. We let \({\mathbb {K}}_{bcl}\) denote the space of all isometry classes of pointed boundedly compact length spaces. The space \({\mathbb {K}}_{bcl}\) is equipped with the local Gromov–Hausdorff distance \({\mathrm {d}}_{LGH}\) (see [9, Section 2.1]) and is a Polish space, that is, separable and complete for this distance. For \(r>0\) and \(F\in {\mathbb {K}}_{bcl}\), we use the notation \(B_r(F)\) for the closed ball of radius r centered at the distinguished point of F. Note that \(B_r(F)\) is always viewed as a pointed compact metric space.

The Brownian plane \({\mathcal {P}}_\infty \) is then a random variable taking values in the space \({\mathbb {K}}_{bcl}\).

Definition 3.1

Let \(E_1\) and \(E_2\) be two random variables with values in \({\mathbb {K}}_{bcl}\). We say that \(E_1\) and \(E_2\) are locally isometric if, for every \(\delta >0\), there exists a number \(r>0\) and a coupling of \(E_1\) and \(E_2\) such that the balls \(B_r(E_1)\) and \(B_r(E_2)\) are isometric with probability at least \(1-\delta \).

We leave it to the reader to verify that this is an equivalence relation (only transitivity is not obvious). The interest of this definition comes from the next proposition. If E is a (random) metric space and \(\lambda >0\), we use the notation \(\lambda \cdot E\) for the same metric space where the distance has been multiplied by \(\lambda \).

Proposition 3.2

The distribution of the Brownian plane is characterized in the set of all probability measures on \({\mathbb {K}}_{bcl}\) by the following two properties:

(i) The Brownian plane is locally isometric to the Brownian map.

(ii) The Brownian plane is scale invariant, meaning that \(\lambda \cdot {\mathcal {P}}_\infty \) has the same distribution as \({\mathcal {P}}_\infty \), for every \(\lambda >0\).

Proof

The fact that property (i) holds is Theorem 1 in [9]. Property (ii) is immediate from the construction in [9], or directly from the convergence (1) in [9, Theorem 1]. So we just have to prove that these two properties characterize the distribution of the Brownian plane. Let E be a random variable with values in \({\mathbb {K}}_{bcl}\), which is both locally isometric to the Brownian map and scale invariant. Then, E is also locally isometric to the Brownian plane, and, for every \(\delta >0\), we can find \(r>0\) and a coupling of E and \({\mathcal {P}}_\infty \) such that

$$\begin{aligned} P\big (B_r(E)=B_r({\mathcal {P}}_\infty )\big )\ge 1-\delta , \end{aligned}$$

where the equality is in the sense of isometry between pointed compact metric spaces. Trivially this implies that, for every \(a>0\),

$$\begin{aligned} P\Big (B_a\big (\tfrac{a}{r}\cdot E\big )=B_a\big (\tfrac{a}{r}\cdot {\mathcal {P}}_\infty \big )\Big )\ge 1-\delta . \end{aligned}$$

By scale invariance, \(\frac{a}{r}\cdot E\) and \(\frac{a}{r}\cdot {\mathcal {P}}_\infty \) have the same distribution as E and \({\mathcal {P}}_\infty \) respectively. So we get that for every \(\delta >0\), for every \(a>0\), we can find a coupling of E and \({\mathcal {P}}_\infty \) such that

$$\begin{aligned} P\big (B_a(E)=B_a({\mathcal {P}}_\infty )\big )\ge 1-\delta . \end{aligned}$$

Recalling the definition of the local Gromov–Hausdorff distance \({\mathrm {d}}_{LGH}\) (see e.g. [9, Section 2.1]) we obtain that, for every \(\varepsilon >0\) and every \(\delta >0\), there exists a coupling of E and \({\mathcal {P}}_\infty \) such that

$$\begin{aligned} P\big ({\mathrm {d}}_{LGH}(E,{\mathcal {P}}_\infty )>\varepsilon \big )\le \delta . \end{aligned}$$

Clearly this implies that the Lévy–Prokhorov distance between the distributions of E and \({\mathcal {P}}_\infty \) is 0 and thus E and \({\mathcal {P}}_\infty \) have the same distribution. \(\square \)

3.2 A new construction of the Brownian plane

In this section, we provide a construction of the Brownian plane, which is different from the one in [9]. We then use Proposition 3.2 and Theorem 2.1 to prove the equivalence of the two constructions.

We consider a nine-dimensional Bessel process \(R=(R_t)_{t\ge 0}\) starting from 0 and, conditionally on R, two independent Poisson point measures \({\mathcal {N}}'({\mathrm {d}}t,{\mathrm {d}}\omega )\) and \({\mathcal {N}}''({\mathrm {d}}t,{\mathrm {d}}\omega )\) on \({\mathbb {R}}_+\times C({\mathbb {R}}_+,{\mathcal {W}})\) with the same intensity

$$\begin{aligned} 2\,\mathbf{1}_{\{W_*(\omega )>0\}}\,{\mathrm {d}}t\,{\mathbb {N}}_{R_t}({\mathrm {d}}\omega ). \end{aligned}$$

It will be convenient to write

$$\begin{aligned} {\mathcal {N}}'=\sum _{i\in I} \delta _{(t_i,\omega ^i)},\qquad {\mathcal {N}}''=\sum _{i\in J} \delta _{(t_i,\omega ^i)}, \end{aligned}$$

where the indexing sets I and J are disjoint.

We also consider the sum \({\mathcal {N}}={\mathcal {N}}'+{\mathcal {N}}''\), which conditionally on R is Poisson with intensity

$$\begin{aligned} 4\,\mathbf{1}_{\{W_*(\omega )>0\}}\,{\mathrm {d}}t\,{\mathbb {N}}_{R_t}({\mathrm {d}}\omega ), \end{aligned}$$

and we have

$$\begin{aligned} {\mathcal {N}}=\sum _{i\in I\cup J} \delta _{(t_i,\omega ^i)}. \end{aligned}$$ (9)

We start by introducing the infinite random tree that will be crucial in our construction of the Brownian plane. For every \(i\in I\cup J\), write \(\sigma _i=\sigma (\omega ^i)\) and let \((\zeta ^i_s)_{s\ge 0}\) be the lifetime process associated with \(\omega ^i\). Then the function \((\zeta ^i_s)_{0\le s\le \sigma _i}\) codes a rooted compact real tree, which is denoted by \({\mathcal {T}}^i\), and we write \(p_{\zeta ^i}\) for the canonical projection from \([0,\sigma _i]\) onto \({\mathcal {T}}^i\) (see e.g. [20, Section 2] for basic facts about the coding of trees by continuous functions). We construct a random non-compact real tree \({\mathcal {T}}_\infty \) by grafting to the half-line \([0,\infty )\) (which we call the “spine”) the tree \({\mathcal {T}}^i\) at point \(t_i\), for every \(i\in I\cup J\). Formally, the tree \({\mathcal {T}}_\infty \) is obtained from the disjoint union

$$\begin{aligned} {[}0,\infty )\cup \Bigg (\bigcup _{i\in I\cup J} {\mathcal {T}}^i\Bigg ) \end{aligned}$$

by identifying the point \(t_i\) of \([0,\infty )\) with the root \(\rho _i\) of \({\mathcal {T}}^i\), for every \(i\in I\cup J\). The metric \(d_\infty \) on \({\mathcal {T}}_\infty \) is determined as follows. The restriction of \(d_\infty \) to each tree \({\mathcal {T}}^i\) is (of course) the metric \(d_{{\mathcal {T}}^i}\) on \({\mathcal {T}}^i\). If \(x\in {\mathcal {T}}^i\) and \(t\in [0,\infty )\), we take \(d_\infty (x,t)= d_{{\mathcal {T}}^i}(x,\rho _i)+ |t_i-t|\). If \(x\in {\mathcal {T}}^i\) and \(y\in {\mathcal {T}}^j\), with \(i\not = j\), we take \(d_\infty (x,y)= d_{{\mathcal {T}}^i}(x,\rho _i) + |t_i-t_j| + d_{{\mathcal {T}}^j}(\rho _j,y)\). By convention, \({\mathcal {T}}_\infty \) is rooted at 0. The infinite tree \({\mathcal {T}}_\infty \) is equipped with a volume measure \({\mathbf {V}}\), which puts no mass on the spine and whose restriction to each tree \({\mathcal {T}}^i\) is the natural volume measure on \({\mathcal {T}}^i\) defined as the image of Lebesgue measure on \([0,\sigma _i]\) under the projection \(p_{\zeta ^i}\).
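The three cases in the definition of \(d_\infty \) can be sketched on a finite, discretized example. The sketch below is only illustrative: it assumes finitely many grafted trees, each coded by a sampled lifetime array starting and ending at 0, and the names `tree_distance`, `d_infty` and the dictionary `trees` are hypothetical and not part of the construction above.

```python
def tree_distance(zeta, s, t):
    """Distance between p_zeta(s) and p_zeta(t) in the tree coded by the
    sampled lifetime array zeta (assumed nonnegative with zeta[0] == 0)."""
    lo, hi = min(s, t), max(s, t)
    return zeta[s] + zeta[t] - 2 * min(zeta[lo:hi + 1])


def d_infty(x, y, trees):
    """Distance on the grafted tree.

    A point is ('spine', t) or ('tree', i, s), where s is an index into the
    lifetime array of tree i. `trees` maps i -> (t_i, zeta_i), with t_i the
    grafting point on the spine (hypothetical finite data)."""
    # Two points of the same subtree: use the metric of that subtree.
    if x[0] == 'tree' and y[0] == 'tree' and x[1] == y[1]:
        return tree_distance(trees[x[1]][1], x[2], y[2])

    def foot(p):
        # Returns (point of the spine below p, distance from p to that point).
        if p[0] == 'spine':
            return p[1], 0.0
        t_i, zeta = trees[p[1]]
        return t_i, zeta[p[2]]  # d(x, rho_i) is the height zeta_s

    fx, dx = foot(x)
    fy, dy = foot(y)
    # Go down to the spine, travel along it, and go up again.
    return dx + abs(fx - fy) + dy


trees = {0: (1.0, [0, 1, 2, 1, 0]), 1: (3.5, [0, 1, 0])}
print(d_infty(('tree', 0, 2), ('tree', 1, 1), trees))
```

Representing every point by its foot on the spine and its height above it covers both the spine-to-tree case and the case of two distinct trees with a single formula.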

We also define labels on the tree \({\mathcal {T}}_\infty \). The label \(\Lambda _x\) of a vertex \(x\in {\mathcal {T}}_\infty \) is defined by \(\Lambda _x= R_t\) if \(x=t\) belongs to the spine \([0,\infty )\), and \(\Lambda _x= \widehat{\omega }^i_s\) if \(x=p_{\zeta ^i}(s)\) belongs to the subtree \({\mathcal {T}}^i\), for some \(i\in I\cup J\). Note that the mapping \(x\mapsto \Lambda _x\) is continuous almost surely (for continuity at points of the spine, observe that, for every \(K>0\) and \(\varepsilon >0\), there are a.s. only finitely many values of \(i\in I\cup J\) such that \(t_i\le K\) and \(\sup \{|\widehat{\omega }^i_s-R_{t_i}|:s\ge 0\}>\varepsilon \)). For future use, we also notice that, if \(x=p_{\zeta ^i}(s)\) belongs to the subtree \({\mathcal {T}}^i\), the quantities \(\omega ^i_s(t)\), \(0\le t\le \zeta ^i_s\) are the labels of the ancestors of x in \({\mathcal {T}}^i\).

We will use the fact that labels are “transient” in the sense of the following lemma. Recall the notation \(\omega _*=W_*(\omega )\).

Lemma 3.3

We have a.s.

Proof

It is enough to verify that, for every \(A>0\), we have

However by construction,

using (4). The desired result easily follows from the fact that the integral \(\int ^\infty {\mathrm {d}}t\,(R_t)^{-3}\) is convergent. \(\square \)

Until now, we have not used the fact that \({\mathcal {N}}\) is decomposed in the form \({\mathcal {N}}={\mathcal {N}}'+{\mathcal {N}}''\). This decomposition corresponds intuitively to the fact that the trees \({\mathcal {T}}^i\) are grafted on the left side of the spine \([0,\infty )\) when \(i\in I\), and on the right side when \(i\in J\). We make this precise by defining an exploration process of the tree. To begin with, we define, for every \(u\ge 0\),

$$\begin{aligned} \tau '_u:=\sum _{i\in I} \mathbf{1}_{\{t_i\le u\}}\,\sigma _i,\qquad \tau ''_u:=\sum _{i\in J} \mathbf{1}_{\{t_i\le u\}}\,\sigma _i. \end{aligned}$$

Note that both \(u\mapsto \tau '_u\) and \(u\mapsto \tau ''_{u}\) are nondecreasing and right-continuous. The left limits of these functions are denoted by \(\tau '_{u-}\) and \(\tau ''_{u-}\) respectively, and \(\tau '_{0-}=\tau ''_{0-}=0\) by convention.

Then, for every \(s\ge 0\), there is a unique \(u\ge 0\), such that \(\tau '_{u-}\le s\le \tau '_{u}\), and:

- Either there is a (unique) \(i\in I\) such that \(u=t_i\), and we set

$$\begin{aligned} \Theta '_s:= p_{\zeta ^i}\left( s-\tau '_{t_i-}\right) . \end{aligned}$$

- Or there is no such i and we set \(\Theta '_s=u\).

We define similarly \((\Theta ''_s)_{s\ge 0}\) by replacing \((\tau '_u)_{u\ge 0}\) by \((\tau ''_u)_{u\ge 0}\) and I by J. Informally, \((\Theta '_s)_{s\ge 0}\) and \((\Theta ''_s)_{s\ge 0}\) correspond to the exploration of respectively the left and the right side of the tree \({\mathcal {T}}_\infty \). Noting that \(\Theta '_0=\Theta ''_0=0\), we define \((\Theta _s)_{s\in {\mathbb {R}}}\) by setting

$$\begin{aligned} \Theta _s:=\left\{ \begin{array}{ll} \Theta '_s &{}\quad \hbox {if } s\ge 0,\\ \Theta ''_{-s} &{}\quad \hbox {if } s\le 0. \end{array}\right. \end{aligned}$$

It is straightforward to verify that the mapping \(s\mapsto \Theta _s\) is continuous. We also note that the volume measure \({\mathbf {V}}\) on \({\mathcal {T}}_\infty \) is the image of Lebesgue measure on \({\mathbb {R}}\) under the mapping \(s\mapsto \Theta _s\).

This exploration process allows us to define intervals on \({\mathcal {T}}_\infty \). Let us make the convention that, if \(s>t\), the “interval” [s, t] is defined by \([s,t]=[s,\infty )\cup (-\infty ,t]\). Then, for every \(x,y\in {\mathcal {T}}_\infty \), there is a smallest interval [s, t], with \(s,t\in {\mathbb {R}}\), such that \(\Theta _s=x\) and \(\Theta _t=y\), and we define

$$\begin{aligned} {[}x,y]:=\{\Theta _r: r\in [s,t]\}. \end{aligned}$$

Note that \([x,y]\not =[y,x]\) unless \(x=y\). We may now turn to our construction of the Brownian plane. We set, for every \(x,y\in {\mathcal {T}}_\infty \),

$$\begin{aligned} D^\circ _\infty (x,y):=\Lambda _x+\Lambda _y-2\max \Big (\min _{z\in [x,y]}\Lambda _z,\min _{z\in [y,x]}\Lambda _z\Big ) \end{aligned}$$ (10)

and then

$$\begin{aligned} D_\infty (x,y):=\inf _{x_0=x,x_1,\ldots ,x_p=y}\ \sum _{i=1}^p D^\circ _\infty (x_{i-1},x_i) \end{aligned}$$ (11)

where the infimum is over all choices of the integer \(p\ge 1\) and of the finite sequence \(x_0,x_1,\ldots ,x_p\) in \({\mathcal {T}}_\infty \) such that \(x_0=x\) and \(x_p=y\). Note that we have

$$\begin{aligned} D_\infty (x,y)\ge |\Lambda _x-\Lambda _y| \end{aligned}$$ (12)

for every \(x,y\in {\mathcal {T}}_\infty \). Furthermore, it is immediate from our definitions that

$$\begin{aligned} D_\infty (0,x)=\Lambda _x \end{aligned}$$

for every \(x\in {\mathcal {T}}_\infty \). As a consequence of the continuity of the mapping \(s\mapsto \Lambda _{\Theta _s}\), we have \(D^\circ _\infty (x_0,x)\longrightarrow 0\) (hence also \(D_\infty (x_0,x)\longrightarrow 0\)) as \(x\rightarrow x_0\), for every \(x_0\in {\mathcal {T}}_\infty \).

It is not hard to verify that \(D_\infty \) is a pseudo-distance on \({\mathcal {T}}_\infty \). We put \(x\approx y\) if and only if \(D_\infty (x,y)=0\) and we introduce the quotient space \(\widetilde{\mathcal {P}}_\infty ={\mathcal {T}}_\infty \, /\approx \,\), which is equipped with the metric induced by \(D_\infty \) and with the distinguished point which is the equivalence class of 0. The volume measure on \(\widetilde{\mathcal {P}}_\infty \) is the image of the volume measure \({\mathbf {V}}\) on \({\mathcal {T}}_\infty \) under the canonical projection.
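The passage from labels to the pseudo-distance can be illustrated on a finite cyclic contour. The sketch below rests on assumptions that should be read as such: it uses the usual expression \(D^\circ (x,y)=\Lambda _x+\Lambda _y-2\max (\min _{[x,y]}\Lambda ,\min _{[y,x]}\Lambda )\) from the Brownian map literature, it treats each contour index as a separate point (so it ignores the choice of the smallest interval when a vertex is visited several times), and it replaces the two-sided exploration of \({\mathcal {T}}_\infty \) by a cycle. The function names are hypothetical.

```python
def arc_min(labels, s, t):
    """Minimum label on the arc going forward (cyclically) from index s to t."""
    n = len(labels)
    k, m = s, labels[s]
    while k != t:
        k = (k + 1) % n
        m = min(m, labels[k])
    return m


def d_circ(labels, s, t):
    """Discrete analogue of D°: labels at both ends minus twice the larger
    of the two arc minima."""
    return labels[s] + labels[t] - 2 * max(arc_min(labels, s, t),
                                           arc_min(labels, t, s))


def d_chain(labels):
    """Discrete analogue of D: infimum of sums of D° along finite chains,
    computed as all-pairs shortest paths (Floyd-Warshall)."""
    n = len(labels)
    D = [[d_circ(labels, s, t) for t in range(n)] for s in range(n)]
    for k in range(n):
        for s in range(n):
            for t in range(n):
                if D[s][k] + D[k][t] < D[s][t]:
                    D[s][t] = D[s][k] + D[k][t]
    return D


labels = [0, 1, 2, 1, 2, 3, 2, 1]
D = d_chain(labels)
print(D[0])      # distances from the point with label 0
print(D[1][3])   # two indices identified in the quotient
```

In this toy example the indices 1 and 3 are at pseudo-distance 0, which mirrors the identification \(x\approx y\) performed when passing to the quotient space.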

Theorem 3.4

The pointed metric space \(\widetilde{\mathcal {P}}_\infty \) is locally isometric to the Brownian map and scale invariant. Consequently, \(\widetilde{\mathcal {P}}_\infty \) is distributed as the Brownian plane \({\mathcal {P}}_\infty \).

Proof

The fact that \(\widetilde{\mathcal {P}}_\infty \) is scale invariant is easy from our construction. Hence the difficult part of the proof is to verify that \(\widetilde{\mathcal {P}}_\infty \) is locally isometric to the Brownian map. Let us start by briefly recalling the construction of the Brownian map \(\mathbf{m}_\infty \). We argue under the conditional excursion measure \({\mathbb {N}}_0^{(1)} ={\mathbb {N}}_0(\cdot \mid \sigma =1)\). Under \({\mathbb {N}}_0^{(1)}\), the lifetime process \((\zeta _s)_{0\le s\le 1}\) is a normalized Brownian excursion, and the tree \({\mathcal {T}}_\zeta \) coded by \((\zeta _s)_{0\le s\le 1}\) is the so-called CRT. As previously, \(p_\zeta \) stands for the canonical projection from [0, 1] onto \({\mathcal {T}}_\zeta \). We can define intervals on \({\mathcal {T}}_\zeta \) in a way analogous to what we did before for \({\mathcal {T}}_\infty \): If \(x,y\in {\mathcal {T}}_\zeta \), \([x,y]=\{p_\zeta (r):r\in [s,t]\}\), where [s, t] is the smallest interval such that \(p_\zeta (s)=x\) and \(p_\zeta (t)=y\), using now the convention that the interval [s, t] is defined by \([s,t]=[s,1]\cup [0,t]\) when \(s>t\). Then we equip \({\mathcal {T}}_\zeta \) with Brownian labels by setting \(\Gamma _x=\widehat{W}_s\) if \(x=p_\zeta (s)\). For every \(x,y\in {\mathcal {T}}_\zeta \), we define \(D^\circ (x,y)\), resp. D(x, y), by exactly the same formula as in (10), resp. (11), replacing \(\Lambda \) by \(\Gamma \). We have again the bound \(D(x,y)\ge |\Gamma _x-\Gamma _y|\). We then observe that D is a pseudo-distance on \({\mathcal {T}}_\zeta \), and the Brownian map \(\mathbf{m}_\infty \) is the associated quotient metric space. The distinguished point of \(\mathbf{m}_\infty \) is chosen as the (equivalence class of the) vertex \(x_\mathbf{m}\) of \({\mathcal {T}}_\zeta \) with minimal label, and we note that \(D(x_\mathbf{m},x)= \Gamma _x-\Gamma _{x_\mathbf{m}}= \Gamma _x-W_*\) for every \(x\in {\mathcal {T}}_\zeta \).

If we replace the normalized Brownian excursion by a Brownian excursion with duration \(r>0\), that is, if we argue under \({\mathbb {N}}_0^{(r)}\), and perform the same construction, simple scaling arguments show that the resulting pointed metric space is distributed as \(r^{1/4}\cdot \mathbf{m}_\infty \) and is thus locally isometric to \(\mathbf{m}_\infty \) (both are locally isometric to the Brownian plane). Consequently, under the probability measure

$$\begin{aligned} {\mathbb {N}}_0(\cdot \mid \sigma >1) \end{aligned}$$

the preceding construction also yields a random pointed metric space which is locally isometric to \(\mathbf{m}_\infty \). Let us write \({\mathbf {M}}\) for this random pointed metric space. We will argue that \({\mathbf {M}}\) is locally isometric to \(\widetilde{\mathcal {P}}_\infty \), which will complete the proof. Some of the arguments that follow are similar to those used in [9, Proof of Proposition 4] to verify that the Brownian plane is locally isometric to the Brownian map.

We set for every \(b>0\),

where we used the notation \(\tau _D({\mathrm {w}})\) introduced in Sect. 2.2. Still with the notation of this subsection, the random variable \(A_b\) is \({\mathcal {E}}_b\)-measurable, and it follows that

is a stopping time of the filtration \(({\mathcal {E}}_a)_{a>0}\). Observe that \(\{H<\infty \}=\{\sigma >1\}\), \({\mathbb {N}}_0\) a.e. From Proposition 2.3, we get that under the probability measure \({\mathbb {N}}_0(\cdot \mid \sigma >1)\), and conditionally on the pair \((H,{\mathcal {Z}}_{-H})\), the excursions of the Brownian snake outside \((-H,\infty )\) form a Poisson point process with intensity \({\mathcal {Z}}_{-H}\,{\mathbb {N}}_{-H}\) (incidentally this also implies that \({\mathcal {Z}}_{-H}>0\) a.e. on \(\{\sigma >1\}\)). Among the excursions outside \((-H,\infty )\), there is exactly one that attains the minimal value \(W_*\), and conditionally on \(H=h\) and \(W_*=a\) (with \(a<-h\)), this excursion is distributed according to \({\mathbb {N}}_{-h}(\cdot \mid W_*=a)\).

Now compare Theorem 2.1 with the construction of \(\widetilde{\mathcal {P}}_\infty \) given above to see that we can find a coupling of the Brownian snake under \({\mathbb {N}}_0(\cdot \mid \sigma >1)\) and of the triplet \((R,{\mathcal {N}}',{\mathcal {N}}'')\) determining the labeled tree \(({\mathcal {T}}_\infty , (\Lambda _x)_{x\in {\mathcal {T}}_\infty })\), in such a way that the following properties hold. There exists a (random) real \(\delta >0\) and an isometry \({\mathcal {I}}\) from the ball \(B_\delta ({\mathcal {T}}_\zeta )\) [centered at the distinguished vertex \(x_\mathbf{m}=p_\zeta (s_\mathbf{m})\)] onto the ball \(B_\delta ({\mathcal {T}}_\infty )\) (centered at 0). This isometry preserves intervals, in the sense that if \(x, y \in B_\delta ({\mathcal {T}}_\zeta )\), \({\mathcal {I}}([x,y] \cap B_\delta ({\mathcal {T}}_\zeta ))= [{\mathcal {I}}(x),{\mathcal {I}}(y)] \cap B_\delta ({\mathcal {T}}_\infty )\). Furthermore, the isometry \({\mathcal {I}}\) preserves labels up to a shift by \(-W_*\), meaning that \(\Lambda _{{\mathcal {I}}(x)}= \Gamma _{x} - W_*\) for every \(x\in B_\delta ({\mathcal {T}}_\zeta )\). Consequently, we have

for every \(x\in B_\delta ({\mathcal {T}}_\zeta )\).

Next we can choose \(\eta >0\) small enough so that labels on \({\mathcal {T}}_\zeta \backslash B_\delta ({\mathcal {T}}_\zeta )\) are all strictly larger than \(W_*+ 2\eta \) and labels on \({\mathcal {T}}_\infty \backslash B_\delta ({\mathcal {T}}_\infty )\) are all strictly larger than \(2\eta \) (we use Lemma 3.3 here). In particular, if \(x\in {\mathcal {T}}_\zeta \), the condition \(D(x_\mathbf{m},x)\le 2\eta \) implies that \(x\in B_\delta ({\mathcal {T}}_\zeta )\), and, if \(x'\in {\mathcal {T}}_\infty \), the condition \(D_\infty (0,x')\le 2\eta \) implies that \(x'\in B_\delta ({\mathcal {T}}_\infty )\). We claim that

$$\begin{aligned} D(x,y)=D_\infty ({\mathcal {I}}(x),{\mathcal {I}}(y)) \end{aligned}$$ (13)

for every \(x,y\in {\mathcal {T}}_\zeta \) such that \(D(x_\mathbf{m},x)\le \eta \) and \(D(x_\mathbf{m},y)\le \eta \). To verify this claim, first note that, if \(x',y'\in {\mathcal {T}}_\infty \) are such that \(D_\infty (0,x')=\Lambda _{x'}\le 2\eta \) and \(D_\infty (0,y')=\Lambda _{y'}\le 2\eta \), we can compute \(D^\circ _\infty (x',y')\) using formula (10), and in the right-hand side of this formula we may replace the interval \([ x',y']\) by \([ x',y']\cap B_\delta ({\mathcal {T}}_\infty )\) (because obviously the minimal value of \(\Lambda \) on \([ x',y']\) is attained on \([ x',y']\cap B_\delta ({\mathcal {T}}_\infty )\)). A similar replacement may be made in the analogous formula for \(D^\circ (x,y)\) when \(x,y\in {\mathcal {T}}_\zeta \) are such that \(\Gamma _{x}\le W_*+2\eta \) and \(\Gamma _{y}\le W_*+2\eta \). Using the isometry \({\mathcal {I}}\), we then obtain that

$$\begin{aligned} D^\circ (x,y)=D^\circ _\infty ({\mathcal {I}}(x),{\mathcal {I}}(y)) \end{aligned}$$ (14)

for every \(x,y\in {\mathcal {T}}_\zeta \) such that \(D(x_\mathbf{m},x)\le 2\eta \) and \(D(x_\mathbf{m},y)\le 2\eta \). Then, let \(x',y'\in {\mathcal {T}}_\infty \) be such that \(\Lambda _{x'}\le \eta \) and \(\Lambda _{y'}\le \eta \). If we use formula (11) to evaluate \(D_\infty (x',y')\), we may in the right-hand side of this formula restrict our attention to “intermediate” points \(x_i\) whose label \(\Lambda _{x_i}\) is smaller than \(2\eta \) (indeed if one of the intermediate points has a label strictly greater than \(2\eta \), then it follows from (12) that the sum in the right-hand side of (11) is strictly greater than \(2\eta \ge D_\infty (x',y')\)). A similar observation holds if we use the analog of (11) to compute D(x, y) when \(x,y\in {\mathcal {T}}_\zeta \) are such that \(D(x_\mathbf{m},x)\le \eta \) and \(D(x_\mathbf{m},y)\le \eta \). Our claim (13) is a consequence of the preceding considerations and (14).

It follows from (13) that \({\mathcal {I}}\) induces an isometry from the ball \(B_\eta ({\mathbf {M}})\) onto the ball \(B_\eta (\widetilde{\mathcal {P}}_\infty )\). This implies that \({\mathbf {M}}\) is locally isometric to \(\widetilde{\mathcal {P}}_\infty \), and the proof is complete. \(\square \)

In view of Theorem 3.4, we may and will write \({\mathcal {P}}_\infty \) instead of \(\widetilde{\mathcal {P}}_\infty \) for the random metric space that we constructed in the first part of this subsection. We denote the canonical projection from \({\mathcal {T}}_\infty \) onto \({\mathcal {P}}_\infty \) by \(\Pi \). The fact that \(D_\infty (x_0,x)\longrightarrow 0\) as \(x\rightarrow x_0\), for every fixed \(x_0\in {\mathcal {T}}_\infty \), shows that \(\Pi \) is continuous. The argument of the preceding proof makes it possible to transfer several known properties of the Brownian map to the space \({\mathcal {P}}_\infty \). First, for every \(x,y\in {\mathcal {T}}_\infty \), we have

$$\begin{aligned} D_\infty (x,y)=0\quad \hbox {if and only if}\quad D^\circ _\infty (x,y)=0. \end{aligned}$$

Indeed this property will hold for x and y belonging to a sufficiently small ball centered at 0 in \({\mathcal {T}}_\infty \), by [21, Theorem 3.4] and the coupling argument explained in the preceding proof. The scale invariance of the Brownian plane then completes the argument. Similarly, we have the so-called “cactus bound”, for every \(x,y\in {\mathcal {T}}_\infty \) and every continuous path \((\gamma (t))_{0\le t\le 1}\) in \({\mathcal {P}}_\infty \) such that \(\gamma (0)=\Pi (x)\) and \(\gamma (1)=\Pi (y)\),

$$\begin{aligned} \min _{0\le t\le 1} D_\infty (0,\gamma (t))\le \min _{z\in \llbracket x,y\rrbracket }\Lambda _z, \end{aligned}$$ (15)

where \(\llbracket x, y\rrbracket \) stands for the geodesic segment between x and y in the tree \({\mathcal {T}}_\infty \). The bound (15) follows from the analogous result for the Brownian map [22, Proposition 3.1] and the coupling argument of the preceding proof.

Since labels correspond to distances from the distinguished point, we have, for every \(r>0\),

$$\begin{aligned} B_r({\mathcal {P}}_\infty )=\Pi \big (\{x\in {\mathcal {T}}_\infty : \Lambda _x\le r\}\big ). \end{aligned}$$

Recall the definition of the hull \(B_r^\bullet ({\mathcal {P}}_\infty )\) in Sect. 1. We claim that

$$\begin{aligned} B_r^\bullet ({\mathcal {P}}_\infty )=\Pi \Big (\Big \{x\in {\mathcal {T}}_\infty : \min _{y\in \llbracket x,\infty \llbracket }\Lambda _y\le r\Big \}\Big ), \end{aligned}$$ (16)

where \(\llbracket x,\infty \llbracket \) is the geodesic path from x to \(\infty \) in the tree \({\mathcal {T}}_\infty \). The fact that \(B_r^\bullet ({\mathcal {P}}_\infty )\) is contained in the right-hand side of (16) is easy: If \(x\in {\mathcal {T}}_\infty \) is such that \(\Lambda _y > r\) for every \(y\in \llbracket x,\infty \llbracket \), then \(\Pi (\llbracket x,\infty \llbracket )\) gives a continuous path going from \(\Pi (x)\) to \(\infty \) and staying outside the ball \(B_r({\mathcal {P}}_\infty )\). Conversely, suppose that \(x\in {\mathcal {T}}_\infty \) is such that

Then, if \((\gamma (t))_{t\ge 0}\) is any continuous path going from \(\Pi (x)\) to \(\infty \) in \({\mathcal {P}}_\infty \), the bound (15) leads to

and it follows that \(\Pi (x)\in B_r^\bullet ({\mathcal {P}}_\infty )\), proving our claim.

Write \(\partial B_r^\bullet ({\mathcal {P}}_\infty )\) for the topological boundary of \(B_r^\bullet ({\mathcal {P}}_\infty )\). It follows from (16) that

$$\begin{aligned} \partial B_r^\bullet ({\mathcal {P}}_\infty )=\Pi \big (\{x\in {\mathcal {T}}_\infty : \Lambda _x=r \hbox { and } \Lambda _y>r \hbox { for every } y\in \,\rrbracket x,\infty \llbracket \}\big ), \end{aligned}$$

with the obvious notation \(\rrbracket x,\infty \llbracket \). The latter formula motivates the definition of the (generalized) length of the boundary of \(B_r^\bullet ({\mathcal {P}}_\infty )\). We observe that this boundary contains (the image under \(\Pi \) of) a single point on the spine, corresponding to the last visit of r by the process R,

$$\begin{aligned} L_r:=\sup \{t\ge 0: R_t=r\}. \end{aligned}$$

Any other point \(x\in {\mathcal {T}}_\infty \) such that \( \Lambda _x=r\) and \(\Lambda _y > r\) for every \(y\in \,\rrbracket x,\infty \llbracket \) must be of the form \(p_{\zeta ^i}(s)\), for some \(i\in I\cup J\), with \(t_i>L_r\), and some \(s\in [0,\sigma _i]\) such that the path \(\omega ^i_s\) hits r exactly at its lifetime. For each fixed i (with \(t_i>L_r\)), the “quantity” of such values of s is measured by the total mass \({\mathcal {Z}}_r(\omega ^i)\) of the exit measure of \(\omega ^i\) from \((r,\infty )\). Here we use the same notation \({\mathcal {Z}}_r=\langle {\mathcal {Z}}^{(r,\infty )},1\rangle \) as previously.

Following the preceding discussion, we define, for every \(r>0\),

$$\begin{aligned} Z_r:=\sum _{i\in I\cup J} \mathbf{1}_{\{t_i>L_r\}}\,{\mathcal {Z}}_r(\omega ^i). \end{aligned}$$ (18)

We observe that the quantities \({\mathcal {Z}}_r(\omega ^i)\) in (18) are well defined since each \(\omega ^i\) is a Brownian snake excursion starting from \(R_{t_i}\) and the condition \(t_i>L_r\) guarantees that \(R_{t_i}>r\). We interpret \(Z_r\) as measuring the size of the boundary of the hull \(B_r^\bullet ({\mathcal {P}}_\infty )\).

Note that at the present stage, it is not clear that the random variable \(Z_r\) coincides with the one introduced in Proposition 1.1 (which we have not yet proved). At the end of Sect. 4.1 below, we will verify that the approximation result of Proposition 1.1 holds with the preceding definition of \(Z_r\).

4 The volume of hulls

4.1 The process of boundary lengths

Our main goal in this subsection is to describe the distribution of the process \((Z_r)_{r>0}\). We fix \(a>0\). By formula (18) and the exponential formula for Poisson measures, we have, for every \(\lambda \ge 0\),

The quantity in the right-hand side will be computed via the following two lemmas.

Lemma 4.1

For every \(x>a\) and \(\lambda \ge 0\),

Proof

We have

by (4). In order to compute the first term in the right-hand side, we observe that we have \({\mathcal {R}}\subset (a,\infty )\subset (0,\infty )\) on the event \(\{{\mathcal {Z}}_a=0\}\), \({\mathbb {N}}_x\) a.e., by (5). Therefore, we can write

In the second equality we used the special Markov property, together with formula (4), to obtain that the conditional probability of the event \(\{{\mathcal {R}}\subset (0,\infty )\}\) given \({\mathcal {Z}}_a\) is \(\exp (-\frac{3{\mathcal {Z}}_a}{2a^2})\). The formula of the lemma follows from the preceding two displays and (6). \(\square \)

Lemma 4.2

For every \(\alpha \in (0,a)\),

Proof

By dominated convergence, we have

Let us fix \(b>a\). By the time-reversal property of Bessel processes already mentioned after the statement of Theorem 2.1, the process \((\widetilde{R}_t)_{t\ge 0}\) defined by

is a Bessel process of dimension \(-5\) started from b. Set \(T_a:=\inf \{t\ge 0:\widetilde{R}_t=a\}=L_b-L_a\). Write \((B_t)_{t\ge 0}\) for a one-dimensional Brownian motion which starts from r under the probability measure \(P_r\), and for every \(y\in {\mathbb {R}}\), let \(\gamma _y:=\inf \{t\ge 0:B_t=y\}\). Then,

where the last equality is a special case of the classical absolute continuity relations between Bessel processes (see [25, Lemma 1] for this special case). Next observe that

where the second equality is well known (and can again be viewed as a consequence of Lemma 1 in [25]). By combining the last two displays, we get

and the desired result follows by letting \(b\uparrow \infty \). \(\square \)

We can now identify the law of \(Z_a\).

Proof of Proposition 1.2 (i)

We start from formula (19) and use first Lemma 4.1 and then Lemma 4.2 to obtain, for every \(\lambda \ge 0\),

which yields the desired result. \(\square \)
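The way the two lemmas combine can be checked numerically. The sketch below is not taken from the text: it assumes that Lemma 4.1 gives \(\frac{3}{2}\big ((x-\alpha )^{-2}-x^{-2}\big )\) with \(\alpha =a-(\frac{2\lambda }{3}+a^{-2})^{-1/2}\), that Lemma 4.2 gives \((1-\alpha /a)^3\) for the corresponding exponential functional, and that the resulting law of \(Z_a\) is a Gamma distribution with shape 3/2 and mean \(a^2\). All function names are hypothetical.

```python
import math


def alpha_from_lambda(lam, a):
    """Shift alpha in [0, a) under the assumed form of Lemma 4.1."""
    return a - (2.0 * lam / 3.0 + a ** -2) ** -0.5


def laplace_via_lemmas(lam, a):
    """(1 - alpha/a)^3, the value assumed to come out of Lemma 4.2."""
    return (1.0 - alpha_from_lambda(lam, a) / a) ** 3


def laplace_closed_form(lam, a):
    """Laplace transform of a Gamma law with shape 3/2 and mean a^2."""
    return (1.0 + 2.0 * lam * a * a / 3.0) ** -1.5


def laplace_gamma_numeric(lam, a, n=20000):
    """Midpoint-rule integration of exp(-lam z) against the Gamma density
    with shape 3/2 and scale 2a^2/3, after the substitution z = u^2."""
    theta = 2.0 * a * a / 3.0
    rate = lam + 1.0 / theta
    upper = math.sqrt(50.0 / rate)  # the integrand is negligible beyond this
    h = upper / n
    total = 0.0
    for k in range(n):
        u = (k + 0.5) * h
        total += 2.0 * u * u * math.exp(-rate * u * u)
    return total * h / (math.gamma(1.5) * theta ** 1.5)


for lam, a in [(0.0, 1.0), (0.7, 1.3), (5.0, 0.4)]:
    print(lam, a, laplace_via_lemmas(lam, a), laplace_closed_form(lam, a),
          laplace_gamma_numeric(lam, a))
```

Under these assumptions the three computed values agree up to numerical error, which is consistent with \(Z_a\) having mean \(a^2\).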

Our next goal is to obtain the law of the whole process \((Z_a)_{a\ge 0}\), where by convention we take \(Z_0=0\). To this end it is convenient to introduce a “backward” filtration \(({\mathcal {G}}_a)_{a\ge 0}\), which we will define after introducing some notation. If \({\mathrm {w}}\in {\mathcal {W}}\), we set \(\tau _a({\mathrm {w}}):=\inf \{t\ge 0: {\mathrm {w}}(t)\notin (a,\infty )\}\), with the usual convention \(\inf \varnothing =\infty \). Then, let \(a\ge 0\) and \(x>a\), and let \(\omega =(\omega _s)_{s\ge 0}\in C({\mathbb {R}}_+,{\mathcal {W}}_x)\) be such that \(\omega _s=x\) for all s large enough. For every \(s\ge 0\), we define \(\mathrm{tr}_a(\omega )_s \in {\mathcal {W}}_x\) by the formula

where, for every \(s\ge 0\),

From the properties of the Brownian snake, it is easy to verify that \({\mathbb {N}}_x({\mathrm {d}}\omega )\) a.e., \(\mathrm{tr}_a(\omega )\) belongs to \(C({\mathbb {R}}_+,{\mathcal {W}}_x)\), and the paths \(\mathrm{tr}_a(\omega )_s\) do not visit \((-\infty ,a)\), and may visit a only at their endpoint (what we have done is to remove those paths that hit a and survive for some positive time after hitting a). Note that we are using a particular instance of the time change \(\eta ^D_s\) introduced when defining the \(\sigma \)-field \({\mathcal {E}}^D\) in Sect. 2.2 (indeed, the \(\sigma \)-field \({\mathcal {E}}^{(a,\infty )}\) is generated by the mapping \(\omega \mapsto \mathrm{tr}_a(\omega )\) up to negligible sets).

Recall formula (9) for the point measure \({\mathcal {N}}\). For every \(a\ge 0\), we let \({\mathcal {G}}_a\) be the \(\sigma \)-field generated by the process \((R_{L_a+t})_{t\ge 0}\) and the point measure

and by the P-negligible sets. Note that, in the definition of \({\mathcal {N}}^{(a)}\), we keep only those excursions that start from the “spine” at a time greater than \(L_a\) (so that obviously their initial point is greater than a) and we truncate these excursions at level a. Also notice that \({\mathcal {N}}^{(0)}={\mathcal {N}}\).

From our definitions it is clear that \({\mathcal {G}}_a\supset {\mathcal {G}}_b\) if \(a<b\). Furthermore, it follows from the measurability property of exit measures that \(Z_a\) is \({\mathcal {G}}_a\)-measurable, for every \(a>0\) (the point is that \({\mathcal {Z}}_a(\omega ^i)\) is equal a.s. to a measurable function of \(\mathrm{tr}_a(\omega ^i)\), see [18, Proposition 2.3]). We also notice that, for every \(a>0\), the process \((R_{L_a+t})_{t\ge 0}\) is independent of \((R_t)_{0\le t\le L_a}\). This follows from last exit decompositions for diffusion processes, or in a more straightforward way this can be deduced from the time-reversal property already mentioned above.

Proposition 4.3

Let \(0<a<b\). Then, for every \(\lambda \ge 0\),

If \(b>0\) is fixed, the proposition shows that the process \((Z_{b-a})_{0\le a<b}\) is time-inhomogeneous Markov with respect to the (forward) filtration \(({\mathcal {G}}_{b-a})_{0\le a<b}\), and identifies the Laplace transform of the associated transition kernels. Since the law of \(Z_b\) is also given by Proposition 1.2 (i), this completely characterizes the law of the process \((Z_a)_{a\ge 0}\). The more explicit description of this law given in Proposition 1.2 (ii) will be derived later.

Proof

Recall that \(0<a<b\) are fixed. We write

where

From the fact that \((R_{L_b+t})_{t\ge 0}\) is independent of \((R_t)_{0\le t\le L_b}\) and properties of Poisson measures, it easily follows that \(Y_{a,b}\) and \(\widetilde{Y}_{a,b}\) are independent, and more precisely \(\widetilde{Y}_{a,b}\) is independent of \(\sigma (Y_{a,b})\vee {\mathcal {G}}_b\). This implies that

From the special Markov property (see also the remark following Proposition 2.2), we have

where the last equality is Lemma 4.1.

Using Proposition 1.2 (i), we thus have

and since \(Y_{a,b}\) and \(\widetilde{Y}_{a,b}\) are independent,

The statement of the proposition follows from (20) and the preceding calculations. \(\square \)

We will now identify the transition kernels whose Laplace transform appears in the previous proposition. To this end, we recall the discussion of Sect. 2.1, which we will apply with the particular value \(c= \sqrt{8/3}\).

Proposition 4.4

Let \(\rho >0\) and \(x>0\). The finite-dimensional marginal distributions of \((Z_{\rho -a})_{0\le a\le \rho }\) knowing that \(Z_\rho =x\) coincide with those of the continuous-state branching process with branching mechanism \(\psi (u)=\sqrt{8/3}\,u^{3/2}\) started from x and conditioned on extinction at time \(\rho \).
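The branching mechanism \(\psi (u)=\sqrt{8/3}\,u^{3/2}\) appearing in this statement can be explored numerically. The sketch below relies on standard facts about continuous-state branching processes rather than on formulas quoted from Sect. 2.1: the Laplace exponent \(u_t(\lambda )\) solves \(\partial _t u_t=-\psi (u_t)\) with \(u_0=\lambda \), which for \(\psi (u)=c\,u^{3/2}\) gives \(u_t(\lambda )=(\lambda ^{-1/2}+ct/2)^{-2}\), and the extinction probability is \(\exp (-x\,u_t(\infty ))\). The function names are hypothetical.

```python
import math

C = math.sqrt(8.0 / 3.0)  # the constant c of the branching mechanism


def psi(u):
    """Branching mechanism psi(u) = c u^{3/2}."""
    return C * u ** 1.5


def u_closed(t, lam):
    """Closed-form solution of du/dt = -psi(u), u(0) = lam (lam > 0)."""
    return (lam ** -0.5 + C * t / 2.0) ** -2


def u_rk4(t, lam, steps=2000):
    """Same quantity obtained by integrating the ODE with classical RK4."""
    h = t / steps
    u = lam
    for _ in range(steps):
        k1 = -psi(u)
        k2 = -psi(u + 0.5 * h * k1)
        k3 = -psi(u + 0.5 * h * k2)
        k4 = -psi(u + h * k3)
        u += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return u


def extinction_probability(x, t):
    """P_x(X_t = 0) = exp(-x u_t(infinity)) = exp(-3x / (2 t^2)) for this c."""
    return math.exp(-3.0 * x / (2.0 * t * t))


print(u_closed(1.5, 2.0), u_rk4(1.5, 2.0))
print(extinction_probability(2.0, 1.0))
```

The exponent \(3x/(2t^2)\) has the same form as the quantity \(3{\mathcal {Z}}_a/(2a^2)\) appearing in the proof of Lemma 4.1, which is one way to see why the value \(c=\sqrt{8/3}\) is the relevant one here.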

Proof

Recall the notation introduced in Sect. 2.1. By comparing the right-hand side of (3) with the formula of Proposition 4.3, we immediately see that, for \(0\le s<t<\rho \),

Arguing inductively, we obtain that, for every \(0<s_1<\cdots <s_p<\rho \), the conditional distribution of \((Z_{\rho -{s_1}},\ldots ,Z_{\rho -s_p})\) knowing \({\mathcal {G}}_\rho \) is \(\pi _{0,s_1}(Z_{\rho },{\mathrm {d}}y_1)\pi _{s_1,s_2}(y_1,{\mathrm {d}}y_2)\ldots \pi _{s_{p-1},s_p}(y_{p-1},{\mathrm {d}}y_p)\). The desired result follows. \(\square \)

We can now complete the proof of Proposition 1.2.

Proof of Proposition 1.2 (ii)

We first verify that \(Z_a\) and \(\widetilde{X}_{-a}\) have the same distribution, for every fixed \(a>0\). Let \(\lambda >0\) and set \(f(y)=e^{-\lambda y}\) to simplify notation. By the properties of the process \(\widetilde{X}\), we have

where \(T=\inf \{t\ge 0: X_t=0\}\) as previously. On the other hand, recalling the definition of the functions \(\phi _t\) in Sect. 2.1,

where dominated convergence is easily justified by the fact that \(E_x[T]<\infty \) and \(\phi _b(0)=0\) for every \(b>0\). Now use the form of \(\phi _a\) together with formula (2) (with \(c= \sqrt{8/3}\)) to see that the right-hand side of the last display is equal to

We then let \(x\uparrow \infty \) to get that

by assertion (i) of the proposition.

Knowing that \(Z_a\) and \(\widetilde{X}_{-a}\) have the same distribution, the proof is completed as follows. We observe that, for every \(a>0\), the law of \((\widetilde{X}_{-a+t})_{0\le t\le a}\) conditionally on \(\widetilde{X}_{-a}=x\) coincides with the law of X started from x and conditioned on extinction at time a (we leave the easy verification to the reader). By comparing with Proposition 4.4, we get the desired statement. \(\square \)

As a consequence of Proposition 1.2, the process \((Z_r)_{r>0}\) has a càdlàg modification, and from now on we deal only with this modification. We conclude this subsection by proving Proposition 1.1: we need to verify that our definition of the random variable \(Z_r\) matches the approximation given in this proposition.

Proof of Proposition 1.1

If \(x\in {\mathcal {T}}_\infty \) and x is not on the spine, the point \(\Pi (x)\) belongs to \(B_r^\bullet ({\mathcal {P}}_\infty )^c\cap B_{r+\varepsilon }({\mathcal {P}}_\infty )\) if and only if \(\Lambda _x\in (r,r+\varepsilon ]\) and \(\Lambda _y>r\) for every \(y\in \llbracket x,\infty \llbracket \). Recalling our notation \({\mathbf {V}}\) for the volume measure on \({\mathcal {T}}_\infty \), we can thus write

We will first deal with indices i such that \(t_i>L_{r+\varepsilon }\), and we set

to simplify notation. Recall that if \(x\in {\mathcal {T}}^i\) and \(x=p_{\zeta ^i}(s)\), we have \(\Lambda _x=\widehat{\omega }^i_s\) and \(\{\Lambda _y:y\in \llbracket \rho _i,x\rrbracket \}=\{\omega ^i_s(t):0\le t\le \zeta ^i_s\}\). An application of the special Markov property shows that the conditional distribution of \(A_\varepsilon \) knowing \(Z_{r+\varepsilon }\) is the law of \(U_\varepsilon (Z_{r+\varepsilon })\), where \(U_\varepsilon \) is a subordinator whose Lévy measure is the “law” of

under \({\mathbb {N}}_{r+\varepsilon }\) (and \(U_\varepsilon \) is assumed to be independent of \(Z_{r+\varepsilon }\)). From the first moment formula for the Brownian snake [19, Proposition IV.2], one easily derives that

where we have used the notation of the proof of Lemma 4.2. On the other hand, scaling arguments show that

and the law of large numbers implies that \(t^{-1}U_1(t)\) converges a.s. to 1 as \(t\rightarrow \infty \). Since the conditional distribution of \(\varepsilon ^{-2} A_\varepsilon \) knowing \(Z_{r+\varepsilon }\) is the law of \(\varepsilon ^{2}U_1(\frac{Z_{r+\varepsilon }}{\varepsilon ^2})\), it follows from the preceding observations that

in probability. Since \(Z_{r+\varepsilon }\) converges to \(Z_r\) as \(\varepsilon \rightarrow 0\), we conclude that

in probability. To complete the proof, we just have to check that

in probability. We leave the easy verification to the reader. \(\square \)

4.2 The law of the volume of the hull

This subsection is devoted to the proof of Theorem 1.4. We fix \(a>0\) and recall our notation \(B^\bullet _a({\mathcal {P}}_\infty )\) for the hull of radius a in the Brownian plane \({\mathcal {P}}_\infty \). To simplify notation, we write \(B^\bullet _a\) instead of \(B^\bullet _a({\mathcal {P}}_\infty )\), and we also write \(|B^\bullet _a|\) for the volume of this hull. Recall that \(Z_a\) is interpreted as a generalized length of the boundary of \(B^\bullet _a\).

Thanks to the construction of the Brownian plane explained in Sect. 3.2 and to formula (16), we can express the volume \(|B^\bullet _a|\) as the sum of two independent contributions, namely, on the one hand, the total volume of those subtrees that branch off the spine below level \(L_a\), and, on the other hand, the contribution of the subtrees that branch off the spine above level \(L_a\) (for these, we need to sum, over all indices \(i\in I\cup J\) with \(t_i>L_a\), the Lebesgue measure of the set of all \(s\in [0,\sigma _i]\) such that the path \(\omega ^i_s\) hits level a).

The beginning of this subsection is devoted to calculating the Laplace transform of the first of these two contributions. Thanks to Theorem 2.1, this is also the Laplace transform of \(\sigma \) under the conditional probability measure \({\mathbb {N}}_a(\cdot \mid W_*=0)\). This motivates the following calculations.

We recall the notation \({\mathcal {Z}}_0\) for the (total mass of the) exit measure from \((0,\infty )\), and we also set

where we recall that \(\tau _0({\mathrm {w}})=\inf \{t\ge 0:{\mathrm {w}}(t)\notin (0,\infty )\}\). We note that \({\mathcal {Y}}_0=\sigma \) under the conditional probability measure \({\mathbb {N}}_a(\cdot \mid W_*=0)\). Our first goal is to compute, for every \(\lambda ,\mu > 0\), the function \(u_{\lambda ,\mu }(x)\) defined for every \(x>0\) by

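Note that \(u_{\lambda ,0}(x)\) is given by formula (6). On the other hand, the limit of \(u_{\lambda ,\mu }\) as \(\lambda \uparrow \infty \) is

$$\begin{aligned} u_{\infty ,\mu }(x)= \sqrt{\frac{\mu }{2}}\bigg ( 3\coth ^2\big ((2\mu )^{1/4} x\big ) -2 \bigg ) \end{aligned}$$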

by [14, Lemma 7]. The latter formula is generalized in the next lemma.

Lemma 4.5

We have, for every \(x>0\):

-

if \(\lambda > \sqrt{\frac{\mu }{2}}\),

$$\begin{aligned} u_{\lambda ,\mu }(x)= \sqrt{\frac{\mu }{2}}\bigg ( 3\bigg (\coth ^2\bigg ((2\mu )^{1/4} x +\coth ^{-1}\sqrt{\frac{2}{3} +\frac{1}{3} \sqrt{\frac{2}{\mu }}\lambda }\bigg )\bigg ) -2 \bigg ); \end{aligned}$$

-

if \(\lambda < \sqrt{\frac{\mu }{2}}\),

$$\begin{aligned} u_{\lambda ,\mu }(x)= \sqrt{\frac{\mu }{2}}\bigg ( 3\bigg (\tanh ^2\bigg ((2\mu )^{1/4} x +\tanh ^{-1}\sqrt{\frac{2}{3} +\frac{1}{3} \sqrt{\frac{2}{\mu }}\lambda }\bigg )\bigg ) -2 \bigg ). \end{aligned}$$

Remark

If \(\lambda = \sqrt{\frac{\mu }{2}}\), we have simply

$$\begin{aligned} u_{\lambda ,\mu }(x)= \sqrt{\frac{\mu }{2}}. \end{aligned}$$

This can be obtained by a passage to the limit from the previous formulas, but a direct proof is also easy.

Proof

By results due to Dynkin, the function \(u_{\lambda ,\mu }\) solves the differential equation

$$\begin{aligned} \frac{1}{2}\,u''=2u^2-\mu \qquad (22) \end{aligned}$$

This is indeed a very special case of Theorem 3.1 in [13]. For the reader who is unfamiliar with the general theory of superprocesses, a direct proof can be given along the lines of the proof of Lemma 6 in [14].

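It is also easy to verify that

$$\begin{aligned} u_{\lambda ,\mu }(0)=\lambda ,\qquad u_{\lambda ,\mu }(\infty )=\sqrt{\frac{\mu }{2}}. \end{aligned}$$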

The formulas of the lemma then follow by solving Eq. (22), which requires some tedious but straightforward calculations. \(\square \)
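The closed forms of Lemma 4.5 can be checked numerically. The following sketch is ours, with hypothetical function names, and assumes that Eq. (22) reads \(\frac{1}{2}u''=2u^2-\mu \), that is \(u''=4u^2-2\mu \), which is the normalisation matching \(\psi _0(u)=2u^2\). It evaluates both branches of the lemma, checks the value at \(x=0\), and estimates the residual of the differential equation by central differences.

```python
import math


def acoth(y):
    """Inverse hyperbolic cotangent, defined for y > 1."""
    return 0.5 * math.log((y + 1.0) / (y - 1.0))


def u(lam, mu, x):
    """u_{lambda,mu}(x) as stated in Lemma 4.5 (coth branch, tanh branch, constant)."""
    A = math.sqrt(mu / 2.0)      # constant solution sqrt(mu/2)
    c = (2.0 * mu) ** 0.25       # spatial scale (2 mu)^{1/4}
    # sqrt(2/3 + (1/3) sqrt(2/mu) lam), using sqrt(2/mu) = 1/A
    y = math.sqrt(2.0 / 3.0 + lam / (3.0 * A))
    if lam > A:
        h = 1.0 / math.tanh(c * x + acoth(y))
    elif lam < A:
        h = math.tanh(c * x + math.atanh(y))
    else:
        return A
    return A * (3.0 * h * h - 2.0)


def ode_residual(lam, mu, x, h=1e-3):
    """Central-difference estimate of u'' - (4 u^2 - 2 mu) at the point x."""
    second = (u(lam, mu, x + h) - 2.0 * u(lam, mu, x) + u(lam, mu, x - h)) / h ** 2
    return second - (4.0 * u(lam, mu, x) ** 2 - 2.0 * mu)
```

For instance, with \(\mu =2\) (so that \(\sqrt{\mu /2}=1\)) one can call `u(3.0, 2.0, 0.0)` and `u(0.3, 2.0, 0.0)` to recover the boundary values, and `ode_residual` should be small, up to discretisation error, in both regimes.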

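For future reference, we note that, if \(\lambda > \sqrt{\frac{\mu }{2}}\), we have, for every \(x>0\),

$$\begin{aligned} u_{\lambda ,\mu }(x)=u_{\infty ,\mu }\big (x+\theta _\mu (\lambda )\big ) \qquad (23) \end{aligned}$$

where the function \(\theta _\mu \), which is defined on \(( \sqrt{\frac{\mu }{2}},\infty )\) by

$$\begin{aligned} \theta _\mu (\lambda )=(2\mu )^{-1/4}\,\coth ^{-1}\sqrt{\frac{2}{3} +\frac{1}{3} \sqrt{\frac{2}{\mu }}\lambda } \end{aligned}$$

is the functional inverse of \(u_{\infty ,\mu }\). Of course (23) is nothing but the flow property of solutions of (22).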
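The flow property can be illustrated numerically. The sketch below is ours and takes \(\theta _\mu (\lambda )=(2\mu )^{-1/4}\coth ^{-1}\sqrt{\frac{2}{3}+\frac{1}{3}\sqrt{\frac{2}{\mu }}\lambda }\) and \(u_{\infty ,\mu }(x)=\sqrt{\frac{\mu }{2}}\,(3\coth ^2((2\mu )^{1/4}x)-2)\), both read off from the first formula of Lemma 4.5 (the second by letting \(\lambda \uparrow \infty \)). Function names are hypothetical.

```python
import math


def acoth(y):
    """Inverse hyperbolic cotangent, defined for y > 1."""
    return 0.5 * math.log((y + 1.0) / (y - 1.0))


def u_coth(lam, mu, x):
    """First formula of Lemma 4.5, valid for lam > sqrt(mu/2)."""
    A = math.sqrt(mu / 2.0)
    c = (2.0 * mu) ** 0.25
    y = math.sqrt(2.0 / 3.0 + lam / (3.0 * A))
    h = 1.0 / math.tanh(c * x + acoth(y))
    return A * (3.0 * h * h - 2.0)


def u_inf(mu, x):
    """Limit of u_{lam,mu}(x) as lam increases to infinity (coth^{-1}(inf) = 0)."""
    A = math.sqrt(mu / 2.0)
    c = (2.0 * mu) ** 0.25
    h = 1.0 / math.tanh(c * x)
    return A * (3.0 * h * h - 2.0)


def theta(mu, lam):
    """Candidate functional inverse of u_inf(mu, .), for lam > sqrt(mu/2)."""
    A = math.sqrt(mu / 2.0)
    c = (2.0 * mu) ** 0.25
    return acoth(math.sqrt(2.0 / 3.0 + lam / (3.0 * A))) / c
```

With these definitions, `u_coth(lam, mu, x)` and `u_inf(mu, x + theta(mu, lam))` should agree up to rounding, and `theta(mu, u_inf(mu, x))` should return `x`.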

Proposition 4.6

Let \(a>0\). Then, for every \(\mu >0\),

Remark

The conditioning on \(\{W_*=0\}\) may be understood as a limit as \(\varepsilon \rightarrow 0\) of conditioning on \(\{-\varepsilon <W_*\le 0\}\). Equivalently, we may use Theorem 2.1, which provides an explicit description of the conditional probabilities \({\mathbb {N}}_0(\cdot \mid W_*=y)\) for every \(y<0\). Recall that \({\mathcal {Y}}_0=\sigma \) under \({\mathbb {N}}_a(\cdot \mid W_*=0)\).

Proof

We first observe that, for every \(\varepsilon >0\),

by (4). On the other hand,

using the special Markov property in the second equality, and then (4). Set \(\alpha =\frac{3}{2\varepsilon ^2}\) to simplify notation. Then,

using (23) in the last equality. Since

it follows from the preceding discussion that

The result of the proposition follows using also (24). \(\square \)

We state the next result in terms of super-Brownian motion, although our main motivation comes from our application to the Brownian plane in Theorem 1.4. Recall that, in order to use the connection with the Brownian snake, we always assume that the branching mechanism of super-Brownian motion is \(\psi _0(u)=2u^2\).

Corollary 4.7

Let \(a>0\) and \(r>0\). Assume that \(({\mathcal {X}}_t)_{t\ge 0}\) is a super-Brownian motion that starts from \(r\delta _a\) under the probability measure \({\mathbb {P}}_{r\delta _a}\). Set

and write \({\mathcal {R}}^{{\mathcal {X}}}\) for the range of \({\mathcal {X}}\). Then, for every \(\mu >0\),

Proof

We may assume that \(({\mathcal {X}}_t)_{t\ge 0}\) is constructed from a Poisson point measure \({\mathcal {N}}\) with intensity \(r{\mathbb {N}}_a\) via formula (7). Then, we immediately verify that

and properties of Poisson measures lead to the formula

The first term in the right-hand side is given by Proposition 4.6. As for the second term we observe that

and we use formula (21). This completes the proof. \(\square \)

Proof of Theorem 1.4

The first formula of the theorem is a straightforward consequence of the second one since we know the distribution of \(Z_a\). More precisely, using Proposition 1.2 (ii), we observe that

If we multiply this quantity by

we get the desired formula for \(E[\exp (-\mu |B^\bullet _a|)]\).

Not surprisingly, the second formula of Theorem 1.4 is a consequence of the analogous formula in Corollary 4.7. Let us explain this. Using our representation of the Brownian plane, and formula (16), we can write \(|B^\bullet _a|\) as the sum of two independent contributions:

-

The contribution of subtrees branching off the spine at a level smaller than \(L_a\). Using Theorem 2.1, we see that this contribution is distributed as \(\sigma \) under the conditional probability measure \({\mathbb {N}}_a(\cdot \mid W_*=0)\). We also note that this contribution is independent of the \(\sigma \)-field \({\mathcal {G}}_a\).

-

The contribution of subtrees branching off the spine at a level greater than \(L_a\). This contribution is \({\mathcal {G}}_a\)-measurable. Furthermore, an application of the special Markov property (similar to the one in the proof of Proposition 4.3) shows that its conditional distribution given \(Z_a=r\) is the law of

$$\begin{aligned} \sum _{k\in K} \sigma (\omega _{(k)}) \end{aligned}$$

where \(\sum _{k\in K} \delta _{\omega _{(k)}}\) is a Poisson measure with intensity \(r{\mathbb {N}}_a(\cdot \cap \{W_*>0\})\).

The preceding discussion shows that the conditional distribution of \(|B^\bullet _a|\) given \(Z_a=r\) coincides with the distribution of \(\Sigma \) under \({\mathbb {P}}_{r\delta _a}(\cdot \mid \min {\mathcal {R}}^{{\mathcal {X}}}=0)\), with the notation of Corollary 4.7. This completes the proof. \(\square \)

4.3 The process of hull volumes

Our goal in this subsection is to prove Theorem 1.3. In a way similar to Corollary 4.7, we consider a super-Brownian motion \(({\mathcal {X}}_t)_{t\ge 0}\), and the probability measure \({\mathbb {P}}_{r\delta _0}\) under which this super-Brownian motion starts from \(r\delta _0\). We also introduce the associated historical process \(({\mathbf {X}}_t)_{t\ge 0}\). As previously, we may and will assume that \(({\mathcal {X}}_t)_{t\ge 0}\) and \(({\mathbf {X}}_t)_{t\ge 0}\) are constructed from a Poisson measure

with intensity \(r{\mathbb {N}}_0\), via formulas (7) and (8). We then set, for every \(a<0\),

and, for every \(a\le 0\),

where

We also set \({\mathscr {Z}}_{0}=r\) by convention.

In the theory of superprocesses [13], \({\mathscr {Z}}_{a}\) corresponds to the total mass of the exit measure of the historical process \(({\mathbf {X}}_t)_{t\ge 0}\) from \((a,\infty )\) (for our present purposes, we do not need this interpretation). We also note that, for every \(a\le 0\), we have

and the right-hand side is the total integrated mass of those historical paths that do not hit a.

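As previously, \(X=(X_t)_{t\ge 0}\) denotes a continuous-state branching process with branching mechanism \(\psi (u)=\sqrt{8/3}\,u^{3/2}\) that starts from r under the probability measure \(P_r\). We will use the “Lévy-Khintchine representation” for \(\psi \): we have

$$\begin{aligned} \psi (u)=\int \kappa ({\mathrm {d}}y)\,\big (e^{-uy}-1+uy\big ) \end{aligned}$$

where \(\kappa ({\mathrm {d}}y)\) is the measure on \((0,\infty )\) given by

$$\begin{aligned} \kappa ({\mathrm {d}}y)=\sqrt{\frac{3}{2\pi }}\;y^{-5/2}\,{\mathrm {d}}y. \end{aligned}$$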
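For \(\psi (u)=\sqrt{8/3}\,u^{3/2}\), the identity \(\int _0^\infty (e^{-uy}-1+uy)\,y^{-5/2}\,{\mathrm {d}}y=\Gamma (-3/2)\,u^{3/2}\) with \(\Gamma (-3/2)=\frac{4\sqrt{\pi }}{3}\) forces the density \(\sqrt{3/(2\pi )}\,y^{-5/2}\) for \(\kappa \). The sketch below is a numerical sanity check of this normalisation, not part of the argument. It uses the substitution \(y=e^s\) and a plain trapezoid rule; the truncation bounds and step count are arbitrary choices of ours.

```python
import math


def kernel(z):
    """e^{-z} - 1 + z, using a Taylor expansion for small z to avoid cancellation."""
    if z < 1e-4:
        return z * z * (0.5 - z / 6.0 + z * z / 24.0)
    return math.expm1(-z) + z


def psi_from_levy_measure(u, s_min=-40.0, s_max=40.0, n=8000):
    """Trapezoid rule for int_0^inf kernel(u*y) * sqrt(3/(2 pi)) * y^{-5/2} dy.

    After y = exp(s), the integrand becomes kernel(u * e^s) * e^{-3s/2} ds.
    """
    const = math.sqrt(3.0 / (2.0 * math.pi))
    h = (s_max - s_min) / n
    total = 0.0
    for i in range(n + 1):
        s = s_min + i * h
        weight = 0.5 if i in (0, n) else 1.0
        total += weight * kernel(u * math.exp(s)) * math.exp(-1.5 * s)
    return const * h * total


def psi(u):
    """Branching mechanism psi(u) = sqrt(8/3) * u^{3/2}."""
    return math.sqrt(8.0 / 3.0) * u ** 1.5
```

Comparing `psi_from_levy_measure(u)` with `psi(u)` for a few values of `u` should show agreement up to the truncation error of the integral.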

Proposition 4.8

Let \(a>0\). The law under \({\mathbb {P}}_{r\delta _0}\) of the pair \(({\mathscr {Z}}_{-a},{\mathscr {Y}}_{-a})\) coincides with the law under \(P_r\) of the pair

where \(s_1,s_2,\ldots \) is a measurable enumeration of the jumps of X, and \(\xi _1,\xi _2,\ldots \) is a sequence of i.i.d. real random variables with density

which is independent of the process \((X_t)_{t\ge 0}\).

Proof

We first observe that, for every \(\lambda ,\mu >0\), we have

by the exponential formula for Poisson measures. We will prove that the joint Laplace transform of the pair (25) is given by the same expression.

To this end, we fix \(\mu >0\) and write \(\alpha =\sqrt{2\mu }\) to simplify notation. We also set \(w_a(\lambda )=u_{\lambda ,\mu }(a)\) for every \(a\ge 0\). As a consequence of (22) (or directly from Lemma 4.5) we have for every \(a,b\ge 0\),

$$\begin{aligned} w_{a+b}(\lambda )=w_a\big (w_b(\lambda )\big ) \end{aligned}$$

and \(w_0(\lambda )=\lambda \). Furthermore, the derivative of \(w_a(\lambda )\) at \(a=0\) is easily computed from the formulas of Lemma 4.5:

where we recall that \(\alpha =\sqrt{2\mu }\).
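The derivative at \(a=0\) can be recovered directly from the closed forms of Lemma 4.5. The following computation is a sketch in our own shorthand: we write \(A=\sqrt{\mu /2}=\alpha /2\) and \(w_a(\lambda )=A\,(3h(a)^2-2)\), where h is the \(\coth \) or the \(\tanh \) of \(\sqrt{\alpha }\,a+d\) depending on the case. In both cases \(h'=\sqrt{\alpha }\,(1-h^2)\) and \(h(0)^2=\frac{2}{3}+\frac{\lambda }{3A}=\frac{2A+\lambda }{3A}\), so that

$$\begin{aligned} \frac{{\mathrm {d}}}{{\mathrm {d}}a}w_a(\lambda )\Big |_{a=0}&=6A\,\sqrt{\alpha }\;h(0)\,\big (1-h(0)^2\big )\\ &=6A\,\sqrt{\alpha }\;\sqrt{\frac{2A+\lambda }{3A}}\;\frac{A-\lambda }{3A}\\ &=-\sqrt{\frac{8}{3}}\,\Big (\lambda -\frac{\alpha }{2}\Big )\sqrt{\lambda +\alpha }. \end{aligned}$$

The sign agrees with the two regimes of the lemma: \(w_a(\lambda )\) decreases in a when \(\lambda >\alpha /2\) and increases when \(\lambda <\alpha /2\).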

Let us consider now the Laplace transform of the pair (25). We first observe that the Laplace transform of the variables \(\xi _i\) is given by