Abstract

We study the thermal properties of a pinned disordered harmonic chain weakly perturbed by a noise and an anharmonic potential. The noise is controlled by a parameter \(\lambda \rightarrow 0\), and the anharmonicity by a parameter \(\lambda ^{\prime } \le \lambda \). Let \(\kappa \) be the conductivity of the chain, defined through the Green–Kubo formula. Under suitable hypotheses, we show that \(\kappa = \mathcal O (\lambda )\) and, in the absence of anharmonic potential, that \(\kappa \sim \lambda \). This is in sharp contrast with the ordered chain for which \(\kappa \sim 1/\lambda \), and so shows the persistence of localization effects for a non-integrable dynamics.



1 Introduction

The mathematically rigorous derivation of macroscopic thermal properties of solids, starting from their microscopic description, is a serious challenge [8, 17]. On the one hand, numerous experiments and numerical simulations show that, for a wide variety of materials, the heat flux \(J\) is related to the temperature gradient \(\nabla T\) through a simple relation known as Fourier’s law:

$$\begin{aligned} J \; = \; -\kappa (T) \, \nabla T, \end{aligned}$$

where \(\kappa (T)\) is the thermal conductivity of the solid. On the other hand, a mathematical understanding of this phenomenological law from the point of view of statistical mechanics is still lacking.

A one-dimensional solid can be modelled by a chain of oscillators, each of them possibly pinned by an external potential, and interacting through a nearest-neighbour coupling. The case of homogeneous harmonic interactions can be readily analysed, but it has been realized that this very idealized solid behaves like a perfect conductor, and so violates Fourier’s law [21]. To account for the physical observations, it is thus necessary to consider more elaborate models, in which ballistic transport of energy is broken. Here are two possible directions.

On the one hand, adding anharmonic interactions can drastically affect the conductivity of the chain [2, 19]. Unfortunately, the rigorous study of anharmonic chains is in general out of reach, and even numerical simulations do not lead to completely unambiguous conclusions. To obtain a clearer picture, anharmonic interactions are mimicked in [3, 6] by a stochastic noise that preserves total energy and possibly total momentum. At a qualitative level, this partially stochastic model correctly reproduces the thermal behaviour of anharmonic solids. For instance, the conductivity of the one-dimensional chain is shown to be positive and finite if the chain is pinned, and to diverge if momentum is conserved.

On the other hand, impurities can also affect the conductivity of a harmonic chain. In [22] and [10], an impure solid is modelled by a disordered harmonic chain, in which the masses of the atoms are random. In these models, localization of the eigenmodes induces a dramatic fall-off of the conductivity. In the presence of everywhere on-site pinning, the chain is known to behave like a perfect insulator (see Remark 1 after Theorem 1). The case of the unpinned chain is more delicate, and turns out to depend on the boundary conditions [14]. The principal cases have been rigorously analysed in [24] and [1].

The thermal conductivity of a harmonic chain perturbed by both disorder and anharmonic interactions is a topic of both practical and mathematical interest. In the sequel, we only consider a one-dimensional disordered chain with everywhere on-site pinning. In doing so, we avoid the pathological behaviour of unpinned one-dimensional chains, and we focus on a case where the distinction between ordered and disordered harmonic chains is the sharpest. We consider the joint action of a noise and an anharmonic potential; we call \(\lambda \) the parameter controlling the noise, and \(\lambda ^{\prime }\) the parameter controlling the anharmonicity (see Sect. 2.1 below).

The disordered harmonic chain is an integrable system in which localization of the eigenmodes can be studied rigorously [16]. However, if an anharmonic potential is added, very little is known about the persistence of localization effects. In [13], numerical simulations show that even a small amount of anharmonicity leads to a normal conductivity, thus destroying the localization of energy. In [20], an analogous situation is studied and similar conclusions are reached. This is confirmed rigorously in [5] when the anharmonic interactions are replaced by a stochastic noise preserving energy. However, nothing is said there about the conductivity as \(\lambda \rightarrow 0\). This partially stochastic system was later studied in [12], where numerical simulations indicate that \(\kappa \sim \lambda \) as \(\lambda \rightarrow 0\).

Let us mention that, although the literature on the destruction of localized states seems relatively sparse in the context of thermal transport, much more can be found in that of Anderson localization and disordered quantum systems (see [4] and references in [4, 12]). There as well, however, few analytical results seem to be available. Moreover, transferring results from these fields to the thermal conductivity of solids is delicate, in part because many studies deal with systems at zero temperature, such as the time evolution of an initially localized wave packet.

The main goal of this article is to establish that disorder strongly influences the thermal conductivity of a harmonic chain when both a small noise and small anharmonic interactions are added. We always assume that \(\lambda ^{\prime } \le \lambda \), meaning that the noise is the dominant perturbative effect. Our main results, stated in Theorems 1 and 2 below, are that \(\kappa = \mathcal O (\lambda )\) as \(\lambda \rightarrow 0\), and that \(\kappa \sim \lambda \) if \(\lambda ^{\prime } = 0\). Strictly speaking, our results do not imply anything about the case where \(\lambda ^{\prime } >0\) and \(\lambda = 0\). However, in the regime we are dealing with, the noise is expected to produce interactions between localized modes, and so to increase the conductivity. We thus conjecture that \(\kappa = \mathcal O (\lambda ^{\prime })\) in this latter case. This is in agreement with numerical results in [20], where it is suggested that \(\kappa \) could even decay as \(\mathrm e ^{-c/\lambda ^{\prime }}\) for some \(c >0\).

In the next section, we define the model studied in this paper, state our results and give some heuristic indications. The rest of the paper is then devoted to the proof of Theorems 1 and 2, whose main steps we now outline. The principal computation of this article consists in showing that the current due to harmonic interactions between particles \(k\) and \(k+1\), called \(j_{k,har}\), can be written as \(j_{k,har} = -A_{har} u_{k}\), where \(u_{k}\) is localized near \(k\), and where \(A_{har}\) is the generator of the harmonic dynamics. This is stated precisely and proved in Sect. 4; the proof ultimately rests on localization results first established by Kunz and Souillard (see [16] or [11]). Once this is established, general inequalities on Markov processes allow us to obtain, in Sect. 3, the desired upper bound \(\kappa = \mathcal O (\lambda )\) in the presence of both a noise and non-linear forces. The lower bound \(\kappa \ge c \lambda \), valid when \(\lambda ^{\prime } = 0\), is established by means of a variational formula (see [23]), using a method developed by the first author in [5]. This is carried out in Sect. 6.

2 Model and results

2.1 Model

We consider a one-dimensional chain of \(N\) oscillators, so that a state of the system is characterized by a point

$$\begin{aligned} x \; = \; (q,p) \; = \; (q_1, \ldots , q_N, p_1, \ldots , p_N) \; \in \; \mathbb R ^{2N}, \end{aligned}$$

where \(q_{k}\) represents the position of particle \(k\), and \(p_{k}\) its momentum. The dynamics consists of a Hamiltonian part perturbed by a stochastic noise.

The Hamiltonian. The Hamiltonian is given by

with the following definitions.

- The pinning parameters \(\nu _k\) are i.i.d. random variables whose law is independent of \(N\). It is assumed that this law has a bounded density and that there exist constants \(0 < \nu _- < \nu _+ < \infty \) such that

$$\begin{aligned} \mathsf P (\nu _- \, \le \, \nu _k \, \le \, \nu _+) = 1. \end{aligned}$$

- The value of \(q_{N+1}\) depends on the boundary conditions (BC). For fixed BC, we put \(q_{N+1} = 0\), while for periodic BC, we put \(q_{N+1} = q_1\). For later use, we also define \(q_0 = q_1\) for fixed BC, and \(q_0 = q_{N}\) for periodic BC.

- We assume \(\lambda ^{\prime } \ge 0\). The potentials \(U\) and \(V\) are symmetric, meaning that \(U(-x) = U(x)\) and \(V(-x) = V(x)\) for every \(x \in \mathbb R \). They belong to \(\mathcal C ^{\infty }_{temp}(\mathbb R )\), the space of infinitely differentiable functions with polynomial growth. It is moreover assumed that

$$\begin{aligned} \int \limits _\mathbb R \mathrm e ^{-U(x)} \, \mathrm d x \; < \; + \infty \quad \text{ and} \quad \partial _x^2 U (x) \; \ge \; 0, \end{aligned}$$

and that there exists \(c > 0\) such that

$$\begin{aligned} c \; \le \; 1 + \lambda ^{\prime } \partial _x^2 V (x) \; \le \; c^{-1}. \end{aligned}$$

For \(x=(x_1, \ldots , x_d)\in \mathbb{R }^d\) and \(y=(y_1, \ldots ,y_d) \in \mathbb R ^d\), let \(\langle x,y\rangle = x_1 y_1 + \dots + x_d y_d\) be the canonical scalar product of \(x\) and \(y\). The harmonic Hamiltonian \(H_{har}\) can also be written as

$$\begin{aligned} H_{har}(q,p) \; = \; \frac{1}{2} \langle p, p \rangle + \frac{1}{2} \langle q, \Phi q \rangle \end{aligned}$$

if we introduce the symmetric matrix \(\Phi \in \mathbb R ^{N\times N}\) of the form \(\Phi = -\Delta + W\), where \(\Delta \) is the discrete Laplacian, and \(W\) a random “potential”. The precise definition of \(\Phi \) depends on the BC:

for \(1 \le j,k \le N\).
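To make the structure of \(\Phi = -\Delta + W\) concrete, here is a minimal Python sketch for periodic BC. It assumes that \(W = \mathrm{diag}(\nu _1, \ldots , \nu _N)\), i.e. that the pinning enters \(H_{har}\) as \(\nu _k q_k^2/2\); the helper names are hypothetical. It checks that \(\Phi \) is symmetric, that \(\langle q, \Phi q\rangle = \sum _k \nu _k q_k^2 + \sum _k (q_{k+1}-q_k)^2\) with \(q_{N+1} = q_1\), and that \(\langle q,\Phi q\rangle \ge \nu _- \langle q, q\rangle \).

```python
import random


def build_phi_periodic(nu):
    """Phi = -Laplacian + diag(nu) with periodic BC (assumes W = diag(nu) and N >= 3)."""
    n = len(nu)
    phi = [[0.0] * n for _ in range(n)]
    for k in range(n):
        phi[k][k] = 2.0 + nu[k]        # diagonal of -Delta is 2
        phi[k][(k + 1) % n] -= 1.0     # neighbour k+1, with q_{N+1} = q_1
        phi[k][(k - 1) % n] -= 1.0     # neighbour k-1, with q_0 = q_N
    return phi


def quadratic_form(m, x):
    """<x, m x> for a square matrix m stored as a list of rows."""
    n = len(x)
    return sum(x[i] * m[i][j] * x[j] for i in range(n) for j in range(n))


def pinned_chain_energy(nu, q):
    """sum_k nu_k q_k^2 + sum_k (q_{k+1} - q_k)^2, indices taken modulo N."""
    n = len(q)
    return sum(nu[k] * q[k] ** 2 + (q[(k + 1) % n] - q[k]) ** 2 for k in range(n))


rng = random.Random(1)
nu_minus, nu_plus, n = 0.5, 2.0, 8
nu = [rng.uniform(nu_minus, nu_plus) for _ in range(n)]  # i.i.d. pinnings in [nu_-, nu_+]
phi = build_phi_periodic(nu)
q = [rng.gauss(0.0, 1.0) for _ in range(n)]
print(quadratic_form(phi, q), pinned_chain_energy(nu, q))  # equal up to rounding
```

The lower bound \(\langle q,\Phi q\rangle \ge \nu _-\langle q,q\rangle \) is what keeps the eigenfrequencies of the pinned chain away from zero.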

The dynamics. The generator of the Hamiltonian part of the dynamics is written as

with

and

Here, for \(x=(x_1, \ldots , x_N) \in \mathbb{R }^N\), \(\nabla _x = (\partial _{x_1}, \ldots , \partial _{x_N})\). The generator of the noise is defined to be

with \(\lambda \ge \lambda ^{\prime }\). The generator of the full dynamics is given by

We denote by \(X_{(\lambda ,\lambda ^{\prime })}^t(x)\), or simply by \(X^t (x)\), the value of the Markov process generated by \(L\) at time \(t\ge 0\), starting from \(x = (q,p)\in \mathbb R ^{2N}\).
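A rough simulation can make the dynamics concrete for \(\lambda ^{\prime } = 0\) and periodic BC. This sketch rests on assumptions: the Hamiltonian part is taken to be \(\dot q = p\), \(\dot p = -\Phi q\) with \(\Phi = -\Delta + \mathrm{diag}(\nu )\), and the noise is taken to flip each momentum \(p_k \mapsto -p_k\) at the times of independent Poisson clocks of rate \(\lambda \) (consistent with the Poisson processes \(\mathrm N^j_s\) of Sect. 3). The clocks are approximated by a flip with probability \(\lambda \, dt\) per time step and the Hamiltonian flow by velocity Verlet; all function names are hypothetical. Since a flip leaves \(p_k^2\) unchanged, the energy \(\frac12 \langle p,p\rangle + \frac12 \langle q,\Phi q\rangle \) should only show the small integrator error.

```python
import random


def phi_times(nu, q):
    """(Phi q)_k = (2 + nu_k) q_k - q_{k-1} - q_{k+1}, periodic indices (assumed W = diag(nu))."""
    n = len(q)
    return [(2.0 + nu[k]) * q[k] - q[k - 1] - q[(k + 1) % n] for k in range(n)]


def energy(nu, q, p):
    """H_har = <p, p>/2 + <q, Phi q>/2."""
    fq = phi_times(nu, q)
    return 0.5 * sum(x * x for x in p) + 0.5 * sum(a * b for a, b in zip(q, fq))


def simulate(nu, q, p, lam, dt, steps, seed=0):
    """Velocity Verlet for dq/dt = p, dp/dt = -Phi q, plus random momentum flips."""
    rng = random.Random(seed)
    q, p = list(q), list(p)
    energies = [energy(nu, q, p)]
    force = [-f for f in phi_times(nu, q)]
    for _ in range(steps):
        p_half = [pk + 0.5 * dt * fk for pk, fk in zip(p, force)]
        q = [qk + dt * pk for qk, pk in zip(q, p_half)]
        force = [-f for f in phi_times(nu, q)]
        p = [pk + 0.5 * dt * fk for pk, fk in zip(p_half, force)]
        # Noise: flip p_k with probability lam * dt (approximates a rate-lam Poisson clock).
        p = [-pk if rng.random() < lam * dt else pk for pk in p]
        energies.append(energy(nu, q, p))
    return q, p, energies


rng = random.Random(2)
n = 8
nu = [rng.uniform(0.5, 2.0) for _ in range(n)]
q0 = [rng.gauss(0.0, 1.0) for _ in range(n)]
p0 = [rng.gauss(0.0, 1.0) for _ in range(n)]
_, _, energies = simulate(nu, q0, p0, lam=0.1, dt=0.01, steps=10_000)
drift = max(abs(e - energies[0]) for e in energies) / energies[0]
print(f"max relative energy deviation: {drift:.2e}")
```

Velocity Verlet is used because, for a linear force, it exactly conserves the modified energy \(\frac12\langle p,p\rangle + \frac12\langle q,\Phi (I - dt^2\Phi /4)q\rangle \), whose momentum part is unchanged by a flip; the energy error therefore stays of order \(dt^2\) however many flips occur.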

Expectations. Three different expectations will be considered. We define

- \(\mu _T\): the expectation with respect to the Gibbs measure at temperature \(T\),
- \(\mathsf E \): the expectation with respect to the realizations of the noise,
- \(\mathsf E _\nu \): the expectation with respect to the realizations of the pinnings.

In Sect. 5, it will sometimes be useful to specify the dependence of the Gibbs measure on the system size \(N\); we then write it \(\mu _T^{(N)}\).

The Gibbs measure \(\mu _T\) is explicitly given by

where \(Z_T\) is a normalizing factor such that \(\mu _T\) is a probability measure on \(\mathbb R ^{2N}\). We will need some properties of this measure. Let us write

with \(\rho ^{\prime } (p_k) = \mathrm e ^{-p_k^2/2T} / \sqrt{2\pi T}\) for \(1 \le k \le N\).

When \(\lambda ^{\prime } = 0\), the density \(\rho ^{\prime \prime }\) is Gaussian:

Since \(\nu _k \ge \nu _- > 0\), it follows from Lemma 1.1 in [9] that \(|(\Phi ^{-1})_{i,j}| \le \mathrm C \, \mathrm e ^{-c |j-i|}\), for some constants \(\mathrm C <+\infty \) and \(c > 0\) independent of \(N\). This implies in particular the decay of correlations

When \(\lambda ^{\prime } > 0\), the density \(\rho ^{\prime \prime }\) is no longer Gaussian. Here we impose the extra assumption that \(\nu _-\) is large enough. In that case, our hypotheses ensure that the conclusions of Theorem 3.1 in [7] hold: there exist constants \(\mathrm C < + \infty \) and \(c >0\) such that, for every \(f,g \in \mathcal C ^\infty _{temp} (\mathbb R ^N)\) satisfying \(\mu _T (f) = \mu _T (g) = 0\),

Here, \(\mathrm S (u) \) is the support of the function \(u\), defined as the smallest set of integers such that \(u\) can be written as a function of the variables \(x_l\) for \(l \in \mathrm S (u)\), whereas \( d ( \mathrm S (f), \mathrm S (g))\) is the smallest distance between any integer in \(\mathrm S (f)\) and any integer in \(\mathrm S (g)\). Using that \(\mu _T (q_k) = 0\) for \(1 \le k \le N\), one checks from (2.1) that, for every function \(u\in \mathcal C ^\infty _{temp}(\mathbb R ^N)\) whose support is given and independent of \(N\), the norm \(\Vert u \Vert _\mathrm{L ^1 (\mu _T)}\) is bounded uniformly in \(N\).
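In the Gaussian case \(\lambda ^{\prime } = 0\), the exponential decay of \((\Phi ^{-1})_{i,j}\) behind the decorrelation bound can be observed numerically. The sketch below again assumes periodic BC and \(W = \mathrm{diag}(\nu )\); it solves \(\Phi g = e_1\) by Gaussian elimination, so that \(g\) is the first column of \(\Phi ^{-1}\), and prints \(g_j\) together with an empirical decay rate as a function of the distance to the first site. The helper names are hypothetical. Since \(\Phi \) is strictly diagonally dominant with non-positive off-diagonal entries, the entries of \(\Phi ^{-1}\) are positive.

```python
import math
import random


def build_phi_periodic(nu):
    """Phi = -Laplacian + diag(nu) with periodic BC (assumed form of W)."""
    n = len(nu)
    phi = [[0.0] * n for _ in range(n)]
    for k in range(n):
        phi[k][k] = 2.0 + nu[k]
        phi[k][(k + 1) % n] -= 1.0
        phi[k][(k - 1) % n] -= 1.0
    return phi


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (inputs are not modified)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


rng = random.Random(3)
n = 40
nu = [rng.uniform(1.0, 2.0) for _ in range(n)]              # nu_- = 1, nu_+ = 2
g = solve(build_phi_periodic(nu), [1.0] + [0.0] * (n - 1))  # first column of Phi^{-1}
for d in range(0, n // 2 + 1, 4):
    rate = -math.log(g[d] / g[0]) / d if d else 0.0
    print(d, g[d], rate)                                     # entry and empirical decay rate
```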

The current. The local energy \(e_k\) of atom \(k\) is defined as

with

and

For periodic BC, these expressions remain valid when \(k=1\) or \(k=N\). For fixed BC, instead, all the terms involving the differences \((q_0 - q_1)\) or \((q_{N+1} - q_N)\) in the previous expressions have to be multiplied by \(2\). These definitions ensure that the total energy \(H\) is the sum of the local energies.

The definition of the dynamics implies that

for local currents

defined as follows for \(0 \le k \le N\). First, for \(1 \le k \le N-1\),

Next, \(j_{0,1} = j_{N,N+1} = 0\) for fixed BC. Finally, for periodic BC, \(j_{0}\) and \(j_{N}\) are still given by (2.2), with the conventions \(p_0 = p_N\) and \(p_{N+1}=p_1\). The total current and the rescaled total current are then defined by

2.2 Results

For a given realization of the pinnings, the (Green–Kubo) conductivity \(\kappa = \kappa (\lambda , \lambda ^{\prime })\) of the chain is defined as

if this limit exists. The choice of boundary conditions is expected to play no role in this formula, since the volume size \(N\) is sent to infinity at fixed time. The disorder-averaged conductivity is defined by replacing \( \mu _T\mathsf E \) by \(\mathsf E _\nu \mu _T\mathsf E \) in (2.5). By ergodicity, the conductivity and the disorder-averaged conductivity are expected to coincide for almost every realization of the pinnings (see [5]). The dependence of \(\kappa (\lambda , \lambda ^{\prime })\) on the temperature \(T\) will not be analysed in this work, so that we can consider \(T\) as a fixed parameter.

We first obtain an upper bound on the disorder averaged conductivity.

Theorem 1

Let \(0 \le \lambda ^{\prime } \le \lambda \). Under the assumptions introduced so far, if \(\nu _-\) is large enough, and for fixed boundary conditions,

Remarks

1. When \(\lambda = 0\), the proof (see Sect. 3) actually shows that

$$\begin{aligned} \frac{1}{T^2} \limsup _{N\rightarrow \infty }\mathsf E _\nu \mu _T \left( \frac{1}{\sqrt{t}}\int \limits _0^t \mathcal J _N \circ X^s_{(0,0)}\, \mathrm d s \right)^2 = \mathcal O \big ( t^{-1} \big ) \qquad \text{ as} \ t\rightarrow \infty . \end{aligned}$$

This bound has apparently not been published before. It says that the unperturbed chain behaves like a perfect insulator: the current integrated over arbitrarily long times remains bounded in \(\mathrm L ^2(\mathsf E _\nu \mu _T)\).

2. The proof (see Sect. 3) shares some common features with a method used in [18] to obtain a weak coupling limit for noisy Hamiltonian systems. Indeed, in our case, the eigenmodes of the unperturbed system may be seen as weakly coupled by the noise and the anharmonic potentials.

3. The choice of fixed boundary conditions simply turns out to be more convenient for technical reasons (see Sect. 4).

4. The hypothesis that \(\nu _-\) is large enough is only used to ensure the exponential decay of correlations of the Gibbs measure when \(\lambda ^{\prime } >0\).
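A mechanism consistent with Remark 1 is that a current of the form \(\mathcal J = -A u\) integrates to a boundary term along the unperturbed flow: \(\int _0^t \mathcal J (X^s)\,\mathrm ds = u(X^0) - u(X^t)\), whose \(\mathrm L ^2\) norm is at most \(2\Vert u\Vert _{\mathrm L ^2}\) by stationarity, which gives \(\mathcal O (t^{-1})\) after dividing the square by \(t\). The toy illustration below is not taken from the paper: it uses a single oscillator \(\dot q = p\), \(\dot p = -\omega ^2 q\) and \(u = qp\), for which \(-A u = \omega ^2 q^2 - p^2\). It integrates this toy current along the exact trajectory with Simpson's rule and compares the result with \(u(X^0) - u(X^t)\); the names are hypothetical.

```python
import math


def trajectory(q0, p0, omega, t):
    """Exact solution of dq/dt = p, dp/dt = -omega^2 q."""
    c, s = math.cos(omega * t), math.sin(omega * t)
    return q0 * c + p0 * s / omega, -q0 * omega * s + p0 * c


def toy_current(q, p, omega):
    """-A u for u = q p, since A(q p) = p^2 - omega^2 q^2."""
    return omega ** 2 * q ** 2 - p ** 2


def integrated_current(q0, p0, omega, t, n=100_000):
    """Simpson's rule for int_0^t J(X^s) ds (n must be even)."""
    h = t / n
    total = 0.0
    for i in range(n + 1):
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * toy_current(*trajectory(q0, p0, omega, i * h), omega)
    return total * h / 3


q0, p0, omega = 0.7, -1.2, 1.3
for t in (10.0, 100.0, 1000.0):
    qt, pt = trajectory(q0, p0, omega, t)
    # Both columns agree up to quadrature error and stay bounded as t grows.
    print(t, integrated_current(q0, p0, omega, t), q0 * p0 - qt * pt)
```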

Next, in the absence of anharmonicity (\(\lambda ^{\prime } = 0\)), the results can be refined.

Theorem 2

Let \(\lambda > 0\), let \(\lambda ^{\prime } = 0\), and assume that the hypotheses introduced so far hold. For almost all realizations of the pinnings, the Green–Kubo conductivity (2.5) of the chain is well defined, and in fact

this last limit being independent of the choice of boundary conditions (fixed or periodic). Moreover, there exists a constant \(c > 0\) such that, for every \(\lambda \in ]0,1[\),

The rest of this article is devoted to the proof of these theorems, which is constructed as follows.

Proof of Theorems 1 and 2

The upper bound (2.6) is derived in Sect. 3, assuming that Lemma 1 holds. This lemma is stated and proved in Sect. 4; it encapsulates the information we need about the localization of the eigenmodes of the unperturbed system (\(\lambda = \lambda ^{\prime } = 0\)). The existence of \(\kappa (\lambda , 0)\) for almost every realization of the pinnings, together with (2.7), is shown in Sect. 5. Finally, a lower bound on the conductivity when \(\lambda ^{\prime } = 0\) is obtained in Sect. 6. This shows (2.8). \(\square \)

2.3 Heuristic comments

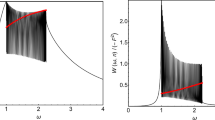

We would like to give here some intuition on the conductivity of disordered harmonic chains perturbed by a weak noise only, that is, with \(\lambda >0\) small and \(\lambda ^{\prime } = 0\). We develop, in a more probabilistic way, some ideas from [12]. Our results cover the case where the pinning parameters \(\nu _k\) are bounded from below by a positive constant, but it would obviously be desirable to understand the unpinned chain as well, in which case randomness has to be put on the masses. We discuss both cases here.

Let us first assume that \(\nu _k \ge c\) for some \(c > 0\), and consider a typical realization of the pinnings. In the absence of noise (\(\lambda = 0\)), the dynamics of the chain is equivalent to that of \(N\) independent one-dimensional harmonic oscillators, called eigenmodes (see Sect. 4.1, and formulas (4.5–4.6) in particular). Since the chain is pinned at each site, the eigenfrequencies of these modes are uniformly bounded away from zero. As a result, all modes are expected to be exponentially localized. We can thus naively think of each particle as being associated with a mode localized near its equilibrium position.

When the noise is turned on (\(\lambda >0\)), energy starts being exchanged between nearby modes. Let us assume that, initially, energy is distributed uniformly among all the modes, except around the origin, where some extra energy is added. We expect this extra energy to diffuse with time, with a variance proportional to \(\kappa (\lambda ,0) \cdot t\) at time \(t\). Since velocity flips occur at random times with rate \(\lambda \), we can compare the location of this extra energy at time \(t\) to the position of a standard random walk after \(n = \lambda t\) steps. Therefore, denoting by \(\delta _k\) the increments of this walk, we find that

This intuitive picture will only be partially justified, as explained in the remark after the proof of Theorem 1 in Sect. 3.
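The random-walk picture can be checked on its own terms: if the extra energy performs a walk whose steps occur at the times of a Poisson process of rate \(\lambda \), with i.i.d. centred increments \(\delta _k\), then its variance at time \(t\) is \(\lambda t \, \mathbb E (\delta _1^2)\), which suggests \(\kappa (\lambda ,0) \propto \lambda \) when the increments are bounded independently of \(\lambda \) (localized modes). The sketch below assumes \(\delta _k = \pm 1\) with equal probability, as a stand-in for an \(\mathcal O (1)\) localization length; the helper name is hypothetical.

```python
import random


def walk_variance(lam, t, n_paths=5000, seed=0):
    """Monte Carlo estimate of E[X_t^2] for a walk jumping at rate lam with +/-1 steps."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        x, s = 0, rng.expovariate(lam)   # s: time of the next velocity flip
        while s < t:
            x += rng.choice((-1, 1))     # assumed increment delta_k = +/-1
            s += rng.expovariate(lam)
        total += x * x
    return total / n_paths


for lam in (0.1, 0.01):
    t = 20.0 / lam                       # about n = lam * t = 20 steps in both cases
    print(lam, walk_variance(lam, t) / t)   # close to lam * E[delta^2] = lam
```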

Let us now consider the unpinned chain. We set \(\nu _k = 0\) and replace \(p_k^2\) by \(p_k^2 /m_k\) in the Hamiltonian, where the masses \(m_k\) are i.i.d. positive random variables. We consider a typical realization of the masses. In contrast with the pinned chain, the eigenfrequencies of the modes are now distributed in an interval of the form \([0,c]\), for some \(c >0\). This has an important consequence on the localization of the modes. Indeed, the localization length \(l\) of a mode and its eigenfrequency \(\omega \) are expected to be related through the formula \(l \sim 1/ \omega ^2\).

Here again, the noise induces exchanges of energy between modes, and we would still like to compare \(\kappa (\lambda , 0) \cdot t\) with the variance of a centred random walk with increments \(\delta _k\). However, because of the weakly localized low-frequency modes, \(\delta _k\) can now take larger values than in the pinned case. Assuming that the eigenfrequencies are uniformly distributed in \([0,c]\), we guess that, for large \(a\),

This, however, neglects one fact. Since energy does not travel faster than ballistically, and since successive velocity flips are separated by time intervals of order \(1/\lambda \), it is reasonable to introduce the cut-off \(\mathsf P (|\delta _k| > 1/ \lambda ) = 0\). With this distribution for \(|\delta _k|\), and with \(n = \lambda t\), we now find

This scaling is numerically observed in [12]. The arguments leading to this conclusion are, however, very approximate, and it would be desirable to analyse this case rigorously as well.
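The arithmetic of this heuristic can be made explicit under its stated assumptions. If the eigenfrequencies are uniform in \([0,c]\) and \(l \sim 1/\omega ^2\), then \(\mathsf P (|\delta _k| > a) \approx \mathsf P (\omega < a^{-1/2}) \propto a^{-1/2}\) for large \(a\). Taking, for illustration only, \(\mathsf P (|\delta _k| > a) = \min (1, a^{-1/2})\) below the cut-off \(1/\lambda \) and \(0\) beyond it, one gets \(\mathbb E (\delta _k^2) = \int _0^{1/\lambda } 2a\, \mathsf P (|\delta _k| > a)\, \mathrm da = 1 + \frac43(\lambda ^{-3/2} - 1)\), hence \(\kappa (\lambda ,0) \approx \lambda \, \mathbb E (\delta _k^2) \propto \lambda ^{-1/2}\) as \(\lambda \rightarrow 0\). The sketch checks the integral numerically and estimates the exponent; the constant 1 and the threshold at \(a = 1\) in the tail are assumptions that only fix constants.

```python
import math


def tail(a):
    """Assumed tail P(|delta| > a): 1 below a = 1, a^(-1/2) above (cut-off set by the integral)."""
    return 1.0 if a < 1.0 else a ** -0.5


def second_moment_numeric(cutoff, n=200_000):
    """E[delta^2] = int_0^cutoff 2 a P(|delta| > a) da, by the midpoint rule."""
    h = cutoff / n
    return h * sum(2.0 * (i + 0.5) * h * tail((i + 0.5) * h) for i in range(n))


def second_moment_exact(cutoff):
    """Closed form of the same integral, valid for cutoff >= 1."""
    return 1.0 + (4.0 / 3.0) * (cutoff ** 1.5 - 1.0)


def kappa_heuristic(lam):
    """kappa * t ~ n * E[delta^2] with n = lam * t and cut-off 1/lam."""
    return lam * second_moment_exact(1.0 / lam)


print(second_moment_numeric(100.0), second_moment_exact(100.0))
slope = math.log(kappa_heuristic(1e-6) / kappa_heuristic(1e-4)) / math.log(1e-6 / 1e-4)
print(f"estimated exponent: {slope:.4f}")  # close to -1/2
```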

3 Upper bound on the conductivity

We now proceed to the proof of Theorem 1, assuming that Lemma 1 in Sect. 4 holds: there exists a sequence \((u_N)_{N\ge 1} \subset \mathrm L ^2 (\mathsf E _\nu \mu _T)\) such that \(- A_{har} u_N = \mathcal J _{N,har}\), and such that \((u_N)_{N \ge 1}\) and \((A_{anh} u_N)_{N\ge 1}\) are both bounded sequences in \(\mathrm L ^2 (\mathsf E _\nu \mu _T)\). Moreover, \(u_N\) is of the form \(u_N (q,p) = \langle q, \alpha _N q \rangle + \langle p, \gamma _N p \rangle + c_N\), where \(\alpha _N,\gamma _N \in \mathbb R ^{N\times N}\) are symmetric matrices, and where \(c_N \in \mathbb R \).

Proof of (2.6)

Let \(0 \le \lambda ^{\prime } \le \lambda \), and let \(u_N\) be the sequence given by Lemma 1 in Sect. 4. Before starting, let us observe that, due to the special form of the function \(u_N\), we may write

with

where \((\gamma _{k,l})_{1 \le k,l\le N}\) are the entries of \(\gamma _N\). It follows in particular that

Now, since \(\mathcal J _N = \mathcal J _{N,har} + \lambda ^{\prime } \mathcal J _{N,anh}\), we find, using the Cauchy–Schwarz inequality, that

Since \(\mathcal J _{N,anh} = \frac{1}{2}(-S)\mathcal J _{N,anh}\), a classical bound ([15], Appendix 1, Proposition 6.1) yields

where \(\mathrm C < +\infty \) is a universal constant. By (2.1), \(\mathsf E _\nu \mu _T \big ( \mathcal J _{N,anh}^2 \big )\) is uniformly bounded in \(N\). Therefore

It thus suffices to establish that

We write

where the second equality is obtained by means of (3.3). Therefore

where \(\mathcal M _t\) is a martingale given by

with \(\mathrm N _s^j\) the Poisson process that flips the momentum of particle \(j\).

It now suffices to establish that the three terms on the right-hand side of (3.4) are \(\mathcal O (\lambda )\) in \(\mathrm L ^2 (\mathsf E _\nu \mu _T \mathsf E )\). Let us first show that \(\mu _T ( u_N \cdot (-S) u_N ) \le 4 \Vert u_N \Vert _\mathrm{L ^2 (\mu _T)}^2\). Writing

with

we get indeed

and

The claim follows since \(\mu _T \big ( (u_{N}^{p,p,1} + u_{N}^{q,q} + c_N ) \cdot u_N^{p,p,0} \big ) = 0\).
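This bound can be checked numerically on a small example. The sketch assumes that the noise generator acts as \(S f(q,p) = \sum _k \big (f(q,p^k) - f(q,p)\big )\), where \(p^k\) is \(p\) with \(p_k\) replaced by \(-p_k\); this is consistent with \(S u_N = -4 \sum _{k\ne l} \gamma _{k,l}\, p_k p_l\) used in the Remark at the end of this section. It verifies pointwise that \(S\) annihilates the diagonal and constant parts of a quadratic form in \(p\) and multiplies its off-diagonal part by \(-4\), then compares Monte Carlo estimates of \(\mu _T(u\cdot (-S)u)\) and \(4\mu _T(u^2)\) for i.i.d. Gaussian momenta of variance \(T\). The \(q\)-dependent part \(\langle q,\alpha _N q\rangle \) is omitted, since \(S\) annihilates it as well; the matrix and names are illustrative.

```python
import random


def flip_generator(f, p):
    """(S f)(p) = sum_k [f(p with p_k -> -p_k) - f(p)]  (assumed form of the flip noise)."""
    base = f(p)
    total = 0.0
    for k in range(len(p)):
        flipped = list(p)
        flipped[k] = -flipped[k]
        total += f(flipped) - base
    return total


def make_u(gamma, c):
    """u(p) = <p, gamma p> + c for a symmetric matrix gamma."""
    n = len(gamma)
    return lambda p: sum(gamma[k][l] * p[k] * p[l] for k in range(n) for l in range(n)) + c


def off_diagonal(gamma, p):
    """The part sum_{k != l} gamma_{k,l} p_k p_l of <p, gamma p>."""
    n = len(gamma)
    return sum(gamma[k][l] * p[k] * p[l] for k in range(n) for l in range(n) if k != l)


gamma = [[1.0, 0.3, -0.2], [0.3, 0.5, 0.4], [-0.2, 0.4, 2.0]]
u = make_u(gamma, c=1.5)
rng = random.Random(4)
T = 0.8

# Pointwise identity: S u = -4 * (off-diagonal part of u).
p = [rng.gauss(0.0, 1.0) for _ in range(3)]
print(flip_generator(u, p), -4.0 * off_diagonal(gamma, p))

# Monte Carlo under the Gibbs law of the momenta: p_k i.i.d. N(0, T).
samples = [[rng.gauss(0.0, T ** 0.5) for _ in range(3)] for _ in range(10_000)]
lhs = sum(u(x) * -flip_generator(u, x) for x in samples) / len(samples)
rhs = 4.0 * sum(u(x) ** 2 for x in samples) / len(samples)
print(lhs, rhs)  # lhs <= rhs
```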

So first,

Next,

Finally, by a classical bound ([15], Appendix 1, Proposition 6.1),

where (3.3) and

have been used to get the second equality. Taking the expectation over the pinnings, the proof is completed since \((u_N)_N\) and \((A_{anh} u_N)_N\) are bounded sequences in \(\mathrm L ^{2}(\mathsf E _\nu \mu _T)\). \({}\square \)

Remark

When \(\lambda ^{\prime } = 0\), formula (3.4) becomes

Now, since \(Su_N = -4 \sum _{1 \le k\ne l \le N}\gamma _{k,l}\, p_k p_l\), a direct computation gives

Since the path measure is invariant under time reversal, it follows that

We therefore deduce from (3.5) that

where \(r (t)\) is a quantity that vanishes in the limit \(t\rightarrow \infty \). Our proof thus does not completely justify the heuristic developed in Sect. 2.3, because of the second term on the right-hand side of this last equation. As explained after the statement of Lemma 1 below, the sequence \(u_N\) is not unique. A suitable choice of the sequence \(u_N\) might make this second term of order \(\mathcal O (\lambda ^2)\).

4 Poisson equation for the unperturbed dynamics

In this section, we state and prove the following lemma. Fixed BC are assumed throughout this section.

Lemma 1

Let \(\lambda ^{\prime } \ge 0\), and assume fixed boundary conditions. For every \(N \ge 1\), and for almost every realization of the pinnings, there exists a function \(u_N\) of the form

where \(\alpha _N,\gamma _N\in \mathbb R ^{N\times N}\) are symmetric matrices and where \(c_N \in \mathbb R \), such that

Moreover, the functions \(u_N\) can be taken so that

Remarks

1. The parameter \(\lambda ^{\prime }\) only plays a role through the definition of the measure \(\mu _T\).

2. For a given value of \(N\) and for almost every realization of the pinnings, the unperturbed dynamics is integrable, meaning here that it can be decomposed into \(N\) ergodic components, each of them corresponding to the motion of a single one-dimensional harmonic oscillator (see Sect. 4.1, and (4.5–4.6) in particular). This has two implications. First, since (4.1) admits a solution, the current \(\mathcal J _N\) has mean zero with respect to the microcanonical measure of each ergodic component of the dynamics. Next, the solution \(u_N\) is not unique, since every function \(f\) that is constant on the ergodic components of the dynamics satisfies \(-A_{har} f = 0\).

Proof of Lemma 1

To simplify notation, we generally do not write the dependence on \(N\) explicitly. The proof consists of several steps, carried out in Sects. 4.1–4.4.

4.1 Identifying \((u_N)_{N\ge 1}\): eigenmode expansion

Let \(z > 0\) and let \(1 \le l,m \le N\). Let us consider the equation

The solution \(v_{l,m,z}\) exists and is unique. It is given by

We will analyse \(v_{l,m,z}\) to obtain the sequence \(u_N\). Although we assumed fixed BC, all the results of this subsection apply for periodic BC as well.

Solutions to Hamilton’s equations. The matrix \(\Phi \) is a real symmetric positive definite matrix in \(\mathbb R ^{N\times N}\); there thus exist an orthonormal basis \((\xi ^k)_{1 \le k \le N}\) of \(\mathbb R ^N\) and a sequence of positive real numbers \((\omega _k^2)_{1 \le k \le N}\) such that

$$\begin{aligned} \Phi \, \xi ^k \; = \; \omega _k^2 \, \xi ^k \qquad (1 \le k \le N). \end{aligned}$$

It may be checked that

for \(1 \le k \le N\). According to Proposition II.1 in [16], for almost all realizations of the pinnings, none of the eigenvalues is degenerate:

In the sequel, we will assume that (4.4) holds.

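When \(\lambda = \lambda ^{\prime } = 0\), Hamilton’s equations read

$$\begin{aligned} \dot{q} \; = \; p, \qquad \dot{p} \; = \; - \Phi q. \end{aligned}$$

For initial conditions \((q,p)\), the solutions read

$$\begin{aligned} q(t)&= \sum _{k=1}^N \Big ( \langle \xi ^k, q \rangle \cos (\omega _k t) + \frac{\langle \xi ^k, p \rangle }{\omega _k} \sin (\omega _k t) \Big ) \, \xi ^k, \qquad (4.5)\\ p(t)&= \sum _{k=1}^N \Big ( - \omega _k \langle \xi ^k, q \rangle \sin (\omega _k t) + \langle \xi ^k, p \rangle \cos (\omega _k t) \Big ) \, \xi ^k, \qquad (4.6) \end{aligned}$$

where \(\omega _k > 0\) denotes the positive square root of \(\omega _k^2\).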
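The eigenmode representation of the unperturbed flow can be checked on a small example. The sketch below is not part of the original argument: it builds \(\Phi = -\Delta + \mathrm{diag}(\nu )\) with periodic BC (an assumed form of \(W\); the eigenmode expansion itself does not depend on the BC), diagonalizes it with a plain cyclic Jacobi method, checks that the eigenvalues \(\omega _k^2\) are positive and pairwise distinct, and verifies by central differences in time that \(q(t) = \sum _k \big (\langle \xi ^k,q\rangle \cos \omega _k t + \langle \xi ^k,p\rangle \omega _k^{-1}\sin \omega _k t\big )\xi ^k\) and \(p(t) = \dot q(t)\) solve \(\dot q = p\), \(\dot p = -\Phi q\). Function names are hypothetical.

```python
import math
import random


def build_phi_periodic(nu):
    """Phi = -Laplacian + diag(nu) with periodic BC (assumed form of W)."""
    n = len(nu)
    phi = [[0.0] * n for _ in range(n)]
    for k in range(n):
        phi[k][k] = 2.0 + nu[k]
        phi[k][(k + 1) % n] -= 1.0
        phi[k][(k - 1) % n] -= 1.0
    return phi


def jacobi_eigh(a, tol=1e-22, max_sweeps=50):
    """Cyclic Jacobi method for a symmetric matrix: (eigenvalues, eigenvectors as columns)."""
    n = len(a)
    a = [row[:] for row in a]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < tol:
            break
        for i in range(n - 1):
            for j in range(i + 1, n):
                if a[i][j] == 0.0:
                    continue
                theta = (a[j][j] - a[i][i]) / (2.0 * a[i][j])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for m in (a, v):          # right-multiply by the rotation: columns i, j
                    for k in range(n):
                        mki, mkj = m[k][i], m[k][j]
                        m[k][i], m[k][j] = c * mki - s * mkj, s * mki + c * mkj
                for k in range(n):        # left-multiply a by its transpose: rows i, j
                    aik, ajk = a[i][k], a[j][k]
                    a[i][k], a[j][k] = c * aik - s * ajk, s * aik + c * ajk
    return [a[i][i] for i in range(n)], v


def mode_solution(xi_cols, omega, q0, p0, t):
    """q(t), p(t) from the eigenmode expansion; column k of xi_cols is xi^k."""
    n = len(q0)
    q, p = [0.0] * n, [0.0] * n
    for k in range(n):
        xi = [xi_cols[j][k] for j in range(n)]
        a = sum(x * y for x, y in zip(xi, q0))   # <xi^k, q>
        b = sum(x * y for x, y in zip(xi, p0))   # <xi^k, p>
        cq = a * math.cos(omega[k] * t) + b * math.sin(omega[k] * t) / omega[k]
        cp = -a * omega[k] * math.sin(omega[k] * t) + b * math.cos(omega[k] * t)
        for j in range(n):
            q[j] += cq * xi[j]
            p[j] += cp * xi[j]
    return q, p


rng = random.Random(5)
n = 6
phi = build_phi_periodic([rng.uniform(0.5, 2.0) for _ in range(n)])
eigvals, xi_cols = jacobi_eigh(phi)
omega = [math.sqrt(w2) for w2 in eigvals]
sorted_vals = sorted(eigvals)
min_gap = min(b - a for a, b in zip(sorted_vals, sorted_vals[1:]))
q0 = [rng.gauss(0.0, 1.0) for _ in range(n)]
p0 = [rng.gauss(0.0, 1.0) for _ in range(n)]
t, h = 3.7, 1e-5
q_plus, p_plus = mode_solution(xi_cols, omega, q0, p0, t + h)
q_minus, p_minus = mode_solution(xi_cols, omega, q0, p0, t - h)
q_t, p_t = mode_solution(xi_cols, omega, q0, p0, t)
phi_q = [sum(phi[i][j] * q_t[j] for j in range(n)) for i in range(n)]
err_q = max(abs((a - b) / (2 * h) - c) for a, b, c in zip(q_plus, q_minus, p_t))
err_p = max(abs((a - b) / (2 * h) + c) for a, b, c in zip(p_plus, p_minus, phi_q))
print(sorted_vals, min_gap, err_q, err_p)  # errors small: dq/dt = p, dp/dt = -Phi q
```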

An expression for \(v_{l,m,z}\). To determine \(v_{l,m,z}\), we just need to insert the solutions (4.5–4.6) into the definition (4.2), and then compute the integral, which is a sum of Laplace transforms of sines and cosines:

where \(\langle j,\xi ^k \rangle \) denotes the \(j\)th component of the vector \(\xi ^k\), and where the remainder term \(\mathcal O (z)\) is a polynomial of the form \(\langle q, \tilde{\alpha }_z q \rangle + \langle q, \tilde{\beta }_z p \rangle + \langle p, \tilde{\gamma }_z p \rangle \), where \(\tilde{\alpha }_z\) and \(\tilde{\gamma }_z\) can be taken to be symmetric. We define

It is observed that \(v_{l,m}\) is of the form \(\langle q, \tilde{\alpha }q \rangle + \langle p, \tilde{\gamma }p \rangle \) where \(\tilde{\alpha }\) and \(\tilde{\gamma }\) can be taken to be symmetric.
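The integrals leading to (4.7) reduce to elementary Laplace transforms. As a check, the sketch below compares Simpson-rule evaluations of \(\int _0^\infty \mathrm e ^{-zt}\cos (at)\sin (bt)\,\mathrm dt\) and \(\int _0^\infty \mathrm e ^{-zt}\cos (at)\cos (bt)\,\mathrm dt\) with their closed forms, obtained from product-to-sum formulas together with \(\int _0^\infty \mathrm e ^{-zt}\sin (\omega t)\,\mathrm dt = \omega /(z^2+\omega ^2)\) and \(\int _0^\infty \mathrm e ^{-zt}\cos (\omega t)\,\mathrm dt = z/(z^2+\omega ^2)\). As \(z \rightarrow 0\), the first tends to \(\frac12\big (\frac1{a+b} + \frac1{b-a}\big )\) for \(a \ne b\), and the second tends to \(0\) for \(a \ne b\) but diverges like \(1/(2z)\) for \(a = b\); this illustrates why non-degenerate eigenvalues, as in (4.4), are convenient here. Names and parameter values are illustrative only.

```python
import math


def laplace_numeric(f, z, upper=80.0, n=80_000):
    """Simpson approximation of int_0^upper e^{-z t} f(t) dt (tail beyond upper ~ e^{-z upper})."""
    h = upper / n
    total = 0.0
    for i in range(n + 1):
        w = 1 if i in (0, n) else (4 if i % 2 else 2)
        t = i * h
        total += w * math.exp(-z * t) * f(t)
    return total * h / 3


def laplace_cos_sin(a, b, z):
    """Closed form of int_0^inf e^{-z t} cos(a t) sin(b t) dt."""
    return 0.5 * ((a + b) / (z * z + (a + b) ** 2) + (b - a) / (z * z + (b - a) ** 2))


def laplace_cos_cos(a, b, z):
    """Closed form of int_0^inf e^{-z t} cos(a t) cos(b t) dt."""
    return 0.5 * (z / (z * z + (a - b) ** 2) + z / (z * z + (a + b) ** 2))


a, b, z = 1.3, 0.8, 0.5
print(laplace_numeric(lambda t: math.cos(a * t) * math.sin(b * t), z), laplace_cos_sin(a, b, z))
print(laplace_numeric(lambda t: math.cos(a * t) * math.cos(b * t), z), laplace_cos_cos(a, b, z))

# z -> 0: finite limits for a != b, divergence like 1/(2z) for cos*cos when a == b.
for z_small in (1e-2, 1e-4, 1e-6):
    print(z_small, laplace_cos_sin(a, b, z_small), laplace_cos_cos(a, b, z_small),
          laplace_cos_cos(a, a, z_small))
```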

Defining the solution \(u_N\). For fixed BC, the total current is given by

Setting

for \(2 \le l \le N\) and

we define

The function \(u_N\) is of the form \(u_N = \langle q, \alpha _N q \rangle + \langle p, \gamma _N p \rangle + c_N\), where \(\alpha _N\) and \(\gamma _N\) are symmetric matrices, and where \(c_N\in \mathbb R \).

Let us show that \(u_N\) solves \(-A_{har} u_N = \mathcal J _N\). We may assume that \(c_N = 0\) without loss of generality. The current \(\mathcal J _N\) can be written as \(\mathcal J _N = \langle q, B p \rangle \). The function \(u_N\) has been obtained as the limit as \(z \rightarrow 0\) of the function \(u_{z}\) of the form \(u_{z} = \langle q, \alpha _z q \rangle + \langle q, \beta _z p \rangle + \langle p, \gamma _z p \rangle \), which solves \((z - A_{har}) u_{z} = \mathcal J _N\), with \(\alpha _z\) and \(\gamma _z\) symmetric matrices. Since

it holds that (see Footnote 1)

We know that \((\alpha _z,\beta _z,\gamma _z) \rightarrow (\alpha , 0, \gamma )\) as \(z\rightarrow 0\), with \(\alpha \) and \(\gamma \) symmetric, so that \(-2 (\alpha - \Phi \gamma ) = B\). Taking into account that \(\alpha \) and \(\gamma \) are symmetric, we deduce that

It is checked that, if two symmetric matrices \(\alpha \) and \(\gamma \) satisfy these relations, then \(u_N = \langle q, \alpha q \rangle + \langle p, \gamma p \rangle \) solves the equation \(- A_{har} u_N = \mathcal J _N\).
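The algebra behind this step can be checked numerically. Assuming the standard form \(A_{har} = \langle p, \nabla _q\rangle - \langle \Phi q, \nabla _p\rangle \) of the harmonic generator, one has \(A_{har}\big (\langle q,\alpha q\rangle + \langle p,\gamma p\rangle \big ) = 2\langle q,(\alpha - \Phi \gamma )p\rangle \) for symmetric \(\alpha ,\gamma ,\Phi \). The sketch picks random symmetric \(\Phi ,\alpha ,\gamma \) (positive definiteness of \(\Phi \) is not needed for this identity), sets \(B = -2(\alpha - \Phi \gamma )\), and compares \(-A_{har}u\), computed as a central difference along the Hamiltonian vector field \((p,-\Phi q)\), with \(\langle q,Bp\rangle \). Since \(u\) is quadratic, the central difference is exact up to rounding. All helper names are hypothetical.

```python
import random


def rand_sym(n, rng):
    """Random symmetric n x n matrix."""
    m = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    return [[0.5 * (m[i][j] + m[j][i]) for j in range(n)] for i in range(n)]


def mat_vec(m, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


def mat_mul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))


def harmonic_generator(u, q, p, phi, eps=1e-3):
    """(A_har u)(q, p): derivative of u along the vector field (p, -Phi q), by central difference."""
    fq = mat_vec(phi, q)
    plus = u([qi + eps * pi for qi, pi in zip(q, p)], [pi - eps * fi for pi, fi in zip(p, fq)])
    minus = u([qi - eps * pi for qi, pi in zip(q, p)], [pi + eps * fi for pi, fi in zip(p, fq)])
    return (plus - minus) / (2.0 * eps)


rng = random.Random(6)
n = 5
phi, alpha, gamma = rand_sym(n, rng), rand_sym(n, rng), rand_sym(n, rng)
phi_gamma = mat_mul(phi, gamma)
B = [[-2.0 * (alpha[i][j] - phi_gamma[i][j]) for j in range(n)] for i in range(n)]


def u(q, p):
    """u = <q, alpha q> + <p, gamma p>."""
    return dot(q, mat_vec(alpha, q)) + dot(p, mat_vec(gamma, p))


q = [rng.gauss(0.0, 1.0) for _ in range(n)]
p = [rng.gauss(0.0, 1.0) for _ in range(n)]
print(-harmonic_generator(u, q, p, phi), dot(q, mat_vec(B, p)))  # agree up to rounding
```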

4.2 A new expression for \(w_{l}\)

For \(1 \le l \le N\), the function \(w_l\) defined by (4.8) or (4.9) can be written as

where \(\alpha (l)\) and \(\gamma (l)\) are symmetric matrices, and where \(c(l)\in \mathbb R \). A relation similar to (4.10) is satisfied: with the definitions

for \(1 \le m,n \le N\), we write

for \(1 \le l \le N\). Therefore, the matrices \(\gamma (l)\) determine the matrices \(\alpha (l)\).

An expression for the matrices \(\gamma (l)\) can be recovered from (4.7) with \(z=0\). We will now work this out in order to obtain a more tractable formula. We show here that, for \(2 \le l \le N\),

and

Formula (4.13) is derived directly from (4.7), noting that \(\gamma (1)\) is the unique symmetric matrix such that \(w_1 (0,p) = \sum _{s,t} \gamma _{s,t}(1) p_s p_t\). To derive (4.12), we observe that \(\gamma (l)\) is the unique symmetric matrix such that \(w_l (0,p) = \sum _{s,t} \gamma _{s,t}(l) p_s p_t\). Starting from (4.7), we deduce

For fixed BC, the eigenvectors \(\xi ^j\) satisfy the following relations for \(1 \le j \le N\):

So the following recurrence relation is satisfied:

Let us first compute \(w_2(0,p)\). Using (4.14), we obtain

Therefore

where the last equality follows from the fact that \((\xi ^k)_k\) forms an orthonormal basis.

Let us now compute \(w_l(0,p)\) for \(2 < l \le N\). Again by (4.14),

Therefore

Combining (4.15) and (4.16), we arrive at an expression valid for \(2 \le l \le N\):

Let us now write \(\langle j, p \rangle ^2 = p_j^2\) and

We obtain

In this formula, the coefficients of \(p_s^2\) coincide with \(\gamma _{s,s}(l)\) given by (4.12) for \(l \le s \le N\), and the coefficients of \(p_s p_t\) with \(s \ne t\) coincide with the first expression of \(\gamma _{s,t}(l)\) given by (4.12). To recover the coefficients \(\gamma _{s,s}(l)\) for \(1 \le s \le l-1\), it suffices to use the fact that \((\xi ^k)_k\) and \((| k \rangle )_k\) are orthonormal bases:

The second expression for the coefficients \(\gamma _{s,t}(l)\) with \(s\ne t\) in (4.12) is obtained by a similar trick.

4.3 Exponential bounds

We show here that there exist constants \(\mathrm C < + \infty \) and \(c > 0\) independent of \(N\) such that

for \(2 \le l \le N\) and for \(1 \le j,k \le N\). This is still valid for \(l=1\) if \(|k-l|\) is replaced by \(\min \{ |k-1|,|k-N|\}\) and \(|j-l|\) by \(\min \{ |j-1|,|j-N|\}\). Due to (4.11), it suffices to establish these bounds for the matrices \(\gamma \).

Let us first observe that the almost sure bounds

hold for \(1 \le s,t \le N\). This is deduced directly from (4.12) and (4.13) by taking absolute values inside the sums where needed, using that \((\xi ^k)_k\) and \((| k \rangle )_k\) are orthonormal bases, and applying the Cauchy–Schwarz inequality where needed. By (4.3), \(\min \{ \omega _k^2 : 1 \le k \le N\} \ge c > 0\), where \(c\) does not depend on \(N\). In particular, the coefficients \(|\gamma _{s,t}|\) are almost surely bounded by a constant \(\mathrm C \) independent of \(N\), so that \(\mathsf E _\nu \left( |\gamma _{s,t}|^p \right) \le \mathrm C ^{p-1} \mathsf E _\nu |\gamma _{s,t}|\) for every \(p \ge 1\); we thus only need to bound \( \mathsf E _\nu |\gamma _{s,t}|\).

We now apply localization results originally derived by Kunz and Souillard [16], following the exposition given in [11]. From (4.12) and (4.13), we see that we are looking for an upper bound on the absolute value of sums of the type

and of the type

Since \(|\langle r, \xi ^k\rangle | \le 1\) for \(1 \le r,k \le N\), all of them can be bounded by

By the formula before Lemma 4.3 in [11], and the lines after the proof of this lemma, we may conclude that there exist constants \(\mathrm C < + \infty \) and \(c > 0\) independent of \(N\) such that

Together with the remarks made so far, this allows us to deduce (4.17).

4.4 Concluding the proof of Lemma 1

We write

We will establish that there exist constants \(\mathrm C < +\infty \) and \(c >0\) such that

for \(1 \le m,n\le N\). This will conclude the proof.

Fix \(1 \le m,n \le N\). We first consider \(| \mathsf E _\nu \mu _T (w_{m} \cdot w_{n}) |\). Since the functions \(w_l\) have zero mean by construction, the relation

holds for \(1 \le l \le N\). Using this relation, we compute

Using (4.19) and the fact that \(\int p^2 \, \mathrm d \mu _T = T\), the sum \(S_1\) is rewritten as

Then, still using (4.19), we get

Finally, the terms in the sum \(S_4\) are nonzero only when

Using that \(\int p^4 \mathrm d \mu _T = 3 (\int p^2 \, \mathrm d \mu _T)^2\) and that \(\int p^2 \, \mathrm d \mu _T = T\), \(S_4\) is seen to be equal to

Therefore

Applying the decorrelation bound (2.1) and the exponential estimate (4.17), the result is obtained.
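The reduction of \(S_4\) rests on Wick's (Isserlis') rule for centred Gaussian momenta: \(\int p_a p_b p_c p_d \, \mathrm d\mu_T\) vanishes unless the indices pair up, and \(\int p^4 \, \mathrm d\mu_T = 3T^2\). The sketch below checks this with Gauss–Hermite quadrature, assuming (consistently with \(\int p^2 \, \mathrm d\mu_T = T\)) that under \(\mu_T\) the momenta are independent \(\mathcal N(0,T)\) variables; the temperature value is arbitrary.

```python
import itertools

import numpy as np
from numpy.polynomial.hermite_e import hermegauss

T = 1.7                                      # arbitrary temperature for the check
nodes, weights = hermegauss(10)              # Gauss rule for the weight exp(-x^2 / 2)
weights = weights / np.sqrt(2.0 * np.pi)     # now integrates against N(0, 1)


def moment(n):
    """E[p^n] for p ~ N(0, T); exact up to rounding for n <= 19."""
    return float(np.sum(weights * (np.sqrt(T) * nodes) ** n))


def product_moment(idx):
    """E[p_a p_b p_c p_d] for independent N(0, T) momenta p_0, p_1, ..."""
    counts = {}
    for i in idx:
        counts[i] = counts.get(i, 0) + 1
    return float(np.prod([moment(c) for c in counts.values()]))


def wick(a, b, c, d):
    """Isserlis/Wick rule: T^2 times the number of matching pairings."""
    return T**2 * ((a == b) * (c == d) + (a == c) * (b == d) + (a == d) * (b == c))


print(moment(4), 3 * T**2)   # equal up to rounding
ok = all(abs(product_moment(idx) - wick(*idx)) < 1e-9
         for idx in itertools.product(range(3), repeat=4))
print(ok)                    # True
```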

Let us next consider \(| \mathsf E _\nu \mu _T ( A_{anh} w_{m} \cdot A_{anh} w_{n}) |\). We find from (3.1) that

Now, it follows from (3.2) that \(\phi _t (q,k) \; = \; \sum _s \gamma _{s,t} (k) \rho _s (q)\) for \(1 \le k \le N\). Here \(\rho _s (q) = \rho _s (q_{s-1},q_s,q_{s+1})\) has mean zero since the potentials \(U\) and \(V\) are symmetric. We write

Applying the decorrelation bound (2.1) and the exponential estimate (4.17) yields the result. \(\square \)

5 Convergence results

In this section we prove the convergence result (2.7). We thus assume \(\lambda >0\) and \(\lambda ^{\prime } = 0\).

We start with some definitions (see [5] for details). The dynamics defined in Sect. 2 can also be defined for a set of particles indexed by \(\mathbb Z \) instead of \(\mathbb Z _N\). Points in the phase space are written \(x = (q,p)\), with \(q = (q_k)_{k\in \mathbb Z }\) and \(p = (p_k)_{k \in \mathbb Z }\). We denote by \(\mathcal L \) the generator of this infinite-dimensional dynamics. Recall that \(\mu _T^{(N)}\) denotes the Gibbs measure of a system of size \(N\); we denote by \(\mu _T^{(\infty )}\) the Gibbs measure of the infinite system (as before, the dependence on the size is dropped whenever it is irrelevant). We extend the definition (2.2) of the local currents \(j_{k}\) to all \(k\in \mathbb Z \) (\(j_k = j_{k,har}\) since \(\lambda ^{\prime } = 0\)). If \(u = u (x,\nu )\), with \(\nu = (\nu _k)_{k \in \mathbb Z }\) a sequence of pinnings, and if \(k \in \mathbb Z \), we write \(\tau _k u (x,\nu ) = u(\tau _k x, \tau _k \nu )\), where

Finally, we denote by \(\ll \cdot , \cdot \gg \) the inner product defined, for local bounded functions \(u\) and \(v\), by

where \(v^*\) is the complex conjugate of \(v\), and by \({\mathcal{H }}\) the corresponding Hilbert space, obtained by completion of the bounded local functions.

We start with two lemmas. We have no reason to expect Lemma 2 to hold if an anharmonic potential is added, and this is the main reason why we restrict ourselves here to harmonic interactions.

Lemma 2

There exists a constant \(\mathrm C < +\infty \) such that, for any realization of the pinnings, for the finite-dimensional dynamics with free or fixed B.C. as well as for the infinite dynamics, for any \(k\ge 1\) and for any \(l \in \mathbb Z _N\) (resp. \(l \in \mathbb Z \) for the infinite dynamics),

Proof

We consider the infinite-dimensional dynamics; the other cases are similar. We can take \(l=0\) without loss of generality. The function \(j_0\) is of the form \(j_0 = \langle q, \alpha q \rangle + \langle q, \beta p \rangle + \langle p, \gamma p \rangle \), with \(\alpha = \gamma = 0\) and \(\beta \) defined by

Now, if \(u\) is any function of the type \(u = \langle q, \alpha q \rangle + \langle q, \beta p \rangle + \langle p, \gamma p \rangle \), then \(\mathcal L u = \langle q, \alpha ^{\prime } q \rangle + \langle q, \beta ^{\prime } p \rangle + \langle p, \gamma ^{\prime } p \rangle \) with

where \(\tilde{\gamma }\) is such that \((\tilde{\gamma })_{i,i} = 0\) and \((\tilde{\gamma })_{i,j} = \gamma _{i,j}\) for \(i \ne j\). Thus

and there exists a constant \(\mathrm C < + \infty \) such that \(\zeta _{i,j} = 0\) whenever \(|i| \ge \mathrm C k\) or \(|j| \ge \mathrm C k\) and such that \(|\zeta _{i,j}| \le \mathrm C ^k\) otherwise, with \(\zeta \) one of the three matrices \(\alpha _{(k)}, \beta _{(k)}\) or \(\gamma _{(k)}\). The claim is obtained by expressing \(\Vert \mathcal L ^k j_{l} \Vert _\mathrm{L ^2 (\mu _T)} \) in terms of the matrices \(\alpha _{(k)}\), \(\beta _{(k)}\) and \(\gamma _{(k)}\). \(\square \)
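The mechanism behind Lemma 2 (the support of the coefficient matrices grows at most linearly in \(k\), their entries at most geometrically) can be seen on a small example. Since the update rule for \(\alpha_{(k)}, \beta_{(k)}, \gamma_{(k)}\) is not reproduced above, the sketch assumes one: the harmonic flow \(\dot q = p\), \(\dot p = -\Phi q\) plus a momentum-flip noise of strength \(\lambda\), which is where a zero-diagonal matrix like \(\tilde\gamma\) appears. The tridiagonal \(\Phi\), the initial local \(\beta\) and all parameters are hypothetical. Each step widens the support by at most one site and multiplies the largest entry by at most \(K = 2 + 2\max_i \sum_j |\Phi_{i,j}| + 4\lambda\).

```python
import numpy as np


def pinned_laplacian(nu):
    """Assumed Phi = -Delta + diag(nu) on a finite chain with fixed B.C."""
    n = len(nu)
    phi = np.diag(2.0 + nu)
    off = np.arange(n - 1)
    phi[off, off + 1] = -1.0
    phi[off + 1, off] = -1.0
    return phi


def sym(m):
    return 0.5 * (m + m.T)


def apply_generator(alpha, beta, gamma, phi, lam):
    """Assumed action of L = A + lam * S on <q,alpha q> + <q,beta p> + <p,gamma p>:
    A is the harmonic flow (dq/dt = p, dp/dt = -Phi q), S a momentum-flip noise."""
    gamma_tilde = gamma - np.diag(np.diag(gamma))   # off-diagonal part of gamma
    new_alpha = -sym(beta @ phi)
    new_beta = 2.0 * alpha - 2.0 * phi @ gamma - 2.0 * lam * beta
    new_gamma = sym(beta) - 4.0 * lam * gamma_tilde
    return new_alpha, new_beta, new_gamma


def iterate(k_max=12, n=61, lam=0.1, seed=0):
    """Apply the assumed generator k_max times to a hypothetical local current,
    recording the support radius and the largest entry after each step."""
    rng = np.random.default_rng(seed)
    phi = pinned_laplacian(rng.uniform(0.5, 1.5, size=n))
    c = n // 2
    alpha, beta, gamma = (np.zeros((n, n)) for _ in range(3))
    beta[c + 1, c] = 1.0                  # hypothetical j_0 = q_{c+1} p_c
    # Growth factor: each step multiplies the largest entry by at most K.
    K = 2.0 + 2.0 * np.abs(phi).sum(axis=1).max() + 4.0 * lam
    radii, maxima = [], []
    for _ in range(k_max):
        alpha, beta, gamma = apply_generator(alpha, beta, gamma, phi, lam)
        rows, cols = np.nonzero(np.abs(alpha) + np.abs(beta) + np.abs(gamma))
        radii.append(int(max(np.abs(rows - c).max(), np.abs(cols - c).max())))
        maxima.append(max(float(np.abs(m).max()) for m in (alpha, beta, gamma)))
    return radii, maxima, K


radii, maxima, K = iterate()
print(radii)   # support radius after k steps is at most 1 + k
print(all(m <= K ** k for k, m in enumerate(maxima, start=1)))  # True
```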

The explicit representation of the matrix \(\Phi ^{-1}\) given in Lemma 1.1 of [9] allows us to deduce the following lemma.

Lemma 3

Let \(f\) and \(g\) be two polynomials of the type \(\langle q, \alpha q \rangle + \langle q, \beta p \rangle + \langle p, \gamma p \rangle \), and assume that there exists \(n\in \mathbb N \) such that \(\alpha _{i,j} = \beta _{i,j} = \gamma _{i,j} = 0\) whenever \(|i|> n\) or \(|j|> n\). Then there exists \(c >0\) such that, for fixed or periodic B.C.,

Now let \(\mathbb D = \{ z \in \mathbb{C }: \mathfrak R z > 0 \}\). For every \(z\in \mathbb D \), let \(u_z\) be the unique solution to the resolvent equation in \({\mathcal{H }}\)

We know from Theorem 1 in [5] (see Note 2), and from its proof, that

and that

For \(z \in \mathbb D \) and \(N \ge 3\), let \(u_{k,z,N}\) be the unique solution to the equation

so that

Lemma 4

For fixed or free boundary conditions and for almost all realizations of the pinnings,

Proof

By (5.2) and (5.3), it suffices to establish separately that, for every \(z \in \mathbb D \) and for almost every realization of the pinnings,

The proofs of these four relations are very similar, and we focus on the first one. We proceed in two steps: we first prove the result for \(|z|\) large enough, and then extend it to all \(z\in \mathbb D \).

First step. Here we fix \(z \in \mathbb D \) with \(|z|\) large enough. We first assume periodic boundary conditions. The function \(u_z\) solving (5.1) may be given by

where the series converges by virtue of Lemma 2 for \(|z|\) large enough. Now let \(n \ge 1\). We compute

For every given \(k\), the sum over \(l\) actually contains only \(\mathrm C \, k\) nonzero terms, for some \(\mathrm C < + \infty \). From this fact and from Lemma , we conclude that the second sum on the right-hand side of (5.6) converges to 0 as \(n \rightarrow \infty \). Similarly, we write

Here as well, the second term in (5.7) is such that

To handle the first term in (5.7), let us write

Then in fact

The result is obtained by letting \(N \rightarrow \infty \), invoking Lemma 3 and the ergodic theorem, and then letting \(n \rightarrow \infty \). If we had started with fixed boundary conditions, then, for every fixed \(n\), all the previous formulas would remain valid up to boundary terms that vanish in the limit \(N \rightarrow \infty \) thanks to the factor \(1/N\).
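The series representation of \(u_z\) in the first step is a Neumann series, presumably of the form \(u_z = \sum_{k\ge 0} z^{-(k+1)} \mathcal L^k j_0\), which converges once \(|z|\) exceeds the geometric growth rate of \(\Vert \mathcal L^k j_0 \Vert\) controlled by Lemma 2. The finite-dimensional sketch below uses a hypothetical random matrix in place of the generator, a random vector in place of the current, and the operator norm as the growth rate; it compares partial sums with a direct solve of \((z - \mathcal L) u = j_0\).

```python
import numpy as np


def neumann_resolvent(L, current, z, terms):
    """Partial sum of sum_{k >= 0} L^k current / z^(k+1), the Neumann series
    of (z - L)^{-1} current."""
    u = np.zeros(len(current), dtype=complex)
    term = current / z                  # k = 0 term
    for _ in range(terms):
        u += term
        term = (L @ term) / z           # next term: L^(k+1) current / z^(k+2)
    return u


rng = np.random.default_rng(3)
n = 40
L = rng.normal(size=(n, n)) / np.sqrt(n)   # hypothetical stand-in for the generator
current = rng.normal(size=n)               # hypothetical stand-in for the current
z = 2.0 * np.linalg.norm(L, 2) + 0.5j      # Re z > 0 and |z| > 2 ||L||

u_series = neumann_resolvent(L, current, z, terms=80)
u_exact = np.linalg.solve(z * np.eye(n) - L, current)
print(np.max(np.abs(u_series - u_exact)))  # of the order of rounding error
```

With \(|z| > 2\Vert \mathcal L\Vert\) the truncation error after 80 terms is below \(2^{-80}\) times \(\Vert j_0\Vert/|z|\); for smaller \(|z|\) the series may diverge, which is why the second step relies on analyticity instead.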

Second step. Denote by \({\mathfrak{L }}_{N,\nu } (z)\) and \({\mathfrak{L }} (z)\) the complex functions defined on \({\mathbb{D }}\) by

First, these functions are well defined and analytic on \({\mathbb{D }}\). Moreover, by an argument similar to that of [5], they are uniformly bounded on \({\mathbb{D }}\) by a constant independent of \(N\) and of the realization of the pinning \(\nu \).

Fix a realization of the pinnings. Being uniformly bounded, the family \(\{ \mathfrak{L }_{N,\nu } \; ;\; N \ge 1\}\) is normal, and by Montel’s theorem we can extract a subsequence \(\{\mathfrak{L }_{N_{k}, \nu }\}_{k \ge 1}\) that converges, uniformly on every compact subset of \({\mathbb{D }}\), to an analytic function \(f^{\star }_{\nu }\).

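By the first step, \(f_{\nu }^{\star } (z) ={\mathfrak{L }} (z)\) for every real \(z>z_0\), where \(z_0\) is large enough for the first step to apply. Since both functions are analytic on the connected domain \({\mathbb{D }}\), the identity theorem implies that \(f^{\star }_{\nu }\) coincides with \({\mathfrak{L }} \) on \({\mathbb{D }}\). Hence every subsequence of \(\{{\mathfrak{L }}_{N,\nu }\}_{N \ge 1}\) has a further subsequence converging to \({\mathfrak{L }}\), and therefore the whole sequence \(\{{\mathfrak{L }}_{N,\nu } (z)\}_{N \ge 1}\) converges to \({\mathfrak{L }} (z)\) for every \(z \in \mathbb{D }\). \(\square \)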

Following a classical argument, we can now proceed to the

Proof of (2.7)

For any \(z > 0\), it holds that

Here \(\mathcal M _{z,N,t}\) is a stationary martingale with variance given by

Here we used the equality \(\mu _T \big ( u_{z,N} \cdot A_{har} u_{z,N} \big ) = 0\). Next

and

Recalling that \(\mu _T \big ( u_{z,N} \cdot (z - L) u_{z,N} \big ) = \mu _T \big ( u_{z,N} \cdot \mathcal J _N \big )\), we complete the proof by taking \(z = 1/t\) and invoking Lemma 4. \(\square \)

6 Lower bound in the absence of anharmonicity

Here we establish the lower bound in (2.8), so we assume \(\lambda >0\) and \(\lambda ^{\prime } = 0\). We also assume periodic boundary conditions. We use the same method as in [5] (see also [12]). According to Sect. 5, it is enough to establish that there exists a constant \(c>0\) such that, for almost every realization of the pinnings, for every \(z >0\) and for every \(N \ge 3\),

Indeed, by (5.5), \(\mu _T \big ( \mathcal J _N \cdot ( z - L)^{-1} \mathcal J _N \big ) = \mu _T \big ( u_{z,N} \cdot ( z - L) u_{z,N} \big )\), and, by (5.8), this quantity converges to the right-hand side of (2.7).

Proof of (6.1)

For periodic B.C., the total current \(J_N\) is given by

To get a lower bound on the conductivity, we use the following variational formula

where the supremum is taken over the test functions \(f\in \mathcal C ^{\infty }_{temp}(\mathbb R ^{2N})\). See [23] for a proof. We take \(f\) of the form

where \(M\) is the antisymmetric matrix such that \(M_{i,j}= \delta _{i,j-1} - \delta _{i,j+1}\), with the convention of periodic B.C.: \(\delta _{1,N+1} = \delta _{1,1}\) and \(\delta _{N,0} = \delta _{N,N}\).
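The periodic convention for \(M\), and the two facts used just below (\(\beta_{i,i}=0\) and the antisymmetry of \(\beta\Phi = \Phi M \Phi\)), can be checked on a small chain. Because \(\Phi\) is invertible, \(\beta\Phi = \Phi M\Phi\) gives \(\beta = \Phi M\). The sketch assumes \(\Phi = -\Delta + \mathrm{diag}(\nu)\) with periodic B.C. and hypothetical pinnings; the zero diagonal of \(\Phi M\) then comes from the equal nearest-neighbour couplings.

```python
import numpy as np


def periodic_M(n):
    """M_{i,j} = delta_{i,j-1} - delta_{i,j+1}, indices mod n (periodic B.C.)."""
    m = np.zeros((n, n))
    for i in range(n):
        m[i, (i + 1) % n] = 1.0    # delta_{i,j-1} = 1 iff j = i + 1
        m[i, (i - 1) % n] = -1.0   # delta_{i,j+1} = 1 iff j = i - 1
    return m


def periodic_phi(nu):
    """Assumed Phi = -Delta + diag(nu) with periodic B.C."""
    n = len(nu)
    phi = np.diag(2.0 + nu)
    for i in range(n):
        phi[i, (i + 1) % n] = -1.0
        phi[i, (i - 1) % n] = -1.0
    return phi


rng = np.random.default_rng(2)
n = 7
phi = periodic_phi(rng.uniform(0.5, 1.5, size=n))   # hypothetical pinnings
M = periodic_M(n)
beta = phi @ M                                      # from beta Phi = Phi M Phi

print(np.allclose(M, -M.T))                        # True: M is antisymmetric
print(np.allclose(np.diag(beta), 0.0))             # True: beta_{i,i} = 0
print(np.allclose(beta @ phi, -(beta @ phi).T))    # True: Phi M Phi antisymmetric
```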

First, we have

since \(\beta \Phi = \Phi M \Phi \) is antisymmetric, and since \(\beta _{i,i} = 0\) for \(1\le i \le N\). Since \(S(p_i p_j)= -4 p_i p_j\) for \(i \ne j\), we obtain

for some constant \(\mathrm C < + \infty \). Next, since \(S p_k = -2 p_k\) for \(1 \le k \le N\), there exists some constant \(\mathrm C < + \infty \) such that

Let us finally estimate the term \(\mu _T (J_N \, f)\):

By (6.3), (6.4), (6.5) and the variational formula (6.2), we find that there exists a constant \(\mathrm C < + \infty \), independent of the realization of the disorder, of \(\lambda \) and of \(N\), such that for any positive \(a\),

Optimizing over \(a\), we obtain

Since \(\mathcal J _N = J_N/\sqrt{N}\), this shows (6.1). \(\square \)
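The proof of (6.1) uses only the eigenrelations \(S p_k = -2p_k\) and \(S(p_ip_j) = -4p_ip_j\) for \(i\ne j\). Assuming, for this sketch only, that \(S\) is the momentum-flip generator \((Sf)(p) = \sum_k [f(p^k) - f(p)]\), where \(p^k\) is \(p\) with the sign of \(p_k\) reversed, these relations (and \(S p_i^2 = 0\)) can be checked pointwise at a random momentum; `flip_noise` is a hypothetical helper.

```python
import random


def flip_noise(f):
    """Hypothetical momentum-flip generator: (S f)(p) = sum_m [f(p^m) - f(p)],
    where p^m is p with the sign of p_m reversed."""
    def sf(p):
        total = 0.0
        for m in range(len(p)):
            flipped = list(p)
            flipped[m] = -flipped[m]
            total += f(flipped) - f(p)
        return total
    return sf


random.seed(0)
p = [random.uniform(-2.0, 2.0) for _ in range(5)]
i, j, k = 1, 3, 2

print(flip_noise(lambda x: x[k])(p), -2 * p[k])               # S p_k = -2 p_k
print(flip_noise(lambda x: x[i] * x[j])(p), -4 * p[i] * p[j])  # S(p_i p_j) = -4 p_i p_j
print(flip_noise(lambda x: x[i] ** 2)(p))                     # S p_i^2 = 0
```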

Notes

1. Here and in the following, \(M^\dagger \) denotes the transpose of the matrix \(M\).

2. The model studied there is not exactly the same; however, the proofs of the properties mentioned here can be readily adapted.

References

Ajanki, O., Huveneers, F.: Rigorous scaling law for the heat current in disordered harmonic chain. Commun. Math. Phys. 301(3), 841–883 (2011)

Aoki, K., Lukkarinen, J., Spohn, H.: Energy transport in weakly anharmonic chains. J. Stat. Phys. 124(5), 1105–1129 (2006)

Basile, G., Bernardin, C., Olla, S.: Thermal conductivity for a momentum conservative model. Commun. Math. Phys. 287(1), 67–98 (2009)

Basko, D.M.: Weak chaos in the disordered nonlinear Schrödinger chain: destruction of Anderson localization by Arnold diffusion. Ann. Phys. 326(7), 1577–1655 (2011)

Bernardin, C.: Thermal conductivity for a noisy disordered harmonic chain. J. Stat. Phys. 133(3), 417–433 (2008)

Bernardin, C., Olla, S.: Fourier’s law for a microscopic model of heat conduction. J. Stat. Phys. 121(3–4), 271–289 (2005)

Bodineau, T., Helffer, B.: Correlations, spectral gap and log-Sobolev inequalities for unbounded spin systems. In: Weikard, R., Weinstein, G. (eds.) Differential Equations and Mathematical Physics: Proceedings of an International Conference Held at the University of Alabama in Birmingham, pp. 51–66. American Mathematical Society and International Press (2000)

Bonetto, F., Lebowitz, J.L., Rey-Bellet, L.: Fourier’s law: a challenge to theorists. In: Fokas, A., Grigoryan, A., Kibble, T., Zegarlinski, B. (eds.) Mathematical physics 2000, pp. 128–150. Imperial College Press (2000)

Brydges, D., Fröhlich, J., Spencer, T.: The random walk representation of classical spin systems and correlation inequalities. Commun. Math. Phys. 83(1), 123–150 (1982)

Casher, A., Lebowitz, J.L.: Heat flow in regular and disordered harmonic chains. J. Math. Phys. 12(8), 1701–1711 (1971)

Damanik, D.: A short course on one-dimensional random Schrödinger operators, pp. 1–31 (2011). arXiv:1107.1094

Dhar, A., Venkateshan, K., Lebowitz, J.L.: Heat conduction in disordered harmonic lattices with energy-conserving noise. Phys. Rev. E 83(2), 021108 (2011)

Dhar, A., Lebowitz, J.L.: Effect of phonon–phonon interactions on localization. Phys. Rev. Lett. 100(13), 134301 (2008)

Dhar, A.: Heat conduction in the disordered harmonic chain revisited. Phys. Rev. Lett. 86(26), 5882–5885 (2001)

Kipnis, C., Landim, C.: Scaling Limits of Interacting Particle Systems. Springer, Berlin (1999)

Kunz, H., Souillard, B.: Sur le spectre des opérateurs aux différences finies aléatoires. Commun. Math. Phys. 78(2), 201–246 (1980)

Lepri, S., Livi, R., Politi, A.: Thermal conduction in classical low-dimensional lattices. Phys. Rep. 377(1), 1–80 (2003)

Liverani, C., Olla, S.: Toward the Fourier law for a weakly interacting anharmonic crystal, pp. 1–35 (2010). arXiv:1006.2900

Lukkarinen, J., Spohn, H.: Anomalous energy transport in the FPU\(-\beta \) chain. Commun. Pure Appl. Math. 61(12), 1753–1786 (2008)

Oganesyan, V., Pal, A., Huse, D.: Energy transport in disordered classical spin chains. Phys. Rev. B 80(11), 115104 (2009)

Rieder, Z., Lebowitz, J.L., Lieb, E.H.: Properties of a harmonic crystal in a stationary nonequilibrium state. J. Math. Phys. 8(5), 1073–1078 (1967)

Rubin, R.J., Greer, W.L.: Abnormal lattice thermal conductivity of a one-dimensional, harmonic, isotopically disordered crystal. J. Math. Phys. 12(8), 1686–1701 (1971)

Sethuraman, S.: Central limit theorems for additive functionals of the simple exclusion process. Ann. Probab. 28(1), 277–302 (2000)

Verheggen, T.: Transmission coefficient and heat conduction of a harmonic chain with random masses: asymptotic estimates on products of random matrices. Commun. Math. Phys. 68(1), 69–82 (1979)

Acknowledgments

We thank J.-L. Lebowitz and J. Lukkarinen for their interest in this work and T. Bodineau for useful discussions. We thank C. Liverani, S. Olla and L.-S. Young, the organizers of the Workshop on the Fourier Law and Related Topics, as well as the Fields Institute in Toronto, where this work was initiated, for its kind hospitality. C.B. acknowledges the support of the French Ministry of Education through the grant ANR-10-BLAN 0108. F.H. acknowledges the European Advanced Grant Macroscopic Laws and Dynamical Systems (MALADY) (ERC AdG 246953) for financial support.

Cite this article

Bernardin, C., Huveneers, F. Small perturbation of a disordered harmonic chain by a noise and an anharmonic potential. Probab. Theory Relat. Fields 157, 301–331 (2013). https://doi.org/10.1007/s00440-012-0458-8

DOI: https://doi.org/10.1007/s00440-012-0458-8