Abstract

Effective functioning of outdoor vision systems depends upon the quality of their input. Different weather conditions (such as rain, snowfall, haze, mist, fog, and cloud) arise from the optical properties of light and the presence of atmospheric particles of different sizes. Thus, outdoor images and videos captured in adverse environmental conditions have poor visibility due to the scattering of light by atmospheric particles. Visibility restoration (dehazing) of degraded (hazy) images is critical for the useful performance of outdoor vision systems. Most existing image dehazing methods use the atmospheric scattering model (ASM) to improve the visibility of hazy images or videos. According to ASM, the visual quality of a dehazed image depends upon accurate estimation of transmission. Existing methods rely on different priors with strong assumptions to estimate transmission. The proposed method introduces a tight lower bound on transmission. However, the accuracy of the proposed tight lower bound depends upon the minimum color channel of the haze-free image. Therefore, a prior is proposed to estimate the minimum color channel of the haze-free image. Furthermore, a blind assessment metric is proposed to evaluate dehazing methods; it is computed from the restored and matching corner points of the hazy and dehazed images. The obtained results are compared with those of renowned dehazing methods through qualitative and quantitative analysis to demonstrate the efficacy of the proposed method.

1 Introduction

Outdoor vision systems perform well under clear visibility. However, the physical properties of atmospheric particles and the optical behavior of light create varying weather conditions (such as haze, mist, fog, snowfall, and rain), which restrict the visibility of the outdoor environment. The capability of outdoor vision systems (such as surveillance, traffic monitoring, object detection/recognition, and intelligent transportation) is degraded in adverse weather. Images and videos captured in poor weather have low contrast and faded colors due to reduced visibility [1]. Therefore, an artificial intelligence-based solution is essential for better utilization of outdoor vision systems [2, 3].

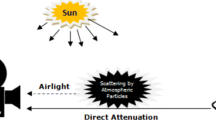

Light reflected from the surface of an object is scattered and absorbed by atmospheric particles; therefore, images fade in poor weather. Scattering has a more severe effect than absorption and is best described by ASM. ASM accounts for the physical and optical properties of the atmosphere and of light (such as the varying shape and size of atmospheric particles and the wavelength of light) in image formation [4,5,6]. According to ASM, the camera observes two types of radiance: (1) the fraction of reflected light that is not scattered (direct attenuation) and (2) the fraction of scattered light that reaches the camera (airlight). Therefore, the captured images lose contrast and color [7]. Thus, dehazing based on ASM is vital for vision-assisted systems.

Classical image restoration methods improve the histogram through equalization and stretching, which enhances the brightness and contrast of the hazy image. However, these methods do not consider ASM and therefore cannot restore the ideal haze-free image [8,9,10].

In ASM, the depth of a scene point determines the concentration of haze/fog at that point [5, 11]. The prime objective of dehazing is therefore to recover the depth of each scene point; once the scene depth is known, ASM can be solved easily to obtain an ideal dehazed image. Accordingly, dehazing methods are classified by how they obtain this information into three groups: (1) extra information based, (2) multiple images based, and (3) single image based.

Classical dehazing methods of the first group need extra information such as depth cues, which requires user interaction. Therefore, these methods are not suitable for real-time applications [12, 13]. Multiple image-based methods [14,15,16,17,18] require more than one image of the same scene, taken at varying degrees of polarization, to measure the scene depth. These methods involve extra hardware, which limits their applicability. Thus, single image-based dehazing is the prime focus nowadays [5].

Single image dehazing relies on observations or priors with strong assumptions, and its success depends upon these assumptions. Prominent single image dehazing methods are presented in [5, 19, 20]. In [5], a strong prior is presented to estimate transmission, which is further refined using soft matting; however, soft matting makes the method computationally expensive. In [19], a fast guided filter replaces the soft matting of [5] to improve computational efficiency. In [20], a boundary constraint on the transmission is presented, which is further refined using optimization. The method of [20] produces realistic results but is computationally inefficient for large images. The proposed work presents a tight lower bound on transmission, which is further optimized using regularization to estimate the original transmission. The rest of the paper is organized as follows. Section 2 reviews related work. ASM is discussed in Sect. 3. Details of the proposed work are presented in Sect. 4. Experimental results and their analysis are discussed in Sect. 5. Finally, the paper is concluded in Sect. 6.

2 Related work

Single image-based dehazing is broadly classified into four categories: (1) priors with filtering based, (2) observation and statistics based, (3) sky region segmentation based, and (4) optimization based.

Noteworthy single image dehazing methods based on strong priors with filtering have been presented in [5, 19,20,21,22,23,24,25,26,27,28,29,30,31,32,33]. In [21], it is observed that haze-free images have more contrast than hazy images. Thus, the local contrast of the hazy image is maximized to remove haze. The results are bright. However, maximizing contrast distorts the original colors, which undermines the physical validity of this method.

The method in [22] approximates the atmospheric veil with a median filter. It can restore gray or color images. However, it does not preserve gradients and performs poorly in dense haze.

In [23], the problem of [22] is addressed by using a joint bilateral filter to preserve edges. The initial atmospheric veil is estimated using a median filter and refined using a joint bilateral filter to recover edge information at depth discontinuities. The strength of this method is its computational efficiency, which makes it usable in real-time applications. However, it does not perform well for objects brighter than the atmospheric light.

An eminent work is presented in [5], in which statistics of the color channels of outdoor haze-free images reveal that most non-sky patches contain pixels that are nearly black in at least one color channel. Applying a minimum filter over the local neighborhood of the minimum color channel of a haze-free image yields its dark channel, which is usually almost completely dark; this dark channel prior (DCP) is used to solve ASM. DCP produces high-quality, bright results. However, it generates poor results for images containing sky and produces blocking artifacts near depth discontinuities due to minimum filtering in the local neighborhood. Soft matting is used to remove these artifacts, but it is computationally expensive.
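The dark channel and the coarse transmission it implies can be sketched in Python with NumPy as below. This is an illustrative sketch rather than the implementation of [5]: the helper names, the 15 × 15 window, the edge padding, and the factor `omega` (which keeps a small amount of haze) are assumptions, and the soft-matting refinement is omitted. The transmission expression follows from applying the prior to the ASM after normalizing each channel by the atmospheric light.

```python
import numpy as np

def local_min(x, size):
    """Minimum filter over a size x size window (size must be odd), edge-padded."""
    r = size // 2
    padded = np.pad(x, r, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return windows.min(axis=(-2, -1))

def dark_channel(img, size=15):
    """Dark channel: per-pixel minimum over color channels, then a local minimum filter."""
    return local_min(img.min(axis=2), size)

def dcp_transmission(img, A, size=15, omega=0.95):
    """Coarse DCP transmission 1 - omega * dark_channel(I / A).

    img: H x W x 3 array in [0, 1]; A: per-channel atmospheric light (length 3).
    omega < 1 keeps a small amount of haze (illustrative value).
    """
    normalized = img / np.asarray(A, dtype=float).reshape(1, 1, 3)
    return 1.0 - omega * dark_channel(normalized, size)
```

For example, `dcp_transmission(img, A=[0.9, 0.9, 0.9])` returns an H × W map in which hazier regions (with a brighter dark channel) receive a lower transmission.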

The efficiency of DCP is increased in [19] by replacing soft matting with the guided filter: the transmission obtained by DCP is refined using the guided filter. The guided filter transfers the structure of a guide image to the filtered image; the guide can be the input image itself or another image. The guided filter preserves image structure and is considerably faster than DCP with soft matting. However, it amplifies color distortion in images with sky regions.
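A gray-scale guided filter can be written with box filters using the local linear model q = a·I + b in each window, as in the sketch below. The radius `r`, the regularizer `eps`, the edge padding, and the helper names are assumptions; a typical use would pass a gray version of the hazy image as `guide` and the coarse DCP transmission as `src`.

```python
import numpy as np

def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window, with edge padding at the borders."""
    size = 2 * r + 1
    padded = np.pad(x, r, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return windows.mean(axis=(-2, -1))

def guided_filter(guide, src, r=8, eps=1e-3):
    """Gray-scale guided filter: the output is locally a linear transform of the guide."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    var_i = box_mean(guide * guide, r) - mean_i * mean_i
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    a = cov_ip / (var_i + eps)   # per-window slope
    b = mean_p - a * mean_i      # per-window offset
    # Average the coefficients of all windows covering each pixel.
    return box_mean(a, r) * guide + box_mean(b, r)
```

A larger `eps` smooths more aggressively; a small `eps` lets strong edges of the guide pass into the output, which is why the filter preserves structure.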

In [24], DCP is modified to reduce blocking artifacts. The modified dark channel is computed as the root mean square of the pixels in the local neighborhood of the hazy image, to correctly estimate transmission in the sky region. However, the performance of this method depends upon proper selection of the size of the local neighborhood.

Filtering-based methods perform well. However, the accuracy of the transmission strongly depends upon assumptions and priors, and these methods are computationally inefficient. Thus, several fast dehazing methods based on observation and statistics have been presented in [2, 30, 31].

In [30], it is observed that the difference between brightness and saturation increases with depth. Thus, a linear depth estimation model is presented. However, the difference between brightness and saturation is very high at edges even if an edge is close to the camera, which leads to wrong depth estimates at edges. Therefore, this method loses some edges. An improved depth model is presented in [2], which improves the depth estimate and preserves more edges using a median filter. However, it is limited to images with homogeneous fog.
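The linear depth model described above can be sketched as a per-pixel function of HSV brightness v and saturation s, depth = θ0 + θ1·v + θ2·s. The coefficient values below are hypothetical placeholders chosen only to show the sign pattern implied by the observation (depth grows with brightness and falls with saturation); they are not the coefficients of [30], which would be fitted to training data.

```python
import numpy as np

# Hypothetical coefficients for illustration only; a real model fits them to data.
THETA0, THETA1, THETA2 = 0.1, 1.0, -0.8

def linear_depth(img, theta0=THETA0, theta1=THETA1, theta2=THETA2):
    """Relative depth as a linear function of brightness v and saturation s.

    img: H x W x 3 RGB array in [0, 1].
    """
    v = img.max(axis=2)                                # HSV value (brightness)
    s = (v - img.min(axis=2)) / np.maximum(v, 1e-6)    # HSV saturation
    return theta0 + theta1 * v + theta2 * s
```

A bright, desaturated (hazy-looking) pixel therefore receives a larger depth than a dark, saturated one, which is also why strong edges with high brightness-saturation contrast can be assigned a misleading depth.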

In [31], a fast linear transformation-based method is presented to estimate the minimum color channel of the haze-free image. It produces visually compelling results, is fast, and is applicable in real time. However, the quality of its results depends upon the selection of a dehazing control factor (a constant that suppresses the value of the minimum color channel).

DCP-based methods produce quality results for non-sky images but struggle in sky regions. Thus, some effort has been made to handle images with sky regions by segmenting them into sky and non-sky regions. Generally, these methods obtain the transmission for the sky and non-sky regions separately. Important methods based on sky region segmentation have been presented in [28, 32, 33].

An adaptive sky region prior is presented in [32] to estimate atmospheric light. The sky region is obtained by a color edge detection technique, and color normalization is used to avoid color distortion in the sky region. However, this method is computationally inefficient.

In [33], sky and non-sky regions are separated. Region-wise transmission is obtained using the color characteristics of the sky region, and DCP is used to obtain the transmission of the non-sky region. The guided filter is used to preserve edges. However, the selection of feature pixels is biased by maximum brightness and gradient, which limits the applicability of this method.

In [28], a method is presented that decomposes the hazy image into sub-sky regions using quad-tree decomposition. The image is segmented into sky and non-sky regions using a region-growing algorithm seeded with the decomposed image. The segmented image is further smoothed using a Gaussian filter. This method obtains natural and clear results with fewer blocky artifacts. However, it works well only for images with large sky regions and is inefficient for general real-world images.

Dehazing based on priors with filtering, observation and statistics, or sky region segmentation requires significant improvement in edge recovery, restoration of original colors, reduction of artifacts, and efficiency. Dehazing based on optimization [20, 25,26,27] addresses these gaps.

An optimization-based contrast improvement technique is presented in [25] with the objective of reducing information loss. Further, a quad-tree subdivision method is used to obtain the atmospheric light. This method solves the problem of overestimation. However, it loses some edges due to the window-shifting operation in the local neighborhood.
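A quad-tree search for the atmospheric light can be sketched as follows. The scoring rule (mean minus standard deviation of a block, favoring bright and flat regions), the stopping size `min_size`, and returning the mean color of the final block are assumptions made for illustration; [25] may use different choices.

```python
import numpy as np

def quadtree_airlight(img, min_size=8):
    """Estimate atmospheric light by recursively keeping the brightest, flattest quadrant.

    img: H x W x 3 array. Each step splits the current region into four quadrants
    and keeps the one with the highest score (mean minus standard deviation).
    """
    region = img
    while min(region.shape[0], region.shape[1]) > 2 * min_size:
        h, w = region.shape[0] // 2, region.shape[1] // 2
        quadrants = [region[:h, :w], region[:h, w:], region[h:, :w], region[h:, w:]]
        scores = [q.mean() - q.std() for q in quadrants]
        region = quadrants[int(np.argmax(scores))]
    # Assumed final step: average color of the selected block.
    return region.reshape(-1, region.shape[2]).mean(axis=0)
```

Subtracting the standard deviation steers the search away from small bright objects (for example, white cars or lamps), which would otherwise inflate the atmospheric light.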

A boundary constraint on the transmission is presented in [20], which is further refined using contextual regularization. Dehazing is modeled as an optimization problem that combines this constraint with a weighted \(l_1\)-norm regularization. This method produces quality results with few assumptions. However, its performance depends on (1) the selection of two constants used to compute the boundary constraint and (2) the number of iterations used to refine the transmission through regularization.
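A boundary constraint of this kind can be sketched by requiring the radiance recovered from the ASM, J = A + (I − A)/t, to stay within per-channel bounds C0 ≤ J ≤ C1. Treating C0 and C1 as the two constants mentioned above is an assumption about [20]. Each bound yields a lower limit on t, the largest limit over channels is kept, and a max filter followed by a min filter (a morphological closing) makes the constraint patch-wise. Function names, the window size, and the bound values are illustrative.

```python
import numpy as np

def local_filter(x, size, reducer):
    """Apply a window reducer (np.min or np.max) over size x size windows, edge-padded."""
    r = size // 2
    padded = np.pad(x, r, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return reducer(windows, axis=(-2, -1))

def boundary_transmission(img, A, C0, C1, size=3):
    """Smallest t for which J = A + (I - A) / t stays inside [C0, C1] in every channel.

    img: H x W x 3; A, C0, C1: length-3 arrays with C0 < A < C1 per channel.
    """
    A, C0, C1 = (np.asarray(v, dtype=float).reshape(1, 1, 3) for v in (A, C0, C1))
    t_low = (A - img) / (A - C0)   # binding where I < A (keeps J >= C0)
    t_high = (A - img) / (A - C1)  # binding where I > A (keeps J <= C1)
    tb = np.minimum(np.maximum(t_low, t_high).max(axis=2), 1.0)
    # Patch-wise constraint: morphological closing (max filter, then min filter).
    tb = local_filter(tb, size, np.max)
    return local_filter(tb, size, np.min)
```

With `size=1` the result is a pixel-wise lower bound on the true transmission whenever the true radiance lies in [C0, C1]; the closing with a larger window trades this strict bound for spatial consistency.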

In [26], dehazing is approached from the perspective of noise. Two maps are constructed to label the severity of noise and the atmospheric light. Noise severity is defined as the weighted sum of brightness and saturation. Particle swarm optimization is used with the objective of maximizing saturation. This method estimates pixel-wise noise. However, it does not consider ASM and is therefore far from realistic dehazing.

A new non-local prior is presented in [27]. The method in [27] observes that the number of distinct colors in an image is much smaller than the number of pixels. Pixels of the same haze-free color, which differ only in the amount of added airlight, form clusters along lines through the atmospheric light (these clusters are called haze lines). Haze lines are used to recover the transmission, which is further refined using regularization. Its advantages are that (1) it estimates pixel-wise transmission and (2) it is linear and deterministic. However, this method may produce wrong results if the atmospheric light is significantly brighter than the scene, because most pixels then point in similar directions.
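The haze-line idea can be sketched as follows: each pixel's offset I − A is expressed in spherical coordinates, pixels with (nearly) the same direction are grouped into one haze line, and the farthest pixel on each line is assumed to be haze-free, so t = r / r_max. The uniform angular binning used here is a simplification for illustration, not the clustering scheme of [27], and the regularization step is omitted; `n_bins` and the function name are assumptions.

```python
import numpy as np

def haze_line_transmission(img, A, n_bins=32, eps=1e-12):
    """Per-pixel transmission from haze lines, using coarse angular binning.

    img: H x W x 3 hazy image; A: length-3 atmospheric light.
    Pixels whose offsets I - A point in the same direction form one haze line;
    the farthest pixel on each line is assumed to be haze-free (t = 1).
    """
    offsets = img.reshape(-1, 3) - np.asarray(A, dtype=float)
    radius = np.linalg.norm(offsets, axis=1)
    # Spherical angles of each offset direction.
    theta = np.arccos(np.clip(offsets[:, 2] / np.maximum(radius, eps), -1.0, 1.0))
    phi = np.arctan2(offsets[:, 1], offsets[:, 0])
    t_bin = np.minimum((theta / np.pi * n_bins).astype(int), n_bins - 1)
    p_bin = np.minimum(((phi + np.pi) / (2 * np.pi) * n_bins).astype(int), n_bins - 1)
    labels = t_bin * n_bins + p_bin
    # Largest radius found on each haze line.
    r_max = np.zeros(n_bins * n_bins)
    np.maximum.at(r_max, labels, radius)
    tran = radius / np.maximum(r_max[labels], eps)
    return tran.reshape(img.shape[:2])
```

When A is much brighter than every scene color, most offsets point in similar directions and fall into the same few bins, which reproduces the failure mode noted above.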

Optimization-based techniques produce compelling results. They unveil the structure of the image, produce quality visual results with faithful colors, restore and preserve edges, and minimize overestimation of transmission. However, these methods have received less attention from researchers due to their computational inefficiency.

3 Problem formulation

The process of image formation in poor weather is shown in Fig. 1. Sunlight penetrates the atmosphere, reaches an object, and is reflected by the surface of the object. The camera thus receives the sum of the direct attenuation and the airlight as irradiance [4, 5].

The physical properties of particles suspended in the air (such as size, type, density, and the presence of humidity) determine the exact amount of light received by the camera. Weather conditions vary with the type (T), size (S), and concentration (C) of atmospheric particles and with the humidity level (H) of the atmosphere. A summary of typical atmospheric conditions is presented in Table 1 [4].

Table 1 shows that air molecules are the smallest particles. Thus, air molecules scatter a negligible amount of light, and an image captured in their presence alone is essentially free of degradation. Haze particles (such as volcanic ash, combustion products, and sea salt) are larger than air molecules and are suspended in the air. When the humidity increases to a very high level, these particles act as nuclei of small water droplets. Fog is a form of haze with saturated humidity: due to condensation, some of the nuclei grow into water droplets. Thus, increased humidity turns haze into fog. Cloud is a form of fog that forms at higher altitude. Rain is a very complex atmospheric condition, which causes spatial as well as temporal variations in images [2, 4].

ASM is mathematically represented by Eq. (1) [4, 14]:

\[ J_d^c(y) = J_o^c(y)\,Tran(y) + A_r\,\bigl(1 - Tran(y)\bigr) \tag{1} \]

where the first term of Eq. (1) is the direct attenuation and the second term is the airlight, y is the position of a scene point (usually its pixel coordinates), \(c\in (R,G,B)\) is the color channel, \(J_o(y)\) is the intensity of the scene point at location y in the haze-free image, \(J_d(y)\) is its intensity in the hazy image, \(A_r\) is the atmospheric light, and Tran(y), termed the transmission at location y, is the fraction of light that directly reaches the camera. Transmission is expressed as:

\[ Tran(y) = e^{-\beta (\lambda )\, depth(y)} \]

where \(0 \le depth(y)\le \infty \) is the depth of the scene point at location y from the camera and \(\beta (\lambda )\) is the atmospheric scattering coefficient, defined as in Eq. (2):

\[ \beta (\lambda ) \propto \frac{1}{\lambda ^{\eta }} \tag{2} \]

where \(\lambda \) is the wavelength of the incident light, \(\beta (\lambda )\) is the scattering coefficient for wavelength \(\lambda \), and \(0 \le \eta \le 4\) is a constant that depends upon the size of the atmospheric particles. In clear weather, the atmosphere consists mainly of air molecules, which are much smaller than the wavelength of light. Therefore, \(\eta =4\) (Rayleigh scattering), which makes scattering strongly wavelength dependent: short (blue) wavelengths are scattered the most, so the sky appears blue in clear weather. In hazy/foggy conditions, the atmospheric particles are large compared with the wavelength. Thus, \(\eta =0\), in which case scattering is independent of wavelength and \(\beta (\lambda )=\beta \) is a constant. This scattering effect is known as homogeneous scattering. However, atmospheric particle size varies from \(10^{-4}\mu m\) to \(10\mu m\) under thin fog or mild haze, which produces varying scattering effects (heterogeneous scattering) [2, 4].

The proposed method assumes that the atmosphere is composed of comparatively large particles such as haze and fog. Therefore, \(\beta (\lambda )=\beta \) is a constant, and the transmission is redefined as in Eq. (3):

\[ Tran(y) = e^{-\beta \, depth(y)} \tag{3} \]

Since \(\beta \ge 0\) and \(depth(y)\ge 0\), Eq. (3) implies that \(0 \le Tran(y)\le 1\). Given an input hazy image \(J_{d}^{c}(y)\), the objective of dehazing is to estimate \(J_o^c(y)\), Tran(y), and \(A_r^c\). Due to the additive airlight in Eq. (1), \(J_o(y)\), \(J_d(y)\), and \(A_r\) are coplanar vectors whose end points are collinear [5]. Since only \(J_d\) is observed while the other three quantities are unknown, dehazing is an ill-posed problem.
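The forward model of Eqs. (1) and (3) can be sketched in a few lines; the same kind of synthesis (a haze-free image, a depth map, an atmospheric light, and β) is what produces the hazy test images described in Sect. 4.1. The function name and the scalar atmospheric light `A_r` are assumptions of this sketch.

```python
import numpy as np

def synthesize_haze(J, depth, A_r, beta=1.0):
    """Apply the ASM: Tran = exp(-beta * depth), J_d = J_o * Tran + A_r * (1 - Tran).

    J: H x W x 3 haze-free image in [0, 1]; depth: H x W non-negative depth map;
    A_r: scalar atmospheric light; beta: homogeneous scattering coefficient.
    """
    tran = np.exp(-beta * depth)
    hazy = J * tran[..., None] + A_r * (1.0 - tran[..., None])
    return hazy, tran
```

For instance, drawing `depth` from a uniform distribution and using `beta=1.0` mirrors the synthetic setup of Fig. 2; each hazy pixel is a convex combination of the haze-free pixel and the atmospheric light, which is the geometric fact behind the ill-posedness noted above.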

As Eq. (1) shows, the haze-free image \(J_{o}^c(y)\) is degraded by the multiplicative transmission Tran(y) and the additive airlight \(A_r(1-Tran(y))\). Both terms depend on Tran(y); thus, accurate estimation of Tran(y) is essential for effective dehazing. The proposed method obtains a tight lower bound on Tran(y) based on an observation of the minimum color channel of the hazy image. The contributions of the proposed work are as follows:

1. A tight lower bound on the transmission is proposed, which is further refined using contextual regularization to obtain an accurate Tran(y).
2. The proposed tight lower bound depends upon the minimum color channel of the haze-free image. Therefore, a prior is proposed to estimate the minimum color channel of the haze-free image from the hazy image.
3. A new blind assessment metric is proposed to measure the effectiveness of dehazing methods based on restored and matching corner points.

4 Proposed method

4.1 Mathematical foundation of the proposed method

Rearranging Eq. (1), the transmission can be obtained as in Eq. (4):

\[ Tran(y) = \frac{J_d^c(y) - A_r}{J_o^c(y) - A_r} \tag{4} \]

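The proposed method assumes that the transmission at a pixel is identical in each color channel of the hazy image. Therefore, the minimum color channel of the hazy image can be used to obtain the transmission, and Eq. (4) is transformed as:

\[ Tran(y) = \frac{\min (J_d^r(y), J_d^g(y), J_d^b(y)) - A_r}{\min (J_o^r(y), J_o^g(y), J_o^b(y)) - A_r} \tag{5} \]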

where min(, , ) is a function that returns the minimum of three values at location y, \(\min _{c\in (r,g,b)}(J_{d}^c(y))\) is the minimum color channel of the hazy image, and \( \min _{c\in (r,g,b)}(J_{o}^c(y))\) is the minimum color channel of the haze-free image. Equation (5) can be rewritten as:

\[ Tran(y) = \frac{\min _{c\in (r,g,b)}(J_{d}^c(y)) - A_r}{\min _{c\in (r,g,b)}(J_{o}^c(y)) - A_r} \tag{6} \]

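According to Eqs. (3) and (6), the transmission lies in [0, 1], so the numerator and denominator of Eq. (6) must have the same sign:

\[ 0 \le \frac{\min _{c\in (r,g,b)}(J_{d}^c(y)) - A_r}{\min _{c\in (r,g,b)}(J_{o}^c(y)) - A_r} \le 1 \]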

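From Eq. (1), each hazy intensity is a convex combination of the haze-free intensity and the atmospheric light. For an object darker than \(A_r\), this gives \(\min _{c\in (r,g,b)}(J_{o}^c(y)) \le \min _{c\in (r,g,b)}(J_{d}^c(y)) \le A_r\) due to the additive airlight; thus, \(\left( \min _{c\in (r,g,b)}(J_{d}^c(y))-A_r \right) < 0 \). However, \(\left( \min _{c\in (r,g,b)}(J_{d}^c(y))-A_r \right) >0 \) if an object is brighter than \(A_r\). Therefore, Eq. (6) is corrected as:

\[ Tran(y) = \frac{\left| \min _{c\in (r,g,b)}(J_{d}^c(y)) - A_r \right|}{\left| \min _{c\in (r,g,b)}(J_{d}^c(y)) - \gamma (y) - A_r \right|} \tag{7} \]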

where \(\mid \cdot \mid \) is the modulus operator, which returns the absolute value, and \(\gamma (y) = \delta (\min _{c\in (r,g,b)}(J_{d}^c(y))-\min _{c\in (r,g,b)}(J_{o}^c(y)))\). The parameter \(\delta \) is introduced to control the value of \(\gamma (y)\). Because of the absolute values, Eq. (7) can also estimate the correct transmission of objects brighter than the atmospheric light.

The proposed method is based on the statistics of \(\gamma (y)\). It can be observed from Eq. (7) that increasing \(\gamma (y)\) produces a smooth, tight lower bound on Tran(y).
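The effect of δ can be checked numerically with the sketch below. It assumes that Eq. (7) has the form Tran(y) = |minJ_d(y) − A_r| / |minJ_d(y) − γ(y) − A_r|, which reduces to Eq. (6) for δ = 1; this form is inferred from the definitions of γ(y) and δ and should be checked against the original. The function name, the scalar `A_r`, and the `eps` guard are assumptions.

```python
import numpy as np

def transmission_lower_bound(hazy, min_haze_free, A_r, delta=1.0, eps=1e-6):
    """Transmission from minimum color channels, following the assumed form of Eq. (7).

    hazy: H x W x 3 hazy image; min_haze_free: H x W estimate of min_c J_o^c;
    A_r: scalar atmospheric light; delta: scaling factor applied to gamma(y).
    """
    min_hazy = hazy.min(axis=2)
    gamma = delta * (min_hazy - min_haze_free)
    numerator = np.abs(min_hazy - A_r)
    denominator = np.abs(min_hazy - gamma - A_r)
    return numerator / np.maximum(denominator, eps)
```

For a pixel darker than A_r, substituting Eq. (1) into this form gives Tran_δ = Tran / (Tran + δ(1 − Tran)), which never exceeds Tran when δ ≥ 1 and equals it when δ = 1; this is the lower-bound behavior examined in Figs. 2 and 4.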

Figure 2 shows Tran(y) obtained using different depth maps with \(\delta =[1.2,1.5]\). Figure 2a shows a haze-free image \(J_{o}^c(y)\). Different depth maps depth(y), generated randomly from a uniform distribution, are shown in Fig. 2b. For each Tran(y), the atmospheric light is also drawn randomly from a uniform distribution. The original transmissions Tran(y) are computed from the depth maps using Eq. (3) with \(\beta =1\). Figure 2d plots Tran(y) against depth(y). The image \(J_{o}^c(y)\) is corrupted using Eq. (1) with the different Tran(y) shown in Fig. 2d to obtain the hazy images \(J_{d}^c(y)\), which are shown in Fig. 2c.

In Fig. 2e, the black curve represents the original Tran(y) and the red curve represents Tran(y) with \(\delta =1.2\). The red curve is smooth and behaves as a tight lower bound on the original transmission. Thus, increasing \(\gamma (y)\) yields a lower bound on the original transmission.

Fig. 3 Haze-free image and ground-truth depth image selected from the NYU dataset [34]. a Haze-free image. b Ground-truth depth image

Fig. 4 Original transmissions obtained with \(\beta =[1,3,5]\) from the ground-truth depth image shown in Fig. 3b, and behavior of the transmission with \(\delta =[1.2,1.5]\). In Fig. 4e, the black, red, and blue curves represent the original transmission, the transmission with \(\delta =1.2\), and the transmission with \(\delta =1.5\), respectively

The blue curve in Fig. 2f represents Tran(y) with \(\delta =1.5\). It can be observed that the blue curve relaxes the lower bound represented by the red curve. Thus, increasing \(\delta \) yields a lower bound on the transmission, but an excessive increase in \(\gamma (y)\) relaxes the tight lower bound. Therefore, a tight lower bound is obtained by increasing \(\gamma (y)\) by a suitable amount, and the proposed method estimates \(\min _{c\in (r,g,b)}(J_{o}^c(y))\) with the objective of increasing \(\gamma (y)\) accordingly.

Furthermore, the proposed tight lower bound is verified on the NYU dataset [34], which contains haze-free images, hazy images, and ground-truth depth images. A haze-free image and its ground-truth depth image, shown in Fig. 3, are selected to validate the proposed tight lower bound. The ground-truth depth image in Fig. 3b is used to compute the original Tran(y) for increasing haze density (\(\beta =[1,3,5]\)). These transmissions are used to contaminate the haze-free image in Fig. 3a through ASM to obtain hazy images. Figure 4 shows the obtained hazy images, the original Tran(y), and the behavior of Tran(y) with \(\delta =[1.2,1.5]\) using Eq. (7).

Figure 4a shows that the original Tran(y) is smooth and decreases with increasing haze density. Figure 4b shows the hazy images obtained using the transmissions in Fig. 4a. Transmissions obtained using Eq. (7) with \(\delta =[1.2,1.5]\) are shown in Fig. 4c, d.

A comparison of the transmissions is shown in Fig. 4e, where the black, red, and blue curves represent the original Tran(y), Tran(y) with \(\delta =1.2\), and Tran(y) with \(\delta =1.5\), respectively. Figure 4e shows that the red curve is a tight lower bound on the original Tran(y), whereas the blue curve relaxes it. As Fig. 4e also shows, the proposed tight lower bound holds independently of haze density.

Fig. 5 Flow diagram of the proposed prior for estimating the minimum color channel of the haze-free image using Eq. (8). The minimum color channel of the hazy image is represented by \(minJ_{d}\), the local neighborhood centered at location y by \(\omega (y)\), and \(C_1(y)\) and \(C_2(y)\) are the minimum and maximum values in \(\omega (y)\). Circles with the symbols −, \(*\), and / represent element-wise subtraction, multiplication, and division, respectively

Moreover, Fig. 4b shows that the ceiling light is brighter than the atmospheric light. The transmissions obtained using Eq. (7) with the true atmospheric light (Fig. 4c, d) are approximately accurate for the ceiling light. Thus, Figs. 2 and 4 validate the proposed tight lower bound obtained using Eq. (7).

4.2 Estimation of minimum color channel

The minimum color channel of the haze-free image \(\min _{c\in (r,g,b)}(J_{o}^c(y))\) is to be estimated from the minimum color channel of the hazy image \(\min _{c\in (r,g,b)}(J_{d}^c(y))\). Let \(minJ_d(y)=\min _{c\in (r,g,b)}(J_{d}^c(y))\) and \(minJ_o(y)=\min _{c\in (r,g,b)}(J_{o}^c(y))\).

Some methods in the literature estimate \(minJ_o(y)\). In [24], an \(l_2\)-norm-based modified DCP is presented. The modified DCP is robust and reduces blocky artifacts. However, it overestimates \(minJ_o(y)\) at long distances, which in turn overestimates the transmission there. In [31], a piece-wise linear transformation-based technique is presented. It also overestimates \(minJ_o(y)\), which increases the transmission at long distances; the overestimated transmission is then tuned using a dehazing control factor.

The proposed prior is based on the statistical behavior of the local neighborhood of a pixel. It assumes that \(minJ_o(y)\) depends non-linearly upon \(minJ_d(y)\) in the local neighborhood \(\omega (y)\). Thus, the proposed prior can be expressed as:

where \(C_1(y) = \min _{x\in \omega (y)}^{l}(minJ_d(x))\), \(C_2(y) = \max _{x\in \omega (y)}^{l}(minJ_d(x))\), and \(\min _{x\in \omega (y)}^{l}(:)\) and \(\max _{x\in \omega (y)}^{l}(:)\) are functions that return the minimum and maximum over the local neighborhood \(\omega (y)\) centered at location y.
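The neighborhood statistics \(C_1(y)\) and \(C_2(y)\) are a local minimum and a local maximum filter applied to \(minJ_d\), as in the sketch below, with the 15 × 15 window used for the reported results as the default. The helper names and the edge padding at image borders are assumptions, and the sketch stops at \(C_1\) and \(C_2\) rather than attempting Eq. (8) itself.

```python
import numpy as np

def local_extrema(x, size=15):
    """Local minimum and maximum of x over a size x size window (size odd, edge-padded)."""
    r = size // 2
    padded = np.pad(x, r, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return windows.min(axis=(-2, -1)), windows.max(axis=(-2, -1))

def neighborhood_bounds(hazy, size=15):
    """Return minJ_d and the per-pixel neighborhood statistics C_1(y), C_2(y).

    hazy: H x W x 3 hazy image.
    """
    min_jd = hazy.min(axis=2)
    c1, c2 = local_extrema(min_jd, size)
    return min_jd, c1, c2
```

Because \(C_1(y) \le minJ_d(y) \le C_2(y)\) holds at every pixel, these statistics vary with each neighborhood instead of being global constants, which is the difference from [31] discussed next.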

In [31], \(C_1(y)\) and \(C_2(y)\) are the minimum and maximum intensities of \(minJ_d(y)\) over the whole image, respectively; thus, they are constants. Because \(C_1\) and \(C_2\) are constant, the value of the numerator increases with depth, and this increased numerator overestimates the transmission at long distances. The proposed method differs from [31] in that \(C_1\) and \(C_2\) vary with each local neighborhood \(\omega (y)\).

Equation (8) ensures \(minJ_o(y) \le minJ_d(y)\). The numerator in Eq. (8) measures the local variation of a pixel within its neighborhood. This variation is low in smooth regions; thus, \(minJ_o(y) \simeq minJ_d(y)\) there. At depth discontinuities, the variation changes abruptly, so \(minJ_o(y)\) is overestimated. To reduce this overestimation, the proposed method further refines \(minJ_o(y)\) as:

where \(minJ_{o}^{r}(y)\) is the refined value of \(minJ_o(y)\) at location y.

Figure 5 shows the flow diagram of the proposed prior for estimating \(minJ_o(y)\) using Eq. (8). A patch given by the local neighborhood \(\omega (y)\) is processed to obtain the value of \(minJ_o(y)\) at location y, and this process is repeated for the local neighborhood of every pixel of the hazy image. All results of the proposed method are obtained with a local neighborhood of size \(15\times 15\).

Figure 6 compares the transmissions obtained using the methods in [5, 24, 31] and the proposed method for the hazy image shown in the last row of Fig. 2c. It can be observed that transmission obtained from an estimated \(minJ_o(y)\) loses some smoothness and accuracy. The transmission obtained using the method in [5] achieves \(psnr=55.90\) with respect to the original transmission. However, it is overestimated at depth discontinuities, which produces halo artifacts, as shown in Fig. 6a. The method in [24] obtains \(psnr=57.84\), which is higher than that of [5]. However, the transmission estimated by [24] is overestimated at long distances. The transmission obtained by [31] achieves \(psnr=58.84\) and is smoother and more accurate than those of [5] and [24]. The transmission obtained by the proposed method is more accurate and smoother than those of [5, 24, 31], achieving \(psnr=61.14\).

Furthermore, the transmission of the proposed method is very close to the original transmission, which indicates that the proposed method tightens the bound on the original transmission. However, it loses smoothness due to wrong estimates at depth discontinuities. Therefore, the proposed method uses the contextual regularization technique of [20] to improve the accuracy and smoothness of the obtained transmission.

4.3 Estimation of atmospheric light

The proposed method uses existing techniques [5, 20] for atmospheric light estimation. However, these techniques assume that the input image is hazy. Atmospheric light does not exist in a clean image, and dehazing a clean image results in unwanted enhancement due to incorrect transmission, which darkens the dehazed image. Therefore, it is important to verify whether the input image is hazy using haze detection methods [35, 36].

The proposed method relies on the method in [20] to estimate the atmospheric light (\(A_r\)); [20] estimates a value \(A^c\) for each color channel c by applying a minimum filter in the local neighborhood of each color channel and then selecting the maximum value of each filtered channel. The proposed method then computes the atmospheric light as:

where \(c\in (r,g,b)\) is the color channel, A is the atmospheric light obtained using the method in [20], and \(A_r\) is the atmospheric light estimated by the proposed method.
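The per-channel estimate \(A^c\) attributed to [20] above (a local minimum filter on each channel, then the maximum of each filtered channel) can be sketched as follows. The window size, the edge padding, and the function names are assumptions; the subsequent combination of \(A^c\) into the proposed \(A_r\) is not reproduced here.

```python
import numpy as np

def local_min(x, size):
    """Minimum filter over a size x size window (size odd), edge-padded."""
    r = size // 2
    padded = np.pad(x, r, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return windows.min(axis=(-2, -1))

def channel_airlight(img, size=15):
    """Per-channel atmospheric light A^c: local minimum filter, then the channel maximum.

    img: H x W x 3 hazy image; returns a length-3 array.
    """
    return np.array([local_min(img[..., c], size).max() for c in range(img.shape[2])])
```

The minimum filter suppresses isolated bright pixels (such as small lamps or specular highlights) that are narrower than the window, so only large bright regions can determine \(A^c\).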

4.4 Recovery of haze-free image

The proposed method computes \(A_r\), estimates \(minJ_{o}^r(y)\), and obtains Tran(y) by substituting \(A_r\) and \(minJ_{o}^r(y)\) into Eq. (7) with \(\delta =1\). Tran(y) is then refined using the regularization method in [20]. The regularized transmission is used to recover the haze-free image using Eq. (10):

\[ J_{o}^c(y) = \frac{J_{d}^c(y) - A_r}{\max (Tran(y), 0.01)} + A_r \tag{10} \]

where \(J_{o}^c(y)\) is the intensity of color channel c of the haze-free image at location y, \(J_{d}^c(y)\) is the intensity of color channel c of the hazy image at location y, \(A_r\) is the atmospheric light, and Tran(y) is the estimated transmission at location y. In the denominator of Eq. (10), the maximum of Tran(y) and 0.01 is used to avoid division by zero.
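The recovery step described above inverts the ASM with the transmission clamped at 0.01. A minimal sketch, assuming a scalar atmospheric light `A_r` and an H × W transmission map (the function name is illustrative):

```python
import numpy as np

def recover(hazy, tran, A_r, t_min=0.01):
    """Invert the ASM: J_o = (J_d - A_r) / max(Tran, t_min) + A_r.

    hazy: H x W x 3 hazy image; tran: H x W transmission; A_r: scalar airlight.
    """
    t = np.maximum(tran, t_min)[..., None]
    return (hazy - A_r) / t + A_r
```

With the exact transmission, this returns the haze-free image; with an underestimated transmission (a lower bound), the term (J_d − A_r) is amplified more strongly, so the accuracy of the bound directly controls over-enhancement.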

5 Experimental analysis

Experiments have been performed to validate the effectiveness and accuracy of the proposed method. The proposed method is implemented in MATLAB R2014a on an Intel Core i7-4790 CPU @ 3.60 GHz. It is evaluated using qualitative and quantitative metrics on the Waterloo dataset [37], the Frida dataset [38], the NYU dataset [34], and challenging hazy images taken from [39, 40].

Quantitative analysis of the proposed method is based on measuring the visibility and structure of the image: the visibility of edges, gradients, color, and corner points is used to measure the performance of the proposed method. Quantitative metrics are classified into two classes: (1) reference-based performance metrics and (2) non-reference-based performance metrics [41, 42].

5.1 Reference-based performance metrics

Reference-based metrics [24] require both the dehazed image and the ground truth of the corresponding haze-free image. In real scenarios, it is not possible to acquire a haze-free image of exactly the same scene as a hazy image due to natural constraints. Therefore, pairs of synthetic haze-free and hazy images are obtained from the Frida [38] and NYU [34] datasets.

Peak signal-to-noise ratio (psnr), color distance (\(\overline{\varDelta E}\)), and the metric \(Q_u\) are used as reference-based metrics to evaluate the proposed method [43]. A high psnr indicates good dehazing quality. The dehazed image is compared with the ground truth of the haze-free image to compute psnr as:

\[ psnr = 10\log _{10}\left( \frac{{Image}_{max}^2}{Error} \right) \tag{11} \]

where Error is the mean squared error between the dehazed image and the ground truth, and \({Image}_{max}\) is the maximum intensity of the image.
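psnr, as described in this subsection, can be computed as in the sketch below. The function name is illustrative, and `image_max` must match the intensity scale of the images (for example, 1.0 for images in [0, 1] or 255 for 8-bit images).

```python
import numpy as np

def psnr(reference, test, image_max=1.0):
    """Peak signal-to-noise ratio (dB) between a ground-truth and a dehazed image."""
    error = np.mean((np.asarray(reference, dtype=float) - np.asarray(test, dtype=float)) ** 2)
    if error == 0:
        return float("inf")   # identical images
    return 10.0 * np.log10(image_max ** 2 / error)
```

For example, a uniform error of 0.1 on images in [0, 1] gives a mean squared error of 0.01 and therefore a psnr of 20 dB.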

The metrics \(\overline{\varDelta E}\) and \(Q_u\) are computed using the formulas presented in [43]. \(\overline{\varDelta E}\) measures the color difference (color restoration capability) between the ground truth of the haze-free image and the dehazed image; its range is [0 (worst), 1 (best)]. \(Q_u\) measures the combined effect of the structural similarity index (ssim) and color distance [43,44,45,46,47]. A high value of \(Q_u\) indicates better dehazing performance.

5.2 Non-reference-based performance metrics

The capability of a dehazing method can be measured by the edges it recovers, their positions, and their matching. Three types of metrics are used as non-reference-based parameters: (1) metric e measures the number of recovered edges, (2) metric \(\overline{r}\) shows the average visibility gain in the form of gradients, which measures how well the dehazing method preserves existing edges, and (3) a new metric \(C_{rm}\) is introduced to measure the effectiveness of a dehazing method on the basis of restored and matching corners. The higher e and \(\overline{r}\) are, the better the dehazing method performs. The formulas used to compute e and \(\overline{r}\) are given in Eqs. (12) and (13) [40, 41, 48].

\[ e = \frac{N_{res} - N_{ori}}{N_{ori}} \tag{12} \]

where \(N_{res}\) and \(N_{ori}\) are the numbers of visible edges in the dehazed image \(I_{o}\) and the hazy image \(I_{d}\), respectively.

\[ \overline{r} = \exp\left(\frac{1}{N_{res}} \sum_{P_i \in Q_r} \log r_i\right) \tag{13} \]

where \(r_i = \frac{\varDelta I_i^r}{\varDelta I_i^o}\), \(\varDelta I^r\) and \(\varDelta I^o\) are the gradients of image \(I_{o}\) and image \(I_{d}\), respectively, and \(Q_r\) is the set of visible edges of image \(I_{o}\).
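The two visible-edge metrics can be sketched as follows, assuming the standard forms e = (N_res − N_ori) / N_ori and r̄ = exp of the mean of log r_i over the visible edges of the dehazed image. Detecting the visible edges themselves (e.g. the contrast-threshold step of the original visible-edge method) is not shown: the edge counts and the gradient pairs at those edges are assumed to be given, and hazy-image gradients are assumed strictly positive. Function names are illustrative.

```python
import math
from typing import Sequence


def edge_rate(n_res: int, n_ori: int) -> float:
    """Metric e = (N_res - N_ori) / N_ori: relative gain in visible edges."""
    if n_ori <= 0:
        raise ValueError("N_ori must be positive")
    return (n_res - n_ori) / n_ori


def mean_gradient_ratio(grad_dehazed: Sequence[float], grad_hazy: Sequence[float]) -> float:
    """Metric r-bar: geometric mean of r_i = dI_i^r / dI_i^o over the visible edges Q_r.

    grad_dehazed[i] and grad_hazy[i] are the gradients at the i-th visible edge of the
    dehazed image; hazy gradients are assumed strictly positive.
    """
    if not grad_dehazed or len(grad_dehazed) != len(grad_hazy):
        raise ValueError("need one hazy gradient per visible edge of the dehazed image")
    log_sum = sum(math.log(gr / go) for gr, go in zip(grad_dehazed, grad_hazy))
    return math.exp(log_sum / len(grad_dehazed))


print(edge_rate(150, 100))                                    # 0.5
print(round(mean_gradient_ratio([0.2, 0.8], [0.1, 0.2]), 6))  # 2.828427
```

The geometric mean is used because r̄ averages log r_i; a single very large gradient ratio therefore has less influence than it would on an arithmetic mean.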

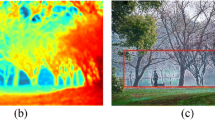

Fig. 7 Values of \((nc-rc)\) and mc obtained using NYU [34]. a Values of \((nc-rc)\) and mc computed from hazy images and images dehazed using the original transmission, and b values of \((nc-rc)\) and mc computed from hazy images and images dehazed using the noisy transmission. Red and blue curves represent the obtained values of mc and \((nc-rc)\), respectively

Furthermore, the structural similarity between the hazy image and the dehazed image is measured using ssim. A metric qm is derived from e, \(\overline{r}\), and ssim to measure their combined effect. The formula used to calculate qm is given as:
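For reference, ssim [44, 45] compares local means, variances and covariance of two images. The sketch below is a simplified single-window (global) variant over whole grayscale images with the usual constants C1 = (0.01 L)² and C2 = (0.03 L)². It is an illustration only: the reference implementation evaluates ssim in local 11 × 11 Gaussian windows (σ = 1.5) and averages the resulting map, so its values differ from this global version.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], dynamic_range: float = 1.0) -> float:
    """Single-window SSIM between two flattened grayscale images (simplification)."""
    n = len(x)
    if n == 0 or n != len(y):
        raise ValueError("images must be non-empty and of equal size")
    c1 = (0.01 * dynamic_range) ** 2  # stabilising constants of Wang et al.
    c2 = (0.03 * dynamic_range) ** 2
    mx, my = sum(x) / n, sum(y) / n
    var_x = sum((a - mx) * (a - mx) for a in x) / n
    var_y = sum((b - my) * (b - my) for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    # Luminance/contrast/structure terms combined as in the standard SSIM formula.
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx * mx + my * my + c1) * (var_x + var_y + c2))


hazy = [0.6, 0.65, 0.7, 0.75]
dehazed = [0.2, 0.4, 0.6, 0.8]
print(global_ssim(hazy, hazy))  # 1.0 for identical images
print(global_ssim(hazy, dehazed))
```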

Moreover, the quality correlate (Q) is computed using the High-Dynamic Range Visual Difference Predictor 2 (HDR-VDP-2), version 2.2.1 [47, 49, 50], which measures the degradation in visual quality of the dehazed image with respect to the reference image. Metric Q ranges from 0 (worst) to 100 (best).

5.3 Metric \(C_{rm}\)

The strength and effectiveness of a dehazing method depend upon the smoothness and accuracy of the estimated transmission. A non-smooth and less accurate transmission generates spurious gradients and edges, which inflate the values of metrics e and \(\overline{r}\). Corner points affect the quality of results in image processing. Thus, a new metric \(C_{rm}\) is proposed to measure dehazing performance on the basis of corner points. Metric \(C_{rm}\) is a non-reference-based blind assessment metric, which measures the effectiveness of dehazing from the numbers of restored and matching corners. It is calculated as follows:

\[ C_{rm} = \frac{mc}{nc - rc} \]

where nc is the number of corners present in the hazy image \(J_d(y)\), rc is the number of restored corners in the dehazed image \(J_o(y)\), and mc is the number of most likely matching corners. A high magnitude of \(C_{rm}\) indicates a stronger dehazing method. A large negative \(C_{rm}\) (i.e., \(rc > nc\)) indicates that the dehazing method has preserved the original corners and also restored degraded ones. Thus, a negative \(C_{rm}\) indicates improved effectiveness and efficacy of the dehazing method.
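The metric reduces to a single ratio of the three counts, C_rm = mc / (nc − rc), as described for the robustness test on NYU. The sketch below assumes the counts are already available; the behaviour when nc = rc (ratio undefined) is not stated in the text, so raising an error there is a hypothetical choice.

```python
def crm(nc: int, rc: int, mc: int) -> float:
    """Corner metric C_rm = mc / (nc - rc).

    nc: corners in the hazy image, rc: corners in the dehazed image,
    mc: restored corners that match corners of the hazy image.
    """
    if nc == rc:
        # Undefined ratio; raising is an assumption, not specified by the method.
        raise ValueError("C_rm is undefined when nc == rc")
    return mc / (nc - rc)


print(crm(nc=120, rc=180, mc=90))   # -1.5: more corners restored, most originals matched
print(crm(nc=120, rc=4120, mc=2))   # -0.0005: many spurious corners, almost none matched
```

The second call mimics the noisy-transmission case: a very negative (nc − rc) together with mc ≃ 0 drives C_rm towards zero.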

The proposed method computes nc and rc using the Harris corner detector [51]. Restored corners are matched with existing corners in the hazy image to count the most likely matching corners mc.
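The detector parameters and the matching rule are not specified here, so the following is only a minimal pure-Python sketch under stated assumptions: a Harris response R = det(M) − k·trace(M)² with central-difference gradients, a 3 × 3 box window instead of the usual Gaussian, k = 0.04, a relative threshold of 0.1 and 3 × 3 non-maximum suppression. The matching rule (a restored corner matches an unused hazy-image corner within a Chebyshev radius of one pixel) is hypothetical. Under these assumptions, nc = len(harris_corners(hazy)), rc = len(harris_corners(dehazed)) and mc = match_corners(restored, hazy).

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image, row-major
Point = Tuple[int, int]    # (row, col)


def _window(y: int, x: int, h: int, w: int) -> List[Point]:
    """3x3 neighbourhood of (y, x), clipped at the image border."""
    return [(v, u)
            for v in range(max(0, y - 1), min(h, y + 2))
            for u in range(max(0, x - 1), min(w, x + 2))]


def harris_corners(img: Image, k: float = 0.04, rel_thresh: float = 0.1) -> List[Point]:
    """Harris corners: R = det(M) - k * trace(M)^2, thresholded and non-max suppressed."""
    h, w = len(img), len(img[0])
    # Central-difference gradients (left at zero on the one-pixel border).
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    # Structure tensor M summed over a 3x3 box window, then the corner response.
    resp = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sxx = syy = sxy = 0.0
            for v, u in _window(y, x, h, w):
                sxx += ix[v][u] * ix[v][u]
                syy += iy[v][u] * iy[v][u]
                sxy += ix[v][u] * iy[v][u]
            resp[y][x] = sxx * syy - sxy * sxy - k * (sxx + syy) ** 2
    r_max = max(max(row) for row in resp)
    if r_max <= 0.0:
        return []  # no corner-like structure (e.g. a flat image)
    # Keep local maxima whose response exceeds rel_thresh * r_max.
    return [(y, x) for y in range(h) for x in range(w)
            if resp[y][x] > rel_thresh * r_max
            and all(resp[y][x] >= resp[v][u] for v, u in _window(y, x, h, w))]


def match_corners(restored: List[Point], hazy: List[Point], radius: int = 1) -> int:
    """Greedy one-to-one matching within a Chebyshev radius (hypothetical rule)."""
    unused = list(hazy)
    matched = 0
    for y, x in restored:
        for i, (v, u) in enumerate(unused):
            if max(abs(y - v), abs(x - u)) <= radius:
                del unused[i]  # each hazy-image corner is matched at most once
                matched += 1
                break
    return matched


square = [[1.0 if 4 <= y <= 7 and 4 <= x <= 7 else 0.0 for x in range(12)] for y in range(12)]
print(harris_corners(square))  # [(4, 4), (4, 7), (7, 4), (7, 7)]
```

In practice a library detector with Gaussian weighting would be used; the box window here only keeps the example dependency-free.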

5.4 Dataset

The proposed method is evaluated on available standard datasets [34, 37, 38]. Two types of standard datasets are used to test its performance: (1) the Waterloo IVC dehazed image database (WIVC) [37] and (2) the Frida and NYU datasets [34, 38].

The WIVC dataset is available online and consists of 25 hazy images of varied outdoor scenes and indoor static objects. Of the 25 images, 22 are real-world outdoor scenes faded by varying degrees of haze, and 3 are indoor static objects contaminated by homogeneous haze. For each of the 25 hazy images, the dataset also contains 8 dehazed images produced by restoration techniques proposed between 2009 and 2014. Thus, it contains 9 images (1 hazy, 8 dehazed) per scene, 225 images in total [37]. This dataset is used to compute non-reference-based metrics.

However, WIVC does not provide ground truth haze-free images. Thus, the synthetic image dataset Frida [38] is used. Frida contains synthetic hazy images and their respective ground truths. The synthetic hazy images fall into four categories of 18 images each: (1) uniform fog, (2) heterogeneous fog, (3) cloudy fog, and (4) cloudy heterogeneous fog. Because Frida does not contain real-world images, NYU is also used to evaluate the proposed method. NYU contains 1449 real-world indoor images with ground-truth depth maps, from which hazy images and their respective haze-free references are obtained.

Furthermore, a few images captured by us during the spring season using an Asus Zenfone running Android 4.4.2 are also used to validate the proposed method.

Images from WIVC are used for the qualitative comparison, and Frida and NYU are used for the quantitative analysis. Results are compared with renowned existing methods [2, 5, 20, 23, 29, 30]. A visual comparison of results is shown in Figs. 8, 9, 10, 11, 12, 13, and 14.

5.5 Evaluation of the proposed metric

The proposed metric \(C_{rm}\) is based on the ratio of mc to \((nc-rc)\). Thus, the statistics of \((nc-rc)\) and mc on the first 50 images of NYU [34] are used to test the robustness of \(C_{rm}\).

Original transmissions are required to evaluate the robustness of \(C_{rm}\). Therefore, the ground-truth depth images are used to obtain the original transmissions using Eq. (3) with \(\beta =1\). A small amount of Gaussian noise (zero mean, standard deviation \(\sigma _g=0.05\)) is added to these original transmissions to reduce their accuracy and smoothness. The original and noisy transmissions are then used to obtain 50 dehazed images each using ASM.
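The simulation protocol above can be sketched on a single channel, assuming Eq. (3) is the usual exponential attenuation t = exp(−βd) and the ASM is I = J·t + A·(1 − t), applied per color channel. The values β = 1 and σ_g = 0.05 come from the text; clipping noisy transmissions to [0, 1], the floor t_min = 0.1 in the inversion, the fixed random seed and the toy data are assumptions made for illustration only.

```python
import math
import random
from typing import List


def transmission_from_depth(depth: List[float], beta: float = 1.0) -> List[float]:
    """Assumed form of Eq. (3): t = exp(-beta * d)."""
    return [math.exp(-beta * d) for d in depth]


def add_gaussian_noise(t: List[float], sigma: float = 0.05, seed: int = 0) -> List[float]:
    """Zero-mean Gaussian noise on t; clipping to [0, 1] is a hypothetical safeguard."""
    rng = random.Random(seed)
    return [min(1.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in t]


def synthesize_haze(clear: List[float], t: List[float], a: float) -> List[float]:
    """ASM: I = J * t + A * (1 - t)."""
    return [j * tv + a * (1.0 - tv) for j, tv in zip(clear, t)]


def dehaze(hazy: List[float], t: List[float], a: float, t_min: float = 0.1) -> List[float]:
    """Inverted ASM: J = (I - A) / max(t, t_min) + A (t_min is an assumed floor)."""
    return [(i - a) / max(tv, t_min) + a for i, tv in zip(hazy, t)]


depth = [0.5, 1.0, 2.0]
clear = [0.1, 0.5, 0.9]
A = 0.8
t = transmission_from_depth(depth, beta=1.0)
hazy = synthesize_haze(clear, t, A)
print([round(v, 3) for v in dehaze(hazy, t, A)])                      # [0.1, 0.5, 0.9]
print([round(v, 3) for v in dehaze(hazy, add_gaussian_noise(t), A)])  # perturbed result
```

With the exact transmission the inversion recovers the clear values; with the noisy transmission the error is amplified most where t is small, i.e. at large depths.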

The graphs in Fig. 7a, b present the values of \((nc-rc)\) and mc for the 50 images. Values in Fig. 7a are obtained from images dehazed using the original transmissions, and values in Fig. 7b from images dehazed using the noisy transmissions. Figure 7a shows that \(mc>0\) and \((nc-rc)<0\) for all images. Thus, if an accurate and smooth transmission is used to restore haze-free images, then \(C_{rm}<0\), which confirms the accuracy of the proposed metric. In the case of noisy transmission, however, \((nc-rc)\) decreases sharply, as shown in Fig. 7b: its value is about 100 times more negative than the respective value of \((nc-rc)\) in Fig. 7a, and \(mc\simeq 0\). Thus, \(C_{rm}\) for images dehazed using noisy transmission is zero or very close to zero. It is also observed that a higher noise level in the transmission increases the rate of reduction, which results in \(C_{rm}=0\).

Therefore, an accurate transmission results in a high magnitude (negative or positive) of \(C_{rm}\), whereas an inaccurate transmission results in \(C_{rm}\simeq 0\), which demonstrates the robustness of the proposed metric.

5.6 Qualitative comparison

Figures 8, 9, 10, 11, 12, 13, and 14 compare the visual results obtained by the methods in [2, 5, 20, 23, 29, 30] and the proposed method. A variety of images is used to assess the quality of the proposed method. Figures 8 and 9 are outdoor images with sky regions, Figs. 10 and 11 are images with small sky regions, Figs. 12 and 13 are images without sky regions, and Fig. 14 is an image with a large sky region.

The method in [5] produces blocky and halo artifacts near depth discontinuities due to patch-based filtering, as shown in Figs. 8b, 9b, 10b, 11b, 12b, 13b, and 14b. It can also be observed that the method in [5] produces color distortion in sky regions because DCP is invalid in the sky, as shown in Figs. 8b, 9b, and 14b. The method in [23] produces better results than the method in [5] for sky images. However, it performs poorly on the image in Fig. 13c due to the presence of a white goose in the scene at a short distance.

Fig. 15 Handling of haze-free images. a Haze-free image, b dehazed image obtained using the method in [20], c transmission obtained using the method in [20], d minimum color channel of the haze-free image estimated by the proposed method, e transmission obtained by the proposed method, f dehazed image obtained by the proposed method

The method in [20] produces good results. However, it overbrightens pixels at long distances in images with large sky regions, as shown in Figs. 8d, 9d, and 14d. In [29], haze-relevant features are learned to gauge haze density. Results produced by the method in [29] are shown in Figs. 8e, 9e, 10e, 11e, 12e, 13e, and 14e; their visual quality is poor at long distances due to the increased effect of haze. Results obtained by [30] are pleasant. However, visibility at long distances in Figs. 9f, 10f, 13f, and 14f is not as good as that of [5, 20] due to wrong depth estimation. In [2], visibility at long distances is better than in [30] due to improved depth estimation. However, the method in [2] overdarkens the image in Fig. 11g due to a wrongly estimated transmission.

The result obtained by the proposed method for the image in Fig. 8a is shown in Fig. 8h. Its visual quality is better than that of the methods in [2, 5, 23, 29, 30]. A fence in Fig. 8a is highlighted by a red rectangle. The fence is visible in Figs. 8b, c, e, f, g, and h. However, Fig. 8d shows that the method in [20] produces a smooth, overbrightened sky region, which causes a loss of information at long distances in the form of an invisible fence. This demonstrates the accuracy of the proposed method. Figures 8h, 9h, and 14h show that the proposed method clearly removes haze at long-distance points in large sky regions without overbrightening the image.

The image in Fig. 11a is a challenging hazy image [2]. Its haze distribution is complex, as highlighted by a blue rectangle. Figure 11b shows a little residual haze in the highlighted area due to halo artifacts. The method in [23] removes the residual haze, as shown in Fig. 11c, but loses leaves, as highlighted by a yellow circle in Fig. 11c. The method in [20] obtains better results, as shown in Fig. 11d. The method in [29] is unable to remove haze in the highlighted area, as shown in Fig. 11e. The method in [30] generates black spots inside the rectangle, as shown in Fig. 11f, due to inaccurate depth. The method in [2] improves on the results of the method in [30], as shown in Fig. 11g; however, it darkens the result, as highlighted by a red rectangle in Fig. 11g. The proposed method obtains better results than the methods in [2, 5, 20, 23, 29, 30] for Fig. 11a. The visual quality of the images in Figs. 10h, 11h, and 12h is much better than that of the other existing methods, which shows that the proposed method obtains natural results for images with no sky region, small sky regions, large sky regions, and white objects.

Dehazing methods are sensitive to exceptional cases such as the dehazing of haze-free images and of images with man-made lights. A comparison of the proposed method with [20] for haze-free images and images with man-made light is shown in Figs. 15 and 16.

Figure 15a is a haze-free image. The dehazed images in Fig. 15b, f are dark due to over-enhancement. The dehazed image in Fig. 15f is a little darker than Fig. 15b due to the proposed tight lower bound on transmission. However, Fig. 15d shows that the minimum channel obtained by the proposed method is almost dark over the entire image except for bright objects. Thus, the proposed method will produce an accurate transmission if the atmospheric light is estimated correctly.

Figure 16 compares the visual results obtained by the methods in [5, 20] and the proposed method on a hazy image containing man-made light in the form of a train's headlights. Such hazy images are a typical case of non-uniform atmospheric light [48, 52]. The dehazed image obtained by the method in [5] is shown in Fig. 16b. The method in [5] estimates atmospheric light from the brightest pixels of the hazy image, so the train's headlights are overbrightened, as shown in Fig. 16b. The method in [20] modifies the atmospheric light estimation of [5], which controls the overbrightening of the headlights, as shown in Fig. 16c. The proposed method relies on the method in [20] for atmospheric light estimation; thus, the visual quality of the headlights in Fig. 16d is the same as in Fig. 16c. The dehazed image in Fig. 16c is dark, which indicates that the method in [20] over-enhances the image. In contrast, the proposed method obtains natural color with significant enhancement, as shown in Fig. 16d. Residual haze remains at long distances in the dehazed images in Fig. 16b–d due to inaccurate atmospheric light estimation. Therefore, it can be concluded that atmospheric light estimation influences transmission estimation, which controls the visual quality of the dehazed image.
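To illustrate why the brightest-pixel strategy of [5] is sensitive to headlights, the sketch below follows the procedure described by He et al. [5]: take the top 0.1% brightest pixels of the dark channel and, among them, pick the pixel with the highest intensity in the hazy image. Assumptions: a 3 × 3 patch (radius 1) for the dark channel instead of the 15 × 15 patch used in [5], intensity approximated by R + G + B, and a toy image. This is not the estimator of [20] on which the proposed method relies.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]


def dark_channel(img: List[List[RGB]], radius: int = 1) -> List[List[float]]:
    """Minimum over R, G, B, then over a (2*radius+1)^2 patch clipped at the border."""
    h, w = len(img), len(img[0])
    min_rgb = [[min(px) for px in row] for row in img]
    dark = []
    for y in range(h):
        row = []
        for x in range(w):
            row.append(min(min_rgb[v][u]
                           for v in range(max(0, y - radius), min(h, y + radius + 1))
                           for u in range(max(0, x - radius), min(w, x + radius + 1))))
        dark.append(row)
    return dark


def atmospheric_light_dcp(img: List[List[RGB]], radius: int = 1, top_fraction: float = 0.001) -> RGB:
    """DCP-style estimate: brightest dark-channel pixels, then highest input intensity."""
    h, w = len(img), len(img[0])
    dark = dark_channel(img, radius)
    n_top = max(1, math.ceil(top_fraction * h * w))
    candidates = sorted(((dark[y][x], y, x) for y in range(h) for x in range(w)),
                        reverse=True)[:n_top]
    _, y, x = max(candidates, key=lambda c: sum(img[c[1]][c[2]]))
    return img[y][x]


haze = (0.7, 0.7, 0.7)
# A 3x3 saturated "headlight" in the top-left corner of a uniformly hazy 6x6 image.
scene = [[(1.0, 1.0, 1.0) if y < 3 and x < 3 else haze for x in range(6)] for y in range(6)]
print(atmospheric_light_dcp(scene))  # (1.0, 1.0, 1.0): the headlight, not the haze
```

The headlight block survives the dark-channel minimum filter because it is larger than the patch, so it is selected as the atmospheric light instead of the actual haze color (0.7, 0.7, 0.7).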

However, the proposed method estimates the minimum color channel of the haze-free image, which is independent of atmospheric light. Thus, Figs. 15 and 16 show that the proposed method handles exceptional cases robustly if the atmospheric light is estimated accurately.

5.7 Quantitative comparison

Non-reference-based metrics e, \(\overline{r}\), ssim, qm, rc, and mc are computed for the images shown in Figs. 8, 9, 10, 11, 12, 13 and 14. Tables 2, 3, 4, and 5 present the values of e, \(\overline{r}\), ssim, qm and \(C_{rm}\) for each image.

Fig. 18 Comparison based on metrics Q, ssim, \(\overline{\varDelta E}\) and \(Q_u\) using NYU. a Metric Q, b metric ssim, c metric \(\overline{\varDelta E}\), and d metric \(Q_u\). Blue, red, green, and purple curves represent the values of the metrics (Q, ssim, \(\overline{\varDelta E}\) and \(Q_u\)) obtained using the methods in [2, 20, 30] and the proposed method, respectively

Table 2 shows that the proposed method recovers edges better than the methods in [2, 5, 29, 30] for Fig. 8. The methods in [20, 23] recover slightly more edges than the proposed method due to wrong transmission. However, these methods lose gradients, as presented in Table 3. The proposed method surpasses the methods in [2, 5, 20, 23, 29, 30] on the value of \(\overline{r}\) for Fig. 8.

The proposed method obtains better values of e and \(\overline{r}\) for Fig. 9 than the methods in [2, 5, 20, 23, 29, 30]. For Fig. 10, it performs well on e and \(\overline{r}\) in comparison with the other methods, except the methods in [20, 30], whose higher values are due to their overestimation of transmission.

For the image in Fig. 11, the proposed method obtains better values of e and \(\overline{r}\) than all other existing methods. For the images in Figs. 12 and 13, the proposed method outperforms the methods in [2, 5, 23, 29, 30] on e and \(\overline{r}\); the method in [20] recovers slightly more edges and has slightly better visibility than the proposed method. However, for the image in Fig. 14, the proposed method recovers more edges and has better visibility than the methods in [2, 5, 20, 23, 29, 30].

Furthermore, values of ssim and qm are presented in Table 4 to measure the combined effect of e, \(\overline{r}\) and ssim. Table 4 shows that the proposed method generally outperforms the other methods on qm. The method in [20] obtains a high value of qm for the image in Fig. 8 due to the recovery of wrong edges and gradients. However, it obtains a lower value of \(C_{rm}\), as presented in Table 5. The proposed method restores more corners than the method in [20], as shown by the \(C_{rm}\) value for Fig. 8 in Table 5.

The value of qm obtained by the proposed method for the image in Fig. 10 is higher than the values obtained by the methods in [2, 5, 20, 23, 29, 30]. The values of qm obtained by the methods in [20, 23, 29] are higher than that of the proposed method due to wrongly obtained values of e and \(\overline{r}\), as shown by the values of \(C_{rm}\) obtained by these methods in Table 5. For the image in Fig. 11, the value of qm obtained by the proposed method is greater than or equal to the values obtained by the methods in [2, 5, 20, 23, 29, 30]. The proposed method has better visibility and recovers more edges for the images in Figs. 12 and 13, as shown by the obtained qm and \(C_{rm}\). The method in [20] obtains a high value of qm for the image in Fig. 14 due to overestimation of transmission at long-distance points. However, it loses matching corners, as shown by its value of \(C_{rm}\).

Furthermore, Table 5 shows that the proposed method performs well in comparison with the methods in [2, 5, 20, 23, 29, 30] on metric \(C_{rm}\). The obtained values of e, \(\overline{r}\), ssim, qm, and \(C_{rm}\) show that the proposed method recovers original edges with better visibility.

The graph in Fig. 17 compares the psnr of the transmissions obtained by the method in [31] and by the proposed method on images taken from Frida [38], to differentiate the proposed method from the method in [31]. Figure 17 shows that the transmission obtained by the proposed method is much closer to the ground truth, as indicated by its psnr values. The proposed method surpasses the method in [31] in psnr for all images of Frida.

Furthermore, results obtained by the proposed method are compared with the methods in [2, 20, 30] on metrics Q, ssim, \(\overline{\varDelta E}\) and \(Q_u\) using NYU [34]. Figure 18 plots the values of Q, ssim, \(\overline{\varDelta E}\) and \(Q_u\) for the first 50 images of NYU. Table 6 presents the average values of these metrics obtained by the methods in [2, 20, 30] and the proposed method.

The proposed method obtains better visual quality of dehazed images than the methods in [2, 20, 30], as shown in Fig. 18a. Its structural similarity is much better than that of the methods in [2, 20, 30], as shown in Fig. 18b. It restores original colors better than the methods in [2, 20, 30], as shown in Fig. 18c. Figure 18d shows that the proposed method surpasses all other methods on metric \(Q_u\).

Table 6 shows that the proposed method obtains higher average values of metrics Q, ssim, \(\overline{\varDelta E}\) and \(Q_u\) than the methods in [2, 20, 30]. The average value of metric Q shows that the proposed method achieves 61.31% in visual quality restoration. The average structural similarity obtained by the proposed method is 88%, which indicates that it restores better contrast and preserves the structure of the dehazed image. The average color distance obtained by the proposed method is 87%, which indicates that it restores original colors.

6 Conclusion

A tight lower bound on the transmission is proposed. The tight lower bound is further regularized using contextual regularization to obtain an accurate transmission. The accuracy of the lower bound depends upon the correct estimation of the minimum color channel of the haze-free image. Thus, a prior based on the method in [31] is proposed to estimate the minimum color channel of the haze-free image. Further, a new blind assessment metric \(C_{rm}\) is proposed to evaluate the performance of dehazing methods. The effectiveness of the proposed method is demonstrated by the visual quality of its results and by quantitative metrics (e, \(\overline{r}\), ssim, psnr, qm, \(C_{rm}\), Q, \(\overline{\varDelta E}\) and \(Q_u\)). The proposed method obtains better results than existing methods, and the psnr of its transmission confirms the accuracy of the transmission. However, the proposed method is based on a regularization technique, which is computationally intensive. Thus, a fast technique to estimate precise transmission is needed. Moreover, atmospheric light estimation influences transmission estimation. Thus, a robust method to estimate atmospheric light is required to improve the performance of the proposed method. In the future, we would like to focus on these issues.

References

Lu, H., Li, Y., Nakashima, S., Serikawa, S.: Single image dehazing through improved atmospheric light estimation. Multimed. Tools Appl. 75(24), 17081–17096 (2016)

Raikwar, S.C., Tapaswi, S.: An improved linear depth model for single image fog removal. Multimed. Tools Appl. 77(15), 19719–19744 (2018). https://doi.org/10.1007/s11042-017-5398-y

Lu, H., Li, Y., Chen, M., Kim, H., Serikawa, S.: Brain intelligence: go beyond artificial intelligence. Mob. Netw. Appl. 23(2), 368–375 (2018)

Narasimhan, S.G.: Models and Algorithms for Vision Through the Atmosphere. PhD thesis, New York, NY, USA (2004). AAI3115363

He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2011)

Zhang, Y.-Q., Ding, Y., Xiao, J.-S., Liu, J., Guo, Z.: Visibility enhancement using an image filtering approach. EURASIP J. Adv. Signal Process. 2012(1), 220–225 (2012)

Ling, Z., Fan, G., Gong, J., Wang, Y., Xiao, L.: Perception oriented transmission estimation for high quality image dehazing. Neurocomputing 224, 82–95 (2017)

Kim, T.K., Paik, J.K., Kang, B.S.: Contrast enhancement system using spatially adaptive histogram equalization with temporal filtering. IEEE Trans. Consum. Electron. 44(1), 82–87 (1998)

Stark, J.A.: Adaptive image contrast enhancement using generalizations of histogram equalization. IEEE Trans. Image Process. 9(5), 889–896 (2000)

Kim, J.-Y., Kim, L.-S., Hwang, S.-H.: An advanced contrast enhancement using partially overlapped sub-block histogram equalization. IEEE Trans. Circuits Syst. Video Technol. 11(4), 475–484 (2001)

Li, Y., Lu, H., Li, J., Li, X., Li, Y., Serikawa, S.: Underwater image de-scattering and classification by deep neural network. Comput. Electr. Eng. 54(C), 68–77 (2016)

Tan, K., Oakley, J.P.: Enhancement of color images in poor visibility conditions. In: Proceedings of IEEE Conference on Image Processing, vol. 2, pp. 788–791 (September 2000)

Nayar, S.K., Narasimhan, S.G.: Interactive deweathering of an image using physical models. In: Proceedings of IEEE Workshop on Color and Photometric Methods in Computer Vision in conjunction with IEEE Conference on Computer Vision (October 2003)

Nayar, S.K., Narasimhan, S.G.: Vision in bad weather. In: Proceedings of IEEE Conference on Computer Vision, vol. 2, 820–827 (September 1999)

Schechner, Y.Y., Narasimhan, S.G., Nayar, S.K.: Instant dehazing of images using polarization. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, vol. 1, pp. 325–332 (February 2001)

Narasimhan, S.G., Nayar, S.K.: Contrast restoration of weather degraded images. IEEE Trans. Pattern Anal. Mach. Intell. 25(6), 713–724 (2003)

Narasimhan, S.G., Nayar, S.K.: Chromatic framework for vision in bad weather. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, vol. 1, pp. 598–605 (June 2000)

Shwartz, S., Namer, E., Schechner, Y.Y.: Blind haze separation. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, vol. 2, pp. 1984–1991 (February 2006)

He, K., Sun, J., Tang, X.: Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 35(6), 1397–1409 (2012)

Meng, G., Wang, Y., Duan, J., Xiang, S., Pan, C.: Efficient image dehazing with boundary constraint and contextual regularization. In: Proceedings of IEEE International Conference on Computer Vision, pp. 617–624 (2013)

Tan, R.: Visibility in bad weather from a single image. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 24–26 (June 2008)

Tarel, J.P., Hautière, N.: Fast visibility restoration from a single color or gray level image. In: Proceedings of IEEE International Conference on Computer Vision, pp. 2201–2208 (September 2009)

Xiao, C., Gan, J.: Fast image dehazing using guided joint bilateral filter. Vis. Comput. Int. J. Comput. Graph. 28(6–8), 713–721 (2012)

Jha, D.K., Gupta, B., Lamba, S.S.: l2-norm-based prior for haze-removal from single image. IET Comput. Vis. 10(5), 331–341 (2016)

Kim, J.-H., Jang, W.-D., Sim, J.-Y., Kim, C.-S.: Optimized contrast enhancement for real-time image and video dehazing. J. Vis. Commun. Image Represent. 24(3), 410–425 (2013)

Liu, S., Rahman, M.A., Liu, S.C., Wong, C.Y., Lin, C.-F., Wu, H., Kwok, N.: Image de-hazing from the perspective of noise filtering. Comput. Electr. Eng. 62(August 2017), 345–359 (2016)

Berman, D., Treibitz, T., Avidan, S.: Non-local image dehazing. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

Wang, W., Yuan, X., Wu, X., Liu, Y.: Dehazing for images with large sky region. Neurocomputing 238(Supplement C), 365–376 (2017)

Tang, K., Yang, J., Wang, J.: Investigating haze-relevant features in a learning framework for image dehazing. In: Proceedings of IEEE International Conference on Computer Vision and Pattern Recognition, pp. 2995–3002 (2014)

Zhu, Q., Mai, J., Shao, L.: A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 24(11), 3522–3533 (2015)

Wang, W., Yuan, X., Wu, X., Liu, Y.: Fast image dehazing method based on linear transformation. IEEE Trans. Multimed. 19(6), 1142–1155 (2017)

Li, Y., Miao, Q., Song, J., Quan, Y., Li, W.: Single image haze removal based on haze physical characteristics and adaptive sky region detection. Neurocomputing 182, 221–234 (2016)

Yuan, H., Liu, C., Guo, Z., Sun, Z.: A region-wised medium transmission based image dehazing method. IEEE Access 5, 1735–1742 (2017)

Silberman, N., Kohli, P., Hoiem, D., Fergus, R.: Indoor segmentation and support inference from rgbd images. In: ECCV (2012)

Hautière, N., Tarel, J.-P., Lavenant, J., Aubert, D.: Automatic fog detection and estimation of visibility distance through use of an onboard camera. Mach. Vis. Appl. 17(1), 8–20 (2006)

Choi, K.Y., Jeong, K.M., Song, B.C.: Fog detection for de-fogging of road driving images. In: 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), pp. 1–6 (Oct 2017)

Ma, K., Liu, W., Wang, Z.: Perceptual evaluation of single image dehazing algorithms. In: Proceedings of IEEE International Conference on Image Processing (September 2015)

Tarel, J.-P., Hautière, N., Cord, A., Gruyer, D., Halmaoui, H.: Improved visibility of road scene images under heterogeneous fog. In: Proceedings of IEEE Intelligent Vehicle Symposium (IV’2010), San Diego, California, USA, pp. 478–485 (2010). http://perso.lcpc.frtarel.jean-philippe/publis/iv10.html

Bui, T.M., Kim, W.: Single image dehazing using color ellipsoid prior. IEEE Trans. Image Process. 27(2), 999–1009 (2018)

Khmag, A., Al-Haddad, S.A.R., Ramli, A.R., Kalantar, B.: Single image dehazing using second-generation wavelet transforms and the mean vector l2-norm. Vis. Comput. 34(5), 675–688 (2018)

Xu, Y., Wen, J., Fei, L., Zhang, Z.: Review of video and image defogging algorithms and related studies on image restoration and enhancement. IEEE Access 4, 165–188 (2015)

Wang, R., Li, R., Sun, H.: Haze removal based on multiple scattering model with superpixel algorithm. Signal Process. 127(C), 24–36 (2016)

Lu, H., Li, Y., Xu, X., He, L., Li, Y., Dansereau, D., Serikawa, S.: Underwater image descattering and quality assessment. In: 2016 IEEE International Conference on Image Processing (ICIP), pp. 1998–2002 (Sept 2016)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Wang, Z.: The SSIM index for image quality assessment (2003). https://ece.uwaterloo.ca/~z70wang/research/ssim/. Accessed 9 Nov 2014

Lu, H., Li, Y., Zhang, L., Serikawa, S.: Contrast enhancement for images in turbid water. J. Opt. Soc. Am. A 32(5), 886–893 (2015)

Serikawa, S., Lu, H.: Underwater image dehazing using joint trilateral filter. Comput. Electr. Eng. 40(1), 41–50 (2014). 40th-year commemorative issue

Ling, Z., Li, S., Wang, Y., Shen, H., Xiao, L.: Adaptive transmission compensation via human visual system for efficient single image dehazing. Vis. Comput. 32(5), 653–662 (2016)

Mantiuk, R., Kim, K.J., Rempel, A.G., Heidrich, W.: HDR-VDP-2: a calibrated visual metric for visibility and quality predictions in all luminance conditions. ACM Trans. Graph. 30(4), 40:1–40:14 (2011)

Mantiuk, R., Kim, K.J., Rempel, A.G., Heidrich, W.: HDR-VDP-2: a calibrated visual metric for visibility and quality predictions in all luminance conditions. In: ACM SIGGRAPH 2011 Papers, SIGGRAPH’11, New York, NY, USA. ACM, pp. 40:1–40:14 (2011)

Dawn, D.D., Shaikh, S.H.: A comprehensive survey of human action recognition with spatio-temporal interest point (STIP) detector. Vis. Comput. 32(3), 289–306 (2016)

Li, Y., Lu, H., Li, K.-C., Kim, Y., Serikawa, S.: Non-uniform de-scattering and de-blurring of underwater images. Mobile Netw. Appl. 23(2), 352–362 (2018)


Ethics declarations

Conflict of interest

Authors Suresh Chandra Raikwar and Shashikala Tapaswi declare that they do not have any conflict of interest.


Cite this article

Raikwar, S.C., Tapaswi, S. Tight lower bound on transmission for single image dehazing. Vis Comput 36, 191–209 (2020). https://doi.org/10.1007/s00371-018-1596-5


DOI: https://doi.org/10.1007/s00371-018-1596-5