Abstract

Single image dehazing (SID) is ill-posed: multiple dehazed images can correspond to a single hazy image, which makes it a challenging problem. SID usually uses the atmospheric scattering model (ASM) to obtain a haze-free image from a hazy image. According to the ASM, recovery of lost visibility depends on accurate transmission. The proposed method presents a linear multiplicative bounding function (MBF) for estimating the difference channel (DC), from which the value of the transmission is computed. The results obtained with the MBF have been compared with renowned SID methods. The accuracy of the proposed MBF is supported by visual and objective evaluation of the dehazed images.

1 Introduction

Outdoor vision-based systems (e.g., recognition, surveillance, and intelligent transportation) perform well under clear visibility, but their performance degrades in poor weather [30].

Atmospheric light is scattered by molecules suspended in the air [36]. Because of this scattering, the camera captures a noisy image [4, 31, 41, 45]. The captured light consists of two fractions: (i) the non-scattered light, and (ii) the scattered light. Thus, the visual quality of images (contrast, visibility, brightness, etc.) fades in bad weather [13], and image dehazing is required.

The main purpose of single image dehazing (SID) is to obtain a haze-free image from a hazy image [4, 32]. SID uses the atmospheric scattering model (ASM) to perform dehazing. The ASM describes the formation of an image in poor weather with two prime factors: (i) transmission, and (ii) atmospheric light.

SID is an inverse problem, and many assumptions and priors have been used to solve it. Existing SID methods work under these assumptions, which introduce inaccuracy in the estimated transmission. Smoothing operations have been used to improve the accuracy of the transmission, which makes SID computationally intensive.

The minimum channel (MC) of the haze-free image is effective for computing accurate transmission. However, the value of the MC increases with haze density, resulting in inaccurate transmission. This inaccuracy reduces visibility in the scene and distorts the colors of the image. The proposed work presents a multiplicative bounding function (MBF) for accurate estimation of the transmission and for fast dehazing. The MBF is used to compute the difference between the MC of the hazy and haze-free images (the difference channel) so as to minimize the error in the estimation process.

The rest of the paper is organized as follows. Section 2 reviews the literature. The details of the ASM and the gaps in the research are presented in Section 3. The proposed MBF is detailed in Section 4. The proposed method is compared and analyzed against the methods in [2, 4, 24, 27, 38, 47] in Section 5. The conclusion and future scope are given in Section 6.

2 Related work

Single image dehazing (SID) estimates the parameters of the ASM. Therefore, the SID literature centers on accurate estimation of these parameters. SID methods can be grouped into six categories: (i) priors based methods, (ii) sky-region segmentation based methods, (iii) statistics of hazy images based methods, (iv) mathematical optimization based methods, (v) convolutional neural network (CNN) based methods, and (vi) generative adversarial network (GAN) based methods.

2.1 Priors based methods

The priors based methods use prior knowledge about haze to restore the visibility of the scene [1, 4, 5, 6, 7, 10, 14, 19, 32, 33, 34, 37, 38, 39, 44, 47].

The visibility is increased by maximizing local contrast in [32]. This method distorts color because it alters the contrast, which limits its practical use. The method in [34] approximates the haze density using the atmospheric veil, but it cannot preserve edges and is unable to handle dense haze. The method in [39] employs a bilateral filter to refine the atmospheric veil estimated by the method in [34], which helps in recovering and preserving edges. However, the method in [39] does not work properly for objects brighter than the atmospheric light.

A strong prior, called the dark channel prior (DCP), is presented in [4]. The DCP states that applying a non-linear minimum filter to the MC of a haze-free image without bright regions produces a dark image (i.e., the dark channel). The dark channel is used to estimate the haze-free image from the hazy image. However, the method in [4] generates artifacts such as halos, blocking, and color distortion because of the size of the neighborhood used in the non-linear filtering.
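As a rough illustration of the dark channel described above, the following sketch takes the per-pixel minimum over the color channels (the MC) and then applies a local minimum filter. The function names, the nested-list image layout, and the default patch radius are assumptions made for this example and are not taken from [4]. Plain Python lists keep the sketch dependency-free; an array library would be the usual choice in practice.

```python
def min_channel(img):
    """Per-pixel minimum over the color channels of an H x W x 3 nested list."""
    return [[min(px) for px in row] for row in img]


def dark_channel(img, radius=1):
    """Minimum filter of the minimum channel over a (2*radius+1)^2 window.

    Windows are clipped at the image border.
    """
    mc = min_channel(img)
    h, w = len(mc), len(mc[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            row.append(min(mc[j][i] for j in ys for i in xs))
        out.append(row)
    return out
```

With `radius=0` the dark channel reduces to the MC itself. Larger windows spread a single dark pixel over its neighborhood, which is one way to see why the neighborhood size influences halos and blocking.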

The artifacts have been reduced by using soft matting, but this increases the computational cost and therefore reduces the processing speed. Further, the dark channel is valid only for haze-free images without bright regions, so it fails for images with bright regions or sky regions. The processing speed of SID is improved by the guided filter presented in [5]. The guided filter reduces artifacts and preserves structure at a fast processing speed, but it increases color distortion in images with bright regions.

Further, the blocking artifacts are reduced by the modified DCP presented in [6]. The modified DCP handles images with bright regions, but the quality of the dehazed image is controlled by the size of the local neighborhood in [6].

In summary, the priors based methods work under strong assumptions. They produce quality results for images without bright regions.

2.2 Sky-region segmentation based methods

The sky-region segmentation based methods have been introduced to handle bright regions in the image [10, 37, 44].

Bright regions and sky regions contain few edges, hence edge detection is used to segment the sky region in [10], but it is inefficient. In the method in [44], the transmission of the sky region is computed from color characteristics, whereas the dark channel prior is used to compute the transmission of the non-sky region. The transmission is refined using a guided filter to preserve edges and structure. The method in [37] identifies a part of the sky region using quad-tree decomposition and then applies a region growing algorithm to segment the sky region. This method reduces blocking artifacts and obtains natural colors for images with a large sky region, but it fails for real-world images in which the sky region is small or absent.

Overall, the sky-region segmentation based methods depend on the accuracy of segmentation and are beneficial only for images with a sky region.

2.3 Statistics of hazy images based methods

The statistics of hazy images have been used by the methods in [24, 38, 43, 47] for SID.

The distance (depth) of objects from the camera determines how severely visibility is degraded. Hence, the method in [47] estimates the depth of a scene using statistics of hazy images. The method in [47] observes that the brightness of the hazy image is directly proportional to depth, whereas saturation is negatively correlated with depth. This relationship is used to estimate the depth of the scene, which is then refined using local minimum filtering, resulting in wrongly estimated depth at discontinuities. The method in [24] corrects the depth at discontinuities using a median filter, but this method works well only with a homogeneous haze distribution.

Further, the MC of the haze-free image represents the amount of noise present in the scene, which forms the basis of the methods in [23, 25, 38]. The method in [38] linearly transforms the MC of the hazy image into the MC of the haze-free image to obtain the dehazed image. This method is computationally efficient enough for real-time dehazing, but it over-exposes distant objects in the scene because of wrongly estimated transmission. The method in [25] linearly transforms each local region of the hazy image into the respective local region of the haze-free image. This improves the accuracy of the transmission at long distance, but it is computationally slower than the method in [38]. The dehazing control factor is presented in [23] to improve the accuracy and speed of dehazing, but this method fails under dense haze. The method in [43] minimizes the effect of environmental illumination by using the correlation between haze-free and hazy images, and it preserves the structure of the dehazed image. However, the quality of dehazing is controlled by the retrieved correlated haze-free image.

In summary, the statistics of hazy images based methods are computationally fast. However, the quality of dehazing depends on amount of haze present in the scene.

2.4 Mathematical optimization based methods

The optimization based methods improved accuracy of dehazing by increasing the smoothness of the transmission [1, 19].

The boundary constraint presented in [19] helps in estimating the initial transmission, which is smoothed using contextual regularization. However, this method may over-estimate the transmission under heterogeneous haze, which results in color distortion. A non-local prior based on clustering is presented in [1]. The pixels are clustered based on airlight to form haze-lines, from which the initial transmission is computed and then refined using regularization. However, this method estimates wrong transmission under bright atmospheric light.

The optimization based methods improve the accuracy of the transmission and produce quality visual results, at an increased computational cost.

2.5 CNN based methods

The CNN has evolved as a tool to improve quality and accuracy in dehazing. Recently, CNN based methods have been presented in [2, 8, 9, 11, 27, 28, 42].

The method in [27] employs two layers to estimate the transmission. The first layer computes an initial transmission using a coarse-scale net, and the second layer refines it with a fine-scale net. A novel DehazeNet is presented in [2] to compute the transmission. The transmission and atmospheric light are estimated in a unified manner in [11] to minimize the reconstruction error. These methods work well for small images, but their computational efficiency decreases as the image size increases. The method in [28] computes pixel-wise transmission using a patch quality comparator. This method estimates accurate transmission, but it does not consider the amount of haze when interpolating the value of the transmission. Hence, it may produce poor visibility in the dehazed image.

An energy function based on the DCP and a transmission prior has been presented in [42] to improve the smoothness of the transmission. A proximal network (an end-to-end CNN) has been constructed to minimize the value of the energy function. However, this method does not perform well for dense haze and dark hazy images. A cascaded CNN that jointly estimates the transmission and global atmospheric light is presented in [9]. The transmission is calculated using a dense CNN, whereas a lightweight CNN is used to compute the atmospheric light. The PDR-Net (a deep CNN) with a multi-term loss function has been presented in [8]. It jointly minimizes the multi-term loss function to refine contrast and color.

The CNN based methods are well suited to SID, but they have an increased computational cost.

2.6 GAN based methods

The generative adversarial network (GAN) was introduced in 2014 and has been exploited very well in the field of SID. Recent GAN based methods have been presented in [3, 46].

Multilevel pooling is used by the method in [46] to compute the transmission. Further, a GAN checks whether the transmission and the respective dehazed image are real or fake to maintain their structural correlation. This method does not work well under dense haze, and it distorts color in bright regions.

The cycle-dehazing GAN is presented in [3]. Unpaired hazy and haze-free images are used to train the cycle-dehaze network. The perceptual loss function may distort color and structure in [3].

3 Atmospheric scattering model (ASM)

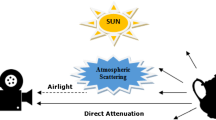

Single image dehazing methods use the ASM as the base model to perform dehazing [4, 20]. The ASM represents the effects of scattered light on the captured image. Fig. 1 shows the process of atmospheric scattering. An object reflects incident sunlight, which is then scattered by air molecules. The camera receives scattered light (airlight) and non-scattered light (direct attenuation) [20]. The ASM is mathematically represented by (1) [22].

\[ I_{d}^{c}(x,y) = I_{o}^{c}(x,y)\,Tr(x,y) + Ar\,(1 - Tr(x,y)) \tag{1} \]

where (x, y) is the location (coordinate) of a pixel, c ∈ (r, g, b), and Io and Id represent the haze-free and hazy image, respectively. The global illumination (atmospheric light) is Ar. The amount of non-scattered light (transmission) is given by Tr(x, y), as illustrated by (2).

\[ Tr(x,y) = e^{-\gamma \, Depth(x,y)} \tag{2} \]

where Depth(x, y) is the distance of a pixel from the camera (depth of the scene), and γ (the scattering coefficient) represents the strength of scattering.

Noise is introduced into the haze-free image by the multiplicative term Tr(x, y) and the additive term Ar(1 − Tr(x, y)), as illustrated in (1). Therefore, an accurate value of Tr(x, y) is essential for quality SID.
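The forward model can be sketched for a single pixel as follows. The sketch assumes the standard form of the ASM, in which the hazy value equals the haze-free value times Tr plus Ar times (1 − Tr), with Tr decaying exponentially with depth. The function names and the default γ = 1 are illustrative choices, not part of the proposed method.

```python
import math


def transmission(depth, gamma=1.0):
    """Transmission Tr = exp(-gamma * depth) for a single pixel."""
    return math.exp(-gamma * depth)


def add_haze(pixel, depth, airlight, gamma=1.0):
    """Apply the ASM to one haze-free RGB pixel with values in [0, 1].

    Each channel is attenuated by Tr (multiplicative term) and shifted
    toward the atmospheric light by Ar * (1 - Tr) (additive term).
    """
    tr = transmission(depth, gamma)
    return tuple(c * tr + airlight * (1.0 - tr) for c in pixel)
```

At zero depth the pixel is returned unchanged, and at very large depth every channel approaches the atmospheric light, which matches the intuition that distant objects disappear into haze.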

The value of Tr(x, y) can be estimated using (1) or (2). Equation (1) has been used by the DCP in [4]. However, it increases the reconstruction error because of the estimated value of Ar and in the presence of a sky region in the hazy image. The method in [47] uses (2) with γ = 1 to obtain the value of Tr(x, y) by computing Depth(x, y). Thus, it is unable to handle varying weather conditions (with varying values of the scattering coefficient). The method in [38] reduces the error in the transmission by computing the MC, but it over-estimates Tr(x, y) as haze density increases.

Moreover, we have translated the problem of SID into estimation of the difference channel in [26]. The method in [26] assumes that the color channels share the same transmission and atmospheric light. Therefore, the MC can be used to find the transmission by using (3).

where \(minI_{d}(x,y)=\min \limits _{c\in (r,g,b)}{({I_{d}^{c}}(x,y))}\) and \(minI_{o}(x,y)=\min \limits _{c\in (r,g,b)}({I_{o}^{c}}(x,y))\) represent the MC of the hazy and haze-free image, respectively. The term (minId(x, y) − minIo(x, y)) in (3) is the difference channel (Derr(x, y)). Minimizing the error in Derr(x, y) will generate accurate transmission. The method in [26] computed Derr(x, y) by using a non-linear bounding function. However, the non-linearity of this function increases the computational complexity.
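The link between the MC and the transmission can be checked numerically. If all color channels share the same Tr and Ar, taking the channel-wise minimum of the ASM gives minId = minIo · Tr + Ar · (1 − Tr), so that Tr = (Ar − minId) / (Ar − minIo) and Derr = (Ar − minIo)(1 − Tr). The sketch below uses this algebraic rearrangement, which may be arranged differently from the paper's own equation; the function names and sample values are hypothetical.

```python
def difference_channel(hazy_pixel, clear_pixel):
    """Derr = minId - minIo for one pixel (tuples of RGB values in [0, 1])."""
    return min(hazy_pixel) - min(clear_pixel)


def transmission_from_mc(hazy_pixel, clear_pixel, airlight):
    """Tr = (Ar - minId) / (Ar - minIo); undefined when Ar equals minIo."""
    min_id = min(hazy_pixel)
    min_io = min(clear_pixel)
    if airlight == min_io:
        raise ValueError("transmission is undefined when Ar equals minIo")
    return (airlight - min_id) / (airlight - min_io)


# Haze a pixel with a known transmission, then recover that transmission.
clear = (0.2, 0.4, 0.6)
airlight, true_tr = 0.9, 0.5
hazy = tuple(c * true_tr + airlight * (1.0 - true_tr) for c in clear)
recovered_tr = transmission_from_mc(hazy, clear, airlight)
```

In practice minIo is unknown, which is why the difference channel has to be estimated rather than computed as it is here.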

Further, Tr(x, y) can be calculated from (3) if Ar and minIo(x, y) are known a priori. A recent technique in [38] estimates minIo(x, y) with a linear transformation to increase the accuracy of the transmission obtained using (3). However, the error in minIo(x, y) increases with haze density because minIo(x, y) is overestimated. A wrongly estimated minIo(x, y) results in increased reconstruction error in the dehazed image. Therefore, the proposed method makes the following contributions.

i. The proposed method computes the difference channel to estimate the initial value of the transmission, which minimizes the reconstruction error.

ii. The difference channel depends on the multiplicative bounding function (MBF). Therefore, a linear model is presented to estimate the MBF.

iii. The smoothness of the initial transmission is increased using contextual regularization with few iterations to reduce the computational cost of dehazing.

4 Proposed method

4.1 Computation of difference channel

Equation (1) illustrates that minId(x, y) increases with depth. Therefore, Derr(x, y) increases with depth, which decreases the value of psnr(x, y). If psnr(x, y) is above a minimum threshold value (ζ), then the human eye cannot differentiate the two pixels. Let the human eye be unable to notice a difference between minId(x, y) and minIo(x, y) iff:

where δ(x, y) is the MBF, which bounds the value of the PSNR in the range (0, ζ), and \(0\le \zeta \le \infty \) is a constant parameter. It can be inferred from (4) that the MBF must be a decreasing function of depth.

Furthermore, the inequality in (5) can be obtained from (3) and (4).

Equation (5) presents the value of \(D_{err}(x,y) = I_{max} \cdot 10^{-0.05\,\delta (x,y)}\), which depends on the value of the bounding function (δ(x, y)). The method in [26] used a non-linear bounding function to estimate Derr(x, y). However, the non-linearity increased the complexity of the method in [26], and it violates the linear property of the ASM. Hence, the proposed method presents a linear multiplicative bounding function (MBF).
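The factor 0.05 in the exponent of the Derr expression can be understood by inverting a per-pixel PSNR. Assuming the PSNR between two intensities is defined as 20 · log10(Imax / |difference|), which is our reading and not a definition quoted from the source, a PSNR of p dB corresponds to an absolute difference of Imax · 10^(−p/20) = Imax · 10^(−0.05 p). The helper names below are hypothetical.

```python
import math


def pixel_psnr(a, b, i_max=1.0):
    """PSNR in dB between two intensities; infinite when they are equal."""
    err = abs(a - b)
    if err == 0.0:
        return math.inf
    return 20.0 * math.log10(i_max / err)


def error_from_psnr(psnr_db, i_max=1.0):
    """Invert the PSNR: the absolute difference that yields psnr_db."""
    return i_max * 10.0 ** (-0.05 * psnr_db)
```

Under this reading, a larger bound on the PSNR translates directly into a smaller admissible difference between the MC of the hazy and haze-free images.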

The denominator of (5) must satisfy the following inequality due to (2).

But minId(x, y) > Ar for objects brighter than Ar. Thus, Tr(x, y) < 0 for these objects. Hence, the proposed method takes absolute value of Ar − minId(x, y) in (5), which results in (6).

where the |⋅| operator returns the absolute value. The aim of the proposed work is now to compute the MBF for accuracy in SID: as the error in the MBF decreases, the accuracy increases. This is the motivation for direct estimation of Derr(x, y).

4.2 Mathematical formulation of MBF

The MBF should decrease with depth, as implied by (4). The proposed method assumes that the MBF is a linearly decreasing function of depth because of the linearity of the ASM. The MC of the hazy image is negatively correlated with the MBF. Hence, the proposed method models the estimation of the MBF as:

The method in [4] observed that minId(x, y) = 0 for haze-free points, and 0 < minId(x, y) ≤ 1 for hazy points. Thus, (7) has been modeled as (8).

where 0 ≤ minId(x, y) ≤ 1, and 0 < a ≤ 1 is the linear coefficient. The symbol 𝜖(x, y) represents random error, which has been modeled as a random image drawn from the Normal distribution with variance σ2.

4.3 Training data and computation of coefficient

Linear regression (LR) is based on minimization of the squared error. Therefore, LR is used to compute the linear coefficient a, which requires the ground truth (GT) of δ(x, y) and minId(x, y).

The value of minId(x, y) is obtained from the given hazy image. A pair of hazy and haze-free images is essential to obtain the GT of δ(x, y) by using (4). However, the natural haze-free image corresponding to a hazy image cannot be obtained, because objects in a scene move with time and hence may have a different depth at different instants. Thus, 200 haze-free images have been collected using the Google search engine. These are images of natural scenes (with mountains, forests, trees, people, roads, etc.) of size 194 × 259. The depth information of these images is not available. It has been assumed that the contents of the scene are independent of its depth [47]. Hence, random depth images corresponding to the haze-free images have been generated using the Normal distribution (\(N(0,{\sigma _{n}^{2}})\)). A random transmission is computed from these depth images for each haze-free image with the help of (2). A random value of atmospheric light has been drawn from a uniform distribution for each image. The pairs of hazy and haze-free images have been prepared by substituting the values of the haze-free image, atmospheric light, and random transmission into (1).

The set of these hazy images and their respective haze-free images has been used as the training dataset. The GT of psnr(x, y) has been computed to obtain the GT of δ(x, y) by using (4). This training dataset is used to learn the values of the linear coefficient, a = 0.9996, and the variance, σ2 = 0.0018.
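The regression step can be illustrated with a minimal least-squares fit. The sketch assumes the linear model has the form δ = 1 − a · minId + 𝜖, with the intercept fixed at 1; this is our reading of the description above, not a formula recovered from the source. The training pairs are replaced by synthetic samples with a hypothetical slope of 0.8, so the numbers produced here are unrelated to the reported a = 0.9996.

```python
import random


def fit_slope(min_id_values, delta_values):
    """Least-squares slope a for the assumed model delta = 1 - a * minId."""
    num = sum(x * (1.0 - d) for x, d in zip(min_id_values, delta_values))
    den = sum(x * x for x in min_id_values)
    return num / den


def residual_variance(min_id_values, delta_values, a):
    """Variance of the residuals delta - (1 - a * minId)."""
    res = [d - (1.0 - a * x) for x, d in zip(min_id_values, delta_values)]
    mean = sum(res) / len(res)
    return sum((r - mean) ** 2 for r in res) / len(res)


# Synthetic stand-in for the training pairs: a hypothetical slope of 0.8 and
# Gaussian noise with standard deviation 0.02 (neither comes from the paper).
rng = random.Random(0)
xs = [rng.random() for _ in range(5000)]
deltas = [1.0 - 0.8 * x + rng.gauss(0.0, 0.02) for x in xs]
a_hat = fit_slope(xs, deltas)
var_hat = residual_variance(xs, deltas, a_hat)
```

The closed form follows from setting the derivative of the summed squared error with respect to a to zero. The residual variance plays the role of σ2 in the error term.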

4.4 Significance of the MBF

The method in [4] overestimated the transmission in the extreme case (i.e., for an image with a sky region) because the DCP is invalid there (minId(x, y) ≠ 0). However, δ(x, y) > 0 in these conditions, hence the MBF computes accurate transmission in comparison with the method in [4].

Assume an extreme condition with Ar = 1 and minId(x, y) = 0.95. The method in [4] produces Tr(x, y) = 0.05. However, δ(x, y) = 0.049 in this condition, resulting in Tr(x, y) ≥ 0.06, which is less than the ground truth value of the transmission. The smoothing process can further increase this estimated value of the transmission.

4.5 The process for smoothing of the initial transmission

Equation (6) computes a non-smooth initial transmission, whose accuracy is controlled by the parameter ζ. It has been shown that for ζ < 30, the human eye is able to detect the difference between two images. The effect of different values of ζ on the initial transmission is shown in Fig. 2. Noise has been added to the image in Fig. 2a with the depth image in Fig. 2b using (1) to obtain the hazy image in Fig. 2c. The initial transmission is computed from the image in Fig. 2c with ζ = [25, 35, 45, 55] by using (6), and is shown as a graph in Fig. 2d.

The black curve in Fig. 2d depicts the GT of the transmission. The two red curves in Fig. 2d represent the transmissions with ζ = [45, 55], respectively. The red curves are far from the GT, which implies that a high ζ relaxes the upper bound. The blue curve represents the transmission with ζ = 35 and is close to the GT. However, the blue curve is neither completely an upper bound nor completely a lower bound on the transmission. The transmission with ζ = 25 is represented by the green curve and is a tight lower limit on the GT throughout. Thus, it can be concluded that ζ < 30 will produce a tight lower limit on the transmission. A lower value of ζ generates a more contrasted dehazed image.

The initially estimated transmission is non-smooth, hence smoothing is important for better dehazing. Contextual regularization has been used to increase the smoothness of the initial transmission and thereby improve its accuracy. The following objective function has been minimized to perform the contextual regularization.

where λ is the regularization parameter, Ω is the index set of pixels in the transmission, Dik represents the differential operation, the weight matrix (wk) is obtained using the filters presented in [19], Tr represents the smooth transmission, \(\hat {Tr}\) represents the initially estimated transmission, \(\bigodot \) is the convolution operation, and ∘ is the pixel-wise multiplication operator. The contextual regularization has been adapted from [19] to find the solution of (9). However, the regularization process of the proposed method terminates in fewer iterations than the method in [19].

4.6 Computation of atmospheric light

Techniques to estimate the value of the atmospheric light have been presented in [4, 19, 38]. The average intensity of the brightest pixels in the dark channel has been used in [4, 19] as the atmospheric light. The proposed work uses the method in [19] to compute the value of the atmospheric light.
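A minimal sketch of this kind of estimate is given below: it selects the brightest fraction of pixels in a dark-channel map and averages the hazy image over those positions. The default fraction of 0.1%, the function name, and the nested-list layout are assumptions for illustration; the exact procedure in [19] may differ.

```python
def estimate_airlight(hazy, dark, fraction=0.001):
    """Average hazy-image color over the brightest dark-channel pixels.

    hazy: H x W nested list of RGB tuples; dark: H x W nested list of floats.
    At least one pixel is always used.
    """
    ranked = [(dark[y][x], y, x)
              for y in range(len(dark))
              for x in range(len(dark[0]))]
    ranked.sort(reverse=True)                 # brightest dark-channel values first
    k = max(1, int(len(ranked) * fraction))
    top = ranked[:k]
    n_channels = len(hazy[0][0])
    return tuple(sum(hazy[y][x][c] for _, y, x in top) / k
                 for c in range(n_channels))
```

Averaging over several candidates rather than taking a single maximum makes the estimate less sensitive to isolated bright pixels.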

4.7 Computation of dehazed image

The dehazed (estimated haze free) image has been obtained by plugging values of smooth transmission and atmospheric light in (10).

where \(\hat {{I_{o}^{c}}}(x,y)\) represents the dehazed image, and the \(\max (\cdot ,\cdot )\) function returns the maximum of two values and avoids division by zero in (10).
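The recovery step can be sketched for one pixel by inverting the ASM, with the transmission floored by a small constant so that the division is always defined. The floor value of 0.1, the clipping of the result to [0, 1], and the function name are assumptions for this example and are not specified in the text.

```python
def recover_pixel(hazy_pixel, tr, airlight, tr_floor=0.1):
    """Invert the ASM for one RGB pixel: (Id - Ar) / max(Tr, floor) + Ar.

    The result is clipped to [0, 1] (an illustrative choice).
    """
    t = max(tr, tr_floor)                     # guards against division by zero
    return tuple(min(1.0, max(0.0, (c - airlight) / t + airlight))
                 for c in hazy_pixel)
```

For example, a pixel hazed with Tr = 0.5 and Ar = 0.9 from (0.2, 0.4, 0.6) becomes (0.55, 0.65, 0.75), and `recover_pixel((0.55, 0.65, 0.75), 0.5, 0.9)` returns approximately the original values.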

5 Comparison of results and analysis

An Intel Core(TM) i7-4790 @ 3.60 GHz processor with Windows 8.1 Pro has been used to perform the experiments. The proposed SID method has been implemented in MATLAB R2014a. The proposed method is compared with seven renowned dehazing methods: Dark-Channel Prior (DP) [4], Boundary Constraint Context Regularization (BR) [19], Color Attenuation Prior (CP) [47], MSCNN (CN) [27], Improved Linear Depth Model (IM) [24], Fast Dehazing using Linear Transformation (FT) [38], and Adaptive Dehazing Control Factor (ADCF) [23].

Real-world and synthesized hazy images have been used to test the performance. These hazy images have been collected from the Waterloo-IVC (IVC) [16] and NYU (NYU) [21] datasets, respectively. Next, the proposed method has been evaluated using the RESIDE dataset [12], containing 500 real-world hazy images with their respective haze-free images. The objective and qualitative comparisons of results are presented in the following subsections.

5.1 Evaluation of transmission

The quality of dehazing is controlled by the accuracy of the transmission. Hence, the smooth transmissions obtained using the proposed MBF are compared with DP, BR, FT, and CP on the NYU dataset.

The images shown in Fig. 3 have been taken from the NYU dataset. Figure 3a is a haze-free image and Fig. 3b is the depth image of Fig. 3a. Haze has been added to Fig. 3a using (1) with different values of γ = {1, 1.5, 2}, and the corresponding hazy images have been obtained. The smooth transmissions have been estimated from these hazy images.

The MSE is computed to measure the accuracy of the smooth transmission. Table 1 shows the MSE obtained for the proposed, BR, CP, and FT methods. The best results are shown in bold in Table 1. Table 1 shows that the accuracy of the proposed transmission improves as haze density increases and is better than that of FT, CP, and BR. Hence, we can conclude that the transmission estimated by the proposed method is more accurate than the transmission computed by BR, CP, and FT.
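For reference, the MSE between an estimated transmission map and its ground truth is the mean of the squared pixel-wise differences. A minimal sketch with a hypothetical function name and nested-list maps:

```python
def mse(estimated, reference):
    """Mean squared error between two equally sized 2-D transmission maps."""
    diffs = [(e - r) ** 2
             for est_row, ref_row in zip(estimated, reference)
             for e, r in zip(est_row, ref_row)]
    return sum(diffs) / len(diffs)
```

A lower value indicates that the estimated transmission is closer to the ground truth obtained from the depth image.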

The smoothness of the proposed initial transmission has been improved by the contextual regularization adapted from [19]. The smoothing process terminates in 1 to 3 iterations, whereas the method in [19] requires more iterations. The initial transmission and the transmission obtained after each iteration (Itr) of contextual regularization for a hazy image are shown in Fig. 4. The initial transmission is shown in Fig. 4b, and the transmissions after each iteration are shown in Fig. 4c-e.

The proposed method obtains a less detailed initial transmission, as shown in Fig. 4b. Thus, it requires only three iterations to improve the smoothness of the initial transmission. Therefore, the proposed method is computationally faster than the method in [19].

5.2 The visual analysis and comparison

The four categories of hazy images (synthetic, real-world, challenging, and images with man-made light) have been utilized to present visual results in Figs. 5, 6, 7, 8, 9 and 10.

For synthetic images:

The dehazed images of a synthetic image are presented in Fig. 5. The invalidity of the DCP in [4] for Fig. 5 leaves residual haze (see Fig. 5b). The image in Fig. 5c is over-saturated by [19]. Figure 5d and e show that the methods in [27, 47] obtain over-estimated transmission. The method in [24] improves the transmission of objects at short distance (see the newspaper in Fig. 5f). However, the transmission at long distance is over-estimated, as observed in Fig. 5f. The accuracy of the transmission by the method in [38] is better than that of the methods in [4, 19, 24, 27, 47]. However, residual haze still exists in Fig. 5g. The dehazed image obtained by the proposed method has more visibility than those of the methods in [4, 19, 24, 27, 38, 47], as presented in Fig. 5h. This indicates that the proposed method obtains more accurate transmission for synthetic images than the methods in [4, 19, 24, 27, 38, 47].

For real-world images:

Figures 6, 7 and 8 present results on real-world images. Fig. 6 presents a comparison on outdoor images without a sky region, whereas a comparative analysis of hazy images with a sky region is presented in Fig. 7. Dehazed images with vibrant colors are shown in Fig. 8. In Fig. 6, a visually pleasant result has been computed by [4] (see Fig. 6b, which favors the DCP). However, there are halos in Fig. 6b. The method in [19] extracts more details (see the hair of the dog in Fig. 6c) than [4]. However, the dehazed image is a little dark. The method in [47] obtains darker results than the method in [19]. The method in [27] produces a small amount of saturation in Fig. 6e, which indicates overestimation of the transmission. The saturation is controlled by [24] in Fig. 6f. However, the method in [24] darkens the hair of the dog. The method in [38] over-brightens the image (see the white area around the nose of the dog in Fig. 6g) and shows fewer details. The proposed method produces a contrasted dehazed image with controlled brightness and more details (see Fig. 6h).

There is clear visibility in the non-sky region in Fig. 7b because the DCP is valid there. However, the method in [4] is incapable of removing haze from the sky region. The method in [19] improves the visibility of the entire scene (sky as well as non-sky regions) in Fig. 7c. However, it has less visibility at long distance. The dehazed image in Fig. 7d shows less visibility than those of the methods in [4, 19] because of inaccurate depth estimation. There is residual haze in Fig. 7e because of the wrong value of transmission estimated by [27]. The method in [24] improves the estimated depth, which increases the visibility in Fig. 7f in comparison with [47]. However, the image obtained in Fig. 7f is dark. The haze is removed at long distance by the method in [38] in Fig. 7g, but the dehazed image is not visually pleasant. The image in Fig. 7h shows better visibility than those of [4, 19, 24, 27, 38, 47]. This indicates the accuracy of the transmission estimated by the proposed method compared to the other methods.

Figure 8b presents the dehazed image obtained by the method in [4], which has better visibility. However, it obtains fine visibility through soft matting, which is computationally intensive, and the method in [4] produces halos at depth discontinuities. The method in [19] obtains finer results with faster computation than [4] (see Fig. 8c). However, it distorts color (see the stadium seating (stand)). There is residual haze in the image shown in Fig. 8d, which indicates that the method in [47] wrongly estimates the depth of the scene. The method in [27] improves visibility in Fig. 8e. However, it also distorts the colors at long distance (see the stadium seating). The result produced by the method in [24] is a little dark because of wrong depth estimation (see Fig. 8f). The method in [38] increases the contrast of the dehazed image in Fig. 8g. However, it distorts color (see the color of the tracks) because of incorrectly estimated transmission. The proposed method improves the visibility of the whole image without color distortion in Fig. 8h. This indicates that the proposed method retrieves the original colors with improved visibility.

For challenging images:

Figure 9a presents a hazy image with highly cluttered objects and illumination variation, which makes it a challenging hazy image. The method in [4] recovers a dehazed image of higher quality in Fig. 9b, but it shows darkness and halos. The method in [19] increases the darkness locally (see the color of the white canopy in Fig. 9c) because of over-estimated transmission. The method in [47] increases darkness in the entire image because of inaccurately estimated depth, as shown in Fig. 9d. The method in [27] reduces darkness, as shown in Fig. 9e. However, it does not remove the haze completely. The method in [24] removes the haze completely in Fig. 9f. However, it distorts the color (see the color of the black and pink T-shirts). The method in [38] wrongly estimates the transmission at depth discontinuities (the color at the top of the head of each person is too bright in Fig. 9g). A higher contrast dehazed image is computed by the proposed method in Fig. 9h, which has less color distortion than those of [4, 19, 24, 27, 38, 47]. Hence, the proposed method obtains accurate transmission for densely cluttered objects with varying illumination.

For images with man-made light:

The process of transmission estimation is affected by man-made lights. Therefore, Fig. 10 shows comparative dehazing results on an image with man-made light. The method in [4] removes haze at short distance (see the gray boxes in the dehazed image in Fig. 10b). However, it over-brightens the headlights of the train because of inaccurate transmission and does not remove haze at long distance (see the orange bogie in Fig. 10b). The method in [19] reduces the over-brightness of the headlights in Fig. 10c, but it distorts the overall color of the image (the dehazed image has a bluish tint) because of inaccurate atmospheric light. The original colors are recovered better by the method in [47] than by [19] (see Fig. 10d), but it increases the darkness of the entire scene. The method in [27] is unable to remove haze in Fig. 10e. The method in [24] produces black spots in Fig. 10f because of wrong depth. The method in [38] computes a dehazed image that is over-brightened in some areas and dark in others (see the upper left corner of Fig. 10g). In Fig. 10h, the proposed method removes the haze fully with increased visibility at long distance (see the orange bogie in Fig. 10h). The dehazed image computed by the proposed method shows contrast without any color distortion in Fig. 10h. This demonstrates the accuracy of the proposed method in comparison to the methods in [4, 19, 24, 27, 38, 47] for images containing man-made lights.

Figure 11 compares the proposed method with the recent methods in [23, 38] on the RESIDE dataset [12], using 500 real-world hazy images with varying degrees of haze that include bright objects (such as man-made lights). Figure 11a and b present the haze-free and hazy images, respectively. The dehazed images obtained by the methods in [23, 38] and by the proposed method are shown in Fig. 11c, d and e, respectively. The method in [38] estimated a wrong value of the transmission, resulting in over-brightened dehazed images (with a lower ssim than the method in [23]), as shown in Fig. 11c and d. The proposed method achieved a higher ssim than the methods in [38] and [23], as presented in Fig. 11e, indicating its ability to recover the original colors and structure in the dehazed image.

5.3 Objective analysis and comparison

Reference-based and non-reference-based [35, 40] performance metrics have been used to measure the amount of visibility obtained by the dehazing methods. Metrics e and \(\overline {r}\), described in [40], have been used as the non-reference performance metrics. Metric e measures the recovered edges, and metric \(\overline {r}\) measures the average visibility in the dehazed image. Higher values of e and \(\overline {r}\) represent better dehazing quality. Furthermore, the unified effect of e and \(\overline {r}\) is obtained by metric Q, defined as:

Metrics e, \(\overline {r}\) and Q are reported in Table 2. These values have been obtained by the methods in [4, 19, 27, 38, 47] and by the proposed method on the images in Figs. 5–10. For Figs. 5, 6 and 10, the proposed method performs better than the methods in [4, 19, 27, 38, 47] on e, \(\overline {r}\) and Q, as presented in Table 2. The methods in [19, 38] compute a higher \(\overline {r}\) for Figs. 7 and 8 because of over-brightening and excessive color distortion, as indicated in the earlier discussion. The proposed method obtains higher brightness for Fig. 8 without color distortion. Thus, the proposed method shows better results than [4, 19, 27, 38, 47] for Fig. 8. The method in [38] yields a high e for Fig. 9 in comparison to the methods in [4, 19, 27, 47] and the proposed method due to inaccurate transmission, as discussed earlier. Still, the proposed method is better than the other methods for Fig. 9 due to better e, \(\overline {r}\) and Q. Thus, the results demonstrate the accuracy and efficacy of the proposed method.
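For reference, the two non-reference indicators discussed above are commonly written in the blind contrast-assessment literature as shown in the sketch below. It assumes that the evaluation follows this standard form; the symbols \(n_o\), \(n_r\), \(\wp_r\) and \(r_i\) are introduced here for illustration only and do not appear in the text above.

```latex
% n_o, n_r : number of visible edges in the hazy and the dehazed image (assumed notation)
% \wp_r    : set of visible edge pixels in the dehazed image (assumed notation)
% r_i      : ratio of gradient magnitudes (dehazed / hazy) at edge pixel i (assumed notation)
e = \frac{n_r - n_o}{n_o},
\qquad
\overline{r} = \exp\!\left[ \frac{1}{n_r} \sum_{i \in \wp_r} \log r_i \right]
```

Under this form, e grows when dehazing reveals edges that were not visible in the hazy image, and \(\overline {r}\) is the geometric mean of the gradient gain over the visible edges, which is why over-brightening can inflate it.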

Reference-based metrics have been computed on the NYU dataset [21]. The structural similarity index (ssim), metric \(\overline {\Delta E}\) and Qu have been used as the reference-based performance metrics [15] to evaluate the dehazing methods. Metrics \(\overline {\Delta E}\) and Qu have been presented in [15]. The color restoration capability of a dehazing method is measured by \(\overline {\Delta E}\) in the range from 0 (worst) to 1 (best). The combined effect of ssim and \(\overline {\Delta E}\) is measured by metric Qu. The higher the value of Qu, the better the quality of dehazing.
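To make the structural similarity index concrete, the following is a minimal Python sketch of a simplified, single-window SSIM computed from global image statistics. It is not the evaluation code used for the reported results: the function name `global_ssim`, the `data_range` parameter and the stabilizing constants (0.01 and 0.03, the customary defaults) are assumptions, and standard SSIM implementations average the index over local windows rather than over the whole image.

```python
import numpy as np


def global_ssim(reference, test, data_range=1.0):
    """Simplified SSIM using statistics of the whole image (one window).

    `reference` is the haze-free image and `test` is the dehazed image;
    both are arrays of the same shape with values in [0, data_range].
    """
    x = np.asarray(reference, dtype=np.float64)
    y = np.asarray(test, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")

    # Small constants that stabilize the ratios (customary defaults, assumed here).
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()

    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    denominator = (mu_x * mu_x + mu_y * mu_y + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

The index reaches 1 only when the two images are identical, so a dehazed result that is over-brightened or still hazy scores lower against the haze-free reference.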

Further, visual quality is measured using the quality correlate (Qc) [17, 18, 29]. The values of metric Qc lie in the range from 0 (worst) to 100 (best).

Figures 12, 13, 14, and 15 show the values of metrics Qc, ssim, \(\overline {\Delta E}\) and Qu, respectively, on 35 images of the NYU dataset. The proposed method surpassed the methods in [19, 24, 47] on metric Qc, as shown in Fig. 12. This implies that the proposed method recovered better visual quality than the methods in [19, 24, 47].

The proposed method obtained a higher ssim than the methods in [19, 24, 47], as shown in Fig. 13. Thus, the proposed method is more accurate than the methods in [19, 24, 47]. The proposed method also surpassed the methods in [19, 24, 47] on metrics \(\overline {\Delta E}\) and Qu, as shown in Figs. 14 and 15.

Furthermore, the average values of metrics Qc, ssim, \(\overline {\Delta E}\) and Qu for the same NYU images are presented in Table 3, which shows that the proposed method outperforms the methods in [19, 24, 47] on the average values of Qc, ssim, \(\overline {\Delta E}\) and Qu. Therefore, the proposed method recovers better visibility and improved brightness with the original colors.

Next, the proposed method has been compared with the recent methods in [23, 38] on the basis of ssim, using 500 images of the RESIDE dataset. Table 4 presents the average value of ssim obtained by the methods in [23, 38] and by the proposed method, with the best results highlighted in bold. Table 4 shows that the method in [23] preserves structural similarity better than the method in [38]. However, the proposed method achieves the best ssim among the three, which demonstrates its accuracy in comparison to the methods in [23, 38].

5.4 Comparison of computation time

The processing speed of the presented technique has been compared with the methods in [2, 4, 19, 27, 34, 47] to assess its efficiency. The running time of the methods in [2, 4, 19, 27, 34, 47] and of the proposed method is measured in the MATLAB environment on images of different sizes. The images used for evaluation were downloaded using the Google search engine. All methods comprise regularization, image dehazing with filtering, airlight computation, soft matting, and transmission estimation. To ensure fairness, each method is executed 4 times on each image. The average time (in seconds) is computed and presented in Table 5. Some methods run out of memory during execution, which is represented by the symbol ′−′ in Table 5.
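The timing protocol described above (several runs per image, averaged, with a marker for out-of-memory failures) can be sketched as follows. This is a hypothetical Python harness for illustration, not the MATLAB script used for Table 5; the function name `average_runtime` and the convention of returning `None` for a run that exhausts memory are assumptions.

```python
import time


def average_runtime(method, image, repeats=4):
    """Return the mean wall-clock time in seconds of `method(image)`.

    `method` is any callable that dehazes `image`. The call is repeated
    `repeats` times and the elapsed times are averaged. If the method runs
    out of memory, None is returned, which plays the role of the '-' entry
    in a timing table (an assumed convention).
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")

    elapsed = []
    for _ in range(repeats):
        start = time.perf_counter()
        try:
            method(image)
        except MemoryError:
            return None
        elapsed.append(time.perf_counter() - start)
    return sum(elapsed) / len(elapsed)
```

Averaging over repeated runs reduces the influence of one-off delays such as caching or background load, so the reported time reflects the method rather than a single lucky or unlucky execution.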

The method in [4] shows a high computation time in Table 5. Furthermore, its computation time doubles with increasing image size, and the physical memory of the machine runs out for larger images due to soft matting, which is indicated by the ′−′ symbol. The method in [34] uses a median filter, which results in less computation time than [4]. However, its computation time increases very quickly as the image size increases. The method in [19] is much faster than the method in [34].

The computation time of the method in [27] is lower than that of the method in [19] for small images (such as 600 × 400, 800 × 600, and 1086 × 731). However, its computation time increases rapidly for larger images. The method in [2] is slower than the methods in [19, 47]. However, it is faster than the method in [27] for large images.

The computation time of [47] increases very slowly as the image size increases. This indicates that the method in [47] is fast compared to [4, 19, 27, 34] and [2]. However, the proposed method shows a lower computation time than the other methods because of the difference channel. The computation time of the proposed method is low due to the accuracy of the initially estimated transmission, which is achieved by direct estimation of Derr. The computation time of the proposed method also increases slowly with image size, which demonstrates its efficiency.

6 Conclusion and future work

The difference channel is estimated using the MBF. The MBF is computed using a novel linear model, whose parameters are learned using the LR method. The robustness, efficiency and usefulness of the proposed work are demonstrated by objective and visual analysis. The objective evaluation is conducted using metrics such as e, ssim, \(\overline {r}\), Q, Qc, \(\overline {\Delta E}\) and Qu. The proposed method produces results with higher contrast and faithful colors in comparison to the methods in [4, 19, 24, 27, 38, 47]. Accuracy is demonstrated by MSE. Although the transmission rate of the proposed method is fast, it over-brightens the bright objects of the scene. Thus, a method to control the transmission rate is essential. Future work will focus on this issue.

References

Berman D, Treibitz T, Avidan S (2016) Non-local image dehazing. In: IEEE Conference on computer vision and pattern recognition, pp 1674–1682

Cai B, Xu X, Jia K, Qing C, Tao D (2016) Dehazenet: an end-to-end system for single image haze removal. IEEE Trans Image Process 25(11):5187–5198

Engin D, Genc A, Ekenel H (2018) Cycle-dehaze: Enhanced cyclegan for single image dehazing. In: IEEE Conference on computer vision and pattern recognition, pp 938–9388

He K, Sun J, Tang X (2011) Single image haze removal using dark channel prior. IEEE Trans Pattern Anal Mach Intell 33(12):2341–2353

He K, Sun J, Tang X (2012) Guided image filtering. IEEE Trans Pattern Anal Mach Intell 35(6):1397–1409

Jha DK, Gupta B, Lamba SS (2016) L2-norm-based prior for haze-removal from single image. IET Comput Vis 10(5):331–341

Kim J-H, Jang W-D, Sim J-Y, Kim C-S (2013) Optimized contrast enhancement for real-time image and video dehazing. J Vis Commun Image Represent 24(3):410–425

Li C, Guo C, Guo J, Han P, Fu H, Cong R (2019) Pdr-net: Perception-inspired single image dehazing network with refinement. IEEE Trans Multimed:1–1

Li C, Guo J, Porikli F, Fu H, Pang Y (2018) A cascaded convolutional neural network for single image dehazing. IEEE Access 6:24877–24887

Li Y, Miao Q, Song J, Quan Y, Li W (2016) Single image haze removal based on haze physical characteristics and adaptive sky region detection. Neurocomputing 182:221–234. [Online] Available: http://www.sciencedirect.com/science/article/pii/S0925231215019694

Li B, Peng X, Wang Z, Xu J, Feng D (2017) Aod-net: All-in-one dehazing network. In: IEEE International conference on computer vision, pp 4780–4788

Li B, Ren W, Fu D, Tao D, Feng D, Zeng W, Wang Z (2019) Benchmarking single-image dehazing and beyond. IEEE Trans Image Process 28(1):492–505

Ling Z, Fan G, Gong J, Wang Y, Lu X (2017) Perception oriented transmission estimation for high quality image dehazing. Neurocomputing 224:82–95. [Online] Available: http://www.sciencedirect.com/science/article/pii/S0925231216312917

Liu S, Rahman MA, Liu SC, Wong CY, Lin C-F, Wu H, Kwok N (2016) Image de-hazing from the perspective of noise filtering. Comput Electr Eng 62:345–359. [Online] Available: http://www.sciencedirect.com/science/article/pii/S0045790616308266

Lu H, Li Y, Xu X, He L, Li Y, Dansereau D, Serikawa S (2016) Underwater image descattering and quality assessment. In: IEEE International conference on image processing, pp 1998–2002

Ma K, Liu W, Wang Z (2015) Perceptual evaluation of single image dehazing algorithms. In: IEEE International conference on image processing

Mantiuk R (2011) Hdr-vdp-2: A calibrated visual metric for visibility and quality predictions in all luminance conditions. In: ACM SIGGRAPH 2011 Papers, ser. SIGGRAPH ’11. ACM, New York, pp 40:1–40:14. [Online]. Available: https://doi.org/10.1145/1964921.1964935

Mantiuk R, Kim KJ, Rempel AG, Heidrich W (2011) Hdr-vdp-2: A calibrated visual metric for visibility and quality predictions in all luminance conditions. ACM Trans Graph 30(4):40:1–40:14. [Online]. Available: https://doi.org/10.1145/2010324.1964935

Meng G, Wang Y, Duan J, Xiang S, Pan C (2013) Efficient image dehazing with boundary constraint and contextual regularization. In: IEEE International conference on computer vision, pp 617–624

Narasimhan SG (2004) Models and algorithms for vision through the atmosphere. Ph.D. dissertation, New York

Silberman N, Hoiem D, Kohli P, Fergus R (2012) Indoor segmentation and support inference from rgbd images. In: European conference on computer vision, vol 7576, pp 746–760

Nayar SK, Narasimhan SG (1999) Vision in bad weather. In: IEEE Conference on computer vision, pp 820–827

Raikwar SC (2019) Adaptive dehazing control factor based fast single image dehazing. Multimedia Tools and Applications, pp 891–918. [Online] Available: https://doi.org/10.1007/s11042-019-08120-z

Raikwar SC, Tapaswi S (2017) An improved linear depth model for single image fog removal. Multimed Tools Appl 77(15):19719–19744

Raikwar SC, Tapaswi S (2018) Tight lower bound on transmission for single image dehazing. The Visual Computer. [Online] Available: https://doi.org/10.1007/s00371-018-1596-5

Raikwar SC, Tapaswi S (2020) Lower bound on transmission using non-linear bounding function in single image dehazing. IEEE Trans Image Process 29:4832–4847

Ren W, Liu S, Zhang H, Pan J, Cao X, Yang M-H (2016) Single image dehazing via multi-scale convolutional neural networks. In: European conference on computer vision, pp 154–169

Santra S, Mondal R, Chanda B (2018) Learning a patch quality comparator for single image dehazing. IEEE Trans Image Process 27(9):4598–4607

Serikawa S, Lu H (2014) Underwater image dehazing using joint trilateral filter. Comput Electr Eng 40(1):41–50. [Online]. Available: http://www.sciencedirect.com/science/article/pii/S0045790613002644

Shi LF, Chen BH, Huang SC, Larin A, Seredin O, Kopylov A, Kuo SY (2018) Removing haze particles from single image via exponential inference with support vector data description. IEEE Trans Multimed 20(9):2503–2512

Song Y, Li J, Wang X, Chen X (2018) Single image dehazing using ranking convolutional neural network. IEEE Trans Multimed 20(6):1548–1560

Tan R (2018) Visibility in bad weather from a single image. In: IEEE Conference on computer vision and pattern recognition, pp 24–26

Tang K, Yang J, Wang J (2014) Investigating haze-relevant features in a learning framework for image dehazing. In: IEEE Conference on Computer Vision and Pattern Recognition, pp 2995–3002

Tarel JP, Hautière N (2009) Fast visibility restoration from a single color or gray level image. In: IEEE International conference on computer vision, pp 2201–2208

Wang R, Li R, Sun H (2016) Haze removal based on multiple scattering model with superpixel algorithm. J Signal Process 127(C):24–36

Wang J, Wang W, Wang R, Gao W (2016) Csps: an adaptive pooling method for image classification. IEEE Trans Multimed 18(6):1000–1010

Wang W, Yuan X, Wu X, Liu Y (2017) Dehazing for images with large sky region. Neurocomputing 238(Supplement C):365–376. [Online] Available: http://www.sciencedirect.com/science/article/pii/S0925231217302412

Wang W, Yuan X, Wu X, Liu Y (2017) Fast image dehazing method based on linear transformation. IEEE Trans Multimed 19(6):1142–1155

Xiao C, Gan J (2012) Fast image dehazing using guided joint bilateral filter. Vis Comput Int J Comput Graph 28(6-8):713–721

Xu Y, Wen J, Fei L, Zhang Z (2015) Review of video and image defogging algorithms and related studies on image restoration and enhancement. IEEE Access 4:165–188

Yang M, Liu J, Li Z (2018) Super-pixel based single nighttime image haze removal. IEEE Trans Multimed 20(11):3008–3018

Yang D, Sun J (2018) Proximal dehaze-net: a prior learning-based deep network for single image dehazing. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y (eds) European conference on computer vision. Springer International Publishing, Cham, pp 729–746

Yuan F, Huang H (2018) Image haze removal via reference retrieval and scene prior. IEEE Trans Image Process 27(9):4395–4409

Yuan H, Liu C, Guo Z, Sun Z (2017) A region-wised medium transmission based image dehazing method. IEEE Access 5:1735–1742

Zhang Y-Q, Ding Y, Xiao J-S, Liu J, Guo Z (2012) Visibility enhancement using an image filtering approach. EURASIP J Adv Signal Process 2012(1):220–225

Zhang H, Patel VM (2018) Densely connected pyramid dehazing network. In: IEEE Conference on computer vision and pattern recognition, pp 3194–3203

Zhu Q, Mai J, Shao L (2015) A fast single image haze removal algorithm using color attenuation prior. IEEE Trans Image Process 24(11):3522–3533

Cite this article

Raikwar, S.C., Tapaswi, S. & Chakraborty, S. Bounding function for fast computation of transmission in single image dehazing. Multimed Tools Appl 81, 5349–5372 (2022). https://doi.org/10.1007/s11042-021-11752-9

DOI: https://doi.org/10.1007/s11042-021-11752-9