Abstract

To achieve superior image reconstruction, this paper investigates a hybrid regularization model for image denoising and deblurring that combines the advantages of total generalized variation and wavelet frame-based methods. Computationally, we introduce an efficient alternating minimization algorithm without inner iterations. It simultaneously restores the degraded image and automatically estimates the regularization parameter based on Morozov’s discrepancy principle. Numerical experiments demonstrate that the proposed strategy significantly outperforms several current state-of-the-art methods for image deconvolution and yields results that agree well with human visual perception. The comparison covers restoration accuracy, staircase artifact suppression and feature preservation.

1 Introduction

Image restoration aims at recovering an underlying image u from its degraded observation f. Mathematically, the basic image restoration model is usually formulated as \(f=Ku+n\). Here K is a bounded linear blurring (convolution) operator and n is additive white Gaussian noise with variance \(\sigma ^2\). A classical way to solve this ill-posed inverse problem is to add a regularization term, which leads to the energy functional
\[
\min _{u}\ J(u)+\frac{\lambda }{2}\Vert Ku-f\Vert _2^2. \tag{1}
\]

Here, J(u) denotes the regularization term and \(\lambda \) is a positive regularization parameter.
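The degradation model \(f=Ku+n\) can be simulated as in the following minimal NumPy sketch. It assumes periodic (circular) boundary conditions, so that K acts as a pointwise multiplication in the Fourier domain. The helpers `psf2otf` (named after, but not identical to, MATLAB's function) and `degrade` are hypothetical names introduced here for illustration. They are not code from this paper.

```python
import numpy as np

def psf2otf(psf, shape):
    """Zero-pad a centred PSF to `shape`, move its centre to (0, 0), return its 2-D FFT."""
    pad = np.zeros(shape)
    kh, kw = psf.shape
    pad[:kh, :kw] = psf
    # circular shift so that the kernel centre sits at pixel (0, 0)
    pad = np.roll(pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(pad)

def degrade(u, psf, sigma, rng):
    """Simulate f = K u + n: circular blur K (assumed periodic), white Gaussian noise of std sigma."""
    Ku = np.real(np.fft.ifft2(psf2otf(psf, u.shape) * np.fft.fft2(u)))
    return Ku + sigma * rng.standard_normal(u.shape)

# usage on a hypothetical random test image: identity blur, noise only (pure denoising)
rng = np.random.default_rng(0)
u = rng.uniform(0, 255, size=(64, 64))
f = degrade(u, np.ones((1, 1)), 10.0, rng)
```

With a 1×1 identity kernel the sketch reduces to the pure denoising case (K = I). Any normalized kernel gives a blurred-and-noisy observation.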

Many regularization techniques have been developed in image processing. Among them, partial differential equation (variational) methods and wavelet frame-based methods are two popular approaches that have been studied extensively and have achieved great success. A successful example of variational methods is the total variation (TV) [1] regularized model
\[
\min _{u}\ \Vert \nabla u\Vert _1+\frac{\lambda }{2}\Vert Ku-f\Vert _2^2. \tag{2}
\]

Several highly efficient numerical methods exist for solving model (2). A related and challenging problem is to recover the degraded image while adaptively estimating the regularization parameter \(\lambda \). Various approaches have been proposed for selecting this parameter automatically. These include Morozov’s discrepancy principle [2,3,4,5,6], the generalized cross-validation method [7, 8], the L-curve approach [9] and the variational Bayesian method [10, 11]. Notably, when the noise variance is available, Morozov’s discrepancy principle is the preferred way to choose \(\lambda \) adaptively.

The TV model performs well in preserving important details in image denoising. Unfortunately, it inevitably produces staircase artifacts. To overcome this drawback, researchers introduced the total generalized variation (TGV) as a penalty functional in image processing. In particular, models regularized by the second-order TGV with weight \(\alpha \) (\({\mathrm{TGV}}_{\alpha }^2\)) [12,13,14,15,16,17,18,19] have received extensive attention. For image restoration, the resulting model reads
\[
\min _{u}\ {\mathrm{TGV}}_{\alpha }^2(u)+\frac{\lambda }{2}\Vert Ku-f\Vert _2^2. \tag{3}
\]

For the minimization problem (3), Bredies et al. [14] proposed a spatially dependent regularization parameter selection algorithm based on statistical methods. Later, He et al. [18] introduced an adaptive parameter estimation approach using the discrepancy principle.

Another widely adopted regularization technique is the class of wavelet frame-based methods [20,21,22,23,24], which penalize the \(\ell \)1-norm of the frame coefficients. These shrinkage-based methods simplify the theoretical analysis and computation, and they also perform well in image processing. Their main drawback is that Gibbs-like oscillations frequently appear around image discontinuities.

Therefore, we propose a new edge-preserving regularization scheme to better reconstruct the degraded image while preserving image features. We combine the merits of \({\mathrm{TGV}}_{\alpha }^2\) and the wavelet frame transform while avoiding their main shortcomings. This gives the following hybrid regularization model for image restoration, in which the wavelet term carries a weight \(\beta >0\):
\[
\min _{u}\ {\mathrm{TGV}}_{\alpha }^2(u)+\beta \Vert Wu\Vert _1+\frac{\lambda }{2}\Vert Ku-f\Vert _2^2, \tag{4}
\]

where W is the wavelet frame transform. Using Morozov’s discrepancy principle, we present a fast numerical algorithm that selects the regularization parameter \(\lambda \) in (4) automatically. Accordingly, the image reconstruction problem is formulated as
\[
\min _{u}\ {\mathrm{TGV}}_{\alpha }^2(u)+\beta \Vert Wu\Vert _1\quad \text {s.t.}\quad \Vert Ku-f\Vert _2^2\le m^2, \tag{5}
\]

where \(m^2=\tau n_1n_2\sigma ^2\). Here \(\tau \) is a predetermined noise-dependent parameter and \(n_1\times n_2\) is the image size in pixels. As discussed in [8], one can simply set \(\tau =1\).

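Next, we define the characteristic (indicator) function \(I_D(u)\) as
\[
I_D(u)=\begin{cases}0, & u\in D,\\ +\infty , & \text {otherwise},\end{cases}\qquad D=\{u:\ \Vert Ku-f\Vert _2^2\le m^2\}. \tag{6}
\]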

Then, the constrained optimization problem (5) can be transformed into the unconstrained problem
\[
\min _{u}\ {\mathrm{TGV}}_{\alpha }^2(u)+\beta \Vert Wu\Vert _1+I_D(u). \tag{7}
\]

The main contributions of this article are as follows. First, building on the TGV and wavelet frame-based methods, we put forward a new hybrid regularization model for image restoration. Combining multiple regularizers helps to obtain more accurate and stable numerical solutions. Second, we develop an efficient alternating minimization algorithm for the resulting model that requires no inner iterations. It simultaneously recovers the degraded image and adaptively selects the regularization parameter \(\lambda \) using the discrepancy principle.

The rest of this article is organized as follows. Section 2 summarizes the necessary definitions and the basic properties of the proposed model. Section 3 describes the alternating minimization method, which adaptively updates the regularization parameter at each iteration. Section 4 briefly analyzes its convergence. Section 5 provides numerical results that demonstrate the effectiveness of the new algorithm. Finally, concluding remarks are drawn in Sect. 6.

2 Preliminaries

In this section, we briefly introduce the ingredients of model (7) and summarize its properties. Following [12, 13, 17], we begin with the concept of second-order TGV.

Definition 1

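Let \({\varOmega }\subset {\mathbb {R}}^{d}\) be a bounded domain, and \(\alpha =(\alpha _0,\alpha _1)>0\). Then, the second-order total generalized variation of \(u\in L^1({\varOmega })\) is defined as the value of the functional
\[
{\mathrm{TGV}}_{\alpha }^2(u)=\sup \Big \{\int _{\varOmega } u\,\mathrm{div}^2 \xi \,\mathrm{d}x:\ \xi \in C_{c}^{2}({\varOmega }, S^{{d}\times {d}}),\ \Vert \xi \Vert _\infty \le \alpha _0,\ \Vert \mathrm{div}\,\xi \Vert _\infty \le \alpha _1\Big \}, \tag{8}
\]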

where \(S^{{d}\times {d}}\) represents the set of all symmetric \({d}\times {d}\) matrices and \(C_{c}^{2}({\varOmega }, S^{{d}\times {d}})\) is the space of twice continuously differentiable, compactly supported symmetric \({d}\times {d}\) matrix fields. Moreover, consider the space of bounded generalized variation (BGV) of order 2,
\[
\mathrm{BGV}_\alpha ^2({\varOmega })=\{u\in L^1({\varOmega }):\ {\mathrm{TGV}}_{\alpha }^2(u)<\infty \}.
\]
Endowed with the norm
\[
\Vert u\Vert _{\mathrm{BGV}_\alpha ^2}=\Vert u\Vert _{L^1({\varOmega })}+{\mathrm{TGV}}_{\alpha }^2(u), \tag{9}
\]
it is a Banach space. Furthermore, by [25], if \({\varOmega }\subset {\mathbb {R}}^{{d}}\) is a bounded Lipschitz domain, then \(\mathrm{BGV}_\alpha ^2({\varOmega })=\mathrm{BV}({\varOmega })\) for all \((\alpha _0,\alpha _1)>0\) in the sense of topologically equivalent Banach spaces.

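In the following, we focus on the dimension \({d}=2\) and denote the spaces \(U=C_{c}^{2}({\varOmega }, {\mathbb {R}}), V=C_{c}^{2}({\varOmega }, {\mathbb {R}}^2)\), and \(W=C_{c}^{2}({\varOmega }, S^{2\times 2})\). Following Refs. [12, 17], the discretized \({\mathrm{TGV}}_{\alpha }^2(u)\) of \(u\in U\) can be rewritten as
\[
{\mathrm{TGV}}_{\alpha }^2(u)=\min _{p\in V}\ \alpha _1\Vert \nabla u-p\Vert _1+\alpha _0\Vert \varepsilon (p)\Vert _1, \tag{10}
\]

where \(p=(p_1,p_2)^\mathrm{T}\in V\), and \(\varepsilon (p)=\frac{1}{2}(\nabla p+\nabla p^\mathrm{T})\) is the symmetrized derivative. The operators \(\nabla u\) and \(\varepsilon (p)\) are given by
\[
\nabla u=\begin{pmatrix}\nabla _x u\\ \nabla _y u\end{pmatrix},\qquad
\varepsilon (p)=\begin{pmatrix}\nabla _x p_1 & \frac{1}{2}(\nabla _y p_1+\nabla _x p_2)\\ \frac{1}{2}(\nabla _y p_1+\nabla _x p_2) & \nabla _y p_2\end{pmatrix},
\]
where \(\nabla _x\) and \(\nabla _y\) denote the first-order difference operators in the horizontal and vertical directions.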
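The discrete operators can be prototyped as follows. This NumPy sketch makes several assumptions for illustration. It uses forward differences with periodic boundary conditions for both \(\nabla \) and \(\varepsilon \). It uses anisotropic \(\ell \)1 norms, that is, sums of absolute values with the off-diagonal entry of \(\varepsilon (p)\) counted twice, as in the Frobenius convention. The function names are hypothetical. Because TGV is the minimum over p in (10), evaluating the objective at a fixed p only gives an upper bound. At p = 0 this bound is \(\alpha _1\) times the anisotropic TV of u.

```python
import numpy as np

def dx(u):
    """Forward difference along columns (horizontal), periodic boundary."""
    return np.roll(u, -1, axis=1) - u

def dy(u):
    """Forward difference along rows (vertical), periodic boundary."""
    return np.roll(u, -1, axis=0) - u

def dx_t(q):
    """Adjoint of dx."""
    return np.roll(q, 1, axis=1) - q

def dy_t(q):
    """Adjoint of dy."""
    return np.roll(q, 1, axis=0) - q

def grad(u):
    """Discrete gradient, stacked as an array of shape (2, n1, n2)."""
    return np.stack([dx(u), dy(u)])

def sym_grad(p):
    """Distinct entries (e11, e12, e22) of the symmetrized derivative eps(p)."""
    p1, p2 = p
    return dx(p1), 0.5 * (dy(p1) + dx(p2)), dy(p2)

def tgv_objective(u, p, alpha0, alpha1):
    """alpha1*||grad u - p||_1 + alpha0*||eps(p)||_1 for a fixed p (an upper bound on TGV)."""
    e11, e12, e22 = sym_grad(p)
    first = np.abs(grad(u) - p).sum()
    second = np.abs(e11).sum() + 2 * np.abs(e12).sum() + np.abs(e22).sum()
    return alpha1 * first + alpha0 * second
```

The adjoints `dx_t` and `dy_t` are not needed to evaluate the objective. They are included because the u- and p-steps of the algorithm apply \(\nabla ^{T}\) and \(\varepsilon ^{T}\).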

Next, we briefly review the concepts of tight frame and tight wavelet frame for \(L^2({\mathbb {R}}^2)\). More details regarding this issue can be found in [21].

Definition 2

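A countable set \({\mathcal {X}}\subset L^2({\mathbb {R}}^2)\) is called a tight frame if
\[
\Vert g\Vert _2^2=\sum _{h\in {\mathcal {X}}}|\langle g,h\rangle |^2\quad \text {for all } g\in L^2({\mathbb {R}}^2),
\]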

where \(\langle \cdot ,\cdot \rangle \) is the inner product of \(L^2({\mathbb {R}}^2)\).

Furthermore, for a given \({\varPsi }=\{\psi _1,\ldots ,\psi _r\}\subset L^2({\mathbb {R}}^2)\), the wavelet system \({\mathcal {X}}({\varPsi })\) is defined as the collection of dilations and shifts of \({\varPsi }\):
\[
{\mathcal {X}}({\varPsi })=\{\psi _{l,s,t}:\ 1\le l\le r,\ s\in {\mathbb {Z}},\ t\in {\mathbb {Z}}^2\},
\]

where \(\psi _{l,s,t}\) is given by
\[
\psi _{l,s,t}(x)=2^{s}\psi _l(2^{s}x-t).
\]

If \({\mathcal {X}}({\varPsi })\) forms a tight frame of \(L^2({\mathbb {R}}^2)\), then the system \({\mathcal {X}}({\varPsi })\) is called a tight wavelet frame and each function \(\psi _{i}\in {\varPsi }(i=1,\ldots ,r)\) is called a (tight) framelet.
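A one-level undecimated Haar framelet system gives a concrete tight frame in the discrete, periodic setting. The sketch below is our own illustration, not the 4-scale Haar frame used in the experiments. The filters (1/2, 1/2) and (1/2, −1/2) satisfy \(|{\hat{H}}_0|^2+|{\hat{H}}_1|^2=1\). As a result, the tensor-product transform satisfies \(W^{T}W=I\) and preserves energy. Multi-level versions would use upsampled (à trous) filters. The function names are hypothetical.

```python
import numpy as np

H0 = (0.5, 0.5)   # Haar low-pass filter (undecimated normalization)
H1 = (0.5, -0.5)  # Haar high-pass filter

def filt(u, h, axis):
    """Circular filtering y[n] = h0*u[n] + h1*u[n-1] along `axis`."""
    return h[0] * u + h[1] * np.roll(u, 1, axis=axis)

def filt_t(y, h, axis):
    """Adjoint of filt: h0*y[n] + h1*y[n+1]."""
    return h[0] * y + h[1] * np.roll(y, -1, axis=axis)

def haar_W(u):
    """One-level undecimated 2-D Haar framelet transform: list of four subbands."""
    return [filt(filt(u, a, 0), b, 1) for a in (H0, H1) for b in (H0, H1)]

def haar_Wt(coeffs):
    """Adjoint transform W^T; for this tight frame W^T W = I."""
    out = np.zeros_like(coeffs[0])
    bands = [(a, b) for a in (H0, H1) for b in (H0, H1)]
    for c, (a, b) in zip(coeffs, bands):
        out += filt_t(filt_t(c, b, 1), a, 0)
    return out
```

Because each 1-D filter pair sums to the identity in \(F^{T}F\), the four 2-D subbands sum to the identity as well. This is the property \(W^{T}W=I\) used in the u-step.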

Finally, we show that problem (7) admits a solution.

Theorem 1

The optimization problem (7) admits a solution.

Proof

Let \(\{u^{k}\}\) be a bounded minimizing sequence of the objective \(F(u)={\mathrm{TGV}}_{\alpha }^2(u)+\beta \Vert Wu\Vert _1+I_D(u)\) in (7). By the compactness property of the space \(\mathrm{BV}({\varOmega })\), there exist \(u^*\in \mathrm{BV}({\varOmega })\) and a subsequence of \(\{u^{k}\}\), still denoted by the same symbol, such that \(u^{k}\) converges to \(u^*\) in \(L^{1}({\varOmega })\). According to the standard arguments in [12, 21, 26, 27], the functionals \({\mathrm{TGV}}_{\alpha }^2(u)\), \(\Vert Wu\Vert _1\) and \(I_D(u)\) are all proper, convex and lower semicontinuous, and so is their weighted sum F. Therefore,
\[
F(u^*)\le \liminf _{k\rightarrow \infty }F(u^{k})=\inf _{u}F(u),
\]

which implies that \(u^*\) is a minimizer of the problem (7). \(\square \)

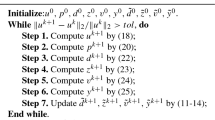

3 Numerical algorithm

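Substituting the discrete formulation (10) of \({\mathrm{TGV}}_{\alpha }^2\) into (7), we obtain the minimization problem
\[
\min _{u,p}\ \alpha _1\Vert \nabla u-p\Vert _1+\alpha _0\Vert \varepsilon (p)\Vert _1+\beta \Vert Wu\Vert _1+I_D(u).
\]

Using the variable splitting technique [20, 28,29,30,31], we introduce four auxiliary variables d, v, w, z and consider the constrained optimization problem
\[
\min _{u,p,d,v,w,z}\ \alpha _1\Vert d\Vert _1+\alpha _0\Vert v\Vert _1+\beta \Vert w\Vert _1+I_{\mathcal {B}}(z)\quad \text {s.t.}\quad d=\nabla u-p,\ v=\varepsilon (p),\ w=Wu,\ z=Ku,
\]
where \(I_{\mathcal {B}}\) is the characteristic function of the set \({\mathcal {B}}=\{z:\Vert z-f\Vert _2^2\le m^2\}\), so that \(I_D(u)=I_{\mathcal {B}}(Ku)\).

To handle this constrained problem, we convert it into an unconstrained one by adding quadratic penalty terms, which yields
\[
\min _{u,p,d,v,w,z}\ \alpha _1\Vert d\Vert _1+\alpha _0\Vert v\Vert _1+\beta \Vert w\Vert _1+I_{\mathcal {B}}(z)+\frac{\gamma _1}{2}\Vert d-(\nabla u-p)\Vert _2^2+\frac{\gamma _2}{2}\Vert v-\varepsilon (p)\Vert _2^2+\frac{\gamma _3}{2}\Vert w-Wu\Vert _2^2+\frac{\gamma }{2}\Vert z-Ku\Vert _2^2, \tag{16}
\]

where \(\gamma _1,\gamma _2,\gamma _3,\gamma >0\) are four penalty parameters. This formulation is advantageous because (16) can be solved by an efficient alternating minimization method. Introducing the auxiliary variables \({\tilde{d}},{\tilde{v}},{\tilde{w}}\) and \({\tilde{z}}\), we obtain the iterative framework
\[
\begin{aligned}
(u^{k+1},p^{k+1},d^{k+1},v^{k+1},w^{k+1},z^{k+1})=\arg \min _{u,p,d,v,w,z}\ &\alpha _1\Vert d\Vert _1+\alpha _0\Vert v\Vert _1+\beta \Vert w\Vert _1+I_{\mathcal {B}}(z)\\
&+\frac{\gamma _1}{2}\Vert d-(\nabla u-p)-{\tilde{d}}^{k}\Vert _2^2+\frac{\gamma _2}{2}\Vert v-\varepsilon (p)-{\tilde{v}}^{k}\Vert _2^2\\
&+\frac{\gamma _3}{2}\Vert w-Wu-{\tilde{w}}^{k}\Vert _2^2+\frac{\gamma }{2}\Vert z-Ku-{\tilde{z}}^{k}\Vert _2^2,
\end{aligned}\tag{17}
\]

with the updates for \({\tilde{d}}^{k+1},{\tilde{v}}^{k+1},{\tilde{w}}^{k+1}\) and \({\tilde{z}}^{k+1}\):
\[
{\tilde{d}}^{k+1}={\tilde{d}}^{k}+(\nabla u^{k+1}-p^{k+1})-d^{k+1}, \tag{18}
\]
\[
{\tilde{v}}^{k+1}={\tilde{v}}^{k}+\varepsilon (p^{k+1})-v^{k+1}, \tag{19}
\]
\[
{\tilde{w}}^{k+1}={\tilde{w}}^{k}+Wu^{k+1}-w^{k+1}, \tag{20}
\]
\[
{\tilde{z}}^{k+1}={\tilde{z}}^{k}+Ku^{k+1}-z^{k+1}. \tag{21}
\]

More precisely, to implement (17), we minimize successively with respect to u, p, d, v, w and z, i.e.,
\[
\left\{ \begin{aligned}
u^{k+1}&=\arg \min _{u}\ \frac{\gamma _1}{2}\Vert d^{k}-(\nabla u-p^{k})-{\tilde{d}}^{k}\Vert _2^2+\frac{\gamma _3}{2}\Vert w^{k}-Wu-{\tilde{w}}^{k}\Vert _2^2+\frac{\gamma }{2}\Vert z^{k}-Ku-{\tilde{z}}^{k}\Vert _2^2,\\
p^{k+1}&=\arg \min _{p}\ \frac{\gamma _1}{2}\Vert d^{k}-(\nabla u^{k+1}-p)-{\tilde{d}}^{k}\Vert _2^2+\frac{\gamma _2}{2}\Vert v^{k}-\varepsilon (p)-{\tilde{v}}^{k}\Vert _2^2,\\
d^{k+1}&=\arg \min _{d}\ \alpha _1\Vert d\Vert _1+\frac{\gamma _1}{2}\Vert d-(\nabla u^{k+1}-p^{k+1})-{\tilde{d}}^{k}\Vert _2^2,\\
v^{k+1}&=\arg \min _{v}\ \alpha _0\Vert v\Vert _1+\frac{\gamma _2}{2}\Vert v-\varepsilon (p^{k+1})-{\tilde{v}}^{k}\Vert _2^2,\\
w^{k+1}&=\arg \min _{w}\ \beta \Vert w\Vert _1+\frac{\gamma _3}{2}\Vert w-Wu^{k+1}-{\tilde{w}}^{k}\Vert _2^2,\\
z^{k+1}&=\arg \min _{z}\ I_{\mathcal {B}}(z)+\frac{\gamma }{2}\Vert z-Ku^{k+1}-{\tilde{z}}^{k}\Vert _2^2.
\end{aligned}\right. \tag{22}
\]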

For the u-subproblem in (22), the first-order optimality condition reads
\[
\gamma _1\nabla ^{T}(\nabla u^{k+1}-p^{k}-d^{k}+{\tilde{d}}^{k})+\gamma _3W^{T}(Wu^{k+1}-w^{k}+{\tilde{w}}^{k})+\gamma K^{T}(Ku^{k+1}-z^{k}+{\tilde{z}}^{k})=0,
\]

that is,
\[
(\gamma _1\nabla ^{T}\nabla +\gamma _3 I+\gamma K^{T}K)\,u^{k+1}=\gamma _1\nabla ^{T}(p^{k}+d^{k}-{\tilde{d}}^{k})+\gamma _3W^{T}(w^{k}-{\tilde{w}}^{k})+\gamma K^{T}(z^{k}-{\tilde{z}}^{k}), \tag{23}
\]

where \({\mathcal {A}}^{T}\) denotes the adjoint of an operator \({\mathcal {A}}\), \(\nabla ^{T}\nabla =-{\varDelta }\) and \(W^{T}W=I\). Under the periodic boundary condition, \(K^{T}K\) and \(\nabla ^{T}\nabla \) are block circulant with circulant blocks, so they are diagonalized by the fast Fourier transform (FFT). Hence, \(u^{k+1}\) can be computed efficiently by FFTs. Alternatively, (23) can be solved by the discrete cosine transform (DCT) under the Neumann boundary condition with mirror extension, provided the blur kernel is symmetric (see [32]). In our experiments, we use the periodic boundary condition and FFTs.
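Under the periodic boundary assumption, the left-hand operator of the u-step is diagonal in the Fourier domain, so the linear solve is a single pointwise division. The sketch below is a minimal illustration under stated assumptions. It assumes forward differences for \(\nabla \) and a centred PSF for K, and it takes the right-hand side `rhs` of (23) as already assembled. The function names are hypothetical. `apply_normal_op` applies the same operator with spatial rolls so that the FFT solve can be checked independently.

```python
import numpy as np

def psf2otf(psf, shape):
    """Zero-pad a centred PSF to `shape`, move its centre to (0, 0), return the 2-D FFT."""
    pad = np.zeros(shape)
    kh, kw = psf.shape
    pad[:kh, :kw] = psf
    pad = np.roll(pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(pad)

def neg_laplacian_eigs(shape):
    """Fourier eigenvalues of grad^T grad (= -Laplacian) for periodic forward differences."""
    n1, n2 = shape
    wy = 2 * np.pi * np.arange(n1) / n1
    wx = 2 * np.pi * np.arange(n2) / n2
    return (2 - 2 * np.cos(wy))[:, None] + (2 - 2 * np.cos(wx))[None, :]

def solve_u(rhs, psf, g1, g3, g):
    """Solve (g1*grad^T grad + g3*I + g*K^T K) u = rhs, i.e. the u-step with W^T W = I."""
    denom = g1 * neg_laplacian_eigs(rhs.shape) + g3 + g * np.abs(psf2otf(psf, rhs.shape)) ** 2
    return np.real(np.fft.ifft2(np.fft.fft2(rhs) / denom))

def apply_normal_op(u, psf, g1, g3, g):
    """Apply the same operator in the spatial domain with circular shifts (for checking)."""
    dxu = np.roll(u, -1, axis=1) - u
    dyu = np.roll(u, -1, axis=0) - u
    lap = (np.roll(dxu, 1, axis=1) - dxu) + (np.roll(dyu, 1, axis=0) - dyu)  # grad^T grad u
    ci, cj = psf.shape[0] // 2, psf.shape[1] // 2
    idx = [(a, b) for a in range(psf.shape[0]) for b in range(psf.shape[1])]
    Ku = sum(psf[a, b] * np.roll(u, (a - ci, b - cj), axis=(0, 1)) for a, b in idx)
    KtKu = sum(psf[a, b] * np.roll(Ku, (ci - a, cj - b), axis=(0, 1)) for a, b in idx)
    return g1 * lap + g3 * u + g * KtKu
```

Since \(\gamma _3>0\), the Fourier denominator never vanishes. The solve is therefore well defined even where the blur's transfer function is zero.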

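Next, we set to zero the derivative of the objective of the p-subproblem (the second equation of (22)) with respect to p, which gives
\[
\gamma _1(p^{k+1}-\nabla u^{k+1}+d^{k}-{\tilde{d}}^{k})+\gamma _2\varepsilon ^{T}(\varepsilon (p^{k+1})-v^{k}+{\tilde{v}}^{k})=0, \tag{24}
\]

where \(\varepsilon ^{T}\) is the adjoint of \(\varepsilon \) with respect to the Frobenius inner product of symmetric matrices. Write \(\nabla =(\nabla _x,\nabla _y)^{T}\) and \((r_1,r_2)^{T}=\gamma _1(\nabla u^{k+1}-d^{k}+{\tilde{d}}^{k})+\gamma _2\varepsilon ^{T}(v^{k}-{\tilde{v}}^{k})\). Then (24) can be rewritten componentwise as
\[
\left\{ \begin{aligned}
&\big (\gamma _1+\gamma _2(\nabla _x^{T}\nabla _x+\tfrac{1}{2}\nabla _y^{T}\nabla _y)\big )p_1^{k+1}+\tfrac{\gamma _2}{2}\nabla _y^{T}\nabla _x\,p_2^{k+1}=r_1,\\
&\tfrac{\gamma _2}{2}\nabla _x^{T}\nabla _y\,p_1^{k+1}+\big (\gamma _1+\gamma _2(\nabla _y^{T}\nabla _y+\tfrac{1}{2}\nabla _x^{T}\nabla _x)\big )p_2^{k+1}=r_2.
\end{aligned}\right. \tag{25}
\]

As can be seen, (25) is a system of linear equations in the two unknowns \(p_1^{k+1}\) and \(p_2^{k+1}\). Its coefficient matrix can therefore be diagonalized blockwise by the Fourier transform.

For notational convenience, we denote
\[
a_1=\gamma _1+\gamma _2\big (\nabla _x^{T}\nabla _x+\tfrac{1}{2}\nabla _y^{T}\nabla _y\big ),\quad a_2=\tfrac{\gamma _2}{2}\nabla _y^{T}\nabla _x,\quad a_3=\gamma _1+\gamma _2\big (\nabla _y^{T}\nabla _y+\tfrac{1}{2}\nabla _x^{T}\nabla _x\big ).
\]

With this notation, (25) becomes
\[
\begin{pmatrix}a_1 & a_2\\ a_2^{T} & a_3\end{pmatrix}\begin{pmatrix}p_1^{k+1}\\ p_2^{k+1}\end{pmatrix}=\begin{pmatrix}r_1\\ r_2\end{pmatrix}. \tag{26}
\]

Under the periodic boundary condition, the linear operators \(a_1,a_2\) and \(a_3\) are block-circulant matrices that are diagonalized by the FFT. Consequently, \(p_1^{k+1}\) and \(p_2^{k+1}\) in the system (26) can be computed efficiently in the Fourier domain by Cramer’s rule.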
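After the FFT, the block system of the p-step decouples into one 2×2 complex system per frequency, which Cramer's rule solves in closed form. The NumPy sketch below assumes periodic forward differences and the coefficients obtained from \(\gamma _1 I+\gamma _2\varepsilon ^{T}\varepsilon \) with the Frobenius convention. These are our derivation's assumptions and may differ in detail from the paper's implementation. The right-hand sides `r1`, `r2` are taken as given, and all names are hypothetical.

```python
import numpy as np

def dx(u):    # forward difference along columns, periodic
    return np.roll(u, -1, axis=1) - u

def dy(u):    # forward difference along rows, periodic
    return np.roll(u, -1, axis=0) - u

def dx_t(q):  # adjoint of dx
    return np.roll(q, 1, axis=1) - q

def dy_t(q):  # adjoint of dy
    return np.roll(q, 1, axis=0) - q

def apply_p_operator(p1, p2, g1, g2):
    """Left-hand side (g1*I + g2*eps^T eps) applied to p = (p1, p2), in the spatial domain."""
    shear = dy(p1) + dx(p2)
    m1 = g1 * p1 + g2 * (dx_t(dx(p1)) + 0.5 * dy_t(shear))
    m2 = g1 * p2 + g2 * (dy_t(dy(p2)) + 0.5 * dx_t(shear))
    return m1, m2

def solve_p(r1, r2, g1, g2):
    """Solve the 2x2 block system frequency by frequency with Cramer's rule."""
    n1, n2 = r1.shape
    X = np.exp(2j * np.pi * np.arange(n2) / n2)[None, :] - 1   # Fourier symbol of dx
    Y = np.exp(2j * np.pi * np.arange(n1) / n1)[:, None] - 1   # Fourier symbol of dy
    ax, ay = np.abs(X) ** 2, np.abs(Y) ** 2
    a1 = g1 + g2 * (ax + 0.5 * ay)
    a2 = 0.5 * g2 * np.conj(Y) * X        # symbol of (g2/2) dy^T dx
    a3 = g1 + g2 * (ay + 0.5 * ax)
    R1, R2 = np.fft.fft2(r1), np.fft.fft2(r2)
    det = a1 * a3 - np.abs(a2) ** 2        # strictly positive whenever g1 > 0
    P1 = (a3 * R1 - a2 * R2) / det
    P2 = (a1 * R2 - np.conj(a2) * R1) / det
    return np.real(np.fft.ifft2(P1)), np.real(np.fft.ifft2(P2))
```

The lower-left coefficient is the complex conjugate of `a2`, which mirrors \(a_2^{T}\) in the block matrix.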

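The d-, v- and w-subproblems in (22) have closed-form solutions given by the shrinkage formula, as in [33]:
\[
d^{k+1}=\mathrm{shrink}\big (\nabla u^{k+1}-p^{k+1}+{\tilde{d}}^{k},\ \alpha _1/\gamma _1\big ), \tag{27}
\]
\[
v^{k+1}=\mathrm{shrink}\big (\varepsilon (p^{k+1})+{\tilde{v}}^{k},\ \alpha _0/\gamma _2\big ), \tag{28}
\]
\[
w^{k+1}=\mathrm{shrink}\big (Wu^{k+1}+{\tilde{w}}^{k},\ \beta /\gamma _3\big ), \tag{29}
\]

with \(\mathrm{shrink}(t,\delta )=\mathrm{sign}(t)\cdot \max (|t|-\delta ,0)\) applied componentwise.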
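The shrinkage operator is the exact minimizer of \(\delta |x|+\frac{1}{2}(x-t)^2\). The d-, v- and w-updates apply it entrywise with thresholds \(\alpha _1/\gamma _1\), \(\alpha _0/\gamma _2\) and \(\beta /\gamma _3\). A minimal NumPy sketch (the name `shrink` follows the text; the vectorized form is our choice):

```python
import numpy as np

def shrink(t, delta):
    """Componentwise soft thresholding: sign(t) * max(|t| - delta, 0)."""
    return np.sign(t) * np.maximum(np.abs(t) - delta, 0.0)

# example: entries with magnitude below the threshold are set to zero
values = shrink(np.array([-3.0, -0.5, 0.0, 0.5, 3.0]), 1.0)
```

In a full solver, one would call, for example, `shrink(grad_u - p + d_tilde, alpha1 / g1)` for the d-update, where `grad_u`, `p` and `d_tilde` are hypothetical arrays holding the current iterates.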

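Finally, we turn to the z-subproblem. We replace the characteristic function by the fidelity term weighted by the regularization parameter, which acts as a Lagrange multiplier for the constraint. The subproblem can then be written as
\[
z^{k+1}=\arg \min _{z}\ \frac{\lambda ^{k+1}}{2}\Vert z-f\Vert _2^2+\frac{\gamma }{2}\Vert z-Ku^{k+1}-{\tilde{z}}^{k}\Vert _2^2, \tag{30}
\]

which gives
\[
z^{k+1}=\frac{\lambda ^{k+1}f+\gamma (Ku^{k+1}+{\tilde{z}}^{k})}{\lambda ^{k+1}+\gamma }, \tag{31}
\]

where the regularization parameter \(\lambda ^{k+1}\) is updated by the discrepancy principle at the \((k+1)\)th iteration. Clearly, \(\lambda ^{k+1}\) and \(z^{k+1}\) are coupled. If \(\Vert (Ku^{k+1}+{\tilde{z}}^k)-f\Vert _2^2\le m^2 \ (\star )\) holds, we set \(\lambda ^{k+1}=0\) and \(z^{k+1}=Ku^{k+1}+{\tilde{z}}^k\). Otherwise, by the discrepancy principle, \(\lambda ^{k+1}\) must satisfy
\[
\Vert z^{k+1}-f\Vert _2^2=m^2. \tag{32}
\]

Subtracting f from both sides of (31) gives \(z^{k+1}-f=\frac{\gamma }{\lambda ^{k+1}+\gamma }(Ku^{k+1}+{\tilde{z}}^k-f)\). Substituting this into (32) leads to
\[
\lambda ^{k+1}=\gamma \left( \frac{\Vert Ku^{k+1}+{\tilde{z}}^k-f\Vert _2}{m}-1\right) . \tag{33}
\]

Putting all these steps together yields the proposed alternating minimization method for solving (7). Each iteration proceeds as follows:
1. compute \(u^{k+1}\) from (23) and \(p^{k+1}\) from (26);
2. compute \(d^{k+1},v^{k+1},w^{k+1}\) from (27)–(29);
3. compute \(z^{k+1}\) and \(\lambda ^{k+1}\) from (\(\star \)), (31) and (33);
4. update \({\tilde{d}},{\tilde{v}},{\tilde{w}},{\tilde{z}}\) by (18)–(21).

These steps are repeated until the stopping criterion is met.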
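The coupled z- and \(\lambda \)-update can be written in a few lines. The sketch below follows (\(\star \)), (31) and (33). As the assertions check, the resulting \(z^{k+1}\) coincides with the Euclidean projection of \(Ku^{k+1}+{\tilde{z}}^k\) onto the ball \(\Vert z-f\Vert _2\le m\). The function name is hypothetical, and arrays are compared with the Frobenius norm, i.e. the \(\ell \)2 norm of the vectorized image.

```python
import numpy as np

def update_z_lambda(Ku, z_tilde, f, m, gamma):
    """z- and lambda-update via the discrepancy principle; returns (z, lam)."""
    s = Ku + z_tilde
    res = np.linalg.norm(s - f)                # ||Ku + z_tilde - f||_2
    if res ** 2 <= m ** 2:                     # condition (star): constraint already satisfied
        return s.copy(), 0.0
    lam = gamma * (res / m - 1.0)              # lambda from (33)
    z = (lam * f + gamma * s) / (lam + gamma)  # z from (31), so that ||z - f||_2 = m
    return z, lam

# usage with hypothetical data: a residual far outside the discrepancy ball
rng = np.random.default_rng(2)
f = rng.standard_normal((16, 16))
Ku = f + 2.0 * rng.standard_normal((16, 16))
z, lam = update_z_lambda(Ku, np.zeros((16, 16)), f, 5.0, 10.0)
```

Because \(\lambda ^{k+1}\) has this closed form, the update needs no inner root-finding iteration.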

4 Convergence analysis

In this section, we state the main convergence result for the above iterative algorithm. We only sketch the proof and do not repeat the lengthy arguments. By analogy with the classical alternating direction method of multipliers (ADMM) [34,35,36], we have the following theorem.

Theorem 2

For given \(\gamma _1, \gamma _2, \gamma _3, \gamma >0\), the sequence \(\{u^{k},p^{k},d^{k},v^{k}, w^{k},z^{k},\lambda ^{k}\}\) generated by the proposed algorithm from any initial point converges to a solution of (16).

Proof

It follows from (17) that, once (u, p) is fixed, the d-, v-, w- and z-subproblems are decoupled from one another. Thus, the six variables can be grouped into two blocks, (u, p) and (d, v, w, z), and our method can be regarded as an application of ADMM. To prove convergence, we first construct the Lagrangian functional as follows:

and denote the three groups of variables by \(X=(u,p),Y=(d,v,w,z)\) and \(Z=({\tilde{d}}, {\tilde{v}}, {\tilde{w}}, {\tilde{z}})\). By the standard ADMM arguments cited above, for any \(\gamma _1,\gamma _2,\gamma _3,\gamma >0\), the sequence generated by the iterative framework (22) converges to a saddle point of (34), which completes the proof. \(\square \)

5 Experimental results

In this section, we illustrate the effectiveness of the proposed hybrid model with different wavelet frames on five \(256 \times 256\) test images: Lena, Cameraman, Boat, Peppers and Butterfly. We also compare our results with four closely related approaches: the TGV, wavelet frame, TV+wavelet (TVW for short) and deep learning-based methods. The comparison measures reconstruction quality, staircase suppression and edge preservation. For a fair comparison, all regularized models are solved with a regularization parameter update based on the discrepancy principle.

In all experiments, our scheme uses the parameters \(\gamma _1=0.5, \gamma _2=1, \gamma _3=1\) and \(\gamma =10^{(\mathrm{BSNR}/10-1)}\), where the blurred signal-to-noise ratio is \(\mathrm{BSNR}=10\log _{10}(\Vert f\Vert _2^2/\Vert n\Vert _2^2)\). The remaining parameters are fixed at \(\alpha _0=3, \alpha _1=1.5\) and \(\beta =0.5\). In addition, as suggested in [5, 6], the parameter \(\tau \) is chosen as \(\tau =-\tau _0\times \mathrm{BSNR}+1.09\), with \(\tau _0=0.03\) for image denoising and \(\tau _0=0.006\) for image deblurring. All experiments were run in MATLAB R2011b on a PC with an Intel(R) Core(TM) i5 CPU and 4 GB of RAM under Windows 7.
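The parameter rules above can be collected in a small helper. This is a sketch, not the authors' code. The function names are hypothetical. Computing BSNR from both f and n is possible only in synthetic experiments where the noise realization is known. The bound \(m^2=\tau n_1n_2\sigma ^2\) is the one defined in the introduction.

```python
import numpy as np

def bsnr_db(f, noise):
    """BSNR = 10*log10(||f||_2^2 / ||n||_2^2), as defined in the text."""
    return 10.0 * np.log10(np.sum(f ** 2) / np.sum(noise ** 2))

def experiment_parameters(shape, sigma, bsnr, deblurring):
    """Return (gamma, tau, m2) following the reported settings."""
    gamma = 10.0 ** (bsnr / 10.0 - 1.0)  # gamma = 10^(BSNR/10 - 1)
    tau0 = 0.006 if deblurring else 0.03
    tau = -tau0 * bsnr + 1.09            # tau = -tau0 * BSNR + 1.09
    n1, n2 = shape
    m2 = tau * n1 * n2 * sigma ** 2      # m^2 = tau * n1 * n2 * sigma^2
    return gamma, tau, m2
```

For example, a BSNR of 20 dB gives \(\gamma =10\). It also gives \(\tau =0.49\) for denoising and \(\tau =0.97\) for deblurring.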

All tested algorithms stop when \(\Vert u^{k+1}-u^k\Vert _2/\Vert u^k\Vert _2<10^{-4}\) or after 1000 iterations. The quality of the recovered image is measured quantitatively by the peak signal-to-noise ratio (PSNR), defined for 8-bit images as
\[
\mathrm{PSNR}=10\log _{10}\frac{255^2\,n_1n_2}{\Vert u-{\tilde{u}}\Vert _2^2}\quad (\mathrm{dB}),
\]

where u and \({\tilde{u}}\) denote the original and the restored images, respectively. In addition, the optimal \(\lambda \) is chosen as the one achieving the best restoration in terms of PSNR. Furthermore, Pratt’s figure of merit (FOM) [37] is used to evaluate the edge-preserving ability of the different models. The feature similarity (FSIM) index [38] is used for image quality assessment. Larger PSNR, FOM and FSIM values indicate higher restoration quality.
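A direct implementation of the PSNR formula is shown below. It assumes a peak value of 255 (8-bit images), which is our reading of the definition, and the helper name `psnr` is hypothetical. Note that \(255^2 n_1n_2/\Vert u-{\tilde{u}}\Vert _2^2\) equals \(255^2\) divided by the mean squared error.

```python
import numpy as np

def psnr(u, u_rec, peak=255.0):
    """PSNR in dB: 10*log10(peak^2 * n1 * n2 / ||u - u_rec||_2^2) = 10*log10(peak^2 / MSE)."""
    mse = np.mean((np.asarray(u, dtype=float) - np.asarray(u_rec, dtype=float)) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

# a uniform error of 10 gray levels gives 20*log10(25.5), roughly 28.13 dB
value = psnr(np.zeros((4, 4)), np.full((4, 4), 10.0))
```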

Example 1

First, we validate the proposed hybrid regularization strategy for image denoising and compare it with two successful methods: the wavelet frame-based model and the TGV model. Here, the wavelet frame is a 4-scale redundant Haar frame. The original image Lena is shown in Fig. 1a. Figure 1b (\({\hbox {PSNR}}=28.1281\) dB) shows its noisy version corrupted by white Gaussian noise with standard deviation 10. Figure 1c, d show the denoised results of the wavelet frame model and the TGV model, respectively, and Fig. 1e shows the result of our scheme. Zoomed-in regions are shown in Fig. 2, and the quantitative results of the three models are listed in Table 1. Fig. 1 and Table 1 show that the proposed hybrid model gives the best restoration of the three, both visually and quantitatively, in suppressing noise while preserving details.
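As a quick sanity check on the noisy-image PSNR in Example 1, take the noise level as a standard deviation \(\sigma =10\) and assume a peak value of 255 in the PSNR. Both are assumptions about the exact conventions. Since the noise energy satisfies \(\Vert n\Vert _2^2\approx n_1n_2\sigma ^2\), the PSNR of the noisy image should be close to
\[
\mathrm{PSNR}(f)\approx 10\log _{10}\frac{255^2\,n_1n_2}{n_1n_2\sigma ^2}=20\log _{10}\frac{255}{10}\approx 28.13\ \mathrm{dB}.
\]
This agrees with the reported 28.1281 dB. The small difference reflects the particular noise realization.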

Example 2

We take another standard test image, Cameraman (Fig. 3a), as an example for image deconvolution. Its degraded version in Fig. 3b (\({\hbox {PSNR}}=23.0625\) dB) is blurred by a Gaussian kernel with a \(5\times 5\) window and a standard deviation of 3, and corrupted by white Gaussian noise with variance \(\sigma ^2=15\). In this case, the wavelet frame is the 4-scale Daubechies-4 wavelet. The deconvolution results of the wavelet frame model, the TV+wavelet model and our method are displayed in Fig. 3c–e and listed in Table 2.

Example 3

In Fig. 4, we compare the deblurred and denoised results for the image Boat obtained by the wavelet frame model, the TV+wavelet model and our proposed strategy. Our wavelet frame uses a 2-scale Coiflet filter. The degraded image (Fig. 4b, \({\hbox {PSNR}}=24.1873\) dB) is blurred by motion blur with parameters “len = 6” and “theta = 30”, and corrupted by white Gaussian noise with variance \(\sigma ^2=15\). Fig. 4 and Table 3 present the deconvolution results of the three models in more detail.

Example 4

To further evaluate the proposed hybrid regularization approach for image deblurring, we use a 4-scale symmetric framelet [39] in our model and restore the degraded Peppers image. Figure 5b (\({\hbox {PSNR}}=22.3220\) dB) shows the degraded version, which is blurred by a \(6\times 6\) averaging filter and corrupted by white Gaussian noise with variance \(\sigma ^2=20\). The results of the TGV model, the TV+wavelet model and our algorithm are presented in detail in Table 4 and Fig. 5.
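The Gaussian and averaging blurs in Examples 2 and 4 can be approximated by the kernels below. They are modelled on MATLAB's `fspecial('gaussian', ...)` and `fspecial('average', ...)` but are our own simplified helpers with hypothetical names. For instance, they omit fspecial's truncation of tiny Gaussian values. The motion-blur kernel of Example 3 is not reproduced here because its construction is more involved.

```python
import numpy as np

def gaussian_kernel(size, std):
    """Normalized size x size Gaussian kernel centred on the middle pixel."""
    r = (size - 1) / 2.0
    x = np.arange(size) - r
    xx, yy = np.meshgrid(x, x)
    h = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * std ** 2))
    return h / h.sum()

def average_kernel(size):
    """size x size averaging (box) filter whose entries sum to one."""
    return np.full((size, size), 1.0 / size ** 2)

k_gauss = gaussian_kernel(5, 3.0)  # Example 2: 5 x 5 window, standard deviation 3
k_avg = average_kernel(6)          # Example 4: 6 x 6 averaging filter
```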

Example 5

Finally, to further illustrate the performance, we compare our reconstructions with the TGV model and with popular deep learning-based methods [40, 41]. Here, the Daubechies wavelet D6 is used for the wavelet frame in our algorithm. As suggested in [40], the MLP approach uses a Gaussian window width of 2 and a stride of 3. All five test images are corrupted by additive Gaussian noise with standard deviation 15. The restored images and quantitative comparisons of the three strategies are shown in Fig. 6 and listed in Table 5.

The restorations in Figs. 3, 4, 5 and 6 show that the classical wavelet frame and TV-based methods frequently produce oscillation and staircase artifacts. In contrast, the images recovered by our model look more natural and faithful. The comparisons in Tables 2, 3, 4 and 5 show that our approach attains higher PSNR, FOM and FSIM values than the wavelet frame, TGV, TV+wavelet and deep learning-based methods. This consistently demonstrates the strong performance of the proposed approach for image restoration, in terms of both restoration accuracy and edge preservation.

6 Conclusions

In this article, we combined the advantages of wavelet frame-based and TGV methods to introduce a novel hybrid regularization model for image denoising and deblurring. The model is solved by an efficient alternating minimization algorithm with adaptive parameter estimation, in which the parameter \(\lambda \) is updated automatically during the iterations. The convergence of the algorithm was also briefly discussed. Finally, in comparisons with several state-of-the-art techniques, the experimental results demonstrate the superiority of the proposed strategy for image restoration, in terms of reconstruction quality, staircase reduction and detail preservation.

References

Rudin, L., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60(1), 259–268 (1992)

Aujol, J.F., Gilboa, G.: Constrained and SNR-based solutions for TV-Hilbert space image denoising. J. Math. Imaging Vis. 26(1), 217–237 (2006)

Weiss, P., Blanc-Féraud, L., Aubert, G.: Efficient schemes for total variation minimization under constraints in image processing. SIAM J. Sci. Comput. 31(3), 2047–2080 (2009)

Ng, M.K., Weiss, P., Yuan, X.: Solving constrained total-variation image restoration and reconstruction problems via alternating direction methods. SIAM J. Sci. Comput. 32(5), 2710–2736 (2010)

Wen, Y.W., Chan, R.H.: Parameter selection for total-variation-based image restoration using discrepancy principle. IEEE Trans. Image Process. 21(4), 1770–1781 (2012)

He, C., Hu, C., Zhang, W., Shi, B.: A fast adaptive parameter estimation for total variation image restoration. IEEE Trans. Image Process. 23(12), 4954–4967 (2014)

Golub, G.H., Heath, M., Wahba, G.: Generalized cross-validation as a method for choosing a good ridge parameter. Technometrics 21(2), 215–223 (1979)

Galatsanos, N.P., Katsaggelos, A.K.: Methods for choosing the regularization parameter and estimating the noise variance in image restoration and their relation. IEEE Trans. Image Process. 1(3), 322–336 (1992)

Hansen, P.C.: Analysis of discrete ill-posed problems by means of the L-curve. SIAM Rev. 34(4), 561–580 (1992)

Babacan, S.D., Molina, R., Katsaggelos, A.K.: Parameter estimation in TV image restoration using variational distribution approximation. IEEE Trans. Image Process. 17(3), 326–339 (2008)

Babacan, S.D., Molina, R., Katsaggelos, A.K.: Variational Bayesian blind deconvolution using a total variation prior. IEEE Trans. Image Process. 18(1), 12–26 (2009)

Bredies, K., Kunisch, K., Pock, T.: Total generalized variation. SIAM J. Imaging Sci. 3(3), 492–526 (2010)

Knoll, F., Bredies, K., Pock, T., Stollberger, R.: Second order total generalized variation (TGV) for MRI. Magn. Reson. Med. 65(2), 480–491 (2011)

Bredies, K., Dong, Y., Hintermüller, M.: Spatially dependent regularization parameter selection in total generalized variation models for image restoration. Int. J. Comput. Math. 90(1), 109–123 (2012)

Bredies, K., Holler, M.: A TGV regularized wavelet based zooming model. Lect. Notes Comput. Sci. 7893, 149–160 (2013)

Valkonen, T., Bredies, K., Knoll, F.: Total generalized variation in diffusion tensor imaging. SIAM J. Imaging Sci. 6(1), 487–525 (2013)

Guo, W., Qin, J., Yin, W.: A new detail-preserving regularity scheme. SIAM J. Imaging Sci. 7(2), 1309–1334 (2014)

He, C., Hu, C., Yang, X., He, H., Zhang, Q.: An adaptive total generalized variation model with augmented Lagrangian method for image denoising. Math. Probl. Eng. 2014, 157893 (2014)

Liu, X.: Augmented Lagrangian method for total generalized variation based Poissonian image restoration. Comput. Math. Appl. 71(8), 1694–1705 (2016)

Cai, J.F., Osher, S., Shen, Z.: Split Bregman methods and frame based image restoration. Multiscale Model. Simul. 8(2), 337–369 (2010)

Cai, J.F., Dong, B., Osher, S., Shen, Z.: Image restoration: total variation, wavelet frames, and beyond. J. Am. Math. Soc. 25(4), 1033–1089 (2012)

Zhang, Y., Kingsbury, N.: Improved bounds for subband-adaptive iterative shrinkage/thresholding algorithms. IEEE Trans. Image Process. 22(4), 1373–1381 (2013)

He, L., Wang, Y., Xiang, Z.: Wavelet frame-based image restoration using sparsity, nonlocal, and support prior of frame coefficients. Vis. Comput. (2017). https://doi.org/10.1007/s00371-017-1440-3

Wang, C., Yang, J.: Poisson noise removal of images on graphs using tight wavelet frames. Vis. Comput. (2017). https://doi.org/10.1007/s00371-017-1418-1

Bredies, K., Valkonen, T.: Inverse problems with second-order total generalized variation constraints. In: Proceedings of SampTA 2011, 9th International Conference on Sampling Theory and Applications (2011)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, New York (2004)

Chen, D.-Q., Cheng, L.-Z.: Deconvolving Poissonian images by a novel hybrid variational model. J. Vis. Commun. Image R. 22(7), 643–652 (2011)

Goldstein, T., Osher, S.: The split Bregman algorithm for L1 regularized problems. SIAM J. Imaging Sci. 2(2), 323–343 (2009)

Setzer, S.: Operator splittings, Bregman methods and frame shrinkage in image processing. Int. J. Comput. Vis. 92(3), 265–280 (2011)

Liu, X.: Alternating minimization method for image restoration corrupted by impulse noise. Multimed. Tools Appl. 76(10), 12505–12516 (2017)

Zha, Z., Liu, X., Zhang, X., Chen, Y., Tang, L., Bai, Y., Wang, Q., Shang, Z.: Compressed sensing image reconstruction via adaptive sparse nonlocal regularization. Vis. Comput. 34(1), 117–137 (2018)

Ng, M.K., Chan, R.H., Tang, W.-C.: A fast algorithm for deblurring models with Neumann boundary conditions. SIAM J. Sci. Comput. 21(3), 851–866 (1999)

Wang, Y., Yin, W., Zhang, Y.: A fast algorithm for image deblurring with total variation regularization. CAAM Technical Report TR07-10 (2007)

Bertsekas, D., Tsitsiklis, J.: Parallel and Distributed Computation: Numerical Methods. Athena Scientific, Belmont (1997)

He, B., Liao, L.Z., Han, D., Yang, H.: A new inexact alternating directions method for monotone variational inequalities. Math. Program. 92(1), 103–118 (2002)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

Hajiaboli, M.R.: A self-governing fourth-order nonlinear diffusion filter for image noise removal. IPSJ Trans. Comput. Vision Appl. 2, 94–103 (2010)

Zhang, L., Zhang, L., Mou, X., Zhang, D.: FSIM: a feature similarity index for image quality assessment. IEEE Trans. Image Process. 20(8), 2378–2386 (2011)

Selesnick, I.W., Abdelnour, A.F.: Symmetric wavelet tight frames with two generators. Appl. Comput. Harmon. Anal. 17(2), 211–225 (2004)

Burger, H.C., Schuler, C.J., Harmeling, S.: Image denoising: can plain neural networks compete with BM3D? In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2392–2399 (2012)

Zhang, K., Zuo, W., Chen, Y., Meng, D., Zhang, L.: Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 26(7), 3142–3155 (2017)

Acknowledgements

The author would like to thank the editors and anonymous reviewers for their constructive comments and valuable suggestions.

Funding

This work was supported by National Natural Science Foundation of China (61402166) and Hunan Provincial Natural Science Foundation of China (14JJ3105).

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

About this article

Cite this article

Liu, X. Total generalized variation and wavelet frame-based adaptive image restoration algorithm. Vis Comput 35, 1883–1894 (2019). https://doi.org/10.1007/s00371-018-1581-z

DOI: https://doi.org/10.1007/s00371-018-1581-z