Abstract

After many years of study, image denoising on flat domains is well developed. However, many practical problems arising in areas such as computer vision, computer graphics, geometric modeling and medical imaging involve images on irregular domains such as graphs. In this paper, we consider the removal of Poisson and mixed Poisson–Gaussian noise from images on graphs. Based on the statistical characteristics of the observed noisy images, we propose a wavelet frame-based variational model to restore images on graphs. The model contains a weighted \(\ell _2\) fidelity term and an \(\ell _1\)-regularized term that uses the tight wavelet frame transform on graphs to preserve key features such as textures and edges of images. We then apply the alternating direction method of multipliers (ADMM) to solve the model. Finally, we provide supporting numerical experiments on graphs and compare with other denoising methods. The results on several image denoising tasks indicate the effectiveness of our method.

1 Introduction

In recent years, many interesting scientific problems have increasingly involved analyzing and manipulating structured data. Such data often consist of sampled real-valued functions defined on irregular domains such as graphs. Since many traditional signal processing methods, for example those used in image and video processing, are designed for data defined on regular Euclidean spaces, the development of mathematical tools and methods that can accommodate complicated data domains is an important topic. In practical applications, many data sets such as mesh surfaces, point clouds and data defined on network-like structures can naturally be modeled as scalar functions defined on the vertices of graphs, which are generally regarded as a discretization of, or random samples from, some Riemannian manifold [1, 10, 16, 19, 31, 37].

Image processing on the graph domain is of interest because it arises in many practical applications and provides new insights into signal processing (see e.g. [12, 25, 38]). Graphs provide a flexible generalization of regular Euclidean domains and surface domains [2, 4, 15, 20, 28, 35, 37], and they can approximate arbitrary topological and geometric structures. For example, Niyobuhungiro et al. [25] considered an analogue of the well-known ROF denoising model [28] on a general finite directed and connected graph. Zosso et al. [38] considered a graph-based approach for image segmentation. More recently, Dong [12] introduced a tight wavelet frame transform on graphs and discussed graph data denoising.

A graph is denoted by \(G=\{V,E,\omega \}\), where \(V:=\{v_k:k=0, \ldots , K-1\}\) is the set of vertices, \(E\subset V \times V\) is the set of edges and \(\omega :E\mapsto \mathbb {R}^+\) denotes a weight function on the edges. Let \(u:V\mapsto \mathbb {R}\) be an image function defined on the graph G, which can be viewed as a vector in \(\mathbb {R}^K\). Because of the sampling measurements and instruments, sampling noise is inevitable [29]. Thus, a fundamental problem is to remove noise to obtain high-quality images before further processing. Let \(f_G\) be an observed image on graph G which is formulated as

\[ f_G = u + \eta , \tag{1.1} \]

where u is the clean image and \(\eta \) is the perturbation noise. The case where \(\eta \) in (1.1) is additive white Gaussian noise is the one mostly considered in the early literature, since it characterizes system noise well. However, non-Gaussian types of noise are also encountered in many real observations owing to noisy sensors and channel transmission (see e.g. [18]).

An important variant is Poisson noise, which typically arises when the number of detected photons is low, as in fluorescence microscopy and emission tomography. For Poisson noise data, the probability of receiving k particles is given by

\[ P(k) = \frac{e^{-\tau }\tau ^{k}}{k!}, \quad k = 0, 1, 2, \ldots , \tag{1.2} \]

where \(\tau \) is both the expected value and the variance of the random counts. When images are defined on Euclidean space, many studies have addressed Poisson noise removal in the past decade (see e.g. [9, 18, 21, 22, 36]). For example, based on the statistics of Poisson noise, the generalized Kullback–Leibler (KL)-divergence fidelity was used in a variational model for Poisson noise removal [11]. Luisier et al. [23] constructed a Stein’s unbiased risk estimator (SURE) in the wavelet domain for removal of mixed Poisson–Gaussian noise. Gong et al. [18] proposed a universal \(\ell _1 + \ell _2\) fidelity term for mixed or unknown noise removal. Recently, Staglianò et al. [33] and Li et al. [22] studied Poisson noise removal by approximating the generalized KL-divergence in terms of a weighted least-squares function.

The main focus of this paper is to extend Poisson noise removal from images on regular Euclidean space to images defined on graphs. We assume that the observation \(f_G\) on the graph is corrupted by Poisson noise, i.e.

\[ f_G \sim \text {Poisson}(u), \]

and the noise on each pixel is independent. Inspired by some recent wavelet frame-based image restoration methodologies (see [8, 14, 18, 22] and the references therein), we propose the following variational model to remove noise:

\[ \min _{u} \ \frac{1}{2}\left\Vert \frac{u-f_G}{\sqrt{u}}\right\Vert _2^2 + \lambda \Vert \mathcal {W}u\Vert _{\ell _1(G)}. \tag{1.3} \]

Here, the first term, which can be rewritten as \(\frac{1}{2}\Vert u-f_G\Vert _{\Sigma ^{-1}}^2\) with \(\Sigma =\text {diag}(u)\), is a reweighted \(\ell _2\) fidelity term. This term was first introduced in [22, 33] for approximating the KL-divergence fidelity. In practice, to guarantee numerical stability, we add a very small positive constant (the fixed background image, see [3, 6, 30]) to u in model (1.3). The second term is a regularization term, where \(\mathcal {W}\) is the tight wavelet frame transform on graphs, \(\Vert \cdot \Vert _{\ell _1 (G)}\) denotes the \(\ell _1\) vector norm on graphs, and \(\lambda \) is a positive parameter that balances the two terms. The detailed derivation of model (1.3) will be given in Sect. 3.

The regularization term is designed based on a priori assumptions on u. One commonly used assumption is the sparsity of the underlying solution in the wavelet frame domain [7, 8, 14, 22]. The effectiveness of wavelet tight frames has been demonstrated in many applications in signal and image processing [8, 14, 18, 22], since they sparsely approximate piecewise smooth functions in an efficient way and provide fast decomposition and reconstruction algorithms. We will show that such a simple system can also be used to effectively restore images defined on graphs from noisy data. The extension of image denoising models on flat domains to graph data denoising is not trivial because of the nonlinear nature of the graphs and the corresponding algorithms [12, 13]. Furthermore, because of the \(\ell _1\)-norm, the regularization term \(\Vert \mathcal {W}u\Vert _{\ell _1(G)}\) gives preference to a solution u whose wavelet coefficient sequence is sparse, and it keeps the important features of image data such as textures and edges while removing spurious information.

The difficulty in solving (1.3) lies in the nonlinear nature of the graph domain and in the need to either approximate or directly solve the weighted least-squares problem, whose weight in the fidelity term involves the unknown u. We take advantage of the weighted least-squares structure and use an efficient sparse regularization scheme, the alternating direction method of multipliers (ADMM) [5, 8, 17], to solve the model.

The rest of this paper is organized as follows: in Sects. 2.1 and 2.2, we give a brief review of spectral graph theory and the wavelet frame transform on graphs. Next, in Sect. 3.1, we propose a wavelet frame-based variational model for removal of Poisson noise of images on graphs. Then, we present an algorithm to solve the model. In Sect. 3.2, we consider the case of removal of mixed Poisson–Gaussian noise of images on graphs. In the last section, we present some numerical experiments and compare with other denoising methods.

2 Background

2.1 Graph Fourier transform

To understand and analyze the data on graphs, we first review the spectral graph theory, especially the graph Laplacian, which is widely used to reveal the geometric properties of the graph. Let \(G:=\{V,E,\omega \}\) be a graph, where \(V:=\{v_k:k=0, \ldots ,K-1\}\) is the set of vertices, \(E\subset V \times V\) is the set of edges, and \(\omega :E\mapsto \mathbb {R}^+\) denotes a weight function. Here, we choose the following commonly used weight function

Let \(A:=(a_{k,k'})\) be the adjacency matrix of G with

Let \(D:=\text {diag}\{d[0],d[1], \ldots ,d[K-1]\}\) be the degree matrix of G, where d[k] is the degree of node \(v_k\) defined by \(d[k]:=\sum _{k'}a_{k,k'}\). Then the (unnormalized) graph Laplacian \(\mathcal {L}\) is defined by

\[ \mathcal {L} := D - A. \]

With the eigenvalue decomposition, we denote the set of pairs of eigenvalues and eigenfunctions of \(\mathcal {L}\) as \(\{(\lambda _k,u_k)\}_{k=0}^{K-1}\) with \(0=\lambda _0<\lambda _1\le \lambda _2\le \cdots \le \lambda _{K-1}\). The eigenfunctions form an orthonormal basis for all functions on the graph:

\[ \langle u_k, u_{k'}\rangle := \sum _{n=0}^{K-1} u_k[n]\, u_{k'}[n] = \delta _{k,k'}. \]

Let \(f_G:V\mapsto \mathbb {R}\) be a function on the graph G. Then its Fourier transform is defined by

\[ \widehat{f_G}[k] := \langle f_G, u_k\rangle = \sum _{n=0}^{K-1} f_G[n]\, u_k[n]. \]
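As an illustration of the graph Laplacian and the graph Fourier transform, the following minimal sketch builds both for a small graph with NumPy. The 4-vertex path graph with unit weights and the helper names `graph_laplacian` and `graph_fourier` are assumptions made for this example only. The Fourier coefficients are taken as the inner products of the signal with the orthonormal eigenvectors of the Laplacian.

```python
import numpy as np

def graph_laplacian(A):
    """Unnormalized graph Laplacian L = D - A for a symmetric weighted adjacency matrix A."""
    d = A.sum(axis=1)                 # degrees d[k] = sum over k' of a_{k,k'}
    return np.diag(d) - A

def graph_fourier(L, f):
    """Return eigenvalues, eigenvectors and the Fourier coefficients <f, u_k> of f."""
    lam, U = np.linalg.eigh(L)        # ascending eigenvalues, orthonormal eigenvectors (columns)
    return lam, U, U.T @ f

# Toy example (assumed): a path graph on 4 vertices with unit edge weights.
A = np.array([[0., 1., 0., 0.],
              [1., 0., 1., 0.],
              [0., 1., 0., 1.],
              [0., 0., 1., 0.]])
L = graph_laplacian(A)
lam, U, f_hat = graph_fourier(L, np.array([1., 2., 3., 4.]))
f_rec = U @ f_hat                     # the inverse transform recovers the signal
```

A full eigendecomposition is only practical for small graphs; it is used here purely to make the definitions concrete.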

2.2 Wavelet frame transform on graphs

Wavelet frames have proved over the past decade to be an exceptionally useful tool for image denoising, inpainting, function and surface reconstruction, etc. (see [7, 8, 13, 14, 22, 34, 35] and many references therein). Much of the power of wavelet methods comes from their ability to represent both smooth and locally bumpy functions efficiently and to provide time and frequency localization. In this subsection, we introduce the wavelet frame transform on graphs. Interested readers should consult [12] for more details.

A countable set \(X \subset L_2(\mathbb R)\) is called a tight frame of \(L_2(\mathbb R)\) if

where \(\langle \cdot , \cdot \rangle \) denotes the inner product of \(L_2(\mathbb R)\). A wavelet system \(X(\Psi )\) is defined to be a collection of dilations and shifts of a finite set \(\Psi :=\{\psi _1, \dots , \psi _r \} \subset L_2(\mathbb R)\),

When \(X(\Psi )\) forms a tight frame, it is called a wavelet tight frame.

To construct wavelet tight frames, one usually starts with a refinable function \(\phi \) with a refinement mask \(a_0\) satisfying

The idea of an MRA-based construction of a wavelet tight frame is to find masks \(a_{\ell }\), which are finite sequences, such that

The sequences \(a_1, \ldots , a_r\) are the high pass filters of the system, and \(a_0\) is the low pass filter.

The Unitary Extension Principle (UEP) of [26, 27] provides a general theory of the construction of tight wavelet frame. Roughly speaking, \(X(\Psi )\) forms a tight frame provided that

where the Fourier series of \(a_{\ell }\) is denoted as

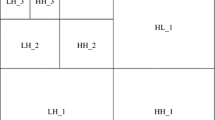

As an application of UEP, a family of wavelet tight frame systems is derived in [26] by using uniform B-splines as the refinable function \(\phi \). The simplest system in this family is the piecewise linear B-spline frame, where \(\phi =B_2=\max (1-|x|, 0)\) with the refinement mask \(a_0=\frac{1}{4}[1, 2, 1]\), and \(a_1=\frac{\sqrt{2}}{4} [1, 0, -1 ]\), \(a_2=\frac{1}{4} [-1, 2, -1]\). Then, the system \(X(\{\psi _1, \psi _2\})\) defined in (2.2) is a tight wavelet frame of \(L_2(\mathbb R)\).
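The tight frame property of these three masks can be checked numerically. The sketch below assumes the standard form of the UEP conditions (the squared moduli of the mask symbols sum to one, and the aliasing sum over a shift by \(\pi\) vanishes) and the Fourier series convention \(\widehat{a}(\xi )=\sum _k a[k]e^{-ik\xi }\) with the masks supported on \(\{-1,0,1\}\); the function name `mask_symbol` is hypothetical.

```python
import numpy as np

# Piecewise linear B-spline masks, supported on k = -1, 0, 1.
masks = [
    np.array([1., 2., 1.]) / 4.0,                  # a_0, low pass
    np.array([1., 0., -1.]) * np.sqrt(2.0) / 4.0,  # a_1, high pass
    np.array([-1., 2., -1.]) / 4.0,                # a_2, high pass
]
support = np.array([-1, 0, 1])

def mask_symbol(a, xi):
    """Fourier series a_hat(xi) = sum_k a[k] * exp(-i k xi) evaluated at each xi."""
    return (a[None, :] * np.exp(-1j * np.outer(xi, support))).sum(axis=1)

xi = np.linspace(-np.pi, np.pi, 201)
symbols = [mask_symbol(a, xi) for a in masks]
shifted = [mask_symbol(a, xi + np.pi) for a in masks]

# First UEP condition: sum of |a_hat_l(xi)|^2 over all masks.
partition = sum(np.abs(s) ** 2 for s in symbols)
# Second UEP condition: sum of a_hat_l(xi) * conj(a_hat_l(xi + pi)).
alias = sum(s * np.conj(t) for s, t in zip(symbols, shifted))
```

On this grid `partition` equals one and `alias` vanishes up to rounding, consistent with the closed forms \(|\widehat{a}_0|^2=\cos ^4(\xi /2)\), \(|\widehat{a}_1|^2=\tfrac{1}{2}\sin ^2\xi \) and \(|\widehat{a}_2|^2=\sin ^4(\xi /2)\).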

For a graph \(G:=\{V,E,\omega \}\), we represent a function \(f_G:V\mapsto \mathbb {R}\) as a K-dimensional vector defined on the vertices. Let \(\{ \lambda _k: k=0, \ldots , K-1 \}\) be the eigenvalues of \(\mathcal {L}\) defined in Sect. 2.1. In the following, we introduce the discrete tight wavelet frame transform of \(f_G\) in the Fourier domain.

Let \(\{a_{\ell }: 0 \le \ell \le r \}\) be the masks of a tight frame system \(X(\Psi )\) and \(\widehat{a}_{\ell }^*\) be the complex conjugate of \(\widehat{a}_{\ell }\). The (undecimated) L-level tight wavelet frame decomposition \(\mathcal {W}\) is defined as

with

Here, the index \(\ell , 0 \le \ell \le r\), denotes the band of the transform, and the index p denotes the level of the transform. The dilation scale N is chosen as the smallest integer such that \(\lambda _{{\max }}:=\lambda _{K-1}\le 2^N \pi \). Note that the scale N is selected such that \(2^{-N}\lambda _k\in [0,\pi ]\) for \(0 \le k \le {K-1}\).

Let \(\varvec{\alpha }:= \mathcal {W}f_G :=\{\alpha _{\ell , p}:0\le \ell \le r,\, 1 \le p \le L\}\) with \(\alpha _{\ell , p} := \mathcal {W}_{\ell , p} f_G\), be the tight wavelet frame coefficients of \(f_G\). We denote the tight wavelet frame reconstruction as \(\mathcal {W}^T\varvec{\alpha }\), which is defined by the following iterative procedure

where \(\alpha _{0,0}:= \mathcal {W}^T\varvec{\alpha }\) is the reconstructed graph data from \(\varvec{\alpha }\). By [12, Theorem 3.1], we have \(\mathcal {W}^T\mathcal {W} = \mathcal {I}\), i.e. \(\mathcal {W}^T\mathcal {W}{f}_{G}={f}_{G}\) for any function \({f}_{G}\) defined on graph G.

In practical computations, it is very expensive to obtain the full set of eigenvectors and eigenvalues of the graph Laplacian of a large graph. One remedy is to approximate the masks by Chebyshev polynomials [24, 32], so that the eigenvalue decomposition of the graph Laplacian is not needed. The masks \(a_{\ell }, 0 \le \ell \le r\), that we use are finitely supported sequences; thus the \(\widehat{a}_{\ell }\) are trigonometric polynomials and can be accurately approximated by low-degree Chebyshev polynomials. The Chebyshev polynomial approximation of the mask \(\widehat{a}_{\ell }(\xi )\), \(\xi \in [0,\pi ]\), can be formulated as

where

and

Note that the graph Laplacian \({\mathcal {L}}\) admits the eigenvalue decomposition \({\mathcal L} =U \Lambda U^T\), where \(\Lambda :=\text {diag}\{\lambda _0, \lambda _1,\ldots ,\lambda _{K-1}\}\) and columns of U are the eigenvectors. Then the wavelet frame transform (2.2) can be rewritten in the matrix form in time domain:

where \({\widehat{\mathbf {a}}}_{\ell }^* (\gamma \Lambda ) := \text {diag} \{ \widehat{a}_{\ell }^*(\gamma \lambda _0), \widehat{a}_{\ell }^*(\gamma \lambda _1), \ldots , \widehat{a}_{\ell }^*(\gamma \lambda _{K-1}) \}\). If we substitute \(\widehat{a}_{\ell }\) by the polynomial \(\mathcal {T}_{\ell }^{n}\), then

and for \(2 \le p \le L\),

Thus, by the recursive definition of Chebyshev polynomials, only matrix-vector multiplications are involved in the computation of (2.3) and (2.4), and we do not need to obtain the full set of eigenvectors and eigenvalues of \(\mathcal L\). Similarly, the wavelet frame reconstruction \(\mathcal {W}^T\) can also be approximately computed.
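The following sketch illustrates how a spectral filter can be applied with matrix-vector products only. It is not the authors' implementation: the function names `cheb_coeffs` and `cheb_apply`, the use of Chebyshev nodes to compute the coefficients, the real low-pass symbol \(\cos ^2(\xi /2)\) and the 6-vertex path graph are all assumptions made for illustration. The filter is approximated on \([0,\pi ]\) by \(n=8\) Chebyshev terms (degree 7), and the result is compared with the exact filter obtained from an eigendecomposition.

```python
import numpy as np

def cheb_coeffs(g, n, num_nodes=64):
    """First n Chebyshev coefficients of g on [0, pi] (polynomial degree n - 1)."""
    theta = np.pi * (np.arange(num_nodes) + 0.5) / num_nodes
    xi = 0.5 * np.pi * (np.cos(theta) + 1.0)   # Chebyshev nodes mapped from [-1, 1] to [0, pi]
    values = g(xi)
    return np.array([2.0 / num_nodes * np.sum(values * np.cos(j * theta))
                     for j in range(n)])

def cheb_apply(L, f, coeffs, gamma):
    """Approximate g(gamma * L) @ f with matrix-vector products only."""
    def shifted(v):
        # Maps the spectrum of gamma * L from [0, pi] to [-1, 1].
        return (2.0 / np.pi) * gamma * (L @ v) - v
    t_prev, t_curr = f, shifted(f)
    out = 0.5 * coeffs[0] * t_prev + coeffs[1] * t_curr
    for c in coeffs[2:]:
        # Three-term recurrence T_j = 2 y T_{j-1} - T_{j-2}.
        t_prev, t_curr = t_curr, 2.0 * shifted(t_curr) - t_prev
        out = out + c * t_curr
    return out

# Toy check on a path graph with 6 vertices and unit weights (assumed example).
K = 6
A = np.diag(np.ones(K - 1), 1) + np.diag(np.ones(K - 1), -1)
L = np.diag(A.sum(axis=1)) - A
lam, U = np.linalg.eigh(L)                      # used only to validate the approximation
N = int(np.ceil(np.log2(lam.max() / np.pi)))    # smallest N with lam_max <= 2^N * pi
gamma = 2.0 ** (-N)

def low_pass(xi):
    return np.cos(xi / 2.0) ** 2                # assumed real low-pass symbol

f = np.arange(K, dtype=float)
approx = cheb_apply(L, f, cheb_coeffs(low_pass, n=8), gamma)
exact = U @ (low_pass(gamma * lam) * (U.T @ f))
max_err = np.max(np.abs(approx - exact))
```

Because the symbol is smooth, the Chebyshev coefficients decay quickly and `max_err` is small for this toy graph. On a large sparse graph, `L @ v` would be a sparse matrix-vector product and the eigendecomposition would be skipped entirely.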

3 Model and algorithm

3.1 Poisson noise removal of images on graphs

For a graph \(G:=\{V,E,\omega \}\), we represent a function \(f_G:V\mapsto \mathbb {R}\) as a K-dimensional vector defined on the vertices. Let \(f_G\) be the observed noisy image on the graph, which is formulated as

\[ f_G = u + \eta , \]

where \(\eta \) is the perturbation noise. We assume that \(f_G\) is corrupted by Poisson noise (see (1.2)), i.e.

\[ f_G \sim \text {Poisson}(u), \]

and the noise on each pixel is independent.

Then, given u, we have the likelihood of observing \(f_G\)

\[ P(f_G\mid u) = \prod _{i}\frac{e^{-u_i}u_i^{f_i}}{f_i!}, \]

where \(u_i\) and \({f}_i\) denote the ith element of u and \(f_G\). By the properties of the Poisson distribution, we obtain the mean and variance of \(f_G\) as follows

\[ \mathbb {E}(f_G\mid u) = u, \qquad \text {Var}(f_G\mid u) = u. \]

Let

\[ \eta := f_G - u \]

be the “additive” random noise of the underlying image u on graph G. In the following, we approximate \(\eta \) by the normal distribution.

Given u, we have

\[ \mathbb {E}(\eta \mid u) = 0, \qquad \text {Var}(\eta \mid u) = u. \]

Then, we approximate \(\eta \) by the additive Gaussian noise \(\mathcal {N}(0,u)\), i.e.,

\[ P(f_G\mid u) \approx \frac{1}{(2\pi )^{K/2}|\Sigma |^{1/2}}\exp \left( -\frac{1}{2}(f_G-u)^{T}\Sigma ^{-1}(f_G-u)\right) , \tag{3.1} \]

where \(\Sigma \) is the covariance matrix. Due to the independence of the noise at each element, we have

\[ \Sigma = \text {diag}(u). \]

We then take the negative \(\log \) of the normal distribution (3.1) and have

\[ -\log P(f_G\mid u) \approx \frac{1}{2}(f_G-u)^{T}\Sigma ^{-1}(f_G-u) + \frac{1}{2}\log |\Sigma | + \frac{K}{2}\log (2\pi ). \]

Thus, retaining the quadratic term of this maximum likelihood estimate, we obtain the following fidelity term

\[ \frac{1}{2}\Vert u-f_G\Vert _{\Sigma ^{-1}}^2. \tag{3.2} \]

Here, the weighted \(\ell _2\) norm of a vector \(\mathbf {x}\in \mathbb {R}^{K}\) is defined as \({\Vert \mathbf {x}\Vert }_{\Sigma ^{-1}}^2:=\mathbf {x}^{T}{\Sigma ^{-1}}\mathbf {x}\). The fidelity term (3.2) was introduced in [22, 33] for Poisson data reconstruction. We extend it here to remove Poisson noise from images on graphs.
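The Gaussian approximation behind this fidelity term can be checked empirically. The sketch below is an illustration under assumed settings (a constant clean intensity of 50, 200,000 samples and a fixed random seed): it standardizes the Poisson residual by \(\sqrt{u}\) and compares the weighted \(\ell _2\) fidelity with the generalized KL-divergence that it approximates. The helper names `weighted_l2_fidelity` and `kl_fidelity` are hypothetical.

```python
import numpy as np

def weighted_l2_fidelity(u, f):
    """0.5 * ||u - f||^2 weighted by Sigma^{-1}, with Sigma = diag(u)."""
    return 0.5 * np.sum((u - f) ** 2 / u)

def kl_fidelity(u, f):
    """Generalized KL divergence sum(f * log(f / u) - f + u), with 0 * log 0 taken as 0."""
    safe_f = np.where(f > 0, f, 1.0)   # f * log(safe_f / u) is 0 wherever f == 0
    return np.sum(f * np.log(safe_f / u) - f + u)

rng = np.random.default_rng(0)          # fixed seed so the experiment is repeatable
u = np.full(200_000, 50.0)              # assumed constant clean intensity
f = rng.poisson(u).astype(float)        # Poisson observation with mean u

z = (f - u) / np.sqrt(u)                # standardized residual, roughly N(0, 1)
wl2 = weighted_l2_fidelity(u, f)
kl = kl_fidelity(u, f)
rel_gap = abs(wl2 - kl) / kl
```

For this intensity level the residual `z` has mean close to 0 and variance close to 1, and the two fidelities agree to within a few percent; the agreement is expected to degrade at very low counts, where the Poisson distribution is far from Gaussian.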

Under the assumption that the underlying solution is sparse in the wavelet frame domain, we propose the following model for removing Poisson noise from images on graphs

\[ \min _{u} \ \frac{1}{2}\left\Vert \frac{u-f_G}{\sqrt{u}}\right\Vert _2^2 + \lambda \Vert \mathcal {W}u\Vert _{\ell _1(G)}. \tag{3.3} \]

Here, the first term is a fitting term based on the noise characteristics and derived from the likelihood function discussed above; the second term is a regularization term, and \(\lambda \) is a parameter that balances the two terms.

Note that the division and square root operators in the fitting term are both elementwise. Since u may vanish, in practice we add a very small positive constant (the fixed background image [3, 6, 30]) to u in model (3.3) to guarantee numerical stability. To handle the unknown weight in the fitting term, following [22], we apply the following iteration to solve the model.

Let \(u_0=f_G\). For \(k=1,2, \ldots \), let

The functional in (3.4) is an \(\ell _1\)-regularized least-squares problem, which can be solved by iterative solvers such as the alternating direction method of multipliers (ADMM) [5, 8, 17] to obtain \(u_{k+1}\), i.e.

Let \(\Sigma _k=\text {diag}(u_k)\). Then, the solution to the first subproblem of (3.5) can be determined by solving the system of equations

which, because of \(\mathcal {W}^T \mathcal {W}=\mathcal I \), can be simplified to

The second subproblem of (3.5) has a closed form solution, and \(d_{k+1}\) is given by the soft-shrinkage operator (see [5, 8, 17])

where each operation is performed componentwise.

Now, combining (3.6) and (3.7), we obtain Algorithm 1 for Poisson noise removal of images on graphs.
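A minimal sketch of such a reweighted ADMM iteration is given below. It is an illustration under stated assumptions, not a reproduction of Algorithm 1: it assumes the split Bregman form of ADMM with one inner step per reweighting, a u-update \(u=(\Sigma _k^{-1}+\mu I)^{-1}(\Sigma _k^{-1}f_G+\mu \mathcal {W}^T(d_k-b_k))\) that relies on \(\mathcal {W}^T\mathcal {W}=\mathcal I\), and a soft-shrinkage threshold of \(\lambda /\mu \) applied to all bands. The tight frame is built from a full eigendecomposition with the assumed real symbols \(\cos ^2(\xi /2)\), \(\sin (\xi )/\sqrt{2}\), \(\sin ^2(\xi /2)\) at a single level, which is only feasible for small graphs. All function names, the ring graph and the test signal are hypothetical.

```python
import numpy as np

def tight_frame_matrix(L):
    """One-level spectral tight frame W (3K x K) from an eigendecomposition of L.

    Assumed real symbols: cos^2(xi/2), sin(xi)/sqrt(2), sin^2(xi/2).
    Their squares sum to 1, so W.T @ W equals the identity.
    """
    lam, U = np.linalg.eigh(L)
    N = int(np.ceil(np.log2(lam.max() / np.pi)))   # smallest N with lam_max <= 2^N * pi
    xi = lam / 2.0 ** N
    symbols = [np.cos(xi / 2) ** 2, np.sin(xi) / np.sqrt(2), np.sin(xi / 2) ** 2]
    return np.vstack([U @ np.diag(g) @ U.T for g in symbols])

def soft(x, t):
    """Componentwise soft-shrinkage with threshold t."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def reweighted_admm(f, W, lam, mu, sigma2=0.0, iters=50, eps=1e-6):
    """Reweighted l2 fidelity plus l1 frame regularization (sketch, assumed update order)."""
    u = f.astype(float).copy()          # u_0 = f_G
    d = W @ u
    b = np.zeros_like(d)
    for _ in range(iters):
        # Diagonal of Sigma_k^{-1}; eps plays the role of the small background constant.
        w = 1.0 / (np.maximum(u, 0.0) + sigma2 + eps)
        u = (w * f + mu * (W.T @ (d - b))) / (w + mu)   # u-update, uses W^T W = I
        Wu = W @ u
        d = soft(Wu + b, lam / mu)                      # d-update
        b = b + Wu - d                                  # multiplier update
    return u

# Toy example (assumed): ring graph with 64 vertices and a smooth positive signal.
K = 64
idx = np.arange(K)
A = np.zeros((K, K))
A[idx, (idx + 1) % K] = 1.0
A[(idx + 1) % K, idx] = 1.0
L = np.diag(A.sum(axis=1)) - A
W = tight_frame_matrix(L)

rng = np.random.default_rng(0)
u_clean = 100.0 + 50.0 * np.sin(2.0 * np.pi * idx / K)
f = rng.poisson(u_clean).astype(float)
u_hat = reweighted_admm(f, W, lam=0.035, mu=0.35)
```

Setting `sigma2` to the Gaussian variance gives the corresponding weights for mixed Poisson–Gaussian noise. With `lam=0` the iteration leaves `f` unchanged, which is a convenient sanity check of the update formulas.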

Fig. 3 Denoising results of the simulated noisy images on graphs corrupted by Poisson noise. From left to right: noise-free images on graphs, noisy images, denoised images. Parameters in the algorithm (Slope: \(\lambda =0.035\), \(\mu =0.35\); Eric: \(\lambda =0.035\), \(\mu =0.7\); Earth: \(\lambda =0.01\), \(\mu =0.1\); flower: \(\lambda =0.035\), \(\mu =0.875\); cup: \(\lambda =0.1\), \(\mu =1\))

3.2 Mixed Poisson–Gaussian noise

Previously, we discussed Poisson noise removal. In real graph data observations, other system-born noise may also be present, leading to mixed Poisson–Gaussian noise. In this case, the observed image \(f_G\) on the graph follows the distribution

\[ f_G \sim \text {Poisson}(u) + \mathcal {N}(0,\sigma ^{2}I), \]

where \(\sigma ^2\) is the variance of the additive Gaussian noise. Similar to the approach in Sect. 3.1, by applying the normal distribution (3.1) with covariance matrix \(\Sigma =\text {diag}(u)+\sigma ^{2}I\), the probability density function of the observed image data \(f_G\) can be approximated again. Then, we have a new weighted \(\ell _2\) fidelity for removing mixed Poisson–Gaussian noise on graphs,

\[ \frac{1}{2}\Vert u-f_G\Vert _{\Sigma ^{-1}}^2 = \frac{1}{2}\left\Vert \frac{u-f_G}{\sqrt{u+\sigma ^2}}\right\Vert _2^2. \]

Combining this with the tight wavelet frame regularization, we propose the following denoising model

\[ \min _{u} \ \frac{1}{2}\left\Vert \frac{u-f_G}{\sqrt{u+\sigma ^2}}\right\Vert _2^2 + \lambda \Vert \mathcal {W}u\Vert _{\ell _1(G)}. \tag{3.8} \]

Compared with model (3.3), here we extend the weighted \(\ell _2\) fidelity term to mixed Poisson–Gaussian noise by a small modification of the Poisson noise version. Thus, the algorithm for solving (3.8) is similar to Algorithm 1, except that \(\sigma ^2\) is added when estimating and updating the covariance matrix.

4 Numerical results and discussions

In the previous section, we discussed the model and algorithm for removing Poisson and mixed Poisson–Gaussian noise from images on graphs. In this section, we provide numerical experiments to test the performance of our method and compare it with existing methods, namely the classical Laplacian smoothing method [4], the KL-divergence model [33] and the method of Gong et al. [18]. Here, the KL-divergence model [33] and the model of Gong et al. [18] are, respectively, formulated as

and

In the first three experiments, the image functions defined on a graph are generated by mapping three images, ‘Slope’, ‘Eric’ and ‘Earth’, onto the unit sphere (see Fig. 1). Here, a unit sphere with 16,728 sampled vertices is selected as the graph. In the fourth and fifth experiments, two graphs named as ‘flower’ and ‘cup’ with 7919 and 10,840 sampled vertices are considered. The ‘Slope’ image function is mapped onto the graphs to test the performance of our approach (see Fig. 2). The two graphs are borrowed from the public 3D model database: http://3dmdb.com/.

In the computation of graph Laplacian, we choose parameter \(\rho = 10\) in the weight function (2.1). For wavelet frame transform on graphs, we use the piecewise linear B-spline tight wavelet frame [26],

and we use the Chebyshev polynomials of degree 7 to approximate the masks, i.e., \(n=8\) in (2.3). For simplicity, we fix the level of wavelet frame transformation to 1, since using higher decomposition levels only slightly improves the denoising quality while the computation cost is noticeably increased.

Algorithms 1 and 2 are implemented in MATLAB and run on a laptop with an Intel Core i3-2310M (2.10 GHz) CPU and 6.0 GB RAM. The parameters in our algorithm are tuned to get the best visual outcome for the simulated noisy images on the graph. The denoised results \(\overline{u}\) can be evaluated quantitatively by the mean squared error (MSE), the signal-to-noise ratio (SNR) and the normalized cross-correlation (NCC), which are defined by

where u denotes the noise-free image on the graph, and K is the number of vertices.
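For readers who wish to compute these three measures, the sketch below uses commonly adopted definitions, which are assumptions here and may differ from the exact formulas used in the experiments (for example in whether the mean is subtracted): MSE as the average squared error over the K vertices, SNR in decibels as \(10\log _{10}(\Vert u\Vert ^2/\Vert u-\overline{u}\Vert ^2)\), and NCC as the cosine of the angle between u and \(\overline{u}\).

```python
import numpy as np

def mse(u, u_bar):
    """Mean squared error over the K vertices."""
    return np.mean((u - u_bar) ** 2)

def snr_db(u, u_bar):
    """Signal-to-noise ratio in dB (assumed form, no mean subtraction)."""
    return 10.0 * np.log10(np.sum(u ** 2) / np.sum((u - u_bar) ** 2))

def ncc(u, u_bar):
    """Normalized cross-correlation (assumed form: cosine similarity)."""
    return np.dot(u, u_bar) / (np.linalg.norm(u) * np.linalg.norm(u_bar))

# Small worked example with a hypothetical clean signal and estimate.
u = np.array([1.0, 2.0, 3.0, 4.0])
u_bar = u + np.array([0.1, -0.1, 0.1, -0.1])
scores = (mse(u, u_bar), snr_db(u, u_bar), ncc(u, u_bar))
```

Under these definitions a lower MSE, a higher SNR and an NCC closer to 1 all indicate a better restoration.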

Fig. 5 Denoising results of the noisy images on graphs corrupted by mixed Poisson–Gaussian noise. From left to right: noise-free images on graphs, noisy images on graphs, denoised images on graphs. Parameters in the algorithm (Slope: \(\lambda =0.035\), \(\mu =0.35\); Eric: \(\lambda =0.035\), \(\mu =0.7\); Earth: \(\lambda =0.01\), \(\mu =0.1\); flower: \(\lambda =0.035\), \(\mu =0.875\); cup: \(\lambda =0.1\), \(\mu =1\))

4.1 Poisson noise removal

In this subsection, we test the performance of our approach for Poisson noise removal from images on graphs. Here, the clean image u is rescaled to the intensity range from 0 to 1200, and then Poisson noise is added in MATLAB using the function ‘poissrnd’. The denoised results are shown in Fig. 3. The denoising error, number of iterations and computational time for each image are given in Table 1.

Then we compare the results of our approach with other denoising methods. In Fig. 4, we visually show the difference between our method and the classical Laplacian smoothing method [4], the KL-divergence model [33] and the method of Gong et al. [18]. It can be seen that our results preserve the local features better. Furthermore, we quantitatively compare these methods by MSE, SNR and NCC; see Tables 2, 3 and 4 for the results.

4.2 Mixed Poisson–Gaussian noise removal

In this subsection, we test the performance of mixed Poisson–Gaussian noise removal from images on graphs. Here, Poisson noise is first added to the original image as in Sect. 4.1. Then, Gaussian noise with distribution \(\mathcal {N}(0, \sigma ^2)\) is added to the image. We choose \(\sigma =6\). The denoised results are shown in Fig. 5, and the denoising error, number of iterations and computational time for each image are given in Table 5.
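The noise simulation described above can be sketched in NumPy as follows, as a stand-in for the MATLAB workflow. The helper names `rescale` and `add_mixed_noise` are hypothetical, and the linear rescaling to the range from 0 to 1200 is an assumption about how the rescaling is performed. Setting `sigma=0` gives pure Poisson noise.

```python
import numpy as np

def rescale(u, peak=1200.0):
    """Linearly rescale u to the intensity range [0, peak] (u must not be constant)."""
    u = np.asarray(u, dtype=float)
    return peak * (u - u.min()) / (u.max() - u.min())

def add_mixed_noise(u, sigma=6.0, rng=None):
    """Poisson noise with mean u, followed by additive Gaussian noise N(0, sigma^2)."""
    rng = np.random.default_rng() if rng is None else rng
    poisson_part = rng.poisson(u).astype(float)
    return poisson_part + rng.normal(0.0, sigma, size=np.shape(u))

rng = np.random.default_rng(0)                      # fixed seed for repeatability
u = rescale(np.linspace(-1.0, 1.0, 1000))           # hypothetical clean signal on 1000 vertices
f_mixed = add_mixed_noise(u, sigma=6.0, rng=rng)

# Variance check at a constant intensity: Var = u + sigma^2 = 600 + 36.
flat = np.full(100_000, 600.0)
empirical_var = add_mixed_noise(flat, sigma=6.0, rng=rng).var()
```

The variance check reflects the covariance \(\text {diag}(u)+\sigma ^{2}I\) used in the mixed-noise fidelity term.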

Finally, we compare the results of our approach with the Laplacian smoothing method [4], the KL-divergence model [33] and the method of Gong et al. [18], both visually and quantitatively. Figure 6 shows that our results better preserve most textures and edges on graphs. Furthermore, we compare these methods by MSE, SNR and NCC; see Tables 6, 7 and 8 for the results.

5 Conclusion

In this paper, we considered Poisson and mixed Poisson–Gaussian noise removal from images on graphs. Based on the statistical characteristics of the noise, we approximated the probability density function of the observed images by normal distributions. Then, a fidelity term was derived from the likelihood function. In addition, under the assumption that the underlying image is sparse in the wavelet frame domain, we proposed a variational model to denoise images on graphs. We then applied the ADMM iterative algorithm to solve the model. Finally, we presented numerical experiments to verify the practicability and effectiveness of our approach, and compared it with the classical Laplacian smoothing method [4], the KL-divergence model [33] and the method of Gong et al. [18]. The results on several image denoising tasks indicate the effectiveness of our method.

References

1. Belkin, M., Niyogi, P.: Towards a theoretical foundation for Laplacian-based manifold methods. In: Proceedings of the 18th Annual Conference on Learning Theory (COLT), pp. 486–500. Springer (2005)

2. Benninghoff, H., Garcke, H.: Segmentation and restoration of images on surfaces by parametric active contours with topology changes. J. Math. Imaging Vis. 55(1), 105–124 (2016)

3. Bertero, M., Boccacci, P., Talenti, G., Zanella, R., Zanni, L.: A discrepancy principle for Poisson data. Inverse Probl. 26(10), 105004–105023 (2010)

4. Botsch, M., Kobbelt, L., Pauly, M., Alliez, P., Levy, B.: Polygon Mesh Processing. AK Peters, Natick (2010)

5. Boyd, S., Parikh, N., Chu, E., et al.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

6. Sawatzky, A., Brune, C., Kösters, T., Wübbeling, F., Burger, M.: EM-TV methods for inverse problems with Poisson noise. In: Level Set and PDE Based Reconstruction Methods in Imaging, vol. 2090, pp. 71–142. Springer, Cham (2013)

7. Cai, J.-F., Dong, B., Osher, S., Shen, Z.: Image restoration: total variation, wavelet frames, and beyond. J. Am. Math. Soc. 25(4), 1033–1089 (2012)

8. Cai, J.-F., Osher, S., Shen, Z.: Split Bregman methods and frame based image restoration. Multiscale Model. Simul. SIAM Interdiscip. J. 8(2), 337–369 (2009)

9. Chan, R.H., Chen, K.: Multilevel algorithm for a Poisson noise removal model with total variation regularization. Int. J. Comput. Math. 84(8), 1183–1198 (2007)

10. Coifman, R.R., Lafon, S.: Diffusion maps. Appl. Comput. Harmon. Anal. 21(1), 5–30 (2006)

11. Csiszár, I.: Why least squares and maximum entropy? An axiomatic approach to inference for linear inverse problems. Ann. Stat. 19(4), 2032–2066 (1991)

12. Dong, B.: Sparse representation on graphs by tight wavelet frames and applications. Appl. Comput. Harmon. Anal. 42(3), 452–479 (2017)

13. Dong, B., Jiang, Q.T., Liu, C.Q., Shen, Z.: Multiscale representation of surfaces by tight wavelet frames with applications to denoising. Appl. Comput. Harmon. Anal. 41(2), 561–589 (2016)

14. Dong, B., Shen, Z.: MRA-based wavelet frames and applications. In: Zhao, H. (ed.) IAS Lecture Notes Series, Summer Program on The Mathematics of Image Processing. Park City Mathematics Institute, Salt Lake City (2010)

15. El Ouafdi, A.F., Ziou, D.: Global diffusion method for smoothing triangular mesh. Vis. Comput. 31(3), 295–310 (2015)

16. Giné, E., Koltchinskii, V.: Empirical graph Laplacian approximation of Laplace–Beltrami operators: large sample results. IMS Lect. Notes Monogr. Ser. 51, 238–259 (2006)

17. Goldstein, T., Osher, S.: The split Bregman algorithm for L1-regularized problems. SIAM J. Imaging Sci. 2(2), 323–343 (2009)

18. Gong, Z., Shen, Z., Toh, K.: Image restoration with mixed or unknown noises. Multiscale Model. Simul. 12(2), 458–487 (2014)

19. Hein, M., Audibert, J.-Y., Von Luxburg, U.: From graphs to manifolds-weak and strong pointwise consistency of graph Laplacians. In: Proceedings of the 18th Annual Conference on Learning Theory, pp. 470–485. Springer (2005)

20. Jain, P., Tyagi, V.: An adaptive edge-preserving image denoising technique using tetrolet transforms. Vis. Comput. 31(5), 657–674 (2015)

21. Le, T., Chartrand, R., Asaki, T.J.: A variational approach to reconstructing images corrupted by Poisson noise. J. Math. Imaging Vis. 27(3), 257–263 (2007)

22. Li, J., Shen, Z., Yin, R., Zhang, X.: A reweighted \(\ell ^2\) method for image restoration with Poisson and mixed Poisson–Gaussian noise. Inverse Probl. Imaging 9(3), 875–894 (2015)

23. Luisier, F., Blu, T., Unser, M.: Image denoising in mixed Poisson–Gaussian noise. IEEE Trans. Image Process. 20(3), 696–708 (2011)

24. Mason, J.C., Handscomb, D.C.: Chebyshev Polynomials. CRC Press, Boca Raton (2002)

25. Niyobuhungiro, J., Setterqvist, E.: A new reiterative algorithm for the Rudin–Osher–Fatemi denoising model on the graph. In: Proceedings of the 2nd International Conference on Intelligent Systems and Image Processing 2014, pp. 81–88 (2014)

26. Ron, A., Shen, Z.: Affine systems in \(L_2(\mathbb{R}^d)\): the analysis of the analysis operator. J. Funct. Anal. 148(2), 408–447 (1997)

27. Ron, A., Shen, Z.: Compactly supported tight affine spline frames in \(L_2(\mathbb{R}^d)\). Math. Comput. 67(221), 191–207 (1998)

28. Rudin, L., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D 60(1–4), 259–268 (1992)

29. Rusu, R.B., Marton, Z.C., Blodow, N., Dolha, M., Beetz, M.: Towards 3D point cloud based object maps for household environments. Robot. Auton. Syst. 56(11), 927–941 (2008)

30. Shepp, L.A., Vardi, Y.: Maximum likelihood reconstruction in positron emission tomography. IEEE Trans. Med. Imaging 1(2), 113–122 (1982)

31. Singer, A.: From graph to manifold Laplacian: the convergence rate. Appl. Comput. Harmon. Anal. 21(1), 128–134 (2006)

32. Shuman, D.I., Vandergheynst, P., Frossard, P.: Chebyshev polynomial approximation for distributed signal processing. In: International Conference on Distributed Computing in Sensor Systems and Workshops, pp. 1–8 (2011)

33. Staglianò, A., Boccacci, P., Bertero, M.: Analysis of an approximate model for Poisson data reconstruction and a related discrepancy principle. Inverse Probl. 27(12), 125003 (2011)

34. Yang, J., Stahl, D., Shen, Z.: An analysis of wavelet frame based scattered data reconstruction. Appl. Comput. Harmon. Anal. 42(3), 480–507 (2017)

35. Yang, J., Wang, C.: A wavelet frame approach for removal of mixed Gaussian and impulse noise on surfaces. Inverse Probl. Imaging 11(5), 1 (2017). doi:10.3934/ipi.2017037

36. Zhang, B., Fadili, J.M., Starck, J.-L.: Wavelets, ridgelets, and curvelets for Poisson noise removal. IEEE Trans. Image Process. 17(7), 1093–1108 (2008)

37. Zhang, H., Wu, C., Zhang, J., Deng, J.: Variational mesh denoising using total variation and piecewise constant function space. IEEE Trans. Vis. Comput. Graph. 21(7), 873–886 (2015)

38. Zosso, D., Osting, B., Osher, S.: A Dirichlet energy criterion for graph-based image segmentation. In: IEEE 15th International Conference on Data Mining Workshops, pp. 821–830 (2015)

Acknowledgements

The authors are grateful to the referees for their valuable comments and suggestions that led to the improvement of this paper. The work of Cong Wang was partially supported by the Fundamental Research Funds for the Central Universities 2015B38014; the work of Jianbin Yang was partially supported by the research grant \(\#11101120\) from NSFC and the Fundamental Research Funds for the Central Universities 2015B19514, China.


Wang, C., Yang, J. Poisson noise removal of images on graphs using tight wavelet frames. Vis Comput 34, 1357–1369 (2018). https://doi.org/10.1007/s00371-017-1418-1

DOI: https://doi.org/10.1007/s00371-017-1418-1