Abstract

Harris Hawks optimization (HHO) is a recently introduced meta-heuristic approach that simulates the cooperative behavior of Harris’ hawks in nature. In this paper, an improved variant of HHO, called HHSC, is proposed to relieve the main shortcomings of the conventional method, namely its inconsistent convergence speed (either too fast or too slow) and its tendency to become trapped in local optima when dealing with complex problems. Two search strategies are added to the conventional HHO. First, the sine function is used to improve the convergence speed of the HHO algorithm. Second, the cosine function is used to enhance the exploration and exploitation searches during the early and later stages, respectively. The two incorporated search strategies significantly enhance the convergence behavior and the search ability of the original algorithm. The performance of the proposed HHSC method is comprehensively investigated and analyzed using (1) twenty-three classical benchmark functions, comprising unimodal, multimodal, and fixed-dimension multimodal functions, (2) ten IEEE CEC2019 benchmark functions, and (3) five common engineering design problems. The experimental results show that the search strategies of HHO and its convergence behavior are significantly improved. The proposed HHSC achieved promising results and was more effective than other well-known optimization methods.

1 Introduction

Optimization problems have gained attention in the field of practical engineering and scientific research [1, 2]. In general, optimization problems aim to maximize benefits from outcomes and minimize costs and numerical fitting errors. In real-world environments, these problems often have constraints that must be satisfied. They arise, for example, in decision-making [3], image segmentation, feature selection, clustering, classification, and various engineering applications [4,5,6].

There are two types of optimization problems, local and global optimization; each has its own aims [1, 7]. Local optimization aims to determine the minimum/maximum value in a specific region. Global optimization, on the other hand, seeks to determine the minimum/maximum value over the whole given domain. Accordingly, global optimization problems are more challenging than local optimization problems, and they face several limitations.

To handle these limitations of the global optimization problems [8,9,10], several techniques have been developed; however, the meta-heuristic techniques (MHTs) provide more suitable solutions. Those MHTs can be categorized into swarm optimization algorithms, evolutionary optimization algorithms, natural phenomena, and human inspiration algorithms. The first category (i.e., swarm optimization) simulates communication between swarms in nature [11, 12]. Some examples are particle swarm optimization (PSO) [13], salp swarm algorithm (SSA) [14], grey wolf optimization (GWO) [15], and others [16,17,18]. The second category is inspired by concepts of natural genetics that apply crossover, mutation, and natural selection. These methods include genetic algorithm (GA) [19, 20], differential evolution (DE) [21], and evolutionary programming [22]. The third group emulates natural phenomena such as the light, the spiral, the rain, and the wind. The following algorithms are an example of natural phenomena: water cycle algorithm (WCA) [23], wind-driven optimization (WDO) [24], spiral optimization (SO) [25], simulated annealing [26], flow regime algorithm (FRA) [27], and chemical reaction optimization (CRO) [28]. Additionally, the fourth group simulates the behavior of humans such as teaching learning-based optimization (TLBO) [29], seeker optimization algorithm (SOA) [30], elephant herding optimization (EHO) [31], volleyball premier league algorithm (VPL) [32], and reptile search algorithm (RSA) [33].

Among these categories, swarm techniques attract the most attention since they are both simple and efficient. In this study, we consider improving the performance of a recently developed swarm technique named Harris Hawks optimization (HHO) [34]. It is a swarm-based technique that simulates the cooperation hawks demonstrate to capture prey. Harris hawks use different strategies to perform this task, so the HHO formulates these behaviors as a set of operators that are used during either the exploration or the exploitation of the search space. Using these mathematical operators, HHO has established its performance in a set of applications such as image de-noising [35], the productivity of active solar [36], multilevel thresholding segmentation [37], forecasting slope stability [38], drug design and discovery [39], soil compression [40], friction stir welding [41], and other applications [42,43,44].

Moreover, the performance of HHO has been improved by using several approaches that aim to enhance either the exploration or exploitation ability of HHO [45]. For example, the global searchability of HHO is improved and applied to determine the optimal reconfiguration for the photovoltaic array [46]. In [47], the chaotic maps and opposition-based learning are used to enhance HHO and applied to find solutions for global optimization problems. The operators of the flower pollination algorithm have been applied to enhance the performance of HHO as in [48]; to assess the quality of this modification it has been used to determine the parameters of photovoltaic systems. In addition, SCA has been combined with HHO and used to find a solution for different engineering problems [49]. In [50], a competition between SSA and HHO is used to develop a modified version of HHO; this model is applied to solve global optimization and to determine the optimal multi-level thresholding to segment the images.

Based on these applications, HHO has advantages that support its ability to discover solutions in the search space. However, HHO still needs improvement to overcome its limitations, such as its exploration being stronger than its exploitation. This motivated us to provide an alternative modification based on the sine cosine algorithm (SCA) [51], which is used to enhance the exploration of HHO. SCA is used in this study since it has been applied to several applications, for example, forecasting oil consumption [52], optimal power flow [53], engineering design [54], machine scheduling [55], power usage security [56], and others [57,58,59,60].

1.1 Contributions and implications

The main contributions of the current study are summarized as follows:

- An alternative global optimization technique is proposed based on an improved variant of the HHO algorithm.
- The HHO algorithm is enhanced using gradual change strategies (i.e., sine and cosine functions), where sine is applied to improve the convergence rate and cosine is applied to balance exploration and exploitation during the search process.
- The developed method’s performance is evaluated using a set of different optimization and engineering problems, including various benchmark functions and various real-world industrial engineering design problems.
- The quality of the developed method is assessed and compared with several MH techniques and state-of-the-art methods.

The remainder of this paper is organized as follows. Section 2 introduces the information of the HHO, SCA, and the developed method. In Sect. 3, we describe experiments and present discussion of the obtained results. Finally, the conclusion and future work are given in Sect. 4.

2 The proposed method

This section consists of three main parts: the first presents the conventional Harris Hawks optimization (HHO) [34], the second presents the conventional sine cosine algorithm (SCA) [51], and the third presents the proposed Harris Hawks optimizer augmented by the gradual changes of the sine cosine algorithm, which is called HHSC.

2.1 Conventional Harris Hawks optimization (HHO)

In this section, the conventional Harris Hawks optimization (HHO) is presented with its main search strategies (i.e., exploration and exploitation). It simulates the prey searching, surprise pounce, and various attacking techniques of Harris hawks in real life. Figure 1 presents all search procedures of the conventional HHO, which are explained below.

Fig. 1 Search phases of the basic HHO [61]

2.2 Exploration procedures

In the HHO method, the initial candidate solutions are generated randomly, as in other optimization methods. During the optimization process, the best solution obtained so far is considered the target prey, that is, the best approximation of the optimal solution so far. The HHO method examines various regions of the search space randomly to discover the optimal solution by adopting two search procedures. Suppose that each search procedure has an equal chance of being selected, controlled by a random value q: the new solution is generated by the first procedure if \(q < 0.5\), or by searching around a random location if \(q \ge 0.5\). In both cases, HHO searches for the next candidate solution according to the positions of the current solutions, as given in Eq. (1):

where \(X(t+1)\) is the newly generated solution for the next iteration, \(X_\mathrm{rabbit}(t)\) is the location of the current prey, \(X(t)\) is the current solution at the \(t\)th iteration, \(r_1\), \(r_2\), \(r_3\), \(r_4\), and q are random values between 0 and 1, LB and UB are the lower and upper bounds, \(X_\mathrm{rand}(t)\) is a randomly selected solution from the current candidates, and \(X_m\) is the average position of the candidate solutions, a vector determined using Eq. (2):

where N is the total number of candidate solutions, and \(X_i(t)\) is the location of the \(i\)th solution at the \(t\)th iteration.
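The exploration step described above can be sketched in a few lines of Python. This is a minimal illustration that assumes the update rule takes the form published for the original HHO [34], with scalar bounds shared by all dimensions; the function names `mean_position` and `explore` and the final clipping to the bounds are choices made for this sketch, not part of the text.

```python
import random


def mean_position(population):
    """Eq. (2): component-wise average X_m of all candidate solutions."""
    n = len(population)
    dim = len(population[0])
    return [sum(x[d] for x in population) / n for d in range(dim)]


def explore(x, population, x_rabbit, lb, ub, rng=random):
    """One exploration move for the solution x, following the form of Eq. (1) in [34]."""
    q = rng.random()
    r1, r2, r3, r4 = (rng.random() for _ in range(4))
    if q >= 0.5:
        # Search around a randomly selected solution X_rand:
        # X(t+1) = X_rand - r1 * |X_rand - 2 * r2 * X(t)|
        x_rand = rng.choice(population)
        new = [xr - r1 * abs(xr - 2.0 * r2 * xi) for xr, xi in zip(x_rand, x)]
    else:
        # Search relative to the prey and the mean position X_m:
        # X(t+1) = (X_rabbit - X_m) - r3 * (LB + r4 * (UB - LB))
        x_m = mean_position(population)
        new = [(p - m) - r3 * (lb + r4 * (ub - lb)) for p, m in zip(x_rabbit, x_m)]
    # Assumption of this sketch: out-of-range values are clipped to [lb, ub].
    return [min(max(v, lb), ub) for v in new]
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible.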

2.3 The transition between the HHO’s search procedures

In the conventional HHO method, two search procedures are employed: the first is the exploration search, and the second is the exploitation search. The escaping energy of the prey is used to switch between them. The energy of the prey decreases steadily during its escape. To model this behavior, the energy of the prey is given in Eq. (3):

where E indicates the escaping energy of the target solution (prey), t indicates the current iteration, T indicates the maximum number of iterations, and \(E_0\) indicates the initial energy. The value of \(E_0\) changes between \(-1\) and 1 throughout the iterations. When the rabbit is flagging, \(E_0\) decreases from 0 to \(-1\); conversely, when the rabbit is strengthening, \(E_0\) increases from 0 to 1. When \(|E| \ge 1\), other areas are searched to locate the prey, so the exploration phase is performed; when \(|E| < 1\), the algorithm exploits the neighborhood of the solutions through the exploitation steps. In brief, exploration happens when \(|E| \ge 1\), and exploitation happens when \(|E| < 1\). The time-dependent behavior of E is shown in Fig. 2.
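As a small illustration of the escaping energy, the sketch below assumes the form used in the original HHO [34], \(E = 2E_0(1 - t/T)\), with \(E_0\) drawn uniformly from \((-1, 1)\) at every call. The function name `escaping_energy` is hypothetical.

```python
import random


def escaping_energy(t, T, rng=random):
    """Escaping energy E = 2 * E0 * (1 - t / T), the form assumed for Eq. (3)."""
    e0 = 2.0 * rng.random() - 1.0  # initial energy E0 in (-1, 1)
    return 2.0 * e0 * (1.0 - t / T)
```

Under this form, \(|E|\) can reach values of 1 or more only during the first half of the run (\(t < T/2\)), so exploration is confined to the early iterations and the search is purely exploitative afterwards.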

2.4 Exploitation procedures

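In this phase, four different procedures are used to model the attacking steps. They are selected according to the escaping energy E and a random value r between 0 and 1, which represents the chance of the prey escaping (the prey escapes successfully if \(r < 0.5\) and fails if \(r \ge 0.5\)). In this respect, the soft besiege occurs if \(|E| \ge 0.5\), and the hard besiege happens if \(|E| < 0.5\).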

2.4.1 Soft besiege

If \(r \ge 0.5\) and \(|E| \ge 0.5\), the prey still has enough energy and tries to escape by several random leaps, but eventually it fails. During these attempts, the hawks encircle it softly to exhaust the prey further and then perform the soft attack. The following rules model this behavior:

where \(\Delta X(t)\) indicates the difference between the position of the prey and the current position at the \(t\)th iteration, \(r_5\) is a random value between 0 and 1, and \(J = 2(1 - r_5)\) indicates the random leap strength of the prey during the escape. The value of J changes stochastically to mimic the movements of the prey.

2.4.2 Hard besiege

When \(r \ge 0.5\) and \(|E| < 0.5\), the prey is exhausted and becomes an easy target; the hawks then gradually surround the prey to perform the hard pounce. The new positions are updated using Eq. (6).
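The two besiege rules without dives can be sketched as follows. The sketch assumes the forms given for the original HHO [34]: for the soft besiege, \(X(t+1) = \Delta X(t) - E\,|J X_\mathrm{rabbit}(t) - X(t)|\) with \(\Delta X(t) = X_\mathrm{rabbit}(t) - X(t)\), and for the hard besiege, \(X(t+1) = X_\mathrm{rabbit}(t) - E\,|\Delta X(t)|\). The function names are hypothetical.

```python
def soft_besiege(x, x_rabbit, E, r5):
    """Soft besiege (r >= 0.5, |E| >= 0.5), assumed form of Eqs. (4) and (5)."""
    J = 2.0 * (1.0 - r5)  # random leap strength of the prey
    return [(p - xi) - E * abs(J * p - xi) for p, xi in zip(x_rabbit, x)]


def hard_besiege(x, x_rabbit, E):
    """Hard besiege (r >= 0.5, |E| < 0.5), assumed form of Eq. (6)."""
    return [p - E * abs(p - xi) for p, xi in zip(x_rabbit, x)]
```

For example, with `x = [1.0, 1.0]`, `x_rabbit = [2.0, 0.0]`, `E = 0.5` and `r5 = 0.5` (so `J = 1`), `soft_besiege` returns `[0.5, -1.5]`.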

2.4.3 Soft besiege with progressive rapid dives

When \(|E| \ge 0.5\) and \(r < 0.5\), the rabbit has sufficient energy to avoid the attack; however, a soft besiege is still formed in advance of the soft attack. This procedure is more capable than the previous one. To perform a soft besiege, suppose that the hawks can evaluate their next move using Eq. (7):

Next, they analyze the potential outcome of such a move to decide whether the dive will be worthwhile. If it is not (that is, if they see that the prey is making more deceptive moves), they begin to make irregular and fast dives while approaching the prey. Here, suppose that they dive according to Levy flight (LF)-based patterns using Eq. (8):

where D is the dimension of the problem, S is a random vector of size 1 \(\times\) D, and LF is the Levy flight function determined by Eq. (9):

where u and \(\upsilon\) are random numbers between 0 and 1, and \(\beta\) is a constant set to 1.5. The final rule for updating the positions of the candidate solutions in the soft besiege stage is given by Eq. (11):

where Y and Z are determined using Eqs. (7) and (8), respectively.
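The soft besiege with progressive rapid dives can be sketched as below. The sketch assumes the Levy flight form used for the original HHO [34], \(LF = 0.01 \cdot u\,\sigma / |\upsilon|^{1/\beta}\) with \(\sigma = \left( \Gamma(1+\beta)\sin(\pi\beta/2) \,/\, \left( \Gamma((1+\beta)/2)\,\beta\,2^{(\beta-1)/2} \right) \right)^{1/\beta}\), and a greedy choice among the current solution, Y, and Z. Following the text, u and \(\upsilon\) are drawn uniformly between 0 and 1; some implementations draw them from a normal distribution instead. All function names are hypothetical, and minimization is assumed.

```python
import math
import random

BETA = 1.5  # constant beta, as stated in the text


def levy_sigma(beta=BETA):
    """Scale factor sigma of the Levy flight (assumed form of Eq. (10))."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)


def levy_flight(dim, rng=random, beta=BETA):
    """Levy flight step for each of the dim dimensions (assumed form of Eq. (9))."""
    sigma = levy_sigma(beta)
    steps = []
    for _ in range(dim):
        u = rng.random()
        v = rng.random() or 1e-12  # guard against division by zero
        steps.append(0.01 * u * sigma / abs(v) ** (1.0 / beta))
    return steps


def soft_besiege_with_dives(x, x_rabbit, E, J, fitness, rng=random):
    """Soft besiege with rapid dives (r < 0.5, |E| >= 0.5), minimization assumed."""
    # Eq. (7): tentative move Y towards the prey.
    y = [p - E * abs(J * p - xi) for p, xi in zip(x_rabbit, x)]
    # Eq. (8): Levy-flight dive Z = Y + S * LF(D), with S a random vector.
    z = [yi + rng.random() * step for yi, step in zip(y, levy_flight(len(x), rng))]
    # Eq. (11): accept Y or Z only if it improves on the current solution.
    fx = fitness(x)
    if fitness(y) < fx:
        return y
    if fitness(z) < fx:
        return z
    return x  # assumption: keep the current position if neither dive improves it
```

For \(\beta = 1.5\), `levy_sigma()` evaluates to approximately 0.6966.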

2.4.4 Hard besiege with progressive rapid dives

When \(|E| < 0.5\) and \(r < 0.5\), the prey does not have enough energy to escape, and a hard besiege is formed before the surprise attack to find and destroy the target. The following equation presents the hard besiege condition:

where Y and Z are determined using Eqs. (13) and (14).

After that, the pseudo code of the conventional HHO is shown in Algorithm 1.
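The branching among the search procedures described in the preceding subsections can be summarized in a short sketch. It uses only the thresholds stated in the text (\(|E| \ge 1\) for exploration, and the combinations of r and \(|E|\) around 0.5 for the four attacking strategies); the function name `hho_phase` is hypothetical.

```python
def hho_phase(E, r):
    """Return the name of the HHO search procedure selected by E and r."""
    if abs(E) >= 1.0:
        return "exploration"
    if r >= 0.5:
        return "soft besiege" if abs(E) >= 0.5 else "hard besiege"
    if abs(E) >= 0.5:
        return "soft besiege with progressive rapid dives"
    return "hard besiege with progressive rapid dives"
```

For instance, `hho_phase(0.7, 0.2)` selects the soft besiege with progressive rapid dives.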

2.5 Conventional sine cosine algorithm (SCA)

Generally, population-based search algorithms start the optimization process with a set of random initial solutions (the population). These solutions are evaluated iteratively by the objective function and improved by a set of optimization operators that form the core of the optimizer.

The sine cosine algorithm (SCA) is a population-based optimization algorithm introduced in 2016 for solving optimization problems [51]. The SCA generates several random initial solutions and moves them towards the best solution using a mathematical model based on sine and cosine functions. In SCA, the position-updating equations for both phases are given in Eqs. (15) and (16):

where \(X_{i}^{t}\) denotes the position of the current solution in the \(i\)th dimension at the \(t\)th iteration, \(r_{1}\), \(r_{2}\), and \(r_{3}\) are three random numbers, \(P_{i}\) denotes the position of the destination point in the \(i\)th dimension, and \(|\cdot|\) denotes the absolute value. These two equations are combined as follows:

where \(r_{4}\) is a random number in [0,1]. Because of the use of the sine and cosine functions in this equation, the algorithm is named SCA.

Figure 3 shows how this equation reduces the range of the sine and cosine terms over the iterations. It may be concluded from Fig. 3 that the SCA explores the search space while the values of the sine and cosine terms are in (1,2] and [\(-2,-1\)), and exploits the search space while they are in [\(-1,1\)].
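The combined SCA update can be sketched as follows. The sketch assumes the parameter choices of the original SCA description [51]: \(r_1 = a - t\,a/T\) decreases linearly over the iterations, \(r_2\) is drawn from \([0, 2\pi]\), \(r_3\) from \([0, 2]\), and \(r_4\) from \([0, 1]\). The function name `sca_update` and the default `a = 2.0` are choices made for this illustration.

```python
import math
import random


def sca_update(x, dest, t, T, a=2.0, rng=random):
    """One SCA position update of x towards or around the destination point dest."""
    r1 = a - t * (a / T)  # amplitude, shrinking linearly from a to 0
    new = []
    for xi, pi in zip(x, dest):
        r2 = 2.0 * math.pi * rng.random()  # angle of the sine/cosine term
        r3 = 2.0 * rng.random()            # random weight of the destination
        r4 = rng.random()                  # switches between sine and cosine
        trig = math.sin(r2) if r4 < 0.5 else math.cos(r2)
        new.append(xi + r1 * trig * abs(r3 * pi - xi))
    return new
```

Because \(r_1\) reaches zero at the last iteration, the step size vanishes at \(t = T\), and the position is left unchanged.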

Fig. 3 Decreasing pattern for the range of sine and cosine (\(a = 3\)) [51]

The pseudo code of the conventional SCA is shown in Algorithm 2. The SCA begins the optimization procedure with a set of random initial solutions. The algorithm then stores the best solution achieved so far, specifies it as the destination point (target), and updates the other solutions with respect to it. The ranges of the sine and cosine functions are updated to emphasize exploitation of the search space as the iteration number increases. The SCA stops when the iteration number reaches the maximum number of iterations.

2.6 The proposed Harris Hawks-based sine cosine algorithm (HHSC)

The conventional HHO seeks the optimal solution through its main exploration procedures, followed by its exploitation procedures. Once the main search procedure (exploration) has become trapped in a local optimum, the exploitation procedures are also expected to fall into that local optimum, because their updates rely on the exploration procedures. Moreover, when addressing high-dimensional and complicated problems, the solution efficiency and convergence speed of HHO deteriorate. Further, the solutions keep changing their positions without any perturbation. Therefore, the search capability of the conventional HHO requires further improvement. To overcome these weaknesses, this paper introduces an augmented version of HHO, called HHSC, which combines the search operators of the HHO and SCA. The pseudo code of the proposed HHSC method is presented in Algorithm 3.

In the proposed HHSC (see Algorithm 3), the positions of the solutions are generated from a uniform distribution, and the current best solution is determined. The best positions are used to generate the positions of the solutions in the next iteration.

The next phase is based on the condition in line 8: if the value of E is smaller than or equal to 1 and the rand value is less than the given ratio (0.9), the updating process is executed using the exploration of the HHO. When this condition is met, after applying the exploration search procedure in line 9, four exploitation procedures of the HHO are available based on four conditions: (1) if r \(\ge\) 0.5 and |E| \(\ge\) 0.5, the soft besiege strategy is executed; (2) if r \(\ge\) 0.5 and |E| < 0.5, the hard besiege strategy is executed; (3) if r < 0.5 and |E| \(\ge\) 0.5, the soft besiege with rapid dives strategy is executed; (4) if r < 0.5 and |E| < 0.5, the hard besiege with rapid dives strategy is executed. Otherwise, if the condition in line 8 is not met, the updating process is executed in line 20 using the operators of the SCA (i.e., if rand is less than 0.5, the exploration procedure is executed; otherwise, the exploitation procedure is executed).

Note that the time complexity of the proposed HHSC depends on three elements: the population size (N), the dimension (Dim), and the maximum number of iterations (T). The computational complexity is as follows: O(initialization) = O(N \(\times\) Dim), and O(optimization process) = O(N \(\times\) Dim \(\times\) T \(\times\) log N). Therefore, the total computational complexity of the HHSC is O(HHSC) = O(N \(\times\) Dim \(\times\) T \(\times\) log N) + O(N \(\times\) Dim).

3 Experiments and results

In this section, fair experiments are performed to measure the performance of the introduced method (HHSC) in terms of four evaluation measures that are standard in the community: worst fitness value, average fitness value, best fitness value, and standard deviation (STD). In the first round, twenty-three classical benchmark functions and the CEC2019 benchmark functions are used to evaluate the performance of HHSC against various well-known optimization methods across different dimension sizes and levels of complexity. In the second round, the proposed HHSC is verified using five constrained real-world engineering problems.
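The four evaluation measures can be computed from the final fitness values of repeated independent runs, as in the sketch below. It assumes minimization (so the worst value is the largest) and uses the sample standard deviation; the text does not state which STD variant is used, and the function name `summarize_runs` is hypothetical.

```python
import statistics


def summarize_runs(final_fitness):
    """Worst, average, best, and STD of the final fitness over independent runs."""
    return {
        "worst": max(final_fitness),  # minimization: largest value is worst
        "average": statistics.mean(final_fitness),
        "best": min(final_fitness),
        "std": statistics.stdev(final_fitness),  # sample standard deviation
    }
```

For three runs ending at 1.0, 2.0, and 3.0, this gives a worst value of 3.0, an average of 2.0, a best value of 1.0, and an STD of 1.0.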

The compared optimization methods include Harris Hawks optimizer (HHO) [34], grasshopper optimization algorithm (GOA) [62], salp swarm algorithm (SSA) [14], whale optimization algorithm (WOA) [63], sine cosine algorithm (SCA) [51], dragonfly algorithm (DA) [16], grey wolf optimizer (GWO) [15], particle swarm optimization (PSO) [64], ant lion optimizer (ALO) [65], marine predators algorithm (MPA) [66], and equilibrium optimizer (EO) [67]. To achieve fairness, the comparative methods are run under the same test conditions. All experiments in this paper are executed using MATLAB R2015b under Windows 10 on a machine with a Core i7 CPU and 16 GB RAM. The population size (N) and the maximum number of iterations (T) of all tested methods are set to 50 and 1000, respectively. The important parameter values of the methods used are presented in Table 1.

The Friedman ranking test [68] is employed to decide whether there are significant differences between the proposed HHSC and the other comparative methods. In the results tables, “Summation” is the total of the ranks obtained by a method, for which the smallest value is the best. “Mean rank” is the mean rank obtained by a method over all the test cases, for which the smallest value is the best. “Final ranking” is the final position of a method over all the test cases relative to all other comparative methods, for which the smallest value is the best.
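The three rank statistics reported in the results tables can be computed as in the following sketch. It assumes that lower scores are better, that tied methods share the average of their ranks within a test case, and that ties in the final ranking are broken by input order. It computes only the rank summaries, not the Friedman test statistic or its p-value. The function names and the data layout are hypothetical.

```python
def average_ranks(values):
    """Rank values in ascending order (1 = smallest); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2.0 + 1.0  # average of positions i..j, 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def friedman_summary(results):
    """results maps each method name to its list of scores, one per test case."""
    methods = list(results)
    n_cases = len(results[methods[0]])
    summation = {m: 0.0 for m in methods}
    for c in range(n_cases):
        ranks = average_ranks([results[m][c] for m in methods])
        for m, rank in zip(methods, ranks):
            summation[m] += rank
    mean_rank = {m: summation[m] / n_cases for m in methods}
    ordered = sorted(methods, key=lambda m: mean_rank[m])
    final_ranking = {m: pos + 1 for pos, m in enumerate(ordered)}
    return summation, mean_rank, final_ranking
```

With the hypothetical scores `{"A": [0.0, 1.0, 0.5], "B": [1.0, 2.0, 0.5], "C": [2.0, 3.0, 4.0]}`, the summations are 3.5, 5.5, and 9.0, and the final ranking is A, B, C.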

3.1 Experiment 1: benchmark functions

3.1.1 Details of the classical benchmark functions

In this part, a set of twenty-three benchmark functions is used, divided into three categories: seven unimodal functions (i.e., F1–F7), six multimodal functions (i.e., F8–F13), and ten fixed-dimension multimodal functions (i.e., F14–F23). These classical benchmark functions are commonly used in the optimization literature. The unimodal functions are used to verify the exploitation ability of the proposed method; since there are no local optima in this category (i.e., F1–F7), accuracy and convergence speed are the main test criteria. In contrast, the other two categories (i.e., multimodal functions F8–F13 and fixed-dimension multimodal functions F14–F23) have many local optima and are used to test the exploration ability of the proposed method. The mathematical equations and characteristics of all the classical benchmark functions are listed in Table 2 [69].

3.1.2 Qualitative metrics for the classical functions

As shown in the first column of Fig. 4, the given benchmark functions mimic the difficulties of real search spaces by providing a large number of local optima and different shapes in several regions of the search space. Any optimization method should balance exploration and exploitation to determine the global optimum. Hence, exploration and exploitation can be evaluated together using this set of benchmark functions.

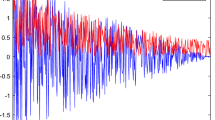

The convergence of the proposed HHSC method is investigated in this part. To prove the convergence of the HHSC, three main metrics are used including the best values of the first dimension, average fitness values, and convergence speed. The experiments are conducted on several benchmark functions (i.e., F1, F2, F3, F9, F11, F12, F16, F17, and F18) with some variables and using 5 solutions over 200 iterations. The results are presented in Fig. 4.

The first indicator (i.e., second column) is a qualitative metric that shows the variations in the first dimension of the first solution throughout the optimization process. This indicator helps us to recognize whether the first solution makes sharp or abrupt movements in the initial repetitions and gradual shifts in the last repetitions. As stated in [70], such behavior can confirm that a population-based method converges to a location and searches locally in the given search area. It is clear that the changes are reduced gradually over the repetitions, a behavior that supports the transition from exploration to exploitation. Finally, the solution evolves gradually, which corresponds to the exploitation search.

The second indicator (i.e., third column) is a qualitative metric that shows the average fitness value of all solutions over the course of repetitions. If an optimization method improves its candidate solutions, the average fitness value should improve over the given repetitions. As the average fitness trajectories in Fig. 4 show, the proposed HHSC method gives lower fitness values on all tested functions. Another point worth mentioning is the accelerated reduction in the average fitness trajectories, which indicates that the improvement of the current solutions becomes faster and more reliable over the course of repetitions.

The third indicator (i.e., fourth column) is a qualitative metric that shows the convergence rate of the best solution over the course of repetitions. The fitness values of the best solution in each repetition are collected and plotted as the convergence curve in Fig. 4. The decrease of the fitness values over the given repetitions demonstrates the convergence of the proposed HHSC method. The change in the convergence rate can also be observed in the convergence curve, for the reason explained above. Moreover, the proposed method reached the best results quickly in several functions, such as F1, F2, F3, F9, and F11.

3.1.3 Effects of the number of candidate solutions

To begin the evaluation, we investigate the effect of the number of candidate solutions (N) on the performance of the proposed HHSC method. As shown in Fig. 5, several numbers of solutions (i.e., \(N= 5, 10, 15, 20, 25, 30, 35, 40, 45, 50\)) are studied over a course of 500 repetitions. The results in Fig. 5 show that the proposed HHSC method maintains its advantages for the different population sizes, which indicates that it is an efficient method that is only slightly affected by the number of solutions used. The proposed HHSC method is particularly stable when the population changes in functions such as F2, F3, F5, F6, F10, F12, F16, F17, F18, F19, F21, and F22. The best number of solutions was 50 in most of the benchmark functions (i.e., F9, F10, F11, F13, F15, F16, F17, F18, F21, etc.). However, there is little variation among the results obtained with the different numbers of solutions on all the tested classical benchmark functions.

3.1.4 Performance evaluation using classical benchmark functions

In this part, the effectiveness of the proposed HHSC method is investigated using twenty-three benchmark functions.

According to the results in Tables 3 and 4, the average values of HHSC are the smallest. HHSC also obtained the optimal value (0.0) in the average and STD measures more often than the other methods, reaching the best solution in several problems, including F1, F2, F3, F4, F9, and F11. As can be seen, the performance of HHSC on a subset of the benchmark functions, including F1, F2, F3, F4, F5, F6, F7, F15, and F23, is better than that of all other well-known comparative methods (i.e., HHO, GOA, SSA, WOA, SCA, DA, GWO, PSO, ALO, MPA, and EO). This indicates that the gradual changes strategy of the sine cosine algorithm significantly enhanced the exploitation search capability of the proposed HHSC compared with the other optimization methods. The solutions of the proposed HHSC method for unimodal, multimodal, and fixed-dimension multimodal functions are almost always the best among all tested optimization methods. In other cases, the solutions obtained by the proposed HHSC in F12, F13, F14, F18, F21, F22, and F23 are also competitive with those of the other methods.

According to the Friedman ranking test in Table 3, HHSC ranked as the best method (first ranking) in finding the optimum solution for fixed-dimension multimodal benchmark functions, followed by HHO, MPA, EO, GWO, WOA, PSO, SSA, SCA, ALO, DA, and GOA. The summation of the proposed HHSC in that table shows that it outperforms all comparative methods by obtaining the smallest summation as well as the smallest mean rank. According to the Friedman ranking test in Table 4, HHSC also ranked as the best method (first ranking) in finding the optimum solution for unimodal and multimodal functions, followed by MPA, GWO, PSO, SSA, ALO, HHO, EO, DA, WOA, SCA, and GOA (ranked second to twelfth, respectively). The results in that table show that the proposed HHSC outperforms all comparative methods by obtaining the smallest summation and the smallest mean rank. These outcomes demonstrate the ability of the proposed HHSC method to solve the classical benchmark functions.

3.1.5 Scalability test using thirteen classical benchmark functions

In this part, the scalability of the proposed HHSC method is investigated using thirteen benchmark functions with different dimension (Dim) sizes (i.e., 50, 100, and 500).

To evaluate the capability of the proposed HHSC for solving the benchmark functions with higher dimensions, the scalability analysis for HHSC is conducted by extending the dimensional size of the given problems. The statistical results of all comparative methods using the Friedman ranking test for classical problems at Dim = 50, 100, and 500 are given in Tables 5, 6 and 7, respectively, including average, mean, and STD values for each problem. The summation, mean rank, and final ranking of the Friedman ranking test for the tested optimization methods are also demonstrated. As noted in Table 5, the proposed method achieved the best solutions in most of the given cases, including F1, F2, F3, F4, F9, F10, and F11. Moreover, the proposed HHSC method obtained the lowest value of the Friedman ranking test (i.e., the first ranking) compared to other well-known optimization methods at Dim = 50. Clearly, the results proved the efficacy of the proposed HHSC method.

When the dimension size is 100 (see Table 6), the proposed method achieved the best solutions in most of the given cases, including F1, F2, F3, F4, F9, F10, and F11. This reflects the ability of the HHSC to solve high-dimensional problems. According to the results of the Friedman ranking test, the proposed HHSC obtained the best results and is ranked first, followed by HHO, MPA, EO, WOA, PSO, SCA, DA, ALO, SSA, and GOA. HHSC also obtained the smallest summation and mean rank values compared to the other methods. We noticed that the ability of the proposed method (HHSC) to solve higher-dimensional problems is stable and that its scalability is excellent. To confirm this observation, a higher dimension size of 500 is further investigated, as shown in Table 7. In this experiment, the performance of the proposed HHSC is still better than that of almost all the comparative methods. It obtained the best solutions for several of the given problems (i.e., F1, F2, F3, F4, F9, F10, and F11) although the complexity of the problems is higher. According to the statistical test, the proposed HHSC ranked first, followed by HHO, MPA, WOA, EO, GWO, PSO, DA, SCA, ALO, SSA, and GOA. We concluded that the proposed HHSC method has distinct advantages in addressing problems with higher dimensions.

3.1.6 Convergence curves using classical benchmark functions

In this section, the convergence curves of the tested methods are given in Fig. 6 to demonstrate the ability of the proposed HHSC method and to show its behavior while solving the problems. To address the local optima problem, the exploitation ability of the conventional HHO has been boosted by proposing a new hybrid of HHO and SCA. Hence, the convergence speed can be increased, as illustrated in Fig. 6; the convergence curves of F1, F2, F3, F4, F5, F6, F7, F8, F9, F10, F11, F14, F21, F22, and F23 support this observation. The proposed HHSC method improves the exploitation ability of HHO through the gradual changes of SCA applied to the current solutions, and it evidently improves the convergence efficiency of HHO. The incorporated strategy makes the proposed HHSC method efficient by balancing the exploration and exploitation trends. As shown in Fig. 6, HHSC achieved a better convergence rate than the original HHO in all cases, suggesting that, by applying the HHO and SCA search strategies together, the convergence performance of the conventional HHO can be enhanced considerably, as in F1, F2, F3, F4, F5, etc. Moreover, with the fastest convergence, HHSC outperformed all other comparative optimization methods in handling these problems. It can be concluded that the proposed HHSC is not only powerful and effective in achieving the best results, but also converges faster than the other optimization methods, which shows that it has a high potential to be applied to practical optimization problems.

3.2 CEC2019 benchmark functions

In this part, a set of complex and recent benchmark functions, called CEC2019, is used to investigate the performance of the proposed HHSC method in solving advanced problems. Table 8 shows the characteristics of the ten CEC2019 benchmark functions, including the number of functions (No.), mathematical presentation, best value, dimension size, and the given search space.

Table 9 shows the results of the comparative methods on the tested functions. The proposed HHSC method is compared with other well-known optimization methods published in the literature, including HHO, GOA, SSA, WOA, SCA, DA, GWO, PSO, ALO, MPA, and EO. The methods are evaluated in terms of the worst, mean, and best fitness values, and the standard deviation (STD) of the obtained results is also given. As shown in Table 9, the proposed method obtained the best results in three cases (i.e., F6, F8, and F10). In comparison with the other methods, HHSC obtained better results in almost all cases. According to the Friedman test, the proposed method obtained the smallest rank summation and mean rank and is therefore ranked first, followed by ALO, MPA, PSO, SSA, EO, HHO, GOA, GWO, WOA, SCA, and finally DA. We conclude that the proposed method (HHSC) is also efficient in solving complex problems. The modification improves the ability of the conventional HHO to find better solutions for these problems.

The convergence speed of the tested methods is plotted in Fig. 7 and compared through each algorithm's convergence curve. The sub-figures show the mean fitness values of the tested methods over the course of the iterations. The horizontal axis gives the number of iterations, and the vertical axis gives the fitness function values. The sub-figures show clearly that the proposed HHSC method converges faster than the other optimization methods. One of the main features of HHSC is that it controls the diversity of the solutions. The gradual change strategy of SCA helps HHSC to avoid high heterogeneity or high disruption of the search solutions during the optimization process. This strategy can produce a more satisfying balance between the search strategies in the last phase. Such a strategy is beneficial when the search area is constrained, where unnecessary exploration would slow the convergence of HHSC without improving the quality of the solutions. Moreover, HHSC inherits the core advantages of both HHO and SCA. A further benefit is that HHSC obtained higher-quality solutions than the conventional HHO and SCA.
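A mean convergence curve of this kind can be computed as in the following minimal sketch. It assumes that each independent run records one fitness value per iteration and that the plotted quantity is the best-so-far fitness averaged over runs; both the function names and this exact averaging scheme are assumptions rather than details given in the paper.

```python
def best_so_far(history):
    """Turn per-iteration fitness values into a non-increasing best-so-far curve (minimization)."""
    curve = []
    best = float("inf")
    for value in history:
        best = min(best, value)
        curve.append(best)
    return curve


def mean_convergence(runs):
    """Average the best-so-far curves of several runs, iteration by iteration.

    runs is a list of equally long fitness histories, one per independent run.
    """
    curves = [best_so_far(run) for run in runs]
    n_runs = len(curves)
    n_iters = len(curves[0])
    return [sum(curve[t] for curve in curves) / n_runs for t in range(n_iters)]
```

Plotting the returned list against the iteration index, usually with a logarithmic vertical axis, gives one curve per method.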

3.3 Experiment 2: engineering design problems

In this section, five engineering design problems are used to further confirm the effectiveness of the proposed HHSC method, which are listed below.

- Tension/compression spring design problem.
- Pressure vessel design problem.
- Welded beam design problem.
- Three-bar truss design problem.
- Speed reducer design problem.

These problems are widely recognized and have been used to assess the ability of proposed methods to solve complex real-world engineering problems [71, 72]. The proposed HHSC method is compared with other well-known optimization methods published in the literature. Note that, for HHSC, the number of solutions (population size) is fixed to 50, and the total number of iterations is fixed to 1000.

In this paper, general and bound-constrained optimization problems are used to investigate the effectiveness of the proposed HHSC method. For the bound-constrained optimization problems [73], each design variable must usually satisfy a boundary limitation:

where \(LB_j\) and \(UB_j\) are the lower bound and upper bound of the position \(x_{ij}\), and n is the number of given positions. Furthermore, a general constrained problem can usually be given as:

where m is the number of inequality constraints, and l is the number of equality constraints.
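The two displayed formulas referred to above are not reproduced in this text. A standard form that is consistent with the surrounding definitions is sketched below; the symbols \(f\), \(g_j\), and \(h_k\) are assumed names for the objective, the inequality constraints, and the equality constraints, and the exact layout in the original equations may differ.

```latex
% Bound constraints on each design variable (presumably Eq. (18))
LB_j \le x_{ij} \le UB_j, \qquad j = 1, 2, \ldots, n

% General constrained problem (presumably Eq. (19))
\min_{x} \; f(x) \quad \text{s.t.} \quad
g_j(x) \le 0, \; j = 1, \ldots, m; \qquad
h_k(x) = 0, \; k = 1, \ldots, l
```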

In the performance evaluation of the proposed HHSC method, all the constrained problems of the form in Eq. (19) are converted to the bound-constrained form by using a static penalty (cost) function. For any infeasible solution, a penalty term is added to the target objective function. Owing to its practical advantages, the static penalty function is adopted: it only needs an auxiliary penalty function and is suitable for all kinds of problems [74, 75]. With this procedure, the above-mentioned constrained optimization problem can be written as in Eq. (20).

where \(Pe_j\) and \(Pe_k\) are penalty (cost) coefficients, which are usually assigned a large value, and \(\varepsilon\) is the tolerance on the equality constraints, which is set to \(10^{-6}\) in this paper.
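A static penalty of this kind can be sketched as follows. The sketch assumes the common form \(F(x) = f(x) + \sum_j Pe_j \max(g_j(x), 0) + \sum_k Pe_k \max(|h_k(x)| - \varepsilon, 0)\) with one shared coefficient per constraint type; the function name, the default coefficient of \(10^6\), and the callable-based interface are illustrative assumptions, not the authors' implementation.

```python
def penalized_objective(f, inequalities, equalities, x,
                        pe_ineq=1e6, pe_eq=1e6, eps=1e-6):
    """Static-penalty objective for minimization.

    f            -- objective, called as f(x)
    inequalities -- callables g with the convention g(x) <= 0 when feasible
    equalities   -- callables h with the convention h(x) == 0 when feasible
    pe_ineq, pe_eq -- large penalty coefficients (assumed values)
    eps          -- tolerance on the equality constraints (1e-6 in the paper)
    """
    value = f(x)
    for g in inequalities:
        # Only the violated part of an inequality constraint is penalized.
        value += pe_ineq * max(g(x), 0.0)
    for h in equalities:
        # An equality constraint counts as satisfied within +/- eps.
        value += pe_eq * max(abs(h(x)) - eps, 0.0)
    return value
```

For a feasible solution the function returns the plain objective value, so the optimizer only needs to handle the bound constraints directly.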

3.3.1 Tension/compression spring design problem

The tension/compression spring design problem aims to minimize the total weight of a given spring [76]. Three variables are investigated in this problem: wire diameter (d), mean coil diameter (D), and the number of active coils (N), as given in Fig. 8. The result of the proposed HHSC is compared with other well-known methods, including PSO [77], HS [78], MPM [79], GSA [80], ES [81], MFO [18], BA [82], WOA [63], MVO [83], RO [84], and HHO [34].

Table 10 presents the results of the proposed HHSC and the other well-known comparative methods on this application, together with the obtained constraint values and the optimal decision variables of the best solution for each method. It is evident from Table 10 that the proposed HHSC is superior to the other well-known optimization methods: it produced better results, with the optimal variables at \(x^* = (d = 0.0565354231, D = 0.5470311127, N = 4.4803180173)\) and the best value of the objective function \(F(x^*) = 0.0113305147\). Figure 9 shows the curves of the objective values, the trajectory of the first solution, and the convergence curve of the proposed HHSC when solving the tension/compression spring design problem. According to the convergence curve in Fig. 9, HHSC reached the optimal solution very fast, before iteration 50. The curves of the decision variables and the trajectory of the first solution are provided to show the ability of HHSC to explore the search space.
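The objective and constraints of this benchmark are not written out in this section. The sketch below uses the formulation commonly found in the literature for the tension/compression spring problem, which is assumed (not confirmed by the text) to be the one used here; the function names are hypothetical. Evaluating the objective at the reported solution gives a value that agrees with the reported \(F(x^*)\).

```python
def spring_weight(d, D, N):
    """Spring weight to be minimized: (N + 2) * D * d^2 (commonly used formulation)."""
    return (N + 2.0) * D * d ** 2


def spring_constraints(d, D, N):
    """Constraint values g_i; a design is feasible when every g_i <= 0 (assumed standard form)."""
    g1 = 1.0 - (D ** 3 * N) / (71785.0 * d ** 4)               # minimum deflection
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)                      # shear stress
    g3 = 1.0 - 140.45 * d / (D ** 2 * N)                        # surge frequency
    g4 = (d + D) / 1.5 - 1.0                                    # outer diameter limit
    return [g1, g2, g3, g4]


# Solution reported for HHSC in the text.
reported = (0.0565354231, 0.5470311127, 4.4803180173)
print(spring_weight(*reported))
```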

3.3.2 Pressure vessel design problem

The pressure vessel design problem aims to minimize the total cost of a cylindrical pressure vessel [85]. Four variables are studied in this problem: the width of the shell (\(T_s\)), the width of the head (\(T_h\)), the internal radius (R), and the height of the cylindrical part excluding the head (L), as shown in Fig. 10. The result of the proposed HHSC is compared with other well-known methods, including Branch-bound [86], OBSCA [87], HS [88], GA [89], CSCA [90], CPSO [77], PSO-SCA [91], MVO [83], HPSO [92], WOA [63], ES [81], GSA [80], and ACO [93].

Table 11 presents the results of the proposed HHSC method and the other well-known comparative methods (Branch-bound, OBSCA, HS, GA, CSCA, PSO-SCA, MVO, HPSO, WOA, ES, GSA, and ACO) on this application, together with the obtained constraint values and the optimal decision variables of the best solution for each method. It is evident from Table 11 that the proposed HHSC outperformed almost all the well-known optimization methods, giving the optimal variables at \(x^* = (T_s = 0.8156485, T_h = 0.426025476, R = 42.09198565, L = 176.7402563141)\) with the best value of the objective function \(F(x^*) = 6046.632127\). Figure 11 shows the curves of the decision values, the trajectory of the first solution, and the convergence curve of the proposed HHSC when solving the pressure vessel design problem. HHSC achieved the optimal solution at the beginning of the optimization process, and the diversity of the solutions is stable, as shown in Fig. 11. Moreover, we conclude that the proposed HHSC method obtained the most fitting parameters and the minimum objective function value.
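As a check on the reported solution, the sketch below evaluates the cost function commonly used in the literature for the pressure vessel problem, which is assumed to be the formulation used here; the function names are hypothetical. The radius is taken as R = 42.09198565, the value for which this formula agrees with the reported objective of about 6046.63.

```python
import math


def vessel_cost(ts, th, r, l):
    """Total cost (material, forming, welding) in the commonly used formulation."""
    return (0.6224 * ts * r * l + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * l + 19.84 * ts ** 2 * r)


def vessel_constraints(ts, th, r, l):
    """Constraint values g_i; feasible when every g_i <= 0 (assumed standard form)."""
    g1 = -ts + 0.0193 * r                      # shell thickness requirement
    g2 = -th + 0.00954 * r                     # head thickness requirement
    g3 = (-math.pi * r ** 2 * l
          - (4.0 / 3.0) * math.pi * r ** 3 + 1296000.0)  # minimum volume
    g4 = l - 240.0                             # maximum length
    return [g1, g2, g3, g4]


# Solution reported for HHSC, with R read as 42.09198565 (see the note above).
print(vessel_cost(0.8156485, 0.426025476, 42.09198565, 176.7402563141))
```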

3.3.3 Welded beam design problem

The welded beam design problem aims to minimize the total cost of a welded beam. Four variables are investigated in this problem: the width of weld (h), the height of the clamped bar (l), the length of the bar (t), and the width of the bar (b), as shown in Fig. 12. The result of the proposed HHSC is compared with other well-known methods, including GA [94], ABC [95], SIMPLEX [95], APPROX [95], CPSO [77], WOA [63], DAVID [95], CSCA [90], GSA [80], HS [78], MVO [83], OBSCA [87], and RO [84].

Table 12 presents the results of the proposed HHSC and the other well-known comparative methods (GA, ABC, SIMPLEX, APPROX, CPSO, WOA, DAVID, CSCA, GSA, HS, MVO, OBSCA, and RO) on this application, together with the obtained constraint values and the optimal decision variables of the best solution for each method. It is evident from Table 12 that the proposed HHSC obtained promising results compared to the other well-known optimization methods, providing the optimal variables at \(x^* = (h = 0.16157750428, l = 3.71707468861, t = 8.95429260793, b = 0.2095302433)\) with the best value of the objective function \(F(x^*) = 1.7064136\). Figure 13 shows the curves of the decision values, the trajectory of the first solution, and the convergence curve of the proposed HHSC while solving the welded beam design problem. HHSC finds the optimal solution in the first search steps, and the diversity of the solutions is clearly observed, as shown in Fig. 13. Moreover, we conclude that the proposed HHSC method obtained promising results by finding fitting parameters and the best objective function value.
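The cost function of the welded beam benchmark is not written out in this section. The sketch below uses the objective commonly found in the literature, which is assumed to be the one used here; the constraints (shear stress, bending stress, buckling load, and end deflection) are omitted for brevity, and the function name is hypothetical. At the reported solution it gives a value that agrees with the reported \(F(x^*)\).

```python
def welded_beam_cost(h, l, t, b):
    """Fabrication cost in the commonly used formulation:
    1.10471 * h^2 * l  (weld material) + 0.04811 * t * b * (14 + l)  (bar material).
    """
    return 1.10471 * h ** 2 * l + 0.04811 * t * b * (14.0 + l)


# Solution reported for HHSC in the text.
print(welded_beam_cost(0.16157750428, 3.71707468861, 8.95429260793, 0.2095302433))
```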

3.3.4 Three-bar truss design problem

The three-bar truss design problem aims to minimize the total weight of a three-bar truss [71]. Two variables are analyzed in this problem: the cross-sectional area of member 1 (\(A_1\)) and the cross-sectional area of member 2 (\(A_2\)), as shown in Fig. 14. The result of the proposed HHSC is compared with other well-known methods, including CS [96], DEDS [97], PSO-DE [91], Tsai [98], SSA [14], Ray and Saini [99], PHSSA [69], and MBA [100].

Table 13 presents the results of the proposed HHSC method and the other well-known comparative methods (CS, DEDS, PSO-DE, Tsai, SSA, Ray and Saini, PHSSA, and MBA) on this application, together with the obtained constraint values and the optimal decision variables of the best solution for each method. It is evident from Table 13 that the proposed HHSC obtained better or comparable results compared to the other well-known optimization methods, providing the optimal variables at \(x^* = (A_1 = 0.7964229, A_2 = 0.385674)\) with the best value of the objective function \(F(x^*) = 263.82983\). Figure 15 shows the curves of the decision values, the trajectory of the first solution, and the convergence curve of the proposed HHSC while solving the three-bar truss design problem. HHSC obtained comparable results and reached the optimal solution very fast according to the given convergence curve. The diversity of the solutions is also visible.
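The reported objective value can be checked with the formulation commonly used in the literature for the three-bar truss, which is assumed to be the one used here: bar length 100 cm, load P = 2 kN/cm², and allowable stress σ = 2 kN/cm². The function names and default arguments are hypothetical.

```python
import math


def truss_weight(a1, a2, length=100.0):
    """Truss weight (volume) to be minimized: (2*sqrt(2)*A1 + A2) * length."""
    return (2.0 * math.sqrt(2.0) * a1 + a2) * length


def truss_constraints(a1, a2, load=2.0, sigma=2.0):
    """Stress constraints g_i; feasible when every g_i <= 0 (assumed standard form)."""
    root2 = math.sqrt(2.0)
    denom = root2 * a1 ** 2 + 2.0 * a1 * a2
    g1 = (root2 * a1 + a2) / denom * load - sigma
    g2 = a2 / denom * load - sigma
    g3 = 1.0 / (root2 * a2 + a1) * load - sigma
    return [g1, g2, g3]


# Solution reported for HHSC in the text.
print(truss_weight(0.7964229, 0.385674))
```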

3.3.5 Speed reducer design problem

The speed reducer design optimization problem is presented to reduce the total weight of a speed reducer [101]. Seven variables are considered in this problem: the face diameter (b), module of teeth (m), number of teeth on pinion (z), the distance of shaft 1 between bearings (\(l_1\)), the distance of shaft 2 between bearings (\(l_2\)), the width of shaft 1 (\(d_1\)), and width of shaft 2 (\(d_2\)), as presented in Fig. 16. The result of the proposed HHSC is compared with other well-known methods, including FA [102], MFO [18], LGSI4 [103], WSA [103], LGSI2 [103], APSO [104], PSO-DE [91], CS [96], GWO [15], SCA [51], and AAO [105].

Table 14 presents the results of the proposed HHSC method and the other well-known comparative methods (FA, MFO, LGSI4, WSA, LGSI2, AAO, APSO, PSO-DE, CS, GWO, and SCA) on this application, together with the obtained constraint values and the optimal decision variables of the best solution for each method. It is evident from Table 14 that the proposed HHSC obtained better results in some cases and comparable results in other cases compared to the well-known optimization methods, providing the optimal variables at \(x^* = (x_1 = 3.50379, x_2 = 0.7, x_3 = 17.0, x_4 = 7.3, x_5 = 7.7294014, x_6 = 3.356511, x_7 = 5.28668965)\) with the best value of the objective function \(F(x^*) = 2997.89844\). Figure 17 shows the curves of the decision values, the trajectory of the first solution, and the convergence curve of the proposed HHSC while solving the speed reducer design problem. HHSC produced results equivalent to those of the other well-known methods. According to the convergence curve in Fig. 17, it reached the optimal solution very fast, at iteration 100.
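The weight function of the speed reducer benchmark is not written out in this section. The sketch below uses the objective commonly found in the literature, which is assumed to be the one used here; the eleven constraints of the standard formulation are omitted for brevity, and the function name is hypothetical. At the reported solution it gives a value that agrees with the reported \(F(x^*)\).

```python
def speed_reducer_weight(x1, x2, x3, x4, x5, x6, x7):
    """Speed reducer weight in the commonly used formulation.

    x1: face width, x2: module of teeth, x3: number of pinion teeth,
    x4, x5: shaft lengths between bearings, x6, x7: shaft diameters.
    """
    return (0.7854 * x1 * x2 ** 2 * (3.3333 * x3 ** 2 + 14.9334 * x3 - 43.0934)
            - 1.508 * x1 * (x6 ** 2 + x7 ** 2)
            + 7.4777 * (x6 ** 3 + x7 ** 3)
            + 0.7854 * (x4 * x6 ** 2 + x5 * x7 ** 2))


# Solution reported for HHSC in the text.
reported = (3.50379, 0.7, 17.0, 7.3, 7.7294014, 3.356511, 5.28668965)
print(speed_reducer_weight(*reported))
```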

4 Conclusion and future work

In this paper, a new variant of Harris Hawks optimization (HHO), namely, improved Harris Hawks optimization by gradual change strategies, is proposed, called HHSC. In the proposed HHSC method, two efficient search strategies of the Sine Cosine Algorithm (i.e., gradual change strategies by sine and cosine functions) are incorporated into the conventional HHO.

The experimental results show that these two search strategies significantly improve the abilities of HHO and help it avoid premature convergence. Firstly, the abilities of the proposed HHSC method are confirmed by comparing it with different kinds of well-known methods, including HHO, GOA, SSA, WOA, SCA, DA, GWO, PSO, ALO, MPA, and EO. The comparisons showed that HHSC can obtain more suitable results and is clearly superior to all competitors. Secondly, the proposed HHSC is used to determine the parameters of engineering design problems. On five problems, HHSC is tested against other common improved methods, including SIMPLEX, APPROX, CPSO, Branch-bound, OBSCA, DAVID, PHSSA, DEDS, PSO-DE, Ray and Saini, APSO, PSO, LGSI2, LGSI4, HS, MPM, GSA, PSO-SCA, MBA, ES, Tsai, MFO, BA, GA, CS, WOA, MVO, ACO, RO, and HHO, in terms of cost evaluation criteria. The results demonstrated that HHSC is better than the other improved methods in solving complex engineering problems.

Many directions remain worth investigating in future research. For example, the proposed HHSC can be combined with other new optimization operators and search strategies to further improve its optimization ability. Moreover, extending the proposed HHSC to multi-objective problems, IoT task scheduling, image segmentation, clustering problems, intrusion detection in wireless sensor networks, appliance management in smart homes, and feature selection is also of interest. In future work, we will also examine the influence of chaotic maps in digital computers on the performance of the proposed HHSC method.

References

Abualigah L, Diabat A, Geem ZW (2020) A comprehensive survey of the harmony search algorithm in clustering applications. Appl Sci 10(11):3827

Abualigah L (2020) Group search optimizer: a nature-inspired meta-heuristic optimization algorithm with its results, variants, and applications. Neural Comput Appl 1–24

Osman IH, Laporte G (1996) Metaheuristics: a bibliography

Ewees AA, Elaziz MA, Houssein EH (2018) Improved grasshopper optimization algorithm using opposition-based learning. Expert Syst Appl 112:156–172

Yousri D, Allam D, Eteiba M (2019) Chaotic whale optimizer variants for parameters estimation of the chaotic behavior in permanent magnet synchronous motor. Appl Soft Comput 74:479–503

Yousri D, AbdelAty AM, Said LA, Elwakil AS, Maundy B, Radwan AG (2019) Chaotic flower pollination and grey wolf algorithms for parameter extraction of bio-impedance models. Appl Soft Comput 75:750–774

Abualigah L (2020) Multi-verse optimizer algorithm: a comprehensive survey of its results, variants, and applications. Neural Comput Appl 1–21

Zheng R, Jia H, Abualigah L, Liu Q, Wang S (2021) Deep ensemble of slime mold algorithm and arithmetic optimization algorithm for global optimization. Processes 9(10):1774

Wang S, Liu Q, Liu Y, Jia H, Abualigah L, Zheng R, Wu D (2021) A hybrid ssa and sma with mutation opposition-based learning for constrained engineering problems. Comput Intell Neurosci

Ewees AA, Abualigah L, Yousri D, Algamal ZY, Al-qaness MA, Ibrahim RA, Abd Elaziz M (2021) Improved slime mould algorithm based on firefly algorithm for feature selection: a case study on qsar model. Eng Comput 1–15

Abualigah L, Shehab M, Alshinwan M, Mirjalili S, Abd Elaziz M (2020) Ant lion optimizer: a comprehensive survey of its variants and applications. Arch Comput Methods Eng

Abualigah L, Shehab M, Alshinwan M, Alabool H (2019) Salp swarm algorithm: a comprehensive survey. Neural Comput Appl 1–21

Eberhart R, Kennedy J (1995) A new optimizer using particle swarm theory. In: Proceedings of the sixth international symposium on micro machine and human science, MHS’95. IEEE, pp 39–43

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Mirjalili S (2016) Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput Appl 27(4):1053–1073

Karaboga D, Ozturk C (2011) A novel clustering approach: artificial bee colony (abc) algorithm. Appl Soft Comput 11(1):652–657

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl-Based Syst 89:228–249

Holland J (1975) Adaptation in artificial and natural systems The University of Michigan Press, Ann Arbor

Goldberg DE, Holland JH (1988) Genetic algorithms and machine learning. Mach Learn 3(2):95–99

Storn R, Price K (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359

Yao X, Liu Y, Lin G (1999) Evolutionary programming made faster. IEEE Trans Evol Comput 3(2):82–102

Eskandar H, Sadollah A, Bahreininejad A, Hamdi M (2012) Water cycle algorithm—a novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput Struct 110:151–166

Bayraktar Z, Komurcu M, Werner DH (2010) Wind driven optimization (wdo): a novel nature-inspired optimization algorithm and its application to electromagnetics. In: Antennas and propagation society international symposium (APSURSI), 2010 IEEE. IEEE, pp 1–4

Tamura K, Yasuda K (2011) Primary study of spiral dynamics inspired optimization. IEEJ Trans Electr Electron Eng 6(S1):S98–S100

Kirkpatrick S, Gelatt CD, Vecchi MP (1983) Optimization by simulated annealing. Science 220(4598):671–680

Tahani M, Babayan N (2018) Flow regime algorithm (fra): a physics-based meta-heuristics algorithm. Knowl Inf Syst 1–38

Lam AY, Li VO (2010) Chemical-reaction-inspired metaheuristic for optimization. IEEE Trans Evol Comput 14(3):381–399

Rao RV, Savsani VJ, Vakharia D (2011) Teaching-learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput Aided Des 43(3):303–315

Dai C, Zhu Y, Chen W (2006) Seeker optimization algorithm. In: International conference on computational and information science. Springer, pp 167–176

Wang G-G, Deb S, Coelho LS (2015) Elephant herding optimization. In: 3rd international symposium on computational and business intelligence (ISCBI). IEEE 2015, pp 1–5

Moghdani R, Salimifard K (2018) Volleyball premier league algorithm. Appl Soft Comput 64:161–185

Abualigah L, Abd Elaziz M, Sumari P, Geem ZW, Gandomi AH (2021) Reptile search algorithm (rsa): a nature-inspired meta-heuristic optimizer. Expert Syst Appl 116158

Moayedi H, Osouli A, Nguyen H, Rashid ASA (2021) A novel Harris Hawks optimization and k-fold cross-validation predicting slope stability. Eng Comput 37(1):369–379

Golilarz NA, Gao H, Demirel H (2019) Satellite image de-noising with Harris Hawks meta heuristic optimization algorithm and improved adaptive generalized gaussian distribution threshold function. IEEE Access 7:57459–57468

Essa F, Abd Elaziz M, Elsheikh AH (2020) An enhanced productivity prediction model of active solar still using artificial neural network and Harris Hawks optimizer. Appl Therm Eng 170:115020

Bao X, Jia H, Lang C (2019) A novel hybrid Harris Hawks optimization for color image multilevel thresholding segmentation. IEEE Access 7:76529–76546

Abualigah L, Diabat A (2021) Chaotic binary group search optimizer for feature selection. Expert Syst Appl 192:116368

Houssein EH, Hosney ME, Oliva D, Mohamed WM, Hassaballah M (2020) A novel hybrid Harris Hawks optimization and support vector machines for drug design and discovery. Comput Chem Eng 133. https://doi.org/10.1016/j.compchemeng.2019.106656

Moayedi H, Gör M, Lyu Z, Bui DT (2020) Herding behaviors of grasshopper and Harris Hawk for hybridizing the neural network in predicting the soil compression coefficient. Measurement 152. https://doi.org/10.1016/j.measurement.2019.107389

Shehabeldeen TA, AbdElaziz M, Elsheikh AH, Zhou J (2019) Modeling of friction stir welding process using adaptive neuro-fuzzy inference system integrated with Harris Hawks optimizer. J Mater Res Technol 8(6):5882–5892

Dhou K, Cruzen C (2020) A new chain code for bi-level image compression using an agent-based model of echolocation in dolphins. In: 2020 IEEE 6th international conference on dependability in sensor, cloud and big data systems and application (DependSys). IEEE, pp 87–91

Dhou K, Cruzen C (2021) A highly efficient chain code for compression using an agent-based modeling simulation of territories in biological beavers. Futur Gener Comput Syst 118:1–13

Mouring M, Dhou K, Hadzikadic M (2018) A novel algorithm for bi-level image coding and lossless compression based on virtual ant colonies. In: COMPLEXIS, pp 72–78

Dhou K (2019) An innovative employment of virtual humans to explore the chess personalities of garry kasparov and other class-a players. In: International conference on human-computer interaction. Springer, pp 306–319

Yousri D, Allam D, Eteiba MB (2020) Optimal photovoltaic array reconfiguration for alleviating the partial shading influence based on a modified Harris Hawks optimizer. Energy Convers Manag 206. https://doi.org/10.1016/j.enconman.2020.112470

Chen H, Jiao S, Wang M, Heidari AA, Zhao X (2020) Parameters identification of photovoltaic cells and modules using diversification-enriched Harris Hawks optimization with chaotic drifts. J Clean Prod. https://doi.org/10.1016/j.jclepro.2019.118778

Ridha HM, Heidari AA, Wang M, Chen H (2020) Boosted mutation-based Harris Hawks optimizer for parameters identification of single-diode solar cell models. Energy Convers Manag. https://doi.org/10.1016/j.enconman.2020.112660

Kamboj VK, Nandi A, Bhadoria A, Sehgal S (2020) An intensify Harris Hawks optimizer for numerical and engineering optimization problems. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2019.106018

Abd Elaziz M, Heidari AA, Fujita H, Moayedi H (2020) A competitive chain-based Harris Hawks optimizer for global optimization and multi-level image thresholding problems. Appl Soft Comput 106347

Mirjalili S (2016) Sca: a sine cosine algorithm for solving optimization problems. Knowl Based Syst 96:120–133

Al-Qaness MA, Elaziz MA, Ewees AA (2018) Oil consumption forecasting using optimized adaptive neuro-fuzzy inference system based on sine cosine algorithm. IEEE Access 6:68394–68402

Attia A-F, El Sehiemy RA, Hasanien HM (2018) Optimal power flow solution in power systems using a novel sine-cosine algorithm. Int J Electr Power Energy Syst 99:331–343

Tawhid MA, Savsani V (2019) Multi-objective sine-cosine algorithm (mo-sca) for multi-objective engineering design problems. Neural Comput Appl 31(2):915–929

Jouhari H, Lei D, AA Al-qaness M, Abd Elaziz M, Ewees AA, Farouk O (2019) Sine-cosine algorithm to enhance simulated annealing for unrelated parallel machine scheduling with setup times. Mathematics 7(11):1120

Mahdad B, Srairi K (2018) A new interactive sine cosine algorithm for loading margin stability improvement under contingency. Electr Eng 100(2):913–933

Li S, Fang H, Liu X (2018) Parameter optimization of support vector regression based on sine cosine algorithm. Expert Syst Appl 91:63–77

Abd Elaziz M, Oliva D, Xiong S (2017) An improved opposition-based sine cosine algorithm for global optimization. Expert Syst Appl 90:484–500

Gupta S, Deep K (2019) A hybrid self-adaptive sine cosine algorithm with opposition based learning. Expert Syst Appl 119:210–230

Neggaz N, Ewees AA, Abd Elaziz M, Mafarja M (2020) Boosting salp swarm algorithm by sine cosine algorithm and disrupt operator for feature selection. Expert Syst Appl 145:113103

Abbasi A, Firouzi B, Sendur P (2021) On the application of Harris Hawks optimization (hho) algorithm to the design of microchannel heat sinks. Eng Comput 37(2):1409–1428

Saremi S, Mirjalili S, Lewis A (2017) Grasshopper optimisation algorithm: theory and application. Adv Eng Softw 105:30–47

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67

Eberhart R, Kennedy J (1995) Particle swarm optimization. In: Proceedings of the IEEE international conference on neural networks, vol 4. Citeseer, pp 1942–1948

Mirjalili S (2015) The ant lion optimizer. Adv Eng Softw 83:80–98

Faramarzi A, Heidarinejad M, Mirjalili S, Gandomi AH (2020) Marine predators algorithm: a nature-inspired metaheuristic. Expert Syst Appl 113377

Faramarzi A, Heidarinejad M, Stephens B, Mirjalili S (2020) Equilibrium optimizer: a novel optimization algorithm. Knowl Based Syst 191:105190

Mack GA, Skillings JH (1980) A friedman-type rank test for main effects in a two-factor anova. J Am Stat Assoc 75(372):947–951

Abualigah L, Shehab M, Diabat A, Abraham A (2020) Selection scheme sensitivity for a hybrid salp swarm algorithm: analysis and applications. Eng Comput 1–27

Van Den Bergh F, Engelbrecht AP (2006) A study of particle swarm optimization particle trajectories. Inf Sci 176(8):937–971

Pathak VK, Srivastava AK (2020) A novel upgraded bat algorithm based on cuckoo search and sugeno inertia weight for large scale and constrained engineering design optimization problems. Eng Comput 1–28

Wang Z, Luo Q, Zhou Y (2020) Hybrid metaheuristic algorithm using butterfly and flower pollination base on mutualism mechanism for global optimization problems. Eng Comput

Gandomi AH, Deb K (2020) Implicit constraints handling for efficient search of feasible solutions. Comput Methods Appl Mech Eng 363:112917

Rao SS (2019) Engineering optimization: theory and practice. Wiley, New York

de Melo VV, Banzhaf W (2018) Drone squadron optimization: a novel self-adaptive algorithm for global numerical optimization. Neural Comput Appl 30(10):3117–3144

Arora S, Singh S (2019) Butterfly optimization algorithm: a novel approach for global optimization. Soft Comput 23(3):715–734

He Q, Wang L (2007) An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng Appl Artif Intell 20(1):89–99

Lee KS, Geem ZW (2005) A new meta-heuristic algorithm for continuous engineering optimization: harmony search theory and practice. Comput Methods Appl Mech Eng 194(36–38):3902–3933

Belegundu AD, Arora JS (1985) A study of mathematical programming methods for structural optimization. Part i: theory. Int J Numer Methods Eng 21(9):1583–1599

Rashedi E, Nezamabadi-Pour H, Saryazdi S (2009) Gsa: a gravitational search algorithm. Inf Sci 179(13):2232–2248

Mezura-Montes E, Coello CAC (2008) An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. Int J Gen Syst 37(4):443–473

Gandomi AH, Yang X-S, Alavi AH, Talatahari S (2013) Bat algorithm for constrained optimization tasks. Neural Comput Appl 22(6):1239–1255

Mirjalili S, Mirjalili SM, Hatamlou A (2016) Multi-verse optimizer: a nature-inspired algorithm for global optimization. Neural Comput Appl 27(2):495–513

Kaveh A, Khayatazad M (2012) A new meta-heuristic method: ray optimization. Comput Struct 112:283–294

Long W, Wu T, Liang X, Xu S (2019) Solving high-dimensional global optimization problems using an improved sine cosine algorithm. Expert Syst Appl 123:108–126

Sandgren E (1990) Nonlinear integer and discrete programming in mechanical design optimization. J Mech Des 112(2):223–229

Elaziz MA, Oliva D, Xiong S (2017) An improved opposition-based sine cosine algorithm for global optimization. Expert Syst Appl 90:484–500

Mahdavi M, Fesanghary M, Damangir E (2007) An improved harmony search algorithm for solving optimization problems. Appl Math Comput 188(2):1567–1579

Coello CAC (2000) Use of a self-adaptive penalty approach for engineering optimization problems. Comput Ind 41(2):113–127

Huang F-Z, Wang L, He Q (2007) An effective co-evolutionary differential evolution for constrained optimization. Appl Math Comput 186(1):340–356

Liu H, Cai Z, Wang Y (2010) Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl Soft Comput 10(2):629–640

He Q, Wang L (2007) A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl Math Comput 186(2):1407–1422

Kaveh A, Talatahari S (2010) An improved ant colony optimization for constrained engineering design problems. Eng Comput 27(1):155–182

Deb K (1991) Optimal design of a welded beam via genetic algorithms. AIAA J 29(11):2013–2015

Ragsdell K, Phillips D (1976) Optimal design of a class of welded structures using geometric programming

Gandomi AH, Yang X-S, Alavi AH (2013) Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Eng Comput 29(1):17–35

Zhang M, Luo W, Wang X (2008) Differential evolution with dynamic stochastic selection for constrained optimization. Inf Sci 178(15):3043–3074

Tsai J-F (2005) Global optimization of nonlinear fractional programming problems in engineering design. Eng Optim 37(4):399–409

Ray T, Saini P (2001) Engineering design optimization using a swarm with an intelligent information sharing among individuals. Eng Optim 33(6):735–748

Sadollah A, Bahreininejad A, Eskandar H, Hamdi M (2013) Mine blast algorithm: a new population based algorithm for solving constrained engineering optimization problems. Appl Soft Comput 13(5):2592–2612

Truong KH, Nallagownden P, Baharudin Z, Vo DN (2019) A quasi-oppositional-chaotic symbiotic organisms search algorithm for global optimization problems. Appl Soft Comput 77:567–583

Baykasoğlu A, Ozsoydan FB (2015) Adaptive firefly algorithm with chaos for mechanical design optimization problems. Appl Soft Comput 36:152–164

Baykasoğlu A, Akpinar Ş (2015) Weighted superposition attraction (wsa): a swarm intelligence algorithm for optimization problems-part 2: constrained optimization. Appl Soft Comput 37:396–415

Guedria NB (2016) Improved accelerated pso algorithm for mechanical engineering optimization problems. Appl Soft Comput 40:455–467

Czerniak JM, Zarzycki H, Ewald D (2017) Aao as a new strategy in modeling and simulation of constructional problems optimization. Simul Model Pract Theory 76:22–33

Acknowledgements

This study was financially supported via a funding grant by the Deanship of Scientific Research, Taif University Researchers Supporting Project number (TURSP-2020/300), Taif University, Taif, Saudi Arabia.


Cite this article

Abualigah, L., Diabat, A., Altalhi, M. et al. Improved gradual change-based Harris Hawks optimization for real-world engineering design problems. Engineering with Computers 39, 1843–1883 (2023). https://doi.org/10.1007/s00366-021-01571-9
