Abstract

Harris Hawks Optimization (HHO) is a recently proposed metaheuristic algorithm that is primarily based on the cooperative behavior and chasing style of Harris’ hawks. In this paper, an augmented modification called HHMV is proposed to alleviate the main shortcomings of the conventional HHO, which converges slowly to the optimal solution and is easily trapped in local optima when solving multi-dimensional optimization problems. In the proposed method, the conventional HHO is hybridized with the Multi-verse Optimizer to improve its convergence speed, its exploratory search mechanism in the early iterations, and its exploitative search mechanism in the final iterations. The effectiveness of the proposed HHMV is analyzed in depth using classical and CEC2019 benchmark functions with several dimension sizes. Moreover, to demonstrate the ability of HHMV to solve real-world problems, five engineering design problems are tested. The experimental results confirm that the exploration and exploitation search mechanisms of the conventional HHO and its convergence speed are significantly improved. The proposed HHMV is a promising version of HHO, and it obtains better results than other state-of-the-art methods published in the literature.


Introduction

Metaheuristic (MH) techniques have recently attracted increasing attention because they can provide good solutions to a wide range of real-world optimization problems (El Aziz et al., 2016; Koziel et al., 2014; Premkumar et al., 2021). In general, MH techniques have several properties and strengths that help them find near-optimal solutions to the problems they are applied to (Abualigah, 2020a). They also avoid the limitations of traditional optimization techniques, such as gradient descent and Newton's method, which can be time-consuming, especially in real-time applications (Abualigah & Diabat, 2021). These traditional methods maintain only one solution at each iteration (Baykasoglu, 2012; Abualigah et al., 2020). In contrast, MH techniques are global optimization methods with high efficiency and low complexity that maintain several different solutions at each iteration, which makes them competitive in reaching an optimal solution (Yang, 2008; Ewees et al., 2018; Shehab et al., 2020).

In general, MH techniques can be classified into different categories, two of the main ones being evolutionary algorithms and swarm intelligence (SI) (Abualigah et al., 2020, 2021). Evolutionary algorithms (EA) simulate natural evolutionary mechanisms; examples of this category include Genetic Algorithms (GA) (Han et al., 2016), Genetic Programming (GP) (Cheng et al., 2009), Evolution Strategies (ES) (Beyer & Schwefel, 2002), Gradient-based Optimizer (GBO) (Jiang et al., 2021), Differential Evolution (DE) (Sarker et al., 2014), and Evolutionary Programming (EP) (Yao et al., 1999). SI algorithms are inspired by the behavior of agents in nature (Yang, 2008). These agents live in groups and cooperate to find food or prey. The SI category includes, for example, Glowworm Swarm Optimization Algorithm (GSO) (Krishnanand & Ghose, 2005), Firefly Algorithm (Altabeeb et al., 2021), Dragonfly Algorithm (DA) (Alshinwan et al., 2021), Bacterial Foraging Optimization Algorithm (BFO) (Yi et al., 2016), Cuckoo Search (CS) (Yousri et al., 2021), Fruit Fly Optimization algorithm (FOA) (Pan, 2012), Sine Cosine Algorithm (SCA) (Abualigah & Dulaimi, 2021; Abualigah & Alkhrabsheh, 2021), Ant Colony Optimization (ACO) (Kaveh & Talatahari, 2010), Krill Herd (KH) Algorithm (Abualigah et al., 2019), Aquila Optimizer (AO) (Abualigah et al., 2021), Moth–flame optimizer (Shehab et al., 2020), Marine Predators Algorithm (MPA) (Eid et al., 2021), Bat Algorithm (BA) (Alsalibi et al., 2020), Arithmetic Optimization Algorithm (AOA) (Abualigah et al., 2021), Artificial Bee Colony (ABC) Algorithm (Karaboga, 2005), Ant Colonies Algorithm (ACA) (Xu et al., 2017), Reptile Search Algorithm (RSA) (Abualigah et al., 2021), and Harmony Search (HS) (Abualigah et al., 2020). New trends in swarm intelligence include the application of echolocation in dolphins, predators and prey, and beaver territories (Dhou & Cruzen, 2021; Dhou, 2020; Dhou & Cruzen, 2020).
Other examples inspired by biological systems are the Neural Network Algorithm (Sadollah et al., 2018), the Slime Mould Algorithm (Hassan et al., 2021), and the Genetic Algorithm (Şahin et al., 2021). Generally, conventional optimization algorithms suffer from several weaknesses, such as a lack of solution diversity (Cully & Demiris, 2017; Zheng et al., 2021): because of the mechanisms of the updating process, the candidate solutions sometimes become highly similar. This problem must be addressed for almost all optimization problems. The balance between the search mechanisms is also one of the most common problems in current optimization algorithms (Cuevas et al., 2014; Wang et al., 2021), since an imbalance forces the search process to favor one search mechanism and neglect the other.

In the same context, Harris Hawks Optimization (HHO) was developed as a metaheuristic technique inspired by the behavior of Harris’ hawks in nature while searching for and catching their prey (Heidari et al., 2019). In HHO, the hawks cooperate to attack the prey using several strategies. Owing to these behaviors, HHO has been applied to several applications. For example, Golilarz et al. (2019) used HHO to improve satellite image denoising by determining the optimal thresholds, and Bao et al. (2019) introduced a color image multilevel thresholding segmentation method based on HHO.

There have been several attempts to enhance the performance of HHO and apply it to real applications. In Ridha et al. (2020), an improved version of HHO based on the flower pollination algorithm was developed for photovoltaic system applications. Chen et al. (2020) introduced a modified version of HHO based on opposition-based learning and chaotic maps. In Yousri et al. (2020), a modified HHO with an improved global search ability was developed to find a suitable reconfiguration for photovoltaic arrays. In Kamboj et al. (2020), a modified HHO based on SCA was proposed and applied to different engineering problems. HHO has also been applied to other problems. These include forecasting slope stability (Moayedi et al., 2019), predicting the soil compression coefficient (Moayedi et al., 2020), predicting the productivity of an active solar still (Essa et al., 2020), and drug design and discovery (Houssein et al., 2020). Despite these advantages, HHO still has limitations, such as weak diversity, which leads to premature convergence, and slow convergence. Therefore, HHO needs further improvement to avoid premature convergence. To this end, an enhanced approach based on the Multi-Verse Optimizer (MVO) (Mirjalili et al., 2016) is developed and investigated on global optimization problems.

MVO is used in this paper because its performance has been established on different problems. For example, MVO has been applied to feature selection (Faris et al., 2018; Ewees et al., 2019) and to estimating the parameters of the Proton Exchange Membrane Fuel Cell (PEMFC) model (Fathy & Rezk, 2018). Other applications include clustering (Shukri et al., 2018; Abasi et al., 2020), multilevel thresholding-based greyscale image segmentation (Abd Elaziz et al., 2019; Wang et al., 2020), PV parameter estimation (Ali et al., 2016), test scheduling (Hu et al., 2016), and others (Abualigah, 2020b). The main reason for the high performance of MVO is its ability to balance the two phases of the optimization search process (i.e., exploration and exploitation). Moreover, it has few parameters and is simple to implement.

Therefore, in this paper, the traditional HHO is combined with MVO to enhance its convergence rate. The proposed model, named HHMV, starts by initializing a set of agents and evaluating the fitness of each. Then, the best solution is determined and used to update the other agents. Depending on each agent’s energy value, either the operators of MVO are used during exploration or the operators of HHO are used during exploitation. The result is an optimizer that combines the strengths of MVO and HHO. The optimization process continues until the stopping condition is met. A comprehensive set of experiments is conducted on two kinds of benchmark functions, 23 classical functions and ten CEC2019 functions, to validate the performance of the proposed HHMV. Moreover, several engineering design problems are used to test HHMV on real-world optimization problems. The results show that HHMV obtains better results than other well-known methods and handles different real-world problems with promising results. The main contributions are as follows:

- We proposed an alternative MH technique that enhances the performance of HHO by adding new operators to refine the search process.
- We integrated the leading operators of MVO to enhance the exploration ability of the conventional HHO. The integrated operators help the original HHO find new best solutions by generating new and diverse candidate solutions.
- We evaluated the performance of the developed HHMV using classical and CEC2019 benchmark functions.
- We evaluated the applicability of HHMV to real-world engineering problems.

The rest of this paper is organized as follows. The “The proposed Harris Hawks-based multi-verse optimizer (HHMV)” section describes the developed HHMV method. The “Experiments and results” section presents the experimental results and their discussion. The “Conclusion and future works” section presents the conclusion and future work.

The proposed Harris Hawks-based multi-verse optimizer (HHMV)

This section presents the search strategies (i.e., exploration and exploitation) of the proposed HHMV method, a hybrid of Harris Hawks Optimization (HHO) and the Multi-verse Optimizer (MVO). HHO is inspired by the prey searching, surprise pounce, and various attacking strategies of Harris’ hawks. The structure of the original HHO is given in three sections: “Exploration phase of HHO”, “The shift from exploration to exploitation”, and “Exploitation phase of HHO”. These sections present the exploration search mechanism, the shift from exploration to exploitation, and the exploitation search mechanism, respectively. The new operators added to the original HHO are given in the “Exploitation phase of MVO” section. The search phases of the proposed HHMV method are described below.

Exploration phase of HHO

In the proposed HHMV method, the initial candidate solutions are generated first. In each iteration, the best solution found so far is treated as the target prey, i.e., an approximation of the optimal solution. HHMV searches different regions of the search space randomly using two main strategies, each chosen with equal chance according to a random number q. If q < 0.5, the solutions move according to the positions of the other members and the prey. If q \(\ge \) 0.5, they move to random locations, as given in Eq. (1).

\[
X(t+1)=
\begin{cases}
X_{rand}(t)-r_1\left|X_{rand}(t)-2r_2X(t)\right|, & q\ge 0.5\\
\left(X_{rabbit}(t)-X_m(t)\right)-r_3\left(LB+r_4\left(UB-LB\right)\right), & q<0.5
\end{cases}
\tag{1}
\]

In Eq. (1), X(t+1) is the position of the candidate solution at the next iteration, and \(X_{rabbit}\)(t) is the location of the prey. X(t) is the current solution at iteration t, and \(r_1\), \(r_2\), \(r_3\), \(r_4\), and q are random values in [0, 1]. LB and UB are the lower and upper bounds of the variables. \(X_{rand}\)(t) is a solution selected randomly from the candidates, and \(X_m\)(t) is the average position of the candidate solutions, computed using Eq. (2).

\[
X_m(t)=\frac{1}{N}\sum_{i=1}^{N}X_i(t)
\tag{2}
\]

where N is the total number of candidate solutions, and \(X_i\)(t) is the location of the i-th solution at iteration t.
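The exploration rule of Eqs. (1) and (2) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. Solutions are plain lists of floats, and q and r1–r4 are drawn once per move as scalars. Clipping the result back into [LB, UB] is an assumed boundary-handling step that the text does not specify. The function names `mean_position` and `explore` are hypothetical.

```python
import random


def mean_position(population):
    """Eq. (2): component-wise average X_m(t) of the N candidate solutions."""
    n = len(population)
    return [sum(sol[j] for sol in population) / n for j in range(len(population[0]))]


def explore(x, population, x_rabbit, lb, ub, rng=random):
    """One exploration move of Eq. (1) for the solution x (a list of floats)."""
    q, r1, r2, r3, r4 = (rng.random() for _ in range(5))
    if q >= 0.5:
        # Perch relative to a randomly chosen member of the population.
        x_rand = rng.choice(population)
        new = [xr - r1 * abs(xr - 2.0 * r2 * xi) for xr, xi in zip(x_rand, x)]
    else:
        # Perch relative to the prey (best solution) and the population mean.
        x_m = mean_position(population)
        new = [(xb - xm) - r3 * (lo + r4 * (hi - lo))
               for xb, xm, lo, hi in zip(x_rabbit, x_m, lb, ub)]
    # Assumed boundary handling: clip every coordinate back into [LB, UB].
    return [min(max(v, lo), hi) for v, lo, hi in zip(new, lb, ub)]


rng = random.Random(42)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(10)]
best = min(pop, key=lambda s: sum(v * v for v in s))  # sphere function as a toy fitness
print(explore(pop[0], pop, best, [-5.0] * 3, [5.0] * 3, rng))
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible. The module-level `random` also works, because it exposes the same `random()` and `choice()` functions.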

The shift from exploration to exploitation

In the proposed HHMV algorithm, we offered two exploitation search strategies; the first strategy comes from the original HHO, and the second strategy comes from the MVO. This proposal will improve the diversity of the solutions and maintain the performance stability of the HHO by avoiding being stuck in local optima. In the exploitation phase of the proposed HHMV, two main strategies are presented. In this respect, the exploitation of the HHO occurs if |E| \(\ge \) 1, and the exploitation of the MVO occurs if |E| < 1, E is calculated using Eq. (3). The search strategies are represented as follows.

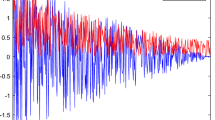

where E indicates the escaping energy of the target solution (prey), t indicates the number of the current iteration, and T indicates the maximum number of iterations, and \(E_0\) indicates the initial energy. The value of \(E_0\) is determined between -1 and 1 throughout iterations. When the rabbit is flagging, the \(E_0\) is reduced from 0 to -1. When the rabbit is strengthening, the \(E_0\) is increased from 0 to 1. When |E| \(\ge \) 1, the search is in other areas to examine a new prey location; therefore, the exploration phase will be operated, and when |E| < 1, the algorithm seeks to employ the neighborhood of the solutions by the exploitation steps. In brief, exploration happens while |E| \(\ge \) 1, while exploitation happens while |E| < 1. The time-dependent performance of E is given in Fig. 1.
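The following Python sketch implements Eq. (3) and the |E| threshold described above. Here \(E_0\) is drawn uniformly from [-1, 1), and the helper names are hypothetical. One consequence is easy to check numerically. Because \(|E_0| < 1\), Eq. (3) gives \(|E| < 2(1 - t/T)\), so \(|E| \ge 1\) is only possible while t < T/2. In the second half of a run, every move therefore takes the exploitation branch.

```python
import random


def escaping_energy(t, T, rng=random):
    """Eq. (3): E = 2 * E0 * (1 - t / T), with E0 drawn uniformly from [-1, 1)."""
    e0 = 2.0 * rng.random() - 1.0
    return 2.0 * e0 * (1.0 - t / T)


def phase(E):
    """Select the search phase from the escaping energy, as described in the text."""
    return "exploration" if abs(E) >= 1.0 else "exploitation"


rng = random.Random(7)
T = 1000
labels = [phase(escaping_energy(t, T, rng)) for t in range(T)]
# Exploration moves in the first and in the second half of the run.
print(labels[:T // 2].count("exploration"), labels[T // 2:].count("exploration"))
```

The second printed count is 0, which matches the bound derived above.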

Exploitation phase of HHO

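In this phase, four strategies are used to model the attacking step. A random number r in [0, 1] represents whether the prey escapes (r < 0.5) or fails to escape (r \(\ge \) 0.5) before the surprise pounce. The remaining energy determines the type of besiege: a soft besiege occurs if |E| \(\ge \) 0.5, and a hard besiege occurs if |E| < 0.5.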

Soft besiege

When r \(\ge \) 0.5 and |E| \(\ge \) 0.5, the prey still has enough energy and tries to escape by several random jumps, but eventually fails. During these attempts, the hawks encircle it softly to exhaust it further and then perform a surprise pounce. This behavior is modeled by the following rules:

\[
X(t+1)=\Delta X(t)-E\left|J\,X_{rabbit}(t)-X(t)\right|
\tag{4}
\]

\[
\Delta X(t)=X_{rabbit}(t)-X(t)
\tag{5}
\]

where \(\Delta \)X(t) is the difference between the position of the prey and the current position at iteration t. \(r_5\) is a random value between 0 and 1, and J = 2(1 - \(r_5\)) represents the random jump strength of the prey as it tries to escape. J changes randomly to mimic the nature of the prey's motions.
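The soft-besiege rule can be sketched in Python as follows, with solutions as lists of floats and hypothetical function names. J is passed explicitly so that the update is deterministic and easy to check. In a full loop, J would be sampled by `jump_strength`, which draws it from (0, 2] as J = 2(1 - r5).

```python
import random


def jump_strength(rng=random):
    """J = 2(1 - r5): random jump strength of the escaping prey, in (0, 2]."""
    return 2.0 * (1.0 - rng.random())


def soft_besiege(x, x_rabbit, E, J):
    """Soft besiege: X(t+1) = dX(t) - E * |J * X_rabbit(t) - X(t)|,
    where dX(t) = X_rabbit(t) - X(t) is computed per coordinate."""
    return [(xb - xi) - E * abs(J * xb - xi) for xi, xb in zip(x, x_rabbit)]


# One coordinate: prey at 3.0, hawk at 1.0, E = 0.5, J = 1.0
# dX = 2 and |1.0 * 3.0 - 1.0| = 2, so the update is 2 - 0.5 * 2 = 1.0
print(soft_besiege([1.0], [3.0], 0.5, 1.0))  # [1.0]
```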

Hard besiege

When r \(\ge \) 0.5 and |E| < 0.5, the prey is exhausted and unable to avoid capture. Hence, the hawks encircle the prey closely and finally perform their hard pounce. The positions are updated using Eq. (6).

\[
X(t+1)=X_{rabbit}(t)-E\left|\Delta X(t)\right|
\tag{6}
\]
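Eq. (6) reduces to a one-line update. The hypothetical helper below also checks a property implied by the condition |E| < 0.5. Because \(|X(t+1) - X_{rabbit}(t)| = |E|\,|\Delta X(t)|\), each coordinate ends up closer to the prey than half of its current distance.

```python
def hard_besiege(x, x_rabbit, E):
    """Eq. (6): X(t+1) = X_rabbit(t) - E * |dX(t)|, with dX(t) = X_rabbit(t) - X(t)."""
    return [xb - E * abs(xb - xi) for xi, xb in zip(x, x_rabbit)]


x, prey = [1.0, 5.0], [3.0, 3.0]
new = hard_besiege(x, prey, 0.4)
# With |E| < 0.5, every coordinate lands within half of its current distance from the prey.
print(new, all(abs(n - p) < 0.5 * abs(o - p) for n, o, p in zip(new, x, prey)))
```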

Soft besiege with progressive rapid dives

When |E| \(\ge \) 0.5 and r < 0.5, the rabbit still has enough energy to escape the attack, so a soft besiege is constructed before the surprise pounce. This procedure is more capable than the previous one. To perform a soft besiege, assume that the hawks evaluate their next move using Eq. (7).

\[
Y=X_{rabbit}(t)-E\left|J\,X_{rabbit}(t)-X(t)\right|
\tag{7}
\]

Next, they evaluate the potential outcome of this move to decide whether it would be a good dive. If it is not, or if they observe that the prey is making erratic, deceptive moves, they begin to perform irregular, abrupt, and rapid dives while approaching the prey. Here, it is assumed that they dive according to Levy flight (LF)-based patterns using Eq. (8).

\[
Z=Y+S\times LF(D)
\tag{8}
\]

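where D is the dimension of the problem, S is a random vector of size \(1\times D\), and LF is the Levy flight function computed by Eq. (9).

\[
LF(x)=0.01\times\frac{u\times\sigma}{|\upsilon|^{1/\beta}}
\tag{9}
\]

and \(\sigma \) is given by

\[
\sigma=\left(\frac{\Gamma(1+\beta)\times\sin\left(\frac{\pi\beta}{2}\right)}{\Gamma\left(\frac{1+\beta}{2}\right)\times\beta\times 2^{\frac{\beta-1}{2}}}\right)^{1/\beta}
\tag{10}
\]

where u and \(\upsilon \) are random numbers between 0 and 1, and \(\beta \) is a constant set to 1.5. Hence, the final rule for updating the positions of the candidate solutions in the soft besiege phase is given by Eq. (11).

\[
X(t+1)=
\begin{cases}
Y, & F(Y)<F(X(t))\\
Z, & F(Z)<F(X(t))
\end{cases}
\tag{11}
\]

where Y and Z are obtained using Eqs. (7) and (8), respectively.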
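The progressive-dive step combines the candidate move Y, a Levy-flight perturbation, and a greedy choice between them. The sketch below shows one way to code it. Several parts are assumptions rather than details from the text. The Levy step uses Mantegna-style draws in which u and v come from standard normal distributions. S is drawn from U(0, 1). The hawk keeps X(t) when neither Y nor Z improves its fitness, a case that Eq. (11) leaves unspecified. Passing X_m(t) instead of X(t) as `x_ref` gives the hard-besiege variant of Eqs. (13) and (14). All function names are hypothetical.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Scale sigma of the Levy flight, following the Gamma/sine formula in the text."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy(dim, beta=1.5, rng=random):
    """Eq. (9): one Levy step per dimension (normal draws for u and v are an assumption)."""
    sigma = levy_sigma(beta)
    return [0.01 * rng.gauss(0.0, 1.0) * sigma / abs(rng.gauss(0.0, 1.0)) ** (1 / beta)
            for _ in range(dim)]


def dive_update(x, x_ref, x_rabbit, E, J, f, rng=random):
    """Eqs. (7), (8) and (11) with x_ref = X(t); x_ref = X_m(t) gives Eqs. (13)-(14)."""
    y = [xb - E * abs(J * xb - xr) for xb, xr in zip(x_rabbit, x_ref)]
    z = [yi + rng.random() * li for yi, li in zip(y, levy(len(x), rng=rng))]  # S ~ U(0, 1)
    fx = f(x)
    if f(y) < fx:
        return y
    if f(z) < fx:
        return z
    return x  # assumed: neither dive improves the fitness, so the hawk stays put


def sphere(s):
    return sum(v * v for v in s)


print(round(levy_sigma(1.5), 4))  # 0.6966
print(dive_update([1.0, 1.0], [1.0, 1.0], [0.0, 0.0], 0.3, 1.0, sphere))  # [-0.3, -0.3]
```

In the example, Y already improves on X(t) (0.18 < 2.0), so the greedy rule returns Y without using the Levy step.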

Hard besiege with progressive rapid dives

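When |E| < 0.5 and r < 0.5, the prey is weak and has little energy left to escape. A hard besiege is therefore constructed before the surprise pounce to catch and kill the prey. In this case, the hawks try to decrease the distance between their average location and the escaping prey. The hard besiege is modeled as follows:

\[
X(t+1)=
\begin{cases}
Y, & F(Y)<F(X(t))\\
Z, & F(Z)<F(X(t))
\end{cases}
\tag{12}
\]

where Y and Z are determined using Eqs. (13) and (14):

\[
Y=X_{rabbit}(t)-E\left|J\,X_{rabbit}(t)-X_m(t)\right|
\tag{13}
\]

\[
Z=Y+S\times LF(D)
\tag{14}
\]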

Exploitation phase of MVO

According to the big bang theory, the universe began with the big bang, and there was nothing before it. The multiverse theory is another successful and well-established concept among physicists (Mirjalili et al., 2016). The MVO algorithm draws its inspiration from three concepts of the multiverse theory: white holes, black holes, and wormholes. Although a white hole has never been observed in our universe, scientists believe that the big bang may be regarded as a white hole and that it may be the most important component in the formation of a universe.

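For further improvement, the solutions keep changing their positions stochastically to preserve the diversity of the candidate solutions of HHO and to perform exploitation. This exploitation process is taken from the Multi-verse Optimizer (MVO) (Abualigah, 2020b) and is given as follows:

\[
x_i^j=
\begin{cases}
\begin{cases}
X_j+TDR\times\left((ub_j-lb_j)\times r_4+lb_j\right), & r_3<0.5\\
X_j-TDR\times\left((ub_j-lb_j)\times r_4+lb_j\right), & r_3\ge 0.5
\end{cases} & r_2<WEP\\
x_i^j, & r_2\ge WEP
\end{cases}
\tag{15}
\]

where \(X_{j}\) is the j-th variable of the best solution obtained so far and \(x_i^j\) is the j-th variable of the i-th solution. \(lb_j\) and \(ub_j\) are the lower and upper bounds of the j-th variable, and \(r_2\), \(r_3\), and \(r_4\) are random numbers in [0, 1]. WEP (wormhole existence probability) and TDR (travelling distance rate) are computed by Eqs. (16) and (17), respectively.

\[
WEP=min+t\times\left(\frac{max-min}{T}\right)
\tag{16}
\]

where min is the minimum value, set to 0.2, and max is the maximum value, set to 1.

\[
TDR=1-\frac{t^{1/p}}{T^{1/p}}
\tag{17}
\]

where p, set to 6, defines the exploitation accuracy over the iterations. The pseudo-code of the proposed HHMV is presented in Algorithm 1, which shows the rules for transitioning between the search processes.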
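The MVO exploitation operator can be sketched in Python as follows, using the constants given in the text (min = 0.2, max = 1, p = 6). WEP rises linearly over the run, so more coordinates are moved late in the search. TDR shrinks toward 0, so those moves stay ever closer to the best solution. The per-dimension loop and the function names are assumptions made for illustration.

```python
import random


def wep(t, T, wep_min=0.2, wep_max=1.0):
    """Wormhole existence probability: rises linearly from min to max."""
    return wep_min + t * (wep_max - wep_min) / T


def tdr(t, T, p=6):
    """Travelling distance rate: shrinks from 1 to 0 as t approaches T."""
    return 1.0 - t ** (1.0 / p) / T ** (1.0 / p)


def mvo_exploit(x, x_best, lb, ub, t, T, rng=random):
    """Eq. (15): with probability WEP, move coordinate j to a point around the
    best solution's j-th variable; otherwise keep the current value."""
    w, d = wep(t, T), tdr(t, T)
    new = list(x)
    for j in range(len(x)):
        r2, r3, r4 = rng.random(), rng.random(), rng.random()
        if r2 < w:
            step = d * ((ub[j] - lb[j]) * r4 + lb[j])
            new[j] = x_best[j] + step if r3 < 0.5 else x_best[j] - step
    return new


print(wep(0, 1000), wep(1000, 1000), tdr(0, 1000), tdr(1000, 1000))
print(mvo_exploit([5.0, 5.0], [1.0, 2.0], [-10.0, -10.0], [10.0, 10.0], 500, 1000))
```

At t = T, WEP reaches 1 and TDR reaches 0, so every coordinate collapses onto the best solution.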

Experiments and results

To evaluate the ability of the proposed HHMV method to solve different optimization problems, three sets of experiments are conducted, and the results obtained by the various methods are discussed in detail. In the first experiment, 23 classical benchmark functions are used to validate the effectiveness of HHMV. In the second experiment, a set of more advanced benchmark functions, CEC2019, is used to validate the performance of HHMV on more complex problems. In the third experiment, HHMV is tested on real engineering design problems to demonstrate its ability to solve real-world problems.

All experiments are executed in MATLAB R2016b on 64-bit Windows 10, running on a Core i7 CPU with 16 GB of RAM. Each optimization method is run thirteen times on each problem. For a fair comparison, the maximum number of function evaluations is fixed at 1000 × 50 = 50,000, corresponding to 1000 iterations with a population size of 50. The HHO parameters are taken from the original paper (Heidari et al., 2019). The number of iterations is fixed to 1000 because the algorithm had already reached its best results before this point. The essential parameter settings of the compared approaches are presented in Table 1.

Classical benchmark functions

This section presents the results of the proposed HHMV on the classical benchmark problems in detail. Table 2 gives the details (i.e., functions, descriptions, dimensions, range, and \(f_{min}\)) of the 23 classical benchmark functions (Digalakis & Margaritis, 2001; Yao et al., 1999). These functions are divided into three categories. The unimodal functions (F1–F7) have a single optimum and variable dimensions. The multimodal functions (F8–F13) have multiple local optima and variable dimensions. The fixed-dimension multimodal functions (F14–F23) have multiple local optima and fixed dimensions. Several related works have used these benchmark functions (Abd Elaziz et al., 2017, 2021).
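The following sketch makes the categories concrete with one unimodal and two multimodal functions. It assumes that Table 2 follows the standard numbering of Yao et al. (1999), in which F1 is the sphere function, F9 the Rastrigin function, and F10 the Ackley function. All three have \(f_{min}\) = 0 at the origin. The search ranges listed in Table 2 are not encoded here.

```python
import math


def sphere(x):
    """F1 (unimodal): sum of squares; f_min = 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x):
    """F9 (multimodal): many regularly spaced local minima; f_min = 0 at the origin."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def ackley(x):
    """F10 (multimodal): nearly flat outer region with a deep central basin; f_min = 0."""
    n = len(x)
    s1 = sum(v * v for v in x) / n
    s2 = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return -20.0 * math.exp(-0.2 * math.sqrt(s1)) - math.exp(s2) + 20.0 + math.e


for f in (sphere, rastrigin, ackley):
    print(f.__name__, f([0.0] * 10), f([1.0] * 10))
```

On Rastrigin, every integer grid point is a local minimum. A swarm that collapses too early can therefore stall at a point such as (1, ..., 1), which the sphere function never punishes.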

Qualitative results

This section presents the qualitative results on the tested benchmark functions. As shown in Fig. 2, four types of 2D plots are used to demonstrate the convergence behavior of HHMV. These are the function topology, the trajectory of the first dimension of the best solution, the average fitness values, and the convergence curve (objective space).

The first column of Fig. 2 gives the typical 2D plots of the fitness functions for the 23 benchmark functions used in this research. The second column shows the trajectory of the first dimension of the solutions obtained by HHMV during optimization. It is observed that HHMV explores promising regions of the search space for the test functions. Some positions are spread over a wide area of the search space, while most positions are concentrated around a local area, reflecting the difficulty of the given functions. The trajectories in the second column show that the solution undergoes large, abrupt changes in the early steps of the optimization. This behavior helps HHMV eventually converge to the optimal position. The third column presents the average fitness value at each iteration. These curves decrease on the benchmark functions, which confirms that HHMV improves the search efficiency over the course of the iterations. The last column shows the objective function value of the best solution at each iteration. Taken together, these plots indicate that HHMV has strong exploration and exploitation capabilities.

Parameters tuning and analysis

This section examines the influence of the number of solutions (i.e., N) on the classical test functions (23 benchmark functions). To analyze the parameter sensitivity of HHMV, we tested population sizes of 5, 10, 15, 20, 25, 30, 35, 40, 45, and 50 over 500 iterations. The results in Fig. 3 show that HHMV keeps its advantages across these population sizes, which means that it is robust and not strongly affected by the population size. HHMV is particularly stable under changes in population size on F10, F16, F17, F18, F19, F21, F22, and F23. The best population size was 50 for most of the benchmark functions. However, because the differences among the tested population sizes are small, the robustness claim above is supported. Fig. 3 also shows that, during the optimization process of the original optimizer, the performance stagnates in the same area for some iterations, which means that the solutions become almost identical.

Comparative results of the classical benchmark functions

In this section, the 23 classical benchmark functions are tested with different dimensions to evaluate the performance of HHMV. The proposed method is compared with other well-known optimization methods:

- Harris Hawks Optimizer (HHO) (Heidari et al., 2019)
- Grasshopper Optimization Algorithm (GOA) (Saremi et al., 2017)
- Salp Swarm Algorithm (SSA) (Mirjalili et al., 2017)
- Whale Optimization Algorithm (WOA) (Mirjalili & Lewis, 2016)
- Sine Cosine Algorithm (SCA) (Mirjalili, 2016a)
- Dragonfly Algorithm (DA) (Mirjalili, 2016b)
- Grey Wolf Optimizer (GWO) (Mirjalili et al., 2014)
- Particle Swarm Optimization (PSO) (Eberhart & Kennedy, 1995)
- Ant Lion Optimizer (ALO) (Mirjalili, 2015)
- Marine Predators Algorithm (MPA) (Faramarzi et al., 2020)
- Equilibrium Optimizer (EO) (Faramarzi et al., 2020)

Tables 3 and 4 report the worst, mean, and best fitness values. Moreover, the Friedman ranking test (Mack & Skillings, 1980) is applied to rank the methods. For fairness, all optimization methods are run under the same test conditions.

Table 3 gives the results of the tested optimization methods on thirteen benchmark functions (F1–F13) with the dimension size fixed to 10. HHMV obtained better results than the other tested methods in almost all cases (F1–F13), and in some cases it recorded the best solutions. According to the Friedman test, HHMV ranked first, followed by HHO, MPA, EO, GWO, WOA, PSO, SCA, SSA, ALO, DA, and GOA. Table 4 gives the results on the ten fixed-dimension benchmark functions (F14–F23). HHMV retains its advantage, producing better and more promising results than the other tested methods. According to the Friedman test, HHMV ranked first, followed by MPA, PSO, SSA, GWO, EO, WOA, HHO, ALO, DA, SCA, and GOA.
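The Friedman rankings quoted above order the methods by their mean rank over the test functions. The sketch below shows how such a ranking is typically computed, though it is not necessarily the exact procedure used for Tables 3 and 4. On each function, the methods are ranked from 1 (lowest fitness) upward, with ties sharing the average rank. The ranks are then averaged per method, and the Friedman statistic \(\chi^2_F = \frac{12n}{k(k+1)}\left[\sum_j R_j^2 - \frac{k(k+1)^2}{4}\right]\) is computed for n problems and k methods. The data in the example are a toy input, not values from the tables.

```python
def row_ranks(values):
    """Rank one problem's results: rank 1 = lowest (best) value; ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda j: values[j])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        k = i
        # Extend k over a run of tied values.
        while k + 1 < len(order) and values[order[k + 1]] == values[order[i]]:
            k += 1
        shared = (i + k) / 2 + 1  # average of the 1-based positions i+1 .. k+1
        for m in range(i, k + 1):
            ranks[order[m]] = shared
        i = k + 1
    return ranks


def friedman(results):
    """results[i][j] = fitness of method j on problem i (minimization).
    Returns the mean rank of each method and the Friedman chi-square statistic."""
    n, k = len(results), len(results[0])
    ranked = [row_ranks(row) for row in results]
    mean_ranks = [sum(r[j] for r in ranked) / n for j in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * (sum(m * m for m in mean_ranks) - k * (k + 1) ** 2 / 4)
    return mean_ranks, chi2


# Toy data: 3 problems x 3 methods (not taken from Tables 3-4).
mean_ranks, chi2 = friedman([[1.0, 2.0, 3.0], [1.0, 3.0, 2.0], [2.0, 1.0, 3.0]])
print(mean_ranks, round(chi2, 4))
```

In the toy data, the first method has the lowest mean rank (4/3) and would therefore be listed first, in the same way that HHMV heads the rankings above.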

Stability of the HHMV on various dimensions size

This section investigates the stability of HHMV on the classical benchmark functions with different dimension sizes (Dim = 50, 100, and 500). Tables 5, 6, and 7 show the results of the tested optimization methods on thirteen benchmark functions (F1–F13) for Dim = 50, 100, and 500, respectively. As shown in Table 5, HHMV outperformed the conventional HHO and obtained better results than the other tested methods when Dim is fixed to 50. The Friedman test shows that HHMV is the best of the compared methods. Table 6 shows the results when the dimension size is fixed to 100.

In this case, HHMV again outperformed the conventional HHO and achieved more reliable results than the other tested methods. The Friedman test also confirms the competence of HHMV in addressing high-dimensional benchmark functions, with HHMV ranking first among all the tested methods. Finally, Table 7 presents the comparisons when Dim is fixed to 500. On these high-dimensional problems, HHMV is the best of the tested methods and ranks first according to the Friedman test. In general, the standard deviation (STD) results show that HHMV produces values with low dispersion, which indicates good stability. From this part of the experiments, we conclude that HHMV clearly outperforms all the comparative methods.

Convergence analysis using classical benchmark functions

This section presents the convergence behavior of the tested optimization algorithms on the 23 classical benchmark functions. Figure 4 compares HHMV, the conventional HHO, and the other comparative methods in terms of how quickly the best-obtained results converge over the iterations. The dimension size of the problems in this figure is fixed to 10.

Figure 4 shows that HHMV outperforms all the other comparative algorithms in convergence rate, with better convergence curves toward the optimal solution. HHMV has the fastest convergence rate and reaches the best solution early in the search process on F1, F2, F3, F4, F5, F6, F7, F8, F9, F10, F11, F12, F13, F14, F16, F18, F21, F22, and F23. HHMV avoids being trapped in local optima, whereas several other algorithms get trapped. For example, on F1, GOA, SSA, ALO, MPA, WOA, and PSO all stagnate in local optima. On F3, GOA, SSA, WOA, SCA, DA, PSO, ALO, MPA, and EO stagnate in local optima. The same problem is clearly visible on F2 and F8. To recap, HHMV performs better in terms of stability, convergence speed, and accuracy on all the tested functions. Furthermore, the proposed HHMV version of HHO balances the search mechanisms and avoids local optima.

CEC2019 benchmark functions

In this section, HHMV is further investigated to demonstrate its ability to solve different optimization problems. The CEC2019 benchmark functions are complex mathematical optimization problems that are widely used to validate the performance of new algorithms. Table 8 shows the details of the CEC2019 benchmark functions. All tested algorithms use the parameter settings given in their original papers. Several related works have used these benchmark functions (Mohammed & Rashid, 2020; Rahman & Rashid, 2021).

Performance evaluation using CEC2019 benchmark functions

In this section, ten CEC2019 benchmark functions with fixed dimensions are used to assess the performance of HHMV. HHMV is compared with other well-established optimization methods in terms of the worst, average, and best fitness values. For fairness, all optimization methods are run under the same test conditions.

Table 9 gives the results of the tested optimization methods. HHMV obtained excellent results in almost all test cases. It outperformed the original HHO and performed promisingly compared with the other methods published in the literature. According to the Friedman test, HHMV ranked first, followed by MPA, ALO, SSA, EO, HHO, GOA, GWO, PSO, WOA, SCA, and DA. Overall, the STD results on the CEC2019 functions show that HHMV obtained almost the same results across different runs. That is, its results have low dispersion, which means its performance is stable. We conclude that HHMV achieved excellent results. It accomplished the main aim of this research by avoiding local optima and achieving a proper balance between the search processes. The results of the Wilcoxon test show that HHMV outperformed all the comparative methods.

Convergence analysis using CEC2019 benchmark functions

Ten CEC2019 benchmark functions are used to compare convergence speed. The convergence curves of the tested problems are given in Fig. 5, which shows that HHMV converges much faster than the other well-known optimization algorithms. HHMV reached the best results quickly on F1, F4, F7, and F9, which means that it converges to the optimal area very fast while the other algorithms converge more slowly. To recap, HHMV significantly enhanced the search performance and solution precision and accelerated convergence. These features indicate that HHMV has a robust global search capability and an appropriate balance between the search mechanisms (i.e., exploration and exploitation).

HHMV for solving engineering design problems

In this section, five constrained real-world engineering design problems are employed to further validate the effectiveness of HHMV. The design problems include the tension/compression spring, pressure vessel, welded beam, three-bar truss, and speed reducer design problems (Abd Elaziz et al., 2021). These problems are widely used to demonstrate the ability of algorithms to solve different optimization problems (Singh et al., 2020; Sattar & Salim, 2020; Gupta et al., 2020; Wang et al., 2021). HHMV is compared with other methods. For HHMV, the population size and the number of iterations are 50 and 1000, respectively.

Formulations of constrained optimization problems

To test the efficacy of the proposed HHMV, both bound-constrained and generally constrained optimization problems are used in this study (Gandomi & Deb, 2020; Fesanghary et al., 2008). In a bound-constrained problem, each design variable is subject to a boundary constraint:

\[
LB_j \le x_{ij} \le UB_j, \quad j = 1, 2, \ldots, n,
\]

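where \(LB_j\) and \(UB_j\) are the lower and upper bounds of the position \(x_{ij}\) (the j-th variable of the i-th solution), and n is the number of decision variables. Furthermore, a general constrained problem is commonly expressed as:

\[
\min_{x}\; f(x) \quad \text{s.t.} \quad g_j(x) \le 0,\; j = 1, \ldots, m, \qquad h_k(x) = 0,\; k = 1, \ldots, l, \tag{19}
\]

where m is the number of inequality constraints and l is the number of equality constraints; the boundary constraints on the variables also apply.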

In evaluating HHMV, all the constrained problems in Eq. (19) are transformed into bound-constrained ones using a static penalty function: a penalty term is added to the objective function of every infeasible solution. The static penalty approach is chosen for its simplicity; it requires only a single auxiliary penalty function and is applicable to a wide range of problems (Rao, 2019; de Melo & Banzhaf, 2018). Using this procedure, the constrained optimization problem above can be expressed as follows:

where \(Pe_j\) and \(Pe_k\) are penalty coefficients, usually set to a large value, and \(\varepsilon \) is the tolerance for the equality constraints, which is set to 1e-6 in this paper.
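A minimal sketch of a static penalty follows. It assumes a commonly used form, \(F(x) = f(x) + \sum_{j=1}^{m} Pe_j \max(0, g_j(x)) + \sum_{k=1}^{l} Pe_k \max(0, |h_k(x)| - \varepsilon)\), with one coefficient shared by all inequality constraints and another shared by all equality constraints. The coefficient values and the helper names `static_penalty` and `clip_to_bounds` are hypothetical; clipping is one common way to enforce the boundary constraints and is not necessarily what HHMV does.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Constraint = Callable[[Vector], float]


def static_penalty(
    f: Callable[[Vector], float],
    ineq: Sequence[Constraint],      # each g_j must satisfy g_j(x) <= 0
    eq: Sequence[Constraint] = (),   # each h_k must satisfy h_k(x) == 0
    pe_ineq: float = 1e6,            # hypothetical shared value for every Pe_j
    pe_eq: float = 1e6,              # hypothetical shared value for every Pe_k
    eps: float = 1e-6,               # equality tolerance (1e-6, as in the text)
) -> Callable[[Vector], float]:
    """Return F(x): f(x) plus a fixed-weight penalty for every violated constraint."""

    def penalized(x: Vector) -> float:
        cost = f(x)
        # Only positive g_j(x), i.e. violations, are charged.
        cost += sum(pe_ineq * max(0.0, g(x)) for g in ineq)
        # Equality constraints are charged once |h_k(x)| exceeds eps.
        cost += sum(pe_eq * max(0.0, abs(h(x)) - eps) for h in eq)
        return cost

    return penalized


def clip_to_bounds(x: Vector, lb: Vector, ub: Vector) -> List[float]:
    """Enforce LB_j <= x_j <= UB_j by clipping each coordinate."""
    return [min(max(xj, lo), hi) for xj, lo, hi in zip(x, lb, ub)]
```

For example, with \(f(x) = x_0^2 + x_1^2\) and the single constraint \(g(x) = 1 - x_0 - x_1 \le 0\), `static_penalty(f, [g], pe_ineq=100.0)` returns 0.5 at the feasible point (0.5, 0.5) and 100.0 at the infeasible point (0, 0). Because the coefficients never change during the run, the penalty is "static"; adaptive schemes would instead update them over iterations.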

Tension/compression spring design problem

The tension/compression spring design problem aims to minimize the weight of the spring (Arora & Singh, 2019). It has three design variables: the wire diameter (d), the mean coil diameter (P), and the number of active coils (N), as shown in Fig. 6.

Table 10 compares HHMV with PSO, HS, MPM, GSA, ES, MFO, BA, WOA, MVO, RO, and HHO on this problem and lists the constraint values and optimal variables of the best solution found by each algorithm. As Table 10 shows, HHMV obtained better results than the other well-known optimization methods, with optimal variables \(x^*\) = (0.05863903230, 0.61201955543, 3.70245663034) and best objective value F(\(x^*\)) = 0.012000542. Figure 7 shows the objective values, the trajectory of the first solution, and the convergence curve of HHMV on the tension/compression spring design problem. According to the convergence curve, HHMV reached its best solution very quickly.
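To make the reported spring solution easy to check, the sketch below encodes the formulation commonly used in the literature: the objective \((N + 2) P d^2\) and four inequality constraints. We assume, without confirmation from the text, that this is the formulation behind Table 10. The variable name `p` follows the text's symbol P for the mean coil diameter (often written D elsewhere), and the function names are hypothetical.

```python
from typing import List, Sequence


def spring_weight(x: Sequence[float]) -> float:
    """Objective (N + 2) * P * d^2 for x = (d, P, N)."""
    d, p, n = x
    return (n + 2.0) * p * d ** 2


def spring_constraints(x: Sequence[float]) -> List[float]:
    """g_1..g_4 of the assumed common formulation; feasible when every value is <= 0."""
    d, p, n = x
    return [
        1.0 - (p ** 3 * n) / (71785.0 * d ** 4),                 # minimum deflection
        (4.0 * p ** 2 - d * p) / (12566.0 * (p * d ** 3 - d ** 4))
        + 1.0 / (5108.0 * d ** 2) - 1.0,                        # shear stress
        1.0 - 140.45 * d / (p ** 2 * n),                         # surge frequency
        (d + p) / 1.5 - 1.0,                                     # outside diameter
    ]
```

Evaluating `spring_weight` at the reported \(x^*\) gives approximately 0.0120005, consistent with F(\(x^*\)) above; `spring_constraints` can be evaluated at the same point to inspect each \(g_i\).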

Pressure vessel design problem

The pressure vessel design problem aims to minimize the total cost of a cylindrical pressure vessel (Long et al., 2019). It has four design variables: the thickness of the shell (\(T_s\)), the thickness of the head (\(T_h\)), the inner radius (R), and the length of the cylindrical section excluding the head (L), as shown in Fig. 8.

Table 11 compares HHMV with Branch-bound, WOA, OBSCA, GA, PSO-SCA, HPSO, CSCA, ES, MVO, GSA, HS, and ACO on this problem and lists the constraint values and optimal variables of the best solution found by each method. As Table 11 shows, HHMV outperformed all the comparative methods, with optimal variables \(x^*\) = (0.8149581, 0.4259851, 42.092054, 176.73998141) and best objective value F(\(x^*\)) = 6041.743. Figure 9 shows the objective values, the trajectory of the first solution, and the convergence curve of HHMV on the pressure vessel design problem. HHMV obtained the most suitable parameters and the minimum objective value.
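The pressure vessel problem can be sketched in its commonly used continuous form as below; we assume, without confirmation from the text, that it matches the formulation behind Table 11. Some formulations additionally restrict \(T_s\) and \(T_h\) to integer multiples of 0.0625, which this sketch does not enforce. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def vessel_cost(x: Sequence[float]) -> float:
    """Total cost for x = (Ts, Th, R, L) under the assumed common formulation."""
    ts, th, r, length = x
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)


def vessel_constraints(x: Sequence[float]) -> List[float]:
    """g_1..g_4 of the assumed formulation; feasible when every value is <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,                       # minimum shell thickness
        -th + 0.00954 * r,                      # minimum head thickness
        -math.pi * r ** 2 * length
        - (4.0 / 3.0) * math.pi * r ** 3
        + 1296000.0,                            # minimum enclosed volume
        length - 240.0,                         # maximum length
    ]
```

At the reported \(x^*\), `vessel_cost` returns about 6041.743 and all four constraint values are non-positive under this formulation.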

Welded beam design problem

The welded beam design problem aims to minimize the fabrication cost of a welded beam (Chen et al., 2020). It has four design variables: the thickness of the weld (h), the length of the clamped bar (l), the height of the bar (t), and the thickness of the bar (b), as shown in Fig. 10.

Table 12 compares HHMV with other well-known methods on this problem and lists the constraint values and optimal variables of the best solution found by each method. As Table 12 shows, HHMV obtained a promising result compared to the other methods, with optimal variables \(x^*\) = (0.1505226573, 3.2960453639, 9.0346909849, 0.2058176787) and best objective value F(\(x^*\)) = 1.6298. Figure 11 shows the objective values, the trajectory of the first solution, and the convergence curve of HHMV on the welded beam design problem. HHMV found suitable parameters and the best objective value.
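Assuming the standard cost function of the welded beam problem, the objective behind Table 12 can be sketched as follows. The shear-stress, bending-stress, buckling-load, and deflection constraints are omitted here because their constants differ between published formulations; the function name is hypothetical.

```python
from typing import Sequence


def welded_beam_cost(x: Sequence[float]) -> float:
    """Cost 1.10471*h^2*l + 0.04811*t*b*(14 + l) for x = (h, l, t, b)."""
    h, l, t, b = x
    weld_term = 1.10471 * h ** 2 * l           # cost associated with the weld
    bar_term = 0.04811 * t * b * (14.0 + l)    # cost of the bar; 14.0 is the beam length
    return weld_term + bar_term
```

At the reported \(x^*\), the function returns approximately 1.6298, matching F(\(x^*\)) above.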

Three-bar truss design problem

The three-bar truss design problem seeks to minimize the weight of a three-bar truss (Pathak & Srivastava, 2020). It has two design variables: the cross-sectional area of member 1 (\(A_1\)) and that of member 2 (\(A_2\)), as shown in Fig. 12.

Table 13 compares HHMV with other well-known methods on this problem and lists the constraint values and optimal variables of the best solution found by each method. As Table 13 shows, HHMV obtained results comparable to those of the other methods, with optimal variables \(x^*\) = (0.79368745, 0.39425474) and best objective value F(\(x^*\)) = 263.914185. Figure 13 shows the objective values, the trajectory of the first solution, and the convergence curve of HHMV on the three-bar truss design problem. According to the convergence curve, HHMV reached its best solution very quickly.
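The three-bar truss problem is small enough to write out in full. The sketch assumes the commonly used constants (member length l = 100 cm, load P = 2 kN/cm², allowable stress σ = 2 kN/cm²), which the text does not state; the constant and function names are hypothetical.

```python
import math
from typing import List, Sequence

LENGTH = 100.0  # assumed member length l (cm)
LOAD = 2.0      # assumed load P (kN/cm^2)
STRESS = 2.0    # assumed allowable stress sigma (kN/cm^2)


def truss_weight(a: Sequence[float]) -> float:
    """Objective (2*sqrt(2)*A1 + A2) * l for a = (A1, A2)."""
    a1, a2 = a
    return (2.0 * math.sqrt(2.0) * a1 + a2) * LENGTH


def truss_constraints(a: Sequence[float]) -> List[float]:
    """Stress constraints g_1..g_3; feasible when every value is <= 0."""
    a1, a2 = a
    denom = math.sqrt(2.0) * a1 ** 2 + 2.0 * a1 * a2
    return [
        (math.sqrt(2.0) * a1 + a2) / denom * LOAD - STRESS,
        a2 / denom * LOAD - STRESS,
        1.0 / (a1 + math.sqrt(2.0) * a2) * LOAD - STRESS,
    ]
```

At the reported \(x^*\), `truss_weight` returns about 263.91419; the first stress constraint is essentially active there, which is typical of the optimum of this problem.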

Speed reducer design problem

The speed reducer design problem seeks to minimize the total weight of a speed reducer (Truong et al., 2019). It has seven design variables: the face width (x1), the module of teeth (x2), the number of teeth on the pinion (x3), the length of shaft 1 between bearings (x4), the length of shaft 2 between bearings (x5), the diameter of shaft 1 (x6), and the diameter of shaft 2 (x7), as shown in Fig. 14.

Table 14 compares HHMV with other well-known methods on this problem and lists the constraint values and optimal variables of the best solution found by each method. As Table 14 shows, HHMV obtained results comparable to several methods and better than the others, with optimal variables \(x^*\) = (3.50381, 0.7, 17, 7.3, 7.72934, 3.356501, 5.286692) and best objective value F(\(x^*\)) = 2997.90388. Figure 15 shows the objective values, the trajectory of the first solution, and the convergence curve of HHMV on the speed reducer design problem. HHMV produced results equivalent to those of the other well-known methods and, according to the convergence curve in Fig. 15, reached its best solution at around iteration 200.
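Assuming the standard speed reducer objective from the literature, the weight can be sketched as follows; the eleven design constraints are omitted for brevity, and the function name is hypothetical.

```python
from typing import Sequence


def speed_reducer_weight(x: Sequence[float]) -> float:
    """Weight of the speed reducer for x = (x1, ..., x7) under the assumed formulation."""
    x1, x2, x3, x4, x5, x6, x7 = x
    # Term depending on face width, module, and number of pinion teeth.
    gear_term = 0.7854 * x1 * x2 ** 2 * (3.3333 * x3 ** 2 + 14.9334 * x3 - 43.0934)
    # Terms depending on the shaft diameters x6 and x7.
    shaft_diameter_terms = -1.508 * x1 * (x6 ** 2 + x7 ** 2) + 7.4777 * (x6 ** 3 + x7 ** 3)
    # Terms coupling shaft lengths (x4, x5) with shaft diameters.
    shaft_length_terms = 0.7854 * (x4 * x6 ** 2 + x5 * x7 ** 2)
    return gear_term + shaft_diameter_terms + shaft_length_terms
```

At the reported \(x^*\), the function returns approximately 2997.904, consistent with F(\(x^*\)) above.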

These results suggest that the improvements introduced in HHMV could also benefit other methods, which motivates applying the same enhancement strategy to other algorithms in the same category.

Conclusion and future works

In this paper, a new variant of the Harris Hawks Optimizer (HHO), named Improved Harris Hawks Optimizer (HHMV), is proposed; it hybridizes the conventional HHO with the Multi-Verse Optimizer (MVO). The main aim of HHMV is to improve the conventional HHO by maintaining the diversity of solutions during the search and by improving its convergence behavior.
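For reference, the iteration-dependent coefficients that govern the exploration/exploitation trade-off in the two parent algorithms are sketched below, following their definitions in the original HHO (Heidari et al., 2019) and MVO (Mirjalili et al., 2016) papers. In HHO, the escaping energy \(E = 2E_0(1 - t/T)\) with \(E_0 \in [-1, 1]\) selects exploration when \(|E| \ge 1\) and exploitation otherwise. In MVO, the wormhole existence probability (WEP) rises linearly from 0.2 to 1, while the travelling distance rate (TDR) decays from 1 to 0 with exponent parameter p = 6. The function names are hypothetical, and how HHMV combines these schedules is not reproduced here.

```python
def hho_escaping_energy(e0: float, t: int, max_iter: int) -> float:
    """HHO escaping energy E = 2*E0*(1 - t/T); E0 is drawn uniformly from [-1, 1]."""
    return 2.0 * e0 * (1.0 - t / max_iter)


def mvo_wep(t: int, max_iter: int, wep_min: float = 0.2, wep_max: float = 1.0) -> float:
    """MVO wormhole existence probability, increasing linearly from wep_min to wep_max."""
    return wep_min + t * (wep_max - wep_min) / max_iter


def mvo_tdr(t: int, max_iter: int, p: float = 6.0) -> float:
    """MVO travelling distance rate, decaying from 1 to 0; larger p favors earlier exploitation."""
    return 1.0 - t ** (1.0 / p) / max_iter ** (1.0 / p)
```

With T = 1000, for example, \(|E|\) can be as large as 2 at the start but shrinks to 0 by the last iteration, while WEP grows from 0.2 to 1 and TDR falls from 1 to 0; both algorithms therefore shift from broad search toward local refinement as iterations proceed.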

The performance of the proposed method was validated on thirteen classical and ten CEC2019 benchmark functions with various dimension sizes, and on five engineering design problems. The experimental results demonstrate that HHMV obtained better results than, and clearly outperformed, the other competitors, including Harris Hawks Optimization (HHO), Grasshopper Optimization Algorithm (GOA), Salp Swarm Algorithm (SSA), Whale Optimization Algorithm (WOA), Sine Cosine Algorithm (SCA), Dragonfly Algorithm (DA), Grey Wolf Optimizer (GWO), Particle Swarm Optimization (PSO), Ant Lion Optimizer (ALO), Marine Predators Algorithm (MPA), and Equilibrium Optimizer (EO). HHMV shows a stronger global search ability and more accurate solutions. Furthermore, on the engineering design problems, HHMV was competitive with, and in several cases better than, other well-known optimization methods in terms of the objective (cost) value. Owing to its promising performance, the proposed method can be used by other researchers to solve different optimization problems.

In future work, we intend to further investigate the ability of HHMV to solve other applications, such as nonlinear problems, machine learning, image segmentation, IoT task scheduling in cloud and fog computing, optimal power flow, and parameter tuning of neural networks. We will also study the influence of chaotic maps on the performance of HHMV.

References

Abasi, A. K., Khader, A. T., Al-Betar, M. A., Naim, S., Makhadmeh, S. N., & Alyasseri, Z. A. A. (2020). Link-based multi-verse optimizer for text documents clustering. Applied Soft Computing, 87, 106002.

Abd Elaziz, M., Elsheikh, A. H., Oliva, D., Abualigah, L., Lu, S., & Ewees, A. A. (2021). Advanced metaheuristic techniques for mechanical design problems. Archives of Computational Methods in Engineering, 29, 1–22.

Abd Elaziz, M., Ewees, A. A., Neggaz, N., Ibrahim, R. A., Al-qaness, M. A., & Lu, S. (2021). Cooperative meta-heuristic algorithms for global optimization problems. Expert Systems with Applications, 176, 114788.

Abd Elaziz, M., Oliva, D., Ewees, A. A., & Xiong, S. (2019). Multi-level thresholding-based grey scale image segmentation using multi-objective multi-verse optimizer. Expert Systems with Applications, 125, 112–129.

Abd Elaziz, M., Oliva, D., & Xiong, S. (2017). An improved opposition-based sine cosine algorithm for global optimization. Expert Systems with Applications, 90, 484–500.

Abualigah, L. (2020a). Group search optimizer: A nature-inspired meta-heuristic optimization algorithm with its results, variants, and applications. Neural Computing and Applications, 7, 1–24.

Abualigah, L. (2020b). Multi-verse optimizer algorithm: A comprehensive survey of its results, variants, and applications. Neural Computing and Applications, 32, 1–21.

Abualigah, L., Abd Elaziz, M., Sumari, P., Geem, Z. W., & Gandomi, A. H. (2021). Reptile search algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Systems with Applications, 191, 116158.

Abualigah, L., & Alkhrabsheh, M. (2021). Amended hybrid multi-verse optimizer with genetic algorithm for solving task scheduling problem in cloud computing. The Journal of Supercomputing, 78, 1–26.

Abualigah, L., & Diabat, A. (2021). Advances in sine cosine algorithm: A comprehensive survey. Artificial Intelligence Review, 54, 1–42.

Abualigah, L., Diabat, A., & Geem, Z. W. (2020). A comprehensive survey of the harmony search algorithm in clustering applications. Applied Sciences, 10(11), 3827.

Abualigah, L., Diabat, A., Mirjalili, S., Abd Elaziz, M., & Gandomi, A. H. (2021). The arithmetic optimization algorithm. Computer Methods in Applied Mechanics and Engineering, 376, 113609.

Abualigah, L., & Dulaimi, A. J. (2021). A novel feature selection method for data mining tasks using hybrid sine cosine algorithm and genetic algorithm. Cluster Computing, 24, 1–16.

Abualigah, L., Shehab, M., Alshinwan, M., Mirjalili, S., & Abd Elaziz, M. (2021). Ant lion optimizer: A comprehensive survey of its variants and applications. Archives of Computational Methods in Engineering, 28, 1397–1416.

Abualigah, L., Shehab, M., Diabat, A., & Abraham, A. (2020). Selection scheme sensitivity for a hybrid salp swarm algorithm: Analysis and applications. Engineering with Computers, 1–27.

Abualigah, L., Yousri, D., Abd Elaziz, M., Ewees, A. A., Al-qaness, M. A., & Gandomi, A. H. (2021). Aquila optimizer: A novel meta-heuristic optimization algorithm. Computers & Industrial Engineering, 157, 107250.

Abualigah, L. M., Khader, A. T., & Hanandeh, E. S. (2019). Modified krill herd algorithm for global numerical optimization problems. In S. Shandilya, S. Shandilya, & A. Nagar (Eds.), Advances in nature-inspired computing and applications (pp. 205–221). Springer.

Ali, E., El-Hameed, M., El-Fergany, A., & El-Arini, M. (2016). Parameter extraction of photovoltaic generating units using multi-verse optimizer. Sustainable Energy Technologies and Assessments, 17, 68–76.

Alsalibi, B., Abualigah, L., & Khader, A. T. (2020). A novel bat algorithm with dynamic membrane structure for optimization problems. Applied Intelligence, 51, 1–26.

Alshinwan, M., Abualigah, L., Shehab, M., Abd Elaziz, M., Khasawneh, A. M., Alabool, H., & Al Hamad, H. (2021). Dragonfly algorithm: A comprehensive survey of its results, variants, and applications. Multimedia Tools and Applications, 80, 1–38.

Altabeeb, A. M., Mohsen, A. M., Abualigah, L., & Ghallab, A. (2021). Solving capacitated vehicle routing problem using cooperative firefly algorithm. Applied Soft Computing, 108, 107403.

Arora, S., & Singh, S. (2019). Butterfly optimization algorithm: A novel approach for global optimization. Soft Computing, 23(3), 715–734.

Bao, X., Jia, H., & Lang, C. (2019). A novel hybrid Harris Hawks optimization for color image multilevel thresholding segmentation. IEEE Access, 7, 76529–76546.

Baykasoglu, A. (2012). Design optimization with chaos embedded great deluge algorithm. Applied Soft Computing, 12, 1055–1567.

Baykasoğlu, A., & Akpinar, Ş. (2015). Weighted superposition attraction (WSA): A swarm intelligence algorithm for optimization problems-part 2: Constrained optimization. Applied Soft Computing, 37, 396–415.

Baykasoğlu, A., & Ozsoydan, F. B. (2015). Adaptive firefly algorithm with chaos for mechanical design optimization problems. Applied Soft Computing, 36, 152–164.

Belegundu, A. D., & Arora, J. S. (1985). A study of mathematical programming methods for structural optimization. Part I: Theory. International Journal for Numerical Methods in Engineering, 21(9), 1583–1599.

Beyer, H.-G., & Schwefel, H.-P. (2002). Evolution strategies—A comprehensive introduction. Natural Computing, 1(1), 3–52.

Chen, H., Jiao, S., Wang, M., Heidari, A. A., & Zhao, X. (2020). Parameters identification of photovoltaic cells and modules using diversification-enriched Harris Hawks optimization with chaotic drifts. Journal of Cleaner Production, 244, 118778.

Chen, H., Wang, M., & Zhao, X. (2020). A multi-strategy enhanced sine cosine algorithm for global optimization and constrained practical engineering problems. Applied Mathematics and Computation, 369, 124872.

Cheng, H., Zhang, Y., & Li, F. (2009). Improved genetic programming algorithm. In International Asia symposium on intelligent interaction and affective computing, ASIA’09 (pp. 168–171). IEEE. https://doi.org/10.1109/ASIA.2009.39

Coello, C. A. C. (2000). Use of a self-adaptive penalty approach for engineering optimization problems. Computers in Industry, 41(2), 113–127.

Cuevas, E., Echavarría, A., & Ramírez-Ortegón, M. A. (2014). An optimization algorithm inspired by the states of matter that improves the balance between exploration and exploitation. Applied Intelligence, 40(2), 256–272.

Cully, A., & Demiris, Y. (2017). Quality and diversity optimization: A unifying modular framework. IEEE Transactions on Evolutionary Computation, 22(2), 245–259.

Czerniak, J. M., Zarzycki, H., & Ewald, D. (2017). AAO as a new strategy in modeling and simulation of constructional problems optimization. Simulation Modelling Practice and Theory, 76, 22–33.

de Melo, V. V., & Banzhaf, W. (2018). Drone squadron optimization: A novel self-adaptive algorithm for global numerical optimization. Neural Computing and Applications, 30(10), 3117–3144.

Deb, K. (1991). Optimal design of a welded beam via genetic algorithms. AIAA Journal, 29(11), 2013–2015.

Dhou, K. (2020). A new chain coding mechanism for compression stimulated by a virtual environment of a predator–prey ecosystem. Future Generation Computer Systems, 102, 650–669.

Dhou, K., & Cruzen, C. (2020). A new chain code for bi-level image compression using an agent-based model of echolocation in dolphins. In 2020 IEEE 6th international conference on dependability in sensor, cloud and big data systems and application (DependSys) (pp. 87–91). IEEE.

Dhou, K., & Cruzen, C. (2021). A highly efficient chain code for compression using an agent-based modeling simulation of territories in biological beavers. Future Generation Computer Systems, 118, 1–13.

Digalakis, J. G., & Margaritis, K. G. (2001). On benchmarking functions for genetic algorithms. International Journal of Computer Mathematics, 77(4), 481–506.

Eberhart, R., & Kennedy, J. (1995). Particle swarm optimization. In Proceedings of the IEEE international conference on neural networks (Vol. 4, pp. 1942–1948). IEEE.

Eid, A., Kamel, S., & Abualigah, L. (2021). Marine predators algorithm for optimal allocation of active and reactive power resources in distribution networks. Neural Computing and Applications, 33, 1–29.

El Aziz, M. A., Ewees, A. A., & Hassanien, A. E. (2016). Hybrid swarms optimization based image segmentation. In S. Bhattacharyya, P. Dutta, S. De, & G. Klepac (Eds.), Hybrid soft computing for image segmentation (pp. 1–21). Springer.

Elaziz, M. A., Oliva, D., & Xiong, S. (2017). An improved opposition-based sine cosine algorithm for global optimization. Expert Systems with Applications, 90, 484–500.

Essa, F., Abd Elaziz, M., & Elsheikh, A. H. (2020). An enhanced productivity prediction model of active solar still using artificial neural network and Harris Hawks optimizer. Applied Thermal Engineering, 170, 115020.

Ewees, A. A., Abd El Aziz, M., & Hassanien, A. E. (2019). Chaotic multi-verse optimizer-based feature selection. Neural Computing and Applications, 31(4), 991–1006.

Ewees, A. A., Elaziz, M. A., & Houssein, E. H. (2018). Improved grasshopper optimization algorithm using opposition-based learning. Expert Systems with Applications, 112, 156–172.

Faramarzi, A., Heidarinejad, M., Mirjalili, S., & Gandomi, A. H. (2020). Marine predators algorithm: A nature-inspired metaheuristic. Expert Systems with Applications, 152, 113377.

Faramarzi, A., Heidarinejad, M., Stephens, B., & Mirjalili, S. (2020). Equilibrium optimizer: A novel optimization algorithm. Knowledge-Based Systems, 191, 105190.

Faris, H., Hassonah, M. A., Ala’M, A.-Z., Mirjalili, S., & Aljarah, I. (2018). A multi-verse optimizer approach for feature selection and optimizing SVM parameters based on a robust system architecture. Neural Computing and Applications, 30(8), 2355–2369.

Fathy, A., & Rezk, H. (2018). Multi-verse optimizer for identifying the optimal parameters of PEMFC model. Energy, 143, 634–644.

Fesanghary, M., Mahdavi, M., Minary-Jolandan, M., & Alizadeh, Y. (2008). Hybridizing harmony search algorithm with sequential quadratic programming for engineering optimization problems. Computer Methods in Applied Mechanics and Engineering, 197(33–40), 3080–3091.

Gandomi, A. H., & Deb, K. (2020). Implicit constraints handling for efficient search of feasible solutions. Computer Methods in Applied Mechanics and Engineering, 363, 112917.

Gandomi, A. H., Yang, X.-S., & Alavi, A. H. (2013). Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Engineering with Computers, 29(1), 17–35.

Gandomi, A. H., Yang, X.-S., Alavi, A. H., & Talatahari, S. (2013). Bat algorithm for constrained optimization tasks. Neural Computing and Applications, 22(6), 1239–1255.

Golilarz, N. A., Gao, H., & Demirel, H. (2019). Satellite image de-noising with Harris Hawks meta heuristic optimization algorithm and improved adaptive generalized gaussian distribution threshold function. IEEE Access, 7, 57459–57468.

Guedria, N. B. (2016). Improved accelerated PSO algorithm for mechanical engineering optimization problems. Applied Soft Computing, 40, 455–467.

Gupta, S., Deep, K., Moayedi, H., Foong, L. K., & Assad, A. (2020). Sine cosine grey wolf optimizer to solve engineering design problems. Engineering with Computers, 37, 1–27.

Han, S.-Y., Wan, X.-Y., Wang, L., Zhou, J., & Zhong, X.-F. (2016). Comparison between genetic algorithm and differential evolution algorithm applied to one dimensional bin-packing problem. In 2016 3rd International conference on informative and cybernetics for computational social systems (ICCSS) (pp. 52–55). IEEE.

Hassan, M. H., Kamel, S., Abualigah, L., & Eid, A. (2021). Development and application of slime mould algorithm for optimal economic emission dispatch. Expert Systems with Applications, 182, 115205.

Heidari, A. A., Mirjalili, S., Faris, H., Aljarah, I., Mafarja, M., & Chen, H. (2019). Harris hawks optimization: Algorithm and applications. Future Generation Computer Systems, 97, 849–872.

He, Q., & Wang, L. (2007). A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Applied Mathematics and Computation, 186(2), 1407–1422.

He, Q., & Wang, L. (2007). An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Engineering Applications of Artificial Intelligence, 20(1), 89–99.

Houssein, E. H., Hosney, M. E., Oliva, D., Mohamed, W. M., & Hassaballah, M. (2020). A novel hybrid Harris Hawks optimization and support vector machines for drug design and discovery. Computers & Chemical Engineering, 133, 106656.

Huang, F.-Z., Wang, L., & He, Q. (2007). An effective co-evolutionary differential evolution for constrained optimization. Applied Mathematics and Computation, 186(1), 340–356.

Hu, C., Li, Z., Zhou, T., Zhu, A., & Xu, C. (2016). A multi-verse optimizer with levy flights for numerical optimization and its application in test scheduling for network-on-chip. PLoS One, 11(12), e0167341.

Jiang, Y., Luo, Q., Wei, Y., Abualigah, L., et al. (2021). An efficient binary gradient-based optimizer for feature selection. Mathematical Biosciences and Engineering, 18(4), 3813–3854.

Kamboj, V. K., Nandi, A., Bhadoria, A., & Sehgal, S. (2020). An intensify Harris Hawks optimizer for numerical and engineering optimization problems. Applied Soft Computing, 89, 106018.

Karaboga, D. (2005). An idea based on honey bee swarm for numerical optimization (Technical Report TR06). Erciyes University, Engineering Faculty, Computer Engineering Department.

Kaveh, A., & Khayatazad, M. (2012). A new meta-heuristic method: ray optimization. Computers & Structures, 112, 283–294.

Kaveh, A., & Talatahari, S. (2010). An improved ant colony optimization for constrained engineering design problems. Engineering Computations, 27(1), 155–182.

Koziel, S., Leifsson, L., & Yang, X.-S. (2014). Solving computationally expensive engineering problems: Methods and applications (Vol. 97). Springer.

Krishnanand, K., & Ghose, D. (2005). Detection of multiple source locations using a glowworm metaphor with applications to collective robotics. In Proceedings 2005 IEEE swarm intelligence symposium, 2005. SIS 2005 (pp. 84–91). IEEE.

Lee, K. S., & Geem, Z. W. (2005). A new meta-heuristic algorithm for continuous engineering optimization: harmony search theory and practice. Computer Methods in Applied Mechanics and Engineering, 194(36–38), 3902–3933.

Liu, H., Cai, Z., & Wang, Y. (2010). Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Applied Soft Computing, 10(2), 629–640.

Long, W., Wu, T., Liang, X., & Xu, S. (2019). Solving high-dimensional global optimization problems using an improved sine cosine algorithm. Expert Systems with Applications, 123, 108–126.

Mack, G. A., & Skillings, J. H. (1980). A Friedman-type rank test for main effects in a two-factor ANOVA. Journal of the American Statistical Association, 75(372), 947–951.

Mahdavi, M., Fesanghary, M., & Damangir, E. (2007). An improved harmony search algorithm for solving optimization problems. Applied Mathematics and Computation, 188(2), 1567–1579.

Mezura-Montes, E., & Coello, C. A. C. (2008). An empirical study about the usefulness of evolution strategies to solve constrained optimization problems. International Journal of General Systems, 37(4), 443–473.

Mirjalili, S. (2015). The ant lion optimizer. Advances in Engineering Software, 83, 80–98.

Mirjalili, S. (2015). Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowledge-Based Systems, 89, 228–249.

Mirjalili, S. (2016). SCA: A sine cosine algorithm for solving optimization problems. Knowledge-Based Systems, 96, 120–133.

Mirjalili, S. (2016). Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Computing and Applications, 27(4), 1053–1073.

Mirjalili, S., Gandomi, A. H., Mirjalili, S. Z., Saremi, S., Faris, H., & Mirjalili, S. M. (2017). Salp swarm algorithm: A bio-inspired optimizer for engineering design problems. Advances in Engineering Software, 114, 163–191.

Mirjalili, S., & Lewis, A. (2016). The whale optimization algorithm. Advances in Engineering Software, 95, 51–67.

Mirjalili, S., Mirjalili, S. M., & Hatamlou, A. (2016). Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Computing and Applications, 27(2), 495–513.

Mirjalili, S., Mirjalili, S. M., & Lewis, A. (2014). Grey wolf optimizer. Advances in Engineering Software, 69, 46–61.

Moayedi, H., Gör, M., Lyu, Z., & Bui, D. T. (2020). Herding behaviors of grasshopper and Harris Hawk for hybridizing the neural network in predicting the soil compression coefficient. Measurement, 152, 107389.

Moayedi, H., Osouli, A., Nguyen, H., & Rashid, A. S. A. (2019). A novel Harris Hawks’ optimization and k-fold cross-validation predicting slope stability. Engineering with Computers. https://doi.org/10.1007/s00366-019-00828-8.

Mohammed, H., & Rashid, T. (2020). A novel hybrid GWO with WOA for global numerical optimization and solving pressure vessel design. Neural Computing and Applications, 32, 1–18.

Pan, W.-T. (2012). A new fruit fly optimization algorithm: taking the financial distress model as an example. Knowledge-Based Systems, 26, 69–74.

Pathak, V. K., & Srivastava, A. K. (2020). A novel upgraded bat algorithm based on Cuckoo search and Sugeno inertia weight for large scale and constrained engineering design optimization problems. Engineering with Computers, 1–28.

Premkumar, M., Jangir, P., Kumar, B. S., Sowmya, R., Alhelou, H. H., Abualigah, L., Yildiz, A. R., & Mirjalili, S. (2021). A new arithmetic optimization algorithm for solving real-world multiobjective CEC-2021 constrained optimization problems: Diversity analysis and validations. IEEE Access.

Ragsdell, K., & Phillips, D. (1976). Optimal design of a class of welded structures using geometric programming. Journal of Engineering for Industry, 98, 1021–1025.

Rahman, C. M., & Rashid, T. A. (2021). A new evolutionary algorithm: Learner performance based behavior algorithm. Egyptian Informatics Journal, 22, 213–223.

Rao, S. (2019). Engineering optimization: Theory and practice. Wiley.

Rashedi, E., Nezamabadi-Pour, H., & Saryazdi, S. (2009). GSA: A gravitational search algorithm. Information Sciences, 179(13), 2232–2248.

Ray, T., & Saini, P. (2001). Engineering design optimization using a swarm with an intelligent information sharing among individuals. Engineering Optimization, 33(6), 735–748.

Ridha, H. M., Heidari, A. A., Wang, M., & Chen, H. (2020). Boosted mutation-based Harris Hawks optimizer for parameters identification of single-diode solar cell models. Energy Conversion and Management, 209, 112660.

Sadollah, A., Bahreininejad, A., Eskandar, H., & Hamdi, M. (2013). Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Applied Soft Computing, 13(5), 2592–2612.

Sadollah, A., Sayyaadi, H., & Yadav, A. (2018). A dynamic metaheuristic optimization model inspired by biological nervous systems: Neural network algorithm. Applied Soft Computing, 71, 747–782.

Şahin, C. B., Dinler, Ö. B., & Abualigah, L. (2021). Prediction of software vulnerability based deep symbiotic genetic algorithms: Phenotyping of dominant-features. Applied Intelligence, 51, 1–17.

Sandgren, E. (1990). Nonlinear integer and discrete programming in mechanical design optimization. Journal of Mechanical Design, 112(2), 223–229.

Saremi, S., Mirjalili, S., & Lewis, A. (2017). Grasshopper optimisation algorithm: Theory and application. Advances in Engineering Software, 105, 30–47.

Sarker, R. A., Elsayed, S. M., & Ray, T. (2014). Differential evolution with dynamic parameters selection for optimization problems. IEEE Transactions on Evolutionary Computation, 18(5), 689–707.

Sattar, D., & Salim, R. (2020). A smart metaheuristic algorithm for solving engineering problems. Engineering with Computers, 37, 1–29.

Shehab, M., Abualigah, L., Al Hamad, H., Alabool, H., Alshinwan, M., & Khasawneh, A. M. (2020). Moth–flame optimization algorithm: Variants and applications. Neural Computing and Applications, 32(14), 9859–9884.

Shehab, M., Alshawabkah, H., Abualigah, L., & Nagham, A.-M. (2020). Enhanced a hybrid moth-flame optimization algorithm using new selection schemes. Engineering with Computers, 37, 1–26.

Shukri, S., Faris, H., Aljarah, I., Mirjalili, S., & Abraham, A. (2018). Evolutionary static and dynamic clustering algorithms based on multi-verse optimizer. Engineering Applications of Artificial Intelligence, 72, 54–66.

Singh, N., Chiclana, F., Magnot, J.-P., et al. (2020). A new fusion of salp swarm with sine cosine for optimization of non-linear functions. Engineering with Computers, 36(1), 185–212.

Truong, K. H., Nallagownden, P., Baharudin, Z., & Vo, D. N. (2019). A quasi-oppositional-chaotic symbiotic organisms search algorithm for global optimization problems. Applied Soft Computing, 77, 567–583.

Tsai, J.-F. (2005). Global optimization of nonlinear fractional programming problems in engineering design. Engineering Optimization, 37(4), 399–409.

Wang, S., Liu, Q., Liu, Y., Jia, H., Abualigah, L., Zheng, R., & Wu, D. (2021). A hybrid SSA and SMA with mutation opposition-based learning for constrained engineering problems. Computational Intelligence and Neuroscience.

Wang, Z., Luo, Q., & Zhou, Y. (2021). Hybrid metaheuristic algorithm using butterfly and flower pollination base on mutualism mechanism for global optimization problems. Engineering with Computers, 37, 3665–3698.

Wang, X., Pan, J.-S., & Chu, S.-C. (2020). A parallel multi-verse optimizer for application in multilevel image segmentation. IEEE Access, 8, 32018–32030.

Xu, M., You, X., & Liu, S. (2017). A novel heuristic communication heterogeneous dual population ant colony optimization algorithm. IEEE Access, 5, 18506–18515.

Yang, X.-S. (2008). Nature-inspired metaheuristic algorithms. Luniver Press.

Yao, X., Liu, Y., & Lin, G. (1999). Evolutionary programming made faster. IEEE Transactions on Evolutionary Computation, 3(2), 82–102.

Yi, J., Huang, D., Fu, S., He, H., & Li, T. (2016). Multi-objective bacterial foraging optimization algorithm based on parallel cell entropy for aluminum electrolysis production process. IEEE Transactions on Industrial Electronics, 63(4), 2488–2500. https://doi.org/10.1109/TIE.2015.2510977.

Yousri, D., Abd Elaziz, M., Abualigah, L., Oliva, D., Al-Qaness, M. A., & Ewees, A. A. (2021). Covid-19 x-ray images classification based on enhanced fractional-order cuckoo search optimizer using heavy-tailed distributions. Applied Soft Computing, 101, 107052.

Yousri, D., Allam, D., & Eteiba, M. B. (2020). Optimal photovoltaic array reconfiguration for alleviating the partial shading influence based on a modified Harris Hawks optimizer. Energy Conversion and Management, 206, 112470.

Zhang, M., Luo, W., & Wang, X. (2008). Differential evolution with dynamic stochastic selection for constrained optimization. Information Sciences, 178(15), 3043–3074.

Zheng, R., Jia, H., Abualigah, L., Liu, Q., & Wang, S. (2021). Deep ensemble of slime mold algorithm and arithmetic optimization algorithm for global optimization. Processes, 9(10), 1774.


Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.


About this article

Cite this article

Abualigah, L., Diabat, A., Svetinovic, D. et al. Boosted Harris Hawks gravitational force algorithm for global optimization and industrial engineering problems. J Intell Manuf 34, 2693–2728 (2023). https://doi.org/10.1007/s10845-022-01921-4


DOI: https://doi.org/10.1007/s10845-022-01921-4