Abstract

Blasting has been widely recognized as an economical and viable method in geo-engineering projects. However, the induced ground vibration in terms of peak particle velocity (PPV) potentially can damage the nearby environment and inhabitants. Therefore, more accurate prediction of the PPV can lead to reduce undesirable and hazardous effects of blasting. With the increase in the computational power, wide variety of predictive PPV models using numerical tools and data mining approaches have been presented. In this paper, the optimum predictive PPV model was specified using generalized feedforward neural network (GFFN) structure integrated with a novel automated intelligent setting parameter approach. Subsequently, two new optimized hybrid models using GFFN incorporated with firefly and imperialist competitive metaheuristic algorithms (FMA and ICA) were developed and applied on 78 monitored events in Alvand–Qoly mine, Iran. According to analyzed metrics, the predictability level of hybrid GFFN-FMA dedicated 6.67% and 20% progress than GFFN-ICA and optimum GFFN. The pursued performance using precision–recall curves and ranked accuracy criteria also exhibited superior improvement in GFFN-FMA. Sensitivity analyses implied on the importance of the distance and burden as the most and least effective factors on predicted induced PPV in the study area.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

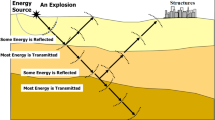

Blasting as a powerful and fast but cost-effective tool widely have been applied to accomplish rock handling in civil (e.g. dam, road, tunnel), mining (e.g. underground, open pit, quarries) and construction purposes [1,2,3]. However, the industry experts and independent analysts believe that most of the blast-produced energy is wasted due to ground vibration and partial of this loss may then cause for fly-rock debris, air blast, back break, and seismic waves [1, 4,5,6]. Moreover, other possible induced blasting dangers (e.g. block size, environmental and utilities impacts, local disruptions, and premature detonation/misfires) are underestimated. The peak particle velocity (PPV) as an indicator index of undesirable ground vibration measurements is used to control the structural damage criteria and decrease the possible risk of blasting on environmental complaints [1, 7,8,9,10]. Therefore, providing predictive PPV-based models for assessing the effect of induced ground vibration is great of interest.

Traditionally, the PPV is estimated by empirical–statistical predictors [11,12,13,14,15,16]. However, such predictors due to incorporating of only limited numbers of influential parameters are not consistent and almost represent different scores of accuracies [7, 17, 18]. Despite presented updates using other related parameters [19,20,21], the PPV models in wide expanded range of data cannot simulate the process efficiently and thus provide unreliable predictions [1, 6, 22].

With parallel of progress in numerical simulation tools, soft computing-based techniques also have been applied to develop PPV predictive models. In literatures, capability of artificial neural network (ANNs) [1, 9, 10, 23], ANFIS and fuzzy logic [22, 24,25,26], support vector machine [27,28,29], and hybrid intelligent models [2, 30,31,32] in producing more precise results than other conventional or regression analyses have been highlighted.

Hybridized architectures aim to combine and optimize different knowledge schemes and learning strategies to solve a computational task [33, 34]. In this perspective, the metaheuristic algorithms (MAs) due to flexibility, effective dealing with complex constraints, problem-independent strategies, and user-defined special conditions [35, 36] have contributed to large number of new systems designs to overcome on limitations of individual models. Therefore, integrating the ANNs-MAs can provide innovative intelligent computational frameworks. Such computational intelligence incorporations have shown remarkable progress in predictability level of developed PPV models [26, 30, 32, 37,38,39,40,41]. Almost in all these literatures, the multilayer perceptrons (MLPs) is the core of hybridizing. Dense MLPs lead to high variance and thus slow training that is deemed insufficient to converge to a solution for modern advanced computer vision tasks. Moreover, large numbers of total parameters corresponding to characteristic of fully connected layers should be adjusted that can make redundancy in such high dimensions. However, if proper internal characteristics are set, the MLPs can approximate any input/output map.

Owing to the lack of a unified framework, comparative performance and conceptual analysis of various hybrid models often have been remained difficult. These issues motives for developing novel hybridized models using other subclass of ANNs.

In this paper, an optimum PPV-predictive model using generalized feedforward neural network (GFFN) structure integrated with a novel automated intelligent setting parameter approach is presented. The model then was hybridized with two prominent swarm-intelligence MAs including firefly and imperialist competitive algorithms (FMA and ICA). Applying the GFFN enhances the computing power, while automating process tunes the optimal hyper parameters. This implies that the performance of optimized FMA and ICA in different size of the search spaces are investigated. The adopted models were applied on 78 compiled datasets of a quarry in west of Iran (Table 1). Compared predictability and accuracy level using different metrics demonstrated superior performance in hybrid GFFN-FMA than GFFN-ICA and optimum GFFN. The importance of the used components then also was identified using sensitivity and weight analyses.

2 Hybridizing and optimization

Optimization techniques lead to more efficient and cost-effective procedures to find an optimal solution among various iteratively compared responses. The classical methods lead to a set of nonlinear simultaneous equations that may be difficult to solve, while the computing capacities can be enhanced by utilizing the MAs and intelligent computer-aided activities [36]. This implies that combinatorial incorporations of ANNs-MAs can capture the feasible solution with less computational effort than traditional approaches [33, 34, 36, 42]. As presented in Fig. 1, the MAs are categorized in practical-oriented branches of optimization techniques. However, an algorithm may not exactly fit into each category. It can be a mixed type or hybrid, which uses some combination of deterministic components with randomness, or combines one algorithm with another to design more efficient system.

Therefore, in the design of hybrid architectures, the incorporation and interaction of applied techniques are more important than merging different methods to create ever-new techniques [34, 42].

2.1 FMA

Referring to Fig. 1, the FMA is a swarm population-based stochastic intelligence method inspired by the flashing behavior of fireflies [43] with approved efficiency in solving the hardest global and local optimization problems [44]. This algorithm (Fig. 2) is formulated using introduced parameters in Table 1 which depend on the problem should appropriately be tuned.

The FMA aims to optimize the I (Table 1) as the objective function. Accordingly, better fireflies have smaller error and thus higher intensity. As presented in Table 1, I and β for each firefly are functions of distance coordinate in which γ plays crucial role on the convergence speed. This parameter in most optimizing problems typically varies within [0.1–10] interval (Table 1).

According to level of I, the optimal solution in population is found through the individual fitness function (FT) for any binary combination of fireflies using:

where αt varies within [0, 1] interval. The rand function corresponds to a random number of solutions I. sj is a solution with lower FT than si and (sj-si) represents the updated step size.

In each iteration, the FT is compared with the previous results to keep only one new solution [34]. Briefly, using FT the position of moved firefly i towards the brighter one (new solution) in the current population is evaluated using:

The third condition means that the FTbest (the lowest) is retained while others are discarded.

2.2 ICA

ICA is an evolutionary and robust optimization algorithm inspired by imperialist competitive through expanding power and political system [45]. Like other evolutionary algorithms, ICA also starts with a random initial ensemble of countries (Ncou) in which those with minimum cost are selected to be imperialists (Nimp) and the rests play the role as colonies (Ncol). The outcome of configured formulation in this algorithm is to eliminate the weakest empires through a competition process according to total power of an empire (Fig. 3). The more empire power, the more attracted colonies, and thus all the countries are converged to only one robust empire in the domain of the problem as the desired solution.

Similar to other evolutionary algorithms, the involved parameters in ICA (Table 2) should also properly be adjusted. Appropriate initial guess for these parameters (Table 2) can be set through the previous studies [33, 45,46,47].

The total power of nth empire (TCn) as summation of the power of imperialist and its attracted colonies is expressed by:

where ξ theoretically falls within [0, 1] interval. The total power is affected by imperialist power in small value of ξ and can be influenced by the mean power of colonies in large ξ value. Thus, usually the value of ξ is considered close to 0.

Accordingly, the competition process among the empires represents the possession probability of each empire (pn) based on its total power and is calculated using normalized total cost of empire as:

where TCn and NTCn denote the total and normalized cost of nth empire.

3 Layout of GFFN model

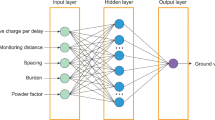

ANNs are simple simulation of human brain structure in learning the nonlinear models through the interconnected processing neurons. In the MLPs (Fig. 4A), as the main core of ANNs the result of mth neuron in output layer (Om) is expressed using:

where xi denotes the inputs. f and g are the applied activation function on the hidden and output layers. zj shows the output of jth neuron in hidden layer using assigned weights (wij). Accordingly, the weight of hidden to output layer is shown by wjk. bj, and bk are the biases for setting the threshold values.

As presented in Fig. 4B, replacing the perceptron with generalized shunting neuron (GSN) can provide considerable plausibility. The shunting model [48] due to spatial extent of the dendritic tree and receiving two inputs (one excitatory and one inhibitory) dedicates the GFFN (Fig. 4C) in which the connecting system can jump over one or more layers [49]. This ability allows neurons to operate as adaptive nonlinear filters and provide higher flexibility [33, 46, 49,50,51].

In GFFN, the input lines are rectified in a postsynaptic neuron in such way that excitatory input transmits the signals in preferred directions while in the null direction the response of the excitatory synapse is shunted by the simultaneous activation of the inhibitory synapse [49]. Therefore, in the same number of neurons, the GFFN due to using shunting inhibition and applied GSN not only often solve the problem much more efficiently than MLPs [33, 50, 52], but also can speed up the training procedure and enhance the computability level to save the memory. This characteristic then utilizes more freedom to select optimum topology and get higher resolution in complex nonlinear decision classifiers [1, 33, 49, 50]. To produce the output of jth neuron in hidden layer (zj), all input is summed and passed through activation function as:

where xi and xj show the inputs to the ith and jth neurons. wj0 and cj0 are bias constants. aj is a positive constant represents the passive decay rate of the neuron and bj reflects the output bias. wji and cji express the connection weight from the ith inputs to the jth neuron, where cji refers to “shunting inhibitory” connection weight.

The network error (E) of the kth output neuron in tth iteration in terms of the actual (tk) and predicted values (\({o}_{k}^{t}\)) then is defined as:

To reduce the error between the desired and actual outputs, the weights are optimized using an updating procedure for (t + 1)th pattern subjected to:

where η is the learning rate.

4 Case study and acquired datasets

In the current study, a number 78 monitored PPV from the Alvand–Qoly quarry at 5 km distance from Bijar town in Kurdistan province of Iran (Fig. 5) was used. This mine with 124 million tones of deposit in an area of 15.93km2 is the resource of limestone for Kurdistan cement industry. The PPV values have been recorded during the blasting of benches with 13.5 m depth and 1 m subdrill, where the distances between located geophones to shot point vary between 241 and 1500 m. The statistical description of employed data is given in Table 3. These data were normalized within [0, 1] interval and then randomized into 55%, 25%, and 20% to generate the training, testing, and validation sets.

5 Configuring the hybrid predictive models

The learning algorithm of ANNs in weight space intends to minimize the output error and converge to a locally optimal solution. However, there is no guarantee in finding a global solution. This implies that adjustment of internal characteristics (e.g., number of neurons, activation function, learning rate, and layer organization) to capture an appropriate network size is a difficult task, where there is no unified accepted method [51, 53].

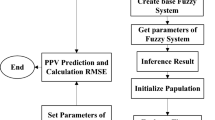

As presented in Fig. 6, in this paper an automated setting parameter procedure using a trial–error method was designed to find the optimum topology of predictive GFFN model. The proposed parameter setting approach aims to capture the optimal of one-dimensional array including several items, such as the number of epochs, learning rate, training algorithm, the number of neurons in hidden layers, and activation functions. Using the defined iterative procedure, the best performance of each produced topology after three runs is then evaluated using error metrics to represent the quality of found solution. The results then are reported back to the training algorithm to construct new topology. This procedure then was incorporated to FMA and ICA to investigate possible improvement in prediction process. To minimize the risk of getting trapped in local minima, overfitting, or early convergence, two internal loops was embedded to provide high flexibility in monitoring different training algorithms and activation functions. In this process, five training algorithms (QP quick propagation, CGD conjugate gradient descent, QN quasi-Newton, L–M Levenberg–Marquardt, MO momentum) and three activation functions (log logistic, hyt hyperbolic tangent, lin Linear) were used. The number of neurons as user defined parameter then can be arranged in diverse topologies even in similar structures, but different internal characteristics. Here, the possibilities of 16 neurons in maximum tow hidden layers were investigated. Obviously, by changing the number of neurons or defining more hidden layers, the procedure is able to capture much more topologies. Using 16 neurons, the system automatically will capture a large number of topologies (e.g., 6-16-1, 6–1-15–1, 6-2-14–1… 6-7-9-1… 6-15-1-1). Each topology then is tested by one of the training algorithms and one activation function. Accordingly, after checking all structures, it will be switched to another algorithm and subsequently activation function. This corresponds to monitoring of similar topologies subjected to different internal characteristics. All tested topologies are saved in a temporary query to be ranked using root mean square error (RMSE) and R2 to select the best optimum model. This procedure was programmed with C + + .

To decrease the number of variables, value of 0.7 for learning rate was set for all implemented algorithms and the step sizes of hidden layers were changed in domain of [1.0–0.001]. The sum of squares and network root mean square error (RMSE) as well as number of epochs were also employed as output errors function and termination criteria, respectively. The priority of termination is to satisfy the RMSE and if not achieved then the number of epochs will use. Here the number of epochs was set for 500. As a result of carried out efforts, the minimum observed RMSE against the number of neurons subjected to different training algorithm and activation functions were reflected in Fig. 7A. The results of examined structures to find the optimum topology subjected to MO and Thy then was reflected in Fig. 7B. A brief summary of other training algorithms and corresponding optimum topologies is given in Table 4.

The required parameters of ICA (Table 1) were obtained through a series of parametric analyses. Referring to the previous studies, the values of 2, π/4, and 0.02 were managed as the values for β, θ, and ζ, respectively (Abbaszadeh Shahri et al., 2020a). To capture the optimal of Ncou, Nimp, and Ndec, 12 hybrid models subjected to optimum GFFN (Table 4) were trained. Using analyzed R2 and RMSE, the values of 150, 15, and 250 were assigned to Ncou, Nimp, and Ndec, respectively (Fig. 8A–E). In case of FMA (Fig. 5), the pointed parameters in Table 2 should be tuned. This process is executed using gen-counter parameter (t) that calculates the new values for α through the function Δ = 1–10−4/0.91/max Gen and α(t+1) = 1-Δ. α(t), where Δ determines the step size of changing parameter α(t+1) and descends with the increasing of t. The required parameters for FMA then were captured through series analyses using RMSE and R2. Accordingly, values of 1, 0.2, 0.05, 0.2, and 0.5 corresponding to γ, β, Δ, α, and β0 can be selected as the most appropriate parameters to adjust FMA (Fig. 8F–H). Referring to convergence history, ICA in the number of 150 and FMA in 40 populations can optimize the GFFN model (Fig. 9). Subsequently, calculated residuals and also comparison between measured and predicted values were executed and plotted in Fig. 10.

6 Discussion and validation

Identifying the system confusing for different classes and improved performance of generated models can be quantified and evaluated using confusion matrix [54]. The established confusion matrixes for the validation datasets of GFFN, GFFN-ICA, and GFFN-FMA were presented in Table 5. The similar process for test and train data was carried out to determine the correct classification rate (CCR) and classification error (CE) [33, 46, 50] as reflected in Table 6. According to observed results, GFFN-FMA shows 6.67% and 20% improvement regarding to GFFN-ICA and GFFN, respectively.

The performance analyses of the models using mean absolute percentage error (MAPE), variance account for (VAF), RMSE, index of agreement (IA), and R2 criteria for validation datasets were reflected and ranked in Table 7.

To check or visualize the performance of the multiclass problem at various thresholds settings, the area under the curve of receiver-operating characteristics (AUCROC) can be employed. The ROC is a probability curve showing the performance of a model at all classification thresholds and AUC represents capability of model in distinguishing between classes. Referring to ROC, the precision–recall is a useful tool to reflect the success of prediction when the classes are very imbalanced. In information retrieval, precision is a measure of result relevancy, while recall reflects the returned numbers of truly relevant. Therefore, this curve displays the relevant and corresponding number of truly predicted results. Accordingly, the curves of different models then can directly be compared for different thresholds to get the full picture of evaluating. In Fig. 11A, the AUC of precision–recall for hybrid GFFN-FMA is 2.5% and 12.5% more than GFFN-ICA and GFFN. This improvement demonstrates higher accuracy in predicted outputs of GFFN-FMA. The comparison between measured and predicted values and corresponding calculated residuals were also presented in Fig. 11B and C.

Sensitivity analyses techniques as a what-if simulation for determining the effect of inputs on particular output is especially useful tool in black box processes where the output is an opaque function of several inputs [55, 56]. As presented in Eq. 12, the importance of input parameters using the cosine amplitude and partial derivative (PaD) were presented in Fig. 12.

where Okp and xip are output and input values for pattern P, and SSDi is the sum of the squares of the partial derivatives, respectively.

Both applied sensitivity analysis methods identified the distance and total charge as the most and burden as the least effective factors on PPV.

7 Conclusion and remarks

To control and mitigate the effects of blasting on nearby vicinities, developing more accurate PPV predictive models is of great importance. In this study, two optimum hybridized structures using GFFN incorporated to FMA and ICA were presented. The optimum GFFN topology was tuned through an automated parameter setting procedure subjected to 78 monitored datasets of blasting events in Alvand–Qoly mine, Kurdistan Province-Iran. To increase the efficiency of hybrid structures, the corresponding internal variables of FMA and ICA optimally were adjusted using parametric analyses. The results of optimized hybrid architectures proved to be more accurate than the only GFFN.

Referring to conducted RMSE-iteration curves subjected to different number of populations, the higher tendency for convergence in FMA than ICA led to 11.37% and 10.42% improvements in hybrid GFFN-FMA and GFFN-ICA than GFFN. Accordingly, the R2 value of GFFN form 0.90 was updated to 0.97 (GFFN-FMA) and 0.96 (GFFN-ICA). The results of CCR showed 93.75% success for GFFN-FMA while it was decreased to 87.5% and 75% in GFFN-ICA and GFFN, respectively. Pursued accuracy performance and ranked statistical error criteria exhibited relative superiority of the GFFN-FMA than GFFN-ICA. However, the differences were not significant. The calculated AUCROC as an index of model skill demonstrated for 2.3% and 12.5% improving in predictability level of the GFFN-FMA than other models. Using different sensitivity analyses techniques, the distance, total charge, and the burden were recognized as the most and least effective factors on predicted PPV. The study showed that implementing of the FMA and ICA not only can significantly improve the robustness and performance of the GFFN model, but also provide more flexible and reliable tool for the purpose of PPV prediction. It was observed that for the current study FMA is more applicable than ICA.

Abbreviations

- GFFN:

-

Generalized feed forward neural network

- FMA:

-

Firefly metaheuristic algorithm

- ICA:

-

Imperialistic competitive metaheuristic algorithm

- PPV:

-

Peak particle velocity

- MAs:

-

Metaheuristic algorithms

- FT:

-

Fitness function

- ANN:

-

Artificial neural network

- MLP:

-

Multilayer perceptron

- GSN:

-

Generalized shunting neuron

- TA:

-

Training algorithm

- AF:

-

Activation function

- QP:

-

Quick propagation

- CGD:

-

Conjugate gradient descent

- QN:

-

Quasi-Newton

- L–M:

-

Levenberg–Marquardt

- MO:

-

Momentum

- Log:

-

Logistic

- Hyt:

-

Hyperbolic tangent

- Lin:

-

Linear

- AUC:

-

The area under the curve

- ROC:

-

Receiver-operating characteristics

References

Abbaszadeh Shahri A, Asheghi R (2018) Optimized developed artificial neural network based models to predict the blast-induced ground vibration. Innov Infrastruct Solut 3:34. https://doi.org/10.1007/s41062-018-0137-4

Sołtys A, Twardosz M, Winzer J (2017) Control and documentation studies of the impact of blasting on buildings in the surroundings of open pit mines. J Sustain Min 16(4):179–188. https://doi.org/10.1016/j.jsm.2017.12.004

Tripathy GR, Shirke RR, Kudale MD (2016) Safety of engineered structures against blast vibrations: a case study. J Rock Mech Geotech Eng 8(2):248–255. https://doi.org/10.1016/j.jrmge.2015.10.007

Ak H, Iphar M, Yavuz M, Konuk A (2009) Evaluation of ground vibration effect of blasting operations in a magnesite mine. Soil Dyn Earthq Eng 29(4):669–676. https://doi.org/10.1016/j.soildyn.2008.07.003

Taheri K, Hasanipanah M, Bagheri Golzar S, Abd Majid MZ (2016) A hybrid artificial bee colony algorithm-artificial neural network for forecasting the blast-produced ground vibration. Eng Comput. https://doi.org/10.1007/s00366-016-0497-3

Verma AK, Maheshwar S (2014) Comparative study of intelligent prediction models for pressure wave velocity. J Geosci Geomatic 2(3):130–138. https://doi.org/10.12691/jgg-2-3-9

ISRM (1992) Suggested method for blast vibration monitoring. Int J Rock Mech Min Sci Geomech Abst 29(2):145–146. https://doi.org/10.1016/0148-9062(92)92124-U

Kahriman A (2002) Analysis of ground vibrations caused by bench blasting at can open-pit lignite mine in Turkey. Environ Earth Sci 41:653–661. https://doi.org/10.1007/s00254-001-0446-2

Rajabi AM, Vafaee A (2019) Prediction of blast-induced ground vibration using empirical models and artificial neural network (Bakhtiari Dam access tunnel, as a case study). J Vib Control 26(7–8):520–531. https://doi.org/10.1177/1077546319889844

Xue X, Yang X (2014) Predicting blast-induced ground vibration using general regression neural network. J Vib Control 20(10):1512–1519. https://doi.org/10.1177/1077546312474680

Ambraseys NR, Hendron AJ (1968) Dynamic behavior of rock masses: rock mechanics in engineering practices. Wiley, London

Davies B, Farmer IW, Attewell PB (1964) Ground vibrations from shallow sub-surface blasts. Engineer 217:553–559

Duvall WI, Petkof B (1959) Spherical propagation of explosion of generated strain pulses in rocks. USBM, RI-5483.

Langefors U, Kihlstrom B (1963) The modern technique of rock blasting. Wiley, New York

Nicholls HR, Johnson CF, Duvall WI (1971) Blasting vibrations and their effects on structures. United States Department of Interior, USBM, Bulletin, p 656

Roy PP (1993) Putting ground vibration predictors into practice. Coll Guard 241:63–67

Dowding CH (1985) Blast vibration monitoring and control. Prentice-Hall Inc, Englewood’s Cliffs

Hagan TN (1973) Rock breakage by explosives. In Proceedings of the national symposium on rock fragmentation, Adelaide, 1–17.

Hudaverdi T (2012) Application of multivariate analysis for prediction of blast-induced ground vibrations. Soil Dyn Earthq Eng 43:300–308. https://doi.org/10.1016/j.soildyn.2012.08.002

Radojica L, Kostić S, Pantović R, Vasović N (2014) Prediction of blast-produced ground motion in a copper mine. Int J Rock Mech Min Sci 69:19–25. https://doi.org/10.1016/j.ijrmms.2014.03.002

Verma AK, Singh TN (2011) Intelligent systems for ground vibration measurement: a comparative study. Eng Comput 27(3):225–233. https://doi.org/10.1007/s00366-010-0193-7

Mohamed MT (2011) Performance of fuzzy logic and artificial neural network in prediction of ground and air vibrations. Int J Rock Mech Min Sci 48:845–851

Lawal AI, Idris MA (2020) An artificial neural network-based mathematical model for the prediction of blast-induced ground vibrations. Int J Environ Stud 77(2):318–334. https://doi.org/10.1080/00207233.2019.1662186

Iphar M, Yavuz M, Ak H (2008) Prediction of ground vibrations resulting from the blasting operations in an open-pit mine by adaptive neurofuzzy inference system. Environ Geol 56:97–107. https://doi.org/10.1007/s00254-007-1143-6

Xue X (2019) Neuro-fuzzy based approach for prediction of blast-induced ground vibration. Appl Acoust 152:73–78. https://doi.org/10.1016/j.apacoust.2019.03.023

Yang H, Hasanipanah M, Tahir MM, Tien Bui D (2020) Intelligent prediction of blasting-induced ground vibration using ANFIS optimized by GA and PSO. Nat Resour Res 29:739–750. https://doi.org/10.1007/s11053-019-09515-3

Dindarloo SR (2015) Peak particle velocity prediction using support vector machines: a surface blasting case study. J South Afr Inst Min Metall 115(7):637–643. https://doi.org/10.17159/2411-9717/2015/V115N7A10

Khandelwal M (2011) Blast-induced ground vibration prediction using support vector machine. Eng Comput 27(3):193–200. https://doi.org/10.1007/s00366-010-0190-x

Nguyen H, ChoiY BXN, Thoi TN (2020) Predicting blast-induced ground vibration in open-pit mines using vibration sensors and support vector regression-based optimization algorithms. Sensors 20(1):132. https://doi.org/10.3390/s20010132

Tian E, Zhang J, Tehrani MS, Surendar A, Ibatova AZ (2018) Development of GA-based models for simulating the ground vibration in mine blasting. Eng Comput 55:849–855. https://doi.org/10.1007/s00366-018-0635-1

Yu Z, Shi X, Zhou J, Chen X, Qiu X (2020) Effective assessment of blast-induced ground vibration using an optimized random forest model based on a Harris Hawks optimization algorithm. Appl Sci 10(4):1403. https://doi.org/10.3390/app10041403

Zhang X, Nguyen H, Bui X, Tran Q, Nguyen D, Bui DT, Moayedi H (2020) Novel soft computing model for predicting blast-induced ground vibration in open-pit mines based on particle swarm optimization and XGBoost. Nat Resour Res 29:711–721. https://doi.org/10.1007/s11053-019-09492-7

Abbaszadeh Shahri A, Asheghi R, Khorsand Zak M (2020) A hybridized intelligence model to improve the predictability level of strength index parameters of rocks. Neural Comput Appl. https://doi.org/10.1007/s00521-020-05223-9

Grosan C, Abraham A (2011) Hybrid intelligent systems. In: Intelligent systems. Intelligent systems reference library, vol 17, pp 423–450. Springer, Berlin Heidelberg, https://doi.org/10.1007/978-3-642-21004-4_17

Bekdaş G, Nigdeli SM, Kayabekir AE, Yang XS (2019) Optimization in civil engineering and metaheuristic algorithms: a review of state-of-the-art developments. In: Platt G, Yang XS, Silva Neto A (eds) Computational intelligence, optimization and inverse problems with applications in engineering. Springer, Cham, pp 111–137. https://doi.org/10.1007/978-3-319-96433-1_6

Bianchi L, Dorigo M, Gambardella LM, Gutjahr WJ (2009) A survey on metaheuristics for stochastic combinatorial optimization. Nat Comput 8(2):239–287. https://doi.org/10.1007/s11047-008-9098-4

Azimi Y, Khoshrou SH, Osanloo M (2019) Prediction of blast induced ground vibration (BIGV) of quarry mining using hybrid genetic algorithm optimized artificial neural network. Measurement 147:106874. https://doi.org/10.1016/j.measurement.2019.106874

Bui X, Jaroonpattanapong P, Nguyen H, Tran QH, Long NQ (2019) A novel hybrid model for predicting blast-induced ground vibration based on k-nearest neighbors and particle swarm optimization. Sci Rep 9:13971. https://doi.org/10.1038/s41598-019-50262-5

Faradonbeh RS, Monjezi M (2017) Prediction and minimization of blast-induced ground vibration using two robust meta-heuristic algorithms. Eng Comput 33:835–851. https://doi.org/10.1007/s00366-017-0501-6

Nguyen H, Drebenstedt C, Bui X, Bui DT (2020) Prediction of blast-induced ground vibration in an open-pit mine by a novel hybrid model based on clustering and artificial neural network. Nat Resour Res 29:691–709. https://doi.org/10.1007/s11053-019-09470-z

Shang Y, Nguyen H, Bui X, Tran Q, Moyaedi H (2020) A novel artificial intelligence approach to predict blast induced ground vibration in open-pit mines based on the firefly algorithm and artificial neural network. Nat Resour Res 29:723–737. https://doi.org/10.1007/s11053-019-09503-7

Agrawal A, Gans J, Goldfarb A (2018) Prediction machines: the simple economics of artificial intelligence. Harvard Business Press, Boston

Yang XS (2008) Nature-inspired metaheuristic algorithms. Luniver Press

Yang XS (2013) Multiobjective firefly algorithm for continuous optimization. Eng Comput 29(2):175–184. https://doi.org/10.1007/s00366-012-0254-1

Atashpaz Gargari E, Lucas C (2007) Imperialist competitive algorithm: an algorithm for optimization inspired by imperialistic competition. Proc IEEE Congr Evol Comput. https://doi.org/10.1109/CEC.2007.4425083

Asheghi R, Abbaszadeh Shahri A, Khorsand Zak M (2019) Prediction of uniaxial compressive strength of different quarried rocks using metaheuristic algorithm. Arab J Sci Eng 44:8645–8659. https://doi.org/10.1007/s13369-019-04046-8

Atashpaz-Gargari E, Hashemzadeh F, Rajabioun R, Lucas C (2008) Colonial competitive algorithm, a novel approach for PID controller design in MIMO distillation column process. Int J Intell Comput Cybern 1(3):337–355. https://doi.org/10.1108/17563780810893446

Furman GG (1965) Comparison of models for subtractive and shunting lateral-inhibition in receptor-neuron fields. Kybernetik 2:257–274. https://doi.org/10.1007/BF00274089

Arulampalam G, Bouzerdoum A (2003) Expanding the structure of shunting inhibitory artificial neural network classifiers. IJCNN IEEE. https://doi.org/10.1109/IJCNN.2002.1007601

Abbaszadeh Shahri A, Renkel C, Larsson S (2020) Artificial intelligence models to generate visualize bed rock level—a case study in Sweden. Model Earth Syst Environ 6:1509–1528. https://doi.org/10.1007/s40808-020-00767-0

Ghaderi A, Abbaszadeh Shahri A, Larsson S (2018) An artificial neural network based model to predict spatial soil type distribution using piezocone penetration test data (CPTu). Bull Eng Geol Env 78:4579–4588. https://doi.org/10.1007/s10064-018-1400-9

Vida I, Bartos M, Jonas P (2006) Shunting inhibition improves robustness of gamma oscillations in hippocampal interneuron networks by homogenizing firing rates. Neuron 49:107–117. https://doi.org/10.1016/j.neuron.2005.11.036

Abbaszadeh Shahri A (2016) Assessment and prediction of liquefaction potential using different artificial neural network models: a case study. Geotech Geol Eng 34:807–815. https://doi.org/10.1007/s10706-016-0004-z

Stehman S (1997) Selecting and interpreting measures of thematic classification accuracy. Remote Sens Environ 62(1):77–89. https://doi.org/10.1016/S0034-4257(97)00083-7

Asheghi R, Hosseini SA, Sanei M, Abbaszadeh Shahri A (2020) Updating the neural network sediment load models using different sensitivity analysis methods: a regional application. J Hydroinf 22(3):562–577. https://doi.org/10.2166/hydro.2020.098

Saltelli A, Ratto M, Andres T, Campolongo F, Cariboni J, Gatelli D, Saisana M, Tarantola S (2008) Global sensitivity analysis: the primer. Wiley

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Abbaszadeh Shahri, A., Pashamohammadi, F., Asheghi, R. et al. Automated intelligent hybrid computing schemes to predict blasting induced ground vibration. Engineering with Computers 38 (Suppl 4), 3335–3349 (2022). https://doi.org/10.1007/s00366-021-01444-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00366-021-01444-1