Abstract

In this paper, we develop and study a stochastic predator–prey model with stage structure for the predator and a Holling type II functional response. First, by constructing a suitable stochastic Lyapunov function, we establish sufficient conditions for the existence and uniqueness of an ergodic stationary distribution of the positive solutions to the model. Then, we obtain sufficient conditions for the extinction of the predator populations in two cases: in the first, the prey population survives while the predator populations go extinct; in the second, both the prey and the predator populations go extinct. The existence of a stationary distribution implies stochastic weak stability. Numerical simulations are carried out to demonstrate the analytical results.



1 Introduction

Classical predator–prey models typically assume that all individual predators have the same capability to hunt prey. However, natural populations are more complex: while mature individuals can hunt for food by themselves, immature individuals are raised by their mature parents, and the rate at which they attack prey can be ignored. In particular, we have in mind mammalian populations and some amphibious animals, which exhibit these two stages. Recently, predator–prey models with stage structure for the predator have received much attention, and many authors have studied their dynamical behaviour (Freeman and Wu 1991; Wang and Chen 1997; Wang 1997; Wang et al. 2001; Xiao and Chen 2004; Deng et al. 2014; Al-Omari 2015; Xu and Ma 2008; Georgescu et al. 2010). These models typically exhibit richer dynamics and lead to a better understanding of the interactions within the biological system, while also incorporating important physiological parameters, such as different death rates for immature and mature predators.

Moreover, predator–prey models describe the interaction between predators and prey through a functional response. Holling functional responses are especially common because they are based on reasonable biological assumptions and do not allow the predators to grow arbitrarily fast when prey is abundant (Sahoo and Poria 2014). The Holling type II functional response is a concave, density-dependent function that leads to a saturation of prey consumption; it measures the average feeding rate of a predator that spends part of its time searching for prey and part of its time handling (processing) captured prey (Skalski and Gilliam 2001). It takes the form \(f(x)=\frac{bx}{1+mx}\), where b is the search rate and m is the search rate multiplied by the handling time. This type of functional response has been used extensively in biological models, such as chemostat models (Monod 1950; Novick and Szilard 1950) and epidemic models (Zhang and Teng 2008; Xiang et al. 2009).
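As a quick numerical illustration (a minimal sketch; the function name `holling_type_ii` is hypothetical, and the values \(b=0.8\), \(m=0.5\) are borrowed from the simulations in Sect. 6), the response grows almost linearly for small prey density and saturates at \(b/m\), which is the reciprocal of the handling time \(m/b\):

```python
def holling_type_ii(x: float, b: float, m: float) -> float:
    """Holling type II functional response f(x) = b*x / (1 + m*x)."""
    return b * x / (1.0 + m * x)


b, m = 0.8, 0.5               # illustrative search rate b and m = b * handling time
handling_time = m / b         # since m = b * h, the handling time is h = m / b
saturation = b / m            # f(x) -> b/m = 1/h as the prey density x -> infinity

for x in (0.0, 1.0, 10.0, 100.0, 1e6):
    print(f"f({x:g}) = {holling_type_ii(x, b, m):.6f}")
print(f"saturation level b/m = {saturation}")
```

The concavity visible in the printed values is what limits the predator's intake when prey is abundant.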

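A predator–prey model with stage structure for the predator and Holling type II functional response was developed by Wang and Chen (1997) and takes the form

\[\begin{aligned} \frac{\mathrm{d}x}{\mathrm{d}t}&=x(r-ax)-\frac{bxz}{1+mx},\\ \frac{\mathrm{d}y}{\mathrm{d}t}&=\frac{kbxz}{1+mx}-(D+d_{1})y,\\ \frac{\mathrm{d}z}{\mathrm{d}t}&=Dy-d_{2}z, \end{aligned}\tag{1.1}\]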

where \(x=x(t)\) denotes the density of prey at time t, and \(y=y(t)\) and \(z=z(t)\) denote the densities of immature and mature predators at time t, respectively. The parameters r, a, b, m, k, D, \(d_{1}\) and \(d_{2}\) are positive constants: r represents the intrinsic growth rate of the prey, a denotes the intraspecific competition rate of the prey, k \((k\le 1\) for biological reasons) is the coefficient for converting prey into new immature predators, \(d_{1}\) and \(d_{2}\) represent the death rates of immature and mature predators, respectively, and D denotes the rate at which immature predators become mature predators. By defining the basic reproduction number of the predator

\[R_{0}=\frac{kbDr}{d_{2}(a+mr)(D+d_{1})}\]

as the average number of offspring produced by a mature predator in its lifetime when introduced into a prey-only environment with prey at carrying capacity, they showed that if \(R_{0}\le 1\), then the prey-only equilibrium \(\left( \frac{r}{a},0,0\right) \) is globally asymptotically stable on \((0,\infty )^{3}\), while if \(R_{0}>1\), then the prey-only equilibrium \(\left( \frac{r}{a},0,0\right) \) is unstable and there is a unique positive equilibrium. These results can be found in the works (Georgescu and Hsieh 2006; Georgescu and Moroşanu 2006).
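The threshold \(R_{0}\) can be read as a product of three factors: the rate \(\frac{kbr}{a+mr}\) at which a mature predator produces immature offspring when prey is at its carrying capacity \(r/a\), the probability \(\frac{D}{D+d_{1}}\) that an immature predator survives to maturity, and the mean mature lifetime \(\frac{1}{d_{2}}\). A minimal sketch of this decomposition follows; the function name is hypothetical and the parameter values are those quoted for the simulations in Sect. 6.

```python
def basic_reproduction_number(r, a, b, m, k, D, d1, d2):
    """R0 = k*b*D*r / (d2*(a + m*r)*(D + d1)) for the deterministic model (1.1)."""
    prey_capacity = r / a                                            # prey-only equilibrium x = r/a
    offspring_rate = k * b * prey_capacity / (1 + m * prey_capacity)  # equals k*b*r/(a + m*r)
    maturation_prob = D / (D + d1)                                   # immature predator reaches maturity
    mature_lifetime = 1 / d2                                         # mean lifetime of a mature predator
    return offspring_rate * maturation_prob * mature_lifetime


TABLE1 = dict(r=0.6, a=0.1, b=0.8, m=0.5, k=0.8, D=0.4, d1=0.05, d2=0.06)
R0 = basic_reproduction_number(**TABLE1)
print(f"R0 = {R0:.4f}")   # R0 > 1 here, so the prey-only equilibrium (r/a, 0, 0) is unstable
```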

However, the parameters of a population system are not absolute constants; they fluctuate around some average values because of fluctuations in the environment (see e.g. Gard 1984, 1986), including events such as earthquakes, epidemics, tsunamis, malaria and dengue fever. Such events can be severe enough to change population sizes greatly within a short time, so they can be described as noise, and introducing environmental noise into the underlying population system is a natural way to describe these phenomena. May (2001) pointed out that, due to environmental fluctuations, birth rates, death rates, carrying capacities, competition coefficients and other parameters involved in the system exhibit random fluctuations to a greater or lesser extent; as a result, the equilibrium population distribution does not attain a steady value but fluctuates randomly around some average value (Ji and Jiang 2011). Hence, to fit reality better, many authors have introduced stochastic perturbations into deterministic models to reveal the effect of environmental variability on population dynamics in mathematical biology (Zhang et al. 2014; Zhang and Jiang 2015; Liu and Wang 2011). Taking a randomly fluctuating environment into account, Zhang et al. (2014) considered a predator–prey model with disease in the prey, discussed the asymptotic behaviour of its solution, and investigated whether the model admits a stationary distribution with the ergodic property. Zhang and Jiang (2015) discussed a stochastic Lotka–Volterra model with two predators competing for one prey and, via Markov semigroup theory, obtained sufficient conditions guaranteeing the principle of coexistence for this perturbed model. Furthermore, they proved that, under appropriate conditions, the densities of the distributions of the solutions either converge in \(L^{1}\) to an invariant density or converge weakly to a singular measure. Liu and Wang (2011) investigated a stochastic stage-structured predator–prey model with Beddington–DeAngelis functional response and gave sufficient conditions for the global asymptotic stability of the system. Motivated by these works, we study the dynamics of a stochastic predator–prey model with stage structure for the predator and Holling type II functional response.

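Since the intrinsic growth rate r and the death rates \(d_{i}\) \((i=1,2)\) are the most influential parameters in determining the fate of predators and prey, the literature has mainly focused on the effect of random perturbations on these parameters (Beddington and May 1977; Pang et al. 2008; Braumann 2008; Li and Mao 2009; Liu et al. 2011). Consequently, we follow the approach proposed by Liu et al. (2016) and perturb the parameters r, \(-d_{1}\) and \(-d_{2}\) by

\[r\rightarrow r+\sigma _{1}\dot{B}_{1}(t),\qquad -d_{1}\rightarrow -d_{1}+\sigma _{2}\dot{B}_{2}(t),\qquad -d_{2}\rightarrow -d_{2}+\sigma _{3}\dot{B}_{3}(t),\]

that is,

\[r\,\mathrm{d}t\rightarrow r\,\mathrm{d}t+\sigma _{1}\,\mathrm{d}B_{1}(t),\qquad -d_{1}\,\mathrm{d}t\rightarrow -d_{1}\,\mathrm{d}t+\sigma _{2}\,\mathrm{d}B_{2}(t),\qquad -d_{2}\,\mathrm{d}t\rightarrow -d_{2}\,\mathrm{d}t+\sigma _{3}\,\mathrm{d}B_{3}(t),\]

where \(B_{1}(t)\), \(B_{2}(t)\), \(B_{3}(t)\) are mutually independent standard Brownian motions with \(B_{1}(0)=B_{2}(0)=B_{3}(0)=0\), and \(\sigma _{1}^{2}\), \(\sigma _{2}^{2}\), \(\sigma _{3}^{2}\) are the intensities of the environmental white noise. Then, system (1.1) becomes

\[\begin{aligned} \mathrm{d}x&=\left[ x(r-ax)-\frac{bxz}{1+mx}\right] \mathrm{d}t+\sigma _{1}x\,\mathrm{d}B_{1}(t),\\ \mathrm{d}y&=\left[ \frac{kbxz}{1+mx}-(D+d_{1})y\right] \mathrm{d}t+\sigma _{2}y\,\mathrm{d}B_{2}(t),\\ \mathrm{d}z&=\left[ Dy-d_{2}z\right] \mathrm{d}t+\sigma _{3}z\,\mathrm{d}B_{3}(t). \end{aligned}\tag{1.2}\]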

Our paper is organized as follows. In Sect. 2, we give some known results, definitions and lemmas which will be used in the subsequent analysis. In Sect. 3, we prove the existence and uniqueness of the global positive solution of system (1.2). In Sect. 4, we establish sufficient conditions for the existence and uniqueness of an ergodic stationary distribution of the solutions to system (1.2). In Sect. 5, we obtain sufficient conditions for the extinction of the predator populations in two cases: in the first, the prey population survives while the predator populations go extinct; in the second, both the prey and the predator populations go extinct. In Sect. 6, numerical simulations are presented to illustrate the theoretical results. Finally, some concluding remarks and future directions are given. To make our proofs easier to follow, we present some auxiliary results in Appendices A, B and C.

Throughout this paper, unless otherwise specified, let \((\Omega ,\mathcal {F},\{\mathcal {F}_{t}\}_{t\ge 0},\mathbb {P})\) be a complete probability space with a filtration \(\{\mathcal {F}_{t}\}_{t\ge 0}\) satisfying the usual conditions (i.e. it is increasing and right continuous, while \(\mathcal {F}_{0}\) contains all \(\mathbb {P}\)-null sets), and let \(B_{i}(t)\), \(i=1,2,3\), be mutually independent standard Brownian motions defined on this probability space. Define \(\mathbb {R}_{+}^{d}=\{x\in \mathbb {R}^{d}:x_{i}>0,\ 1\le i\le d\}\).

2 Preliminaries

In this section, we shall present some known results, definitions and lemmas which will be used later. Firstly, we give some basic theory of stochastic differential equations (see Mao 1997 for a detailed introduction).

Consider the d-dimensional Markov process described by the stochastic differential equation

\[\mathrm{d}X(t)=f(X(t),t)\,\mathrm{d}t+g(X(t),t)\,\mathrm{d}B(t)\tag{2.1}\]

with the initial value \(X(0)=X_{0}\in \mathbb {R}^{d}\), where B(t) denotes a d-dimensional standard Brownian motion defined on the complete probability space \((\Omega ,\mathcal {F},\{\mathcal {F}_{t}\}_{t\ge 0},\mathbb {P})\). Denote by \(C^{2}(\mathbb {R}^{d};\overline{\mathbb {R}}_{+})\) the family of all nonnegative functions V(X) defined on \(\mathbb {R}^{d}\) that are twice continuously differentiable in X. The differential operator L of Eq. (2.1) is defined by (Mao 1997)

\[L=\frac{\partial }{\partial t}+\sum _{i=1}^{d}f_{i}(X,t)\frac{\partial }{\partial X_{i}}+\frac{1}{2}\sum _{i,j=1}^{d}\left[ g(X,t)g^{T}(X,t)\right] _{ij}\frac{\partial ^{2}}{\partial X_{i}\partial X_{j}}.\]

If L acts on a function \(V\in C^{2}(\mathbb {R}^{d};\overline{\mathbb {R}}_{+})\), then

\[LV(X)=V_{X}(X)f(X,t)+\frac{1}{2}\mathrm{trace}\left[ g^{T}(X,t)V_{XX}(X)g(X,t)\right] ,\]

where \(V_{X}=\left( \frac{\partial V}{\partial X_{1}},\ldots ,\frac{\partial V}{\partial X_{d}}\right) \), \(V_{XX}=\left( \frac{\partial ^{2}V}{\partial X_{i}\partial X_{j}}\right) _{d\times d}\). According to Itô’s formula (Mao 1997), if \(X(t)\in \mathbb {R}^{d}\), then

\[\mathrm{d}V(X(t))=LV(X(t))\,\mathrm{d}t+V_{X}(X(t))g(X(t),t)\,\mathrm{d}B(t).\]

Definition 2.1

(Has’minskii 1980) The transition probability function P(s, x, t, A) is said to be time homogeneous (and the corresponding Markov process is called time homogeneous) if the function \(P(s,x,t+s,A)\) is independent of s, where \(0\le s\le t\), \(x\in \mathbb {R}^{d}\) and \(A\in \mathcal {B}\) and \(\mathcal {B}\) denotes the \(\sigma \)-algebra of Borel sets in \(\mathbb {R}^{d}\).

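Let X(t) be a regular time-homogeneous Markov process in \(\mathbb {R}^{d}\) described by the stochastic differential equation

\[\mathrm{d}X(t)=f(X(t))\,\mathrm{d}t+\sum _{l=1}^{d}g_{l}(X(t))\,\mathrm{d}B_{l}(t).\]

The diffusion matrix of the process X(t) is defined as follows:

\[A(x)=\left( a_{ij}(x)\right) _{d\times d},\qquad a_{ij}(x)=\sum _{l=1}^{d}g_{l}^{i}(x)g_{l}^{j}(x).\]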

Lemma 2.1

(Has’minskii 1980) The Markov process X(t) has a unique ergodic stationary distribution \(\mu (\cdot )\) if there exists a bounded open domain \(U\subset \mathbb {R}^{d}\) with regular boundary \(\Gamma \), having the following properties:

\(A_{1}\) The diffusion matrix A(x) is strictly positive definite for all \(x\in U\).

\(A_{2}\) There exist a nonnegative \(C^{2}\)-function V and a constant \(C>0\) such that \(L(V)\le -C\) on \(\mathbb {R}^{d}\setminus U\).
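Condition \(A_{2}\) is verified by computing \(L(V)\). For a scalar equation \(\mathrm{d}X=f(X)\mathrm{d}t+g(X)\mathrm{d}B(t)\), the operator reduces to \(LV=V'(X)f(X)+\frac{1}{2}g(X)^{2}V''(X)\). The sketch below (hypothetical helper `generator`, central finite differences) checks this numerically for the logistic equation \(\mathrm{d}X=X(r-aX)\mathrm{d}t+\sigma _{1}X\mathrm{d}B_{1}(t)\) and the test function \(V(X)=X-1-\ln X\), for which \(LV=(X-1)(r-aX)+\frac{\sigma _{1}^{2}}{2}\) in closed form. This V is a common building block of Lyapunov functions but is chosen here only for illustration; it is not claimed to be one of the functions used in the proofs.

```python
import math


def generator(V, f, g, x, h=1e-4):
    """Finite-difference approximation of LV(x) = V'(x) f(x) + 0.5 g(x)^2 V''(x)."""
    dV = (V(x + h) - V(x - h)) / (2 * h)              # central first derivative
    d2V = (V(x + h) - 2 * V(x) + V(x - h)) / h ** 2   # central second derivative
    return dV * f(x) + 0.5 * g(x) ** 2 * d2V


r, a, sigma1 = 0.6, 0.1, 0.1                          # illustrative values
V = lambda x: x - 1 - math.log(x)
f = lambda x: x * (r - a * x)                         # drift of the logistic SDE
g = lambda x: sigma1 * x                              # multiplicative noise coefficient
closed_form = lambda x: (x - 1) * (r - a * x) + sigma1 ** 2 / 2

for x in (0.5, 1.0, 3.0, 6.0):
    print(f"x = {x}: finite difference {generator(V, f, g, x):.6f}, closed form {closed_form(x):.6f}")
```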

3 Existence and Uniqueness of the Global Positive Solution

Since x, y, z in system (1.2) denote population densities, they should be nonnegative. Therefore, before studying the long-term behaviour, we first show that system (1.2) has a unique global positive solution for any positive initial value. To this end, we establish the following theorem.

Theorem 3.1

For any initial value \((x(0),y(0),z(0))\in \mathbb {R}_{+}^{3}\), there exists a unique solution (x(t), y(t), z(t)) of system (1.2) on \(t\ge 0\) and the solution will remain in \(\mathbb {R}_{+}^{3}\) with probability one, namely \((x(t),y(t),z(t))\in \mathbb {R}_{+}^{3}\) for all \(t\ge 0\) almost surely (a.s.).

Proof

Since the coefficients of system (1.2) satisfy the local Lipschitz condition, for any initial value \((x(0),y(0),z(0))\in \mathbb {R}_{+}^{3}\) there exists a unique local solution \((x(t),y(t),z(t))\in \mathbb {R}_{+}^{3}\) on \(t\in [0,\tau _{e})\) a.s., where \(\tau _{e}\) denotes the explosion time (Mao 1997). Now we shall prove that this solution is global, i.e. that \(\tau _{e}=\infty \) a.s. To this end, let \(n_{0}\ge 1\) be sufficiently large such that x(0), y(0) and z(0) all lie within the interval \(\left[ \frac{1}{n_{0}},n_{0}\right] \). For each integer \(n\ge n_{0}\), define the stopping time (Mao 1997)

\[\tau _{n}=\inf \left\{ t\in [0,\tau _{e}):\min \{x(t),y(t),z(t)\}\le \frac{1}{n}\ \text{or}\ \max \{x(t),y(t),z(t)\}\ge n\right\} ,\]

where throughout this paper, we set \(\inf \emptyset =\infty \) (as usual \(\emptyset \) denotes the empty set). Clearly, \(\tau _{n}\) is increasing as \(n\rightarrow \infty \). Set \(\tau _{\infty }=\lim _{n\rightarrow \infty }\tau _{n}\), whence \(\tau _{\infty }\le \tau _{e}\) a.s. If we can verify that \(\tau _{\infty }=\infty \) a.s., then \(\tau _{e}=\infty \) and \((x(t),y(t),z(t))\in \mathbb {R}_{+}^{3}\) a.s. for all \(t\ge 0\). In other words, to complete the proof we only need to show that \(\tau _{\infty }=\infty \) a.s. If this assertion is not true, then there exists a pair of constants \(T>0\) and \(\epsilon \in (0,1)\) such that

\[\mathbb {P}\{\tau _{\infty }\le T\}>\epsilon .\]

Hence, there exists an integer \(n_{1}\ge n_{0}\) such that

\[\mathbb {P}\{\tau _{n}\le T\}\ge \epsilon \quad \text{for all } n\ge n_{1}.\tag{3.1}\]

Define a \(C^{2}\)-function \(V:\mathbb {R}_{+}^{3}\rightarrow \mathbb {R}_{+}\) by

where c is a positive constant to be determined later. The nonnegativity of this function can be seen from

Applying Itô’s formula to V, we have

where, according to the definition of the operator L,

where in the third inequality, we have used the fact that \(0<k\le 1\). Choosing \(c=\frac{d_{2}}{b}\), so that \(cb-d_{2}=0\), we obtain

where K is a positive constant. Hence, one can obtain that

Integrating both sides of (3.2) from 0 to \(\tau _{n}\wedge T=\min \{\tau _{n},T\}\) yields

where

are three local martingales.

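Since the solution (x(t), y(t), z(t)) of system (1.2) is \(\mathcal {F}_{t}\)-adapted and remains in \(\left[ \frac{1}{n},n\right] ^{3}\) up to time \(\tau _{n}\), the three local martingales stopped at \(\tau _{n}\wedge T\) have zero expectation. Taking the expectation on both sides of (3.3), we have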

Therefore,

Let \(\Omega _{n}=\{\omega \in \Omega :\tau _{n}=\tau _{n}(\omega )\le T\}\) for \(n\ge n_{1}\); by (3.1), we have \(\mathbb {P}(\Omega _{n})\ge \epsilon \). Note that for every \(\omega \in \Omega _{n}\), at least one of \(x(\tau _{n},\omega )\), \(y(\tau _{n},\omega )\) and \(z(\tau _{n},\omega )\) equals either n or \(\frac{1}{n}\). Hence, \(V(x(\tau _{n},\omega ),y(\tau _{n},\omega ),z(\tau _{n},\omega ))\) is no less than either

Consequently, one can get that

It follows from (3.4) that

where \(I_{\Omega _{n}}\) denotes the indicator function of \(\Omega _{n}\). Letting \(n\rightarrow \infty \), we obtain

which is a contradiction; hence, we must have \(\tau _{\infty }=\infty \) a.s. This completes the proof.

4 Existence of Ergodic Stationary Distribution

In this section, we shall establish sufficient conditions for the existence of a unique ergodic stationary distribution. We first give two lemmas which will be used later.

Lemma 4.1

If \(l_{1},l_{2},l_{3}>0\), then

and the equality holds if and only if \(l_{1}=l_{2}=l_{3}\).

Proof

Obviously, (4.1) is equivalent to the following inequality

Since

then the assertion in Lemma 4.1 is true. This completes the proof.

Lemma 4.2

For any \(\xi >0\), the following inequality holds

Proof

Since

then the assertion in Lemma 4.2 is true. This completes the proof.

Theorem 4.1

Assume that \(r>\frac{\sigma _{1}^{2}}{2}\) and \(R_{0}^{S}=\frac{kbDr}{(a+mr)\left( D+d_{1}+\frac{\sigma _{2}^{2}}{2}\right) \left( d_{2}+\frac{\sigma _{3}^{2}}{2}\right) }\left( 1-\frac{\sigma _{1}^{2}}{2r}\right) ^{3}>1\), then for any initial value \((x(0),y(0),z(0))\in \mathbb {R} _{+}^{3}\), system (1.2) has a unique ergodic stationary distribution \(\mu (\cdot )\).
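The threshold \(R_{0}^{S}\) in Theorem 4.1 is straightforward to evaluate. The sketch below (function name hypothetical; `s1`, `s2`, `s3` stand for \(\sigma _{1}^{2}\), \(\sigma _{2}^{2}\), \(\sigma _{3}^{2}\)) reproduces the value \(R_{0}^{S}\approx 7.3024\) used in Example 6.1, with the remaining parameter values quoted for the simulations in Sect. 6. Setting all noise intensities to zero recovers \(R_{0}\), which shows how the noise lowers the threshold.

```python
def stochastic_threshold(r, a, b, m, k, D, d1, d2, s1, s2, s3):
    """R_0^S of Theorem 4.1, where s1, s2, s3 denote sigma_1^2, sigma_2^2, sigma_3^2."""
    if r <= s1 / 2:
        raise ValueError("Theorem 4.1 also requires r > sigma_1^2 / 2")
    return (k * b * D * r
            / ((a + m * r) * (D + d1 + s2 / 2) * (d2 + s3 / 2))
            * (1 - s1 / (2 * r)) ** 3)


TABLE1 = dict(r=0.6, a=0.1, b=0.8, m=0.5, k=0.8, D=0.4, d1=0.05, d2=0.06)
R0S = stochastic_threshold(**TABLE1, s1=0.02, s2=0.1, s3=0.08)   # noise of Example 6.1
R0 = stochastic_threshold(**TABLE1, s1=0.0, s2=0.0, s3=0.0)      # no noise: R_0^S = R_0
print(f"R0^S = {R0S:.4f}, R0 = {R0:.4f}")
```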

Proof

In order to prove Theorem 4.1, it suffices to verify conditions \(A_{1}\) and \(A_{2}\) in Lemma 2.1. We first prove condition \(A_{1}\). The diffusion matrix of system (1.2) is given by

\[A=\begin{pmatrix} \sigma _{1}^{2}x^{2}&0&0\\ 0&\sigma _{2}^{2}y^{2}&0\\ 0&0&\sigma _{3}^{2}z^{2} \end{pmatrix}.\]

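Since x, y, z > 0 and \(\sigma _{1},\sigma _{2},\sigma _{3}>0\), the matrix A is positive definite at every point of \(\mathbb {R}_{+}^{3}\), and its smallest eigenvalue \(\min \{\sigma _{1}^{2}x^{2},\sigma _{2}^{2}y^{2},\sigma _{3}^{2}z^{2}\}\) is bounded away from zero on every compact subset of \(\mathbb {R}_{+}^{3}\); hence, condition \(A_{1}\) in Lemma 2.1 is satisfied.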

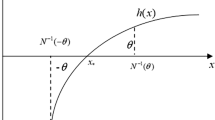

Now we verify Assumption \(A_{2}\) by constructing a \(C^{2}\)-function V(x, y, z) and an open bounded set U such that \(L(V)\le -1\) on \(\mathbb {R}_{+}^{3}\setminus U\). Define

where \(c_{1}\) and \(c_{2}\) are positive constants to be determined later and \(\theta >0\) is a sufficiently small constant satisfying \(3^{\theta }\theta <\min \left\{ \frac{d_{1}}{4\sigma _{\max }},\frac{d_{2}}{2\sigma _{\max }}\right\} \) and \(\sigma _{\max }:=\sigma _{1}^{2}\vee \sigma _{2}^{2}\vee \sigma _{3}^{2}=\max \left\{ \sigma _{1}^{2},\sigma _{2}^{2},\sigma _{3}^{2}\right\} \). Moreover, let

where M is a positive constant satisfying the following condition

and

Note that \(\tilde{V}(x,y,z)\) is continuous and tends to \(+\infty \) as (x, y, z) approaches the boundary of \(\mathbb {R}_{+}^{3}\) or as \(\Vert (x,y,z)\Vert \rightarrow \infty \), where \(\Vert \cdot \Vert \) denotes the Euclidean norm of a point in \(\mathbb {R}_{+}^{3}\). Therefore, \(\tilde{V}\) is bounded below and attains its minimum at a point \((x_{0},y_{0},z_{0})\) in the interior of \(\mathbb {R}_{+}^{3}\). Then, we define a nonnegative \(C^{2}\)-function \(V:\mathbb {R}_{+}^{3}\rightarrow \mathbb {R}_{+}\cup \{0\}\) as

It is shown in Appendix B that

where U is the bounded open set defined as

and \(0<\epsilon <1\) is a sufficiently small number. Consequently, condition \(A_{2}\) in Lemma 2.1 is satisfied and system (1.2) has a unique ergodic stationary distribution \(\mu (\cdot )\). This completes the proof.
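The proof above only requires \(\theta \) to be small enough that \(3^{\theta }\theta <\min \left\{ \frac{d_{1}}{4\sigma _{\max }},\frac{d_{2}}{2\sigma _{\max }}\right\} \). Because \(\theta \mapsto 3^{\theta }\theta \) is increasing on \((0,\infty )\), an admissible value can be found by bisection. The sketch below (hypothetical function name, noise intensities of Example 6.1) returns half of the bisection's lower endpoint, which satisfies the inequality strictly; any smaller positive \(\theta \) would work as well.

```python
def admissible_theta(d1, d2, s1, s2, s3, tol=1e-12):
    """Return (theta, bound) with 3**theta * theta < bound = min(d1/(4 smax), d2/(2 smax))."""
    smax = max(s1, s2, s3)                       # sigma_max = max{sigma_1^2, sigma_2^2, sigma_3^2}
    bound = min(d1 / (4 * smax), d2 / (2 * smax))
    h = lambda t: 3 ** t * t                     # increasing on (0, inf) with h(0) = 0
    lo, hi = 0.0, 1.0
    while h(hi) < bound:                         # enlarge the bracket if necessary
        hi *= 2
    while hi - lo > tol:                         # bisection on h(theta) = bound
        mid = (lo + hi) / 2
        if h(mid) < bound:
            lo = mid
        else:
            hi = mid
    return lo / 2, bound                         # any theta below the root is admissible


theta, bound = admissible_theta(d1=0.05, d2=0.06, s1=0.02, s2=0.1, s3=0.08)
print(f"theta = {theta:.6f}, 3^theta * theta = {3 ** theta * theta:.6f} < {bound}")
```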

5 Extinction of the Predator Populations

In this section, we establish sufficient conditions for the extinction of the predator populations in two cases: in the first, the prey population survives while the predator populations go extinct; in the second, both the prey and the predator populations go extinct. We establish the following two theorems.

Theorem 5.1

Let (x(t), y(t), z(t)) be the solution of system (1.2) with any initial value \((x(0),y(0),z(0))\in \mathbb {R}_{+}^{3}\). If \(r>\frac{\sigma _{1}^{2}}{2}\), then for almost every \(\omega \in \Omega \), we have

where \(\upsilon =\min \{D+d_{1},d_{2}\}(\sqrt{R_{0}}-1)I_{\{\sqrt{R_{0}}\le 1\}}+\max \{D+d_{1},d_{2}\}(\sqrt{R_{0}}-1)I_{\{\sqrt{R_{0}}>1\}}+\frac{kbD\sigma _{1}}{(a+mr)(D+d_{1})}\left( \frac{r}{2R_{0}}\right) ^{\frac{1}{2}}-\left( 2\left( \sigma _{2}^{-2}+\sigma _{3}^{-2}\right) \right) ^{-1}\) and \(R_{0}=\frac{kbDr}{d_{2}(a+mr)(D+d_{1})}\). In particular, if \(\upsilon <0\), then the predator populations y and z die out exponentially with probability one, i.e.

Furthermore, the distribution of x(t) converges weakly a.s. to the measure with density

\[\pi (x)=Q\sigma _{1}^{-2}x^{\frac{2r}{\sigma _{1}^{2}}-2}e^{-\frac{2a}{\sigma _{1}^{2}}x},\qquad x>0,\]

where \(Q=\bigg [\sigma _{1}^{-2}\left( \frac{\sigma _{1}^{2}}{2a}\right) ^{\frac{2r}{\sigma _{1}^{2}}-1}\Gamma \left( \frac{2r}{\sigma _{1}^{2}}-1\right) \bigg ]^{-1}\) is a constant satisfying \(\int _{0}^{\infty }\pi (x)\mathrm{d}x=1\). This statement means that if \(\mu _{t}(x)\) denotes the probability density of the prey population at time t, then for any (sufficiently smooth) function f(x),

Proof

Since the solution of system (1.2) is positive for any initial value \((x(0),y(0),z(0))\in \mathbb {R}_{+}^{3}\), we obtain

\[\mathrm{d}x(t)\le x(t)\left( r-ax(t)\right) \mathrm{d}t+\sigma _{1}x(t)\,\mathrm{d}B_{1}(t).\]

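Consider the following auxiliary one-dimensional stochastic differential equation

\[\mathrm{d}X(t)=X(t)\left( r-aX(t)\right) \mathrm{d}t+\sigma _{1}X(t)\,\mathrm{d}B_{1}(t)\tag{5.2}\]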

with the initial value \(X(0)=x(0)>0\).

Setting

we compute that

Hence,

Obviously, we obtain

Therefore, the condition of Theorem 1.16 in Kutoyants (2003) follows from (5.3), so system (5.2) has the ergodic property, and its invariant density is given by

\[\pi (x)=Q\sigma _{1}^{-2}x^{\frac{2r}{\sigma _{1}^{2}}-2}e^{-\frac{2a}{\sigma _{1}^{2}}x},\qquad x>0,\]

where \(Q=\bigg [\sigma _{1}^{-2}\left( \frac{\sigma _{1}^{2}}{2a}\right) ^{\frac{2r}{\sigma _{1}^{2}}-1}\Gamma \left( \frac{2r}{\sigma _{1}^{2}}-1\right) \bigg ]^{-1}\) is a constant satisfying \(\int _{0}^{\infty }\pi (x)\mathrm{d}x=1\).
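Written as \(\pi (x)=Q\sigma _{1}^{-2}x^{\frac{2r}{\sigma _{1}^{2}}-2}e^{-\frac{2a}{\sigma _{1}^{2}}x}\) with the constant Q above, the invariant density is that of a Gamma distribution with shape \(\frac{2r}{\sigma _{1}^{2}}-1\) and rate \(\frac{2a}{\sigma _{1}^{2}}\), so its mean is \(\left( r-\frac{\sigma _{1}^{2}}{2}\right) /a\). The sketch below (hypothetical names; \(r=0.6\), \(a=0.1\), \(\sigma _{1}^{2}=0.01\) as in Example 6.2) evaluates \(\pi \) in log form, which avoids overflow of the large power of x, and checks the normalization and the mean with a midpoint rule.

```python
import math


def log_pi(x, r, a, s1):
    """log of pi(x) = Q * s1**-1 * x**(2r/s1 - 2) * exp(-2a x/s1), where s1 = sigma_1^2."""
    c = 2 * r / s1 - 1                     # Gamma shape (positive because r > s1/2)
    beta = 2 * a / s1                      # Gamma rate
    log_Q = math.log(s1) - c * math.log(s1 / (2 * a)) - math.lgamma(c)
    return log_Q - math.log(s1) + (c - 1) * math.log(x) - beta * x


def midpoint(fun, lo, hi, n=100_000):
    """Composite midpoint rule on [lo, hi]."""
    step = (hi - lo) / n
    return step * sum(fun(lo + (i + 0.5) * step) for i in range(n))


r, a, s1 = 0.6, 0.1, 0.01
pdf = lambda x: math.exp(log_pi(x, r, a, s1))
mass = midpoint(pdf, 0.0, 20.0)
mean = midpoint(lambda x: x * pdf(x), 0.0, 20.0)
print(f"total mass = {mass:.8f}, mean = {mean:.6f}, (r - s1/2)/a = {(r - s1 / 2) / a:.6f}")
```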

Next, we prove statement (5.1). It suffices to verify conditions \(A_{1}\) and \(A_{2}\) in Lemma 2.1 for system (5.2). We first prove condition \(A_{1}\). The diffusion matrix of system (5.2) is \(A(X)=\sigma _{1}^{2}X^{2}\), which is positive for \(X>0\) and bounded away from zero on any compact subset of \(\mathbb {R}_{+}\), so condition \(A_{1}\) in Lemma 2.1 holds.

Now we prove the condition \(A_{2}\). To this end, let us define a \(C^{2}\)-function \(\bar{V}:\mathbb {R}_{+}\rightarrow \mathbb {R}\) as

where \(\tilde{M}\) is a positive constant satisfying the following condition

Note that \(\bar{V}(X)\) is continuous and tends to \(+\infty \) as X approaches the boundary of \(\mathbb {R}_{+}\) or as \(|X|\rightarrow \infty \), where \(|\cdot |\) denotes the absolute value. Thus, \(\bar{V}\) is bounded below and attains its minimum at a point \(X_{0}\) in the interior of \(\mathbb {R}_{+}\). Then, we can define a nonnegative \(C^{2}\)-function \(V:\mathbb {R}_{+}\rightarrow \mathbb {R}_{+}\cup \{0\}\) as

Applying Itô’s formula to (5.5) leads to

Define a bounded open set \(U_{\epsilon }\) as

where \(0<\epsilon <1\) is a sufficiently small number. In the set \(\mathbb {R}_{+}\setminus U_{\epsilon }\), we can choose \(\epsilon \) sufficiently small such that the following conditions hold

For convenience, we can divide \(U_{\epsilon }^{c}=\mathbb {R}_{+}\setminus U_{\epsilon }\) into two domains,

Obviously, \(U_{\epsilon }^{c}=U_{\epsilon }^{1}\cup U_{\epsilon }^{2}\). Next, we prove that \(L(V(X))\le -1\) for any \(X\in U_{\epsilon }^{c}\), which is equivalent to proving it on the above two domains, respectively.

Case 1 For any \(X\in U_{\epsilon }^{1}\), we have

which follows from (5.4) and (5.6). Therefore,

Case 2 For any \(X\in U_{\epsilon }^{2}\), we obtain

which follows from (5.7). Hence,

Clearly, from (5.8) and (5.9), we get that for a sufficiently small \(\epsilon \),

Consequently, condition \(A_{2}\) in Lemma 2.1 holds, and system (5.2) has a unique ergodic stationary distribution with density \(\pi (x)\). In view of Theorem 4.3 of Khasminskii (2012, p. 117), we immediately obtain statement (5.1).

Let X(t) be the solution of Eq. (5.2) with the initial value \(X(0)=x(0)>0\); then, by the comparison theorem for one-dimensional stochastic differential equations (Peng and Zhu 2006), we have \(x(t)\le X(t)\) for all \(t\ge 0\) a.s.

Moreover, let

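where \((\omega _{1},\omega _{2})=\left( \frac{D}{d_{2}},\sqrt{R_{0}}\right) \) and \(M_{0}=\begin{pmatrix} 0&\displaystyle \frac{kbr}{(a+mr)(D+d_{1})}\\ \displaystyle \frac{D}{d_{2}}&0 \end{pmatrix}\). Since the product of the two off-diagonal entries of \(M_{0}\) equals \(R_{0}\), the spectral radius of \(M_{0}\) is \(\sqrt{R_{0}}\), and \((\omega _{1},\omega _{2})M_{0}=\sqrt{R_{0}}(\omega _{1},\omega _{2})\), i.e. \((\omega _{1},\omega _{2})\) is a left eigenvector of \(M_{0}\) corresponding to \(\sqrt{R_{0}}\).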
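The vector \((\omega _{1},\omega _{2})=\left( \frac{D}{d_{2}},\sqrt{R_{0}}\right) \) is a left eigenvector of the \(2\times 2\) matrix \(M_{0}\) for the eigenvalue \(\sqrt{R_{0}}\): the eigenvalues of \(M_{0}\) are \(\pm \sqrt{pq}\), where p and q are its upper-right and lower-left entries, and \(pq=R_{0}\). A short numerical check with the parameter values of Sect. 6 (variable names are ours):

```python
import math

r, a, b, m, k, D, d1, d2 = 0.6, 0.1, 0.8, 0.5, 0.8, 0.4, 0.05, 0.06
p = k * b * r / ((a + m * r) * (D + d1))      # upper-right entry of M0
q = D / d2                                    # lower-left entry of M0
M0 = [[0.0, p], [q, 0.0]]
R0 = k * b * D * r / (d2 * (a + m * r) * (D + d1))
omega = [D / d2, math.sqrt(R0)]               # (omega_1, omega_2)

# Row vector times matrix: (omega M0)_j = sum_i omega_i * M0[i][j]
omega_M0 = [sum(omega[i] * M0[i][j] for i in range(2)) for j in range(2)]
print("omega M0       =", omega_M0)
print("sqrt(R0) omega =", [math.sqrt(R0) * w for w in omega])
print("spectral radius sqrt(p q) =", math.sqrt(p * q), "vs sqrt(R0) =", math.sqrt(R0))
```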

Define a \(C^{2}\)-function \(V:\mathbb {R}_{+}^{2}\rightarrow \mathbb {R}_{+}\) as

where \(\alpha _{1}=\frac{\omega _{1}}{D+d_{1}}\), \(\alpha _{2}=\frac{\omega _{2}}{d_{2}}\). Making use of Itô’s formula to differentiate \(\ln V\) leads to

where

In addition, one can get that

and

In view of (5.11) and (5.12), we have

It follows from (5.10) that

Integrating (5.13) from 0 to t and then dividing by t on both sides, one obtains

where \(M_{1}(t):=\int _{0}^{t}\frac{\alpha _{1}\sigma _{2}y(s)}{V(s)}\mathrm{d}B_{2}(s)\), \(M_{2}(t):=\int _{0}^{t}\frac{\alpha _{2}\sigma _{3}z(s)}{V(s)}\mathrm{d}B_{3}(s)\) are local martingales whose quadratic variations are \(\langle M_{1},M_{1}\rangle _{t}=\sigma _{2}^{2}\int _{0}^{t}\left( \frac{\alpha _{1}y(s)}{V(s)}\right) ^{2}\mathrm{d}s\le \sigma _{2}^{2}t\) and \(\langle M_{2},M_{2}\rangle _{t}=\sigma _{3}^{2}\int _{0}^{t}\left( \frac{\alpha _{2}z(s)}{V(s)}\right) ^{2}\mathrm{d}s\le \sigma _{3}^{2}t\). Using the strong law of large numbers for local martingales (Mao 1997) yields

Since X(t) is ergodic and \(\int _{0}^{\infty }x\pi (x)\mathrm{d}x<\infty \), we have

where a detailed calculation of the last integral can be found in Appendix C.
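Ergodicity of the auxiliary equation \(\mathrm{d}X=X(r-aX)\mathrm{d}t+\sigma _{1}X\mathrm{d}B_{1}(t)\) means that time averages along a single path converge to averages under \(\pi \); in particular, \(\frac{1}{t}\int _{0}^{t}X(s)\mathrm{d}s\rightarrow \left( r-\frac{\sigma _{1}^{2}}{2}\right) /a\) a.s. when \(r>\frac{\sigma _{1}^{2}}{2}\). The sketch below is only a Monte Carlo illustration under our own choices (hypothetical function name, Milstein step \(\Delta t=0.01\), horizon \(T=2000\), fixed seed, values of Example 6.2), not a proof.

```python
import math
import random


def time_average(r, a, s1, x0=1.0, T=2000.0, dt=0.01, seed=1):
    """(1/T) * int_0^T X(s) ds along one Milstein path of dX = X(r - aX)dt + sigma_1 X dB."""
    rng = random.Random(seed)
    sigma1 = math.sqrt(s1)
    x, integral = x0, 0.0
    steps = round(T / dt)
    for _ in range(steps):
        integral += x * dt                               # left-point Riemann sum
        eps = rng.gauss(0.0, 1.0)
        x += (x * (r - a * x) * dt                       # drift
              + sigma1 * x * math.sqrt(dt) * eps         # diffusion
              + 0.5 * s1 * x * (eps ** 2 - 1) * dt)      # Milstein correction
    return integral / (steps * dt)


r, a, s1 = 0.6, 0.1, 0.01
avg = time_average(r, a, s1)
print(f"time average = {avg:.4f}, predicted (r - s1/2)/a = {(r - s1 / 2) / a:.4f}")
```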

Taking the limit superior on both sides of (5.14) and combining with (5.15) and (5.16) leads to

which is the desired assertion. Furthermore, if \(\upsilon <0\), we can easily conclude that

which implies that \(\lim _{t\rightarrow \infty }y(t)=0\) and \(\lim _{t\rightarrow \infty }z(t)=0\) a.s. In other words, the predator populations y and z tend to zero exponentially with probability one. This completes the proof.
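The quantity \(\upsilon \) in Theorem 5.1 combines a growth term, a prey-noise correction and a predator-noise term; the last one, \(\left( 2\left( \sigma _{2}^{-2}+\sigma _{3}^{-2}\right) \right) ^{-1}\), equals \(\frac{\sigma _{2}^{2}\sigma _{3}^{2}}{2(\sigma _{2}^{2}+\sigma _{3}^{2})}\). The sketch below (function name hypothetical; `s1`, `s2`, `s3` denote \(\sigma _{1}^{2}\), \(\sigma _{2}^{2}\), \(\sigma _{3}^{2}\)) evaluates \(\upsilon \) exactly as defined in Theorem 5.1 and also returns its two parts. With the noise intensities of Example 6.2, the first three terms sum to about 1.2677 and the predator-noise term equals 1/2.

```python
import math


def upsilon_terms(r, a, b, m, k, D, d1, d2, s1, s2, s3):
    """Return (upsilon, first three terms, predator-noise term) as defined in Theorem 5.1."""
    R0 = k * b * D * r / (d2 * (a + m * r) * (D + d1))
    root = math.sqrt(R0)
    rate = min(D + d1, d2) if root <= 1 else max(D + d1, d2)     # the two indicator cases
    growth = rate * (root - 1)
    prey_noise = (k * b * D * math.sqrt(s1) / ((a + m * r) * (D + d1))
                  * math.sqrt(r / (2 * R0)))
    predator_noise = 1 / (2 * (1 / s2 + 1 / s3))                 # (2(sigma_2^-2 + sigma_3^-2))^-1
    return growth + prey_noise - predator_noise, growth + prey_noise, predator_noise


TABLE1 = dict(r=0.6, a=0.1, b=0.8, m=0.5, k=0.8, D=0.4, d1=0.05, d2=0.06)
value, positive_part, noise_part = upsilon_terms(**TABLE1, s1=0.01, s2=2.0, s3=2.0)  # Example 6.2
print(f"first three terms = {positive_part:.4f}, noise term = {noise_part:.4f}, upsilon = {value:.4f}")
```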

Theorem 5.2

Let (x(t), y(t), z(t)) be the solution of system (1.2) with any initial value \((x(0),y(0),z(0))\in \mathbb {R}_{+}^{3}\). If \(r<\frac{\sigma _{1}^{2}}{2}\), then the prey population x and the predator populations y and z die out exponentially with probability one, i.e.

Proof

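Applying Itô’s formula to the first equation of system (1.2), we get

\[\mathrm{d}\ln x(t)=\left( r-\frac{\sigma _{1}^{2}}{2}-ax(t)-\frac{bz(t)}{1+mx(t)}\right) \mathrm{d}t+\sigma _{1}\,\mathrm{d}B_{1}(t).\tag{5.17}\]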

Integrating from 0 to t on both sides of (5.17), we obtain

Dividing by t on both sides of (5.18) leads to

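Taking the limit superior on both sides of (5.19) and noting that \(\lim _{t\rightarrow \infty }\frac{B_{1}(t)}{t}=0\) a.s., we obtain

\[\limsup _{t\rightarrow \infty }\frac{\ln x(t)}{t}\le r-\frac{\sigma _{1}^{2}}{2}<0\quad \text{a.s.},\]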

which implies that

Hence, \(x(t)\rightarrow 0\) exponentially a.s., as claimed. In particular, for any sufficiently small \(\epsilon >0\), there exist \(t_{0}>0\) and a set \(\Omega _{\epsilon }\subset \Omega \) such that \(\mathbb {P}(\Omega _{\epsilon })>1-\epsilon \) and \(\frac{kbx}{1+mx}\le kbx\le kb\epsilon \) for all \(t\ge t_{0}\) and \(\omega \in \Omega _{\epsilon }\). To prove that y and z also tend to zero, let \(P(t)=y(t)+z(t)\). Applying Itô’s formula to \(\ln P(t)\) yields

Integrating (5.20) from 0 to \(t\ge t_{0}\) and then dividing by t on both sides, we get

where \(M_{3}(t):=\int _{0}^{t}\frac{\sigma _{2}y(s)}{y(s)+z(s)}\mathrm{d}B_{2}(s)\), \(M_{4}(t):=\int _{0}^{t}\frac{\sigma _{3}z(s)}{y(s)+z(s)}\mathrm{d}B_{3}(s)\) are local martingales whose quadratic variations are \(\langle M_{3},M_{3}\rangle _{t}=\sigma _{2}^{2}\int _{0}^{t}\left( \frac{y(s)}{y(s)+z(s)}\right) ^{2}\mathrm{d}s\le \sigma _{2}^{2}t\) and \(\langle M_{4},M_{4}\rangle _{t}=\sigma _{3}^{2}\int _{0}^{t}\left( \frac{z(s)}{y(s)+z(s)}\right) ^{2}\mathrm{d}s\le \sigma _{3}^{2}t\). Applying the strong law of large numbers for local martingales (Mao 1997) leads to

Taking the limit superior of (5.21) as \(t\rightarrow \infty \) and combining with (5.22), one obtains

Letting \(\epsilon \rightarrow 0\), we have

which implies that

That is to say, the predator populations y and z die out exponentially with probability one. This completes the proof.
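The prey part of the proof of Theorem 5.2 rests on the bound \(\frac{\ln x(t)}{t}\le \frac{\ln x(0)}{t}+r-\frac{\sigma _{1}^{2}}{2}+\frac{\sigma _{1}B_{1}(t)}{t}\), obtained by dropping the nonpositive terms \(-ax-\frac{bz}{1+mx}\) from \(\mathrm{d}\ln x\), together with \(\frac{B_{1}(t)}{t}\rightarrow 0\) a.s. The sketch below (our own illustration; hypothetical function name, fixed seed) simulates one Brownian path with the noise intensity \(\sigma _{1}^{2}=1.8\) of Example 6.3 and shows the right-hand side approaching \(r-\frac{\sigma _{1}^{2}}{2}=-0.3\).

```python
import math
import random


def comparison_rate(r, s1, T=10_000.0, dt=0.1, x0=1.0, seed=7):
    """Return B(T)/T and [ln x0 + (r - s1/2) T + sigma_1 B(T)] / T for one simulated path."""
    rng = random.Random(seed)
    B = 0.0
    for _ in range(round(T / dt)):
        B += rng.gauss(0.0, math.sqrt(dt))        # independent N(0, dt) increments
    bound = math.log(x0) + (r - s1 / 2) * T + math.sqrt(s1) * B
    return B / T, bound / T


b_over_t, rate = comparison_rate(r=0.6, s1=1.8)
print(f"B(T)/T = {b_over_t:.4f}, upper bound for ln x(T)/T = {rate:.4f}, r - s1/2 = {0.6 - 0.9:.4f}")
```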

6 Numerical Simulations

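In this section, we illustrate our theoretical results with numerical simulations of system (1.2). The initial value is fixed at \((x(0),y(0),z(0))=(1.0,1.0,1.2)\). For the numerical simulations, we use Milstein’s higher-order method described in Higham (2001) to obtain the following discretization of system (1.2):

\[\begin{aligned} x_{j+1}&=x_{j}+\left[ x_{j}(r-ax_{j})-\frac{bx_{j}z_{j}}{1+mx_{j}}\right] \Delta t+\sigma _{1}x_{j}\sqrt{\Delta t}\,\varepsilon _{1,j}+\frac{\sigma _{1}^{2}}{2}x_{j}\left( \varepsilon _{1,j}^{2}-1\right) \Delta t,\\ y_{j+1}&=y_{j}+\left[ \frac{kbx_{j}z_{j}}{1+mx_{j}}-(D+d_{1})y_{j}\right] \Delta t+\sigma _{2}y_{j}\sqrt{\Delta t}\,\varepsilon _{2,j}+\frac{\sigma _{2}^{2}}{2}y_{j}\left( \varepsilon _{2,j}^{2}-1\right) \Delta t,\\ z_{j+1}&=z_{j}+\left( Dy_{j}-d_{2}z_{j}\right) \Delta t+\sigma _{3}z_{j}\sqrt{\Delta t}\,\varepsilon _{3,j}+\frac{\sigma _{3}^{2}}{2}z_{j}\left( \varepsilon _{3,j}^{2}-1\right) \Delta t, \end{aligned}\]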

where the time increment \(\Delta t\) is positive and the \(\varepsilon _{i,j}\) \((i=1,2,3)\) are independent Gaussian random variables with distribution N(0, 1).
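A minimal pure-Python sketch of this Milstein discretization follows. Because each noise term has the diagonal form \(\sigma _{i}\cdot (\text{state})\cdot \mathrm{d}B_{i}\), the Milstein correction for each component is \(\frac{\sigma _{i}^{2}}{2}\cdot (\text{state})\cdot (\varepsilon _{i,j}^{2}-1)\Delta t\). The function name, the default step \(\Delta t=0.01\) and the seed are our own assumptions; the parameter values are those quoted for the simulations in this section.

```python
import math
import random


def milstein_path(p, s, x0=(1.0, 1.0, 1.2), T=300.0, dt=0.01, seed=0):
    """Milstein scheme for system (1.2); s = (sigma_1^2, sigma_2^2, sigma_3^2)."""
    rng = random.Random(seed)
    sd = [math.sqrt(v) for v in s]                      # noise amplitudes sigma_i
    sq = math.sqrt(dt)
    x, y, z = x0
    path = [(x, y, z)]
    for _ in range(round(T / dt)):
        e1, e2, e3 = (rng.gauss(0.0, 1.0) for _ in range(3))
        h = p["b"] * x / (1 + p["m"] * x)               # Holling type II response
        dx = x * (p["r"] - p["a"] * x) - h * z
        dy = p["k"] * h * z - (p["D"] + p["d1"]) * y
        dz = p["D"] * y - p["d2"] * z
        x, y, z = (
            x + dx * dt + sd[0] * x * sq * e1 + 0.5 * s[0] * x * (e1 ** 2 - 1) * dt,
            y + dy * dt + sd[1] * y * sq * e2 + 0.5 * s[1] * y * (e2 ** 2 - 1) * dt,
            z + dz * dt + sd[2] * z * sq * e3 + 0.5 * s[2] * z * (e3 ** 2 - 1) * dt,
        )
        path.append((x, y, z))
    return path


TABLE1 = dict(r=0.6, a=0.1, b=0.8, m=0.5, k=0.8, D=0.4, d1=0.05, d2=0.06)
path = milstein_path(TABLE1, (0.02, 0.1, 0.08))         # noise intensities of Example 6.1
print("state at t = 300:", path[-1])
```

As a sanity check, with zero noise and no predators the scheme reduces to the Euler method for the logistic equation, whose iterates approach the carrying capacity \(r/a=6\).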

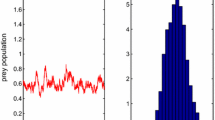

Fig. 1 The solution of the stochastic system (1.2) and its histogram. The blue lines represent the solution of system (1.2), and the red lines represent the solution of the corresponding undisturbed system (1.1). The panels on the right show the histograms of the probability density functions of the x, y and z populations at \(t=300\) for the stochastic system (1.2) with \(\sigma _{1}^{2}=0.02\), \(\sigma _{2}^{2}=0.1\), \(\sigma _{3}^{2}=0.08\). The other parameter values are listed in Table 1 (Color figure online)

Example 6.1

To illustrate the existence of an ergodic stationary distribution, in Fig. 1 we choose \(\sigma _{1}^{2}=0.02\), \(\sigma _{2}^{2}=0.1\) and \(\sigma _{3}^{2}=0.08\); the other parameter values are listed in Table 1. Direct calculation gives \(r=0.6>0.01=\frac{\sigma _{1}^{2}}{2}\) and \(R_{0}^{S}=7.3024>1\), where \(R_{0}^{S}\) is defined in Theorem 4.1. Hence, the conditions of Theorem 4.1 hold, and by Theorem 4.1 system (1.2) has an ergodic stationary distribution \(\mu (\cdot )\), as illustrated in Fig. 1. The paths of system (1.2) on a log-linear scale are shown in Fig. 2.

Example 6.2

In order to illustrate Theorem 5.1, in Fig. 3 we choose \(\sigma _{1}^{2}=0.01\), \(\sigma _{2}^{2}=2\) and \(\sigma _{3}^{2}=2\); the other parameter values are listed in Table 1. Direct calculation shows that \(\frac{\sigma _{1}^{2}}{2}=0.005<0.6=r\), so the hypothesis of Theorem 5.1 is satisfied. For \(\upsilon \), defined in Theorem 5.1, the first three terms sum to approximately 1.2677, while the noise term is \(\left( 2\left( \sigma _{2}^{-2}+\sigma _{3}^{-2}\right) \right) ^{-1}=\left( 2\left( \frac{1}{2}+\frac{1}{2}\right) \right) ^{-1}=\frac{1}{2}\); hence \(\upsilon \approx 1.2677-0.5=0.7677>0\), and the sufficient condition \(\upsilon <0\) of Theorem 5.1 is not met for these values. The predator populations y and z in Fig. 3 nevertheless decay towards zero, which is consistent with, but not guaranteed by, Theorem 5.1. The paths of system (1.2) on a log-linear scale are shown in Fig. 4.

Example 6.3

To illustrate the extinction of all populations, in Fig. 5 we choose \(\sigma _{1}^{2}=1.8\), \(\sigma _{2}^{2}=1.8\) and \(\sigma _{3}^{2}=2\); the other parameter values are listed in Table 1. A simple computation gives \(r=0.6<0.9=\frac{\sigma _{1}^{2}}{2}\), so the condition of Theorem 5.2 is satisfied. By Theorem 5.2, both the prey and the predator populations die out exponentially, as shown in Fig. 5; the corresponding log-linear plot is shown in Fig. 6. Figs. 3 and 5 suggest that larger white noise can lead to the extinction of the predator populations.

Fig. 2 Simulated paths of \(\ln x(t)\), \(\ln y(t)\), \(\ln z(t)\) of model (1.2) with the initial value \((x(0),y(0),z(0))=(1.0,1.0,1.2)\) under the parameter values \(r=0.6\), \(a=0.1\), \(b=0.8\), \(m=0.5\), \(k=0.8\), \(D=0.4\), \(d_{1}=0.05\), \(d_{2}=0.06\), \(\sigma _{1}^{2}=0.02\), \(\sigma _{2}^{2}=0.1\), \(\sigma _{3}^{2}=0.08\)

Fig. 3 The left column shows the paths of x(t), y(t), z(t) of model (1.2) with the initial value \((x(0),y(0),z(0))=(1.0,1.0,1.2)\) under the parameter values \(r=0.6\), \(a=0.1\), \(b=0.8\), \(m=0.5\), \(k=0.8\), \(D=0.4\), \(d_{1}=0.05\), \(d_{2}=0.06\), \(\sigma _{1}^{2}=0.01\), \(\sigma _{2}^{2}=2\), \(\sigma _{3}^{2}=2\). The blue lines represent the solution of system (1.2), and the red lines represent the solution of the corresponding undisturbed system (1.1). The right column displays the histogram of x(t) with \(\sigma _{1}^{2}=0.01\), \(\sigma _{2}^{2}=2\), \(\sigma _{3}^{2}=2\) (Color figure online)

Fig. 4 Simulated paths of \(\ln x(t)\), \(\ln y(t)\), \(\ln z(t)\) of model (1.2) with the initial value \((x(0),y(0),z(0))=(1.0,1.0,1.2)\) under the parameter values \(r=0.6\), \(a=0.1\), \(b=0.8\), \(m=0.5\), \(k=0.8\), \(D=0.4\), \(d_{1}=0.05\), \(d_{2}=0.06\), \(\sigma _{1}^{2}=0.01\), \(\sigma _{2}^{2}=2\), \(\sigma _{3}^{2}=2\)

Fig. 5 Simulated paths of x(t), y(t), z(t) of model (1.2) with the initial value \((x(0),y(0),z(0))=(1.0,1.0,1.2)\) under the parameter values \(r=0.6\), \(a=0.1\), \(b=0.8\), \(m=0.5\), \(k=0.8\), \(D=0.4\), \(d_{1}=0.05\), \(d_{2}=0.06\), \(\sigma _{1}^{2}=1.8\), \(\sigma _{2}^{2}=1.8\), \(\sigma _{3}^{2}=2\). The blue lines represent the solution of system (1.2), and the red lines represent the solution of the corresponding undisturbed system (1.1) (Color figure online)

Fig. 6 Simulated paths of \(\ln x(t)\), \(\ln y(t)\), \(\ln z(t)\) of model (1.2) with the initial value \((x(0),y(0),z(0))=(1.0,1.0,1.2)\) under the parameter values \(r=0.6\), \(a=0.1\), \(b=0.8\), \(m=0.5\), \(k=0.8\), \(D=0.4\), \(d_{1}=0.05\), \(d_{2}=0.06\), \(\sigma _{1}^{2}=1.8\), \(\sigma _{2}^{2}=1.8\), \(\sigma _{3}^{2}=2\)

7 Concluding Remarks and Future Directions

This paper studied a stochastic predator–prey model with stage structure for the predator and a Holling type II functional response. First, by constructing a suitable stochastic Lyapunov function, we obtained sufficient conditions for the existence of a unique ergodic stationary distribution of the positive solutions to system (1.2). Then, we established sufficient conditions for the extinction of the predator populations in two cases: in the first, the prey population survives while the predator populations go extinct; in the second, both the prey and the predator populations go extinct. The existence of a stationary distribution implies stochastic weak stability. More precisely, we have obtained the following results:

\(\bullet \) If \(r>\frac{\sigma _{1}^{2}}{2}\) and \(R_{0}^{S}=\frac{kbDr}{(a+mr)\left( D+d_{1}+\frac{\sigma _{2}^{2}}{2}\right) \left( d_{2}+\frac{\sigma _{3}^{2}}{2}\right) }\left( 1-\frac{\sigma _{1}^{2}}{2r}\right) ^{3}>1\), then for any initial value \((x(0),y(0),z(0))\in \mathbb {R} _{+}^{3}\), system (1.2) has a unique ergodic stationary distribution \(\mu (\cdot )\).

\(\bullet \) If \(r>\frac{\sigma _{1}^{2}}{2}\), then for any initial value \((x(0),y(0),z(0))\in \mathbb {R} _{+}^{3}\), the solution (x(t), y(t), z(t)) of system (1.2) has the following property

where \(\upsilon =\min \{D+d_{1},d_{2}\}(\sqrt{R_{0}}-1)I_{\{\sqrt{R_{0}}\le 1\}}+\max \{D+d_{1},d_{2}\}(\sqrt{R_{0}}-1)I_{\{\sqrt{R_{0}}>1\}}+\frac{kbD\sigma _{1}}{(a+mr)(D+d_{1})}\left( \frac{r}{2 R_{0}}\right) ^{\frac{1}{2}}-\left( 2\left( \sigma _{2}^{-2}+\sigma _{3}^{-2}\right) \right) ^{-1}\) and \(R_{0}=\frac{kbDr}{d_{2}(a+mr)(D+d_{1})}\). In particular, if \(\upsilon <0\), then the predator populations y and z die out exponentially with probability one. Moreover, the distribution of x(t) converges weakly a.s. to the measure which has the density

\[\pi (x)=Q\sigma _{1}^{-2}x^{\frac{2r}{\sigma _{1}^{2}}-2}e^{-\frac{2a}{\sigma _{1}^{2}}x},\quad x\in (0,\infty ),\]

where \(Q=\bigg [\sigma _{1}^{-2}\left( \frac{\sigma _{1}^{2}}{2a}\right) ^{\frac{2r}{\sigma _{1}^{2}}-1}\Gamma \left( \frac{2r}{\sigma _{1}^{2}}-1\right) \bigg ]^{-1}\) is the normalizing constant ensuring \(\int _{0}^{\infty }\pi (x)\mathrm{d}x=1\). That is, if \(\mu _{t}(x)\) denotes the probability density of the prey population at time t, then for any bounded, sufficiently smooth function f(x),

\[\lim _{t\rightarrow \infty }\int _{0}^{\infty }f(x)\mu _{t}(x)\mathrm{d}x=\int _{0}^{\infty }f(x)\pi (x)\mathrm{d}x.\]

\(\bullet \) If \(r<\frac{\sigma _{1}^{2}}{2}\), then the prey population x and the predator populations y and z die out exponentially with probability one.
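The three results above can be checked mechanically for a given parameter set. The Python sketch below transcribes \(R_{0}\), \(R_{0}^{S}\) and \(\upsilon \) as written in this section, with \(s_{i}=\sigma _{i}^{2}\). It maps the sufficient conditions to an outcome and evaluates the limiting prey density. That density is taken here as \(\pi (x)=Q\sigma _{1}^{-2}x^{\frac{2r}{\sigma _{1}^{2}}-2}e^{-\frac{2a}{\sigma _{1}^{2}}x}\), the form whose normalizing constant is the Q given above. Written this way, \(\pi \) is a Gamma density with shape \(\frac{2r}{\sigma _{1}^{2}}-1\) and rate \(\frac{2a}{\sigma _{1}^{2}}\), so it is evaluated in log space through `math.lgamma`. The function names and outcome labels are hypothetical.

```python
import math


def thresholds(r, a, b, m, k, D, d1, d2, s1, s2, s3):
    """Return (R0, R0S, upsilon) as written in Section 7; s_i = sigma_i^2 > 0."""
    R0 = k * b * D * r / (d2 * (a + m * r) * (D + d1))
    R0S = (k * b * D * r / ((a + m * r) * (D + d1 + s2 / 2) * (d2 + s3 / 2))
           * (1 - s1 / (2 * r)) ** 3)
    sq = math.sqrt(R0)
    # min{D+d1, d2} multiplies (sqrt(R0) - 1) if sqrt(R0) <= 1, max{D+d1, d2} otherwise
    lam = min(D + d1, d2) if sq <= 1 else max(D + d1, d2)
    upsilon = (lam * (sq - 1)
               + k * b * D * math.sqrt(s1) / ((a + m * r) * (D + d1)) * math.sqrt(r / (2 * R0))
               - 1.0 / (2 * (1 / s2 + 1 / s3)))
    return R0, R0S, upsilon


def classify(r, a, b, m, k, D, d1, d2, s1, s2, s3):
    """Map the sufficient conditions of Section 7 to a verbal outcome."""
    if r < s1 / 2:
        return "prey and predators die out"
    if r > s1 / 2:
        _, R0S, ups = thresholds(r, a, b, m, k, D, d1, d2, s1, s2, s3)
        if R0S > 1:
            return "unique ergodic stationary distribution"
        if ups < 0:
            return "predators die out, prey distribution converges to pi"
    return "not covered by these sufficient conditions"


def prey_density(x, r, a, s1):
    """pi(x) = Q sigma_1^-2 x^(2r/sigma_1^2 - 2) exp(-2 a x / sigma_1^2), for x > 0.

    Gamma density with shape 2r/s1 - 1 and rate 2a/s1 (requires r > s1/2);
    computed in log space to avoid overflow of Gamma(shape) when s1 is small.
    """
    shape, rate = 2 * r / s1 - 1, 2 * a / s1
    return math.exp(shape * math.log(rate) - math.lgamma(shape)
                    + (shape - 1) * math.log(x) - rate * x)
```

All three results are sufficient conditions. The last branch of `classify` therefore only means that none of the stated criteria applies; it does not indicate which long-run behaviour actually occurs.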

Some interesting problems deserve further consideration. On the one hand, one can propose more realistic but more complex models, for example by considering the effects of impulsive perturbations on system (1.2). The motivation is that ecological systems are often deeply perturbed by natural and human factors, such as planting, drought and flooding. To describe these phenomena mathematically, impulsive effects should be taken into account (Bainov and Simeonov 1993; Lakshmikantham et al. 1989). On the other hand, while our model is autonomous, it is of interest to investigate the nonautonomous case and to study other important dynamical properties, such as the existence of positive periodic solutions. The motivation is that seasonal variation, individual life cycles, food supplies, mating habits, hunting, harvesting and similar factors prevent the birth rate, the death rate and other population parameters from remaining constant. Instead, these parameters exhibit some degree of periodicity. It is also interesting to introduce coloured noise, such as a continuous-time Markov chain, into model (1.2). The motivation is that population dynamics may be subject to sudden environmental changes, e.g. in temperature, humidity or harvesting. Frequently, the switching among different environments is memoryless and the waiting time until the next switch is exponentially distributed. These sudden environmental changes can then be modelled by a continuous-time Markov chain (see e.g. Luo and Mao 2007; Zhu and Yin 2007). Moreover, for more complex predator–prey systems the Lyapunov function used in this paper is no longer applicable. New Lyapunov functions must then be constructed to study the existence and uniqueness of ergodic stationary distributions. These problems will be the subject of future work.

References

Al-Omari, J.F.M.: The effect of state dependent delay on harvesting on a stage-structured predator–prey model. Appl. Math. Comput. 271, 142–153 (2015)

Bainov, D., Simeonov, P.: Impulsive Differential Equations: Periodic Solutions and Applications. Longman, Harlow (1993)

Beddington, J.R., May, R.M.: Harvesting natural populations in a randomly fluctuating environment. Science 197, 463–465 (1977)

Braumann, C.A.: Growth and extinction of populations in randomly varying environments. Comput. Math. Appl. 56, 631–644 (2008)

Deng, L., Wang, X., Peng, M.: Hopf bifurcation analysis for a ratio-dependent predator–prey system with two delays and stage structure for the predator. Appl. Math. Comput. 231, 214–230 (2014)

Freeman, H.I., Wu, J.: Persistence and global asymptotic stability of single species dispersal models with stage structure. Q. Appl. Math. 49, 351–371 (1991)

Gard, T.C.: Persistence in stochastic food web models. Bull. Math. Biol. 46, 357–370 (1984)

Gard, T.C.: Stability for multispecies population models in random environments. Nonlinear Anal. 10, 1411–1419 (1986)

Georgescu, P., Hsieh, Y.-H.: Global dynamics of a predator–prey model with stage structure for predator. SIAM J. Appl. Math. 67, 1379–1395 (2006)

Georgescu, P., Moroşanu, G.: Global stability for a stage-structured predator–prey model. Math. Sci. Res. J. 10, 214–226 (2006)

Georgescu, P., Hsieh, Y.-H., Zhang, H.: A Lyapunov functional for a stage-structured predator–prey model with nonlinear predation rate. Nonlinear Anal. Real World Appl. 11, 3653–3665 (2010)

Has’minskii, R.Z.: Stochastic Stability of Differential Equations. Sijthoff and Noordhoff, Alphen aan den Rijn (1980)

Higham, D.J.: An algorithmic introduction to numerical simulation of stochastic differential equations. SIAM Rev. 43, 525–546 (2001)

Ji, C., Jiang, D.: Dynamics of a stochastic density dependent predator–prey system with Beddington–DeAngelis functional response. J. Math. Anal. Appl. 381, 441–453 (2011)

Khasminskii, R.: Stochastic Stability of Differential Equations, 2nd edn. Springer-Verlag, Berlin (2012)

Kutoyants, A.Y.: Statistical Inference for Ergodic Diffusion Processes. Springer, London (2003)

Lakshmikantham, V., Bainov, D., Simeonov, P.: Theory of Impulsive Differential Equations. World Scientific Press, Singapore (1989)

Li, X., Mao, X.: Population dynamical behavior of non-autonomous Lotka–Volterra competitive system with random perturbation. Discret. Contin. Dyn. Syst. 24, 523–545 (2009)

Liu, M., Wang, K.: Global stability of stage-structured predator–prey models with Beddington–DeAngelis functional response. Commun. Nonlinear Sci. Numer. Simul. 16, 3792–3797 (2011)

Liu, M., Wang, K., Liu, X.: Long term behaviors of stochastic single-species growth models in a polluted environment. Appl. Math. Model. 35, 752–762 (2011)

Liu, Q., Zu, L., Jiang, D.: Dynamics of stochastic predator–prey models with Holling II functional response. Commun. Nonlinear Sci. Numer. Simul. 37, 62–76 (2016)

Luo, Q., Mao, X.: Stochastic population dynamics under regime switching. J. Math. Anal. Appl. 334, 69–84 (2007)

Mao, X.: Stochastic Differential Equations and Applications. Horwood Publishing, Chichester (1997)

May, R.: Stability and Complexity in Model Ecosystems. Princeton University Press, Princeton (2001)

Monod, J.: La technique de la culture continue, théorie et applications. Annales de l’Institut Pasteur 79, 390–410 (1950)

Novick, A., Szilard, L.: Description of the chemostat. Science 112, 715–716 (1950)

Pang, S., Deng, F., Mao, X.: Asymptotic properties of stochastic population dynamics. Dyn. Contin. Discrete Impuls. Syst. Ser. A Math. Anal. 15, 603–620 (2008)

Peng, S., Zhu, X.: Necessary and sufficient condition for comparison theorem of 1-dimensional stochastic differential equations. Stoch. Process. Appl. 116, 370–380 (2006)

Sahoo, B., Poria, S.: Diseased prey predator model with general Holling type interactions. Appl. Math. Comput. 226, 83–100 (2014)

Skalski, G.T., Gilliam, J.F.: Functional responses with predator interference: viable alternatives to the Holling type II model. Ecology 82, 3083–3092 (2001)

Wang, W.: Global dynamics of a population model with stage structure for predator. In: Chen, L., Ruan, S., Zhu, J. (eds.) Advanced Topics in Biomathematics, pp. 253–257. World Scientific, River Edge (1997)

Wang, W., Chen, L.: A predator–prey system with stage-structure for predator. Comput. Math. Appl. 33, 83–91 (1997)

Wang, W., Mulone, G., Salemi, F., Salone, V.: Permanence and stability of a stage-structured predator–prey model. J. Math. Anal. Appl. 262, 499–528 (2001)

Xiang, Z., Li, Y., Song, X.: Dynamic analysis of a pest management SEI model with saturation incidence concerning impulsive control strategy. Nonlinear Anal. Real World Appl. 10, 2335–2345 (2009)

Xiao, Y., Chen, L.: Global stability of a predator–prey system with stage structure for the predator. Acta Math. Sin. (Engl. Ser.) 20, 63–70 (2004)

Xu, R., Ma, Z.: Stability and Hopf bifurcation in a predator–prey model with stage structure for the predator. Nonlinear Anal. Real World Appl. 9, 1444–1460 (2008)

Zhang, Q., Jiang, D.: The coexistence of a stochastic Lotka–Volterra model with two predators competing for one prey. Appl. Math. Comput. 269, 288–300 (2015)

Zhang, T., Teng, Z.: Global asymptotic stability of a delayed SEIRS epidemic model with saturation incidence. Chaos Solitons Fract. 37, 1456–1468 (2008)

Zhang, Q., Jiang, D., Liu, Z., O’Regan, D.: The long time behavior of a predator–prey model with disease in the prey by stochastic perturbation. Appl. Math. Comput. 245, 305–320 (2014)

Zhu, C., Yin, G.: Asymptotic properties of hybrid diffusion systems. SIAM J. Control. Optim. 46, 1155–1179 (2007)

Acknowledgements

We are very grateful to the editor and the anonymous referees for their careful reading and valuable comments, which greatly improved the presentation of the paper. We also thank the National Natural Science Foundation of the People’s Republic of China (No. 11371085), the Natural Science Foundation of Guangxi Province (No. 2016GXNSFBA380006), the Fundamental Research Funds for the Central Universities (No. 15CX08011A), and grants Nos. KY2016YB370 and 2016CSOBDP0001 for their support.


Additional information

Communicated by Charles R. Doering.

Appendices

Appendix A: Calculation of the Derivation of the Bound

Making use of Itô’s formula leads to

where

and in the third inequality we have used the fact that \(|\sum _{i=1}^{k}v_{i}|^{p}\le k^{p-1}\sum _{i=1}^{k}|v_{i}|^{p}\) for all \(p\ge 1\).
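The elementary inequality quoted above follows from the triangle inequality and the convexity of \(t\mapsto t^{p}\) on \([0,\infty )\) for \(p\ge 1\) (Jensen's inequality). Here k is the number of summands, not the conversion rate of model (1.2). A short derivation:

\[\Big |\sum _{i=1}^{k}v_{i}\Big |^{p}\le k^{p}\Big (\frac{1}{k}\sum _{i=1}^{k}|v_{i}|\Big )^{p}\le k^{p}\cdot \frac{1}{k}\sum _{i=1}^{k}|v_{i}|^{p}=k^{p-1}\sum _{i=1}^{k}|v_{i}|^{p}.\]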

Appendix B: Verification of the Condition \(A_{2}\) in Lemma 2.1

By system (1.2), we have

where the second inequality holds due to the fact that \(a(x-\frac{r}{a})^{2}\ge 0\). Moreover, we obtain
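The fact invoked here is elementary. Expanding the square shows, for instance, that it bounds \(2rx-ax^{2}\) by a constant depending only on r and a:

\[0\le a\Big (x-\frac{r}{a}\Big )^{2}=ax^{2}-2rx+\frac{r^{2}}{a},\qquad \text{i.e.}\qquad 2rx-ax^{2}\le \frac{r^{2}}{a}\quad \text{for all } x\in \mathbb {R}.\]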

and

It follows from (B.1), (B.2) and (B.3) that

where in the second inequality we have used Lemma 4.1. Moreover, we get

and

In view of Lemma 4.2, we have

Therefore, by (B.4) and (B.5), one can see that

Taking \(c_{1}=\frac{kbDam\left( 1-\frac{\sigma _{1}^{2}}{2r}\right) ^{3}}{(a+mr)^{2}\left( d_{2}+\frac{\sigma _{3}^{2}}{2}\right) }\), \(c_{2}=\frac{kbDr\left( 1-\frac{\sigma _{1}^{2}}{2r}\right) ^{3}}{(a+mr)\left( d_{2}+\frac{\sigma _{3}^{2}}{2}\right) ^{2}}\), according to (B.6), we obtain

where

Noting that

by (B.7) and (B.8), we obtain

where

Furthermore, we have (see Appendix A for a detailed derivation)

where B is a positive constant, depending only on the system parameters, whose precise definition can be found in Appendix A.

It follows from (B.2), (B.9) and (B.10) that

To verify that condition \(A_{2}\) in Lemma 2.1 holds, it remains to construct a bounded open set U such that \(L(V(x,y,z))\le -1\) for all \((x,y,z)\in \mathbb {R}_{+}^{3}\setminus U\). Define a bounded open set U as

where \(0<\epsilon <1\) is a sufficiently small number. We choose \(\epsilon \) so small that the following conditions hold in \(\mathbb {R}_{+}^{3}\setminus U\)

where C is a positive constant which will be given explicitly in expression (B.22). For convenience, we can divide \(U^{c}=\mathbb {R}_{+}^{3}\setminus U\) into six domains,

Clearly, \(U^{c}=U_{1}\cup U_{2}\cup U_{3}\cup U_{4}\cup U_{5}\cup U_{6}\). Next, we prove that \(L(V(x,y,z))\le -1\) for any \((x,y,z)\in U^{c}\), which is equivalent to proving it on the above six domains, respectively.
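Cases 1 and 2 below both bound a linear term by a higher power using the same elementary inequality. It is a special case of Young's inequality, equivalently the weighted arithmetic–geometric mean inequality. For \(u\ge 0\) and \(q>1\),

\[u=\big (u^{q}\big )^{\frac{1}{q}}\cdot 1^{\frac{q-1}{q}}\le \frac{1}{q}u^{q}+\frac{q-1}{q}=\frac{q-1+u^{q}}{q}.\]

Taking \(q=\theta +1\) and \(u=z\) gives \(z\le \frac{\theta +z^{\theta +1}}{\theta +1}\), used in Case 1. Taking \(q=\theta +2\) and \(u=x\) gives \(x\le \frac{\theta +1+x^{\theta +2}}{\theta +2}\), used in Case 2. Both hold for any \(\theta >0\). The first step of Case 1 additionally uses that \(x\mapsto \frac{x}{1+mx}\) is increasing, so \(x\le \epsilon \) implies \(\frac{x}{1+mx}\le \frac{\epsilon }{1+m\epsilon }\).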

Case 1. For any \((x,y,z)\in U_{1}\), since \(\frac{xz}{1+mx}\le \frac{\epsilon }{1+m\epsilon }z\le \frac{\epsilon }{1+m\epsilon }\frac{\theta +z^{\theta +1}}{\theta +1}=\frac{\theta \epsilon }{(\theta +1)(1+m\epsilon )}+\frac{\epsilon }{( \theta +1)(1+m\epsilon )}z^{\theta +1}\), we have

which follows from (4.2), (B.11) and (B.12). Thus

Case 2. For any \((x,y,z)\in U_{2}\), since \(\frac{xz}{1+mx}\le xz\le \epsilon x\le \epsilon \frac{\theta +1+x^{\theta +2}}{\theta +2}=\frac{(\theta +1)\epsilon }{\theta +2}+\frac{\epsilon }{\theta +2}x^{\theta +2}\), we obtain

which follows from (4.2), (B.13) and (B.14). Hence,

Case 3. For any \((x,y,z)\in U_{3}\), we obtain

which follows from (B.15) and

Therefore,

Case 4. For any \((x,y,z)\in U_{4}\), we have

which follows from (B.16). So

Case 5. For any \((x,y,z)\in U_{5}\), we have

which follows from (B.17). Consequently

Case 6. For any \((x,y,z)\in U_{6}\), we have

which follows from (B.18). As a result

Obviously, from (B.19), (B.20), (B.21), (B.23), (B.24) and (B.25), we obtain that for a sufficiently small \(\epsilon \),

Hence, the condition \(A_{2}\) in Lemma 2.1 holds.

Appendix C: Detailed Calculation of the Last Integral of (5.16)

and

Hence, we have

Cite this article

Liu, Q., Jiang, D., Hayat, T. et al. Dynamics of a Stochastic Predator–Prey Model with Stage Structure for Predator and Holling Type II Functional Response. J Nonlinear Sci 28, 1151–1187 (2018). https://doi.org/10.1007/s00332-018-9444-3

Keywords

- Predator–prey model

- Stage structure

- Holling type II functional response

- Stationary distribution

- Ergodicity

- Extinction