Abstract

This work presents a mathematical theory of pattern formation in electrically coupled networks of excitable neurons forced by small noise. Using the Freidlin–Wentzell large-deviation theory for randomly perturbed dynamical systems and elements of algebraic graph theory, we identify and analyze the main regimes of the network dynamics in terms of the key control parameters: excitability, coupling strength, and network topology. The analysis reveals the geometry of spontaneous dynamics in electrically coupled networks. Specifically, we show that the locations of the minima of a certain continuous function on the surface of the unit n-cube encode the most likely activity patterns generated by the network. By studying how the minima of this function evolve as the coupling strength varies, we describe the principal transformations in the network dynamics. The minimization problem is also used for a quantitative description of the main dynamical regimes and the transitions between them. In particular, for the weak and strong coupling regimes, we present asymptotic formulas for the network activity rate as a function of the coupling strength and the degree of the network. The variational analysis is complemented by a stability analysis of the synchronous state in the strong coupling regime. The stability estimates reveal how the network connectivity and the properties of the cycle subspace associated with the graph of the network contribute to its synchronization properties. This work is motivated by experimental and modeling studies of the ensemble of neurons in the Locus Coeruleus, a brainstem nucleus involved in the regulation of cognitive performance and behavior.

1 Introduction

Direct electrical coupling through gap junctions is a common means of communication between neurons, as well as between cells of the heart, pancreas, and other physiological systems (Connors and Long 2004). Electrical synapses are important for synchronization of network activity, wave propagation, and pattern formation in neuronal networks. A prominent example of a gap-junctionally coupled network whose dynamics is thought to be important for cognitive processing is a group of neurons in the Locus Coeruleus (LC), a nucleus in the brainstem (Aston-Jones and Cohen 2005; Berridge and Waterhouse 2003; Sara 2009). Electrophysiological studies of animals performing a visual discrimination test show that the rate and the pattern of activity of the LC network correlate with cognitive performance (Usher et al. 1999). Specifically, periods of high spontaneous activity correspond to periods of poor performance, whereas periods of low, synchronized activity coincide with good performance. Based on the physiological properties of the LC network, it was proposed that the transitions between periods of high and low network activity are due to variations in the strength of coupling between LC neurons (Usher et al. 1999). This hypothesis motivates the following dynamical problem: how does the dynamics of an electrically coupled network depend on the coupling strength? This question is the focus of the present work.

The dynamics of an electrically coupled network depends on the properties of the attractors of the local dynamical systems and on the interactions between them. Following Usher et al. (1999), we assume that the individual neurons in the LC network are spontaneously active. Specifically, we model them as excitable dynamical systems forced by small noise. We show that, depending on the strength of electrical coupling, there are three main regimes of network dynamics: uncorrelated spontaneous firing (weak coupling), formation of clusters and waves (intermediate coupling), and synchrony (strong coupling). The qualitative features of these regimes do not depend on the details of the individual neuron models or on the network topology. Using the center-manifold reduction (Chow and Hale 1982; Guckenheimer and Holmes 1983) and the Freidlin–Wentzell large-deviation theory (Freidlin and Wentzell 1998), we derive a variational problem that provides a useful geometric interpretation of various patterns of spontaneous activity. Specifically, we show that the locations of the minima of a certain continuous function on the surface of the unit n-cube encode the most likely activity patterns generated by the network. By studying the evolution of the minima of this function as the control parameter (the coupling strength) varies, we identify the principal transformations in the network dynamics. The minimization problem is also used for a quantitative description of the main dynamical regimes and the transitions between them. In particular, for the weak and strong coupling regimes, we present asymptotic formulas for the activity rate as a function of the coupling strength and the degree of the network. The variational analysis is complemented by a stability analysis of the synchronous state in the strong coupling regime.
In analyzing various aspects of the network dynamics, we pay special attention to the role of the structural properties of the network in shaping its dynamics. We show that in weakly coupled networks only coarse structural properties of the underlying graph matter, whereas in the strong coupling regime finer features, such as the algebraic connectivity and the properties of the cycle subspace associated with the graph of the network, become important. Thus, this paper presents a comprehensive analysis of electrically coupled networks of excitable cells in the presence of noise. It complements existing studies of related deterministic networks of electrically coupled oscillators (see, e.g., Coombes 2008; Gao and Holmes 2007; Lewis and Rinzel 2003; Medvedev and Kopell 2001; Medvedev 2011a and references therein).

The outline of the paper is as follows. In Sect. 2, we formulate the biophysical model of the LC network. Section 3 presents numerical experiments elucidating the principal features of the network dynamics. In Sect. 4, we reformulate the problem in terms of the bifurcation properties of the local dynamical systems and the properties of the linear coupling operator. We then introduce the variational problem, whose analysis explains the main dynamical regimes of the coupled system. In Sect. 5, we analyze the stability of the synchronous dynamics in the strong coupling regime, using fast–slow decomposition. The results of this work are summarized in Sect. 6.

2 The Model

2.1 The Single Cell Model

According to the dynamical mechanism underlying action potential generation, conductance-based models of neurons are divided into Type I and Type II classes (Rinzel and Ermentrout 1989). Models of the former class are near a saddle-node bifurcation, whereas models of the latter class are near an Andronov–Hopf bifurcation. Electrophysiological recordings of LC neurons exhibit features consistent with Type I excitability, and existing biophysical models of LC neurons use a Type I action-potential-generating mechanism (Alvarez et al. 2002; Brown et al. 2004). In accord with these experimental and modeling studies, we use a generic Type I conductance-based model to simulate the dynamics of an individual LC neuron:

Here, the dynamical variables v(t) and n(t) are the membrane potential and the activation of the potassium current, \(I_{K}\), respectively, and C stands for the membrane capacitance. The ionic currents \(I_{\mathrm{ion}}(v,n)\) are modeled using the Hodgkin–Huxley formalism (see the Appendix for the definitions of the functions and parameter values used in (2.1) and (2.2)). A small Gaussian white noise term is added to the right-hand side of (2.1) to simulate random synaptic input and other fluctuations affecting the system's dynamics. Without noise (σ=0), the system is in the excitable regime. For σ>0, it exhibits spontaneous spiking. The frequency of spontaneous firing depends on the proximity of the deterministic system to the saddle-node bifurcation and on the noise intensity. A typical trajectory of (2.1) and (2.2) stays in a small neighborhood of the stable equilibrium most of the time (Fig. 1a). Occasionally, it leaves the vicinity of the fixed point, makes a large excursion in the phase plane, and then returns to the neighborhood of the steady state (Fig. 1a). Repeated excursions of this kind generate a train of random spikes in the voltage time series (Fig. 1b).

Fig. 1 (a) The phase plane for (2.1) and (2.2): nullclines of the deterministic model (σ=0) and a trajectory of the randomly perturbed system (σ>0). The trajectory spends most of the time in a small neighborhood of the stable fixed point. Occasionally, it leaves the basin of attraction of the fixed point to generate a spike. (b) The voltage time series, v(t), corresponding to the spontaneous dynamics shown in plot (a)

In neuroscience, the (average) firing rate provides a convenient measure of the activity of neural cells and neuronal populations, and it is important to know how the firing rate depends on the parameters of the model. In this paper, we study the factors determining the firing rate in electrically coupled networks of neurons. Before turning to the network dynamics, however, it is instructive to discuss the behavior of the single-neuron model. To this end, we use the center-manifold reduction to approximate (2.1) and (2.2) by a 1D system:

where z(t) is the rescaled projection of (v(t),n(t)) onto a 1D slow manifold, μ>0 is the distance to the saddle-node bifurcation, and \(\tilde{\sigma}>0\) is the noise intensity after rescaling. We postpone the details of the center-manifold reduction until we analyze a more general network model in Sect. 4.1.

The time between two successive spikes in the voltage time series corresponds to the first time at which the trajectory of (2.3) with initial condition \(z(0)=z_{0}<\sqrt{\mu}\) overcomes the potential barrier \(U(\sqrt{\mu})-U(-\sqrt{\mu})\). The large-deviation estimates (cf. Freidlin and Wentzell 1998) yield the logarithmic asymptotics of the first crossing time τ:

where \(\mathbb{E}_{z_{0}}\) stands for the expected value with respect to the probability generated by the random process z(t) with initial condition \(z(0)=z_{0}\). Throughout this paper, we use ≍ to denote logarithmic asymptotics. It is also known that the first exit time τ is exponentially distributed (cf. Day 1983), as shown in Fig. 2.
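The logarithmic asymptotics above can be explored numerically. The sketch below assumes that (2.3) has the normal form \(dz=(z^{2}-\mu)\,dt+\tilde{\sigma}\,dw\), i.e., \(U(z)=\mu z-z^{3}/3\), with the stable point at \(-\sqrt{\mu}\) and the threshold at \(\sqrt{\mu}\). This form is an assumption consistent with the barrier \(U(\sqrt{\mu})-U(-\sqrt{\mu})\) quoted above, not a reproduction of (2.3). For a gradient system with noise intensity \(\tilde{\sigma}\), the Freidlin–Wentzell estimate then gives \(\log\mathbb{E}\tau\approx 2\Delta U/\tilde{\sigma}^{2}=8\mu^{3/2}/(3\tilde{\sigma}^{2})\). The function names are hypothetical, and `sigma` in the code plays the role of \(\tilde{\sigma}\).

```python
import numpy as np

def barrier(mu):
    """Barrier U(sqrt(mu)) - U(-sqrt(mu)) for the assumed potential U(z) = mu*z - z**3/3."""
    return 4.0 / 3.0 * mu ** 1.5

def log_mean_exit_time(mu, sigma):
    """Logarithmic asymptotics: log E[tau] ~ 2 * barrier / sigma**2 as sigma -> 0."""
    return 2.0 * barrier(mu) / sigma ** 2

def simulate_exit_times(mu, sigma, n_paths=200, dt=1e-2, t_max=2000.0, seed=0):
    """Euler-Maruyama for dz = (z**2 - mu) dt + sigma dw (assumed form of (2.3)).

    Each path starts at the stable equilibrium -sqrt(mu); the first time it
    reaches the threshold sqrt(mu) is recorded (NaN if not reached by t_max).
    """
    rng = np.random.default_rng(seed)
    z = np.full(n_paths, -np.sqrt(mu))
    tau = np.full(n_paths, np.nan)
    alive = np.ones(n_paths, dtype=bool)  # paths that have not fired yet
    t = 0.0
    while alive.any() and t < t_max:
        noise = rng.standard_normal(alive.sum())
        z[alive] += (z[alive] ** 2 - mu) * dt + sigma * np.sqrt(dt) * noise
        t += dt
        fired = alive & (z >= np.sqrt(mu))
        tau[fired] = t
        alive &= ~fired
    return tau

tau = simulate_exit_times(mu=1.0, sigma=1.0)
print("sample mean of tau:", np.nanmean(tau))
print("exp(2*barrier/sigma**2):", np.exp(log_mean_exit_time(1.0, 1.0)))
```

Because ≍ ignores the prefactor, the two printed numbers need not agree; a fairer check repeats the simulation for several values of `sigma` and compares the slope of \(\log\mathbb{E}\tau\) against \(1/\tilde{\sigma}^{2}\) with \(2\Delta U\).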

Equation (2.4) implies that the statistics of spontaneous spiking of a single cell is determined by the distance of the neuronal model (2.1) and (2.2) to the saddle-node bifurcation and the intensity of noise. Below we show that, in addition to these two parameters, the strength and topology of coupling are important factors determining the firing rate of the coupled population.

2.2 The Electrically Coupled Network

The network model consists of n cells, whose intrinsic dynamics is defined by (2.1) and (2.2), coupled by gap junctions. The gap-junctional current that cell i receives from the other cells in the network is given by

where g≥0 is the gap-junction conductance and

The adjacency matrix \(A=(a_{ij})\in\mathbb{R}^{n\times n}\) defines the network connectivity. By adding the coupling current to the right-hand side of the voltage equation (2.1) and combining the equations for all neurons in the network, we arrive at the following model:

where \(w^{(i)}\) are n independent copies of the standard Brownian motion.

The network topology is an important parameter of the model (2.6) and (2.7). The following terminology and constructions from algebraic graph theory (Biggs 1993) will be useful for studying the role of the network structure in shaping its dynamics. Let G=(V(G),E(G)) denote the graph of interactions between the cells in the network. Here, \(V(G)=\{v_{1},v_{2},\ldots,v_{n}\}\) and \(E(G)=\{e_{1},e_{2},\ldots,e_{m}\}\) denote the sets of vertices (i.e., cells) and edges (i.e., pairs of connected cells), respectively. Throughout this paper, we assume that G is a connected graph. For each edge \(e_{j}=(v_{j_{1}},v_{j_{2}})\in V(G)\times V(G)\), we declare one of the vertices \(v_{j_{1}}, v_{j_{2}}\) to be the positive end (head) of \(e_{j}\) and the other to be the negative end (tail). Thus, each edge is oriented from its tail to its head. The coboundary matrix of G is defined as follows (cf. Biggs 1993):

Let \(\tilde{G}=(V(\tilde{G}),E(\tilde{G}))\subset G\) be a spanning tree of G, i.e., a connected subgraph of G such that \(|V(\tilde{G})|=n\) and \(\tilde{G}\) contains no cycles (Biggs 1993). Without loss of generality, we assume that

Denote the coboundary matrix of \(\tilde{G}\) by \(\tilde{H}\).

Matrix

is called the graph Laplacian of G. The Laplacian does not depend on the choice of edge orientations used in the definition of H (Biggs 1993). Alternatively, the Laplacian can be defined as

where \(D=\operatorname{diag}\{\deg(v_{1}),\deg(v_{2}),\ldots,\deg(v_{n})\}\) is the degree matrix and A is the adjacency matrix of G.
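The equivalence of the two definitions and the independence of the edge orientation can be checked on a small graph. The sketch below assumes that the matrix defined above is \(L=H^{T}H\), with H the \(m\times n\) coboundary matrix whose row k carries +1 at the head and −1 at the tail of \(e_{k}\) (the displayed formulas are not reproduced here; the opposite sign convention yields the same L). The helper names and the example graph are hypothetical.

```python
import numpy as np

def coboundary_matrix(n, edges, rng=None):
    """m x n coboundary matrix: row k has +1 at the head and -1 at the tail of edge k.

    Edge (i, j) is oriented with head i and tail j; if rng is given, each
    orientation is flipped at random to illustrate orientation independence.
    """
    H = np.zeros((len(edges), n))
    for k, (i, j) in enumerate(edges):
        if rng is not None and rng.random() < 0.5:
            i, j = j, i
        H[k, i] = 1.0   # head (positive end)
        H[k, j] = -1.0  # tail (negative end)
    return H

def laplacian_from_edges(n, edges):
    """L = D - A for an undirected simple graph given by its edge list."""
    A = np.zeros((n, n))
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0
    D = np.diag(A.sum(axis=1))  # degree matrix
    return D - A

# A 5-cycle with one chord (vertices 0..4).
n = 5
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (0, 2)]
H = coboundary_matrix(n, edges)
H_flipped = coboundary_matrix(n, edges, rng=np.random.default_rng(1))
L = laplacian_from_edges(n, edges)
print(np.allclose(H.T @ H, L), np.allclose(H_flipped.T @ H_flipped, L))
```

The diagonal of \(H^{T}H\) counts the edges incident to each vertex, and each off-diagonal entry is −1 exactly when the two vertices share an edge, which is why the orientation drops out.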

Let

denote the eigenvalues of L arranged in increasing order, counting multiplicity. The spectrum of the graph Laplacian captures many structural properties of the network (cf. Biggs 1993; Bollobas 1998; Chung 1997). In particular, the first eigenvalue of L, \(\lambda_{1}(L)=0\), is simple if and only if the graph is connected (Fiedler 1973). The second eigenvalue \(\mathfrak{a}=\lambda_{2}(L)\) is called the algebraic connectivity of G, because it yields a lower bound for the edge and vertex connectivity of G (Fiedler 1973). The algebraic connectivity is important for a variety of combinatorial, probabilistic, and dynamical aspects of network analysis. In particular, it is used in studies of graph expansion (Hoory et al. 2006), random walks (Bollobas 1998), and synchronization of dynamical networks (Jost 2007; Medvedev 2011b).
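The spectral facts above can be illustrated numerically. This sketch (hypothetical helper names) computes the Laplacian spectrum with `numpy.linalg.eigvalsh`, which returns the eigenvalues of a symmetric matrix in ascending order, and uses the standard fact that the multiplicity of the zero eigenvalue equals the number of connected components; in particular, it is 1 exactly when the graph is connected.

```python
import numpy as np

def laplacian(A):
    """Graph Laplacian L = D - A from a symmetric 0/1 adjacency matrix."""
    return np.diag(A.sum(axis=1)) - A

def spectrum(A):
    """Eigenvalues of L in increasing order (eigvalsh returns them sorted)."""
    return np.linalg.eigvalsh(laplacian(A))

def n_components(A, tol=1e-9):
    """Multiplicity of the zero eigenvalue, i.e., the number of connected components."""
    return int(np.sum(spectrum(A) < tol))

def algebraic_connectivity(A):
    """Second-smallest Laplacian eigenvalue, lambda_2(L)."""
    return spectrum(A)[1]

# Path on 4 vertices (connected) vs. two disjoint edges (disconnected).
path = np.array([[0, 1, 0, 0],
                 [1, 0, 1, 0],
                 [0, 1, 0, 1],
                 [0, 0, 1, 0]], dtype=float)
two_edges = np.array([[0, 1, 0, 0],
                      [1, 0, 0, 0],
                      [0, 0, 0, 1],
                      [0, 0, 1, 0]], dtype=float)
print(n_components(path), algebraic_connectivity(path))
print(n_components(two_edges), algebraic_connectivity(two_edges))
```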

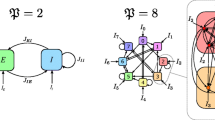

Next, we introduce several examples of network connectivity, including nearest-neighbor arrays of varying degree and a pair of degree-4 graphs, one symmetric and one random. These examples will be used to illustrate the role of the network topology in pattern formation.

Example 2.1

The nearest-neighbor coupling scheme is an example of local connectivity (Fig. 3a). For simplicity, we consider a 1D array; for higher-dimensional lattices, nearest-neighbor coupling is defined similarly. In this configuration, each cell in the interior of the array is coupled to its two nearest neighbors. This leads to the following expression for the coupling current:

The coupling currents for the cells on the boundary are given by

The corresponding graph Laplacian is

Example 2.2

The k-nearest-neighbor coupling scheme is a natural generalization of the previous example. Suppose each cell is coupled to its k nearest neighbors on each side whenever they exist, or to as many as possible otherwise:

where we use a customary convention that \(\sum_{j=a}^{b}\dots=0\) if b<a. The coupling matrix can be easily derived from (2.13).
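Examples 2.1 and 2.2 can be encoded by a single adjacency rule for an open 1D array: cells i and j are coupled if \(1\le|i-j|\le k\), with boundary cells coupled only to the neighbors that exist. The sketch below, with hypothetical helper names, builds the corresponding Laplacian and compares the numerically computed algebraic connectivity of the nearest-neighbor array (k=1) with the standard closed form for the path graph, \(2(1-\cos(\pi/n))\).

```python
import numpy as np

def knn_array_laplacian(n, k):
    """Laplacian of an open 1D array of n cells in which cell i is coupled to
    every cell j with 1 <= |i - j| <= k (boundary cells have fewer neighbors).
    k = 1 gives Example 2.1; k >= 2 gives Example 2.2."""
    idx = np.arange(n)
    dist = np.abs(idx[:, None] - idx[None, :])
    A = ((dist >= 1) & (dist <= k)).astype(float)
    return np.diag(A.sum(axis=1)) - A

def path_algebraic_connectivity(n):
    """Closed form for the nearest-neighbor array: lambda_2 = 2(1 - cos(pi/n))."""
    return 2.0 * (1.0 - np.cos(np.pi / n))

for n in (10, 100, 1000):
    a_numeric = np.linalg.eigvalsh(knn_array_laplacian(n, 1))[1]
    # n**2 * a approaches pi**2, consistent with a = O(n**-2)
    print(n, a_numeric, path_algebraic_connectivity(n), n ** 2 * a_numeric)
```

The last column approaches \(\pi^{2}\approx 9.87\), which illustrates the scaling \(\mathfrak{a}=O(n^{-2})\) for the nearest-neighbor array.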

Example 2.3

The all-to-all coupling features global connectivity (Fig. 3c):

The Laplacian in this case has the following form:

The graphs in the previous examples have different degrees, ranging from 2 in Example 2.1 to n−1 in Example 2.3. In addition to the degree of the graph, the pattern of connectivity itself is important for the network dynamics. This motivates our next example.
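For all-to-all coupling, the Laplacian equals \(nI_{n}-\mathbf{1}\mathbf{1}^{T}\), whose eigenvalues are 0 (simple) and n (with multiplicity n−1), so its algebraic connectivity is n. The sketch below (hypothetical helper names; n=20 is chosen only for illustration) tabulates the maximal degree and the algebraic connectivity of the graphs in Examples 2.1–2.3.

```python
import numpy as np

def complete_graph_laplacian(n):
    """All-to-all coupling: L = n*I - 1 1^T (every cell has degree n - 1)."""
    return n * np.eye(n) - np.ones((n, n))

def knn_array_laplacian(n, k):
    """Open 1D array: cells i and j are coupled if 1 <= |i - j| <= k."""
    idx = np.arange(n)
    dist = np.abs(idx[:, None] - idx[None, :])
    A = ((dist >= 1) & (dist <= k)).astype(float)
    return np.diag(A.sum(axis=1)) - A

def summarize(L):
    """Return (maximal degree, algebraic connectivity) of a graph Laplacian."""
    eig = np.linalg.eigvalsh(L)
    return int(np.max(np.diag(L))), eig[1]

n = 20
for name, L in [("nearest-neighbor", knn_array_laplacian(n, 1)),
                ("2-nearest-neighbor", knn_array_laplacian(n, 2)),
                ("all-to-all", complete_graph_laplacian(n))]:
    deg, a = summarize(L)
    print(f"{name:>20s}: max degree {deg:2d}, algebraic connectivity {a:.4f}")
```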

Example 2.4

Consider a pair of degree-4 graphs shown schematically in Fig. 4. The graph in Fig. 4a has symmetric connections. The edges of the graph in Fig. 4b were selected randomly. Both graphs have the same number of nodes and equal degrees.

Graphs with random connections, like the one in the last example, are typically expanders, a class of graphs used in many important applications in mathematics, computer science, and other branches of science and technology (cf. Hoory et al. 2006). In Sect. 5, we show that dynamical networks on expanders have very good synchronization properties (see also Medvedev 2011b).

Example 2.5

Let {G n } be a family of graphs on n vertices, with the following property:

Such graphs are called (spectral) expanders (Hoory et al. 2006; Sarnak 2004). There are known explicit constructions of expanders, including the celebrated Ramanujan graphs (Margulis 1988; Lubotzky et al. 1988). In addition, families of random graphs have good expansion properties. In particular, it is known that

where \(G_{n}\) stands for the family of random d-regular graphs with d≥3 and n≫1 (Friedman 2008).
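The contrast between the two graphs in Example 2.4 can be explored numerically. The sketch below makes two assumptions: the symmetric graph is taken to be a ring in which each node is coupled to its two nearest neighbors on each side (Fig. 4a may differ), and the random 4-regular graph is sampled as the union of two random Hamiltonian cycles that share no edge, a convenient model that is not necessarily the one used for Fig. 4b. For comparison, it prints \(d-2\sqrt{d-1}\), the value that the algebraic connectivity of large random d-regular graphs approaches from below by at most an arbitrarily small amount (Friedman 2008); the helper names are hypothetical.

```python
import numpy as np

def ring_laplacian(n, k):
    """Symmetric (circulant) graph: node i coupled to i +/- 1, ..., i +/- k (mod n)."""
    A = np.zeros((n, n))
    for s in range(1, k + 1):
        for i in range(n):
            A[i, (i + s) % n] = A[(i + s) % n, i] = 1.0
    return np.diag(A.sum(axis=1)) - A

def random_4_regular_laplacian(n, seed=0, max_tries=1000):
    """Random 4-regular graph as the union of two random Hamiltonian cycles,
    resampled until the cycles share no edge (so the graph is simple)."""
    rng = np.random.default_rng(seed)
    for _ in range(max_tries):
        A = np.zeros((n, n))
        simple = True
        for _cycle in range(2):
            perm = rng.permutation(n)
            for a, b in zip(perm, np.roll(perm, -1)):
                if A[a, b]:  # edge already used by the first cycle
                    simple = False
                    break
                A[a, b] = A[b, a] = 1.0
            if not simple:
                break
        if simple:
            return np.diag(A.sum(axis=1)) - A
    raise RuntimeError("could not sample a simple 4-regular graph")

n, d = 200, 4
a_sym = np.linalg.eigvalsh(ring_laplacian(n, 2))[1]
a_rand = np.linalg.eigvalsh(random_4_regular_laplacian(n))[1]
print(f"symmetric: {a_sym:.4f}  random: {a_rand:.4f}  "
      f"d - 2 sqrt(d - 1) = {d - 2 * np.sqrt(d - 1):.4f}")
```

Both graphs have degree 4, yet the algebraic connectivity of the ring decays like \(n^{-2}\), whereas that of the random graph is expected to stay near the printed bound as n grows.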

3 Numerical Experiments

The four parameters controlling the dynamics of the biophysical model (2.6) and (2.7) are the excitability, the noise intensity, the coupling strength, and the network topology. Assuming that the system is at a fixed distance from the bifurcation, we study the dynamics of the coupled system for sufficiently small noise intensity σ. Therefore, the two remaining parameters are the coupling strength and the network topology. We focus on the impact of the coupling strength on the spontaneous dynamics first. At the end of this section, we discuss the role of the network topology. The numerical experiments of this section show that activity patterns generated by the network are effectively controlled by the variations of the coupling strength.

3.1 Three Phases of Spontaneous Activity

To measure the activity of the network for different values of the control parameters, we use the average firing rate: the number of spikes generated by the network per neuron per unit time. Figure 5a shows that the activity rate varies significantly with the coupling strength. The three intervals of monotonicity of the activity rate plot reflect three main stages in the network dynamics en route to complete synchrony: weakly correlated spontaneous spiking, formation of clusters and wave propagation, and synchronization. We discuss these regimes in more detail below.
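A minimal sketch of this activity measure: given simulated voltage traces sampled with time step `dt`, count the upward crossings of a spike-detection threshold and divide by the number of neurons and the duration. The threshold and the helper names are hypothetical; the text does not specify how spikes were detected in the simulations.

```python
import numpy as np

def count_spikes(v, threshold):
    """Number of upward threshold crossings in each row of v (n_cells x n_steps)."""
    above = v >= threshold
    return np.sum(~above[:, :-1] & above[:, 1:], axis=1)

def network_firing_rate(v, dt, threshold):
    """Spikes per neuron per unit time for traces sampled with time step dt."""
    n_cells, n_steps = v.shape
    duration = (n_steps - 1) * dt
    return count_spikes(v, threshold).sum() / (n_cells * duration)

# Two synthetic cells over one time unit: cell 0 spikes twice, cell 1 once.
v = np.zeros((2, 11))
v[0, 3] = v[0, 7] = 1.0
v[1, 5:8] = 1.0
print(network_firing_rate(v, dt=0.1, threshold=0.5))
```

Counting upward crossings, rather than samples above threshold, ensures that a spike spanning several time steps (cell 1 above) is counted once.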

Fig. 5 The fundamental relation between the rate of spontaneous activity and the coupling strength. The graphs in (a) are plotted for three coupling configurations: nearest-neighbor (dashed line), 2-nearest-neighbor (solid line), and all-to-all coupling (dash-dotted line) (see Examples 2.1–2.3). The graphs in (b) are plotted for the symmetric and random degree-4 graphs in solid and dashed lines, respectively (see Example 2.4). (c) The firing rate plot for the model in which the coupling is turned off for values of the membrane potential above the firing threshold. The symmetric (solid line) and random (dashed line) degree-4 graphs are used for the two plots in (c)

Weakly Correlated Spontaneous Spiking

For sufficiently small g>0, the activity retains the features of spontaneous spiking in the uncoupled population. Figure 6b shows no significant correlations between the activity of distinct cells in the weakly coupled network. The distributions of the interspike intervals are exponential in both cases (see Figs. 6c, d). There is an important change, however: for small g, the firing rate decreases as g increases. This is clearly seen from the graphs in Fig. 5. The decrease of the firing rate under very weak coupling can also be seen in the interspike interval distributions in Figs. 6c, d: the density in Fig. 6d has a heavier tail. Thus, weak electrical coupling has a pronounced inhibitory (shunting) effect on the network dynamics: it drains current from a neuron developing a depolarizing potential and redistributes it among the cells connected to it. This effect is stronger for networks with more connections. The three plots shown in Fig. 5a correspond to nearest-neighbor, 2-nearest-neighbor, and all-to-all coupling. Note that the slope at g=0 is steeper for networks of greater degree.

Coherent Structures

For increasing values of g>0, the system develops clusters, short waves, and robust waves (see Fig. 7). These spatio-temporal patterns first appear in the middle of the first (decreasing) interval of monotonicity of the firing rate plot in Fig. 5a and persist through the next (increasing) interval. While the patterns in Fig. 7 feature a progressively increasing role of coherence in the system's dynamics, the dynamical mechanisms underlying cluster formation and wave propagation are distinct. Factors A and B below identify two dynamical principles underlying pattern formation in this regime.

- Factor A: At the moment when one neuron fires due to large deviations from the rest state, neurons connected to it are more likely to be closer to the threshold and, therefore, are more likely to fire within a short interval of time.

- Factor B: When a neuron fires, it supplies neurons connected to it with a depolarizing current. If the coupling is sufficiently strong, the gap-junctional current triggers action potentials in these cells and the activity propagates through the network.

Factor A follows from the variational interpretation of spontaneous dynamics in weakly coupled networks, which we develop in Sect. 4. It is responsible for the formation of clusters and short waves, like those shown in Fig. 7a. To show numerically that Factor A (rather than Factor B) is responsible for cluster formation, we modified the model (2.6) and (2.7) as follows: once a neuron in the network has crossed the threshold, we turn off the current that it sends to the other neurons in the network until it returns close to the stable fixed point. We refer to this model as the modified model (2.6) and (2.7). Numerical results for the modified model in Figs. 8a, b show that clusters form as a result of the subthreshold dynamics, i.e., they are due to Factor A. Factor B becomes dominant for stronger coupling. It results in robust waves with a constant speed of propagation. The mechanism of wave propagation is essentially deterministic and is well known from studies of waves in excitable systems (cf. Keener 1987). However, in the presence of noise, the initiation and termination of waves become random (see Figs. 7b, c).

Synchrony

The third interval of monotonicity in the graph of the firing rate vs. the coupling strength is decreasing (see Fig. 5a). It features synchronization, the final dynamical state of the network. In this regime, once one cell crosses the firing threshold, the entire network fires in unison. The distinctive feature of this regime is a rapid decrease of the firing rate with increasing g (see Fig. 5a). The slowdown of firing in the strong coupling regime was studied in Medvedev (2009) (see also Medvedev 2010, 2011b; Medvedev and Zhuravytska 2011). When the coupling is strong, the effect of noise on the network dynamics is diminished by the dissipativity of the coupling operator, and this reduced effect of noise lowers the firing rate. In Sect. 5.4, we present analytical estimates characterizing denoising by electrical coupling for the present model.

3.2 The Role of the Network Topology

All connected networks of excitable elements (regardless of the connectivity pattern) pass through the three dynamical regimes identified above for weak, intermediate, and strong coupling. The topology becomes important for the quantitative description of the activity patterns. In particular, it affects the boundaries between different phases. We first discuss the role of topology in the onset of synchronization. The transition to synchrony corresponds to the beginning of the third phase and can be approximately identified with the location of the maximum of the firing rate plot (see Figs. 5a, b). Comparing the plots for the 1- and 2-nearest-neighbor coupling schemes shows that synchrony sets in at a smaller value of g for the latter network. This illustrates a general trend: networks with more connections tend to have better synchronization properties. However, the degree is not the only structural property of the graph that affects synchronization; the connectivity pattern is important as well. Figure 9 shows that a randomly connected degree-4 network synchronizes faster than its symmetric counterpart (cf. Example 2.4). The analysis in Sect. 4.4 shows that the point of transition to synchrony can be estimated using the algebraic connectivity of the graph, \(\mathfrak{a}\). Specifically, the network is synchronized if \(\gamma> \mathfrak{a}^{-1}\), where γ stands for the coupling strength in the rescaled nondimensional model. The algebraic connectivity is easy to compute numerically, and for many graphs with symmetries, including those in Examples 2.1–2.3, it is known analytically. Moreover, effective asymptotic estimates of the algebraic connectivity are available for certain classes of graphs that are important in applications, such as random graphs (Friedman 2008) and expanders (Hoory et al. 2006).
The algebraic connectivities of the graphs in Examples 2.1 and 2.2, \(\mathfrak{a}=O(n^{-2})\), tend to zero as n→∞. Therefore, for such networks, the coupling strength must be increased significantly to maintain synchrony as the network grows in size. This situation is typical for symmetric or almost symmetric graphs. In contrast, for random graphs like the one in Example 2.4, the algebraic connectivity is known to remain bounded away from zero (with high probability) as n→∞ (Friedman 2008; Hoory et al. 2006). Therefore, synchronization in dynamical networks on such graphs can be guaranteed with a finite coupling strength even as the size of the network grows without bound. This counter-intuitive property is intrinsic to networks on expanders, which are sparse, well-connected graphs (Hoory et al. 2006; Sarnak 2004). For a more detailed discussion of the role of network topology in synchronization, we refer the interested reader to Sect. 5 in Medvedev (2011b).
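The criterion \(\gamma>\mathfrak{a}^{-1}\) makes the scaling argument concrete. The sketch below evaluates the estimated threshold \(1/\mathfrak{a}\) for the nearest-neighbor array (using the path-graph closed form \(\mathfrak{a}=2(1-\cos(\pi/n))\)), for all-to-all coupling (\(\mathfrak{a}=n\)), and for random d-regular graphs, using \(\mathfrak{a}\approx d-2\sqrt{d-1}\) as a large-n approximation suggested by Friedman (2008). These are rough estimates in the rescaled units of γ, not a substitute for the analysis in Sect. 4.4; the function names are hypothetical.

```python
import numpy as np

def threshold_path(n):
    """1/a for the nearest-neighbor array, with a = 2(1 - cos(pi/n))."""
    return 1.0 / (2.0 * (1.0 - np.cos(np.pi / n)))

def threshold_complete(n):
    """1/a for all-to-all coupling, where a = n."""
    return 1.0 / n

def threshold_random_regular(d):
    """Approximate 1/a for large random d-regular graphs, using a ~ d - 2 sqrt(d - 1)."""
    return 1.0 / (d - 2.0 * np.sqrt(d - 1.0))

for n in (10, 100, 1000):
    print(f"n={n:5d}  path: {threshold_path(n):10.2f}  "
          f"all-to-all: {threshold_complete(n):.4f}  "
          f"random 4-regular: {threshold_random_regular(4):.3f}")
```

The first column grows roughly like \(n^{2}/\pi^{2}\), whereas the last stays near 1.87 for d=4, independently of n.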

The discussion in the previous paragraph suggests that connectivity is important in the strong coupling regime. Interestingly, the dynamics in the weak coupling regime remains largely unaffected by the connectivity. For instance, the firing rate plots for the random and symmetric degree-4 networks (Example 2.4) shown in Fig. 5b coincide over an interval of g near 0. Furthermore, the plots for the same pair of networks based on the modified model (2.6) and (2.7) are almost identical (Fig. 5c), despite the disparate connectivity patterns underlying these networks. The variational analysis in Sect. 4.3 shows that, in the weak coupling regime, the firing rate of the network depends, to leading order, only on the number of connections between cells. The role of the connectivity in shaping the network dynamics increases in the strong coupling regime.

4 The Variational Analysis of Spontaneous Dynamics

In this section, we analyze dynamical regimes of the coupled system (2.6) and (2.7) under the variation of the coupling strength. In Sect. 4.1, we derive an approximate model using the center-manifold reduction. In Sect. 4.2, we relate the activity patterns of the coupled system to the minima of a certain continuous function on the surface of an n-cube. The analysis of the minimization problem for weak, strong, and intermediate coupling is used to characterize the dynamics of the coupled system in these regimes.

4.1 The Center-Manifold Reduction

In preparation for the analysis of the coupled system (2.6) and (2.7), we approximate it by a simpler system using the center-manifold reduction (Chow and Hale 1982; Guckenheimer and Holmes 1983). To this end, we first review the bifurcation structure of the model. Denote the equations governing the deterministic dynamics of a single neuron by

where \(x\in\mathbb{R}^{d}\), \(f:\mathbb{R}^{d}\times\mathbb{R}^{1}\to\mathbb{R}^{d}\) is a smooth function, and μ is a small parameter that controls the distance of (4.1) from the saddle-node bifurcation.

Assumption 4.1

Suppose that at μ=0 the unperturbed problem (4.1) has a nonhyperbolic equilibrium at the origin O such that \(Df(0,0)\) has a single zero eigenvalue and the rest of the spectrum lies to the left of the imaginary axis. Suppose further that at μ=0 there is a homoclinic orbit to O that enters the origin along the 1D center manifold.

Then, under appropriate nondegeneracy and transversality conditions on the local saddle-node bifurcation at μ=0, for μ near zero the homoclinic orbit is transformed either into a unique asymptotically stable periodic orbit or into a closed invariant curve \(C_{\mu}\) containing two equilibria, a node and a saddle (Chow and Hale 1982) (Fig. 10). Without loss of generality, we assume that the latter case is realized for small positive μ and the periodic orbit exists for negative μ. Let μ>0 be a sufficiently small fixed number, i.e., (4.1) is in the excitable regime (Fig. 10). For simplicity, we assume that the stable node near the origin is the only attractor of (4.1).

Fig. 10 Local system (4.1) near the saddle-node on an invariant circle bifurcation

We are now in a position to formulate our assumptions on the coupled system. Consider n local systems (4.1) placed at the nodes of the connected graph \(G=(V(G),E(G))\), \(|V(G)|=n\), and coupled electrically:

where \(X=(x^{(1)},x^{(2)},\ldots,x^{(n)})^{T}\in\mathbb{R}^{d}\times\cdots\times\mathbb{R}^{d}=\mathbb{R}^{nd}\), \(F(X,\mu)=(f(x^{(1)},\mu),f(x^{(2)},\mu),\ldots,f(x^{(n)},\mu))^{T}\), \(I_{n}\) is the \(n\times n\) identity matrix, \(P\in\mathbb{R}^{d\times d}\), and \(L\in\mathbb{R}^{n\times n}\) is the Laplacian of G. The matrix \(J\in\mathbb{R}^{d\times d}\) defines the linear combination of the local variables engaged in coupling. In the neuronal network model above, \(J=\operatorname{diag}(1,0)\). Parameters g and σ control the coupling strength and the noise intensity, respectively, and \(\dot{W}\) is a Gaussian white noise process in \(\mathbb{R}^{nd}\). The local systems are taken to be identical for simplicity; the analysis can be extended to nonhomogeneous networks.

We next turn to the center-manifold reduction of (4.2). Consider \((4.2)_{0}\) (the zero subscript refers to σ=0) for μ=g=0. By our assumptions on the local system (4.1), \(Df(0,0)\) has a 1D kernel. Denote

By the center-manifold theorem, there exist a neighborhood B of the origin in the phase space of (4.2) and δ>0 such that for |μ|<δ and |g|<δ there is an attracting, locally invariant, n-dimensional slow manifold \(\mathcal{M}_{\mu,g}\) in B. Trajectories that remain in B for a sufficiently long time can be approximated by those lying in \(\mathcal{M}_{\mu,g}\). Thus, the dynamics of \((4.2)_{0}\) can be reduced to \(\mathcal{M}_{\mu,g}\), whose dimension is d times smaller than that of the phase space of \((4.2)_{0}\). The center-manifold reduction is standard; its justification relies on the Lyapunov–Schmidt method and Taylor expansions (cf. Chow and Hale 1982). Formally, the reduced system is obtained by projecting \((4.2)_{0}\) onto the center subspace of \((4.2)_{0}\) for μ=g=0 (see Kuznetsov 1998):

where \(y=(y_{1},y_{2},\ldots,y_{n})\in\mathbb{R}^{n}\) and \(y^{2}:=(y^{2}_{1},y^{2}_{2},\ldots,y^{2}_{n})\), provided that the following nondegeneracy conditions hold:

Conditions (4.5) and (4.6) are the nondegeneracy and transversality conditions of the saddle-node bifurcation in the local system (4.1). Condition (4.7) guarantees that the projection of the coupling onto the center subspace is nontrivial. All these conditions are open. Without loss of generality, we assume that the nonzero coefficients \(a_{1}\), \(a_{2}\), \(a_{3}\) are positive.

Next, we include the random perturbation in the reduced model. Note that near the saddle-node bifurcation (0<μ≪1), the vector field of \((4.2)_{0}\) is much stronger in the directions transverse to \(\mathcal{M}_{\mu,g}\) than in the tangential directions. Results of the geometric theory of randomly perturbed fast–slow systems imply that the trajectories of (4.2) with small positive σ that start close to the node of \((4.2)_{0}\) remain in a small neighborhood of \(\mathcal{M}_{\mu,g}\) on finite time intervals with overwhelming probability (see Berglund and Gentz 2006 for specific estimates). To obtain the leading-order approximation of the stochastic system (4.2) near the slow manifold, we project the random perturbation onto the center subspace of \((4.2)_{0}\) for μ=g=0 and add the resulting term to the reduced equation (4.4):

We replace \(B\dot{W}\) by the identically distributed \(a_{4} \dot{w}\), where \(\dot{w}\) is a white noise process in \(\mathbb{R}^n\) and \(a_4=|P^{\mathsf T}p|\). Here, |⋅| stands for the Euclidean norm of \(P^{\mathsf T}p\in\mathbb{R}^d\). After rescaling the resulting equation and ignoring the higher order terms, we arrive at the following reduced model:

where w stands for a standard Brownian motion in \(\mathbb{R}^n\) and \(\mathbf{1}_n=(1,1,\ldots,1)\in\mathbb{R}^n\). Here, with a slight abuse of notation, we continue to use σ to denote the small parameter in the rescaled system. In the remainder of this paper, we analyze the reduced model (4.9).

4.2 The Exit Problem

In this subsection, the problem of identifying the most likely dynamical patterns generated by (4.2) is reduced to a minimization problem for a smooth function on the surface of the unit cube.

Consider the initial value problem for (4.9)

where

and

where the auxiliary parameter b>0 will be specified later. Let

denote a subset of the boundary ∂D of D. If \(z(\tau)\in\partial^{+}D\), then at least one of the neurons in the network is at the firing threshold. It will be shown below that the trajectories of (4.10) exit from D through \(\partial^{+}D\) with probability 1 as σ→0, provided b>0 is sufficiently large (see Note 1). Therefore, the statistics of the first exit time

and the distribution of the location of the points of exit \(z(\tau)\in\partial^{+}D\) characterize the statistics of the interspike intervals and the most probable firing patterns of (2.1) and (2.2), respectively. The Freidlin–Wentzell theory of large deviations (Freidlin and Wentzell 1998) yields the asymptotics of τ and z(τ) for small σ>0.

To apply the large-deviation estimates to the problem at hand, we rewrite (4.10) as a randomly perturbed gradient system

where

where 〈⋅,⋅〉 stands for the inner product in \(\mathbb{R}^{n-1}\). The additive constant 2/3 in the definition of the potential function F(ξ) normalizes the value of the potential at its local minimum: F(−1)=0.
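A small numerical sketch of this normalization follows. The closed form of F in (4.16) is not reproduced in this section, so we assume \(F(x)=x-\frac{x^3}{3}+\frac23\), the cubic consistent with observation (b) in the proof of Theorem 4.7 and with F(−1)=0. Under this assumption the bottom of the well is at x=−1, the barrier top (the firing threshold) is at x=1, and the barrier height is F(1)−F(−1)=4/3.

```python
import numpy as np

# Assumption: closed form of the potential F from (4.16), inferred from
# F(1 - xi) = 4/3 - xi**2 + xi**3/3 (proof of Theorem 4.7) and F(-1) = 0.
def F(x):
    return x - x**3 / 3.0 + 2.0 / 3.0

def dF(x):
    # F'(x) = 1 - x**2: critical points at x = -1 and x = 1.
    return 1.0 - x**2

def d2F(x):
    # F''(x) = -2x: positive at x = -1 (minimum), negative at x = 1 (maximum).
    return -2.0 * x

barrier = F(1.0) - F(-1.0)
print(F(-1.0), F(1.0), barrier)
```

The same barrier value 4/3 reappears below as the minimal value of the uncoupled potential on a face of the cube.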

The following theorem summarizes the implications of the large-deviation theory for (4.15).

Theorem 4.2

Let \(\bar{z}^{(1)},\bar{z}^{(2)},\ldots, \bar{z}^{(k)}, \;k\in\mathbb{N}\), denote the points of minima of \(U_\gamma(z)\) on ∂D

and \(\bar{Z}=\bigcup_{i=1}^{k}\{\bar{z}^{(i)}\}\). Then, for any \(z_0\in D\) and δ>0,

where ρ(⋅,⋅) stands for the distance in \(\mathbb{R}^n\).

The statements (A)–(C) can be shown by adapting the proofs of Theorems 2.1, 3.1, and 4.1 of Chap. 4 of Freidlin and Wentzell (1998) to the case of the action functional with multiple minima.

Theorem 4.2 reduces the exit problem for (4.10) to the minimization problem

In the remainder of this section, we study (4.20) for weak, strong, and intermediate coupling strengths.

4.3 The Weak Coupling Regime

In this subsection, we study the minima of \(U_\gamma(z)\) on ∂D for small |γ|. First, we locate the points of minima of \(U_\gamma(z)\) for γ=0 (cf. Lemma 4.3). Then, using the Implicit Function Theorem, we continue them to small |γ| (cf. Theorem 4.4).

Lemma 4.3

Let b>0 in the definition of D (4.12) be fixed. The minimum of \(U_0(z)\) on \(\partial^{+}D\) is achieved at n points

The minimal value of \(U_0(z)\) on ∂D is

Proof

Denote

and \(\partial_{i} D=\partial_{i}^{+} D\cup\partial_{i}^{-} D, i\in[n]\).

Consider the restriction of \(U_0(z)\) to \(\partial_{1}^{+} D\)

where \(y=(y_1,y_2,\ldots,y_{n-1})\). The gradient of \(\tilde{U}_{0}\) is equal to

The definition of f (4.11) implies that \(U_0\) restricted to \(\partial_{1}^{+} D\) has a unique critical point at \(z=(1,-\mathbf{1}_{n-1})\) and \(U_0((1,-\mathbf{1}_{n-1}))=4/3\). On the other hand, on the boundary \(\partial \partial_{1}^{+} D\) of \(\partial_{1}^{+} D\), the minimum of \(U_0(z)\) satisfies

for any b>0 in (4.12).

Likewise, as follows from the definitions of F (4.16) and D (4.12), \(U_0(z)>4/3\) for \(z\in\partial_{1}^{-} D\), for any choice of b>0 in (4.12). Thus, \(z=(1,-\mathbf{1}_{n-1})\) minimizes \(U_0\) over \(\partial_1 D\). The lemma is proved by repeating the above argument for the remaining faces \(\partial_i D\), \(i\in[n]\setminus\{1\}\). □
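Lemma 4.3 can be checked numerically on a small example. This sketch assumes that the uncoupled potential is \(U_0(z)=\sum_i F(z_i)\) with \(F(x)=x-\frac{x^3}{3}+\frac23\) (consistent with \(U_0((1,-\mathbf{1}_{n-1}))=4/3\) above), and it replaces D by the box \([-b,1]^n\) with the hypothetical value b=1.5; the actual D is the one defined in (4.12). A grid search over the face \(z_1=1\) for n=3 recovers the minimizer (1,−1,−1) and the minimal value 4/3.

```python
import numpy as np

def F(x):
    # Assumed closed form of the potential (4.16); see the lead-in.
    return x - x**3 / 3.0 + 2.0 / 3.0

def U0(z):
    # Assumed uncoupled potential: U_0(z) = sum_i F(z_i), summed over the last axis.
    return np.sum(F(np.asarray(z, dtype=float)), axis=-1)

b = 1.5                              # hypothetical lower edge: box [-b, 1]^n
grid = np.linspace(-b, 1.0, 251)     # spacing 0.01, contains -1
Y1, Y2 = np.meshgrid(grid, grid, indexing="ij")
# The face z_1 = 1 of the box for n = 3: points (1, y_1, y_2).
Z = np.stack([np.ones_like(Y1), Y1, Y2], axis=-1)
values = U0(Z)
idx = np.unravel_index(np.argmin(values), values.shape)
z_min, u_min = Z[idx], values[idx]
print(z_min, u_min)
```

A grid search is used only because the example is tiny; it is not meant as a general minimization method.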

Theorem 4.4

Suppose b>0 in (4.12) is sufficiently large. There exists \(\gamma_0>0\) such that, for \(|\gamma|\le\gamma_0\), on each face \(\partial_{i}^{+} D\), \(i\in[n]\), \(U_\gamma(z)\) achieves its minimum

where \(\phi^{i}:\ [-\gamma_{0}, \gamma_{0}]\to\partial_{i}^{+} D, i\in[n]\), is a smooth function such that

and \(l^{i}\in\mathbb{R}^{n-1}\) is the ith column of the graph Laplacian L with its ith entry deleted. The equations in (4.24) are written using the following local coordinates for \(\partial^{+}_{i} D\)

Moreover, the minimal value of \(U_\gamma\) on \(\partial_{i}^{+} D\) is given by

Consequently,

Proof

Let \(\tilde{U}_{\gamma}(y):=U_{\gamma}((1,y)),\; y\in \mathbb{R}^{n-1}\), denote the restriction of \(U_\gamma\) to \(\partial_{1}^{+} D\):

where \(y=(y_{1},y_{2},\ldots,y_{n-1}),\; z(y):=(1,y_{1},y_{2},\ldots,y_{n-1})\).

Next, we compute the gradient of \(\tilde{U}_{\gamma}\):

where \(\tilde{\mathbf{f}}(y)=(f(y_{1}), f(y_{2}),\ldots, f(y_{n-1}))\). Further,

where \(L^{i}\), \(i\in[n]\), stands for the matrix obtained from L by deleting the ith row and ith column. By plugging (4.29) into (4.28), we have

The equation for the critical points has the following form:

Note that

Above we used the relation

which follows from the fact that the rows of L sum to zero (see Note 2).
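The zero row sums of L are easy to check numerically. A minimal sketch, assuming the coboundary convention of one row per edge with entries −1 and +1, so that \(L=H^{\mathsf T}H\); the example graph is hypothetical. Deleting the ith row and column gives \(L^i\), and the identity \(L^i\mathbf{1}_{n-1}+l^i=0\) is one consequence of the zero row sums (we do not claim it is the exact form of the relation displayed above).

```python
import numpy as np

def coboundary(edges, n):
    """Coboundary (edge-node incidence) matrix: row k has -1 at the tail
    and +1 at the head of edge k; the orientation is arbitrary."""
    H = np.zeros((len(edges), n))
    for k, (a, b) in enumerate(edges):
        H[k, a], H[k, b] = -1.0, 1.0
    return H

# Hypothetical example: a 4-cycle with one chord.
n = 4
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
H = coboundary(edges, n)
L = H.T @ H  # graph Laplacian L = D - A

i = 0
keep = [j for j in range(n) if j != i]
L_i = L[np.ix_(keep, keep)]  # L^i: i-th row and column deleted
l_i = L[keep, i]             # l^i: i-th column with its i-th entry deleted

row_sums = L.sum(axis=1)
identity_residual = L_i @ np.ones(n - 1) + l_i
print(row_sums, identity_residual)
```

Because node 0 has neighbors, \(l^0\) is nonzero, in line with Note 2.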

By the Implicit Function Theorem, for small |γ|, the unique solution of (4.31) is given by the smooth function \(\phi^{1}:[-\gamma_{0},\gamma_{0}]\to\mathbb{R}^{n-1}\) that satisfies

By taking into account

from (4.34) we have

This shows (4.24). To show (4.25), we use the Taylor expansion of \(\tilde{U}_{\gamma}\):

By choosing b>0 in (4.12) large enough, one can ensure that \(\tilde{U}_{\gamma}(\phi^{1}(\gamma))\), \(\gamma\in[-\gamma_0,\gamma_0]\), remains smaller than the values of \(U_\gamma(z)\) on the boundary \(\partial\partial^{+}_{1} D\) of the face. To complete the proof, one only needs to apply the same argument to all other faces of \(\partial^{+}D\) and note that on \(\partial^{-}D\) the values of \(U_\gamma\), \(\gamma\in[-\gamma_0,\gamma_0]\), can be made arbitrarily large by choosing sufficiently large b>0 in (4.12). □

Remark 4.5

The second equation in (4.24) shows that, as γ>0 increases, the minima of the potential function lying on the faces that correspond to connected cells move toward the common boundaries of these faces.

4.4 The Strong Coupling Regime

For small |γ|, the minima of \(U_\gamma(z)\) are located near the minima of the potential function Φ(z) (cf. (4.16)). In this subsection, we show that for larger |γ|, the minima of \(U_\gamma(z)\) are strongly influenced by the quadratic term 〈Hz,Hz〉, which corresponds to the coupling operator in the differential equation model (4.10). To study the minimization problem for |γ|≫1, we rewrite \(U_\gamma(z)\) as follows:

Thus, for γ≫1, the problem of minimizing \(U_\gamma\) becomes the minimization problem for

Lemma 4.6

\(U^{0}(z)\) attains its global minimum on \(\partial^{+}D\) at \(z=\mathbf{1}_n\):

Proof

\(U^{0}(z)=\langle Hz,Hz\rangle\) is nonnegative; moreover,

Finally, \(\{\mathbf{1}_n\}=\ker H\cap\partial^{+}D\). □

Theorem 4.7

Let \(\lambda_{1}(L^{i}),\; i\in[n]\), denote the smallest eigenvalue of the matrix \(L^{i}\) obtained from L by deleting the ith row and the ith column. Then, for \(0<\lambda\le\frac12\min_{i\in[n]}\lambda_{1}(L^{i})\), \(U^{\lambda}(z)\) achieves its minimal value on ∂D at \(z=\mathbf{1}_n\):

provided b>0 in the definition of D (cf. (4.12)) is sufficiently large.

Remark 4.8

By the interlacing eigenvalues theorem (cf. Theorem 4.3.8, Horn and Johnson 1999), \(\lambda_{1}(L^{i})\le\lambda_{2}(L),\;\forall i\in[n]\). (Note that \(L^{i}\) is not a graph Laplacian.) Furthermore, \(\lambda_{1}(L^{i})>0, \; \forall i\in[n]\), because G is connected (cf. Theorem 6.3, Biggs 1993). With these observations, Theorem 4.7 yields an estimate for the onset of synchrony in terms of the eigenvalues of L:

Note that (4.41) yields smaller bounds on the coupling strength required for the onset of synchrony in graphs with larger algebraic connectivity. In particular, for families of expanders \(\{G_n\}\) (cf. Example 2.5), it provides bounds on the coupling strength guaranteeing synchronization that are uniform in \(n\in\mathbb{N}\).
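Theorem 4.7 and Remark 4.8 are easy to explore numerically. The sketch below computes \(\lambda^{*}=\frac12\min_i\lambda_1(L^i)\) and checks the interlacing bound \(\lambda_1(L^i)\le\lambda_2(L)\) for cycles and complete graphs of growing size. Converting \(\lambda^{*}\) into a coupling threshold \(\gamma\ge1/\lambda^{*}\) assumes \(\lambda=\gamma^{-1}\), as the rescaling in Sect. 4.4 suggests. Complete graphs are used only as a simple family whose \(\lambda_1(L^i)\) stays bounded away from zero; they are not the bounded-degree expanders of Example 2.5.

```python
import numpy as np

def cycle_laplacian(n):
    # Cycle C_n: degree 2, algebraic connectivity 2 - 2cos(2*pi/n) -> 0.
    A = np.zeros((n, n))
    for v in range(n):
        w = (v + 1) % n
        A[v, w] = A[w, v] = 1.0
    return np.diag(A.sum(axis=1)) - A

def complete_laplacian(n):
    # Complete graph K_n: L = n I - J.
    return n * np.eye(n) - np.ones((n, n))

def sync_bound(L):
    """Return (lambda_star, lambda_2(L)) with
    lambda_star = min_i lambda_1(L^i) / 2, the bound in Theorem 4.7."""
    n = L.shape[0]
    lam1 = []
    for i in range(n):
        keep = [j for j in range(n) if j != i]
        lam1.append(np.linalg.eigvalsh(L[np.ix_(keep, keep)])[0])  # smallest eigenvalue
    lam2 = np.linalg.eigvalsh(L)[1]  # algebraic connectivity
    return 0.5 * min(lam1), lam2

results = {}
for n in (8, 16, 32):
    for name, L in (("cycle", cycle_laplacian(n)), ("complete", complete_laplacian(n))):
        lam_star, lam2 = sync_bound(L)
        results[(name, n)] = (lam_star, lam2)
        # Assuming lambda = 1/gamma, synchrony is guaranteed for gamma >= 1/lam_star.
        print(f"{name:8s} n={n:2d}  gamma >= {1.0 / lam_star:8.2f}  lambda_2 = {lam2:.4f}")
```

For the cycles, \(\lambda_1(L^i)=2-2\cos(\pi/n)\), so the threshold grows roughly like \(2n^2/\pi^2\); for \(K_n\), \(\lambda_1(L^i)=1\) and the threshold stays at γ=2 for every n.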

For the proof of Theorem 4.7, we need the following auxiliary lemma.

Lemma 4.9

For \(z\in\partial^{+}D\), there exists \(i\in[n]\) such that

where \(y=(y_1,y_2,\ldots,y_{n-1})^{\mathsf T}\) is defined by

Proof

Since \(z=(z_1,z_2,\ldots,z_n)^{\mathsf T}\in\partial^{+}D\), \(z_i=1\) for some \(i\in[n]\). Without loss of generality, let \(z_1=1\). Then

and

where the quadratic function \(Q(y)=\langle H\tilde{y}, H\tilde{y}\rangle\). Differentiating Q(y) yields

Therefore,

□
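The computation behind Lemma 4.9 rests on a quadratic-form identity: if \(z_i=1\) and \(z=\mathbf{1}_n-\tilde y\), where \(\tilde y\) is y with a zero inserted in the ith slot, then \(Hz=-H\tilde y\) and \(\langle Hz,Hz\rangle=\tilde y^{\mathsf T}L\tilde y=y^{\mathsf T}L^{i}y\). The sketch below checks this numerically on a hypothetical four-node graph, assuming \(L=H^{\mathsf T}H\); it is our reading of the identity, since the displayed formulas of the lemma are not reproduced here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical example: the path 0-1-2-3 plus the edge (1, 3).
n = 4
edges = [(0, 1), (1, 2), (2, 3), (1, 3)]
H = np.zeros((len(edges), n))
for k, (a, b) in enumerate(edges):
    H[k, a], H[k, b] = -1.0, 1.0
L = H.T @ H

i = 0                                   # neuron at threshold: z_i = 1
keep = [j for j in range(n) if j != i]
L_i = L[np.ix_(keep, keep)]

y = rng.uniform(0.0, 2.0, size=n - 1)   # distances of the other neurons from threshold
y_tilde = np.zeros(n)
y_tilde[keep] = y                       # insert a zero in the i-th slot
z = np.ones(n) - y_tilde                # a point of the face z_i = 1

lhs = np.dot(H @ z, H @ z)              # <Hz, Hz>
rhs = y @ L_i @ y                       # y^T L^i y
print(lhs, rhs)
```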

Proof of Theorem 4.7

Let \(z\in\partial^{+}D\). By Lemma 4.9, for some \(i\in[n]\) and y defined in (4.43), we have

We will use the following observations:

(a) \(L^{i}\) is a positive definite matrix and, therefore,

$$y^\mathsf{T}{L}^iy\ge\lambda_1\bigl(L^i\bigr)y^\mathsf{T}{y}.$$

(b) For ξ≥0,

$$F(1-\xi)={4\over3} -\xi^2 +{\xi^3\over3}\ge{4\over3} -\xi^2.$$

(c)

$$\varPhi (\mathbf{1}_n)= {4\lambda n\over3}.$$
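Observation (b) can be verified by direct expansion. The derivation assumes \(F(x)=x-\frac{x^3}{3}+\frac23\), the cubic that makes the equality in (b) hold identically (set x=1−ξ) and that satisfies the normalization F(−1)=0 stated in Sect. 4.2:

$$\begin{aligned} F(1-\xi)&=(1-\xi)-\frac{(1-\xi)^3}{3}+\frac23\\ &=1-\xi-\frac{1-3\xi+3\xi^2-\xi^3}{3}+\frac23\\ &=\frac43-\xi^2+\frac{\xi^3}{3}\;\ge\;\frac43-\xi^2,\qquad \xi\ge0, \end{aligned}$$

since the cubic term \(\xi^3/3\) is nonnegative for ξ≥0.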

Using (a) and (b), from (4.44), we have

provided \(\lambda<\frac12\lambda_{1}(L^{i})\).

This shows that \(z=\mathbf{1}_n\) minimizes \(U^{\lambda}\) on \(\partial^{+}D\) for \(\lambda<\frac12\min_{i\in[n]}\lambda_{1}(L^{i})\). On the other hand, on \(\partial^{-}D\), \(U^{\lambda}\) can be made arbitrarily large for any λ>0, provided b>0 in (4.12) is sufficiently large. □

4.5 Intermediate Coupling Strength: Formation of Clusters

In this subsection, we develop a geometric interpretation of the spontaneous dynamics of (2.6) and (2.7). After introducing certain auxiliary notation, we discuss how the spatial location of the minima of U γ (z) on the surface of the n-cube encodes the most likely activity patterns of (2.6) and (2.7). Then we proceed to derive a lower bound on the coupling strength necessary for the development of coherent structures.

Let \(k\in[n]\), \(1\le i_1<i_2<\cdots<i_k\le n\), and define an (n−k)-dimensional face of D by

The union of all (n−k)-dimensional faces is denoted by

Suppose ξ is a point of minimum of \(U_\gamma(z)\) on \(\partial^{+}D\). If \(\xi\in\partial^{n-k}D\), k≥2, then with high probability the network discharges in k-clusters. The analysis in Sect. 4.3 shows that for small |γ| there is practically no correlation between the activity of distinct neurons. On the other hand, when the coupling is strong, all cells fire in unison (cf. Sect. 4.4). Lemma 4.10 provides a lower bound on the coupling strength needed for the formation of clusters.
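The geometric reading of a minimizer is mechanical: the coordinates of ξ equal to 1 are the neurons at threshold, and their number k determines the face \(\partial^{n-k}D\). The helper below is hypothetical (not part of the paper's method) and uses a tolerance to decide which coordinates sit at the threshold.

```python
import numpy as np

def decode_pattern(xi, tol=1e-8):
    """Hypothetical helper: for a point xi of the upper boundary of the cube,
    return the neurons at the firing threshold (coordinates equal to 1) and the
    dimension n - k of the face of D on which xi lies."""
    xi = np.asarray(xi, dtype=float)
    at_threshold = np.flatnonzero(np.abs(xi - 1.0) <= tol)
    k = at_threshold.size
    return at_threshold.tolist(), xi.size - k

print(decode_pattern([1.0, -0.9, -1.0, -0.95]))  # one neuron fires alone
print(decode_pattern([1.0, 1.0, -0.8, 1.0]))     # a 3-cluster
print(decode_pattern(np.ones(4)))                # the vertex 1_n: full synchrony
```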

Lemma 4.10

Let ξ∈∂D be a point of global minimum of \(U_\gamma\) on ∂D. If \(\xi\in\partial^{n-k}D\) for some k≥2, then

Proof

Suppose \(\hat{z}\in\partial^{n-2}D\). Without loss of generality, we assume that

Denote \(y=(y_{1},y_{2},y_{3},\ldots,y_{n-1})=(y_{1},\hat{y}), \; y_{1}\ge0\), and \(z=\mathbf{1}_n-(0,y)\). Thus,

where \(L^{12}\) is the matrix obtained from L by deleting the first and the second rows and columns. The Laplacian of G can be represented as

where the adjacency matrix A is nonnegative (cf. (2.11)). Therefore, for nonnegative \(y=(y_{1},\hat{y})\in \mathbb{R}^{n-1}\),

Further, for any 0<δ<1,

provided \(0\le y_2<3\delta\). The combination of (4.48)–(4.51) yields

for \(y_2\in(0,3\delta)\). By (4.52),

provided

The statement of the lemma follows from the observation above by noting that δ∈(0,1) in (4.53) is arbitrary. □

5 An Alternative View on Synchrony

The variational analysis of the previous section shows that when the coupling is strong (\(\gamma>(\lambda_2(L))^{-1}\)), the neurons in the network fire together (cf. Theorem 4.7). In this section, we use a complementary approach to studying synchrony in the coupled system. We show that strong coupling brings about the separation of the timescales in the system’s dynamics, which defines two principal modes in the strong coupling regime: fast synchronization and slow large-deviation type escape from the potential well. The analysis of the fast subsystem elucidates the stability of the synchronization subspace. In particular, it reveals the contribution of the network topology to the robustness of synchrony. The analysis of the slow subsystem yields the asymptotic rate of the network activity in the strong coupling regime (cf. Theorem 4.7).

5.1 The Slow–Fast Decomposition

Our first goal is to show that when coupling is strong the dynamics of the coupled system (4.10) has two disparate timescales. To this end, we introduce the following coordinate transformation:

where

and \(\tilde{H}\in \mathbb{R}^{(n-1)\times n}\) is the coboundary matrix corresponding to the spanning tree \(\tilde{G}\) of G (see (2.9)).

Lemma 5.1

Equation (5.1) defines an invertible linear transformation:

Matrix \(S=(s_{ij})\in\mathbb{R}^{n\times(n-1)}\) satisfies

Proof

Fix \(i\in[n]\). For each \(j\in[n]\setminus\{i\}\), there exists a unique path connecting nodes \(v_i\in V(G)\) and \(v_j\in V(G)\) and belonging to \(\tilde{G}\)

Thus,

where \(\xi=(\xi_1,\xi_2,\ldots,\xi_{n-1})\). By summing the n−1 equations (5.5), adding the identity \(z_i=z_i\) to the resulting equation, and dividing the result by n, we obtain

The first inequality in (5.3) follows from the formula for \(s_{ij}\) in (5.6) and \(|\sigma_k(i,j)|\le1\). To show the second identity in (5.3), add up equations (5.6) for \(i\in[n]\) and use the definition of η:

□
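A numerical sketch of Lemma 5.1. Because (5.1) is not reproduced here, we assume it reads \(\xi=\tilde Hz\) and \(\eta=n^{-1}\mathbf{1}_n^{\mathsf T}z\) (this definition of η is consistent with the proof of Lemma 5.3). Stacking these maps gives an n×n matrix whose inverse has the form \(z=\eta\mathbf{1}_n+S\xi\). The checks below confirm, on a hypothetical graph, that the entries of S are bounded by 1 in absolute value and that its columns sum to zero, which is our reading of the inequality and the identity in (5.3).

```python
import numpy as np

def coboundary(edges, n):
    # One row per edge: -1 at the tail, +1 at the head (orientation arbitrary).
    H = np.zeros((len(edges), n))
    for k, (a, b) in enumerate(edges):
        H[k, a], H[k, b] = -1.0, 1.0
    return H

# Hypothetical graph; its first n-1 edges form the spanning tree (cf. (2.9)).
n = 5
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (1, 3)]
H_tree = coboundary(edges[: n - 1], n)

# Assumed form of (5.1): xi = H_tree z, eta = mean of z.
T = np.vstack([H_tree, np.ones((1, n)) / n])
T_inv = np.linalg.inv(T)                   # invertible because the tree spans G
S, c = T_inv[:, : n - 1], T_inv[:, n - 1]  # z = S xi + eta * c

z = np.random.default_rng(1).normal(size=n)
xi, eta = H_tree @ z, z.mean()
z_back = S @ xi + eta * c
print(np.round(S, 3))
```

The last column of the inverse is \(\mathbf{1}_n\), which is why the synchronous state corresponds to ξ=0.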

Lemma 5.2

Suppose G is a connected graph and \(L\in\mathbb{R}^{n\times n}\) is its Laplacian. There exists a unique \(\hat{L}\in \mathbb{R}^{(n-1)\times(n-1)}\) such that

where \(\tilde{H}\in \mathbb{R}^{(n-1)\times n}\) is the coboundary matrix of \(\tilde{G}\subset G\), a spanning tree of G. The spectrum of \(\hat{L}\) consists of all nonzero eigenvalues of L

Proof

Since G is connected and \(\tilde{G}\) is a spanning tree of G,

The existence and uniqueness of the solution of the matrix equation (5.7), \(\hat{L}\), is shown in Lemma 2.3 of Medvedev (2011b). Equation (5.8) follows from Lemma 2.5 of Medvedev (2011b). □
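One concrete way to obtain \(\hat L\), assuming (5.7) reads \(\tilde HL=\hat L\tilde H\) (as its use in the proof of Lemma 5.3 suggests): since \(\tilde H\) has full row rank, \(\tilde H\tilde H^{+}=I_{n-1}\), and \(\hat L=\tilde HL\tilde H^{+}\) solves (5.7) because \(\tilde H^{+}\tilde H\) is the orthogonal projection onto the complement of \(\mathbf{1}_n\), which L annihilates. This pseudoinverse construction is our choice for illustration and may differ from the argument in Medvedev (2011b); the graph is hypothetical.

```python
import numpy as np

def coboundary(edges, n):
    # One row per edge: -1 at the tail, +1 at the head (orientation arbitrary).
    H = np.zeros((len(edges), n))
    for k, (a, b) in enumerate(edges):
        H[k, a], H[k, b] = -1.0, 1.0
    return H

# Hypothetical graph; its first n-1 edges form the spanning tree.
n = 5
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (1, 3)]
H = coboundary(edges, n)
L = H.T @ H
H_tree = coboundary(edges[: n - 1], n)

# pinv(H_tree) @ H_tree projects onto the complement of 1_n, and L 1_n = 0,
# so L_hat @ H_tree = H_tree @ L.
L_hat = H_tree @ L @ np.linalg.pinv(H_tree)

residual = np.abs(H_tree @ L - L_hat @ H_tree).max()
spec_hat = np.sort(np.linalg.eigvals(L_hat).real)
spec_L = np.sort(np.linalg.eigvalsh(L))[1:]  # drop the zero eigenvalue
print(residual, spec_hat, spec_L)
```

\(\hat L\) is generally not symmetric, but it is similar to the restriction of L to the complement of \(\mathbf{1}_n\), so its eigenvalues are real.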

We are now in a position to rewrite (4.10) in terms of (ξ,η).

Lemma 5.3

The following system of stochastic differential equations is equivalent in form to (4.10):

where

Throughout this section, \(\dot{W}\) and \(\dot{w}\) denote white noise processes in \(\mathbb{R}^n\) and ℝ, respectively.

Proof

After multiplying both sides of (4.10) by \(\tilde{H}\) and using (5.7), we have

where

This shows (5.10). By multiplying (4.10) by \(n^{-1}\mathbf{1}_{\mathbf{n}}^{\mathsf{T}}\), using \(\mathbf{1}_n\in\ker L^{\mathsf T}\) and the definition of η, we have

Here, we use the fact that the distributions of \(n^{-1}\mathbf{1}_{\mathbf{n}}^{\mathsf{T}}\dot{W}\) and \(\frac{1}{\sqrt{n}}\dot{w}\) coincide. Using the definition of f (4.11) and (5.3), we have

□

5.2 Fast Dynamics: Synchronization

For γ≫1, the system of equations (5.10) and (5.11) has two disparate timescales. The stable fixed point ξ=0 of the fast subsystem (5.10) corresponds to the synchronous state of (4.10):

In this subsection, we analyze the stability of the steady state of the fast subsystem. Specifically, we identify the network properties that determine the rate of convergence of the trajectories of the fast subsystem to the steady state and its degree of stability to random perturbations. These results elucidate the contribution of the network topology to the synchronization properties of the coupled system (4.10).

By switching to the fast time in (5.10) and (5.11), we obtain

where

The leading order approximation of the fast equation (5.14) does not depend on the slow variable Y:

The solution of (5.17) with deterministic initial condition \(\tilde{X}(0)=x\in \mathbb{R}^{n-1}\) is a Gaussian random process. The mean vector and the covariance matrix functions

satisfy linear equations (Karatzas and Shreve 1991):

Recall that \(\hat{L}\) is a positive definite matrix, whose smallest eigenvalue \(\lambda_{1}(\hat{L})\) is equal to the algebraic connectivity of G, \(\mathfrak{a}=\lambda_{2}(L)\) (see Lemma 5.2). By integrating the first equation in (5.19), we find that

for some \(C_1>0\). Thus, the trajectories of the fast subsystem converge in mean to the stable fixed point \(\tilde{X}=0\), which corresponds to the synchronization subspace of (4.10). Moreover, the rate of convergence is set by the algebraic connectivity \(\mathfrak{a}\).
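The exponential decay of the mean can be checked numerically. This sketch assumes that, in the fast time, the first equation in (5.19) reads \(\dot m=-\hat Lm\), so that \(m(t)=e^{-\hat Lt}x\). It builds \(\hat L=\tilde HL\tilde H^{+}\) (our choice of construction) for the cycle on six nodes, a hypothetical example with \(\mathfrak a=\lambda_2(L)=1\), and estimates the decay rate of |m(t)| at late times.

```python
import numpy as np
from scipy.linalg import expm

def coboundary(edges, n):
    # One row per edge: -1 at the tail, +1 at the head (orientation arbitrary).
    H = np.zeros((len(edges), n))
    for k, (a, b) in enumerate(edges):
        H[k, a], H[k, b] = -1.0, 1.0
    return H

# Hypothetical network: the cycle C_6, whose spanning tree is the path 0-1-...-5.
n = 6
edges = [(k, k + 1) for k in range(n - 1)] + [(n - 1, 0)]
H = coboundary(edges, n)
L = H.T @ H
H_tree = coboundary(edges[: n - 1], n)
L_hat = H_tree @ L @ np.linalg.pinv(H_tree)

a = np.sort(np.linalg.eigvalsh(L))[1]  # algebraic connectivity (equal to 1 for C_6)
x = np.random.default_rng(2).normal(size=n - 1)

def mean(t):
    # Assumed mean equation dm/dt = -L_hat m, so m(t) = exp(-L_hat t) x.
    return expm(-L_hat * t) @ x

# Empirical decay rate between two late times; it should approach a.
t1, t2 = 6.0, 10.0
rate = -np.log(np.linalg.norm(mean(t2)) / np.linalg.norm(mean(t1))) / (t2 - t1)
print(a, rate)
```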

Further,

measures the spread of the trajectories around the synchronization subspace. By integrating the second equation in (5.19), we have

where

Parameter \(\kappa(G,\tilde{G})\) quantifies the mean-square stability of the synchronization subspace. In Sect. 5.4, we show that \(\kappa(G,\tilde{G})\) depends on the properties of the cycle subspace of G.
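To make the mean-square spread concrete, here is a sketch of the stationary covariance of (5.17). It assumes, since the displays are not reproduced here, that in the fast time (5.17) reads \(dX=-\hat LX\,dt+\tilde\sigma\tilde H\,dW\) with W a standard Brownian motion in \(\mathbb{R}^n\) (the noise obtained by multiplying (4.10) by \(\tilde H\)). The stationary covariance C then solves the Lyapunov equation \(\hat LC+C\hat L^{\mathsf T}=\tilde\sigma^2\tilde H\tilde H^{\mathsf T}\), and one can check that \(C=\frac{\tilde\sigma^2}{2}\tilde HL^{+}\tilde H^{\mathsf T}\), where \(L^{+}\) is the Moore–Penrose pseudoinverse. The graph and \(\tilde\sigma\) are hypothetical, and how tr C relates to the normalization of \(\kappa(G,\tilde G)\) in (5.23) cannot be confirmed from this section.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov

def coboundary(edges, n):
    # One row per edge: -1 at the tail, +1 at the head (orientation arbitrary).
    H = np.zeros((len(edges), n))
    for k, (a, b) in enumerate(edges):
        H[k, a], H[k, b] = -1.0, 1.0
    return H

# Hypothetical network: a 6-cycle with the chord (0, 3); tree = first n-1 edges.
n = 6
edges = [(k, k + 1) for k in range(n - 1)] + [(n - 1, 0), (0, 3)]
H = coboundary(edges, n)
L = H.T @ H
H_tree = coboundary(edges[: n - 1], n)
L_hat = H_tree @ L @ np.linalg.pinv(H_tree)

sigma = 0.1  # hypothetical noise intensity (sigma tilde)

# Stationary covariance C of dX = -L_hat X dt + sigma H_tree dW:
# solve_continuous_lyapunov(A, Q) solves A C + C A^H = Q.
C = solve_continuous_lyapunov(L_hat, sigma**2 * H_tree @ H_tree.T)
spread = np.trace(C)  # stationary value of E|X|^2

# Closed form of the same quantity via the pseudoinverse of L.
closed_form = 0.5 * sigma**2 * np.trace(H_tree @ np.linalg.pinv(L) @ H_tree.T)
print(spread, closed_form)
```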

For small \(\tilde{\sigma}>0\), a typical trajectory of (5.17) converges to a small neighborhood of the stable equilibrium at the origin. However, eventually it leaves the neighborhood of the origin under persistent random perturbations. Next, we estimate the time that the trajectory of the fast subsystem spends near the origin.

Let ρ>0 and \(B_{\rho}=\{X\in \mathbb{R}^{n-1}:\; |X|<\rho\}\). By \(\tilde{X}(t)\) we denote the solution of (5.17) satisfying the initial condition \(\tilde{X}(0)=x\in B_{\rho}\). Define the first exit time of the trajectory of (5.17) from \(B_\rho\):

Using the large-deviation estimates (cf. Freidlin and Wentzell 1998), we have

where

is the minimum of the positive definite quadratic form \(\frac12\langle Lx,x\rangle\) on the boundary of \(B_\rho\).

By combining (5.25) and (5.26), we obtain the logarithmic asymptotics of the first exit time from \(B_\rho\):

For small δ>0 (i.e., for large γ≫1), (5.27) applies to the fast equation (5.14). Switching back to the original time, we rewrite the estimate for the first exit time for the fast equation (5.10):

Finally, choosing \(\rho:=\gamma^{-3+\iota\over2}\) for an arbitrary fixed \(0<\iota<{3\over2}\), we have

5.3 The Slow Dynamics: Escape from the Potential Well

We recap the results of the analysis of the fast subsystem. The trajectories of the fast subsystem (5.10) enter an \(O(\gamma^{-3+\iota\over 2})\) neighborhood of the stable equilibrium ξ=0 (corresponding to the synchronization subspace of the full system) in time \(O(\gamma^{-1}\ln\gamma)\) and remain there with overwhelming probability over time intervals of length \(O(\exp\{O(\sigma^{-2}\gamma^{\iota})\})\). During this time, the dynamics is driven by the slow equation (5.11), which we analyze next.

While the trajectory of the fast subsystem stays in the \(O(\gamma^{-3+\iota\over2})\) neighborhood of the equilibrium, the quadratic term \(Q_2(\xi)=O(\gamma^{-3+\iota})\) in (5.11) is small. Thus, on time intervals of length \(O(\exp\{O(\sigma^{-2})\})\), the leading order approximation of the slow equation is independent of ξ:

Equation (5.30) has a stable fixed point at η=−1, where the potential function F(η) attains its local minimum F(−1)=0 (see (4.16)). The escape of the trajectories of (5.30) from the potential well defined by F(η) corresponds to a spontaneous synchronized discharge of the coupled system. Suppose \(\tilde{\eta}(0)=\eta_{0}<1\) and define

By the large-deviation theory, we have

Equation (5.32) provides an estimate for the frequency of spontaneous activity in the strong coupling regime. Note that (5.32) is consistent with the estimate in Theorem 4.7 derived using the variational argument. Therefore, the analysis in this section yields a dynamical interpretation of the minimization problem (4.20) in the strong coupling regime.
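The escape from the potential well can be illustrated by an Euler–Maruyama simulation. This sketch assumes that the leading-order slow equation (5.30) is \(d\eta=-F'(\eta)\,dt+(\sigma/\sqrt n)\,dw\) with \(F'(\eta)=1-\eta^2\), based on \(F(x)=x-\frac{x^3}{3}+\frac23\) and on the reduction of \(n^{-1}\mathbf{1}_n^{\mathsf T}\dot W\) to \(n^{-1/2}\dot w\) used in the proof of Lemma 5.3; σ, the step size, and the number of sample paths are hypothetical. The mean time to reach the threshold η=1 from the bottom of the well η=−1 grows with n, in line with the slowdown of the synchronized network; the simulation does not attempt to reproduce the constants in (5.32).

```python
import numpy as np

def mean_escape_time(n, sigma=1.5, dt=1e-2, paths=200, t_max=2000.0, seed=0):
    """Mean first time for eta to reach the threshold 1, starting from the well
    bottom -1, for the assumed slow equation
        d eta = (eta**2 - 1) dt + (sigma / sqrt(n)) dw,
    i.e. drift -F'(eta) with F'(eta) = 1 - eta**2 (Euler-Maruyama scheme)."""
    rng = np.random.default_rng(seed)
    eta = -np.ones(paths)
    exit_time = np.full(paths, np.nan)
    alive = np.ones(paths, dtype=bool)
    noise = sigma / np.sqrt(n) * np.sqrt(dt)
    t = 0.0
    while alive.any() and t < t_max:
        e = eta[alive]
        eta[alive] = e + (e**2 - 1.0) * dt + noise * rng.standard_normal(e.size)
        t += dt
        crossed = alive & (eta >= 1.0)
        exit_time[crossed] = t
        alive &= ~crossed
    return np.nanmean(exit_time)

tau_1 = mean_escape_time(n=1)
tau_3 = mean_escape_time(n=3)
print(tau_1, tau_3)
```

Paths that have crossed the threshold are frozen, so the explicit scheme never integrates the unstable branch beyond η=1.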

We summarize the results of the slow–fast analysis. In the strong coupling regime, the dynamics splits into two modes: fast synchronization and slow synchronized fluctuations leading to large-deviation type discharges of the entire network. By analyzing the fast subsystem, we obtain estimates of stability of the synchronization subspace. The analysis of the slow subsystem yields the asymptotic estimate of the firing rate in the strong coupling regime.

5.4 The Network Topology and Synchronization

The stability analysis in Sect. 5.2 provides interesting insights into what structural properties of the network are important for synchronization. In this subsection, we discuss the implications of the stability analysis in more detail.

First, we rewrite (5.20) and (5.22), using the original time

where \(\tilde{\xi}(t)=\tilde{X}(\delta^{-1}t)\). Equation (5.33) shows that the rate of convergence to the synchronous state depends on the coupling strength, γ, and the algebraic connectivity of the network, \(\mathfrak{a}\). The convergence is faster for stronger coupling and larger \(\mathfrak{a}\). In particular, the rate of convergence to synchrony in networks on spectral expanders (cf. Example 2.5) remains O(1) as the size of the network grows without bound; the families of random graphs (cf. Example 2.4) have this property. In contrast, for many networks with symmetries (cf. Example 2.2), the algebraic connectivity \(\mathfrak{a}=o(1)\) as n→∞, and, therefore, by (5.33), synchronization takes longer as the size of the network grows. For a more detailed discussion of the synchronization properties of the networks on expanders, we refer the interested reader to Medvedev (2011b).

Next, we turn to (5.34), which characterizes the dispersion of the trajectories around the synchronization subspace. The quantity \(\mathbb{E}|\tilde{\xi}|^{2}\) may be viewed as a measure of robustness of synchrony to noise or, more generally, to constantly acting perturbations. By (5.34), synchrony is more robust for stronger coupling, because the asymptotic value of \(\mathbb{E}\vert \tilde{\xi}(t)\vert ^{2}\) tends to 0 as γ→∞. The contribution of the network topology to the mean-square stability of the synchronous state is reflected in \(\kappa(G,\tilde{G})\). Trajectories of networks with smaller \(\kappa(G,\tilde{G})\) are more tightly localized around the synchronization subspace.

To explain the graph-theoretic interpretation of \(\kappa(G,\tilde{G})\), we review the structure of the cycle subspace of G. Recall that the edge set of the spanning tree \(\tilde{G}\) consists of the first n−1 edges (see (2.9)):

If G is not a tree, then

where c is the corank of G. To each edge \(e_{n-1+k}\), \(k\in[c]\), there corresponds a unique cycle \(O_k\) of length \(|O_k|\) that consists of \(e_{n-1+k}\) and edges from \(E(\tilde{G})\). The following lemma relates the value of \(\kappa(G,\tilde{G})\) to the properties of the cycles \(\{O_{k}\}_{k=1}^{c}\).
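The cycles \(O_k\) can be computed directly from the edge list. This sketch assumes, as in (2.9), that the first n−1 edges form the spanning tree; for each remaining edge it finds the tree path between the endpoints by breadth-first search, and it also evaluates the quantity M that appears in Lemma 5.4 (B.2). Edge indices in the code are 0-based, so the kth non-tree edge has index n−1+k with k starting at 0. The graph is hypothetical.

```python
from collections import deque

def tree_path(tree_edges, n, s, t):
    """Indices of the tree edges on the unique path from s to t (found by BFS)."""
    adj = [[] for _ in range(n)]
    for idx, (a, b) in enumerate(tree_edges):
        adj[a].append((b, idx))
        adj[b].append((a, idx))
    parent = {s: None}
    queue = deque([s])
    while queue:
        v = queue.popleft()
        for w, idx in adj[v]:
            if w not in parent:
                parent[w] = (v, idx)
                queue.append(w)
    path, v = [], t
    while parent[v] is not None:
        v, idx = parent[v]
        path.append(idx)
    return path

def fundamental_cycles(edges, n):
    """Cycle O_k (as a set of edge indices) for each non-tree edge.
    The first n-1 edges are assumed to form the spanning tree."""
    tree = edges[: n - 1]
    cycles = []
    for k, (a, b) in enumerate(edges[n - 1 :]):
        cycles.append(set(tree_path(tree, n, a, b)) | {n - 1 + k})
    return cycles

# Hypothetical graph: the path 0-1-2-3-4 closed into a 5-cycle, plus the chord (1, 3).
n = 5
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (1, 3)]
cycles = fundamental_cycles(edges, n)
corank = len(edges) - n + 1
lengths = [len(O) for O in cycles]
# The quantity M of Lemma 5.4 (B.2).
M = max(len(O) + sum(len(O & P) for j, P in enumerate(cycles) if j != k)
        for k, O in enumerate(cycles))
print(corank, lengths, M)
```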

Lemma 5.4

(Medvedev 2011b)

Let \(G=(V(G), E(G)), \; |V(G)|=n\), be a connected graph.

(A) If G is a tree, then

$$ \kappa(G,\tilde{G})=n-1. \tag{5.35}$$

(B) Otherwise, let \(\tilde{G}\subset G\) be a spanning tree of G and let \(\{O_{k}\}_{k=1}^{c}\) be the corresponding independent cycles.

(B.1) Denote

$$\mu={1\over n-1}\sum_{k=1}^c\bigl(|O_k|-1\bigr).$$

Then

$$ {1\over1+\mu} \le{\kappa(G,\tilde{G})\over n-1}\le1. \tag{5.36}$$

(B.2) If 0<c<n−1, then

$$ 1-{c\over n-1} \biggl(1-{1\over M} \biggr)\le{\kappa(G,\tilde{G})\over n-1}\le1, \tag{5.37}$$

where

$$M=\max_{k\in[c]} \biggl\{|O_k| +\sum _{l\neq k} |O_k\cap O_l|\biggr\}.$$

(B.3) If the cycles \(O_k\), \(k\in[c]\), are disjoint, then

$$ {\kappa(G,\tilde{G})\over n-1}= 1-{c\over n-1} \Biggl(1-{1\over c}\sum_{k=1}^c |O_k|^{-1}\Biggr). \tag{5.38}$$

In particular,

$${\kappa(G,\tilde{G})\over n-1} \le 1-{c\over n-1} \biggl(1-{1\over \min_{k\in[c]} |O_k|} \biggr).$$

The asymptotic estimate of the mean-square stability of the synchronization subspace in (5.34), combined with the estimates of \(\kappa (G,\tilde{G})\) in Lemma 5.4, shows how the structure of the cycle subspace of G translates into the stability of the synchronous state. In Medvedev (2011b), one can also find an estimate of the asymptotic stability of the synchronization subspace in terms of the effective resistance of the graph G. These results show how the structural properties of the network shape the synchronization properties of the coupled system.
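The bounds of Lemma 5.4 can be probed numerically, with one caveat: the definition (5.23) of \(\kappa(G,\tilde G)\) is not reproduced in this section. The sketch assumes that \(\kappa(G,\tilde G)=\operatorname{tr}(\tilde HL^{+}\tilde H^{\mathsf T})\), the sum of the effective resistances of the spanning-tree edges, which is the quantity produced by the stationary covariance of the fast subsystem under the noise model \(\tilde H\,dW\). Under this assumption the code reproduces (5.35) for a path, (5.38) for a single cycle and for two disjoint triangles joined by a bridge, and the lower bound (5.36).

```python
import numpy as np

def coboundary(edges, n):
    # One row per edge: -1 at the tail, +1 at the head (orientation arbitrary).
    H = np.zeros((len(edges), n))
    for k, (a, b) in enumerate(edges):
        H[k, a], H[k, b] = -1.0, 1.0
    return H

def kappa_candidate(edges, n):
    """Assumed formula: trace(H_tree L^+ H_tree^T), i.e. the sum of the effective
    resistances of the spanning-tree edges (the first n-1 edges)."""
    H = coboundary(edges, n)
    L_pinv = np.linalg.pinv(H.T @ H)  # Moore-Penrose pseudoinverse of the Laplacian
    H_tree = H[: n - 1]
    return np.trace(H_tree @ L_pinv @ H_tree.T)

def kappa_disjoint_cycles(n, cycle_lengths):
    # Right-hand side of (5.38), multiplied by n - 1.
    c = len(cycle_lengths)
    mean_inv = sum(1.0 / m for m in cycle_lengths) / c
    return (n - 1) * (1.0 - c / (n - 1) * (1.0 - mean_inv))

n = 7
path = [(k, k + 1) for k in range(n - 1)]
k_tree = kappa_candidate(path, n)                  # tree: (5.35) gives n - 1
k_cycle = kappa_candidate(path + [(n - 1, 0)], n)  # one cycle of length n
# Two triangles joined by a bridge: two disjoint cycles of length 3 (n = 6).
two_triangles = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (2, 0), (5, 3)]
k_two = kappa_candidate(two_triangles, 6)
print(k_tree, k_cycle, k_two)
```

For the cycle, the effective resistance between adjacent nodes is (n−1)/n, so the sum over the n−1 tree edges is \((n-1)^2/n\), which is exactly what (5.38) gives with c=1 and \(|O_1|=n\).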

6 Discussion

In this paper, we presented a variational method, which reduces the problem of pattern formation in electrically coupled networks of neurons to the minimization problem for the potential function \(U_\gamma(z)\) on the surface ∂D of a unit n-cube. The variational problem provides a geometric interpretation of the spontaneous dynamics generated by the network. Specifically, the locations of the points of minima of the constrained potential function \(\tilde{U}_{\gamma}(z)=U_{\gamma}(z\in\partial D)\) correspond to the most likely patterns of network activity.

The variational formulation has several important implications for the analysis of the dynamical problem. First, the minimization problem has an intrinsic bifurcation structure. It is natural to call a value of the parameter \(\gamma=\gamma^{*}\) a bifurcation of problem (4.20) if the singularity set of \(\tilde{U}_{\gamma}(z)\) changes structurally at \(\gamma=\gamma^{*}\). For example, the number of minima of \(\tilde{U}_{\gamma}(z)\) can change due to collisions of the singularities with each other or with the boundaries of the faces of a given codimension (a border collision bifurcation). In either case, the qualitative change in the configuration of the points of minima of \(\tilde{U}_{\gamma}(z)\) signals a transformation of the attractor of the randomly perturbed system (4.10). The study of the constrained minimization problem (4.20) identified three main regimes in the network dynamics: weak, intermediate, and strong coupling. These results hold for any connected network. We expect that under certain assumptions on the network topology (e.g., in the presence of symmetries, or alternatively in networks with random connections), a more detailed description of the bifurcation events preceding complete synchronization should be possible.

The analysis of the variational problem has also helped to obtain quantitative estimates for the network dynamics. For both weak and strong coupling, we derive asymptotic formulas for the firing rate as a function of the coupling strength (see (4.26) and (4.40)). Surprisingly, in each of these cases, the firing rate does not depend on the structure of the graph of the network beyond its degree and order. In particular, networks of equal degree with connectivity patterns as different as symmetric and random exhibit the same activity rate (see Fig. 5c).

The geometric interpretation of the spontaneous dynamics yields a novel mechanism of the formation of clusters. It shows that the network fires in k-clusters whenever \(U_\gamma(z)\) has a minimum on a codimension-k face of ∂D, \(k\in[n]\). In particular, the network becomes completely synchronized when the minimum of \(U_\gamma(z)\) reaches \(\mathbf{1}_n\in\partial^{+}D\cap\ker H\). This observation allows one to estimate the onset of synchronization (cf. Theorem 4.7) and cluster formation (cf. Lemma 4.10). Furthermore, we show that in the strong coupling regime, the network dynamics has two disparate timescales: fast synchronization is followed by an ultra-slow escape from the potential well. The analysis of the slow–fast system yields estimates of stability of the synchronous state in terms of the coupling strength and structural properties of the network. In particular, it shows the contribution of the network topology to the synchronization properties of the network.

We end this paper with a few concluding remarks about the implications of this work for the LC network. The analysis of the conductance-based model of the LC network in this paper agrees with the study of the integrate-and-fire neuron network in Usher et al. (1999) and confirms that the assumptions of spontaneously active LC neurons coupled electrically with a variable coupling strength are consistent with the experimental observations of the LC network. Following the observation in Usher et al. (1999) that stronger coupling slows down network activity, we have studied how the firing rate depends on the coupling strength. We show that strong coupling results in synchronization and significantly decreases the firing rate (see also Medvedev 2009; Medvedev and Zhuravytska 2011). Surprisingly, we found that the rate can be effectively controlled by the strength of interactions even when the coupling is very weak. We also show that the dependence of the firing rate on the coupling strength is nonmonotone. This has an important implication for the interpretation of the experimental data: because two distinct firing patterns can have similar firing rates, the firing rate alone does not determine the response of the network to external stimulation. This situation is illustrated in Fig. 11. We choose parameters such that two networks, the spontaneously active one (Fig. 11a) and the nearly synchronous one (Fig. 11b), exhibit about the same activity rates (see Figs. 11c, d). However, because the activity patterns generated by these networks are different, so are their responses to stimulation (Figs. 11e, f). The network in the spontaneous firing regime produces a barely noticeable response (Fig. 11g), whereas the response of the synchronized network is pronounced (Fig. 11h). Network responses similar to these were observed experimentally and are associated with good (Fig. 11h) and poor (Fig. 11g) cognitive performance (Usher et al. 1999). 
Our analysis suggests that the state of the network (i.e., the spatio-temporal dynamics), rather than the firing rate, determines the response of the LC network to afferent stimulation.

Fig. 11 The responses to stimulation of the two networks at different values of the coupling strength: g=0 (left column) and g=0.02 (right column). The networks shown in (a) and (b) generate different spatio-temporal patterns. However, the firing rates corresponding to these activity patterns are close (see (c) and (d)). When 10 neurons in the middle of each of these networks receive a current pulse, the network responses differ (see (e) and (f)). The firing rate in the first network changes very little during the stimulation (see (g)), while the response of the second network is clearly seen from the firing rate plot (see (h)).

The main hypotheses used in our analysis are that the local dynamical systems satisfy Assumption 4.1 and interact through electrical coupling. The latter means that the coupling is realized through one of the local variables, interpreted as voltage, and is subject to Kirchhoff’s two laws for electrical circuits. In this form, our assumptions cover many biological, physical, and technological problems, including power grids, sensor and communication networks, and consensus protocols for coordination of autonomous agents (see Medvedev 2011b and references therein). Therefore, the results of this work elucidate the principles of pattern formation in an important class of problems.

Notes

1. Positive parameter b in the definition of D (cf. (4.12)) is used to exclude the possibility of exit from D through \(\partial D\setminus\partial^{+}D\).

2. Note that \(l^{i}\neq0\), \(i\in[n]\), as long as \(v_i\) is not an isolated node of G. In particular, if G is connected, then \(l^{i}\neq0\;\forall i\in[n]\).

References

Alvarez, V., Chow, C., Van Bockstaele, E.J., Williams, J.T.: Frequency-dependent synchrony in locus coeruleus: role of electrotonic coupling. Proc. Natl. Acad. Sci. USA 99, 4032–4036 (2002)

Aston-Jones, G., Cohen, J.D.: An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu. Rev. Neurosci. 28, 403–450 (2005)

Berglund, N., Gentz, B.: Noise-Induced Phenomena in Slow–Fast Dynamical Systems: A Sample-Paths Approach. Springer, Berlin (2006)

Berridge, C.W., Waterhouse, B.D.: The locus coeruleus-noradrenergic system: modulation of behavioral state and state-dependent cognitive processes. Brain Res. Rev. 42, 33–84 (2003)

Biggs, N.: Algebraic Graph Theory, 2nd edn. Cambridge University Press, Cambridge (1993)

Bollobas, B.: Modern Graph Theory. Graduate Texts in Mathematics, vol. 184. Springer, New York (1998)

Brown, E., Moehlis, J., Holmes, P., Clayton, E., Rajkowski, J., Aston-Jones, G.: The influence of spike rate and stimulus duration on noradrenergic neurons. J. Comput. Neurosci. 17, 13–29 (2004)

Chow, S.-N., Hale, J.K.: Methods of Bifurcation Theory. Springer, New York (1982)

Chung, F.R.K.: Spectral Graph Theory. CBMS Regional Conference Series in Mathematics, vol. 92 (1997)

Connors, B.W., Long, M.A.: Electrical synapses in the mammalian brain. Annu. Rev. Neurosci. 27, 393–418 (2004)

Coombes, S.: Neuronal networks with gap junctions: A study of piece-wise linear planar neuron models. SIAM J. Appl. Dyn. Syst. 7, 1101–1129 (2008)

Day, M.V.: On the exponential exit law in the small parameter exit problem. Stochastics 8, 297–323 (1983)

Fiedler, M.: Algebraic connectivity of graphs. Czechoslov. Math. J. 23(98), 298–305 (1973)

Freidlin, M.I., Wentzell, A.D.: Random Perturbations of Dynamical Systems, 2nd edn. Springer, New York (1998)

Friedman, J.: A Proof of Alon’s Second Eigenvalue Conjecture and Related Problems. Memoirs of the American Mathematical Society, vol. 195 (2008)

Gao, J., Holmes, P.: On the dynamics of electrically-coupled neurons with inhibitory synapses. J. Comput. Neurosci. 22, 39–61 (2007)

Guckenheimer, J., Holmes, P.: Nonlinear Oscillations, Dynamical Systems, and Bifurcations of Vector Fields. Springer, Berlin (1983)

Hoory, S., Linial, N., Wigderson, A.: Expander graphs and their applications. Bull. Am. Math. Soc. 43(4), 439–561 (2006)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, Cambridge (1999)

Jost, J.: Dynamical networks. In: Feng, J., Jost, J., Qian, M. (eds.) Networks: From Biology to Theory. Springer, Berlin (2007)

Karatzas, I., Shreve, S.E.: Brownian Motion and Stochastic Calculus, 2nd edn. Springer, New York (1991)

Keener, J.P.: Propagation and its failure in coupled systems of discrete excitable cells. SIAM J. Appl. Math. 47(3), 556–572 (1987)

Kuznetsov, Y.A.: Elements of Applied Bifurcation Theory. Springer, Berlin (1998)

Lewis, T., Rinzel, J.: Dynamics of spiking neurons connected by both inhibitory and electrical coupling. J. Comput. Neurosci. 14, 283–309 (2003)

Lubotzky, A., Phillips, R., Sarnak, P.: Ramanujan graphs. Combinatorica 8, 261–277 (1988)

Margulis, G.: Explicit group-theoretic constructions of combinatorial schemes and their applications in the construction of expanders and concentrators. Probl. Pereda. Inf. 24(1), 51–60 (1988) (Russian). (English translation in Probl. Inf. Transm. 24(1), 39–46 (1988))

Medvedev, G.S.: Electrical coupling promotes fidelity of responses in the networks of model neurons. Neural Comput. 21(11), 3057–3078 (2009)

Medvedev, G.S.: Synchronization of coupled stochastic limit cycle oscillators. Phys. Lett. A 374, 1712–1720 (2010)

Medvedev, G.S.: Synchronization of coupled limit cycles. J. Nonlinear Sci. 21, 441–464 (2011a)

Medvedev, G.S.: Stochastic stability of continuous time consensus protocols (2011b, submitted). arXiv:1007.1234

Medvedev, G.S., Kopell, N.: Synchronization and transient dynamics in the chains of electrically coupled FitzHugh-Nagumo oscillators. SIAM J. Appl. Math. 61, 1762–1801 (2001)

Medvedev, G.S., Zhuravytska, S.: Shaping bursting by electrical coupling and noise. Biol. Cybern. (2012, in press). doi:10.1007/s00422-012-0481-y

Rinzel, J., Ermentrout, G.B.: Analysis of neural excitability and oscillations. In: Koch, C., Segev, I. (eds.) Methods in Neuronal Modeling. MIT Press, Cambridge (1989)

Sara, S.J.: The locus coeruleus and noradrenergic modulation of cognition. Nat. Rev. Neurosci. 10, 211–223 (2009)

Sarnak, P.: What is an expander? Not. Am. Math. Soc. 51, 762–763 (2004)

Usher, M., Cohen, J.D., Servan-Schreiber, D., Rajkowski, J., Aston-Jones, G.: The role of locus coeruleus in the regulation of cognitive performance. Science 283, 549–554 (1999)

Acknowledgement

This work was partly supported by the NSF Award DMS 1109367 (to GM).

Additional information

Communicated by P. Newton.

Appendix: The Parameter Values Used in the Biophysical Model (2.1) and (2.2)

To emphasize that the results of this study do not rely on any specific features of the LC neuron model, in our numerical experiments we used the Morris–Lecar model, a common Type I biophysical model of an excitable cell based on the Hodgkin–Huxley paradigm (Rinzel and Ermentrout 1989). The function on the right-hand side of the voltage equation (2.1), \(I_{\mathrm{ion}}=I_{\mathrm{Ca}}+I_{\mathrm{K}}+I_{l}\), models the combined effect of the calcium and sodium currents, \(I_{\mathrm{Ca}}\), the potassium current, \(I_{\mathrm{K}}\), and a small leak current, \(I_{l}\),

The constants \(E_{\mathrm{Ca}}\), \(E_{\mathrm{K}}\), and \(E_{l}\) stand for the reversal potentials, and \(g_{\mathrm{Ca}}\), \(g_{\mathrm{K}}\), and \(g_{l}\) denote the maximal conductances of the corresponding ionic currents. The activation of the calcium and potassium channels is modeled using the steady-state functions

and the voltage-dependent time constant

The parameter values are summarized in Table 1.
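For readers who want to experiment with the single-cell model, the following Python sketch writes out the ionic current, the steady-state activation functions, and the voltage-dependent time constant in the standard Morris–Lecar form. This form is an assumption: the expressions used with (2.1) and (2.2) may differ in notation or scaling, the potassium gating variable is called `n` here for convenience, and all function names are hypothetical. The dictionary `TYPE_I_PARAMS` holds a commonly quoted Type I parameter set used only as a placeholder; it is not the set of values in Table 1.

```python
import math

# ASSUMPTION: standard Morris-Lecar forms; names and scalings are hypothetical
# and may differ from those used with (2.1)-(2.2).

# Commonly quoted Type I parameter set, a placeholder only (NOT Table 1).
TYPE_I_PARAMS = {
    "g_ca": 4.0, "g_k": 8.0, "g_l": 2.0,        # maximal conductances (mS/cm^2)
    "e_ca": 120.0, "e_k": -84.0, "e_l": -60.0,  # reversal potentials (mV)
    "v1": -1.2, "v2": 18.0,                     # Ca activation midpoint and slope (mV)
    "v3": 12.0, "v4": 17.4,                     # K activation midpoint and slope (mV)
    "phi": 1.0 / 15.0,                          # rate scale of the K gate
}

def m_inf(v, v1, v2):
    """Steady-state calcium activation: 0.5 * (1 + tanh((v - v1) / v2))."""
    return 0.5 * (1.0 + math.tanh((v - v1) / v2))

def n_inf(v, v3, v4):
    """Steady-state potassium activation: 0.5 * (1 + tanh((v - v3) / v4))."""
    return 0.5 * (1.0 + math.tanh((v - v3) / v4))

def tau_n(v, v3, v4):
    """Voltage-dependent time constant: 1 / cosh((v - v3) / (2 * v4))."""
    return 1.0 / math.cosh((v - v3) / (2.0 * v4))

def i_ion(v, n, p):
    """Total ionic current I_Ca + I_K + I_l at voltage v and K gating level n."""
    i_ca = p["g_ca"] * m_inf(v, p["v1"], p["v2"]) * (v - p["e_ca"])  # instantaneous Ca activation
    i_k = p["g_k"] * n * (v - p["e_k"])                             # gated K current
    i_l = p["g_l"] * (v - p["e_l"])                                 # leak current
    return i_ca + i_k + i_l

def dn_dt(v, n, p):
    """Relaxation of the K gate toward n_inf(v) at rate phi / tau_n(v)."""
    return p["phi"] * (n_inf(v, p["v3"], p["v4"]) - n) / tau_n(v, p["v3"], p["v4"])

# Example: ionic current at v = -60 mV with the potassium gate closed.
print(i_ion(-60.0, 0.0, TYPE_I_PARAMS))
```

Keeping the parameters in a dictionary makes it easy to substitute the values of Table 1 without changing the functions.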

Cite this article

Medvedev, G.S., Zhuravytska, S. The Geometry of Spontaneous Spiking in Neuronal Networks. J Nonlinear Sci 22, 689–725 (2012). https://doi.org/10.1007/s00332-012-9125-6

DOI: https://doi.org/10.1007/s00332-012-9125-6