Abstract

We propose an interactive method for decision making under uncertainty, where uncertainty is related to the lack of understanding about consequences of actions. Such situations are typical, for example, in design problems, where a decision maker has to make a decision about a design at a certain moment in time even though the actual consequences of this decision may become visible only many years later. To overcome the difficulty of predicting future events when no probabilities of events are available, our method utilizes groupings of objectives or scenarios to capture different types of future events. Each scenario is modeled as a multiobjective optimization problem to represent different and conflicting objectives associated with the scenarios. We utilize the interactive classification-based multiobjective optimization method NIMBUS for assessing the relative optimality of the current solution in different scenarios. This information can be utilized when considering the next step of the overall solution process. Decision making is performed by giving special attention to individual scenarios. We demonstrate our method with an example in portfolio optimization.

1 Introduction

In multiple criteria decision making (MCDM), uncertainty can appear in many different forms. For instance, the decision maker (DM) can be uncertain about preferences, the underlying model can be inaccurate, or there may be imperfect knowledge concerning consequences of actions. In this paper, we focus on the last type of uncertainty, which requires the DM to make a decision at a specific point in time with imperfect knowledge of the future. In practice, this can mean, for instance, that the DM must decide about the current design of a system that operates under uncertainty related to future changes of the system’s working environment or types of future tasks to be performed so that the overall system’s performance is best.

A variety of MCDM methods has been designed to take uncertainty into account (see e.g., Stewart 2005), but most of them require a formal way of incorporating uncertainty into the model. Unfortunately, in practice there are plenty of situations in which uncertainty is very difficult or even impossible to characterize in a formal way. More specifically, there is no data or knowledge available about the probability of events or the possibility and necessity of events, and in such cases MCDM methods based on probability theory or possibility theory may turn out to be impractical. However, even though our understanding about the future is in many cases rather limited, it is still possible to use intuition and utilize approaches such as scenario planning to build a framework in which decision making can be supported.

In scenario planning, the effects of different actions are systematically considered under a few different scenarios representing possible future states of the world. The motivation of combining MCDM and scenario planning is that MCDM can enrich the evaluation process in scenario planning while scenario planning can provide a deeper understanding of the uncertainties present in MCDM (Stewart 2005). Scenario planning approaches already have some history in the MCDM literature, for instance, in the form of so-called multistage approaches (see e.g., Klein et al. 1990). However, these early methods have typically been motivated by stochastic programming rather than by scenario planning ideology.

In recent years, new ways to apply scenario planning within MCDM have been presented. One approach is to regard instances of the possible combinations of initial objectives and scenarios as metaobjectives in the sense of the metacriteria defined in Stewart et al. (2013), following on from earlier work in Goodwin and Wright (2001). In this approach, it is recognized that good performance on each objective under each scenario is a desired aim of the decision maker. It may be necessary to trade off performances for the same objective under different scenarios against each other, or to trade off performances for different objectives under the same scenario against each other. In fact, more complicated trade-offs could be considered, although these may be more difficult for the decision maker to express directly. In principle, the complete decision problem may then be viewed as a higher-dimensional multiobjective problem, to which any multiobjective optimization method may be applied (by treating all metaobjectives as decision objectives). In view of the dimensionality of the resultant problem, however, the methods proposed in this paper allow performance of metaobjectives to be evaluated across subsets of these, for example, to explicitly analyze scenario-wise performance of actions with respect to different objectives, or vice versa.

In Urli and Nadeau (2004), this kind of approach is used in a visual interactive multiobjective optimization method based on improving one objective at a time so that the extent of improvement is decided by studying the consequential impacts on the other objectives. The solution process continues by considering a new objective until a satisfactory compromise solution is found. In Durbach and Stewart (2003), an approach based on a model consisting of one scenario-specific goal programming problem in each scenario is presented. This is followed by an aggregation of scenario-wise results into an overall result. However, one still has to decide how to aggregate the results obtained with different scenarios to get a robust solution that does not only consider the worst-case performance of the actions (Pomerol 2001). Another related approach is given in Oliveira and Antunes (2009), in which scenarios are dealt with in the spirit of the best/worst case approach with interval coefficients in a linear optimization model. In Gutiérrez et al. (2004), a single objective dynamic lot size problem is considered under different scenarios yielding a multiobjective optimization problem.

Scenario planning with a collection of metaobjectives for each scenario can be viewed as a multiobjective optimization problem with a large number of objectives that has been decomposed into smaller-sized scenario-specific multiobjective problems. In Engau and Wiecek (2007, 2008), coordination methods have been proposed to find a preferred solution for the original large-scale problem by only solving the smaller-sized subproblems, while integrating both the DM’s preferences and trade-off information obtained from a sensitivity analysis. The basic approach is to solve a sequence of subproblems, each concerned with a single scenario, so that each new decision may impair objective function values already attained in the prior scenarios, but only to a pre-specified tolerance. The tolerances are set by the DM allowing for a preferred trade-off to be sought among the different scenarios. The solution process stops once the DM is satisfied with the function values in all scenarios, and the final solution is guaranteed to be weakly Pareto optimal to the overall problem. In Wiecek et al. (2009), the notion of multiscenario multiobjective optimization has been formalized for engineering design problems in which scenarios represent design disciplines, operating conditions of a product being designed, markets, types of users, etc. The design problem for each scenario is modeled as a multiobjective optimization problem while the designer’s preferences can change among the scenarios.

In this paper, based on the very preliminary ideas presented in Eskelinen et al. (2010), we propose an interactive method for solving optimization problems with multiple scenarios and multiple objectives in each scenario. Similar to Engau and Wiecek (2008), the method utilizes the scenario-wise optimal solutions to support producing a final decision that is acceptable for all scenarios. However, in our method, the solution process focuses all the time on improving the current overall solution to perform well in all the scenarios, whereas the scenario-wise solutions are used to show the relative performance of the overall solution in different scenarios. Another major difference to Engau and Wiecek (2008) is that the method proposed is built on the interactive NIMBUS method (Miettinen 1999; Miettinen and Mäkelä 2006), which provides a classification-based elicitation of preference information from the DM. In view of the new features, the method significantly facilitates computations and offers strong decision making support. The benefits include guaranteed Pareto optimality of the solutions (instead of only weak optimality) and the availability of a well-established computational platform and decision support methodology provided by the IND-NIMBUS software (IND-NIMBUS, http://ind-nimbus.it.jyu.fi/; Miettinen 2006).

In practice, the method provides the DM with information about suboptimality (or lack of optimality) of decisions in different scenarios. At each stage, the DM is presented with the current overall solution of the decision problem involving all multiple objectives as well as the solutions of the smaller problems consisting of metaobjectives associated with a scenario. The former is used to demonstrate the current optimal solution, whereas the latter shows the suboptimality of the current solution in different scenarios. The suboptimality information can be used to support, for instance, the consideration of which objective values should still be improved and which objective values are allowed to be relaxed. In this way, we can provide a valuable contribution to support understanding of the relative strengths and weaknesses of different solutions, options or alternatives in various scenarios.

It should be emphasized that we use the term “scenario” in the broad sense of scenario planning, i.e., to describe a possible future state of the world with the aim of facilitating a “strategic conversation”. We are aware that some literature uses the term with a narrower technical focus as representative realizations of a random variable. Nevertheless, the broader “strategic conversation” sense better reflects the thinking behind our method, and for this reason we will retain the term “scenario” in this broader sense in our description of the method proposed.

Besides scenario planning, the method is applicable to virtually any multiobjective optimization problem where groupings of objectives are relevant. That is, we can consider problems with meaningful decompositions of objectives into any number of possibly overlapping sets. For that reason, we formulate our method in terms of abstract subsets of objectives instead of scenarios and metaobjectives. For example, in group decision making, the objectives of each DM can form a subset so that each subproblem only considers the objectives of a single DM.

The rest of this paper is structured as follows: In Sect. 2, we formulate the multiobjective optimization problem with subsets of objectives and introduce the main elements of the NIMBUS method that are integral for our method. We introduce our grouping-based interactive method in Sect. 3 and demonstrate it with a portfolio optimization example in Sect. 4. Finally, after a discussion on various application areas in Sect. 5 we draw conclusions in Sect. 6.

2 Problem formulation and basics of NIMBUS

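We consider a multiobjective optimization problem, referred to in the following as a decision problem,

\[ \text{minimize}\quad \mathbf{f }(\mathbf{x })=[f_1(\mathbf{x }),\ldots ,f_k(\mathbf{x })] \quad \text{subject to}\quad \mathbf{x }\in X, \tag{1} \]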

where \(f_i:X\rightarrow \mathbb{R }\) with \(1\le i\le k, k\ge 2\), are objective functions and \(X\subset \mathbb{R }^n\) is a nonempty feasible set and \(\mathbb{R }^n\) is a Euclidean vector space. A vector \(\mathbf{x }\in X\) is called a decision (vector), and its image \(\mathbf{z }=\mathbf{f }(\mathbf{x })\) an objective vector consisting of objective (function) values. The image set \(Z=\mathbf{f }(X)\) is called an attainable set.

An objective vector \(\bar{\mathbf{z }}\in Z\) is said to be Pareto optimal if there does not exist another objective vector \(\mathbf{z }\in Z\) such that \({{z}}_i\le {\bar{z}}_i\) for all \(i=1,\ldots ,k\) and \(\mathbf{z }\ne \bar{\mathbf{z }}\). Furthermore, an objective vector \(\bar{\mathbf{z }}\in Z\) is said to be weakly Pareto optimal if there does not exist an objective vector \(\mathbf{z }\in Z\) such that \(z_i<\bar{z}_i\) for all \(i=1,\ldots ,k\). Clearly, every Pareto optimal objective vector is also weakly Pareto optimal. A decision \(\mathbf{x }\in X\) is said to be (weakly) Pareto optimal if \(\mathbf{f }(\mathbf{x })\) is (weakly) Pareto optimal.
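The two optimality notions above can be checked directly when the attainable set is finite. The following is a minimal sketch under that assumption (the set `Z` and all function names are illustrative and not part of the original formulation); it shows an objective vector that is weakly Pareto optimal without being Pareto optimal.

```python
from typing import Sequence

Vector = Sequence[float]


def dominates(z: Vector, z_bar: Vector) -> bool:
    """z is no worse than z_bar in every objective and differs from it (minimization)."""
    return all(a <= b for a, b in zip(z, z_bar)) and tuple(z) != tuple(z_bar)


def strictly_dominates(z: Vector, z_bar: Vector) -> bool:
    """z is strictly better than z_bar in every objective (minimization)."""
    return all(a < b for a, b in zip(z, z_bar))


def is_pareto_optimal(z_bar: Vector, Z: Sequence[Vector]) -> bool:
    return not any(dominates(z, z_bar) for z in Z)


def is_weakly_pareto_optimal(z_bar: Vector, Z: Sequence[Vector]) -> bool:
    return not any(strictly_dominates(z, z_bar) for z in Z)


# Hypothetical finite attainable set with two objectives.
Z = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0), (3.0, 3.0), (1.0, 4.0)]

for z in Z:
    print(z, is_pareto_optimal(z, Z), is_weakly_pareto_optimal(z, Z))
```

In this example, `(1.0, 4.0)` is dominated by `(1.0, 3.0)` but no vector is strictly better in both objectives, so it is only weakly Pareto optimal, while `(3.0, 3.0)` is not even weakly Pareto optimal.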

The components of an ideal objective vector \(\mathbf{z }^\star \) are obtained by minimizing each of the objective functions subject to the feasible set. It gives information about the best individually attainable objective function values. The worst objective function values in the set of Pareto optimal solutions can be approximated to form a nadir objective vector (for further details, see Deb et al. 2010; Miettinen 1999).
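For a finite attainable set, both vectors can be computed by enumeration. The sketch below assumes such a finite set (an illustrative simplification; in continuous problems the nadir objective vector is typically only approximated) and uses hypothetical names throughout.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def ideal_and_nadir(Z: Sequence[Vector]) -> Tuple[Vector, Vector]:
    """Ideal: componentwise minimum over Z. Nadir: componentwise maximum over the Pareto optimal subset of Z."""
    k = len(Z[0])
    ideal = tuple(min(z[i] for z in Z) for i in range(k))
    # Keep only the vectors that no other vector dominates (minimization).
    pareto: List[Vector] = [
        z for z in Z
        if not any(all(a <= b for a, b in zip(y, z)) and y != z for y in Z)
    ]
    nadir = tuple(max(z[i] for z in pareto) for i in range(k))
    return ideal, nadir


# Hypothetical finite attainable set with two objectives.
Z = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0), (3.0, 3.0), (1.0, 4.0)]
ideal, nadir = ideal_and_nadir(Z)
print(ideal, nadir)
```

Note that the nadir component for the second objective is 3.0 rather than 4.0, because the worst values are taken over the Pareto optimal vectors only, not over the whole attainable set.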

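In addition to problem (1), we consider a collection of \(S\) subproblems involving groupings of the original objective functions indexed by \(s\in \{1,2,\ldots ,S\}\). The subproblems, each involving \(k_s\) objectives, have the form

\[ \text{minimize}\quad \mathbf{f }^s(\mathbf{x })=[f^s_1(\mathbf{x }),\ldots ,f^s_{k_s}(\mathbf{x })] \quad \text{subject to}\quad \mathbf{x }\in X, \tag{2} \]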

where \(2\le k_s<k\) and each objective function \(f^s_j, 1\le j\le k_s\), corresponds to one objective function \(f_i, 1\le i\le k\), of problem (1). Given problem (1), the subproblems of the form (2) are conveniently represented either by the functions \(\mathbf{f }^s\) or by subsets \(K_s\) of the index set \(K=\{1,2,\ldots ,k\}\). In other words, we can write \(f^s_j = f_{s(j)}\) with \(\lbrace {s(1),\ldots ,s(k_s)} \rbrace = K_s \subseteq K\). The subsets together cover all objectives, that is, \(\bigcup _{s=1}^{S} K_s = K\).

The purpose of solving the subproblems is to allow the DM to evaluate the performance of a decision in different contexts. In a scenario planning problem, the decomposition of metaobjectives into scenarios could be reflected in the decomposition of the set \(K\) into subsets \(K_s\). The subsets \(K_s\) may also overlap, that is, an objective function \(f_i\) of problem (1) may appear in one or more of the subproblems. Pareto optimal decisions of problems (1) and (2) are related in the following way (Engau and Wiecek 2008).

Proposition 1

If a decision \(\mathbf{x }\in X\) is Pareto optimal to (2), then \(\mathbf{x }\) is weakly Pareto optimal to (1).

In general, the converse does not hold, that is, a Pareto optimal decision to (1) may not be even weakly Pareto optimal to (2). This discrepancy allows the suboptimality of a decision with respect to a given subset of objectives to be quantified: given a subproblem or grouping \(s\) and a Pareto optimal decision of (1), one can find out by solving (2) what the corresponding objective vector would be in the case that only the objective functions in \(\mathbf{f }^s\) were considered. Thus, solving the subproblems provides valuable information to the DM about the structure of problem (1) and the amount by which different subsets of the objectives conflict with each other.
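A small worked example may make the relation concrete. The sketch below assumes a hypothetical problem with two attainable objective vectors, three objectives and a single grouping containing the first two objectives (indices are 0-based in the code); none of these data come from the original text.

```python
from typing import Sequence, Tuple

Vector = Tuple[float, ...]


def dominates(z: Vector, z_bar: Vector) -> bool:
    return all(a <= b for a, b in zip(z, z_bar)) and z != z_bar


def strictly_dominates(z: Vector, z_bar: Vector) -> bool:
    return all(a < b for a, b in zip(z, z_bar))


def project(z: Vector, K_s: Sequence[int]) -> Vector:
    """Objective values of the grouping K_s only, i.e. f^s."""
    return tuple(z[i] for i in K_s)


# Hypothetical attainable set of the full problem (three objectives).
a, b = (1.0, 1.0, 5.0), (2.0, 2.0, 1.0)
Z = [a, b]
K_1 = (0, 1)  # grouping made of the first two objectives
Z_1 = [project(z, K_1) for z in Z]

# b is Pareto optimal in the full problem ...
b_po_full = not any(dominates(z, b) for z in Z)
# ... but not even weakly Pareto optimal in the subproblem.
b_weakly_po_sub = not any(strictly_dominates(y, project(b, K_1)) for y in Z_1)

# Proposition 1: a is Pareto optimal in the subproblem, hence weakly Pareto optimal in the full problem.
a_po_sub = not any(dominates(y, project(a, K_1)) for y in Z_1)
a_weakly_po_full = not any(strictly_dominates(z, a) for z in Z)

# Suboptimality of b within the grouping, measured against the grouping-wise optimum a.
gap = tuple(vb - va for vb, va in zip(project(b, K_1), project(a, K_1)))
print(b_po_full, b_weakly_po_sub, a_po_sub, a_weakly_po_full, gap)
```

Here the decision behind `b` pays for its good third objective with a gap of one unit in each objective of the grouping, which is the kind of information the subproblems are meant to reveal.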

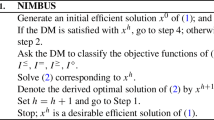

To solve problems (1) and (2), we employ elements of the interactive classification-based multiobjective optimization method NIMBUS (Miettinen 1999; Miettinen and Mäkelä 2000, 2006) that has successfully been applied in various design (Hakanen et al. 2011; Laukkanen et al. 2010), control (Miettinen 2007) and planning problems (Ruotsalainen et al. 2010) (see e.g., IND-NIMBUS, http://ind-nimbus.it.jyu.fi/; Miettinen et al. 2008 for further references). In NIMBUS, the DM can direct the interactive solution process by specifying preferences as a classification of objective functions indicating how the objective values in the current Pareto optimal objective vector \(\mathbf{f }(\mathbf{x }^c)\) should change to get a more preferred objective vector. The DM may classify the objective functions into up to five different classes:

- \(I^<\) for those to be improved (i.e., decreased),
- \(I^\leqslant \) for those to be improved till some desired aspiration level \(\hat{z}_i\),
- \(I^=\) for those to be maintained at their current level,
- \(I^\geqslant \) for those that may be impaired till an upper bound \(\varepsilon _i\) and
- \(I^\lozenge \) for those that are temporarily allowed to change freely.

Here, each of the objective functions is assigned to one of the classes and because of Pareto optimality, some objectives must be allowed to impair in order to enable improvement in others. If aspiration levels or upper bounds are used, the DM is asked to provide them.

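In the NIMBUS method, new Pareto optimal solutions are generated by solving a scalarized problem which includes preference information given by the DM in the form of a classification. In our method, we use the scalarized problem of the so-called synchronous NIMBUS method (Miettinen and Mäkelä 2006), which has the form

\[ \begin{array}{ll} \text{minimize} &amp; \max \limits _{i\in I^<,\, j\in I^\leqslant } \left[ w_i\left( f_i(\mathbf{x })-z_i^\star \right) ,\ w_j\left( f_j(\mathbf{x })-\hat{z}_j\right) \right] +\rho \sum \limits _{i=1}^{k} w_i f_i(\mathbf{x }) \\ \text{subject to} &amp; f_i(\mathbf{x })\le f_i(\mathbf{x }^c) \quad \text{for all } i\in I^<\cup I^\leqslant \cup I^=, \\ &amp; f_i(\mathbf{x })\le \varepsilon _i \quad \text{for all } i\in I^\geqslant , \\ &amp; \mathbf{x }\in X, \end{array} \tag{3} \]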

where \(\mathbf{x }^c\) is the current decision, \(\mathbf{z }^\star \) is the ideal objective vector, \(\hat{z}_i\) are the aspiration levels for the objective functions in \(I^\leqslant , \varepsilon _i\) are the upper bounds for impairing the objective functions in \(I^\geqslant , \rho >0\) is a relatively small scalar bounding trade-offs, and coefficients \(w_i\) (\(1\le i\le k\)) are constants used for scaling the objectives (e.g., based on estimated ranges, i.e., nadir minus ideal values of the objectives so that \(w_i\) times the range equals 1 for each \(i\)).
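To illustrate how a classification steers the solution, the following sketch solves the scalarized problem by brute force over a finite list of candidate objective vectors. It assumes the commonly published form of the synchronous NIMBUS scalarization (a max-term over \(I^<\) and \(I^\leqslant \) plus a \(\rho \)-weighted augmentation sum, with "no worse than current" constraints on \(I^<\cup I^\leqslant \cup I^=\) and upper bounds on \(I^\geqslant \)); the function name, the finite candidate set and the example data are hypothetical, and a real implementation would use a numerical optimizer over \(X\).

```python
from typing import Dict, Iterable, Optional, Sequence


def solve_scalarized(
    candidates: Sequence[Sequence[float]],
    current: Sequence[float],
    ideal: Sequence[float],
    w: Sequence[float],
    improve: Iterable[int] = (),            # I^<
    aspire: Optional[Dict[int, float]] = None,  # I^<=: index -> aspiration level
    keep: Iterable[int] = (),               # I^=
    bound: Optional[Dict[int, float]] = None,   # I^>=: index -> upper bound
    rho: float = 1e-6,
) -> Optional[int]:
    """Return the index of the best feasible candidate, or None if none is feasible.

    Objectives that appear in no class are treated as free (I^<>).
    """
    aspire = aspire or {}
    bound = bound or {}
    improve = list(improve)
    if not improve and not aspire:
        raise ValueError("at least one objective must be in I^< or I^<=")
    no_worse = set(improve) | set(aspire) | set(keep)
    best_index, best_value = None, float("inf")
    for index, z in enumerate(candidates):
        # Constraints: no impairment in I^<, I^<=, I^=; upper bounds in I^>=.
        if any(z[i] > current[i] for i in no_worse):
            continue
        if any(z[i] > eps for i, eps in bound.items()):
            continue
        terms = [w[i] * (z[i] - ideal[i]) for i in improve]
        terms += [w[j] * (z[j] - level) for j, level in aspire.items()]
        value = max(terms) + rho * sum(wi * zi for wi, zi in zip(w, z))
        if value < best_value:
            best_index, best_value = index, value
    return best_index


# Hypothetical data: three candidates, two objectives, weights 1 / (nadir - ideal).
candidates = [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0)]
ideal, nadir = (1.0, 1.0), (4.0, 4.0)
w = [1.0 / (n - i) for i, n in zip(ideal, nadir)]
current = candidates[1]

loose = solve_scalarized(candidates, current, ideal, w, improve=[0], bound={1: 4.5})
tight = solve_scalarized(candidates, current, ideal, w, improve=[0], bound={1: 3.0})
print(loose, tight)
```

With the loose bound the first objective can be improved at the expense of the second (candidate 0 is selected); with the tight bound the desired improvement is not attainable and the current candidate 1 is returned, which is how the DM learns how attainable the desired changes were.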

By comparing the objective values before and after the classification, the DM can see how attainable the desired changes were. For further details of NIMBUS, its other elements, an algorithm and proofs related to the Pareto optimality of solutions generated, see Miettinen and Mäkelä (2006).

When solving problems (1) and (2), it is not necessary to apply the NIMBUS method separately to each of the subproblems (2). Instead, it suffices to solve problem (1) repeatedly, because irrelevant objectives can be allowed to change freely with an appropriate classification. Therefore, our method can be readily deployed on top of an existing software implementation of the NIMBUS method, IND-NIMBUS (IND-NIMBUS, http://ind-nimbus.it.jyu.fi/; Miettinen 2006). Moreover, the augmentation term in (3) ensures that the obtained solutions are (properly) Pareto optimal to both (1) and (2) (see, e.g., Miettinen 1999). In what follows, we describe our method for grouping-based problems and details of how it uses the scalarized problem (3) to solve problems (1) and (2).

3 Method for grouping-based multiobjective optimization with NIMBUS

Our method is structured as an interactive multiobjective optimization method with iterating optimization and decision stages. Pareto optimal solutions to (1) are generated in the optimization stage using the NIMBUS scalarization (3) and evaluated in the decision stage subject to groupings, i.e., subproblems. This means that additional information is provided to the DM about Pareto optimality of the solution considered with respect to the subproblems. We assume that the computational cost of solving the decision problem (1) is not much higher than the cost of solving a subproblem (2), because both share the same feasible set and the scalarization-based method generates one Pareto optimal solution at a time based on the preferences of the DM. We therefore perform the optimization stage for the decision problem containing all the objectives to get an overall solution. However, we expect the cognitive load to be high for the DM when assessing these Pareto optimal solutions with respect to the individual groupings and therefore perform the decision stage in a grouping-wise manner.

Our interactive method for grouping-based multiobjective optimization utilizes the basic idea of the NIMBUS method, that is, the classification of objectives. With the help of classification, the DM can direct the solution process towards the most preferred solution. By solving (3) with the objectives of one grouping at a time to be minimized, we get to see grouping-wise (or scenario-wise) Pareto optimal objective vectors and can assess the relative optimality of a solution with respect to each grouping separately. This information can help the DM in directing the solution process when considering which objective values should still be improved and which objective values can be allowed to impair. In other words, the aim is to support gaining understanding of the relative strengths and weaknesses of different solutions in various groupings.

The algorithm of our grouping-based interactive method is the following (the steps where the actions of the DM are expected are indicated in italics):

1. Set all objectives in \(I^<\).
2. Generate a Pareto optimal solution \(\mathbf{x }^c\) for (1) by solving the NIMBUS scalarized problem (3) with the current classification.
3. For each grouping \(s\), set its objectives in \(I^<\) and the other objectives in \(I^\lozenge \) and solve (3). Denote the solution by \(\mathbf{x }^s\) for each grouping.
4. Present to the DM the current objective vector \(\mathbf{f }(\mathbf{x }^c)=[f_1(\mathbf{x }^c),\ldots ,f_k(\mathbf{x }^c)]\). Furthermore, for each grouping (i.e., for each \(s\)), present to the DM some or all of the following:
   - (a) grouping-wise objective values \(\mathbf{f }^s(\mathbf{x }^c)\) and information whether \(\mathbf{f }^s(\mathbf{x }^c)\) is Pareto optimal in the grouping \(s\) and/or
   - (b) Pareto optimal objective vector of the grouping \(s\), that is, \(\mathbf{f }^s(\mathbf{x }^s)\) and/or
   - (c) visualization or some other means to support comparison (e.g. distance) of corresponding objective function values in \(\mathbf{f }^s(\mathbf{x }^c)\) and \(\mathbf{f }^s(\mathbf{x }^s)\).
5. *Ask the DM whether he/she is satisfied with the current solution \(\mathbf{x }^c\). If yes, then stop with \(\mathbf{x }^c\) as the final solution. Otherwise, continue.*
6. *Ask the DM to classify the objectives into (up to) the five classes and return to step 2.*
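The computational part of the algorithm (steps 1 to 4) can be sketched as follows. This is a simplified illustration, not the authors' implementation: it assumes a finite list of candidate objective vectors instead of a continuous feasible set, handles only the classes \(I^<\) and \(I^\lozenge \) that steps 1 and 3 require, and drops the "no worse than current" constraints in the very first solve, where no current solution exists yet. All names and data are hypothetical.

```python
from typing import Dict, Iterable, Optional, Sequence, Tuple

Vector = Tuple[float, ...]


def solve_improve_only(
    candidates: Sequence[Vector],
    improve: Iterable[int],
    ideal: Sequence[float],
    w: Sequence[float],
    current: Optional[Vector] = None,
    rho: float = 1e-6,
) -> Optional[int]:
    """Brute-force scalarized solve with `improve` in I^< and all other objectives free."""
    improve = list(improve)
    best_index, best_value = None, float("inf")
    for index, z in enumerate(candidates):
        # Objectives to be improved must not get worse than in the current solution.
        if current is not None and any(z[i] > current[i] for i in improve):
            continue
        value = max(w[i] * (z[i] - ideal[i]) for i in improve)
        value += rho * sum(wi * zi for wi, zi in zip(w, z))
        if value < best_value:
            best_index, best_value = index, value
    return best_index


def grouping_report(
    candidates: Sequence[Vector],
    groupings: Dict[str, Sequence[int]],
    ideal: Sequence[float],
    w: Sequence[float],
) -> Tuple[int, Dict[str, Dict[str, Vector]]]:
    k = len(ideal)
    c = solve_improve_only(candidates, range(k), ideal, w)       # steps 1 and 2
    report = {}
    for s, K_s in groupings.items():                              # step 3
        xs = solve_improve_only(candidates, K_s, ideal, w, current=candidates[c])
        report[s] = {                                             # material for step 4
            "current": tuple(candidates[c][i] for i in K_s),
            "grouping_optimum": tuple(candidates[xs][i] for i in K_s),
        }
    return c, report


# Hypothetical data: four objectives split into two groupings.
candidates = [(1.0, 1.0, 4.0, 4.0), (4.0, 4.0, 1.0, 1.0), (2.0, 2.0, 2.0, 2.0)]
ideal = (1.0, 1.0, 1.0, 1.0)
w = [1.0 / 3.0] * 4  # 1 / (nadir - ideal) with nadir = 4 in every objective
groupings = {"s1": (0, 1), "s2": (2, 3)}

c, report = grouping_report(candidates, groupings, ideal, w)
print(c, report)
```

In this example the balanced candidate is chosen as the overall solution, and the report shows that each grouping could reach its ideal values if the other grouping were ignored. Steps 5 and 6 remain with the DM, whose new classification would be fed back into a full scalarized solve.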

Let us point out that because solutions generated by NIMBUS are Pareto optimal, the overall solutions and the grouping-wise solutions generated by the method proposed are Pareto optimal as well. For the sake of clarity, we have presented the algorithm in a general form, but its steps do not have to be followed strictly. For example, in steps 3 and 4, not every grouping has to be considered, but only those that the DM is interested in (at that iteration). Thus, the DM can focus on different aspects of the problem in consecutive iterations.

In step 4, various visualizations or other means can be used according to the desires of the DM to support comparison and analysis. For example, visual cues can be used in the user interface to draw attention to those groupings where the current solution violates some prescribed limits, either absolute or relative to a corresponding Pareto optimal objective vector. Overall, the user interface plays an important role when it comes to both the cognitive load and the effectiveness of the method.

With a large number of objectives, classification should be allowed as per objective grouping to make the classification phase more manageable (instead of forcing the DM to classify every objective). However, if the objective groupings overlap, then it is possible that the classifications made for two groupings are in conflict with each other for some objective. Then, the DM must resolve the conflict to be able to represent the resulting classification in a concise and intuitive way.
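Detecting such conflicts is straightforward to automate. The sketch below merges grouping-wise classifications and reports every objective that received different classes in different groupings; the data layout, the class labels as strings and the function name are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Set, Tuple


def merge_classifications(
    per_grouping: Dict[str, Dict[int, str]],
) -> Tuple[Dict[int, str], Dict[int, Set[str]]]:
    """Merge classifications given per grouping.

    Returns (merged, conflicts): `merged` maps each consistently classified
    objective to its class, `conflicts` maps each inconsistently classified
    objective to the set of classes it received.
    """
    labels: Dict[int, Set[str]] = defaultdict(set)
    for classes in per_grouping.values():
        for objective, label in classes.items():
            labels[objective].add(label)
    merged = {i: next(iter(ls)) for i, ls in labels.items() if len(ls) == 1}
    conflicts = {i: ls for i, ls in labels.items() if len(ls) > 1}
    return merged, conflicts


# Hypothetical overlapping groupings: objective 1 belongs to both.
per_grouping = {
    "s1": {0: "<", 1: "="},
    "s2": {1: ">=", 2: "<"},
}
merged, conflicts = merge_classifications(per_grouping)
print(merged, conflicts)
```

Objective 1 is reported as a conflict, so the DM would be asked to choose a single class for it before the scalarized problem is solved.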

In Fig. 1, we give an example of how comparison of solutions can be implemented. For each of the four objectives, a horizontal bar graph (big bar) is used to represent the range (based on the components of the ideal and the nadir objective vectors) and the current value of the objective (the bar between the end points). To be more specific, in each big bar the left and the right edges correspond to the components of the ideal and the nadir objective vectors of (1), respectively, and the right edge of the darker bar corresponds to the current value of the objective. To represent the range and the current value of the objective with respect to its grouping, a half-height bar is drawn on top of the big bar. The latter bar is always enclosed in the former because the components of the ideal objective vector are equal, and the components of the nadir objective vector within a grouping are always lower than or equal to the nadir objective value with respect to all the objectives.

Another way to visualize the solution process by using absolute values of the objectives is presented in the example in the following section. This may be a more intuitive approach, especially in cases where different objectives have commensurate units.

In NIMBUS, the DM can also generate new Pareto optimal solutions as intermediate ones between any two Pareto optimal solutions available. This option can be offered to the DM also in our method as an alternative to classification in step 6 whenever the DM so desires.

4 Example

We have proposed a general-purpose method, which has not been tailored for any specific application domain. In this section, we demonstrate our method with a simulated example problem. The example is not drawn from a specific case study, but represents a class of frequently encountered problems as both illustration and test of our method. The example is built up around the well-known Markowitz portfolio optimization problem (Markowitz 1952) whose aim is to determine the asset allocation in a portfolio so that the portfolio’s expected return is maximized while the risk related to the expected return (variance) is minimized. (Even though modern portfolio theory does not consider variance as a suitable risk measure, we consider this well-known problem since our objective is simply to demonstrate how the method proposed can be applied). We extend this problem by introducing a third objective to maximize the amount of dividends obtained through the portfolio.

Let \(n\) be the number of assets and \(x_i\) be the amount of funds invested in asset \(i, i = 1, \ldots ,n\). In this example, for purposes of illustration we postulate three scenarios (i.e., \(S=3\)) which are taken into account while considering the performance of a portfolio, and the same three objectives are considered in each of these scenarios (i.e. \(k_s=3\) for all \(s=1,\ldots ,3\)). Thus, the total number of objective functions is nine, i.e., \(k=9\). Corresponding to (1) and (2), the optimal portfolio is found by solving the following single period multiobjective portfolio optimization problem (see, e.g., Ehrgott et al. 2004; Steuer et al. 2005 for similar kinds of formulations):

The overall solution is obtained by solving problem (4) simultaneously for all \(s=1,\ldots ,3\), and each scenario-wise solution by solving (4) separately for each \(s=1,\ldots ,3\). The decision vector \(\mathbf{x }\in \mathbb{R }^n\) reflects how the funds \(\sum _{i=1}^{n} x_{i}\) are distributed over \(n\) assets. The vector \(\bar{\mathbf{r }}_{s}\) and the \(n\times n\) matrix \({\varvec{\Sigma }}_{s}\) represent the expected value and a covariance matrix, respectively, related to the underlying random variable vector \(\mathbf{r }_{s}\), which reflects the returns related to \(n\) assets in scenario \(s\). The expected value and the variance of a random variable \(\mathbf{r }^{T}\mathbf{x }\) are denoted by \(\bar{\mathbf{r }}^{T}\mathbf{x }\) and \(\mathbf{x }^{T}{\varvec{\Sigma }}\mathbf{x }\), respectively (as described in Markowitz 1952). The vector \(\mathbf{d }_{s}\) determines the relative amount of dividend paid in cash for each asset \(i=1,\ldots ,n\) in scenario \(s\). We assume that there is a dividend policy related to each asset so that the amount of dividend related to an asset does not go hand in hand with the price of the asset (see, e.g., Ross et al. 2006). Furthermore, as in Markowitz (1952), we assume that the underlying problem is to be solved when we have already somehow obtained (e.g., through modelling or historical data) the necessary data related to each scenario \(s\).

As stated above, we consider three different scenarios that are based on the occurrence of events A and B. At a certain moment during a single period investment plan either A or B occurs once or not at all and the events exclude each other. When either of these events occurs, it can be predicted how it will affect some particular asset returns. In other words, in each case we are able to produce scenario related data \(\bar{\mathbf{r }}_{s}\), \({\varvec{\Sigma }}_{s}\) and \(\mathbf{d }_{s}\). However, we are able to do only very subjective speculation whether either of these events occurs or not (no reliable past experience, statistical data, or probabilities are available). Regardless of these events we have to fix the portfolio at the present moment and the decision cannot be postponed. In what follows, scenarios 1, 2 and 3 refer to the cases (not A and not B), (A and not B) and (not A and B), respectively. With three basic objectives and three scenarios we end up with nine objectives (i.e., metaobjectives) in formulation (1). We have chosen altogether \(n=24\) assets to be considered in our example.

In what follows, we demonstrate a possible course of the solution process by using the method proposed. The values used in the example are derived directly from the objective function values obtained by solving problem (4). However, these values have been transformed to make them more readable. That is, the performance of a portfolio in scenario \(s\) is presented by values \(c_{1}^{s}=100\cdot \sqrt{f_{1}^{s}}, c_{2}^{s}=100\cdot f_{2}^{s}\) and \(c_{3}^{s}=10\cdot f_{3}^{s}\) which are the standard deviation (as percentages) for the rate of return, the expected rate of return (as percentages) and a dividend index, respectively.
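The transformation can be illustrated with a small computation. The sketch below assumes, following the descriptions in the text, that the three scenario-wise objectives are the portfolio variance \(\mathbf{x }^{T}{\varvec{\Sigma }}_{s}\mathbf{x }\), the expected return \(\bar{\mathbf{r }}_{s}^{T}\mathbf{x }\) and the dividend \(\mathbf{d }_{s}^{T}\mathbf{x }\); the two-asset data are invented for illustration and do not come from the example of this paper.

```python
import math
from typing import Sequence, Tuple


def portfolio_objectives(
    x: Sequence[float],
    cov: Sequence[Sequence[float]],
    mean_returns: Sequence[float],
    dividends: Sequence[float],
) -> Tuple[float, float, float]:
    """Variance, expected return and dividend of portfolio x in one scenario."""
    n = len(x)
    variance = sum(x[i] * cov[i][j] * x[j] for i in range(n) for j in range(n))
    expected_return = sum(r * xi for r, xi in zip(mean_returns, x))
    dividend = sum(d * xi for d, xi in zip(dividends, x))
    return variance, expected_return, dividend


def transformed_values(f1: float, f2: float, f3: float) -> Tuple[float, float, float]:
    """c1: standard deviation in percent, c2: expected return in percent, c3: dividend index."""
    return 100.0 * math.sqrt(f1), 100.0 * f2, 10.0 * f3


# Hypothetical scenario data for two assets with equal weights.
x = (0.5, 0.5)
cov = ((0.04, 0.0), (0.0, 0.04))
mean_returns = (0.10, 0.06)
dividends = (0.2, 0.4)

f1, f2, f3 = portfolio_objectives(x, cov, mean_returns, dividends)
c1, c2, c3 = transformed_values(f1, f2, f3)
print(round(c1, 2), round(c2, 2), round(c3, 2))
```

For these invented data the portfolio has a standard deviation of about 14.14 %, an expected rate of return of 8 % and a dividend index of 3.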

Since the portfolio optimization model assumes in the risk evaluation that the rate of return, as a random variable, is normally distributed, we can use as a guideline the property of the normal distribution that, with a probability of approximately 99.7 %, the transformed rate of return will have values in the range \(c_{2}^{s}\pm 3\cdot {c_{1}^{s}}\) (of course, the lower bound is the interesting one here). Furthermore, the dividend index \(c_{3}^{s}\) reflects the relative (with respect to the assets considered) amount of dividend paid for an asset. We also emphasize that there is no direct risk related to the amount of dividends, and therefore they can be used to compensate for potentially low rate of return values.
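The coverage of an interval of \(\pm m\) standard deviations around the mean of a normal distribution can be checked numerically with the error function. The sketch below does this and computes the corresponding range of the transformed rate of return; the function names and the example values of \(c_{1}^{s}\) and \(c_{2}^{s}\) are hypothetical.

```python
import math
from typing import Tuple


def normal_coverage(m: float) -> float:
    """Probability that a normal random variable lies within m standard deviations of its mean."""
    return math.erf(m / math.sqrt(2.0))


def return_range(c1: float, c2: float, m: float = 3.0) -> Tuple[float, float]:
    """Interval c2 +/- m * c1 for the transformed rate of return (in percent)."""
    return c2 - m * c1, c2 + m * c1


print(round(normal_coverage(2.0), 4), round(normal_coverage(3.0), 4))

# Hypothetical transformed values: 5 % standard deviation, 8 % expected return.
lower, upper = return_range(c1=5.0, c2=8.0)
print(lower, upper)
```

For the hypothetical values the lower bound is a rate of return of -7 %, which is the figure a DM would weigh against the dividend index when comparing portfolios.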

Next, we apply our grouping-based interactive method to this example with an aim to build up a robust portfolio which performs relatively well under all three scenarios.

Iteration round 1

The solution process starts by making the NIMBUS classification \(I^{<}=\{c_{1}^{1},\ldots ,c_{3}^{3}\}\) in step 1 of the first iteration round, i.e., by setting all nine objective functions to be minimized. In step 2, we calculate an overall Pareto optimal solution for the whole problem by solving the NIMBUS scalarized problem (3) and in step 3, a Pareto optimal solution is calculated for each scenario (i.e. grouping) by optimizing the performance of the objectives of this scenario only. For example, the scenario-wise solution for scenario 1 is obtained by solving problem (3) with the classification \(I^{<}=\{c_{1}^{1},c_{2}^{1},c_{3}^{1}\}\) and \(I^{\lozenge }=\{c_{1}^{2},c_{2}^{2},c_{3}^{2},c_{1}^{3},c_{2}^{3},c_{3}^{3}\}\). The resulting overall solution is shown with black, and the scenario-wise Pareto optimal solutions with white and different tones of gray in Fig. 2 (step 4). One should note that on each scenario-wise Pareto optimal solution, only the objective values \(c_{1}^{i}, \ldots , c_{3}^{i}\) related to this particular scenario \(i\) are shown, as these indicate the reference level for the optimal performance in this scenario. In this respect, the objective values of each solution in the other scenarios are not that interesting and, thus, they are not shown to make the figure more readable. For example, on the scenario-wise Pareto optimal objective vector for scenario 1 (white bars in Fig. 2), only the first three objective values related to scenario 1 are shown, but the objective values related to scenarios 2 and 3 are not shown.
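The classifications used in steps 1 and 3 can be generated mechanically from the scenario structure. The following minimal sketch only builds the index sets; it does not solve problem (3). The tuple `(i, s)` is a hypothetical label for \(c_{i}^{s}\), and the keys `"improve"` and `"free"` are illustrative names for the classes \(I^{<}\) and \(I^{\lozenge }\).

```python
def objective_labels(n_scenarios=3, n_basic=3):
    """Labels (i, s), meaning basic objective i in scenario s, i.e. c_i^s."""
    return [(i, s) for s in range(1, n_scenarios + 1) for i in range(1, n_basic + 1)]

def overall_classification(labels):
    """Round 1, step 1: every metaobjective is placed in the class I^<."""
    return {"improve": set(labels), "free": set()}

def scenario_classification(labels, scenario):
    """Step 3: objectives of one scenario go to I^<, all others to the free class."""
    improve = {(i, s) for (i, s) in labels if s == scenario}
    return {"improve": improve, "free": set(labels) - improve}

labels = objective_labels()
scenario_1 = scenario_classification(labels, 1)
```

With three scenarios and three basic objectives, `scenario_1["improve"]` holds the three objectives of scenario 1 and `scenario_1["free"]` the remaining six, mirroring the classification given in the text.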

By showing the scenario-wise optimal solutions in the same figure with the overall solution, one can see at a glance how good the overall solution is relative to the scenario-wise Pareto optimal solutions that would be obtained by considering only one scenario at a time. This helps the DM focus the solution process on improving the most promising objectives. For example, it is useless to try to improve a poor overall performance in some scenario at the expense of other scenarios if the scenario-wise Pareto optimal solution in this scenario is also poor.

Let us assume that our primary objective is to obtain a good performance in scenario 1, but the performance in the case of the other scenarios is not as important. This may be because the other scenarios are regarded as less likely, although there may also be other reasons (of politics or public image, for example) which may stress the importance of certain scenarios even when they are not necessarily more likely than other scenarios. As can be seen from Fig. 2, the current overall solution is already relatively good in scenario 1 compared to a scenario-wise optimal solution in this scenario. However, we are not yet fully happy with this solution, and we want to test whether the rate of return and dividend index in this scenario (\(c_{2}^{1}\) and \(c_{3}^{1}\), respectively) could still be improved. Thus, in step 5 of our algorithm we decide to continue. To compensate for these improvements, we allow the standard deviation in scenarios 1 (\(c_{1}^{1}\)) and 3 (\(c_{1}^{3}\)) to increase slightly. In step 6, we include these statements in our model by making a classification \(I^{<}=\{c_{2}^{1},c_{3}^{1}\}, I^{=}=\{c_{1}^{2},c_{2}^{2},c_{3}^{2},c_{2}^{3},c_{3}^{3}\}\) and \(I^{\geqslant }=\{c_{1}^{1},c_{1}^{3}\}\) with \(\varepsilon _{1}^{1}=4.0\) and \(\varepsilon _{1}^{3}=3.0\), and proceed to step 2 of the next iteration round.
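Before a classification such as the one above is passed on to the scalarized problem, it can be useful to check that it is well formed. The sketch below is an assumption-laden illustration rather than part of the method: it checks that the classes partition the nine metaobjectives and, following the usual NIMBUS requirement as we understand it, that at least one objective is to be improved and at least one is allowed to get worse. The function name and the `(i, s)` labels for \(c_{i}^{s}\) are hypothetical.

```python
ALL = {(i, s) for s in (1, 2, 3) for i in (1, 2, 3)}  # (i, s) stands for c_i^s

def check_classification(improve, keep, impair_bounds, free=frozenset()):
    """Return True if the classes partition ALL and a trade-off is possible."""
    # impair_bounds maps an objective to its bound (epsilon); only keys are needed here.
    classes = [set(improve), set(keep), set(impair_bounds), set(free)]
    union = set().union(*classes)
    disjoint = sum(len(c) for c in classes) == len(union)
    covers = union == ALL
    # Assumed feasibility rule: something improves and something may get worse.
    tradeoff = bool(classes[0]) and bool(classes[2] or classes[3])
    return disjoint and covers and tradeoff

# Classification made at the end of iteration round 1
round1_ok = check_classification(
    improve={(2, 1), (3, 1)},
    keep={(1, 2), (2, 2), (3, 2), (2, 3), (3, 3)},
    impair_bounds={(1, 1): 4.0, (1, 3): 3.0},
)
```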

Iteration round 2

As a result, we get a new Pareto optimal overall solution and the corresponding scenario-wise solutions (Fig. 3). The rate of return in scenario 1 (\(c_{2}^{1}\)) has now improved from 7.3 to 10.0, but it is still smaller than in the corresponding Pareto optimal scenario-wise solution (which has also improved, as we allowed \(c_{1}^{1}\) to deteriorate). Nevertheless, we think that the objective values in scenario 1 are now on a satisfactory level and, thus, we decide to focus next on improving the performance in the other scenarios. We notice that in both scenarios 2 and 3, the rate of return (\(c_{2}^{2}\) and \(c_{2}^{3}\), respectively) is quite far from the scenario-wise Pareto optimal solution. We are not satisfied with this and want to improve these objectives. To compensate for this, we allow the dividend indices of scenarios 2 and 3 (\(c_{3}^{2}\) and \(c_{3}^{3}\), respectively) to change freely, as we do not consider the amount of dividend that important if either of these scenarios were realized. Thus, we make a classification \(I^{<}=\{c_{2}^{2},c_{2}^{3}\}, I^{=}=\{c_{1}^{1},c_{2}^{1},c_{3}^{1},c_{1}^{2},c_{1}^{3}\}\) and \(I^{\lozenge }=\{c_{3}^{2},c_{3}^{3}\}\), and proceed to step 2 of the next iteration round.

Iteration round 3

As a result, we again get a new Pareto optimal overall solution and corresponding scenario-wise solutions (Fig. 4). Now, the rate of return in scenarios 2 and 3 (\(c_{2}^{2}\) and \(c_{2}^{3}\), respectively) has improved somewhat compared to the previous round at the expense of the dividend index \(c_{3}^{3}\), which was allowed to change freely. We also allowed the dividend index \(c_{3}^{2}\) to change freely, but its performance has, in fact, improved in this round. The worst performance relative to the optimal scenario-wise performance now seems to be obtained in scenario 3, but we consider this to be on a satisfactory level. Thus, we stop here (step 5) by saying that the last portfolio seems to give robust performance especially in scenario 1, but also in scenarios 2 and 3. Naturally, it is assumed that this interpretation is related to the knowledge about the events A and B so that we are able to evaluate what the tolerable performance is under each scenario. One should also note that even though the final solution can be considered to be robust with respect to the scenarios considered, it is not Pareto optimal for any single scenario.

In this example, we used a visualization that actually presents all the alternative information options (a)–(c) in step 4 of the algorithm. Although the information about Pareto optimality is not explicitly indicated, the DM can easily see whether the objective values of the current overall solution coincide with the scenario-wise solutions. Nevertheless, to further augment the visual illustration, the same information can also be presented in numerical form. For example, Table 1 shows the course of the solution process that led to this solution.

5 Discussion

In general, the aim of scenario planning is to provide a robust performance in all the different scenarios. In this respect, our NIMBUS-based method provides a convenient way to include scenarios in multiobjective modeling, as its classification-based approach is an intuitive way to deal with groupings of objectives based on their performance in different scenarios. The ease of use of the classification approach is also likely to help in comprehending the relative performance of the objectives as well as the course of the solution process.

In the example, we used absolute objective values with suitable scaling factors to fit them on the same chart. Another way is to scale each objective, for example, to a relative 0–1 scale, which might be a more suitable approach in some cases. However, in our example, the standard deviation and the expected rate of return objectives share the same unit and, thus, the meaning of the unit would have been blurred if some relative scaling had been used.
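One possible form of such a relative scaling is sketched below. The choice of reference values is an assumption: here the best attainable value (ideal) maps to 0 and the worst value over the Pareto optimal set (nadir) maps to 1, and the function name is hypothetical.

```python
def scale_to_unit(value, ideal, nadir):
    """Map an objective value so that the ideal maps to 0 and the nadir to 1."""
    if ideal == nadir:
        raise ValueError("ideal and nadir values must differ")
    return (value - ideal) / (nadir - ideal)
```

Because the formula only uses differences to the ideal value, it applies to minimized and maximized objectives alike: for a maximized objective the ideal is simply larger than the nadir. The scaled values are unitless, which is exactly the loss of interpretability mentioned above.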

One should also note that the visualization style shown in Figs. 2–4 is not the only possible way to illustrate the current solution. The aim of the applied method is to provide the DM with enough information to understand the overall performance of the current solution, but not to overload the DM with an excessive amount of information. Naturally, any other means to visualize multiobjective problems could also be applied here to provide further information. For example, Figs. 2–4 show only one possible scenario-wise Pareto optimal solution, but figures of the Pareto optimal sets in each scenario could also be illustrative. In practice, however, this works directly only with few objectives; with more than three objectives, some advanced means to support the visualization would be needed (see e.g. Lotov and Miettinen 2008; Miettinen 2013). Yet another way to enrich the method proposed is to also present the metaobjective-wise optimal solutions (that would indicate the best possible level of each single metaobjective) along with the scenario-wise optimal solutions. One should, however, always also consider the possible drawbacks of the applied visualization, as too much information of various forms might complicate understanding the situation rather than clarify it.

5.1 Application areas of the method proposed

The method proposed is expected to be especially suitable for such multiobjective optimization problems, where scenario planning has already been successfully applied. The origins of scenario planning are in supporting strategic planning in organizational and managerial decision making (see e.g., Maack 2001; Schoemaker 1995), which often are also multiobjective problems and, thus, potential application areas of the method proposed, too.

In recent years, scenario planning has also been found useful in environmental management. In this area, scenarios can deal with, for example, the impacts of climate change for which both the possible impacts and the related uncertainties are of great extent (see e.g., Duinker and Greig 2007), or with the impacts of such uncertainties that cannot be explicitly considered in underlying models due to the complexity of corresponding ecosystems (Bennett et al. 2003). An example of the possible practical use of the method proposed in environmental management is the planning of protective actions for flood management, which include, for example, land area planning and terracing. These are typically cases where the flooding normally stays within reasonable limits, but we should also be prepared for such flooding scenarios that become realized, for example, only once in 50 years. Then, our method can be used to consider the extent of the protective actions so that normally they would not become too expensive but in scenarios of severe flooding, a reasonable level of protection would still be obtained.

Another environmental example is water management in an agricultural area that causes eutrophication of the adjacent water system. The eutrophication could be reduced, for example, by allocating protective zones between the fields and water areas or by building wetland areas that filter nutrients. However, there can be considerable uncertainties in the future related to, for example, the extent of agriculture in that area and the annual amount of rainfall due to climate change. Both of these are clearly scenario-type uncertainties, under which the water management actions can be considered so that in each scenario the water quality would be on a satisfactory level, but without setting too strict restrictions on the agriculture.

In industry, scenario planning is well suited, for example, to process and control design (Pajula and Ritala 2006; Suh and Lee 2001). Our method can be used to consider optimization problems having uncertainty, for example, in a future market situation. In these problems, the method can be applied both in cases where scenarios are used to represent alternative future events and in cases where scenarios represent future events that can all happen in time. The latter may be relevant, for example, in an optimal design problem in which the scenarios correspond to various anticipated use cases or operating conditions that must be accounted for in the design.

5.2 Other possible uses of the method proposed

The initial motivation of the method proposed is to support the use of scenarios in multiobjective optimization, but we have formulated the problem so that the method can be applied to any case where groupings of objectives are relevant. For example, another natural situation for using groupings is group decision making, where the objectives of each decision maker form one grouping. Then, the method proposed shows the relative goodness of the current solution in terms of the Pareto optimal solution for each individual decision maker.

When applying the method proposed to group decision making, several decision makers may share the same objectives. The method proposed allows using single objectives in multiple groupings when calculating the grouping-wise optimal solutions.
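Overlapping groupings of this kind are straightforward to represent. The sketch below builds, for each grouping, the classification used to compute its grouping-wise solution (own objectives to be improved, all others free to change). The objective and decision maker names are purely hypothetical, and the sketch only constructs the index sets.

```python
def grouping_classifications(groupings, all_objectives):
    """For each grouping, put its objectives in 'improve' and the rest in 'free'."""
    result = {}
    for name, members in groupings.items():
        members = set(members)
        unknown = members - set(all_objectives)
        if unknown:
            raise ValueError(f"unknown objectives in {name}: {sorted(unknown)}")
        result[name] = {"improve": members, "free": set(all_objectives) - members}
    return result

# Hypothetical example: two decision makers who both care about "cost".
objectives = ["cost", "quality", "emissions"]
groupings = {"dm_a": ["cost", "quality"], "dm_b": ["cost", "emissions"]}
classes = grouping_classifications(groupings, objectives)
```

Since each grouping is handled independently, a shared objective such as `"cost"` simply appears in the `"improve"` class of every grouping that contains it.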

6 Conclusions

We have introduced an interactive method for solving optimization problems involving multiple scenarios (or groupings) and multiple objectives in each scenario. This enables the decision maker to focus on a single scenario at a time and find a solution which is preferred for all scenarios.

The new grouping-based interactive method is based on the interactive classification-based NIMBUS method. NIMBUS provides an intuitive framework for combining multiobjective optimization with scenario planning, as its classification-based approach allows an easy way to deal with groupings of objectives based on their performance in different scenarios. The scenario-wise information is used to support understanding the relative goodness of the overall solution in each scenario, but all the time the solution process itself focuses on finding a better overall solution that is satisfactory in all the scenarios. Applying the NIMBUS method also guarantees the Pareto optimality of the solutions generated. Because of the existence of the IND-NIMBUS implementation of NIMBUS, our new method is ready to be applied once a user interface module for grouping-based classification and visualization is made.

We have demonstrated our method with an example in portfolio optimization with three objectives and three scenarios. The example showed that with the method proposed (and suitable means to visualize it), the cognitive load on understanding and solving the problem can be decreased.

References

Bennett EM, Carpenter SR, Peterson GD, Cumming GS, Zurek M, Pingali P (2003) Why global scenarios need ecology. Front Ecol Environ 1:322–329

Deb K, Miettinen K, Chaudhuri S (2010) Towards an estimation of nadir objective vector using a hybrid of evolutionary and local search approaches. IEEE Trans Evol Comput 14:821–841

Duinker PN, Greig LA (2007) Scenario analysis in environmental impact assessment: improving explorations of the future. Environ Impact Assess Rev 27:206–219

Durbach I, Stewart TJ (2003) Integrating scenario planning and goal programming. J Multi Criteria Decis Anal 12:261–271

Ehrgott M, Klamroth K, Schwehm C (2004) An MCDM approach to portfolio optimization. Eur J Oper Res 155:752–770

Engau A, Wiecek MM (2007) 2D decision-making for multicriteria design optimization. Struct Multidiscip Optim 34:301–315

Engau A, Wiecek MM (2008) Interactive coordination of objective decompositions in multiobjective programming. Manag Sci 54:1350–1363

Eskelinen P, Ruuska S, Miettinen K, Wiecek M, Mustajoki J (2010) A scenario-based interactive multiobjective optimization method for decision making under uncertainty. In: Antunes CH, Rios Insua D, Dias LC (eds) CD-Proceedings of the 25th mini-EURO conference on uncertainty and robustness in planning and decision making. University of Coimbra, Coimbra

Goodwin P, Wright G (2001) Enhancing strategy evaluation in scenario planning: a role for decision analysis. J Manag Stud 38:1–16

Gutiérrez J, Puerto J, Sicilia J (2004) The multiscenario lot size problem with concave costs. Eur J Oper Res 156:168–182

Hakanen J, Miettinen K, Sahlstedt K (2011) Wastewater treatment: new insight provided by interactive multiobjective optimization. Decis Support Syst 51(2):328–337

Klein G, Moskowitz H, Ravindran A (1990) Interactive multiobjective optimization under uncertainty. Manag Sci 36:58–75

Laukkanen T, Tveit T-M, Ojalehto V, Miettinen K, Fogelholm C-J (2010) An interactive multi-objective approach to heat exchanger network synthesis. Comput Chem Eng 34(6):943–952

Lotov AV, Miettinen K (2008) Visualizing the Pareto frontier. In: Branke J, Deb K, Miettinen K, Slowinski R (eds) Multiobjective optimization: interactive and evolutionary approaches. Springer, Berlin, pp 213–243

Maack JN (2001) Scenario analysis: a tool for task managers. In: Social analysis: selected tools and techniques, social development. The Social Development Department, the World Bank, Washington, DC, pp 62–87

Markowitz HM (1952) Portfolio selection. J Finance 7(1):77–91

Miettinen K (1999) Nonlinear multiobjective optimization. Kluwer, Boston

Miettinen K (2006) IND-NIMBUS for demanding interactive multiobjective optimization. In: Trzaskalik T (ed) Multiple criteria decision making ’05. The Karol Adamiecki University of Economics in Katowice, Katowice, pp 137–150

Miettinen K (2007) Using interactive multiobjective optimization in continuous casting of steel. Mater Manuf Process 22(5):585–593

Miettinen K (2013) Survey of methods to visualize alternatives in multiple criteria decision making problems. OR Spectrum. doi:10.1007/s00291-012-0297-0 (to appear)

Miettinen K, Mäkelä MM (2000) Interactive multiobjective optimization system WWW-NIMBUS on the internet. Comput Oper Res 27(7–8):709–723

Miettinen K, Mäkelä MM (2006) Synchronous approach in interactive multiobjective optimization. Eur J Oper Res 170:909–922

Miettinen K, Ruiz F, Wierzbicki AP (2008) Introduction to multiobjective optimization: interactive approaches. In: Branke J, Deb K, Miettinen K, Slowinski R (eds) Multiobjective optimization: interactive and evolutionary approaches. Springer, Berlin, pp 27–57

Oliveira C, Antunes CH (2009) An interactive method of tackling uncertainty in interval multiple objective linear programming. J Math Sci 161:854–866

Pajula E, Ritala R (2006) Measurement uncertainty in integrated control and process design—a case study. Chem Eng Process 45:312–322

Pomerol J-C (2001) Scenario development and practical decision making under uncertainty. Decis Support Syst 31:197–204

Ross SA, Westerfield RW, Jordan BD (2006) Fundamentals of corporate finance, 7th edn. McGraw-Hill, New York

Ruotsalainen H, Miettinen K, Palmgren J-E, Lahtinen T (2010) Interactive multiobjective optimization for anatomy based three-dimensional HDR brachytherapy. Phys Med Biol 55(16):4703–4719

Schoemaker PJ (1995) Scenario planning: a tool for strategic thinking. Sloan Manag Rev (Winter):25–40

Steuer RE, Qi Y, Hirschberger M (2005) Multiple objectives in portfolio selection. J Financ Decis Making 1(1):11–26

Stewart TJ (2005) Dealing with uncertainties in MCDA. In: Figueira J, Greco S, Ehrgott M (eds) Multiple criteria decision analysis—state of the art surveys. Springer, New York, pp 445–470

Stewart TJ, French S, Rios J (2013) Integrating multicriteria decision analysis and scenario planning—review and extension. Omega 41:679–688

Suh M, Lee T (2001) Robust optimization method for the economic term in chemical process design and planning. Ind Eng Chem Res 40:5950–5959

Urli B, Nadeau R (2004) Promise/scenarios: an interactive method for multiobjective stochastic linear programming under partial uncertainty. Eur J Oper Res 155:361–372

Wiecek MM, Blouin VY, Fadel GM, Engau A, Hunt BJ, Singh V (2009) Multi-scenario multi-objective optimization with applications in engineering design. In: Barichard V, Ehrgott M, Gandibleux X, T’Kindt V (eds) Multiobjective programming and goal programming: theoretical results and practical applications. Springer, Berlin, pp 283–298

Acknowledgments

The authors are grateful to Petri Eskelinen, Sauli Ruuska and Margaret M. Wiecek for their contribution in the earlier phases of the work. The research of Kaisa Miettinen was partly supported by the Jenny and Antti Wihuri Foundation, Finland, and Jyri Mustajoki by the Academy of Finland (project 127264).

Cite this article

Miettinen, K., Mustajoki, J. & Stewart, T.J. Interactive multiobjective optimization with NIMBUS for decision making under uncertainty. OR Spectrum 36, 39–56 (2014). https://doi.org/10.1007/s00291-013-0328-5

DOI: https://doi.org/10.1007/s00291-013-0328-5