Abstract

The ambiguity acceptance test is an important quality control procedure in high-precision GNSS data processing. Ambiguity acceptance test methods have been investigated extensively, but how to determine their thresholds is still not well understood. Currently, the threshold is determined either with the empirical approach or with the fixed failure rate (FF-) approach. The empirical approach is simple but lacks a theoretical basis, while the FF-approach is theoretically rigorous but computationally demanding. Hence, the key to the threshold determination problem is how to determine the threshold efficiently and in a reasonable way. In this study, a new threshold determination method, named the threshold function method, is proposed to reduce the complexity of the FF-approach. The threshold function method simplifies the FF-approach through a modeling procedure and an approximation procedure. The modeling procedure uses a rational function model to describe the relationship between the FF-difference test threshold and the integer least-squares (ILS) success rate. The approximation procedure replaces the ILS success rate with the easy-to-calculate integer bootstrapping (IB) success rate. The corresponding modeling error and approximation error are analysed with simulated data, to avoid nuisance biases and the impact of unrealistic stochastic models. The results indicate that the proposed method can greatly simplify the FF-approach without introducing significant modeling error. The threshold function method makes the fixed failure rate threshold determination method feasible for real-time applications.

1 Introduction

Integer ambiguity resolution (AR) is the key technique for precise GNSS positioning applications. With correctly fixed integer ambiguities, centimetre- to millimetre-level positioning accuracy is achievable. However, incorrectly fixed integer ambiguities can introduce unacceptably large errors into the positioning results without notice. Rejecting unreliable integer ambiguities reduces the failure risk and improves the reliability of AR. The procedure of deciding whether to accept the fixed integer ambiguities is known as the ambiguity acceptance test.

The integer aperture (IA) estimation theory has been established to solve the ambiguity acceptance test problem (Teunissen 2003a, b). Under the IA framework, different IA estimators are constructed with different acceptance regions. The most popular IA estimators are derived from the ‘discrimination test’, e.g. the ratio test (Euler and Schaffrin 1991; Abidin 1993), the difference test (Tiberius and De Jonge 1995) and the projector test (Han 1997; Wang et al. 1998).

Besides the shape of the acceptance region, its size (i.e. the test threshold) is also important in the ambiguity acceptance test. Generally, threshold determination methods can be classified into two classes: the empirical approach and the fixed failure rate (FF-) approach. The empirical approach sets a fixed threshold according to individual experience, e.g. (Euler and Goad 1991; Han 1997). This method is simple but lacks a theoretical basis. The FF-approach (Teunissen and Verhagen 2009) determines the threshold according to the underlying model and the failure rate tolerance. Because it incorporates the impact of the underlying model into the decision, the reliability becomes controllable. The limitation of the FF-approach is its complexity, which stems from the failure rate calculation and the inverse integration problem. The failure rate calculation is a high-dimensional integration problem over an irregular region. An analytical solution is difficult to obtain, so the Monte Carlo method is adopted as an alternative. The Monte Carlo method requires large-scale simulation and thus introduces a ‘computation burden’ into the FF-approach. Moreover, the threshold determination problem is the ‘inversion’ of the integral equation that links the failure rate to the size of the aperture (Teunissen and Verhagen 2009). A look-up table method has been proposed for the FF-ratio test to overcome the ‘inversion’ problem (Teunissen and Verhagen 2009; Verhagen and Teunissen 2013; Wang and Feng 2013).

In this paper, a new threshold determination method, named the threshold function method, is proposed to simplify the FF-approach. The remainder of the paper is organized as follows. Current threshold determination methods, including the empirical approach and the FF-approach, are reviewed in Sect. 2. The methodology for establishing the threshold function is introduced in Sect. 3. The modeling error and the performance validation of the threshold function method are discussed in Sect. 4. Finally, conclusions and outlook are given in Sect. 5.

2 Threshold determination methods in ambiguity acceptance test

The ambiguity acceptance test is a quality control procedure deciding whether to accept the estimated integer ambiguity. The threshold determination is an important aspect of the ambiguity acceptance test problem. Current threshold determination methods can be divided into two classes: the empirical approach and the fixed failure rate approach. In this section, these threshold determination approaches are briefly reviewed.

2.1 The procedure of the ambiguity estimation and validation

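The carrier phase based GNSS positioning model with \(n\) integer ambiguities and \(p\) real-valued parameters can be given as:

$$\begin{aligned} E(y)=Aa+Bb,\quad D(y)=Q_{yy},\quad a\in \mathbb {Z}^n,\; b\in \mathbb {R}^p \end{aligned}$$(1)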

where \(E(\cdot )\) and \(D(\cdot )\) are the mathematical expectation and dispersion operators, respectively. \(y\) is the observation vector, which includes both code and carrier phase measurements. \(a\) and \(b\) are the integer parameter vector and the real-valued parameter vector, respectively, and \(A\) and \(B\) are the corresponding design matrices. The observation vector \(y\) is assumed to follow a normal distribution with variance-covariance (vc-) matrix \(Q_{yy}\).

The mixed integer model (1) can be solved in four steps:

1. Solving Eq. (1) with the standard least-squares method. In this step, the integer nature of \(a\) is not considered. The estimated parameters \(\hat{a}\) and \(\hat{b}\) are known as the ‘float solution’.

2. Integer ambiguity estimation. The integer estimator maps the float solution \(\hat{a}\) to an integer vector \(\check{a}\). The optimal integer estimator is integer least-squares (ILS) (Teunissen 1999). The integer estimation procedure can be described as \(\check{a}=I(\hat{a})\), with \(I:\mathbb {R}^n\rightarrow \mathbb {Z}^n\). The integer estimator maps every \(\hat{a}\) that falls into a particular pull-in region \(S_{\check{a}}\) to the same integer vector, and the integer estimator defines the shape of the pull-in region.

3. Ambiguity acceptance test. This step uses an IA estimator to decide whether to accept the integer vector \(\check{a}\). This study discusses how to determine the threshold of the IA estimators in a reasonable way.

4. If the IA estimator accepts the fixed integer ambiguity \(\check{a}\), the real-valued parameters are updated with \(\check{b}=\hat{b}-Q_{\hat{b}\hat{a}}Q^{-1}_{\hat{a}\hat{a}}(\hat{a}-\check{a})\). Together, \(\check{a}\) and \(\check{b}\) are known as the ‘fixed solution’. If \(\check{a}\) is rejected, the float solution \(\hat{b}\) is used instead.
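Steps 1 and 4 above can be sketched in a few lines of NumPy. This is a minimal sketch, not the authors' implementation. The function names `float_solution` and `fixed_update` and the toy design matrices are hypothetical, and simple rounding stands in for the ILS estimator of step 2. The sketch computes the weighted least-squares float solution of Eq. (1) and then applies the conditional update of step 4.

```python
import numpy as np

def float_solution(A, B, y, Qyy):
    """Weighted least-squares float solution of E(y) = A a + B b, D(y) = Qyy."""
    W = np.linalg.inv(Qyy)                     # weight matrix
    G = np.hstack([A, B])                      # full design matrix [A  B]
    Q = np.linalg.inv(G.T @ W @ G)             # vc-matrix of the float solution
    x_hat = Q @ (G.T @ W @ y)                  # integer nature of a is ignored
    n = A.shape[1]
    return x_hat[:n], x_hat[n:], Q[:n, :n], Q[n:, :n]   # a_hat, b_hat, Q_aa, Q_ba

def fixed_update(b_hat, Q_ba, Q_aa, a_hat, a_check):
    """Step 4: b_check = b_hat - Q_ba Q_aa^{-1} (a_hat - a_check)."""
    return b_hat - Q_ba @ np.linalg.solve(Q_aa, a_hat - a_check)

# Toy model with hypothetical numbers (not a GNSS geometry)
rng = np.random.default_rng(1)
A = rng.normal(size=(8, 2))
B = rng.normal(size=(8, 3))
Qyy = 0.01 * np.eye(8)
y = (A @ np.array([3.0, -2.0]) + B @ np.array([0.5, 1.0, -0.2])
     + rng.normal(scale=0.1, size=8))

a_hat, b_hat, Q_aa, Q_ba = float_solution(A, B, y, Qyy)
a_check = np.round(a_hat)                      # rounding stands in for ILS here
b_check = fixed_update(b_hat, Q_ba, Q_aa, a_hat, a_check)
```

Design note: the update in step 4 is equivalent to re-estimating \(b\) by least squares from \(y-A\check{a}\) with \(a\) held fixed. This gives a simple numerical check of an implementation.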

2.2 The ratio test and the difference test

Many IA estimators are available for the ambiguity acceptance test problem, e.g. the ellipsoidal integer aperture (EIA) (Teunissen 2003a) and the optimal integer aperture (OIA) (Teunissen 2005). In this study, the ratio test and the difference test are selected because of their simplicity and high performance (Verhagen 2005).

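The ratio test is defined as (Frei and Beutler 1990):

$$\begin{aligned} \mu _R=\frac{\left\| \hat{a}-\check{a}_2\right\| ^2_{Q_{\hat{a}\hat{a}}}}{\left\| \hat{a}-\check{a}\right\| ^2_{Q_{\hat{a}\hat{a}}}}\ge \bar{\mu }_R \end{aligned}$$(2)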

where \(\mu _R\) and \(\bar{\mu }_R\) are the ratio test statistic and the corresponding threshold, respectively. \(\check{a}\) and \(\check{a}_2\) are the ‘best integer candidate’ and the ‘second best integer candidate’, respectively. The best integer candidate is defined as \(\check{a}=\mathrm{arg}\min \limits _{z\in \mathbb {Z}^n}\left\| \hat{a}-z\right\| ^2_{Q_{\hat{a}\hat{a}}}\). The second best integer candidate \(\check{a}_2\) is the integer vector with the second smallest squared distance \(\left\| \hat{a}-z\right\| ^2_{Q_{\hat{a}\hat{a}}}=(\hat{a}-z)^{T}Q^{-1}_{\hat{a}\hat{a}}(\hat{a}-z)\). The float ambiguity \(\hat{a}\) and its vc-matrix \(Q_{\hat{a}\hat{a}}\) are obtained from standard least-squares, and \(\check{a}\) and \(\check{a}_2\) are obtained from the integer estimation.
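To make the candidate definitions concrete, the sketch below finds \(\check{a}\) and \(\check{a}_2\) by brute force. It then evaluates the ratio of Eq. (2) as the second-best squared distance divided by the best one. The sketch assumes a low-dimensional, well-conditioned \(Q_{\hat{a}\hat{a}}\), so that both candidates lie within a small box around the rounded float solution. Practical implementations use a proper ILS search (e.g. LAMBDA) instead. The function names and the example matrix are hypothetical.

```python
import itertools
import numpy as np

def best_two_candidates(a_hat, Q_aa, radius=2):
    """Brute-force search for the best and second-best integer candidates.

    Assumption: both candidates lie within +/- radius of round(a_hat) in every
    coordinate, which only holds for small, well-conditioned Q_aa.
    """
    Q_inv = np.linalg.inv(Q_aa)
    centre = np.round(a_hat)
    scored = []
    for off in itertools.product(range(-radius, radius + 1), repeat=len(a_hat)):
        z = centre + np.array(off)
        r = a_hat - z
        scored.append((float(r @ Q_inv @ r), z))    # squared distance ||a_hat - z||^2_Q
    scored.sort(key=lambda item: item[0])           # sort by distance only
    (d1, a1), (d2, a2) = scored[0], scored[1]
    return a1, d1, a2, d2

def ratio_statistic(a_hat, Q_aa):
    """mu_R: second-best squared distance divided by the best one."""
    _, d1, _, d2 = best_two_candidates(a_hat, Q_aa)
    return d2 / d1

# Hypothetical 2-D example
Q_aa = np.array([[0.09, 0.03], [0.03, 0.06]])
a_hat = np.array([1.1, -0.95])
a1, d1, a2, d2 = best_two_candidates(a_hat, Q_aa)
mu_R = ratio_statistic(a_hat, Q_aa)
```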

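The ratio test belongs to the class of integer aperture estimators and is known as the ratio test integer aperture (RTIA) estimator. Its acceptance region can be constructed from its definition (2) as (Verhagen 2005; Verhagen and Teunissen 2006a):

$$\begin{aligned} \Omega _{0,R}=\left\{ x\in \mathbb {R}^n \mid \left\| x-z\right\| ^2_{Q_{\hat{a}\hat{a}}}\ge \bar{\mu }_R\left\| x\right\| ^2_{Q_{\hat{a}\hat{a}}},\ \forall z\in \mathbb {Z}^n\backslash \{0\}\right\} \end{aligned}$$(3)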

where \(\Omega _{0,R}\) is the acceptance region of the RTIA centered at the integer vector \(0\). The acceptance region is the intersection of many hyper-ellipsoids, and its size is determined by the threshold \(\bar{\mu }_R\). Details of the ratio test acceptance region are discussed in Verhagen (2005), Verhagen and Teunissen (2006a) and Wang et al. (2014).

Besides the ratio test, the difference test is also a high-performance IA estimator. Recent research indicated that the FF-difference test is an approximation of the OIA and achieves a higher success rate than the FF-ratio test in strong models (Wang et al. 2014), so the difference test is worth investigating.

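The difference test is defined as (Tiberius and De Jonge 1995):

$$\begin{aligned} \mu _D=\left\| \hat{a}-\check{a}_2\right\| ^2_{Q_{\hat{a}\hat{a}}}-\left\| \hat{a}-\check{a}\right\| ^2_{Q_{\hat{a}\hat{a}}}\ge \bar{\mu }_D \end{aligned}$$(4)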

Similarly, \(\mu _D\) and \(\bar{\mu }_D\) are the difference test statistic and the corresponding threshold. The difference test uses the difference of the two squared distances, rather than their ratio, to test the closeness between \(\hat{a}\) and \(\check{a}\).
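The two tests use the same pair of squared distances but combine them differently. The sketch below shows that they can reach opposite decisions for the same candidates. The helper names are hypothetical, and the thresholds \(\bar{\mu }_R=3\) and \(\bar{\mu }_D=10\) are illustrative only, not recommended values.

```python
def ratio_test(d1, d2, mu_bar_R):
    """Accept if d2 / d1 >= mu_bar_R (d1, d2: best and second-best squared distances)."""
    return d2 / d1 >= mu_bar_R

def difference_test(d1, d2, mu_bar_D):
    """Accept if d2 - d1 >= mu_bar_D."""
    return d2 - d1 >= mu_bar_D

MU_BAR_R, MU_BAR_D = 3.0, 10.0          # illustrative thresholds
for d1, d2 in [(0.5, 4.0),              # ratio 8.0 accepts, difference 3.5 rejects
               (8.0, 30.0),             # ratio 3.75 and difference 22 both accept
               (10.0, 25.0)]:           # ratio 2.5 rejects, difference 15 accepts
    print(d1, d2, ratio_test(d1, d2, MU_BAR_R), difference_test(d1, d2, MU_BAR_D))
```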

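Analogous to the ratio test, the difference test defines the difference test integer aperture (DTIA) estimator (Verhagen 2005; Verhagen and Teunissen 2006a). The acceptance region of the DTIA can be derived from Eq. (4) and expressed as:

$$\begin{aligned} \Omega _{0,D}=\left\{ x\in \mathbb {R}^n \mid x^TQ^{-1}_{\hat{a}\hat{a}}z\le \frac{1}{2}\left( \left\| z\right\| ^2_{Q_{\hat{a}\hat{a}}}-\bar{\mu }_D\right) ,\ \forall z\in \mathbb {Z}^n\backslash \{0\}\right\} \end{aligned}$$(5)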

where \(x^TQ^{-1}_{\hat{a}\hat{a}}z\) is the inner product of the vectors \(x\) and \(z\) in the metric defined by \(Q_{\hat{a}\hat{a}}^{-1}\). Fig. 1 shows the geometrical interpretation of the difference test. Let \(z_\mathrm{min}=\mathrm{arg}\min \limits _{z\in \mathbb {Z}^n{\backslash }\{0\}}\left\| x-z\right\| ^2_{Q_{\hat{a}\hat{a}}}\) be the non-zero integer vector closest to \(x\). The projection of \(x\) onto \(z_\mathrm{min}\) is \(\frac{x^TQ^{-1}_{\hat{a}\hat{a}}z_\mathrm{min}}{z_\mathrm{min}^TQ^{-1}_{\hat{a}\hat{a}}z_\mathrm{min}}z_\mathrm{min}\), denoted as the vector \(x_p\) in Fig. 1. \(B\) is the intersection of the DTIA acceptance region boundary with the vector \(z_\mathrm{min}\). The difference test compares the squared norms \(\left\| x_p\right\| ^2_{Q_{\hat{a}\hat{a}}}\) and \(\left\| B\right\| ^2_{Q_{\hat{a}\hat{a}}}\). If \(\left\| x_p\right\| ^2_{Q_{\hat{a}\hat{a}}}\le \left\| B\right\| ^2_{Q_{\hat{a}\hat{a}}}\), \(x\) is accepted. The threshold \(\bar{\mu }_D\) controls the size of the DTIA acceptance region.
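The half-space form of the DTIA follows from the identity \(\left\| x-z\right\| ^2_{Q_{\hat{a}\hat{a}}}-\left\| x\right\| ^2_{Q_{\hat{a}\hat{a}}}=\left\| z\right\| ^2_{Q_{\hat{a}\hat{a}}}-2x^TQ^{-1}_{\hat{a}\hat{a}}z\). By this identity, the condition \(\mu _D\ge \bar{\mu }_D\) for the candidate \(0\) is equivalent to \(x^TQ^{-1}_{\hat{a}\hat{a}}z\le (\left\| z\right\| ^2_{Q_{\hat{a}\hat{a}}}-\bar{\mu }_D)/2\) for every non-zero integer \(z\). The sketch below checks this equivalence numerically. As an assumption, it truncates the infinite set \(\mathbb {Z}^n\backslash \{0\}\) to a small box of candidates, which is only adequate for low-dimensional, well-conditioned \(Q_{\hat{a}\hat{a}}\). The helper names are hypothetical.

```python
import itertools
import numpy as np

def _nonzero_candidates(n, radius):
    # Hypothetical finite candidate set: all z != 0 with |z_i| <= radius.
    return [np.array(z, dtype=float)
            for z in itertools.product(range(-radius, radius + 1), repeat=n)
            if any(z)]

def difference_stat_at_zero(x, Q_aa, radius=2):
    """min_{z != 0} ||x - z||^2 - ||x||^2, i.e. mu_D when 0 is the best candidate."""
    Q_inv = np.linalg.inv(Q_aa)
    d0 = x @ Q_inv @ x
    return min((x - z) @ Q_inv @ (x - z)
               for z in _nonzero_candidates(len(x), radius)) - d0

def in_dtia_region(x, Q_aa, mu_bar_D, radius=2):
    """Half-space form: x^T Q^{-1} z <= (||z||^2 - mu_bar_D) / 2 for every z != 0."""
    Q_inv = np.linalg.inv(Q_aa)
    return all(x @ Q_inv @ z <= 0.5 * (z @ Q_inv @ z - mu_bar_D)
               for z in _nonzero_candidates(len(x), radius))

Q_aa = np.array([[0.09, 0.03], [0.03, 0.06]])   # hypothetical vc-matrix
x = np.array([0.05, -0.02])
accepted = in_dtia_region(x, Q_aa, mu_bar_D=5.0)
```

For a point outside \(S_0\), the statistic relative to \(0\) is negative, so such a point is rejected by both forms for any \(\bar{\mu }_D\ge 0\).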

2.3 The empirical approach

The ratio test and the difference test each define the shape of their acceptance region, while their thresholds determine its size. For threshold determination, there are two classes of approaches: the empirical approach and the fixed failure rate (FF-) approach.

The empirical approach determines the threshold according to individual experience, and the empirical threshold is normally a constant. Landau and Euler (1992) and Wei and Schwarz (1995) recommended 2 as the ratio test threshold, while Han (1997) suggested 1.5 with an improved stochastic model in kinematic data processing. A more conservative ratio test threshold (e.g. 3) is also popular in GNSS data processing, e.g. (Leick 2004; Takasu and Yasuda 2010). Tiberius and De Jonge (1995) suggested a difference test threshold of 15.

The empirical thresholds may work well in particular scenarios, but they are not sufficient in general. The empirical approach simply assumes that the threshold is independent of the underlying model, which is unrealistic. The definitions of the ratio test and the difference test show that the test statistics depend on \(Q_{\hat{a}\hat{a}}\), which in turn is determined by the design matrices \(A\) and \(B\) and the observation noise vc-matrix \(Q_{yy}\). Moreover, the empirical approach provides no reliability indicator, so it is difficult to evaluate the performance of an ambiguity acceptance test that uses an empirical threshold.

2.4 The fixed failure rate approach

The FF-approach determines the threshold based on probability theory, but it is more complex than the empirical approach.

Fig. 2 illustrates the probability model for the ambiguity acceptance test. In the one-dimensional case, the pull-in region \(S_{0}\) of the integer estimator is the interval \([-0.5,0.5]\) and \(\Omega _{0}\subset S_{0}\). Assuming that the float solution \(\hat{a}\) follows the normal distribution \(N(a,Q_{\hat{a}\hat{a}})\), the probability density function (PDF) of \(\hat{a}\) is given as:

$$\begin{aligned} f_{\hat{a}}(x)=\frac{1}{\sqrt{|2\pi Q_{\hat{a}\hat{a}}|}}\exp \left\{ -\frac{1}{2}\left\| x-a\right\| ^2_{Q_{\hat{a}\hat{a}}}\right\} \end{aligned}$$(6)

where \(|\cdot |\) denotes the matrix determinant. The expectation of \(\hat{a}\) is the unknown integer vector \(a\). The ambiguity residual \(\check{\epsilon }\) is defined as \(\check{\epsilon }=\hat{a}-\check{a}\), and its PDF is given as (Teunissen 2002; Verhagen and Teunissen 2006b):

$$\begin{aligned} f_{\check{\epsilon }}(x)=\sum _{z\in \mathbb {Z}^n}f_{\hat{a}}(x+z)\,s_0(x) \end{aligned}$$(7)

where \(S_{z}\) is the pull-in region of the integer estimator centered at the integer vector \(z\), and \(s_z(x)\) is its indicator function, i.e. \(s_z(x)=1\) if \(x\in S_z\) and \(s_z(x)=0\) otherwise.

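The two curves in Fig. 2 show \(f_{\check{\epsilon }}(x)\) (upper) and \(f_{\hat{a}}(x-a)\) (lower). Once the size of the acceptance region is determined, the success rate and failure rate of the IA estimator are defined as (Teunissen 2003b; Verhagen 2005):

$$\begin{aligned} P_{s}=\int _{\Omega _{a}}f_{\hat{a}}(x)\mathrm{d}x \end{aligned}$$(8)

$$\begin{aligned} P_{f}=\sum _{z\in \mathbb {Z}^n\backslash \{a\}}\int _{\Omega _{z}}f_{\hat{a}}(x)\mathrm{d}x \end{aligned}$$(9)

where \(\Omega _z=\Omega _0+z\) denotes the acceptance region translated to the integer vector \(z\).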

\(P_{s}\) and \(P_{f}\) can be interpreted geometrically as the areas of the dark grey and light grey regions within the acceptance region. If \(\Omega _0=S_0\), Eqs. (8) and (9) give the success rate and failure rate of the integer estimator itself. The success rate and failure rate are the probabilities of a correct and an incorrect integer solution within the acceptance region. The probabilities of a correct and an incorrect solution within the rejection region are known as the false alarm rate and the correct rejection rate, respectively.

Illustration of one-dimensional integer aperture estimation model for the ambiguity validation problem. The two curves show \(f_{\check{\epsilon }}(x)\) (upper) and \(f_{\hat{a}}(x-a)\) (lower). The area of regions shows different probability (Wang and Feng 2013)
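In the one-dimensional setting of Fig. 2, Eqs. (8) and (9) reduce to sums of normal CDF differences. The sketch below assumes \(\hat{a}\sim N(0,\sigma ^2)\) (true ambiguity \(a=0\)) and a symmetric aperture \(\Omega _0=[-\delta ,\delta ]\) with \(0<\delta \le 0.5\). The function name is hypothetical. With \(\delta =0.5\) the aperture equals the pull-in region, so \(P_s+P_f=1\) and \(P_s\) becomes the success rate of the integer estimator itself, \(2\Phi (1/(2\sigma ))-1\).

```python
import math

def phi_cdf(x):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def ia_rates_1d(sigma, delta, zmax=50):
    """Success and failure rates of the 1-D aperture Omega_0 = [-delta, delta].

    Assumes a_hat ~ N(0, sigma^2) and 0 < delta <= 0.5, so that Omega_0 lies
    inside the pull-in region S_0 = [-0.5, 0.5].
    """
    p_s = phi_cdf(delta / sigma) - phi_cdf(-delta / sigma)   # mass in Omega_0
    p_f = 0.0
    for z in range(1, zmax + 1):
        # Omega_z = [z - delta, z + delta]; +z and -z contribute equally
        p_f += 2.0 * (phi_cdf((z + delta) / sigma) - phi_cdf((z - delta) / sigma))
    return p_s, p_f

p_s, p_f = ia_rates_1d(sigma=0.3, delta=0.5)             # aperture = pull-in region
p_s_small, p_f_small = ia_rates_1d(sigma=0.3, delta=0.3)  # smaller aperture
```

Shrinking the aperture lowers both rates. The difference \(1-P_s-P_f\) is the probability of rejection, split into false alarms and correct rejections.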

The FF-approach follows a four-step procedure (Verhagen 2005; Verhagen and Teunissen 2013; Wang and Feng 2013):

1. Calculate the test statistic \(\mu \) (e.g. \(\mu _D=\left\| \hat{a}-\check{a}_2\right\| ^2_{Q_{\hat{a}\hat{a}}}-\left\| \hat{a}-\check{a}\right\| ^2_{Q_{\hat{a}\hat{a}}}\)) from the float solution \(\hat{a}\) and the fixed solution \(\check{a}\).

2. Establish the relationship between the threshold \(\bar{\mu }\) and the failure rate \(P_f\) by simulation. A large number of samples (e.g. 100,000) following the normal distribution \(N(0,Q_{\hat{a}\hat{a}})\) are simulated to describe the probability distribution of \(\hat{a}\) numerically. Integer estimation is performed on each sample, and the corresponding test statistic is calculated. The fixed solution of each sample is compared with the true ambiguity vector \(\varvec{0}\) to verify its correctness. The distribution of the failed samples over the test statistic \(\mu \) is then determined numerically from the simulated samples. The corresponding density, which integrates to the failure rate of the integer estimator, is denoted as \(f_{P_f}(x)\). The relationship between the failure rate \(P_f\) and the test threshold \(\bar{\mu }\) can be expressed as:

$$\begin{aligned} P_{f}(\bar{\mu })=\int ^{\infty }_{\bar{\mu }} f_{P_f}(x)\mathrm{d}x \end{aligned}$$(10)

Note that the integration interval depends on the definition of the IA estimator; the interval \([\bar{\mu },\infty )\) follows from Eqs. (2) and (4). Equation (10) indicates that a large \(\bar{\mu }\) corresponds to a small \(P_f\).

3. Threshold determination. According to Eq. (10), the FF-threshold is determined as \(\bar{\mu }=\min \{\mu \mid P_f(\mu )\le \bar{P}_f\}\), where \(\bar{P}_f\) is the failure rate tolerance. Obtaining \(\bar{\mu }\) from Eq. (10) is an ‘inverse integration’ problem, which can be solved with a numerical root-finding method (Verhagen 2005).

4. Compare the threshold \(\bar{\mu }\) from step 3 with the test statistic \(\mu \) from step 1 to make the final decision.
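Steps 2 and 3 can be sketched for a small two-dimensional example. This is an illustrative sketch under stated assumptions rather than the procedure used in this study. The true ambiguity is taken as \(0\). ILS is replaced by a brute-force search over a box around the rounded sample, which is adequate only for low-dimensional, well-conditioned \(Q_{\hat{a}\hat{a}}\). The ‘inverse integration’ of Eq. (10) is done by sorting the statistics of the failed samples instead of using a root-finding method. The function name `ff_difference_threshold` is hypothetical.

```python
import itertools
import numpy as np

def ff_difference_threshold(Q_aa, pf_tol, n_samples=100_000, radius=2, seed=0):
    """Monte Carlo FF-difference test threshold (sketch; true ambiguity = 0)."""
    rng = np.random.default_rng(seed)
    n = Q_aa.shape[0]
    Q_inv = np.linalg.inv(Q_aa)
    # Step 2: simulate float ambiguities a_hat ~ N(0, Q_aa)
    x = rng.multivariate_normal(np.zeros(n), Q_aa, size=n_samples)
    # Brute-force ILS over a box of candidates around round(x) (assumption)
    offsets = np.array(list(itertools.product(range(-radius, radius + 1), repeat=n)))
    cand = np.round(x)[:, None, :] + offsets[None, :, :]        # (N, M, n)
    r = x[:, None, :] - cand
    d = np.einsum('kmi,ij,kmj->km', r, Q_inv, r)                 # squared distances
    order = np.argsort(d, axis=1)
    d_sorted = np.take_along_axis(d, order, axis=1)
    mu_d = d_sorted[:, 1] - d_sorted[:, 0]                        # difference statistic
    best = cand[np.arange(n_samples), order[:, 0]]
    failed = np.any(best != 0, axis=1)                            # wrongly fixed samples
    # Step 3: P_f(mu_bar) = #{failed and mu_d >= mu_bar} / N must not exceed pf_tol
    k = int(np.floor(pf_tol * n_samples))                         # failures allowed
    fail_stats = np.sort(mu_d[failed])[::-1]                      # descending order
    if fail_stats.size <= k:
        return 0.0, mu_d, failed                                  # accept everything
    # Smallest threshold that excludes the (k+1)-th largest failed statistic
    return float(np.nextafter(fail_stats[k], np.inf)), mu_d, failed

Q_aa = np.array([[0.09, 0.03], [0.03, 0.06]])                    # hypothetical vc-matrix
mu_bar, mu_d, failed = ff_difference_threshold(Q_aa, pf_tol=0.001, n_samples=20_000)
```

Because the sample estimate of \(P_f(\bar{\mu })\) is a step function, the smallest admissible threshold lies just above the \((k+1)\)th largest failed-sample statistic, where \(k\) is the number of failures the tolerance allows. The result varies with the random seed, which reflects the simulation error of the Monte Carlo approach.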

The key to the FF-approach is the function connecting the failure rate \(P_f\) and the threshold \(\bar{\mu }\). Unfortunately, this function depends on the vc-matrix \(Q_{\hat{a}\hat{a}}\), so a new simulation is needed for every \(Q_{\hat{a}\hat{a}}\). Moreover, the ‘inverse integration’ problem in the third step has no readily available analytical solution; the root-finding method can only find a numerical root of the implicit function (10).

2.4.1 The look-up table method for the FF-ratio test

The FF-approach provides a general threshold determination method for all IA estimators, and it can be simplified for a specific IA estimator. The look-up table method is a simplified version of the FF-approach for the ratio test (Teunissen and Verhagen 2009; Verhagen and Teunissen 2013). It expresses the relationship between the failure rate \(P_f\) and the threshold \(\bar{\mu }\) with a two-dimensional table, in which the FF-ratio test threshold is a function of the ILS failure rate and the ambiguity dimension. Verhagen and Teunissen (2013) describe how to establish the look-up table, and an example look-up table is shown in Table 1. Since the table models the key relationship of the FF-approach explicitly, the threshold calculation becomes easier: the desired FF-threshold can be calculated with a suitable interpolation method instead of the root-finding method.

2.5 Comparison of the threshold determination methods

The principles of the empirical approach and the FF-approach have been discussed, but the difference between the two methods still needs to be examined.

The fundamental difference between the two approaches is whether the underlying model affects the threshold. To investigate this, two examples are employed. The first model is the single-frequency short-baseline model, in which the ionosphere is assumed absent. The second model is the single-frequency ionosphere-weighted model, which is suitable for medium-baseline data processing (Odijk 2002). The ILS success rates \(P_{s,\mathrm{ILS}}\) of the two examples are 99.9 % and 61.3 %, respectively, so the first model is stronger than the second. The success rate and failure rate of the ratio test are denoted as \(P_{s,\mathrm{RTIA}}\) and \(P_{f,\mathrm{RTIA}}\) and listed in Table 2.

With the empirical approach, suppose for example that the threshold is set to 2. The corresponding failure rates are 0.001 % and 2.018 % for the two models, respectively. To achieve the same failure rate as the first model, the second model has to use a ratio test threshold of 10. Choosing 10 as the threshold would keep the failure rates of both models no larger than 0.001 %. However, it is unfair to the first model, since this over-conservative threshold unnecessarily reduces its success rate from 99.068 % to 11.220 %. Thus, it is difficult to find an empirical threshold that suits all underlying models.

The FF-approach determines the threshold according to the failure rate. Given \(\bar{P}_f=0.001~\%\), the FF-approach automatically identifies 2 and 10 as the appropriate thresholds for the two models, respectively. Compared with the empirical approach, the limitation of the FF-approach is its complexity. Hence, reducing the complexity of the FF-approach is important for solving the threshold determination problem in the ambiguity acceptance test.

3 The threshold function method for FF-difference test

For the ratio test, the FF-approach has already been simplified with the look-up table method. In this study, the FF-difference test is used as the basis for simplifying the FF-approach.

3.1 The simulation strategy

To exclude the impact of inaccurate stochastic models, unexpected biases and outliers on the ambiguity acceptance test, this study is based on numerical simulation. A short- to medium-baseline real-time kinematic (RTK) positioning scenario is considered. The float solution is estimated by least-squares from single-epoch GPS observations. An elevation-dependent weighting strategy is used to capture the elevation-dependent observation noise and ionosphere noise, which is given as (Verhagen et al. 2012):

where \(w\) is the weight factor and \(E\) is the elevation angle in degrees.

To capture the impact of satellite geometry, a global, evenly distributed ground tracking network with \(15^\circ \times 15^\circ \) spacing is simulated, and 24 h of observation data are generated for all monitor stations with a sampling interval of 1,800 s. In this way, the simulated data set captures the satellite geometry at different locations and times. To investigate the impact of the underlying model, four simulation schemes are designed; their configurations are listed in Table 3. These schemes are designed to reveal the impacts of the number of frequencies, the ionosphere variance and the observation noise on the ratio test and difference test thresholds. The short-baseline model is used for the single-frequency case, since a single-frequency model is too weak to estimate ionosphere parameters in single-epoch mode. For the dual-frequency and triple-frequency cases, the zenith ionosphere standard deviation \(\sigma _{z,I}\) corresponds to 50 and 75 km baselines, respectively (Odijk 2002). Ambiguity resolution is attempted only if the underlying model is strong enough; otherwise, the fix rate would be extremely low (Verhagen and Teunissen 2013). In this study, an IB success rate \(P_{s,\mathrm{IB}}\) higher than 85 % is adopted empirically as the model strength criterion, and epochs with lower IB success rates are excluded from the simulation study. For each epoch, 100,000 samples are generated to calculate its \(P_{s,\mathrm{ILS}}\) and \(P_{f,\mathrm{ILS}}\).

3.2 Comparison of the FF-difference test threshold and the FF-ratio test threshold

The characteristics of the FF-difference test threshold are compared with those of the FF-ratio test threshold, since the latter has already been modeled with the look-up table. In the comparison, \(P_{s,\mathrm{ILS}}\) is used as the indicator of underlying model strength, and both the FF-ratio test threshold and the FF-difference test threshold are expressed as functions of \(P_{s,\mathrm{ILS}}\). Fig. 3 presents the simulation results for \(\bar{P}_f=1~\%\). The left panel shows that the FF-difference test threshold decreases as \(P_{s,\mathrm{ILS}}\) increases, and that the decreasing trends are consistent for all four schemes. The thresholds are tightly concentrated. Their dispersion is caused by simulation error and possibly other errors, and it depends on \(\bar{P}_f\). According to this result, the relationship between the FF-difference test threshold and \(P_{s,\mathrm{ILS}}\) can be represented as a function. Once the function is given, the threshold can be calculated from a given \(P_{s,\mathrm{ILS}}\) instead of relying on the root-finding method. Hence, it is possible to simplify the FF-approach with a ‘threshold function’.

The variation of the FF-ratio test threshold is shown in the right panel of Fig. 3. The figure indicates that the FF-ratio test threshold also decreases as the \(P_{s,\mathrm{ILS}}\) increases, but the decreasing trends depend on the simulation schemes. The FF-ratio test threshold is more spread out in the single-frequency case. The dispersion of \(\bar{\mu }_R\) may be caused by the underlying model or other factors (e.g. the ambiguity dimension).

The comparison indicates that the FF-difference test threshold can be represented as a function of \(P_{s,\mathrm{ILS}}\) without considering other model factors, e.g. the ambiguity dimension and the stochastic model. The FF-ratio test threshold could also be fitted as a function of \(P_{s,\mathrm{ILS}}\), but the fit would be poorer than for the FF-difference test threshold. The FF-ratio test threshold is more spread out and more sensitive to other factors such as the ambiguity dimension.

3.3 Establishing the threshold function for the FF-difference test

The previous analysis has revealed the potential of establishing a threshold function for the FF-difference test. This section discusses the methodology for establishing that function. Regression analysis is used, with \(P_{s,\mathrm{ILS}}\) as the independent variable and the FF-difference test threshold as the dependent variable.

3.3.1 The rational model as the threshold function

The first step in establishing the threshold function is to identify a suitable model for the threshold. The distribution of the FF-difference test threshold shown in Fig. 3 helps to select candidate models. To keep the function simple, several commonly used non-linear models are tried, including the exponential function, the hyperbolic function, the polynomial function and the rational function. The fitting residuals are used to evaluate the goodness of fit. The fitting results indicate that the rational function has a simple form and relatively small fitting residuals; it is therefore selected as the threshold function model. A rational function is a ratio of two polynomials. The threshold function for the FF-difference test is given as:

where \(\hat{\mu }\) denotes the FF-difference test threshold calculated with the threshold function method, expressed as a function of \(P_{s,\mathrm{ILS}}\), and \(e_1 ,\cdots , e_4\) are the coefficients of the rational function.

Note that the rational model is not the only valid threshold function model; fitting the threshold function with other models is also possible. The rational model is chosen in this study because of its small fitting residuals. The threshold function could also be fitted with higher-order rational functions. These achieve a fitting precision comparable to that of Eq. (12) but involve more coefficients. Overall, the advantages of the selected model are its simple form and small fitting residuals.

3.3.2 Fitting the threshold function

With the function model identified, the next step is to estimate its coefficients. Several curve fitting methods are applicable to this non-linear curve fitting problem, such as the Gauss–Newton method, the Levenberg–Marquardt method and the trust-region method (Teunissen 1990). In this study, the popular Levenberg–Marquardt method is adopted to fit the rational function. This method is an improved version of the Gauss–Newton method (Teunissen 1990). It adaptively adjusts a damping parameter, depending on whether an iteration reduces the cost, to improve convergence (Marquardt 1963).

Similar to the Gauss–Newton method, the Levenberg–Marquardt method refines the fitted curve iteratively by linearizing the non-linear model with a first-order Taylor series:

$$\begin{aligned} \hat{\mu }(e_k+\Delta e)\approx \hat{\mu }_k+J\Delta e \end{aligned}$$(13)

where \(\hat{\mu }_k\) is the rational function value calculated in the \(k\)th iteration, \(J\) is the Jacobian matrix \(\partial \hat{\mu }_k/\partial e_k\), \(e_k\) is the coefficient vector at the \(k\)th iteration, and \(\Delta e\) is the \(4\times 1\) increment vector of the coefficients.

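The Levenberg–Marquardt method introduces a positive damping scalar \(\lambda \), and the resulting normal equation is expressed as (Marquardt 1963):

$$\begin{aligned} \left( J^TWJ+\lambda \,\mathrm{diag}(J^TWJ)\right) \Delta e=J^TW(\mu _\mathrm{ILS}-\hat{\mu }_k) \end{aligned}$$(14)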

where \(W\) is the weight matrix. \(\mathrm{diag}(\cdot )\) denotes diagonalization, which keeps the main diagonal entries of a matrix and sets its off-diagonal entries to 0. \(\mu _\mathrm{ILS}\) contains the FF-difference test thresholds calculated with the FF-approach at the corresponding \(P_{s,\mathrm{ILS}}\), and these thresholds are the observations in the modeling procedure. The coefficient increment \(\Delta e\) is estimated by solving the normal equation (14).

The damping scalar \(\lambda \) is the key to the algorithm. It can be interpreted as a compromise between the Gauss–Newton method and the steepest descent method. When \(\lambda =0\), the Levenberg–Marquardt method reduces to the Gauss–Newton method, and it approaches the steepest descent method when \(\lambda \) is sufficiently large (Teunissen 1990). Moreover, the term \(\lambda \mathrm{diag}(J^TWJ)\) ensures that \(J^TWJ+\lambda \mathrm{diag}(J^TWJ)\) is positive definite as long as the diagonal entries of \(J^TWJ\) are positive. During the iteration, the damping factor is controlled by the quadratic form of the posterior residuals \(\hat{\sigma }'\), which is defined as:

$$\begin{aligned} \hat{\sigma }'=(\mu _\mathrm{ILS}-\hat{\mu }_{k+1})^TW(\mu _\mathrm{ILS}-\hat{\mu }_{k+1}) \end{aligned}$$(15)

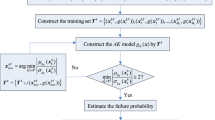

Fig. 4 describes the procedure of the Levenberg–Marquardt method. The criterion \(\epsilon _0\) is calculated as \(\epsilon _0=(\mu _\mathrm{ILS}-\hat{\mu }_{0})^TW(\mu _\mathrm{ILS}-\hat{\mu }_{0})\). The iterative procedure requires several inputs: the ILS success rate \(P_{s,\mathrm{ILS}}\) and the FF-difference test threshold \(\mu _\mathrm{ILS}\). The initial damping parameter \(\lambda \) and the initial coefficients \(e_{0}\) are essential as well. In the flowchart, the initial \(\lambda \) is an arbitrary positive scalar, and \(\alpha \) is set empirically to 10. \(\epsilon \) is an arbitrary small positive number that controls convergence, set here to \(10^{-5}\). \(|\cdot |\) denotes the absolute value.
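The damping strategy described above can be sketched as follows. Eq. (12) and the Fig. 4 flowchart are not reproduced here, so this sketch makes two assumptions that should be checked against them. First, it uses a hypothetical four-coefficient rational model \(\hat{\mu }=(e_1+e_2P)/(1+e_3P+e_4P^2)\). Second, it applies the common Marquardt schedule: when \(\hat{\sigma }'\) decreases, the step is accepted and \(\lambda \) is divided by \(\alpha \); otherwise, the step is rejected and \(\lambda \) is multiplied by \(\alpha \). Iteration stops once an accepted decrease of \(\hat{\sigma }'\) falls below \(\epsilon \). The synthetic coefficients in the usage lines are illustrative only and are not the values of Table 4.

```python
import numpy as np

def rational(P, e):
    """Hypothetical rational model (e1 + e2 P) / (1 + e3 P + e4 P^2); not Eq. (12)."""
    return (e[0] + e[1] * P) / (1.0 + e[2] * P + e[3] * P**2)

def rational_jacobian(P, e):
    """Analytical partial derivatives of the model with respect to e1..e4."""
    num = e[0] + e[1] * P
    den = 1.0 + e[2] * P + e[3] * P**2
    return np.column_stack([1.0 / den, P / den,
                            -num * P / den**2, -num * P**2 / den**2])

def levenberg_marquardt(P, mu_obs, e0, W=None, lam=1e-3, alpha=10.0,
                        eps=1e-5, max_iter=200):
    W = np.eye(P.size) if W is None else W
    e = np.asarray(e0, dtype=float)
    res = mu_obs - rational(P, e)
    cost = res @ W @ res                              # epsilon_0
    for _ in range(max_iter):
        J = rational_jacobian(P, e)
        N = J.T @ W @ J
        # Damped normal equation: (N + lam * diag(N)) de = J^T W res
        de = np.linalg.solve(N + lam * np.diag(np.diag(N)), J.T @ W @ res)
        res_new = mu_obs - rational(P, e + de)
        cost_new = res_new @ W @ res_new              # quadratic form sigma'
        if cost_new < cost:                           # accept: move towards Gauss-Newton
            converged = abs(cost - cost_new) < eps
            e, res, cost = e + de, res_new, cost_new
            lam /= alpha
            if converged:
                break
        else:                                         # reject: move towards steepest descent
            lam *= alpha
    return e, float(cost)

# Synthetic, noise-free data from hypothetical coefficients (not Table 4)
P = np.linspace(0.85, 0.999, 60)
e_true = np.array([30.0, -29.0, 1.0, 2.0])
mu_obs = rational(P, e_true)
e0 = np.array([29.0, -28.0, 1.05, 1.9])
e_fit, cost = levenberg_marquardt(P, mu_obs, e0)
```

A rejected step keeps the coefficients unchanged and only increases \(\lambda \). As a result, \(\hat{\sigma }'\) never increases across accepted iterations.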

3.3.3 The fitted threshold function and the quality control issues

The quality of the fit can be described by the a posteriori standard deviation, which is defined as:

$$\begin{aligned} \hat{\sigma }=\sqrt{\frac{v^TWv}{n-r}} \end{aligned}$$(16)

where \(v=\mu _\mathrm{ILS}-\hat{\mu }_{k+1}\) is the residual vector, \(n\) is the number of observations and \(r\) is the number of parameters. In this study, the weight matrix \(W\) is an identity matrix and \(r=4\). \(\hat{\sigma }\) reflects the discrepancy between the observations and the fitted model, which may be caused by random errors in the observations or by systematic bias in the model. For a given data set, the model with the smallest \(\hat{\sigma }\) can therefore be regarded as having the smallest systematic bias.
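With \(W=I\), the a posteriori standard deviation reduces to \(\hat{\sigma }=\sqrt{v^Tv/(n-r)}\). The minimal sketch below uses a hypothetical function name and a made-up residual vector. With \(n=6\) observations and \(r=4\) coefficients, only two degrees of freedom remain.

```python
import numpy as np

def posterior_sigma(mu_obs, mu_fit, r=4, W=None):
    """A posteriori standard deviation sqrt(v^T W v / (n - r)), v = mu_obs - mu_fit."""
    v = np.asarray(mu_obs, dtype=float) - np.asarray(mu_fit, dtype=float)
    W = np.eye(v.size) if W is None else W
    return float(np.sqrt(v @ W @ v / (v.size - r)))

# Worked example: v = [0.1, -0.1, 0.2, -0.2, 0.0, 0.1], so v^T v = 0.11
mu_obs = np.array([1.1, 0.9, 1.2, 0.8, 1.0, 1.1])
mu_fit = np.ones(6)
sigma_hat = posterior_sigma(mu_obs, mu_fit)   # sqrt(0.11 / 2), about 0.2345
```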

The fitted coefficients for different failure rate tolerances are listed in Table 4. With these coefficients, the FF-difference test threshold can be calculated directly for a given ILS success rate. Fig. 5 illustrates the fitted threshold functions and the corresponding \(\hat{\sigma }\). The left panel shows how well the fitted curves agree with the data for different failure rate tolerances. The dots show the FF-difference test thresholds calculated with the FF-approach, and the dashed lines show the corresponding fitted rational functions. The fitted functions lie in the middle of the threshold dots and thus describe the relationship well.

The right panel shows the posterior standard deviation \(\hat{\sigma }\) of the threshold function. Smaller failure rate tolerances lead to larger fitting errors, which is consistent with the left panel.

3.4 The feasibility of replacing ILS success rate with IB success rate

The fitted threshold function approximately describes the relationship between the ILS success rate \(P_{s,\mathrm{ILS}}\) and the FF-difference test threshold, and thus solves the ‘inverse integration’ problem in the FF-approach. However, calculating \(P_{s,\mathrm{ILS}}\) is still time-consuming, so an easier way to obtain it is needed to reduce the computational burden further. This section discusses the possibility of approximating \(P_{s,\mathrm{ILS}}\) with the integer bootstrapping (IB) success rate \(P_{s,\mathrm{IB}}\).

Although \(P_{s,\mathrm{ILS}}\) is difficult to calculate directly, its upper and lower bounds can be calculated (Hassibi and Boyd 1998; Teunissen 1998; Verhagen 2003; Feng and Wang 2011). Many upper and lower bounds of \(P_{s,\mathrm{ILS}}\) have been proposed from different points of view (Verhagen et al. 2013). If a bound is sharp enough, it can be used to approximate \(P_{s,\mathrm{ILS}}\).

The question in this context is whether to use an upper or a lower bound to approximate \(P_{s,\mathrm{ILS}}\). The coefficients listed in Table 4 describe the median curve of the threshold, which means that about 50 % of the FF-difference test thresholds are larger than the threshold function. Hence, the threshold function is not conservative enough. Since the threshold functions are monotonically decreasing, a lower bound of \(P_{s,\mathrm{ILS}}\) yields a larger, and therefore more conservative, threshold. The sharpest lower bound of \(P_{s,\mathrm{ILS}}\) is the IB success rate \(P_{s,\mathrm{IB}}\) (Verhagen et al. 2013), so \(P_{s,\mathrm{IB}}\) is suitable for approximating \(P_{s,\mathrm{ILS}}\). \(P_{s,\mathrm{IB}}\) is easy to calculate with (Teunissen 1998):

$$\begin{aligned} P_{s,\mathrm{IB}}=\prod ^n_{i=1}\left( 2\Phi \left( \frac{1}{2\sigma _{\hat{a}_{i|I}}}\right) -1\right) \end{aligned}$$

where \(n\) is the dimension of \(Q_{\hat{a}\hat{a}}\), \(\Phi (\cdot )\) is the cumulative distribution function (CDF) of the standard normal distribution, and \(\sigma _{\hat{a}_{i|I}}\) is the conditional standard deviation of the \(i\)th ambiguity, conditioned on ambiguities \(\{1,\cdots ,i-1\}\). The conditional variances \(\sigma ^2_{\hat{a}_{i|I}}\) are the diagonal elements of \(D\) in the LDL decomposition \(Q_{\hat{a}\hat{a}}=LDL^T\), with \(L\) unit lower triangular. Note that the decorrelated version of \(Q_{\hat{a}\hat{a}}\) must be used in the success rate calculation, as the IB success rate is not invariant to the parametrisation of the ambiguities (Teunissen 1998). The decorrelation method and the LDL decomposition method are described in Teunissen (1995).
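
Since \(2\Phi (x)-1=\mathrm{erf}(x/\sqrt{2})\), the IB success rate needs only the conditional variances from the LDL decomposition and the error function. The following Python sketch assumes the input matrix has already been decorrelated (the Z-transformation itself is not shown) and bootstraps in index order, conditioning ambiguity \(i\) on \(1,\ldots ,i-1\); the function names are illustrative.

```python
import math
from typing import List, Sequence


def conditional_variances(q: Sequence[Sequence[float]]) -> List[float]:
    """Diagonal of D in Q = L D L^T, with L unit lower triangular.

    d[i] is the variance of ambiguity i conditioned on ambiguities 0..i-1.
    """
    n = len(q)
    lower = [[0.0] * n for _ in range(n)]
    d = [0.0] * n
    for j in range(n):
        d[j] = q[j][j] - sum(lower[j][k] ** 2 * d[k] for k in range(j))
        if d[j] <= 0.0:
            raise ValueError("Q must be symmetric positive definite")
        lower[j][j] = 1.0
        for i in range(j + 1, n):
            s = sum(lower[i][k] * lower[j][k] * d[k] for k in range(j))
            lower[i][j] = (q[i][j] - s) / d[j]
    return d


def ib_success_rate(q: Sequence[Sequence[float]]) -> float:
    """P_s,IB = prod_i (2 Phi(1 / (2 sigma_i|I)) - 1) for a decorrelated Q."""
    p = 1.0
    for var in conditional_variances(q):
        sigma = math.sqrt(var)
        # 2 * Phi(x) - 1 == erf(x / sqrt(2)) for the standard normal CDF Phi
        p *= math.erf(1.0 / (2.0 * sigma * math.sqrt(2.0)))
    return p
```

For example, a single ambiguity with \(\sigma =0.5\) cycle gives \(2\Phi (1)-1\approx 0.683\). The cost is one \(O(n^3)\) factorisation, with no simulation involved.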

The price of approximating \(P_{s,\mathrm{ILS}}\) with \(P_{s,\mathrm{IB}}\) is that an approximation error is introduced into the threshold function method. Because this is a trade-off between computational burden and approximation error, the feasibility of the approximation has to be examined carefully: an excessively large approximation error would make the method meaningless.

The feasibility of the success rate approximation is examined in two respects: the difference between the two success rates, and the impact of the approximation on the failure rate. The difference between \(P_{s,\mathrm{ILS}}\) and \(P_{s,\mathrm{IB}}\) is shown in Fig. 6. The figure shows that \(P_{s,\mathrm{IB}}\) is a sharp lower bound of \(P_{s,\mathrm{ILS}}\): the difference is normally smaller than 5 % when \(P_{s,\mathrm{IB}}>90~\%\), and it decreases as \(P_{s,\mathrm{IB}}\) increases.

The second aspect of the feasibility examination is how the success rate difference changes the actual failure rate. The thresholds calculated with the threshold function using \(P_{s,\mathrm{ILS}}\) and \(P_{s,\mathrm{IB}}\) are denoted as \(\hat{\mu }_\mathrm{ILS}\) and \(\hat{\mu }_\mathrm{IB}\), respectively. In the validation procedure, the failure rates obtained with \(\hat{\mu }_\mathrm{ILS}\) and \(\hat{\mu }_\mathrm{IB}\) are denoted as \(\hat{P}_{f,\mathrm{ILS}}\) and \(\hat{P}_{f,\mathrm{IB}}\), respectively.

Figure 7 presents \(\hat{P}_{f,\mathrm{ILS}}\) and \(\hat{P}_{f,\mathrm{IB}}\) with respect to the corresponding failure rate tolerance \(\bar{P}_f\). The left panel shows that about 50 % of the \(\hat{P}_{f,\mathrm{ILS}}\) values are larger than \(\bar{P}_f\); thus \(\hat{\mu }_\mathrm{ILS}\) is not conservative enough as an approximation of the FF-approach. The right panel shows that the majority of the \(\hat{P}_{f,\mathrm{IB}}\) values are smaller than \(\bar{P}_f\). Since \(P_{s,\mathrm{IB}}\le P_{s,\mathrm{ILS}}\) and the threshold function is monotonically decreasing, \(\hat{\mu }_\mathrm{IB}\ge \hat{\mu }_{\mathrm{ILS}}\); a larger threshold accepts fewer incorrect solutions, so \(\hat{P}_{f,\mathrm{IB}}\le \hat{P}_{f,\mathrm{ILS}}\). After the approximation, the failure rate tolerance \(\bar{P}_f\) is close to the upper bound of \(\hat{P}_{f,\mathrm{IB}}\). Meanwhile, the uncertainty of \(\hat{P}_{f,\mathrm{IB}}\) is larger than that of \(\hat{P}_{f,\mathrm{ILS}}\), because \(\hat{\mu }_\mathrm{ILS}\) is free of the approximation error. Overall, the success rate approximation makes the threshold function method more conservative, and \(\hat{P}_{f,\mathrm{IB}}\) remains controlled in the majority of cases.

4 Validation of the threshold function method and modeling error analysis

The modeling and approximation procedures efficiently simplify the FF-approach, but they also inevitably introduce errors. The impact of these errors on decision making is analysed in this section. The FF-approach is affected only by the simulation error, which can be mitigated with a larger sample size. The threshold function method involves the modeling error and the approximation error in addition to the simulation error. The combined impact of the modeling and approximation errors can be analysed by comparison with the original FF-approach, and the magnitude of the simulation error can be analysed with a repeatability check.

4.1 The modeling error and the approximation error impact

The modeling error and the approximation error affect the threshold function method, whereas the original FF-approach does not suffer from them. Thus, the impact of these two errors can be isolated by comparing the threshold function method with the original FF-approach. In this section, their impact on the failure rate and the false alarm rate is analysed.

The failure rate and false alarm rate differences between the threshold function method and the FF-approach are shown in Fig. 8. In this comparison, the actual failure rate obtained with the original FF-approach in the validation procedure is denoted as \(P_{f,\mathrm{ILS}}\). The left panel shows that \(\hat{P}_{f,\mathrm{IB}}\le P_{f,\mathrm{ILS}}\) holds in the majority of cases; only a few samples have a slightly higher \(\hat{P}_{f,\mathrm{IB}}\). The failure rate difference increases as \(P_{s,\mathrm{ILS}}\) increases, because it depends on both the success rate difference and the gradient of the threshold function.
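
The role of the gradient can be made explicit with a first-order Taylor expansion of the rational threshold function \(f(P)=(e_1+e_2P)/(1+e_3P+e_4P^2)\) used in Eq. (18). This is a hedged sketch of the approximation part of the difference only: it ignores curvature and the modeling error, and assumes both success rates fall in the middle branch of Eq. (18).

$$\begin{aligned} f'(P)&=\frac{e_2\left( 1+e_3P+e_4P^2\right) -\left( e_1+e_2P\right) \left( e_3+2e_4P\right) }{\left( 1+e_3P+e_4P^2\right) ^2},\\ \hat{\mu }_\mathrm{IB}-\hat{\mu }_\mathrm{ILS}&\approx f'\left( P_{s,\mathrm{ILS}}\right) \left( P_{s,\mathrm{IB}}-P_{s,\mathrm{ILS}}\right) \ge 0. \end{aligned}$$

Because \(f\) is decreasing (\(f'<0\)) and \(P_{s,\mathrm{IB}}\le P_{s,\mathrm{ILS}}\), the threshold shift is non-negative, and it grows with both the success rate gap and the steepness \(|f'|\).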

The right panel of Fig. 8 shows the false alarm rate difference between the two methods. Since \(\hat{\mu }_\mathrm{IB}\) is more conservative, the corresponding type I error (false alarm rate) inevitably increases. The figure shows that the type I error of the threshold function method is larger than that of the original FF-approach in most cases, but the difference between the two methods is usually smaller than 5 %.

4.2 The simulation error impact

Both the FF-approach and the threshold function method are inevitably affected by the simulation error, since both employ numerical simulation. The magnitude of the simulation error depends on the simulated sample size. As a trade-off between simulation error and computational efficiency, 100,000 samples are simulated to calculate the threshold in this experiment. Due to the randomness of the simulated samples, the threshold may differ slightly between experiments; this variation is considered the simulation error.
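
As a rough, hedged indication of scale (a generic binomial argument, not an analysis from this paper): a failure rate near \(\bar{P}_f\) estimated as a proportion of \(N\) independent samples has a standard error of about

$$\begin{aligned} \sigma _{\hat{P}_f}\approx \sqrt{\frac{\bar{P}_f\left( 1-\bar{P}_f\right) }{N}}=\sqrt{\frac{0.001\times 0.999}{100{,}000}}\approx 1.0\times 10^{-4}, \end{aligned}$$

i.e. about 0.01 % for \(\bar{P}_f=0.1~\%\) and \(N=100{,}000\), or roughly 10 % of the tolerance itself. The resulting scatter of the threshold additionally depends on how steeply the failure rate varies with the threshold, which this formula does not capture.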

The simulation error can be evaluated by checking the repeatability of the experiment. A 16-dimensional example with \(P_{s,\mathrm{ILS}}\approx 97.1~\%\) is used to investigate the simulation error impact. The validation process includes two steps. The first step is to determine the threshold with the FF-approach; the simulation error may cause the FF-threshold and \(P_{s,\mathrm{ILS}}\) to differ slightly between runs. The second step is to calculate the actual failure rate with a fixed threshold. In this experiment, the maximum and minimum thresholds out of 1,000 repeated experiments are used as the fixed thresholds, and the corresponding maximum and minimum actual failure rates are calculated. For simplicity, the threshold and actual failure rate calculated with the original FF-approach are denoted as \(\mu _\mathrm{ILS}\) and \(P_{f,\mathrm{ILS}}\).
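
The simulation-based FF threshold and its repeatability can be mimicked on a toy problem. The sketch below is not the paper's 16-dimensional experiment: it assumes a hypothetical diagonal (fully decorrelated) \(Q_{\hat{a}\hat{a}}\) with true ambiguity zero, so that ILS reduces to component-wise rounding and \(\mu _D\) has a closed form. Function names, variances, sample sizes, and the choice to count false alarms as a share of all samples are illustrative assumptions; `math.nextafter` requires Python 3.9 or later.

```python
import math
import random
from typing import List, Sequence, Tuple

# (ILS solution wrong?, difference test statistic mu_D)
Sample = Tuple[bool, float]


def simulate_difference_test(q_diag: Sequence[float], n_samples: int,
                             seed: int) -> List[Sample]:
    """Monte Carlo draws of mu_D for a toy model (hypothetical setup).

    Assumes a diagonal Q (variances q_diag) and true ambiguity a = 0, so ILS is
    component-wise rounding and the second-best candidate moves exactly one
    component to its second-nearest integer.
    """
    rng = random.Random(seed)
    samples: List[Sample] = []
    for _ in range(n_samples):
        wrong = False
        increments = []
        for q in q_diag:
            a_hat = rng.gauss(0.0, math.sqrt(q))
            a_check = round(a_hat)
            r = abs(a_hat - a_check)  # distance to nearest integer, <= 0.5
            wrong = wrong or a_check != 0
            # norm increase when moving to the second-nearest integer:
            # ((1 - r)^2 - r^2) / q = (1 - 2 r) / q
            increments.append((1.0 - 2.0 * r) / q)
        samples.append((wrong, min(increments)))
    return samples


def ff_threshold(samples: Sequence[Sample], pf_tol: float) -> float:
    """Smallest threshold whose empirical failure rate stays <= pf_tol."""
    allowed = math.floor(pf_tol * len(samples))  # wrong fixes we may accept
    wrong_mu = sorted((mu for wrong, mu in samples if wrong), reverse=True)
    if len(wrong_mu) <= allowed:
        return 0.0  # integer estimator alone already meets the tolerance
    # accept only statistics strictly above the (allowed + 1)-th largest
    return math.nextafter(wrong_mu[allowed], math.inf)


def empirical_rates(samples: Sequence[Sample],
                    mu_thr: float) -> Tuple[float, float]:
    """(failure rate, false alarm rate) of 'accept if mu_D >= mu_thr'."""
    n = len(samples)
    fail = sum(1 for wrong, mu in samples if wrong and mu >= mu_thr)
    false_alarm = sum(1 for wrong, mu in samples if not wrong and mu < mu_thr)
    return fail / n, false_alarm / n


if __name__ == "__main__":
    q_diag = [0.04, 0.05, 0.06]  # hypothetical variances (cycles^2)
    thresholds = [ff_threshold(simulate_difference_test(q_diag, 20_000, s), 0.001)
                  for s in range(5)]
    print("threshold range over seeds:", min(thresholds), max(thresholds))
```

The spread between the smallest and largest threshold over seeds plays the role of the max/min thresholds in the repeatability check; increasing the sample size narrows it.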

The experiment results are presented in Fig. 9. The left panel shows the simulation error impact on the FF-difference test threshold. Both the threshold calculated with the original FF-approach, \(\mu _\mathrm{ILS}\), and the threshold function value \(\hat{\mu }_\mathrm{ILS}\) are affected by the simulation error, but the impact on \(\hat{\mu }_\mathrm{ILS}\) is smaller than that on \(\mu _\mathrm{ILS}\). \(\hat{\mu }_\mathrm{IB}\) is immune to the simulation error, as it does not involve a simulation procedure. The figure also indicates that the curve fitting procedure introduced in Sect. 3.3 mitigates the simulation error impact, and that \(\hat{\mu }_\mathrm{IB}\ge \hat{\mu }_\mathrm{ILS}\). The right panel shows the maximum and minimum actual failure rates in the 1,000 repeated experiments. The uncertainty in this figure reflects the accumulated simulation error impact of the two validation steps. The simulation error impact on the actual failure rate is similar to its impact on the threshold, but this time the uncertainty increases as \(\bar{P}_f\) increases. The figure shows that the actual failure rate of the original FF-approach may also exceed the failure rate tolerance due to the simulation error, whereas \(\hat{P}_{f,\mathrm{ILS}}\) has a smaller uncertainty than \(P_{f,\mathrm{ILS}}\). \(\hat{P}_{f,\mathrm{IB}}\) is immune to the simulation error and meets the tolerance in the majority of cases. With regard to the simulation error, \(\hat{\mu }_\mathrm{IB}\) even outperforms \(\mu _\mathrm{ILS}\).

4.3 Validation of the threshold function method

With the errors analysed, the performance of the threshold function method is evaluated with extensive data. The performance is measured by two indicators: the maximum actual failure rate \(\mathrm{Max}\{\hat{P}_{f,\mathrm{IB}}\}\) and the percentage of samples meeting \(\hat{P}_{f,\mathrm{IB}}\le \bar{P}_f\).

The validation procedure described in Sect. 4.2 is applied to all samples described in Sect. 3.1, and the results are presented in Fig. 10. The left panel shows the largest overflowed actual failure rate \(\hbox {Max}\{\hat{P}_{f,\mathrm{IB}}\}-\bar{P}_{f}\), which is related to \(\bar{P}_f\): in general, a smaller \(\bar{P}_f\) leads to a smaller overflowed failure rate. In the worst case, the overflowed failure rate reaches 0.08 %, which is still smaller than the simulation error impact on \(P_{f,\mathrm{ILS}}\). The right panel shows the percentage of samples meeting the failure rate tolerance. More than 97 % of the samples meet the requirement when \(\bar{P}_f>0.1~\%\). The \(\bar{P}_f=0.1~\%\) case has a lower percentage, but it still reaches 92 %. Hence, the majority of samples meet the failure rate tolerance with the threshold function method.
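
The two indicators of Sect. 4.3 are simple to compute once the actual failure rates of all validation samples for one tolerance \(\bar{P}_f\) are known. A small sketch; the function name is illustrative and the values in the test are made-up placeholders, not results from Fig. 10.

```python
from typing import Sequence, Tuple


def validation_indicators(actual_pf: Sequence[float],
                          pf_tol: float) -> Tuple[float, float]:
    """Return (Max{P_f} - tolerance, share of samples with P_f <= tolerance).

    A negative first value means every sample met the tolerance.
    """
    if not actual_pf:
        raise ValueError("at least one validation sample is required")
    max_overflow = max(actual_pf) - pf_tol
    share_ok = sum(1 for pf in actual_pf if pf <= pf_tol) / len(actual_pf)
    return max_overflow, share_ok
```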

4.4 The procedure of applying the threshold function method

This section summarizes how the threshold function method is applied. Like the original FF-approach, it follows four steps:

1. Form the difference test statistic \(\mu _D=\left\| \hat{a}-\check{a}_2\right\| ^2_{Q_{\hat{a}\hat{a}}}-\left\| \hat{a}-\check{a}\right\| ^2_{Q_{\hat{a}\hat{a}}}\), where \(\check{a}\) and \(\check{a}_2\) are the best and second-best integer candidates. The squared norms \(\left\| \hat{a}-\check{a}\right\| ^2_{Q_{\hat{a}\hat{a}}}\) and \(\left\| \hat{a}-\check{a}_2\right\| ^2_{Q_{\hat{a}\hat{a}}}\), weighted by \(Q_{\hat{a}\hat{a}}^{-1}\), are obtained from the integer least-squares estimator.

2. Calculate the IB success rate of \(Q_{\hat{a}\hat{a}}\) with \(P_{s,\mathrm{IB}}=\prod ^n_{i=1}(2\Phi (\frac{1}{2\sigma _{\hat{a}_{i|I}}})-1)\). The decorrelated version of \(Q_{\hat{a}\hat{a}}\) has to be used in this calculation, as the IB success rate depends on the parametrisation of the ambiguities. Decorrelation methods can be found in Teunissen (1995) and De Jonge and Tiberius (1996).

3. Calculate the test threshold with the threshold function. Choose the set of coefficients in Table 4 that corresponds to \(\bar{P}_f\); the FF-difference test threshold is then

$$\begin{aligned} \hat{\mu }= {\left\{ \begin{array}{ll} \infty ,&{} P_{s,\mathrm{IB}}<0.85 \\ \frac{e_1+e_2P_{s,\mathrm{IB}}}{1+e_3P_{s,\mathrm{IB}}+e_4P_{s,\mathrm{IB}}^2},&{} 0.85\le P_{s,\mathrm{IB}}<1-\bar{P}_f \\ 0,&{} P_{s,\mathrm{IB}}\ge 1-\bar{P}_f \end{array}\right. } \end{aligned}$$ (18)

If \(P_{s,\mathrm{IB}}<0.85\), the model is considered too weak to resolve the ambiguities, and more observations are required to improve the model strength. If \(P_{s,\mathrm{IB}}\ge 1-\bar{P}_f\), the failure rate of the integer estimator is already within the tolerance (since \(P_{s,\mathrm{ILS}}\ge P_{s,\mathrm{IB}}\)), so \(\hat{\mu }\) is set to \(0\) to avoid a negative threshold.

4. Compare \(\mu _D\) with \(\hat{\mu }\). If \(\mu _D\ge \hat{\mu }\), accept \(\check{a}\); otherwise, reject it.
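
Steps 1, 3 and 4 reduce to a few lines once the two squared norms from the ILS search and \(P_{s,\mathrm{IB}}\) from step 2 are available. A minimal sketch, assuming those inputs are given (the ILS search itself is not shown); function names are illustrative, and the coefficient tuple in the usage example is a hypothetical placeholder, not a row of Table 4.

```python
import math
from typing import Sequence


def difference_statistic(sq_norm_best: float, sq_norm_second: float) -> float:
    """Step 1: mu_D = ||a_hat - a_check_2||^2_Q - ||a_hat - a_check||^2_Q."""
    return sq_norm_second - sq_norm_best


def threshold_from_function(p_s_ib: float, pf_tol: float,
                            coeffs: Sequence[float],
                            p_min: float = 0.85) -> float:
    """Step 3: piecewise threshold of Eq. (18); coeffs = (e1, e2, e3, e4)."""
    if p_s_ib < p_min:
        return math.inf  # model too weak: every fixed solution is rejected
    if p_s_ib >= 1.0 - pf_tol:
        return 0.0  # integer estimator already meets the tolerance
    e1, e2, e3, e4 = coeffs
    return (e1 + e2 * p_s_ib) / (1.0 + e3 * p_s_ib + e4 * p_s_ib ** 2)


def accept(mu_d: float, mu_hat: float) -> bool:
    """Step 4: accept the fixed solution if mu_D >= mu_hat."""
    return mu_d >= mu_hat


if __name__ == "__main__":
    coeffs = (10.0, -10.0, 0.5, 0.2)  # hypothetical, NOT taken from Table 4
    mu_hat = threshold_from_function(0.95, 0.001, coeffs)
    mu_d = difference_statistic(9.8, 12.3)
    print(mu_hat, accept(mu_d, mu_hat))
```

Returning `math.inf` for a weak model keeps step 4 uniform: no finite \(\mu _D\) can pass, so the fixed solution is always rejected.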

The procedures of the three threshold determination methods with controllable failure rate are compared in Table 5. The three methods follow a similar four-step procedure. The original FF-approach is the most general method and is applicable to all IA estimators. The look-up table method is designed for the ratio test, and the threshold function method for the difference test. The second step is the most time-consuming step in the FF-approach because of the extensive simulation it requires. The look-up table method still relies on simulation to calculate the ILS failure rate. In contrast, the threshold function method calculates the success rate directly rather than by simulation, so it is more efficient than the other two methods. Both the look-up table method and the threshold function method avoid the root-finding procedure: the look-up table method obtains the threshold by interpolation, whereas the threshold function method evaluates a function. The decision-making step is the same for all three methods.

4.5 Some remarks

Beyond the above discussion, several further topics of interest are discussed in this section.

4.5.1 Computation efficiency improvement

The threshold function method circumvents the simulation step of the FF-approach and thus greatly improves computational efficiency. The computational cost of the original FF-approach depends on the underlying model strength, and its time consumption varies from a few seconds to several minutes. The threshold function method reduces the time consumption to a negligible level, and the computation time becomes independent of the underlying model. Thus, the threshold function method makes the fixed failure rate ambiguity validation approach always applicable to real-time applications.

4.5.2 Applicability of the threshold function

All of the above discussion of the threshold function method is confined to the FF-difference test, so one may wonder whether it is applicable to other IA estimators (e.g. the FF-ratio test). According to Fig. 3, the threshold function is applicable to the ratio test. However, owing to the distribution of the FF-ratio test thresholds, it may not perform as well as it does for the FF-difference test, and the FF-ratio test threshold function may differ between ambiguity dimensions. The feasibility and performance of the threshold function need to be checked before applying it to other IA estimators.

4.5.3 Is success rate approximation applicable to the look-up table method?

The success rate approximation is also applicable to the look-up table method, but it would make the threshold overly conservative. The thresholds listed in the look-up table are upper bounds of the simulated thresholds, so calculating them with \(P_{s,\mathrm{ILS}}\) already gives a conservative solution. If \(P_{s,\mathrm{ILS}}\) were approximated with \(P_{s,\mathrm{IB}}\), the resulting threshold would be overly conservative. Although this step could reduce the computational burden, it would enlarge the threshold discrepancy between the FF-approach and the look-up table approach.

5 Conclusion and outlook

This paper has investigated the threshold determination issue in the ambiguity acceptance test problem. First, the current threshold determination methods, namely the empirical approach and the FF-approach, were reviewed and compared. The FF-approach is more rigorous but computationally demanding. Thus, the key challenge of threshold determination is how to reduce the complexity of the FF-approach.

A new method, named the threshold function method, is proposed to reduce the complexity of the FF-approach. The method simplifies the FF-approach in two steps. In the first step, the relationship between the FF-difference test threshold and the ILS success rate is modeled as a rational function, which converts the ‘inverse integration’ problem into a direct calculation problem. In the second step, the ILS success rate in the model is replaced by the IB success rate, which can be calculated easily without any simulation.

The errors of the threshold function method are also analysed in this paper. The experimental results indicate that the threshold function modeling procedure can mitigate the simulation error impact. The success rate approximation procedure improves the computational efficiency and also makes the threshold function method conservative. Extensive simulation results show that the threshold function method meets the failure rate tolerance in the majority of cases. The occasional overflowed failure rate is smaller than the simulation error impact; thus, the threshold function method reduces the computational burden of the FF-approach without degrading its performance.

With proper modeling and approximation procedures, the proposed threshold function method reduces the computational burden of the FF-approach to a negligible level. It successfully circumvents the complex theory and computation and makes the fixed failure rate ambiguity validation method easy to apply. However, ambiguity validation is still challenging due to the potential discrepancy between real data and the underlying model. The feasibility of the threshold function method has been analysed from a theoretical perspective; practical issues still need to be addressed before testing the method with real GNSS data.

References

Abidin HZ (1993) Computational and geometrical aspects of on-the-fly ambiguity resolution. Technical Report 164, UNB

De Jonge P, Tiberius C (1996) The LAMBDA method for integer ambiguity estimation: implementation aspects. Technical Report 12, Delft Geodetic Computing Centre

Euler HJ, Goad CC (1991) On optimal filtering of GPS dual frequency observations without using orbit information. J Geod 65(2):130–143

Euler HJ, Schaffrin B (1991) On a measure for the discernibility between different ambiguity solutions in the static-kinematic GPS-mode. In: IAG Symposium, pp 285–295

Feng Y, Wang J (2011) Computed success rates of various carrier phase integer estimation solutions and their comparison with statistical success rates. J Geod 85(2):93–103

Frei E, Beutler G (1990) Rapid static positioning based on the fast ambiguity resolution approach FARA: theory and first results. Manuscr Geod 15(6):325–356

Han S (1997) Quality-control issues relating to instantaneous ambiguity resolution for real-time GPS kinematic positioning. J Geod 71(6):351–361

Hassibi A, Boyd S (1998) Integer parameter estimation in linear models with applications to GPS. IEEE Trans Signal Process 46(11):2938–2952

Landau H, Euler H-J (1992) On-the-fly ambiguity resolution for precise differential positioning. In: Proceedings of ION GPS, pp 607–613

Leick A (2004) GPS satellite surveying. Wiley, New York

Marquardt D (1963) An algorithm for least-squares estimation of nonlinear parameters. J Soc Ind Appl Math 11(2):431–441

Odijk D (2002) Weighting ionospheric corrections to improve fast GPS positioning over medium distances. In: Proceedings of the Institute of Navigation 2000 meeting. Delft Technology University

Takasu T, Yasuda A (2010) Kalman-filter-based integer ambiguity resolution strategy for long-baseline RTK with ionosphere and troposphere estimation. In: ION NTM, pp 161–171

Teunissen PJG (1990) Nonlinear least squares. Manuscr Geod 15(3):137–150

Teunissen PJG (1995) The least-squares ambiguity decorrelation adjustment: a method for fast GPS integer ambiguity estimation. J Geod 70(1):65–82

Teunissen PJG (1998) Success probability of integer GPS ambiguity rounding and bootstrapping. J Geod 72(10):606–612

Teunissen PJG (1999) An optimality property of the integer least-squares estimator. J Geod 73(11):587–593

Teunissen PJG (2002) The parameter distributions of the integer GPS model. J Geod 76(1):41–48

Teunissen PJG (2003a) A carrier phase ambiguity estimator with easy-to-evaluate fail rate. Artif Satell 38(3):89–96

Teunissen PJG (2003b) Integer aperture GNSS ambiguity resolution. Artif Satell 38(3):79–88

Teunissen PJG (2005) GNSS ambiguity resolution with optimally controlled failure-rate. Artif Satell 40(4):219–227

Teunissen PJG, Verhagen S (2009) The GNSS ambiguity ratio-test revisited: a better way of using it. Surv Rev 41(312):138–151

Tiberius C, De Jonge P (1995) Fast positioning using the LAMBDA method. In: Proc 4th Int Conf Differential Satellite Systems, pp 1–8

Verhagen S (2003) On the approximation of the integer least-squares success rate: which lower or upper bound to use. J GPS 2(2):117–124

Verhagen S (2005) The GNSS integer ambiguities: estimation and validation. Ph.D. thesis, Delft University of Technology

Verhagen S, Li B, Teunissen PJG (2013) Ps-LAMBDA: ambiguity success rate evaluation software for interferometric applications. Comput Geosci 54:361–376

Verhagen S, Teunissen PJG (2006a) New global navigation satellite system ambiguity resolution method compared to existing approaches. J Guid Control Dyn 29(4):981–991

Verhagen S, Teunissen PJG (2006b) On the probability density function of the GNSS ambiguity residuals. GPS Solut 10(1):21–28

Verhagen S, Teunissen PJG (2013) The ratio test for future GNSS ambiguity resolution. GPS Solut 17(4):535–548

Verhagen S, Teunissen PJG, Odijk D (2012) The future of single-frequency integer ambiguity resolution. In: Sneeuw N, Novák P, Crespi M, Sansò F (eds) VII Hotine-Marussi Symposium on Mathematical Geodesy. International Association of Geodesy Symposia, vol 137. Springer, Berlin Heidelberg

Wang J, Stewart MP, Tsakiri M (1998) A discrimination test procedure for ambiguity resolution on-the-fly. J Geod 72(11):644–653

Wang L, Feng Y (2013) Fixed failure rate ambiguity validation methods for GPS and COMPASS. In: China Satellite Navigation Conference (CSNC) 2013 Proceedings, vol 2. Springer, pp 396–415

Wang L, Verhagen S, Feng Y (2014) Ambiguity acceptance testing: a comparison of the ratio test and difference test. In: China Satellite Navigation Conference (CSNC) 2014 Proceedings

Wei M, Schwarz K-P (1995) Fast ambiguity resolution using an integer nonlinear programming method. In: Proceedings of ION GPS, pp 1101–1110

Acknowledgments

This work is financially supported by the Australian Cooperative Research Centre for Spatial Information (CRC-SI) project 1.01 ‘New carrier phase processing strategies for achieving precise and reliable multi-satellite, multi-frequency GNSS/RNSS positioning in Australia’. Large-scale simulation in this research is supported by the QUT High Performance Computing facilities. Discussions with Prof. Teunissen on the theory of integer aperture estimation and on the idea of using a functional description for the threshold values are greatly appreciated.


Cite this article

Wang, L., Verhagen, S. A new ambiguity acceptance test threshold determination method with controllable failure rate. J Geod 89, 361–375 (2015). https://doi.org/10.1007/s00190-014-0780-2


DOI: https://doi.org/10.1007/s00190-014-0780-2