Abstract

Multi-objective design under uncertainty problems that adopt probabilistic quantities as performance objectives and consider their estimation through stochastic simulation are examined in this paper, focusing on development of a surrogate modeling framework to reduce computational burden for the numerical optimization. The surrogate model is formulated to approximate the system response with respect to both the design variables and the uncertain model parameters, so that it can simultaneously support both the uncertainty propagation and the identification of the Pareto optimal solutions. Kriging is chosen as the metamodel, and its probabilistic nature (its ability to offer a local estimate of the prediction error) is leveraged within different aspects of the framework. To reduce the number of simulations for the expensive system model, an iterative approach is established with adaptive characteristics for controlling the metamodel accuracy. At each iteration, a new metamodel is developed utilizing all available training points. A new Pareto front is then identified utilizing this surrogate model and is compared, for assessing stopping criteria, to the front that was identified in the previous iteration. This comparison utilizes explicitly the potential error associated with the metamodel predictions. If stopping criteria are not achieved, a set of refinement experiments (new training points) is identified and process proceeds to the next iteration. A hybrid design of experiments is considered for this refinement, with a dual goal of global coverage and local exploitation of regions of interest, separately identified for the design variables and the uncertain model parameters.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Design optimization plays an important role in analysis of engineering systems, aiming to identify the optimal design configuration for some chosen performance objectives and constraints. For engineering applications that involve conflicting and incommensurable objectives, this design approach is frequently formulated as a multi-objective problem (Marler and Arora 2004), and its solution identifies a set of Pareto optimal design configurations representing different trade-offs for these objectives. Such optimization problems pose always greater computational challenges than single-objective ones, with a requirement to thoroughly explore the design space in order to comprehensively assess performance trade-offs. Their complexity increases when modeling uncertainties are also addressed in the problem formulation. Such uncertainties, stemming from the incomplete knowledge about the examined system and its environment (Helton et al. 2004; Beck and Taflanidis 2013), impact performance predictions and therefore the design process. A rational framework to explicitly consider them in this process is a probability logic approach (Beck and Taflanidis 2013), employed by assigning probability distributions to the uncertain model characteristics. The design objectives are then expressed through the expectation of some chosen performance measure (Beck and Santana Gomes 2012; Papadimitriou and Papadimitriou 2016). Multi-objective design problems within this context are commonly formulated as applications of robust design optimization (RDO) (Lagaros and Papadrakakis 2007; Ren and Rahman 2013; Medina and Taflanidis 2015), with the conflicting objectives related to the mean and variance of the (same) system response. More general approaches, though, exist that examine broader performance quantifications, adopting objectives that relate to different response outputs and to advanced probabilistic descriptions for performance (Wang and Stengel 2000; Verros et al. 2005; Gidaris et al. 2016; Liang and Mahadevan 2017). This paper discusses such multi-objective design under uncertainty problems that employ general probabilistic quantities as design objectives (no specialized structure assumed), and focuses on applications that involve complex numerical models. For such applications, stochastic (i.e., Monte Carlo) simulation is the only applicable generalized method for calculating the probabilistic performances. Unfortunately, this approach has an associated high computational cost, requiring a large number of calls to the deterministic, computationally intensive simulation model.

A framework relying on surrogate modeling to approximate the system response is discussed here to alleviate this burden. Within the aforementioned multi-objective design under uncertainty setting, traditional approaches for incorporating metamodels can be distinguished in two general categories. In the first category, metamodels are formulated in the design optimization level, by approximating the objective function (estimated utilizing the exact time-consuming system model) in the design space (Ruiz et al. 2016; Leotardi et al. 2016). In the second category, metamodels are exploited at the uncertainty quantification level, by approximating the system performance in the random variable space for each design configuration the optimizer examines (Poles and Lovison 2009). Approaches that combine these two formulations also exist (Coelho et al. 2011), combining separate metamodels for an outer loop (optimization in the design space) and an inner loop (uncertainty quantification in the random variables space). Here, an alternative approach is adopted that considers the formulation of a single metamodel in the so-called augmented input space of the probabilistic design problem, composed of both the design variables and the random variables. The metamodel then simultaneously supports both the uncertainty propagation and the design optimization. Such a formulation has been examined primarily for single-objective applications, in a variety of settings with respect to performance objective definition. Approaches based on Kriging metamodeling have been extensively investigated (Dubourg et al. 2011; Bichon et al. 2013; Moustapha et al. 2016) for problems where the probability of response exceeding certain threshold or response’s specific quantile is of interest, as often encountered in reliability-based design optimization (RBDO). Applications dealing directly with the expected value of the response also exist, relying on Kriging (Janusevskis and Le Riche 2013) or stochastic collocation (Kouri et al. 2014). In Zhang et al. (2016), and using again Kriging as metamodel, a unified implementation was discussed (accommodating different statistical measures) through the introduction of a performance function related to the system response. Recently (Dubreuil et al. 2018), a metamodeling implementation combining polynomial chaos expansion and Kriging was examined for optimization of the extreme value of the response, investigating the performance under any possible system realization considering the uncertainty in the model description. All these single-objective applications have incorporated some approach to adaptively control the metamodel accuracy, focusing primarily on critical, near-optimum regions. In contrast, for multi-objective problems, the augmented input space formulation has been adopted simply as a direct implementation of the aforementioned ideas (Gidaris et al. 2016), with no effort to adaptively control the metamodel accuracy.

This work establishes a complete framework for adaptive implementation of surrogate modeling in the augmented input space for multi-objective design under uncertainty problems, and formally establishes the MODU-AIM (multi-objective design under uncertainty with augmented input metamodels) algorithm. Kriging (Sacks et al. 1989; Kleijnen 2009) is adopted as metamodel since it has been proven highly efficient for approximating complex functions, while simultaneously being able to provide a local approximation to the metamodel prediction error (Jin et al. 2003). The main novel contribution of this work is the development of an iterative optimization scheme that further reduces the number of calls to the time-consuming simulation model. The underlying principle of leveraging an iterative formulation to reduce computational burden is the same as the approach advocated in Zhang et al. (2016), though the implementation here for multi-objective problems is [and needs to be (Yang et al. 2002)] fundamentally different. A global metamodel covering the entire design domain is proposed and iteratively refined using information for the metamodel accuracy and the updated (at each iteration) Pareto front. The design of experiments (DoE) for this refinement and accuracy/convergence criteria for the iterative process are based on global comparisons, as opposed to the local, trust-region-based approach developed in Zhang et al. (2016). The probabilistic nature of the Kriging metamodel is explicitly leveraged in multiple aspects of the proposed framework: in the modification of the probabilistic objectives, in the DoE, and in the stopping criteria for assessing convergence.

In the next section, the multi-objective design under uncertainty problem is formulated, and in Section 3, the modification of the problem through the metamodel implementation is discussed. In Section 4, the iterative optimization scheme is presented, and in Section 5, the overall framework is reviewed, before discussing the illustrative applications in Section 6.

2 Problem formulation

Consider an engineering system with design vector \( \mathbf{x}\in X\subset {\Re}^{n_x} \), where X is the admissible design space, and uncertain parameters (i.e., random variables) \( \boldsymbol{\uptheta} \in \mathtt{\varTheta}\subset {\Re}^{n_{\theta }} \) with probability density function (PDF) p(θ) (facilitating ultimately the uncertainty quantification). Let \( \mathbf{z}\left(\mathbf{x},\boldsymbol{\uptheta} \right)\subset {\Re}^{n_z} \) denote the response vector of the system model (with zm representing its mth component), assumed here to be obtained through a time-consuming call to a deterministic numerical function (simulator), and let \( {h}_i\left(\mathbf{x},\boldsymbol{\uptheta} \right):{\Re}^{n_x\times {n}_{\theta }}\to \Re \) denote the performance measure used for quantification of the ith design objective. The nh-dimensional performance measure vector, including all such measures, will be denoted h. The notation h[z| x,θ] will be also used herein to denote the performance measure vector; this notation indicates explicitly that this measure is a function of the response vector z but assumes also knowledge of design variables x and model parameters θ.

The ith performance function (i.e., design objective) is defined as

where \( {\mathbb{E}}_{p\left(\boldsymbol{\uptheta} \right)}\left[.\right] \) denotes the expectation under probability model p(θ). The probabilistic performance function vectorH(x) is defined as the vector with components Hi(x). The multi-objective design under uncertainty problem corresponds to the identification of the feasible design vector that minimizes H(x):

Note that in this representation of the design problem, any deterministic constraints are assumed to be incorporated in the definition of admissible design space X.

For the multi-objective problem in (2), a design configuration x is defined as dominating (strongly) any other x′ if all components in its performance function vector are lower. If objectives are competing, there is no single design configuration that dominates all others. In such applications, design optimization of (2) is transformed to identification of the Pareto optimal solutions, corresponding to designs that are not dominated by any other feasible ones. The set of all such configurations is denoted as the Pareto setXp with its elements denoted as xp. The Pareto front is the performance function space representation of the Pareto set Hp = {H(x)| x ∈ Xp}. It is generally impractical to find all Pareto optimums so the optimization strategies usually aim at finding a subset of them that represents Hp well and can provide the decision maker with a comprehensive picture of trade-offs (Zitzler et al. 2000).

For identifying the Pareto set, the probabilistic objectives need to be calculated, and as discussed in Section 1, this is established here through stochastic simulation. Using Ni samples from some importance sampling (IS) density qi(θ), introduced for improving computational efficiency by concentrating the stochastic simulation effort in domains of importance for the uncertain model parameters (Robert and Casella 2004), the integral in (1) is estimated as

where \( {\left\{\boldsymbol{\uptheta} \right\}}_i=\left\{{\boldsymbol{\uptheta}}_i^j,j=1,\dots, {N}_i\right\} \) is the sample set from qi(θ) and the notation \( {\widehat{H}}_i\left(\mathbf{x}|{\left\{\boldsymbol{\uptheta} \right\}}_i\right) \) stresses that this estimate is dependent on the sample set utilized. Note that the IS density qi(θ) and number of samples Ni can be different for each objective. Should be pointed out that selection of an efficient IS density can be challenging (Medina and Taflanidis 2014), especially in the context of multi-objective optimization where the domains of importance for θ might be drastically different across the different Pareto optimal solutions. Identifying and properly combining information from these domains is not straightforward. In the context of the proposed Kriging-aided optimization framework, this will be further discussed in Section 5.1.

By combining all component estimates \( {\widehat{H}}_i\left(\mathbf{x}|{\left\{\boldsymbol{\uptheta} \right\}}_i\right) \) obtained through (3), the estimate for the probabilistic objective vector \( \widehat{\mathbf{H}}\left(\mathbf{x}|\left\{\boldsymbol{\uptheta} \right\}\right) \) is obtained, and the multi-objective design under uncertainty problem can be then solved by substituting H (x) with that estimate. The resultant optimization problem is computationally challenging to solve, primarily due to the high computational cost associated with each performance function vector evaluation and the existence of an unavoidable stochastic simulation estimation error. To address these challenges, a metamodeling-aided approach in augmented input space is formulated in this paper.

3 Kriging-aided multi-objective optimization in augmented input space

3.1 Kriging-aided optimization

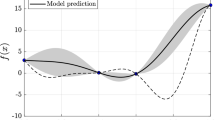

The metamodel output is chosen to correspond to the response vector z, rather than the performance measure vector h. The advantages of this choice are discussed in detail in Zhang et al. (2016). With respect to the metamodel input, this is chosen to encompass both the design and random variables, leading to definition of augmented input vector as y = [x; θ]. Modifications in this definition for applications that knowledge of all components of vectors x and θ is not necessary for evaluating z are discussed in Zhang et al. (2016). As discussed in Section 1, Kriging is adopted as metamodel. The fundamental hypothesis in Kriging is that any component in the response vector zm is approximated as a realization of a Gaussian process (Sacks et al. 1989; Kleijnen 2009) with some chosen correlation function R(y,y′). For forming the Kriging metamodel, the output {zm(yt), t = 1, …,n} is observed at n distinct locations for the input {yt, t = 1, …,n}, called training (or support) points or experiments. The selection of these locations is called design of experiments (DoE). Ultimately, Kriging provides the following distribution for the Gaussian process approximating zm based on observations Y = [y1…yn]T (Sacks et al. 1989)

where N (a,b) stands for Gaussian distribution with mean a and variance b, whereas μm(y) and \( {\sigma}_m^2\left(\mathbf{y}\right) \) correspond to the predictive mean and predictive variance, respectively, for the output zm. Once the Kriging metamodel is established, all estimates for the predictive mean and variance are provided with negligible computational burden (Lophaven et al. 2002; Jia and Taflanidis 2013). For the entire vector z, the approximation is established by combining the different components of the response vector and will be denoted as Mz(y| Y). The essential parts of this approximation are the predictive mean, μz(y), and variance, \( {\boldsymbol{\upsigma}}_{\mathbf{z}}^2\left(\mathbf{y}\right) \), vectors assembled through the components of μm(y) and \( {\sigma}_m^2\left(\mathbf{y}\right) \), respectively. Further details for the metamodel formulation in this context (augmented input space) may be found in Zhang et al. (2016). For applications whose response vector z is high-dimensional, developing a separate metamodel for each response component might be impractical. In such instances, alternative approaches should be preferred, for example, developing a single metamodel for the entire vector z as detailed in Zhang et al. (2016) [single metamodel directly predicts entire μz(y) and \( {\boldsymbol{\upsigma}}_{\mathbf{z}}^2\left(\mathbf{y}\right) \) vectors] or combining dimension reduction techniques (Jia and Taflanidis 2013). Adoption of such approaches avoids any constraints related to the nz value.

The optimization problem can be now solved by utilizing the metamodel approximation for z(y) when estimating the probabilistic performances, with metamodel error (and ultimately its probabilistic nature) explicitly incorporated in the formulation (Zhang et al. 2016). This leads to the following predictive performance measure\( {h}_i^{krig} \) that approximates hi

where \( {\mathbb{E}}_M\left[.|\mathbf{Y}\right] \) denotes conditional expectation under Mz(y| Y) and ϕ corresponds to the standard Gaussian PDF. The integration in (5) is equivalent to propagation of the error associated with the Kriging metamodel and typically can be analytically performed (Zhang et al. 2016). It is assumed herein that \( {h}_i^{krig}\left(\mathbf{x},\boldsymbol{\uptheta} \right)={h}_i^{krig}\left[{\boldsymbol{\upmu}}_{\mathbf{z}}\left(\mathbf{y}\right),{\boldsymbol{\upsigma}}_{\mathbf{z}}\left(\mathbf{y}\right)|\mathbf{x},\boldsymbol{\uptheta} \right] \) is an easy to compute measure and that it is continuous and differentiable with respect to μz(y) and σz(y).

This approach facilitates then the following approximation for Hi, denoted as the predictive performance function\( {H}_i^{krig} \):

which may be estimated though stochastic simulation as:

where, recall, \( {\left\{\boldsymbol{\uptheta} \right\}}_i=\left\{{\boldsymbol{\uptheta}}_i^j,j=1,\dots, {N}_i\right\} \) is the sample set from qi(θ). Selection of N and qi(θ) to establish a target accuracy for the estimate in (7) within the optimization framework will be addressed in Section 5. Evaluation of the gradient of the predictive performance function is discussed in Appendix 1.

3.2 Solution through epsilon-constraint method

For performing the multi-objective optimization, the epsilon-constraint method is adopted because of its ability to identify a Pareto front with a desired, prescribed density, even if the latter has nonconvex regions (Haimes et al. 1971). Herein, we focus on the application to bi-objective problem (nh = 2), though the presented framework can be extended to multi-objective problems with nh > 2, albeit with considerable increase in the computational complexity when combined with the epsilon-constraint approach (Mavrotas 2009). Without loss of generality assuming H1 as optimization function (and H2constraint function), the epsilon-constraint method converts the multi-objective optimization problem to a set of single-objective constraint optimization problems with different constraint bounds εr:

where the superscript r is utilized to describe the rth such constraint. Systematic variation of εr facilitates identification of the Pareto front.

Utilizing the metamodel-aided approximations for the performance functions (7), we establish the following single-objective constraint optimization problem:

Exploiting the numerical efficiency of the Kriging metamodels and the ability to obtain gradient information (as discussed in Appendix 1), the approximate optimization problem of (9) can be efficiently and accurately solved by any appropriate optimizer, even for a large number of constraints (different values of εr).

4 Iterative optimization scheme

The important question is how to obtain a predictive Pareto set through the use of the Kriging metamodel [i.e., optimization of (9)] that approximates the actual Pareto set [i.e., optimization of (8)] well, and perform this with small computational effort (i.e., number of calls to exact numerical models). With no a priori knowledge of regions of importance in Y (especially Pareto optimal regions), a globally accurate metamodel has to be developed. This could be, though, overly conservative and create an excessive computational burden. An iterative approach is established for this purpose, adaptively controlling the metamodel accuracy and identifying regions of importance for efficiently solving the multi-objective problem at hand.

4.1 Fundamentals for the iterative optimization

At each iteration, the metamodel is constructed from the current set of experiments, and the design configurations belonging to the predictive Pareto set are identified and compared to the set identified in the previous iteration. If convergence is not reached yet, the set of experiment is enriched with refinement experiments, a new metamodel is developed, and the optimization proceeds to the next iteration. In this manner, refinement experiments can be selected based on information from previous iterations.

To formalize this approach, let \( {\mathbf{X}}_p^{\left(k-1\right)}=\left\{{\mathbf{x}}_p^{r\left(k-1\right)};r=1,\dots, {n}_p^{\left(k-1\right)}\right\} \) be the predictive Pareto set available at the beginning of the kth iteration (this corresponds to the set identified at the end of the k-1st iteration), further denoted as current Pareto set. A superscript in parenthesis (k) will be used herein to denote the iteration. The total number of training points for developing the metamodel is n(k) whereas for all stochastic simulations performed within the kth iteration the same sample set \( {\left\{\boldsymbol{\uptheta} \right\}}_i^{(k)} \) is utilized for each of the performance functions. This approach corresponds to the concept of exterior sampling and creates a consistent estimation error in the comparisons within the current iteration (Spall 2003). This set changes, though, across iterations (interior sampling). All evaluations of the performance are established utilizing the current metamodel, leading to approximation \( {h}_i^{krig(k)}\left(\mathbf{x},\boldsymbol{\uptheta} \right) \) and estimate for the objective \( {\widehat{H}}_i^{krig(k)}\left(\mathbf{x}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(k)}\right) \) [obtained by (7)]. Using this estimate, the Pareto front can be identified using any appropriate algorithm, potentially using members of the current Pareto set \( {\mathbf{X}}_p^{\left(k-1\right)} \) as initial guesses (if chosen algorithm can leverage such information).

At each iteration, the developed framework includes three key tasks: identifying the new (representative subset of the) Pareto set, checking for convergence, and enriching the set of experiments. Details of these tasks are discussed sequentially next.

4.2 Pareto set generation

The Pareto set generation is facilitated through the proper selection of the constraint εr. This selection is performed with a dual goal: (i) restrict optimization of (9) in feasible regions; (ii) adequately represent the entire Pareto front by populating evenly its different regions. Figure 1 demonstrates some of these concepts in the context of the second illustrative example considered later.

The first goal is satisfied by identifying first the anchor points, i.e., the points representing the minimum for individual objectives. This is established through the unconstrained single-objective optimization:

These optimizations define the boundaries of current Pareto front and also establish the range for feasible constraints as \( {\varepsilon}^r\in \left[{\widehat{H}}_2^{krig}\left({\mathbf{x}}_2^{an(k)}|{\left\{\boldsymbol{\uptheta} \right\}}_2^{(k)}\right),{\widehat{H}}_2^{krig}\left({\mathbf{x}}_1^{an(k)}|{\left\{\boldsymbol{\uptheta} \right\}}_2^{(k)}\right)\right] \).

For the second goal, the remaining solutions (interior points) in the Pareto set are generated as following. The performance functions for the design configurations in \( {\mathbf{X}}_p^{\left(k-1\right)} \) are first updated using the current metamodel and new sample set \( {\left\{\boldsymbol{\uptheta} \right\}}_i^{(k)} \) (diamonds in Fig.1). Design configurations whose corresponding values for \( {\widehat{H}}_2^{krig} \) belong in the feasible constraint range \( \left[{\widehat{H}}_2^{krig}\left({\mathbf{x}}_2^{an(k)}|{\left\{\boldsymbol{\uptheta} \right\}}_2^{(k)}\right),{\widehat{H}}_2^{krig}\left({\mathbf{x}}_1^{an(k)}|{\left\{\boldsymbol{\uptheta} \right\}}_2^{(k)}\right)\right] \) are retained and utilized as values εr to update the Pareto set in their vicinity. If \( {\underset{\sim }{\mathbf{X}}}_p^{\left(k-1\right)}=\left\{{\underset{\sim }{\mathbf{x}}}_p^{r\left(k-1\right)};r=1,\dots, {\underset{\sim }{n}}_p^{\left(k-1\right)}\right\} \) denotes the set of such retained configurations, then this update corresponds to solution of optimization problem (squares in Fig. 1),

In cases the set \( {\underset{\sim }{\mathbf{X}}}_p^{\left(k-1\right)} \) is unavailable (i.e., in the first iteration) or in the extreme cases that none of its design configurations gets retained (\( {\underset{\sim }{n}}_p^{\left(k-1\right)}=0 \)), the constraint problem in (11) can be solved with a pre-defined number (ndp) of equi-spaced constraints across the boundaries of εr instead. Combining all interior points, obtained through (11) with the two anchor points obtained through (10), the candidate Pareto set is obtained. Even distribution of the corresponding Pareto front is enforced by an adaptive refinement (Kim and De Weck 2006), removing solutions in over-populated regions of the front, and identifying new solutions in under-populated regions (see also Fig. 1).

4.3 Stopping criteria

As stopping criteria, the discrepancy between the Pareto sets identified in consecutive iterations is utilized, using both convergence and coverage concepts (Deb 2001) to evaluate discrepancy. In early iterations, it is expected that the improvement of the metamodel accuracy will lead to identification of design configurations clearly dominating some precedent ones. As the optimization progresses, and the identified approximate Pareto set approaches the actual Pareto set, it is expected that design configurations identified in subsequent iterations should be non-dominant over each other, and also extend over similar regions in the objective function space. Since it is computationally prohibitive to evaluate the actual performance of the identified solutions, a probabilistic assessment of the performance is adopted (Teich 2001), utilizing the metamodel for the comparison, but explicitly considering its probabilistic nature (error).

To accommodate this, define the probability of superiority for the ith objective function \( {\mathbb{P}}_i\left(\mathbf{x}\succ {\mathbf{x}}^{\hbox{'}}\right) \) as the probability that the ith performance objective for x will be lower than the one for \( {\mathbf{x}}^{\hbox{'}} \) considering the uncertainty incorporated through the probabilistic nature of the metamodel. To calculate this probability, each performance function {Hi, i = 1, 2} is approximated through the conditional realization \( \left\{{H}_i^{cr},i=1,2\right\} \) considering the predictive distribution for the metamodel output Mz(y| Y). The conditional realizations can be given by an expression similar to (6) by substituting \( {h}_i^{krig}(.) \) with hi[z| .] and using realizations of response z from distribution Mz(y| Y). The probability \( {\mathbb{P}}_i\left(\mathbf{x}\succ {\mathbf{x}}^{\hbox{'}}\right) \) can be finally expressed as:

where the indicator function \( \mathbb{I}\left[.\right] \) is one if the condition within the brackets is satisfied, else it is zero. Evaluation of this two-dimensional integral requires generation of samples for \( {H}_i^{cr}\left(\mathbf{x}\right) \) and \( {H}_i^{cr}\left({\mathbf{x}}^{\hbox{'}}\right) \). This is established through the approach discussed in Appendix 2 which yields Ncr samples for \( {\left[{\widehat{H}}_i^{cr}\left(\mathbf{x}\right)\right]}^t\ \mathrm{and}\ {\left[{\widehat{H}}_i^{cr}\left({\mathbf{x}}^{\hbox{'}}\right)\right]}^t;t=1,\dots, {N}_{cr} \). The probability \( {\mathbb{P}}_i\left(\mathbf{x}\succ {\mathbf{x}}^{\hbox{'}}\right) \) is then estimated as

Expression ∣\( {\mathbb{P}}_i\left(\mathbf{x}\succ {\mathbf{x}}^{\hbox{'}}\right) \) − 0.5∣ quantifies proximity of x and \( {\mathbf{x}}^{\hbox{'}} \) with respect to the ith objective, with a value close to 0 meaning that x does not exhibit superiority or inferiority to \( {\mathbf{x}}^{\hbox{'}} \). The maximum value for this expression across both objectives is an indicator of closeness in the objective space. This observation leads to definition of the convergence indicator, which compares a new point to all members of a set (here, the previous Pareto set \( {\mathbf{X}}_p^{\left(k-1\right)} \) is utilized)

A small value for Iconv means that x is close to some member of \( {\mathbf{X}}_p^{\left(k-1\right)} \). A large value indicates a significant difference between x and all members of \( {\mathbf{X}}_p^{\left(k-1\right)} \) (concept also illustrated in Fig. 1). As the process approaches convergence, precedent Pareto set \( {\mathbf{X}}_p^{\left(k-1\right)} \) should be near Pareto optimal even in current iteration. This means, equivalently, that Iconv should not take high values for any point in \( {\mathbf{X}}_p^{(k)} \) which leads to following convergence stopping criterion:

where δconv is a predetermined threshold.

For evaluating coverage of the Pareto front, a secondary criterion is needed that compares performance to the anchor points of the previous front. The coverage indicator is introduced for this reason, defined as

A large value for Icov indicates that point x clearly dominates anchor point across the objective it corresponded to a minimum for, so coverage of the Pareto front has changed. This leads to the second coverage stopping criterion:

where δanch is a predetermined threshold.

The optimization progress is terminated when both convergence and coverage stopping criteria are satisfied. Parameters δconv and δanch affect the convergence rate of the iterative scheme and their selection represents a trade-off between quality of solutions and algorithmic efficiency. As a reference, consider the ideal case that current Pareto front is identical to the precedent one, leading to \( {\mathbb{P}}_i\left(\mathbf{x}\succ {\mathbf{x}}^{\hbox{'}}\right) \) = 0.5 for all objectives. Hence, δanch should be selected around 0.5–0.7 (probability up to 20% of exceeding previous minimums) and δconv also around 0–0.2 (probability of superiority is bounded between 30 and 70%). Parametric studies on the illustrative examples considered later showed little impact on the quality of the identified solutions of these selections (as long as they are chosen in the recommended range).

4.4 Hybrid design of experiments for refinement of metamodel

If convergence has not been achieved at the end of the kth iteration, the current set of experiments needs to be enriched to inform the development of a more accurate (refined) metamodel. In this work, we employ a hybrid DoE that combines both space-filling DoE and adaptive DoE to achieve: (Ro.i) satisfactory global accuracy and (Ro.ii) sufficient local accuracy for regions of importance for the augmented input under the multi-objective optimization context. New experiments na1 and na2 are obtained from each strategy for a total of na = na1 + na2 additional experiments. This leads to a total of n(k + 1) = n(k) + na training points for the metamodel in the next iteration.

The space-filling DoE populates the entire domain for the augmented input y using Latin hypercube sampling (LHS). For x LHS from uniform distribution within the design domain, X is adopted, whereas for θ LHS from distribution p(θ) is utilized. The space-filling DoE strategy guarantees that metamodel accuracy will be improved over the entire domain and, therefore, achieves (Ro.i), and reduces risk of missing optimum design configurations that could belong in yet unexplored regions with lower metamodel accuracy.

On the other hand, the adaptive DoE targets regions of importance for the augmented input. A sample-based DoE (Dubourg et al. 2011) is adopted here for this purpose that identifies a new set of experiments using a target density to represent domains of interests in Y, a utility function to incorporate metamodel accuracy in the DoE and clustering to eliminate close-proximity experiments. The target density is separately defined for the design variables x and the uncertain model parameters θ since for each domain of interests have different attributes. For the former, a kernel-based density \( {\tilde{\pi}}_d\left(\mathbf{x}|{\mathbf{X}}_d^{(k)}\right) \) around non-convergent regions of the Pareto front is chosen \( {\mathbf{X}}_d^{(k)}=\left\{{\mathbf{x}}_d^{r(k)};r=1,\dots, {n}_d\right\} \) whereas for the latter the density representing the IS density for each design configuration x is chosen, represented for computational efficiency as a kernel-based density conditional on the elements of \( {\mathbf{X}}_d^{(k)} \), \( \tilde{\pi}\left(\boldsymbol{\uptheta} |{\mathbf{x}}_d^{r(k)}\right) \). Details for these definitions are included in Appendix 3. The utility function incorporates in the DoE considerations about the metamodel accuracy by prioritizing candidate experiments with larger predicted error. The linearized prediction variance (Zhang et al. 2016) is chosen as utility function to facilitate this, given by

where \( {\left..\right|}_{\mu_m\left(\mathbf{y}\right)} \) denotes evaluation for z = μ(y).

The sample-based adaptive DoE strategy is composed of the following steps with objective to sample na2 experiments

-

Step 1.

Obtain a large number nc>>na2 of candidate experiments Y = {yj = [xj; θj], j = 1, …, nc} from the DoE target density \( {\pi}_s^{(k)}\left(\mathbf{x},\boldsymbol{\uptheta} \right)={\tilde{\pi}}_d\left(\mathbf{x}|{\mathbf{X}}_d^{(k)}\right)\tilde{\pi}\left(\boldsymbol{\uptheta} |{\mathbf{x}}_d^{r(k)}\right) \).

-

Step 2.

The linearized predictive variances of both performance measures \( \mathrm{VAR}\left[{h}_i^{krig}\left(\mathbf{y}\right)\right],i=1,2 \) are calculated for all nc candidate experiments. As scalar accuracy metric, the following quantity is adopted

corresponding to the larger normalized variance, where normalization is established with respect to the sample mean. Candidate samples are then ranked based on this metric and only a certain portion arnc>>na2 [where ar < 1] are retained corresponding to higher \( \overline{\mathrm{VAR}}\left(\mathbf{y}\right) \) values.

-

Step 3.

The retained experiments are finally clustered through K-means (Hartigan and Wong 1979) to the desired number of na2 experiments.

One final question is how to achieve the balance between space-filling and adaptive DoE. In early iterations, greater focus should be put on space-filling DoE to avoid premature convergence due to poor metamodel accuracy in specific domains, with this focus shifted to local exploitation of regions of importance (i.e., adaptive DoE) as convergence is potentially established. This is achieved by choosing the ratio of experiments selected by the adaptive DoE, \( {n}_{a2}^{(k)}/{n}_a \), as a monotonically decreasing function of the ratio of configurations in \( {\mathbf{X}}_p^{(k)} \) being retained into the DoE set \( {\mathbf{X}}_d^{(k)} \), i.e., \( {n}_d^{(k)}/{n}_p^{(k)} \). A lower value indicates that a greater portion of the Pareto front has converged, inferring sufficient global coverage of the metamodel, and thus greater potential benefits from concentrating efforts on the remaining, non-convergent domains. To avoid the extreme case of getting no experiments from the adaptive DoE when no configuration is retained (\( {n}_d^{(k)}=0 \)), a certain percent of experiments (20% chosen here) is always chosen from the adaptive DoE, while among the remaining ones (80%), the adaptive DoE also accounts for a portion proportional to \( {n}_d^{(k)}/{n}_p^{(k)} \).

5 Optimization implementation

This section reviews the overall iterative optimization approach starting with some remaining implementation details.

5.1 Initial DoE and selection stochastic simulation characteristics

The remaining implementation details pertain to the initial DoE strategy and the IS density and number of samples N selection. For the former, the space-filling DoE approach described in Section 4.4 is adopted, within an adaptive setting for selecting a sufficient number of experiments (Zhang et al. 2016). An initial number of training points ninit is obtained; then, the metamodel is developed and its accuracy is evaluated using leave-one-out cross validation. If some minimum desired accuracy is not achieved, ninita additional training points are obtained and the latter two tasks are repeated.

For the IS densities, the density for ith performance function is selected as a mixture of optimal IS densities πi(θ|x) [IS densities defined in Appendix 3 in (40)] for the design configurations in the precedent Pareto set \( {\mathbf{X}}_p^{\left(k-1\right)} \):

This mixture corresponds to an appropriate density across the entire current Pareto set and is efficiently approximated through samples from it using kernel density estimation (KDE) (Scott 1992): using rejection sampling (Robert and Casella 2004), nis samples are first obtained from each density \( {\pi}_i\left(\boldsymbol{\uptheta} |{{\mathbf{x}}_p^r}^{\left(k-1\right)}\right),r=1,\cdots, {n}_p^{\left(k-1\right)} \); these samples are then combined to yield a total of \( {n}_{is}\times {n}_p^{\left(k-1\right)} \) samples defining set \( {\left\{{\boldsymbol{\uptheta}}_{IS}\right\}}_i^{(k)} \) which is finally used for the KDE approximation [yielding, ultimately, a similar expression as in (38)]. For Ni, a sufficiently large value should be chosen to guarantee high accuracy in the stochastic simulation, something that is computationally affordable due to the numerical efficiency of the Kriging metamodel. This value can be adaptively adjusted if a sufficient accuracy is not achieved. For this purpose, the coefficient of variation for the current selection of the IS density can be utilized, approximated (Robert and Casella 2004) as

where \( \left\{{\boldsymbol{\uptheta}}_i^j,j=1,\dots, {N}_i\right\} \) is the sample set from \( {q}_i^{(k)}\left(\boldsymbol{\uptheta} \right) \). Ni can be adjusted across iterations to maintain a desired accuracy threshold \( {\delta}_{th}^{cv} \). For the kth iteration, and using the current Pareto set as reference (i.e., establish \( {\delta}_{th}^{cv} \) over this set), this leads to updated value

where Ni corresponds to number of samples used for estimate \( {\delta}_i^{cv}\left({\mathbf{x}}_p^{r\left(k-1\right)}|{q}_i^{\left(k-1\right)}\left(\boldsymbol{\uptheta} \right)\right) \). To guarantee adequate accuracy over the entire domain X, and since there is little gain in overall efficiency by using small Ni values (due to the metamodel computational efficiency), Ni should be chosen always larger than some minimum value Nmin, based on the computational burden consideration of estimation of (7) (for example, Ni that allows estimation of each performance objective in less than 1 s).

This IS density formulation addresses the challenges outlined in Section 2 regarding the IS density selection by primarily relying on the metamodeling computational efficiency. Note that more advanced approaches could be adopted for the IS formulation (Medina and Taflanidis 2014), something that will create, though, additional computational burden. In the proposed approach, the improvement of the stochastic simulation accuracy is sought after by leveraging the metamodel computational efficiency (use of large Ni value) and not by trying to identifying the optimal IS density to use. An easy-to-define, but still good, IS density is considered sufficient for the latter.

It should be finally pointed out that beyond the satisfaction of accuracy threshold \( {\delta}_{th}^{cv} \), the adoption of exterior sampling further reduces the impact of the stochastic simulation estimation error on the identified solutions (Spall 2003).

5.2 Review of algorithm

When combining the previous ideas, one can formulate the following optimization approach (illustrated also in Fig. 2), referenced herein as MODU-AIM. First, define the predictive performance vector hkrig(x, θ) and assign the optimization function H1 and constraint function H2. Set the thresholds for stopping criteria δconv and δanch and select the number of conditional realization samples Ncr. Set the rules for choosing the number of refinement experiments through the space-filling DoE \( {n}_{a1}^{(k)} \) and the number of refinement experiments through the adaptive DoE \( {n}_{a2}^{(k)} \), and for the adaptive DoE, choose the number of candidate samples nc and the reduction ratio ar based on metamodel accuracy. Finally, choose the number of samples for the IS density approximation nis, the minimum number of samples Nmin for the stochastic simulation and the accuracy threshold \( {\delta}_{th}^{cv} \).

-

Step 1.

(Initial DoE): In the first iteration employ the initial DoE strategy to obtain total of n(1) training points, gradually increasing number of points till satisfactory cross-validation accuracy is achieved. Evaluate model response for these points {z(yt); t = 1, …,n(1)}.

-

Step 2.

(Kriging model): Utilize all available observations in the database to formulate the Kriging metamodel and obtain approximation \( {h}_i^{krig(k)}\left(\mathbf{x},\boldsymbol{\uptheta} \right) \).

-

Step 3.

(IS density formulation): Obtain nis samples from each density \( {\pi}_i\left(\boldsymbol{\uptheta} |{{\mathbf{x}}_p^r}^{\left(k-1\right)}\right) \) and combine them to formulate IS density \( {q}_i^{(k)}\left(\boldsymbol{\uptheta} \right) \) for each performance function through kernel density approximation. For elements belonging to \( {\mathbf{X}}_d^{\left(k-1\right)} \), these samples are readily available from the previous iteration (step 9). In the first iteration, skip this step and use \( {q}_i^{(1)}\left(\boldsymbol{\uptheta} \right)=p\left(\boldsymbol{\uptheta} \right) \) [no prior information available].

-

Step 4.

(Stochastic simulation sample generation): Simulate set \( {\left\{\boldsymbol{\uptheta} \right\}}_i^{(k)} \) of Ni samples from \( {q}_i^{(k)}\left(\boldsymbol{\uptheta} \right) \) as the stochastic simulation sample set. For Ni, use Nmin in first iteration or maximum between Nmin and updated value given by (22).

-

Step 5.

(Anchor point identification): Identify anchor points for the predictive Pareto front by solving optimizations described by (10). Use as initial guess for gradient optimization the previously identified anchor points \( {\mathbf{x}}_{an,i}^{\left(k-1\right)} \).

-

Step 6.

(Interior point identification): Calculate first the retained Pareto set of design configurations identified at previous step \( {\underset{\sim }{\mathbf{X}}}_p^{\left(k-1\right)}=\left\{{\underset{\sim }{\mathbf{x}}}_p^{r\left(k-1\right)};r=1,\dots, {\underset{\sim }{n}}_p^{\left(k-1\right)}\right\} \) that fall within the current anchor points (skip in first iteration). Identify interior points of the predictive Pareto front by solving optimizations described by (11). Use as initial guess for gradient-based algorithms the reference points \( {\underset{\sim }{\mathbf{x}}}_p^{r\left(k-1\right)} \) for optimization in (11). Combine points from steps 5 to 6 to obtain candidate Pareto set \( {\mathbf{X}}_{cp}^{(k)} \).

-

Step 7.

(Refinement procedure of the Pareto front): Refine the Pareto set \( {\mathbf{X}}_{cp}^{(k)} \) by resolving under-populated and over-populated regions to obtain a uniform distribution of the set.

-

Step 8.

(Stopping criteria checking): Check if the stopping criteria have been reached as detailed in Section 4.3. If they are met or computational effort has been exhausted, the optimization process is terminated.

-

Step 9.

(Hybrid DoE for refinement): If optimization process is not terminated, employ the hybrid DoE strategy detailed in Section 4.4 to obtain total of na training points, \( {n}_{a1}^{(k)} \) from the space-filling DoE and \( {n}_{a2}^{(k)} \) from the adaptive DoE.

-

Step 10.

(Evaluation of the response): Evaluate the model response {z(yt); t = 1, …,na} for the newly identified training points at step 9 and combine with the previous observations in a database over all iterations. Proceed back to step 2 advancing to k + 1st iteration.

With respect to selection of the different characteristics of the algorithm (recall δconv, δanch, and Nmin have been already discussed), the following recommendations are provided. The number of conditional realization samples Ncr should be chosen higher than 100; for the case that \( {\mathbb{P}}_i\left(\mathbf{x}\succ {\mathbf{x}}^{\hbox{'}}\right) \) = 0.5 (designs having identical performance), this establishes a coefficient of variation below 5%. The number of the initial experiments ninit and the additional refinement experiments na should be chosen proportional to the dimension of input space ny following the recommendations in Jones et al. (1998). A 7- to 30-fold increase has been found effective in the applications examined: it avoids incremental only updates in the metamodel due to addition of small only number of experiments, something that can lead to premature convergence, while it also facilitates the adaptive characteristics of the algorithm, allowing a gradual increase of experiments. For the IS sampling density, nis should be chosen in the range of [10nθ, 50nθ] to facilitate adequate accuracy for the kernel-based approximation (Scott 1992). Finally, the number of candidate samples nc should be much larger than na to make both reduction and clustering effective. Appropriate selection for nc is 200na whereas for the reduction ar, value of 5–10% has been shown to work well in the applications examined. It should be noted that besides na, these selections have not been found to have a strong impact on algorithmic efficiency.

6 Illustrative examples

The framework is illustrated next with two examples. The first one corresponds to a simpler, low-dimensional problem with analytical performance measures and the second one to a challenging engineering application examining the design of bilinear passive dampers for the suspension of a half-car nonlinear model riding on a rough road. These two examples will be herein referenced, respectively, as “analytical” and “car suspension design”. All characteristics of the numerical optimization that are independent of ny (or nθ and nx), i.e., independent of the dimension of the design variables and the uncertain model characteristics, are selected common for both examples. For the stopping criteria characteristics, the thresholds δconv and δanch are taken as 15% and 65% respectively, whereas Ncr = 500 is assumed for the conditional realization samples. The number of candidate samples nc is taken as 200na with the reduction ar taken as 10%, whereas the number of DoE experiments \( {n}_{a1}^{(k)} \) and \( {n}_{a2}^{(k)} \) are chosen based on the criteria discussed at the end of Section 4.4. The stochastic simulation characteristics (dependent on nθ) and the number of experiments (dependent on ny) are reported individually for each of the examples. As pointed out earlier besides the number of experiments, these selections are not anticipated to have a strong impact on the efficiency of the algorithm and sensitivity studies performed have verified this.

For each problem, a reference (benchmark) solution is obtained (whose procedure will be elaborated later) in order to compare the quality of the identified Pareto front. For evaluating the computational efficiency of the proposed approach, the problem is also solved by another surrogate model aided optimizer appropriate for multi-objective problems, SOCEMO (Müller 2017). SOCEMO is a derivative-free algorithm developed for efficiently solving deterministic multi-objective problem with expensive, black-box performance functions, like the ones discussed in this manuscript. It allows for a direct control of the number of evaluations of the expensive performance objectives. It has been already shown (Müller 2017) to offer enhanced efficiency for applications with expensive functions when compared to many other standard approaches for solving multi-objective problems, like the NSGA-II algorithm (Deb et al. 2002). This is the reason it is selected here (instead of such, more popular, alternatives). As SOCEMO is not directly applicable for design under uncertainty problems, it is applied here adopting a “double-loop,” i.e., “nested” formulation, a common approach for such problems (Eldred et al. 2002): for each design configuration evaluation of the expensive design objectives is performed by stochastic simulation utilizing IS, as described by (3), adopting an exterior sampling approach. The total number of model evaluations for SOCEMO is the product of the number of required expensive evaluations of the design objectives and the sample size utilized in the stochastic simulation. For such double-loop approaches, an inevitable trade-off exists between the overall computational efficiency and the stochastic simulation accuracy. Higher sample size directly reduces computational efficiency but also reduces the stochastic simulation accuracy, lowering potentially quality of the identified solutions (Spall 2003). Since the inner loop is treated as a black-box, adaptive control of this accuracy is not possible. For the stochastic simulation, the IS densities are selected as the efficient ones identified in the final iteration of the MODU-AIM implementation, given by (20). In the overall comparisons of the computational efficiency, this provides a relative advantage to SOCEMO: formulation of good IS densities requires a considerable computational burden, especially in the context of design optimization, since such densities are not common across the whole design configurations examined (Medina and Taflanidis 2013). Here, efficient IS densities are provided for the inner loop of the SOCEMO implementation with no computational cost.

6.1 Analytical problem

6.1.1 Design problem formulation and optimization details

The expressions of two performance measures are:

where x = [x1x2] is the design vector and θ = θ the single uncertain parameter, taken as a standard Gaussian variable. For simplicity, the response vector corresponds directly to the performances measures, leading to z = [h1(x,θ) h2(x,θ)]T. The predictive performance measure in (5), the partial derivative in (34), and the linearized prediction variances of (18) can be expressed in closed forms as (i = 1,2):

The design domains X has upper bounds [10, 10], and lower bounds [− 10, − 10] and the starting point of the algorithm (first iteration) is set to be x(1) = [0, 0], the center of this domain. The pre-specified number of constraints ndp is taken at 20, while the refinement procedure guarantees the Pareto set to have 10 to 40 well-distributed members. For the DoE characteristics, the number of the initial support points ninit and the additional refinement support points na are chosen as 20, whereas the number of incremental support points with unsatisfied cross-validation accuracy ninita is taken as 10. The number of samples from the target IS density nis is taken as 20, the number of minimum samples Nmin for the stochastic simulation as 1000, and the accuracy threshold \( {\delta}_{th}^{cv} \) as 5%.

The reference solution is obtained by solving problem analytically and in this case corresponds to the actual Pareto front, whereas for the SOCEMO implementation two variants are examined: one (denoted as SOCEMO 502) terminates after 50 evaluations of the actual performance objectives with a IS sample size of 50 (total 2500 expensive model evaluations per optimization), while the other (SOCEMO 1002) terminates after 100 evaluations of the actual performance objectives with a IS sample size of 100 (10,000 expensive evaluations per run).

6.1.2 Results and discussion

Due to the relative simplicity of this example, focus is placed here on the final results only. Behavior across the iterations of the algorithm will be discussed in the next example. Results for a sample run of the proposed algorithm (MODU-AIM) and the two SOCEMO variants are shown in Table 1. The proposed algorithm converged in 4 iterations, requiring a total of 80 evaluations of the system response. This provides a considerable computational efficiency compared to any of the two SOCEMO variants examined. For comparing quality of the obtained solutions, once the Pareto sets are identified, the performance objectives, and corresponding Pareto fronts, are analytically evaluated (to avoid the influence of any errors stemming from the stochastic simulation in this comparison). Figure 3 presents the identified Pareto fronts along with the reference one. Apart from the visual comparison, a quantitative comparison is established using two common metrics for assessing quality of approximate solutions to multi-objective problems (Goh and Tan 2006). The first metric is the generational distance, GD, which compares the identified Pareto front to the actual one:

where np is the number of members in the Pareto set, and \( {d}_r^2 \) is the squared Euclidian distance in the objective space between the rth member and its closes neighbor in the actual Pareto front. Lower values of GD indicate smaller deviations from the reference front and therefore higher quality of the identified solution. The second metric is the maximum spread, MS, which computes the portion of the reference Pareto front that covered by the identified one:

where Hi,max, Hi,min represents the max/min value of the ith objective across the actual Pareto front, and \( \underset{r}{\max}\left[{H}_i\left({\mathbf{x}}_p^r\right)\right],\underset{r}{\min}\left[{H}_i\left({\mathbf{x}}_p^r\right)\right] \) represents the max/min value of that objective across the identified Pareto front. MS is bounded in [0, 1], and higher values for it indicate better coverage (i.e., higher quality identification). Note that MS metric can be implemented by comparing simply to anchor points whereas GD metric requires comparison to the actual Pareto front, or at least a reference discrete front with large number of solutions. This stems from the need to identify the closest neighbor, which assumes a very well populated front to compare to.

The results in Fig. 3 (qualitative, visual comparison) and Table 1 (quantitative comparison) show that the proposed implementation (MODU-AIM) identifies a Pareto front close to the actual one (GD value) and with the same coverage (MS value). In contrast, the Pareto fronts identified by both SOCEMO implementations include non-dominant design configurations (evident in Fig. 3 comparisons) and, in general, identify lower quality Pareto fronts (Table 1 measures). Consideration, additionally, of the difference in the number of evaluations of the performance measures required by the two approaches, 80 for MODU-AIM compared to 2500 or 10,000 by SOCEMO, reveals the advantages offered by the proposed approach: higher computational efficiency and higher identification quality for the Pareto front are accomplished. The double-loop approach employed for implementation of SOCEMO cannot outperform the efficiency offered by the formulation of a surrogate model in the augmented input space. This should not be considered as a deficiency of SOCEMO, rather than a limitation of generalized, nested optimization approaches for design under uncertainty design problems.

Another important question is what are the computational efficiency benefits provided by the iterative scheme discussed in Section 4. In other words, whether the advantages reported for MODU-AIM simply stem from the development of the metamodel in the augmented input space. Exploiting the numerical simplicity of this analytical example considered here, a non-iterative implementation is considered; this is accomplished by considering only the first iteration of the proposed algorithm with a predetermined number of experiments. This number is gradually increased till the same quality (similar values as the ones reported in Table 1) is established for the Pareto front identification. The non-iterative implementation required over 500 evaluations of the performance measures. Comparison to the computational effort required by the iterative implementation, only 80 evaluations, makes it evident that the iterative scheme, as expected, facilitates significant computational benefits.

Finally, the low dimensionality of this analytical example is leveraged to demonstrate the DoE sampling density for the design variables x in Fig. 4. The converged/non-converged parts of the Pareto set, as well as 1000 DoE candidate samples are shown in this figure for the 2nd, 3rd and 4th iterations of the algorithm. One thousand samples, as opposed to the 20 used in the algorithm, are utilized to better illustrate the sampling density characteristics. In earlier iterations, the DoE samples are distributed more evenly across the entire design domain X. This is desirable as discussed earlier, to avoid premature convergence due to locally poor metamodel accuracy. As iterations evolve, design subdomains close to the non-converged parts of the Pareto set are prioritized in the DoE, with samples populating more densely these regions. This facilitates, ultimately, an improvement of the convergence speed in these regions, without an impact on the other, converged already, domains.

6.2 Car suspension design problem

6.2.1 Design problem formulation

The schematic of the half-car model is shown in Fig. 5. A complete description of the numerical model is included in Medina and Taflanidis (2014). For the suspension damper, a passive nonlinear implementation is considered, allowing a different stroke reaction when the suspension is moving downwards as opposed to when moving upward (Verros et al. 2005). The average damping coefficients Cl and the percentage increase of damping rn for upwards movement of the suspension are taken as the design variables. They are allowed to be separately selected for the front and rear dampers (distinguished by subscript f or r), leading to the definition of design variable vector as x = \( {\left[{C}_f^l\kern0.5em {r}_f^n\kern0.5em {C}_r^l\kern0.5em {r}_r^n\right]}^T \). The road surface is modeled as a zero-mean Gaussian stationary stochastic process with power spectral density parameterized by the roughness coefficient, κi. A time-domain realization for this input is obtained by the spectral representation method assuming that the car drives with a constant horizontal velocity vc. Apart from the car length, all other parameters for its model are assumed to be uncertain; this includes the eccentricity between the chassis geometric center and center of mass, ex; the masses for the chassis and front and rear tires, mc, mtf, mtr, respectively; the chassis moment of inertia, Ic; the spring and dashpot coefficient for front and rear tire, Ktf, Ctf, Ktr, Ctr, respectively; and the linear and nonlinear spring coefficients for the front and rear suspension \( {K}_f^l \), \( {K}_f^n \),\( {K}_r^l \), \( {K}_r^n \), respectively. This leads to definition for the uncertain parameter vector θ = [κivcexmcmtfmtrIcKtfCtfKtrCtr\( {K}_f^l \)\( {K}_f^n \)\( {K}_r^l{K}_r^n \)] with nθ = 15. The probability models for θ are discussed in detail in Medina and Taflanidis (2014).

The performance of the suspension system is evaluated assuming that car drives on the rough road for a lengthy stretch, so that response reaches stationary characteristics that can be quantified for design purposes through root mean square (RMS) statistics (Verros et al. 2005). The only source of uncertainty impacting the response of the suspension system is, therefore, the uncertainty associated with the model parameter vector θ (no influence of the stochastic excitation characteristics, since stationary conditions achieved). The competing performance objectives for formulating the multi-objective design problem are related to the ride comfort and road holding, both common objectives for car suspension design (Dahlberg 1978). For the ride comfort the fragility of the RMS vertical acceleration at the center of mass RMSac is used, whereas for the road holding the sum of RMS dynamic forces developed between the ground and tires (RMStf, RMStr) is adopted. These forces are calculated for each tire considering both the spring and dashpot connecting it to the ground, whereas dynamic characteristics pertain to deviation from the static force developed to compensate for the car weight. For the acceleration fragility, log-normal characteristic are assumed (Medina and Taflanidis 2014) with threshold b = 1 m/s2 defining acceptable performance and coefficient of variation σb = 5% for the fragility. This leads to the following performance measures

The response vector is taken to correspond to the log of the RMS acceleration and the RMS tire forces, representing the quantities needed in the performance measures of (27), z = [ln(RMSac) RMStfRMStr]T. The RMS response is evaluated through time-domain numerical simulation (Medina and Taflanidis 2014) to address the various source of nonlinearities included in the car model.

6.2.2 Kriging implementation characteristics

The predictive performance measures are evaluated as

which lead ultimately to the desired analytic expressions for them. The partial derivatives in (34) are

In addition, the linearized prediction variances of (18) are calculated as

6.2.3 Numerical optimization details

The design domains X has upper bounds [4000 Ns/m, 1, 4000 Ns/m, 1], and lower bounds [400 Ns/m, − 1, 400 Ns/m, − 1]. The starting point of the algorithm (first iteration) is set to be x(1) = [500 Ns/m, 0, 500 Ns/m, 0] which correspond to small damper configurations with pure linear component. The pre-specified number of constraints ndp is taken at 10, while the refinement procedure guarantees the Pareto set to have 5 to 20 well-distributed members. Additionally, for the Pareto front refinement, the log of the first objective log (H1(x)) was utilized since the variation of that objective across the Pareto is large (ranging from 0.05 to 20%). This choice facilitates a better representation across the front of small failure probabilities, deemed of importance for the application here. For the DoE characteristics, two values will be examined for the number of the initial support points ninit and the additional refinement support points na, either (a) 500 or (b) 200, whereas the number of incremental support points with unsatisfied cross-validation accuracy ninita is taken as 100. The two different instances for na examined will be referenced as DoE500 and DoE200 herein. The number of samples from the target IS density nis is taken as 200, while the number of minimum samples Nmin for the stochastic simulation as 5000 and the accuracy threshold \( {\delta}_{th}^{cv} \) as 5%.

The reference (benchmark) solution cannot be obtained in this application analytically. Instead, it is approximated by a reference Pareto set \( {\mathbf{X}}_{ref}=\left\{{\mathbf{x}}_{ref}^r,r=1,\dots, {n}_{ref}\right\} \) containing nref = 15 well-distributed solutions. This reference solution is obtained here by solving the original design optimization problem of (2) using the adaptive weighted sum algorithm discussed in Kim and De Weck (2006) to convert the design problem to an unconstrained single-objective optimization, and the efficient global optimization algorithm (EGO) (Jones 2001) to solve the latter. These choices were promoted due to the high computational burden associated with estimation of the performance objectives, though any other appropriate methodology could had been adopted for identifying the reference solution. Like in the SOCEMO implementation, the performance is evaluated through stochastic simulation [i.e., through (3)] utilizing the exact numerical model and exterior sampling. The IS densities for generating the respective sample set were chosen as the ones used in the final iteration of the iterative Kriging-based optimization, described in (20). The number of samples N was selected to be sufficiently large (N = 5000) to guarantee high quality of the identified solutions. This sample sets is denoted as the reference sample set \( {\left\{\boldsymbol{\uptheta} \right\}}_i^{ref},i=1,2 \). To further reduce such computational cost, an initial set of common candidate solutions for EGO was constructed, consisting of 200 design configurations determined by LHS in the whole design domain. The total number of function evaluations to obtain the reference Pareto set is 3,590,000.

For comparing computational efficiency, the SOCEMO implementation is again considered. The variant with only 50 samples for the stochastic simulation provided poor results in this case (significant errors in identifying parts of the Pareto front close to one of the anchor points), so only the implementation SOCEMO 1002 is discussed further. Recall that a single run of SOCEMO 1002 requires 10,000 evaluations of the computational expensive system model.

6.3 Results and discussion

Five runs of the proposed algorithm (MODU-AIM) and one run of the SOCEMO approach are conducted. The proposed implementation converged in 4–8 iterations for the DoE500 implementation and 8–14 iterations for the DoE200. The average computational effort is then 3100 model runs (6 iterations) for DoE500 and 2000 model runs for DoE200 (10 iterations). Upon convergence, the performance functions for all identified Pareto sets are evaluated using the exact numerical model, utilizing the reference sample set \( {\left\{\boldsymbol{\uptheta} \right\}}_i^{ref},i=1,2 \) to facilitate consistent comparisons.

Discussion first focuses on a sample run of the proposed MODU-AIM algorithm. Figure 1 in Section 4.2 depicts the progression of the Pareto front in the second iteration, including the set identified in the first iteration \( {\widehat{H}}_i^{krig(1)}\left({\mathbf{x}}_p^{(1)}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(1)}\right) \), its update using the new metamodel, \( {\widehat{H}}_i^{krig(2)}\left({\mathbf{x}}_p^{(1)}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(2)}\right) \), and the set identified in the second iteration \( {\widehat{H}}_i^{krig(2)}\left({\mathbf{x}}_p^{(2)}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(2)}\right) \). Comparison between \( {\widehat{H}}_i^{krig(2)}\left({\mathbf{x}}_p^{(1)}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(2)}\right) \) and \( {\widehat{H}}_i^{krig(1)}\left({\mathbf{x}}_p^{(1)}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(1)}\right) \) shows the prediction accuracy improvement with the updated metamodel, especially in regions corresponding to smaller values for H1, whereas comparison of \( {\widehat{H}}_i^{krig(2)}\left({\mathbf{x}}_p^{(2)}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(2)}\right) \) to \( {\widehat{H}}_i^{krig(2)}\left({\mathbf{x}}_p^{(1)}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(2)}\right) \) shows the evolution of the identified solutions towards higher quality (i.e., dominant) designs. The bigger discrepancies shown for objective H1 should be attributed to its special characteristics; for certain parts of the front, objective H1 represents events with small likelihood of occurrence, for which an adaptive DoE strategy that target the domains in Θ contributing to failure can provide substantial benefits (Zhang et al. 2016). The proposed framework adaptively adjust to this challenge and improves metamodel accuracy and subsequently the quality of the identified solutions across the iterations, as also shown in Fig. 6 which depicts the evolution of the Pareto front across the different iterations of the algorithm using either [part (a)] the metamodel established at each iteration, i.e., \( {\widehat{H}}_i^{krig(k)}\left({\mathbf{x}}_p^{(k)}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(k)}\right) \) or [part (b)] the exact numerical model \( {\widehat{H}}_i\left({\mathbf{x}}_p^{(k)}|{\left\{\boldsymbol{\uptheta} \right\}}_i^{(k)}\right) \). As the algorithm progresses, discrepancies between the identified fronts gradually decrease and convergence is established with great computational savings, as pointed out earlier. This convergence holds for both the metamodel-based evaluation [part (a)], which corresponds to what the algorithm approximates as behavior of the objective function, as well as for and the exact numerical model based evaluation [part (b)]. Comparison between \( {\widehat{H}}_i^{krig} \) and \( {\widehat{H}}_i \) shows greater differences for small values of objective H1, agreeing with the aforementioned discussion of greater challenges in accurately approximating rare events. Note, though, that the proposed framework does not place any emphasis on agreement between \( {\widehat{H}}_i^{krig} \) and \( {\widehat{H}}_i \), and despite any differences existing in this comparison, it still converges to the correct front (see discussion on Fig. 7 next). This supports the philosophy for targeting convergence to correct solutions by an adaptive iterative scheme, rather than requiring development of accurate metamodels.

The focus moves now to the performance comparisons among all implementations. Results across the five different runs of MODU-AIM are reported in Fig. 7 for either the DoE500 [Fig. 7, part (a)] or DoE200 [Fig. 7, part (b)], and results for SOCEMO are reported in Fig. 8. In all instances, the reference Pareto front is included for comparison. These figures facilitate the visual comparison for the quality of the obtained solution. The results demonstrate good agreement between the reference and the identified solutions in both DoE cases for MODU-AIM. For SOCEMO, a significant portion of the identified Pareto set is non-dominant with respect to the reference solution. This behavior is especially evident for lower values of H1 objective and therefore should be attributed to the inaccurate stochastic simulation because of using 100 only samples, despite the efficient IS densities. This lower accuracy impacts disproportionally domains where H1 has characteristics of a rare event. An evident remedy is to increase N1 for SOCEMO (it was found that a 10-fold increase to N1 = 1000 greatly improves results). However, as discussed previously, this larger value will directly impact computational efficiency. For example, for maintaining the same total computational effort with an increase value of N1, the number of expensive evaluations of the design objectives would proportionally decrease. Beyond the visual comparison the quantitative comparison results are reported in Table 2, adopting similar metrics as in the previous example. In this case, the generational distance GD is no longer meaningful since only a discrete reference Pareto front is available. For this reason, the MS metric is only reported. For the DoE200 and DoE500 the coefficient of variation for MS over the 5 different trials is also reported in parenthesis. The comparison of the results in Figs. 6 and 7 and Table 2 shows that both variants of the proposed scheme, DoE200 and DoE500, outperform SOCEMO. This is established, similar to the previous example, with a remarkable computational efficiency (check also summary results in Table 3 later), with the number of expensive model evaluations not exceeding 4000 for DoE500, and 2800 for DoE200 compared to SOCEMO (10000) or the EGO for obtaining the reference solutions (3,590,000).

Focusing on MODU-AIM, for both DoE500 and DoE200 implementations, some discrepancies with respect to the second objective exist for regions close to the minimum for H1. These trends are anticipated: for most multi-objective problems, high sensitivity exists for the Pareto front close to the anchor points, with small improvements of the primary objective (the one that corresponds to the single-objective minimum) coming at large “sacrifice” for the secondary objective. This feature creates the differences between the approximate and reference solutions and can be resolved, if desired, by further refinement of the optimization around these domains, either by using a smaller value for δanch, or through a second optimization stage focusing explicitly at the anchor points, for example, through the single-objective optimization framework described in Zhang et al. (2016), leveraging existing experiments for the metamodel development. These challenges are compounded for regions close to the anchor point of H1 due to the rare event characteristics. Such challenges are greater for the DoE200 implementation, with DoE500 demonstrating better robustness across the different runs. This should be attributed to the fact that addition of only 200 experiments per iteration might not significantly alter the metamodel across consecutive iterations and therefore might lead to premature convergence. Remedy for this challenge could be to rely on the evaluation of the convergence across multiple iterations, i.e., not comparing only kth to k-1st iterations. Nevertheless, the overall Pareto front identified in all DoE implementations shows good agreement with the reference solution.

Finally, the discussion moves to the effect on different choices of na (the number of refinement experiments), based on the overall optimization efficiency and accuracy for DoE500 and DoE200. The following metrics are used for evaluating the performance across the different runs: (i) computational efficiency metrics, evaluated by the average (\( {N}_{avg}^{tot} \)) or worst-case (\( {N}_{wc}^{tot} \)) total numbers of calls to the exact system model, and (ii) accuracy metrics, evaluated by the average (\( {\mathrm{Err}}_{i, avg}^{ref} \)), and worst-case (\( {\mathrm{Err}}_{i, wc}^{ref} \)) error rate of ith performance function. The latter calculates the average relative absolute error of the metamodel across the entire front [i.e., comparing parts (a) and (b) of Fig. 6] and is given by

Lower error rate indicates higher accuracy over the critical regions in multi-objective optimization, namely the true Pareto set. In all instances, average results correspond to the mean over the five runs, whereas worst case corresponds to the least favorable performance over these five runs.

The results in Table 3 stress the computational efficiency of the proposed approach as the convergence to the Pareto front is accomplished with small computational effort, not exceeding 4000 model evaluations in either case. The DoE500 case takes extra computational effort (compare \( {N}_{tot}^{avg} \) and \( {N}_{wc}^{tot} \)), but also leads to higher quality results (compare \( {\mathrm{Err}}_{i, avg}^{ref} \) and \( {\mathrm{Err}}_{i, wc}^{ref} \)), which can provide greater robustness in identifying better quality solutions as discussed earlier. Further focusing on the predicted performance across the identified front, described through \( {\mathrm{Err}}_i^{ref} \), low error rate is reported for the first performance function (not higher than 0.114) and an almost vanishing error rate (not higher than 0.016) for the second one. The higher error rate of the first objective function is in line with the aforementioned trends. It is also interesting to compare this error across the iterations of the optimization algorithm. For the sample run, illustrated in Fig. 6 earlier, the evolution of Err across iterations for objective 1 (i = 1) from 1st iteration to 5th iteration is [0.798, 0.493, 0.194, 0.137, 0.088] and for objective 2 (i = 2) is [0.027, 0.014, 0.013, 0.016, 0.010]. Evidently, the proposed adaptive iterative scheme facilitates a gradual improvement of the metamodel accuracy over the domains of importance, which, in turn, allows the identification of higher quality solutions as demonstrated in Fig. 6.

Overall, the results in the figures and tables demonstrate the benefits from the proposed iterative scheme for adaptively adjusting metamodel accuracy: the algorithm gradually converges and identifies problematic regions for improving metamodel accuracy, while it demonstrates a very good agreement with reference solutions with great computational savings. Selection of smaller number of experiments to add per iteration leads to greater computational efficiency, but at the potential expense of robustness in identifying the part of Pareto front closer to the anchor points. This can be resolved through a second optimization refinement as discussed earlier.

7 Conclusions