Abstract

This contribution presents a computationally efficient method for reliability-based topology optimization of continuum domains under material property uncertainty. The Young’s modulus of the material is assumed to be lognormally distributed and correlated within the domain. Computational efficiency is achieved by estimating the response statistics with second-order stochastic perturbation, using these statistics to fit an appropriate distribution that follows the empirical distribution of the response, and employing an efficient gradient-based optimizer. Two widely studied topology optimization problems are examined, and the changes in the optimized topology are discussed for various levels of target reliability and correlation strength. The accuracy of the proposed algorithm is verified using Monte Carlo simulation.

1 Introduction

Topology optimization is a systematic and general purpose computational tool for designing high-performance structures. The technique is different from shape optimization methods in that it allows for the introduction and removal of structural features by changing the layout of material within the design domain through variations in both its boundary and its connectivity. While the power of topology optimization has been demonstrated in the literature, deterministic conditions have often been assumed. Real-world applications, however, possess sources of uncertainty that exist either due to deviations in the built structure from the intended design or because the operating conditions are not predictable with full certainty. Such uncertainties, if overlooked, may lead to designs that are sub-optimal under real-world engineering conditions. In recent years attempts that aim at incorporating uncertainties into shape, size and topology optimization frameworks have emerged (Schuëller and Jensen 2008; Maute 2014). This incorporation of uncertainties into the optimization process leads to probabilistic design schemes where the structural response becomes a stochastic quantity. Among possible sources of uncertainty those that lead to stochastic structural stiffness are computationally burdensome to treat. The challenge here is that the displacements, indicating structural behavior, become a function of the inverse of a stochastic matrix. Most of the research in this direction has focused on robust topology optimization (RTO) under uncertainty (Guest and Igusa 2008; Chen et al. 2010; Asadpoure et al. 2010, 2011; Jalalpour et al. 2011; Schevenels et al. 2011; Lazarov et al. 2012a, b; Tootkaboni et al. 2012; Jang et al. 2012; Jansen et al. 2013). RTO is achieved, often, through controlling a combination of expected value and an arbitrary factor of standard deviation of the response. The higher this factor, the less variability is expected in the final design performance. 
This, however, comes at the expense of diverging from the “mean” optimized design, since the designs that constitute the Pareto front do not usually coincide with the “utopia” point in the design space. While RTO has been shown to achieve manufacturing-tolerant designs, the measure of robustness remains somewhat qualitative and undefined (how many standard deviations should one include in the objective function?).

An alternative path to having a probabilistic measure of the design performance is to include structural reliability, which has, at its core, the probability of failure. Including reliability in topology optimization algorithms leads to reliability-based topology optimization (RBTO) (Maute and Frangopol 2003; Jung and Cho 2004; Kharmanda et al. 2004; Kang et al. 2004; Wang et al. 2006; Nguyen et al. 2011; Rozvany and Maute 2011). The first- and second-order reliability methods (FORM and SORM) have been shown to be accurate approaches to estimate the probability of failure (Rackwitz and Flessler 1978; Der Kiureghian et al. 1987; Ditlevsen and Madsen 1996; Zhao and Ono 2001; Xu and Cheng 2003; Rackwitz 2001). FORM and SORM, however, require an optimization algorithm to locate the most probable point (MPP) for accurate estimates of reliability. RBTO therefore poses the following challenges: (1) prohibitive computational cost, as it essentially results in a double-loop optimization where the inner loop estimates structural reliability and the outer loop conducts topology optimization, and (2) convergence issues which may be encountered when the search for the most probable point is performed (Enevoldsen and Sørensen 1994; Tu et al. 1999). The latter is more pronounced for cases where the number of basic variables is large (see Rackwitz (2001) and Schuëller et al. (2004) for a detailed review), a situation often faced in topology optimization under stochastic stiffness. Regardless, the double-loop approach has been applied to topology optimization problems with uncertainty in loading, boundary conditions and non-structural mass and has been shown to produce designs that are tangibly different from deterministic designs (Maute and Frangopol 2003; Mogami et al. 2006).

Making RBTO practical for large-scale problems has generated significant interest among researchers in recent years. The challenge here is to achieve an acceptable estimate of reliability for low probabilities of failure while keeping the computations tractable, i.e. eliminating crude Monte Carlo simulations that are not amenable to use in situations involving a large number of (design) iterations. Attempts in this direction can be generally classified into: (1) turning the nested (two-level) reliability-based optimization into a uni-level formulation, and (2) simulation techniques that leverage regression, machine learning or special sampling methods to estimate structural reliability faster with acceptable accuracy. Of these two, the former is achieved by either replacing the inner loop by its corresponding first-order Karush–Kuhn–Tucker (KKT) necessary optimality conditions at the upper-level optimization loop or using these optimality conditions to form schemes that update the design variables and the point in the stochastic space simultaneously. The main idea here is that, because the optimization is typically solved by numerically satisfying the KKT conditions, such strategies are computationally equivalent to solving the original double-loop optimization problem (Kuschel and Rackwitz 2000; Kharmanda et al. 2002; Liang et al. 2004; Agarwal 2004; Agarwal et al. 2007). Successful applications of this idea or its variants to component and system RBTO problems with few random variables representing uncertainty in the system have been reported in the literature. Silva et al. (2010), for example, used a variant of a single-loop method proposed by Liang et al. (2004) to perform component and system RBTO under loading uncertainty. Kogiso et al. (2010) used a modification of single-loop single-vector (SLSV) (Chen et al. 1997) to perform RBTO of frame structures under uncertainty in loads and non-structural mass. Nguyen et al.
(2011) extended the single-loop method proposed by Liang et al. (2004) for system reliability-based topology optimization under statistical dependence between limit-states. They increased the accuracy via a SORM-based formulation and facilitated the system reliability calculations using matrix based system reliability (MSR) analysis. They also reduced the computational cost (in a deterministic sense) by using multi-resolution topology optimization where they use different meshes for perceiving the design variables, density and finite elements. As for the other category, i.e. using surrogate models, machine learning techniques or special sampling methodologies, promising approaches that reduce the computational cost dramatically have recently emerged (Youn and Choi 2004; Agarwal and Renaud 2004; Kim and Choi 2008; Taflanidis and Beck 2008; Basudhar and Missoum 2008; Valdebenito and Schuëller 2011). Examples of applying these techniques to topology optimization problems, however, have not yet appeared in the literature. This is likely attributed to the nature of topology optimization problems where the number of design variables is large and significant topology changes between two consecutive design iterations may appear. The large number of the random variables in cases where uncertainty in the system is represented, for example, via a lightly correlated random field adds to the challenge. Nevertheless, Patel and Choi (2012) have recently applied neural network classification to reliability-based topology optimization of truss structures, showing that their proposed methodology is also applicable to problems with disjoint failure (or safe) sets (e.g. buckling limit-states). We refer the reader to Valdebenito and Schuëller (2010) for a review on the state-of-the-art techniques for reliability-based design.

A quick look at the above body of work shows that an RBTO methodology that tightly couples modeling of material uncertainty with topology optimization is absent from the literature. In this work, a computationally efficient RBTO algorithm that accounts for uncertainty in Young’s modulus is proposed. It does not suffer from many of the shortcomings discussed above, such as the problems faced when searching for the MPP in a high-dimensional space. The algorithm is based on our numerical observation that, when Young’s modulus is modeled via a random field with known marginal distribution (lognormal in this work), the distribution of the displacement-based response does not change much (to a reasonable degree of accuracy) from one design iteration to another throughout the optimization process. The sensitivity of a measure of reliability (such as the Reliability Index) can then be written in terms of the sensitivities of the parameters of this distribution, which can be calculated via efficient techniques such as stochastic perturbation. Having calculated the sensitivities, gradient-based optimizers are then the natural choice to further reduce the computational cost. The algorithm is tested on two widely studied problems in the topology optimization literature, and is shown to lead to designs that meet target reliabilities. Monte Carlo simulation is used to verify the accuracy of the results.

2 Deterministic topology optimization

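Density-based deterministic topology optimization can be formulated in the following form:

\[ \begin{array}{ll} \min\limits_{\boldsymbol{\rho}} & V = \boldsymbol{\nu}^{T}\boldsymbol{\rho} \\ \text{subject to} & \mathbf{K}_{0}(\boldsymbol{\rho})\,\mathbf{d}_{0} = \mathbf{f} \\ & \mathbf{l}_{i}^{T}\mathbf{d}_{0} \le c^{\ast}_{i} \\ & \mathbf{0} \le \boldsymbol{\rho} \le \mathbf{1} \end{array} \qquad (1) \]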

Throughout this work we follow standard notation, where bold upper- and lower-case letters denote matrices and vectors, respectively. In (1) the design variables are finite-element relative volumes stored in the vector \(\boldsymbol{\rho}\), \(\boldsymbol{\nu}\) is the vector of finite-element volumes for a unit density, and \(V\), the objective function, is the total volume. The stiffness matrix of the deterministic structure is denoted by \(\mathbf{K}_{0}\), \(\mathbf{d}_{0}\) is the vector of displacements under the applied loads \(\mathbf{f}\), \(\mathbf{l}_{i}\) is a unit vector associated with the \(i\)th degree of freedom, and \(c^{\ast}_{i}\) is the deflection constraint at this degree of freedom. The first constraint enforces the equilibrium condition, the second restricts the displacement in the degrees of freedom of interest, and the third is to be interpreted componentwise, as is often the case for inequalities involving matrices or vectors. For example, under a specific deflection constraint, \(c^{\ast}\) represents the maximum allowable deflection at degree of freedom \(i\) and \(c\) represents the corresponding actual deflection. It is, however, common practice in structural topology optimization to set \(\mathbf{l}_{i} = \mathbf{f}\), in which case the product \(\mathbf{f}^{T}\mathbf{d}\) becomes the compliance (Bendsoe and Sigmund 2004). Compliance is proportional to external work and is a proxy for structural stiffness. To motivate black-and-white solutions, the modified SIMP approach as described by Sigmund (2007) is used, where the Young’s modulus of each finite element is expressed as \(\epsilon(\rho) = \epsilon_{min} + \rho^{p}(\epsilon_{0} - \epsilon_{min})\). Here \(p\) is the SIMP penalization exponent (Bendsøe 1989; Zhou and Rozvany 1991; Mlejnek 1992), \(\epsilon\) denotes the member Young’s modulus, and \(\epsilon_{min}\) is set to a small number to avoid singularity of the stiffness matrices. In the following we discuss the changes needed to the above problem definition to achieve optimized topologies that meet reliability constraints under stochastic stiffness.
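The modified SIMP interpolation described above can be illustrated with a minimal sketch. The function name `simp_modulus` and the default `e_min = 1e-9` are assumptions made for illustration (the text only says that the minimum modulus is "a small number"); `p = 3` and a unit solid modulus match the values used later in the numerical examples.

```python
def simp_modulus(rho, e0=1.0, e_min=1e-9, p=3.0):
    """Modified SIMP interpolation: e(rho) = e_min + rho**p * (e0 - e_min).

    rho   : relative density of one element, in [0, 1]
    e0    : Young's modulus of solid material
    e_min : small floor value that keeps the stiffness matrix non-singular
            (the value 1e-9 is an assumption, not taken from the text)
    p     : SIMP penalization exponent
    """
    if not 0.0 <= rho <= 1.0:
        raise ValueError("relative density must lie in [0, 1]")
    return e_min + rho ** p * (e0 - e_min)


# Intermediate densities are penalized: half the material gives far less
# than half the stiffness, which pushes the optimizer toward 0/1 designs.
for rho in (0.0, 0.5, 1.0):
    print(rho, simp_modulus(rho))
```

With `p > 1` the stiffness-to-volume ratio of intermediate densities is poor, which is what motivates black-and-white solutions.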

3 Reliability-based topology optimization

3.1 Adding structural reliability as a constraint

The focus of this work is to obtain topologies that meet target probability-of-failure constraints under variability in the material’s Young’s modulus. To assess structural reliability, structural performance has to be measured in terms of a load effect (stress, deflection, etc.), and a limit-state or performance function has to be defined. In this work we define the limit-state function in terms of the compliance as:

\[ g(\mathbf{x}, \boldsymbol{\rho}) = c^{\ast} - c(\mathbf{x}, \boldsymbol{\rho}) \qquad (2) \]

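where \(\mathbf{x}\) is a vector that collects all uncertainties (the Young’s moduli of the elements), \(c^{\ast}\) is a predetermined structural capacity, and \(c(\mathbf{x}, \boldsymbol{\rho})\) is the compliance, which is now a random variable. Therefore, \(g(\mathbf{x}, \boldsymbol{\rho}) < 0\) denotes failure, and our goal is to achieve designs with probabilities of failure lower than a prescribed value (target reliability). The deterministic topology optimization problem defined in (1) is thus turned into the following problem:

\[ \begin{array}{ll} \min\limits_{\boldsymbol{\rho}} & V = \boldsymbol{\nu}^{T}\boldsymbol{\rho} \\ \text{subject to} & \mathbf{K}(\mathbf{x}, \boldsymbol{\rho})\,\mathbf{d}(\mathbf{x}, \boldsymbol{\rho}) = \mathbf{f} \\ & P_{f} = P\left[g(\mathbf{x}, \boldsymbol{\rho}) < 0\right] \le P_{t} \\ & \mathbf{0} \le \boldsymbol{\rho} \le \mathbf{1} \end{array} \qquad (3) \]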

where \(P_{f}\) denotes the probability of failure and \(P_{t}\) denotes the target probability. It is noted that the deterministic constraints in (1) have been replaced with two stochastic constraints, one involving the stochastic equilibrium and the other the probability of failure. In what follows we explain our strategies for handling these two constraints. While perturbation will be used to replace the stochastic equilibrium with equivalent deterministic equations, the constraint on the probability of failure will be handled through the Reliability Index Approach (RIA) (Tu et al. 1999); see Section 4.

3.2 Response under stochastic stiffness

When uncertainty is introduced into structural stiffness, for example in the form of material property uncertainties, obtaining the structural response is more challenging than when there is uncertainty in loading. This is because the response (the displacement field or a quantity that depends on it, such as compliance) is a function of the inverse of a stochastic matrix. In this work, we adopt the perturbation technique (Hisada and Nakagiri 1981; Liu et al. 1986; Kleiber and Hien 1992) to calculate the response statistics. The idea has recently been used in the context of topology optimization under uncertainty (Guest and Igusa 2008; Asadpoure et al. 2011; Jalalpour et al. 2011) and, in its most basic form, is based on writing response quantities as Taylor series in the vector of uncertain quantities. We begin by expressing the randomness as:

where \(\mathbf{x}\) is the vector of uncertain Young’s moduli for all finite elements (\(\delta x_{i}\)), \(\mathbf{e}_{i}\) is the unit vector associated with each finite element, and we have used standard indicial notation with a repeated index implying summation. Omitting the dependence on the vector of design variables \(\boldsymbol{\rho}\), the equilibrium equation is written as:

\[ \mathbf{K}(\mathbf{x})\,\mathbf{d}(\mathbf{x}) = \mathbf{f} \qquad (5) \]

where the applied loads \(\mathbf{f}\) are assumed deterministic, and it is observed that the stochastic variability in structural stiffness has made the displacement vector a random vector. Assuming the uncertainty in the Young’s modulus of the elements (the variability around the mean of the modulus) is small, the perturbation technique writes the dependence of a response quantity on the uncertain variables as a series expansion involving derivatives of the stiffness matrix evaluated at the mean value of the input uncertainties, deterministic quantities obtained by solving a deterministic set of equations, and the uncertain variables themselves (Asadpoure et al. 2011; Jalalpour et al. 2011). The procedure enables the compliance of any structure with stochastic stiffness to be written in the following form:

In the above equation \(\mathbf{K}_{0}\) and \(\mathbf{K}_{0,i}\) denote the stiffness matrix and its derivative with respect to the \(i\)th basic variable, evaluated at the mean value of the vector of uncertain parameters, and \(\mathbf{d}_{0}\) is the solution to the deterministic (mean) equilibrium problem. Observe that \(c\) is now written in terms of deterministic matrices, which are straightforward to compute (see Guest and Igusa (2008) and Asadpoure et al. (2011) for details). To rewrite the above in a more compact form, let us define \(\mathbf{U}\) as a dimensionless matrix collecting normalized structural displacements under a system of equivalent normalized loads (Jalalpour et al. 2011) as follows:

\[ \mathbf{U} = \mathbf{K}_{0}^{-1}\mathbf{F} \qquad (7) \]

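The system of equivalent normalized loads \(\mathbf{F}\) is expressed in terms of the first derivative of the global stiffness matrix as:

\[ \mathbf{F} = \mathbf{K}_{0,i}\,\mathbf{d}_{0}\,\mathbf{e}_{i}^{T} \qquad (8) \]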

Substituting (7) into (6) yields:

Equation (9) is general and can be used to determine the compliance in terms of the vector of uncertain parameters for any source of uncertainty and for all structural systems that behave in the linear elastic regime.

3.3 Response statistics

Equations for estimating the expected value and standard deviation of compliance under stochastic stiffness using perturbation have previously been derived in Guest and Igusa (2008), Asadpoure et al. (2011) and Jalalpour et al. (2011). Following Jalalpour et al. (2011), we can write the expected value of compliance in (9) as:

where \(\mathbf{C}\) is the covariance matrix of the basic random variables, and \(\mathrm{tr}\{\cdot\}\) is the trace operator. The variance of the compliance, obtained using a first-order estimate of the compliance \(c\), can be expressed as (Asadpoure et al. 2011; Jalalpour et al. 2013):

For the numerical problems considered in this work, we observed that the truncated equation above provides good estimates for the variance of compliance. Equations (10) and (11) are general and do not depend on the distribution of the basic variables. It is also noted that this matrix notation does not incur any additional computational cost for correlated basic variables in comparison to the uncorrelated case. These two estimated moments will be used to fit an appropriate lognormal distribution to the response, as discussed in Section 4.
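To make the moment estimates concrete, the following is a minimal sketch of a perturbation-based mean and variance of compliance. It assumes that the stiffness matrix depends linearly on zero-mean perturbations of the basic variables (as is the case for Young’s modulus) and uses the Neumann-series expansion of the inverse stiffness matrix truncated at second order; the resulting expressions may differ in form from (10) and (11). All function names are hypothetical, and a small pure-Python solver stands in for a sparse factorization.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def matvec(A, v):
    return [sum(a * b for a, b in zip(row, v)) for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def compliance_moments(K0, dK, f, C):
    """Second-order mean and first-order variance of c = f^T K(x)^-1 f.

    K0 : mean stiffness matrix (symmetric)
    dK : list of derivative matrices K_{0,i}, one per random variable
    f  : deterministic load vector
    C  : covariance matrix of the zero-mean perturbations
    """
    n = len(dK)
    d0 = solve(K0, f)                        # mean displacements
    F = [matvec(dKi, d0) for dKi in dK]      # pseudo-loads, F[i] = K_{0,i} d0
    U = [solve(K0, Fi) for Fi in F]          # U[i] = K0^-1 F[i]
    grad = [-dot(d0, F[i]) for i in range(n)]  # first-order coefficients
    # Second-order term: E[dx^T (F^T U) dx] = tr(F^T U C)
    mean = dot(f, d0) + sum(
        dot(F[i], U[j]) * C[j][i] for i in range(n) for j in range(n)
    )
    var = sum(grad[i] * C[i][j] * grad[j] for i in range(n) for j in range(n))
    return mean, var


# Two unit springs in series, loaded at the free end. The exact compliance
# is 1/k1 + 1/k2, so the estimates are easy to check by hand.
sigma = 0.05
K0 = [[2.0, -1.0], [-1.0, 1.0]]
dK = [[[1.0, 0.0], [0.0, 0.0]], [[1.0, -1.0], [-1.0, 1.0]]]
f = [0.0, 1.0]
C = [[sigma ** 2, 0.0], [0.0, sigma ** 2]]
print(compliance_moments(K0, dK, f, C))
```

Note that the covariance matrix enters only through small dense products, so a correlated field costs no extra linear solves compared with an uncorrelated one.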

4 Reliability index based formulation and solution algorithm

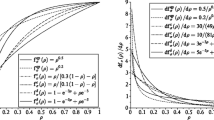

It was mentioned before that our numerical observations indicate that when the Young’s modulus of the elements is modeled as a marginally lognormal random field, the right tail of the distribution of compliance closely follows that of a lognormal distribution throughout the optimization process, that is, \(c \sim \log N(l, s)\) with \(l\) and \(s\) the parameters of the lognormal distribution. We note that the distribution of the response is (at the very least) a function of both the distribution and the correlation structure of the input uncertainties. We furthermore note that the probabilistic properties of the response, being a function of the inverse of the stochastic stiffness matrix, are influenced by both what the input uncertainties represent (e.g. nodal locations vs material properties) and how the global stiffness matrix is formed from the assembly of the element stiffness matrices (e.g. truss elements vs continuum elements). For instance, while in the authors’ previous paper (Jalalpour et al. 2013) the response was found to follow a Gumbel distribution for truss structures under uncorrelated normally distributed node location uncertainty, in the present work (a continuum structure under correlated lognormally distributed material uncertainties) the response follows a lognormal distribution. To find the appropriate distribution, we drew upon the work of Liu and Der Kiureghian (1986), which lists the most widely used two-parameter distributions for structural reliability analysis (normal, logistic, Gumbel, Weibull, and lognormal). We examined the tail of the distribution of the response against these distributions and observed that the lognormal distribution provides, by far, the closest fit to the distribution of compliance. We calculate the parameters of the lognormal distribution employing the method of moments, which in essence means using the following two equations:

\[ s^{2} = \ln\left(1 + \frac{\sigma^{2}_{c}}{\mu_{c}^{2}}\right) \qquad (12) \]

\[ l = \ln \mu_{c} - \frac{s^{2}}{2} \qquad (13) \]

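where \(\mu_{c}\) and \(\sigma^{2}_{c}\) are given in (10) and (11), respectively. Omitting the dependence on the design variables \(\boldsymbol{\rho}\), the probability of failure can be written as:

\[ P_{f} = P\left[c > c^{\ast}\right] = 1 - F(c^{\ast}) \qquad (14) \]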

with \(F\) denoting the cumulative distribution function (CDF). Now, following Ditlevsen (1979), the (generalized) Reliability Index is defined as \(\beta = \Phi^{-1}(1 - P_{f})\), resulting in:

\[ \beta = \frac{\ln c^{\ast} - l}{s} \qquad (15) \]

Adopting RIA (Tu et al. 1999), the second constraint in (3) is written as a target Reliability Index constraint with \(\beta \ge \beta_{t}\), or:

\[ \frac{\ln c^{\ast} - l}{s} \ge \beta_{t} \qquad (16) \]

Therefore, the reliability-based topology optimization problem can be expressed, similar to Jalalpour et al. (2013), as follows:

The major changes with respect to (3) are replacing the constraint on the probability of failure by a constraint on the Reliability Index through choosing a target index \(\beta_{t}\), and replacing the stochastic equilibrium with two deterministic equations, where the dependence on the vector of uncertain variables is transformed into a “mean” linear equation plus another equation involving normalized load cases collected in the matrix \(\mathbf{F}\). It is noted that, unlike the right-hand side of the first constraint, the right-hand side of the second constraint depends on the vector of design variables \(\boldsymbol{\rho}\). It is also noted that the second constraint is essentially a single linear system with multiple right-hand sides.
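The distribution fit and Reliability Index evaluation described in this section can be sketched as follows. This is an illustration under the stated lognormal assumption, not the authors’ implementation: the function names are hypothetical, and the mean and standard deviation in the usage example are made-up values (only the capacity 192 is taken from the MBB example in Section 5).

```python
import math


def fit_lognormal(mean, variance):
    """Method-of-moments fit: return (l, s) such that ln(c) ~ N(l, s^2)."""
    s2 = math.log(1.0 + variance / mean ** 2)
    return math.log(mean) - 0.5 * s2, math.sqrt(s2)


def reliability_index(capacity, l, s):
    """beta = Phi^-1(1 - P_f) for a lognormal response and failure c > capacity."""
    return (math.log(capacity) - l) / s


def failure_probability(beta):
    """P_f = 1 - Phi(beta), written with the complementary error function."""
    return 0.5 * math.erfc(beta / math.sqrt(2.0))


# Hypothetical compliance statistics (mean 150, standard deviation 15).
l, s = fit_lognormal(mean=150.0, variance=15.0 ** 2)
beta = reliability_index(192.0, l, s)
print("beta =", beta, "P_f =", failure_probability(beta))

# The reliability constraint is then simply beta >= beta_t.
beta_t = 2.0  # hypothetical target
print("constraint satisfied:", beta >= beta_t)
```

Because the Reliability Index has a closed form in the two fitted parameters, no inner search for a most probable point is needed at any design iteration.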

4.1 Sensitivities with respect to design variables

Because of the large number of design variables in density-based topology optimization, it is desirable to use gradient-based schemes (Sigmund 2011). Using (16) as the reliability constraint, the sensitivity of the constraint with respect to design variables now reads:

with primes denoting derivatives with respect to the design variables (\(\rho_{e}\)). Hence, we need the sensitivities of \(l\) and \(s\), which we write in terms of the sensitivities of the response statistics given in the preceding section as:

It can be shown (Asadpoure et al. 2010; Jalalpour et al. 2013) that sensitivities of expected value and variance are of the following forms:

To avoid computing the inverse of the stiffness matrix in the sensitivity calculations we take the following steps. We consider the last term in (21), and rewrite it as:

The same argument holds for the last term in (22):

Therefore, we require the solution to the following linear problems:

The solutions to these subproblems can be obtained via a single fast Cholesky factorization of the matrix \(\mathbf{K}_{0}\). We therefore, in place of the last terms in (23) and (24), compute:

As every matrix in the finite-element method is an assembly of the elemental level matrices, we perform all the numerical computations at elemental level and assemble the final results. Having calculated the sensitivities of the response statistics with respect to design variables one then proceeds with calculating the sensitivity of the constraint involving the Reliability Index. These sensitivities, together with the sensitivity of other constraints and the objective function are then fed into an efficient gradient-based optimizer to search for the optimized topology.
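As an illustration of the chain rule involved, the sketch below propagates given derivatives of the compliance mean and variance (with respect to a single design variable) to the derivative of the Reliability Index, assuming the method-of-moments lognormal fit and \(\beta = (\ln c^{\ast} - l)/s\). The function name and the numbers in the usage example are hypothetical; in practice the moment derivatives would come from the adjoint-type solves described above.

```python
import math


def reliability_index_gradient(capacity, mean, variance, d_mean, d_variance):
    """Return (beta, d_beta) for one design variable.

    mean, variance     : estimated moments of the compliance
    d_mean, d_variance : their derivatives w.r.t. the design variable
    """
    s2 = math.log(1.0 + variance / mean ** 2)
    s = math.sqrt(s2)
    l = math.log(mean) - 0.5 * s2
    beta = (math.log(capacity) - l) / s

    # d(s^2) from s^2 = ln(1 + variance / mean^2)
    d_s2 = (mean * d_variance - 2.0 * variance * d_mean) / (
        mean * (mean ** 2 + variance)
    )
    d_s = d_s2 / (2.0 * s)
    # d(l) from l = ln(mean) - s^2 / 2
    d_l = d_mean / mean - 0.5 * d_s2
    # d(beta) from beta = (ln c* - l) / s
    d_beta = -(d_l + beta * d_s) / s
    return beta, d_beta


# Hypothetical numbers: adding material lowers both moments of compliance,
# which should raise the Reliability Index (positive d_beta).
print(reliability_index_gradient(192.0, 150.0, 225.0, d_mean=-3.0, d_variance=-8.0))
```

A quick finite-difference comparison on the moments is a cheap way to validate such an implementation before handing the gradients to the optimizer.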

4.2 Solution algorithm

Putting all the preceding discussions together, we can now summarize the RBTO algorithm as follows:

1. Choose a capacity \(c^{\ast}\) and a required target reliability \(\beta_{t}\).
2. Start with an initial guess for the vector of design variables (\(\boldsymbol{\rho}\)).
3. Solve the deterministic equation \(\mathbf{f} = \mathbf{K}_{0}\mathbf{d}_{0}\).
4. Assemble the pseudo-force matrix \(\mathbf {F}=\mathbf {K}_{0,i}\mathbf {d}_{0}\mathbf {e}_{i}^{T}\), and solve \(\mathbf{F} = \mathbf{K}_{0}\mathbf{U}\) for \(\mathbf{U}\), the matrix of normalized displacements.
5. Evaluate the response expected value and variance using (10) and (11).
6. Use (12) and (13) to fit the parameters of the lognormal distribution for compliance.
7. Evaluate the objective function \(V = \boldsymbol{\nu}^{T}\boldsymbol{\rho}\) and the constraint on the Reliability Index using (16).
8. Compute the gradient of the objective function (\(\boldsymbol{\nu}^{T}\)) and the gradient of the Reliability Index constraint using (18)–(20) with the help of (21)–(22), and update the design variables using a gradient-based optimizer.
9. If not converged, go to step 3.

It is noted that the number of operations needed to calculate the reliability in the perturbation-based approach adopted in this work is on the order of \(n_{dof}^{3}/3+n_{rv}\times n_{dof}^{2}\), with \(n_{rv}\) the number of random variables and \(n_{dof}\) the number of degrees of freedom. In contrast, a Monte Carlo-based approach would require \(n_{dof}^{3}/3\times n_{mcs}\) operations (with \(n_{mcs}\) the number of Monte Carlo simulations), suggesting a clear advantage for the perturbation-based approach. This does not consider the cost associated with computing sensitivities which, if taken into account, would further favor the perturbation approach from a computational cost point of view. It is also noted that, in comparison with algorithms for robust topology optimization (RTO) under stochastic stiffness, the only change here is in step 6. However, because this step uses estimated statistical moments that have to be computed for RTO anyway, the increase in computational cost is minimal.
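The two operation counts quoted above can be compared with a few lines of code. The function names are hypothetical, and the problem size in the usage example is only indicative: it assumes a 150 by 50 mesh of 4-node elements with two degrees of freedom per node, one random variable per element, and 50000 Monte Carlo samples.

```python
def perturbation_cost(n_dof, n_rv):
    """Operation count n_dof^3/3 + n_rv * n_dof^2 quoted in the text."""
    return n_dof ** 3 / 3 + n_rv * n_dof ** 2


def monte_carlo_cost(n_dof, n_mcs):
    """Operation count (n_dof^3/3) * n_mcs quoted in the text."""
    return n_dof ** 3 / 3 * n_mcs


# Indicative sizes (assumptions for illustration only).
nelx, nely = 150, 50
n_rv = nelx * nely                      # one random modulus per element
n_dof = 2 * (nelx + 1) * (nely + 1)     # two displacements per node
n_mcs = 50000

ratio = monte_carlo_cost(n_dof, n_mcs) / perturbation_cost(n_dof, n_rv)
print("Monte Carlo / perturbation cost ratio:", ratio)
```

These are dense-algebra estimates taken directly from the expressions in the text; sparse solvers change the absolute numbers but not the fact that the Monte Carlo cost scales with the number of samples.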

5 Results

We test the proposed algorithm on two widely studied problems in the literature. Consistent units are used throughout, hence all magnitudes are presented as unitless. Numerical implementation is achieved by taking the efficient and publicly available code by Andreassen et al. (2011) and adding modules for uncertainty analysis. The gradient-based optimizer used here is the Method of Moving Asymptotes (MMA), kindly provided by Svanberg (1987). MMA solves a sequence of convex approximations to the original problem. It is known to be efficient for density-based topology optimization problems, provided the number of active constraints is small. The tolerance for MMA is chosen as 0.001%. All numerical problems are solved for \(\epsilon_{0} = 1\), and use plane-stress 4-node quadrilateral elements to discretize the domain. All designs begin with a uniform material distribution, and use the Heaviside projection method (HPM) (Guest et al. 2004) as implemented by Andreassen et al. (2011). HPM is typically conducted with a continuation scheme on the regularization parameter. However, we follow an approach proposed by Guest et al. (2011) and use a constant HPM regularization parameter of 16. We also use the SIMP method with \(p = 3\) to motivate black-and-white solutions. Material uncertainty in Young’s modulus is modeled with a marginally lognormal random field. The covariance structure is assumed to be exponentially decaying and of the following form:

where \(\sigma_{ij}\) is the covariance between elements \(i\) and \(j\), \(\sigma_{ii} = \sigma_{jj}\) is the variance, \(\bar {d}_{i}\) is the coordinate of the center of element \(i\), and \(L_{exp}\) is a measure of correlation within the random field. The larger the correlation length, the stronger the correlation. Different correlation lengths are considered and the RBTO problem is solved for a range of target reliability indices.
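A covariance matrix of this kind can be assembled as in the sketch below. The exact functional form is an assumption made for illustration: the covariance is taken as the variance times \(\exp(-\lVert \bar{d}_{i} - \bar{d}_{j} \rVert / L_{exp})\) with Euclidean distance between element centers. The function name and the small grid in the usage example are hypothetical.

```python
import math


def exponential_covariance(centers, sigma, l_exp):
    """Covariance matrix with entries sigma^2 * exp(-|d_i - d_j| / l_exp).

    centers : list of (x, y) element-center coordinates
    sigma   : standard deviation of Young's modulus (same for all elements)
    l_exp   : correlation length
    The exponential-of-distance form is an assumed instance of an
    "exponentially decaying" covariance structure.
    """
    n = len(centers)
    cov = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            dist = math.dist(centers[i], centers[j])
            cov[i][j] = sigma ** 2 * math.exp(-dist / l_exp)
    return cov


# Centers of a 3 x 2 grid of unit square elements (illustrative only).
centers = [(ix + 0.5, iy + 0.5) for iy in range(2) for ix in range(3)]
weak = exponential_covariance(centers, sigma=0.15, l_exp=0.2)
strong = exponential_covariance(centers, sigma=0.15, l_exp=1.0)
print(weak[0][1], strong[0][1])
```

A longer correlation length leaves more covariance between neighboring elements, which is the sense in which a larger \(L_{exp}\) means stronger correlation.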

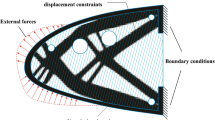

5.1 MBB beam

We begin with the simply supported beam with a unit load applied at the center (commonly referred to as the MBB beam in the literature). The design domain geometry, boundary conditions, and the applied load are shown in Fig. 1a. We discretize half of the domain using 150 × 50 elements, and use a filter radius of \(0.0165L_{x}\) with \(c^{\ast} = 192\). This capacity value is chosen from the designs presented in Andreassen et al. (2011) (minimum compliance design with a volume fraction of 50% of the design domain). The deterministic design is shown in Fig. 1b.

We now assume that the Young’s modulus of the material is uncertain and marginally (that is, at any given point within the design domain) follows a lognormal distribution with mean value \(\mu = \epsilon_{0}\) and standard deviation \(\sigma = 0.15\epsilon_{0}\). The random field representing the Young’s modulus is assumed correlated throughout the domain with a covariance structure defined by (29). We retain the capacity chosen for the deterministic design, and use the algorithm proposed in Section 4 to design for various target reliability indices \(\beta_{t}\). To also examine the effect of the correlation structure on the optimized designs, we present the results for two different values of correlation length, \(L_{exp} = L_{y}/10\) and \(L_{exp} = L_{y}/2\). The results are presented in Fig. 2. Comparing the results column-wise (identical correlation) indicates that, in general, a higher target reliability results in thicker members and/or more complex designs with more structural features to diversify the load path. While, depending on the range of the target Reliability Index, one of these two mechanisms (load path diversification vs member thickening) may dominate the change in topology, the mechanism driving the topological change may switch from one to the other as, for example, member thickening may lead to the disappearance of small holes and less complex topologies. In fact, some initiated load paths may be removed or replaced at higher target reliabilities; see the first two designs for \(L_{exp} = L_{y}/10\) or the third and fourth designs for \(L_{exp} = L_{y}/2\). This observation is also in agreement with the work of Zhao et al. (2013), where a higher Reliability Index led to less complexity in the design. All of the designs, however, feature more redundancy and load path diversification in comparison to the deterministic design.
Comparing the results in each row (identical target reliability), it is seen that a smaller correlation length leads to lighter structures with fewer and thinner structural features.

Figure 3, finally, summarizes how changes in the target Reliability Index and in the correlation length of the random field used to model the uncertainty in material property affect the weight of the optimized topologies. The figure plots the normalized volumes (with respect to the deterministic design) against the target reliability and \(P_{f}\), indicating that a smaller correlation strength results in a lighter structure for the same target Reliability Index or probability of failure. This has also been observed in previous studies on robust topology optimization under material uncertainty (see Tootkaboni et al. (2012)). It is also seen from this figure that, for larger correlation strength, the increase in the reliability of the final topology comes at a higher price (more weight), which is likely to be more in the form of member thickening.

To verify the prediction accuracy, we conduct Monte Carlo simulation with 50000 samples on the final designs. Figure 4 depicts the resulting probability of failure plots for higher target reliability indices. It is observed that the fitted lognormal distribution follows the empirical distribution closely.
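The kind of check described here can be sketched as follows: given compliance samples, compare the empirical exceedance frequency with the failure probability of a lognormal distribution fitted by the method of moments. In this sketch the samples are synthetic lognormal draws with made-up parameters, standing in for compliances that would come from finite-element solves on sampled random fields; the function names are hypothetical.

```python
import math
import random


def empirical_failure_probability(samples, capacity):
    """Fraction of samples whose compliance exceeds the capacity."""
    return sum(1 for c in samples if c > capacity) / len(samples)


def fitted_failure_probability(samples, capacity):
    """P_f of a lognormal fitted to the sample mean and variance."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((c - mean) ** 2 for c in samples) / (n - 1)
    s2 = math.log(1.0 + var / mean ** 2)
    l = math.log(mean) - 0.5 * s2
    beta = (math.log(capacity) - l) / math.sqrt(s2)
    return 0.5 * math.erfc(beta / math.sqrt(2.0))


# Synthetic stand-in for 50000 Monte Carlo compliance values.
rng = random.Random(0)
samples = [rng.lognormvariate(5.0, 0.1) for _ in range(50000)]
capacity = 192.0

print("empirical P_f:", empirical_failure_probability(samples, capacity))
print("fitted    P_f:", fitted_failure_probability(samples, capacity))
```

For small failure probabilities the empirical estimate is itself noisy, so agreement should be judged against its sampling error, roughly \(\sqrt{P_{f}(1-P_{f})/n}\).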

Table 1 reports the predicted statistics and probability of failure from the proposed methodology along with the results obtained from Monte Carlo simulation, which confirm the accuracy of the predicted values. Interestingly, for this problem we observe that lowering the target probability of failure, through a higher target Reliability Index, decreases both the expected value and the standard deviation of compliance (maximum deflection in this case), in a way that ultimately leads to a more reliable topology. There are RBTO cases in which this trend might not hold, but it stands in contrast with RTO methods that use a combination of the two statistics, expected value and standard deviation, as the objective function (or in the constraints), where it is more likely that a decrease in one comes at the expense of an increase in the other.

5.2 Wheel problem

The second example is a \(2L\) by \(L\) domain supported at \(L/3\) from each end with a unit load applied at its bottom center. This problem has been previously studied in Tootkaboni et al. (2012), where an RTO algorithm was used to arrive at robust topologies in the presence of material uncertainty. We discretize half of the domain with a 90 × 90 mesh, and choose the filter radius as \(0.03L_{y}\) and \(c^{\ast} = 32\), which is the same as the target compliance in Tootkaboni et al. (2012). The design domain, the supports, the load and the resulting deterministic topology are shown in Fig. 5, where the design topology looks similar to a “wheel” with thin spikes.

We now assume uncertainty in Young’s modulus, modeled as a lognormal random field with the same mean value as in the deterministic design, 𝜖_0, and a 20% coefficient of variation, that is, σ = 0.20 𝜖_0. As in the previous problem, we present RBTO designs for various target reliabilities and two different correlation lengths in Fig. 6. Again, the general trend is a combination of load path diversification and member thickening. The effects of the nonlinear reliability constraint on the optimized topology can be clearly seen here. For example, for L_exp = L_y/2, the design for β_t = 3.01 features four spikes, whereas the design for β_t = 2.66 features five spikes, all of which are thinner than those of the former design with the higher target Reliability Index. The results in the first column (L_exp = L_y/4) show that, at lower target reliabilities, more reliability is achieved through load path diversification and more spikes. At higher target reliabilities, however, the change in topology takes the form of closed holes and thicker members. The trend for L_exp = L_y/2, on the other hand, is slightly different, in the sense that the mechanism dominating the change in topology alternates between member splitting and merging/thickening of members. Nevertheless, increasing the target reliability increases the total volume of the optimized topology in all cases, regardless of the correlation length.

Finally, to verify the prediction accuracy, Monte Carlo simulation with 50000 samples is conducted. The empirical probability of failure plots, together with those of the fitted lognormal distribution, are depicted in Figs. 7 and 8, where the fitted distribution is observed to follow the empirical distribution closely.
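Realizations of a correlated, marginally lognormal modulus field such as the one used for the Monte Carlo verification can be generated along the following lines. The sketch assumes an exponential correlation function, exp(−distance / L_exp), imposed on the underlying Gaussian field, and a coarse hypothetical grid of element centres; neither choice is taken from the paper, and the function name is illustrative.

```python
import numpy as np


def sample_lognormal_field(points, mean, cov, corr_length, n_samples, rng):
    """Draw samples of a marginally lognormal field at the given points.

    Assumption (not taken from the paper): the underlying Gaussian field has
    the exponential correlation exp(-distance / corr_length).
    """
    points = np.asarray(points, dtype=float)
    # Pairwise Euclidean distances between all evaluation points.
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    corr = np.exp(-dist / corr_length)

    # Parameters of ln(E) giving the requested mean and coefficient of variation.
    sigma_ln = np.sqrt(np.log(1.0 + cov**2))
    mu_ln = np.log(mean) - 0.5 * sigma_ln**2

    # Correlate independent standard normals through a Cholesky factor;
    # the tiny diagonal shift only guards against round-off.
    chol = np.linalg.cholesky(corr + 1e-10 * np.eye(len(points)))
    gauss = rng.standard_normal((n_samples, len(points))) @ chol.T
    return np.exp(mu_ln + sigma_ln * gauss)


# Hypothetical coarse grid of element centres on a 2 x 1 domain (L = 1).
xs, ys = np.meshgrid(np.linspace(0.1, 1.9, 6), np.linspace(0.1, 0.9, 3))
centres = np.column_stack([xs.ravel(), ys.ravel()])

rng = np.random.default_rng(1)
field = sample_lognormal_field(centres, mean=1.0, cov=0.20,
                               corr_length=0.5, n_samples=20_000, rng=rng)
print("sample mean:", field.mean())
print("sample coefficient of variation:", field.std() / field.mean())
```

Each row of `field` is one realization of the modulus at all points; a Monte Carlo check would run one finite element analysis per row and collect the resulting compliance values.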

6 Concluding remarks

Real-world problems are accompanied by uncertainties. These uncertainties, if not taken into account throughout the design process, may lead to designs that are suboptimal under real-world engineering conditions. We presented an efficient topology optimization algorithm for designing continuum structures that are reliable in the presence of material uncertainties. Specifically, we optimized under constraints containing the Reliability Index as a measure of probability of failure. The uncertainty in material property was modeled via a two-dimensional marginally lognormal random field with different correlation structures. The reliability index was estimated by fitting an appropriate distribution that was shown to closely follow the tail of the empirical distribution of the quantity of interest. The parameters of the distribution were estimated using second-order stochastic perturbation. The proposed approach allowed for efficient inclusion and handling of a constraint on the reliability index in the optimization framework by providing compact representations for the sensitivity equations.

The presented algorithm was demonstrated on two minimum weight design problems. The results showed that a change in target reliability changes the optimized topology in primarily two ways: member splitting or the introduction of new (additional) members, and member thickening. These trends were more readily seen when the correlation length of the underlying random field was increased. The mechanism driving the topological changes was shown to switch from one to the other depending on the range of the target reliability index contained in the constraint. All forms of topological change, however, led to an increase in the final volume of the optimized topology as the target reliability index was increased. The designs obtained using the proposed algorithm were examined via Monte Carlo simulation, and the prediction accuracy for the response statistics and the probability of failure was verified.

References

Agarwal H (2004) Reliability based design optimization: formulations and methodologies. PhD thesis

Agarwal H, Mozumder CK, Renaud JE, Watson LT (2007) An inverse-measure-based unilevel architecture for reliability-based design optimization. Struct Multidiscip Optim 33(3):217–227

Agarwal H, Renaud J (2004) Reliability based design optimization using response surfaces in application to multidisciplinary systems. Eng Optim 36(3):291–311

Andreassen E, Clausen A, Schevenels M, Lazarov BS, Sigmund O (2011) Efficient topology optimization in MATLAB using 88 lines of code. Struct Multidiscip Optim 43(1):1–16

Asadpoure A, Guest J, Igusa T (2010) Structural topology optimization considering correlated uncertainties in elastic modulus. In: Collection of Technical Papers - AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics and Materials Conference

Asadpoure A, Tootkaboni M, Guest JK (2011) Robust topology optimization of structures with uncertainties in stiffness: Application to truss structures. Comput Struct 89(11-12):1131–1141

Basudhar A, Missoum S (2008) Adaptive explicit decision functions for probabilistic design and optimization using support vector machines. Comput Struct 86(19):1904–1917

Bendsøe MP (1989) Optimal shape design as a material distribution problem. Struct Optim 1(4):193–202

Bendsøe MP, Sigmund O (2004) Topology optimization: theory, methods and applications. Springer

Chen S, Chen W, Lee S (2010) Level set based robust shape and topology optimization under random field uncertainties. Struct Multidiscip Optim 41(4):507–524

Chen X, Hasselman TK, Neill DJ (1997) Reliability based structural design optimization for practical applications. In: Proceedings of the 38th AIAA/ASME/ASCE/AHS/ASC structures, structural dynamics, and materials conference, pp 2724–2732

Der Kiureghian A, Lin H, Hwang S (1987) Second-order reliability approximations. J Eng Mech 113(8):1208–1225

Ditlevsen O (1979) Generalized second moment reliability index. J Struct Mech 7(4):435–451

Ditlevsen O, Madsen HO (1996) Structural reliability methods, vol 178. Wiley, New York

Enevoldsen I, Sørensen JD (1994) Reliability-based optimization in structural engineering. Struct Saf 15(3):169–196

Guest J, Igusa T (2008) Structural optimization under uncertain loads and nodal locations. Comput Methods Appl Mech Eng 198(1):116–124

Guest JK, Asadpoure A, Ha SH (2011) Eliminating beta-continuation from heaviside projection and density filter algorithms. Struct Multidiscip Optim 44(4):443–453

Guest JK, Prévost JH, Belytschko T (2004) Achieving minimum length scale in topology optimization using nodal design variables and projection functions. Int J Numer Methods Eng 61(2):238–254

Hisada T, Nakagiri S (1981) Stochastic finite element method developed for structural safety and reliability. In: Proceedings of the 3rd international conference on structural safety and reliability, pp 395–408

Jalalpour M, Guest JK, Igusa T (2013) Reliability-based topology optimization of trusses with stochastic stiffness. Struct Saf 43:41–49

Jalalpour M, Igusa T, Guest J (2011) Optimal design of trusses with geometric imperfections: Accounting for global instability. Int J Solids Struct 48(21):3011–3019

Jang G-W, Dijk NP, Keulen F (2012) Topology optimization of MEMS considering etching uncertainties using the level-set method. Int J Numer Methods Eng 92(6):571–588

Jansen M, Lombaert G, Diehl M, Lazarov BS, Sigmund O, Schevenels M (2013) Robust topology optimization accounting for misplacement of material. Struct Multidiscip Optim 47(3):317–333

Jung H-S, Cho S (2004) Reliability-based topology optimization of geometrically nonlinear structures with loading and material uncertainties. Finite Elem Anal Des 41(3):311–331

Kang J, Kim C, Wang S (2004) Reliability-based topology optimization for electromagnetic systems. COMPEL: The International Journal for Computation and Mathematics in Electrical and Electronic Engineering 23(3):715–723

Kharmanda G, Mohamed A, Lemaire M (2002) Efficient reliability-based design optimization using a hybrid space with application to finite element analysis. Struct Multidiscip Optim 24(3):233–245

Kharmanda G, Olhoff N, Mohamed A, Lemaire M (2004) Reliability-based topology optimization. Struct Multidiscip Optim 26(5):295–307

Kim C, Choi KK (2008) Reliability-based design optimization using response surface method with prediction interval estimation. J Mech Des 130(12):121401

Kleiber M, Hien TD (1992) The stochastic finite element method: basic perturbation technique and computer implementation. Wiley, New York

Kogiso N, Hirano Y, Nishiwaki S, Izui K, Yoshimura M, Min S (2010) Reliability-based topology optimization of frame structures for multiple criteria using SLSV method. Journal of Computational Science and Technology 4:172–184

Kuschel N, Rackwitz R (2000) A new approach for structural optimization of series systems. Applications of Statistics and Probability 2(8):987–994

Lazarov BS, Schevenels M, Sigmund O (2012a) Topology optimization considering material and geometric uncertainties using stochastic collocation methods. Struct Multidiscip Optim 46(4):597–612

Lazarov BS, Schevenels M, Sigmund O (2012b) Topology optimization with geometric uncertainties by perturbation techniques. Int J Numer Methods Eng 90(11):1321–1336

Liang J, Mourelatos ZP, Tu J (2004) A single-loop method for reliability-based design optimization. In: ASME 2004 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, pp 419–430. American Society of Mechanical Engineers

Liu P-L, Der Kiureghian A (1986) Multivariate distribution models with prescribed marginals and covariances. Probab Eng Mech 1(2):105–112

Liu WK, Belytschko T, Mani A (1986) Probabilistic finite elements for nonlinear structural dynamics. Comput Methods Appl Mech Eng 56(1):61–81

Maute K (2014) Topology optimization under uncertainty. In: Topology Optimization in Structural and Continuum Mechanics, pp 457–471. Springer

Maute K, Frangopol DM (2003) Reliability-based design of MEMS mechanisms by topology optimization. Comput Struct 81(8-11):813–824. K.J Bathe 60th Anniversary Issue

Mlejnek H (1992) Some aspects of the genesis of structures. Struct Optim 5(1-2):64–69

Mogami K, Nishiwaki S, Izui K, Yoshimura M, Kogiso N (2006) Reliability-based structural optimization of frame structures for multiple failure criteria using topology optimization techniques. Struct Multidiscip Optim 32:299–311

Nguyen TH, Song J, Paulino GH (2011) Single-loop system reliability-based topology optimization considering statistical dependence between limit-states. Struct Multidiscip Optim 44(5):593–611

Patel J, Choi S-K (2012) Classification approach for reliability-based topology optimization using probabilistic neural networks. Struct Multidiscip Optim 45(4):529–543

Rackwitz R (2001) Reliability analysis: a review and some perspectives. Struct Saf 23(4):365–395

Rackwitz R, Flessler B (1978) Structural reliability under combined random load sequences. Comput Struct 9(5):489–494

Rozvany GI, Maute K (2011) Analytical and numerical solutions for a reliability-based benchmark example. Struct Multidiscip Optim 43(6):745–753

Schevenels M, Lazarov BS, Sigmund O (2011) Robust topology optimization accounting for spatially varying manufacturing errors. Comput Methods Appl Mech Eng 200:3613–3627

Schuëller G, Jensen H (2008) Computational methods in optimization considering uncertainties: an overview. Comput Methods Appl Mech Eng 198(1):2–13

Schuëller G, Pradlwarter H, Koutsourelakis P (2004) A critical appraisal of reliability estimation procedures for high dimensions. Probab Eng Mech 19(4):463–474

Sigmund O (2007) Morphology-based black and white filters for topology optimization. Struct Multidiscip Optim 33(4-5):401–424

Sigmund O (2011) On the usefulness of non-gradient approaches in topology optimization. Struct Multidiscip Optim 43(5):589–596

Silva M, Tortorelli D, Norato J, Ha C, Bae H-R (2010) Component and system reliability-based topology optimization using a single-loop method. Struct Multidiscip Optim 41:87–106

Svanberg K (1987) The method of moving asymptotes: a new method for structural optimization. Int J Numer Methods Eng 24(2):359–373

Taflanidis A, Beck J (2008) Stochastic subset optimization for optimal reliability problems. Probab Eng Mech 23(2):324–338

Tootkaboni M, Asadpoure A, Guest JK (2012) Topology optimization of continuum structures under uncertainty: a polynomial chaos approach. Comput Methods Appl Mech Eng 201–204:263–275

Tu J, Choi KK, Park YH (1999) A new study on reliability-based design optimization. J Mech Des 121(4):557–564

Valdebenito MA, Schuëller GI (2010) A survey on approaches for reliability-based optimization. Struct Multidiscip Optim 42(5):645–663

Valdebenito MA, Schuëller GI (2011) Efficient strategies for reliability-based optimization involving non-linear, dynamical structures. Comput Struct 89(19):1797–1811

Wang S, Moon H, Kim C, Kang J, Choi KK (2006) Reliability-based topology optimization (RBTO). In: IUTAM Symposium on Topological Design Optimization of Structures, Machines and Materials, pp 493–504. Springer

Xu L, Cheng G (2003) Discussion on: moment methods for structural reliability. Struct Saf 25(2):193–199

Youn BD, Choi KK (2004) A new response surface methodology for reliability-based design optimization. Comput Struct 82(2):241–256

Zhao Q, Chen X, Lin Y (2013) Reliability-based topology optimization of control arm of suspension for lightweight design. In: Proceedings of the FISITA 2012 World Automotive Congress, pp 1129–1138. Springer

Zhao Y-G, Ono T (2001) Moment methods for structural reliability. Struct Saf 23(1):47–75

Zhou M, Rozvany G (1991) The COC algorithm, part II: topological, geometrical and generalized shape optimization. Comput Methods Appl Mech Eng 89(1):309–336

Acknowledgments

The authors would like to thank Dr. Alireza Asadpoure for the fruitful discussions pertaining to topology optimization work. The second author acknowledges the support of the National Science Foundation through grant No. CMMI 1401575.

Cite this article

Jalalpour, M., Tootkaboni, M. An efficient approach to reliability-based topology optimization for continua under material uncertainty. Struct Multidisc Optim 53, 759–772 (2016). https://doi.org/10.1007/s00158-015-1360-7
