Abstract

An Occupant Protection System (OPS) plays a vital role in an automobile accident, where it can save an occupant from serious injury or death. To provide a feasible framework for reducing the degree of occupant injury, this paper presents a practical Reliability-based Design Optimization (RBDO) approach for vehicle OPS performance development based on an ensemble of metamodels. The weight factor associated with each metamodel in the ensemble is determined using a heuristic method. The comparison shows that the prediction accuracy of the ensemble of metamodels exceeds that of every individual metamodel. Deterministic optimum designs obtained without considering the uncertainty of the design variables frequently push the design constraints to their boundaries and cause large fluctuations in objective performance. The RBDO is therefore introduced to maintain design feasibility at a desired reliability level. The First Order Reliability Method (FORM) and Second Order Reliability Method (SORM) are used to calculate the reliability index, and the results are each checked by Monte Carlo (MC) simulation, from which the failure probability of the OPS performance design is obtained. The results demonstrate that the reliability-based design is more reliable than the deterministic optimum in real engineering applications. Finally, the RBDO result is validated against the simulation result, which shows that the proposed RBDO approach is effective in obtaining an optimum design.


1 Introduction

Over the past century, occupant safety has become an important design objective among all the performance criteria of vehicles. In the automotive industry, the goal of engineering design in the field of vehicle occupant protection is to fulfill or exceed government regulations such as those of FMVSS, ECE, and NHTSA. The Occupant Protection System (OPS), which includes energy-absorbing steering columns, three-point belts, and front and side airbags, is a safety system designed to help restrain the occupant in the seating position and reduce the risk of occupant contact with the vehicle interior, thus decreasing the risk of injury in a vehicular crash event. There is abundant literature dealing with the effectiveness of OPS components such as belts and supplemental airbags in providing occupant protection in automobile crashes (Mizuno et al. 2011; Douglas and Hampton 2010; Huston 2001; Bruno et al. 1998).

The mandatory use of seatbelts and airbags has significantly decreased occupant fatality and injury. To prevent occupant injury effectively, a number of Deterministic Design Optimization (DDO) strategies for the OPS based on Computer Aided Engineering (CAE) have been discussed (Gu et al. 2013; Zhao et al. 2010; Dias and Pereira 2004; Liao et al. 2008). However, the DDO leaves little or no room for uncertainties in the manufacturing process. Consequently, a DDO solution obtained without considering the uncertainty of the design variables may frequently push the design constraints to their boundaries and result in a lack of feasibility. This calls for Reliability-based Design Optimization (RBDO), which involves the evaluation of probabilistic constraints (Sinha 2007; Gu and Lu 2014; Lin et al. 2014; Gu et al. 2013; Youn et al. 2004; Yang et al. 2000; Taflanidis and Beck 2009b).

However, because RBDO involves the evaluation of probabilistic constraints, it is prohibitively expensive or otherwise impractical for many large-scale applications. The computational cost becomes excessively high when each evaluation relies on a simulation that requires several hours of compute time. To replace the simulation results, metamodels (or surrogate models) with smooth analytic functions for efficient estimation of system responses have been widely used for efficient and stable RBDO. A number of metamodeling techniques have been investigated for approximating mathematical test functions in the open literature, such as Polynomial Response Surface (PRS), Radial Basis Function (RBF), Kriging (KRG), and Support Vector Regression (SVR) (Forsberg and Nilsson 2005; Dubourg et al. 2011; Dyn et al. 1986; Martin and Simpson 2005; Smola and Scholkopf 2004).

The accuracies of metamodels in predicting critical crash responses of an automobile have been investigated by many researchers. For instance, Fang et al. (2005) found that RBF could give accurate metamodels for highly nonlinear responses, Simpson et al. (2001) found KRG to be most suitable for slightly nonlinear responses in high dimension spaces, and Jin et al. (2001) proposed the use of PRS for slightly nonlinear and noisy responses. An extensive review of metamodeling can be found in Wang and Shan (2007).

Since metamodels are used in the RBDO process, their quality directly affects the results of the optimization design and reliability analysis. The optimization results may even be misleading if the quality of the metamodel cannot be ensured. It is therefore necessary to further investigate efficient metamodeling techniques for approximating, as accurately as possible, the highly nonlinear responses of OPS performance. The customary practice in metamodeling is to construct many different metamodels, select the best one, and discard the rest. Since the performance of a metamodel depends on the training data set used, the selected metamodel is not guaranteed to be the best choice for another response data set. For example, Kriging sometimes works very well in estimating one function but may not work well for others. These obstacles can be overcome by using an ensemble of metamodels rather than a single one, and several researchers have therefore combined multiple metamodels in the form of an ensemble. The main purpose of an ensemble of metamodels is to protect against the error and variability in the predictions of individual metamodels. The use of an ensemble of different models was first introduced by Bishop (1995), and alternative formulations were proposed by Zerpa et al. (2005), Goel et al. (2007), Lee and Choi (2014) and Acar and Rais-Rohani (2009). These studies showed that the ensemble of metamodels takes advantage of the prediction ability of each individual metamodel to enhance the accuracy of the response predictions.

Although there have been substantial published works on the optimization of structural crashworthiness design using an ensemble of metamodels, few reports are available on optimizing the OPS. Reliability-based design optimization of the OPS under uncertainty has also received limited attention in the literature. Given its significant practical value, the development of RBDO methods for the OPS is very important. This paper presents a comprehensive study of how non-deterministic optimization schemes are applied to the design of a vehicle OPS under the full frontal rigid wall impact test mode based on GB11551 of China. Nine design variables are selected from the airbag, seat and seatbelt parameters, including mass flow rates, vent areas, belt limiter load, etc. Based on the Design of Experiment (DOE), the dominant design variables, optimization criteria and methods are established for the next step. To achieve high accuracy with a limited number of simulation analyses, an ensemble of four individual metamodels (PRS, RBF, KRG and SVR) is used to estimate the OPS performance of an automobile in this paper. The prediction capabilities of the individual metamodels and of the ensemble are discussed. After the ensemble of metamodels is validated, the Particle Swarm Optimization (PSO) algorithm is applied to search for the optimal solutions, and the First Order Reliability Method (FORM) and Second Order Reliability Method (SORM) are applied to perform the reliability analysis. To validate the accuracy of the reliability analysis method, the results are checked by Monte Carlo (MC) simulation. The deterministic and non-deterministic optimization results are generated and their computational efficiencies are compared. The reliability-based optimization results are validated against the simulation data and will be applied to product design in the future.

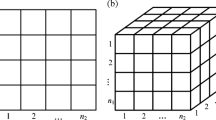

2 Ensemble of metamodels

In order to improve the accuracy of approximate models, it is a reasonable strategy to combine all the stand-alone metamodels into an ensemble model. The ensemble technique is an effective way to make up for the shortfalls of the traditional strategy. For example, Goel et al. (2007) demonstrated the advantages of an ensemble of metamodels over an individual metamodel using analytical problems. For a given problem, if all the stand-alone metamodels developed for a given response happen to have the same level of accuracy, then an acceptable form for the ensemble would be a simple average of the metamodels (Zhou et al. 2011). However, this is not the general case because some metamodels tend to be more accurate than others. Hence, to enhance the accuracy of the ensemble, the members of the ensemble have to be multiplied by different weight factors (Bishop 1995; Acar 2010; 2014). An ensemble of metamodels for approximation can be expressed as:

\[ \hat{y}_{ensemble} = \sum_{i=1}^{N_M} w_i \hat{y}_i \]

where \(\hat{y}_{ensemble}\) is the prediction of the ensemble, \(N_M\) is the number of metamodels, \(w_i\) is the weight factor for the ith metamodel, and \(\hat{y}_i\) is the prediction of the ith metamodel. The weight factors satisfy \(\sum_{i=1}^{N_M} w_i = 1\).

Goel et al. (2007) proposed a heuristic method for calculating the weight coefficients, where the weight coefficients are computed as:

where \(E_i\) is the Generalized Mean Square Error (GMSE) of the ith metamodel and \(\bar{E}\) is the average error of all metamodels used in constructing the ensemble. The two parameters α and β control the importance of averaging and the importance of the individual metamodels, respectively. The values α<1 and β<0 are selected by the analyst based on the importance of \(E_i\) and \(\bar{E}\). Goel et al. (2007) suggested α = 0.05 and β = -1 in (4). In this study, the weight factors of the individual metamodels are selected according to the heuristic method proposed by Goel et al. (2007).
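The heuristic weighting can be illustrated with a short sketch. It assumes the form published by Goel et al. (2007), \(w_i^{*} = (E_i + \alpha \bar{E})^{\beta}\) with \(w_i = w_i^{*} / \sum_j w_j^{*}\), which is consistent with the symbol definitions above. The function names and the GMSE values are hypothetical and serve only as an illustration; they are not results from this study.

```python
def goel_weights(errors, alpha=0.05, beta=-1.0):
    """Heuristic ensemble weights: w_i* = (E_i + alpha * E_bar) ** beta, normalized to sum to 1."""
    if not errors or any(e < 0 for e in errors):
        raise ValueError("errors must be a non-empty list of non-negative values")
    e_bar = sum(errors) / len(errors)  # average error over all metamodels
    if e_bar == 0:
        raise ValueError("at least one error must be positive")
    raw = [(e + alpha * e_bar) ** beta for e in errors]
    total = sum(raw)
    return [r / total for r in raw]


def ensemble_predict(predictions, weights):
    """Weighted sum of the individual metamodel predictions."""
    return sum(w * y for w, y in zip(weights, predictions))


# Hypothetical GMSE values for PRS, RBF, KRG and SVR (illustrative only).
gmse = [0.40, 0.10, 0.20, 0.10]
weights = goel_weights(gmse)

# Hypothetical predictions of the four metamodels at one design point.
y_hat = ensemble_predict([1.0, 2.0, 3.0, 4.0], weights)
```

With β<0, a metamodel with a smaller error receives a larger weight, and the small α keeps a weight finite even when one error is close to zero.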

Four different individual metamodels, PRS, KRG, RBF and SVR, are used in the ensemble of metamodels. The rationale for selecting these metamodels to demonstrate the proposed approach is that (1) they are commonly used by practitioners and (2) they represent different parametric and nonparametric approaches (Queipo et al. 2005). The features of the PRS, KRG, RBF and SVR models are described in the following.

(1) PRS: a general PRS model can be written as:

\[ y\left(\mathbf{x}\right) = f\left(\mathbf{x}\right) + \varepsilon \]

where \(y\left(\mathbf{x}\right)\) is the exact response function dependent upon a number of variables x and ε is the random error. The approximating function \(f\left(\mathbf{x}\right)\) is assumed to be a summation of basis functions:

\[ f\left(\mathbf{x}\right) = \sum_{i=1}^{L} a_i \varphi_i\left(\mathbf{x}\right) \]

where L is the number of basis functions and \(\varphi_i\) are the basis functions used to approximate the model. The unknown coefficients \(\mathbf{a}=[a_1,a_2,\cdots,a_L]^T\) are determined by minimizing the sum of the squared errors. Quadratic polynomials are chosen as the basis functions in this study. More details of PRS can be found in the literature (Redhe et al. 2002).

(2) KRG: Kriging originated as an empirical method for determining true ore grade distributions from distributions based on sampled ore grades. In recent years, the Kriging method has found wider application as a spatial prediction method in engineering design. A general KRG model can be written as (Zhao and Xue 2011):

\[ y\left(\mathbf{x}\right) = f\left(\mathbf{x}\right) + Z\left(\mathbf{x}\right) \]

where y is the unknown function of interest, \(f\left(\mathbf{x}\right)\) is a known polynomial, and \(Z\left(\mathbf{x}\right)\) is the stochastic component with zero mean and nonzero covariance. More details regarding the KRG model can be found in the literature (Timothy et al. 2001).

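(3) RBF: the RBF method was originally developed to approximate multivariate functions based upon scattered data. For a dataset with input variables and responses at S sampling points, the typical RBF model takes the following form:

\[ f\left(x\right) = \sum_{s=1}^{S} \lambda_s \varphi\left(\left\| x-x_s \right\|\right) + \sum_{k=1}^{K} C_k p_k\left(x\right) \]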

where \(\left\| x-x_s \right\|\) is the Euclidean distance between the design point x and the sth sampling point \(x_s\), φ is a basis function, \(\lambda_s\) is the unknown weighting factor associated with the sth sampling point, \(p_k(x)\) are polynomial terms, K is the number of polynomial terms (usually K<S), and \(C_k\) (k=1, 2,⋯,K) is the coefficient of \(p_k(x)\). An RBF model is therefore a linear combination of S radial basis functions and K polynomial terms with weighted coefficients. In this study, the multi-quadric formulation of RBF, \(\varphi(r)=\sqrt{r^{2}+c^{2}}\), in which c is the free shape parameter (Buhmann 2003), was chosen for its proven prediction accuracy in structural crashworthiness design problems and for its commonly linear, and possibly exponential, rate of convergence with an increasing number of sampling points. More details of RBF can be found in the literature (Dyn et al. 1986; Fang et al. 2005).
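A minimal sketch of multi-quadric RBF interpolation is given below to make the construction concrete. It is a simplification under stated assumptions: the polynomial terms \(p_k(x)\) are omitted, the shape parameter is fixed at c = 1, and the sampling points and responses are hypothetical. The weights \(\lambda_s\) are obtained by requiring the model to reproduce the responses at the sampling points.

```python
import math


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-14:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def multiquadric(r, c=1.0):
    """Multi-quadric basis function phi(r) = sqrt(r^2 + c^2)."""
    return math.sqrt(r * r + c * c)


def fit_rbf(points, values, c=1.0):
    """Return the weights lambda_s that interpolate the sampled responses."""
    phi = [[multiquadric(math.dist(p, q), c) for q in points] for p in points]
    return solve_linear(phi, values)


def predict_rbf(x, points, lambdas, c=1.0):
    """Evaluate the RBF model (without polynomial terms) at point x."""
    return sum(l * multiquadric(math.dist(x, p), c) for l, p in zip(lambdas, points))


# Hypothetical sampling points and responses (illustrative only).
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, 0.5)]
values = [p[0] ** 2 + p[1] for p in points]
lambdas = fit_rbf(points, values)
```

Because the model interpolates, its error at the sampling points is zero by construction, which is why accuracy has to be judged at separate test points or by cross validation.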

(4) SVR: the SVR is a relatively new surrogate modeling technique with higher accuracy and a lower standard deviation (Lee et al. 2008). SVR is derived from the Support Vector Machine (SVM) technique, which was introduced for pattern recognition and extended to regression and function approximation. The algorithmic purpose of SVR is to find a function that is as flat as possible while deviating from the training responses by no more than a prescribed tolerance, following the maximum margin principle. The linear case can be given by:

\[ f\left(\mathbf{x}\right) = \left\langle \mathbf{w} \cdot \mathbf{x} \right\rangle + b \]

where w is the parameter vector that defines the normal to the hyperplane, b is the threshold, and \(\left\langle \mathbf{w} \cdot \mathbf{x} \right\rangle\) is the dot product of w and x. Non-linear function approximations can be achieved by replacing the dot product of the input vectors with a kernel function. In this study, the SVR model is constructed with the Gaussian kernel function, which is frequently used in the SVR technique (Pan et al. 2010).

Several error measures are available to determine the accuracy of the metamodels, such as the Root Mean Square Error (RMSE), the coefficient of determination (\(R^2\)), and the Relative Error (RE) (Hou et al. 2012; Su et al. 2011). The equations for these measures are given below, respectively.

where \(\hat{y}_i\) is the predicted value, \(y_i\) is the corresponding actual simulation value, and \(\bar{y}_i\) is the mean of the \(y_i\). Generally speaking, larger values of \(R^2\) and smaller values of RMSE indicate a better fit of the metamodels (Fang et al. 2005). The RE is an error measure for a local region, and a small RE value is preferred.
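The three measures can be sketched as follows. The forms used here are the common textbook definitions, \(RMSE=\sqrt{\sum (y_i-\hat{y}_i)^2/n}\), \(R^2 = 1-\sum (y_i-\hat{y}_i)^2/\sum (y_i-\bar{y})^2\) and \(RE=|\hat{y}_i-y_i|/|y_i|\), which are assumed to match the equations of this study. The sample data are hypothetical.

```python
import math


def rmse(actual, predicted):
    """Root mean square error over all validation points."""
    n = len(actual)
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(actual, predicted)) / n)


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SSE / SST."""
    mean_y = sum(actual) / len(actual)
    sse = sum((y - p) ** 2 for y, p in zip(actual, predicted))
    sst = sum((y - mean_y) ** 2 for y in actual)
    return 1.0 - sse / sst


def relative_errors(actual, predicted):
    """Point-wise relative error |y_hat - y| / |y| (a local measure)."""
    return [abs(p - y) / abs(y) for y, p in zip(actual, predicted)]


# Hypothetical simulation values and metamodel predictions (illustrative only).
y_sim = [1.0, 2.0, 3.0, 4.0]
y_hat = [1.1, 1.9, 3.2, 3.8]

fit_rmse = rmse(y_sim, y_hat)
fit_r2 = r_squared(y_sim, y_hat)
fit_re = relative_errors(y_sim, y_hat)
```

RMSE and \(R^2\) summarize the fit over the whole design space, whereas the list of RE values exposes individual points at which a metamodel is locally poor.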

3 Particle swarm optimization algorithm

The Particle Swarm Optimization (PSO) algorithm has been proposed and applied to engineering optimization by a number of researchers in recent years (Reyes-Sierra and Coello 2006; Ebrahimi et al. 2011). The treatment of this topic in Pinto et al. (2007) is found to be the most instructive, and the development of the basic algorithm presented here draws heavily on that work. For completeness, some ideas from that paper are included here.

In PSO, each particle represents a candidate solution associated with two vectors: position (\(\mathbf{X}_i\)) and velocity (\(\mathbf{V}_i\)). In a d-dimensional search space, the position of particle i at iteration t can be described as \(\mathbf{X}_{i}^{t} =\left(x_{i1}^{t}, x_{i2}^{t}, \ldots, x_{id}^{t}\right)\), and its velocity can be represented as \(\mathbf{V}_{i}^{t} =\left(v_{i1}^{t}, v_{i2}^{t}, \ldots, v_{id}^{t}\right)\). Let \(\mathbf{P}_{i}^{t} =\left(p_{i1}^{t}, p_{i2}^{t}, \ldots, p_{id}^{t}\right)\) denote the personal best (pBest), which is the best solution that particle i has obtained up to iteration t, and let \(\mathbf{P}_{g}^{t} =\left(p_{g1}^{t}, p_{g2}^{t}, \ldots, p_{gd}^{t}\right)\) represent the global best (gBest), which is the best solution among all \(\mathbf{P}_{i}^{t}\) in the population at iteration t. To search for an optimal solution, each particle updates its velocity and position according to the following equations:

\[ \mathbf{V}_{i}^{t+1} = c_0 \mathbf{V}_{i}^{t} + c_1 h_1 \left(\mathbf{P}_{i}^{t} - \mathbf{X}_{i}^{t}\right) + c_2 h_2 \left(\mathbf{P}_{g}^{t} - \mathbf{X}_{i}^{t}\right), \qquad \mathbf{X}_{i}^{t+1} = \mathbf{X}_{i}^{t} + \mathbf{V}_{i}^{t+1} \]

where \(c_1\) and \(c_2\) are the cognitive and social learning factors in the range [0, 4], \(h_1\) and \(h_2\) are uniform random numbers in the range [0, 1], and \(c_{0}^{(k)} = eta \times c_{0}^{(k-1)}\) \((k=1,\cdots,k_{\max})\) is the inertia weight, which controls the influence of the previous velocity on the new velocity and has a critical effect on the convergence behavior of PSO. Here eta is the decreasing rate of \(c_0\) defined by the user, k is the current iteration, and \(k_{\max}\) is the maximum number of iterations defined by the user.
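The update rule can be sketched in a few lines. This is a minimal illustration, not the implementation used in this study: the parameter values (swarm size, \(c_0\), eta, \(c_1\), \(c_2\)), the clamping of positions to the bounds, and the sphere test function are all assumptions chosen for the example.

```python
import random


def pso_minimize(func, bounds, n_particles=30, k_max=200,
                 c0=0.9, eta=0.98, c1=1.5, c2=1.5, seed=0):
    """Basic PSO with an inertia weight c0 that decays by the factor eta each iteration."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    p_best = [p[:] for p in pos]              # personal best positions (pBest)
    p_val = [func(p) for p in pos]
    g_idx = min(range(n_particles), key=lambda i: p_val[i])
    g_best, g_val = p_best[g_idx][:], p_val[g_idx]  # global best (gBest)

    for _ in range(k_max):
        for i in range(n_particles):
            for d in range(dim):
                h1, h2 = rng.random(), rng.random()
                vel[i][d] = (c0 * vel[i][d]
                             + c1 * h1 * (p_best[i][d] - pos[i][d])
                             + c2 * h2 * (g_best[d] - pos[i][d]))
                lo, hi = bounds[d]
                # Assumption: positions are clamped to the design bounds.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = func(pos[i])
            if val < p_val[i]:
                p_best[i], p_val[i] = pos[i][:], val
                if val < g_val:
                    g_best, g_val = pos[i][:], val
        c0 *= eta  # c0^(k) = eta * c0^(k-1)
    return g_best, g_val


def sphere(x):
    """Hypothetical test objective with its minimum at the origin."""
    return sum(v * v for v in x)


best_x, best_f = pso_minimize(sphere, [(-5.0, 5.0), (-5.0, 5.0)])
```

A large initial inertia weight favors exploration of the design space, and its gradual decay shifts the swarm toward local refinement around gBest.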

4 Vehicle occupant protection system optimization design

The continuously increasing demands on vehicle safety performance and the tightening requirements of government regulations are a major challenge for vehicle manufacturers today. This has resulted in the rapid implementation of various Occupant Protection Systems (OPS) in new cars. This paper describes the optimization of an OPS to meet the performance requirements of the full frontal rigid wall impact test mode according to GB11551. One of the most important problems in the development process is to obtain an optimal OPS design within a limited time and in a cost-effective way. The flow chart of the optimization procedure is shown in Fig. 1.

The solution process is systematically divided into seven steps:

1) Develop the computer model and validate it by comparing the simulation and physical test results.

2) Define the optimization problem, including the objective functions and constraints, the design variables and their ranges, etc.

3) Adopt the Optimal Latin Hypercube Sampling (OLHS) method for the Design of Experiment (DOE) and construct the four individual metamodels.

4) Build the ensemble of metamodels, find the weight factors, and evaluate the error. The first loop continues, adding sampling points, until a satisfactory result is obtained. In this research, the values of \(R^2\), RMSE and RE are employed as the termination criteria.

5) Solve the deterministic optimization as well as the RBDO using the PSO algorithm.

6) Conduct the reliability analysis for every constraint based on the metamodels.

7) Validate the reliability-based optimization solution against the simulation result. The second loop continues until a satisfactory result is obtained. In this research, the error between the optimal solution and the simulation result is employed as the termination criterion.

4.1 Model description and validation

Mathematical Dynamic Model (MADYMO 2005) is the worldwide standard software for analyzing and optimizing occupant safety designs. Using MADYMO, researchers and engineers can model, thoroughly analyze and optimize safety designs early in the development process. This reduces the expense and time involved in building and testing prototypes. Adopting MADYMO also minimizes the risk of making design changes late in the development phase. For new or improved vehicle models and components, MADYMO cuts cost and reduces the time-to-market substantially. MADYMO simulations correlate well with real crash test results and are completed within minutes. Safety designers can easily apply design-of-experiment methods, optimization or stochastic techniques together with MADYMO to explore the effects of multiple design variables simultaneously. The MADYMO Solver is a flexible multi-physics simulation engine that uniquely combines the capabilities of Multi-Body (MB) and Finite Element (FE) in a single CAE solver. This makes the MADYMO solver a highly efficient tool for design and analysis of complex dynamic systems. MADYMO dummy models are famously accurate and also renowned for their computational speed, robustness and user-friendliness.

A restraint system and a driver-side airbag which are provided by an industrial partner are used to create a baseline model for simulation analysis. The baseline model consists of the following groups of components:

1) A biofidelic dummy in the driver seating position, represented by the 50th percentile male dummy model of the MADYMO program;

2) The restraint system, which includes the airbag model, the seat, the seat belt, the retractor, the sash and webbing clamp, and the steering column;

3) The vehicle interior, which consists of the dashboard, steering wheel, floor pan and toe pan;

4) The vehicle frontal structure, represented by a deceleration pulse taken from a full frontal rigid wall impact vehicle test with an initial velocity of 50 km/h.

The dynamic model of the system is shown in Fig. 2.

To examine the validity of the MADYMO baseline model, the simulation results are validated against the sled test. As Table 1 shows, the maximum error of the injury criteria between simulation and test is less than 8%. Figure 3 shows that the simulation curves of the chest acceleration, chest compression and femur loads correlate well with the sled test results. The head deceleration shows a slight time delay around the peak. This difference may be caused by the assumption of a constant venting discharge coefficient in the MADYMO airbag model.

Figure 4 shows the dummy kinematics of the simulation model and the corresponding physical test at times (a) t = 0 ms, (b) t = 50 ms, (c) t = 60 ms and (d) t = 100 ms. The simulated postures agree well with the physical test. Therefore, the MADYMO simulation model is accurate enough for the subsequent OPS optimization.

4.2 Design responses and variables

The multi-criteria injury values of the dummy are formulated as a single cost function, the Weighted Injury Criterion (WIC) (Viano and Arepally 1990), subject to multiple functional constraints. The WIC combines five injury criteria into a single design objective in this paper as follows:

The value of WIC is chosen as the optimization objective, and the injury measures of the 50th percentile male dummy, including the head injury criterion over a 36 ms window (\(HIC_{36}\)), the 3 ms cumulative chest acceleration, the chest compression and the femur loads, are adopted as the constraints. Table 2 summarizes the responses of the initial design and the maximum allowable value of each constraint. The constrained responses are required to be less than the initial design values.

Changes in the airbag, seat and seatbelt parameters all contribute to a reduction in the safety risk, which is reflected in the dummy injury measures. Nine related design variables are therefore selected from these parameters in this study, as follows.

Generally, an airbag has two vents that adaptively release gas when the occupant impacts the airbag, and the size of the vents changes depending on the pressure in the airbag. Internal straps (tethers) are used to control the shape of the airbag, and the trend for the driver airbag has been toward two tethers. The airbag trigger time is determined by a first sensor disposed at a predetermined position in the vehicle body. The average trigger time for the driver airbag has been approximately 15 ms in a rigid wall impact at 50 km/h. The inflator mass flow rate is the rate at which gas is generated during the inflation process to fill the airbag.

The seatbelt stiffness is the amount (in percent) a seat belt stretches when subjected to a specific force, and a low percentage of elongation indicates a stiffer belt. For the driver and passenger sides, the seat belt stiffness has remained roughly constant at around eight to nine percent elongation per unit load. The seatbelt load limiter is the device that limits the forces imparted to the occupant by the seat belt during the crash event; the forces are prevented from exceeding a pre-determined level by allowing the seat belt webbing to yield when the forces reach this level. The seatbelt pretensioner is the device, usually pyrotechnic, that removes slack from the seat belt upon detection of a crash condition.

For reliability analysis, a probabilistic variable has a mean or nominal value, a variation around this mean value according to a statistical distribution, and optional lower and upper bounds. Assume that x is a probabilistic design variable; the mean or expected value (\(\mu_x\)) of x can be calculated as

The variance (\(Var\left(x\right)\)) of x is a measure of the spread in the data about the mean and can be estimated as

The standard deviation (\(\sigma_x\)) is defined as the square root of \(Var\left(x\right)\) and can be expressed as

The Coefficient of Variation (COV) is expressed as

For a deterministic variable, \(COV\left(x\right)\) is zero, and a smaller value of the COV indicates a smaller amount of uncertainty in the variable. The design variables are assumed to be normally distributed, with a COV of 3% based on typical manufacturing tolerances in this study.
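The four statistics can be illustrated on sampled data. The sketch below assumes the usual sample estimators (with the unbiased n-1 divisor for the variance, which may differ from the form used in this study) and draws a hypothetical design variable with a nominal value of 2.0 and a COV of 3% from a normal distribution.

```python
import math
import random


def mean(xs):
    """Sample mean, an estimate of mu_x."""
    return sum(xs) / len(xs)


def variance(xs):
    """Sample variance with the unbiased n - 1 divisor (an assumption)."""
    mu = mean(xs)
    return sum((x - mu) ** 2 for x in xs) / (len(xs) - 1)


def std_dev(xs):
    """Standard deviation sigma_x, the square root of the variance."""
    return math.sqrt(variance(xs))


def cov(xs):
    """Coefficient of variation sigma_x / mu_x."""
    return std_dev(xs) / abs(mean(xs))


# Hypothetical design variable: nominal value 2.0 with a COV of 3%.
rng = random.Random(1)
nominal = 2.0
samples = [rng.gauss(nominal, 0.03 * nominal) for _ in range(10000)]

sample_mean = mean(samples)
sample_cov = cov(samples)
deterministic_cov = cov([5.0, 5.0, 5.0])  # no scatter, so the COV is zero
```

Specifying the scatter through the COV ties the standard deviation to the nominal value, so a variable that moves during optimization keeps the same relative tolerance.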

Table 3 provides the list of design variables, their values for the baseline design, the COV (σ/μ), and the corresponding lower and upper bounds. The values of design parameters that are defined as functions are expressed as percentage changes relative to their baseline values. The design ranges of all nine design variables are defined in terms of the possible design changes allowed.

4.3 Comparison and discussion of metamodel predictions

Considering the affordability of simulation runs, the OLHS technique (Yang et al. 2005a; Yang et al. 2005b) is adopted for the nine design variables. Based on previous investigation, a sample size of 5N, where N is the number of design variables, is used to start the OLHS. A total of 45 initial sampling points are thus generated in the design space. The objective and constraint values of each sampling point are computed using MADYMO version 6.3 on a personal computer.

From the simulation results, the individual metamodels PRS, KRG, RBF and SVR are constructed. To further improve the approximation accuracy, the ensemble of metamodels is constructed with these individual metamodels (i.e., PRS, RBF, KRG and SVR) as its four members. The weight factors of the individual metamodels are obtained with the technique described in Section 2. The threshold values of \(R^2\) and RMSE are set to 0.96 and 0.04, respectively, in this study. These thresholds are met after adding 15 additional sampling points. The weight factors and validation results of the metamodels with 60 sampling points are listed in Table 4. None of the individual metamodels meets the threshold requirements; only the ensemble of metamodels does. For WIC, the metamodels other than PRS and KRG achieve good accuracy, reflected by a remarkably higher \(R^2\) value and a lower RMSE, when all the different training sets are used. For the other five constraints, SVR is the most accurate for \(F_{right}^{femur}\) and \(a_{3ms}\), RBF is the most accurate for \(HIC_{36}\), and KRG is the most accurate for \(C_{comp}\). Hence, good performance of a model on one response does not necessarily mean good prediction of the others.

In general, the weight factors are selected such that the metamodels with smaller errors have larger weight factors and vice versa. However, the relation between the errors and the weight factors is complex. For instance, even though RBF is the most accurate model for \(HIC_{36}\), the weight factor for RBF is not the largest. Similarly, although the errors of SVR and KRG are much smaller than the error of PRS for \(a_{3ms}\), the weight factor of PRS is larger than those of SVR and KRG. The main reason is that the weight factors are selected based on cross validation errors, whereas the accuracy of the metamodels is evaluated using the error at the test points. Figure 5 shows that the error of the ensemble of metamodels is smaller than the error of the best individual metamodel.

These models are validated using eight additional random simulation results. If the Relative Error (RE) at a validation point exceeds 4%, the point is added to the initial sampling points and the metamodels are reconstructed. The update process is terminated once the RE is less than 4%. After two cycles, the RE curves of the six metamodels, displayed in Fig. 6, are below 4% for all responses. Therefore, the accuracy of the current models is considered adequate for the further study.

4.4 Reliability-based design optimization of occupant protection system

The deterministic optimization problem can be formulated as:

Deterministic optimization that does not consider uncertainties could lead to unreliable designs. The Reliability-based Design Optimization (RBDO) can therefore be formulated by converting the constraints into probabilistic constraints as follows:

The desired reliability \(P_s\) is set to 0.99. The nine random variables are incorporated according to the probability distributions defined previously in Table 3.
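As a hedged sketch in generic notation, the two formulations can be written as follows. The symbols \(g_j\) (the jth constrained injury response), \(g_j^{\max}\) (its maximum allowable value from Table 2), and \(\mathbf{x}^{L}\), \(\mathbf{x}^{U}\) (the bounds from Table 3) are assumed notation for illustration and may differ from the notation of the original equations. The deterministic problem takes the form

\[ \min_{\mathbf{x}} \ WIC\left(\mathbf{x}\right) \quad \text{s.t.} \quad g_j\left(\mathbf{x}\right) \le g_j^{\max}, \ j=1,\ldots,5, \qquad \mathbf{x}^{L} \le \mathbf{x} \le \mathbf{x}^{U} \]

and the RBDO problem replaces each constraint with a probabilistic constraint on the random design variables:

\[ \min_{\boldsymbol{\mu}_x} \ WIC\left(\boldsymbol{\mu}_x\right) \quad \text{s.t.} \quad P\left[ g_j\left(\mathbf{x}\right) \le g_j^{\max} \right] \ge P_s, \ j=1,\ldots,5, \qquad \mathbf{x}^{L} \le \boldsymbol{\mu}_x \le \mathbf{x}^{U} \]

In the second form the optimizer moves the mean values \(\boldsymbol{\mu}_x\) of the design variables, while their scatter follows the assumed normal distributions.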

The Particle Swarm Optimization (PSO) algorithm is chosen as the solver for both the deterministic and the reliability-based optimization in this study. To compare the computational cost and solution quality, a simple convergence criterion, namely a predefined maximum number of iterations, is used. Table 5 contains the PSO parameters used in this study.

The RBDO is started from the deterministic optimum design. A normally distributed response is assumed for the estimation of the probability of failure, giving the probability of failure as:

where Φ is the cumulative distribution function of the normal distribution. The reliability index β of a response is computed as:

where μ and σ are the expected value and standard deviation of the response, respectively. In this study, the First Order Reliability Method (FORM) and Second Order Reliability Method (SORM) are used to calculate the reliability index; these methods have been shown to be accurate and efficient for estimating the extreme value statistics of even very non-linear responses (Zhao and Ono 1999; Jensen 2007). For an analysis of a specific probability associated with one (or a few) target values, FORM and SORM will usually be much more efficient than Monte Carlo (MC) simulation. To validate the accuracy of the reliability analysis method, the results are checked by MC simulation, an approach that has also been used by other researchers (Koch et al. 2004). Checking the reliable solution obtained by FORM or SORM takes less than 1 minute. The MC simulation consists of 10,000 descriptive sampling points drawn from the given distributions in this study. Performing the Monte Carlo analysis on the metamodels instead of on CAE function evaluations allows a significant reduction in the cost of the procedure.
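The relation between the reliability index and the failure probability, together with a Monte Carlo check, can be sketched as follows. The sketch assumes the common forms \(P_f = \Phi(-\beta)\) and \(\beta = \mu/\sigma\), with μ and σ taken as the mean and standard deviation of the constraint margin (allowable value minus response). The linear response, its coefficients and the allowable value are hypothetical stand-ins for a metamodel; for a linear response of normal variables the first-order estimate is exact, which makes the comparison easy to verify.

```python
import math
import random


def normal_cdf(z):
    """Standard normal cumulative distribution function Phi(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def reliability_index(mu_margin, sigma_margin):
    """beta = mu / sigma of the constraint margin (limit minus response)."""
    return mu_margin / sigma_margin


def failure_probability(beta):
    """P_f = Phi(-beta) under the normality assumption."""
    return normal_cdf(-beta)


def mc_failure_probability(response, means, sigmas, limit, n=10000, seed=0):
    """Fraction of sampled designs whose response exceeds the limit."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(n):
        x = [rng.gauss(m, s) for m, s in zip(means, sigmas)]
        if response(x) > limit:
            failures += 1
    return failures / n


# Hypothetical linear response standing in for a metamodel (illustrative only).
coeffs = [2.0, 1.0]


def response(x):
    return sum(a * v for a, v in zip(coeffs, x))


means = [1.0, 2.0]
sigmas = [0.03, 0.06]   # 3% COV on each variable
limit = 4.2             # hypothetical allowable value

mu_margin = limit - response(means)
sigma_margin = math.sqrt(sum((a * s) ** 2 for a, s in zip(coeffs, sigmas)))
beta = reliability_index(mu_margin, sigma_margin)
pf_analytic = failure_probability(beta)
pf_mc = mc_failure_probability(response, means, sigmas, limit)
```

For a nonlinear response the two estimates would generally differ, and the size of that difference is the quantity the MC check in this study is meant to reveal.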

The FORM and SORM analyses are conducted for every constraint using the ensemble of metamodels in this study. Probabilistic methods based on the most probable point of failure focus on finding the design perturbation most likely to cause failure. The method computes the standard deviation of the responses using the same metamodel as used for the deterministic optimization portion of the problem. No additional CAE runs are therefore required for the probabilistic computations.

The CPU time for the deterministic optimization is approximately 16 seconds, and the reliability-based optimization takes about 9 minutes with FORM and 15 minutes with SORM on a personal computer. The convergence histories of the deterministic and reliability-based optimization are shown in Figs. 7 and 8. The results indicate that the optimization converges well within 500 generations, which is adequate for solving the optimization problem. The values of the nine design variables for the deterministic and reliability-based optima are listed in Table 6.

The final designs obtained by the different methods are summarized in Table 7. The differences between the FORM results and the MC check are pronounced, so FORM is not an appropriate reliability method for the vehicle occupant protection system in this study. SORM is more accurate than FORM when the responses are nonlinear functions of normally distributed design variables (Cai and Elishakoff 1994). The accuracy of the failure probability obtained with SORM is therefore acceptable in this study, and the result will be applied to product design in the future.
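To illustrate why a second-order method can outperform FORM on nonlinear responses, the two failure probability estimates can be written side by side. The source does not state which SORM variant was used; the second expression is Breitung's asymptotic formula, given here as one widely used example. The symbols \(\kappa_i\) (principal curvatures of the limit-state surface at the most probable point, in standard normal space) and \(n\) (number of random variables) are notation introduced for this illustration, and the sign convention for \(\kappa_i\) varies between references.

\[
P_f^{\mathrm{FORM}} \approx \Phi(-\beta), \qquad
P_f^{\mathrm{SORM}} \approx \Phi(-\beta) \prod_{i=1}^{n-1} \left(1 + \beta \kappa_i\right)^{-1/2}
\]

When all curvatures vanish, the limit state is flat at the most probable point and the two estimates coincide. The larger the curvatures, the further the FORM estimate departs from the second-order one.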

The results show that only approximately 47% and 52% of the sample points at the deterministic optimum satisfy the \(F_{left}^{femur}\) and \(F_{right}^{femur}\) constraints, so that 53% and 48% of the samples violate them, respectively. In contrast, with the RBDO design over 99% of the sample points lie in the feasible region according to the SORM analysis.

The reduced WIC values obtained by deterministic optimization and RBDO are 0.604 (39.6%) and 0.755 (24.5%), respectively. As expected, the RBDO result is more conservative than the deterministic one because the uncertainties of the design variables are taken into account. This means that the 99% reliability goal of the OPS design optimum is achieved or exceeded for all constraints when the worst-case tolerances of the design variables are considered. Because the reliability of the performance constraints is considered during the RBDO, the feasibility of the OPS design for vehicle safety is greatly improved in real engineering applications.
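The percentages quoted with the WIC values appear consistent with a baseline WIC normalized to 1. This is inferred from the numbers and not stated explicitly in this section. Under that assumption, the relative reductions work out as:

\[
\frac{1 - 0.604}{1} = 0.396 = 39.6\% \quad \text{(deterministic)}, \qquad
\frac{1 - 0.755}{1} = 0.245 = 24.5\% \quad \text{(RBDO)}
\]

The RBDO design therefore gives up about 15 percentage points of injury reduction in exchange for meeting the reliability target on the constraints.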

The CAE result was used to verify the optimization solution. The errors between the reliability-based optimization solution and the CAE result are listed in Table 8. The results at the optimal point are very close to each other, which indicates that the optimal solution has sufficient accuracy.

5 Conclusions

An Occupant Protection System (OPS) design that does not consider uncertainty may lack feasibility, which indicates that it is important to incorporate uncertainty in engineering design optimization and to develop computational techniques that enable engineers to make efficient and reliable decisions. The primary goal of this paper was to provide a systematic Reliability-based Design Optimization (RBDO) approach for designing and optimizing the OPS, using Optimal Latin Hypercube Sampling (OLHS), the ensemble of metamodels, First/Second Order Reliability Method (FORM/SORM) analysis, Monte Carlo (MC) simulation checking and the Particle Swarm Optimization (PSO) algorithm. Computer simulations were the primary tool for exploring the whole design space and searching for the optimal designs, so physical tests need to be used to correlate the baseline computer simulation models. The use of the ensemble of metamodels was investigated for improving the accuracy of automobile crash dummy injury response approximations. The prediction capability of the ensemble of metamodels was compared with that of the best individual metamodel. In this study, the error of the ensemble was smaller than that of the most accurate individual metamodel. This advantage in approximation accuracy indicates that the ensemble of metamodels holds great potential for approximating highly nonlinear problems.

As expected, the RBDO result was more conservative than that of the deterministic optimization: the reduction in dummy injury achieved by RBDO was smaller than the one obtained through deterministic optimization. However, because the variation of the performance constraint functions caused by the uncertainties of the design variables was considered, the reliability of the OPS design for vehicle safety was greatly improved in real engineering applications.

Finally, the optimum solution was verified against the simulation result. The proposed optimization method substantially improved the OPS performance and met the product development requirements. Improving the systematic approach to complex OPS design and optimization remains an ongoing process.

References

Acar E (2010) Various approaches for constructing an ensemble of metamodels using local measures. Struct Multidisc Optim 42:879–896

Acar E (2014) Simultaneous optimization of shape parameters and weight factors in ensemble of radial basis functions. Struct Multidisc Optim 49:969–978

Acar E, Rais-Rohani M (2009) Ensemble of metamodels with optimized weight factors. Struct Multidisc Optim 37:279–294

Bishop CM (1995) Neural Networks for Pattern Recognition. Oxford University Press Inc, New York

Bruno JY, Trosseille X, Coz JY (1998) Thoracic injury risk in front car crashes with occupant restrained with belt load limiter. SAE Paper 983166

Buhmann MD (2003) Radial basis functions: theory and implementations. Cambridge University Press, New York

Cai GQ, Elishakoff I (1994) Refined second-order reliability analysis. Struct Saf 14:267–276

Dias JP, Pereira MS (2004) Optimization methods for crashworthiness design using multibody models. Comput Struct 82:1371–1380

Douglas JG, Hampton CG (2010) The effects of airbags and seatbelts on occupant injury in longitudinal barrier crashes. J Saf Res 41:9–15

Dubourg V, Sudret B, Bourinet JM (2011) Reliability-based design optimization using kriging surrogates and subset simulation. Struct Multidisc Optim 44:673–690

Dyn N, Levin D, Rippa S (1986) Numerical procedures for surface fitting of scattered data by radial basis functions. SIAM J Sci Stat Comput 7(2):639–659

Ebrahimi M, Farmani MR, Roshanian J (2011) Multidisciplinary design of a small satellite launch vehicle using particle swarm optimization. Struct Multidisc Optim 44:773–784

Fang H, Rais-Rohani M, Liu Z, Horstemeyer MF (2005) A comparative study of metamodeling methods for multi-objective crashworthiness optimization. Comput Struct 83:2121–2136

Forsberg J, Nilsson L (2005) On polynomial response surfaces and Kriging for use in structural optimization of crashworthiness. Struct Multidisc Optim 29:232–243

Goel T, Haftka RT, Shyy W, Queipo NV (2007) Ensemble of surrogates. Struct Multidisc Optim 33(3):199–216

Gu XG, Lu JW (2014) Reliability-based robust assessment for multiobjective optimization design of improving occupant restraint system performance. Comput Ind. doi:10.1016/j.compind.2014.07.003

Gu XG, Sun GY, Li GY, Huang XD, Li YC (2013) Multiobjective optimization design for vehicle occupant restraint system under frontal impact. Struct Multidisc Optim 47:465–477

Gu XG, Sun GY, Li GY, Mao LC, Li Q (2013) A comparative study on multiobjective reliable and robust optimization for crashworthiness design of vehicle. Struct Multidisc Optim 48:669–684

Hou SJ, Dong D, Ren LL, Han X (2012) Multivariable crashworthiness optimization of vehicle body by unreplicated saturated factorial design. Struct Multidisc Optim 46:891–905

Huston RL (2001) A review of the effectiveness of seat belt systems: Design and safety considerations. Int J Crashworthiness 6:243–252

Jensen JJ (2007) Efficient estimation of extreme non-linear roll motions using the first-order reliability method (FORM). J Mar Sci Technol 12:191–202

Jin R, Chen W, Simpson TW (2001) Comparative studies of metamodeling techniques under multiple modeling criteria. Struct Multidisc Optim 23:1–13

Koch PN, Yang RJ, Gu L (2004) Design for six sigma through robust optimization. Struct Multidisc Optim 26:235–248

Lee YB, Choi DH (2014) Pointwise ensemble of meta-models using v nearest points cross-validation

Lee Y, Oh S, Choi DH (2008) Design optimization using support vector regression. J of Mech Sci and Tech 22:213–220

Liao XT, Li Q, Yang XJ, Li W, Zhang WG (2008) A two-stage multi-objective optimization of vehicle crashworthiness under frontal impact. Int J Crashworthiness 13:279–288

Lin SP, Shi L, Yang RJ (2014) An alternative stochastic sensitivity analysis method for RBDO. Struct Multidisc Optim 49:569–576

MADYMO (2005) Theory Manual, Version 6.3. TNO, Road Vehicle Institute, Delft, the Netherlands

Martin JD, Simpson TW (2005) Use of Kriging models to approximate deterministic computer models. AIAA J 43(6):853–863

Mizuno K, Matsui Y, Ikari T, Toritsuka T (2011) Seatbelt effectiveness for rear seat occupants in full and offset frontal crash tests. Int J Crashworthiness 16(1):63–74

Pan F, Zhu P, Zhang Y (2010) Metamodel-based lightweight design of B-pillar with TWB structure via support vector regression. Comput Struct 88:36–44

Pinto A, Peri D et al (2007) Multiobjective optimization of a containership using deterministic particle swarm optimization. J Ship Res 51(3):217–228

Queipo NV, Haftka RT, Shyy W, Goel T, Vaidyanathan R, Tucker PK (2005) Surrogate-based analysis and optimization. Prog Aerosp Sci 41:1–28

Redhe M, Forsberg J, Jansson T, Marklund PO, Nilsson L (2002) Using the response surface methodology and the D-optimality criterion in crashworthiness related problems – an analysis of the surface approximation error versus the number of function evaluations. Struct Multidisc Optim 24(3):185–194

Reyes-Sierra M, Coello C (2006) Multi-objective particle swarm optimizers: A survey of the state of the art. Int J Comput Intell Res 2(3):287–308

Simpson TW, Mauery TM, Korte JJ, Mistree F (2001) Kriging models for global approximation in simulation-based multidisciplinary design optimization. AIAA J 39(12):2233–2241

Sinha K (2007) Reliability-based multiobjective optimization for automotive crashworthiness and occupant safety. Struct Multidisc Optim 33:255–268

Smola AJ, Schölkopf B (2004) A tutorial on support vector regression. Stat Comput 14(3):199–222

Su RY, Gui LJ, Fan ZJ (2011) Multi-objective optimization for bus body with strength and rollover safety constraints based on surrogate models. Struct Multidisc Optim 44:431–441

Taflanidis A, Beck J (2009b) Stochastic subset optimization for reliability optimization and sensitivity analysis in system design. Comput Struct 87(5–6):318–331

Timothy WS, Timothy MM, John JK, Farrokh M (2001) Kriging models for global approximation in simulation-based multidisciplinary design optimization. AIAA Journal 39(12):2233–2241

Viano DC, Arepally S (1990) Assessing the safety performance of occupant restraint system. SAE Paper 902328

Wang GG, Shan S (2007) Review of metamodeling techniques in support of engineering design optimization. ASME J Mech Des 129(6):370–380

Yang RJ, Akkerman A, Anderson DF, Faruque OM, Gu L (2000) Robustness optimization for vehicular crash simulations. Comput Sci Eng 2(6):8–13

Yang RJ, Chuang C, Gu L, Li G (2005a) Experience with approximate reliability-based optimization method II: an exhaust system problem. Struct Multidisc Optim 29(6):488–497

Yang RJ, Wang N, Tho CH, Bobineau JP (2005b) Metamodeling development for vehicle frontal impact simulation. J Mech Des 127:1014–1020

Youn BD, Choi KK, Yang RJ, Gu L (2004) Reliability-based design optimization for crashworthiness of vehicle side impact. Struct Multidisc Optim 26(3–4):272–283

Zerpa L, Queipo NV, Pintos S, Salager J (2005) An optimization methodology of alkaline-surfactant-polymer flooding processes using field scale numerical simulation and multiple surrogates. J Petrol Sci Eng 47:197–208

Zhao D, Xue DY (2011) A multi-surrogate approximation method for metamodeling. Eng Comput 27(2):139–153

Zhao YG, Ono T (1999) A general procedure for first/second-order reliability method (FORM/SORM). Struct Saf 21:95–112

Zhao ZJ, Jin XL, Cao Y, Wang JW (2010) Data mining application on crash simulation data of occupant restraint system. Expert Syst Appl 37:5788–5794

Zhou XJ, Ma YZ, Li XF (2011) Ensemble of surrogates with recursive arithmetic average. Struct Multidisc Optim 44:651–671

Acknowledgments

The support of the China Postdoctoral Science Foundation funded project (2014M551795) and the Fundamental Research Funds for the Central Universities (2014HGQC0033) is acknowledged.

Cite this article

Gu, X., Lu, J. & Wang, H. Reliability-based design optimization for vehicle occupant protection system based on ensemble of metamodels. Struct Multidisc Optim 51, 533–546 (2015). https://doi.org/10.1007/s00158-014-1150-7

DOI: https://doi.org/10.1007/s00158-014-1150-7